HSLS_OMB_Supp Statement_20080214

High School Longitudinal Study of 2009 (HSLS:09)

OMB: 1850-0852

Supporting Statement

Request for OMB Review (SF83-I)

OMB# 1850-xxxx

Submitted by:

National Center for Education Statistics

U.S. Department of Education

February 14, 2008

Contents

List of Tables

A. Justification

1. Circumstances Making the Collection of Information Necessary

a. Purpose of This Submission

b. Legislative Authorization

c. Prior and Related Studies

2. Purpose and Use of Information Collection

3. Use of Improved Information Technology and Burden Reduction

4. Efforts to Identify Duplication and Use of Similar Information

5. Impact on Small Businesses or Other Small Entities

6. Consequences of Collecting the Information Less Frequently

7. Special Circumstances Relating to Guidelines of 5 CFR 1320.5

8. Consultations Outside NCES

9. Explanation of Any Payment or Gift to Respondents

10. Assurance of Confidentiality Provided to Respondents

11. Justification for Sensitive Questions

12. Estimates of Annualized Burden Hours and Costs

13. Estimates of Other Total Annual Cost Burden

14. Annualized Cost to the Federal Government

15. Explanation for Program Changes or Adjustments

16. Plans for Tabulation and Publication and Project Time Schedule

17. Reason(s) Display of OMB Expiration Date Is Inappropriate

18. Exceptions to Certification for Paperwork Reduction Act Submissions

B. Collection of Information Employing Statistical Methods

1. Target Universe and Sampling Frames

2. Statistical Procedures for Collecting Information

a. School Frames and Samples

b. Student Frames and Samples

c. Weighting, Variance Estimation, and Imputation

3. Methods for Maximizing Response Rates

4. Individuals Consulted on Statistical Design

List of Tables

1. Incentives by respondent type proposed for field test

2. Teacher incentives

3. Estimated burden on respondents for field test and full-scale studies

4. Estimated burden on parents for field test and full-scale studies

5. Estimated burden on teachers for field test and full-scale studies

6. Estimated burden on school administrators for field test and full-scale studies

7. Estimated burden on school counselors for field test and full-scale studies

8. Total costs to NCES

9. HSLS:09 Schedule

10. Illustrative school sample allocation and expected yields (full-scale study HSLS:09)

11. Illustrative school sample allocation and expected yields (field test HSLS:09)

12. Illustrative student sample allocation and expected yields for 9th- and 12th-graders (field test HSLS:09)

13. Illustrative student sample allocation and expected yields for ninth-graders (full-scale study HSLS:09)

14. Consultants on statistical aspects of HSLS:09

List of Exhibits

1. HSLS:09 data security plan outline

2. Preliminary outline for HSLS:09 Base-Year Field Test Report

High School Longitudinal Study of 2009 (HSLS:09)

This document has been prepared to support the clearance of study data elements and procedures under the Paperwork Reduction Act of 1995 and 5 CFR 1320 for the study titled High School Longitudinal Study of 2009 (HSLS:09). This study is being conducted by RTI International, with the American Institutes for Research (AIR), Windwalker Corporation, Horizon Research Inc., Research Support Services (RSS), and MPR Associates (MPR) as subcontractors, under contract to the U.S. Department of Education (Contract number ED-04-CO-0036/0003).

The purpose of this OMB submission is to request emergency clearance for the sampling and recruitment activities for the HSLS:09 field test and main study. We will separately submit a request for clearance that includes the instrument items and data collection procedures. The separate submission is necessary to afford sufficient time to draw the sample and begin to recruit schools for the field test while continuing to work on the assessment and questionnaire items.

In this supporting statement for Standard Form (SF) 83-I, we report the purposes of the study, review the data elements for which clearance is requested, and describe how the collected information addresses the statutory provisions of Section 153 of the Education Sciences Reform Act of 2002 (P.L. 107-279). Subsequent sections of this document respond to the Office of Management and Budget (OMB) instructions for preparing supporting statements to SF 83-I. Section A addresses OMB’s specific instructions for justification and provides an overview of the study’s design and data elements. Section B describes the collection of information employing statistical methods.

A. Justification

1. Circumstances Making the Collection of Information Necessary

a. Purpose of This Submission

The materials in this document support a request for emergency clearance to conduct the sampling and recruiting activities as part of the field test and main study for HSLS:09. The basic components and key design features of HSLS:09 are summarized below:

Base Year

baseline survey of high school 9th graders, in fall term, 2009;

cognitive test in mathematics;

parents and mathematics and science teachers to be surveyed in the base year (School administrator and school counselor information will also be collected.);

administrative records collected on coursetaking behavior in grades 8 and 9;

sample sizes of 800 schools and over 21,000 students (Schools are the first-stage unit of selection, with 9th graders randomly selected within schools.);

oversampling of private schools and Asians/Pacific Islanders; and

First Follow-up

Specifications have not yet been provided for follow-ups to the base year study, though the following have been discussed:

follow-up in 2012 in the spring term, when most sample members are juniors, but some are dropouts or in other grades;

student questionnaires, mathematics assessment, and school administrator questionnaires to be administered;

returning to the same schools, but separately following transfer students; and

high school transcript component in 2013 (records data for grades 9–12).

Second Follow-up

post–high school follow-ups by web survey and computer-assisted telephone interview.
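The two-stage selection noted under Base Year above (schools as the first-stage unit, then 9th graders randomly selected within schools) can be sketched as follows. This is a minimal illustration under stated assumptions, not the study's sampling procedure: it uses simple random sampling at both stages and ignores stratification and the oversampling of private schools and Asians/Pacific Islanders. The function name, the roster structure, and the parameter names are hypothetical.

```python
import random


def two_stage_sample(school_rosters, n_schools, n_students_per_school, seed=0):
    """Select schools first, then 9th graders within each selected school.

    school_rosters maps a school ID to its list of 9th-grade student IDs.
    Simple random sampling at both stages is an assumption of this sketch.
    """
    rng = random.Random(seed)
    # Stage 1: schools are the first-stage unit of selection.
    selected_schools = rng.sample(sorted(school_rosters), n_schools)
    sample = {}
    for school_id in selected_schools:
        roster = school_rosters[school_id]
        # Stage 2: take the whole roster if it is smaller than the target.
        k = min(n_students_per_school, len(roster))
        sample[school_id] = rng.sample(roster, k)
    return sample
```

The stated sample sizes (800 schools and over 21,000 students) imply a little over 26 sampled students per school on average.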

HSLS:09 will provide a link to its predecessor longitudinal studies, which address many of the same issues of transition from high school to postsecondary education and the labor force. At the same time, HSLS:09 will bring a new and special emphasis to the study of youth transition by exploring the path that leads students to pursue and persist in courses and careers in the fields of science, technology, engineering, and mathematics (STEM). HSLS:09 will measure math achievement gains in the first 3 years of high school, but also will relate tested achievement to students’ choice, access, and persistence—both in mathematics and science courses in high school, and thereafter in the science, technology, engineering, and mathematics pipelines in postsecondary education and in STEM careers. That is to say, the HSLS:09 assessments will serve not just as an outcome measure, but also as a predictor of readiness to proceed into STEM courses and careers. Questionnaires will focus on factors that motivate students for STEM coursetaking and careers.

Additionally, HSLS:09 will focus on students’ decisionmaking processes. Generally, the study will question students on when, why, and how they make decisions about courses and postsecondary options, including what factors, from parental input to considerations of financial aid for postsecondary education, enter into these decisions.

HSLS:09 supports two of the three goals of the American Competitiveness Initiative (ACI), which aims to strengthen math and science education, foreign language studies, and the high school experience in the United States. Information collected from students, parents, teachers, counselors, and school administrators will help to inform and shape efforts to improve the quality of math and science education in the United States, increase our competitiveness in STEM-related fields abroad, and improve the high school experience.

There are several reasons why the transition into adulthood is of special interest to federal policy and programs. Adolescence is a time of physical as well as psychological changes. Attitudes, aspirations, and expectations are sensitive to the stimuli that adolescents are exposed to, and environments influence the process of choosing among opportunities. Parents, educators, and those involved in policy decisions in the educational arena all share the need to understand the effects that the presence or absence of good educational guidance from the school, in combination with that from the home, can have on the educational, occupational, and social success of youth.

These patterns of transition cover individual as well as institutional characteristics. At the individual level the study will look into educational attainment and personal development. In response to policy and scientific issues, data will also be provided on the demographic and background correlates of educational outcomes. At the institutional level, HSLS:09 will focus on school effectiveness issues, including tracking, promotion, retention, and curriculum content, structure, and sequencing, especially as these affect students’ choice of and assignment to different mathematics and science courses and achievement in these two subject areas.

By collecting extensive information from students, parents, teachers, school counselors, school administrators, and school records, it will be possible to investigate the relationship between home and school factors and academic achievement, interests, and social development at this critical juncture. The school environment will be captured primarily through student, teacher, and administrator reports. The extent to which schools are expected to provide special services to selected groups of students to compensate for limitations and poor performance (including special services to assist those lagging in their understanding of mathematics and science) will be examined. Base year teachers will report on sampled students’ specific classroom environment, as well as supply information about their own background and training. Moreover, the study will focus (in particular through the base-year parent survey) on basic policy issues related to parents’ role in the educational success of their children, including parents’ educational attainment expectations for their children, beliefs about and attitudes toward curricular and postsecondary educational choices, and the correlates of active parental involvement in the school; these are among the many questions HSLS:09 will address about the home education support system and its interaction with the student and the school.

Additionally, since the survey will focus on ninth-graders, it will also permit the identification and study of high school dropouts and underwrite trend comparisons with dropouts identified and surveyed in the High School and Beyond Longitudinal Study (HS&B), the National Education Longitudinal Study of 1988 (NELS:88), and the Education Longitudinal Study of 2002 (ELS:2002).

In sum, through its core and supplemental components, HSLS:09 data will allow researchers, educators, and policymakers to examine motivation, achievement, and persistence in STEM coursetaking and careers. More generally, HSLS:09 data will allow researchers from a variety of disciplines to examine changes in young people’s lives and their connections with communities, schools, teachers, families, parents, and friends along a number of dimensions, including the following:

academic (especially in math and science), social, and interpersonal growth;

transitions from high school to postsecondary education, and from school to work;

students’ choices about, access to, and persistence in math and science courses, majors, and careers;

the characteristics of high schools and postsecondary institutions and their impact on student outcomes;

family formation, including marriage and family development, and how prior experiences in and out of school correlate with these decisions; and

the contexts of education, including how minority and at-risk status is associated with education and labor market outcomes.

b. Legislative Authorization

HSLS:09 is sponsored by the National Center for Education Statistics (NCES), within the Institute of Education Sciences (IES), in close consultation with other offices and organizations within and outside the U.S. Department of Education (ED). HSLS:09 is authorized under Section 153 of the Education Sciences Reform Act of 2002 (P.L. 107-279, Title I, Part C), which requires NCES to

“collect, report, analyze, and disseminate statistical data related to education in the United States and in other nations, including —

(1) collecting, acquiring, compiling (where appropriate, on a State-by-State basis), and disseminating full and complete statistics … on the condition and progress of education, at the preschool, elementary, secondary, postsecondary, and adult levels in the United States, including data on—

(A) State and local education reform activities; …

(C) student achievement in, at a minimum, the core academic areas of reading, mathematics, and science at all levels of education;

(D) secondary school completions, dropouts, and adult literacy and reading skills;

(E) access to, and opportunity for, postsecondary education, including data on financial aid to postsecondary students; …

(J) the social and economic status of children, including their academic achievement…

(2) conducting and publishing reports on the meaning and significance of the statistics described in paragraph (1);

(3) collecting, analyzing, cross-tabulating, and reporting, to the extent feasible, information by gender, race, ethnicity, socioeconomic status, limited English proficiency, mobility, disability, urbanicity, and other population characteristics, when such disaggregated information will facilitate educational and policy decisionmaking; …

(7) conducting longitudinal and special data collections necessary to report on the condition and progress of education…”

Section 183 of the Education Sciences Reform Act of 2002 further states that

“all collection, maintenance, use, and wide dissemination of data by the Institute, including each office, board, committee, and Center of the Institute, shall conform with the requirements of section 552a of title 5, United States Code [which protects the confidentiality rights of individual respondents with regard to the data collected, reported, and published under this title].”

c. Prior and Related Studies

In 1970 NCES initiated a program of longitudinal high school studies. Its purpose was to gather time-series data on nationally representative samples of high school students that would be pertinent to the formulation and evaluation of educational policies.

Starting in 1972 with the National Longitudinal Study of 1972 (NLS:72), NCES began providing educational policymakers and researchers with longitudinal data that linked educational experiences with later outcomes, such as early labor market experiences and postsecondary education enrollment and attainment. The NLS:72 cohort of high school seniors was surveyed five times (in 1972, 1973, 1974, 1979, and 1986). A wide variety of questionnaire data were collected in the follow-up surveys, including data on students’ family background, schools attended, labor force participation, family formation, and job satisfaction. In addition, postsecondary transcripts were collected.

Almost 10 years later, in 1980, the second in a series of NCES longitudinal surveys was launched, this time starting with two high school cohorts. High School and Beyond (HS&B) included one cohort of high school seniors comparable to the seniors in NLS:72. The second cohort within HS&B extended the age span and analytical range of NCES’ longitudinal studies by surveying a sample of high school sophomores. With the sophomore cohort, information became available to study the relationship between early high school experiences and students’ subsequent educational experiences in high school. For the first time, national data were available showing students’ academic growth over time and how family, community, school, and classroom factors promoted or inhibited student learning. In a leap forward for educational research, researchers, using data from the extensive battery of cognitive tests within HS&B, were also able to assess the growth of cognitive abilities over time. Moreover, data were now available to analyze the school experiences of students who later dropped out of high school. These data became a rich resource for policymakers and researchers over the next decade and provided an empirical base to inform the debates of the educational reform movement that began in the early 1980s. Both cohorts of HS&B participants were resurveyed in 1982, 1984, and 1986. The sophomore cohort was also resurveyed in 1992. Postsecondary transcripts also were collected for both cohorts.

The third longitudinal study of students sponsored by NCES was the National Education Longitudinal Study of 1988 (NELS:88). NELS:88 further extended the age and grade span of NCES longitudinal studies by beginning the data collection with a cohort of eighth graders. Along with the student survey, it included surveys of parents, teachers, and school administrators. It was designed not only to follow a single cohort of students over time (as had NCES’s earlier longitudinal studies, NLS:72 and HS&B), but also, by “freshening” the sample at each of the first two follow-ups, to follow three nationally representative grade cohorts over time (8th-grade, 10th-grade, and 12th-grade cohorts). This design not only made NELS:88 comparable to existing cohorts but also enabled researchers to conduct both cross-sectional and longitudinal analyses of the data. In 1993, high school transcripts were collected, further increasing the analytic potential of the survey system. Students were interviewed again in 1994 and 2000, and in 2000–2001 their postsecondary educational transcripts were collected. In sum, NELS:88 represents an integrated system of data that tracked students from middle school through secondary and postsecondary education, labor market experiences, and marriage and family formation.

The Education Longitudinal Study of 2002 (ELS:2002) was the fourth longitudinal high school cohort study conducted by NCES. ELS:2002 started with a sophomore cohort and was designed to provide trend data about the critical transitions experienced by students as they proceed through high school and into postsecondary education or their careers. Student questionnaires and assessments in reading and mathematics were collected along with surveys of parents, teachers, and school administrators. In addition, a facilities component and school library/media studies component were added for this study series. Freshening occurred at the first follow-up in 2004 to allow for a nationally representative cohort of high school seniors, which was followed by the collection of high school transcripts. An additional follow-up was conducted in 2006.

These studies have investigated the educational, personal, and vocational development of students, and the school, familial, community, personal, and cultural factors that affect this development. Each of these studies has provided rich information about the critical transition from high school to postsecondary education and the workforce. HSLS:09 will continue on the path of its predecessors while also focusing on the factors associated with choosing, persisting in, and succeeding in STEM coursetaking and careers.

2. Purpose and Use of Information Collection

HSLS:09 is intended to be a general-purpose dataset; that is, it will be designed to serve multiple policy objectives. Policy issues to be studied through HSLS:09 include the identification of school attributes associated with achievement (especially in mathematics); the influence that parent and community involvement have on students’ achievement and development; the factors associated with dropping out of the educational system; changes in educational practices over time; and the transition of different groups (for example, racial and ethnic, gender, and socioeconomic status groups) from high school to postsecondary institutions and the labor market, and especially into STEM curricula and careers. HSLS:09 will inquire into students’ values and goals, investigate factors affecting risk and resiliency, gather information about the social capital available to sample members, inquire into the nature of student interests and decisionmaking, delineate students’ curricular and extracurricular experiences, and catalogue their school programs and coursetaking experiences and results. HSLS:09 will obtain teacher evaluations of the effort and ability of each student as well as information about the classroom and teacher background. HSLS:09 will include measures of school climate, each student’s native language and language use, student and parental educational expectations, attendance at school, course and program selection, planning for college, interactions with teachers and peers, perceptions of safety in school, parental income, resources, and home education support system. The HSLS:09 data elements will support research that speaks to the underlying dynamics and educational processes that influence student achievement, growth, and personal development over time.

The objectives of HSLS:09 also encompass the need to support both longitudinal and cross-cohort analyses and to provide a basis for important descriptive cross-sectional analyses as well. HSLS:09 is first and foremost a longitudinal study; hence survey items will be chosen for their usefulness in predicting or explaining future outcomes as measured in later survey waves. The design of HSLS:09 differs considerably from that of its predecessors, and these changes will have some impact on the ability to produce trend comparisons. NELS:88 began with an eighth-grade cohort in the spring term; while this cohort is not markedly different from the fall-term ninth-grade cohort of HSLS:09 in terms of student knowledge base, it differs at the school level in that the HSLS:09 time point represents the beginning of high school rather than the point of departure from middle school. HSLS:09 includes a spring-term 11th-grade follow-up (even though none of the predecessor studies did) because only modest gains have been seen on assessments in the final year of high school and the 11th-grade follow-up minimizes unit nonresponse problems associated with testing in the spring term of the senior year. The design of HSLS:09 calls for information to be collected from parents of 12th-graders and the use of transcripts to provide continuous data for grades 9–12. These data elements will provide the basis for trend analysis between HSLS:09 and its predecessor studies.

We are exploring the possibility of conducting a pilot test prior to the field test to determine the feasibility of using school computers and to test out the computer-based assessment. The survey questions would not be included in this pilot test. The purpose of this pilot test would be to help us understand the issues associated with using school computers for the student assessment and to test out issues associated with programming the assessment items. Pilot testing these two issues before the field test allows the field test to be dedicated to testing the efficacy of the items, which could be compromised if we experience unexpected difficulties with the computers themselves or with how the assessment screens were programmed.

As part of the pilot test, we plan to ask a series of questions of a convenience sample of about 20 schools to identify the issues associated with using the school’s computer laboratories and computer equipment for the student component of HSLS:09. At each school, we will ask about the availability of a computer lab or a location at the school with computers that might be available for the sessions. For schools that have computers available, we will ask about the capacity of the computer lab (or other location with a set of computers) with regard to number of computers and internet connectivity, the security of the computers at the school, and whether RTI and NCES will be permitted to use the computer lab (or comparable location with a set of computers) to conduct HSLS:09. As a back-up, we are prepared to bring in 5 laptops per school to conduct the student assessment and survey. The questions we plan to ask the school are:

1. Do you have a computer lab in your school or other location with multiple computers?

2. How many computers are there in the computer lab (or comparable location) that can be connected to the Internet?

3. What type of internet connections do you have in the computer lab (or comparable location)?

a. High-speed connection

b. Dial-up connection

c. None

4. Which operating system (Windows 2000/XP, Mac OS, Linux, etc.) runs on these computers?

5. What web browser(s) (name and version) are installed on these computers (e.g., Internet Explorer 6.0, Mozilla Firefox 2.0, Netscape 6)?

6. Is the internet activity of these computers recorded and/or monitored in any way?

7. How many students and/or classes per day use the computers in the computer lab?

8. Can RTI International use the computers at the school for conducting the web-based student assessment and survey for students participating in the High School Longitudinal Study?

9. Are the school computers protected by

a. antivirus software,

b. anti-spyware software, or

c. an internet firewall?

10. Will you allow RTI International to run checks on the school computers to verify that they are not infected with viruses or spyware?

11. Will you allow RTI International to remove viruses and spyware found as the result of the check proposed in Question 10?

In addition to asking questions of the school, we will ask approximately 3 to 5 schools to allow us to pilot test the computer-based assessment. We will ask students from these schools to complete preliminary assessment screens to identify issues, such as the presentation or display of the items, that could affect the responses provided by students.

The content of the assessment battery and the questionnaires will be discussed in a later OMB submission, and data elements for the questionnaires will be explicitly presented at that time.

3. Use of Improved Information Technology and Burden Reduction

For the first time in the series of NCES longitudinal studies, all questionnaire data will be collected by electronic media only. In addition, the student assessment will be a computer-assisted two-stage adaptive test. For the student component, we will use the school’s computer lab when available, and, as a backup, we will bring multiple laptops into the school for use by the sampled students. A member of the research team will be present to assist students with computer issues as needed.
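As a rough illustration of what a two-stage adaptive test involves, the sketch below scores a first-stage routing block and uses that score to choose a second-stage form. The number of second-stage forms (three), the cutoff values, and all names are assumptions made for this example; the assessment design itself is to be described in a later submission.

```python
def route_second_stage(stage1_responses, answer_key, low_cutoff, high_cutoff):
    """Score the first-stage (routing) items and pick a second-stage form.

    stage1_responses and answer_key map item IDs to response options.
    Returns the number-correct score and a hypothetical form label.
    """
    n_correct = sum(
        1
        for item, response in stage1_responses.items()
        if item in answer_key and answer_key[item] == response
    )
    # Hypothetical routing rule: lower scores go to an easier second-stage form.
    if n_correct < low_cutoff:
        return n_correct, "low"
    if n_correct < high_cutoff:
        return n_correct, "medium"
    return n_correct, "high"
```

Routing on a short first stage lets the second stage concentrate on items near the student's apparent ability level, which is the usual motivation for this kind of design.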

School administrators, teachers, and parents will be given a username and password and will be asked to complete the questionnaire via the Internet. Follow-up for school administrators, teachers, and parents who do not complete the web questionnaire by self-administration will be in the form of computer-assisted telephone interviewing (CATI). Computer control of interviewing offers accurate and efficient management of survey activities, including case management, scheduling of calls, generation of reports on sample disposition, data quality monitoring, interviewer performance, and flow of information between telephone and field operations.

Additional features of the system include (1) online help for each screen to assist interviewers in question administration; (2) full documentation of all instrument components, including variable ranges, formats, record layouts, labels, question wording, and flow logic; (3) capability for creating and processing hierarchical data structures to eliminate data redundancy and conserve computer resources; (4) a scheduler system to manage the flow and assignment of cases to interviewers by time zone, case status, appointment information, and prior case disposition; (5) an integrated case-level control system to track the status of each sample member across the various data collection activities; (6) automatic audit file creation and timed backup to ensure that, if an interview is terminated prematurely and later restarted, all data entered during the earlier portion of the interview can be retrieved; and (7) a screen library containing the survey instrument as displayed to the interviewer.
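The scheduler in item (4) might work along the lines of the sketch below, which picks the next case to call from time zone, case status, appointment information, and prior attempts. The calling window, the status values, the priority order, and every name here are hypothetical; nothing in this sketch describes the actual CATI system.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class Case:
    case_id: str
    utc_offset_hours: int  # respondent's time zone as a fixed UTC offset
    status: str            # hypothetical values: "pending", "complete", "refused"
    prior_attempts: int = 0
    appointment_utc: Optional[datetime] = None


CALL_WINDOW = (9, 21)  # hypothetical local calling hours, 9:00 through 20:59


def callable_now(case, now_utc):
    """A case is callable if it is pending and it is a reasonable local hour."""
    local_hour = (now_utc + timedelta(hours=case.utc_offset_hours)).hour
    return case.status == "pending" and CALL_WINDOW[0] <= local_hour < CALL_WINDOW[1]


def next_case(cases, now_utc):
    """Return the next case to assign, or None if nothing is callable."""
    eligible = [c for c in cases if callable_now(c, now_utc)]
    if not eligible:
        return None

    def priority(c):
        # Due appointments first, then cases with the fewest prior attempts.
        due = c.appointment_utc is not None and c.appointment_utc <= now_utc
        return (0 if due else 1, c.prior_attempts, c.case_id)

    return min(eligible, key=priority)
```

Keeping the eligibility rule separate from the priority rule makes each one easy to change without touching the other.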

4. Efforts to Identify Duplication and Use of Similar Information

Since the inception of its secondary education longitudinal studies program in 1970, NCES has consulted with other federal offices to ensure that the data collected in this important series of longitudinal studies do not duplicate the information from any other national data sources within the U.S. Department of Education or other government agencies. In addition, NCES staff have regularly consulted with nonfederal associations such as the College Board, American Educational Research Association, the American Association of Community Colleges, and other groups to confirm that the data to be collected through this study series are not available from any other sources. These consultations also provided, and continue to provide through the HSLS:09 Technical Review Panel, methodological insights from the results of other studies of secondary and postsecondary students and labor force members, and they ensure that the data collected through HSLS:09 will meet the needs of the federal government and other interested agencies and organizations.

Other longitudinal studies of secondary and postsecondary students (i.e., NLS:72, HS&B, NELS:88, ELS:2002) have been sponsored by NCES in the past. HSLS:09 builds on and extends these studies rather than duplicating them. These earlier studies were conducted during the 1970s, 1980s, 1990s, and the early 2000s and represent educational, employment, and social experiences and environments different from those experienced by the HSLS:09 student sample. In addition to extending prior studies temporally as a time series, HSLS:09 will extend them conceptually. The historical studies do not fully provide the data that are necessary to understand the role of different factors in the development of student commitment to attend higher education and then to take the steps necessary to succeed in college (take the right courses, take courses in specific sequences, etc.). Using items and inventories, the study will enable researchers to move beyond the traditional covariates to ask, “How do students and parents construct their choice set?” Further, HSLS:09 will focus on the factors associated with choosing and persisting in mathematics and science coursetaking and STEM careers. These focal points present a marked difference between HSLS:09 and its predecessor studies.

The only other datasets that offer so large an opportunity to understand the key transitions into postsecondary institutions and/or the world of work are the Department of Labor (Bureau of Labor Statistics) longitudinal cohorts, the National Longitudinal Survey of Youth 1979 and 1997 cohorts (NLSY79, NLSY97). Clearly, however, the NLSY youth cohorts represent temporally earlier cohorts than HSLS:09. There are also important design differences between the NLSY79/NLSY97 and HSLS:09 that render them more complementary than duplicative. NLSY is a household-based longitudinal survey; HSLS:09 is school based. For both NLSY cohorts, baseline Armed Services Vocational Aptitude Battery (ASVAB) test data are available, but there is no longitudinal high school achievement measure. While the NLSY97 also gathers information from schools (including principal and teacher reports and high school transcripts), it cannot study school processes in the same way as HSLS:09, given its household sampling basis. Any given school contains only one to a handful of NLSY97 sample members, a number that constitutes neither a representative sample of students in the school, nor a sufficient number to provide within-school estimates. Thus, although both studies provide important information for understanding the transition from high school to the labor market, HSLS:09 is uniquely able to provide information about educational processes and within-school dynamics and how these affect both school achievement and ultimate labor market outcomes, including outcomes in science, technology, engineering, and mathematics education and occupations.

5. Impact on Small Businesses or Other Small Entities

This section has limited applicability to the proposed data collection effort. Target respondents for HSLS:09 are individuals (typically nested within an institutional context) of public and private schools; base-year data collection activities will involve no burden to small businesses or entities.

6. Consequences of Collecting the Information Less Frequently

This submission describes the field test and full-scale data collection for the base year of HSLS:09. Base-year data collection will take place in the fall of 2009, preceded by a field test in 2008. First follow-up data collection will take place 2½ years later, in the spring term of 2012, with a field test in 2011. The initial out-of-school follow-up is tentatively scheduled for 3 years thereafter.

The rationale for conducting HSLS:09 is based on a historical national need for information on academic and social growth, school and work transitions, and family formation. In particular, recent education and social welfare reform initiatives, changes in federal policy concerning postsecondary student support, and other interventions necessitate frequent studies. Repeated surveys are also necessary because of rapid changes in the secondary and postsecondary educational environments and the world of work. Indeed, longitudinal information provides better measures of the effects of program, policy, and environmental changes than would multiple cross-sectional studies.

To address this need, NCES began the National Longitudinal Studies Program more than 35 years ago with the National Longitudinal Study of 1972 (NLS:72). This study collected a wide variety of data on students’ family background, schools attended, labor force participation, family formation, and job satisfaction at five data collection points through 1986. NLS:72 was followed approximately 10 years later by High School and Beyond (HS&B), a longitudinal study of two high school cohorts (10th- and 12th-grade students). The National Education Longitudinal Study of 1988 (NELS:88) followed an eighth-grade cohort, which, upon completion in 2000, reflected a modal respondent age of about 26 years. The Education Longitudinal Study of 2002 (ELS:2002) followed a 10th-grade cohort and allows for the availability of a 32-year trend line.

The scheduled student follow-ups of HSLS:09 are less frequent than the 2-year interval employed with HS&B, NELS:88, and ELS:2002. The first follow-up takes place at 2½ years after the base year, and the second follow-up 3 years after the first follow-up. However, parent data may be collected at grade 12, and a high school transcripts study to be conducted soon after graduation will provide continuous coursetaking data for the cohort’s high school careers for all on-time or early completers. The initial data collection occurs at the start of the students’ high school careers and will allow researchers to understand decisionmaking processes as they pertain to the selection of STEM-related courses. By following up at the end of the students’ junior year, researchers will be able to measure achievement gain as well as postsecondary planning information. Collecting parent and transcript information in the 12th grade will minimize burden on schools and respondents, while also allowing for further intercohort comparability with the main transition themes of the prior studies. The second follow-up is scheduled to occur in the second year after high school, which is on track with the timing of the predecessor studies, thus facilitating comparisons in the domain of postsecondary access and choice. Despite the changes in grade cohorts and data collection time points for the first two rounds, general trends will still be measurable, since the same key transitions, albeit with slightly different data collection points, will be captured with the HSLS:09 data.

Probably the most cost-efficient and least burdensome method for obtaining continuous data on student careers through the high school years comes through the avenue of collecting school records. In most cases, transcript data are more accurate than self-report data as well. High school transcripts were collected for a subsample of the HS&B sophomore cohort, as well as for the entire NELS:88 cohort retained in the study after eighth grade and the entire ELS:2002 sophomore and senior cohorts. The collection of administrative records will take place at the onset of HSLS:09 to identify coursetaking behaviors in grades 8 and 9, and a full transcript study is tentatively scheduled to take place after high school graduation.

7. Special Circumstances Relating to Guidelines of 5 CFR 1320.5

All data collection guidelines in 5 CFR 1320.5 are being followed. No special circumstances of data collection are anticipated.

8. Consultations Outside NCES

Consultations with persons and organizations both internal and external to the National Center for Education Statistics, the U.S. Department of Education (ED), and the federal government have been pursued. In the planning stage for HSLS:09, there were many efforts to obtain critical review and to acquire comments regarding project plans and interim and final products. We are in the process of convening the Technical Review Panel, which will become the major vehicle through which future consultation is achieved in the course of the project. Consultants outside ED and members of the Technical Review Panel include the following individuals:

Technical Review Panel

Dr. Clifford Adelman

The Institute for Higher Education Policy • Suite 400

1320 19th Street, NW

Washington, DC 20036

Phone: (202) 861-8223 ext 228

Fax: (202) 861-9307

E-mail: [email protected]

Dr. Kathy Borman

Department of Anthropology, SOC 107

University of South Florida

4202 Fowler Avenue

Tampa, FL 33620

Phone: (813) 974-9058

E-mail: [email protected]

Dr. Daryl E. Chubin

Director, Center for Advancing Science & Engineering Capacity

American Association for the Advancement of Science (AAAS)

1200 New York Avenue NW

Washington, DC 20005

Dr. Jeremy Finn

State University of New York at Buffalo Graduate School of Education

409 Baldy Hall

Buffalo, NY 14260

Phone: (716) 645-2484

E-mail: [email protected]

Dr. Thomas Hoffer

NORC

1155 E. 60th Street

Chicago, IL 60637

Phone: (773) 256-6097

E-mail: [email protected]

Dr. Vinetta Jones

Howard University

525 Bryant Street NW

Academic Support Building

Washington, DC 20059

Phone: (202) 806-7340 or (301) 395-5335

E-mail: [email protected]

Dr. Donald Rock

Before 10/15: K11 Shirley Lane

Trenton NJ 08648

Phone: 609-896-2659

After 10/15: 9357 Blind Pass Rd, #503

St Pete Beach, FL 33706

Phone: (727) 363-3717

E-mail: [email protected]

Dr. James Rosenbaum

Institute for Policy Research

Education and Social Policy

Annenberg Hall 110 EV2610

Evanston, Illinois 60204

Phone: (847) 491-3795

E-mail: [email protected]

Dr. Russ Rumberger

Gevirtz Graduate School of Education

University of California

Santa Barbara, CA 93106

Phone: (805) 893-3385

E-mail: [email protected]

Dr. Philip Sadler

Harvard-Smithsonian Center for Astrophysics

60 Garden St., MS 71

Cambridge, MA 02138

Office: D-315

Phone: (617) 496-4709

Fax: (617) 496-5405

E-mail: [email protected]

Dr. Sharon Senk

Department of Mathematics

Division of Science and Mathematics Education

D320 Wells Hall

Phone: (517) 353-4691 (office)

E‑mail: [email protected]

Dr. Timothy Urdan

Santa Clara University

Department of Psychology

500 El Camino Real

Santa Clara, CA 95053

Phone: (408) 554-4495

Fax: (408) 554-5241

E-mail: [email protected]

Other Consultants Outside ED

Dr. Eric Bettinger, Associate Professor, Economics

Case Western Reserve University

Weatherhead School of Management

10900 Euclid Avenue

Cleveland, Ohio 44106

Phone: (216) 386-2184

E-mail: [email protected]

Dr. Audrey Champagne, Professor Emerita

University of Albany

Educational Theory and Practice

Education 119

1400 Washington Avenue

Albany NY 12222

Phone: (518) 442-5982

E-mail: none listed

Dr. Stefanie DeLuca, Assistant Professor

Johns Hopkins University

School of Arts and Sciences

Department of Sociology

532 Mergenthaler Hall

3400 North Charles Street

Baltimore, Maryland 21218

Phone: (410) 516-7629

E-mail: [email protected]

Dr. Laura Hamilton

RAND Corporation

4570 Fifth Avenue, Suite 600

Pittsburgh, PA 15213

Phone: (412) 683-2300 x4403

E‑mail: [email protected]

Dr. Jacqueline King

Director for Policy Analysis

Division of Programs and Analysis

American Council on Education

Center for Policy Analysis

One Dupont Circle NW

Washington, DC 20036

Phone: (202) 939-9551 | Fax: 202-785-2990

E-mail: j[email protected]

Dr. Joanna Kulikowich, Professor of Education

The Pennsylvania State University

232 CEDAR Building

University Park, PA 16802-3108

Phone: (814) 863-2261

E‑mail: [email protected]

Dr. Daniel McCaffrey

RAND Corporation

4570 Fifth Avenue, Suite 600

Pittsburgh, PA 15213

Phone: (412) 683-2300 x4919

E-mail: [email protected]

Dr. Jeylan Mortimer

University of Minnesota - Dept of Sociology

909 Social Sciences Building,

267 19th Avenue South,

Minneapolis, MN 55455

Room 1014a Social Sciences

Phone: (612) 624-4064

E-mail: [email protected]

Dr. Aaron Pallas

Teachers College

Columbia University

New York, NY 10027

Phone: (646) 228-7414

E-mail: [email protected]

Ms. Senta Raizen, Director

WestEd

National Center for Improving Science Education

1840 Wilson Blvd, Suite 201A

Arlington, Virginia 22201-3000

Phone: (703) 875-0496

Fax: (703) 875-0479

E-mail: [email protected]

9. Explanation of Any Payment or Gift to Respondents

Table 1 shows the incentive structure, by respondent type, requested for HSLS:09. In some cases, incentive experiments have been proposed to determine the effectiveness of the incentive on response rates. A description and rationale for each incentive or incentive experiment is provided below.

Table 1. Incentives by respondent type proposed for field test

| Respondent | Experiment? | Incentive |
|---|---|---|
| School | Yes | $500 technology allowance vs. $0 |
| Student (in-school administration) | Yes | $20 cash vs. $10 |
| Student (web/CATI) | No | $20 check mailed to respondent |
| School coordinator | No | $100 cash base honorarium; up to $150 cash for high student response |
| Math or science teacher | No | $10 to $40 check mailed to respondent; sliding scale based on the number of students to report |
| School counselor | No | No incentive |
| School administrator | No | No incentive |
| Parents | No | No incentive |

NOTE: CATI = computer-assisted telephone interviewing.

Incentives for schools. Securing the cooperation of schools to participate in voluntary research has become increasingly difficult. Our experience is that many schools already feel burdened by mandated “high stakes” testing and, at the same time, are hampered by fiscal and staffing constraints. Moreover, we will face roadblocks not only at the school, but also at the district level, where research studies must sometimes comply with stringent requirements to submit formal and detailed applications similar to those one would submit to an IRB before individual schools can even be contacted. A successful incentive program can greatly reduce labor costs associated with school recruitment and refusal conversion efforts.

Following the government's suggestion that we consider offering schools an incentive, and that laptop computers would represent an appropriate level of incentive, we have designed an experiment for the field test to determine whether this level of incentive would encourage schools to participate in HSLS:09. We considered offering laptop computers themselves, but ruled this out because of drawbacks such as compatibility, usefulness of the equipment, and the security issues involved in transferring laptops containing confidential information to school staff. An incentive experiment was proposed at the school level for the field test to help offset some of the challenges associated with obtaining school cooperation. For the field test, we have planned an experiment comparing the effect of a $500 technology allowance against no incentive. All schools within a given district would receive the same incentive. The technology allowance would take the form of a check written to the school that can be used at the school’s discretion, though we recommend it be used toward technology for the school to align with the focus of the study.

Incentives for students. We have planned an incentive experiment to test the effectiveness of a $20 student incentive versus a $10 incentive on student response rates in the schools. The $20 incentive is the same incentive as was offered to seniors in the ELS:2002 First Follow-Up Study. As part of ELS:2002 First Follow-Up Field Test, RTI conducted an experiment that suggested the efficacy of using a $20 student incentive for high school students. The efficacy of this incentive was confirmed in the main study, which achieved a 93.5 percent in-school student response rate.

Our experience from several recent large-scale, nationally representative data collections with an assessment component demonstrates that this level of incentive is necessary to achieve target response rates, as shown in Table 2. The HSLS:09 RFP called for a minimum response rate of 92 percent. The ELS:2002 Base Year student data collection fell five percentage points short of the 92 percent student participation rate with a token incentive. We also fell short of 92 percent with the $15 incentive for the 15-year-old sample in PISA, but we achieved 93 percent in the ELS:2002 First Follow-Up with the $20 incentive. It was for that reason that we requested to test the $20 incentive for the HSLS:09 field test.

For the ELS:2002 First Follow-Up Study, seniors were offered this incentive as a motivation to attend the spring data collection session at a time when seniors are typically apathetic toward participation in additional testing activities. This level of incentive is requested for the 9th grade cohort to offset some of the stress associated with test taking. Students are reporting more and more frequently that they would prefer to remain in their assigned class rather than participate in a research study, so as not to miss important lessons that would prepare them for high-stakes testing. To encourage these students to leave their assigned class to participate in the study, we request to incentivize 9th grade students at the same level as was successful with 12th grade students in 2004. All participating students at a school will receive the same level of incentive. Student-level incentives also aid in motivating school officials to participate by giving something back to the students. A student incentive has worked successfully on recent studies. Table 2 shows the incentives that were offered on recent NCES studies and the response rate achieved.

Table 2. Student Incentives on Prior Studies

| Study | Grade or Age | Incentive | Student Response Rate, % |
|---|---|---|---|
| PIRLS 2006 | 4th Grade | Token* | 95.6 |
| PISA 2006 | Age 15 | $15** | 90.7 |
| ELS:2002 First Follow-Up | 12th Grade | $20 | 93.6 |
| ELS:2002 Base Year | 10th Grade | Token | 87.3 |

* High student response could be attributed, in part, to the classroom sampling model employed on the study and the effectiveness of the token incentive for the younger population.

** About half of the schools took part outside of school hours (either after school or on a Saturday) for a higher student incentive. The response rate provided here is only for the students who participated during school hours.

Incentives for students will be provided only with the permission of the school principal. In cases where the principal is reluctant to have Session Administrators give cash to students, we will offer gift certificates, donations to student groups, or other equivalent contributions approved by the schools.

It is possible that some students will be unable to participate during the in-school session. In these situations, we will work with the school to obtain contacting information for the students and attempt to have the student complete the student questionnaire via a web survey or CATI. We anticipate that the number of students completing the questionnaire via web survey or CATI will be small. We propose to offer these students $20 for participating.

School coordinator honorarium. The school coordinator bears a heavy burden in ensuring that data collection is successful in the school. The coordinator is expected to coordinate logistics with the data collection contractor, supply a list of eligible students for sampling to the data collection contractor, supply parent contacting information for sampled students, communicate with teachers about the study, distribute parental consent forms and reminder notices, coordinate the assignment of students to each session, assist the test administrator in ensuring that the sampled students attend the testing session, assist the test administrator in arranging for follow-up sessions as needed, and distribute materials for the staff components of the study. All of these activities will occur under a tight timeline for HSLS:09 because of the fall data collection and the time at which the student list is ready at the school.

The school coordinator honorarium is based on the percentage of sampled students who participate in the study. The role of the school coordinator is critical for the success of the study. We planned for the same level of honorarium as was offered in PISA 2006 based on the similar timing and burden on the school coordinator. The school coordinator honorarium is planned at a base of $100 with up to an additional $50 for achieving high student response at the school. This is an increase from the honorarium offered to school coordinators in the 2004 round of ELS, which ranged from $50 as the base incentive to $100 for coordinators in schools that had high student response rates. We propose increasing the level of these incentives to $100 and $150 respectively, to compensate for the additional work that is required to ensure that we receive a complete list of ninth graders as quickly as possible at the start of the school year as well as to compensate for the logistical burden of coordinating multiple sessions when required due to computer lab or laptop capacity in the school. This incentive is planned for the field test and main study.

Incentives for teachers. Math and science teachers will provide information on classroom attributes, teaching practices, and teaching experience. Past experience has demonstrated the need for a teacher-level incentive to achieve high response rates and many schools have required that teacher compensation be commensurate with their hourly wage. Thus, we have proposed a $25 teacher incentive for both the field test and main study.

Incentive for counselors. We are not proposing to offer an incentive for the counselors to complete their questionnaires. Counselors would typically provide the information requested in the questionnaire as well as the administrative records as part of their normal duties. Because of the nature of the study, we suspect that many school principals will designate a counselor to perform the school coordinator duties, in which case the counselor will receive the coordinator honorarium described above.

Incentive for school administrators. We have achieved high response rates for the school administrator questionnaire on ELS:2002, the ELS:2002 follow-up conducted in 2004, and in PISA:2006. Based on past experience, we are not offering an incentive for the school administrator questionnaire on HSLS:09.

Incentives for parents. There is no precedent for offering an incentive to complete the parent questionnaire. Thus, we have not included a parent incentive in our budget for the HSLS:09.

Reimbursement of reasonable school expenses. In some cases there may be requests from schools for reimbursement of expenses associated with the testing session (for example, keeping the school open for a special make-up testing session that occurs outside of normal school hours). Such cases will be reviewed by project staff on an individual basis and will be approved if the request is deemed reasonable.

10. Assurance of Confidentiality Provided to Respondents

RTI has developed a data security plan (DSP) for HSLS:09 that was acceptable to Neil Russell and the computer security review board. The HSLS:09 plan will strengthen confidentiality protection and data security procedures developed for ELS:2002 and represents best-practice survey systems and procedures for protecting respondent confidentiality and securing survey data. An outline of this plan is provided in exhibit 1. The HSLS:09 DSP will:

Exhibit 1. HSLS:09 data security plan outline

HSLS:09 Data Security Plan Summary; Maintaining the Data Security Plan; Information Collection Request; Our Promise to Secure Data and Protect Confidentiality; Personally Identifying Information That We Collect and/or Manage; Institutional Review Board Human Subject Protection Requirements; Process for Addressing Survey Participant Concerns; Computing System Summary; General Description of the RTI Networks; General Description of the Data Management, Data Collection, and Data Processing Systems; Integrated Monitoring System; Receipt Control System; Instrument Development and Documentation System; Data Collection System; Document Archive and Data Library; Employee-Level Controls; Security Clearance Procedures; Nondisclosure Affidavit Collection and Storage; Security Awareness Training; Staff Termination/Transfer Procedures; Subcontractor Procedures

Physical Environment Protections; System Access Controls; Survey Data Collection/Management Procedures; Protecting Electronic Media; Encryption; Data Transmission; Storage/Archival/Destruction; Protecting Hard-Copy Media; Internal Hard-Copy Communications; External Communications to Respondents; Handling of Mail Returns, Hard-Copy Student Lists, and Parental Consent Forms; Handling and Transfer of Data Collection Materials; Tracing Operations; Software Security Controls; Data File Development: Disclosure Avoidance Plan; Data Security Monitoring; Survey Protocol Monitoring; System/Data Access Monitoring; Protocol for Reporting Potential Breaches of Confidentiality; Specific Procedures for Field Staff

establish clear responsibility and accountability for data security and the protection of respondent confidentiality with corporate oversight to ensure adequate investment of resources;

detail a structured approach for considering and addressing risk at each step in the survey process and establish mechanisms for monitoring performance and adapting to new security concerns;

include technological and procedural solutions that mitigate risk and emphasize the necessary training to capitalize on these approaches; and

be supported by the implementation of data security controls recommended by the National Institute of Standards and Technology (NIST) for protecting federal information systems.

Under this plan, HSLS:09 will conform totally to federal privacy legislation, including:

the Privacy Act of 1974 (5 U.S.C. 552a);

Section C of Education Sciences Reform Act of 2002 (P.L. 107-279);

the USA Patriot Act of 2001 (P.L. 107-56);

the Family Educational Rights and Privacy Act (FERPA) (20 U.S.C. 1232g; 34 CFR Part 99);

the Protection of Pupil Rights Amendment (PPRA) (20 U.S.C. § 1232h; 34 CFR Part 98);

the Freedom of Information Act (5 U.S.C. 552);

the Hawkins-Stafford Elementary and Secondary School Improvement Amendments of 1988 (P.L. 100-297);

Title IV of the Improving America’s Schools Act of 1994 (P.L. 103-382); and

the Office of Management and Budget (OMB) Federal Statistical Confidentiality Order of 1997.

HSLS:09 also will conform to NCES Restricted Use Data Procedures Manual and NCES Standards and Policies. The plan for maintaining confidentiality includes obtaining signed confidentiality agreements and notarized nondisclosure affidavits from all personnel who will have access to individual identifiers. Each individual working in HSLS:09 will also complete the e-QIP clearance process. The plan also includes annual personnel training regarding the meaning of confidentiality and the procedures associated with maintaining confidentiality, particularly as it relates to handling requests for information and providing assurance to respondents about the protection of their responses. The training will also cover controlled and protected access to computer files under the control of a single database manager; built-in safeguards concerning status monitoring and receipt control systems; and a secured and operator-manned in-house computing facility.

Invitation letters will be sent to states, districts, and schools describing the voluntary nature of this survey. The material sent will include a brochure to describe the study and to convey the extent to which respondents and their responses will be kept confidential. (Materials are provided in appendix A.)

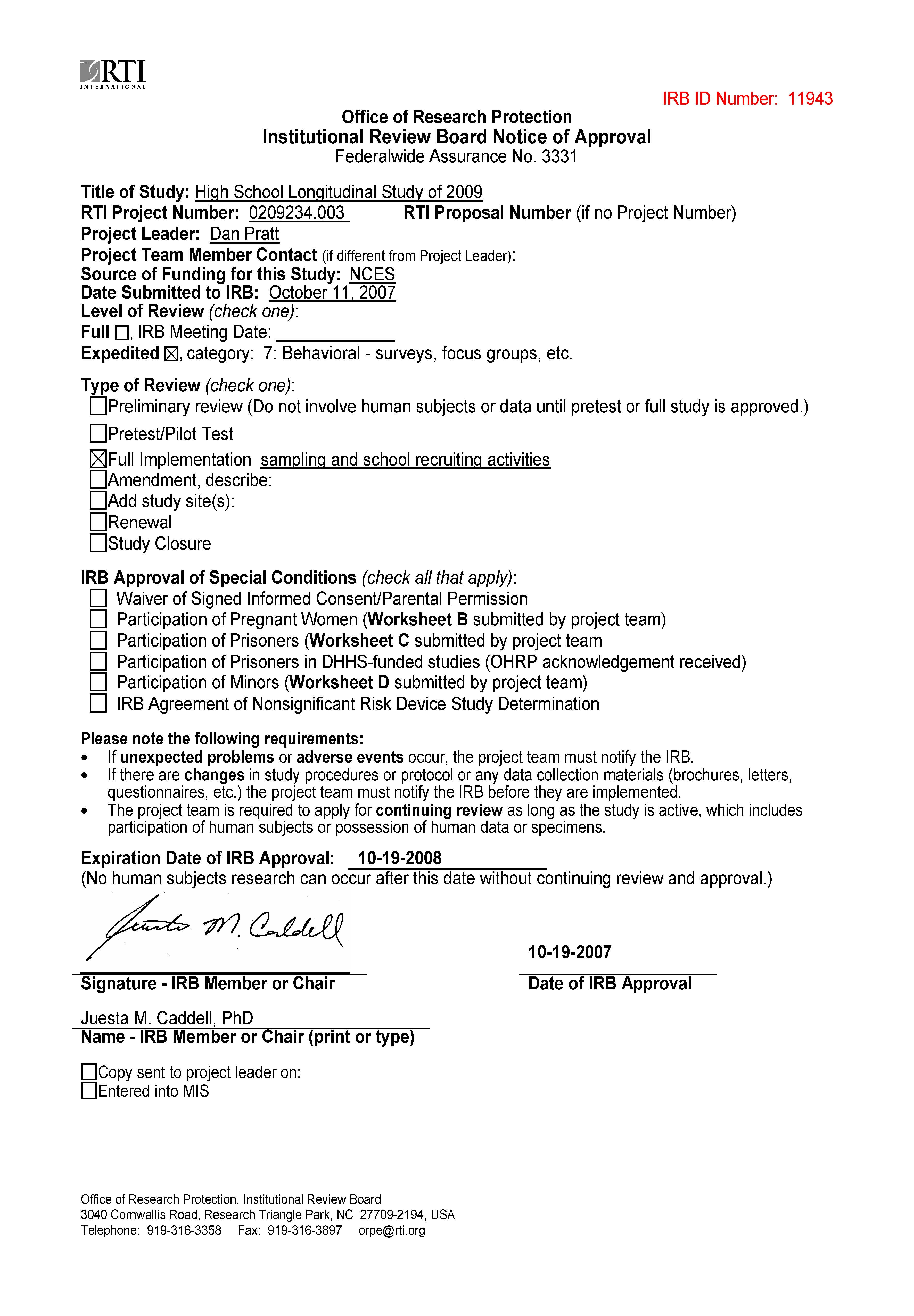

All recruiting materials and procedures will be reviewed and approved by RTI’s Committee for the Protection of Human Subjects prior to sample selection. This committee serves as RTI’s Institutional Review Board (IRB) as required by 45 CFR 46. It is RTI policy that all RTI research involving human subjects, regardless of funding source, undergoes IRB review in a manner consistent with the regulations in 45 CFR 46 to ensure that all such RTI studies comply with applicable regulations concerning informed consent, confidentiality, and protection of privacy.

11. Justification for Sensitive Questions

The data elements are still in development and will be discussed in a separate submission to OMB.

12. Estimates of Annualized Burden Hours and Costs

Estimates of response burden for the HSLS:09 base-year field test and full-scale data collection activities are shown in tables 3 through 7. Because the proposed field test will be the first application of the proposed instrumentation, the estimates of response burden are based on initial estimates developed from experience with ELS:2002 and other educational longitudinal studies (e.g., NELS:88, HS&B). Please note that the time students will spend completing the cognitive assessment has not been included in the estimated burden. High school seniors will complete the assessment only and not the questionnaire; therefore, they are not represented in the burden estimate.

Table 3. Estimated burden on respondents for field test and full-scale studies

| Respondents | Sample | Expected response rate | Number of respondents | Average burden/response¹ | Range of response times | Total burden (hours) |
|---|---|---|---|---|---|---|
| Freshmen | | | | | | |
| Field test (2008) | 1,250 | 92 | 1,150 | 30 minutes | — | 575 |
| Full-scale (2009) | 20,000 | 92 | 18,400 | 30 minutes | — | 9,200 |
| Total | 21,250 | | 19,550 | | | 9,775 |
| Seniors | | | | | | |
| Field test (2008) | 1,250 | 92 | 1,150 | 0 minutes (assessment only) | — | 0 |
| Total | 1,250 | | 1,150 | | — | 0 |

1 Please note that the time students will spend completing the cognitive assessments has not been included in the estimated burden.
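The burden totals for freshmen in Table 3 follow from simple arithmetic: the sample size times the expected response rate gives the number of respondents, and respondents times the average minutes per response, divided by 60, gives burden hours. The Python sketch below illustrates that calculation only; the function name `burden_hours` and the `rows` layout are hypothetical and are not part of any HSLS:09 system.

```python
def burden_hours(sample, response_rate_pct, minutes_per_response):
    """Return (expected respondents, total burden hours) for one respondent group."""
    respondents = round(sample * response_rate_pct / 100)
    hours = respondents * minutes_per_response / 60
    return respondents, hours


# Freshman rows of Table 3: (sample, expected response rate in percent, minutes per response)
rows = {
    "Field test (2008)": (1250, 92, 30),
    "Full-scale (2009)": (20000, 92, 30),
}

total_hours = 0.0
for label, (sample, rate, minutes) in rows.items():
    respondents, hours = burden_hours(sample, rate, minutes)
    total_hours += hours
    print(f"{label}: {respondents:,} respondents, {hours:,.0f} hours")

print(f"Total: {total_hours:,.0f} hours")
```

The same function can be applied to the rows of the other burden tables by substituting their sample sizes, response rates, and average minutes per response.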

Table 4. Estimated burden on parents for field test and full-scale studies

| Parents | Sample | Expected response rate | Number of respondents | Average burden/response | Range of response times | Total burden (hours) |
|---|---|---|---|---|---|---|
| Total | 21,250 | | 19,550 | | | 6,842 |
| Field test (2008) | 1,250 | 92 | 1,150 | 30 minutes | 30 | 575 |
| Full-scale (2009) | 20,000 | 92 | 18,400 | 30 minutes | 30 | 9,200 |

Table 5. Estimated burden on teachers for field test and full-scale studies

| Teachers (math, science) (linked to students) | Sample | Expected response rate | Number of respondents | Average burden/response | Range of response times | Total burden (hours) |
|---|---|---|---|---|---|---|
| Total | 10,295 | | 9,471 | | | 6,314 |
| Field test (2008) | 645 | 92 | 593 | 40 minutes | 4–100 minutes | 395 |
| Full-scale (2009) | 9,650 | 92 | 8,878 | 40 minutes | 4–100 minutes | 5,919 |

Table 5a. Estimated burden on teachers for field test and full-scale studies – 9th grade math & science teachers (possible option)

| Teachers (math, science) (linked to students) | Sample | Expected response rate | Number of respondents | Average burden/response | Total burden (hours) |
|---|---|---|---|---|---|
| Total | 13,253 | | 12,193 | | 6,096 |
| Field test (2008) | 853 | 92 | 785 | 30 minutes | 392 |
| Full-scale (2009) | 12,400 | 92 | 11,408 | 30 minutes | 5,704 |

Table 6. Estimated burden on school administrators for field test and full-scale studies

| School administrators | Sample | Expected response rate | Number of respondents | Average burden/response | Range of response times | Total burden (hours) |
|---|---|---|---|---|---|---|
| Total | 850 | | 832 | | | 420 |
| Field test (2008) | 55 | 98 | 49 | 30 minutes | — | 24.5 |
| Full-scale (2009) | 800 | 98 | 784 | 30 minutes | — | 392 |

Table 7. Estimated burden on school counselors for field test and full-scale studies

| Counselors | Sample | Expected response rate | Number of respondents | Average burden/response | Range of response times | Total burden (hours) |
|---|---|---|---|---|---|---|
| Total | 850 | | 781 | | | 390.5 |
| Field test (2008) | 55 | 92 | 46 | 30 minutes | — | 23 |
| Full-scale (2009) | 800 | 92 | 736 | 30 minutes | — | 368 |

For high school students, we have used $6.55 per hour for the field test and $7.25 per hour for the main study to estimate the cost to participants. For freshmen, the cost is estimated at $7,533 for the field test and $133,400 for the main study. For seniors, who will participate only in the field test and complete only the cognitive assessment battery, the cost is estimated as $5,024.

For parents, assuming a $20 hourly wage, the cost to parent respondents is estimated to be $11,500 for the 2008 field test and $184,000 for the 2009 base year main study.

For teachers in the linked design (math and science teachers providing contextual data for student analysis), teacher burden is highly variable because teachers may have different numbers of classes to provide information for, or (even more important) different numbers of students to rate. In ELS:2002, for example, based on the same linked design, burden in the student ratings portion of the teacher questionnaire ranged from as few as 1 student (4 minutes student-rating burden) to as many as 25 students (100 minutes student-rating burden) in small schools where there was only a single teacher for a particular subject in the relevant (ninth) grade.
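The ELS:2002 range quoted above (1 student at 4 minutes, 25 students at 100 minutes) implies a roughly constant rating time per student. As a rough illustration, and assuming that constant of 4 minutes per student holds across the range, the student-rating burden can be sketched in Python as follows. The constant and function names are hypothetical.

```python
# Assumption: a constant 4 minutes per rated student, as implied by the
# ELS:2002 figures quoted above (1 student -> 4 minutes, 25 students -> 100 minutes).
MINUTES_PER_STUDENT = 4


def rating_burden_minutes(n_students: int) -> int:
    """Student-rating burden, in minutes, for a teacher rating n_students."""
    if n_students < 0:
        raise ValueError("n_students must be non-negative")
    return n_students * MINUTES_PER_STUDENT


for n in (1, 10, 25):
    print(f"{n:>2} students -> {rating_burden_minutes(n)} minutes")
```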

There is a possibility that we may survey all math and science teachers in a sampled school who instruct 9th graders. If that is the case, the estimated burden on teachers actually decreases, because the teachers would not be rating individual students on learning approaches and behavior (see Table 5a). Under this scenario, the incentive would be $25 for teachers to complete a 30-minute questionnaire.

Also, sample sizes for the teacher sample are harder to predict with full accuracy than other sample sizes in HSLS, since the number is not preset for this component and some of the information needed to model probable sample sizes is not available from other national datasets. (Ideally, one would be able to tap comprehensive national statistics for how many science and mathematics teachers, in each school in a simulated stratified probability-proportionate-to-size (PPS) sample, were engaged in teaching ninth-graders.)

Costs to respondents may be estimated as follows. Assuming an hourly wage of $20 for school personnel, field test respondent costs amount to $7,900 and main study respondent costs for this component to $118,373. Under the 9th grade math and science teacher option, the cost for this component increases slightly though exact cost estimates are not available yet. This option has not been accepted or approved yet, but is mentioned only to give complete and thorough context.

For school administrators (the greater part of the questionnaire is typically completed by clerical staff in the school office with the last section completed by the school principal), again assuming a $20 hourly cost, the cost to respondents is $490 in the field test and $7,840 in the main study.

For the counselor questionnaire, the respondent dollar cost, assuming an average hourly rate of $20 for school employees, is estimated to be $460 in the field test and $7,360 in the main study.
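Each respondent cost above is a total number of burden hours multiplied by the assumed $20 hourly rate, so dividing a cost by the rate recovers the implied hours. The sketch below does only that arithmetic; the hour totals it prints are derived here rather than quoted from the burden tables, and the names are illustrative.

```python
HOURLY_WAGE = 20  # dollars per hour, as assumed in the text

# Respondent costs in dollars as quoted above: (field test, main study).
COSTS = {
    "teacher": (7_900, 118_373),
    "school administrator": (490, 7_840),
    "counselor": (460, 7_360),
}


def implied_hours(cost_dollars: float, wage: float = HOURLY_WAGE) -> float:
    """Back out total burden hours from a dollar cost and an hourly wage."""
    return cost_dollars / wage


for component, (field_test, main_study) in COSTS.items():
    print(
        f"{component:<22} field test {implied_hours(field_test):8.2f} h"
        f"   main study {implied_hours(main_study):9.2f} h"
    )
```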

Included in the parent, teacher, school administrator, and counselor notification letters will be the following burden statement:

“According to the Paperwork Reduction Act of 1995, no persons are required to respond to a collection of information unless it displays a valid OMB control number. The valid OMB control number of this information collection is [1850-New], and it is completely voluntary. The time required to complete this information collection is estimated to average 30 minutes for the parent, teacher, and school administrator questionnaires, including the time to review instructions and complete and review the information collection. The student questionnaire will be no more than 35 minutes in length, and the math test will take about 40 minutes. If you have any comments concerning the accuracy of the time estimate or suggestions for improving the interview, please write to: U.S. Department of Education, Washington, DC 20202-4651. If you have comments or concerns regarding the status of your individual interview, write directly to: Dr. Laura LoGerfo, National Center for Education Statistics, 1990 K Street NW, Washington, DC 20006.”

13. Estimates of Other Total Annual Cost Burden

There are no capital, startup, or operating costs to respondents for participation in the project. No equipment, printing, or postage charges will be incurred.

14. Annualized Cost to the Federal Government

Estimated costs to the federal government for HSLS:09 are shown in Table 8. The estimated costs to the government for data collection for the field test and full-scale studies are presented separately. Included in the contract estimates are all staff time, reproduction, postage, and telephone costs associated with the management, data collection, analysis, and reporting for which clearance is requested.

Costs to NCES | Amount |
Total HSLS:09 base-year costs | $15,205,684 |
Salaries and expenses | $719,900 |
Contract costs | $14,485,784 |

Field test (2008) | $3,035,673 |
Salaries and expenses | $215,648 |
Contract costs | $2,820,025 |

Full-scale survey (2009) | $12,170,011 |
Salaries and expenses | $504,252 |
Contract costs | $11,665,759 |

NOTE: All costs quoted are exclusive of incentive fee. Field test costs represent Tasks 2 and 5 of the HSLS:09 contract; base-year main study costs include Tasks 1, 3, 4, and 6.
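The figures in the cost table can be cross-checked arithmetically. Within each block, the largest amount equals the sum of the other two, and the field test and full-scale amounts add up to the corresponding base-year amounts. The following is a small verification sketch; the variable and function names are illustrative.

```python
# The three dollar amounts that appear in each block of the cost table.
BLOCKS = {
    "HSLS:09 base year": (14_485_784, 719_900, 15_205_684),
    "Field test (2008)": (2_820_025, 215_648, 3_035_673),
    "Full-scale survey (2009)": (11_665_759, 504_252, 12_170_011),
}


def is_consistent(amounts):
    """True if the largest amount equals the sum of the remaining amounts."""
    largest = max(amounts)
    return sum(amounts) - largest == largest


for name, amounts in BLOCKS.items():
    status = "consistent" if is_consistent(amounts) else "INCONSISTENT"
    print(f"{name:<26} {status}")

# Field test plus full-scale amounts, matched by rank, should reproduce
# the base-year amounts.
field_test = sorted(BLOCKS["Field test (2008)"])
full_scale = sorted(BLOCKS["Full-scale survey (2009)"])
combined = [a + b for a, b in zip(field_test, full_scale)]
print("Phases add up to base year:", combined == sorted(BLOCKS["HSLS:09 base year"]))
```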

15. Explanation for Program Changes or Adjustments

This is a new collection. This submission requests data collection approval for the field test and base year of HSLS:09. Thus, there is no precedent for the study in terms of a previously approved collection for which approval has expired.

16. Plans for Tabulation and Publication and Project Time Schedule

The HSLS:09 field test will be used to test and improve the instrumentation and associated procedures. Publications and other significant provisions of information relevant to the data collection effort will be a part of the reports resulting from the field test and main study, and both public use (Data Analysis System) and restricted use (electronic codebook microdata) files will be important products resulting from the full-scale survey. The HSLS:09 data will be used by public and private organizations to produce analyses and reports covering a wide range of topics.

Data files will be made available to a variety of organizations and researchers, including offices and programs within the U.S. Department of Education, the Congressional Budget Office, the Department of Health and Human Services, the Department of Labor, the Department of Defense, the National Science Foundation, the American Council on Education, and a number of other education policy and research agencies and organizations. The HSLS:09 contract requires the following reports, publications, or other public information releases:

detailed methodological reports (one each for the field test and full-scale survey) describing all aspects of the data collection effort;

complete full-scale study data files and documentation for research data users;

a Data Analysis System (DAS) for public access to HSLS:09 results;

an electronic codebook (ECB) for restricted access to HSLS:09 microdata; and

a “first look” summary of significant descriptive findings for dissemination to a broad audience (the analysis deliverable will include technical appendices).

Final deliverables are scheduled for completion by mid-2010.

The operational schedule for the HSLS:09 field test and full-scale study is presented in Table 9.

17. Reason(s) Display of OMB Expiration Date Is Inappropriate

The expiration date for OMB approval of the information collection will be displayed on data collection instruments and materials. No special exception to this requirement is requested.

18. Exceptions to Certification for Paperwork Reduction Act Submissions

There are no exceptions to the certification statement identified in the Certification for Paperwork Reduction Act Submissions of OMB Form 83-I.

Activity | Start | End |
Field test | | |
School sampling | 2/2008 | 2/2008 |
Sample recruitment | 2/2008 | 11/2008 |
List receipt, student sampling | 8/2008 | 11/2008 |
Student/staff data collection | 9/2008 | 12/2008 |
Parent data collection | 10/2008 | 12/2008 |
Nonresponse follow-up | 10/2008 | 12/2008 |

Base year | | |
School sampling | 2/2008 | 2/2008 |
Sample recruitment | 2/2008 | 11/2009 |
List receipt, student sampling | 8/2009 | 11/2009 |
Student/staff data collection | 9/2009 | 11/2009 |
Parent data collection | 10/2009 | 2/2010 |
Nonresponse follow-up | 10/2009 | 3/2010 |

B. Collection of Information Employing Statistical Methods

This submission requests clearance for sampling and school recruitment activities for the High School Longitudinal Study of 2009 (HSLS:09) field test and full-scale study to be completed in 2008 and 2009, respectively. This section provides a description of the target universe for this study, followed by an overview of the sampling and statistical methodologies proposed for the field test and the full-scale study. We will also address suggested methods for maximizing response rates and for tests of procedures and methods, and we will introduce the statisticians and other technical staff responsible for design and administration of the study.

1. Target Universe and Sampling Frames

The target population for the HSLS:09 full-scale study consists of 9th grade students in public and private schools that include 9th and 11th grades; their parents; and corresponding math and science teachers, school administrators, and high school counselors. The needed respondent samples will be selected from all public and private schools with 9th and 11th grades in the 50 states and the District of Columbia.2 Excluded from the target universe will be specialty schools such as Bureau of Indian Affairs schools, special education schools for the handicapped, area vocational schools that do not enroll students directly, and schools for the dependents of U.S. personnel overseas.

The primary sampling units (PSU) of schools for this study will be selected from two databases of the U.S. Department of Education. The Common Core of Data (CCD) will be used for selection of public schools, while private schools will be selected from the Private School Survey (PSS) universe files. To eliminate overlap between the field test and full-scale study samples, the full-scale study sample of schools will be selected prior to the field test sample. However, the early selected full-scale study sample will be “refreshed” by a small supplemental sample of schools that will become eligible in the time between the administration of the field test and of the full-scale study. The secondary sampling units (SSU) of students will be selected from student rosters that will be secured from the sample schools. The PSU and SSU sampling procedures for this study are detailed in the next section.
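The two-stage structure described above, schools first and then students from each sampled school's roster, can be illustrated with a toy sketch. Everything specific in it is an assumption made for illustration: the frame and rosters are invented, schools are drawn by unstratified systematic PPS selection on 9th grade enrollment, and students are drawn by simple random sampling. It is not the HSLS:09 selection procedure, which is detailed in the next section.

```python
import random
from bisect import bisect_right
from itertools import accumulate


def pps_systematic(sizes, n, rng):
    """Pick n unit indices with probability proportional to size.

    Uses systematic selection along the cumulated size measure. A unit
    larger than the sampling interval can be hit more than once; real
    designs usually treat such units separately as certainty selections.
    """
    total = sum(sizes)
    interval = total / n
    start = rng.random() * interval  # random start in [0, interval)
    cumulative = list(accumulate(sizes))
    picks = []
    for k in range(n):
        point = start + k * interval
        # First unit whose cumulated size exceeds the selection point.
        index = bisect_right(cumulative, point)
        picks.append(min(index, len(sizes) - 1))  # guard against float round-off
    return picks


def sample_students(roster, k, rng):
    """Draw up to k students from a roster by simple random sampling."""
    return rng.sample(roster, min(k, len(roster)))


# Hypothetical toy frame: (school ID, 9th grade enrollment).
frame = [("S01", 120), ("S02", 300), ("S03", 80), ("S04", 250), ("S05", 150)]
rng = random.Random(2009)  # fixed seed so the sketch is reproducible

school_picks = pps_systematic([size for _, size in frame], n=2, rng=rng)
for i in school_picks:
    school_id, enrollment = frame[i]
    # Stand-in for the student roster that a sampled school would supply.
    roster = [f"{school_id}-{j:03d}" for j in range(1, enrollment + 1)]
    print(school_id, sample_students(roster, k=5, rng=rng))
```

Under this scheme a school's chance of selection is proportional to its enrollment, which is why larger schools appear more often across repeated draws.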

2. Statistical Procedures for Collecting Information

The following section describes sampling procedures for the field test and full-scale study for which clearance is requested. First discussed is the selection plan for the full-scale study sample of schools, followed by the selection plan for the field test sample, to reflect the sequence that will be observed for PSU selections. Next, selection procedures for the student samples will be presented for the field test and full-scale study that will be conducted in 2008 and 2009, respectively. This section also includes descriptions of the procedures that will be followed after data collection, including survey weight adjustments, to measure and reduce bias due to nonresponse.

a. School Frames and Samples

RTI plans to use NCES’ latest Common Core of Data (CCD:2005–2006) as the public school sampling frame and Private School Survey (PSS:2005–2006) as the private school sampling frame. Given that these two sample sources provide comprehensive listings of schools, and that CCD and PSS data files have been used as school frames for a number of other school-based surveys, it is particularly advantageous to use these files in HSLS:09 for comparability and standardization across NCES surveys.

As mentioned earlier, the survey population for the full-scale study of HSLS:09 consists of all 9th graders in the 50 states and District of Columbia enrolled in

regular public schools, including state department of education schools, that include 9th and 11th grades; and

Catholic and other private schools that have 9th and 11th grades.

Excluded from this study will be the following:

schools with no 9th or 11th grade;

ungraded schools;

Bureau of Indian Affairs schools;

special education schools;

area vocational schools that do not enroll students directly;

Department of Defense schools; and

closed public schools.
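The exclusion rules listed above amount to an eligibility filter applied to the school frame. A minimal sketch follows, assuming a hypothetical record layout; the field names and type codes are invented for illustration and are not actual CCD or PSS variables.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class School:
    """Hypothetical frame record; not an actual CCD or PSS layout."""
    school_id: str
    grades: frozenset              # grades offered, e.g. frozenset({9, 10, 11, 12})
    ungraded: bool = False
    school_type: str = "regular"   # hypothetical codes, see EXCLUDED_TYPES
    enrolls_directly: bool = True
    is_open: bool = True


# Types excluded outright: Bureau of Indian Affairs, special education,
# and Department of Defense schools.
EXCLUDED_TYPES = {"bia", "special_education", "dod"}


def is_eligible(school: School) -> bool:
    """Apply the exclusion rules from the list above to one frame record."""
    if school.ungraded:
        return False
    if 9 not in school.grades or 11 not in school.grades:
        return False
    if school.school_type in EXCLUDED_TYPES:
        return False
    if school.school_type == "area_vocational" and not school.enrolls_directly:
        return False
    # Simplification: any closed school is dropped, although the list
    # names only closed public schools.
    if not school.is_open:
        return False
    return True


frame = [
    School("A", frozenset({9, 10, 11, 12})),
    School("B", frozenset({9, 10})),  # no 11th grade
    School("C", frozenset({9, 10, 11, 12}), school_type="bia"),
    School("D", frozenset({9, 10, 11, 12}), is_open=False),
]
print([s.school_id for s in frame if is_eligible(s)])
```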