PEELS Revised package for part A Wave 5 OMB Justification102208


Pre-Elementary Education Longitudinal Study (PEELS) (KI)

OMB: 1850-0809

October 2, 2008

Celia Rosenquist

National Center for Special Education Research

Institute of Education Sciences

555 New Jersey Avenue, NW

Washington, DC 20208

RE: Contract # ED-04-CO-0059

Dear Celia:

Please find attached a revised copy of the Renewal Package for Forms Clearance and OMB Justification for the above-mentioned contract. If you have any questions about the deliverable, please feel free to call me at 757-565-4048 or 301-251-4277.

Sincerely,


Elaine Carlson

Project Director

A. JUSTIFICATION

1. Purpose and Authority

In Establishing a Research Agenda for Reauthorization of IDEA (MSPD Evaluation Support Center, 1995) the Office of Special Education Programs (OSEP) laid out a plan for longitudinal research to inform the reauthorization of the Individuals with Disabilities Education Act (IDEA). The plan called for establishing several cohorts of children and youth with disabilities that, if followed for a long enough period of time, would create a picture of the experiences and achievements of children and youth with disabilities, potentially from birth to young adulthood.

In 1996, OSEP commissioned a longitudinal study of infants and toddlers with disabilities who were receiving early intervention to answer key questions about the children and families served under Part C of IDEA, the services provided, and their achievements. In spring of 2000, OSEP commissioned the Special Education Elementary Longitudinal Study (SEELS) to provide information on the characteristics, experiences, and achievements of 6- to 12-year-olds receiving special education as they transition from elementary to middle school and from middle to high school. At the beginning of 2001, OSEP commissioned the second National Longitudinal Transition Study (NLTS2) to provide data on youth as they move through middle school and high school and transition to the adult world. The only glaring gap on this longitudinal spectrum was the early childhood years: children ages 3 through 5, no longer toddlers but not yet considered primary school age. This stage is especially complex for children receiving special education services, not just developmentally, but also in regard to the formal and informal structure of the education and social service delivery systems. PEELS was therefore commissioned to fill this gap. In 2005, authority for PEELS was moved from OSEP to the National Center for Special Education Research (NCSER) within the new Institute of Education Sciences.

2. Use of Information

The U.S. Department of Education (ED) has a variety of ongoing needs for information about the implementation of special education for children ages 3 through 5 with disabilities across the nation and the performance of children receiving those services. These include:

Data that serve as indicators of Government Performance and Results Act (GPRA) objectives. In particular, PEELS addresses IDEA, Part B, Indicator 1.2, which states, “The percentage of preschool children receiving special education and related services who have readiness skills when they reach kindergarten will increase.” The primary data source on children’s early literacy and early numeric skills is the PEELS direct assessment. Direct assessments of (pre-)reading and (early) mathematics skills are conducted in each wave of PEELS. The final preschool assessment could be used to gauge academic readiness for kindergarten.

Information requested by Congress in regular reauthorizations of IDEA.

Information to respond to the many questions that are raised by policymakers, advocates, practitioners, parents, and researchers about children with disabilities, their families, and the programs that serve them.

Data collected from PEELS will supply much-needed information for all of these purposes. Specifically, the following groups of individuals are likely to benefit from the collection of the information:

Federal policymakers, who make decisions about special education and related services for young children with disabilities and the critical interfaces among these programs and other federally funded services and systems that affect children with disabilities and their families.

State early childhood special education policymakers (e.g., 619 coordinators) who make decisions regarding state implementation of special education, state funding levels for special education, and other issues about programs and services for children with disabilities.

LEA and school administrators, who are responsible for implementing programs and services at the local level.

Practitioners and administrators in early childhood special education and related service systems, who will better understand the participation of young children with disabilities in those systems and the contribution of services to achievement.

Parents of children with disabilities who can use information on special education and related services and achievement to increase their own capacity to advocate effectively for their children.

Higher education faculty who conduct preservice training of special education teachers and related service personnel, who can use information on service and program characteristics that facilitate positive outcomes for children to improve the capabilities of future educators and practitioners.

Researchers who have access to this rich data source to conduct a variety of secondary analyses, develop comparable local or statewide follow-up studies, review the technical methods, or use the data for publication.

3. Method of Collection

This is the final data collection for the PEELS study. Wave 5 includes a child status report, a postcard to parents requesting updated school information, and a direct child assessment, which will allow an examination of reading and math skills when children are in mid to late elementary school. The child status report is sent to participating districts to obtain information about the enrollment status of participating children. The postcard is sent to families who move out of their original PEELS district. Burden estimates are reported in Section 12.

4. Avoidance of Duplication

No national data previously existed on the characteristics, experiences, or outcomes of children ages 3 through 5 receiving early childhood special education services—data that are now available through PEELS. The only other national data are state-reported counts of the number of children served at a point in time each year, described by their age, and the settings where the special education and related services are received. None of the data collection instruments used previously in PEELS or proposed for Wave 5 duplicate any existing national data that describe preschool special education programs, the children receiving services in these programs, or the children’s performance on tests of academic knowledge and skills. Although some states and local programs may collect information on samples of their own schools or children, state and local data are too diverse in content and quality to be comparable and are an inappropriate base from which to extrapolate to the nation as a whole.

5. Small Business Impact

No small businesses will be involved as respondents in this data collection. Therefore, there will be no small business impacts.

6. Consequences of Not Collecting Information

In the absence of the data collection for PEELS, federal policy regarding early childhood special education and related services will continue to be made without a solid base of information on such fundamental questions as the nature of the children served, the instructional programs and services they receive, and the achievements of children receiving early childhood special education and related services. The final wave of data collection is particularly important for 1) identifying predictors of literacy and math growth and 2) describing how children who received preschool special education services perform in elementary school.

The timing and frequency of data collection for PEELS are rooted in the nature of both the PEELS population and the early childhood programs the children attend. Because preschool is not governed by traditional American compulsory education, the early childhood programs that the children in PEELS attend differ dramatically from each other and from the more standard formal school system that characterizes elementary and secondary schools. As a result, it was necessary to conduct data collections immediately and repeatedly to capture these vast differences and rapid changes. The schedule of data collection is considered the minimum number and maximum spacing needed to obtain accurate information on children’s programs and outcomes.

7. Special Circumstances

The proposed data collection is consistent with 5 CFR 1320.6 and therefore involves no special circumstances.

8. Consultation Outside the Agency

Study design work was conducted by SRI International, and Westat was contracted to conduct Waves 1-5 data collection, data cleaning, analysis, and reporting. The design phase involved extensive input from experts in the content areas and methods used by PEELS. First, a stakeholder advisory panel was convened that included representatives of many of the audiences that will be keenly interested in PEELS. The panel helped develop the conceptual framework and define and prioritize the research questions. The group met once in person for a day-long meeting and engaged in a priority-setting exercise for the research questions through an exchange of materials and a voting process.

Second, a technical work group (TWG) of researchers experienced in child-based and longitudinal studies, early childhood education, and special education advised on multiple aspects of the design, including the child sampling approach and data collection procedures. TWG members also received copies of all the data collection instruments. The TWG held six phone conferences, and members reviewed all materials produced in the design process. Each member supplied PEELS staff with written comments and notes, and provided verbal feedback through telephone conferences.

In addition, four nationally recognized experts in early childhood special education served as consultants to the PEELS process. They provided advice in all areas, with particular attention to the data collection instruments and administration timeframe.

Finally, experienced researchers from SRI International and RTI guided the development and completion of the PEELS design. Senior Westat staff led the Waves 1-4 data collection, analysis, and reporting effort. Members of the TWG, advisory panel, the four consultants, and senior Westat staff are listed in exhibit 1.

Exhibit 1. TWG, Stakeholder Panel, Consultants, and Contractor Staff Members

| Name | Affiliation |
| --- | --- |
| Technical Work Group | |
| Lizanne DeStefano | University of Illinois, Urbana-Champaign |
| Marsha Brauen | Westat |
| Elvira Hausken | National Center for Education Statistics |
| Mary McEvoy | CEED-University of Minnesota |
| Mabel Rice | University of Kansas |
| Carol Trivette | Orelena Hawkes, Puckett Institute |
| Mark Wolery | Frank Porter Graham Child Development Center |
| Consultants | |
| Donald Bailey | University of North Carolina at Chapel Hill |
| Michelle deFosset | NECTAS |
| Robin McWilliam | Formerly of the University of North Carolina at Chapel Hill |
| Rune Simeonsson | University of North Carolina at Chapel Hill |
| Stakeholder Group | |
| Catherine Burzio | Parent Representative |
| Jo Ann Edelin | Alexandria City Public Schools, Office of Student Services |
| Armineh Hacobian | Parent Representative |
| Debra Jervay-Pendergrass | Kennedy Institute-Stories Project |
| Luzanne Pierce | National Association of State Directors of Special Education |
| Elizabeth Schaefer | MA 619 Coordinator |
| Lou McIntosh | Merrywing Corporation |
| Merle McPherson | Maternal and Child Health Program |
| Jim O'Brien | Administration for Children, Youth and Families |
| Mary Simmons | Simpson County, RTC |
| Gail Solit | Gallaudet University Child Development Center |
| Sharon Walsh | Advisory Panel |
| Pete Weilenmann | Advisory Panel |
| Terris Willis | Advisory Panel |
| Samara Goodman | U.S. Department of Education, Office of Special Education Programs |
| Nancy Treusch | U.S. Department of Education/OSERS, Office of Special Education |
| Design Contractor Staff | |
| SRI International and Research Triangle Institute | |
| Data Collection, Analysis and Reporting Staff at Westat | |
| Elaine Carlson, Project Director | |
| Bill Frey and Ann Webber, Assessment Directors | |
| Ron Hirschhorn and Ed Dolbow, Senior Systems Designers | |
| Linda LeBlanc, Data Collection Manager | |
| Hyunshik Lee, Senior Statistician | |

9. Reimbursement of Respondents

Research suggests that the use of participant incentives improves response rates, reduces bias, and reduces costs. Incentives enhance the quality of the data by ensuring that nonresponse is kept to a minimum. Recruiting reluctant participants and converting refusals are time-consuming and expensive endeavors, and can introduce bias into survey results.

In previous waves, PEELS provided incentives. The following incentives for the Wave 5 direct child assessment are proposed, which are consistent with those used in earlier waves: a $1 toy for children at the time of testing and a $15 gift card for families that transport their children for testing or allow testing to be done in their home. The toy and gift card are both given at the time of assessment. The child status report does not include any respondent incentives.

10. Assurances of Confidentiality

In Waves 1-4, ED executed a plan for ensuring that all data collected as part of this study remained confidential. ED intends to follow this plan in Wave 5 of the study. ED, in the conduct of the study, will follow procedures for ensuring and maintaining participant privacy, consistent with the Education Sciences Reform Act of 2002. Title I, Part E, Section 183 of this Act requires “All collection, maintenance, use, and wide dissemination of data by the Institute” to “conform with the requirements of section 552a of title 5, United States Code, the confidentiality standards of subsection (c) of this section, and sections 444 and 445 of the General Education Provisions Act (20 U.S.C. 1232g, 1232h).” These citations refer to the Privacy Act, the Family Educational Rights and Privacy Act, and the Protection of Pupil Rights Amendment. Respondents were assured that confidentiality was maintained, except as required by law. Specific steps to guarantee confidentiality included the following:

Identifying information about the families and respondents (e.g., respondent name, address, and telephone number) was not entered into the analysis data file, but was kept separate from other data and was password protected. A unique identification number for each participating child and school district was used for building raw data and analysis files.

In emails, participating children were referred to by first name, last initial, and unique identification number. School districts were referred to by PEELS identification number. Files containing more information were password protected.

A fax machine used to send or receive documents containing confidential information was kept in a locked field room, only accessible to study team members. When sending faxes, study staff called ahead to make sure the authorized recipient was waiting for the fax.

Confidential materials were printed on a printer located in a limited access field room. When printing documents containing confidential information from shared network printers, authorized study staff were present and retrieved the documents as soon as printing was complete.

In public reports, findings are presented in aggregate by type of respondent (e.g., parents’ perceptions of service delivery) or for subgroups of interest (e.g., social functioning of children who begin receiving early childhood special education at age 3, compared to age 5). No reports identify individual respondents, local programs, or schools.

Access to the child sample files is limited to authorized study staff only; no others are authorized such access.

All members of the study team were briefed regarding confidentiality of the data. Each person involved in the study signed and had notarized an affidavit of nondisclosure attesting to his/her understanding of the significance of the confidentiality requirement (exhibit 2).

A control system was in place, beginning at sample selection, to monitor the status and whereabouts of all data collection instruments during transfer, processing, coding, and data entry. This included sign in/sign out sheets and the hand-carrying of documents by authorized project staff only.

All data were stored in secure areas accessible only to authorized staff members. Computer-generated output containing identifiable information was maintained under the same conditions.

When any hard copies containing confidential information were no longer needed, they were shredded.

Micro-level data were released through restricted-use data sets and only after the data had been perturbed in accordance with Disclosure Review Board (DRB) instructions.

Exhibit 2. Affidavit of Nondisclosure

(Job Title)

(Date of Assignment to PEELS Project)

Westat

(Organizations, State or local agency or instrumentality)

PEELS

(Data Base or File Containing Individually Identifiable Information)

1650 Research Blvd.

Rockville, MD 20850

(Address)

I, ____________________, do solemnly swear (or affirm) that when given access to the subject data base or file, I will not

use or reveal any individually identifiable information furnished, acquired, retrieved, or assembled by me or others, under the provisions of Section 406 of the General Education Provisions Act (20 U.S.C. 1221e-1) for any purpose other than statistical purposes specified in the PEELS surveys, project or contract;

make any disclosure or publication whereby a sample unit or survey respondent could be identified or the data furnished by or related to any particular person under this section can be identified; or

permit anyone other than the individuals authorized by the Department of Education to examine the individual reports.

(Signature)

(The penalty for unlawful disclosure is a fine of not more than $250,000 (under 18 U.S.C. 3559 and 3571) or imprisonment for not more than 5 years, or both. The word “swear” should be stricken out wherever it appears when a person elects to affirm the affidavit rather than to swear to it.)

State of:__________________________

County of:_________________________

Sworn and subscribed to me before a Notary Public in and for the aforementioned County and State this ________ day of ___________2003.

(Notary Public)

My Commission Expires:________________

11. Sensitive Items

No questions of a sensitive nature are included in the direct child assessment.

12. Estimates of Burden

Estimates of respondent burden for each instrument are provided in exhibit 3. The total burden for these instruments is estimated to be 105,510 minutes or 1,759 hours for Wave 5. These estimates are based on several factors:

the length of the instrument,

number of target respondents, and

average time for completion in Wave 4 data collection.

Exhibit 3. Estimates of Wave 5 Respondent Burden

| Instrument | Respondent | Actual number completed in Wave 1 (a) | Actual number completed in Wave 2 (b) | Actual number completed in Wave 3 (c) | Actual number completed in Wave 4 (d) | Anticipated number completed in Wave 5 (e) | Minutes (f) | Wave 5 burden in minutes (e x f) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Child Status Report | Site Coordinators | ---- | 205 | 223 | 223 | 223 | 30 | 6,690 |
| Updated School Information Postcard | Parents | ---- | 87 | 154 | 220 | 359 | 5 | 1,795 |
| Wave 5 Direct Assessment | Participating children | 2,437 | 2,712 | 2,569 | 2,506 | 2,381 | 40 | 95,240 |
| Woodcock Johnson Letter-Word | | 2,434 | 2,711 | 2,569 | 2,506 | 2,381 | 5 | 11,905 |
| Woodcock Johnson Applied Problems | | 2,437 | 2,711 | 2,569 | 2,504 | 2,381 | 5 | 11,905 |
| Peabody Picture Vocabulary Test III-R (PPVT III-R) | | 2,352 | 2,669 | 2,569 | 2,489 | 2,381 | 12 | 28,572 |
| Woodcock Johnson Passage Comprehension | | | | | 2,504 | 2,381 | 6 | 14,286 |
| Woodcock Johnson Calculation | | | | | 2,423 | 2,381 | 7 | 16,667 |
| DIBELS Oral Reading Fluency | | | | | 2,091 | 2,381 | 5 | 11,905 |
| PEELS Alternate Assessment | Teachers | 331 | 228 | 165 | 125 | 119 | 15 | 1,785 |
| TOTAL BURDEN | | | | | | | | 105,510 |
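The arithmetic behind exhibit 3 can be checked with a short script. The following is a minimal sketch, not part of the study's procedures: the variable and function names are illustrative assumptions, and the figures are copied from columns (e) and (f) of the exhibit. It treats the six subtest rows as components of the Wave 5 Direct Assessment row, so only the four top-level rows enter the total.

```python
# Top-level rows of exhibit 3: (instrument, anticipated Wave 5 completions (e),
# minutes per response (f)). Names are illustrative; figures are from the exhibit.
TOP_LEVEL_ROWS = [
    ("Child Status Report", 223, 30),
    ("Updated School Information Postcard", 359, 5),
    ("Wave 5 Direct Assessment", 2381, 40),
    ("PEELS Alternate Assessment", 119, 15),
]

# Minutes per subtest within the direct assessment (column (f) of the subtest rows).
SUBTEST_MINUTES = {
    "Woodcock Johnson Letter-Word": 5,
    "Woodcock Johnson Applied Problems": 5,
    "Peabody Picture Vocabulary Test III-R": 12,
    "Woodcock Johnson Passage Comprehension": 6,
    "Woodcock Johnson Calculation": 7,
    "DIBELS Oral Reading Fluency": 5,
}


def burden_by_instrument(rows):
    """Return {instrument: e x f} in minutes for each top-level row."""
    return {name: completions * minutes for name, completions, minutes in rows}


def minutes_to_hours(total_minutes):
    """Convert minutes to whole hours, rounding halves up (integer arithmetic)."""
    return (total_minutes + 30) // 60


burden = burden_by_instrument(TOP_LEVEL_ROWS)
total_minutes = sum(burden.values())
total_hours = minutes_to_hours(total_minutes)

# The subtests should account for the full direct assessment time.
subtest_total = sum(SUBTEST_MINUTES.values())

print(burden)
print(total_minutes, total_hours, subtest_total)
```

Rounding halves up is an assumption made here so that 1,758.5 hours is reported as 1,759; Python's built-in `round` would round that value to the nearest even integer instead.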

13. Estimated Annual Cost Burden to Respondents

Respondent costs result from the investment of time in completing questionnaires (e.g., school staff completing mail questionnaires, families responding to telephone interviews). Estimates of response time for each data collection instrument are presented in exhibit 3 in response to item 12 above. No dollar costs have been associated with the time estimates because salaries of school personnel vary widely.

14. Estimated Annual Cost Burden to Federal Government

The total cost for Waves 2-4 was $11,589,000. This included costs for all aspects of data collection, data cleaning, coding, and processing; descriptive, explanatory, and longitudinal analyses; preparation of various project reports; and general project management and coordination with the government project officer. NCSER estimates that costs for Wave 5 will be roughly $2,803,000.

15. Program Changes in Burden/Cost Estimates

The previous clearance covered Waves 2-4. We are submitting this package for Wave 5.

16. Plans/Schedules for Tabulation and Publication

Westat will generate descriptive statistics that summarize and describe the data from the Wave 5 assessment. Descriptive statistics (means and standard errors) will be used to describe the data in a series of data tables. This aspect of the analysis will closely mirror analyses conducted in Waves 1-4 to help identify changes over time.

Each of the Waves 1-5 data tables will be accompanied by a corresponding table with standard error estimates. All the data in the descriptive tables will be weighted to represent cross-sectional national estimates for each of Waves 1, 2, 3, 4, and 5. Westat will test for the significance of changes over time (between the first and last relevant wave of data collection) within subgroups defined by age cohort, race/ethnicity, etc.
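As an illustration of the kind of weighted estimate described above, the sketch below computes a weighted mean and a delete-one-cluster jackknife standard error. It is a sketch under stated assumptions only: this document does not specify the variance estimation method used for PEELS, and the function names, the use of the sampled district as the cluster, and the jackknife itself are assumptions made for illustration.

```python
def weighted_mean(values, weights):
    """Weighted mean: sum(w * y) / sum(w)."""
    total_weight = sum(weights)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(v * w for v, w in zip(values, weights)) / total_weight


def jackknife_se(values, weights, cluster_ids):
    """Delete-one-cluster jackknife standard error of the weighted mean.

    Each cluster (assumed here to be a sampled district) is dropped in turn,
    the weighted mean is recomputed, and the squared deviations from the
    full-sample estimate are scaled by (n - 1) / n, where n is the number
    of clusters.
    """
    clusters = sorted(set(cluster_ids))
    n = len(clusters)
    if n < 2:
        raise ValueError("at least two clusters are required")
    full_estimate = weighted_mean(values, weights)
    squared_deviations = 0.0
    for dropped in clusters:
        kept = [(v, w) for v, w, c in zip(values, weights, cluster_ids) if c != dropped]
        replicate = weighted_mean([v for v, _ in kept], [w for _, w in kept])
        squared_deviations += (replicate - full_estimate) ** 2
    return ((n - 1) / n * squared_deviations) ** 0.5


# Toy data (not PEELS data): four scores in two clusters.
scores = [10, 20, 30, 40]
wts = [1, 1, 2, 2]
districts = ["A", "A", "B", "B"]
estimate = weighted_mean(scores, wts)
standard_error = jackknife_se(scores, wts, districts)
print(estimate, standard_error)
```

Because the weighted mean is a ratio of weighted totals, rescaling the remaining weights after a cluster is dropped would not change each replicate estimate, so the sketch omits that step.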

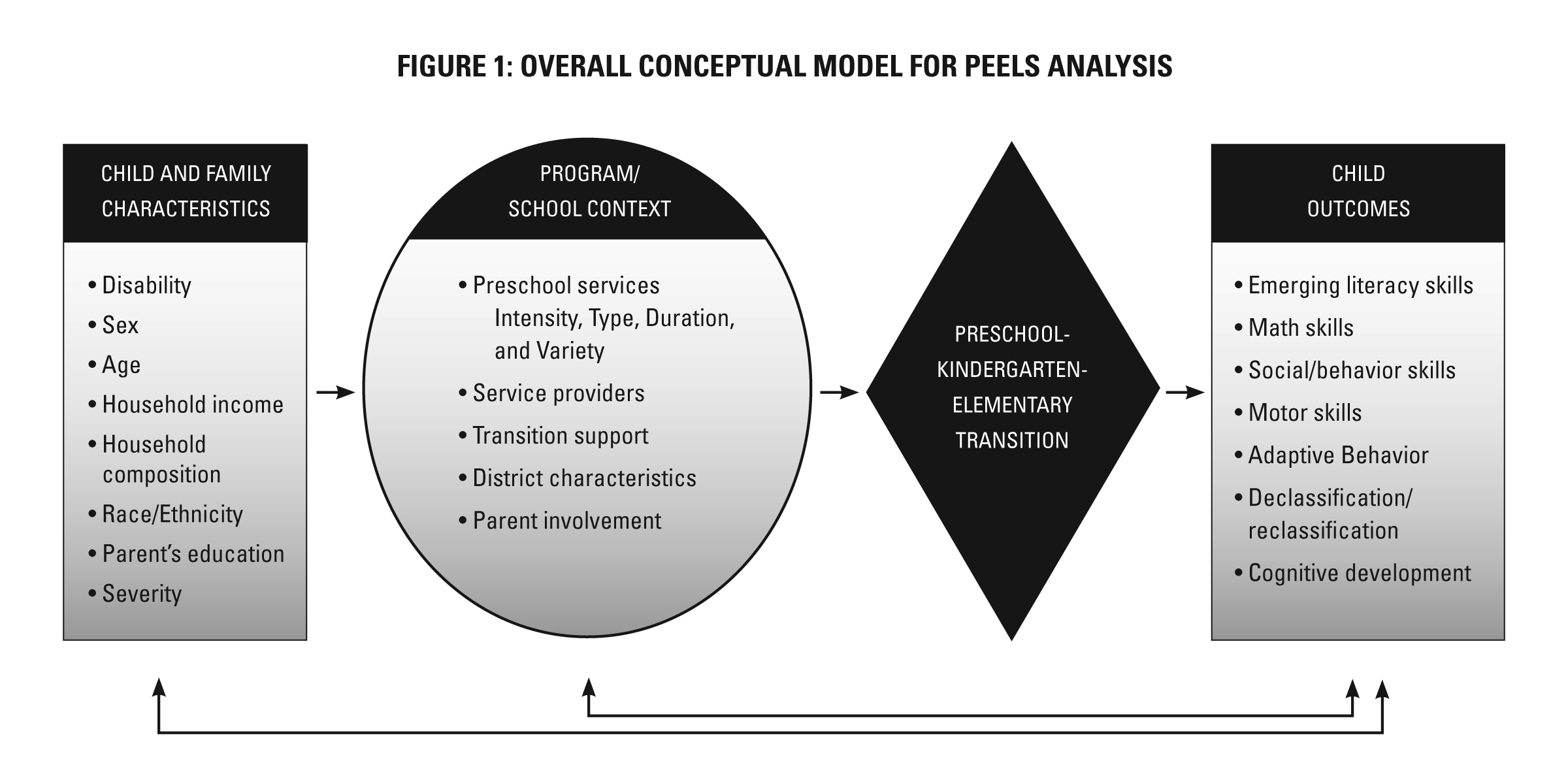

As more data become available, our analysis tasks have increasingly focused on model-building activities, such as achievement growth, and we expect that will continue to be the case in Wave 5. Figure 1 provides a general model that guides the PEELS analyses.

As analysts generate and report descriptive statistics for PEELS, we will make every effort to contextualize those statistics by reporting results of similar analyses from extant data sources. This will help analysts and readers alike to interpret results. A number of ongoing studies share overlapping populations and/or assessment instruments with PEELS, including the Early Childhood Longitudinal Study, Kindergarten Cohort (ECLS-K); Head Start Impact Study; Head Start National Reporting System; Classroom Literacy Interventions and Outcomes; National Early Intervention Longitudinal Study; and Special Education Elementary Longitudinal Study.

Reporting Mechanisms

A variety of mechanisms are being used to make the PEELS data available to the public. In 2006, Westat created a restricted-use data set that contained final Wave 1 and Wave 2 data. Wave 3 was added in 2007, and Wave 4 will be released in 2008. A similar data set will be created following Wave 5. In addition, in 2008, NCSER released a web-based table production system for PEELS. Westat will update the data analysis system (DAS) with data as they become available. The DAS will allow the public to access most of the PEELS data while denying access to micro-level data.

A number of PEELS reports will be prepared under contract with Westat. These include a wave overview report and thematic reports suitable for publication in refereed journals. The PEELS reporting agenda also includes some short reports, including 2 two-pagers and an annual newsletter (see exhibit 4).

Exhibit 4. Schedule of Wave 5 reporting activities

| Task | Estimated completion date |
| --- | --- |
| Draft Wave 5 methods report | 9/15/09 |
| Final Wave 5 methods report | 11/1/09 |
| Rough draft of assessment data | 12/15/09 |
| Final report of assessment data | 1/15/10 |
| Draft thematic reports (2) | 1/20/10 |
| Final thematic reports (2) | 3/20/10 |
| Draft Overview Report | 3/15/10 |
| Final Overview Report | 5/15/10 |

17. Expiration Date Omission Approval

Not applicable.

18. Exceptions

No exceptions are taken.

B. COLLECTION OF INFORMATION USING STATISTICAL METHODS

1. Sampling

PEELS was designed to include a nationally representative sample of 3- through 5-year-olds who were receiving special education services in 2003-04. The study used a two-stage sample design. The first-stage sample included local education agencies (LEAs) or school districts. The second-stage sample included preschoolers selected from lists of the names of eligible children provided by the participating LEAs.
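The two-stage structure can be illustrated with a short sketch. It assumes simple random sampling without replacement at both stages, which is an assumption for illustration only; the selection probabilities actually used in PEELS are not given in this document. The function and variable names are hypothetical. Under that assumption, a child's base weight is the inverse of the product of the two selection probabilities.

```python
import random


def two_stage_sample(children_by_lea, n_leas, n_children_per_lea, seed=0):
    """Select LEAs, then children within each selected LEA.

    Returns (lea, child, base_weight) tuples. Simple random sampling at
    both stages is assumed; base_weight = 1 / (p_lea * p_child).
    """
    rng = random.Random(seed)
    # Stage 1: sample LEAs from the frame (LEAs with empty rosters are excluded).
    lea_ids = sorted(lea for lea, roster in children_by_lea.items() if roster)
    sampled_leas = rng.sample(lea_ids, n_leas)
    p_lea = n_leas / len(lea_ids)

    sample = []
    for lea in sampled_leas:
        # Stage 2: sample children from the roster supplied by the LEA.
        roster = children_by_lea[lea]
        take = min(n_children_per_lea, len(roster))
        p_child = take / len(roster)
        for child in rng.sample(roster, take):
            sample.append((lea, child, 1.0 / (p_lea * p_child)))
    return sample


# Toy frame (not PEELS data): three LEAs with rosters of different sizes.
frame = {
    "LEA1": ["a", "b", "c", "d"],
    "LEA2": ["e", "f"],
    "LEA3": ["g", "h", "i", "j", "k", "l"],
}
selected = two_stage_sample(frame, n_leas=2, n_children_per_lea=2, seed=1)
for lea, child, weight in selected:
    print(lea, child, weight)
```

In this toy frame each selected LEA has probability 2/3, so a child drawn from a roster of four carries a weight of 3 and a child from a roster of six carries a weight of 4.5.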

This section describes the different samples used in PEELS and the ways they were selected. The sample selected following the original sample design is called the main sample. This sample was selected by a two-stage design, LEAs at the first stage and children at the second stage. To address nonresponse bias at the LEA level, a nonresponse bias study sample was selected from the nonparticipating LEAs to examine potential differences between the respondents and nonrespondents. The combined sample of the main and the nonresponse study sample is a three-phase sample, where the first phase is the same as the main sample, the second phase is a combined LEA sample comprising the main sample LEAs and the nonresponse study sample LEAs, and the third phase is the sample of children selected from the combined LEA sample. This combined sample was treated as one, as if it had been selected with the original sample design. It is called the amalgamated sample.

The child sample also includes two components. The first was selected using the initial design. The second component was a supplemental sample selected in Wave 2 (2004-05) from a state that was not covered in Wave 1. The amalgamated sample was augmented by adding the supplemental sample and is named the augmented sample. All these efforts were made to produce a truly nationally representative and efficient sample of preschoolers with disabilities. Further information on the sample design is described in Changes in the Characteristics, Services, and Performance of Preschoolers with Disabilities from 2003-04 to 2004-05: PEELS Wave 2 Overview Report <insert link once available>.

2. Procedures for Data Collection

In Waves 1-4, PEELS included several different data collection instruments and activities, including a series of computer-assisted parent interviews, direct one-on-one assessments of participating children, and several mail questionnaires. In Wave 5, only the Child Status Report and child assessment are planned, which are described below. Completion rates for all the Waves 1-4 data collections are provided in exhibit 5.

Exhibit 5. Total unweighted number of respondents and response rate for each PEELS instrument

| Instrument type | Wave 1 frequency | Wave 1 response rate (%) | Wave 2 frequency | Wave 2 response rate (%) | Wave 3 frequency | Wave 3 response rate (%) | Wave 4 frequency | Wave 4 response rate (%) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Parent interview | 2,802 | 96 | 2,893 | 93 | 2,719 | 88 | 2,488 | 80 |
| LEA | 207 | 84 | † | † | † | † | † | † |
| SEA questionnaire | 51 | 100 | † | † | † | † | † | † |
| Principal/program | 852 | 72 | 665 | 77 | 406 | 56 | † | † |
| Teacher mail | 2,287 | 79 | 2,591 | 84 | 2,514 | 81 | 2,502 | 81 |
| Early | 2,018 | 79 | 1,320 | 86 | 346 | 82 | † | † |
| Kindergarten | 269 | 73 | 957 | 79 | 992 | 81 | 419 | 79 |
| Elementary | † | † | 314 | 86 | 1,176 | 81 | 2,083 | 81 |
| Child assessment | 2,794 | 96 | 2,932 | 94 | 2,891 | 93 | 2,632 | 85 |
| English/ | 2,463 | 97 | 2,704 | 96 | 2,726 | 93 | 2,507 | 85 |
| Alternate | 331 | 93 | 228 | 79 | 165 | 93 | 125 | 84 |

Note: Wave 1 frequencies do not include cases in the supplemental sample for which data were imputed.

† Not applicable.

Child Assessment

The direct one-on-one assessment is designed to obtain information on the knowledge and skills of preschoolers with disabilities in a number of domains, including emerging literacy and early math proficiency. An alternate assessment is available for children who cannot meaningfully participate in the direct assessment. Five direct assessments are planned for PEELS, one each in 2003-04, 2004-05, 2005-06, 2006-07, and 2008-09.

The Wave 5 direct assessment in English is expected to average about 40 minutes and includes the following subtests:

Peabody Picture Vocabulary Test (PPVT) (Dunn and Dunn 1997);

Woodcock-Johnson III: Letter-Word Identification (Woodcock, McGrew, and Mather 2001);

Woodcock-Johnson III: Applied Problems (Woodcock, McGrew, and Mather 2001);

Woodcock-Johnson III: Calculation (Woodcock, McGrew, and Mather 2001);

Woodcock-Johnson III: Passage Comprehension (Woodcock, McGrew, and Mather 2001); and

Dynamic Indicators of Basic Early Literacy Skills (DIBELS) Oral Reading Fluency (Good and Kaminski 2002).

For children who cannot complete the direct assessment, the Adaptive Behavior Assessment System-II (ABAS-II) is used as an alternate assessment. The ABAS-II is a checklist of the child’s functional knowledge and skills and is completed by a teacher or other service provider. It assesses children’s functional performance in several areas: communication, community use, functional (pre) academics, school living, health and safety, leisure, self-care, self-direction, social, and work. It also can be used to produce composite scores in conceptual, social, and practical domains. The scaled scores for each of the skill areas are based on a mean of 10 and a standard deviation of 3.
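Because the skill-area scaled scores are reported on a scale with a mean of 10 and a standard deviation of 3, a scaled score can be re-expressed in standard deviation units. The sketch below shows only that conversion; the function name is an illustrative assumption, and it does not reproduce how the ABAS-II derives scaled scores from raw checklist responses.

```python
def scaled_score_to_z(scaled_score, mean=10.0, sd=3.0):
    """Express a scaled score as standard deviations from the scale mean."""
    if sd <= 0:
        raise ValueError("sd must be positive")
    return (scaled_score - mean) / sd


# A scaled score of 13 lies one standard deviation above the scale mean,
# and a scaled score of 4 lies two standard deviations below it.
print(scaled_score_to_z(13))
print(scaled_score_to_z(4))
```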

Child Status Report

The Child Status Report (CSR) was sent to district Site Coordinators prior to the start of data collection in Waves 2, 3, and 4. It will be administered again in Wave 5. The CSR asks for information on the current school location of all the children originally recruited from the district.

Through the CSR, project staff identified children who were no longer living in their sampled districts and initiated procedures for tracking them to their new districts. Parent interviews and mail questionnaires were attempted for all locatable children. Assessments were attempted for children who lived within 50 miles of a PEELS assessor or when a traveling assessor could easily test a child.

3. Maximizing Response Rates

There are two key aspects to maximizing the number of sample members for whom data are collected: minimizing the number of sample members lost through attrition, and completing data collection with the maximum number of sample members who are retained in the sample.

To minimize sample attrition over the years of data collection, aggressive tracking mechanisms have been used to maintain accurate and up-to-date contact information for sample members. Prior to each wave of data collection, the districts are asked to complete a Child Status Report to indicate whether the children in the district participating in PEELS are attending the same school or program. If children are not attending the same school or program, the districts will be asked to provide as much information as they have about where the children transferred.

4. Testing of Instrumentation

No new instruments are being used in Wave 5, so no testing will be required.

5. Individuals Consulted on Statistical Issues

Persons involved in statistical aspects of the design include staff of the government’s design contractors, SRI International, Research Triangle Institute and Westat. Those consulted at these organizations are listed below.

SRI:

Dr. Harold Javitz, Senior Statistician

Center for Health Sciences

Westat

Dr. Hyunshik Lee

Dr. Frank Jenkins

Ms. Annie Lo

In addition, all aspects of the design, sampling plan, and instrumentation were reviewed by the PEELS TWG and Consultants listed in Exhibit 1 of Section A, Justification.

REFERENCES

Dunn, L.M., and Dunn, L.M. (1997). Peabody Picture Vocabulary Test-Third Edition. Circle Pines, MN: American Guidance Services.

Good, R.H., and Kaminski, R.A. (2002). Dynamic Indicators of Basic Early Literacy Skills (6th ed.). Eugene, OR: Institute for the Development of Educational Achievement.

MSPD Evaluation Support Center. (1995). Establishing a Research Agenda for Reauthorization of IDEA. Research Triangle Park, NC: Research Triangle Institute and SRI International.

Woodcock, R.W., McGrew, K.S., and Mather, N. (2001). Woodcock-Johnson III Tests of Achievement. Itasca, IL: Riverside Publishing.

| File Type | application/msword |

| Author | Amy Shimshak |

| Last Modified By | #Administrator |

| File Modified | 2008-10-22 |

| File Created | 2008-10-22 |
