NPSAS_12_FT_Institution_Part B

National Postsecondary Student Aid Study

OMB: 1850-0666

B. Collection of Information Employing Statistical Methods

This submission requests clearance for the 2011-12 National Postsecondary Student Aid Study (NPSAS:12) field test and full-scale institution contacting and list collection activities. The purpose of the field test is to fully test all procedures, methods, and systems of the study in a realistic operational environment prior to implementing them in the full-scale study. Specific plans are provided below.

1. Respondent Universe

a. Institution Universe

To be eligible for NPSAS:12, an institution will be required, during the 2010–11 academic year for the field test and the 2011-12 academic year for the full-scale, to:

offer an educational program designed for persons who had completed secondary education;

offer at least one academic, occupational, or vocational program of study lasting at least 3 months or 300 clock hours;

offer courses that are open to more than the employees or members of the company or group (e.g., union) that administered the institution;

be located in the 50 states, the District of Columbia, or Puerto Rico;

be other than a U.S. Service Academy; and

have a signed Title IV participation agreement with the U.S. Department of Education.

Institutions providing only avocational, recreational, or remedial courses or only in-house courses for their own employees will be excluded. U.S. Service Academies are excluded because of their unique funding/tuition base.

b. Student Universe

The students eligible for inclusion in the sample are those who are enrolled in an NPSAS-eligible institution in any term or course of instruction between July 1, 2010, and April 30, 2011, for the field test and between July 1, 2011, and April 30, 2012, for the full-scale study, and who are:

enrolled in either (a) an academic program; (b) at least one course for credit that could be applied toward fulfilling the requirements for an academic degree; (c) exclusively non-credit remedial coursework but who the institution has determined are eligible for Title IV aid; or (d) an occupational or vocational program that required at least 3 months or 300 clock hours of instruction to receive a degree, certificate, or other formal award;

not currently enrolled in high school; and

not enrolled solely in a GED or other high school completion program.

2. Statistical Methodology

a. Institution Sample

The NPSAS:12 full-scale and field test institution sampling frames will be constructed from the IPEDS:2008–09 header, Institutional Characteristics (IC), 12-Month Enrollment, and Completions files.1 For the small number of institutions on the frame that have missing enrollment information, we will impute the data using the latest IPEDS imputation procedures to guarantee complete data for the frame.

NPSAS:12 will select the field test institution sample statistically rather than purposively, as was done in past NPSAS cycles. With a purposive sample of institutions, NPSAS has been able to meet response rate requirements and allow for an adequate test of instruments, systems, and procedures within the schedule and budget constraints of the field test while approximating, to the extent possible, the distribution of institutions for the full-scale sample. A statistical sample, however, will provide more control to ensure that the field test and the full-scale institution samples have similar characteristics, and will allow inferences to be made to the target population, supporting the analytic needs of the proposed field test experiments (to be described in the forthcoming student data collection OMB package that will be submitted in September 2010).

A likely result of the new institution sampling method for the field test will be a lower institution response rate (expected to be around 50 percent). This will introduce bias into the sample if the responding institutions are systematically different from the nonresponding institutions. To address this, nonresponse bias analyses and nonresponse weight adjustments will be conducted at both the institution and student levels. The weighted NPSAS:12 statistical field test sample, with a known amount of bias, will be representative of the population and will allow inferences to be made to it.
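One common form of nonresponse weight adjustment is a weighting-class adjustment, in which the base weights of respondents in each class are inflated so that they also represent the nonrespondents in that class. The sketch below is illustrative only: this submission does not specify the adjustment model, and the record layout and the choice of class variable (`cls`, `base_weight`, `responded`) are assumptions made for the example.

```python
from collections import defaultdict

def weighting_class_adjust(units):
    """Return nonresponse-adjusted weights, in input order.

    units: list of dicts with hypothetical keys
      'cls' (adjustment class), 'base_weight' (float), 'responded' (bool).
    Nonrespondents receive weight 0.0; respondents are inflated by
    (sum of base weights in class) / (sum of respondent base weights in class).
    """
    total = defaultdict(float)
    resp = defaultdict(float)
    for u in units:
        total[u["cls"]] += u["base_weight"]
        if u["responded"]:
            resp[u["cls"]] += u["base_weight"]

    adjusted = []
    for u in units:
        if u["responded"]:
            factor = total[u["cls"]] / resp[u["cls"]]
            adjusted.append(u["base_weight"] * factor)
        else:
            adjusted.append(0.0)
    return adjusted
```

Within each class the adjusted weights sum to the original base-weight total, so the adjustment preserves the estimated population size while shifting it onto respondents.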

In addition to using a statistical sample rather than a purposive sample, NPSAS:12 will also change the process by which the institution sample is selected. Previous cycles selected the full-scale sample prior to selecting the field test sample from the complement. NPSAS:12 will select both institution samples simultaneously. First, a sample of 1,971 institutions, comprising the institutions needed for both the field test and full-scale studies, will be selected from the stratified frame. Then, 300 of the 1,971 institutions will be selected for the field test using simple random sampling within institutional strata. The remaining 1,671 institutions will comprise the full-scale sample. Figure 1 displays the flow of institution sampling activities.

Figure 1. NPSAS:12 institution sample flow
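The second step of this flow (drawing the field test sample by simple random sampling within strata and leaving the remainder for the full-scale study) can be sketched as follows. The stratum allocations are taken from the "Number sampled" columns of tables 7 and 8; treating their sum as the initial sample size in each stratum is an inference from the text, and the function name, the institution identifiers, and the seed are hypothetical.

```python
import random
from collections import defaultdict

# Field test and full-scale allocations by stratum (1-10), from tables 7 and 8.
FIELD_TEST_ALLOC = {1: 16, 2: 65, 3: 24, 4: 42, 5: 13, 6: 50, 7: 40, 8: 30, 9: 10, 10: 10}
FULL_SCALE_ALLOC = {1: 30, 2: 381, 3: 166, 4: 250, 5: 30, 6: 281, 7: 250, 8: 90, 9: 90, 10: 103}

def split_field_test(initial_sample, ft_alloc, seed=0):
    """Split the initial sample into field test and full-scale samples.

    initial_sample: list of (institution_id, stratum) pairs.
    ft_alloc: dict mapping stratum -> number of field test institutions.
    """
    rng = random.Random(seed)
    by_stratum = defaultdict(list)
    for inst_id, stratum in initial_sample:
        by_stratum[stratum].append(inst_id)

    field_test, full_scale = [], []
    for stratum, ids in by_stratum.items():
        # Simple random sample without replacement within the stratum.
        chosen = set(rng.sample(ids, ft_alloc.get(stratum, 0)))
        for inst_id in ids:
            (field_test if inst_id in chosen else full_scale).append(inst_id)
    return field_test, full_scale

# Placeholder initial sample of 1,971 institutions with made-up identifiers.
initial = [
    (f"{s}-{k}", s)
    for s in FIELD_TEST_ALLOC
    for k in range(FIELD_TEST_ALLOC[s] + FULL_SCALE_ALLOC[s])
]
field_test_ids, full_scale_ids = split_field_test(initial, FIELD_TEST_ALLOC)
```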

We will select institutions for the initial sample using sequential probability minimum replacement (PMR) sampling (Chromy 1979), which resembles stratified systematic sampling with probabilities proportional to a composite measure of size (Folsom, Potter, and Williams 1987). This is the same methodology that we have used since NPSAS:96. PMR allows an institution to be selected more than once; rather than allowing multiple selections, every institution with a probability of being selected more than once will be included in the sample exactly once with certainty, i.e., will be a certainty institution. Institution measures of size will be determined using annual enrollment data from the most recent IPEDS 12-Month Enrollment Component. Using composite measure of size sampling will ensure that target sample sizes are achieved within institution and student sampling strata while also achieving approximately equal student weights across institutions. Consistent with past procedures, we will use updated IPEDS files to freshen the institution sample in the summer of 2011 in order to add newly eligible institutions to the sample and produce a sample that is representative of institutions eligible in the 2011-12 academic year.
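The identification of certainty institutions can be illustrated with a generic probability-proportional-to-size calculation. This is a minimal sketch, not Chromy's PMR algorithm itself: it assumes a single stratum, a positive measure of size for every unit, and the usual iterative rule in which any unit whose expected number of selections reaches 1 is set aside as a certainty unit and the probabilities of the remaining units are recomputed. The function name and inputs are hypothetical.

```python
def split_certainty(sizes, n):
    """Separate certainty units from units sampled with probability < 1.

    sizes: dict mapping unit id -> measure of size (assumed > 0).
    n: target sample size for the stratum.
    Returns (certainty_ids, probs), where probs maps each remaining unit
    to its selection probability given the reduced sample size.
    """
    certainty = set()
    remaining = dict(sizes)
    while True:
        n_left = n - len(certainty)
        total = sum(remaining.values())
        # Expected number of selections for unit i is n_left * size_i / total.
        new = [i for i, s in remaining.items() if n_left * s / total >= 1.0]
        if not new:
            break
        for i in new:
            certainty.add(i)
            del remaining[i]
    probs = {i: n_left * s / total for i, s in remaining.items()}
    return certainty, probs
```

For example, with sizes of 100, 10, 10, 10, and 10 and a target of two selections, the first unit has an expected selection count above 1 and becomes a certainty unit; the other four then share the one remaining selection with probability 0.25 each.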

The 10 institutional strata will be the nine sectors traditionally used for NPSAS analyses with the private for-profit 2-year or more sector split into two strata: 2-year and 4-year. These strata are based on institutional level, control, and highest level of offering:2

1. public less-than-2-year

2. public 2-year

3. public 4-year non-doctorate-granting

4. public 4-year doctorate-granting

5. private not-for-profit less-than-4-year

6. private not-for-profit 4-year non-doctorate-granting

7. private not-for-profit 4-year doctorate-granting

8. private for-profit less-than-2-year

9. private for-profit 2-year

10. private for-profit 4-year.

The ten strata are a collapsed version of what was used in NPSAS:04 and NPSAS:08. The analytic purposes of NPSAS:12, which will oversample FTBs, differ somewhat from those for NPSAS:08, which oversampled baccalaureate recipients. NPSAS:04, which also oversampled FTBs, was conducted in conjunction with the 2004 National Study of Postsecondary Faculty (NSOPF:04). The NPSAS:04 strata were designed to be consistent with the NSOPF:04 strata while also allowing institutions that were ineligible for NSOPF to be sampled for NPSAS. Therefore, not all of the NPSAS:04 and NPSAS:08 strata are necessary for NPSAS:12. Another noteworthy change for NPSAS:12 is that the private for-profit 2-year and 4-year institutions will be split into separate strata to reflect the recent growth in enrollment in for-profit 4-year institutions.

In addition to the certainty institutions discussed above, there can also be certainty strata, i.e., when all institutions in a stratum are selected for the sample. NPSAS:08 had certainty strata to ensure a sufficient number of baccalaureate recipients in the student sample and a sufficient sample size in the states with state representative samples. NPSAS:04 also had certainty strata for some states and to be consistent with NSOPF:04. At the time of this submission, there is no need for certainty strata in NPSAS:12. However, this may change if the option to include state representative samples (described below) is exercised.

For both the field test and the full-scale study, we expect an overall eligibility rate of about 97 percent among sampled institutions. The institutional response rates are expected to be about 52 percent for the field test and 85 percent for the full-scale study.3 The eligibility and response rates will likely vary by institutional stratum. Based on these expected rates, the estimated institution sample sizes and sample yields for the ten institutional strata are presented in table 7 for the field test and table 8 for the full-scale study.
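As a quick arithmetic check, the overall rates implied by the totals in tables 7 and 8 can be recomputed from the sampled, eligible, and responding counts. The helper below is only a convenience for that calculation; its name is hypothetical, and the counts are the "Total" rows of the two tables.

```python
def implied_rates(n_sampled, n_eligible, n_respondents):
    """Return (eligibility rate, response rate among eligible institutions)."""
    return n_eligible / n_sampled, n_respondents / n_eligible

# "Total" rows of table 7 (field test) and table 8 (full-scale).
field_test_rates = implied_rates(300, 291, 150)
full_scale_rates = implied_rates(1671, 1654, 1406)

for label, (elig, resp) in [("field test", field_test_rates),
                            ("full-scale", full_scale_rates)]:
    print(f"{label}: eligibility {elig:.1%}, response {resp:.1%}")
```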

Within each institutional stratum, additional implicit stratification for the full-scale will be accomplished by sorting the sampling frame within stratum by the following classifications: (1) historically Black colleges and universities (HBCU) indicator; (2) Hispanic-serving institutions (HSI) indicator;4 (3) Carnegie classifications of postsecondary institutions;5 (4) the Office of Business Economics (OBE) Region from the IPEDS header file (Bureau of Economic Analysis of the U.S. Department of Commerce Region);6 (5) state and system for states with large systems, e.g., the SUNY and CUNY systems in New York, the state and technical colleges in Georgia, and the California State University and University of California systems in California; and (6) the institution measure of size. The objective of this implicit stratification will be to approximate proportional representation of institutions on these measures.
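The effect of implicit stratification can be seen in a simplified setting: sort the frame by the classification variables, then walk down the sorted list with a fixed skip interval so that selections are spread across the sorted groups. The sketch below uses ordinary systematic probability-proportional-to-size selection rather than the sequential PMR procedure named above, and it assumes that certainty units have already been removed (no unit's size exceeds the skip interval). Field names such as `mos` are hypothetical.

```python
import random

def systematic_pps(frame, n, sort_keys, size_key="mos", seed=0):
    """Select n units by systematic PPS from a frame sorted by sort_keys.

    frame: list of dicts; sort_keys: field names used for implicit
    stratification; size_key: field holding the measure of size.
    Assumes every size is smaller than total_size / n.
    """
    ordered = sorted(frame, key=lambda r: tuple(r[k] for k in sort_keys))
    total = sum(r[size_key] for r in ordered)
    interval = total / n
    start = random.Random(seed).uniform(0, interval)
    points = [start + k * interval for k in range(n)]

    selected, cumulative, j = [], 0.0, 0
    for r in ordered:
        cumulative += r[size_key]
        # A unit is hit when a selection point falls in its size range.
        while j < n and points[j] < cumulative:
            selected.append(r)
            j += 1
    return selected
```

Because the selection points are evenly spaced over the sorted list, each sorted group receives a share of the sample roughly proportional to its share of the total measure of size.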

NCES is considering exercising a contract option to include state representative samples for undergraduate students in a subset of sectors in a subset of states. Including state representative samples would affect the institution and student stratification, sample sizes, and sample selection. The decision to move forward with these samples may not be made in time for the initial institution sample selection. In this case, the institution sample would be augmented in 2011. If there will be state representative samples, a memo will be provided to OMB at a later date specifying the changes in the sample design.

Table 7. NPSAS:12 field test estimated institution sample sizes and yield

| Institutional sector | Frame count¹ | Number sampled | Number eligible | List respondents |
|---|---|---|---|---|
| Total | 6,762 | 300 | 291 | 150 |
| Public | | | | |
| Less-than-2-year | 234 | 16 | 11 | 6 |
| 2-year | 1,136 | 65 | 65 | 35 |
| 4-year non-doctorate-granting | 357 | 24 | 24 | 13 |
| 4-year doctorate-granting | 306 | 42 | 42 | 22 |
| Private | | | | |
| Not-for-profit less-than-4-year | 275 | 13 | 11 | 6 |
| Not-for-profit 4-year non-doctorate-granting | 1,018 | 50 | 50 | 25 |
| Not-for-profit 4-year doctorate-granting | 580 | 40 | 40 | 19 |
| For-profit less-than-2-year | 1,425 | 30 | 28 | 16 |
| For-profit 2-year | 895 | 10 | 10 | 4 |
| For-profit 4-year | 536 | 10 | 10 | 4 |

1 Institution counts based on IPEDS:2007-08 header files.

Table 8. NPSAS:12 full-scale estimated institution sample sizes and yield

| Institutional sector | Frame count¹ | Number sampled | Number eligible | List respondents |
|---|---|---|---|---|
| Total | 6,762 | 1,671 | 1,654 | 1,406 |
| Public | | | | |
| Less-than-2-year | 234 | 30 | 26 | 19 |
| 2-year | 1,136 | 381 | 381 | 335 |
| 4-year non-doctorate-granting | 357 | 166 | 166 | 148 |
| 4-year doctorate-granting | 306 | 250 | 250 | 217 |
| Private | | | | |
| Not-for-profit less-than-4-year | 275 | 30 | 30 | 26 |
| Not-for-profit 4-year non-doctorate-granting | 1,018 | 281 | 281 | 236 |
| Not-for-profit 4-year doctorate-granting | 580 | 250 | 250 | 208 |
| For-profit less-than-2-year | 1,425 | 90 | 85 | 68 |
| For-profit 2-year | 895 | 90 | 87 | 70 |
| For-profit 4-year | 536 | 103 | 98 | 79 |

1 Institution counts based on IPEDS:2007-08 header files.

b. Student Sample

Although this submission is not for student data collection, the field test student sample design is included because part of the design is relevant for list collection, and the sampling of students from the enrollment lists will likely begin prior to OMB approval of the field test student data collection. However, it is anticipated that the sampling of students from the enrollment lists for the full-scale will likely begin after OMB approval of the full-scale student data collection. The full-scale student sample design is also expected to be revised based on the field test and based on decisions to be made in conjunction with NCES. Therefore, the full-scale student sample design will be described in the submission for full-scale student data collection.

Based on past experience, we expect to obtain at least a 95 percent overall student eligibility rate and a 70 percent student interview response rate in the field test. The expected field test student sample sizes and sample yield are presented in table 9. As indicated in the table, the field test will be designed to sample about 4,500 students, including 2,529 first-time beginners (FTBs), 1,801 other undergraduate students, and 200 graduate students, with an expected 3,000 interview respondents, including 1,627 FTBs, 1,239 other undergraduate students, and 134 graduate students. We will employ a variable-based (rather than source-based) definition of study member, similar to that used in NPSAS:08 and NPSAS:04, with revisions deemed necessary by NCES. We expect the rate of study membership7 to be about 90 percent (about 3,870 students).

The six student sampling strata will be:

first-time beginning undergraduate students

other undergraduate students

masters students

doctoral-research/scholarship/other students

doctoral-professional practice students8

other graduate students.9

As was done in past rounds of NPSAS, certain student types (potential FTBs, other undergraduates, masters students, doctoral-research/scholarship/other students, doctoral-professional practice students, and other graduate students) will be sampled at different rates to control the sample allocation. Additionally, veterans may be oversampled to support analysis of persistence among veteran FTBs. Differential sampling rates facilitate obtaining the target sample sizes necessary to meet analytic objectives for defined domain estimates in the full-scale study.

Table 9. NPSAS:12 field test expected student sample sizes and yield

| Institutional sector | Sample students: Total | Sample students: FTBs | Sample students: Other undergraduate students | Sample students: Graduate students | Eligible students: Total | Eligible students: FTBs | Eligible students: Other undergraduate students | Eligible students: Graduate students | Responding students: Total | Responding students: FTBs | Responding students: Other undergraduate students | Responding students: Graduate students | Unweighted response rate: Total | Unweighted response rate: FTBs | Unweighted response rate: Other undergraduate students | Unweighted response rate: Graduate students |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Total | 4,530 | 2,529 | 1,801 | 200 | 4,302 | 2,373 | 1,738 | 191 | 3,000 | 1,627 | 1,239 | 134 | 69.7 | 68.6 | 71.3 | 70.2 |
| Public | | | | | | | | | | | | | | | | |
| Less-than-2-year | 140 | 106 | 34 | 0 | 105 | 79 | 26 | 0 | 77 | 58 | 19 | 0 | 73.0 | 73.4 | 73.1 | N/A |
| 2-year | 1,492 | 909 | 583 | 0 | 1,492 | 909 | 583 | 0 | 927 | 567 | 360 | 0 | 62.1 | 62.4 | 61.7 | N/A |
| 4-year non-doctorate-granting | 381 | 181 | 182 | 18 | 372 | 177 | 178 | 17 | 290 | 138 | 140 | 12 | 78.0 | 78.0 | 78.7 | 70.6 |
| 4-year doctorate-granting | 927 | 328 | 559 | 40 | 879 | 312 | 531 | 36 | 639 | 227 | 387 | 25 | 72.7 | 72.8 | 72.9 | 69.4 |
| Private | | | | | | | | | | | | | | | | |
| Not-for-profit less-than-4-year | 128 | 93 | 35 | 0 | 128 | 93 | 35 | 0 | 85 | 61 | 24 | 0 | 66.7 | 65.6 | 68.6 | N/A |
| Not-for-profit 4-year non-doctorate-granting | 406 | 224 | 154 | 28 | 400 | 221 | 152 | 27 | 316 | 175 | 122 | 19 | 79.0 | 79.2 | 80.3 | 70.4 |
| Not-for-profit 4-year doctorate-granting | 416 | 165 | 149 | 102 | 414 | 165 | 149 | 100 | 339 | 135 | 133 | 71 | 82.0 | 81.8 | 89.3 | 71.0 |
| For-profit less-than-2-year | 383 | 320 | 63 | 0 | 255 | 213 | 42 | 0 | 142 | 120 | 22 | 0 | 55.8 | 56.3 | 52.4 | N/A |
| For-profit 2-year | 128 | 104 | 24 | 0 | 128 | 104 | 24 | 0 | 93 | 75 | 18 | 0 | 72.1 | 72.1 | 75.0 | N/A |
| For-profit 4-year | 129 | 99 | 18 | 12 | 128 | 99 | 18 | 11 | 92 | 71 | 14 | 7 | 72.1 | 71.7 | 77.8 | 63.6 |

NOTE: Details may not sum to totals because of rounding. Percentages are based on unrounded numbers. FTB = first-time beginner.

To ensure a large enough sample for the field test of the Beginning Postsecondary Students (BPS) Longitudinal Study, the base year sample will include a large percentage of potential FTBs. The sampling rates for students identified as potential FTBs and other undergraduate students on enrollment lists will be adjusted to yield the appropriate sample sizes after accounting for the expected false positives10 by sector. This will ensure sufficient numbers of actual FTBs (see table 9). The expected false positive rate will be based on NPSAS:04 and BPS:04/06, as well as on expected improvements due to changed procedures (described below).

Creating student sampling frames. Sample institutions will be asked to provide an electronic student enrollment list. Similar to past NPSAS studies, the following data items will be requested for NPSAS-eligible students enrolled at each sample institution:

Name

Social Security number (SSN)

Student ID number (if different from SSN)

Student level (undergraduate, masters, doctoral-research/scholarship/other, doctoral-professional practice, other graduate)

First-time Beginner (FTB) indicator

Class level of undergraduates (first year, second year, etc.)

Date of birth (DOB)

High school graduation date (month and year)

CIP code or major

Veteran status

Indicator of whether the institution received an ISIR (electronic record summarizing the result of the student’s FAFSA processing) from CPS

Contact information (local and permanent street address and phone number and school and home e-mail address)

These data items are described in greater detail in section C. The following section describes our planned procedures to securely obtain, store, and discard sensitive information collected for sampling purposes.

Obtaining student enrollment lists. To ensure the secure transmission of sensitive information on the enrollment lists, we will provide the following options to institutions: (1) upload encrypted student enrollment list files to the project’s secure website using a login ID and “strong” password provided by RTI, or (2) provide an appropriately encrypted list file via e-mail (RTI will provide guidelines on encryption and creating “strong” passwords).

We expect that very few, if any, institutions will ask to provide a paper list (in the NPSAS:08 full-scale study no institutions submitted a paper list). The data security plan will outline protocols for handling faxed materials that conform to data security requirements. The original file or paper list containing all students with SSNs will be kept through data collection in order to resolve any issues with student identification that occur during data collection. These files will be deleted and lists shredded after data collection. RTI will ensure that the SSNs for non-selected students are securely discarded.

Identifying FTBs during the base year. Accurately qualifying sample members as FTBs is a continuing challenge. This is important because unacceptably high rates of misclassification (e.g., false positives) can result, and have resulted, in (1) excessive cohort loss with too few eligible sample members to sustain a longitudinal study, (2) excessive cost to “replenish” the sample with little value added, and (3) inefficient sample design (excessive oversampling of “potential” FTBs) to compensate for anticipated misclassification error.

We will take steps early in the NPSAS:12 listing and sampling processes to improve the rate at which FTBs are correctly classified for sampling. First, in addition to an FTB indicator, we will request that enrollment lists provided by institutions (or institution systems) include class level, student level, date of birth, and high school graduation date. Students whom the school identifies as FTBs but who are also identified as being in their third year or higher, or as not being undergraduate students, will not be classified as FTBs for sampling. Additionally, students who appear, based on the high school graduation date, to be dually enrolled at the postsecondary school and in high school will not be eligible for sampling. If the FTB indicator is not provided for a student on the list but the student is 18 years old or younger and does not appear to be dually enrolled, the student will be sampled as an FTB. If the FTB indicator is not provided and the student is over the age of 18, the student will be sampled in the other undergraduate stratum but will be part of the BPS cohort if identified during the interview as an FTB.
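The list-based classification rules in this paragraph can be expressed as a small decision function. The sketch below is an interpretation for illustration, not the study's production logic: the record layout is hypothetical, age is assumed to be computed elsewhere as of a reference date the text does not specify, and "appears dually enrolled" is approximated as a high school graduation date that falls after the end of the NPSAS enrollment period.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

@dataclass
class ListRecord:
    ftb_flag: Optional[bool]      # None when the institution gave no indicator
    is_undergraduate: bool
    class_year: Optional[int]     # 1 = first year, 2 = second year, ...
    age: int
    hs_grad_date: Optional[date]

def sampling_class(rec: ListRecord, enrollment_end: date) -> str:
    """Return the sampling class for one enrollment-list record."""
    # Assumed dual-enrollment rule: not yet graduated from high school
    # by the end of the NPSAS enrollment period.
    if rec.hs_grad_date is not None and rec.hs_grad_date > enrollment_end:
        return "ineligible"
    if not rec.is_undergraduate:
        return "graduate"
    if rec.ftb_flag is True:
        # Flagged FTBs in their third year or higher are not sampled as FTBs.
        if rec.class_year is not None and rec.class_year >= 3:
            return "other_undergraduate"
        return "potential_ftb"
    if rec.ftb_flag is None and rec.age <= 18:
        return "potential_ftb"
    return "other_undergraduate"
```

For the field test, `enrollment_end` would be April 30, 2011, the end of the enrollment window defined for the student universe.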

Second, prior to sampling we will match students over the age of 18 listed as potential FTBs to National Student Loan Data System (NSLDS) records to determine whether any have a federal financial aid history pre-dating the NPSAS year (earlier than July 1, 2010, for the field test). Because NSLDS maintains current records of all Title IV grant and loan funding, any students with data showing disbursements from the prior year can be reliably excluded from the sampling frame of FTBs. Given that about 60 percent of FTBs receive some form of Title IV aid in their first year, this matching process will not be able to exclude all listed FTBs with prior enrollment, but it will significantly improve the accuracy of the listing prior to sampling, yielding fewer false positives. Only students over 18 years of age will be sent to NSLDS because most students 18 and younger are FTBs. Based on NPSAS:04 data, the NSLDS match is expected to identify about 22 percent of the matched cases as false positives.
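The screening step described above amounts to a date comparison once match results are available. The following sketch assumes, purely for illustration, that the match results have been reduced to a dictionary giving each matched student's earliest Title IV disbursement date; it does not represent the NSLDS file layout or any NSLDS interface.

```python
from datetime import date

NPSAS_YEAR_START = date(2010, 7, 1)  # field test NPSAS year begins July 1, 2010

def screen_with_aid_history(potential_ftbs, earliest_disbursement):
    """Split potential FTBs into those kept and those reclassified.

    potential_ftbs: list of dicts with hypothetical keys 'ssn' and 'age'.
    earliest_disbursement: dict mapping SSN -> earliest disbursement date
      (hypothetical summary of match results; unmatched SSNs are absent).
    """
    kept, reclassified = [], []
    for student in potential_ftbs:
        first_aid = earliest_disbursement.get(student["ssn"])
        has_prior_aid = first_aid is not None and first_aid < NPSAS_YEAR_START
        # Only students over 18 are sent for matching.
        if student["age"] > 18 and has_prior_aid:
            reclassified.append(student)
        else:
            kept.append(student)
    return kept, reclassified
```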

An interim step we are considering is sending the results we get from NSLDS to the National Student Clearinghouse (NSC) for further narrowing of potential FTBs based on evidence of earlier enrollment. Students sent to NSC would include those sent to NSLDS who did not match and those who matched but still appear to be FTBs after the match. Due to the high cost of the NSC service, we are weighing the costs and benefits of sending all potential FTBs resulting from NSLDS matching who are 19 or older versus sending a subsample of these potential FTBs, or only FTBs in certain sectors or institutions, to NSC for matching. Based on NPSAS:04 data, matching to NSC is expected to identify about 7 percent of the cases matched to NSC as false positives, and matching to both NSC and NSLDS is expected to identify about 16 percent of all potential FTBs over the age of 18 as false positives. Both NSLDS and NSC file matches require SSNs to ensure accuracy and minimize the potential mismatching of records.

Third, we will set our FTB selection rates taking into account the error rates observed in NPSAS:04 and BPS:04/06 within each sector, as discussed above. As shown in table 10, some institution sectors were better able to accurately identify their students as FTBs. While the sample selection rates will take into account these false positive error rates, we do anticipate achieving an improvement in accuracy from the NSLDS and NSC record matches and will adjust the selection rates accordingly. To the extent possible, we will examine the sector-level FTB error rates in the field test to determine the rates necessary for full-scale student sampling.

False starters are students who enrolled in a course at the postsecondary level but did not earn credit, and these students have been included as FTBs in the past. False starters are difficult to identify accurately, and our experience has shown that many false positives are false starters. We have agreed with NCES to limit the FTB sample to pure FTBs.

Finally, we will revise the screening questions used to identify FTBs in CADE and CATI. Question wording to determine FTB eligibility will be pre-tested in focus groups and in RTI’s cognitive laboratory; both evaluations will be conducted in advance of the field test.

Table 10. Weighted false positive rate observed in FTB identification, by sector: NPSAS:04

| Sector in NPSAS:04 | False positive rate (weighted) |
|---|---|
| Public | |
| Less-than-2-year | 64.4 |
| 2-year | 72.5 |
| 4-year non-doctorate-granting | 26.8 |
| 4-year doctorate-granting | 27.0 |
| Private | |
| Not-for-profit less-than-4-year | 63.1 |
| Not-for-profit 4-year non-doctorate-granting | 43.4 |
| Not-for-profit 4-year doctorate-granting | 15.2 |
| For-profit less-than-2-year | 63.1 |
| For-profit 2-year or more | 70.0 |

FTB = first-time beginner.
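To see how a false positive rate drives the number of potential FTBs that must be sampled, note that if a share f of listed FTBs turn out not to be FTBs, obtaining n actual FTBs requires about n / (1 - f) potential FTBs. The sketch below applies that arithmetic using the table 10 values read as percentages. It is only the basic inflation step: it ignores the expected improvement from record matching and any eligibility or response adjustments, and the function name and the target of 100 are hypothetical.

```python
import math

# Weighted false positive rates from table 10, interpreted as percentages.
FALSE_POSITIVE_PCT = {
    "Public less-than-2-year": 64.4,
    "Public 2-year": 72.5,
    "Public 4-year non-doctorate-granting": 26.8,
    "Public 4-year doctorate-granting": 27.0,
    "Private not-for-profit less-than-4-year": 63.1,
    "Private not-for-profit 4-year non-doctorate-granting": 43.4,
    "Private not-for-profit 4-year doctorate-granting": 15.2,
    "Private for-profit less-than-2-year": 63.1,
    "Private for-profit 2-year or more": 70.0,
}

def potential_ftbs_needed(target_actual_ftbs, false_positive_pct):
    """Potential FTBs to sample so that about target_actual_ftbs are true FTBs."""
    true_share = 1.0 - false_positive_pct / 100.0
    return math.ceil(target_actual_ftbs / true_share)

# Potential FTBs needed per 100 actual FTBs, by sector.
needed_per_100 = {
    sector: potential_ftbs_needed(100, pct)
    for sector, pct in FALSE_POSITIVE_PCT.items()
}
```

Under these rates, sectors with high false positive rates need several times as many potential FTBs per actual FTB as sectors with low rates, which is why reducing false positives before sampling matters for efficiency.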

Quality control checks for lists. Several checks on quality and completeness of student lists will be implemented before the sample students are selected. The lists will fail quality control checks if student level and/or the FTB indicator are not included on the list. Additionally, the unduplicated number of enrollees on each institution’s student list will be checked against the latest IPEDS 12-month enrollment data. Lists will be unduplicated by student ID number. The comparisons will be made for each student level: undergraduate and graduate. Based on past experience, only counts within 50 percent of non-imputed IPEDS counts will pass edit. The unduplicated FTB counts will be checked separately against the fall enrollment counts from the IPEDS Fall Enrollment Component because IPEDS does not have unduplicated annual FTB counts. The check will fail if the count for any unduplicated list is at least 50 percent less than the IPEDS count. The list counts are expected to almost always be more than the IPEDS counts because the IPEDS counts are not annual counts. This check will identify institutional enrollment lists that under-report FTBs. We will re-evaluate these checks after the field test for use in the full-scale study.
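The count comparisons described above can be written as two simple tests. This is a sketch under stated assumptions: "within 50 percent" is read as an absolute difference of no more than half the IPEDS count (boundary included), the FTB check fails when the list count is at or below half the IPEDS fall count, and all function and parameter names are hypothetical.

```python
def within_50_percent(list_count, ipeds_count):
    """True if the list count is within 50 percent of the IPEDS count."""
    return abs(list_count - ipeds_count) <= 0.5 * ipeds_count

def ftb_count_ok(list_ftb_count, ipeds_fall_ftb_count):
    """False if the list FTB count is at least 50 percent below the IPEDS count."""
    return list_ftb_count > 0.5 * ipeds_fall_ftb_count

def list_passes_qc(has_student_level, has_ftb_indicator,
                   list_counts, ipeds_counts,
                   list_ftb_count, ipeds_fall_ftb_count):
    """Apply the list-level checks.

    list_counts / ipeds_counts: dicts keyed by student level
    ('undergraduate', 'graduate') with unduplicated counts.
    """
    if not (has_student_level and has_ftb_indicator):
        return False
    for level, ipeds_count in ipeds_counts.items():
        if not within_50_percent(list_counts.get(level, 0), ipeds_count):
            return False
    return ftb_count_ok(list_ftb_count, ipeds_fall_ftb_count)
```

The FTB check is one-sided because annual list counts are expected to exceed the fall-only IPEDS counts, so only under-reporting is flagged.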

Additionally, contact information will be checked carefully for each enrollment list as well as for each student sample. Past experience shows that some institutions may provide enrollment lists with contact information matched improperly with student name. Comparing e-mail addresses with student names will help ensure that each student’s contact information matches.

Institutions failing the edit checks will be re-contacted to resolve the discrepancy and verify that the institution coordinator who prepared the student list(s) clearly understood our request and provided a list of the appropriate students. When we determine that the initial list provided by the institution is not satisfactory, we will request a replacement list. We will proceed with selecting sample students when we have either confirmed that the list received is correct or have received a corrected list.

Selection of sample students. Students will be sampled on a flow basis as student lists are received using a stratified systematic sampling procedure. Lists will be unduplicated by student ID number prior to sample selection. In addition, all samples will be unduplicated by Social Security number (SSN) between institutions. In prior NPSAS studies, we found several instances in which this step avoided multiple selections of the same student. However, we also learned that the ID numbers assigned to non-citizens may not be unique across institutions; thus when duplicate IDs are detected, but the IDs are not standard SSNs (do not satisfy the appropriate range check), we will check the student names to verify that they are indeed duplicates before deleting the students.
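The cross-institution unduplication logic can be sketched as follows. The range check shown uses the general Social Security Administration numbering rules (area number not 000, 666, or 900 and above; group number not 00; serial number not 0000) as a stand-in, since the study's actual range check is not specified here. The name comparison is a simple normalized exact match, and all field names are hypothetical.

```python
import re

def is_standard_ssn(ssn):
    """Assumed range check for a 9-digit identifier being a standard SSN."""
    if not re.fullmatch(r"[0-9]{9}", ssn):
        return False
    area, group, serial = int(ssn[:3]), int(ssn[3:5]), int(ssn[5:])
    return area not in (0, 666) and area < 900 and group != 0 and serial != 0

def dedup_across_institutions(sampled):
    """Drop repeat selections of the same student, keeping the first.

    sampled: list of dicts with hypothetical keys 'ssn', 'name', 'inst'.
    A repeated standard SSN is treated as a duplicate outright; a repeated
    non-standard ID is treated as a duplicate only if the name also matches.
    """
    names_seen = {}  # ID -> set of normalized names already kept
    kept = []
    for student in sampled:
        name = " ".join(student["name"].lower().split())
        names = names_seen.setdefault(student["ssn"], set())
        if names and (is_standard_ssn(student["ssn"]) or name in names):
            continue
        names.add(name)
        kept.append(student)
    return kept
```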

Although no paper lists are expected because none were received in NPSAS:08, we will still be prepared to sample students from paper lists. Any paper lists received will be keyed into an electronic format and then sampled in the same manner as electronic lists. Quality control checks will be performed on both the keying and the sampling of the students.

A modular design for FTBs is being considered, and the details are still being discussed with NCES. Students identified during the interview as FTBs will all be asked a core set of questions. Then, they will be asked another set of questions, but not all FTBs will receive the same set of additional questions. Each set of questions is called a module, and there will be 2-4 modules. One module is intended for nontraditional students, but a portion (to be determined) of the nontraditional students will need to be included in the other modules for representation. Likewise, a second module is expected to focus on remediation and will need to include many, but not all, of the remedial students. Other planned modules will be relevant for all FTBs: traditional and non-remedial students, as well as some nontraditional and remedial students, will be randomly assigned to these other modules. Assignment of FTBs to modules will occur in the instrument based on answers to screening questions about being a nontraditional and/or remedial student and on an embedded randomization function. Additional details about the modular design will be provided in the submission for field test student data collection.

Sample yield for the field test data collection will be monitored by institutional and student sampling strata, and the sampling rates will be adjusted early, if necessary, to achieve the desired sample yield.

Although simpler procedures would suffice for the NPSAS:12 field test, the student sampling procedures planned for the field test will be comparable to those planned for the full-scale study to provide a thorough evaluation before being implemented for the full-scale sampling.

Re-interview. In addition to selecting the student sample from enrollment lists received from participating institutions, a subsample of about 10 percent of interview respondents will be randomly selected to be re-interviewed11 to enable analysis of the reliability of items in the field test instrument. The Case Management System (CMS) will be programmed to randomly select this subsample. The subsampling rate will be set so that all re-interview cases are identified during the first half of field test data collection and all re-interviews can be completed at approximately the same time as the regular interviews, while allowing sufficient time to elapse between each initial interview and the re-interview.
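Because re-interview cases must all be identified in the first half of data collection, the rate applied to early respondents has to be higher than the overall 10 percent target. The sketch below shows that arithmetic and a simple random flagging step. The assumption that 1,500 of the expected 3,000 respondents complete the interview in the first half is hypothetical, as are the function names; the CMS selection logic itself is not described in this submission.

```python
import random

def reinterview_rate(expected_respondents, target_share, expected_early_respondents):
    """Rate to apply to early respondents to reach the overall target share."""
    rate = target_share * expected_respondents / expected_early_respondents
    return min(1.0, rate)

def flag_for_reinterview(case_ids, rate, seed=0):
    """Independently flag each completed case with the given probability."""
    rng = random.Random(seed)
    return [case for case in case_ids if rng.random() < rate]

# Hypothetical planning figures: 3,000 expected respondents, 10 percent target,
# 1,500 respondents expected during the first half of data collection.
early_rate = reinterview_rate(3000, 0.10, 1500)
```

With these planning figures the early-respondent rate works out to 20 percent, which would yield about 300 re-interview cases in expectation.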

Quality control checks for sampling. Quality control (QC) is very important for sampling and all statistical activities. Statistical procedures used in NPSAS:12 will undergo thorough quality control checks. RTI has developed technical operating procedures (TOPs) that describe how to properly implement statistical procedures and QC checks. We will employ a checklist for all statisticians to use to ensure that appropriate QC checks are performed.

Some specific sampling QC checks will include, but will not be limited to, checking that the:

institutions on the full-scale sampling frame all have a known, non-zero probability of selection

distribution of implicit stratification for institutions is reasonable

weighted institution size measures sum to the frame size

numbers of institutions and students selected match the target sample sizes

sample weight for each full-scale institution is the inverse of the probability of selection.
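Several of the checks listed above lend themselves to automation. The sketch below is illustrative only: it assumes probability-proportional-to-size selection with no certainty institutions (under which the weighted size measures of the sampled institutions reproduce the frame total), and all function and variable names are hypothetical rather than taken from RTI's technical operating procedures.

```python
import math


def pps_probabilities(size_measures, n_sample):
    """Selection probabilities proportional to size (assumes none exceed 1)."""
    total = sum(size_measures)
    return [n_sample * size / total for size in size_measures]


def run_sampling_qc(frame_sizes, probs, sampled_idx, target_n):
    """Return a list of QC problems; an empty list means all checks passed."""
    problems = []
    # Every frame institution needs a known, non-zero selection probability.
    if any(not (0 < p <= 1) for p in probs):
        problems.append("selection probability outside (0, 1]")
        return problems  # weights cannot be formed safely, so stop here
    # The sample weight is the inverse of the selection probability.
    weights = {i: 1.0 / probs[i] for i in sampled_idx}
    # Weighted size measures of sampled units should sum to the frame size.
    weighted_total = sum(weights[i] * frame_sizes[i] for i in sampled_idx)
    if not math.isclose(weighted_total, sum(frame_sizes), rel_tol=1e-9):
        problems.append("weighted size measures do not sum to frame size")
    # The number selected should match the target sample size.
    if len(sampled_idx) != target_n:
        problems.append("number selected does not match target")
    return problems


sizes = [100, 300, 200, 400]           # made-up size measures
probs = pps_probabilities(sizes, 2)    # target of 2 institutions
issues = run_sampling_qc(sizes, probs, sampled_idx=[1, 3], target_n=2)
```

Returning a list of problems rather than raising on the first failure lets a statistician see every failed check in one pass, which suits a checklist-style review.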

Tracing prior to the start of data collection. Once the sample is selected, RTI will conduct several batch database searches to prepare the sample for the start of student interviews. The first steps in the batch tracing process will be to match to the U.S. Department of Education's Central Processing System (CPS) and the National Change of Address (NCOA) database to obtain updated contact information. Any new information obtained from the CPS or NCOA matches will be added to the NPSAS locator database and then submitted to Telematch to capture any updated telephone numbers needed for the start of CATI data collection. Batch locating is the final step before the start of data collection.

3.Institutional Contacting

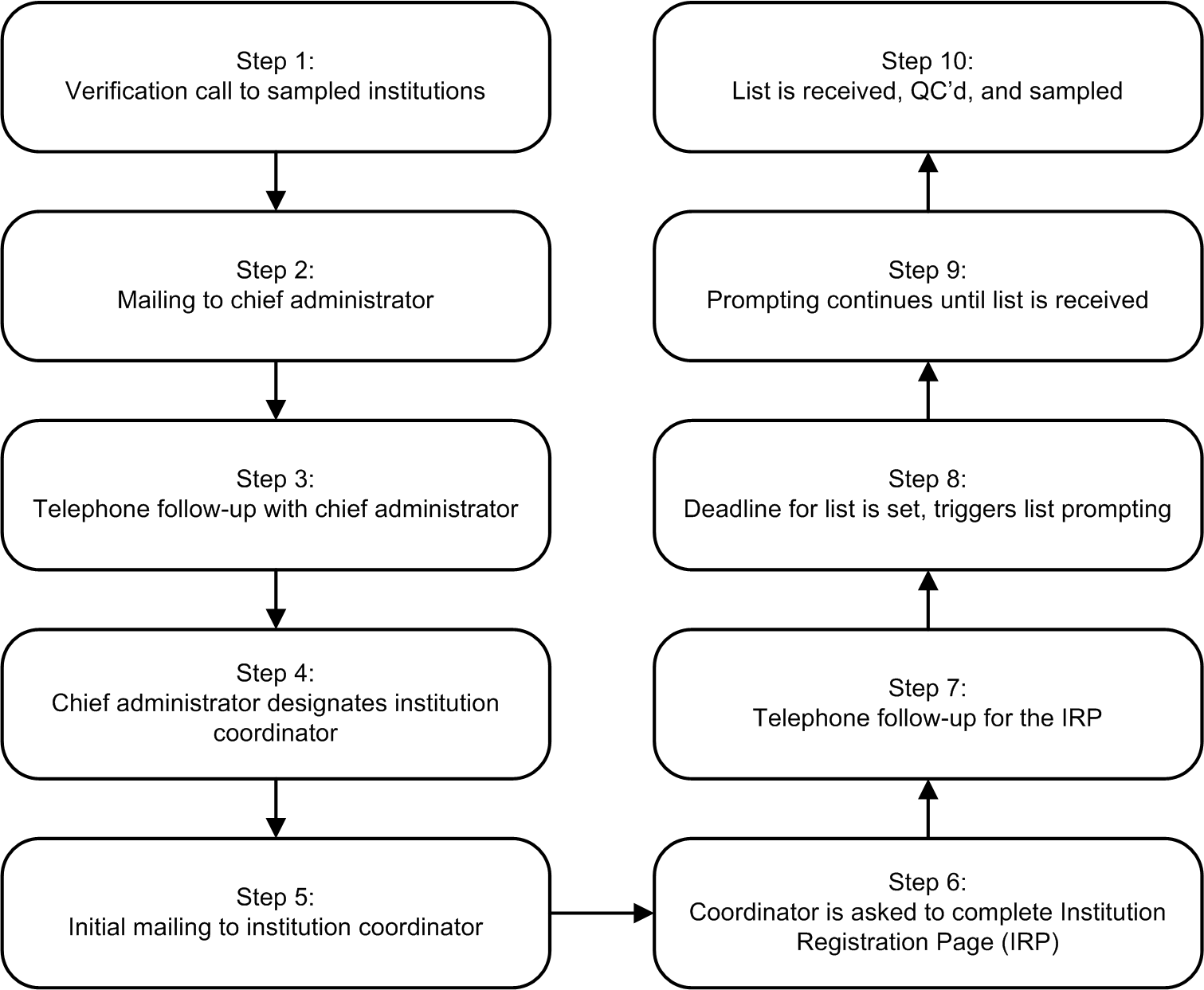

Establishing and maintaining contact with sampled institutions throughout the data collection process is vital to the success of NPSAS:12. Institutional participation is required in order to draw the student sample and collect institutional student records. The process by which institutions will be contacted is depicted in figure 2 and described below.

Figure 2. Flow chart of institutional contacting activities

Experienced staff from RTI’s Call Center Services (CCS) will be responsible for contacting institutions. Each staff member will be assigned a set of institutions that is their responsibility throughout the data collection process. This allows RTI staff members to establish rapport with the institution staff and provides a reliable point of contact at RTI. Staff members are thoroughly trained in basic financial aid concepts and in the purposes and requirements of the study, which helps them establish credibility with the institution staff.

For both the field test and full-scale study, verification calls will be made to each sampled institution to confirm eligibility and verify contact information prior to mailing study information. The initial contact information will be obtained from the IPEDS header files. A sample of the script used for these calls can be found in appendix E. Once the contact information is verified, we will prepare and send an information packet to the chief administrator of each sampled institution. A copy of the letter and brochure can be found in appendix D. The materials will provide information about the purpose of the study and the nature of subsequent requests. Approximately 1 week after the information packet is sent out, institutional contactors will conduct follow-up calls to secure study participation and identify an institutional coordinator.

The choice of an appropriate institutional coordinator will be left to each institution. As with NPSAS:08, the NPSAS:12 institutions will be urged to appoint their Financial Aid director as institutional coordinator. Financial Aid directors are typically more familiar with the NPSAS study than their colleagues in the institutional research or registrar's offices. They are also the staff most likely to have access to student data, and they are equipped to forward each data request to the most appropriate office for completion. RTI institutional contactors will work with the chief administrator's office in attempting to designate the most appropriate coordinator. Campus offices other than financial aid (e.g., registrar, admissions) usually provide part of the institution data for sample students. For NPSAS:12, RTI will identify relevant multicampus systems within the field test and full-scale samples. Some multicampus systems can supply enrollment list data at the system level. Even when it is not possible for a system to supply system-wide data, it can lend support in other ways. For example, a system can prompt institutions under its jurisdiction to participate; this was crucial in refusal conversion efforts in many multicampus systems for NPSAS:08.

The institutional coordinator will receive a mailing containing study materials. The first thing the institutional coordinator will be asked to do is complete the online Institutional Registration Page (IRP). A copy of the IRP is included in appendix D. The primary function of the IRP is to confirm the date on which the institution will be able to provide the student enrollment list. Based on the information provided, a customized timeline will be created for each institution.

The second thing institutional coordinators will be asked to do is provide electronic enrollment lists of all students enrolled during the academic year. Depending on the information provided in the IRP, the earliest enrollment lists will be due to RTI in late January. Because enrollment lists cover the entire academic year, January is the earliest that they can be collected. The lists provided to project staff will serve as the population from which the student sample will be drawn. Email prompts will be sent to institutional coordinators based on the customized schedule for each institution. A reminder letter directing institutional coordinators to the website for complete instructions will be sent, typically three weeks prior to the deadline.
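The customized timeline can be thought of as simple date arithmetic. The sketch below is a hypothetical illustration: the function name, the dictionary keys, and the specific dates are assumptions, and the source states only that the earliest lists are due in late January and that reminder letters typically go out three weeks before the deadline.

```python
from datetime import date, timedelta


def build_timeline(list_available, earliest_due,
                   reminder_lead=timedelta(weeks=3)):
    """Derive an institution's list deadline and reminder-letter date.

    list_available: date the institution reported on the IRP.
    earliest_due:   earliest date any list can be due (late January in the
                    source; the exact date is an assumption here).
    """
    list_due = max(list_available, earliest_due)
    return {
        "list_due": list_due,
        "reminder_letter": list_due - reminder_lead,
    }


# An institution that can supply its list on March 15, 2011 (made-up date).
timeline = build_timeline(date(2011, 3, 15), earliest_due=date(2011, 1, 24))
```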

4.Tests of Procedures or Methods

There will be no tests of procedures or methods as part of the NPSAS:12 enrollment list data collection activities.

5.Reviewing Statisticians and Individuals Responsible for Designing and Conducting the Study

Names of individuals consulted on statistical aspects of study design along with their affiliation and telephone numbers are provided below.

Name Affiliation

Dr. Lutz Berkner MPR

Dr. Susan Choy MPR

Ms. Christina Wei MPR

Dr. John Riccobono RTI

Dr. James Chromy RTI

Mr. Peter Siegel RTI

Dr. Jennifer Wine RTI

In addition to these statisticians and survey design experts, the following statisticians at NCES have also reviewed and approved the statistical aspects of the study: Dr. Tom Weko, Dr. Tracy Hunt-White, and Ms. Linda Zimbler.

6.Other Contractors’ Staff Responsible for Conducting the Study

The study is being conducted by the Postsecondary, Adult, and Career Education (PACE) division of the National Center for Education Statistics (NCES), U.S. Department of Education. NCES's prime contractor is RTI International (RTI). RTI is being assisted through subcontracted activities by MPR Associates (MPR), Branch Associates, Kforce Government Solutions, Inc. (KGS), Research Support Services (RSS), Millennium Services 2000+, Inc., and consultants. Principal professional staff of the contractors, not listed above, who are assigned to the study are identified below:

Name Affiliation

Dr. Alvia Branch Branch Associates

Dr. Cynthia Decker Consultant

Ms. Andrea Sykes Consultant

Mr. Dan Heffron KGS

Ms. Carmen Rivera Millennium Services

Ms. Vicky Dingler MPR

Ms. Laura Horn MPR

Ms. Alexandria Radford MPR

Dr. Jennie Woo MPR

Dr. Alisú Shoua-Glusberg RSS

Mr. Jeff Franklin RTI

Mr. Tim Gabel RTI

Ms. Christine Rasmussen RTI

Ms. Melissa Cominole RTI

Ms. Kristin Dudley RTI

Mr. Brian Kuhr RTI

1 A preliminary sampling frame has been created using IPEDS:2007-08 data, and frame counts in tables 1 and 2 are based on this preliminary frame. The frame will be re-created with more recent IPEDS data prior to sample selection.

2 The institutional strata can be aggregated by control or level of the institution for the purposes of reporting institution counts.

3 An institutional response rate of approximately 52 percent is expected for the field test. This response rate is much lower than in past NPSAS cycles due to the statistical sampling method for selecting the institutional sample. Any bias that may be introduced due to nonresponse will be addressed through nonresponse bias analysis and nonresponse weight adjustments that will be conducted at both the institution and student levels.

4 The Hispanic-serving institutions (HSI) indicator no longer exists in IPEDS, so an HSI proxy will be created using IPEDS Hispanic enrollment data.

5 We will decide what, if any, collapsing is needed of the categories for the purposes of implicit stratification.

6 For sorting purposes, Alaska and Hawaii will be combined with Puerto Rico in the Outlying Areas region rather than in the Far West region.

7 NPSAS has many administrative data sources along with the student interview. Key variables have been identified across the various data sources to determine the minimum requirements to support the analytic needs of the study. Sample members who meet these minimum requirements will be classified as study members. These study members will have enough information from these multiple sources to be included in the NPSAS analysis files.

8 Past rounds of NPSAS have included samples of first-professional students. However, IPEDS is in the process of replacing the term first-professional with doctoral-professional practice. We will work with the sample institutions when requesting enrollment lists to ensure that they understand how to identify doctoral-research and doctoral-professional practice students.

9 “Other graduate” students are those who are not enrolled in a degree program, such as students in post-baccalaureate certificate programs or students just taking graduate courses.

10 A false positive is a sample member who is sampled as an FTB but is determined in the interview to not be an FTB.

11 Re-interviews will be conducted approximately 3 to 4 weeks after the initial interview and will contain a subset of items (either new items or those that have been difficult to administer in the past). Re-interviews will be conducted in the same administration mode as the initial interview.

NPSAS:12

Supporting Statement Request for OMB Review (SF83i)

| File Type | application/msword |

| File Title | Chapter 2 |

| Author | spowell |

| Last Modified By | #Administrator |

| File Modified | 2010-07-27 |

| File Created | 2010-07-27 |
