Supporting Statement B (Consumer Survey 2011)2-16-11

Consumer Survey

OMB: 3060-1144

OMB 3060-1144 February 2011

Title: Consumer Survey

B. Collections of Information Employing Statistical Methods:

The agency should be prepared to justify its decision not to use statistical methods in any case where such methods might reduce burden or improve accuracy of results. When item 17 on the Form OMB 83-I is checked "Yes," the following documentation should be included in the Supporting Statement to the extent that it applies to the methods proposed:

1. Describe (including a numerical estimate) the potential respondent universe and any sampling or other respondent selection methods to be used. Data on the number of entities (e.g., establishments, State and local government units, households, or persons) in the universe covered by the collection and in the corresponding sample are to be provided in tabular form for the universe as a whole and for each of the strata in the proposed sample. Indicate expected response rates for the collection as a whole. If the collection had been conducted previously, include the actual response rate achieved during the last collection.

The potential respondent universe is the U.S. population. The FCC contractor will send the survey to a random sample of the U.S. population, potentially obtaining information from an estimated 5,000 households.

The FCC contractor has hired the online survey company Knowledge Networks (KN) to conduct the survey. To have this study be both population-based and probability-based, while achieving the data quality benefits of the web mode of data collection, the only feasible approach is to use the KN web-enabled panel. The study requires interviews with that part of the population that does not have Internet access. The KN panel has sample coverage of non-Internet and low-income households while at the same time surveying a probability sample. KN provides free netbook computers and Internet service to panelists without home Internet access. KN selects the respondents from its existing panel members. Its panel members are drawn using its “Address-Based Sampling (ABS)” frame (see discussion under Point 3 below). KN’s panel is nationally representative with sample demographics that compare very favorably with those reported by the U.S. Census Bureau (2003).1 The contractor will use KN’s online panel to draw a random sample of households that is representative of the different media market structures at the Designated Market Area (DMA) level across the United States. DMAs are television markets in the United States.

There are several advantages to using an online survey company such as KN. First, its online survey instruments are especially well-suited for choice experiments and the dynamic presentation of follow-up questions. The survey methodology uses dynamic programming extensively to solicit accurate information, and this type of programming could not be efficiently conducted by other survey modes. Second, the contractor has worked with KN previously, and KN can augment its existing survey template so that it can be deployed very quickly.2 Third, because KN obtains high completion rates, KN’s survey costs are relatively low, and the sample data are collected in about two to four weeks. Fourth, KN also provides detailed demographic data for each respondent, which can be used in the study that the contractor will perform using the data collected in the Consumer Survey. Finally, because these data are already recorded, the field survey is considerably shorter, which helps ensure quality responses from the respondents.

2. Describe the procedures for the collection of information including:

Statistical methodology for stratification and sample selection,

Estimation procedure,

Degree of accuracy needed for the purpose described in the justification,

Unusual problems requiring specialized sampling procedures, and

Any use of periodic (less frequent than annual) data collection cycles to reduce burden.

Statistical methodology for stratification and sample selection: The sample will have two strata: 1) small television markets, which are markets with 5 or fewer TV stations, and 2) all other television markets. The FCC contractor is not calculating “market level” estimates of willingness to pay (WTP) for each of the 210 DMA markets. Instead, the contractor will be estimating the impact of different market structures (Xj in equation 6 on page 5 of this supporting statement) on an individual consumer’s WTP for the media environment (yij in equation 6 on page 5 of this supporting statement) they report that they have. As such, all the contractor needs is to have an adequate number of responses for different market structures. Because eight percent of TV households reside in TV markets with five or fewer TV stations, the contractor expects the conservative sample size of 4,000 households to contain about 280 to 320 households in these “small TV markets.” However, to increase the precision of the estimates in small TV markets, the contractor will have KN oversample small TV markets so they comprise ten percent of the sample, or about 400 observations.
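
The stratum sizes quoted above follow from simple arithmetic, which the short sketch below reproduces. It assumes the 4,000-household sample and the 8 percent and 10 percent shares stated in the text; the function and variable names are illustrative only and are not part of the contractor's or KN's procedures.

```python
def expected_households(sample_size: int, share_percent: int) -> int:
    """Households expected in a stratum that makes up share_percent of the sample."""
    return sample_size * share_percent // 100


SAMPLE_SIZE = 4000  # conservative sample size stated in the text

# Proportional allocation: small TV markets hold about 8% of TV households.
proportional = expected_households(SAMPLE_SIZE, 8)

# Oversampling: small TV markets are raised to 10% of the sample.
oversampled = expected_households(SAMPLE_SIZE, 10)

# Additional small-market observations gained by oversampling.
extra = oversampled - proportional

print(proportional, oversampled, extra)
```

The proportional figure corresponds to the upper end of the 280 to 320 range given in the text; the lower end would correspond to a share of about 7 percent.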

Estimation procedure: The FCC contractor is using choice experiments in the survey to estimate the value of selected features of a household’s media environment. These estimated values (an approximation to consumer satisfaction) will then be used as the dependent variable in a cross-sectional regression analysis of the effects of market structure on consumer satisfaction with their media.

Because the media environment is a mixture of public and private services, indirect valuation methods, such as those used in the environmental and transportation choice literature, are appropriate. The conjoint (or choice question) approach, which can be applied to products with or without a functioning market, has several important characteristics. Choice experiments can be designed so the end user considers the direct cost (i.e., the total of all the respondent’s monthly subscriptions to newspapers, satellite radio, cable or satellite TV, and the Internet, as well as any contributions to public radio stations, or COST) and indirect cost (i.e., the amount of advertising in the respondent’s media environment, or ADVERTISING). Moreover, the proposed experimental approach determines the levels of the features of each local media environment offered exogenously and avoids collinearity problems by offering hypothetical alternatives (e.g., Q52 of the Consumer Survey). For example, the levels for diversity (i.e., diversity of opinion in reporting information) and localism (i.e., amount of information on local news and events) change independently in the hypothetical alternatives, as opposed to market data where they often vary together almost perfectly, so that their separate effects cannot be precisely estimated.

The contractor has developed an experimental design for the choice experiments as shown on the survey. Measures developed by Zwerina et al. (1996) were used to generate an efficient non-linear optimal design for the levels of the features that comprise the media environment options.3 A fractional factorial design created 72 paired descriptions of the media environment, A and B, that are grouped into 9 versions (1, 2, 3, 4, 5, 6, 7, 8, and 9) of eight choice scenarios (or sets), with a single version to be randomly distributed to each respondent. See the Appendix to the survey for the table that details the values for the experimental design. The descriptions of the variables in this table are:

Alt – hypothetical media environment alternative (A, B);

Vers. – version (1, 2, 3, 4, 5, 6, 7, 8, or 9);

Set – set of eight choice questions within each version;

Advertising – level of advertising (low, medium or high);

Diversity of opinion – level of diversity (low, medium or high);

Community news – level of community news and events (low, medium or high);

Multiculturalism – level of multiculturalism (low, medium or high); and

Cost – cost of media environment ($).

Note that cost varies by five classifications, I, II, III, IV and V. Each individual respondent within a version will receive a cost classification based on the cost of their own actual media environment. For example, if the respondent has version 1, and has indicated that the cost of their actual media environment is $25 (“dov_amt”), the design will assign them to “Cost I.” We do this to ensure that the costs in the choice scenarios provide more realistic experimental values that better reflect the actual costs the respondent pays in the market. Continuing our example, the first row of the “experimental design” table shows that Alternative A will have low advertising, medium diversity, high community news, medium multiculturalism and a cost of $10.

The criteria for cost allocation are provided on page 8 of the survey, in the last row of Table 2:

Table 2. Features of Overall Media Environment

| Feature | Levels |
| --- | --- |
| Diversity of opinion | Only one viewpoint (Low); A few different viewpoints (Medium); Many different viewpoints (High) |
| Community news | Very little or no information on community news and events (Low); Some information on community news and events (Medium); A lot of information on community news and events (High) |
| Multiculturalism | Very little or no information reflecting the interests of women and minorities (Low); Some information reflecting the interests of women and minorities (Medium); A lot of information reflecting the interests of women and minorities (High) |
| Advertising | Barely noticeable (Low); Noticeable but not annoying (Medium); Annoying (High) |
| Cost | [KN insert appropriate cost range: $0 to $50 per month if $0 ≤ [DOV_AMT] ≤ $30; $5 to $100 per month if $30 < [DOV_AMT] ≤ $70; $5 to $150 per month if $70 < [DOV_AMT] ≤ $120; $10 to $200 per month if $120 < [DOV_AMT] ≤ $180; $10 to $250 per month if [DOV_AMT] > $180] |
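
The cost allocation rule in the last row of Table 2 can be expressed as a small lookup, sketched below. The thresholds and dollar ranges are taken from the table; the pairing of the five rows, in order, with classifications I through V is an assumption (the text confirms only that a DOV_AMT of $25 maps to Cost I), and the function name is hypothetical.

```python
def cost_range(dov_amt: float) -> tuple:
    """Map reported monthly media spending (DOV_AMT, in dollars) to
    (classification, lowest cost shown, highest cost shown).

    The I..V labels are assumed to follow the row order of Table 2.
    """
    if dov_amt < 0:
        raise ValueError("DOV_AMT cannot be negative")
    if dov_amt <= 30:
        return ("I", 0, 50)
    if dov_amt <= 70:
        return ("II", 5, 100)
    if dov_amt <= 120:
        return ("III", 5, 150)
    if dov_amt <= 180:
        return ("IV", 10, 200)
    return ("V", 10, 250)


# The respondent in the text reports $25 and is assigned to Cost I.
print(cost_range(25))
```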

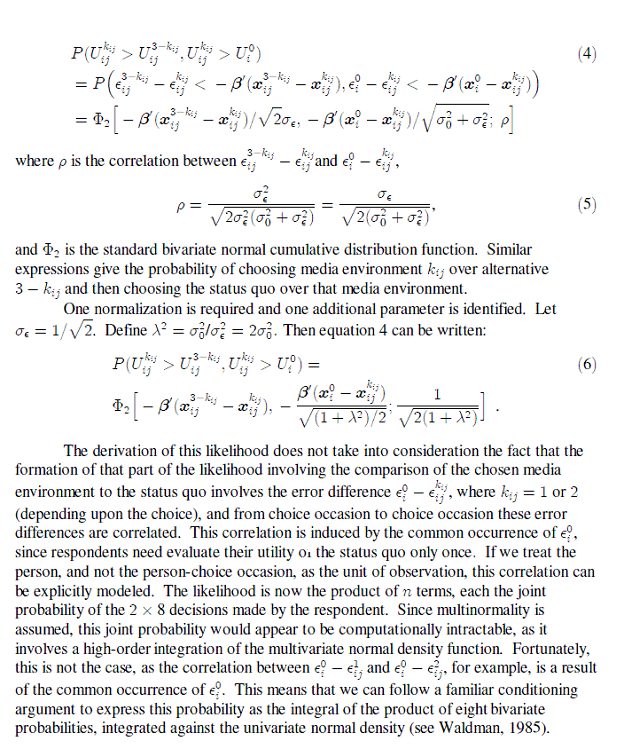

Because the data are from choice experiments, the FCC contractor will address any hypothetical bias. Hypothetical bias arises when the behavior of the respondent is different when making choices in a hypothetical market versus a real market.4 For example, the FCC contractor addresses this potential bias with a follow-up question (e.g., Q24 of the Survey) that asks respondents to make an additional choice between their hypothetical choice, A or B, and their actual service at home. This additional non-hypothetical market information is then incorporated into the likelihood function that is used to estimate marginal utility parameters.

A two-step estimation approach will be employed to answer the research questions. Step 1 will answer questions (i) through (iii):

(i) What are consumers willing to pay for the individual characteristics that comprise their local media environment: DIVERSITY (i.e., diversity of opinion in reporting information on news and current affairs), LOCALISM (i.e., amount of information on local news and events), MULTICULTURALISM (i.e., coverage of ethnic, gender, and minority related issues), and ADVERTISING (i.e., amount of advertising);

(ii) What are consumers willing to pay overall for media environment alternatives that are comprised of different levels of the features; and

(iii) How does WTP vary between different demographic groups?

Step 2 will answer question (iv):

(iv) How do differences in local market media ownership structure affect consumer satisfaction with their local media environment?

In Step 1, the random utility model is used to estimate marginal utilities and calculate WTP from the responses to the choice questions. Survey respondents are assumed to maximize their household’s utility of the media environment option A or B conditional on all other consumption and time allocation decisions. A linear approximation to the household conditional utility function is:

$$
U^* = \beta_1\,\mathrm{COST} + \beta_2\,\mathrm{ADVERTISING} + \beta_3\,\mathrm{DIVERSITY} + \beta_4\,\mathrm{LOCALISM} + \beta_5\,\mathrm{MULTICULTURALISM} + \varepsilon \tag{1}
$$

where U* is (unobserved) utility, β1 is the marginal disutility of COST, β2, β3, β4 and β5 are the marginal utilities for the media environment features, ADVERTISING, DIVERSITY, LOCALISM and MULTICULTURALISM, and ε is a random disturbance.

The parameters of the representative individual’s utility function and WTP for the above characteristics will be estimated with data from a survey employing repeated discrete-choice experiments. Each respondent will answer eight choice scenarios.5 In each choice scenario a pair of hypothetical media environments, labeled A and B, will be presented. Respondents will indicate their preference for choice A or B. This information is then enriched with market data by having respondents indicate whether they would stay with their current status quo (SQ) media environment at home, or switch to the hypothetical environment they had just selected.

The marginal utilities have the usual partial derivative interpretation: the change in utility (or satisfaction) from a one-unit increase in the level of the characteristic. Since the estimates of marginal utility do not have a readily understandable metric, it is convenient to convert these changes into dollar terms. This is done by employing the economic construct of willingness-to-pay, or WTP. For example, the WTP for a one-unit increase in DIVERSITY (e.g., the discrete improvement from “a single journalistic viewpoint” to “a few journalistic viewpoints”) is defined as how much more the media environment would have to be priced to make the consumer just indifferent between the old (cheaper but with fewer viewpoints) environment and the new (more expensive but with more viewpoints) environment:

$$
\begin{aligned}
&\beta_1\,\mathrm{COST} + \beta_2\,\mathrm{ADVERTISING} + \beta_3\,\mathrm{DIVERSITY} + \beta_4\,\mathrm{LOCALISM} + \beta_5\,\mathrm{MULTICULTURALISM} + \varepsilon \\
&\quad = \beta_1(\mathrm{COST} + \mathrm{WTP}_D) + \beta_2\,\mathrm{ADVERTISING} + \beta_3(\mathrm{DIVERSITY} + 1) + \beta_4\,\mathrm{LOCALISM} + \beta_5\,\mathrm{MULTICULTURALISM} + \varepsilon
\end{aligned}
\tag{2}
$$

where $\mathrm{WTP}_D$ is the willingness-to-pay for an improvement in DIVERSITY. Solving for $\mathrm{WTP}_D$ in equation 2 gives the required change in cost to offset an increase of β3 in utility:

$$
\mathrm{WTP}_D = -\beta_3/\beta_1 \tag{3}
$$

This approach is used for all other features. The WTP for ADVERTISING, LOCALISM and MULTICULTURALISM is the negative of the ratio of its marginal utility to the marginal disutility of COST.
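
The conversion from marginal utilities to dollar values can be sketched in a few lines. The coefficient values below are purely hypothetical (they are not estimates from this survey) and were chosen only so that the resulting WTP figures are round numbers; the function name is likewise illustrative.

```python
def wtp(marginal_utility: float, cost_coefficient: float) -> float:
    """WTP for a one-level increase in a feature: the negative of the ratio of
    its marginal utility to the marginal disutility of COST."""
    if cost_coefficient == 0:
        raise ValueError("the COST coefficient must be non-zero")
    return -marginal_utility / cost_coefficient


# Hypothetical marginal utilities, NOT estimates from the survey.
beta = {
    "COST": -0.25,
    "ADVERTISING": -2.5,
    "DIVERSITY": 2.5,
    "LOCALISM": 3.75,
    "MULTICULTURALISM": 6.25,
}

wtp_by_feature = {
    feature: wtp(value, beta["COST"])
    for feature, value in beta.items()
    if feature != "COST"
}
print(wtp_by_feature)
```

A negative WTP, as for ADVERTISING here, means the household would need to be compensated to accept one more level of the feature.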

Households may not have identical preferences. Preferences towards DIVERSITY, for example, may differ because of observable demographic characteristics, or may be idiosyncratic. The specification in equation 1 constrains the marginal utility parameters to be the same for all households. To relax this constraint, the contractor can estimate the WTP for DIVERSITY separately for different demographic groups based on, for example, age, disability, education, ethnicity, gender, income, etc. A model that captures this difference is:

$$
U^* = \beta_1\,\mathrm{COST} + \beta_2\,\mathrm{ADVERTISING} + (\beta_3 + \gamma\,\mathrm{EDUC})\,\mathrm{DIVERSITY} + \beta_4\,\mathrm{LOCALISM} + \beta_5\,\mathrm{MULTICULTURALISM} + \varepsilon \tag{4}
$$

where γ is an additional parameter to be estimated, and EDUC is education. While the WTP for a one-unit improvement in DIVERSITY is -β3/β1 when education is not important, when education is important, the WTP for a one-unit improvement in DIVERSITY is

$$
-\frac{\beta_3 + \gamma\,\mathrm{EDUC}}{\beta_1}, \tag{5}
$$

and is evaluated at different levels of education.
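
To illustrate how the education interaction changes the WTP for DIVERSITY, the sketch below evaluates the expression at two education levels. The parameter values are hypothetical, the name `gamma` for the interaction parameter on EDUC is an assumed label, and EDUC is coded here as a 0/1 indicator for high education, which is one of several possible codings.

```python
def wtp_diversity(beta_cost: float, beta_div: float, gamma: float, educ: float) -> float:
    """WTP for a one-level increase in DIVERSITY when its marginal utility
    depends on education: -(beta_div + gamma * educ) / beta_cost."""
    if beta_cost == 0:
        raise ValueError("the COST coefficient must be non-zero")
    return -(beta_div + gamma * educ) / beta_cost


# Hypothetical parameter values, NOT estimates from the survey.
BETA_COST = -0.25
BETA_DIV = 1.25
GAMMA = 6.25  # assumed name for the education interaction parameter

low_educ_wtp = wtp_diversity(BETA_COST, BETA_DIV, GAMMA, educ=0)
high_educ_wtp = wtp_diversity(BETA_COST, BETA_DIV, GAMMA, educ=1)
print(low_educ_wtp, high_educ_wtp)
```

Setting `gamma` to zero recovers the common WTP from the model without heterogeneity.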

The hypothetical utility of each service option U* is not observed. What is known is which option has the highest utility. For instance, when a respondent chooses media environment A over B and then SQ over A, it is assumed that $U^*_A > U^*_B$ and $U^*_{SQ} > U^*_A$. For this kind of dichotomous choice data, a suitable method of estimation is maximum likelihood (i.e., a form of bivariate probit) where the probability of the outcome for each respondent-choice occasion is written as a function of the data and the parameters. The experimental design uses variation both between and within subjects. The within variation comes from the eight repeated A-B choice questions plus the follow up and A-B vs. SQ choice questions (i.e., the choice between current home media environment and choice A or B). A detailed description of how the random utility model is estimated with maximum likelihood is included below:

For additional details and an application to the demand for broadband Internet, please see Savage and Waldman, 2008.6
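
As a rough illustration of writing the probability of each choice as a function of the data and the parameters, the sketch below computes a log-likelihood for the A-B choices alone. It is a deliberately simplified univariate probit with a unit-variance normal error on the utility difference; it is not the bivariate probit described above, it ignores the follow-up SQ question, and all names and data layouts are assumptions.

```python
import math


def normal_cdf(z: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def log_likelihood(beta, observations) -> float:
    """Log-likelihood of A-vs-B choices under a simple probit.

    beta: list of marginal utilities, one per feature.
    observations: list of (x_a, x_b, chose_a), where x_a and x_b are the
    feature levels of alternatives A and B and chose_a is True or False.
    """
    total = 0.0
    for x_a, x_b, chose_a in observations:
        # Deterministic utility difference between A and B.
        index = sum(b * (a - c) for b, a, c in zip(beta, x_a, x_b))
        p_a = normal_cdf(index)
        p = p_a if chose_a else 1.0 - p_a
        total += math.log(max(p, 1e-300))  # guard against log(0)
    return total


# One hypothetical choice occasion with two features (cost, diversity level).
occasions = [([10.0, 2.0], [20.0, 1.0], True)]
print(log_likelihood([-0.05, 0.5], occasions))
```

Maximum likelihood estimation would then search for the parameter vector that makes this quantity as large as possible.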

Step 2 examines empirically by cross-sectional ordinary least squares regression the effects of local media market structure on consumer satisfaction. The empirical model for consumer i = 1, 2, …, n in market j = 1, 2, …, J is:

$$
y_{ij} = \alpha + X_j\beta + Z_{ij}\delta + e_{ij} \tag{6}
$$

where yij is consumer satisfaction with their overall local media environment, Xj is a vector of ownership structure variables, Zij is a vector of controls for consumer and/or market factors influencing satisfaction, α, β and δ are parameters to be estimated, and the eij is an error term.

The WTP estimates from Step 1 are used to measure consumer satisfaction as each household’s total valuation of its local media environment (yij in equation 6). For this calculation, the FCC contractor uses the information from the cognitive buildup section of the survey to construct each respondent’s actual current media environment in terms of the levels of the features, ADVERTISING, DIVERSITY, LOCALISM and MULTICULTURALISM, that they report. For example, the contractor can assume a baseline household valuation (normalized to zero) for a media environment with a low level of all features: that is, low advertising, low diversity of opinion in reporting information, low information on local news and events, and low coverage of ethnic, gender, and other related issues. For all other media environments, the FCC contractor multiplies the marginal WTP estimate from Step 1 by the number of levels above low for each feature and sums these individual valuations for each individual consumer. For example, if the estimated marginal WTP for a one level increase in Advertising, Diversity, Localism, and Multiculturalism is -$10, $10, $15, and $25, respectively, a representative consumer’s total ($) valuation for their local media environment comprised of medium Advertising, medium Diversity, high Localism and medium Multiculturalism would be (1 x -$10) + (1 x $10) + (2 x $15) + (1 x $25) = $55.

Note that allowing for heterogeneous preferences in Step 1 provides an additional source of variation in the dependent variable in Step 2. For example, consider two respondents, Jane and John, who have the same media environment, but Jane has high education relative to John’s low education. Suppose Step 1 shows, all other things being equal, that high education respondents value Diversity at $30 while low education respondents value Diversity at $5. Using our calculation above, Jane’s total ($) valuation for her local media environment comprised of medium Advertising, medium Diversity, high Localism and medium Multiculturalism would be (1 x -$10) + (1 x $30) + (2 x $15) + (1 x $25) = $75. John’s total ($) valuation for his local media environment comprised of medium Advertising, medium Diversity, high Localism and medium Multiculturalism would be (1 x -$10) + (1 x $5) + (2 x $15) + (1 x $25) = $50.
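
The valuation arithmetic in the worked examples above can be reproduced with the short sketch below. It uses the marginal WTP values and the multipliers (1, 1, 2, 1) exactly as they appear in the examples; the function and dictionary names are illustrative assumptions.

```python
def total_valuation(marginal_wtp: dict, multipliers: dict) -> int:
    """Sum of marginal WTP times the level multiplier for each feature."""
    return sum(marginal_wtp[feature] * multipliers[feature] for feature in marginal_wtp)


# Level multipliers used in the worked examples.
multipliers = {"ADVERTISING": 1, "DIVERSITY": 1, "LOCALISM": 2, "MULTICULTURALISM": 1}

# Marginal WTP (dollars) for the representative consumer in the text.
representative = {"ADVERTISING": -10, "DIVERSITY": 10, "LOCALISM": 15, "MULTICULTURALISM": 25}

# Jane (high education) and John (low education) differ only in their WTP for Diversity.
jane = dict(representative, DIVERSITY=30)
john = dict(representative, DIVERSITY=5)

print(total_valuation(representative, multipliers))
print(total_valuation(jane, multipliers))
print(total_valuation(john, multipliers))
```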

The parameters of interest are contained in the vector β. If the null hypothesis that β equals zero cannot be rejected, this would be taken as evidence that a local media ownership structure variable has no effect on consumer satisfaction. The finding that β > 0 indicates that an increase in a structure variable increases consumer satisfaction. More specifically, suppose we estimated a parsimonious specification of equation (6) where consumer satisfaction is a function of a single element of X, say, the number of local broadcast stations currently owned by the largest firm in the market. The finding of βX = 1.2 would indicate that if, all other things constant, the largest firm in the market acquired one additional broadcast station, the overall satisfaction with the local media environment would increase by $1.20 for the average consumer. This information is extremely useful for policy analysis. It will not only inform the FCC on the effectiveness of its competition, localism and diversity goals, but will also provide a structural basis for economy-wide analysis of the welfare effects from alternative media policies.
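
The interpretation of the slope in the parsimonious specification can be illustrated with a single-regressor ordinary least squares fit. The data below are invented so that the fitted slope is 1.2; they are not survey results, and the specification omits the controls Z that the full model includes.

```python
def ols_slope_intercept(x, y):
    """Closed-form OLS estimates for y = intercept + slope * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical data: stations owned by the largest firm in each market, and
# the dollar valuation of the local media environment for a consumer there.
stations = [1, 2, 3, 4, 5]
valuation = [51.2, 52.4, 53.6, 54.8, 56.0]

slope, intercept = ols_slope_intercept(stations, valuation)
print(round(slope, 2), round(intercept, 2))
```

With these numbers, one additional station owned by the largest firm is associated with a $1.20 higher valuation.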

The FCC has used its licensing databases and commercial data sources to develop a detailed profile of each media market consisting of in excess of 100 ownership structure and consumer and/or market factors. The ownership structure variables of most relevance to the FCC's mission are those elements which Congress has given the FCC authority to regulate. These include the number of broadcast stations owned by an individual firm and the ability of a firm to own multiple types of media in a market. These elements are measured by the number of broadcast stations (radio and/or TV) owned by the largest owner in the market, the average number of broadcast stations (radio and/or TV) owned by a firm in the market, the number of media outlets that are owned by firms that own more than one type of media in the market, and the number of firms owning more than one type of media in the market. These effects are mediated by other market characteristics such as population demographics including income, pay-television subscriptions, high-speed internet subscriptions, and the number of media outlets in the market.

Degree of accuracy needed for the purpose described in the justification: In order to be able to confidently estimate consumer valuations of the media environment, the contractor will need to be able to estimate these values with a confidence interval of no more than 10%.

Unusual problems requiring specialized sampling procedures: The contractor expects no unusual problems requiring specialized sampling procedures.

Any use of periodic (less frequent than annual) data collection cycles to reduce burden: The contractor will not use any periodic (less frequent than annual) data collection cycles to reduce burden.

3. Describe methods to maximize response rates and to deal with issues of non-response. The accuracy and reliability of information collected must be shown to be adequate for intended uses. For collections based on sampling, a special justification must be provided for any collection that will not yield "reliable" data that can be generalized to the universe studied.

Describe methods to maximize response rates and to deal with issues of non-response. The survey is a web-based (or online) survey, which will allow KN to receive accurate survey answers from the respondents. By using a web mode, KN will be able to use special features of the mode of data collection to enable respondents to understand the survey tasks and questions, and therefore answer the survey questions more accurately and with less error than other survey modes. For example, in the practice choice question (Q22), respondents will compare a pair of alternatives, A and B, where one is dominated. Then, if the respondent chooses the dominated alternative, a screen will be displayed online that shows the mistake and educates the respondent on what alternative they should have chosen.

As reported by Callegaro and DiSogra (2008), the AAPOR cumulative response rate for KN, taking into account all phases of panel recruitment, profiling, and survey participation, is approximately 10%.7 This is low, on the order of the response rate for mail surveys. But it is deceptively low, for the following reason: the first refusal (to be part of the KnowledgePanel, agreeing to do various surveys in the future) is not related to the subject of this survey; it is a general refusal. While those who agree to be part of the panel may be different, their differences are measurable, and it is less likely that their responses on any particular survey will be different from the population as a whole. Those who refuse a particular survey, on the other hand, may have particular feelings about the subject of the survey, and hence a selected sample here is more important. Therefore, the contractor believes the 65%+ response rate, discussed below, is a better indicator of the response rate for the survey for this research.

The covered population for the panel recruitment is approximately 97% of the U.S. population as a result of the use of the ABS sampling. The uncovered population consists of persons with post office boxes and rural route addresses; business and institutional addresses (i.e., dormitories, nursing homes, group homes, jails, etc.); military housing; and multi-dwelling residential structures that have only a single address (called a drop point address) and for which there is no unit-level identifying information (mail is internally distributed). For a more detailed discussion of KN’s sampling scheme, see the “Best Practices for ICR Supporting Documentation for KnowledgePanel® Surveys appendix” attached to this supporting statement.

To be confident in the sampling scheme, it may be important to account for any systematic variation in important demographic variables between the population and the KN sample. The contractor will test the equality of means of demographic variables, such as age, race, gender, education and income, between their data and the most recent data from the Current Population Survey. The contractor will apply post-stratification weights to its KN sample and use weighted maximum likelihood estimation to estimate the econometric model.8 KN will supply weights according to their usual protocol, which includes two important steps. The first step is to develop panel base weights that correct for any bias emerging from their recruitment of the panel, that is, the entire set of households that have agreed to participate in surveys. The second step is survey-specific and uses an iterative raking procedure starting with the panel base weights to adjust for any survey non-response and any non-coverage due to the study-specific sample design. The characteristics that are considered at both stages are gender, age, race, Hispanic origin, educational attainment, Census region, Metropolitan area, Internet access, and language spoken at home (English/Spanish). For both of these steps, data are mainly taken from the most recent Current Population Survey (CPS), though other reliable sources must be used for Internet access and language spoken at home as these are not available in the CPS.
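
For readers unfamiliar with raking, the sketch below shows the general idea of an iterative raking (iterative proportional fitting) adjustment on two characteristics. It is a generic textbook version, not KN's actual weighting protocol; the respondent records, target shares and function name are all hypothetical.

```python
def rake(records, targets, max_iterations=100, tolerance=1e-10):
    """Adjust weights so that weighted shares match target shares.

    records: list of dicts with a 'weight' key plus one key per characteristic.
    targets: {characteristic: {category: target share}}, shares summing to 1.
    Returns a new list of weights; the input records are not modified.
    """
    weights = [r["weight"] for r in records]
    for _ in range(max_iterations):
        max_change = 0.0
        for variable, shares in targets.items():
            total = sum(weights)
            for category, share in shares.items():
                members = [i for i, r in enumerate(records) if r[variable] == category]
                current = sum(weights[i] for i in members)
                if current == 0:
                    continue  # no respondents in this category to adjust
                factor = share * total / current
                for i in members:
                    weights[i] *= factor
                max_change = max(max_change, abs(factor - 1.0))
        if max_change < tolerance:
            break
    return weights


# Hypothetical respondents with base weights of 1.
records = [
    {"gender": "F", "age": "young", "weight": 1.0},
    {"gender": "F", "age": "old", "weight": 1.0},
    {"gender": "F", "age": "old", "weight": 1.0},
    {"gender": "M", "age": "young", "weight": 1.0},
    {"gender": "M", "age": "young", "weight": 1.0},
    {"gender": "M", "age": "old", "weight": 1.0},
]
# Hypothetical population shares (in practice these would come from the CPS).
targets = {"gender": {"F": 0.5, "M": 0.5}, "age": {"young": 0.3, "old": 0.7}}

raked = rake(records, targets)
print([round(w, 3) for w in raked])
```

Each pass rescales the weights to match one characteristic at a time, and the passes are repeated until the adjustments become negligible.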

There are two reasons why the sample may not be representative of the population. In the first stage, there is recruitment into the KnowledgePanel, those households or individuals who have agreed to participate in surveys with KN. The acceptance rate here is low. In the second stage, the contractor’s survey is sent to a sample of respondents, some of whom participate. In this stage acceptance is high, on the order of 65% or greater. This is because the households the survey is sent to have agreed to do surveys for KN. The contractor will examine descriptive statistic comparisons of actual survey respondents and the invited KN panel.9

In order to maximize the response rate in the second stage, KN will send up to three email reminders to respondents who have not completed the survey in a timely manner. In KN’s experience, email reminders have achieved within-survey cooperation rates of 65 percent and greater. KN will also use the contractor’s university name in the email invitation and, if needed, place telephone reminder calls to non-responders. Finally, respondents will have a longer-than-usual period (two to four weeks) to reply. This will provide additional flexibility for respondents, which will in turn improve response rates. The contractor will also examine descriptive statistic comparisons of responders and nonresponders at this stage.10

KN will employ a technique available to them from long experience with their panel, which they refer to as “Modified Stratified Sampling with Completion Propensity Adjustment.”11 In this procedure demographic groups that tend to have lower completion rates are oversampled relative to demographic groups with high completion rates.

To minimize the problem at the first stage, the contractor will use only those households that are sourced from a sample frame called ABS. KN recommends that the KnowledgePanel sample be restricted to the ABS-sourced sample in order to provide the most representative sample possible. ABS-sourced sample is advantaged by providing improved representation of certain segments, particularly young adults, cell phone only households, and nonwhites. In addition, by restricting the sample to ABS, valuable ancillary person-level and household-level characteristic data are available for the ABS sample units, making possible a descriptive comparison of the characteristics of the entire invited sample and the subset of survey participants.

Randomly sampled addresses are invited to join KnowledgePanel through a series of mailings. Telephone follow-up calls are made to nonresponders when a telephone number can be matched to the sampled address. After initially accepting the invitation to join the panel, respondents are then “profiled” online, answering key demographic questions about themselves. This profile is maintained using the same procedures established for the random digit dialing (RDD)-recruited research subjects. Respondents not having an Internet connection are provided a laptop computer and free Internet service. Respondents sampled from the ABS frame, like those from the RDD frame, are provided the same privacy terms and confidentiality protections that KN has developed over the years and that have been reviewed by dozens of Institutional Review Boards.

The key advantage of the ABS sample frame is that it allows sampling of almost all U.S. households. An estimated 97% of households are “covered” in sampling nomenclature. Regardless of household telephone status, they can be reached and contacted via the mail. In addition, the ABS pilot project has other advantages beyond the expected improvement in recruiting young adults from cell phone-only households, such as improved sample representativeness for minority racial and ethnic groups and improved inclusion of lower educated and low-income households.

KN surveys are usually completed within a two-to-four week timeframe. All observations, regardless of when they arrive, will be treated equally. Because this is a cross-section, there is no issue of sample attrition.

The accuracy and reliability of information collected must be shown to be adequate for intended uses. To ensure that respondents will understand the features being presented in the choice experiments, the survey begins (Q1-Q21 of the Survey) with a cognitive buildup section that asks respondents some general questions about media, and some more specific questions about the features that comprise their actual media environment. The purpose of this section is to provide context and information ensuring that respondents understand the definitions of diversity, localism and multiculturalism, and are able to accurately reveal their preferences for these features in the choice task (Q22-Q51 of the Survey). The FCC contractor has employed a similar approach when estimating preferences for high-speed Internet in 2002 and obtained very credible valuations.12 Furthermore, the FCC contractor will be testing the Consumer Survey on two focus groups to assess the effectiveness of the cognitive build up section.

The three main features used to describe the media environment, DIVERSITY, LOCALISM and MULTICULTURALISM, have been chosen by the FCC because they are the features of interest from a policy perspective (see the last two sentences of paragraph 3 on page 6 of this supporting statement). To keep the descriptions of the levels consistent across the features, the contractor will continue to use Low, Medium and High in feature descriptions. However, the contractor will also provide a hyperlink at the top of each choice box in the online survey that permits respondents to review a more detailed summary of the levels of all features. For example, in Q22, a respondent who places the cursor over ADVERTISING would be presented with the following screen:

“With low advertising, the amount of space on a newspaper or web page, or the amount of air time devoted to commercial advertising on radio or TV, is barely noticeable. With medium advertising, the space or time devoted to advertising is more noticeable. With high advertising, the space or time devoted to advertising is very noticeable, to the point of being annoying when you are viewing or listening to your media source.”

The contractor favors the advantage of an Internet survey here: only those unsure of the advertising feature will click on the hyperlink and take the time to read the enhanced description, thus reducing potential survey fatigue.

Additionally, with respect to (direct) COST, the contractor arrived at the upper limit of $290 by adding together the cost of two newspaper subscriptions, a premium cable or satellite TV contract, a premium Internet contract, a satellite radio contract, and an annual membership to public TV or radio. The experimental design provides a range of costs within this upper limit that provides good variation in the data used to estimate preferences, in particular the marginal disutility of cost. The levels of COST in the experimental design are realistic enough that respondents will reasonably consider their choice.

4. Describe any tests of procedures or methods to be undertaken. Testing is encouraged as an effective means of refining collections of information to minimize burden and improve utility. Tests must be approved if they call for answers to identical questions from 10 or more respondents. A proposed test or set of tests may be submitted for approval separately or in combination with the main collection of information.

The contractor will conduct a focus group, a session of in-depth cognitive interviews, and a pre-test. The focus group will have approximately ten subjects and will be administered by the contractor in the seminar room of the Economics building at the University of Colorado at Boulder. The in-depth cognitive interviews will consist of five subjects and will be administered by RRC Associates at their premises in Boulder, Colorado. The pre-test will be administered online by KN to approximately 75 of their online panel members.

The purpose of the focus group is to get a preliminary estimate of average survey completion time and to solicit the first independent feedback from respondents in the “field.” The contractor will be diagnosing any potential problems with the overall structure of the survey and how it flows to the reader. The contractor will also examine potential problems with the gathering of information, specifically, the sequencing and interpretation of questions that ask respondents to indicate the level of each feature of their actual media environment. These are the two questions that immediately follow Q39, Q42, Q45 and Q48, respectively. The contractor has given this much thought and would like to simplify this sequence of questions. The contractor is considering two options and would like feedback from both focus groups before deciding on the most appropriate option:

Option 1 (example is DIVERSITY) uses the contractor’s algorithm to assign a “Low,” “Medium” or “High” level of DIVERSITY to each respondent’s overall media environment. The algorithm will calculate a score for the overall media environment by first multiplying the level, low (1), medium (2) or high (3), for each individual media source by the share of hours devoted to that media source, and then summing across all media sources. The overall score will be compared to values in a look-up table in order to assign “Low,” “Medium” or “High.” This option removes the two follow-up questions, here Q43 and Q44; and

Option 2 (example is DIVERSITY) reverses the order of the questions. That is, the survey first asks respondents to indicate their assessment of the level of diversity of opinion from their overall media environment (Q44), and then asks them to indicate the level of diversity of opinion for each individual media source (Q42).

Option 2 will be tested in the focus group (while option 1 will be tested during the in-depth cognitive interviews). More generally, the information obtained from the focus group will also be used to help structure the format and questions for the in-depth cognitive interviews.
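
The Option 1 scoring rule described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the contractor's implementation: the values in the look-up table are not given in this supporting statement, so the cut-offs in `ASSUMED_CUTOFFS` are hypothetical, as are the function names and the example hours.

```python
# Points for each level, as stated in the text: low (1), medium (2), high (3).
LEVEL_POINTS = {"Low": 1, "Medium": 2, "High": 3}

# HYPOTHETICAL look-up table: (upper bound of score, assigned level).
# The source does not state the actual cut-off values.
ASSUMED_CUTOFFS = [(1.67, "Low"), (2.33, "Medium"), (3.0, "High")]


def overall_score(sources):
    """Return the hours-weighted score for a respondent's media environment.

    `sources` is a list of (level, hours) pairs, one per media source.
    Each level's points are weighted by that source's share of total hours.
    """
    total_hours = sum(hours for _, hours in sources)
    if total_hours <= 0:
        raise ValueError("total hours must be positive")
    weighted_points = sum(LEVEL_POINTS[level] * hours for level, hours in sources)
    return weighted_points / total_hours


def assign_level(score, cutoffs=ASSUMED_CUTOFFS):
    """Map a score between 1 and 3 to a level using the look-up table."""
    for upper_bound, label in cutoffs[:-1]:
        if score <= upper_bound:
            return label
    return cutoffs[-1][1]


# Example: a respondent who splits time equally between a high-diversity
# source and a low-diversity source.
example = [("High", 10), ("Low", 10)]
print(overall_score(example), assign_level(overall_score(example)))
```

Under these assumed cut-offs, a respondent who splits hours equally between one high-diversity and one low-diversity source scores 2.0 and is assigned “Medium.” Different cut-off values would change where the boundaries between levels fall, but not the weighting step.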

For the focus group, the contractor will recruit ten subjects, comprising students, university administrators, professors and shopkeepers from the business district immediately surrounding the Boulder campus. They will be asked to bring with them their most recent bills from their newspaper, cable, Internet companies, etc. The subjects will complete a hard copy version of the draft survey together in the same room, uninterrupted, and at the same time. The hard copy version is the draft version of the survey attached to this supporting statement, but with all programming instructions to KN and electronic skip patterns removed from the document. After they have completed the survey, the contractor will record each subject’s completion time and ask them to write down their remarks on any aspect of the survey. There will then be an open discussion about the survey. At the conclusion of the session, each subject will be paid $25 and the contractor will collect and collate all of the materials. The session will be completed in about one hour.

From the in-depth cognitive interviews, the contractor will obtain more specific information about the beta version of the online survey from individual respondents. The questions that will be asked are included on a separate attachment.

For the in-depth cognitive interviews, RRC will recruit five subjects from the general population of Boulder County to be heterogeneous with respect to gender, age, income, and education. They will be asked to bring with them their most recent bills from their newspaper, cable, Internet companies, etc. Before the subjects arrive, the contractor will meet with the facilitator from RRC to review the survey and discuss the contractor’s objectives and goals for the session. The facilitator has previously had access to the survey and the contractor’s list of questions. They provide input on their role in the procedure.

Each subject completes the beta version of the online survey while being supervised by the facilitator. In contrast to the focus group, the facilitator informs the subject that she will, from time to time, ask for reactions to some of the questions. She also asks the subject to stop at any point during the survey if there is some uncertainty about the question being asked. At the conclusion of the session, each subject is paid $50 for their participation. The contractor will sit behind a one-way mirror observing the session. At any point the contractor can interrupt. In the past, the contractor has usually waited until the end of the session to be introduced as the researcher and ask any questions that may have arisen during the survey. The entire session for five subjects will take about five or six hours and is recorded on a DVD for further study.

The pre-test, with the final version of the online survey, will be facilitated by KN just prior to the survey going into the field. The pre-test group will consist of approximately 75 subjects randomly selected from KN panel members. The two main goals of the pre-test are to estimate average survey completion time, and to test technical and programming aspects of the online survey. Specifically, KN wants to be sure that all skip patterns and random assignments are working correctly, and that the data is being stored in the appropriate columns of the final database.

5. Provide the name and telephone number of individuals consulted on statistical aspects of the design and the name of the agency unit, contractor(s), grantee(s), or other person(s) who will actually collect and/or analyze the information for the agency.

The Co-Principal Investigators who designed this survey and are responsible for analyzing the data received from the respondents are Donald Waldman and Scott J. Savage. Professor Waldman can be reached at 303-492-6781, and Professor Savage can be reached at [email protected]. The survey contractor is Michael Dennis, KnowledgeNetworks.com. Mr. Dennis can be reached at (650) 289-2160.

1 U.S. Census information for 2009 can be found at http://factfinder.census.gov. For a description of the statistical technique used in the KN within-panel sampling methodology and evidence of sample representativeness of the general population, see M. Dennis, Description of Within-Panel Survey Sampling Methodology: The Knowledge Networks Approach (2009), available at http://www.knowledgenetworks.com/ganp/docs/KN-Within-Panel-Survey-Sampling-Methodology.pdf.

2 A list of selected OMB-reviewed research projects where KN was the data collection subcontractor is available at: http://www.knowledgenetworks.com/ganp/docs/OMB-Reviewed-Projects-List.pdf.

3 Zwerina, K., Huber, J., and Kuhfeld, W. 1996. “A General Method for Constructing Efficient Choice Designs,” in Marketing Research Methods in the SAS System, 2002, Version 8 edition, SAS Institute, Cary, North Carolina.

4 K. Blumenschein, G. Blomquist, & M. Johannesson, Eliciting Willingness to Pay without Bias using Follow-up Certainty Statements: Comparisons between Probably/Definitely and a 10-point Certainty Scale, 118 Econ. J. 114-137 (2008).

R. Cummings, & L. Taylor, Unbiased Value Estimates for Environmental Goods: A Cheap Talk Design for the Contingent Valuation Method, 89 Am. Econ. Rev. 649-665 (1999), available at: http://www.jstor.org/stable/117038.

J. List, Do Explicit Warnings Eliminate Hypothetical Bias in Elicitation Procedures? Evidence from Field Auctions for Sportscards, 91 Am. Econ. Rev. 1498-1507 (2001), available at: http://www.jstor.org/stable/2677935.

S. Savage & D. Waldman, Learning and Fatigue During Choice Experiments: A Comparison of Online and Mail Survey Modes, 23 J. of Applied Econometrics 351-371 (2008), available at http://spot.colorado.edu/~waldman/index_files/Learning%20and%20fatigue.pdf.

5 By asking respondents to complete multiple choice experiments, the contractor is able to increase parameter estimation precision and reduce sampling costs by obtaining more information on preferences for each respondent. Carson et al. (1994) review a range of choice experiments and find respondents are typically asked to evaluate eight choice questions. Brazell and Louviere (1997) show equivalent survey response rates and parameter estimates when they compare respondents answering 12, 24, 48 and 96 choice questions in a particular choice task. Savage and Waldman (2008) found there is some fatigue in answering eight choice scenarios when comparing online to mail respondents. To minimize survey fatigue in this study, the contractor is considering dividing the eight choice scenarios into two subgroups of four scenarios. Here, the respondent will be given a break from the overall choice task with an open-ended payment card question between the first and second set of four scenarios.

J. Brazell, & J. Louviere, Respondent's Help, Learning and Fatigue, presented at the 1997 INFORMS Marketing Science Conference, University of California, Berkeley.

R. Carson, R. Mitchell, W. Hanemann, R. Kopp, S. Presser & P. Ruud, Contingent Valuation and Lost Passive Use: Damages from the Exxon Valdez, Resources for the Future Discussion Paper, Washington, D.C. (1994).

S. Savage & D. Waldman, supra note 4.

6 S. Savage & D. Waldman, supra note 4.

D. Waldman, Computation in Duration Models with Heterogeneity, 28 J. of Econometrics 127-134 (1985).

7 M. Callegaro & C. Disogra, Computing Response Metrics for Online Panels, 72(5) Pub. Opinion Q. 1008-1031 (2008).

8 For a detailed description of the KN protocol, see “Knowledge Networks Statistical Weighting Practices,” June 1, 2010.

9 See Knowledge Networks, “Best Practices for ICR Supporting Documentation for KnowledgePanel Surveys,” December, 2010, p. 5 (Item 1 of Nonresponse Bias Measurement).

10 See Id. at 7 (Item 2). Due to survey length considerations, the contractor will not include additional questions in the survey in an attempt to “benchmark” responses against other surveys (Item 3), and due to cost considerations, the contractor will not attempt to directly measure nonresponse bias by attempting to contact nonresponders at this stage (Item 4).

11 See Id. at 4.

12 S. Savage & D. Waldman, supra note 4.

| File Type | application/msword |

| Author | Jessica.Campbell |

| Last Modified By | cathy.williams |

| File Modified | 2011-02-17 |

| File Created | 2011-02-17 |
