OMB Itemized Responses Final 11.29.12


Laura Bush 21st Century Librarian Grant Program Evaluation


OMB: 3137-0086

IMLS Responses to OMB’s November 20, 2012 Itemized Questions:

Laura Bush 21st Century Program Evaluation

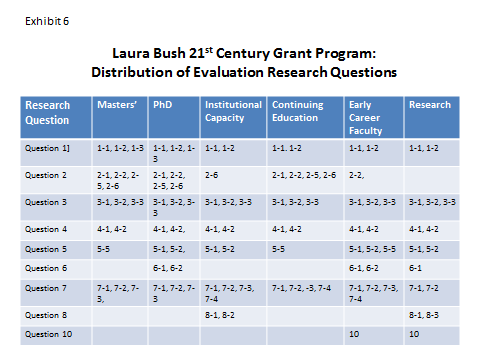

Item 1A. Specification of Research Questions

OMB Statement:

The clarification of the analysis and research plans in responding to questions 5 and 23 in the memo has made it very hard to tell exactly what research questions are being answered and how the survey questions map to those broader research questions.

Number of Questions: The text of the response to question 23 posits that IMLS has 9-17 research questions: 9 if going by the main numbers (and combining subparts), 17 if expanding out all the subparts to individual question status. The first column of Exhibit 6, in the response to question 5, suggests that there are 10 numbered research questions, but that one of them (9) “dropped out” for some unknown reason. The response to question 14 suggests that there are 6 research questions. Which is it, and what are the research questions?

IMLS Response:

Number of research questions: We apologize for the confusion regarding the research questions for the evaluation. There are nine sets of research questions. Originally, there also was a tenth set of questions (formerly Research Questions 9-1 and 9-2). These questions corresponded to a grant program category for pre-professionals. However, after this contract was awarded in late 2010, IMLS eliminated this grant subcategory, which made these research questions less valuable for future program planning; consequently, they were removed from further consideration in this study. Similarly, as the evaluation evolved, some individual questions in the second and fifth question sets (Questions 2-3, 2-4, 5-3 and 5-4) also were eliminated to narrow the scope of the investigation. The evaluation therefore has nine sets of research questions with 25 individual questions. The crosswalk between the general sets of research questions and their corresponding individual questions is as follows:

Question Set 1: Questions 1-1, 1-2 and 1-3

Question Set 2: Questions 2-1, 2-2, 2-5 and 2-6 (Questions 2-3 and 2-4 eliminated)

Question Set 3: Questions 3-1, 3-2 and 3-3

Question Set 4: Questions 4-1 and 4-2

Question Set 5: Questions 5-1, 5-2 and 5-5 (Questions 5-3 and 5-4 eliminated)

Question Set 6: Questions 6-1 and 6-2

Question Set 7: Questions 7-1, 7-2, 7-3 and 7-4

Question Set 8: Questions 8-1, 8-2 and 8-3

Question Set 9: (eliminated)

Question Set 10: Question 10
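As a consistency check on the crosswalk above, the following sketch transcribes it into a Python dictionary and confirms that the retained items total nine sets and 25 individual questions, and that every question number falls under its own set (the tiered pattern OMB observed in Exhibit 6). The names `CROSSWALK`, `summarize` and `check_tiering` are hypothetical and are introduced only for illustration; they are not part of the evaluation materials.

```python
# Hypothetical transcription of the crosswalk listed above.
# Set 9 and the eliminated items (2-3, 2-4, 5-3, 5-4) are omitted.
CROSSWALK = {
    1: ["1-1", "1-2", "1-3"],
    2: ["2-1", "2-2", "2-5", "2-6"],
    3: ["3-1", "3-2", "3-3"],
    4: ["4-1", "4-2"],
    5: ["5-1", "5-2", "5-5"],
    6: ["6-1", "6-2"],
    7: ["7-1", "7-2", "7-3", "7-4"],
    8: ["8-1", "8-2", "8-3"],
    10: ["10"],
}


def summarize(crosswalk):
    """Return (number of question sets, number of individual questions)."""
    n_sets = len(crosswalk)
    n_questions = sum(len(questions) for questions in crosswalk.values())
    return n_sets, n_questions


def check_tiering(crosswalk):
    """Raise AssertionError if a question ID does not start with its set number."""
    for set_no, questions in crosswalk.items():
        for q in questions:
            assert q.split("-")[0] == str(set_no), f"{q} is not in set {set_no}"


if __name__ == "__main__":
    check_tiering(CROSSWALK)
    sets, questions = summarize(CROSSWALK)
    print(f"{sets} question sets, {questions} individual questions")
```

Running the script prints "9 question sets, 25 individual questions", matching the totals stated in the response.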

The specific content for these questions is listed below:

Question Set 1

1-1. What is the range of LIS educational and training opportunities that were offered by grantees under the auspices of LB21 program grants?

1-2. How many new educational and training programs were created by the program?

1-3. What are the placement outcomes of master's (and doctoral) students?

Question Set 2

2-1. Among the sampled institutions, how many students received scholarship funds?

2-2. Were any parts of these scholarship programs sustained with university or private funds?

2-5. What were the important factors for success?

2-6. How effective were the various enhancements to the classroom activities that were provided by the grants (mentoring, internships, sponsored professional conference attendance, special student projects, etc.)?

Question Set 3

3-1. How many of the educational and training programs were sustained after the LB21 grant funds were expended?

3-2. What types of programs were sustained?

3-3. What resources, partnerships or collaborations were used to sustain these programs?

Question Set 4

4-1. Did these new scholarship or training programs have a substantial and lasting impact on the curriculum or administrative policies of the host program, school or institution?

4-2. If so, how were the curricula or administrative policies affected?

Question Set 5

5-1. What impact have these new programs had on the enrollment of master's students in nationally accredited graduate library programs? What impact have these LB21-supported doctoral programs had on librarianship and the LIS field nationwide?

5-2. How have LIS programs impacted the number of students enrolled in doctoral programs?

5-5. For LB21 master's programs with library partners and/or internships as a program enhancement, did the employment opportunities/outcomes of program participants improve as a result of program participation?

Question Set 6

6-1. What substantive areas of the information science field are LB21-supported doctoral program students working in? What substantive areas of the information science field are LB21-supported early career faculty members working in?

6-2. Do these programs prepare faculty to teach master's students who will work in school, public, and academic libraries, or prepare them to work as library administrators?

Question Set 7

7-1. What is the full range of "diversity" recruitment and educational activities that were created under the auspices of LB21 program grants?

7-2. What are the varied ways in which grant recipients have defined "diverse populations"?

7-3. Which of these programs were particularly effective in recruiting "diverse populations"?

7-4. What were the important factors for success?

Question Set 8

8-1. What is (are) the most effective way(s) to track LB21 program participants over time?

8-2. What is the state of the art in terms of administrative data collection for tracking LB21 program participation among grantee institutions?

8-3. How can social media technologies be employed to identify and track past LB21 program participants?

Question Set 10

10. What has been the impact of the research funded through the LB21 program?

We have amended Part B to include the full list of the 25 questions, broken out across the nine sets.

Item 1B. Mapping to Research Questions

OMB Statement:

Mapping to Research Questions: From the title, it’s not immediately clear what the cells of the table are supposed to refer to. The surrounding text and the title hint that it shows how many survey questions for a particular grantee group map to a particular research question. For that matter, it’s not clear what the bracketed codes after each question on the survey protocols mean, and whether they’re any part of this mapping; that doesn’t seem to be the case, because the Exhibit 6 number patterning seems strictly tiered within broader questions (i.e., all the entries in the “Question 3” row are of the form 3-x, and not 4-x or the like) while the bracketed codes in the survey protocols are all over the map (e.g., question 5 in the Continuing Ed/Diversity Theme protocol has multiple 1-x and 4-x tags). What are the tag numbers, in the protocols and Exhibit 6, supposed to mean?

IMLS Response:

OMB’s interpretation of Exhibit 6 is correct. The table maps the broad research questions (Questions 1 through 10) to a more detailed set of questions for each grant program category. For example, the questions under 1-1 document the types of educational and training opportunities offered by grantees and will be asked of grantees in all six grant categories. In contrast, questions related to 6-1 and 6-2, which document the research areas of expertise nurtured through IMLS financial support in this program, will be asked only of grantees in the doctoral program and early career faculty categories.

The numbers at the end of each question in each of the eleven interview protocols refer back to the numbers in the Exhibit 6 cells (relating to individual research questions). As OMB noted, there is not always a one-for-one correspondence between a cell item and a question contained in an interview protocol. This is intentional. Given the nature of the qualitative interview process, it is possible for respondents to provide information relevant to more than one research question when responding to a given question in the interview protocol. Similarly, it also is possible for more than one interview question in an interview protocol to provide evidence to address a single research question. The numbers after items in the interview protocol are there for the research team. They provided a reference point for the development of the instrument and will be used to guide the coding and reviewing of interview transcripts so that analysts know where to look for relevant data.

Item 2. Inter-Rater Reliability Protocols

OMB Statement:

The text in response to Q5 makes several references to “initial and periodic” checks wherein interviews would be processed by a separate human reviewer to measure inter-rater reliability. This kind of quality control checking is a good thing, but how periodic is “periodic”? Some specified fraction of a particular coder’s daily/total workload?

IMLS Response:

Periodic checks for inter-rater reliability are planned to take approximately five hours each week (about one hour each day) over the four weeks allotted to the members of the evaluation team assigned to coding and analysis. This equates to an estimated 12.5 percent of each reviewer’s total workload. The actual level of effort may vary depending on the extent of the discrepancies found between reviewers.
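The 12.5 percent figure can be reproduced with a short calculation. This worked example assumes a standard 40-hour work week (eight hours per day, five days per week); the response does not state this explicitly, but it is the reading under which one hour per day equals 12.5 percent of the workload.

```latex
\begin{aligned}
\text{weekly share} &= \frac{5\ \text{h}}{5 \times 8\ \text{h}} = \frac{5}{40} = 0.125 = 12.5\% \\
\text{four-week share} &= \frac{4 \times 5\ \text{h}}{4 \times 40\ \text{h}} = \frac{20\ \text{h}}{160\ \text{h}} = 12.5\%
\end{aligned}
```

Under this assumption, each coder would spend roughly 20 of 160 hours on reliability checks over the four-week coding period.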


| Author | Matthew Birnbaum |


| File Created | 2021-01-30 |
