Supporting Statement Part A_130102 docx


Experimental Program to Stimulate Competitive Research Jurisdictional Survey

OMB: 3145-0225

Supporting Statement A for

Jurisdictional Survey of Experimental Program to Stimulate Competitive Research (EPSCoR) Project Directors Made by the National Science Foundation, 1980 - Present

Date: November 21, 2012

Name: Dr. Denise Barnes

Address: National Science Foundation, Suite 940, 4201 Wilson Blvd, Arlington, VA, 22230

Telephone: 703-292-5179

Fax: 703-292-9047

Email: [email protected]

Contents

A.1. Circumstances Making the Collection of Information Necessary

A.2. Purpose and Use of the Information

A.3. Use of Information Technology and Burden Reduction

A.4. Efforts to Identify Duplication and Use of Similar Information

A.5. Impact on Small Businesses or Other Small Entities

A.6. Consequences of Collecting the Information Less Frequently

A.7. Special Circumstances Relating to the Guidelines of 5 CFR 1320.5

A.8. Comments in Response to the Federal Register Notice and Efforts to Consult Outside Agency

A.9. Explanation of Any Payment or Gift to Respondents

A.10. Assurance of Confidentiality Provided to Respondents

A.11. Justification for Sensitive Questions

A.12. Estimates of Hour Burden Including Annualized Hourly Costs

A.13. Estimate of Other Total Annual Cost Burden to Respondents or Recordkeepers

A.14. Annualized Cost to the Federal Government

A.15. Explanation for Program Changes or Adjustments

A.16. Plans for Tabulation and Publication and Project Time Schedule

A.17. Reason(s) Display of OMB Expiration Date is Inappropriate

A.18. Exceptions to Certification for Paperwork Reduction Act Submissions

List of attachments

Attachment 1: Experimental Program to Stimulate Competitive Research Jurisdictional Survey

Attachment 2: Introductory Email from NSF

Attachment 3: Introductory Email from Contractor

Attachment 4: Reminder Email

Attachment 5: Thank You Email

A.1. Circumstances Making the Collection of Information Necessary

The National Science Foundation (NSF) Act of 1950 (Public Law 507-81st Congress, as amended) stated that “… it shall be an objective of the Foundation to strengthen science and engineering research potential and education at all levels throughout the United States; and avoid undue concentration of such research and education, respectively.” This congressional directive recognized the inherent value of having a truly national scientific and engineering (S&E) research enterprise. Over time, however, the nation’s S&E efforts became concentrated on its two coasts and in a limited number of major research universities. NSF’s resources became correspondingly concentrated, to the point that in 1977 the NSF Director, Dr. Richard C. Atkinson, responded to congressional concerns by establishing a National Science Board (NSB) task force to examine the geographical distribution of NSF awards. The NSB voted to establish the Experimental Program to Stimulate Competitive Research (EPSCoR) in 1978. Five states (AR, ME, MT, SC, WV) received the first EPSCoR awards in 1980. EPSCoR eligibility criteria have changed over time; currently, 28 U.S. states, Puerto Rico, the U.S. Virgin Islands, and Guam participate in EPSCoR.

The mission of EPSCoR is to assist the National Science Foundation (NSF) in its statutory function "to strengthen research and education in science and engineering throughout the United States and to avoid undue concentration of such research and education."

EPSCoR goals are to:

provide strategic programs and opportunities for EPSCoR participants that stimulate sustainable improvements in their R&D capacity and competitiveness;

advance science and engineering capabilities in EPSCoR jurisdictions (see endnotes) for discovery, innovation, and overall knowledge-based prosperity.

The objectives of EPSCoR are to:

catalyze key research themes and related activities within and among EPSCoR jurisdictions that empower knowledge generation, dissemination and application;

activate effective jurisdictional and regional collaborations among academic, government and private sector stakeholders that advance scientific research, promote innovation and provide multiple societal benefits;

broaden participation in science and engineering by institutions, organizations, and people within and among EPSCoR jurisdictions;

use EPSCoR for development, implementation and evaluation of future programmatic experiments that motivate positive change and progression.

EPSCoR uses three major investment strategies to achieve its goal of improving the R&D competitiveness of researchers and institutions within EPSCoR jurisdictions. These strategies are:

Research Infrastructure Improvement Program:

Track-1 (RII Track-1) Awards. The RII Track-1 awards (NSF 12-563) are the single largest source of programmatic funding. RII Track-1 awards provide up to $4 million per year for up to five years. They are intended to improve the research competitiveness of jurisdictions by improving their academic research infrastructure in areas of science and engineering supported by the National Science Foundation and critical to the particular jurisdiction’s science and technology initiative or plan. Other Research Infrastructure Improvement awards are a) the RII Track-2 awards (NSF 13-509), which provided up to $2 million per year for up to three years as collaborative awards to consortia of EPSCoR jurisdictions to support research of regional, thematic, or technological importance; and b) RII C-2 awards (NSF 10-598), which provided up to $1 million for up to two years to support the enhancement of inter-campus and intra-campus cyber connectivity within an EPSCoR jurisdiction.

Co-Funding of Disciplinary and Multidisciplinary Research:

EPSCoR co-invests with NSF Directorates and Offices in the support of meritorious proposals from individual investigators, groups, and centers in EPSCoR jurisdictions that are submitted to the Foundation’s research and education programs, and crosscutting initiatives. These proposals have been merit reviewed and recommended for award, but could not be funded without the combined, leveraged support of EPSCoR and the Research and Education Directorates. Co-funding leverages EPSCoR investment and facilitates participation of EPSCoR scientists and engineers in Foundation-wide programs and initiatives. NSF EPSCoR co-funding accounts for roughly $38 million annually.

Workshops and Outreach:

The EPSCoR Office solicits requests for support of workshops, conferences, and other community-based activities designed to explore opportunities in emerging areas of science and engineering, and to share best practices in planning and implementation in strategic planning, diversity, communication, cyberinfrastructure, evaluation, and other areas of importance to EPSCoR jurisdictions (See NSF 12-588). The EPSCoR Office also supports outreach travel that enables NSF staff from all Directorates and Offices to work with the EPSCoR research community regarding NSF opportunities, priorities, programs, and policies. Such travel also serves to more fully acquaint NSF staff with the science and engineering accomplishments, ongoing activities, and new directions and opportunities in research and education in the jurisdictions.

The reauthorization of EPSCoR (under the America COMPETES Act of 2010, P.L. 111-358; 42 USC 1862p-9) directed the National Science Foundation to continue the EPSCoR program. Additionally, the reauthorization specified new evaluation and reporting requirements. One such requirement is for the EPSCoR Interagency Coordinating Committee (which is chaired by NSF) to develop metrics to “assess gains in academic research quality and competitiveness” (1862p-9(d)(2)(c)). This evaluation more generally, and the survey specifically, will contribute to developing assessment approaches and to applying them historically to the NSF EPSCoR program.

The current evaluation, conducted by the Science and Technology Policy Institute (STPI), is one of three evaluative activities underway. The first, a legislatively mandated study (under the America COMPETES Act of 2010, P.L. 111-358; specifically, 42 USC 1862p-9(f)), is being carried out by the National Academies and seeks to evaluate the active federal EPSCoR and EPSCoR-like programs. The second, entitled “EPSCoR 2030,” comes from within the NSF EPSCoR community itself. The third, the STPI evaluation, is the only one that is historical in nature, covering the entire lifespan of EPSCoR.

A.2. Purpose and Use of the Information

The objective of this evaluation is to perform an in-depth, life-of-program assessment of NSF EPSCoR activities and their outputs and outcomes and, based on this assessment, to provide recommendations for better targeting available funding to those jurisdictions for which the EPSCoR investment can result in the largest incremental benefit to their research capacity.

This evaluation will focus on progress in research competitiveness, infrastructure development (physical, human, and cyber), and broadened participation in Science, Technology, Engineering, and Mathematics (STEM) within EPSCoR jurisdictions over the period of their participation in the NSF EPSCoR program. Eligibility criteria for participation in NSF EPSCoR programs will be examined to identify changes that will enhance the effectiveness of the NSF EPSCoR investment toward strengthening research and education in STEM throughout the United States. The evaluation is designed to focus predominantly on the Research Infrastructure Improvement awards.

As mentioned above, the goal of the evaluation is to “provide recommendations for better targeting of available funding to those jurisdictions for which the EPSCoR investment can result in the largest incremental benefit to their research capacity.”

This objective drives the primary study questions:

Given a range of definitions of “research capacity” that EPSCoR investment is intended to enhance, what is the evidence that EPSCoR has (or has not) led to enhancement of that research capacity?

To the extent to which there is evidence that EPSCoR has led to the enhancement of research capacity, is there evidence that particular strategies are systematically more effective at developing sustainable research capacity than other strategies?

Is there evidence that there are specific institutional arrangements within EPSCoR jurisdictions that are more likely to promote the development of sustainable research capacity than others? Is there a minimum set of institutional capabilities that all EPSCoR jurisdictions must have in order to be effective?

Are there other ways of measuring sustainable “research capacity” that may be more appropriate for the future than current approaches?

NSF will use the results to guide the future management of the program. Depending on the results of the evaluation, changes may be made to:

Overall program goals and objectives

Criteria for EPSCoR eligibility

Specific uses of EPSCoR funding/EPSCoR programmatic activities

Size and duration of EPSCoR awards

EPSCoR award management, including future reporting and evaluation practices

Based on a literature review, the following have been identified as potential EPSCoR effects on research capacity in a jurisdiction:

EPSCoR has lasting effects on university research infrastructure

Fostering careers of individual faculty at those institutions

Formation of large teams/departments seen as being nationally/internationally competitive in a specific area

Provision of first-rate research infrastructure (including cyberinfrastructure as well as research equipment) that is used widely in a specific area

Formation of lasting collaborations and partnerships among faculty in state that continue beyond the lifetime of the award

Catalyzing the development of support functions (e.g., technology transfer offices, offices of sponsored research) and policies (e.g., faculty release time to conduct research) that facilitate faculty participation in research

EPSCoR influences the quality of STEM education in the jurisdiction, broadening the participation in the research enterprise and in the STEM workforce more broadly

EPSCoR supports efforts to motivate K-12 students to participate in STEM higher education

EPSCoR catalyzes the formation of pathways to STEM higher-education training from two-year colleges and high schools to universities

EPSCoR catalyzes the development of new curriculum tools/courses/degree programs

EPSCoR enhances the research capacity of four-year, non-doctoral institutions (e.g., predominantly undergraduate institutions, minority-serving institutions)

Through providing faculty with research experiences

Through enhancing the quality of teaching and infrastructure

Through creating pipelines from undergraduate degrees to higher education

Most broadly, EPSCoR catalyzes lasting effects on economic development that lead to jobs and revenue in the state

Intellectual property from research (either RII research or other) is licensed by existing firms or spun out into new firms

EPSCoR catalyzes workforce development efforts that lead to better trained/more productive workers

EPSCoR convenes university researchers, companies, and other partners (e.g., national labs, nonprofits, VCs), improving the climate for technology-intensive business in the state

EPSCoR research results catalyze public policy changes that improve the climate for economic development in the state overall

The study design is intended to collect information regarding the EPSCoR role with respect to each of these outcome categories.

This proposed information collection is distinct from the study being conducted by the National Academies. That study, which assesses all of the current EPSCoR and EPSCoR-like programs, is intended to complete its deliberations before the survey would be completed; its analyses are not intended to benefit from this data collection. In addition, there are not significant interactions since the goals of the two evaluations are quite different. The NAS study is across federal agencies and is expected to lead to recommendations to enhance coordination across federal agencies with EPSCoR programs. The STPI evaluation is an historical study of only the NSF EPSCoR program.

This proposed collection, and the evaluation more generally, is intended to identify potential future metrics for assessing the programs that fall under the aegis of the EPSCoR Interagency Coordinating Committee (which is chaired by NSF), as required by the America COMPETES reauthorization (1862p-9(d)(2)(c)). Results of this information collection will contribute to developing assessment approaches and to applying them historically to the NSF EPSCoR program.

A.3. Use of Information Technology and Burden Reduction

New data collection for the purpose of the evaluation will consist of an open-ended census survey of Project Directors (see endnotes) on NSF EPSCoR Research Infrastructure Improvement (RII) awards. The open-ended survey will be administered online using Qualtrics survey software. Qualitative data collected through Qualtrics will be analyzed using NVivo, a qualitative data analysis software tool which facilitates content analysis.

A.4. Efforts to Identify Duplication and Use of Similar Information

Every effort has been made to identify information relevant to the program that can be collected from existing sources rather than from a survey. Existing sources of information to be tapped as part of the larger study include: 1) EPSCoR program solicitations and other publicly-available documents that describe the EPSCoR program; 2) funding proposals submitted to NSF by EPSCoR jurisdictions; 3) annual reports submitted in compliance with NSF practices for awarded RII proposals; 4) statistical data obtained by NSF and other public and private sources; and 5) public and commercial databases for information on research outputs such as publications, patents, and licensing agreements.

There are four major data sources for the evaluation: longitudinal data, annual progress report analysis, the EPSCoR RII survey, and interviews with EPSCoR state committees.

The study will use time-series regression techniques to identify correlations between EPSCoR participation and changes in dependent variables of interest. The study will be relying on longitudinal data available from a mix of public and private sources, specifically:

Dependent variables

NSF Survey of Federal Science and Engineering Support to Universities, Colleges, and Nonprofit Institutions (Federal agency R&D obligations; NSF R&D obligations) – public source

NSF WebCASPAR (number of Science and Engineering PhDs granted) – public source

Harvard Patent Database, (Patents issued) – public source

Thomson Reuters Web of Science (publications, bibliometrics) – private source

Independent variables

Census Bureau (state population) – public source

Bureau of Economic Analysis (state GDP) – public source

Carnegie Foundation (university quality rankings) – data publicly available, though provided by non-profit organization

Time series analyses using a 0-1 EPSCoR participation dummy variable provide insight into the first of the primary study questions (whether EPSCoR is contributing to jurisdiction-level research capacity), but have strong limitations. It is a truism that correlation does not imply causation; a relationship between EPSCoR participation and changes in NSF funding levels, for example, could occur because investigators not participating in EPSCoR are responsible for the increase in funding. NSF’s funding databases provide information on investigators that receive awards from the Foundation. Matching EPSCoR participants to NSF funded PIs can be used as a measure of attribution of any observed correlations. Another limitation in using only the set of independent variables available longitudinally is that they do not offer insight into the other study questions, such as the relative success of particular EPSCoR strategies (e.g., hiring junior faculty, purchasing fixed equipment). A goal of the study is to collect additional data that can be parameterized and used as independent variables in the time series analyses.
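The role of the 0-1 participation dummy can be illustrated with a minimal sketch. The funding values below are hypothetical, made-up numbers for a single jurisdiction, and the one-variable regression is a simplification for illustration only; it is not the study's specification, which draws on additional independent variables such as state population and state GDP. With an intercept and a single dummy, the least-squares coefficient equals the difference between the mean outcome in participation years and in non-participation years.

```python
from statistics import mean


def dummy_coefficient(outcome, participates):
    """Least-squares slope of outcome on a 0-1 dummy (with intercept).

    For a single regressor the slope is cov(d, y) / var(d).
    """
    d_bar = mean(participates)
    y_bar = mean(outcome)
    cov = sum((d - d_bar) * (y - y_bar) for d, y in zip(participates, outcome))
    var = sum((d - d_bar) ** 2 for d in participates)
    return cov / var


# Hypothetical yearly funding index for one jurisdiction (illustrative values only).
funding = [10.0, 11.0, 12.0, 15.0, 16.0, 17.0]
# Dummy variable: 0 in years before EPSCoR participation, 1 in years after.
epscor = [0, 0, 0, 1, 1, 1]

effect = dummy_coefficient(funding, epscor)
print(f"Estimated dummy coefficient: {effect:.2f}")
```

In this toy series the coefficient also absorbs the underlying upward trend, which is one concrete form of the limitation noted above: the estimate shows an association with participation, not attribution to EPSCoR participants.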

In addition, longitudinal data are not available against which many of the outcome variables can be assessed. For such variables (e.g., EPSCoR role in motivating K-12 students to participate in STEM education, value of EPSCoR in catalyzing the development of university support functions) the study will instead collect data that is intended to identify what EPSCoR has achieved – even though that information may not be analyzable econometrically.

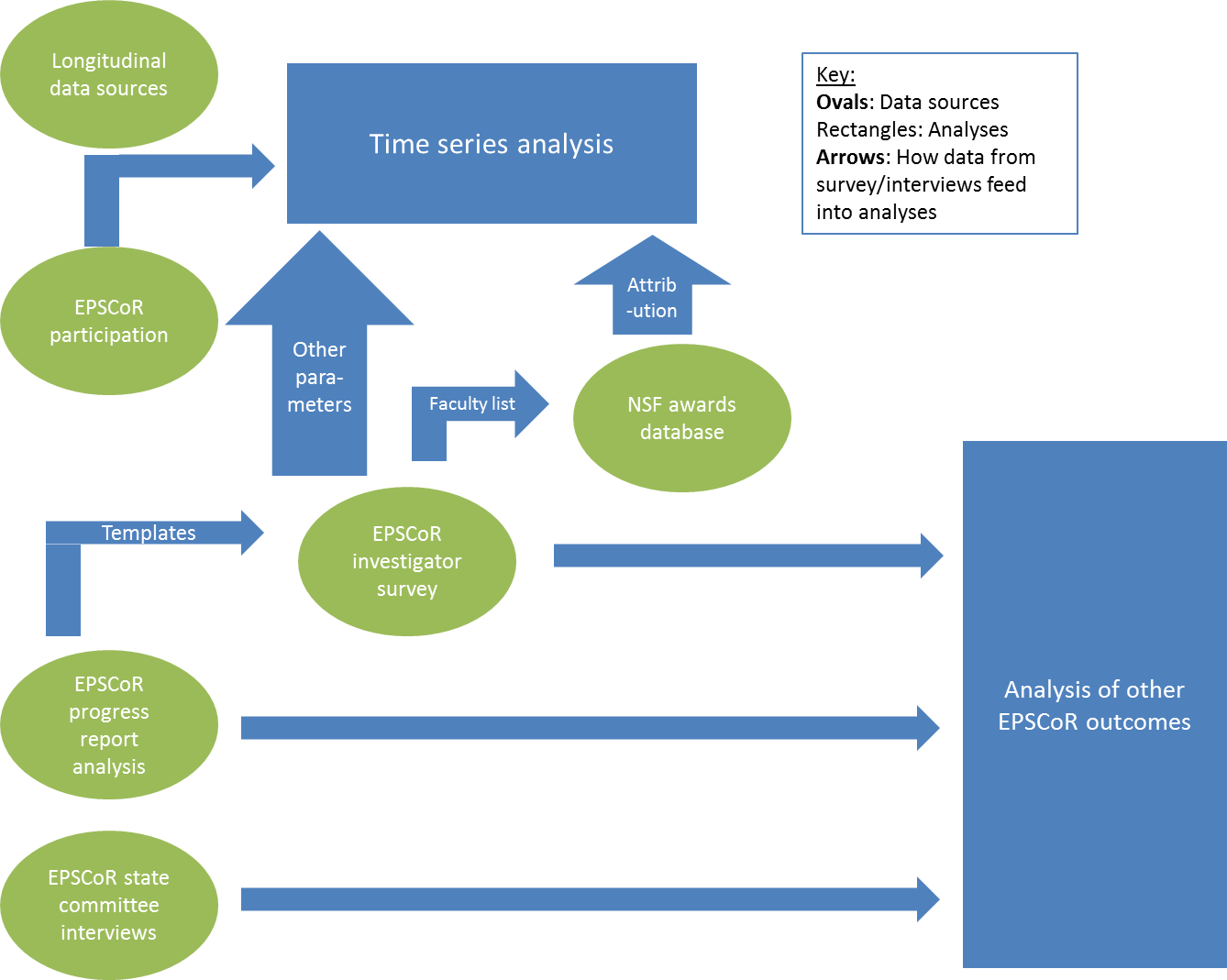

Three sources have been identified for collecting these data (figure 1):

The first is an analysis of EPSCoR proposals and annual reports. NSF maintains an electronic archive of such materials for EPSCoR awards dating back to the mid-1990s. Some additional awards’ materials (from the late 1980s and early 1990s) are available from the National Archives. These documents will be analyzed (using qualitative analysis software such as NVivo) for the purpose of collecting relevant information regarding each of the outcome categories mentioned above.

At the same time, there are several limitations of this approach. First, not all of the materials currently exist. Records from the early years of the program are not found either at NSF or in the National Archives, and there are also some gaps in the records available from the early- and mid-1990s. Relying solely on archival materials would leave out entire EPSCoR iterations.

Figure 1. Data collection and analysis plan

Second, the quality of annual reports as evaluation data sources varies from iteration to iteration and from jurisdiction to jurisdiction; annual reporting from earlier years tends to be more limited than are more recent reports. Even when reports are detailed, their intended purpose is not evaluative; as there has not been a single standardized program logic model that guides reporting and indicates to the jurisdictions what is of interest to NSF, jurisdictions may over-report, or under-report, with respect to relevant activities and outcomes.

In order to minimize burden on the investigator community, the reports are being analyzed for the purpose of extracting information that can be provided to the jurisdictions as a “first draft” of important information. The templates that will accompany the survey will have lists of information (e.g., hired faculty, graduate students, publications) that the jurisdictions can check – rather than asking them to compile information de novo.

At the same time, there is a need for collection of information in a standardized fashion with respect to program outcomes. The survey questions are intended to give each jurisdiction the opportunity to address, in structured fashion, the program’s outcomes. Similarly, the interviews with EPSCoR committee heads provide the opportunity to obtain, in structured form, answers to common questions regarding committee structure and function.

As figure 1 shows, therefore, the survey fills two functions. The first is to give the jurisdictions the opportunity to validate and correct data that have been extracted from the annual progress reports and EPSCoR proposals. These data will be parameterized and incorporated into the time series analyses, in some cases directly (e.g., number/quality of EPSCoR publications, number of hired faculty) and in some cases indirectly (to identify the likelihood that observed correlations between EPSCoR participation and changes in NSF funding are in fact associated with EPSCoR participants). The second is to collect information regarding other outcome variables of interest.

A.5. Impact on Small Businesses or Other Small Entities

No small businesses will be involved in this study.

A.6. Consequences of Collecting the Information Less Frequently

This will be a one-time only data collection.

A.7. Special Circumstances Relating to the Guidelines of 5 CFR 1320.5

The proposed data collection fully complies with all guidelines of 5 CFR 1320.5.

A.8. Comments in Response to the Federal Register Notice and Efforts to Consult Outside Agency

As required by 5 CFR 1320.8(d), comments on the information collection activities that are part of this study were solicited through publication of a 60-day notice in the Federal Register on October 27, 2010 (volume 75, number 207). No comments were received from members of the public.

For outside technical expertise, NSF has consulted with the Science and Technology Policy Institute (STPI) at the Institute for Defense Analyses (IDA), a federally-funded research and development center that will support the data collection and analysis. The staff at STPI includes experts in the evaluation of federal research and development programs who also have substantive knowledge of innovation, competitiveness, and research capacity building. Staff members consulted regarding data collection instruments and the study design include:

Dr. Rachel Parker, Research Staff Member, Science and Technology Policy Institute (202-419-5418)

Dr. Brian Zuckerman, Research Staff Member, Science and Technology Policy Institute (202-419-5485)

A.9. Explanation of Any Payment or Gift to Respondents

No payment or gift will be made to respondents as a part of this study.

A.10. Assurance of Confidentiality Provided to Respondents

Data gathered as part of this information request will be identifiable by the name and jurisdiction of the responding Project Directors. Participants will be informed in the introductory letter (Attachment 3) that the information they provide will be kept confidential except as required by law, that data collected by the Project Director in response to the open-ended survey questions will be reported to the contractor only in aggregate form, and that their participation is completely voluntary. The survey will be sent to each Project Director. Each jurisdiction has its own individual processes for storing historical data regarding EPSCoR awards, and so a variety of paths may be followed for completing the survey. In some jurisdictions, the Project Director may him/herself complete the survey; in others the Program Administrator or other EPSCoR staff (e.g., Education specialists, evaluators) may assist the Project Director; in other jurisdictions, members of the state committee, former EPSCoR participants, or other faculty members may become involved. As each jurisdiction is unique, discretion regarding how to best complete the survey is being left to the Project Director.

This data collection activity is exempt from 45 CFR 46 Regulations for Protection of Human Subjects because: a) the data will be reported to the contractor in aggregate and therefore individual participants will not be identifiable directly or through identifiers linked to the subjects; and b) because any disclosure of the human subjects' responses outside the research would not place the subjects at risk of criminal or civil liability or be damaging to the subjects' financial standing, employability, or reputation. An exemption has been approved by the contractor’s Human Subjects Review Committee on the grounds that disclosure is not likely to put subjects at risk. The following allows for this collection: 42 U.S.C. 1870 in addition to the NSF Privacy Act System of Records Notice (SORN) NSF-50 Principal Investigator/Proposal file and Associated Records.

A.11. Justification for Sensitive Questions

The proposed survey will not contain questions of a sensitive nature. On the survey, personally identifiable information will be limited to the names of the participants from whom Project Directors solicited input (if any) to complete the data call. Information gathered by the Project Director in support of any single response will not be tied to a particular individual. Responses given in reply to the pre-filled template will contain the names of individuals who have been supported by EPSCoR awards, for whom there is not currently complete accounting of participation in annual reports.

A.12. Estimates of Hour Burden Including Annualized Hourly Costs

As summarized in Table A.12, the information call will be distributed to the 29 RII Project Directors from each of the eligible and funded jurisdictions currently participating in NSF EPSCoR. There are no jurisdictions that are eligible but ultimately denied funding. All jurisdictions with EPSCoR RII funding will participate. A second activity reflected in the table involves conducting qualitative interviews with the chairs of each jurisdiction’s state committee. For the data call, the expected burden for participants will vary by jurisdiction (e.g., five states have been a part of EPSCoR since 1980). It is estimated that, on average, it should take approximately 40 hours to complete the prefilled template portion of the information call. The anticipated total annual burden to respondents is therefore 2,610 hours (1,160 hours to review and complete the prefilled templates, at 40 hours for each of the 29 jurisdictions, and 1,450 hours to complete the survey). Assuming an average hourly rate of $38.94 (based on an average annual salary for US researchers of $81,000), the annual (and total) cost to respondents is estimated at $101,633.40. It is estimated that, on average, it should take 1 hour to complete each interview with chairs of state committees. The anticipated total annual burden for the interviews is therefore 29 hours. Using the same estimated hourly rate, the cost to respondents should be $1,129.26 ($38.94 per interview). This burden estimate assumes a 100% survey response and interview participation rate. The effort is not mandatory for grantees, but it is nevertheless expected that all jurisdictions will participate. For nearly a year, NSF has publicized that the survey will be coming, and all Project Directors have been consulted regarding the survey’s design. RII Track-1 project teams have, as part of the Programmatic Terms and Conditions of their award, the expectation to cooperate with NSF EPSCoR program evaluation activities. Based upon past experience, we therefore believe that the response rate will be near unity. There are no Capital Costs to report.

Table A.12. Annualized Estimate of Burden

| Category of Participant | Expected number of participants | Average number of responses per participant | Average burden hours per template response | Estimated annual burden hours | Estimated hourly wage | Estimated annual cost to participants |
| Per Funding Iteration Jurisdiction level information call | | | | | | |
| EPSCoR RII PDs survey | 29 | 5 | 10 | 1,450 | $38.94 | $56,463.00 |
| EPSCoR RII template | 29 | 1 | 40 | 1,160 | $38.94 | $45,170.40 |
| Subtotal | 58 | - | - | 2,610 | - | $101,633.40 |
| Per Jurisdiction Interviews | | | | | | |
| State Committee Chair interviews | 29 | 1 | n/a | 29 | $38.94 | $1,129.26 |
| Subtotal | 29 | - | - | 29 | - | $1,129.26 |
| Total | 58 | n/a | 40 | 2,639 | $38.94 | $102,762.66 |
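The burden arithmetic can be checked with a short sketch. The hour figures and the $38.94 rate are taken from this section; the 2,080-hour work year used to convert the $81,000 salary into an hourly rate is an assumption made here for illustration (the statement does not say how the rate was derived), and the variable names are hypothetical.

```python
from decimal import Decimal

# Hourly rate as stated in this section.
HOURLY_RATE = Decimal("38.94")

# Assumption (not stated in the source): a 2,080-hour work year (52 weeks x 40 hours).
ASSUMED_HOURS_PER_YEAR = Decimal(2080)
derived_rate = (Decimal(81000) / ASSUMED_HOURS_PER_YEAR).quantize(Decimal("0.01"))

# Annual burden hours by activity, as reported in this section.
burden_hours = {
    "survey": 1450,
    "template": 1160,
    "interviews": 29,
}

# Cost per activity = burden hours x hourly rate.
costs = {name: hours * HOURLY_RATE for name, hours in burden_hours.items()}

information_call_cost = costs["survey"] + costs["template"]
total_hours = sum(burden_hours.values())
total_cost = sum(costs.values())

print(f"Derived hourly rate:   ${derived_rate}")
print(f"Information call cost: ${information_call_cost}")
print(f"Total burden hours:    {total_hours}")
print(f"Total respondent cost: ${total_cost}")
```

Using `Decimal` rather than floating point keeps the dollar amounts exact to the cent, which matters when the figures are carried forward into later cost tables.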

A.13. Estimate of Other Total Annual Cost Burden to Respondents or Recordkeepers

There are no Operating or Maintenance Costs to report.

A.14. Annualized Cost to the Federal Government

In addition to the cost to respondents described in A.12, total annual cost to the Federal Government for this data collection includes the services of a contractor to collect the data and government staff time to manage and support the contractor. The annual cost for the contractor, excluding data analysis and report preparation, is estimated at $50,000. It is estimated that approximately one week of NSF staff time will be associated with the conduct of this study. Using an average salary of $80,000 for NSF staff, this adds $1538.46 in costs.

Thus, total annual cost to the Federal Government is estimated at $51,538.46 (Table A.14). Since data collection will be completed in one year, the annual and total anticipated costs are the same.

Table A.14. Total Cost Burden of Information Collection

| Annualized Cost to Respondents (from A.12) | $102,762.66 |
| Annual Cost of Contractor’s Services | $50,000.00 |
| NSF Staff Time | $1,538.46 |
| Total | $154,301.12 |
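The cost totals can be reproduced as follows. This is a sketch under one stated assumption: that "one week of NSF staff time" is valued at 1/52 of the $80,000 average salary, which matches the reported $1,538.46 but is not spelled out in the text. Variable names are illustrative.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")

# Figures reported in sections A.12 and A.14.
respondent_cost = Decimal("102762.66")
contractor_cost = Decimal("50000.00")

# Assumption: one week of staff time = annual salary / 52 weeks.
nsf_staff_cost = (Decimal(80000) / 52).quantize(CENT, rounding=ROUND_HALF_UP)

# Cost borne by the Federal Government (contractor plus NSF staff time).
federal_cost = contractor_cost + nsf_staff_cost

# Total cost burden, which adds the cost to respondents.
total_cost_burden = respondent_cost + federal_cost

print(f"NSF staff time:    ${nsf_staff_cost}")
print(f"Federal cost:      ${federal_cost}")
print(f"Total cost burden: ${total_cost_burden}")
```

The sketch separates the two totals deliberately: the cost to the Federal Government covers only the contractor and staff time, while the table total also includes the annualized cost to respondents.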

A.15. Explanation for Program Changes or Adjustments

This is a new collection of information.

A.16. Plans for Tabulation and Publication and Project Time Schedule

Planning for this study began in August 2011. Assuming that clearance is granted in December 2012, the proposed survey and interviews will be fielded in January 2013. Results will be tabulated, and responses will be collated with other data collected for each jurisdiction. Given the high degree of qualitative information to be collected, only basic descriptive statistical analyses will be conducted on a subset of closed-ended questions. The primary emphasis of the survey is to enable in-depth analysis of qualitative data obtained through open-ended questions. A draft report on the survey findings will be developed and reviewed through the contractor’s internal peer-review process by May 2013. The survey results will likely be incorporated into a report summarizing the contractor’s findings related to the broader portfolio analysis, which will be delivered to NSF by December 2013. The estimated project schedule is summarized in Table A.16.

Table A.16. Estimated Project Schedule

| Activity | Anticipated Time Period |
| Collect and analyze data from existing sources | August 2011-March 2013 |
| Field survey and conduct interviews | Immediately after OMB approval (est. January 2013) |
| Analyze data and develop draft findings to incorporate into larger report | May 2013 |
| Final report to NSF | December 2013 |

A.17. Reason(s) Display of OMB Expiration Date is Inappropriate

No exceptions are sought; the OMB Expiration Date will be displayed on the survey instruments.

A.18. Exceptions to Certification for Paperwork Reduction Act Submissions

No exceptions are sought from the Paperwork Reduction Act or from form 83-I.

0 EPSCoR uses the term jurisdiction in lieu of state to be inclusive of the US territories which participate in the program (e.g. Puerto Rico).

0 Project Directors are the principal investigators/EPSCoR overall leaders. They are either senior academics or leaders of state EPSCoR offices. Program Administrators are administrative staff. They typically do not hold faculty positions.

0 Each project director will receive a two part information call. The first part is a predominantly open ended survey, the second, a pre-filled template.

0 This is based on an average number of funding iterations for all Research Infrastructure Improvement awards supported by NSF EPSCoR.

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| File Title | How To Prepare a Request For OMB Review |

| Subject | How To Prepare a Request For OMB Review |

| Author | BrierlyE |

| File Modified | 0000-00-00 |

| File Created | 2021-01-30 |
