SS Part B


Health Care Professional Survey of Prescription Drug Promotion

OMB: 0910-0730


B. COLLECTIONS OF INFORMATION EMPLOYING STATISTICAL METHODS

Respondent Universe and Sampling Methods

We propose a nationally representative sample of health care professionals that will yield 2,000 responses: 500 each from general practitioners, specialists, nurse practitioners, and physician assistants. This design will allow us not only to describe health care professionals’ perceptions generally but also to assess potential variations among different types of health care professionals. The sample will be recruited (see Appendix C for recruitment emails) from national Internet health care professional panels that include over 70,000 individuals originating from the American Medical Association (AMA) Physician Masterfile and other medical organizations. Because these panels do not include enough individuals to satisfy the needs of the proposed project, nurse practitioners and physician assistants will be specially recruited from relevant professional organizations.

Health care professionals are a difficult group to recruit, so several strategies will be used to achieve a high response rate. These include sending prenotification letters before the online invitation, lengthening the data collection period to 8 weeks (from the more typical 4 weeks), tailoring contact materials, disclosing FDA sponsorship on survey materials, and making reminder telephone calls. Appropriate weighting will be applied to adjust for survey nonresponse as well as for any noncoverage, undersampling, or oversampling resulting from the sample design.

For the entire study, 2,025 participants (25 for the pretest and 2,000 for the main study) will be recruited. These health care professionals will be recruited through two partners that Knowledge Networks frequently uses for health care professional surveys:

The Provider Care Network panel, and

OptIn

The Provider Care Network (PCN) panel recruits health care professionals using the American Medical Association (AMA) master list of physicians. Once recruited, physicians receive periodic surveys such as the one described here.

For this survey, PCN will conduct a random draw from its panel of physicians. PCN does not have sufficient numbers of physician assistants (PAs) or nurse practitioners (NPs) on its panel, so it will conduct a custom recruit in which PCN purchases a portion of the NP and PA universe from an approved AMA list. PCN mails invitations to these groups requesting their participation in the panel. To join, they must provide a valid email address, which will be used to send an email invitation to participate in the survey. PCN provides incentives to these recruits as it does with its current panel of NPs and PAs. Once custom recruits are members of the PCN panel, they are selected for a PCN survey as described above.

The OptIn panel, which will be used to supplement the PCN panel for NPs and PAs, is recruited through an invitation-only process. The firm partners with companies that have rewards programs (e.g., Ticketmaster, Pizza Hut, Blockbuster) and invites people to join the panel and earn "rewards currency" for their time. It uses email, direct mail to Web, and/or fax to Web to recruit individuals for studies (a multimode recruitment methodology). When recruiting individuals for studies through direct mail-to-Web and fax-to-Web invitations, the firm asks whether respondents wish to be contacted later for additional studies. Those who agree are opted in to an emailable panel of physicians and health care professionals. The firm also conducts stand-alone recruitment campaigns to expand its emailable resources. The multimode recruitment methodology allows the firm to reach up to 95 percent of the physician universe and to survey up to 15 percent of any given market.

Sample selection from the OptIn panel is conducted in the same way as the PCN panel: a random draw sufficient to meet the sample requirements after allowing for the expected cooperation rate from those individuals who meet the selection criteria.
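The draw-size logic above can be sketched in Python. This is a minimal illustration and not the panel vendors' actual procedure. The helper names are hypothetical, and it assumes a simple random sample without replacement. As expected cooperation rates it uses the 20 to 25 percent panel response rates reported elsewhere in this document.

```python
import math
import random


def draw_size(target_completes, expected_rate):
    """Invitations needed so that target_completes are expected at expected_rate."""
    if not 0 < expected_rate <= 1:
        raise ValueError("expected_rate must be in (0, 1]")
    # Round before ceil so floating-point noise cannot add a spurious case.
    return math.ceil(round(target_completes / expected_rate, 9))


def random_draw(eligible_ids, n, seed=None):
    """Simple random sample of n eligible panel members, without replacement."""
    if n > len(eligible_ids):
        raise ValueError("not enough eligible panel members for this draw")
    return random.Random(seed).sample(eligible_ids, n)


# Expected cooperation rates here are illustrative assumptions.
print(draw_size(500, 0.20))  # 2500 invitations for 500 completes
print(draw_size(500, 0.25))  # 2000
```

In practice, the eligible list would first be restricted to panel members who meet the selection criteria (e.g., occupation and prescribing authority).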

Weighting Procedures

The data will be weighted to the national population of physicians, nurse practitioners, and physician assistants who have prescribing authority. We will develop weights to adjust for known unequal selection probabilities, for unequal response rates, and for any remaining deviations between the sample and population distributions. In the final step, we will use poststratification to calibrate the sample distribution to known population distributions to reduce bias due to frame undercoverage. We expect poststratification to reduce undercoverage bias to some extent for the same reason that weighting adjustment reduces nonresponse bias: to the extent that the poststratification variables are related to both coverage and the survey outcomes, matching the sample to the population on those variables also corrects part of the bias in the outcomes. Population counts for use in poststratification will be obtained from the AMA Physician Masterfile and from Medical Marketing Service lists for nurse practitioners and physician assistants. For nurse practitioners and physician assistants, the available weighting variables include state of practice and specialty. For physicians, these variables include age, gender, specialty, practice setting (office based or hospital based), degree (MD or DO), and year of medical school graduation.
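Here is a minimal sketch of the poststratification step. It assumes one set of mutually exclusive cells (for example, specialty) and uses hypothetical population counts. In practice the counts would come from the AMA Physician Masterfile and Medical Marketing Service lists, and sparse cells would be collapsed first. Each weight is multiplied by the ratio of the population count to the weighted sample total in its cell.

```python
from collections import defaultdict


def poststratify(weights, cells, population_counts):
    """Scale weights so the weighted total in each cell equals its population count.

    weights: base weights (already adjusted for selection and nonresponse)
    cells: poststratification cell label for each case
    population_counts: dict mapping cell label -> known population count
    """
    totals = defaultdict(float)
    for w, c in zip(weights, cells):
        totals[c] += w
    unknown = set(totals) - set(population_counts)
    empty = set(population_counts) - set(totals)
    if unknown or empty:
        # Cells must match one-to-one; collapse sparse cells before calling.
        raise ValueError(f"cell mismatch: unknown={sorted(unknown)}, empty={sorted(empty)}")
    return [w * population_counts[c] / totals[c] for w, c in zip(weights, cells)]


# Hypothetical population counts for two cells.
adjusted = poststratify([1.0, 1.0, 1.0, 2.0], ["NP", "NP", "PA", "PA"],
                        {"NP": 100.0, "PA": 60.0})
print(adjusted)  # [50.0, 50.0, 20.0, 40.0]
```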

We will use a raking ratio approach to make the weighted sample distributions match those of the target population. We will then examine the final weights to determine whether truncation is necessary. Extreme weights inflate variance, so we will consider trimming them and redistributing the trimmed portion among the remaining cases to avoid losses in precision. In deciding how to truncate optimally, we will balance variance and bias to minimize the overall mean squared error.
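The raking and trimming steps can be sketched as follows. This is an illustrative implementation of raking ratio estimation (iterative proportional fitting) over marginal totals, plus a simple cap-and-redistribute trim. The margins, targets, and cap are hypothetical. The sketch does not show how to choose the cap so as to minimize mean squared error.

```python
def rake(weights, margins, max_iter=100, tol=1e-8):
    """Raking ratio estimation (iterative proportional fitting).

    margins: list of (labels, targets) pairs, one per raking variable, where
    labels gives each case's category and targets maps category -> population total.
    """
    w = list(weights)
    for _ in range(max_iter):
        largest_change = 0.0
        for labels, targets in margins:
            totals = {}
            for wi, lab in zip(w, labels):
                totals[lab] = totals.get(lab, 0.0) + wi
            # Ratio-adjust every case so this variable's weighted margin hits its target.
            for i, lab in enumerate(labels):
                factor = targets[lab] / totals[lab]
                w[i] *= factor
                largest_change = max(largest_change, abs(factor - 1.0))
        if largest_change < tol:  # every margin already matched on this pass
            return w
    raise RuntimeError("raking did not converge")


def trim(weights, cap, max_iter=50):
    """Cap weights at `cap`, spreading the excess proportionally over the
    uncapped cases so that the total weight is preserved."""
    if cap * len(weights) < sum(weights):
        raise ValueError("cap too low to preserve the weight total")
    w = list(weights)
    for _ in range(max_iter):
        excess = sum(max(0.0, wi - cap) for wi in w)
        if excess <= 1e-12:
            return w
        w = [min(wi, cap) for wi in w]
        uncapped = sum(wi for wi in w if wi < cap)
        w = [wi + excess * wi / uncapped if wi < cap else wi for wi in w]
    raise RuntimeError("trimming did not converge")


occupation = ["GP", "GP", "NP", "NP"]
sex = ["M", "F", "M", "F"]
raked = rake([1.0] * 4, [(occupation, {"GP": 60.0, "NP": 40.0}),
                         (sex, {"M": 50.0, "F": 50.0})])
print(raked)                                # [30.0, 30.0, 20.0, 20.0]
print(trim([1.0, 1.0, 1.0, 7.0], cap=4.0))  # [2.0, 2.0, 2.0, 4.0]
```

Trimming moves the weighted margins away from their targets. For that reason, the trim and rake steps are often alternated until both conditions hold.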

As in virtually all surveys, we anticipate that nonresponse will occur and could affect the quality of the data collected. To assess the impact of nonresponse, we will measure nonresponse bias by comparing the distributions of respondents with known population distributions. These comparisons will be limited to variables for which we have population information (e.g., sex, age, race/ethnicity).
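One simple way to summarize this comparison is to compute two things. The first is the gap, for each category, between the respondent share and the population share. The second is a Pearson chi-square goodness-of-fit statistic. The sketch below is illustrative only. The counts and shares are hypothetical, and it uses unweighted respondent counts, whereas design-based tests (for example, in SUDAAN) would account for the weights and the sample design.

```python
def distribution_gaps(respondent_counts, population_shares):
    """Respondent share minus population share, in percentage points, by category."""
    n = sum(respondent_counts.values())
    return {k: 100.0 * respondent_counts.get(k, 0) / n - 100.0 * p
            for k, p in population_shares.items()}


def chi_square_gof(respondent_counts, population_shares):
    """Pearson chi-square statistic comparing observed counts with counts
    expected under the population distribution (df = categories - 1)."""
    n = sum(respondent_counts.values())
    return sum((respondent_counts.get(k, 0) - n * p) ** 2 / (n * p)
               for k, p in population_shares.items())


# Hypothetical example: respondents by sex vs. population shares.
observed = {"female": 300, "male": 200}
population = {"female": 0.5, "male": 0.5}
print(distribution_gaps(observed, population))  # {'female': 10.0, 'male': -10.0}
print(chi_square_gof(observed, population))     # 20.0
```

With two categories (df = 1), a statistic above about 3.84 would be significant at the 0.05 level.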

Procedures for the Collection of Information

All parts of this study will be administered over the Internet. A total of 2,000 interviews will be completed. Participants will answer questions about their attitudes toward direct-to-consumer (DTC) advertising and professional prescription drug promotion, their perceptions of the Bad Ad program, and their use of new technologies, including social media (see Appendix B for the complete questionnaire). Demographic information will also be collected. The entire process is expected to take approximately 20 minutes. This will be a one-time (rather than annual) information collection.

Analysis Plan

Prior to the main analysis, we will perform an outlier analysis of the time spent on each screen visited and the total time to complete the survey. We will identify extreme completion times and make appropriate adjustments before the final data analysis. We will also assess the extent of missing information to determine data quality. Descriptive statistics will show the frequency of key responses, such as the number of respondents in a particular category reporting a negative outcome associated with a patient interaction involving discussion of an advertised drug. Potential differences among GPs, specialists, NPs, and PAs can be assessed with pairwise comparisons between groups. We will also produce national-level estimates of attitudes toward DTC advertising and other key measures. Regression will allow us to assess the effects of various independent variables on key outcomes while controlling for several variables simultaneously. We can use ordinary least squares regression for continuous outcomes and logistic regression for categorical outcomes. Regression also offers a framework for assessing potential moderating factors. One example is whether a health care professional’s own exposure to DTC advertising affects the relationship between years of practice experience and perceived benefits or harms of DTC advertising. For questions of mediation, structural equation modeling will allow us to build an overall path analysis model.
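The timing outlier check could be sketched as follows. The source does not specify a rule, so this example assumes one common choice: Tukey fences applied to log-transformed completion times, with a hypothetical multiplier k = 3. The log transform reduces the right skew typical of timing data. The same function could be applied to per-screen times.

```python
import math
import statistics


def flag_time_outliers(seconds, k=3.0):
    """Return True for each completion time outside Tukey fences on log(seconds)."""
    logs = [math.log(s) for s in seconds]
    q1, _, q3 = statistics.quantiles(logs, n=4)  # quartile cut points
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [x < low or x > high for x in logs]


# Hypothetical total completion times in seconds (about 20 minutes expected).
times = [900, 1000, 1100, 1200, 1300, 1150, 1050, 60, 20000]
print(flag_time_outliers(times))
# [False, False, False, False, False, False, False, True, True]
```

Flagged cases would then be reviewed, not dropped automatically, before any adjustment is made.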

We plan to compare various groups of respondents. For example, we will determine whether responses differ by occupation or by the demographic variables of sex, ethnicity, and number of years in practice. To make these comparisons, we will use analysis of variance (ANOVA) models and regression models where appropriate.
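For intuition, the one-way ANOVA F statistic for comparing mean scores across respondent groups can be computed directly, as in the sketch below. It uses hypothetical, unweighted scores. The planned analyses would instead use the survey weights and design information in SUDAAN or SPSS Complex Samples.

```python
def one_way_anova_f(groups):
    """One-way ANOVA: return (F, df_between, df_within) for lists of scores."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n
    means = [sum(g) / len(g) for g in groups]
    # Between-group and within-group sums of squares.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical agreement scores (1-5) for two occupations.
print(one_way_anova_f([[1, 2, 3], [4, 5, 6]]))  # (13.5, 1, 4)
```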

We will conduct analyses using SUDAAN (version 10) to account for the complexity of the study design resulting from weighting, clustering, and stratification. We will also reproduce some of the results in SPSS, using the Complex Samples add-on module to account for the complex survey design and to apply the analysis weights appropriately.
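SUDAAN and SPSS Complex Samples estimate design effects directly. Kish's approximation offers a rough, supplementary check on how much unequal weighting alone inflates variance. It is computed from the final weights as deff ≈ n Σw² / (Σw)², and the effective sample size is n / deff. The function name below is hypothetical. The approximation ignores clustering and stratification, so it gives only part of the picture.

```python
def kish_weighting_effect(weights):
    """Kish's design effect due to unequal weighting, and the effective sample size."""
    n = len(weights)
    total = sum(weights)
    sum_sq = sum(w * w for w in weights)
    deff = n * sum_sq / total ** 2
    return deff, n / deff


print(kish_weighting_effect([1.0, 1.0, 1.0, 1.0]))  # (1.0, 4.0)
print(kish_weighting_effect([1.0, 1.0, 2.0, 2.0]))  # deff about 1.11, effective n about 3.6
```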

Sample Size and Statistical Power

Power analysis with a power level of .80 and an alpha of .008

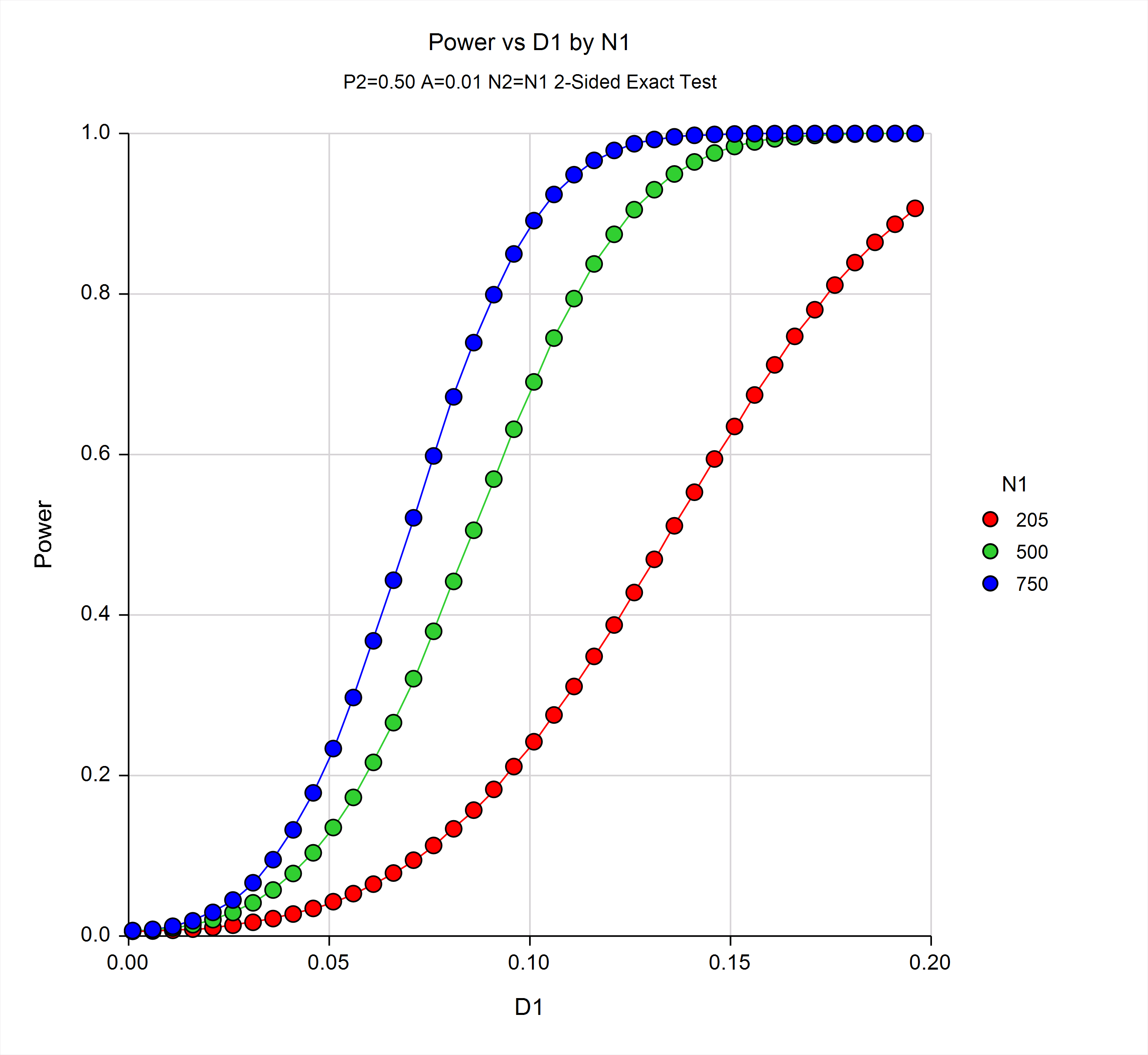

We will use pairwise comparisons to test for significant differences among GPs, specialists, NPs, and PAs. For between-group comparisons with sample sizes of 500 per group, we will be able to detect differences (D1) of 11 percentage points with a power level of 0.80 and an alpha of 0.008. With a power of 0.90, we will be able to detect differences of about 13 percentage points. This calculation assumes equal-sized samples (N1 and N2), a design effect of one, an alpha level of 0.008, and an underlying proportion of 0.50.

The graph above shows power and detectable differences for equal sample sizes of 250, 500, and 750. The y-axis is power (0.0 to 1.0), and the x-axis is the minimum detectable difference in percentage points.
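The detectable differences can be approximated with the standard normal-approximation formula for comparing two independent proportions:

D = (z_{1−α/2} + z_{1−β}) × sqrt(deff × 2p(1 − p) / n)

This sketch assumes a two-sided test, which the source does not state explicitly. With n = 500 per group, α = 0.008, power = 0.80, p = 0.50, and a design effect of 1, it gives about 11 percentage points. An alpha of 0.008 is close to 0.05/6, which would correspond to a Bonferroni adjustment across the six pairwise comparisons among four groups.

```python
import math
from statistics import NormalDist


def min_detectable_difference(n_per_group, alpha=0.008, power=0.80, p=0.50, deff=1.0):
    """Minimum detectable difference between two independent proportions
    (normal approximation, two-sided test, equal group sizes)."""
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)
    z_beta = std_normal.inv_cdf(power)
    se_diff = math.sqrt(deff * 2 * p * (1 - p) / n_per_group)
    return (z_alpha + z_beta) * se_diff


# Prints roughly 15.6, 11.0, and 9.0 percentage points for n = 250, 500, and 750.
for n in (250, 500, 750):
    print(n, round(100 * min_detectable_difference(n), 1))
```

At power 0.90, the same sketch gives roughly 12.4 percentage points. Small differences from the figure reported above can arise from the variance formula and rounding used. Setting deff above 1 shows how weighting and clustering would enlarge the detectable difference.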

Methods to Maximize Response Rates and to Deal with Issues of Nonresponse

This study will use existing Internet panels to draw a sample. The panels (described in B.1) comprise individuals who share their opinions via the Internet regularly. The panel response rate ranges from 20 percent for general practitioners to 25 percent for specialists. To help ensure that the participation rate is as high as possible, FDA and the contractor will:

Design a protocol that minimizes burden (short in length, clearly written, and with appealing graphics).

Administer the survey over the Internet, allowing respondents to answer questions at a time and location of their choosing.

Email a reminder to respondents who have not completed the survey 4 days after the original invitation to participate is sent.

Provide a toll-free hotline for respondents who may have questions or technical difficulty as they complete the survey.

In the absence of additional information, response rates are often used alone as a proxy measure for survey quality, with lower response rates taken to indicate poorer quality. However, lower response rates are not always associated with greater nonresponse bias (Groves 2006)1. Total survey error is a function of many factors, including nonsampling errors that may arise from both responders and nonresponders (Biemer and Lyberg 2003)2. A nonresponse bias analysis can be used to assess the potential for nonresponse bias in the survey estimates from the main data collection.
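The standard deterministic expression for the nonresponse bias of an unadjusted respondent mean makes this point concrete. In it, N is the number of sampled cases, M is the number of nonrespondents, \bar{y} is the full-sample mean, and \bar{y}_r and \bar{y}_m are the respondent and nonrespondent means:

```latex
\operatorname{Bias}(\bar{y}_r) = \bar{y}_r - \bar{y} = \frac{M}{N}\left(\bar{y}_r - \bar{y}_m\right)
```

The bias is the product of the nonresponse rate and the difference between respondents and nonrespondents. A survey with a high nonresponse rate can therefore still have little bias on a given estimate if respondents and nonrespondents are similar on that measure.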

Several studies with physicians have shown that higher response rates are not associated with lower nonresponse bias (Barton et al. 1980; McCarthy, Koval, and MacDonald 1997; McFarlane, Murphy, Olmsted, and Hill 2007; Thomsen 2000).3 Where bias did exist, women, nonspecialists (e.g., GPs), and younger physicians were more likely to respond to the survey (Barclay, Todd, Finlay, Grande, and Wyatt 2002; Cull et al. 2005; Kellerman and Herold 2001; Templeton, Deehan, Taylor, Drummond, and Strang 1997).4

There are several approaches to assessing the potential for nonresponse bias in this study, such as comparing response rates by subgroup, comparing respondents and nonrespondents on frame variables, and conducting a nonresponse follow-up study (OMB 2006). For the proposed project, we will take two steps: comparing response rates by subgroup and comparing respondents and nonrespondents on frame variables.

We will first identify the subgroups of interest, such as type of specialist, gender, or age. At the end of data collection, we will calculate response rates by subgroup. If response rates are similar across subgroups, nonresponse is unlikely to bias results with respect to those subgroup categories. For example, if the response rates for men and women are the same, the survey estimates for gender should not show a large nonresponse bias.
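Here is a sketch of the subgroup calculation, with hypothetical data and a hypothetical function name. It is simplified because it treats every sampled case as eligible. Formal response rate definitions, such as those published by AAPOR, also account for cases of unknown eligibility.

```python
from collections import Counter


def response_rates_by_subgroup(subgroups, responded):
    """Response rate (respondents / sampled cases) for each subgroup label."""
    sampled = Counter(subgroups)
    completes = Counter(g for g, r in zip(subgroups, responded) if r)
    return {g: completes[g] / sampled[g] for g in sampled}


# Hypothetical sample of five cases.
rates = response_rates_by_subgroup(["F", "F", "M", "M", "M"],
                                   [True, False, True, False, False])
print(rates)  # {'F': 0.5, 'M': 0.3333333333333333}
```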

The second step is to compare respondents and nonrespondents on frame variables. This can provide additional information about the potential for nonresponse bias, provided that frame information is available for all sample cases and is associated with the key survey estimates. At the end of data collection, we will review the sampling frame to determine whether any variables, such as age, are associated with the key survey estimates. We will then compare the frame information for the full sample with that for respondents only. Differences between the full sample and the respondents indicate potential bias. For example, suppose the median age of the full sample is 45 but the median age of the respondents is 60. If age is correlated with any of the survey estimates, those estimates are likely biased.
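Here is a sketch of the frame comparison. It uses a hypothetical frame variable (age), hypothetical response indicators, and a hypothetical function name. Like the example above, it compares medians. Whether a given gap matters still depends on how strongly the variable is associated with the survey estimates.

```python
import statistics


def compare_frame_variable(values, responded):
    """Median of a frame variable for the full sample vs. respondents only."""
    full_median = statistics.median(values)
    respondent_values = [v for v, r in zip(values, responded) if r]
    respondent_median = statistics.median(respondent_values)
    return full_median, respondent_median, respondent_median - full_median


ages = [40, 45, 50, 60, 65]
responded = [False, False, True, True, True]
print(compare_frame_variable(ages, responded))  # (50, 60, 10)
```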

Test of Procedures or Methods to be Undertaken

Before pretesting, nine participants will complete the survey while explaining their thoughts and responses, enabling us to identify obvious problems in questionnaire wording, programming, and study execution. We will then conduct pretests with 25 health care professionals before running the main study to ensure that the questionnaire wording is clear. Finally, we will run the main study as described elsewhere in this document.

Individuals Consulted on Statistical Aspects and Individuals Collecting and/or Analyzing Data

The contractor, RTI International, will collect the information on behalf of FDA as a task order under Contract No. HHSF223201110333G. Bridget Kelly, Ph.D., M.P.H., is the Project Director, 202-728-2098. Data analysis will be conducted by both RTI and the Research Team, Office of Prescription Drug Promotion (OPDP), Office of Medical Policy, CDER, FDA; and coordinated by Amie C. O’Donoghue, Ph.D., 301-796-0574, and Kathryn J. Aikin, Ph.D., 301-796-0569.

1 Groves, R., “Nonresponse Rates and Nonresponse Bias in Household Surveys,” Public Opinion Quarterly, vol. 70(5), pp. 646–675, 2006.

2 Biemer, P. and L. Lyberg, “Introduction to Survey Quality,” New York: Wiley, 2003.

3 Barton, J., C. Bain, C.H. Hennekens, et al., “Characteristics of Respondents and Nonrespondents to a Mailed Questionnaire,” American Journal of Public Health, vol. 70, pp. 823-825, 1980; McCarthy, G.M., J.J. Koval, and J.K. MacDonald, “Nonresponse Bias in a Survey of Ontario Dentists’ Infection Control and Attitudes Concerning HIV,” Journal of Public Health Dentistry, vol. 57, pp. 59-62, 1997; McFarlane, E. M., J. Murphy, M.G. Olmsted, et al., “Nonresponse Bias in a Mail Survey of Physicians,” Evaluation and the Health Professions, vol. 30(2), pp. 170-185, 2007; Thomsen, S. “An Examination of Nonresponse in a Work Environment Questionnaire Mailed to Psychiatric Health Care Personnel,” Journal of Occupational Health Psychology, vol. 5, pp. 204-2, 2000.

4 Barclay, S., S. Todd, I. Finlay, et al., “Not Another Questionnaire! Maximizing the Response Rate, Predicting Non-Response and Assessing Non-Response Bias in Postal Questionnaire Studies of GPs,” Family Practice, vol. 19, pp. 105-111, 2002; Cull, W., K.G. O’Connor, S. Sharp, et al., “Response Rates and Response Bias for 50 Surveys of Pediatricians,” Health Services Research, vol. 40, pp. 213-225, 2005; Kellerman, S. E. and J. Herold, “Physician Response to Surveys: A Review of the Literature,” American Journal of Preventive Medicine, vol. 20(1), pp. 61-67, 2001; Templeton, L., A. Deehan, C. Taylor, et al., “Surveying General Practitioners: Does a Low Response Rate Matter?,” British Journal of General Practice, vol. 47, pp. 91-94, 1997.

| File Type | application/msword |

| File Title | Health Care Professional Survey of Prescription Drug Promotion |

| Author | BRAMANA |

| Last Modified By | Gittleson, Daniel |

| File Modified | 2012-09-11 |

| File Created | 2012-09-11 |
