CJP OMB Supporting Statement 2-20-13 (Final OMB)


Census of Juveniles on Probation

OMB: 1121-0291

OMB Paperwork Reduction Act Submission

Census of Juveniles on Probation

Consisting of 1 Data Collection Form

OMB No. 1121-0291

Department of Justice, Office of Justice Programs, Office of Juvenile Justice and Delinquency Prevention

810 7th Street, NW

Washington, DC 20531

Brecht Donoghue, Research Coordinator, (202) 305-1270

Section 2: Supporting Statement for PRA Submission

PAPERWORK REDUCTION ACT SUBMISSION SUPPORTING STATEMENTS A AND B FEBRUARY 2013

Agency: Office of Juvenile Justice and Delinquency Prevention (OJJDP)

Title: Census of Juveniles on Probation

Form: CJ-17

OMB No.: 1121-0291 (current approval expired 3/31/2011)

This document presents Supporting Statements A and B for the 2012¹ Census of Juveniles on Probation (CJP). The primary goal of the CJP is to develop accurate and reliable statistics on the number and characteristics of youth on juvenile probation in the United States. OJJDP requests reinstatement, with change, of OMB collection #1121-0291. The CJP (Form CJ-17) was approved in 2009 and expired on 3/31/2011. OJJDP has spent the past year planning improvements to the collection.

Part A. Justification

1. Necessity of Information Collection

Supervising approximately 500,000 young people from about 1,000 offices, juvenile probation has aptly been termed the “cornerstone” of the juvenile justice system.2 Recognizing both the critical role of probation in the juvenile justice system and the dearth of systematic information available, OJJDP embarked in the late 1990s upon the first national effort to gather policy- and practitioner-relevant information on juvenile probation. The result was the National Juvenile Probation Census Project, a data collection project comprising two forms: the Census of Juveniles on Probation (CJP) and the Census of Juvenile Probation Supervision Offices (CJPSO). In 2009, the decision was made to separate the CJP and CJPSO into two distinct data collection efforts. This OMB package includes only the CJP; the CJPSO package is expected to be submitted to OMB in the summer of 2013.

OJJDP is authorized to conduct this data collection under the Juvenile Justice and Delinquency Prevention Act of 2002 (the JJDP Act) (see Attachment A). For purposes of this PRA request, the relevant part of the JJDP language reads as follows:

(b) Statistical Analyses.--The Administrator may--

(1) plan and identify the purposes and goals of all agreements carried out with funds provided under this subsection; and

(2) undertake statistical work in juvenile justice matters, for the purpose of providing for the collection, analysis, and dissemination of statistical data and information relating to juvenile delinquency and serious crimes committed by juveniles, to the juvenile justice system, to juvenile violence, and to other purposes consistent with the purposes of this title and title I.

--42 U.S.C. 5661

1 Although the survey will be administered in 2013, the reference date will be October 24, 2012.

2 OJJDP Model Programs Guide. http://www.ojjdp.gov/mpg/progTypesProbation.aspx

One important purpose of the CJP is to gather information that provides a comprehensive national picture of juveniles involved in the juvenile justice system and that is both complementary and comparable to OJJDP’s Census of Juveniles in Residential Placement (CJRP). The CJRP is a count of juveniles in residential placement as of a reference date, with details on each youth such as gender, age, race, and offense. Currently, there is no state or local data collection effort other than the CJP that is comparable to and consistent with the CJRP. The CJP is designed to be the probation counterpart to the CJRP, with a count of juveniles on probation as of a reference date. Many more youth receive probation as their disposition than residential placement, and as CJRP counts dropped dramatically from 120,563 in 1999 to 79,166 in 2010, policymakers and practitioners have an urgent need to understand the countervailing trends in juvenile probation and to consider them in concert with the information provided by the CJRP.

Currently, the National Center for Juvenile Justice (NCJJ), funded by OJJDP, collects court data from states that include some information about juveniles on probation, and some states publish this information. However, a significant drawback is that because the data come from the courts, the counts are at the case level, not the unduplicated youth level, so we do not know the actual number of youth on probation at any given point. As mentioned above, information on the unduplicated number of youth under supervision is important for program planning and policymaking purposes. Another drawback is that the data collection methodology (when, how, and who is counted) varies extensively among the states that publish their court data, so states are not able to draw comparisons among themselves. In addition, the court data that NCJJ collects present a cumulative count of the number of cases ordered to probation during a given year, rather than a count on a given day, making the data noncomparable to the CJRP (and potentially inflating the estimate as youth cycle in and out of the system during the year). Finally, due to data ownership policies, NCJJ publishes and releases only national estimates, not state-level court data. The CJP will provide state-level counts that are consistent and comparable across states as well as with the current CJRP. In turn, this will inform policy decisions at the federal level and will also benefit state policymakers--allowing them to compare themselves to other states, track trends, and establish a frame of reference for system reform.
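To illustrate how a cumulative annual case count and a one-day count of unduplicated youth can diverge, the sketch below computes both from the same small set of records. It is a minimal sketch under stated assumptions: the `ProbationCase` record, its fields, and the sample data are hypothetical and do not reflect the CJP instrument or any agency's records system; the reference date is the October 24, 2012 CJP reference date.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class ProbationCase:
    """Hypothetical case record; field names are illustrative only."""
    youth_id: str          # non-identifying, agency-assigned youth ID (assumed)
    case_id: str
    start: date            # date probation was ordered
    end: Optional[date]    # None if the youth is still under supervision


def annual_case_count(cases, year):
    """Court-style measure: every case ordered to probation during `year`."""
    return sum(1 for c in cases if c.start.year == year)


def one_day_youth_count(cases, ref_date):
    """Census-style measure: distinct youth under supervision on `ref_date`."""
    active_youth = {
        c.youth_id
        for c in cases
        if c.start <= ref_date and (c.end is None or c.end >= ref_date)
    }
    return len(active_youth)


REFERENCE_DATE = date(2012, 10, 24)

cases = [
    ProbationCase("A", "A-1", date(2012, 1, 10), date(2012, 6, 30)),  # closed before ref date
    ProbationCase("A", "A-2", date(2012, 8, 1), None),               # same youth, new case
    ProbationCase("B", "B-1", date(2012, 3, 5), date(2012, 9, 30)),  # cycled out before ref date
    ProbationCase("C", "C-1", date(2012, 10, 1), None),
    ProbationCase("C", "C-2", date(2012, 10, 1), None),              # concurrent cases, one youth
]

print(annual_case_count(cases, 2012))               # 5 cases
print(one_day_youth_count(cases, REFERENCE_DATE))   # 2 youth (A and C)
```

In this toy example, five cases ordered during the year correspond to only two youth under supervision on the reference date.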

While some of the data collected by the CJP may be available as aggregate counts from some states, OJJDP requires individual-level data to conduct analyses such as how the race/ethnicity of juveniles on probation varies by offense and gender, how female and male probation rates vary by race/ethnicity and state, and other analyses with important implications for policy and practice. This information will help track emerging trends (particularly among specific subgroups of juveniles, e.g., females and minorities), and it will also help identify differences among states and between individual states and the national estimates.
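The need for individual-level records can be shown with a small example: two hypothetical populations with identical aggregate counts by race/ethnicity and by offense can still differ completely in how race/ethnicity varies by offense. The category labels and records below are invented for illustration only.

```python
from collections import Counter

# Each record is one hypothetical youth: (race_ethnicity, offense_category).
population_1 = [("Black", "Person"), ("Black", "Property"),
                ("White", "Person"), ("White", "Property")]
population_2 = [("Black", "Person"), ("Black", "Person"),
                ("White", "Property"), ("White", "Property")]


def marginal(records, position):
    """Aggregate count for a single field, as a state might publish it."""
    return Counter(record[position] for record in records)


def crosstab(records):
    """Joint counts that only person-level records can produce."""
    return Counter(records)


# The published-style aggregates are identical for both populations...
print(marginal(population_1, 0) == marginal(population_2, 0))  # True (race)
print(marginal(population_1, 1) == marginal(population_2, 1))  # True (offense)
# ...but the race-by-offense distributions are not.
print(crosstab(population_1) == crosstab(population_2))        # False
```

The same reasoning extends to three-way tables such as race/ethnicity by offense by gender, which aggregate counts cannot reconstruct.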

2. Purpose and Uses

OJJDP anticipates that the CJP (and CJPSO) collections will become the backbone of the Office’s information collection efforts on juvenile probation. The CJP collects the most important data elements concerning juveniles on probation, including the number and characteristics of these juveniles, and it is the only collection that gathers comprehensive, national-level information about this population. While there are data on yearly delinquency cases resulting in probation as a court disposition from those courts that voluntarily choose to provide annual data to the National Juvenile Court Data Archive, there are no person-level data that can support characterization of this population and analyses of patterns and trends in the population. The juvenile probation population represents the largest population under juvenile justice supervision, and as such is a critical population for OJJDP to understand. Developing and improving the CJP directly responds to a recent recommendation by the National Research Council of the National Academies. Specifically, OJJDP “should develop a data improvement program on juvenile crime and juvenile justice system processing that provides greater insight into state, tribal, and local variations…At the state, local, and tribal levels, data should be collected on the gender, age, race, and ethnicity of offenders as well as offense charged or committed; arrest, detention, and disposition practices.1”

OJJDP intends to use the CJP information in a variety of ways and anticipates this information will also be extremely useful to policymakers at the state and local levels. Understanding the changing demographics of juvenile probation, as well as emerging trends, will allow OJJDP to improve the support and guidance it offers to the field. Several of OJJDP’s national training and technical assistance providers currently offer resources related to juvenile probation, and as a better understanding of the juvenile probation population emerges, OJJDP plans to develop new, targeted tools and information for juvenile probation professionals. For example, in light of data suggesting that the proportion of girls in the juvenile justice system is growing, OJJDP has begun working with its National Girls Institute to promote evidence-based, gender-specific programs and practices. If this trend is reflected in the 2012 CJP data, the case may be made to expand this work to include gender-specific probation programming. In addition, CJP data will be helpful to the states that receive OJJDP formula funds as they develop their 3-year juvenile justice plans, by helping them understand whether their juvenile probation population is comparable to those in other states and to the national estimates. Finally, by considering CJP data together with the forthcoming information that will be available through the planned CJPSO, states will be able not only to compare trends in their juvenile probation populations, but also to make connections between those demographic data and the policies and practices of their probation supervision offices (e.g., use of intensive supervision, specialized services, and treatment).

As previously discussed, the CJP is designed to complement the Census of Juveniles in Residential Placement (CJRP), which collects similar data on the population of juveniles in residential placement. Together, these two OJJDP data collections provide the only comprehensive national data sources describing the population and characteristics of those involved with the juvenile justice system. The CJRP has demonstrated that findings based on person-level data are the most requested by the juvenile corrections field.

The CJP allows for a national description of the population of youth supervised by probation offices. The CJP collects information about:

Offense characteristics of youth on formal court-ordered probation,

Offense and youth locations (e.g., city, county, state),

Race/ethnicity of these youth, and

Age and gender distributions.

Only data at this level can support the kinds of analyses that OJJDP is mandated to conduct as part of its mission to provide Congress, other Federal agencies, and the public with an understanding of the numbers, characteristics, and circumstances of justice-involved youth. OJJDP will use the information from the CJP data in the following ways:

To learn more about how states and localities use juvenile probation as a sanction and monitoring tool;

To compare how states and localities utilize juvenile probation as a sentencing option;

To compare the number and characteristics of juveniles under community supervision with those of juveniles receiving other post-adjudication dispositions;

To compare juvenile probation rates among the states;

To compare characteristics of juveniles on probation among the states;

To identify the unique issues of minorities and females in the juvenile justice system.
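Comparing probation use across states depends on rates rather than raw counts. The sketch below assumes a rate expressed per 100,000 juveniles in the state's population (for example, youth ages 10 through the upper age of juvenile court jurisdiction). The denominator definition and both states' figures are hypothetical, not CJP results.

```python
def rate_per_100k(on_probation: int, juvenile_population: int) -> float:
    """Youth on probation per 100,000 juveniles (denominator definition assumed)."""
    if juvenile_population <= 0:
        raise ValueError("juvenile_population must be positive")
    return on_probation * 100_000 / juvenile_population


# Hypothetical states: the larger count does not imply the higher rate.
state_x = rate_per_100k(3_000, 600_000)   # 500.0
state_y = rate_per_100k(1_200, 150_000)   # 800.0
print(state_x, state_y)
```

Normalizing by population in this way allows a small state with a modest caseload to be compared with a much larger one.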

OJJDP expects to produce publications that summarize the data findings (as either Fact Sheets or OJJDP Bulletins) for the juvenile justice field. In addition, the National Center for Juvenile Justice (a subcontractor on this project) will prepare an Easy Access online data tool and add the CJP data to the OJJDP Online Statistical Briefing Book (www.ojjdp.gov/ojstatbb/). The data will also be archived and available to the field at the National Archive of Criminal Justice Data (www.icpsr.umich.edu/icpsrweb/NACJD/) through an agreement with the Inter-university Consortium for Political and Social Research (ICPSR).

3. Use of Information Technology

OJJDP considers automated data collection and submission an important avenue for data collection. The 2012 CJP data collection will be fully automated, flexible, and accessible to respondents. Respondent burden will be kept low by offering several options for data submission: an online web survey; direct upload of data to a secure FTP site; and secure emailing, mailing, or faxing of forms or study-provided templates. In addition, respondents will be able to provide entire “data dumps” (complete uploads of their files), which the project will immediately de-identify before extracting the pertinent data elements. Finally, the online web survey will allow continued access to the automated form, so that respondents can enter data over return visits spanning several weeks rather than being required to complete the submission in one sitting. Respondent burden will also be reduced through extensive respondent support, including a toll-free Help Desk phone line and a dedicated project email address. See Attachment B.1 for the paper version of the data collection instrument containing all instructions and data elements requested. Please note that while Attachment B.1 reflects the final version of the instrument (the text and question sequence will not change), the final instrument will be in color and have a more professional appearance. Please see Attachment B.2 for screen shots of the web log-in page and Section 1 of the instrument.
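One way to handle a “data dump” so that identifying fields never enter the analysis file is to keep only an explicit allow-list of pertinent columns. The sketch below assumes a CSV export. The column names in `PERTINENT` and `IDENTIFYING` are hypothetical, and this is not a description of the project's actual de-identification procedure.

```python
import csv
import io

# Hypothetical column names; real agency exports will differ.
IDENTIFYING = {"full_name", "ssn", "street_address"}
PERTINENT = ["local_id", "sex", "birth_date", "race_ethnicity", "offense_code"]


def deidentify_and_extract(raw_csv, keep=PERTINENT, identifying=IDENTIFYING):
    """Return one dict per row containing only allow-listed, non-identifying columns."""
    overlap = set(keep) & identifying
    if overlap:
        raise ValueError(f"identifying columns in allow-list: {sorted(overlap)}")
    reader = csv.DictReader(io.StringIO(raw_csv))
    # Columns absent from the export (or missing in short rows) become empty strings.
    return [{col: row.get(col) or "" for col in keep} for row in reader]


raw = (
    "full_name,ssn,local_id,sex,offense_code\n"
    "Jane Doe,000-00-0000,X17,F,BURG\n"
)
print(deidentify_and_extract(raw))
```

An allow-list is used here instead of a block-list so that unexpected extra columns in a dump are dropped by default.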

4. Efforts to Identify Duplication

Data collections from OJJDP and other federal agencies, as described below, have provided some information on juvenile probation. However, these efforts do not fully address the needs of OJJDP in developing a more comprehensive data collection on juvenile probation. Briefly, the sources of information include the following:

• The 1991 Census of Probation and Parole Agencies conducted by the Bureau of Justice Statistics included juvenile probation. Unfortunately, this project suffered from numerous technical problems including difficulties with updating the mailing list and problems in receiving timely and complete responses.

• The Census of Adult Probation Supervising Agencies was first conducted by BJS in 2011. This census is designed to provide accurate and reliable national and state-specific statistics that describe the characteristics and operations of probation agencies that supervise adults. Subsequent to the Census of Probation and Parole Agencies, BJS has opted to collect data exclusively on adult probation agencies and therefore will be unable to provide OJJDP with needed information on juveniles on probation.

• The National Juvenile Court Data Archive (NJCDA) collects aggregated yearly information on the disposition of delinquency cases in juvenile courts throughout the nation. From automated data and published reports submitted by court jurisdictions covering about 70 percent of the juvenile population, this project produces national annual estimates of court activity. These estimates include the number of cases receiving probation as their ultimate and most serious disposition. This project has produced these estimates for OJJDP since 1974, when the Office was created through the JJDP Act. While this project can provide aggregated information on juveniles entering probation, it does not allow for more complex analyses using consistent and standardized data on the characteristics of the youth and their offenses. The NJCDA is an important project, but it cannot replace the data collection activities in this request.

• Through the Juvenile Probation Officer Initiative (JPOI) funded by OJJDP, the Office had established routine and continuous contact with juvenile probation administrators and officers. For many years, this project served as a mechanism for training probation officers, informing these professionals of changes in the field, and keeping the Office apprised of emerging issues. The JPOI project also maintained a list of all probation officers in the country; due to budget constraints, this aspect of the project was discontinued in Fiscal Year 1996.

OJJDP determined that the CJP was necessary after an exhaustive search and analysis of existing Federal and state data sources on juvenile probation. Such a search was conducted as part of OJJDP’s Statistics and Systems Development Project (SSD), which aimed to improve the national and state-level collection of information on juvenile justice. One task of this project was to gather information on all national data systems that could inform policymakers on juvenile delinquency and juvenile justice. No similar information exists, nor can any existing information be modified to serve the purposes described above. The CJP is critical because without these individual-level data, OJJDP will not be able to answer critical policy and practice questions or provide effective leadership on probation matters.

In addition to the activities discussed above, OJJDP has continued to consult and network with juvenile justice researchers and individuals involved directly in juvenile probation matters around the country (e.g., representatives from the American Probation and Parole Association) and has found no duplication of this data collection effort.

5. Methods to Minimize Burden

The CJP instrument collects data that are available from the current record-keeping practices of juvenile probation supervision offices. The arrangement of the items on the form reflects a logical flow of information to facilitate comprehension of requested items and to reduce the need for follow-up. Small businesses are not involved in this data collection. The primary entities for this study are state-, county-, or judicial district-level probation supervision agencies. Burden is minimized for all respondents by providing several options for data submission (mail, fax, or web response) and by requesting only the minimum information required to achieve the study’s objectives.

The study’s contractor will coordinate all data collection and will carefully specify information needs. We anticipate that probation agency personnel will be able to retrieve and transfer data with minimal burden and with support as needed from the study’s contractor.

6. Consequences of Less Frequent Collection

If the proposed data were not collected at all, OJJDP would be unable to report a reliable count of the number and characteristics of youth under juvenile probation supervision in the United States. Although an annual survey of juvenile probation supervision offices collecting information on both the operation of those offices (the CJPSO) and the juveniles on their probation caseloads (the CJP) would be ideal, both are designed to be collected every other year because it would not be practical to expect respondents to complete both on an annual basis. Collecting less frequently than the current biennial (every other year) schedule would undermine one of the main purposes of the census: identifying trends in the data. The size and composition of the juvenile probation population are affected by changes in government budgets, laws, and political sentiment, and these changes would not be captured with sufficient sensitivity if data were collected less frequently than every other year.

Please note that although the CJP is planned to be collected biennially, we anticipate that only one CJP collection will occur within the current OMB approval period. The current contractor, Westat, is funded to complete only one CJP collection, and prior to a 2014 CJP collection, OJJDP will submit a new PRA package with a request for extension or revision.

7. Special Circumstances

This request fully complies with the following regulations. Information collection will NOT be conducted in a manner that:

Requires respondents to report information to the agency more often than quarterly;

Requires respondents to prepare a written response to a collection of information in fewer than 30 days after receipt of it;

Requires respondents to submit more than an original and two copies of any document;

Requires respondents to retain records, other than health, medical, government contract, grant-in-aid, or tax records, for more than three years;

In connection with a statistical survey, is not designed to produce valid and reliable results that can be generalized to the universe of study;

Requires the use of statistical classification that has not been reviewed and approved by OMB; or

Requires respondents to submit proprietary trade secrets, or other confidential information, unless the agency can demonstrate that it has instituted procedures to protect the information’s privacy to the extent permitted by law. The collection does not request proprietary information. However, the pledge of privacy provided with the data collection derives directly from statute (42 U.S.C. 3789g).

8. Consultations Outside Agency

In accordance with 5 CFR 1320.8(d), a 60-day Federal Register notice was published on July 2, 2012, Vol. 77, No. 127, page 39264 (see Attachment C). A 30-day Federal Register notice was published on September 5, 2012, Vol. 77, No. 172, page 54613 (see Attachment D). OJJDP will welcome and respond to all questions and comments on the CJP. All such questions or comments will be considered, and logical or necessary changes will be made to the instrument.

The following persons were consulted on the development of the instrument and the procedures for the survey: Janet Chiancone, OJJDP; Brecht Donoghue, OJJDP; Andrea Sedlak, Westat; Liz Quinn, Westat; Karla McPherson, formerly of Westat; Melissa Sickmund, National Center for Juvenile Justice; Douglas Thomas, National Center for Juvenile Justice; Charles Puzzanchera, National Center for Juvenile Justice; Benjamin Adams, formerly of National Center for Juvenile Justice; Nathan Lowe, American Probation and Parole Association; Deena Corso, Multnomah County Juvenile Services Division; Nadine Frederique, National Institute of Justice; and Stephen Simoncini, U.S. Census Bureau.

9. Paying Respondents

OJJDP will not provide compensation to respondents who participate in this data collection. Participation will be purely voluntary.

10. Assurance of Privacy

All information tending to identify individuals (including entities legally considered individuals) will be held strictly private according to Title 42, United States Code Section 3789(g) (see Attachment E). Regulations implementing this legislation (28 CFR Part 22) require that OJJDP staff and contractors maintain the privacy of the information and specify necessary procedures for guarding this privacy (see Attachment F). A letter from OJJDP will notify persons responsible for providing these data that their response is voluntary and that the data will be kept private. A copy of this letter is included in this package (see Attachment G).

11. Justification for Sensitive Questions

The CJP data collection does not contain sensitive questions.

12. Estimate of Hour Burden

Table A.1 contains the estimated burden hours for each respondent. The estimate is based on the average time it takes to complete the form and the number of respondents that are anticipated to report.

We anticipate that approximately 1,000 respondents will each spend 15 minutes completing the presurvey screener (in Attachment G). Although called a screener, the mailing is not intended to “screen out” respondents, but rather to ensure that the data collection instrument (see Attachment B.1) is directed to the appropriate staff member. The study contractor used the previous CJP roster, rosters from the Bureau of Justice Statistics, association membership lists, directories, websites, and phone contacts to develop a comprehensive universe file. Consequently, we anticipate that the need to redirect the instrument will be very low.

Respondents will report their information using the data collection instrument (see Attachment B.1). Depending on the reporting method respondents select (manual, partially automated, or fully automated), we estimate that the average burden per respondent will range from 2 hours (fully automated) to 8 hours (manual). Based on previous CJP collections, we anticipate that up to 90% of respondents will use the CJP web-based reporting options at least in part. Additionally, because the CJP now offers the option of providing non-uniform submissions, including complete “data dumps,” we anticipate that at least 65% of respondents will take advantage of the fully automated reporting option.

TABLE A.1

ESTIMATED RESPONSE BURDEN

Instrument | Number of Respondents | Number of Responses per Respondent | Average Burden Hours per Response | Total Burden Hours
Pre-survey screener | 1,000 | 1 | 0.25 | 250
CJP manual¹ | 100 | 1 | 8.00 | 800
CJP partially automated² | 250 | 1 | 4.00 | 1,000
CJP fully automated³ | 650 | 1 | 2.00 | 1,300
Total Response Burden (Hrs) | | | | 3,350
Estimated Average Response Burden | | | | 3.35 hours

1 Respondents complete the entire instrument using the paper instrument by hand and then mail, fax, or email a scanned version to Westat.

2 Respondents complete the instrument at least in part electronically, and in part by hand or by individual data element.

3 Respondents upload a complete “data dump” or spreadsheet of their data file.
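The totals in Table A.1 can be reproduced from the row values. In the sketch below, the average is taken over the 1,000 agencies, on the assumption (consistent with the table) that each agency completes the screener plus exactly one CJP reporting mode (100 + 250 + 650 = 1,000).

```python
# (instrument, respondents, responses per respondent, hours per response) from Table A.1
ROWS = [
    ("Pre-survey screener", 1_000, 1, 0.25),
    ("CJP manual", 100, 1, 8.00),
    ("CJP partially automated", 250, 1, 4.00),
    ("CJP fully automated", 650, 1, 2.00),
]
AGENCIES = 1_000


def row_hours(respondents, responses, hours):
    """Total burden hours for one table row."""
    return respondents * responses * hours


total_hours = sum(row_hours(n, r, h) for _, n, r, h in ROWS)
cjp_respondents = sum(n for name, n, _, _ in ROWS if name.startswith("CJP"))
fully_automated_share = 650 / cjp_respondents

print(total_hours)               # 3350.0
print(total_hours / AGENCIES)    # 3.35
print(fully_automated_share)     # 0.65
```

The 650 fully automated respondents correspond to the 65% share anticipated in the text.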

13. Estimate of Respondent Cost

The information requested is normally maintained electronically as administrative records in the juvenile probation agencies. The only costs respondents will incur are costs associated with their time. Other than these costs, there are no additional costs to the respondent.

To compute the total estimated cost for the CJP, the total burden hours were multiplied by the average hourly wage for full-time employees over age 25 with a bachelor’s degree or higher ($28.70), according to the Bureau of Labor Statistics, Current Employment Statistics Survey, 2011. The CJP is estimated to take an average of 3.35 hours, for a total cost of $96 per respondent (3.35 hours × $28.70). The estimated total cost for all respondents is $96,145 (3,350 hours × $28.70).
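The cost arithmetic can be checked directly. The sketch uses Python's `decimal` module to avoid floating-point rounding in currency values and rounds the per-respondent figure to whole dollars. The inputs are the burden hours and hourly wage stated in this section.

```python
from decimal import Decimal, ROUND_HALF_UP

HOURLY_WAGE = Decimal("28.70")   # BLS average hourly wage cited above
TOTAL_HOURS = Decimal("3350")    # Table A.1 total burden hours
RESPONDENTS = 1000

average_hours = TOTAL_HOURS / RESPONDENTS                     # 3.35 hours
total_cost = TOTAL_HOURS * HOURLY_WAGE                        # 96145.00 dollars
per_respondent = (average_hours * HOURLY_WAGE).quantize(
    Decimal("1"), rounding=ROUND_HALF_UP)                     # 96 dollars (from 96.145)

print(average_hours, total_cost, per_respondent)
```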

14. Cost to the Federal Government

The total annualized cost to the federal government across three years for gathering the information is estimated to be $195,333, including direct and indirect costs. This includes the costs of the current contractor (Westat) and subcontractors (National Center for Juvenile Justice and American Probation and Parole Association).

15. Reasons for Program Changes

All changes proposed are made in an attempt to improve response rates and increase the accuracy of the data submitted and future analyses, while reducing respondent burden. The options as to how respondents may submit data were expanded. To reduce burden, the CJP will now allow respondents to submit “data dumps” of their electronic records, rather than requiring them to enter specific data elements individually on paper or online forms. Respondents will be encouraged to submit non-identifiable data using any secure method.

Some changes were also made to the instrument data elements. The instrument no longer asks for total counts of youth on informal probation; it asks respondents to report only the number of youth on formal probation. This change was made because experience with the previous CJP suggests that states and localities define and track informal probation in significantly different ways. Counts of juveniles on informal probation cannot be accurately aggregated and analyzed until we achieve a better understanding of these state-by-state variations. Given the gaps and variability in previously reported data on informal probation, the decision to exclude informal probation from the present collection will not result in a significant loss of trend data. Collecting data on informal probation is a future goal of the CJP, and OJJDP plans to include questions about informal probation in the planned Census of Juvenile Probation Supervision Offices (CJPSO; not yet submitted to the Office of Management and Budget), so that OJJDP can begin to understand state and local policies and practices surrounding informal probation. Consequently, all questions relating to informal probation were removed from the CJP instrument. These changes are anticipated to reduce respondent burden.

Other revisions to the instrument are expected to increase the accuracy of data analysis and enhance statistical estimates. The offense code categories were changed and expanded to distinguish between different types of crimes. The revised offense codes are modeled after codes used by OJJDP’s National Juvenile Court Data Archive and by the Survey of Youth in Residential Placement. It is anticipated that in addition to providing more detailed information for analyses and increased comparability across surveys, the specificity of the revised codes will increase the consistency of information across jurisdictions, since almost all jurisdictions have different methods of categorizing offenses. In an effort to reduce any additional respondent burden that might result from expanding the list of offense codes, the revised instrument also gives respondents the option of using their own offense codes (provided they include a key or crosswalk so the study team will be able to accurately recode the respondents’ offense codes into the categories used on the CJP instrument).
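Recoding respondents' own offense codes through the key or crosswalk they supply can be sketched as a lookup that also reports any codes the crosswalk does not cover, so they can be resolved with the respondent. The local codes and CJP category labels below are hypothetical placeholders, not the actual CJP offense categories.

```python
# Hypothetical crosswalk supplied by a respondent: local code -> CJP category.
CROSSWALK = {
    "BURG1": "Burglary",
    "THEFT-M": "Larceny-theft",
    "ASLT-S": "Simple assault",
}


def recode_offenses(local_codes, crosswalk):
    """Map local offense codes to CJP categories; collect codes with no mapping."""
    recoded, unmapped = [], set()
    for code in local_codes:
        normalized = code.strip().upper()   # tolerate case/whitespace differences
        category = crosswalk.get(normalized)
        if category is None:
            unmapped.add(normalized)
            category = "Unmapped"           # flagged for follow-up, not guessed
        recoded.append(category)
    return recoded, unmapped


categories, needs_followup = recode_offenses([" burg1", "THEFT-M", "XYZ9"], CROSSWALK)
print(categories)       # ['Burglary', 'Larceny-theft', 'Unmapped']
print(needs_followup)   # {'XYZ9'}
```

Flagging unmapped codes rather than assigning a default category keeps recoding errors out of the offense distribution.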

In order to limit nonresponse, the revised instrument allows respondents to submit proxy (substitute) data when the primary data elements of interest are not available. Respondents may submit counts of probation cases ONLY if they cannot provide counts of individual youth on probation; the instrument makes clear that the preferred count is of youth. We decided to accept case-level data because of concerns about missing data from respondents for whom individual-level data are not available. For these respondents, we plan to unduplicate the case-level data by matching on gender, birthdate, place of residence, and other appropriate variables. We anticipate being able to eliminate most or all of the duplication in the case-level data, provided response rates are sufficient and missing data are not a significant issue. In addition, accepting case-level counts where youth-level data are not available will provide more comprehensive information than we can currently collect from juvenile court records, which, although they also provide case-level data, represent total annual counts in which duplication is much more pronounced. Suggested proxies were also added to the items asking for the state and county (1) where the most serious offense was committed and (2) where youth reside, for use when those exact data are not available. By collecting information similar to the original data elements of interest, we will be able to increase the accuracy of our counts. We also added a question asking respondents to explain any irregularities in the data they submit. A better understanding of missing or inaccurate data may help us account for these issues when preparing the data file and conducting analyses.
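The unduplication step can be sketched as exact matching on a key built from gender, birth date, and place of residence. This is a simplified illustration under stated assumptions, not the study's matching procedure: the field names and records are hypothetical, and records missing any key field are conservatively counted as distinct youth. Exact matching can also merge different youth who share all key values and miss duplicates whose fields were entered inconsistently, which is why other appropriate variables may be needed.

```python
from collections import defaultdict

MATCH_KEYS = ("sex", "birth_date", "residence_county")  # assumed field names


def estimate_youth(cases, keys=MATCH_KEYS):
    """Group case records that share every key value; return (youth estimate, groups)."""
    groups = defaultdict(list)
    unmatchable = 0
    for case in cases:
        key = tuple(case.get(k) for k in keys)
        if any(value in (None, "") for value in key):
            unmatchable += 1   # cannot be matched, so counted as a distinct youth
            continue
        groups[key].append(case["case_id"])
    return len(groups) + unmatchable, dict(groups)


cases = [
    {"case_id": "1", "sex": "M", "birth_date": "1996-04-02", "residence_county": "County A"},
    {"case_id": "2", "sex": "M", "birth_date": "1996-04-02", "residence_county": "County A"},
    {"case_id": "3", "sex": "F", "birth_date": "1997-11-15", "residence_county": "County A"},
    {"case_id": "4", "sex": "M", "birth_date": "", "residence_county": "County B"},
]

youth, groups = estimate_youth(cases)
print(youth)   # 3 youth from 4 cases (cases 1 and 2 matched)
```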

Finally, to increase the ease of reporting, the instrument instructions were changed. Rather than instructing respondents on the specific type of unique identifier to use on the roster portion of the survey (to be used by study staff in the event a callback is needed), respondents are now encouraged to select any unique identifier that will be meaningful to them--as long as it does not personally identify an individual (e.g., social security number, full name, or address). This is anticipated to reduce respondent burden as well.

16. Project Schedule

OJJDP considers publication of the CJP findings important not only for Federal agencies, but also for enhancing the work of the probation offices themselves. OJJDP has developed a comprehensive system for analysis and distribution of the information collected.

OJJDP has entered into an Interagency Agreement with the National Archive of Criminal Justice Data (NACJD), part of the Inter-university Consortium for Political and Social Research (ICPSR) at the University of Michigan, to eventually make the CJP and CJPSO data files available to researchers as restricted files. This effort would also promote the publication of research findings from the two collections. In general, OJJDP disseminates summary findings from its large data collections through OJJDP's online Statistical Briefing Book, through OJJDP publications written for the juvenile justice field (fact sheets and bulletins), and through numerous conference presentations. OJJDP maintains an ongoing grant with the National Center for Juvenile Justice (NCJJ) to produce summary statistics. The study contractor, Westat, and Westat's subcontractor, NCJJ, are responsible for the primary dissemination of the 2012 CJP data under the National Juvenile Justice Data Analysis Program.

OJJDP’s Statistical Briefing Book is located online at www.ojjdp.gov/ojstatbb/default.asp.

As its navigation menu shows, the Briefing Book provides statistical overviews of all key indicators and stages of the juvenile justice system. The "Juveniles on Probation" section of the Briefing Book is currently rather limited, but the CJP data will enable OJJDP to add Frequently Asked Questions (FAQs) and other resources to this section, similar to the information currently available under Juveniles in Corrections (www.ojjdp.gov/ojstatbb/corrections/index.html).

With the CJP results, some of the FAQs we anticipate adding under Juveniles on Probation include:

• How many juveniles are on probation on a given day in the U.S.?

• What proportion of juveniles on probation are female?

• How do probation rates vary by race?

• How old are most juveniles on probation?

• Does the race/ethnicity profile of juvenile offenders on probation vary by offense?

• Does the race/ethnicity profile of juveniles on probation vary by offense and gender?

• How do female probation rates vary by race/ethnicity and State?

• How do male probation rates vary by race/ethnicity and State?

• Does the offense profile of juveniles on probation vary by State?

• How does the type of offense resulting in probation vary by race/ethnicity?

Numerous other questions can be added; those listed above are examples only. OJJDP also anticipates producing an overall "Juveniles on Probation" bulletin summarizing the findings, as well as a series of online fact sheets addressing some of the key issues outlined above. In 2013, OJJDP expects to present CJP findings at the American Probation and Parole Association Annual Meeting, as well as at several juvenile justice meetings and conferences (including a few sponsored by OJJDP).

17. Approval to not display OMB approval expiration date

The expiration date for OMB approval will be displayed.

18. Exceptions to the certification

No exceptions to the certification statement are requested or required.

Part B. Statistical Methods

1. Universe and Respondent Selection

The organization of juvenile probation in the United States is complex. In some states probation is administered at the state level; in others, at the county or judicial district level. Some states have a mixed organizational structure, such as Ohio, where half the counties are administered at the state level and the other half at sub-state levels. Some jurisdictions administer probation supervision through the executive branch and others through the courts. Some administer adult and juvenile probation services separately and others as unified systems. In unified systems, officers may carry mixed adult and juvenile caseloads or separate ones. The Bureau of Justice Statistics (BJS) has learned that this organizational complexity can cause state-level estimates to undercount significant portions of the population.

The study contractor used BJS rosters, association membership lists, directories, and websites to develop a comprehensive universe file. Probation data must be collected at the right level to ensure that the counts are complete and free of duplication. We have found that the office level is not the best reporting level for probation counts. As we learned in discussions with our American Probation and Parole Association (APPA) workgroup, the definition of “office” varies widely across jurisdictions, ranging from entire agencies to jurisdictional sub-offices to field offices. A better approach is to identify the highest-level entity for each jurisdictional unit through which the state or territory is administered (e.g., a county, a group of counties, or a judicial circuit), recognizing that many states comprise multiple types of organizational units. That entity, whether it covers an entire state or a single municipality, is the best reporter for its jurisdiction: it is the level at which policies and procedures are set and at which record-keeping is consolidated. Collecting data from every entity that calls itself an “office” would not only be an inefficient use of resources but would also risk duplicated counts.

After developing the universe file, the study contractor called and emailed the persons listed in it to identify the correct respondent for the information collection. This step has produced a comprehensive and accurate frame, with contact information for the highest-level entity appropriate for reporting the requested data. The frame has approximately 750 contacts covering all 50 states, the District of Columbia, and the five territories.

As this is a census, no sampling or respondent selection methods will be used. OJJDP seeks to provide precise national and state-level estimates on juvenile probationers and the agencies that supervise them. Ideally, a census would not require estimation because all units would respond, yielding a complete count, but most censuses have at least some item nonresponse. Data collection and follow-up methods have been designed to minimize nonresponse (see item 3 below for details), and the expected response rate is 95 percent or higher.

2. Procedures for Collecting Information

The CJP collection will occur only once every 2 years, rather than annually, in order to reduce the reporting burden on agencies. As this is a census, the following information is not relevant to the data collection:

• Stratification and sample selection

• Estimation

• Degree of accuracy needed for the purpose described in the justification

Pre-notification

Data collection is scheduled to begin March 1, 2013. Pending OMB clearance, respondents will be sent a pre-notification packet of information about the study. It will include a letter from OJJDP and Westat introducing the study and encouraging participation, and a screener asking respondents to reconfirm that they can provide the requested data and that their contact information on the roster is correct. Westat will send pre-notification messages by email to contacts with an email address on file and by mail to all others. Westat will revise the roster as respondents return screeners confirming or updating their agency contact information.

Data-collection

On March 1, 2013 (pending OMB clearance), respondents on the roster will be sent an invitation to complete the survey. The invitation will include an agency username and password for logging in to the secure web instrument and will be sent by email and/or mail, depending on the contact information in the roster. Respondents are encouraged to use the web instrument, but they may also submit survey data by any alternative method they wish, including paper copies, phone calls, email, and fax. Westat will staff a Help Desk, reachable by email and phone, to answer questions and assist respondents.

Quality Control

Westat will use several quality control methods for the survey data. First, if respondents submit data by an alternative method, the Help Desk will enter the data into the web survey so that all data are in a consistent format. Help Desk staff will also review all data as they are submitted to identify missing data or potential errors; these will be flagged for follow-up, and respondents will be contacted for clarification.
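Part of that review could be supported by simple rule-based checks that flag records for Help Desk follow-up. The Python sketch below is illustrative only: the field names, the required-field list, and the plausible age range (7 to 24) are hypothetical assumptions, not CJP specifications.

```python
from typing import Any

# Hypothetical required fields and plausibility range; the actual CJP items differ.
REQUIRED_FIELDS = ("youth_id", "sex", "age", "most_serious_offense")
MIN_AGE, MAX_AGE = 7, 24


def qc_flags(record: dict[str, Any]) -> list[str]:
    """Return human-readable problems found in one submitted record."""
    flags = []
    for field in REQUIRED_FIELDS:
        if record.get(field) in (None, ""):
            flags.append(f"missing {field}")
    age = record.get("age")
    if isinstance(age, int) and not MIN_AGE <= age <= MAX_AGE:
        flags.append(f"age {age} outside {MIN_AGE}-{MAX_AGE}")
    return flags


submission = [
    {"youth_id": "A1", "sex": "F", "age": 15, "most_serious_offense": "Simple assault"},
    {"youth_id": "A2", "sex": "", "age": 41, "most_serious_offense": "Theft"},
]
for rec in submission:
    problems = qc_flags(rec)
    if problems:
        print(rec["youth_id"], "needs follow-up:", "; ".join(problems))
```

Automated flags like these would only narrow down what staff look at; deciding whether a value is an error still requires contacting the respondent.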

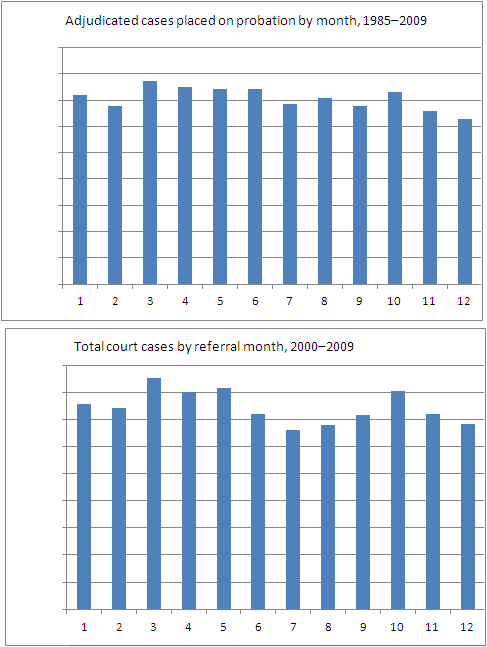

Respondents are also asked to explain how their data differ from the study's exact specifications. For example, respondents who provide proxy data will indicate which data field differs from what was requested and what exactly they have provided instead. We are aware that some respondents cannot provide the data exactly as requested, and we expect some amount of proxy data; such discrepancies are unavoidable given the complexity of gathering information from different case management systems across the nation. When conducting analyses with national data, similar proxy data are preferable to missing data. For example, we decided that if some jurisdictions cannot provide counts for our October 2012 reference date, it is preferable to allow them to submit one-day counts of juveniles for a given day during the data collection period rather than to leave those counts missing. Data not collected for the specified reference date will be footnoted in reports and publications as appropriate.

Although we expect that a small number of respondents may need to deviate from the October 2012 reference date, this is not expected to produce large data discrepancies, because probation data appear to fluctuate only slightly from month to month. For example, according to data provided by the National Center for Juvenile Justice, national court data by referral month for 2000 through 2009 show a combined total of 386,000 adjudicated juvenile cases placed on probation in March of those years, 375,000 in April, 371,000 in May (March through May is the planned data collection period), and 365,000 in October (the reference month). Similarly, a combined total of 1.7 million juvenile cases were referred in March of those years, 1.6 million in April, 1.6 million in May, and 1.6 million in October. The charts accompanying this section summarize this information.
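To make "small fluctuations" concrete, the monthly totals cited above can be expressed as percentage differences from October. This short Python sketch uses only the figures quoted in the text; the helper name `pct_diff_from` is our own. Note that these are aggregated annual case counts, not one-day counts of youth, so they indicate seasonality only approximately.

```python
# Combined 2000-2009 totals by month, as cited above (NCJJ court data).
placed_on_probation = {"March": 386_000, "April": 375_000, "May": 371_000, "October": 365_000}
referred = {"March": 1.7e6, "April": 1.6e6, "May": 1.6e6, "October": 1.6e6}


def pct_diff_from(series: dict[str, float], reference: str = "October") -> dict[str, float]:
    """Percentage difference of each month from the reference month, to 2 decimals."""
    base = series[reference]
    return {m: round(100 * (v - base) / base, 2) for m, v in series.items() if m != reference}


print(pct_diff_from(placed_on_probation))
print(pct_diff_from(referred))
```

For cases placed on probation, March, April, and May run about 5.75, 2.74, and 1.64 percent above October; the referral figures are rounded to the nearest 0.1 million in the source, so their differences are coarser.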

Specific Survey Question Issues

Information on juveniles’ race and ethnicity will not be collected using the official U.S. Census definitions. To reduce respondents' time burden, OJJDP requests this information in the format most frequently used by respondents; currently, more than half of data providers use definitions that combine race and ethnicity (i.e., they include “Hispanic/Latino” as a race option rather than as a separate item). Once state and local reporting systems have adopted the current U.S. Census definitions of race and ethnicity, the CJP collection method will change to that format.

Information on youth offense history is limited to the most serious offense that resulted in the youth being placed on probation. This decision was made both to limit respondent burden and in recognition that this is how offense information is most often reported in the nation’s juvenile and criminal justice systems. Offenses generally follow a hierarchy, and it is typically the juvenile’s most serious offense that results in the final disposition. Requesting data on the most serious offense only limits respondent burden (since respondents are asked to re-code their own offenses into the CJP offense categories) while still giving OJJDP valuable information on the seriousness of the youth’s behavior. It also ensures comparability across the data, both within the CJP and with OJJDP’s complementary Census of Juveniles in Residential Placement, which likewise asks only for the most serious offense.
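Selecting a single most serious offense from a youth's offense list is a lookup against a severity hierarchy. In this Python sketch the category names and their ranking are hypothetical placeholders; the CJP's actual offense categories and ordering are defined by the survey instrument, not by this example.

```python
# Hypothetical severity ranking (lower number = more serious).
SEVERITY_RANK = {
    "Criminal homicide": 1,
    "Robbery": 2,
    "Aggravated assault": 3,
    "Burglary": 4,
    "Simple assault": 5,
    "Theft": 6,
    "Drug possession": 7,
    "Status offense": 8,
}


def most_serious_offense(offenses: list[str]) -> str:
    """Return the highest-ranked offense; categories not in the ranking sort last."""
    if not offenses:
        raise ValueError("youth has no recorded offenses")
    unranked = len(SEVERITY_RANK) + 1
    return min(offenses, key=lambda o: SEVERITY_RANK.get(o, unranked))


print(most_serious_offense(["Theft", "Aggravated assault", "Drug possession"]))
```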

3. Methods to Maximize Response

There are two types of missing data in surveys: (1) item nonresponse, in which certain questionnaire items are not answered, and (2) unit nonresponse, in which no data are collected for a survey unit. For the CJP, the unit is a juvenile on probation on the reference date. The CJP was last conducted in 2009; item nonresponse rates were quite low for most variables, but the estimated unit nonresponse rate was about 38 percent. Unit-level missing data varied across states from zero to 100 percent. The 19 state reporters and Arizona reported 100 percent of juveniles on probation, so we assume their unit-level missing rate was zero. By contrast, seven jurisdictions (six states and the District of Columbia) did not provide any data, so their unit-level missing rate was 100 percent. For the remaining states, unit-level missing rates are difficult to determine because the total number of individual juveniles on probation in each state in 2009 is unknown. The primary criterion for deciding whether unit-level data were missing was therefore whether all counties were covered. Because CJP reporters provided information on the geographic areas they covered (e.g., counties), we assumed unit-level nonresponse in any state with counties not covered by any reporter. Table B1 shows the estimated unit nonresponse rate by state, along with the number and type of reporters (state reporters are coded 1). Because the true totals were unknown, the response rates could not be computed precisely.

TABLE B1

NUMBER AND TYPE OF REPORTERS IN 2009, AND ESTIMATED UNIT NONRESPONSE RATE BY STATE

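| State | No. of Reporters | Types of Reporters¹ | Estimated Unit Nonresponse Rate (%) |
|---|---|---|---|
| AL | 12 | 4 | 66.9 |
| AK | 1 | 1 | 0.0 |
| AZ | 3 | 2, 4 | 0.0 |
| AR | 4 | 4, 5 | 93.1 |
| CA | 27 | 4 | 26.9 |
| CO | 1 | 1 | 0.0 |
| CT | 1 | 1 | 0.0 |
| DE | 1 | 1 | 0.0 |
| DC | 0 | NA | 100.0 |
| FL | 1 | 1 | 0.0 |
| GA | 1 | 2 | 45.3 |
| HI | 1 | 4 | 89.0 |
| ID | 3 | 2, 4 | 16.4 |
| IL | 24 | 4, 5 | 45.4 |
| IN | 16 | 4, 6 | 80.7 |
| IA | 1 | 5 | 98.1 |
| KS | 4 | 4, 5 | 83.9 |
| KY | 1 | 1 | 0.0 |
| LA | 9 | 5, 6 | 7.9 |
| ME | 1 | 1 | 0.0 |
| MD | 1 | 1 | 0.0 |
| MA | 1 | 1 | 0.0 |
| MI | 19 | 4 | 78.5 |
| MN | 1 | 2 | 0.0 |
| MS | 1 | 1 | 0.0 |
| MO | 2 | 1, 4 | 0.5 |
| MT | 5 | 4, 5 | 74.0 |
| NE | 1 | 1 | 0.0 |
| NV | 7 | 4, 5 | 25.5 |
| NH | 0 | NA | 100.0 |
| NJ | 0 | NA | 100.0 |
| NM | 1 | 1 | 0.0 |
| NY | 18 | 3, 4, 5 | 50.4 |
| NC | 1 | 1 | 0.0 |
| ND | 0 | NA | 100.0 |
| OH | 32 | 4, 6 | 48.2 |
| OK | 1 | 1 | 0.0 |
| OR | 19 | 4 | 39.5 |
| PA | 30 | 4, 95 | 54.5 |
| RI | 1 | 1 | 0.0 |
| SC | 1 | 1 | 0.0 |
| SD | 1 | 1 | 0.0 |
| TN | 0 | NA | 100.0 |
| TX | 46 | 4, 5 | 41.1 |
| UT | 1 | 1 | 0.0 |
| VT | 0 | NA | 100.0 |
| VA | 1 | 1 | 0.0 |
| WA | 7 | 4, 5 | 64.6 |
| WV | 3 | 4 | 95.0 |
| WI | 20 | 4 | 74.6 |
| WY | 0 | NA | 100.0 |
| Total | 333 | | 37.8 |

¹ Note: 1 = an entire state; 2 = most of a state; 3 = a single county; 4 = multiple counties; 5 = a single municipality; 6 = multiple municipalities; 95 = other type of area.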
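The county-coverage criterion used to decide whether a state had unit-level nonresponse can be expressed as a set difference. This Python sketch uses a hypothetical four-county state; `uncovered_counties` is an illustrative helper, not the procedure actually used to produce Table B1.

```python
def uncovered_counties(state_counties: set[str], reporter_areas: list[set[str]]) -> set[str]:
    """Counties in a state that no reporter's geographic area covers."""
    covered: set[str] = set()
    for area in reporter_areas:
        covered |= area          # union of all reporters' areas (overlaps are fine)
    return state_counties - covered


# Hypothetical state with two reporters whose areas overlap in one county.
state = {"Adams", "Brown", "Clark", "Dodge"}
reporters = [{"Adams", "Brown"}, {"Brown", "Clark"}]
missing = uncovered_counties(state, reporters)
print("unit nonresponse assumed:", bool(missing), sorted(missing))
```

This rule only signals whether unit nonresponse is present. Turning it into an estimated rate like those in Table B1 would additionally require an estimate of the probation population in the uncovered counties, which the sketch does not attempt.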

The current study contractor has several strategies for improving unit response. The first has been to devote considerable time and effort to enhancing and confirming the roster of respondents so that the proper person receives the survey notification materials. The next, pending OMB clearance, will be to send an advance letter on behalf of OJJDP to agency directors, timed to arrive approximately 3 weeks before data collection begins (see Attachment G). The letter will explain the survey and identify the person our records indicate is the appropriate agency respondent. (There are four versions of the pre-notification letter, with wording tailored to the audience: (1) a state-level agency that has not previously been asked to provide data; (2) a city- or county-level agency that has not previously been asked to provide data; (3) a state-level agency that has provided data previously; and (4) a city- or county-level agency that has provided data previously.)

The letter (see Attachment G) will include a return postcard for updating respondent information. Staff will follow up by telephone with any agency that has not returned the acknowledgement card within 1 week of fielding. At the beginning of data collection (estimated March 2013), we will email survey invitations to all respondents identified as in-scope during frame development. The invitation will contain the URL, username, and password needed to access and complete the web survey, the toll-free Help Desk telephone number, and instructions for uploading data. The field period will be 12 weeks, including nonresponse follow-up.

Our survey tracking system allows us to monitor responses and identify nonrespondents for follow-up. Two weeks after sending the survey invitation, we will send a thank-you email or postcard to all respondents, thanking those who have responded and encouraging those who have not to respond promptly. One week later, we will send a second reminder to those who have still not responded and then shift to all-telephone follow-up. Help Desk staff will call any respondent who has not completed the survey after receiving two reminders to determine the reason for nonresponse and to help facilitate a response. They will encourage the nonrespondent to complete the survey on the web and will have the person’s unique password and ID number available to get them started. They will offer other secure response modes if the respondent prefers, and they can also enter the respondent’s data during the telephone call or schedule a later date to do so. Our experience with a wide variety of web surveys (including those with judges, attorneys, and probation and police officers) is that few respondents request to mail in paper forms or to complete the survey over the telephone. However, staff will accommodate the response mode needs of all respondents. We want all agencies to understand that their response is important, and we will accommodate their needs to facilitate their participation. If our telephone follow-up repeatedly reaches a “gatekeeper” (usually an assistant) rather than the designated respondent, we will email a PDF of the survey to the assistant and ask him or her to print it, leave it for the contact person to complete, and return it to us by fax. Help Desk staff will enter surveys received by fax or as PDFs into the web instrument.
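The contact sequence above can be laid out as offsets from the planned March 1, 2013 start. This Python sketch is a hypothetical illustration of how a tracking system might derive contact dates and telephone follow-up eligibility; it assumes telephone follow-up begins the day after the second reminder, which the statement does not specify.

```python
from datetime import date, timedelta

# Planned start of data collection (pending OMB clearance), per the text.
FIELD_START = date(2013, 3, 1)

# Contact steps as offsets from the start of data collection.
OFFSETS = {
    "Advance letter arrives (approx.)": timedelta(weeks=-3),
    "Survey invitation sent": timedelta(weeks=0),
    "Thank-you/reminder to all respondents": timedelta(weeks=2),
    "Second reminder to nonrespondents": timedelta(weeks=3),
    "Field period ends": timedelta(weeks=12),
}


def build_schedule(start: date) -> dict[str, date]:
    """Map each contact step to a calendar date."""
    return {step: start + offset for step, offset in OFFSETS.items()}


def needs_phone_follow_up(agency: str, today: date, completed: set[str],
                          start: date = FIELD_START) -> bool:
    """Assumed rule: call agencies still not complete after the second reminder date."""
    second_reminder = start + OFFSETS["Second reminder to nonrespondents"]
    return agency not in completed and today > second_reminder


schedule = build_schedule(FIELD_START)
for step, when in schedule.items():
    print(when.isoformat(), step)
```

Under these assumptions the second reminder falls on March 22, 2013, and the 12-week field period ends May 24, 2013, consistent with the March through May collection period described earlier.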

The 2009 CJP allowed respondents to provide data online through a web-based form, in an Excel spreadsheet template, via an uploaded data file (CSV, Excel, or XML) conforming to required formatting instructions and field definitions, or on paper. However, respondents may have found the reporting burden significant because these options required them to manipulate their data to meet the reporting requirements. The current study will therefore also offer respondents the option of providing non-uniform submissions, including complete “data dumps.” Allowing respondents to provide data in the formats of their choice is expected both to increase response rates and to allow more timely completion of the analytic data file. We also provide a toll-free phone number for respondent questions. When respondents submit data dumps, project staff will recode offense and race data as needed to match the study coding system, using a cross-walk that respondents will be asked to provide linking their codes to those used by the CJP. Whether offenses are recoded by hand or by programming, and whether respondents or project staff do the recoding, the same basic decision-making process will be used. This will yield consistent coding decisions and data that can be compared across years, even if respondents choose different submission methods in future survey administrations.
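Applying a respondent-supplied cross-walk to a data dump is essentially a per-field dictionary lookup, with unmapped codes set aside for clarification. In this Python sketch the local codes, CJP category labels, and the `recode` helper are all hypothetical; real cross-walks would come from each respondent.

```python
# Hypothetical cross-walks supplied by one respondent: local code -> CJP category.
OFFENSE_CROSSWALK = {"ASLT2": "Simple assault", "BURG1": "Burglary", "THFT": "Theft"}
RACE_CROSSWALK = {"W": "White", "B": "Black", "H": "Hispanic", "A": "Asian", "AI": "American Indian"}


def recode(records, crosswalks):
    """Return (recoded records, unmapped (field, code) pairs).

    Codes missing from a cross-walk become None in the output and are
    reported so staff can ask the respondent how to classify them.
    """
    recoded, unmapped = [], []
    for rec in records:
        out = dict(rec)                      # copy; leave the original dump untouched
        for field, mapping in crosswalks.items():
            code = rec.get(field)
            out[field] = mapping.get(code)
            if out[field] is None:
                unmapped.append((field, code))
        recoded.append(out)
    return recoded, unmapped


data_dump = [
    {"id": "1", "offense": "BURG1", "race": "H"},
    {"id": "2", "offense": "VOP", "race": "W"},
]
recoded, unmapped = recode(data_dump, {"offense": OFFENSE_CROSSWALK, "race": RACE_CROSSWALK})
print(recoded)
print(unmapped)
```

Using the same lookup tables whether recoding is done by respondents or project staff is one way to keep coding decisions consistent across submission methods.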

As previously noted, the CJP provides multiple avenues for data submission. One option for respondents without electronic data will be to submit their data via a web-based form. At the end of the 2005 and 2007 CJPSO administrations, respondents were asked whether they would use web-based reporting options; 84 percent of 2007 respondents said that they would. Further options include downloading an Excel template, completing it, and then either uploading the spreadsheet via a secure file transfer site or sending it by encrypted email; or completing the paper questionnaire by hand and mailing or faxing it. Respondents will choose their response mode. The surveys, whether paper or electronic, will contain the same questions and follow the same logical sequence.

OJJDP requires accurate and reliable statistics on the number and characteristics of juveniles on probation. The study contractor will produce complete and fully imputed analytic files within 12 months of the survey reference date (October 24, 2012). As described above, we will make every effort to increase response rates as the first step in improving data quality. Despite our efforts, however, we expect at least some item nonresponse. The study contractor's statisticians are internationally known experts in nonresponse adjustment and estimation.

Although weighting is the most common method for addressing unit nonresponse, imputation can be used for unit nonresponse provided that (1) the unit nonresponse rate is low (less than 5 percent) and (2) background information is available for each unit in the frame (e.g., the past year’s data for similar variables, state published data, or data from sources such as the 2010-2011 Probation and Parole Directory (ACA, 2011)). We expect our extensive follow-up to minimize unit nonresponse, which should make imputation straightforward. Although weight adjustment can also be used for item nonresponse, imputation is preferable: for estimating population means and totals, weight adjustment is equivalent to mean imputation, and mean imputation is usually much less efficient than other commonly used methods (e.g., ratio imputation). Ratio and regression imputation are good choices for continuous variables because they use both historical information and currently observed data and can take account of regional differences in trends.
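The equivalence between weight adjustment and mean imputation can be seen in a short derivation. It assumes a census of $n$ units in a single adjustment cell with equal base weights, a set $R$ of $r$ responding units, and a variable $y$; this notation is ours, not the statement's. The last line shows ratio imputation, where $x_j$ is an auxiliary value known for every unit (for example, a prior-period count). Unlike mean imputation, it uses that auxiliary information, which is why it tends to be more efficient when $y$ and $x$ are strongly related.

```latex
\begin{aligned}
\hat{T}_{\text{wt}} &= \frac{n}{r}\sum_{i \in R} y_i = n\,\bar{y}_R,
  \qquad \bar{y}_R = \frac{1}{r}\sum_{i \in R} y_i, \\
\hat{T}_{\text{mean}} &= \sum_{i \in R} y_i + \sum_{j \notin R} \bar{y}_R
  = r\,\bar{y}_R + (n - r)\,\bar{y}_R = n\,\bar{y}_R = \hat{T}_{\text{wt}}, \\
\tilde{y}_j &= x_j \,\frac{\sum_{i \in R} y_i}{\sum_{i \in R} x_i},
  \qquad j \notin R .
\end{aligned}
```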

Imputation methods such as those described above enhance the quality of data and data analysis for both continuous and categorical variables. The study contractor has extensive experience in imputation and powerful proprietary software packages (AutoImpute and WesDeck) that perform imputation for both types of variables. Through imputation, we can create a quality data set without item-missing values. We will deliver complete and documented data files capable of supporting national and state-level estimates.
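A minimal sketch of the ratio imputation idea, with regions as imputation cells, may help illustrate the approach. It is written in Python and is not AutoImpute or WesDeck. It assumes every unit has a known prior-period count (for example, from the 2009 CJP) and a region label; the function name and data are hypothetical.

```python
def ratio_impute(current, prior, region):
    """Fill missing current counts with prior count x regional growth ratio.

    current: unit -> current-period count, or None if missing
    prior:   unit -> prior-period count (assumed known for every unit)
    region:  unit -> region label used as the imputation cell
    """
    # Growth ratio per region, estimated from units that reported this period.
    sums = {}
    for unit, y in current.items():
        if y is not None:
            cur, pri = sums.get(region[unit], (0, 0))
            sums[region[unit]] = (cur + y, pri + prior[unit])
    ratios = {reg: cur / pri for reg, (cur, pri) in sums.items() if pri > 0}

    filled = {}
    for unit, y in current.items():
        if y is None:
            reg = region[unit]
            if reg not in ratios:
                raise ValueError(f"no reporting units in region {reg!r} to estimate a ratio")
            filled[unit] = round(prior[unit] * ratios[reg])
        else:
            filled[unit] = y
    return filled


prior = {"A": 100, "B": 200, "C": 50, "D": 80}
current = {"A": 90, "B": 180, "C": None, "D": 88}
region = {"A": "South", "B": "South", "C": "South", "D": "West"}
print(ratio_impute(current, prior, region))
```

Here the South's reporting units fell from 300 to 270 (a ratio of 0.9), so unit C's prior count of 50 is imputed as 45; unit D's regional trend plays no role in C's value, which is how the cell structure accounts for regional differences.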

4. Testing of Procedures

OJJDP is committed to creating the least burdensome data collection on juvenile probation, with the highest possible quality and utility. The CJP form was previously pretested, pilot-tested, reviewed by focus groups, and administered in 2009, and it required only minor modification for the current data collection. The study contractor has improved the roster list and revised the instructions to increase clarity. In addition, we created separate instructions for paper-form respondents and web respondents so that the instructions for each instrument are not cluttered with information irrelevant to that respondent.

5. Contacts for Statistical Aspects and Data Collection

The study team is a collaboration between Westat (the prime contractor) and Westat’s subcontractor, the National Center for Juvenile Justice (NCJJ). Westat will manage data collection activities for the CJP. NCJJ will conduct the initial data processing and produce complete unweighted files, which it will return to Westat for analysis.

The individual consulted on statistical aspects of the design was Dr. Hyunshik Lee, a senior statistician at Westat.

1 National Research Council. (2012). Reforming Juvenile Justice: A Developmental Approach. Committee on Assessing Juvenile Justice Reform, Richard J. Bonnie, Betty M. Chemers, and Julie A. Schuck, Eds. Committee on Law and Justice, Division of Behavioral and Social Sciences and Education. Washington, DC: The National Academies Press.

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| File Title | Paperwork Reduction Act Submission |

| Author | Janet Chiancone |

| File Modified | 0000-00-00 |

| File Created | 2021-01-30 |
