Part_B_AJC_Accessibility_Study_OMB_Supporting_Statement_clean_9_15_14

Evaluating the Accessibility of American Job Centers for People with Disabilities

OMB: 1290-0010

Supporting Statement for the

Paperwork Reduction Act of 1995

Information Employing Statistical Methods

Evaluating the Accessibility of American Job Centers for People with Disabilities

September 5, 2014

U.S. Department of Labor

200 Constitution Ave., NW

Washington, DC 20210

1. Respondent Universe and Sampling Methods

1.2 Site Visits (Interviews)

2. Information Collection Procedures

2.1 Statistical Methodology for Stratification and Sample Selection

2.4 Degree of Accuracy Needed for the Purpose Described in the Justification

2.5 Unusual Problems Requiring Specialized Sampling Procedures

2.6 Any Use of Periodic Data Collection Cycles to Reduce Burden

3. Methods for Maximizing Response Rates and Dealing with Non-Response

4. Tests of Procedures or Methods

PART B. SUBMISSION FOR COLLECTIONS OF

INFORMATION EMPLOYING STATISTICAL METHODS

1. Respondent Universe and Sampling Methods

The U.S. Department of Labor (DOL) requests clearance for IMPAQ International, LLC and its partners, the Burton Blatt Institute of Syracuse University and Evan Terry Associates, to conduct three principal research activities[1]: 1) a Web-based survey of American Job Center (AJC) directors; 2) in-depth interviews during site visits to AJCs, including interviews with center management and staff; and 3) AJC customer focus groups to be conducted during the site visits. In keeping with the purpose of this research, the customer focus groups will consist of people with disabilities (PWD). This is not an audit of compliance with laws and regulations regarding accessibility of AJCs. Rather, the purpose of the study is to gather data that paint a broad picture of the degree to which AJCs as a whole are accessible to people with disabilities. As such, this research seeks to produce a national estimate of the level of accessibility of AJCs to PWD.

1.1 Web-Based Survey

The Web-based survey will be sent to the universe of 2,542 AJC directors. No sampling is involved. We expect an 80 percent response rate for the survey, based on extensive pre-survey and follow-up activities coordinated with the U.S. Department of Labor, as well as the IMPAQ team’s experience on related efforts. Examples of similar efforts that yielded such a response rate include the Job Corps National Survey Data Collection Project and Project GATE, both of which were conducted for the Employment and Training Administration, U.S. Department of Labor.

1.2 Site Visits (Interviews)

The site visits play a secondary role in this research. While on site, the data collection team will gather data that correspond to the questions developed for the AJC Web-based survey. This will allow for qualitative descriptions of the AJCs to complement the survey data. The site visit team also plays a role in mitigating socially desirable responses (SDR) on the survey. Immediately following each visit, the team will complete the same survey instrument that the center director completed. At the conclusion of all site visits, significant differences between AJC directors’ survey responses and those of the researchers can be adjusted for statistically.

The data collection team will conduct a total of 100 site visits to comprehensive AJCs and up to an additional 30 visits to affiliate centers within close proximity to the selected comprehensive centers. As recommended by the Technical Working Group (TWG), our strategy for identifying sites for in-person data collection will maximize the efficiencies of site visits in terms of cost and informational gains while enhancing the political defensibility and statistical properties of the estimates. This strategy, which will be used to sample 70 sites for the SDR study and 30 sites for a study of survey non-response (SNR) bias[2] (the bias created where all data is missing for an AJC), involves the steps described below.

First, a sample of AJC sites will be pre-selected and visited soon after the site’s Web-based survey is received. The pre-selection of AJC sites is crucial for maximizing efficiencies and achieving the appropriate representation of AJC sites. This step is a non-random and purposeful selection of AJCs, developed based on discussions with the TWG and representatives from the Office of the Assistant Secretary for Administration and Management (OASAM), the Employment and Training Administration (ETA) and the Office of Disability Employment Policy (ODEP) of the U.S. Department of Labor. We will use the following criteria in selecting AJC sites to visit:

Location (research team proximity to AJC)

‘Benchmark’ centers (i.e., high- and low-performing centers)

Centers associated with Disability Employment Initiatives/Disability Program Navigators/Employment Networks

Centers identified by ETA and ODEP of the U.S. Department of Labor

Centers amenable to conducting focus groups

Prioritization of larger AJCs.

Next, a sample of AJC sites will be randomly selected and visited after all Web-based surveys have been received. We propose a stratified random selection of sites rather than weighting AJCs by size, because AJC size (in terms of numbers served or budget) may be difficult to determine and may change over the study period.

A stratified sampling approach using three levels of stratification will be used for selecting sites. These strata include:

Geographic Region[3]

Northeast (Connecticut, Maine, Massachusetts, New Hampshire, New Jersey, New York, Pennsylvania, Rhode Island, and Vermont)

Midwest (Illinois, Indiana, Iowa, Kansas, Michigan, Minnesota, Missouri, Nebraska, North Dakota, Ohio, South Dakota, and Wisconsin)

South (Alabama, Arkansas, Delaware, District of Columbia, Florida, Georgia, Kentucky, Louisiana, Maryland, Mississippi, North Carolina, Oklahoma, South Carolina, Tennessee, Texas, Virginia, and West Virginia)

West (Alaska, Arizona, California, Colorado, Hawaii, Idaho, Montana, Nevada, New Mexico, Oregon, Utah, Washington, and Wyoming)

Urban/Rural[4]

Rural population: defined as all persons living outside a Metropolitan Statistical Area (MSA) and within an area with a population of less than 2,500 persons.

Urban population: All persons living in Metropolitan Statistical Areas (MSAs) and in urbanized areas and urban places of 2,500 or more persons outside of MSAs. Urban, defined in this survey, includes the rural populations within an MSA.

Accessibility Levels: As recommended by the TWG, the study will estimate AJC accessibility at four levels. However, for the selection of sites, we propose to estimate two levels of accessibility: below average or above average. This is because it is not possible to obtain precise estimates of accessibility until the completion of data collection. We will estimate an overall accessibility score and classify each AJC as having either a below average or an above average accessibility level. The score will be calculated by dividing the sum of scores[5] obtained from actual survey responses by the maximum score possible. This score serves only as a rough estimate of AJC accessibility for the selection of sites to visit. Nonetheless, we expect the random selection of AJCs to provide reasonable representation of AJCs across accessibility levels, especially around the average accessibility level. Additionally, our purposeful pre-selection of a number of AJCs (based on input from subject matter experts and DOL staff) will ensure appropriate coverage of AJCs with high and low accessibility levels.
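The score-and-classify step described above can be sketched in a few lines of Python. This is a minimal illustration, not the study's implementation: the AJC identifiers and responses are hypothetical, the item scoring follows the study's footnote on dichotomous and polytomous items, and the cutoff is assumed to be the mean score across responding AJCs (with ties counted as below average).

```python
from statistics import mean

# Maximum score per item type: dichotomous items score 0 (No) or 1 (Yes);
# rating items score 1 (rarely or not at all) through 4 (always).
MAX_SCORE = {"binary": 1, "rating": 4}

def accessibility_score(responses):
    """Sum of item scores divided by the maximum possible score for one AJC."""
    total = sum(score for _, score in responses)
    maximum = sum(MAX_SCORE[item_type] for item_type, _ in responses)
    return total / maximum

def classify(scores):
    """Label each AJC relative to the mean score (assumed cutoff)."""
    cutoff = mean(scores.values())
    return {
        ajc: "above average" if score > cutoff else "below average"
        for ajc, score in scores.items()
    }

# Hypothetical survey responses: (item type, score) pairs per AJC.
survey = {
    "AJC-A": [("binary", 1), ("binary", 1), ("rating", 4), ("rating", 3)],
    "AJC-B": [("binary", 0), ("binary", 1), ("rating", 2), ("rating", 1)],
    "AJC-C": [("binary", 1), ("binary", 0), ("rating", 3), ("rating", 2)],
}
scores = {ajc: accessibility_score(r) for ajc, r in survey.items()}
levels = classify(scores)
```

Because the cutoff depends on which surveys have been received, the classification would need to be recomputed once all responses are in.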

Each of the three levels of stratification will be used for the study of SDR: geographic region, urban/rural classification and accessibility level estimates. However, for the study of non-response bias, just two levels of stratification will be used: geographic region and urban/rural classification.

To illustrate this approach, we provide a sampling framework for the site visits (i.e., three strata) as shown in Exhibit 1. In each region, we will visit a total of approximately 25 AJCs. The total number of site visits within each stratum will be the number of pre-selected AJCs plus the number randomly sampled within each cell. Exhibit 1 uses current data to populate the “Region” cells.

Exhibit 1: Possible Sampling Strategy for Comprehensive AJC In-Person Data Collection

| Total AJCs | Stratum: # AJCs per Region* | Stratum: AJCs per Urban / Rural Area | Stratum: AJCs per Accessibility Level | # AJCs Randomly Selected | # AJCs Purposely Sampled | Total # AJCs Selected per Region |
|---|---|---|---|---|---|---|
| 1723 | Northeast 187 | Urban | Low | 18 | 7 | 25 |
| | | | High | | | |
| | | Rural | Low | | | |
| | | | High | | | |
| | Midwest 402 | Urban | Low | 18 | 7 | 25 |
| | | | High | | | |
| | | Rural | Low | | | |
| | | | High | | | |
| | South 488 | Urban | Low | 18 | 7 | 25 |
| | | | High | | | |
| | | Rural | Low | | | |
| | | | High | | | |
| | West 655 | Urban | Low | 18 | 7 | 25 |
| | | | High | | | |
| | | Rural | Low | | | |
| | | | High | | | |

* Note that AJCs serve different numbers of customers. For example, the West region includes many AJCs that are in remote areas and serve a small population. The Northeast, by contrast, has fewer AJCs, closer together, which serve large customer-bases.
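The random-selection step behind Exhibit 1 can be illustrated with a short Python sketch. It is a sketch under stated assumptions, not the study's procedure: the sampling frame is hypothetical, the function `stratified_sample` and its field names are invented for illustration, and the 18 random draws per region are assumed to be spread as evenly as possible across the region's urban/rural by accessibility cells (the text does not specify the allocation). The purposively pre-selected sites are assumed to have been removed from the frame beforehand.

```python
import random
from collections import defaultdict

def stratified_sample(sites, n_per_region, seed=2014):
    """Randomly select n_per_region sites in each region, drawing in
    round-robin order from the region's (area, level) cells."""
    rng = random.Random(seed)
    cells = defaultdict(list)
    for site in sites:
        cells[(site["region"], site["area"], site["level"])].append(site)

    by_region = defaultdict(list)
    for (region, _area, _level), members in cells.items():
        rng.shuffle(members)  # random order within each cell
        by_region[region].append(members)

    selected = []
    for region_cells in by_region.values():
        picked = 0
        # Take one site per cell in turn until the regional quota is met
        # or every cell in the region is exhausted.
        while picked < n_per_region and any(region_cells):
            for members in region_cells:
                if members and picked < n_per_region:
                    selected.append(members.pop())
                    picked += 1
    return selected

# Hypothetical frame: 60 sites per region, all four cells populated.
regions = ["Northeast", "Midwest", "South", "West"]
frame = [
    {
        "id": f"{region}-{i}",
        "region": region,
        "area": "Urban" if i % 3 else "Rural",
        "level": "High" if i % 2 else "Low",
    }
    for region in regions
    for i in range(60)
]
sample = stratified_sample(frame, n_per_region=18)
```

A proportional allocation across cells would be an equally plausible reading; the round-robin draw is used here only because it guarantees that no populated cell is left unvisited.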

The selection of affiliate centers will be purposive, based on the selection of sites described above. We will use the list of comprehensive AJCs selected to receive a site visit to identify affiliate centers to visit. The primary criterion for selecting affiliate sites is efficiency (i.e., proximity to the selected comprehensive site).

1.3 Focus Groups

The IMPAQ team will conduct focus groups during site visits to 10 comprehensive AJCs. To the extent possible, focus group activity will be planned in each stratum (see Exhibit 2). The selection of focus group sites is based on practical convenience to maximize efficiency, and relies on AJC staff to recruit participants both from their own customer base and from other local agencies. We will make every effort to coordinate with AJCs to select focus group sites that ensure the inclusion of customers with various types of disabilities[6]. We expect approximately 9 individuals to participate in each focus group. The informed consent form for focus group participants can be found in Attachment F.

Exhibit 2: Possible Sample of AJCs for Focus Groups

| Total AJCs | Stratum: # Focus Groups per Region | Stratum: # Focus Groups per Urban / Rural Area | Stratum: # Focus Groups per Accessibility Level | Total Focus Groups (# Persons) |
|---|---|---|---|---|
| 1751 | Northeast 3 (27 persons) | Urban 1 | n/a | 10 (90 persons) |
| | | Rural 2 | Low 1 | |
| | | | High 1 | |
| | Midwest 2 (18 persons) | Urban 1 | n/a | |
| | | Rural 1 | n/a | |
| | South 2 (18 persons) | Urban 1 | n/a | |
| | | Rural 1 | n/a | |
| | West 3 (27 persons) | Urban 2 | Low 1 | |
| | | | High 1 | |
| | | Rural 1 | n/a | |

2. Information Collection Procedures

2.1 Statistical Methodology for Stratification and Sample Selection

The Web-based survey is being administered to the entire universe of AJC directors; i.e., there is no sampling.

Within this frame, strata for the site visit sample were determined and prioritized by the TWG to ensure that the site visits as a whole are representative of major AJC characteristics. The number of strata is limited by the sample size (see Section 2.3). There is no weighting of sites in the sample selection procedures.

2.2 Analytical Approach

The IMPAQ team will use Item Response Theory (IRT)[7] to convert ordinal survey responses, including both dichotomously and polytomously scored items, to continuous numeric scores in order to identify accessible AJCs. We will estimate scores for the three accessibility constructs (i.e., programmatic, communications, and physical accessibility) separately because of the unidimensionality assumption of the IRT model, which expects one dominant construct to be measured by a set of survey questions. In the AJC survey, each question is specifically designed to measure one of the three accessibility constructs. We will conduct additional analyses, including a fit analysis[8], to ensure that all survey questions within each construct measure predominantly the same construct and follow the assumptions of the IRT model.

2.3 Estimation Procedures

To identify the appropriate number of in-person data collection visits, IMPAQ conducted a simulation study to determine the number of visits required to assess the bias associated with AJC survey estimates and the in-person data collectors’ effect as a proxy for the SDR effect.

The number of in-person data collection visits used in this simulation study ranged from 10 to 100, with a total of 32 questions (16 binary questions and 16 rating scale items) based on the specifications of one of the survey domains.[9] Survey responses were generated for over 2,000 respondents, which is approximately 75% of all AJCs (including both comprehensive and affiliate centers), in addition to a varied number (i.e., 10 to 100) of in-person data collection visits. Accessibility level parameters were generated from the standard normal distribution. The SDR effect (or respondent effect) was set to -0.5 for the 2,000 respondents, simulating the expected SDR bias by center directors, and to +0.5 for in-person data collectors.[10]

The measurement model for this simulation study was based on the Facet model (see equation 2), which estimates accessibility levels, survey question difficulties, and SDR effects simultaneously.[11] The bias is defined as the difference between the true values and the estimated parameters. Exhibit 3 shows the average, standard deviation, 2.5th percentile and 97.5th percentile bias estimates from accessibility levels and respondent effects.

The simulation results showed that the average bias of the respondent effect estimates approached its minimal level with a sample size between 60 and 70. The variability of the respondent effect estimates was fairly consistent across sample sizes from 10 to 100. Therefore, we will conduct 100 in-person data collection visits: 70 visits will gather data for the SDR study, and an additional 30 visits will be made to sites that did not respond to the survey, for the purpose of measuring non-response bias.
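The data-generating side of such a simulation can be sketched in Python. This is a simplified illustration, not the study's simulation (which used ConQuest and is specified in Attachment E). It covers binary items only, assumes a Rasch-type form in which the log-odds of a "Yes" equal the accessibility level minus the item difficulty minus the respondent effect, and draws item difficulties from a standard normal distribution. The sign convention and the difficulty distribution are both assumptions. No parameter estimation is attempted.

```python
import math
import random

def simulate(n_centers, n_items, respondent_effect, seed=1):
    """Generate 0/1 responses with
    logit P(yes) = accessibility - item difficulty - respondent effect."""
    rng = random.Random(seed)
    accessibility = [rng.gauss(0.0, 1.0) for _ in range(n_centers)]  # standard normal
    difficulty = [rng.gauss(0.0, 1.0) for _ in range(n_items)]       # assumed distribution
    responses = []
    for theta in accessibility:
        row = []
        for delta in difficulty:
            logit = theta - delta - respondent_effect
            p_yes = 1.0 / (1.0 + math.exp(-logit))
            row.append(1 if rng.random() < p_yes else 0)
        responses.append(row)
    return responses

def mean_score(responses):
    """Share of 'Yes' answers across all centers and items."""
    cells = [x for row in responses for x in row]
    return sum(cells) / len(cells)

# Same seed, so the same 70 centers and 16 items are rated by both groups.
directors = simulate(n_centers=70, n_items=16, respondent_effect=-0.5)
collectors = simulate(n_centers=70, n_items=16, respondent_effect=+0.5)
gap = mean_score(directors) - mean_score(collectors)
```

Under this convention a negative respondent effect makes "Yes" answers more likely, so `gap` is positive: the simulated directors rate the same centers more favorably than the simulated data collectors do.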

Exhibit 3: Estimation Bias of Respondent Effect Parameter Estimates

2.4 Degree of Accuracy Needed for the Purpose Described in the Justification

The degree of accuracy required to gauge SDR and unit non-response bias of the surveys is well accounted for in the estimation procedures. We will conduct a nonresponse bias analysis to provide some indication of whether the potential for nonresponse bias exists, an indication of the individual data items and specific populations for which survey estimates might have a greater potential for bias, and the possible extent of the potential for nonresponse bias in survey estimates. However, because survey data will not be available for nonrespondents, we cannot be certain if bias does or does not exist in the survey estimates.

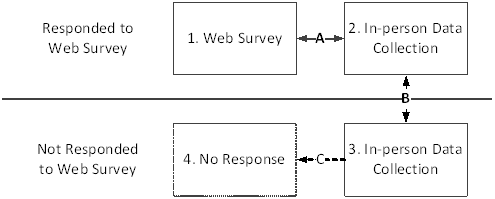

Exhibit 4 illustrates our approach for nonresponse analysis. The goal of the analysis is to estimate the distribution of accessibility levels for Group 4 (centers that did not respond to the Web-based surveys and which did not receive an in-person data collection site visit) from accessibility levels for Group 3 (centers that also did not respond to the Web-based survey, but which did have data collected through in-person data collection site visits).

Exhibit 4: Operationalizing Non-Response Analysis

The proposed analytical model, the Facet model, simultaneously estimates the statistical relationships A and B:

Relationship A accounts for the difference in survey responses between the AJC respondents to the Web-based survey (Group 1) and the responses obtained from the in-person data collectors for the same set of AJC sites (Group 2).

Relationship B represents the potential differences in the distribution of accessibility estimates, obtained by comparing the responses collected through in-person site visits at AJCs that responded to the Web-based survey (Group 2) with those collected at AJCs that did not respond to the Web-based survey (Group 3).

The accessibility estimates of Group 3 will be adjusted for both effects A and B. The path C represents an extrapolation of distribution characteristics from Group 3 to Group 4 to estimate the accessibility characteristics of AJCs that did not respond to web surveys and which did not receive an in-person data collection site visit.

2.5 Unusual Problems Requiring Specialized Sampling Procedures

There are no unusual problems requiring specialized sampling procedures.

2.6 Any Use of Periodic Data Collection Cycles to Reduce Burden

The data collection efforts for this research occur at a single point-in-time.

3. Methods for Maximizing Response Rates and Dealing with Non-Response

The proposed Web-based survey administration has the potential to experience a high survey non-response (SNR) rate. Even so, we expect an 80 percent response rate to the Web-based survey. This rate is based on our experience conducting the Job Corps National Survey Data Collection Project, as well as the Growing America Through Entrepreneurship (GATE) research for the Employment and Training Administration, U.S. Department of Labor. Additionally, extensive efforts have been planned to achieve an 80 percent response rate: we will send multiple advance notices via mail and e-mail and conduct extensive follow-up using the same channels. Despite these efforts to encourage sample members to participate, we anticipate that some sample members will not participate in the study.

To systematically assess whether there are differences between responders and non-responders to the Web-based survey, we will conduct in-person data collection visits to 30 AJCs that did not respond to the survey. The data collected during these visits will support a non-response analysis to assess whether non-response bias exists. We will also gather information on the characteristics of the universe of AJCs, including region and urbanicity, to assess whether our respondent sample represents the universe. If we find differences between our respondent sample and the universe, we will consider developing weights that will enhance the representativeness of our respondent sample.
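One common way to build such weights is to give each stratum a weight equal to the number of AJCs in the universe divided by the number of responding AJCs. The Python sketch below illustrates that approach only. The study does not commit to a weighting method, and the function name `stratum_weights`, the strata, and the counts are all hypothetical.

```python
def stratum_weights(universe_counts, respondent_counts):
    """Weight per stratum = AJCs in the universe / responding AJCs."""
    weights = {}
    for stratum, n_universe in universe_counts.items():
        n_respondents = respondent_counts.get(stratum, 0)
        if n_respondents == 0:
            raise ValueError(f"no respondents in stratum {stratum!r}")
        weights[stratum] = n_universe / n_respondents
    return weights

# Hypothetical counts by urbanicity.
universe = {"Urban": 200, "Rural": 100}
respondents = {"Urban": 170, "Rural": 70}
weights = stratum_weights(universe, respondents)

# Weighted respondent counts reproduce the universe totals.
weighted_total = sum(weights[s] * respondents[s] for s in respondents)
```

Here rural respondents receive the larger weight because rural AJCs are under-represented among the hypothetical respondents.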

To estimate the proportion of accessible centers, the accessibility level scores from in-person data collection at non-responding AJCs will be used to estimate the proportion of accessible AJCs in the non-response group. As in the evaluation of SDR effects, we will use the Facet model to measure and, if necessary, adjust for the potential systematic effects of survey non-response (i.e., all survey data missing for an AJC). Since all data, including both Web-based surveys and in-person data collection, are estimated together, we will be able to examine the magnitude of SDR and non-response effects simultaneously. To estimate the proportion of accessible centers among AJCs not responding to the Web-based survey, we will apply post-stratification methods based on the strata used for sampling. The post-stratification method will allow us to extrapolate the accessibility profiles of the sample of responding AJCs to all non-responding AJCs.
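A post-stratified proportion weights each stratum's sample proportion by that stratum's share of the target population. The Python sketch below shows the arithmetic under stated assumptions: the strata, counts, and 0/1 accessibility flags are hypothetical, the function name `poststratified_proportion` is invented for illustration, and each visited AJC is assumed to have already been classified as accessible or not.

```python
def poststratified_proportion(stratum_sizes, visited):
    """Estimate the share of accessible AJCs in the target group.

    stratum_sizes: stratum -> number of AJCs in the target group
    visited: stratum -> list of 0/1 flags (1 = accessible) for sampled AJCs
    """
    total = sum(stratum_sizes.values())
    estimate = 0.0
    for stratum, size in stratum_sizes.items():
        flags = visited[stratum]
        stratum_share = size / total            # stratum's share of the target group
        sample_proportion = sum(flags) / len(flags)
        estimate += stratum_share * sample_proportion
    return estimate

# Hypothetical strata (region, urban/rural) and visit results.
sizes = {
    ("Northeast", "Urban"): 30,
    ("Northeast", "Rural"): 10,
    ("West", "Urban"): 40,
    ("West", "Rural"): 20,
}
visits = {
    ("Northeast", "Urban"): [1, 1, 0, 1],
    ("Northeast", "Rural"): [0, 1],
    ("West", "Urban"): [1, 0, 0, 1, 1],
    ("West", "Rural"): [0, 0, 1],
}
proportion = poststratified_proportion(sizes, visits)
```

With these inputs the estimate is about 0.58, slightly higher than the unweighted share of accessible sites among the visits (8 of 14), because the strata are not sampled in proportion to their size.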

4. Tests of Procedures or Methods

Research staff and programmers will thoroughly test the Web-based survey. A testing protocol will be developed, along with various testing scenarios, to ensure that the instrument performs correctly for all types of respondents. Test scenarios will be used to evaluate whether question wording and response choices are accurate when translated from paper to Web-based administration, whether instructions are clear, and whether skip patterns function properly. A pre-test with a convenience sample of nine AJC Directors will ensure that any errors are corrected prior to full survey administration. The test survey will be administered over the Internet. The nine respondents will be instructed to log in to a specific Web site and complete the survey. After each respondent has completed the survey, we will conduct a telephone interview with the respondent. The pre-test will identify questions that are poorly understood, terms that are ambiguous in meaning, possibly superfluous questions, and difficult transitions between topics. If the changes to the instrument as a result of the pre-test are minor (e.g., changing the order of the questions), the nine AJC Directors who participated in the pre-test will not take the final version of the survey. If the changes are more significant and additional information is required from the AJC Directors, we will administer the added questions over the phone rather than asking them to complete the survey a second time.

5. Statistical Consultants

To ensure that the best decisions were made regarding the statistical aspects of the design, project staff from the IMPAQ evaluation team, as well as members of the Technical Working Group (TWG), contributed to the sampling design. All consultations were paid for under the study’s contract.

All data collection and analysis will be conducted by the following individuals:

| Name | Organization | Phone Number | E-mail Address |
|---|---|---|---|
| Jacob Benus | IMPAQ | 443.367.0379 | |
| Futoshi Yumoto | | 443.718.4355 | |
| Eileen Poe-Yamagata | | 443.539.1391 | |
| Anne Chamberlain | | 443.718.4343 | |
| Michael Kirsch | | 443.539.2086 | |
| Kay Magill | | 206.528.3113 | |
| Linda Toms Barker | | 206.528.3142 | |
| Kelley Akiya | | 206.528.3124 | |
| Michael Morris | Burton Blatt Institute | 315.443.7346 | |
| Mary Killeen | | 703 619 1703 | |
| Deepti Samant | | 202 296 5393 | |
| Meera Adya | | 315 443 7346 | |
| James Terry | Evan Terry Associates | 205-972-9100 | |
| Kaylan Dunlap | | 205-972-9100 | |
| Charles Swisdak | | 205-972-9100 | |
| Steve Flickinger | | 205-972-9100 | |

[1] AJCs will be notified of the research project and data collection activities in a letter to be sent by DOL. A copy of this letter can be found in Attachment D.

[2] This is survey unit non-response, where the respondent did not take the survey.

[3] Based on Bureau of Labor Statistics – Consumer Expenditure Survey (http://www.bls.gov/cex/csxgloss.htm).

[4] AJCs are classified into “rural” or “urban” categories based on Metropolitan Statistical Areas (MSAs). The Consumer Expenditure Survey defines urban households as all households living inside an MSA plus households living in urban areas even if they are outside of an MSA. It is important to note that under this definition some rural areas could be considered urban if they lie within an MSA. For more information, please see Office of Management and Budget: Standards for Defining Metropolitan and Micropolitan Statistical Areas (http://www.whitehouse.gov/sites/default/files/omb/fedreg/metroareas122700.pdf).

[5] For example, for dichotomously scored questions, 0 will be assigned for No and 1 for Yes. For polytomously scored questions, 1 will be assigned for rarely or not at all, 2 for some of the time, 3 for most of the time and 4 for always.

[6] Data collected through the focus groups is intended to provide contextual, qualitative information about the experiences and opinions of PWD who have interacted with the AJC. The data is not intended to provide generalizable results. For this reason, the focus group selection methodology requires only that the participants chosen are individuals who are able to provide insights into the topic of AJC accessibility for PWD. Nonetheless, AJCs will be instructed to select participants based on a range of additional criteria (e.g., gender, age, ethnicity, employment status, intensity of service received, length of time since last being served).

[7] See Attachment C, Section 3 for details of the IRT model used in this study.

[8] See Attachment C, Section 7 for details of the fit analysis.

[9] See Attachment E for a detailed specification of the simulation study.

[10] The SDR effect spans a range of 1 (from -0.5 to +0.5), which equals the standard deviation of the distribution of latent abilities. The simulation study aimed to recover parameters in the presence of a strong SDR effect.

[11] The computer program ConQuest version 2.0 was used to estimate the parameters. It fits the general form of the IRT model, the Multidimensional Random Coefficients Multinomial Logit Model (MRCMLM). ConQuest syntax allows specifying PCM and Facet models similar to equations 3 to 6 in Attachment C, Section 4.
