Site Visit Summary Report

NCES Cognitive, Pilot, and Field Test Studies System

OMB: 1850-0803

The CRDC Improvement Project

Recommendations for the 2013–14 and 2015–16 collections and beyond

Prepared by

American Institutes for Research

Sanametrix

July 2014

Contents

List of Exhibits, Tables, and Figures

Goals of the CRDC Improvement Project

Goal 1 | Reduce reporting burden

Goal 2 | Achieve better respondent engagement through better communication

Goal 3 | Achieve better data quality through better data collection tools

Goal 4 | Make data more useful and accessible to CRDC stakeholders

About the CRDC Improvement Project Research Tasks

Task 1 | Review of known issues

Task 2 | Expert review of CRDC design

Task 4 | Cognitive interviews

Recommendations for reducing burden

Achieve better respondent engagement through better communication

Recommendations for communication

Recommendations for the Partner Support Center (PSC)

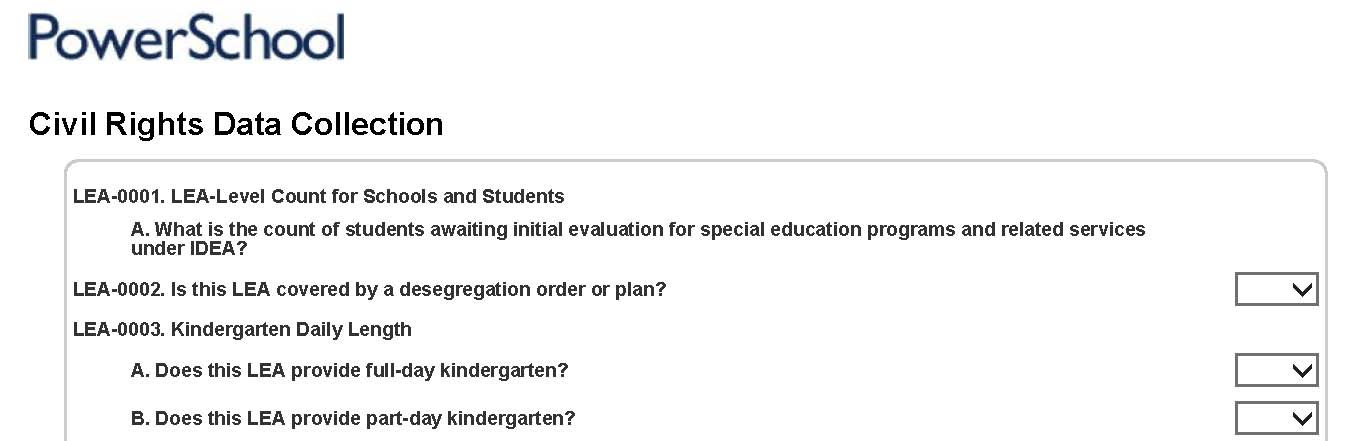

Achieve better data quality through better data collection tools

Recommendations for the online data collection tool

Recommendations for data validation and error reports

Recommendations for flat file submissions

Make data more useful and accessible to CRDC stakeholders

Recommendations for reporting back to stakeholders

Challenges for Implementation

List of Exhibits, Tables, and Figures

Exhibit 1 | Examples of time burden for CRDC reporting

Exhibit 2 | List of data elements reported as difficult and reasons for difficulty, by LEA size

Introduction

The Civil Rights Data Collection (CRDC) is a legislatively authorized mandatory survey that collects data on key education and civil rights issues in our nation’s public schools. These data are used by the U.S. Department of Education’s (ED) Office for Civil Rights (OCR), by the Institute of Education Sciences’ National Center for Education Statistics (NCES) and other ED offices, and by policymakers and researchers outside the Department of Education.

The CRDC is a longstanding and critical component of the overall enforcement and monitoring strategy used by the OCR to ensure that recipients of the Department’s federal financial assistance do not discriminate on the basis of race, color, national origin, sex, or disability. OCR relies on the CRDC data it receives from public school districts as it investigates complaints alleging discrimination, determines whether the federal civil rights laws it enforces have been violated, initiates proactive compliance reviews to focus on particularly acute or nationwide civil rights compliance problems, and provides policy guidance and technical assistance to educational institutions, parents, students, and others. To meet the purpose and intended uses of the data, the CRDC collects information on public school characteristics and about programs, services, and outcomes for students, disaggregated by race/ethnicity, sex, limited English proficiency, and disability. Information is collected on where students receive instruction so that the data OCR collects accurately reflect students’ access to educational opportunities at the site where they spend the majority of their school day.

CRDC data have been collected directly from local education agencies (LEAs) covering each of the 50 states and the District of Columbia since 1968, primarily on a biennial basis (i.e., in every other school year). Recent CRDC collections have also included hospitals and justice facilities that serve public school students from preschool to grade 12. Rather than rely on samples, recent collections cover the universe of all LEAs and all public schools, as well as state-operated facilities for students who are deaf or blind and publicly owned or operated justice facilities that provide educational services to youth. The universe includes all public entities providing educational services to students for at least 50 percent of the school day. Data are currently collected using an online data collection tool.

Need for improvement

Feedback from prior CRDC collections indicates that LEAs have experienced unacceptable levels of reporting burden. The issues documented fall into two categories: content and the data collection tool. Content issues that contribute to high levels of reporting burden include being asked to provide data already reported by the state education agency (SEA) and being asked to provide data that are not maintained by schools and LEAs at the level of detail required by the CRDC. Another content issue is a lack of clarity in the definitions of key terms. Respondents also reported that the 2011–12 CRDC data collection tool had performance issues; in particular, that there were not enough built-in edits and some edit messages were unclear.

Based on this feedback, the purpose of the CRDC Improvement Project will be to develop a new data collection tool and processes that (1) reduce respondent burden, (2) improve data response, (3) improve data quality, and (4) make CRDC data more useful and accessible to CRDC stakeholders.

To begin, NCES commissioned a set of research tasks to gather information about the challenges that LEAs, SEAs, and schools have in responding to the CRDC. These research tasks consist of a review of known issues; expert review of the survey design; site visits to LEAs, SEAs, and schools; cognitive interviews about survey language and wording; and pilot testing of the new data collection tool. This report, at current writing, is based primarily on the review of known issues and the site visits.

Organization of this report

This report presents an overview of the main goals of the CRDC Improvement Project, a summary of the research tasks, a description of the challenges encountered by LEAs that contribute to the excessive response burden, comprehensive recommendations for achieving the project goals based on known issues and site visits, key challenges for implementing improvements, and a suggested timeline for improvement activities.

Goals of the CRDC Improvement Project

The CRDC Improvement Project aims to achieve four main goals: (1) reduce reporting burden; (2) achieve better respondent engagement through better communication; (3) achieve better data quality through better data collection tools; and (4) make data more useful and accessible to CRDC stakeholders. The justification for these goals is explained below. Within each goal there are actions that can be taken for the 2013–14, 2015–16, or future collection cycles. Figure 1 illustrates the recommended distribution of work focus for each goal by data collection year.

Goal 1 | Reduce reporting burden

Recommended focus collection: 2013–14, 2015–16, and beyond

A universal theme among site visit respondents and in public comments on the Office of Management and Budget (OMB) submission was that participation in the CRDC required numerous hours, sometimes from multiple staff members, to report and validate data, making the process exceedingly burdensome. The process of gathering data, preparing data for submission, and submitting data was arduous and could take LEAs weeks to complete; there were over 10,000 calls received or made by the Partner Support Center in the last administration. One site visit respondent described the CRDC as “a huge burden…our largest non-funded mandate.”

The level of burden impacts data quality. Staff have a limited number of hours to divert from their intended tasks, so the more hours they spend inputting and uploading data, the less time remains for validation, which reduces data accuracy and quality. Further, when respondents view a mandatory data collection as a burdensome requirement, they will provide the minimum information necessary to comply with the request, at the last possible minute, and skip important steps in planning and quality control.

Goal 2 | Achieve better respondent engagement through better communication

Recommended focus collection: 2015–16

All of the CRDC points of contact (POCs) at the sites visited noted that the CRDC was a mandatory data collection, they had to fill it out, and that there could be penal consequences if they did not respond. One site visit respondent described the purpose of the CRDC as “punitive” and another reported emails about the CRDC to their SEA as potential email “spam” because they sounded “ominous.” While compliance is a necessary outcome, moving the perception of the CRDC from a negative compliance request to a positive, salient, and useful data tool for CRDC respondents and stakeholders is vital to improving the response propensity of LEAs and SEAs. Engaged respondents are more likely to respond early and plan better. Early response decreases the need for costly and time-consuming follow-up efforts, and planning for the data collection decreases missing data due to incomplete or uncollected data elements and other errors. Communication about the purpose and value of the CRDC is central to changing its perception.

Also critical to developing and sustaining better response propensities among CRDC respondents is a communication plan that adequately sets and maintains expectations about roles, responsibilities, and deadlines. Across the sites visited, very few LEA or school staff other than POCs who were involved in collecting data related to the CRDC (e.g., guidance coordinators and school principals) knew that they were providing data for the CRDC. Even the name “CRDC” was unfamiliar to them. Improving the consistency, reach, type, and timing of CRDC communications will help respondents understand, anticipate, and respond to the data request.

Goal 3 | Achieve better data quality through better data collection tools

Recommended focus collection: 2013–14

The data collection tools for the CRDC are the core components of a successful data collection. These tools guide the respondent through the data reporting process and produce the final data files that OCR relies on to measure and monitor civil rights compliance in American public schools. It is crucial that these tools are easy to use, valid, and reliable for requesting and delivering high-quality, accurate data. However, LEAs have reported problems with the prior CRDC online data collection tools that suggest the tools did not effectively meet these criteria.

Best practices in survey methods and web survey design, along with more flexible and powerful software, can improve the use, validity, and reliability of the CRDC data collection tools, resulting in better data quality.

Goal 4 | Make data more useful and accessible to CRDC stakeholders

Recommended focus collection: 2013–14

Another way to assist SEAs and LEAs in understanding the value and importance of the CRDC is to raise awareness about the creation and release of final CRDC data files and reports and to return data back to SEAs and LEAs for their use in strategy and policy planning. Additionally, returning data to SEAs and LEAs can assist them in the review and quality assurance of their own data collection programs.

Most importantly, it is critical for CRDC respondents to feel that they are stakeholders in the data collection. Its value beyond compliance is determined by how helpful the data and data reports are to the people who can directly effect the changes needed to increase or maintain access to educational opportunities for all students.

Figure 1 below illustrates the recommended distribution of work focus for each goal by data collection year. The primary focus for the 2013–14 collection is to improve the data collection tools (Goal 3) and to make the data more useful to stakeholders (Goal 4). These goals were viewed as the priority due to the difficulties respondents experienced with the 2011–12 data collection system, and because developing a new system is the focus of the contract funding the CRDC Improvement Project for the first 2 years. Goals 1 and 2 are viewed as the priority for the 2015–16 and future data collections because, while important, addressing these goals requires more lead time than is available for the 2013–14 collection.

Figure 1 | Recommended distribution of work focus by goal for the 2013–14 and 2015–16 collections and beyond

About the CRDC Improvement Project Research Tasks

NCES has commissioned a set of research tasks for gathering information about problems LEAs, SEAs, and schools have in responding to the CRDC. These tasks consist of a review of known issues; expert review of the survey design; site visits to LEAs, SEAs, and schools; cognitive interviews about survey language and wording; and pilot testing of the new data collection tool.

Task 1 | Review of known issues

Reviewing known issues was the first step in the process of developing new CRDC data collection tools. The American Institutes for Research (AIR) and Sanametrix CRDC Improvement Project team summarized known issues from materials provided by NCES. Materials for review included CRDC 2011–12 requirements documents, 2011–12 CRDC edit specifications, data quality analysis previously conducted by AIR and other contractors, Question and Answer responses prepared by the 2011–12 Partner Support Center, the 2013–14/2015–16 OMB package, and public comments on the 2013–14 proposed data collection.

The summary of known issues documented problems or concerns in four broad areas: (1) the survey tool, (2) data quality, (3) data elements, and (4) survey methods.

Issues with the survey tool include performance issues such as edit and range checks, data fills, logic and skip patterns, user controls and navigation, and flat file submission.

Issues with data quality include inconsistencies with other NCES school and district data collections, universe coverage, problems with school IDs, outliers, and inability to accurately report data at the school level or other disaggregated levels.

Issues with particular data elements are those related to item-specific definitions, burden, and access to data.

Issues with the survey methods include those related to the procedures used to solicit, encourage, and assist reporting, such as communication, training, and tools to reduce burden.

Task 2 | Expert review of CRDC design

NORC, at the University of Chicago, was asked by NCES to review the CRDC tool and recommend ways to improve the quality of the data collected by the program. NORC examined data from a variety of sources: stakeholders’ feedback about the data collection tool obtained during a 2013 Management Information Systems (MIS) conference session, a CRDC Work Group meeting led by OCR, and a CRDC tool demonstration led by Acentia; the 2009–10 CRDC and the 2011–12 CRDC restricted-use data files with respondents’ comments; and the 2010–11 Common Core of Data (CCD).1

More specifically, the research encompassed four major analytic activities:

Review and analysis of stakeholders’ feedback on the CRDC tool from conferences, meetings and an independent NORC review. These results were used to make overarching recommendations for changes to the tool to improve accuracy and to reduce the edit failure rate and burden.

Review of CRDC respondents’ comments from the item-level comment fields embedded within the tool. The review focused on understanding what issues respondents experienced and on making informed recommendations for improving the CRDC tool and content (e.g., change item wording or layout, provide more guidance/instructions, add definitions, add skip logic, add or revise the within-tool editing procedures).

Analysis of a subset of items collecting student enrollment and basic school characteristic data in Part 1 of the CRDC tool. Specifically, NORC explored whether a prior cycle of CRDC data and/or CCD data could be used in the current CRDC’s edit check procedures.

Item-specific analysis of data from the 2011–12 CRDC for selected Part 2 items to identify possible outlier values that could be flagged for editing. As part of this research, NORC examined the extent to which the difference in the reference period for Part 1 items (i.e., fall snapshot or point-in-time data) and Part 2 items (i.e., cumulative/end-of-year data) contributed to edit check problems and recommended ways of adjusting the edits or the CRDC tool to minimize these problems.

Many of the design issues and recommendations identified in the summary of known issues were also confirmed in the NORC expert review.
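
The edit-check idea explored in the expert review, using a prior cycle of data to flag implausible current values, can be sketched as follows. This is a minimal illustration in Python, not NORC's analysis or the CRDC tool's actual procedure: the function name `flag_enrollment_outliers`, the school IDs, and the thresholds `max_pct_change` and `min_base` are all hypothetical and would need to be set from real data.

```python
from dataclasses import dataclass


@dataclass
class EditFlag:
    school_id: str
    current: int
    prior: int
    pct_change: float


def flag_enrollment_outliers(current, prior, max_pct_change=0.25, min_base=20):
    """Flag schools whose enrollment changed sharply relative to a prior cycle.

    current, prior: dicts mapping a school ID to total enrollment.
    max_pct_change and min_base are illustrative thresholds, not CRDC rules.
    """
    flags = []
    for school_id, cur in sorted(current.items()):
        base = prior.get(school_id)
        if base is None or base < min_base:
            # No usable prior value (new school or very small base): skip the check.
            continue
        pct = (cur - base) / base
        if abs(pct) > max_pct_change:
            flags.append(EditFlag(school_id, cur, base, round(pct, 3)))
    return flags


# Hypothetical enrollment counts for two collection cycles.
prior_cycle = {"A001": 400, "A002": 250, "A003": 10}
current_cycle = {"A001": 410, "A002": 90, "A003": 30, "A004": 500}

flags = flag_enrollment_outliers(current_cycle, prior_cycle)
for f in flags:
    print(f"{f.school_id}: {f.prior} -> {f.current} ({f.pct_change:+.1%})")
```

A flag of this kind would prompt the respondent to confirm or correct a value rather than reject it, since large changes can be legitimate (for example, a school that was reorganized between cycles).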

Task 3 | Site visits

NCES, AIR, and Sanametrix conducted a process improvement and feasibility study that consisted of in-person site visits with LEAs, SEAs, schools, and OCR regional offices. The purpose of the site visits was to gather specific information about reporting procedures; periodicity of data availability; problems with specific data elements; suggestions about how the online data collection tool can assist in improving data quality; and the types of feedback reports LEAs, SEAs, schools, and other users would like to be receiving from the CRDC system. A key goal was to understand the process that LEAs use to complete a submission. A set of 13 research questions guided the information gathered from these site visits. The questions were as follows:

To what extent are data collected by the CRDC currently maintained as part of a state longitudinal data system? Do SEAs currently have the capacity to provide LEAs or ED with data that are collected by the CRDC?

When and how often do SEAs/LEAs collect CRDC-related data?

Who are the points of contact (POCs) and what is their data collection role?

What is the data collection cycle like?

What actions do LEAs need to take to complete their submissions?

What format do SEAs/LEAs store the data in?

What current CRDC tools are useful for POCs? (e.g., webinars, PSC, forms, templates, online tools)

Which data elements are difficult for LEAs or schools to report? Which data elements are easy to report?

What is the process for verifying and certifying LEA data? Are subject matter experts consulted for specific elements of the CRDC?

What are the reasons LEAs/SEAs collect the CRDC data? How are the data used? Are there specific data elements collected just for the purpose of the CRDC reporting?

How do schools report data to LEAs? What is the reporting process and cycle?

What can be improved about the CRDC communication process with POCs and other leadership?

What other general feedback do LEAs/SEAs/schools/OCR offices have?

Separate interview protocols were developed for LEA, SEA, school, and OCR staff based on these research questions. Participants for the site visits were selected from a list of 25 LEA and SEA POCs provided by NCES. Participants were recruited via telephone and email, and staff of each recruited LEA provided suggestions of schools to visit and school staff to interview. The two OCR field offices visited were selected from a list of six offices provided by NCES and were chosen based on convenience of scheduling with an LEA site visit. The majority of the interviews with LEA, SEA, school, and OCR staff were conducted in person, onsite over a 2-day period; however, due to scheduling constraints, some interviews were conducted via telephone.

Site visits were conducted in 14 different states at 15 LEAs, 11 SEAs, 9 schools, and 2 OCR regional offices. Visits were conducted between February and May 2014, with the majority occurring in February and March. Members from the American Institutes for Research (AIR) and Sanametrix team conducted the 2-day site visits in pairs. The sites visited varied by region, size, and level of sophistication of SEA and LEA data systems and programs offered.

Table 1 | Number and role of site visit participants at the OCR field offices, SEAs, and LEAs, by size of LEA

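Columns give the number of participants by size of LEA visited. Rows marked with a hyphen are participant roles within the site type listed above them.

| Role of site visit participants | Small | Mid-size | Large | Very large | Total |
| --- | ---: | ---: | ---: | ---: | ---: |
| OCR field office | 1 | 0 | 0 | 1 | 2 |
| SEA | 4 | 5 | 0 | 2 | 11 |
| - Data manager | 3 | 4 | 0 | 1 | 8 |
| - Federal data coordinator | 0 | 1 | 0 | 0 | 1 |
| - EdFacts coordinator | 2 | 2 | 0 | 1 | 5 |
| - RA & QA supervisor | 0 | 1 | 0 | 0 | 1 |
| - State liaison for CRDC | 2 | 0 | 0 | 0 | 2 |
| - Civil rights compliance coordinator | 0 | 2 | 0 | 0 | 2 |
| - IT staff | 1 | 2 | 0 | 0 | 3 |
| LEA | 5 | 6 | 1 | 3 | 15 |
| - IT staff | 2 | 4 | 0 | 2 | 8 |
| - Data manager | 3 | 3 | 1 | 3 | 10 |
| - Office of Accountability staff | 0 | 0 | 0 | 2 | 2 |
| - Administrative assistant | 1 | 0 | 0 | 0 | 1 |
| - Guidance supervisor | 0 | 1 | 0 | 0 | 1 |
| - General counsel | 0 | 0 | 0 | 1 | 1 |
| - Athletics coordinator | 0 | 1 | 0 | 0 | 1 |
| - Gifted & Talented coordinator | 0 | 1 | 0 | 0 | 1 |
| - Research analyst | 0 | 1 | 0 | 1 | 2 |
| - Charter school overseer | 0 | 0 | 0 | 1 | 1 |
| - Director of Student Life & Services | 0 | 1 | 0 | 0 | 1 |
| - Human Resources staff | 0 | 2 | 0 | 1 | 3 |
| - Finance staff | 0 | 0 | 1 | 0 | 1 |
| School | 3 | 4 | 1 | 1 | 9 |
| - Principal | 0 | 2 | 0 | 0 | 2 |
| - Assistant principal | 0 | 2 | 0 | 1 | 3 |
| - Data manager | 0 | 0 | 1 | 0 | 1 |
| - Data processor | 0 | 1 | 0 | 0 | 1 |
| - Secretary | 3 | 1 | 0 | 0 | 4 |
| - Education specialist | 1 | 0 | 0 | 0 | 1 |
| - Counselor | 0 | 1 | 0 | 0 | 1 |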

NOTE: The OCR field office was in the same region as an LEA visited, and SEAs were in the same states as the LEAs visited.

Task 4 | Cognitive interviews

During May 2014, NCES, AIR, and Sanametrix conducted telephone interviews with CRDC respondents (primarily LEAs) to gather information on which data elements on the CRDC school form are confusing to respondents and what information can be added to instructions, definitions, tables, and questions to make the data request easier for respondents. The cognitive interview protocol focused on specific data elements that NCES, OCR, and the site-visit research found to be problematic for respondents. Information about problematic data elements will be documented in a separate report.

Interviews were conducted with 20 participants. Each telephone interview lasted 90 minutes, and up to three respondents involved in reporting CRDC data elements participated in each interview.

Additionally, modules consisting of topically related groups of CRDC data tables were developed and feedback on them was solicited from the cognitive interview participants. The AIR and Sanametrix team requested feedback on the data table groupings from site visit participants by email and from telephone interview respondents during the interview.

Lastly, a pilot test of the new online data collection tool is scheduled for late summer 2014. The purpose of the pilot will be to test the functionality of the new tool with 40–50 LEAs. The pilot period will last up to 3 weeks, during which time LEAs will respond to the survey. Pilot LEAs will be contacted to provide feedback and suggestions. A feedback mechanism that captures screenshots of users’ computer screens will be implemented. At least two pilot LEAs will be designated to test the flat file upload. AIR and Sanametrix will evaluate the feedback and make recommendations to NCES/OCR for changes that are feasible to implement prior to the opening of the 2013–14 data collection period.

Recommendations for reducing burden

Challenge: Data elements gathered and stored through decentralized systems represent a large share of the reporting burden

Recommendations:

Design the data collection tool to better align with the way LEAs collect, store, and report CRDC data

Evaluate the utility of difficult-to-report data elements relative to their reporting burden

Establish a task force or task forces to share promising practices for collecting data not typically housed in centralized systems

Engage the vendor community in developing tools to better support LEAs in responding to the CRDC

Challenge: Expanding and changing data elements inhibit LEAs from improving practices for gathering and reporting CRDC data

Recommendations:

Create a consistent, core set of CRDC items

Develop flexible special modules to explore policy directives

Evaluate utility of items not regularly used by OCR offices

Challenge: LEAs are duplicating the reporting of data elements to their SEAs and OCRs

Recommendations:

Engage SEAs in the reporting process to reduce district burden

Undertake a strategic state-by-state campaign to engage SEAs

Challenge: LEAs perceive that ED is not using all its resources to lower burden

Recommendations:

Evaluate the best match between all ED surveys and non-core data elements

Challenge: Timing of data collection does not align well with LEA schedules

Recommendation: Consider allowing LEAs to opt for two different submission models

“Small parts of the CRDC take the most time. About 50% of response time is spent on 5% of the data, for example, AP results.” – Small LEA

Reports from the site visits suggest that the amount of time and effort it takes to respond to the CRDC represents a significant number of staff hours allocated to gathering, reporting, and validating the CRDC data, making the process very burdensome. Exhibit 1 shows examples of time burden as reported by small, mid-size, and large districts that were able to provide this information. Burden as reported by site visit respondents varied across LEAs and LEA sizes and ranged from about 1 week to 6 months. Keep in mind that these examples are just for reporting activities during the reporting period. Many LEAs spend additional time planning, such as one small LEA whose CRDC contact trains all school registrars on the CRDC and leads a data group that meets every month for 2 hours.

[Exhibit 1 | Examples of time burden for CRDC reporting]

Many of the recommendations for improved communications tools and data collection tools will also help reduce reporting burden, but there are additional strategic improvements that can be made to reduce the amount of time and effort schools and districts spend on the CRDC.

Challenge: Data elements gathered and stored through decentralized systems represent a large share of the reporting burden

Every LEA site maintained and used a student information system (SIS) to report at least some portion of the student count and program data for the CRDC. The primary purpose of these systems was to meet reporting requirements of the SEA- or LEA-driven policies or practices. A secondary benefit was that data stored in the SIS were the easiest for LEAs to report accurately for the CRDC. CRDC data stored in the SIS were all coded in a consistent manner, aligned with student demographic data to support most of the disaggregation required by the CRDC, and tied to a uniform set of school codes. For information stored in the SIS, LEAs typically run queries to aggregate and report the information in the format that is needed for the CRDC. The SIS output is either submitted as a flat file or given to a clerk or administrator to enter into the online data tool. A screenshot of an actual SIS query for Algebra I passing is shown below.
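
As a rough illustration of the kind of aggregation query described above (not any site's actual query), the following Python sketch uses an in-memory SQLite database to count students passing Algebra I, disaggregated by school, race/ethnicity, and sex. The table names (`students`, `course_results`), column names, and sample rows are hypothetical; a real SIS will have its own schema and coding.

```python
import sqlite3

# Build a tiny, hypothetical SIS extract in memory.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE students (
    student_id INTEGER PRIMARY KEY, school_code TEXT, race TEXT, sex TEXT
);
CREATE TABLE course_results (student_id INTEGER, course TEXT, passed INTEGER);
INSERT INTO students VALUES
    (1, '101', 'Hispanic', 'F'), (2, '101', 'Hispanic', 'M'),
    (3, '101', 'White', 'F'),    (4, '102', 'Black', 'M'),
    (5, '101', 'Hispanic', 'F');
INSERT INTO course_results VALUES
    (1, 'Algebra I', 1), (2, 'Algebra I', 0), (3, 'Algebra I', 1),
    (4, 'Algebra I', 1), (5, 'Algebra I', 1);
""")

# Because course records link to student demographics and a uniform school
# code, one query yields the school-level, disaggregated counts.
query = """
SELECT s.school_code, s.race, s.sex, COUNT(*) AS n_passed
FROM course_results AS r
JOIN students AS s ON s.student_id = r.student_id
WHERE r.course = 'Algebra I' AND r.passed = 1
GROUP BY s.school_code, s.race, s.sex
ORDER BY s.school_code, s.race, s.sex
"""
rows = conn.execute(query).fetchall()
for row in rows:
    print(row)
```

The query is short only because the records are consistently coded and tied to student demographics and school codes, which is why data held in the SIS are the easiest to report.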

The majority of data collected by the CRDC focuses on schools and their students. However, the CRDC also collects data on school staff and school expenditures, which are typically not stored in the SIS. All LEAs regardless of size had centralized staff and business information systems, and most of the information required for the CRDC was housed in these systems. Some examples of these systems were Alio, Quintessential, and PeopleSoft. The main causes of reporting difficulty were the use of contractor staff and having to report information at the school level that is only provided at the LEA level. To complete the CRDC submission, LEAs request data from the school HR or business departments, sometimes providing spreadsheets or templates for departments to complete. Once data have been returned to the LEA POC, the POC either provides these data to a school or submits the data on a school’s behalf. While this collection process did not vary greatly between LEAs, districts did vary in their procedures for certifying this information. While in some cases the district’s HR department was responsible for certifying the data, in other districts this responsibility fell to school principals or superintendents.

A number of the SISs used by LEAs had vendor-supported tools for CRDC reporting. For example, one LEA mentioned that their vendor allows for customization of screens and fields, which allows the district to request specific data from schools. The vendor also works directly with the SEA and LEAs to provide the fields necessary for reporting, as shown in the screenshot below. The same vendor now has a CRDC component specifically designed to assist schools with CRDC reporting. The program will produce a completed CRDC survey in a PDF file that can then be manually entered into the CRDC website.

However, reporting challenges still existed for some data elements included in the SIS. The most frequent challenges were unclear data definitions and CRDC definitions that differed from the definitions used for SEA requirements. In these instances, respondents needed to create special queries and programs, recode data to fit the CRDC definitions, or go back to comments on school data-entry forms to ensure alignment with the CRDC definitions. This process added several hours of reviewing, recoding, and checking of the SIS data element. An example of manual recoding of harassment incidents from one site is shown in the screenshot below. Specific definitional problems are detailed in a separate cognitive interview report.

Data not stored in the SIS or business and staff information systems, or data that are in these systems but not defined correctly for the CRDC, are the most difficult to report and sometimes even prevent LEAs from reporting at all.2 These difficult data elements make up the majority of the excessive burden in both the data collection and validation process. One LEA estimated that about 50 percent of their CRDC reporting time is spent on about 5 percent of the data. The types of data that are difficult to report are presented in Exhibit 2, along with site visit respondents’ descriptions of difficulties for districts of different sizes.

[Exhibit 2 | List of data elements reported as difficult and reasons for difficulty, by LEA size]

“Sometimes we’ll have to contact school administrators regarding text in a behavior incident description to fully understand what transpired to know how to code the incident for CRDC.” – Mid-size LEA on difficulty reporting CRDC discipline data

As these ad hoc responses to data requests accumulate, a decentralization of data responsibility, described by one mid-size LEA as “data silos,” results. Data silos were more common in mid-size and small LEAs, but were also present in large and very large LEAs. If decentralized LEA data can be viewed as a data silo, centralized LEA data, like an SIS or finance system, can be viewed as a data “barn.” Data silos typically contain data that are not required to be reported to the SEA and/or contain data elements that are managed at the school level, rather than at the LEA level (school athletics, for example). Exhibit 3 is an illustration of the data barn/silo structure.

[Exhibit 3]

The challenges to the CRDC data collection resulting from data being collected in silos are (i) there is no consistent system for coding data for aggregation, (ii) there is no link to student records for breakdowns by race or other CRDC categories, and (iii) in some cases, the raw information (e.g., narrative text data) is never coded at all. Data are sometimes collected using content-specific commercial software, sometimes using an in-house software system, sometimes using Excel spreadsheets, and sometimes using handwritten forms, and these methods can also vary by school, even in very large districts. With harassment or bullying data, it is often the case that the school personnel reporting an incident fill out a discipline incident form in narrative format, and this might be the extent of the documentation. A redacted screenshot of an actual example narrative is shown below. When the CRDC requests aggregated data about bullying and harassment in this scenario, the harassment, intimidation, and bullying (HIB) coordinator requests information from the school. The school staff or the HIB coordinator must read all of the narratives, incident by incident, school by school; code each incident; and then aggregate the incidents to meet the CRDC request.

If the CRDC definitions don’t align with state data collections, we don’t feel confident in the data submission – SEA respondent

For LEAs where data are stored electronically in decentralized databases, it is a bit easier to merge these data with the data in their SIS, but it is also time consuming. For example, one LEA explained that to complete the CRDC they need to combine data from three other databases, but each database has a different way of identifying their schools (e.g., it’s a three-digit numerical code in one database, the name of the school in another, and a state code in another). Some LEAs reported that they developed SQL code to merge the different databases together to reduce their burden, but others explained they did not have the resources (financial or staff capacity) to do this, so they combined data using Excel spreadsheets and pivot tables. Successful CRDC responders are those who have fewer data silos or who integrate CRDC information that comes from data silos into the SIS or centralized staffing/finance systems.
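The merging problem described above can be sketched as a crosswalk between school identifiers. This is a minimal illustration under stated assumptions, not any LEA's actual code: the school names, codes, field names, and the three example databases are hypothetical. The crosswalk lists, for each school, the identifier used by each source, so that records keyed three different ways can be combined into one record per school.

```python
# Hypothetical crosswalk: one row per school, listing the identifier
# that each source database uses for that school.
crosswalk = [
    {"local_code": "101", "name": "Lincoln Elementary", "state_code": "ST-0001"},
    {"local_code": "202", "name": "Roosevelt High", "state_code": "ST-0002"},
]

# Hypothetical source databases, each keyed differently.
discipline_db = {"101": {"suspensions": 4}, "202": {"suspensions": 11}}  # three-digit code
athletics_db = {
    "Lincoln Elementary": {"athletes": 0},
    "Roosevelt High": {"athletes": 180},
}  # school name
staffing_db = {"ST-0001": {"teacher_fte": 22.5}, "ST-0002": {"teacher_fte": 61.0}}  # state code


def merge_sources(crosswalk, discipline_db, athletics_db, staffing_db):
    """Combine the three sources into one record per school, keyed by state code."""
    merged = {}
    for school in crosswalk:
        record = {"name": school["name"]}
        # Look up each source by the identifier that source uses;
        # a school missing from a source simply contributes no fields.
        record.update(discipline_db.get(school["local_code"], {}))
        record.update(athletics_db.get(school["name"], {}))
        record.update(staffing_db.get(school["state_code"], {}))
        merged[school["state_code"]] = record
    return merged


merged = merge_sources(crosswalk, discipline_db, athletics_db, staffing_db)
for state_code, record in merged.items():
    print(state_code, record)
```

The same crosswalk could be kept as a table in a database or as a spreadsheet tab; the point of the sketch is only that the mapping is built once and reused across submissions.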

Recommendation: Design the data collection tool to better align with the way LEAs collect, store, and report CRDC data

Align the design of the CRDC tool to the way CRDC data elements are gathered, stored, and used within an LEA and support the standardization of the collection of data elements gathered through ad hoc processes. Allow for multiple respondents in the online data collection tool and group data elements into topical modules to make it easier for LEAs to assign data elements to the appropriate staff or centralized databases. This recommendation is discussed under Goal 3, Recommendation: Allow for multiple users and permissions.

This submission is a huge burden. It is our largest non-funded mandate – Very large LEA

Recommendation: Evaluate the utility of difficult-to-report data elements relative to their reporting burden

We recommend that, where possible, NCES/OCR evaluate the utility of difficult data elements and eliminate them from the collection where the burden outweighs the utility. Difficult data elements include those that are housed outside of the SIS, data elements with definitions that do not align with those of the LEA, data elements that are not required for reporting by the SEA, and data elements that require extensive disaggregation.

Recommendation: Establish a task force or task forces to share promising practices for collecting data not typically housed in centralized systems

For those elements deemed essential, we recommend that NCES/OCR establish a task force or task forces to develop a plan for assisting LEAs in collecting, managing, and reporting data that are difficult to obtain in order to share promising practices and suggest ways to standardize the collection of data. This concept was suggested by one LEA that was reporting school expenditure data. The suggestion was to organize a workgroup made up of school finance staff to help understand the complexities of these data and clarify finance-related data elements (e.g., develop better definitions) to reduce the burden on LEAs. This concept could be expanded from understanding the required data to understanding ways to better collect them, as well as ways of achieving agreement on solutions or helpful tools that NCES/OCR could consider developing.

Recommendation: Engage the vendor community in developing tools to better support LEAs in responding to the CRDC

In some cases, the difficult data are obtained from outside of the LEA. For Advanced Placement (AP) exam data, some sites suggested NCES/OCR should specifically work with the College Board to develop templates or file specifications for delivery of AP and other data from the College Board to LEAs/SEAs. Additionally, other sites suggested that NCES/OCR actively engage with the vendor community to develop better tools to gather and report CRDC data. A software tool for tracking bullying/harassment incidents, called HIBSTER, was used by one LEA, but this tool did not offer all of the reporting formats needed for the CRDC. NCES/OCR could consider making a list of potential data providers for these difficult data elements and communicate CRDC needs to those vendors.

Challenge: Expanding and changing data elements inhibit LEAs from improving practices for gathering and reporting CRDC data

In almost all of the site visits, we heard that changes and additions to the CRDC greatly increase the amount of time sites spend on the data collection. One LEA explained that creating its SQL queries was very time consuming but was done to reduce the burden of providing data for future CRDC submissions. If the data requirements change from submission to submission, however, updating or re-creating the SQL code takes a long time and increases the LEA’s burden. Additionally, if new items added to the CRDC collection are not in the SIS, there is increased burden for the LEA to report these data elements.

Recommendation: Create a consistent, core set of CRDC items

We recommend that NCES/OCR identify consistent, core items from the 2013–14 and 2015–16 collections. This approach will maintain consistency in the collection for the next two cycles, which will ensure that LEAs and SEAs do not need to modify existing programs and plans for meeting the current core content. By committing to a set of consistent, core items and core disaggregation (ideally aligned with EDFacts race/ethnicity disaggregations) that form the main body of the collection over time, a level of stability to the data collection will be apparent to LEAs and allow them to develop long-term plans and increase the utility of adding core items to centralized data systems.

We further recommend that NCES/OCR agree to strict rules and approvals for modifying core content, followed by a lengthy minimum development time and cognitive research or pilot testing to support new item development.

Recommendation: Develop flexible special modules to explore policy directives

The ability of the CRDC to respond to emerging policy directives affecting civil rights is also important. To address the competing concerns of responsiveness and stability, we recommend that NCES/OCR develop a timeline and plan to create flexible special modules for new data demands.

Special modules can be handled separately from the core items and do not need to be repeated in every collection. They should also follow a guiding set of rules and approvals to ensure that only required and relevant content becomes a module; and they should undergo pre-implementation testing.

Recommendation: Evaluate utility of items not regularly used by OCR offices

Another means for reducing items is to eliminate data elements that are not used regularly by OCR offices. We visited two OCR offices serving several states. The OCR respondents identified the most useful and least useful data elements. Both offices noted that their priority data reporting is dependent on the legal compliance review issues that are occurring and other compliance directives from the OCR main office.

The most useful CRDC data elements from the last collection, as identified between the two OCRs were

The least useful CRDC data elements were

We anticipate that additional recommendations about specific data elements to consider for deletion will be generated from the cognitive interview research.

Challenge: LEAs are duplicating the reporting of data elements to their SEAs and OCRs

A common point from LEAs was that CRDC data had been already reported to SEAs for other purposes.

SEAs expressed an interest in supporting LEAs in their reporting of data to the CRDC, but said that additional communication would be needed between the OCR, SEA, and LEAs to be successful. Because this data collection is not coordinated at the SEA level, SEAs need to be included in communications between LEAs and CRDC so that they are aware of deadlines, what has changed, and who is responsible at the LEA. The current lack of knowledge hinders SEAs from providing good data support, prevents SEAs from knowing whom to contact, and inhibits SEAs from answering relevant LEA questions.

Several SEAs expressed a desire to be more active in providing their LEAs with data. One SEA specifically noted that there was a significant overlap between CRDC data and data reported to the state that could potentially be returned to the LEA for CRDC submission. However, the LEA has not had the staff or financial resources to put a system in place. Another SEA mentioned that receiving the specifications for the data collection well in advance would better allow the SEA to plan the process of feeding the data back to the LEAs.

Some SEAs already report on behalf of their LEAs (e.g., Florida) or offer complete or partial data files to their LEAs (e.g., Iowa, Wisconsin, Kansas) for submission to CRDC. Some of the SEAs we spoke to indicated that anywhere from 25 percent to 60 percent of CRDC data for their states could be prepopulated using existing databases. The actual percentages cited were “25%,” “40%,” “40–50%,” “60%,” “60%,” “60,” and “a large percent.” Other SEAs have indicated that they could provide data to their LEAs for some, but not all, CRDC data elements.


States that have engaged in prepopulating the CRDC from existing data do so because they want to reduce burden on the LEAs and increase the quality of the data collection. For example, during one SEA interview, we found out that the majority of LEAs in the state serve fewer than 1,000 students and one individual does all of the administrative work. This means that the CRDC data collection falls on this individual, so the state has stepped in to help. In states that provide data, the districts are able to review and revise the data they are provided before final submission to CRDC. In the states in which the prepopulation of data takes place, we found that one or several individuals are deeply involved in the CRDC task force group and receive support from the SEA in the form of staff time and other resources for the CRDC task. SEA assistance with the CRDC submission also helps to ensure alignment between the data that the LEA reports to the OCR and the data that the LEA reports to the SEA, which provides a foundation for a consistent set of publicly reported data across federal, state, and local governments.

Recommendation: Undertake a strategic state-by-state campaign to engage SEAs

We recommend that NCES/OCR undertake a strategic state-by-state campaign to engage SEAs in data reporting for the CRDC. In all of the states we spoke to who are assisting LEAs, there was considerable planning involved, and we would expect this to be similar for any new SEA assistance program. In each case there was more than one person involved at the SEA. While some individuals fulfilled multiple roles, SEA teams were typically composed of a “policy champion” who advocated to reduce burden on LEAs across the state, context experts responsible for mapping SEA data elements to CRDC definitions, and technical experts who created files for use by LEAs. NCES/OCR should be prepared to offer as much assistance as possible. Suggested steps for engaging SEAs are provided in the box below.

It is unlikely that all SEAs will be willing to provide the same amount of support and involvement. However, any SEA involvement can help reduce burden, so SEAs should be encouraged to provide whatever level of support they are willing to give. In anticipation of this variation in the degree of help that SEAs will provide, we recommend that NCES/OCR develop suggested “levels” of SEA involvement. For example, “Level 1” SEA involvement may simply be assisting with LEA contact and communication for the CRDC, whereas “Level 3” may be assisting with communication, data preparation, and providing a “help desk” during the CRDC reporting period.

Plan for SEA engagement

(1) Develop clearly defined roles and expectations for SEA involvement that allow for variation in the amount of support.

(2) Develop “best practices,” instructions, tools, examples, or other materials that SEAs can use to facilitate development of their assistance program.

(3) Begin outreach to all SEAs to identify persons with authority to implement the desired outcome.

(4) Identify staff who will be responsible for implementing the assistance program.

(5) Communicate directly with responsible staff to convey expectations, timelines, and other key information.

(6) Provide training on the concurrent CRDC.

(7) Pilot test (if possible).

(8) Implement program in subsequent collection.

Challenge: LEAs perceive that ED is not using all its resources to lower burden

Similar to the use of data submitted to SEAs for other reporting purposes, both SEAs and LEAs argued that the CRDC could be partially prepopulated with data that SEAs submit to NCES for other reporting purposes, mainly the EDFacts data collection. SEAs and LEAs do, however, realize that the data submitted to EDFacts do not always align with the data elements in CRDC in definition, disaggregation, or both. They wish for this to change.

Recommendation: Evaluate the best match between all ED surveys and non-core data elements

Clarifying the rationale, purpose, and importance of the data elements may address the concerns about item redundancy in federal and state reporting. These rationales for inclusion on the CRDC could be linked from the online tool, similar to definitions. Enrollment data was often mentioned as redundant across sources.

Recommendation: Prepopulate available and matching data from EDFacts and better align CRDC to EDFacts

Fall is very bad. We are way too busy on state requirements to do anything for the federal government – Mid-size LEA on timing of the CRDC

A few sites listed specific CRDC data elements that they feel should align better with EDFacts; these are listed below. Overall, however, respondents wanted ALL information that is similar across CRDC and EDFacts to be aligned, namely,

Challenge: Timing of data collection does not align well with LEA schedules

Many sites provided feedback on the timing of the CRDC survey and its effect on reporting burden and data availability, but there was no consensus on the best timing for the CRDC data collection period. LEAs’ primary data reporting activities center around SEA requirements; reporting requirements vary by state in terms of how many times during the year data need to be submitted and when. However, there was wide agreement that staff did not have time to work on the CRDC collection at the beginning of the school year, when LEAs are usually busy preparing SEA data submissions.

The summary of known issues also notes that some LEAs do not have a good understanding of when the data collection takes place and are concerned about the single deadline for submission, because not all CRDC data, such as school expenditures, may be finalized in time.

September–October (beginning of school year): There was general consensus that the beginning of the school year is a bad time to initiate the CRDC data collection. LEAs and SEAs are extremely busy at the beginning of the school year.

November–December: One small and one large LEA expressed that November and December were preferred months for CRDC data reporting. Both indicated that fall and spring/summer are not good times for data reporting, because these times conflict with other reporting requirements for their respective states. Another small district expressed that December is a problem because they are busy responding to data requests for EDFacts at this time.

January–February (winter) or spring: One mid-size district expressed a preference for a winter (explicitly citing the months of January and February) or a spring data collection. The preference for a spring data collection conflicts with the preferences of the two districts above who requested data collection in November/December.

Summer: Another mid-size LEA expressed a preference for conducting the CRDC collection in August.

Multiple due dates (EDFacts model): One very large LEA suggested that NCES/OCR adopt multiple due dates for the CRDC collection. The survey could be divided into conceptual groups, and each grouping of data could have its own due date. This model is similar to what is done for the EDFacts data collection.

Recommendation: Consider allowing LEAs to opt for two different submission models

Using multiple due dates for various sections of the survey could diminish burden to those SEAs and LEAs that currently have difficulty reporting data during the current CRDC collection period; conversely, it might add burden to SEAs and LEAs for whom the current collection cycle/calendar aligns well with their needs. We recommend that NCES/OCR consider allowing LEAs to opt for two different submission models—a single-date model and a multiple-date model.

Main challenges for implementing Goal 1

Maintaining consistency and making changes to the content and design of the CRDC are conflicting recommendations for reducing reporting burden. To tackle this problem, we recommend that the pace of change for the CRDC be slow and deliberate, particularly for the content. We also recommend that NCES/OCR inform LEAs and SEAs of the forthcoming plans for change and when the change will happen.

Exhibit 1|Examples of time burden for CRDC reporting

| Site | Time burden description | Summary of CRDC reporting activities |
| --- | --- | --- |
| Small LEA | It takes a few weeks to compile all of the data. | one person at district level who receives, inputs, and submits all CRDC data; extracts data from six databases; state not involved in submission; majority of data stored in system, just need to extract it; no timeline for data entry; school personnel enter data into “master bridge” system and then POC extracts for CRDC; manually enters into CRDC |
| Small LEA | It takes approximately 30 minutes per school and a total of 5 hours to enter data for all the schools in their district into the CRDC website. Small parts of the CRDC take the most time; 50% of time for CRDC is spent on 5% of the data needed for CRDC. For example, AP results take a long time. | SEA involved in submission; data constantly being collected in SIS; runs canned queries to get CRDC numbers; numbers are manually entered into the site |
| Small LEA | It takes 52 hours. | uses SEA data system; SEA does not collect data for CRDC; LEAs aggregate data from paper reports; data put into spreadsheets, pivot tables are run to create aggregate counts |
| Small LEA | Elementary schools: about 2 days; it’s easier and shorter, there are fewer questions. Middle schools take longer than elementary schools. High schools take longer than middle schools. | state not currently involved in submission; LEA data team (school registrars) retrieve data and give it to POC, who manually enters it (they meet for 2 hours every month, have trainings on definitions, data elements); data team uses SIS to run queries; bullying/harassment data come from counselors; after about 2 weeks, registrars, HR, and finance provide POC with output and she enters manually into CRDC; downloads and gives to schools to review, and then makes edits |
| Mid-size LEA | It requires 40 or more hours to collect all the data and compile it. | SEA provides 60% of data; one POC, schools not involved; school personnel enter data into SIS all year long; requests data from HR/finance departments separately, plus incident reports/harassment; merges data from SIS, HR, finances, SEA; uploads them to CRDC |
| Mid-size LEA | About a week. | SEA not involved; runs queries from SIS and then consults subject experts to fill in remaining data; schools collect data on daily basis, stored in SIS; data then keyed into survey tool |
| Mid-size LEA | It took about 3 weeks. | SEA involved; school-level data submitted to LEA on regular basis; data for state collected and then converted to meet CRDC; experts consulted for data not included in the SIS (HR, athletic data, AP, LEP, vocational) |
| Large LEA | Creating the flat file takes approximately 2 weeks. | single person; SEA not involved; school-level personnel enter data in SIS on an ongoing basis; POC merges data using previous queries to produce single flat file for submission; no special time frame, no interaction with schools |
| Very large LEA | It takes 6 months to do the CRDC. | state does not currently provide any data to the LEAs; only LEAs are involved in submission; schools enter data into SIS all year long and then LEA contacts schools with questions if needed; coding system not detailed enough, narratives and not codes for incident reports; four different databases; schools report discipline differently and the LEA has to align the data |

Source: CRDC Improvement Project Site Visits, 2014.

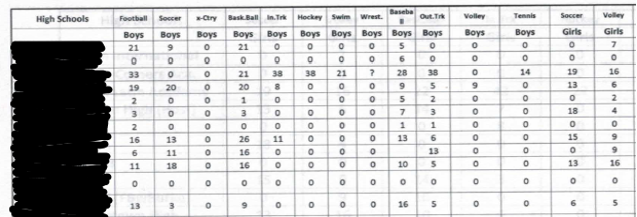

Exhibit 2|List of data elements reported as difficult and reasons for difficulty, by LEA size

Data element |

Site visit respondent description of difficulty, by LEA size |

Athletics data |

Large LEA: Athletics directors or coaches provide information. Rosters of students must be coded and aggregated to meet the CRDC requirements. Rosters often do not include a student’s race, ethnicity, gender, or student IDs, so the LEA must try to match the student names with the information in the SIS, which is very time consuming. School: Collected in hard copy; coaches for this school report to state, not district, so numbers are not accurate at district level Mid-size LEA: They rely on coaches’ lists of individuals who play for them and it is difficult to get accurate data Mid-size LEA: Asks schools for their athletic data; not good at keeping it in SIS Small School: Athletics supervisor keeps athletics data and is it not in SIS Mid-size LEA: Schools put data into SIS, but unsure how accurate Small LEA: Coaches respond to data requests for athletics by hand |

Financial data |

Large LEA: Often need more clarification of who falls into which category. Finance data come from own data source and are pulled by a colleague (senior coordinator for finance) and put in a spreadsheet. The data center manager then pulls the information needed and manipulates the spreadsheet for CRDC Small LEA: Central office staff aren’t tracked by amount of time spent at each school LEA: Finance staff report that their system is not set up to track information needed for CRDC Mid-size LEA: Expenditure at the LEA level might not accurately show what the dollars were spent on by school Very large LEA: Finance department provides these data in PDF format, and it is time-consuming to enter such data into the CRDC school by school Small LEA: Need definitions distinguishing support staff from administrative staff and noninstructional support staff Very large LEA: Need clarity about whether preschool personnel salaries are disaggregated by funding source; question of whether salaries are needed for particular point in time or for teachers who were present for whole period of collection time; and whether CRDC is seeking budget amount or actual expenditure for money questions LEA: The district doesn’t know teacher’s overall experience in teaching, just the amount of time teaching in district |

Security guard, school resource, law enforcement officer |

Mid-size LEA: Security guards, school resource officers, and law enforcement are difficult to track because they contract for services, and it is hard to know who is working at a given time Mid-size LEA: Sworn law enforcement officer is not tracked in student information system—it would need to be hand-counted Small LEA: Have juvenile corrections officers who may serve an overlapping or similar function Large LEA: Often use city police and do not track information about city police |

Nonpersonnel expenditure |

Large School: District does not have accurate numbers because federal and state grant funds go directly to her school, not through district Small LEA: Need clear definition of what is included in nonpersonnel expenditure LEA: Schools are on different schedules for equipment replacement, so it may look like there is inequity within a single year |

Dual enrollment in courses |

Large LEA: Not in system as requested by CRDC, but can be calculated School: Information could be found in the system but system may not have separate codes for these; hard to capture |

Credit recovery |

Large School: In system as a description but not a separate code, so it may be hard to capture Small LEA: Need to be highly defined; students doing credit recovery are in a class with other students who are taking it for the first time, so it is hard to measure Small LEA: They do not report on this element because of the timing of classes Mid-size LEA: Need to be sure of the definition to see whether their programs qualify |

Advanced Placement (AP) exam data |

Many LEAs of all sizes expressed difficulty in reporting AP data because the LEAs do not receive the data from the College Board in a format that facilitates reporting to the CRDC (e.g., the AP data do not include enough identifying information to match with student SIS records). Large LEA: Counting who took exams is hard; probably the most difficult aspect of all; a challenge, because it depends on whether you get the information from the College Board or whether someone cleans it up and matches it to our student IDs School: Data may not exist for all schools; comes from College Board; knowing whether students are allowed to self-select would be difficult to determine Small LEA: Data are collected in SIS, but must be verified by high school guidance counselor Small LEA: Must be obtained from guidance counselor in spreadsheet separately Mid-size LEA: College board does not use student ID number, so it must be matched to student data by student name, which is cumbersome; College Board provides the data as a PDF and data must be manually entered into Excel files by LEA, and then pivot tables are run for aggregations Very large LEA: Data exist in other offices and require a matching process; LEA has no control over the accuracy of the data Small LEA: Passing APs is not something the LEA tracks; what constitutes passing? |

SAT and ACT |

Small LEA: College Board data results don’t include student ID numbers so can’t easily be entered into system Mid-size LEA: Data not in SIS; data are gathered by school guidance counselor and sent to CRDC POC Small LEA: Students sign up for SAT/ACT independently, and the data do not go through the schools |

Number of students absent for more than 15 days |

Large LEA: Is it for entire school year, or just within specific school student attended at end of year? Small LEA: Does “absent” include suspensions, field trips, etc.? How do you define “all day absent” (number of classes missed? the missing of a bridge period?); different definitions of “full-day absent” are used by different schools Mid-size LEA: Clear definition needed Very large LEA: Students may be absent for more than 15 days, but days spread across multiple schools attended—this would require calculation |

Referral to law enforcement agency/official |

Large School: Not sure how it data element entered/coded in system if it is not an arrest (not a black-and-white element) Mid-size LEA: They do not track these data |

Arrests |

Mid-size LEA: Difficult to provide because data element must be requested from schools. It is so rare that it is not thought to be reported in any certain way Mid-size LEA: Don’t keep track of arrests for school-related activity specifically—they would need to look through each suspension to find out if it resulted in an arrest |

Bullying/harassment and other discipline data |

Many LEAs of all sizes reported difficulty in reporting discipline data for the CRDC. In most cases, there was no standard system for coding incidents that match the CRDC definitions. The process of reporting therefore required reviewing incident descriptions for each incident, and then coding them Large LEA: Need to clearly identify who falls into which category LEA: Need more description for types of incidents (e.g., does firearm refer to hand gun, rifle, etc.?) School: Unsure whether there is a code for these details in the system Small LEA: Collect data on the person who bullies or harasses, but do not collect data on the victim or basis for bullying; data collected is not as specific as CRDC, e.g., they track threats of physical attack but do not specify if threats include weapon/firearm/explosive device Mid-size LEA: Must read through specific incident comments and then categorize incidents; no category for rape or sexual battery Small LEA: Time consuming; level of detail needed for reporting for CRDC isn’t in system; must obtain from the schools and create separate database for the data Mid-size LEA: Collect the listed types of incidents but not in the same language LEA: Challenging to pull disciplinary data, because the disciplinary file is huge and requires a lot of data manipulation work; based on the ways schools enter infractions and comments Mid-size LEA: There are no business rules for standardizing entry and coding of data; LEA must disaggregate the reports that are sent to SEA in order to calculate incidents by student characteristics; CRDC report is much more specific than state reports; discipline data is primarily free entry, making it difficult to search Mid-size LEA: Specificity is difficult to report because state does not require level of specificity that CRDC requires; must consult paper records Very large LEA: Some schools do not want to collect victim data because they don’t want the information following the student; CRDC wants number of 
victims and offenders to match, but that doesn’t always happen LEA: Incidents may have more than one event and student involved; some of the specificity is included in narratives or not at all; this data requires mapping current codes to the CRDC categories Mid-size LEA: Do not have codes that split documented incidents as specifically as CRDC requests Very large LEA: Some incidents may be reported for a building as opposed to a school (some buildings house more than one school) Small LEA: There is no reliable database for answers to CRDC data elements—students are usually reported for fighting or assault, not harassment/bullying Very large LEA: Schools report incidents but not as specifically as is needed for CRDC and other collections Small LEA: Allegations are not in student system; reporting of allegations depends on whether someone gave information to counselors/principals. At the LEA’s charter school, the registrar pulls hard-copy data to report bullying and harassment |

Course information |

Mid-size LEA: Combining students who have taken more than one of the requested courses is difficult LEA: There is no clear definition of failure, so providing information on students who passed/failed certain courses is difficult Large LEA: Need to clearly identify who falls into which category Small LEA: Want to submit all class info in a table and have CRDC manipulate it the way it is needed Mid-size LEA: There is no universal definition of “passing”—it is defined at the local level and data may not be comparable across LEAs; mobility can cause number of students passing a class to be greater than number of students enrolled; NCES needs to provide info on how to define classes (list of SCED codes would be helpful) Mid-size LEA: Number of students taking a certain class/teacher caseload size is a moving target, so it is not accurate from point A to point B; need clear definition of classes that qualify as algebra I, etc. Very large LEA: Schools have somewhat different names for courses and require mapping; number of students enrolled in a course and number of students passing doesn’t always match Very large LEA: Schools, LEAs, SEAs might have differing definitions for courses; in last CRDC, LEA evaluated which of its courses corresponded with given definitions; mobility causes beginning of year numbers to differ from end-of-year numbers Small LEA: Course definitions need clarity—there could be a student in the justice facility who gets an algebra credit after only being in a class for 10 days because he had already done a year of algebra during the previous data collection time period Very large LEA: Enrollment is reported at beginning of year, and number of students taking a course may be different when reporting numbers at end of year. CRDC compares the two numbers, and the LEA will get an error message even though the numbers are accurate |

Noninstructional support personnel |

Mid-size LEA: Need clarity on definition

LEA: Noninstructional personnel (security guards, custodians, etc.) are difficult to track because there is a lot of mobility based on needs of schools (need a specific date for the data point or else it will not make sense because of the mobility)

Small LEA: Psychologists hired at central office and shared between schools, so there would need to be something defining their FTE

Very large LEA: Need definitions for how to differentiate different types of support staff |

Limited English proficiency (LEP) |

Mid-size LEA: Challenging because it is in a whole separate system (e.g., not in the SIS)

Mid-size LEA: LEP data is tracked outside of the SIS |

Justice facility |

Mid-size LEA: Does not collect data on when a student is sent to a justice facility outside the district aside from the information that they have left the district (enrollment record)

Mid-size SEA: Justice facilities are administered by Department of Justice, and SEA has no control over their actions and the information collected; all justice facility schools are part of a single LEA (state-operated agency) and are not “responsible” for high school completion/graduation

Very large LEA: Justice facility is a separate program within an alternative school and is not reported separately; participation in a program for less than 15 days would take a long time to gather because students go in and out of programs throughout the year |

Source: CRDC Improvement Project Site Visits, 2014.

Exhibit 3|Illustration of types of LEA data systems

Data barns: Staff/finance information system(s); Student Information System (SIS)

Data silos: Athletics; Bullying & harassment; Guidance (e.g., AP, SAT, ACT, graduation); Distance education; Security staff

System types shown: LEA data system type 1; LEA data system type 2; LEA data system type 3

Goal 2 | Achieve better respondent engagement through better communication

Recommendations for communication

Challenge: Lack of clarity regarding the purpose of the CRDC

Recommendations:

Develop a mission statement and include it on all CRDC materials

Develop an FAQ about the use of CRDC data

Challenge: Communications about new data elements or changes to the CRDC are often received too late to allow LEAs to adequately plan for reporting timely and accurate data

Recommendations:

Develop a standard communication timeline

Maintain respondent web space to access information about the CRDC

Challenge: Once communications are sent from OCR, they are not reaching their intended recipients within the LEA

Recommendation: Engage SEAs in communication process

Challenge: POCs did not have adequate information to share with other offices and staff to obtain accurate data

Recommendations:

Strengthen the leadership role of the POC

Develop a welcome packet of key materials for the POC to use in communicating about the CRDC

Challenge: LEAs were either unaware of training materials or did not find them helpful

Recommendations:

Embed training materials within the data collection tool

Develop a CRDC “best practices” guide

Design a process to develop training materials in response to LEA needs, and track their use

Challenge: The CRDC lacks a formal feedback mechanism for learning from and improving the LEA user experience

Recommendations:

Develop a feedback mechanism for improving the current and future collections

Create and maintain a consistent email address to be used exclusively by CRDC respondents

|

Challenge: Lack of clarity regarding the purpose of the CRDC

In past CRDC communication efforts, the purpose of the CRDC has been conveyed through documents sent at the beginning of the collection or at other single points of communication. Responses from the site visits suggest that this approach has not been effective: many respondents said they did not understand the purpose of the CRDC or did not even know about it. Typically, only one person receives a communication regarding the CRDC, and it then falls on that person to relay the information to others, which does not appear to happen effectively.

At the LEAs we visited, only a few staff (aside from the POC) who collected data needed for the CRDC knew what the CRDC was. Sites reported that the initial letters to the LEA may have included statements about the purpose of the CRDC, but these communications were often sent directly to the superintendent and were not consistently shared with the primary POC(s) responsible for gathering and reporting the data.

Recommendation: Develop a mission statement and include it on all CRDC materials

We recommend that the CRDC develop a mission statement and FAQs for new respondents about the purpose and other key aspects of the data collection that can be used regularly in all forms of CRDC communication with respondents. For example, it can be included at the bottom of letters, in email signatures, on training materials, in reports, on the website, in the online tool, etc. Regular use of consistent statements will lead, over time, to greater saliency of the CRDC with stakeholders who experience any aspect of the collection. Survey research has consistently shown that topic saliency is a major predictor of survey engagement (Groves, Singer, and Corning 2000).

On the OCR webpage presenting information about CRDC, this tagline appears: “Wide-ranging education access and equity data collected from our nation’s public schools.” This statement usefully describes CRDC data to potential users, but it does not emphasize its purpose for respondents.

The mission statement should emphasize the impact of the CRDC on students’ educational success and opportunity over compliance or data description. It was clear from the site visits that most CRDC respondents understood the importance of CRDC compliance and the kind of information that is collected in it. However, the data that are meaningful to school and district administrators are data that inform their goals as administrators of learning institutions, namely helping students succeed in school. We recommend that communications about the purpose of the CRDC reflect these goals of schools and districts.

Recommendation: Develop an FAQ about the use of CRDC data

“They need to know what it means to them. What feedback does it provide? What is the product? You have to build trust that the request is legitimate and useful.” – High school principal on conveying the importance of data to school staff

NCES/OCR should also consider providing item-by-item justifications for the data elements. These rationales could be linked from the online tool, similar to data element definitions.

“I’m interested in data that can help me improve teaching and learning in my school.” – High school principal on what data is most useful

Challenge: Communications about new data elements or changes to the CRDC are often received too late to allow LEAs to adequately plan for reporting timely and accurate data

LEAs often reported receiving communications about the CRDC too late to plan adequately for collecting and reporting data in a timely and accurate manner. During the site visits, LEAs identified data elements for the 2013–14 school year for which no collection process was in place. This significantly limits their ability to respond accurately to the upcoming collection and may increase burden by forcing an ad hoc data collection process that is not linked to the SIS. LEAs expressed the desire to plan FTE allocations for responding to the survey and for ensuring that the data are validated. As one LEA mentioned, “100% of the data was not accurate 100% of the time.”

Recommendation: Develop a standard communication timeline

Among the SEAs and LEAs that provided feedback on the timing of communications, one message was universal—communication about the CRDC to SEAs and LEAs should begin as early as possible. We agree. SEAs and LEAs benefit from as much lead time as possible to prepare for the CRDC data submission process. Specific suggestions include

This last bullet, creating a calendar, is important. There is currently no detailed schedule of activities to guide LEAs in their planning for the CRDC. This contributes to a lack of understanding of the process and inhibits appropriate planning. We recommend that NCES/OCR agree to and approve for public distribution an official calendar for the 2013–14 and 2015–16 collections. OCR and NCES should develop a communication timeline that is standard and consistent from year to year. This will allow LEAs and SEAs to better anticipate and prepare for key activities. We have provided a sample calendar in Exhibit 4 that includes data collection activities as well as recommended planning activities for POCs.

We also recommend that NCES/OCR make public a timeline of the CRDC improvement activities, particularly for changes to data elements. As referenced in Goal 1, consistent and strict development timelines should apply. A suggested timeline is proposed at the end of this report.

Recommendation: Maintain respondent web space for access to information about the CRDC

“Principals, assistant principals, secretary, whomever the principal designates to provide the data” – Mid-size LEA on who in the school is responsible for reporting CRDC data

The web address for the respondent web space should be used on all CRDC communications with respondents, and the web space should be updated regularly. The address should therefore be simple and easy to remember, such as nces.ed.gov/crdc/participants.

Challenge: Once communications are sent from OCR, they are not reaching their intended recipients within the LEA

At many sites, the channels of communication from OCR to key LEA staff were ineffective. First, communications sent only to superintendents were not consistently passed on to those responsible for data entry or upload for the CRDC. Even getting a communication from a superintendent to a POC was challenging. One very large LEA reported instances in which either the superintendent did not forward the CRDC communication to the correct person in the district or the communication was not handled in a timely manner. Second, communications that did reach the POC were not widely distributed to those in the LEA who routinely collected, validated, or reported the data. Many sites reported that school staff were largely unaware of the collection. In cases where school staff were engaged directly in reporting CRDC data, the POC often lacked the time necessary to adequately plan for the collection. At one site, schools were given a deadline of 10 days prior to the actual submission date to review and correct the data. As a result, communication is delayed or never reaches the CRDC-knowledgeable people at the LEA, which limits their time and ability to respond.

Recommendation: Engage SEAs in communication process

The site visits confirmed that there is a hierarchy of data priorities for school districts, and SEA data requests are at the top. The CRDC should develop a contact and communication strategy that uses the authority and fluency of SEA-to-LEA communication to improve both the timeliness and quality of LEA responses.