NAEP Technology Based Assessments (TBA) Tools and Item Types Usability Study

NCES Cognitive, Pilot, and Field Test Studies System

Volume II - NAEP TBA Tools and Item Types Usability Study 2014

OMB: 1850-0803

National Center for Education Statistics

National Assessment of Educational Progress

Volume II

Interview and User Testing Instruments

NAEP Technology Based Assessments (TBA)

Tools and Item Types Usability Study

OMB# 1850-0803 v.112

August 6, 2014

Table of Contents

1 Participant ID and Welcome Script

2 Computer and Tablet Familiarity Survey

3 User Testing Scenarios

3.1 Sample Task 1 – Drag and Drop

3.2 Sample Task 2 – Zoom

3.3 Sample Task 3 – Scrolling

4 Exit Questions

5 Ease of Use Rating Survey/Comments

Paperwork Burden Statement

The Paperwork Reduction Act and the NCES confidentiality statement are indicated below. Appropriate sections of this information are included in the consent forms and letters. The statements will be included in the materials used in the study.

Paperwork Burden Statement, OMB Information

According to the Paperwork Reduction Act of 1995, no persons are required to respond to a collection of information unless such collection displays a valid OMB control number. The valid OMB control number for this voluntary information collection is 1850-0803. The time required to complete this information collection is estimated to average 75 minutes including the time to review instructions and complete and review the information collection. If you have any comments concerning the accuracy of the time estimate(s) or suggestions for improving this form, please write to: National Assessment of Educational Progress, National Center for Education Statistics, 1990 K Street, NW, Washington, DC 20006.

This is a project of the National Center for Education Statistics (NCES), part of the Institute of Education Sciences, within the U.S. Department of Education.

Your answers may be used only for statistical purposes and may not be disclosed, or used, in identifiable form for any other purpose except as required by law [Education Sciences Reform Act of 2002, 20 U.S.C. §9573]. OMB No. 1850-0803

1 Participant ID and Welcome Script

Before starting the user testing session, the Fulcrum IT interviewer will complete the participant ID information below:

| Information | Observer Notes |
|---|---|
| Date | |
| Participant ID | |
| Booklet Version (A, B, or C) | |
| Grade | |
| School Name | |

Welcome Script

Text in italics is suggested content with which the facilitator should be thoroughly familiar in advance.

The facilitator should project a warm and reassuring manner toward the students and use conversational language to develop a friendly rapport. While the facilitator is talking, the Paperwork Burden Statement will be displayed on the screen of the tablet for the participant.

Hello, my name is [Name of Facilitator] and I work for Fulcrum IT, which is conducting a study for the National Center for Education Statistics (NCES) within the U.S. Department of Education. Thank you for helping us with our study today.

The Department of Education regularly conducts assessments of 4th, 8th, and 12th graders in subjects like mathematics and reading. These assessments are used to see how well our education system is doing, and they allow us to compare performance across states. These assessments used to be given using paper and pencil, then some were given on laptop computers, and now we are using touch-screen tablets. We are creating many new questions and tools for these assessments and need to know if they will work before we use them in a real assessment. We don’t want students to start the assessment and then have no idea what to do! My job is to try out these new questions and tools with 4th, 8th, and 12th graders to find out if they are easy to understand and use.

This is where you come in. You were selected to participate in our study because you are a typical [grade level]-grader and will be able to give us honest and insightful feedback on these new items and tools. We have created a made-up test with all the different tools we need to evaluate. We are going to walk you through this practice test to see how easy it is to understand and use. We will ask you to perform specific tasks, and will write down what you do. We will be asking you questions like “What do you think this symbol means?” or “How would you go about doing this?” or “How easy do you think that task was?” Even though you will be answering test questions, we don’t care if you get them right or not. We don’t even know the answers to some of them ourselves.

We won’t be scoring any of the questions, but we will use your opinions and results without your name, along with the ideas and answers of other students, to make changes and improve the system for [grade level]-graders all across the country.

While you are doing the tasks, we will be recording everything that happens on the screen. This is so that when we get back to the office, we can look at which buttons you clicked and what else happened on the screen. We are not videotaping you, so no one will ever know who did all these things on the screen.

Your participation is voluntary and if at any time you decide you do not want to continue, you may stop. Do you have any questions?

[Answer questions as appropriate]

Let’s begin.

2 Computer and Tablet Familiarity Survey

The Computer and Tablet Familiarity Survey will be given to student participants at the beginning of the user testing session, after the welcome. The facilitator will place the survey in front of the student and read the questions and answer options aloud. Clarifying language will be used to help guide the students to an accurate response. Some examples of this type of language are “Well, do you use a tablet every day?” and “How about the last time you used a computer? When was that?”

I have a couple of questions for you about your experience with computers, tablets, and mobile phones, either in or out of school:

About how much time do you spend using a computer (desktop or laptop) in a typical week?

| None | Less than 1 hour | 1-5 hours | More than 5 hours |
|---|---|---|---|
| [ ] | [ ] | [ ] | [ ] |

About how much time do you spend using a tablet (like an iPad or Android tablet) in a typical week?

| None | Less than 1 hour | 1-5 hours | More than 5 hours |
|---|---|---|---|
| [ ] | [ ] | [ ] | [ ] |

Now I’d like to find out how often you use a mobile phone (also known as a cell phone) for different activities.

| Question | None | 1-2 times | 3-10 times | More than 10 times |
|---|---|---|---|---|
| About how many calls do you make per week on a mobile phone? | [ ] | [ ] | [ ] | [ ] |
| About how many texts do you send per week on a mobile phone? | [ ] | [ ] | [ ] | [ ] |
| About how many emails do you send per week on a mobile phone? | [ ] | [ ] | [ ] | [ ] |

About how much time do you spend per week surfing the internet or using other apps on a mobile phone?

| None | Less than 1 hour | 1-5 hours | More than 5 hours |
|---|---|---|---|
| [ ] | [ ] | [ ] | [ ] |

3 User Testing Scenarios

Description

The facilitator will set up items on the tablet between tasks. Some tasks will be completed using the eNAEP system, while others will be completed using prototypes that require switching from the eNAEP system to a Web browser.

Participants will be given specific tasks to complete using the tablet. They will be asked to explain what they are doing and why they are doing it while completing the tasks. If participants fail to provide sufficient narration of their actions, they will be prompted by the facilitator using questions such as, “What are you doing now?” and “Can you tell me why you did that?”

If a participant performs an action that does not have the desired effect, the facilitator may say, “Hmm, that didn’t work. What else could you try?” to gain a deeper understanding of the student’s thinking about the task.

If participants are unable to complete a particular task after a couple of attempts, or are unwilling to try because they don’t know what to do, they will be told how to perform that task element before moving on to the next item.

The task instructions for all items will fall into one of the following four types:

Identify function of indicated control (e.g., “What do you think happens when you click on this?”)

Identify control to achieve indicated function (e.g., “Tell me how you might change your answer.”)

Perform task (e.g., “Please read the instructions and complete this item.”)

Provide feedback (e.g., “We have tried doing this with two different buttons. Which one do you think we should use on our assessments?”)

The tasks described in this section are examples of the types of tasks that students will be performing and do not constitute a comprehensive list. For each user testing session, the actual tasks, as well as their order of presentation, will be determined by the nature of the interactions being studied and by the counterbalancing needed for accurate interpretation of the results.

The following are descriptions of components of the user testing scenarios:

Task instructions: The task instructions (shown in italic text below) tell the participant what to try to accomplish. For example, in the first sample task below, the participant is asked to read the instructions and complete the item. These instructions are often intentionally vague to see how intuitive the process is for the student.

Task step: A task step (also frequently called a “task element” and shown in the left column of the tables below) is a specific action required to complete a task. Students will not be told which task steps are required. As mentioned earlier, however, the facilitator may prompt a participant who is unlikely to complete a task without help. Because the need for prompts is itself part of the data being collected, prompts will be provided only at specified points in each task step.

Control mechanism: The control mechanism (shown in the right column of the tables below) is the part of the tablet interface that the user interacts with to complete a task step. Examples of control mechanisms are the NEXT button, the volume control (both hardware and software), and the highlighter tool. Like the task step, the control mechanism is not described or mentioned to the participants; it is included here to indicate what is being tested. Collecting task completion data at the control mechanism level will provide the detailed feedback that item developers need to maximize the usability of their items for actual NAEP assessments.

A printed copy of the Ease of Use scale (Section 5) will be placed on the table so that both the student and the facilitator can refer to it at any time. The Ease of Use scale will be administered after each task (time permitting).

The tasks in the scenarios below represent a sample of the types of tasks that participants will be asked to complete using the tablet. Each user testing session will comprise a different series of tasks and instructions depending on the availability and development stage of different assessment mechanisms in the NAEP system.

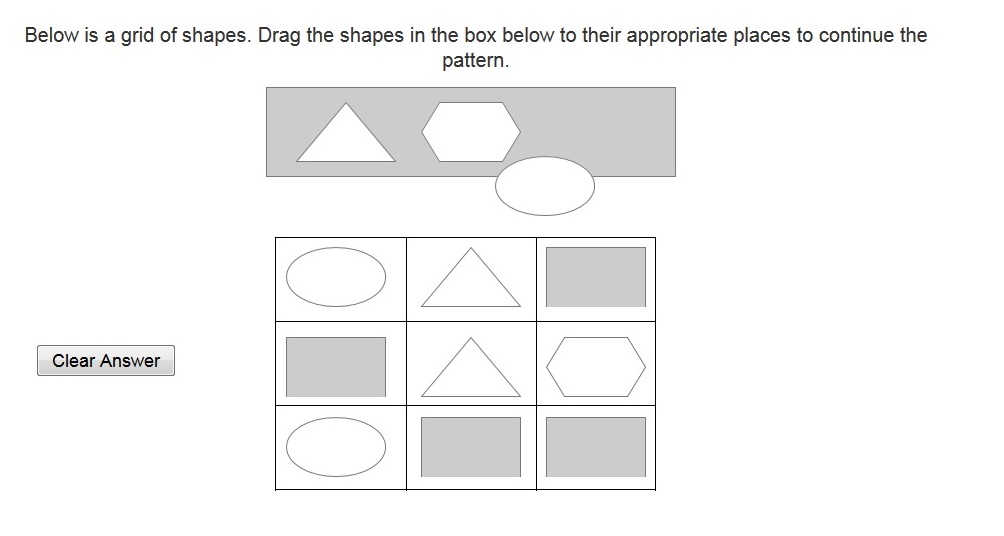

3.1 Sample Task 1 – Drag and Drop

[Participant is presented with the following screen.]

Task Instructions: Please read the instructions and complete this item.

| Task Step | Control Mechanism |
|---|---|
| Choose mechanism | Trackpad, stylus, or finger |
| Tap and hold draggable shape | Draggable shape |
| Drag to grid | Hold and drag action |
| Release object on grid | Let go while target is highlighted |

[Facilitator administers the Ease of Use scale.]

3.2 Sample Task 2 – Zoom

[Participant is presented with the following screen.]

Task Instructions: I can’t read those bottom two rows. How would you go about making them larger so I could read them?

| Task Step | Control Mechanism |
|---|---|
| Zoom screen | Zoom in button |

[Facilitator administers the Ease of Use scale.]

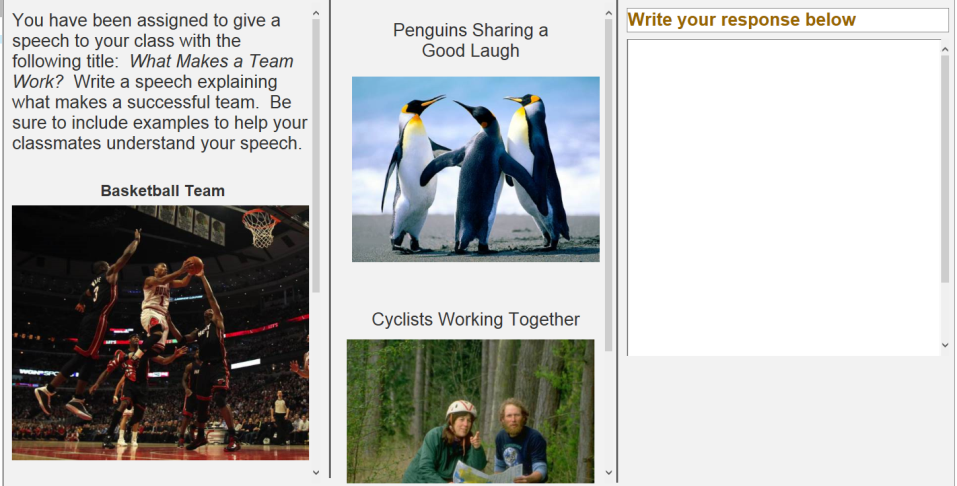

3.3 Sample Task 3 – Scrolling

Task Instructions: Now I’m going to ask you to use the stylus for this item. I need you to look at all the pictures for this item. Once you’ve looked at them all, I’d like you to write something in the answer box.

| Task Step | Control Mechanism |
|---|---|
| Scroll left content area to BOTTOM | Scrollbar or pane |
| Scroll middle content area | Scrollbar or pane |

Task Instructions: Let’s type in a sentence related to those pictures.

[Student writes]

Task Instructions: Now I’d like you to select the second word in your sentence, copy it, and paste it at the end of the sentence.

| Task Step | Control Mechanism |
|---|---|
| Select word | Stylus/Selection gesture |
| Copy text | Popup menu/Key command |
| Set cursor at end of sentence | Stylus/text box |
| Paste text | Stylus/text box |

[Facilitator administers the Ease of Use scale.]

4 Exit Questions

Questions like the examples below will be asked after all tasks have been completed.

Instructions: I would like to get your opinion about everything we’ve done so far. (Responses will be transcribed by the researcher.)

Now you’ve completed items using the mouse, the trackpad, a stylus, and your finger. Which one did you like best?

Why?

How about your second favorite?

And your least favorite was?

We used tabs to move through test items. Some were on the left side, some were on the right side, and others were on the bottom. Which position do you think makes the most sense?

How about overall? Did you like using the stylus?

What kinds of things on the test was the stylus good for?

How would you rate this test on a tablet? Remember, we are not thinking about how hard the questions are to answer, but how hard or easy it is to move around and do things required for the test on this tablet. Was it easy, difficult, or somewhere in the middle? [continue Ease of Use prompts to get a final rating]

If you were going to be taking one of our tests, which way would you want to do it? On paper, on a tablet, or on a laptop computer?

What would be the best thing about taking a test using the tablet?

Would there be any bad things about taking a test using the tablet? [prompt for what]

Do you have any other comments for us about giving our tests to kids using tablets?

Thank you for helping us to improve our test.

5 Ease of Use Rating Survey/Comments

The following Likert scale will be administered at the conclusion of each task during user testing.

| Very Difficult | Somewhat Difficult | Can’t Decide | Somewhat Easy | Very Easy |
|---|---|---|---|---|
| 1 | 2 | 3 | 4 | 5 |

Initially, the facilitator will use the following script to prompt a response for the Ease of Use scale.

I’d like to ask you about what you just did. How easy was it for you to figure out what to do? Was it easy, difficult, or somewhere in the middle?

If the participant answers to the effect of “easy,” the facilitator will then ask, “Was it somewhat easy, or very easy?”

If the participant answers to the effect of “difficult,” the facilitator will then ask, “Was it somewhat difficult, or very difficult?”

If the participant answers to the effect of “in the middle,” the facilitator will then ask, “Was it a little bit easy, a little bit difficult, or is it hard to decide?”

These prompts will be used initially and as needed by participants, but may be dropped for some students as they become accustomed to responding to the Ease of Use scale.

After a rating has been given for the task, the facilitator will then ask, “Do you have any comments about this task? Do you have any suggestions to help us make it better?”

Responses will be written down verbatim by the facilitator.
