Justification


NCES Cognitive, Pilot, and Field Test Studies System


OMB: 1850-0803

Volume I

2016 National Household Education Surveys Program (NHES)

Parent and Family Involvement Survey (PFI) and

Early Childhood Program Participation Survey (ECPP)

Cognitive Interviews

OMB# 1850-0803 v.121

December 15, 2014

Justification

The National Household Education Surveys Program (NHES) is conducted by the National Center for Education Statistics (NCES) and is NCES’s principal mechanism for providing data on education topics studied through households rather than establishments, including early childhood care and education, children’s readiness for school, parent perceptions of school safety and discipline, before- and after-school activities of school-age children, participation in adult education and training, parent involvement in education, school choice, homeschooling, and civic involvement. The NHES consists of a series of rotating surveys using a two-stage design in which a household screener collects household membership and key characteristics for sampling and then the appropriate topical survey(s) are mailed to eligible households. In addition to providing cross-sectional estimates on particular topics, the NHES is designed to measure changes in key statistics. NHES surveys were conducted approximately every other year from 1991 through 2007 using random digit dial (RDD) methodology; beginning in 2012, the NHES switched to collecting data by mail to improve response rates. In 2016, the NHES will field the Parent and Family Involvement in Education Survey (PFI), the Early Childhood Program Participation Survey (ECPP), and the Credentials for Work Survey (CWS).

This request is to conduct cognitive interviews to test and revise a subset of items from the PFI questionnaire, based on potential issues with a few items that were observed in the 2012 collection and the 2014 Feasibility Study. Descriptions of these items and their potential problems are shown in Exhibit 1. We will also test a series of new and revised ECPP items that cover topics suggested by the Office of Nonpublic Education (ONPE) and the Policy and Program Studies Service (PPSS) in consultation with the Office of Early Learning, including minor wording changes in the child disability section. Lastly, we will test revisions to the format of the questionnaire that make it more similar to the Credentials for Work and Training for Work surveys that will be fielded in the NHES in 2016.

In order to reduce the respondent’s cognitive and time burden, we have included only the sections of the questionnaires that contain items requiring testing. Cognitive testing has been used for other NHES surveys in past years. The objective of the cognitive interviews in 2015 is to identify and correct problems of ambiguity or misunderstanding in question wording and respondent materials. The cognitive interviews should result in a set of questionnaires that are easier to understand and therefore less burdensome for respondents, while also yielding more accurate information. The primary deliverable from this study will be the revised questionnaires.

Exhibit 1. Survey items to be cognitively tested

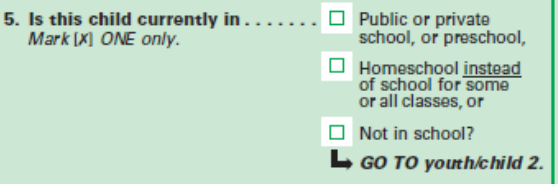

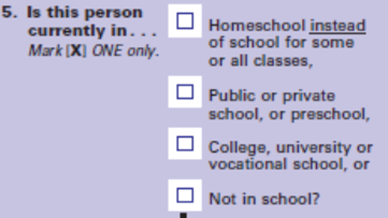

Enrollment

2012 Question | 2014 Feasibility Study Question

2012 Issue: NCES was unsure if homeschooling rates were being underreported on the NHES since homeschooling was listed as the second enrollment option.

Testing: A split-panel was used for this item; half of respondents received the 2012 question, with homeschooling listed as the second option. The other half received a revised version, which listed homeschooling as the first response option.

Results: The results demonstrated that there was no significant difference in reported homeschooling rates between the two forms; the percentage of household members reported as homeschooled increased only from 0.80% to 0.81% when homeschooling was listed as the first option. However, some children who were reported as not homeschooled on the screener were reported as homeschooled in the topical questionnaire.

We will test a revision to the wording.

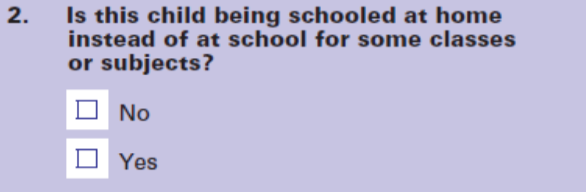

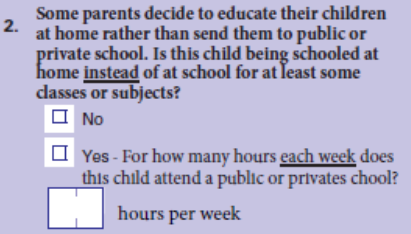

Homeschooling

2012 Question | 2014 Feasibility Study Question

2012 Issue: Due to an unexpectedly high number of “yes” responses on the 2012 item, it was suspected that respondents did not understand that this question was about formal homeschool programs, and instead were reporting about casual instruction in the home.

Testing: In the 2014 NHES-FS, the question was revised; those who marked “yes” also had to specify the number of hours per week that the child attended public or private school.

Results: This alternative also seemed to confuse respondents; of those who reported that the child was homeschooled, about 66% indicated that the child spent 25 hours or more per week in public or private school, which indicates by NHES’s definition that they are not homeschooled for all or most of their schooling. Additionally, some respondents selected “no” or skipped this item, but wrote that the child spent 25 hours or less in public or private school, which would indicate that the child is homeschooled all or most of the time.

We will test a series of items to capture basic information about homeschooling, taken from the homeschooling questionnaire.

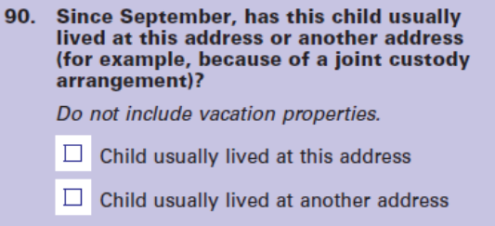

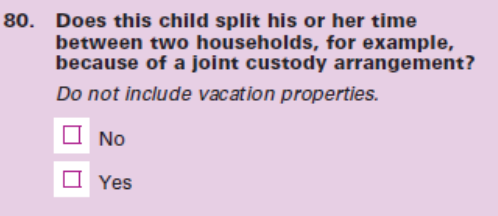

Joint Custody Arrangements

2012 Question | 2014 Feasibility Study Question

2012 Issue: Since many children are in split custody arrangements, NCES was uncertain if this wording was capturing all cases where the child might potentially be sampled in two different households.

Testing: In the 2014 NHES-FS, the question was revised to a yes/no item that read: “Does this child split his or her time between two households, for example, because of a joint custody agreement?”

Results: Although the two versions of the question are not directly comparable, analysis suggested a higher percentage of children living in two households in the 2014 version than the 2012 version. Whereas about 3% of parents reported that the child “usually lived at another address” in 2012, about 13% reported that the “child split his or her time between households” in 2014. The 2014 version also had an item missing rate almost 2 percentage points higher than the 2012 version.

We will test a revision to the wording.

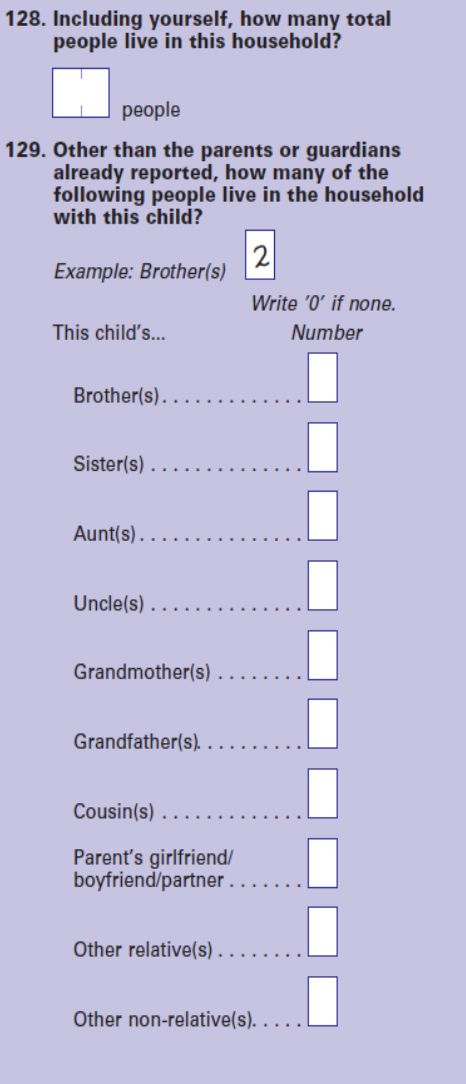

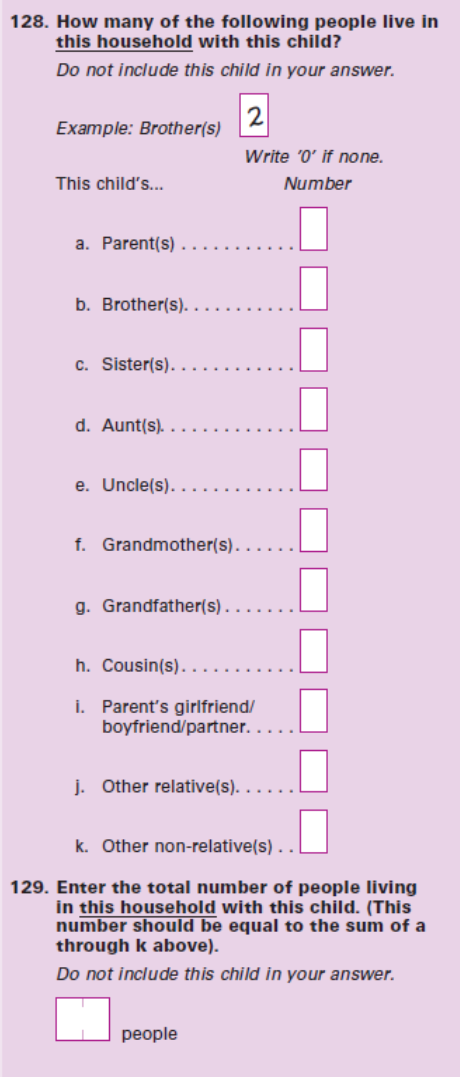

Household Counts

2012 Question | 2014 Feasibility Study Question

2012 Issue: After adjusting for the sampled child and parent(s) reported, it was found that in 2012, 14% of respondents reported a total that did not equal the sum of the roster counts.

Testing: In the 2014 NHES-FS, the item was revised so that the roster count was presented first (with a new category for “parents”). The household total question was second, with explicit instructions that the total should equal the sum of the previous roster enumeration.

Results: Analysis revealed that the 2014 version was less accurate than the 2012 version (57% of respondents reported a total that did not equal the sum of the roster counts). Further analysis demonstrated that the two main sources of error were respondents not including the child in the total and respondents not including the parents in the roster counts but including them in the total.

We will test use of different emphasis, different order of response options, and different placement, using the 2014 version.

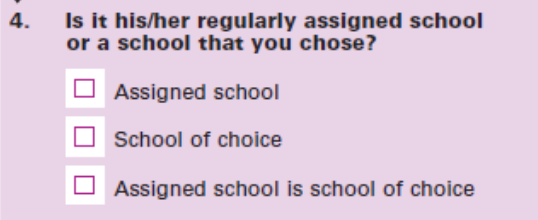

Regularly Assigned School

2012 Question - Proposed for future NHES | 2014 Feasibility Study Question

2012 Issue: The results showed that school choice had increased about 10 percentage points over previous years, which raised suspicions about how this item was being interpreted. A comparison between the 2012 PFI and school administrative data (CCD and PSS) revealed significant inconsistencies; while about 77% of parents reported that their child attended regular public schools, this value was 84% based on CCD/PSS data. Of the parents who reported “public school of choice,” 69% had children in schools categorized as regular public schools by the CCD/PSS data. This suggests that significantly more parents may be reporting that their child does not attend their regularly assigned school (that is, attends a school of choice) than is actually the case.

Testing: For the 2014 NHES-FS, a split panel was used to test this item. Half of the respondents received the 2012 version and half received a revised version with three response categories: “Assigned school”, “School of choice”, and “Assigned school is school of choice.” NCES and AIR believed that this third category would enable parents to demonstrate an active role in choosing their child’s school, even if it was assigned.

Results: When “Assigned school” and “Assigned school is school of choice” were combined, the percentage of students attending assigned schools still dropped significantly (from 87% in 2012 to about 79% in 2014). Additionally, school choice increased from about 12% in 2012 to 20% in 2014.

We will retest current wording and alternative wording.

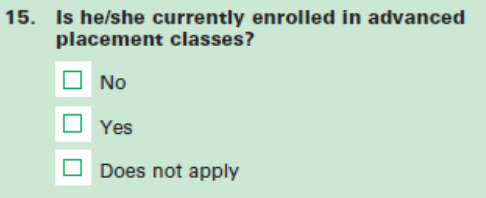

Advanced Placement

2012 Question

2012 Issue: In 2012, parents of children in elementary and middle school were selecting “yes” for this item. Since AP is a college-level academic program offered in high school, it was evident that respondents were misunderstanding this item. It is likely that they were unfamiliar with the program and were reporting about their children’s enrollment in gifted, accelerated, or honors programs.

We will test new wording.

Design

Cognitive interviews are intensive, one-on-one interviews in which the respondent is asked to “think aloud” as he or she answers survey questions, or to answer a series of questions about the items they just answered. Techniques include asking probing questions, as necessary, clarifying points that are not evident from the think-aloud comments, and responding to scenarios. The following probes will be used:

to verify respondents’ interpretation of the question (e.g., asking for specific examples of activities in which the respondent reports participating);

about respondents’ understanding of the meaning of specific terms or phrases used in the questions; and

to identify experiences or concepts that the respondent did not think were covered by the questions but we consider relevant.

Interviews are expected to last about 1 hour and will be conducted by trained cognitive interviewers. This submission includes the protocols that will be used to conduct the interviews and the questionnaires to be tested. The research will be iterative, in that question wording and format design may change during the testing period in response to early findings.

To adequately test the surveys, it is necessary to distribute the cognitive interviews across respondents who represent the primary differences in experience of the target population and, correspondingly, to raise the total number of participants to obtain sufficient numbers of respondents with similar characteristics.

We propose to conduct 60 interviews with parents or guardians of students in grades K-12, including at least:

15 parents or guardians of a child enrolled in public school and 7 in private school;

12 parents or guardians of a child enrolled in grades K-5 and 7 enrolled in grades 6-12;

12 parents or guardians of a child who is in a joint custody arrangement;

12 parents or guardians for whom English is their second language but who can read English fluently;

6 parents or guardians of a child who is homeschooled;

9 parents or guardians who also have a child or children ages 0 to 5 and not yet in kindergarten and also have a care arrangement for the child;

6 parents or guardians of a child who receives instruction from school over the internet; and

10 parents or guardians with a high school completion or less education.

Note: The sum of the categories listed is greater than the total because several respondents are expected to fall into multiple categories.
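The note above can be checked with a short tally. The following is a minimal sketch, not part of the study design: the category labels are shorthand introduced here for illustration, and the minimum counts are taken from the list above.

```python
# Minimum number of interviews per recruitment category, as listed above.
# The dictionary keys are illustrative shorthand, not official category names.
minimums = {
    "public school": 15,
    "private school": 7,
    "grades K-5": 12,
    "grades 6-12": 7,
    "joint custody": 12,
    "English as a second language": 12,
    "homeschooled": 6,
    "child ages 0-5 with care arrangement": 9,
    "instruction over the internet": 6,
    "high school completion or less": 10,
}

PLANNED_INTERVIEWS = 60

# Sum of the category minimums.
category_total = sum(minimums.values())

# Category slots that must be filled by respondents who already count
# toward another category, if all minimums are met with 60 interviews.
overlap_needed = category_total - PLANNED_INTERVIEWS

print(f"Sum of category minimums: {category_total}")
print(f"Planned interviews: {PLANNED_INTERVIEWS}")
print(f"Category memberships beyond one per respondent: {overlap_needed}")
```

Under these figures, the category minimums exceed the number of planned interviews, so the quotas can only be met if some respondents count toward more than one category, which is what the note anticipates.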

Revisions to the questionnaire will be made as needed on an ongoing basis, depending on the results. Typically, we would expect to conduct at least 3 interviews prior to making a change to question wording or presentation. Interviews will be audio-recorded.

Recruiting and Paying Respondents

To ensure that participants from all desired populations agree to take part in the cognitive interviews, and to thank them for their time and for completing the interview, each will be offered $40.

Participants will be recruited by AIR and its subcontractor, Nichols Research, using multiple sources, including company databases, social media, and personal contacts. An example recruitment e-mail is included at the end of this document. People who have participated in cognitive studies or focus groups in the past 6 months and employees of the firms conducting the research will be excluded from participating. The questions used to screen respondents for participation are included in the submission. We anticipate it will take 3 minutes per screening interview. Interviews will take place in the AIR offices in the DC-Metro area (estimated 25 interviews) and in Waltham, MA (10 interviews), and in the San Mateo, CA area, either in the AIR offices or in quiet, public places such as a library or community center (25 interviews).

Assurance of Confidentiality

Participation is voluntary and respondents will read a confidentiality statement and sign a consent form before interviews are conducted. This statement is as follows: “The American Institutes for Research is carrying out this study for the National Center for Education Statistics (NCES) of the U.S. Department of Education. This study is authorized by law under the Education Sciences Reform Act (ESRA, 20 U.S.C. §9543). Your participation is voluntary. Your responses are protected from disclosure by federal statute (20 U.S.C. §9573). All responses that relate to or describe identifiable characteristics of individuals may be used only for statistical purposes and may not be disclosed, or used, in identifiable form for any other purpose, unless otherwise compelled by law.”

No personally identifiable information will be maintained after the cognitive interview analyses are completed. Primary interview data will be destroyed on or before December 31, 2015. Data recordings will be stored on AIR’s secure data servers.

Estimate of Hour Burden

We expect the cognitive interviews to be approximately one hour in length. Screening potential participants will require 3 minutes per screening. We anticipate it will require 12 screening interviews per eligible participant (thus an estimated 720 screenings to yield 60 participants). This will result in 36 hours of burden for the screener, and an estimated total of 96 hours of respondent burden for this study.

Table 1. Estimated response burden for PFI and ECPP cognitive interviews

| Respondents | Number of Respondents | Number of Responses | Burden Hours per Respondent | Total Burden Hours |
| --- | --- | --- | --- | --- |
| Recruitment Screener | 720 | 720 | 0.05 | 36 |
| Cognitive Interviews | 60 | 60 | 1.0 | 60 |
| Total | 720 | 780 | - | 96 |
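The burden figures in Table 1 follow from the assumptions stated in the text: 12 screenings per eligible participant, 3 minutes per screening, and one hour per interview. The sketch below reproduces that arithmetic; the variable and function names are introduced here for illustration only.

```python
from fractions import Fraction

# Assumptions stated in the burden estimate above.
PARTICIPANTS = 60                 # completed cognitive interviews
SCREENINGS_PER_PARTICIPANT = 12   # screenings needed per eligible participant
SCREENER_MINUTES = 3              # minutes per screening
INTERVIEW_MINUTES = 60            # minutes per cognitive interview


def burden_hours(responses: int, minutes_each: int) -> Fraction:
    """Total burden in hours for a number of responses of equal length."""
    return Fraction(responses * minutes_each, 60)


screenings = PARTICIPANTS * SCREENINGS_PER_PARTICIPANT
screener_hours = burden_hours(screenings, SCREENER_MINUTES)
interview_hours = burden_hours(PARTICIPANTS, INTERVIEW_MINUTES)

total_responses = screenings + PARTICIPANTS
total_hours = screener_hours + interview_hours

print(f"Screenings: {screenings}, burden: {screener_hours} hours")
print(f"Interviews: {PARTICIPANTS}, burden: {interview_hours} hours")
print(f"Total responses: {total_responses}, total burden: {total_hours} hours")
```

The total number of respondents in Table 1 is 720 rather than 780, presumably because the 60 interview participants are drawn from the people who were screened, while each screening and each interview counts as a separate response.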

Estimate of Cost Burden

There is no direct cost to respondents.

Project Schedule

The project schedule calls for recruitment to begin as soon as OMB approval is received in December 2014. Interviewing is expected to be completed within 3 months of OMB approval. After the interviews are completed, data collection instruments will be revised.

Cost to the Federal Government

The cost to the federal government for testing the PFI and ECPP questionnaires is $52,096. Reporting on the results will cost $35,359. Total cost to the federal government for this cognitive laboratory study is $87,455.
