CNCS Alumni Outcomes Survey OMB Part B 4-17-15_clean

Alumni Outcomes Survey

OMB: 3045-0171

SUPPORTING STATEMENT FOR

AMERICORPS ALUMNI OUTCOMES SURVEY

B. Collection of Information Employing Statistical Methods

B1. Respondent Universe and Sampling Methods

Sampling Universe and Stratification

The universe from which the sample will be drawn is all full-time, half-time, and reduced half-time former AmeriCorps members from ACSN, NCCC and VISTA whose most recent service experience ended in 2012, 2009, or 2004. These time frames represent those members whose service was completed two, five, and 10 years prior to the beginning of the study. The universe excludes quarter-time, minimum-time, and Education Award-only members, as it is anticipated that their limited service experiences and exposure to member development activities would not warrant the expenditure of their time in completing the survey.

The sampling unit is the former AmeriCorps member. The sampling frame consists of a CNCS administrative data system that contains records of all members completing service in each of the three streams of service. The AmeriCorps database contains 91,225 full-time, half-time, and reduced half-time ACSN, NCCC, and VISTA members for 2012, 2009, and 2004. The administrative data system represents 99 percent or more of all former AmeriCorps members.

The sampling design has nine strata, a three-by-three design consisting of three streams of service and three time periods: ACSN, NCCC, and VISTA members completing their last term of service in 2012, 2009, and 2004. The strata will be referred to as service stream cohorts. Sampling will be conducted independently for each service stream cohort. Table B1 contains the size of each stratum. The design calls for drawing separate equal probability samples from each service stream cohort stratum.

Table B1: Sampling Universe for AmeriCorps Alumni Assessment

| Cohort | AmeriCorps | NCCC | VISTA | Total |
|---|---|---|---|---|
| Service ended 2 years prior (2012) | 24,731 | 1,395 | 7,893 | 34,019 |
| Service ended 5 years prior (2009) | 29,740 | 872 | 7,711 | 38,323 |
| Service ended 10 years prior (2004) | 12,729 | 1,188 | 4,966 | 18,883 |
| Total | 67,200 | 3,455 | 20,570 | 91,225 |

Sample Size

The target realized sample size is 3,465 completed surveys, as shown in Table B2 below. This is the sum of the samples in the nine strata; the proposed sample size for each stratum is 385. The primary measures of interest are mostly proportions, that is, the percent of alumni in each service stream cohort showing a specific outcome, and their sampling variability can be calculated using the binomial distribution. The stratum sample size is the size needed to achieve a 95 percent confidence interval of plus or minus five percentage points for an event occurring 50 percent of the time in an equal probability sample. [1] This confidence interval was chosen so that hypothesis tests could be done not only with the full sample, but also within each service stream cohort, across time periods, and across streams of service.

It is anticipated that the aggregate numbers within each cohort and stream, and within the sample as a whole, will yield tighter confidence intervals of three percentage points for the cohorts and streams, and two percentage points for the sample as a whole.
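The sample size arithmetic above can be checked with a short calculation. The following is a minimal sketch, assuming the usual normal approximation for a proportion at the 95 percent level (z = 1.96) and ignoring any finite population correction; the function names are illustrative and are not part of the study's tooling.

```python
import math

Z_95 = 1.96  # two-sided 95 percent critical value of the normal distribution


def required_sample_size(margin, p=0.5, z=Z_95):
    """Smallest n whose normal-approximation margin of error is <= margin."""
    return math.ceil(z ** 2 * p * (1 - p) / margin ** 2)


def margin_of_error(n, p=0.5, z=Z_95):
    """Half-width of the normal-approximation interval for a proportion."""
    return z * math.sqrt(p * (1 - p) / n)


per_stratum = required_sample_size(0.05)                    # 385
print(per_stratum)
print(round(100 * margin_of_error(3 * per_stratum), 1))     # one cohort or stream: 2.9
print(round(100 * margin_of_error(9 * per_stratum), 1))     # full sample: 1.7
```

Under these assumptions, the three-point and two-point figures quoted for the aggregated groups correspond to rounding 2.9 and 1.7 percentage points.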

Table B2: Sample Size for Anticipated OMB Burden Estimation by Membership Group

| Cohort | AmeriCorps | NCCC | VISTA | Total |
|---|---|---|---|---|
| Service ended 2 years prior (2012) | 385 | 385 | 385 | 1,155 |
| Service ended 5 years prior (2009) | 385 | 385 | 385 | 1,155 |
| Service ended 10 years prior (2004) | 385 | 385 | 385 | 1,155 |
| Total | 1,155 | 1,155 | 1,155 | 3,465 |

Although we are aware that an 80 percent response rate is desired, past surveys administered using the Dillman (2000) approach suggest that the response rate is likely to be closer to 50 to 60 percent and that response rates will differ by cohort year, as members from earlier cohorts may be less likely to respond or more difficult to contact (Monroe and Adams, 2012). In prior studies of AmeriCorps alumni, the response rate from survey inception to the final round of surveying was 58 percent (Corporation for National and Community Service, 2008a). For a study of AmeriCorps VISTA alumni, the response rate was 38 percent. However, both of these studies used phone surveys. In order to minimize nonresponse and respondent contacts, we will use a multimodal survey that employs online, phone, and mail follow-up in sequence and pulls only as many sample members as are needed to attain the target of 385 completed surveys within each cell. We will start with an initial sample size based on an 80 percent response rate (482 per service stream cohort, that is, 385 divided by 0.80 and rounded up), and will pull additional sample once the additional 97 individuals included to account for the target 80 percent response rate are exhausted (i.e., through online, phone, and mail follow-up). Non-respondents beyond the oversample will be replaced by the next available names on the list as soon as they are identified as non-contactable or when they refuse.

Sampling Method

This study will employ stratified random sampling with equal probability of selection within each stratum. Given the amount of time between program participation and this survey, response rates are uncertain and are anticipated to vary between strata. In order to minimize the actual number of individuals sampled and contacted, the study will use a randomly sorted list within each stratum and will work down the list until the desired sample size in each cell is achieved. Specifically, the survey will start with the first 482 names on the list. Once 20 percent of these names have been determined to be unreachable (i.e., they have received three email reminders or their email bounced, they have received three phone calls or their best identified phone number is disconnected, and we have mailed them a hard copy survey), the next name on the list will be contacted. This process will continue until the target sample size is achieved. The strength of this approach is that it achieves adequate statistical power, minimizes the number of individuals contacted, and avoids the complicated sampling probabilities that can occur if response rates are too low to attain power with a single sample and a second sample is therefore required. This approach will also allow us to respond to the anticipated uneven and unknown response rates across strata.

From the list of all ACSN, NCCC, and VISTA members (N=91,225), nine lists (see Table B1) will be generated containing the full set of members falling into each of the nine sampling strata (e.g., ACSN members whose service ended in 2012, NCCC members whose service ended in 2009).

The nine lists will each be randomly sorted. Randomly sorting each list and then pulling participants in that random order as replacement sample is needed will allow for a fixed, single probability of selection within each stratum to be calculated for any group member once the final response rate for that group is determined. For practical purposes, we will start with inviting the first 482 people (385 target sample, with an 80 percent response rate) on each list to participate in the survey.

After the response rate falls below 80 percent, additional individuals will be contacted according to their random order on the list until the target number of 385 completed surveys in that cell is attained.
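The list-based procedure described above can be sketched in a few lines. This is a simplified illustration only: it releases names one at a time rather than in an initial batch of 482, and the `responds` callback is a hypothetical stand-in for the outcome of the full email, phone, and mail contact sequence. The function names and the seed are assumptions made for the example.

```python
import math
import random


def initial_batch_size(target_completes, assumed_response_rate):
    """Names to release first, e.g. 385 / 0.80 rounded up."""
    return math.ceil(target_completes / assumed_response_rate)


def field_stratum(member_ids, target_completes, responds, seed=2015):
    """Walk a randomly sorted stratum list until the target is met.

    `responds` is a hypothetical callback returning True when a member
    completes the survey. Returns (names released, completed member ids).
    """
    order = list(member_ids)
    random.Random(seed).shuffle(order)  # one fixed random order per stratum
    completes = []
    released = 0
    for member in order:
        if len(completes) >= target_completes:
            break
        released += 1
        if responds(member):
            completes.append(member)
    return released, completes


# Illustration: the NCCC 2009 stratum size from Table B1 (872) with a
# made-up response pattern in which even-numbered ids respond.
released, completes = field_stratum(range(872), 385, lambda m: m % 2 == 0)
selection_probability = released / 872  # single probability for the stratum
print(initial_batch_size(385, 0.80), released, len(completes))
```

Because every member keeps the position assigned by the one random sort, the number of names released divided by the stratum size gives a single selection probability for the stratum, however far down the list fielding has to go.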

B2. Information Collection Procedures/Limitations of the Study

To support this effort, CNCS will employ a data collection approach to maximize efficiency and response rates, while accurately answering the research questions. The primary data collection effort is an online survey administered via an email containing a link to a web address. If the respondent prefers, a survey with a self-addressed, stamped envelope will be sent to him or her, to be returned by mail. Members who cannot be reached by email will be contacted by telephone and may choose to complete the survey over the telephone with a project evaluator, or request a mailed paper copy.

Prior to survey administration, CNCS will develop and administer email announcements to raise awareness of the survey and its perceived legitimacy among potential respondents (see Attachment B-1 for a draft of the introductory email). CNCS will also work to identify options to increase the visibility and appeal of the survey, including via social media. The study will use up to three phased email reminders at four, eight, and 12 days following the survey rollout.

Data collection procedures for the online survey will follow the Tailored Design Method (TDM; Dillman, 2000) approach to data collection (see Section B3). The specific steps of the data collection procedure are organized around a series of contacts with the target audience. Once contact is made with a member of a target audience, the success of that contact is monitored (i.e., tracked) and steps for future contact are planned.

For surveys administered through the online data collection software (e.g., SurveyMonkey), responses are anonymous and will be downloaded into a database without identifying information. Response status will be tracked for each selected respondent separately from actual responses. For surveys that will be conducted over the telephone when there is no response to the online survey, data collection staff will work from a list of non-respondents’ names and phone numbers, but will only use a unique identifying number on top of the survey. This number will be used to differentiate among survey responses in the response database, not to identify any respondents. For mailed surveys, each person’s identifying number will be entered in the database used to generate mailing labels and track responses; the number will also be entered on each individual’s questionnaire so the data collection staff can identify respondents. These procedures will allow the staff to follow up with individuals who have not responded and to increase the response rates.

Upon completion of the study, the contractor will destroy the database with individuals’ names, phone numbers, addresses, and identification numbers (see Section A10). As discussed in Section A10, the purpose of this unique identifying number is to track responses to determine if additional follow-up is needed to achieve the desired response rate.

Initial Request to Complete Survey

For any of the first 482 names on each list without an email address, a LexisNexis search will be run for a current physical and/or mailing address, which will be used to identify phone numbers and/or email addresses through online sources including reverse look-up directories and/or social media searches. The email invitation will explain the purpose of the survey, encourage a response as soon as possible, assure anonymity to the respondent, and offer the contractor’s "800" number in case any questions arise (see Attachment B-2 for a draft of initial email text). For the first round of sampling and recruitment, the first 482 people on each list will be sent an email invitation to participate in the survey, with follow-up emails sent four, eight, and 12 days after the initial recruitment email (see Attachment B-3 for a draft of the follow-up email text). Each email will contain a link to the online survey. Members with no email address from the initial batch will be contacted by phone, if a valid phone number is available, or by postal mail if not (see Attachment B-4 for a draft of the telephone outreach script and Attachment B-5 for the draft of the postal mail outreach letter). Members with no contact information available whatsoever, after LexisNexis and online search attempts, will be replaced with the next randomly ordered name on the list using the follow-up procedures identified below.

Follow-up Procedures

If an email bounce notification is received, attempts will be made to locate the member through LexisNexis, other online contact information, by phone, or using social media. Beginning on day 14 following the initial email, evaluators will make a follow-up phone call to each participant on the recruitment list yet to respond. If reached by phone, the individual will be provided with an option of completing the survey over the phone at that moment, receiving a paper survey by postal mail, receiving an email containing a link to the online survey, or being called back at a later time to take the survey by phone. If no phone number is available, the participant will receive a hard-copy survey by postal mail.

Once 20 percent of the initial sample is determined to be non-contactable for each stratum, additional names from the sampling list will be pulled. The exact number of new names to be pulled will be based on the response rate attained to date for the first round. The follow-up procedures noted above will then be applied to the new sample.

Weights

Within each stratum, the sample members have equal probabilities of selection, and therefore weights are not needed to analyze the results. However, in order to combine samples across sampling stream cohorts, weights must be used to account for: a) the differing probabilities of selection in the various strata due to differences in size among strata, and b) differing response rates across strata.

The sampling weight, $\mathrm{Wts}_i$, in each of the nine strata is the inverse of the sampling probability, $p_i$. The sampling probability for respondents in stratum $i$ is calculated as the sample size, $n_i$, divided by the population size, $N_i$.

$p_i = n_i / N_i$, where $i = 1$ to $9$

$\mathrm{Wts}_i = 1 / p_i$, where $i = 1$ to $9$

When using a randomly sorted list, the final sample size is the order number on the list of the last person contacted and interviewed. This definition assumes that the procedures above are followed in moving down a list where individuals have been assigned a random number and the list is then sorted by that random number from lowest to highest. The result is that each individual on the randomly sorted list can be assigned an order number, where order number 1 is assigned to the person with the smallest random number and the highest list number is assigned to the person with the highest random number. The highest order number should equal the population of the stratum.

For example, if individuals ordered one to 500 on the list were contacted to obtain 385 survey responses, the final sample size is 500. If this sample were drawn in a stratum with a size of 10,000 then the probability of selection would be 500/10,000 or five percent. The sampling weights would be the inverse of the sampling probability or 1/0.05, which equals 20.

The nonresponse weight, $\mathrm{Wtr}_i$, in each stratum $i$ is the inverse of the response rate, $r_i$. Since the response rate is calculated as the number of respondents, $m_i$, divided by the sample size, $n_i$, the nonresponse weight is the sample size divided by the number of respondents.

$r_i = m_i / n_i$, where $i = 1$ to $9$

$\mathrm{Wtr}_i = 1 / r_i = n_i / m_i$, where $i = 1$ to $9$

To correctly weight the sample, each survey response would be assigned a composite weight, $\mathrm{Wt}_i$, that is the product of the sampling weight and the nonresponse weight.

$\mathrm{Wt}_i = \mathrm{Wts}_i \times \mathrm{Wtr}_i$, where $i = 1$ to $9$
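As an illustration of the weighting steps, the sketch below reuses the worked example from the text (500 names contacted in a stratum of 10,000 to obtain 385 completed surveys). The function name and argument names are assumptions made for the example.

```python
def stratum_weights(population_size, names_contacted, respondents):
    """Return (sampling weight, nonresponse weight, composite weight)."""
    p = names_contacted / population_size   # sampling probability
    sampling_weight = 1 / p                 # inverse of the sampling probability
    r = respondents / names_contacted       # response rate
    nonresponse_weight = 1 / r              # inverse of the response rate
    return sampling_weight, nonresponse_weight, sampling_weight * nonresponse_weight


sampling_w, nonresponse_w, composite_w = stratum_weights(10_000, 500, 385)
print(round(sampling_w, 3), round(nonresponse_w, 3), round(composite_w, 3))
```

Note that the composite weight reduces to the stratum population divided by the number of respondents (here 10,000 / 385, roughly 26), so each completed survey represents about 26 members of the stratum.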

Estimation Procedure

Within each stratum, the point estimates (means and percentages) and their standard errors can be calculated in a straightforward manner using either the normal distribution or the binomial distribution.

When analyzing data involving multiple strata (for example, using the whole sample, multiple streams, or multiple cohorts), there are multiple approaches, including using the sampling and nonresponse weights to remedy any differences in selection probabilities, that result in unbiased estimators. Most statistical packages offer options for calculating design corrected standard errors. For example, SAS offers a series of procedures for calculating design adjusted standard errors for frequencies and means. The following is an excerpt from the SAS documentation for PROC SURVEYMEANS. (SAS Institute, Inc., 2010)

The SURVEYMEANS procedure produces estimates of survey population means from sample survey data. The procedure also produces variance estimates, confidence limits, and other descriptive statistics. When computing these estimates, the procedure takes into account the sample design used to select the survey sample. The sample design can be a complex survey sample design with stratification, clustering, and unequal weighting.

PROC SURVEYMEANS uses the Taylor expansion method to estimate sampling errors of estimators based on complex sample designs. This method obtains a linear approximation for the estimator and then uses the variance estimate for this approximation to estimate the variance of the estimate itself (Woodruff, 1971; Fuller, 1975).

SAS (e.g., PROC SURVEYMEANS) allows the user to specify the details of the first two stages of a complex sampling plan. For the AmeriCorps Alumni Outcomes survey, the stratification levels are specified in PROC SURVEYMEANS (strata year and service stream). At the lower levels of the sampling scheme, the design attempts to mimic, as closely as is practical, simple random sampling. The software is not able to calculate exact standard errors, since it presumes true simple random sampling beyond the first two levels. The sampling weights will remedy any differences in selection probabilities, so that the estimators will be unbiased. The standard errors, however, are only approximate; the within-cluster variances at stages beyond the first two are assumed to be negligible.

In the SURVEYMEANS procedure, the STRATA and WEIGHT statements are used to specify the variables containing the stratum identifiers and the individual weights. Because the design has no clustering, no CLUSTER statement is needed.

For the AmeriCorps Alumni Outcomes survey, the STRATA are defined as the stream and year combinations used for the first level of sampling (i.e., the service stream cohorts).

The WEIGHT statement references a variable that is for each observation, i, the product of both the sampling weight and the nonresponse weight.

The SURVEYMEANS procedure also allows for a finite population correction. This correction is requested using the TOTAL= option on the PROC SURVEYMEANS statement, which supplies the total number of units in each stratum. SAS then determines the number of units selected per stratum from the data and calculates the sampling rate. In cases where the sampling rate is different for each stratum, the TOTAL= option references a data set that contains information on all the strata (the stratification variables) and a variable _TOTAL_ that contains the total number of units in each stratum.

The following is the sample code for PROC SURVEYMEANS to calculate the standard errors for a key estimator, volunteering:

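    /* TOTAL= names the data set of stratum population totals (_TOTAL_) */
    proc surveymeans data=alumnisurvey total=alumnistrat;
        strata year stream;   /* the nine service stream cohorts */
        var volunteering;     /* key estimator */
        weight acweight;      /* sampling weight times nonresponse weight */
    run;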
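For readers without SAS, the kind of calculation involved can be sketched by hand. The following is a minimal illustration using the textbook estimator for a proportion under stratified simple random sampling with a finite population correction. It assumes respondents behave like a simple random sample within each stratum and is not intended to reproduce PROC SURVEYMEANS output exactly. The stratum sizes come from Table B1; the proportions are hypothetical.

```python
import math


def stratified_proportion(strata):
    """Estimate a population proportion and its standard error.

    `strata` is a list of (N_h, n_h, p_h) tuples: stratum population size,
    number of respondents, and the observed proportion in the stratum.
    """
    total = sum(N for N, _, _ in strata)
    estimate = sum(N / total * p for N, _, p in strata)
    variance = sum(
        (N / total) ** 2      # squared population share of the stratum
        * (1 - n / N)         # finite population correction
        * p * (1 - p) / (n - 1)
        for N, n, p in strata
    )
    return estimate, math.sqrt(variance)


# 2012 cohort sizes from Table B1 with made-up volunteering proportions.
cohort_2012 = [(24_731, 385, 0.60), (1_395, 385, 0.70), (7_893, 385, 0.65)]
estimate, std_error = stratified_proportion(cohort_2012)
print(round(estimate, 3), round(std_error, 3))
```

The larger strata dominate both the estimate and its standard error, which is why combined estimates need the weights even though each stratum contributes the same number of completed surveys.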

Degree of Accuracy Needed for the Purpose Described in the Justification

The degree of accuracy needed is for point estimates in each stratum to have a 95 percent confidence interval no larger than plus or minus five percentage points.

Minimum Detectable Differences Between Groups

At the sample size of 385 per subgroup (e.g., service stream/age cohort combination), which is the smallest subgroup comparison we plan, the minimum detectable difference between groups will be ten percentage points for a target proportion of 50% at the 95% confidence level. For the comparisons between age cohorts or between service streams, it is anticipated that there will be a sample size of 1,155 per group, yielding a minimum detectable difference of 5.8 percentage points for a target proportion of 50% at the 95% confidence level. For target proportions that are lower or higher than 50%, the detectable differences will be smaller.

Table B3: Minimum Detectable Differences in Percentage Points for Comparison Sample Sizes at the 95% Confidence Interval [1]

| Target Proportion | Service Stream/Cohort (Research Question 1, N=385 per group) | Focus Areas (Research Question 2, N=577) | Life Stage (Research Question 3, N=1,155) |
|---|---|---|---|
| 10% | 6 | 4.9 | 3.5 |
| 30% | 9.2 | 7.5 | 5.3 |
| 50% | 10 | 8.2 | 5.8 |
| 70% | 9.2 | 7.5 | 5.3 |
| 90% | 6 | 4.9 | 3.5 |

[1] Calculated using the Survey System Sample Size Calculator, accessed at http://www.surveysystem.com/sscalc.htm
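The figures in Table B3 appear consistent with taking the minimum detectable difference as twice the 95 percent margin of error for a single group, so that the two groups' confidence intervals do not overlap. That reading is an inference from the numbers, not a documented description of the calculator's method. A minimal sketch under that assumption:

```python
import math


def min_detectable_difference(p, n, z=1.96):
    """Twice the normal-approximation margin of error, in percentage points."""
    return 2 * z * math.sqrt(p * (1 - p) / n) * 100


for p in (0.1, 0.3, 0.5, 0.7, 0.9):
    row = [round(min_detectable_difference(p, n), 1) for n in (385, 577, 1155)]
    print(f"{p:.0%}", row)
```

Because p(1 - p) peaks at 50 percent, the detectable difference is largest there and shrinks symmetrically toward 10 and 90 percent.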

Unusual Problems Requiring Specialized Sampling Procedures

None.

Use of Periodic (Less Frequent than Annual) Data Collection Cycles

The frequency of data collection is at a minimum as the data is being collected once.

B3. Methods for Maximizing the Response Rate and Addressing Issues of Nonresponse

Maximizing the response rate is a key priority for this study. The researchers will employ a variety of strategies to maximize the response rate and to address issues of nonresponse.

Maximizing the Response Rate

The specific strategies that will be used to maximize the response rate and ensure the target sample size is met include:

Oversampling;

Targeted communications via the survey introduction, cover letter, telephone script, and email message; and

Using multiple reminders and multiple modes to contact and follow up with respondents.

Oversampling

Oversampling of former AmeriCorps members will be used to ensure the target sample size is met. As described earlier, a randomly sorted list will be used in each stratum so that the fielded sample size can adjust to the uncertain response rates that are expected to vary by stratum.

Targeted Communication

The assessment introduction, cover letters, and telephone scripts discussed in Section B2 are designed to encourage participation and reduce nonresponse.

Using Multiple Contacts and Multiple Modes

A multimodal survey will be used to improve the response rate. The Alumni Outcomes survey will be distributed to respondents via email, telephone, and/or mail. The survey will first be distributed via email generated through the online survey data collection software (e.g., SurveyMonkey), including a web-based link, and respondents will be asked to respond electronically. In an effort to increase response rates, participants who do not respond to the survey after four attempts via email (including email bounce notifications) will be contacted by telephone. Finally, if participants cannot be reached by email or telephone, mailed surveys will be distributed. This approach will reduce burden to respondents who choose to use the emailed electronic link to the online survey data collection software by eliminating the time it takes to hand-write responses and mail back a paper survey.

Within four days of survey distribution, reminder emails will be sent to encourage respondents to complete the survey and return their completed responses via the web-based link. Up to two additional follow-up emails will be sent every four days (on Day 8 and Day 12) to any non-respondents, followed by a telephone call two days after the final reminder email, to encourage higher response rates. Finally, for those respondents who do not respond to either email or telephone attempts, as well as those who indicate a preference for the survey to be mailed, a hard copy of the survey will be mailed to them with a return envelope.

All respondents will be informed of the significance of the survey to encourage their participation. In addition, processes to increase the efficiency of the survey (e.g., ensuring that the survey is as short as possible through pilot testing, using branching to ensure that respondents read and respond to only questions that apply to them) and the assurance of confidentiality will encourage survey completion.

Addressing Issues of Nonresponse

Nonresponse Rate Calculation

We will calculate the overall response rate for each of the nine strata, for the cohort year, for the program stream, and for the survey as a whole, as an indicator of potential nonresponse bias. We will use OMB’s standard formulas to measure the proportion of the eligible sample in each stratum that is represented by the responding units. Because each stratum has an equal probability sample, an unweighted unit response rate will be calculated for the stratum. Weighted and unweighted response rates will be calculated for the cohort year, service stream, and overall sample.

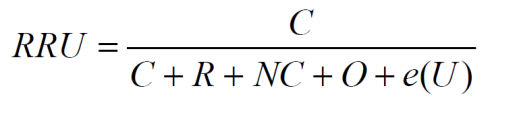

The unweighted response rate will be the ratio of completed cases or sufficient partials to the number of in-scope sample cases. In-scope sample cases comprise the sum of: 1) completed cases or sufficient partials (identified as “C” in the Nonresponse Rate Formula below); 2) refused cases (“R” below); 3) eligible cases that were not reached (“NC” below); and 4) eligible cases not responding for reasons other than refusal (“O” below). The final category of nonresponse specified by the OMB standard formula (“U” below), an estimate of the number of cases whose eligibility was not determined because they were not contacted but that may have been eligible for the study and ultimately not completed, does not apply to this study as all individuals in the sampling universe are anticipated to be eligible.

Nonresponse Rate Formula (unweighted unit response rate, RRU):

$$\mathrm{RRU} = \frac{C}{C + R + NC + O + e(U)}$$

Where:

C = number of completed cases or sufficient partials;

R = number of refused cases;

NC = number of noncontacted sample units known to be eligible;

O = number of eligible sample units not responding for reasons other than refusal;

U = number of sample units of unknown eligibility, not completed; and

e = estimated proportion of sample units of unknown eligibility that are eligible.

Response rates will be calculated separately for each sampling stratum (service stream and cohort), as well as for the total sample.

The weighted response rate will be the weighted sum of the stratum response rates, where the weight of a stratum equals the stratum’s proportion of the total population. For example, if there were two strata, with one-third of the population in stratum A and the remainder in stratum B, then the weighted response rate would be one-third of the response rate of stratum A plus two-thirds of the response rate of stratum B.
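The response rate calculations can be sketched as follows, assuming the OMB standard unweighted unit response rate takes the form C / (C + R + NC + O + e·U) with the terms defined above. The disposition counts in the example are hypothetical.

```python
def unweighted_response_rate(c, r, nc, o, u=0, e=0.0):
    """C / (C + R + NC + O + e*U); U is expected to be zero in this study."""
    return c / (c + r + nc + o + e * u)


def weighted_response_rate(rates_and_shares):
    """Sum of stratum response rates weighted by stratum population share."""
    return sum(share * rate for rate, share in rates_and_shares)


# Hypothetical stratum: 385 completes, 60 refusals, 30 noncontacts, 7 other.
stratum_rate = unweighted_response_rate(c=385, r=60, nc=30, o=7)

# Two-stratum example from the text: one-third and two-thirds of the
# population, with made-up response rates of 60 and 90 percent.
overall_rate = weighted_response_rate([(0.60, 1 / 3), (0.90, 2 / 3)])
print(round(stratum_rate, 3), round(overall_rate, 3))
```

In the two-stratum example the weighted rate is one-third of 60 percent plus two-thirds of 90 percent, or 80 percent.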

Nonresponse Bias Analysis

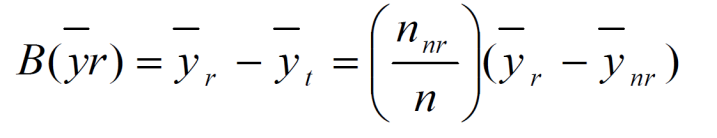

A nonresponse bias analysis will be conducted if the overall response rate is below 80 percent. We will use supplementary data provided by CNCS in the nonresponse bias analysis, including program stream(s) in which the member served, cohort, age, number of terms served, type of terms served (i.e., full-time, half-time, reduced half-time), whether a valid email address was available at the time of surveying (which relates to both contact mode for the survey and the likely extent of connection with AmeriCorps), and state of residence at the time of service (as this is likely connected to the effect of State Commission, State Office, or Campus influence on the member’s service experience). These data are included in the member log files held by CNCS and are available for all members in the sampling universe. For each of these variables available from CNCS records measuring known member characteristics, we will calculate the nonresponse bias using the following formula:

Nonresponse Bias Estimation Formula:

Where:

The nonresponse bias analysis will include a statistical analysis examining whether nonresponse is significantly associated with service history (e.g., service stream(s), number of terms), age, and the respondents’ state of residence at the time of service. We will use a logistic regression analysis, and the models will examine all potential covariates of nonresponse simultaneously. Standard assumptions of the logistic regression model (e.g., absence of multicollinearity among the covariates) will be assessed. We will use this analysis to determine whether non-completed cases and non-sufficient partials are missing at random. The multivariate logistic regression will reduce the number of statistical procedures run on the data, as well as the number of tables included in the report.
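For the variable-by-variable bias estimate (as distinct from the logistic regression), one widely used form, assumed here for illustration, expresses the bias of the respondent mean as the nonrespondent share of the sample multiplied by the difference between the respondent and nonrespondent means. The sketch below applies that form to a frame variable known for every sampled member; the ages are made up.

```python
from statistics import mean


def nonresponse_bias(respondent_values, nonrespondent_values):
    """(n_nr / n) * (respondent mean - nonrespondent mean)."""
    n_nr = len(nonrespondent_values)
    n = len(respondent_values) + n_nr
    return (n_nr / n) * (mean(respondent_values) - mean(nonrespondent_values))


# Hypothetical ages at service for sampled members, from administrative records.
respondent_ages = [24, 26, 30, 28]
nonrespondent_ages = [22, 23, 25, 22, 23, 23]

bias = nonresponse_bias(respondent_ages, nonrespondent_ages)
full_sample_mean = mean(respondent_ages + nonrespondent_ages)
print(round(bias, 2), round(mean(respondent_ages) - full_sample_mean, 2))
```

The two printed values agree because this form is algebraically the same as the respondent mean minus the mean over all sampled cases.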

Since almost all questions on the survey will be required of respondents, item nonresponse will likely be minimal and will result primarily from early termination of the survey. These cases will be classified as non-completed, but included, cases and non-sufficient responses, as noted above. We will assess whether both partial survey completion and answers to survey items differ significantly by survey modality (online without follow-up, online after follow-up, by phone, or by mail). We will use multivariate logistic regression for the analysis of response completion by mode, and logistic, multinomial, and OLS regressions for the analysis of item response. The analysis used will be determined by the nature (i.e., binomial, categorical with more than two groups, continuous) of the survey item in question. The models will be multivariate, controlling for individual demographic characteristics. Estimates of how item response changes based on response mode can also be calculated using the formula above, substituting different response conditions (e.g., online versus other formats) for the ‘response’ and ‘nonresponse’ categories.

B4. Tests of Procedures or Methods

Pilot tests of the biannual survey instrument were conducted with a subsample of the respondent population. An electronic version of the Alumni Outcomes Survey was distributed via email and pilot tested with 14 respondents. Across all respondents, the average time to complete the instrument was 0.33 hours (20 minutes).

Respondents were asked to comment on the clarity of the questions and identify any problems or issues (i.e., content and format of the survey). Participants were also asked to provide feedback on the appropriateness of the questions for the intended purpose. The pilot test results indicated that the survey items made sense to respondents, the response choices provided a sufficient range of options to show variability of response for most items, and respondents were interpreting the items as intended.

In all, 75 participants were selected for the pilot; 14 responded and two emails bounced. Due to the small number of respondents, statistical analysis of items for the pilot test was limited to univariate response frequencies and percentages. The responses showed good variability on almost all items, and no obvious unexpected answer patterns that would indicate a question was not working as intended. One area for possible improvement is that open-ended responses, which were not mandatory, were often skipped, even when a response was requested.

The two respondents who participated in cognitive interviews noted very few difficulties in understanding and responding to survey items. In some cases, they noted opportunities to clarify an item or to modify response options to better accommodate either their own responses or the responses they anticipated other AmeriCorps alumni might provide to specific survey items.

We noted some specific suggestions to improve the survey or respondents’ survey experience stemming from both the online pilot and the two cognitive interviews. The suggestions, and how the survey was altered in response, are provided here:

Allow AmeriCorps State and National alumni to report up to four terms of service. The survey has been amended based on this feedback to allow reporting of up to four terms of service.

Consider whether additional open-ended responses are desirable and whether or not to include additional reminders to complete open-ended responses when possible. The survey has been amended to make completion of open-ended responses required where appropriate.

Allow respondents to select more than one response to the item asking how AmeriCorps service fits with how their career path unfolded. This suggestion was considered, and in response, the survey instructions were modified to ask respondents to select the option that most closely aligned with their experience.

Allow VISTA alumni (and possibly other respondents) to report service hours as hours per week. This suggestion was rejected as potentially confusing to other respondents.

Allow VISTA alumni to indicate whether their service related directly or indirectly to one or more focus areas. This suggestion, which the respondent noted was a reflection of the VISTA emphasis on provision of indirect versus direct service, was rejected as potentially confusing to other respondents.

Finally, only 14 of 75 possible respondents took the survey, even after multiple reminders sent both through SurveyMonkey and through individual emails from the contractor’s staff member accounts. This suggests that further investigation into whether apparently valid email addresses are reaching intended recipients would be useful. In addition, expansion of individual respondent searches or outreach, including LexisNexis searches, earlier-than-planned phone outreach, or other strategies to identify and contact former members, may be needed.

B5. Names and Telephone Numbers of Individuals Consulted (Responsibility for Data Collection and Statistical Aspects)

The following individuals were consulted regarding the statistical methodology: Gabbard, Susan, Ph.D., JBS International, Inc. (Phone: 650-373-4949); Georges, Annie, Ph.D., JBS International, Inc. (Phone: 650-373-4938); Lovegrove, Peter, Ph.D., JBS International, Inc. (Phone: 650-373-4915); and Vicinanza, Nicole, Ph.D., JBS International, Inc. (Phone: 650-373-4952).

JBS International, Inc. will conduct all data collection procedures. JBS International, Inc. is responsible for all the data analysis for the project. The representative of the contractor responsible for overseeing the planned data collection and analysis is:

Nicole Vicinanza, Ph.D.

Senior Research Associate

JBS International, Inc.

555 Airport Blvd., Suite 400

Burlingame, California 94010

Telephone: 650-373-4952

Agency Responsibility

Within the agency, the following individual will have oversight responsibility for all contract activities, including the data analysis:

Diana Epstein, Ph.D.

Senior Research Analyst

Corporation for National and Community Service

1201 New York Ave, NW

Washington, District of Columbia 20525

Telephone: 202-606-7564

Works Cited Part B:

Corporation for National and Community Service, Office of Research and Policy Development, Still Serving: Measuring the Eight-Year Impact of AmeriCorps on Alumni, Washington, D.C., 2008a.

Dillman, D. A. (2000). Mail and Internet surveys: The tailored design method (2nd ed.). New York, NY: John Wiley and Sons.

Fuller, W. A. (1975), Regression Analysis for Sample Survey. Sankhyā, 37, Series C, Pt. 3, 117–132.

Monroe, M. & Adams, D. (2012). Increasing Response Rates to Web-Based Surveys. Tools of the Trade, 50 (6), 6-7.

SAS Institute Inc. 2010. SAS/STAT® 9.22 User’s Guide. Cary, NC: SAS Institute Inc.

Woodruff, R. S. (1971), A Simple Method for Approximating the Variance of a Complicated Estimate, Journal of the American Statistical Association, 66, 411–414.

Attachments

Attachment B-1: Draft Introductory Email Announcement

Dear [First Name] [Last Name]:

The Corporation for National and Community Service (CNCS) is seeking your input to help us better understand your AmeriCorps service experience and how participation in an AmeriCorps program has affected your career pathway and long-term civic engagement. We will be conducting a short survey and would be grateful for your contribution. This survey will help us make VISTA, AmeriCorps State and National programs, and NCCC more effective for our members throughout their terms of service and afterwards. The valuable feedback you provide will ultimately improve the quality of AmeriCorps programs.

Within the next week, Antonio Da Silva, the Survey Manager at JBS International, will email you a link to an online survey. The survey will take about 20 minutes and is easily accessible on a computer with an internet connection or on a mobile device such as a tablet or smart phone. Your responses will be kept confidential. Please add [email protected] and [email protected] to your accepted addresses to help make sure this email is not filtered.

It is very important that you finish the entire survey so that we collect accurate and complete data.

If you have questions about this project, please contact Diana Epstein at [email protected] or 202-606-7564. If you have technical questions about completing the survey, please contact Antonio Da Silva at 800-207-0750.

We sincerely appreciate your time. Thank you in advance for helping to improve our programs and the support AmeriCorps can offer to its members.

Sincerely,

[CNCS representative]

Attachment B-2: Draft Initial Email

Dear [First Name] [Last Name]:

I am contacting you to ask for your help with a survey of the AmeriCorps State and National, VISTA, and NCCC programs. Through the survey, we hope to learn more about our AmeriCorps alumni. We would like to know how you view the AmeriCorps experience and how it has affected you in the years since you served. The information we gather will help the Corporation for National and Community Service (CNCS) to improve the quality of the service experience for all AmeriCorps members and ensure that the programs enable AmeriCorps members to excel, both in service and beyond.

You can help us better understand your service experience and how participation in an AmeriCorps program has affected your career pathway and long-term civic engagement by taking this survey now: [LINK]

Here is some additional information about this survey:

The survey will document the civic participation and career pathways of AmeriCorps alumni.

The survey will inform current and future programming for AmeriCorps State and National, VISTA, and NCCC programs.

CNCS has hired JBS International to administer the survey.

The survey should take about 20 minutes to complete.

The survey is easily accessible on a computer with an internet connection or on a mobile device such as a tablet or smart phone.

Your responses will be kept confidential.

Please complete the survey by no later than [DATE]. Thank you for your help in improving AmeriCorps programs and the support AmeriCorps can offer to its members.

If you have questions about this project, please contact Diana Epstein at [email protected] or 202-606-7564. If you have technical questions about completing the survey, please contact Antonio Da Silva at 800-207-0750.

Thank you.

Sincerely,

Antonio Da Silva

Survey Manager

JBS International, Inc.

800-207-0750

If you wish to opt out of the survey, please follow the link below.

https://www.surveymonkey.com/optout.aspx

Attachment B-3: Draft Follow-up Email

Dear [First Name] [Last Name],

This is a friendly reminder that we are conducting a survey for the AmeriCorps State and National, VISTA, and NCCC programs. Your prompt response to the survey would be greatly appreciated.

Just a few moments of your time would provide us with valuable information. Your input will help make a significant difference in the quality of AmeriCorps programs. Our survey will ultimately benefit current and future AmeriCorps members like you.

To access the survey, please use the following link: [LINK]

Please complete the survey no later than [DATE]. We sincerely thank you for your time.

If you have questions about this project, please contact Diana Epstein at [email protected] or 202-606-7564. If you have technical questions about completing the survey, or to complete the survey by phone, please contact Antonio Da Silva at 800-207-0750.

Sincerely,

Antonio Da Silva

Survey Manager

JBS International, Inc.

800-207-0750

If you wish to opt out of the survey, please follow the link below.

https://www.surveymonkey.com/optout.aspx

Attachment B-4: Draft Phone Survey Script

Instructions to Phone Survey Staff: Dial the telephone number provided in the “Phone” field for the Client and when someone answers, introduce yourself. Be sure to provide your full name and address the respondent with a formal title (for example, “Ms. Harbaugh”).

Here is an example of an introduction:

“(Hello/good morning/good afternoon), is this (client name)?

(Hello/good morning/good afternoon), my name is (your name) and I am calling on behalf of the Corporation for National and Community Service. The purpose of the call is to learn about your AmeriCorps service experience and the effects of your participation in [VISTA, AmeriCorps State and National, or NCCC]. This is a new nationwide survey, developed by AmeriCorps, and your valuable input will help us make AmeriCorps more effective for its members. Your responses will remain confidential.

Would you be willing to take the survey?”

IF “NO,” thank the person for their time and end the call. Then enter “Contact Date” and “Status,” and, if possible, note the reason for refusal under “Notes.”

IF “YES,” say:

“The survey will take about 20 minutes. Do you have some time to answer questions now?”

IF “YES,” conduct the survey.

IF “NO,” ask the person if there is a better time to do the survey.

IF “YES,” update the “Status” field and record a summary of the conversation and the date for the rescheduled interview in the “Notes.”

IF “NO” or if they will not be available by phone within the timeframe of the survey, ask the person if they would prefer to receive the survey by email or by postal mail. Record a summary of the conversation and the preferred delivery method for the survey in the “Notes” and then immediately send the survey to the person by their preferred delivery method.

Attachment B-5: Draft Follow-up Postal Mail Letter

Dear [First Name] [Last Name]:

The Corporation for National and Community Service is seeking your input to help us better understand your AmeriCorps service experience and how participation in an AmeriCorps program has affected your career pathway and long-term civic engagement. We are conducting a short survey and would be grateful for your contribution. This survey will help us make VISTA, AmeriCorps State and National programs, and NCCC more effective for our members throughout their terms of service and afterwards. The valuable feedback you provide will ultimately improve the quality of AmeriCorps programs. The survey will take about 20 minutes and your responses will be kept confidential.

It is very important that you finish the entire survey so that we collect accurate and complete data.

If you have questions about this project, please contact Diana Epstein at [email protected] or 202-606-7564. If you have technical questions about completing the survey, or if you would prefer to take the survey by phone or email, please contact Antonio Da Silva at 800-207-0750.

We sincerely appreciate your time. Thank you in advance for helping to improve our programs and the support AmeriCorps can offer to its members.

Sincerely,

[CNCS representative]

1 Calculated using the Survey System Sample Size Calculator, accessed at http://www.surveysystem.com/sscalc.htm
