CMS-10551 NH Supporting Statement A 2015 11 10

(CMS-10551) Nursing Home National Provider Survey

OMB: 0938-1291

Measure & Instrument Development and Support (MIDS) Contractor:

Impact Assessment of CMS

Quality and Efficiency Measures

Supporting Statement A:

OMB/PRA Submission Materials for

Nursing Home National Provider Survey

Contract Number: HHSM-500-2013-13007I

Task Order: HHSM-500-T0002

Deliverable Number: 35

Submitted: October 1, 2014

Revised: November 10, 2015

Noni Bodkin, Contracting Officer’s Representative (COR)

HHS/CMS/OA/CCSQ/QMVIG

7500 Security Boulevard, Mailstop S3-02-01

Baltimore, MD 21244-1850

TABLE OF CONTENTS

SUPPORTING STATEMENT A – JUSTIFICATION FOR the Nursing Home National Provider Survey

A1. Circumstances Making the Collection of Information Necessary

A2. Purpose and Use of the Information Collection

A3. Use of Improved Information Technology and Burden Reduction

A4. Efforts to Identify Duplication and Use of Similar Information

A5. Impact on Small Businesses or Other Small Entities

A6. Consequences of Collecting the Information Less Frequently

A7. Special Circumstances Relating to the Guidelines of 5 CFR 1320.5

A8. Comments in Response to the Federal Register Notice and Efforts to Consult Outside the Agency

A9. Explanation of Any Payment or Gift to Respondents

A10. Assurance of Confidentiality Provided to Respondents

A11. Justification for Sensitive Questions

A12. Estimates of Annualized Burden Hours and Costs

A13. Estimates of Other Total Annual Cost Burden to Respondents and Record Keepers

A14. Annualized Cost to Federal Government

A15. Explanation for Program Changes or Adjustments

A16. Plans for Tabulation and Publication and Project Time Schedule

A17. Reason(s) Display of OMB Expiration Date Is Inappropriate

SUPPORTING STATEMENT A – JUSTIFICATION

FOR the Nursing Home National Provider Survey

Background

Over the past decade, the Centers for Medicare & Medicaid Services (CMS) has invested heavily in developing and deploying quality and efficiency measures across a range of healthcare settings. CMS actions are intended to promote progress toward achieving the three aims of strengthening the quality of care delivered, improving outcomes, and reducing costs for Medicare beneficiaries.

The Patient Protection and Affordable Care Act (ACA), section 3014(b) as amended by section 10304, states that not later than March 1, 2012, and at least once every 3 years thereafter, the Secretary of Health and Human Services (HHS) shall conduct an assessment of the impact of quality and efficiency measures that CMS uses, as described in section 1890(b)(7)(B) of the Social Security Act, and make such assessment available to the public. CMS intends to release a comprehensive report once every 3 years.

To fulfill the mandate of assessing the impact of CMS measurement programs, CMS published the 2012 National Impact Assessment of Medicare Quality Measures, 1 which examined trends in performance between 2006 and 2010 on measures in eight CMS measurement programs.2 It also evaluated measures that were under consideration for potential inclusion in the CMS measurement programs.3 Following this first report, CMS published the 2015 National Impact Assessment of CMS Quality Measures Report (2015 Impact Report), 4 which provided a broad assessment of CMS use of quality measures, using data from 2006 to 2013. The 2015 Impact Report encompasses 25 CMS programs and nearly 700 quality measures from 2006 to 2013. Although certain analyses examined all 25 CMS programs, other analyses examined selected measures in a few programs.

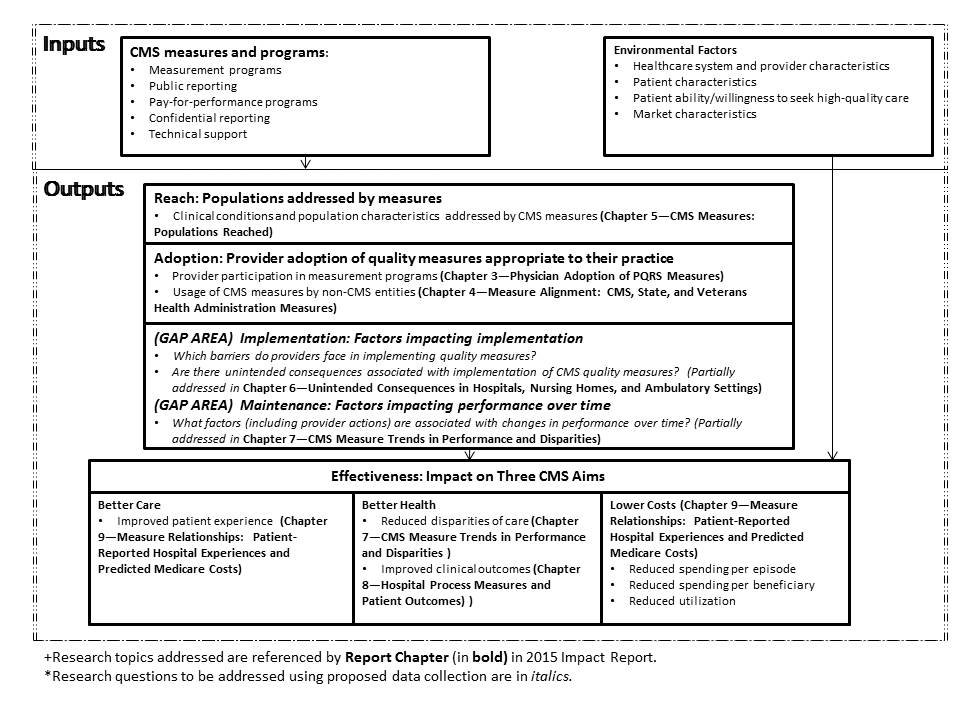

The 2015 Impact Assessment addressed a set of research questions that were developed in consultation with a multidisciplinary Technical Expert Panel (TEP). A logic model was developed to guide the TEP’s work and to frame the 2015 Impact Assessment analyses (Figure 1). In this model, CMS inputs include quality measurement programs and associated incentives and penalties. The potential outputs include greater use of quality measures applicable to the CMS beneficiary population (assessed under “Reach”); providers’ adoption of quality measures appropriate to their practice (assessed under “Adoption”); factors impacting implementation (assessed under “Implementation”), which include barriers to reporting quality measurement data and potential unintended consequences; improved performance over time, including institutional factors underlying such trends (assessed under “Maintenance”); and effects on the three aims of better care, better health, and lower costs (assessed under “Effectiveness”).

Figure 1: Logic Model Used to Assess the Impact of CMS Use of Quality Measures (2015 National Impact Assessment)

Figure 2 briefly illustrates the relationship between the logic model in Figure 1 and the analyses that were conducted under the 2015 Impact Assessment. The analyses used a variety of data sources to address the research questions, including CMS documents, administrative data on participation in quality measurement programs, quality measurement data reported to CMS, and claims data (Attachment II). In conducting the 2015 assessment, CMS identified several areas where there was a lack of information to enable impact assessment; the purpose of the proposed data collection is to attempt to fill information gaps associated with how providers perceive factors impacting implementation and performance. Most notably, implementation (i.e., barriers that providers face in implementing measures and potential unintended consequences associated with measure implementation) and maintenance (i.e., factors associated with changes in performance over time) could not be fully addressed due to the lack of appropriate data to measure these impacts. For example, a systematic review of the literature on unintended effects (published as part of the 2015 Impact Report) found that few studies had empirically measured unintended effects and that there was insufficient evidence to gauge whether unintended effects had occurred. Furthermore, few studies have assessed how providers are responding to quality measurement programs, which is a necessary action to improve performance and achieve desired outcomes.

Figure 2: Analyses Completed and Remaining Gaps from 2015 National Impact Assessment

To obtain provider perspectives that address the information gaps, two modes of data collection with nursing home quality leaders are proposed: (1) a semi-structured qualitative interview and (2) a standardized survey. The 2018 Impact Assessment will contain multiple chapters presenting various analyses of CMS quality measures. The data from the qualitative interviews and standardized surveys will be analyzed and the findings summarized as one or more stand-alone chapters of the 2018 Impact Assessment report. Used collectively with the other chapters in the report, the analyses of the survey data will provide CMS with information on the quality and efficiency impact of measures that CMS uses to assess care in the nursing home setting. No data other than the two National Provider Surveys will be collected for the 2018 Impact Assessment, although data collected for CMS measurement and payment programs will be used to inform sampling design and analyses of the National Provider Surveys. The latter data sources include Medicare claims, nursing home, and quality measurement data that OMB had previously approved CMS to collect.

The prime contractor to CMS is Health Services Advisory Group, Inc. (HSAG), which will oversee the work of a subcontractor in fielding and analyzing the surveys.

The subcontractor, the RAND Corporation, will generate the sampling frames after conducting analyses of nursing home performance data and other facility characteristics to inform sampling. RAND will oversee the fielding of the surveys (i.e., preparation of the surveys, monitoring response rates, and overseeing the survey vendor). Finally, RAND will conduct qualitative interviews and prepare written summaries of interviews, conduct data analyses of quantitative data from the structured surveys, and prepare the written summary of results for the 2018 report.

CSS, as a subcontractor to RAND, will conduct the two structured surveys, using contact information provided by RAND and HSAG.

While the Nursing Home National Provider Survey OMB/PRA submission is related to the information contained within the Hospital National Provider Survey OMB/PRA submission, it has been submitted as an independent package to allow CMS the flexibility to field the surveys separately.

Section 3014 of the ACA requires that the Secretary of HHS conduct an assessment of the quality and efficiency impact of the use of endorsed measures in specific Medicare quality reporting and incentive programs.5 The ACA further specifies that the initial assessment must occur no later than March 1, 2012, and once every 3 years thereafter. This proposed data collection activity was developed and tested as part of the 2015 Impact Report and is to be conducted for the 2018 Impact Report, the third such report.

The 2015 analyses focused on addressing the five elements of the logic model; however, two elements of the logic model, (3) implementation (i.e., barriers that providers face in implementing measures and potential unintended consequences associated with measure implementation) and (4) maintenance (i.e., factors associated with changes in performance over time), could not be fully addressed due to the lack of appropriate data to measure these impacts. For example, a systematic review of the literature on unintended effects (published as part of the 2015 Impact Report) found that few studies had empirically measured unintended effects and that there was insufficient evidence to gauge whether unintended effects had occurred. Furthermore, few studies have assessed how providers are responding to quality measurement programs, which is a necessary action to improve performance and achieve desired outcomes. CMS also lacked data about what features differentiate high- and low-performing providers (e.g., use of clinical decision support or investments in quality improvement staff), an improved understanding of which could be used by CMS in the context of its quality improvement work with providers nationally to better inform providers’ investments to advance quality.

As a result, work was undertaken during the 2015 National Impact Assessment to develop data collection tools (i.e., surveys) that would enable CMS to measure these impacts as part of the 2018 National Impact Assessment. This OMB package is a request for review and approval of the surveys that CMS proposes to use to address the two impact assessment gaps: 1) Implementation (i.e., are there barriers to implementation and unintended consequences associated with the use of CMS quality measures?) and 2) Maintenance (i.e., what factors (including provider actions) are associated with changes in performance over time?).

The two surveys—a structured survey and a qualitative interview guide—focus on addressing five research questions to assess impact related to the implementation and maintenance elements of the logic model:

Are there unintended consequences associated with implementation of CMS quality measures? (implementation)

Are there barriers to providers in implementing CMS quality measures? (implementation)

Is the collection and reporting of performance measure results associated with changes in provider behavior (i.e., what specific changes are providers making in response?)? (implementation)

What factors are associated with changes in performance over time? (maintenance)

What characteristics differentiate high- and low-performing providers? (maintenance)

The work conducted to develop these surveys as part of the 2015 Impact Report included an environmental scan of the literature, formative interviews to inform construction of the survey, cognitive testing of draft instruments, and gathering input from the Federal Advisory Steering Committee (FASC), composed of representatives from federal agencies (e.g., Agency for Healthcare Research and Quality [AHRQ], Centers for Disease Control [CDC], Health Resources and Services Administration [HRSA], Assistant Secretary for Planning and Evaluation [ASPE]). A document attached to this OMB package (see Attachment I, “Development of Two National Provider Surveys”) summarizes the developmental work.

If this proposal is approved, CMS plans to field these surveys in 2016 and 2017 and to summarize the findings in the 2018 National Impact Report. Attachment IV to this package contains a crosswalk of the survey items to the research questions.

Since 1999, CMS has implemented multiple programs and initiatives to require the collection, monitoring, and public reporting of quality and efficiency measures—in the form of clinical, patient experience, and efficiency/resource use measures—to promote improvement in the quality of care delivered to Medicare beneficiaries, close the gap between guidelines for quality care and care delivery, and monitor national progress toward measurable healthcare quality goals outlined in the HHS National Quality Strategy.6 For nursing home care, CMS has implemented quality and efficiency measures through the Nursing Home Quality Initiative (NHQI), Nursing Home Compare, Minimum Data Set or MDS (Versions 2.0 and 3.0), and Short and Long Stay Quality Measures, and reports the results in the form of Star Ratings on the Medicare Nursing Home Compare website.

CMS implementation of quality and efficiency measures has led to positive gains in the use of evidence-based standards of care by providers. To ensure that the nation builds on these gains and to fulfill the requirements of section 3014 of the ACA, CMS has conducted two national assessments, reported in 2012 and 2015. The results from the proposed data collection will be publicly reported as part of the 2018 Impact Report, extending the prior reports by providing CMS with quantitative and qualitative data specific to the use of nursing home quality and efficiency measures. The data will enable CMS to improve measurement programs to achieve the goals identified in the National Quality Strategy.

The data from the qualitative interviews and standardized surveys will be analyzed to provide CMS with information on the quality and efficiency impact of measures that CMS uses to assess care in nursing homes. Specifically, the surveys are designed to help CMS determine whether the use of performance measures has been associated with changes in provider behavior (namely, what investments nursing homes are making to improve performance), what barriers exist related to implementation of the measures, and whether undesired effects are occurring as a result of implementing the quality and efficiency measures.

The results from the standardized survey cannot be used to establish a causal relationship between the use of quality measures by CMS and the investments that providers report they have made. In interpreting associations, it will be impossible to exclude the potential unmeasured effects of other factors that may have led nursing homes to undertake certain actions in response to being measured on their performance. In fact, a variety of payers (both public and private) are measuring the performance of providers using quality measures. However, it is important to note that CMS is the largest payer and the predominant entity measuring performance in the nursing home setting, as Medicare beneficiaries are the largest fraction of patients within nursing homes. Private payers have relied on reusing the results from CMS measurement of nursing home performance rather than creating additional measurement requirements. This minimizes the likelihood that other payers have significantly influenced investments made by nursing homes associated with quality measurement.

The findings from the survey will provide CMS with insights as to whether there have been unintended consequences associated with use of the quality measures that require further investigation by CMS. We illustrate this with an example from the hospital setting: In interviews that RAND conducted with hospitals in 2006 as part of an unrelated project, hospital quality leaders mentioned that a measure requiring receipt of antibiotics within 4 hours of arrival by patients discharged with a diagnosis of pneumonia was leading to unintended effects (i.e., misuse of antibiotics in patients who didn’t have pneumonia). This information led CMS and the measure developer to further investigate the problem, which ultimately led to a change in the measure specification.

By identifying potential barriers that nursing homes face, the survey results will also highlight opportunities where CMS could better support the ability of providers to implement the actions/outcomes called for in the measures, thereby improving the functioning of measurement programs. Lastly, the survey will help CMS identify characteristics associated with high performance, which, if understood, could be used to leverage improvements in care among lower-performing nursing homes.

Other entities are also likely to use the survey results contained in the 2018 report, including nursing homes and organizations that represent nursing homes, quality improvement organizations, researchers who develop measures and evaluate the impacts of quality measurement programs, members of Congress, and measure developers (e.g., The Joint Commission, the National Committee for Quality Assurance, CMS measure development contractors). These entities have been investing significant resources in working to advance quality measurement and performance, and the information will help them gauge the impact of these efforts and flag areas requiring attention (e.g., problems with individual measures, barriers to improvement, unintended consequences).

Limitations

Although data from the qualitative interviews and standardized surveys will provide information to CMS on the quality and efficiency impact of measures, there are several limitations associated with the interpretation of results from the interviews and surveys.

The qualitative interviews are limited to 40 nursing homes and will capture the experiences and views of a small fraction of all nursing homes participating in the quality measure programs. The purpose of the qualitative interviews is to supplement the national estimates from the structured survey and allow for more in-depth exploration of the topics. The interviews will provide greater detail about what nursing homes are doing in response to quality measures (e.g., contextual factors influencing their behavior, perceived barriers to improvement, reasons that unintended effects might be occurring, and thoughts about how to modify measures to fix those unintended effects). The qualitative interviews are not designed to produce national estimates; rather, the findings will be summarized in a manner such as “Of the 40 nursing homes interviewed, 10 felt that x was a significant barrier to implementation.” These results will not be used to construct national estimates. The findings could identify areas that CMS may wish to explore with nursing homes in more depth as follow-up to the survey.

As described in Supporting Statement B, the standardized survey is designed to produce national estimates as well as subgroup estimates (by nursing home size and performance). The standardized survey will oversample high- and low-performing nursing homes, which will slightly increase the margin of error for national analyses but will significantly improve the ability to determine differences in behavior between high- and low-performing nursing homes.
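The trade-off described above, where oversampling some strata slightly widens the national margin of error, can be illustrated with Kish's approximate design effect for unequal weights. The sketch below is illustrative only: the strata, population counts, and sample sizes are hypothetical and are not taken from Supporting Statement B, which contains the actual sampling design.

```python
import math

# HYPOTHETICAL strata: population counts and sample sizes are invented for
# illustration and do not come from Supporting Statement B.
strata = {
    # name: (facilities in population, facilities sampled)
    "low performers": (3_000, 250),
    "middle performers": (9_000, 400),
    "high performers": (3_000, 250),
}

def kish_design_effect(strata):
    """Kish's approximation: deff = n * sum(w^2) / (sum(w))^2 over sampled units."""
    n = sum(sampled for _, sampled in strata.values())
    sum_w = 0.0
    sum_w2 = 0.0
    for population, sampled in strata.values():
        w = population / sampled      # design weight of each sampled facility
        sum_w += sampled * w
        sum_w2 += sampled * w * w
    return n * sum_w2 / (sum_w ** 2)

n = sum(sampled for _, sampled in strata.values())
deff = kish_design_effect(strata)
effective_n = n / deff

# Margin of error for a proportion near 0.5 at 95 percent confidence,
# ignoring nonresponse and finite population corrections.
moe_srs = 1.96 * math.sqrt(0.25 / n)
moe_weighted = 1.96 * math.sqrt(0.25 / effective_n)

print(f"design effect: {deff:.3f}, effective sample size: {effective_n:.0f}")
print(f"margin of error: {moe_srs:.4f} (equal weights) vs {moe_weighted:.4f} (oversampled)")
```

Under these assumed numbers the design effect stays close to 1, so the national margin of error grows only modestly, while each oversampled stratum has enough respondents for subgroup comparisons.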

Neither the qualitative interviews nor the standardized survey was designed to evaluate a causal connection between the use of CMS measures and actions reported by nursing homes. The survey will generate prevalence estimates (e.g., “X% of nursing home quality leaders report hiring more staff or implementing clinical decision support tools in response to quality measurement programs”) and allow examination of the associations between actions reported and the performance of nursing homes, controlling for other factors (e.g., nursing home size, for-profit status).

The standardized survey of nursing home quality leaders will include use of information technology. The initial or primary mode will be a Web-based survey, in which 100 percent of nursing homes in the sample will be asked to respond electronically. Invitations to the Web survey will be sent via email with a United States Postal Service (USPS) letter as backup should an email address not be available. The email will include an embedded link to the Web survey and a personal identification number (PIN) code unique to each nursing home. In addition to promoting electronic submission of survey responses, the Web-based survey will:

Allow respondents to print a copy of the survey for review and to assist response,

Automatically implement any skip logic so that questions dependent on response to a gate or screening questions will appear only as appropriate,

Allow respondents to begin the survey, enter responses, and later complete remaining items, and

Allow sections of the survey to be completed by other individuals at the discretion of the sampled nursing home quality leader.

Nursing home quality leaders who do not respond to emailed and mailed invitations will receive a mailed version of the survey. The mail version will be formatted for scanning.

The semi-structured interview is not conducive to computerized interviewing or collection.

The components of this data collection effort are designed to gather the data necessary to meet CMS needs for assessing the impact of quality and efficiency measures in the nursing home setting. No similar data collection is currently in use. The proposed information collection does not duplicate any other effort, and the information cannot be obtained from any other source. No data collection using the survey instruments occurred as part of the 2015 Impact Assessment; only formative interview and cognitive testing work with nine nursing homes occurred under the 2015 Impact Assessment project to inform the development of the surveys. Analyses of the surveys will be added as a new component to the 2018 Impact Report.

Survey respondents represent nursing homes participating in the CMS nursing home quality reporting initiative that report the Minimum Data Set Short and Long Stay Quality Measures. These scores are reported on Nursing Home Compare. As classified according to definitions provided in OMB Form 83-I 7 and by the Small Business Administration,8 a small proportion of responding nursing homes would qualify as small businesses or entities, but this survey is unlikely to have a significant impact on them.

This is a one-time data collection conducted in support of the CMS 2018 Impact Report.

There are no special circumstances associated with this information collection request.

The 60-day Federal Register notice was published on March 20, 2015. No public comments were received. As part of the development work, the draft surveys were cognitively tested in July 2014 with nine nursing homes, and changes were made in response to the respondents’ comments. The testing assessed respondents’ understanding of the draft survey items and key concepts and identified problematic terms, items, or response options.

Although nursing homes did not provide comment during the PRA review period, they were involved in developing the semi-structured survey and the standardized survey. The research team conducted formative interviews and cognitively tested the surveys with nursing homes. Nursing home respondents indicated that the content was important and that they could provide answers to the questions. They had knowledge of the CMS measures and provided comments (both positive and negative) about the measures and measurement programs. They also offered suggestions to improve the wording for clarity and ease of responding.

Additionally, the data collection approach and instruments were presented to the project’s Technical Expert Panel and the CMS Federal Advisory Steering Committee (FASC) and other federal agency staff for review and comment. The FASC included representatives from AHRQ, CDC, HRSA, ASPE, and CMS. They reviewed all components of the survey package, including the design, survey instrument, and interview guide, to ensure accuracy, appropriate wording, and rigorous statistical methods. Changes were made in response to the comments provided by the TEP and the FASC. (For more details on this process and the types of changes made in response to comments from affected stakeholders and representatives from federal agencies, please refer to Attachment I, “Development of Two National Provider Surveys.”)

No gifts or incentives will be given to respondents for participation in the survey.

All persons who participate in this data collection, either through the semi-structured interviews or the standardized survey, will be assured that the information they provide will be kept private to the fullest extent allowed by law. Informed consent from participants will be obtained to ensure that they understand the nature of the research being conducted and their rights as survey respondents. Respondents who have questions about the consent statement or other aspects of the study will be instructed to call the RAND principal investigator or RAND’s Survey Research Group survey director and/or the administrator of RAND’s Institutional Review Board (IRB).

The semi-structured interview includes an informed consent and confidentiality script that will be read before any interview. This script is found in the data collection materials contained in Attachment VIII: Interview Topic Guide for Semi Structured Interview of Nursing Home Quality Leaders.

The nursing home quality leaders who participate in the standardized survey will receive informed consent and confidentiality information via the emails and letters inviting them to participate in the Web and mail survey, found in Attachments IX and X.

The study will have a data safeguarding plan to further ensure the privacy of the information collected. For the online survey and semi-structured interviews, a data identifier (ID) will be assigned to each respondent. For the semi-structured interviews, contact information that could be used to link individuals with their responses will be removed from all interview instruments and notes. All interview notes and recordings will be in locked storage in the offices of the staff conducting the interviews. Recordings will be destroyed once notes are reviewed and finalized. The data from the semi-structured interviews will not contain any direct identifiers and will be stored on encrypted media under the control of the interview task lead. Files containing contact information used to conduct semi-structured interviews may also be stored on staff computers or in staff offices following procedures reviewed and approved by RAND’s IRB.

The standardized survey will be collected via an experienced vendor. All electronic files directly related to the administration of the survey will be stored on a restricted drive of the vendor’s secure local area network. Access to data will be limited to those employees identified by the vendor’s chief security officer as working on the specific project. Additionally, files containing survey response data and information revealing sample members’ individual identities will not be stored together on the network. No single file will contain both a member’s response data and his or her contact information.

RAND staff and the data collection vendor will destroy participant contact information once all semi-structured and standardized survey data are collected and the associated data files are reviewed and finalized by the project team.

The survey does not include any questions of a sensitive nature.

Table 1 shows the estimated annualized burden and cost for the respondents’ time to participate in this data collection. These burden estimates are based on tests of data collection conducted on nine or fewer entities. The burden estimates represent time that will be spent by respondents completing the survey. Initial work to identify the correct individual to complete the survey within each nursing home was not included in this estimate. As indicated below, the annual total burden hours are estimated to be 639 hours, assuming a response rate of 44 percent.9 The annual total cost associated with the annual total burden hours is estimated to be $63,849.

Table 1: Estimated Annualized Burden Hours and Cost

| Collection Task | Number of Respondents | Number of Responses per Respondent | Hours per Response | Total Burden Hours | Average Hourly Wage Rate* | Total Cost Burden |
| --- | --- | --- | --- | --- | --- | --- |
| Nursing Home National Provider Survey Semi-structured Interview | 40 | 1 | 1 | 40 | $99.92 | $3,997 |
| Nursing Home National Provider Survey Standardized Survey | 900 | 1 | 0.666 | 599 | $99.92 | $59,852 |
| Totals | | | | 639 | | $63,849 |

*Based upon mean hourly wages for General and Operations Managers, “National Compensation Survey: All United States December 2009–January 2011,” U.S. Department of Labor, Bureau of Labor Statistics. The base hourly wage rates have been doubled to account for benefits and overhead.
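The arithmetic behind Table 1 can be reproduced with a short sketch. The figures are copied from the table; the variable and function names are illustrative. It assumes that burden hours are rounded to whole hours before the wage rate is applied, because that assumption reproduces the published dollar amounts.

```python
# Figures are copied from Table 1; names are illustrative only.
HOURLY_WAGE = 99.92  # loaded hourly wage rate (base rate doubled), per the table note

tasks = [
    # (collection task, respondents, responses per respondent, hours per response)
    ("Semi-structured interview", 40, 1, 1.0),
    ("Standardized survey", 900, 1, 0.666),
]

def burden(respondents, responses_each, hours_each, wage=HOURLY_WAGE):
    """Return (burden hours, cost), each rounded to a whole number.

    ASSUMPTION: hours are rounded before costing, which matches Table 1.
    """
    hours = round(respondents * responses_each * hours_each)
    cost = round(hours * wage)
    return hours, cost

rows = [(name, *burden(n, r, h)) for name, n, r, h in tasks]
total_hours = sum(hours for _, hours, _ in rows)
total_cost = sum(cost for _, _, cost in rows)

for name, hours, cost in rows:
    print(f"{name}: {hours} hours, ${cost:,}")
print(f"Total: {total_hours} hours, ${total_cost:,}")
```

Run as written, the totals agree with the 639 hours and $63,849 reported in Table 1.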

There are no capital costs or other annual costs to respondents and record keepers.

The cost for sampling, data collection, analysis, and reporting of survey findings for the nursing home quality leader data collection (both semi-structured interviews and a standardized survey) is $964,943.

Nursing Home National Provider Survey cost breakdown:

RAND’s Survey Research Group Internet search to generate phone numbers that will be used to schedule semi-structured interviews: $13,483

RAND’s oversight of nursing home survey vendor: $1,732

Nursing Home National Provider Survey vendor costs: $94,007

Equipment/supplies ($18,802)

Printing ($3,760)

Support staff ($29,142)

Overhead ($42,303)

RAND staff time to lay out the survey for printing and proofing, prepare the sample file, conduct qualitative interviews, manage the qualitative and quantitative survey data collection, data coding and cleaning, analysis, report production, and revisions: $829,991

CMS staff oversight: $25,730
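As a consistency check, the line items above can be summed and compared with the stated total of $964,943. The sketch below copies the dollar amounts from the breakdown; the shortened labels are illustrative.

```python
# Dollar amounts are copied from the cost breakdown; labels are shortened.
vendor_items = {
    "Equipment/supplies": 18_802,
    "Printing": 3_760,
    "Support staff": 29_142,
    "Overhead": 42_303,
}

line_items = {
    "RAND Survey Research Group Internet search": 13_483,
    "RAND oversight of survey vendor": 1_732,
    "Survey vendor costs": sum(vendor_items.values()),  # vendor sub-items roll up here
    "RAND staff time": 829_991,
    "CMS staff oversight": 25_730,
}

total = sum(line_items.values())

for label, amount in line_items.items():
    print(f"{label}: ${amount:,}")
print(f"Total cost to the Federal Government: ${total:,}")
```

The vendor sub-items sum to the $94,007 vendor line, and the five line items sum to the stated total.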

This is a new information collection request.

For planning purposes, we anticipate data collection will begin no later than January 2016 and conclude in June 2016. Analyses of these data will occur during July through December 2016 to contribute to the draft summary report delivered to CMS in March 2017. The final report will be delivered to CMS no later than April 2017.

Table 2: Timeline of Survey Tasks and Publication Dates

| Activity | Proposed Timing |
| --- | --- |
| Prepare field materials | October 2015–December 2015 |
| Identify target respondent | October 2015–December 2015 |
| Field surveys and conduct qualitative interviews | January 2016–August 2016 |
| Analyze data | September 2016–December 2017 |
| Draft chapter summarizing findings for 2018 Impact Report | January 2017–March 2017 |
| Integrate findings into 2018 Impact Report | April 2017–June 2017 |
| Submit final version of Impact Report to CMS | July 1, 2017 |
| CMS QMVIG Internal Review | July–August 2017 |
| Submit document for SWIFT Clearance | August 30, 2017 |
| Publish 2018 Impact Report | March 1, 2018 |
| Prepare additional products to disseminate findings | December 2017–June 2018 |

In addition to summarizing the findings for the 2018 National Impact Report, HSAG will work with CMS to develop timelines for broad dissemination of the results, which may include peer-reviewed publications. Such publications will increase the impact of this work by exposing the results to a broader audience of nursing home administrators and policymakers. The publication of the 2018 Impact Report will result in additional dissemination through press releases, open door calls, and other events.

CMS proposes to display the expiration date for OMB approval of this information collection on the document that details the topics addressed in the semi-structured interview and on the standardized survey (introductory screen of Web version, front cover of mailed version). The requested expiration date is 36 months from the approved date.

1 Centers for Medicare & Medicaid Services. National Impact Assessment of Medicare Quality Measures. March 2012. Available at: https://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/QualityMeasures/QualityMeasurementImpactReports.html.

2 The eight programs are: 1) Hospital Inpatient Quality Reporting System (Hospital IQR), 2) Hospital Outpatient Quality Reporting (Hospital OQR), 3) Physician Quality Reporting System (PQRS), 4) Nursing Home (NH), 5) Home Health (HH), 6) End-Stage Renal Disease (ESRD), 7) Medicare Part C (Part C), and 8) Medicare Part D (Part D).

3 Measures under consideration are measures that have not been finalized in previous rules and regulations for a particular CMS program and that CMS is considering for adoption through rulemaking for future implementation.

4 Centers for Medicare & Medicaid Services. 2015 National Impact Assessment of the Centers for Medicare & Medicaid Services (CMS) Quality Measures Report, CMS, Baltimore, Maryland, March 2, 2015. Available at: https://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/QualityMeasures/QualityMeasurementImpactReports.html.

5 The Patient Protection and Affordable Care Act - Pub. L. 111-148, 124 STAT. 1023, U.S. Congress (2010).

6 U.S. Department of Health and Human Services. Report to Congress: National Strategy for Quality Improvement in Health Care. 2011.

7 A small entity may be (1) a small business, which is deemed to be one that is independently owned and operated and that is not dominant in its field of operation; (2) a small organization that is any not-for-profit enterprise that is independently owned and operated and is not dominant in its field; or (3) a small government jurisdiction, which is a government of a city, county, town, township, school district, or special district with a population of less than 50,000 (https://www.whitehouse.gov/sites/default/files/omb/inforeg/83i-fill.pdf).

8 The Small Business Administration classifies non-hospital entities with average annual receipts of no more than $7.5 million as small businesses (https://www.sba.gov/content/summary-size-standards-industry-sector).

9 Supporting Statement B contains the justification for the assumption of a 44% response rate.

| Author | Julie Brown |
