Proposal for provider survey nonresponse follow up


Rural Community Wealth and Health Care Provision Survey (RCWHCPS)


OMB: 0536-0072

Survey of Rural Community Wealth and Health Care Provision

Proposal for Provider Survey Non-Response Follow-Up Activities

USDA Economic Research Service and

Iowa State University Survey & Behavioral Research Services

August 2015

The following report is in reference to:

ICR Reference #201310-0536-001

OMB Control #0536-0072

OMB Action Date 01/23/2014

The Survey of Rural Community Wealth and Health Care Provision (SRCWHCP) is under the direction of John Pender, Senior Economist with the USDA Economic Research Service (ERS) and principal investigator (PI) for the project. Data collection is conducted through a cooperative agreement with Iowa State University’s Center for Survey Statistics & Methodology (CSSM) and Survey & Behavioral Research Services (SBRS).

The primary purpose of the study is to provide information about how rural small towns can attract and retain health care providers, considering the broad range of assets and amenities that may attract providers. The secondary purpose is to provide information on how improving health care may affect economic development prospects of rural small towns. ERS seeks to address these purposes by obtaining input from community leaders (key informants) and primary health care providers in 150 sampled rural small towns and by conducting secondary analysis of existing health and economic indicators. The data collection effort was divided into three phases. Phase 1 consisted of key informant telephone interviews of health facility administrators and community leaders, Phase 2 consisted of mail/web surveys of primary health care providers (physicians, dentists, nurse practitioners (NPs), physician assistants (PAs), and nurse midwives (MWs)), and Phase 3 was designed to include site visits to a sub-set of the study communities.

A pilot study of the Phase 2 health care provider survey was conducted with 12 of the 150 communities and the results were reviewed and approved by OMB prior to implementation of the survey in the remaining 138 communities, per the Notice of Office of Management and Budget Action dated March 4, 2015. The provider survey has been completed in all 150 communities, with 366 web/mail surveys received. In this report, we propose a follow-up effort to address non-response to the health care provider survey.

Proposed Phase 2 Health Care Provider Non-Response Follow-up

Rationale for a Non-Response Follow-up

The health care provider survey was pilot tested in 12 communities with a total sample of 84 providers. After removing 5 ineligible providers, the resulting sample of 79 yielded 20 completed surveys, for a response rate of 25.3%. Additional measures were proposed, approved, and implemented to improve the response rate and total number of respondents in the remaining 138 communities. These measures included i) contacting sampled providers by mail with a follow-up phone call to request or verify the provider’s email address, and sending a follow-up email to the provider with a link to the web survey; and ii) increasing the maximum number of providers sampled per community from 10 to 32 (where more than 10 providers were found). Unfortunately, despite these measures, and despite incorporation of many best practices to maximize survey response rates as described in the OMB Guidance on Agency Survey and Statistical Information Collections (dated January 2006),¹ the response to the full survey was still much lower than anticipated. The final overall provider response rate for all 150 communities was 23.3%. The tables below show provider response for the full sample of 1821 providers in the 150 communities, first by region (Table 1) and second by provider type (Table 2).

Table 1. Health Care Provider Survey Outcomes by Region

| | Upper Midwest | Southern Great Plains | Mississippi Delta | Total |
| --- | --- | --- | --- | --- |
| Sample | 951 | 513 | 357 | 1821 |
| Not Eligible | 77 | 101 | 73 | 251 |
| Eligible Sample | 874 | 412 | 284 | 1570 |
| Refuse | 4 | 13 | 1 | 18 |
| No Response | 625 | 315 | 246 | 1186 |
| Completed Surveys | 245 | 84 | 37 | 366 |
| Response Rates | 28.0% | 20.4% | 13.0% | 23.3% |

Table 2. Health Care Provider Survey Outcomes by Provider Type

| | Dentists | NPs/PAs/MWs | Physicians | Total |
| --- | --- | --- | --- | --- |
| Sample | 472 | 562 | 787 | 1821 |
| Not Eligible | 57 | 101 | 93 | 251 |
| Eligible Sample | 415 | 461 | 694 | 1570 |
| Refuse | 5 | 6 | 7 | 18 |
| No Response | 287 | 338 | 561 | 1186 |
| Completed Surveys | 123 | 117 | 126 | 366 |
| Response Rates | 29.6% | 25.4% | 18.2% | 23.3% |

As these tables show, even the highest regional response rate (28.0% in the Upper Midwest) and provider type response rate (29.6% for dentists) were much less than the 80% response initially planned for. This reduces the statistical power of our analysis and increases concern about potential nonresponse bias.
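The response rates in Tables 1 and 2 can be reproduced from the underlying counts. The sketch below is only an illustration: it assumes the rate is completed surveys divided by the eligible sample (sample minus not eligible), which matches the published figures, and the dictionary layout and function names are hypothetical rather than part of the project's tooling.

```python
# Counts transcribed from Tables 1 and 2 of this proposal.
# Tuple layout (an illustrative choice): (sampled, not_eligible, completed).
BY_REGION = {
    "Upper Midwest": (951, 77, 245),
    "Southern Great Plains": (513, 101, 84),
    "Mississippi Delta": (357, 73, 37),
}
BY_PROVIDER_TYPE = {
    "Dentists": (472, 57, 123),
    "NPs/PAs/MWs": (562, 101, 117),
    "Physicians": (787, 93, 126),
}


def response_rate(sampled: int, not_eligible: int, completed: int) -> float:
    """Completed surveys as a percentage of the eligible sample."""
    eligible = sampled - not_eligible
    return 100.0 * completed / eligible


def summarize(groups: dict) -> dict:
    """Return per-group response rates plus the pooled 'Total' rate."""
    rates = {name: response_rate(*counts) for name, counts in groups.items()}
    # Sum each column (sampled, not eligible, completed) across groups.
    totals = [sum(column) for column in zip(*groups.values())]
    rates["Total"] = response_rate(*totals)
    return rates


if __name__ == "__main__":
    for label, groups in (("Region", BY_REGION), ("Provider type", BY_PROVIDER_TYPE)):
        print(label)
        for name, rate in summarize(groups).items():
            print(f"  {name}: {rate:.1f}%")
```

Rounded to one decimal place, the computed rates agree with the "Response Rates" rows of both tables.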

An examination of some of the initial survey findings indicates the value of a non-response follow-up effort. Table 3 provides the estimated share of health care providers, in the entire study population and by region and provider type, who indicated that particular factors were important or very important in their initial decision to work in the town being studied, and the standard errors of these estimates.² For the survey population as a whole, the standard errors are relatively small, and it is possible to distinguish which factors were more commonly considered important among this population. For example, the five factors most commonly cited as important in the respondents’ decisions to work in the study town were “Friendliness of the people”, “Good place to raise a family”, “Need for providers here”, “Opportunities for professional growth”, and “Reasonable workload”. The estimated share of the population of health care providers in the study towns that cited each of these factors as important was more than 70%, and the standard errors were less than 4 percentage points in all of these cases. By contrast, the five factors least commonly cited as important were “Placement through a program”, “Recruitment efforts by the town”, “Cultural amenities”, “Low taxes”, and “Opportunity to own a practice”. The estimated share of health care providers citing these factors as important was less than 40% in all cases, again with standard errors less than 4 percentage points. Hence, it is clearly possible to distinguish with statistical confidence the factors that are most commonly cited as important from those that are least commonly cited for the study population as a whole.

However, it is less possible to distinguish with statistical confidence the rankings among factors that are more closely ranked. For example, we are not confident that “Quality of the schools” (estimated share = 0.687, standard error = 0.039) actually ranks lower than “Reasonable workload” (estimated share = 0.704, standard error = 0.029) in the study population. Distinguishing the rankings of such factors is potentially important for policy purposes, since some of these factors (such as school quality) are more amenable to direct influence by policies or local community efforts than others (such as reasonable workload). With a larger sample size, our ability to distinguish the relative importance of different factors would be enhanced.

Another important benefit of increased sample size would be to increase the statistical power to test for differences in responses across regions, provider types, or other domains. Differences in mean responses across domains that are statistically significant are indicated with asterisks in Table 3 (*, **, and *** indicate statistically significant differences at the 10%, 5%, and 1% levels, respectively). We find statistically significant differences (at the 5% level or less) across regions for only 5 of the 23 factors investigated, and statistically significant differences across provider types for 9 of the 23 factors. In many cases, rather large estimated mean differences across domains are not statistically significant because of the relatively large standard errors resulting from domain estimation. For example, “Quality of the schools” was cited as an important factor by an estimated 74% of the population of providers in the Upper Midwest (UMW), but only by 64% of the providers in the Southern Great Plains (SGP) and 59% of the providers in the Lower Mississippi Delta (LMD). These differences, while fairly large, are not statistically significant. This limits our ability to draw meaningful conclusions (in this case) about how the importance of different factors affecting health care providers’ decisions to work in rural small towns varies across domains.
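To see why a difference of about 10 percentage points can fail to reach significance, the sketch below applies a simple two-sided z-test to the Upper Midwest and Southern Great Plains estimates for “Quality of the schools” from Table 3. This is an assumption-laden approximation, not the test the authors used: it treats the two regional estimates as independent and normally distributed, and the function name is hypothetical.

```python
import math
from statistics import NormalDist


def difference_z_test(est_a, se_a, est_b, se_b):
    """Two-sided z-test for est_a - est_b, treating the estimates as independent."""
    diff = est_a - est_b
    # Standard error of a difference of two independent estimates.
    se_diff = math.sqrt(se_a ** 2 + se_b ** 2)
    z = diff / se_diff
    p_value = 2.0 * (1.0 - NormalDist().cdf(abs(z)))
    return diff, se_diff, z, p_value


# "Quality of schools" (row E of Table 3): Upper Midwest vs. Southern Great Plains.
diff, se_diff, z, p_value = difference_z_test(0.739, 0.041, 0.636, 0.094)
print(f"difference={diff:.3f}, se={se_diff:.3f}, z={z:.2f}, p={p_value:.2f}")
```

Under these assumptions the standard error of the difference is about as large as the difference itself, so the z statistic is close to 1, well short of the 1.96 needed at the 5% level.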

A larger sample size would enable us to distinguish more of the differences across domains. As a hypothetical example, Table 4 shows the same estimated means as in Table 3, but estimates the standard errors under the assumption that 25 percent of the current nonrespondents decide to respond as a result of the gift payments that we propose to implement.³ The standard errors would be much smaller in most cases, resulting in many more statistically significant differences across domains. For example, for the factor “Quality of the schools”, the differences across regions in the share of providers citing this as an important factor would be statistically significant in this scenario, though these differences are not statistically significant with the current sample.

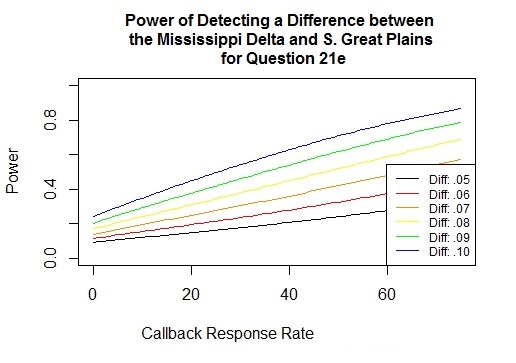

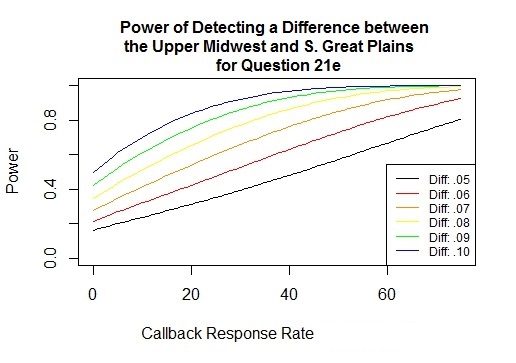

The power curves shown in Figures 1 and 2 show how the statistical power to distinguish differences between regions in the importance of the quality of schools would vary depending on the response rate to a follow-up effort to increase sample response, and on the mean difference that we are seeking to detect. These curves indicate that a 25 percent response rate among current nonrespondents would substantially increase the power to detect a mean difference of 0.10 in the response to this question between the Upper Midwest and Southern Great Plains regions (similar to the difference observed in the current sample) (Figure 2), but would not greatly increase the power to detect a difference less than 0.05 between the Lower Mississippi Delta and Southern Great Plains regions (the magnitude of the difference observed in the current sample was about 0.04) (Figure 1). In general, the power curves for this and other questions indicate that in many cases, substantial increases in the statistical power to detect mean differences of 0.10 between regions or provider types would be possible with a 25 percent response of the current nonrespondents, while the power to detect differences of 0.05 or less would remain low in most cases.
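The relationship between standard errors and power can be sketched with a normal approximation. The code below is not the method behind Figures 1 and 2, which the proposal does not describe; it assumes a two-sided 5% z-test on the difference of two independent regional estimates and plugs in the “Quality of schools” standard errors from Table 3 (current sample) and Table 4 (25% follow-up scenario). The function name and the choice of test are assumptions made for illustration.

```python
import math
from statistics import NormalDist

_STD_NORMAL = NormalDist()


def power_two_sided(true_diff, se_a, se_b, alpha=0.05):
    """Approximate power of a two-sided z-test to detect `true_diff`."""
    se_diff = math.sqrt(se_a ** 2 + se_b ** 2)
    z_crit = _STD_NORMAL.inv_cdf(1.0 - alpha / 2.0)
    shift = true_diff / se_diff
    # Probability that the test statistic falls in either rejection tail.
    return _STD_NORMAL.cdf(shift - z_crit) + _STD_NORMAL.cdf(-shift - z_crit)


# Row E ("Quality of schools"), Upper Midwest vs. Southern Great Plains.
current = power_two_sided(0.10, se_a=0.041, se_b=0.094)    # Table 3 standard errors
follow_up = power_two_sided(0.10, se_a=0.024, se_b=0.038)  # Table 4 standard errors
print(f"power at current sample: {current:.2f}")
print(f"power in 25% scenario:  {follow_up:.2f}")
```

With these inputs the approximate power to detect a true difference of 0.10 rises from under 0.2 to roughly 0.6, which is consistent in direction with the substantial increase the power curves are said to show.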

Of course, the mean responses might not be the same in the nonrespondent group as in the initial respondent group. If that is the case, all of our results would be subject to a nonresponse bias of indeterminate magnitude. Another very important benefit of conducting the proposed follow-up effort would be that it would enable us to investigate whether nonresponse bias is a concern, and to correct for it if it is. By comparing differences in mean responses between the initial respondents and the later respondents (and controlling for other factors such as region and provider type), we would be able to test whether there are statistically significant differences between these groups. Failure to find statistically and quantitatively significant differences between the early and late responders would be evidence against a concern about nonresponse bias. If significant differences between these groups are found in their responses to the survey, we will investigate whether the differences are associated with differences in respondent characteristics, such as the respondent’s region, provider type, and the demographic and economic indicators collected by the survey. To the extent such factors explain differences between the early and late responders, we can use those measured differences to correct for non-response bias by incorporating a propensity score into the estimator for mean responses, which accounts for the different probabilities of different providers to respond to the survey. The propensity score estimates will be based on the observed factors found to be associated with both the providers’ propensity to respond to the survey and with the survey responses.
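The idea of weighting respondents by the inverse of their response propensity can be illustrated with a weighting-class adjustment, a simple stand-in for the model-based propensity score described above. In this sketch the classes are the three regions, the propensity in each class is its response rate from Table 1, and the shares are the regional “Quality of schools” estimates from Table 3, reused purely as example inputs (they are already design-weighted, so treating them as raw respondent means is a simplification). The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Cell:
    """One weighting class (here a region); all fields are illustrative."""
    eligible: int      # eligible sampled providers in the class
    respondents: int   # completed surveys in the class
    share: float       # respondent share rating the factor as important

    @property
    def propensity(self) -> float:
        """Estimated response propensity: the class response rate."""
        return self.respondents / self.eligible


def unadjusted_mean(cells):
    """Respondent mean with every respondent weighted equally."""
    total = sum(c.respondents for c in cells)
    return sum(c.respondents * c.share for c in cells) / total


def propensity_adjusted_mean(cells):
    """Respondent mean with each respondent weighted by 1 / propensity."""
    weights = [c.respondents / c.propensity for c in cells]
    return sum(w * c.share for w, c in zip(weights, cells)) / sum(weights)


# Counts from Table 1; shares from row E of Table 3, reused only as example inputs.
cells = [
    Cell(eligible=284, respondents=37, share=0.594),   # Mississippi Delta
    Cell(eligible=412, respondents=84, share=0.636),   # Southern Great Plains
    Cell(eligible=874, respondents=245, share=0.739),  # Upper Midwest
]
print(f"unadjusted: {unadjusted_mean(cells):.3f}")
print(f"adjusted:   {propensity_adjusted_mean(cells):.3f}")
```

Because the region with the highest response rate also has the highest share in this example, the adjustment pulls the overall mean down. An actual propensity model would use additional covariates, including those found to differ between early and late responders.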

Table 3. Importance of factors affecting decision to work in this town (share of responses indicating important or very important, jackknife standard errors in parentheses)

| Question Q21_ | Factor | All responses | Rank | Region: LMD | Region: SGP | Region: UMW | Provider type: Dentist | Provider type: NP/PA/MW | Provider type: Physician |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| A | Familiarity with the area | 0.564 (0.036) | 12 | 0.510 (0.111) | 0.577 (0.084) | 0.569 (0.039) | 0.472* (0.061) | 0.647* (0.060) | 0.560* (0.060) |
| B | Opportunities for spouse/partner | 0.440 (0.037) | 17 | 0.353 (0.082) | 0.426 (0.079) | 0.469 (0.046) | 0.383 (0.059) | 0.521 (0.059) | 0.416 (0.063) |
| C | Relatives or friends nearby | 0.592 (0.029) | 10 | 0.660 (0.104) | 0.553 (0.063) | 0.598 (0.029) | 0.599 (0.059) | 0.665 (0.049) | 0.536 (0.060) |
| D | Good place to raise a family | 0.784 (0.037) | 2 | 0.829 (0.047) | 0.742 (0.092) | 0.798 (0.040) | 0.858*** (0.035) | 0.658*** (0.061) | 0.829*** (0.049) |
| E | Quality of schools | 0.687 (0.039) | 7 | 0.594 (0.084) | 0.636 (0.094) | 0.739 (0.041) | 0.759** (0.057) | 0.559** (0.063) | 0.734** (0.055) |
| F | Size of town | 0.583 (0.040) | 11 | 0.499 (0.101) | 0.604 (0.096) | 0.591 (0.045) | 0.545 (0.062) | 0.551 (0.062) | 0.628 (0.058) |
| G | Recreational opportunities | 0.476 (0.034) | 14 | 0.258*** (0.091) | 0.339*** (0.052) | 0.608*** (0.045) | 0.518 (0.055) | 0.505 (0.059) | 0.429 (0.058) |
| H | Natural amenities | 0.525 (0.038) | 13 | 0.326** (0.095) | 0.458** (0.066) | 0.612** (0.049) | 0.474 (0.060) | 0.552 (0.058) | 0.536 (0.064) |
| I | Cultural amenities | 0.200 (0.027) | 21 | 0.111** (0.047) | 0.123** (0.042) | 0.267** (0.038) | 0.236 (0.047) | 0.202 (0.051) | 0.177 (0.040) |
| J | Social opportunities | 0.454 (0.036) | 16 | 0.543 (0.098) | 0.410 (0.082) | 0.459 (0.040) | 0.436 (0.055) | 0.435 (0.054) | 0.478 (0.057) |
| K | Friendliness of the people | 0.787 (0.036) | 1 | 0.798 (0.081) | 0.776 (0.098) | 0.792 (0.029) | 0.770 (0.059) | 0.827 (0.056) | 0.769 (0.049) |
| L | Availability of goods/services | 0.466 (0.032) | 15 | 0.560 (0.096) | 0.462 (0.069) | 0.446 (0.037) | 0.469 (0.057) | 0.533 (0.058) | 0.417 (0.060) |
| M | Low taxes | 0.224 (0.028) | 20 | 0.232 (0.082) | 0.313 (0.076) | 0.169 (0.022) | 0.191 (0.047) | 0.264 (0.046) | 0.214 (0.050) |
| N | Low cost of living | 0.384 (0.027) | 18 | 0.467*** (0.082) | 0.529*** (0.060) | 0.279*** (0.031) | 0.344 (0.055) | 0.449 (0.055) | 0.362 (0.054) |
| O | Need for providers here | 0.731 (0.037) | 3 | 0.857 (0.068) | 0.700 (0.094) | 0.718 (0.038) | 0.627 (0.062) | 0.749 (0.054) | 0.781 (0.051) |
| P | Recruitment efforts by town | 0.185 (0.026) | 22 | 0.373* (0.090) | 0.138* (0.042) | 0.168* (0.033) | 0.023*** (0.011) | 0.098*** (0.025) | 0.344*** (0.055) |
| Q | Placement through program | 0.086 (0.018) | 23 | 0.171 (0.059) | 0.081 (0.032) | 0.069 (0.023) | 0.024*** (0.012) | 0.109*** (0.032) | 0.108*** (0.031) |
| R | Quality of medical facilities | 0.623 (0.036) | 8 | 0.668** (0.074) | 0.455** (0.078) | 0.711** (0.032) | 0.406*** (0.056) | 0.627*** (0.059) | 0.750*** (0.053) |
| S | Quality of medical community | 0.698 (0.029) | 6 | 0.717* (0.077) | 0.597* (0.064) | 0.753* (0.029) | 0.478*** (0.056) | 0.694*** (0.062) | 0.833*** (0.038) |
| T | Opportunities for professional growth | 0.730 (0.034) | 4 | 0.810 (0.063) | 0.724 (0.088) | 0.715 (0.033) | 0.708 (0.052) | 0.767 (0.057) | 0.718 (0.049) |
| U | Opportunity to own a practice | 0.381 (0.032) | 19 | 0.399 (0.112) | 0.366 (0.068) | 0.385 (0.036) | 0.859*** (0.035) | 0.093*** (0.029) | 0.297*** (0.054) |
| V | Good financial package | 0.616 (0.035) | 9 | 0.672 (0.088) | 0.574 (0.067) | 0.628 (0.047) | 0.392*** (0.058) | 0.772*** (0.045) | 0.641*** (0.059) |
| W | Reasonable workload | 0.704 (0.029) | 5 | 0.818 (0.057) | 0.682 (0.062) | 0.689 (0.038) | 0.632** (0.060) | 0.806** (0.042) | 0.675** (0.056) |

***, **, * indicate that differences in means between these domains are statistically significant at 1%, 5%, and 10% level, respectively.

Table 4. Importance of factors affecting decision to work in this town (same share of responses indicating important or very important as in Table 3, estimated standard errors in parentheses if 25% of current nonrespondents respond to the survey)

| Question Q21_ | Factor | All responses | Rank | Region: LMD | Region: SGP | Region: UMW | Provider type: Dentist | Provider type: NP/PA/MW | Provider type: Physician |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| A | Familiarity with the area | 0.564 (0.017) | 12 | 0.510 (0.058) | 0.577 (0.033) | 0.569 (0.021) | 0.472** (0.053) | 0.647** (0.045) | 0.560** (0.037) |
| B | Opportunities for spouse/partner | 0.440 (0.017) | 17 | 0.353** (0.048) | 0.426** (0.037) | 0.469** (0.020) | 0.383* (0.038) | 0.521* (0.047) | 0.416* (0.031) |
| C | Relatives or friends nearby | 0.592 (0.019) | 10 | 0.660 (0.061) | 0.553 (0.038) | 0.598 (0.021) | 0.599* (0.055) | 0.665* (0.045) | 0.536* (0.037) |
| D | Good place to raise a family | 0.784 (0.021) | 2 | 0.829 (0.044) | 0.742 (0.039) | 0.798 (0.032) | 0.858*** (0.029) | 0.658*** (0.052) | 0.829*** (0.046) |
| E | Quality of schools | 0.687 (0.020) | 7 | 0.594** (0.064) | 0.636** (0.038) | 0.739** (0.024) | 0.759*** (0.056) | 0.559*** (0.048) | 0.734*** (0.035) |
| F | Size of town | 0.583 (0.018) | 11 | 0.499 (0.057) | 0.604 (0.036) | 0.591 (0.021) | 0.545 (0.053) | 0.551 (0.045) | 0.628 (0.036) |
| G | Recreational opportunities | 0.476 (0.016) | 14 | 0.258*** (0.042) | 0.339*** (0.030) | 0.608*** (0.022) | 0.518 (0.047) | 0.505 (0.043) | 0.429 (0.028) |
| H | Natural amenities | 0.525 (0.018) | 13 | 0.326*** (0.043) | 0.458*** (0.035) | 0.612*** (0.024) | 0.474 (0.050) | 0.552 (0.044) | 0.536 (0.030) |
| I | Cultural amenities | 0.200 (0.011) | 21 | 0.111*** (0.034) | 0.123*** (0.015) | 0.267*** (0.016) | 0.236 (0.025) | 0.202 (0.032) | 0.177 (0.024) |
| J | Social opportunities | 0.454 (0.016) | 16 | 0.543 (0.059) | 0.410 (0.030) | 0.459 (0.019) | 0.436 (0.050) | 0.435 (0.043) | 0.478 (0.027) |
| K | Friendliness of the people | 0.787 (0.022) | 1 | 0.798 (0.073) | 0.776 (0.044) | 0.792 (0.025) | 0.770 (0.048) | 0.827 (0.047) | 0.769 (0.038) |
| L | Availability of goods/services | 0.466 (0.018) | 15 | 0.560 (0.062) | 0.462 (0.038) | 0.446 (0.018) | 0.469 (0.053) | 0.533 (0.049) | 0.417 (0.028) |
| M | Low taxes | 0.224 (0.013) | 20 | 0.232*** (0.047) | 0.313*** (0.027) | 0.169*** (0.012) | 0.191 (0.044) | 0.264 (0.037) | 0.214 (0.027) |
| N | Low cost of living | 0.384 (0.016) | 18 | 0.467*** (0.057) | 0.529*** (0.036) | 0.279*** (0.015) | 0.344 (0.050) | 0.449 (0.047) | 0.362 (0.030) |
| O | Need for providers here | 0.731 (0.021) | 3 | 0.857 (0.072) | 0.700 (0.042) | 0.718 (0.024) | 0.627* (0.060) | 0.749* (0.045) | 0.781* (0.040) |
| P | Recruitment efforts by town | 0.185 (0.010) | 22 | 0.373*** (0.041) | 0.138*** (0.016) | 0.168*** (0.012) | 0.023*** (0.007) | 0.098*** (0.020) | 0.344*** (0.023) |
| Q | Placement through program | 0.086 (0.009) | 23 | 0.171* (0.042) | 0.081* (0.021) | 0.069* (0.007) | 0.024*** (0.009) | 0.109*** (0.022) | 0.108*** (0.021) |
| R | Quality of medical facilities | 0.623 (0.019) | 8 | 0.668*** (0.065) | 0.455*** (0.032) | 0.711*** (0.024) | 0.406*** (0.041) | 0.627*** (0.043) | 0.750*** (0.034) |
| S | Quality of medical community | 0.698 (0.021) | 6 | 0.717*** (0.066) | 0.597*** (0.041) | 0.753*** (0.025) | 0.478*** (0.043) | 0.694*** (0.051) | 0.833*** (0.037) |
| T | Opportunities for professional growth | 0.730 (0.021) | 4 | 0.810 (0.062) | 0.724 (0.039) | 0.715 (0.024) | 0.708 (0.048) | 0.767 (0.045) | 0.718 (0.032) |
| U | Opportunity to own a practice | 0.381 (0.015) | 19 | 0.399 (0.053) | 0.366 (0.029) | 0.385 (0.017) | 0.859*** (0.029) | 0.093*** (0.027) | 0.297*** (0.023) |
| V | Good financial package | 0.616 (0.021) | 9 | 0.672 (0.076) | 0.574 (0.042) | 0.628 (0.023) | 0.392*** (0.031) | 0.772*** (0.027) | 0.641*** (0.038) |
| W | Reasonable workload | 0.704 (0.022) | 5 | 0.818 (0.056) | 0.682 (0.060) | 0.689 (0.024) | 0.632** (0.054) | 0.806** (0.036) | 0.675** (0.050) |

***, **, * indicate that differences in means between these domains are statistically significant at 1%, 5%, and 10% level, respectively.

Figure 1. Power of detecting a difference between the Mississippi Delta and Southern Great Plains in the mean response to Question 21e

Figure 2. Power of detecting a difference between the Upper Midwest and Southern Great Plains in the mean response to Question 21e

Proposed Non-Response Follow-up

The development of an effective non-response follow-up protocol begins with an examination of the protocol already implemented. The sample frame of providers used for Phase 2 data collection included names and workplace addresses but no email addresses. The project survey materials consisted of an attractive printed survey accompanied by a coordinated project brochure, cover letter, and return envelope all in a simple, clean, project folder. The survey folders were sent via U.S. mail to sampled providers at their workplace. The survey mailing was followed by a telephone call to the provider’s workplace. Although these calls did not provide access to the providers themselves, they served several purposes. First, project staff were able to verify provider names and addresses. Second, email addresses for some providers were obtained so that personalized survey links could be emailed directly to those providers. Third, the call served as a reminder to complete the survey, even if only through the receptionist or assistant. The final step in the data collection protocol was a second survey mailing to non-respondents.

Much methodological research has been done to identify ways of maximizing survey response rates both for the general population and specifically for physicians. Traditionally health care providers have been a particularly difficult sample group to survey. Table 5 identifies data collection protocols recommended for physician surveys according to published literature. This literature review suggests that: i) an incentive payment of at least $20 is necessary to stimulate a substantial increase in response rate, and increasing the payment above this level (to as much as $50) is likely to increase the response rate further; ii) an unconditional gift is more effective in stimulating response than an offer of a payment conditional upon completion of the survey; iii) a cash gift is more effective than a gift card or payment in kind; iv) delivery of the gift and survey by courier or Priority Mail is more effective than delivery by First Class mail; and v) inclusion of a personalized letter and a stamped return envelope for the mail survey help to increase response rate. We have designed our proposed follow-up activity based on these recommended best practices and upon consultations with two experts in the Department of Health and Human Services – Doris Lefkowitz, who directs the Medical Expenditure Panel Survey for the Agency for Healthcare Research and Quality, and David Woodwell, who formerly led the National Ambulatory Medical Care Survey for the National Center for Health Statistics, and who was a member of the Technical Advisory Committee for this project. Both of these individuals indicated that an incentive payment of $50 can increase response rates, and Ms. Lefkowitz said that it is not clear that paying more than $50 does much to further increase response rates.

Table 5. Recommended Practices for Physician Surveys

| Recommended Practice | Description | Citation |
| --- | --- | --- |
| Pre-paid cash incentives yield the highest response | “Physician participation in surveys has been shown to be effectively increased through the use of incentives, especially when the incentive is monetary and offered in advance of completing a survey (prepaid) versus being offered contingent on completion of a survey (promised).” [4 citations] This article describes a 2009 mail survey experiment with physician survey non-respondents, using four types of $25 incentives: pre-paid cash, pre-paid check, promised check, and promised check requiring a Social Security Number. Response rate of the pre-paid cash group was 14 percentage points higher than the pre-paid check group (34% vs 20%). Response rates for the other two groups were 10% and 8%. This non-response follow-up effort raised overall project response from 44% to 54%. | James, K.M., J.Y. Ziegenfuss, J.C. Tilburt, A.M. Harris, T.J. Beebe. 2011. “Getting Physicians to Respond: The Impact of Incentive Type and Timing on Physician Survey Response Rates.” Health Services Research 46(1), Part 1:232-242. |
| Pre-paid $50 check yielded higher response than pre-paid $20 check | “A $50 check incentive was much more effective than a $20 check incentive at increasing responses to a mailed survey of moderate length. As physicians become increasingly burdened with surveys, larger incentives may be necessary to engage potential respondents and thus maximize response rates.” This article describes a 2005 mail survey with web option in which $20 and $50 pre-paid check incentives were enclosed with the initial survey mailing (sent priority mail, using stamped return envelopes) as well as with a third survey mailing. Final response rate for the $50 group was 68% and response rate for the $20 group was 52%. | Keating, N.L., A.M. Zaslavsky, J. Goldstein, D.W. West, J.Z. Ayanian. 2008. “Randomized Trial of $20 Versus $50 Incentives to Increase Physician Survey Response Rates.” Medical Care 46(5):878-881. |
| Stamped return envelopes | “There is evidence that using a stamped envelope can improve response rates by a few percentage points over sending a business reply envelope.” [3 citations] | Dillman, D.A., J.D. Smyth, L.M. Christian (2014). Internet, Phone, Mail, and Mixed-Mode Surveys: The Tailored Design Method. New Jersey: Wiley and Sons. p. 372. |
| Special mail delivery methods (Fed-Ex, UPS, Priority Mail, Certified Mail) | “We have observed many other tests of priority mail by courier in which an increment of additional response is attributable to use of courier or two-day priority U.S. Postal Service mail. For example, Brick et al. (2012) found that sending a final reminder via priority mail resulted in about a 5 percentage point advantage over sending it via first-class mail.” | Dillman, D.A., J.D. Smyth, L.M. Christian (2014). Internet, Phone, Mail, and Mixed-Mode Surveys: The Tailored Design Method. New Jersey: Wiley and Sons. p. 381. |
| Stamps; special mail delivery; prepaid incentives; mid-range incentives; short mail surveys; multi-mode surveys; postcard/phone reminders | This article reviewed 136 publications from 1987 to 2007. Conclusions identified the following best practices in surveying physicians: Use a personalized pre-notification letter, use real postage stamps, use special mail delivery methods, use letters of endorsement when possible. Use prepaid incentives large enough to be viewed as a reasonable token of appreciation (more than $2 or $5) but not large enough to be considered payment (less than $100 or $200). Keep the survey short, use mail surveys as the primary part of a multi-mode administration method, use postcard or telephone reminders. | Flanigan, T.S., E. McFarlane, S. Cook. 2008. “Conducting Survey Research among Physicians and other Medical Professionals – A Review of Current Literature.” Proceedings of the Survey Research Methods Section, American Association for Public Opinion Research, pp. 4136-4147. |

The current project faces two specific constraints. First, project funds are available for a non-response follow-up effort, but a significant part of those funds will expire as of September 30, 2015, so the process must proceed quickly. Second, this project is being conducted in small communities. Many of the non-respondents are working in the same clinics as providers who did respond earlier this year, and any non-response efforts are likely to be apparent to both groups. Hence, for considerations of fairness, any payments provided at this point should be provided to the existing respondents as well as non-respondents.

Based on these constraints and the recommendations for physician surveys in published literature, we propose the following non-response follow-up effort:

One final contact will be made to non-respondents of all three provider types in all three regions.

As much as time allows, telephone calls will be made to the clinics of those providers to verify that the providers are still at that address. This could slightly reduce the number of non-respondents who will receive the survey and gift.

The survey will be sent with a personalized letter and a $40 unconditional incentive payment to non-respondents via Priority U.S. Mail, using a flat rate envelope that is tracked and insured.⁴

Return envelopes will use postage stamps rather than business reply envelopes.

The 366 respondents who completed the survey earlier this year will be sent a “thank you” letter with a $40 cash gift enclosed.

There will be no changes to the mail/web survey questions. The cover letter to non-respondents has been revised to address the additional contact attempt with incentive payment enclosed. A thank you letter has also been developed to accompany the gifts sent to earlier respondents.

Based on the findings of James et al. (2011) (see Table 5), we estimate that 25% of the 1186 non-respondents contacted in this manner may complete the survey. This would provide another 296 completed surveys, bringing the total to 662 (approximately 42% of the eligible sample of 1570). While still well below the OMB goal of 80%, this would increase the power for overall analysis and contribute valuable information for the analysis of non-response bias.
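The projection above is simple arithmetic, sketched below so that alternative follow-up response rates can be tried. Only the 25% rate comes from this proposal; the 15% and 35% rates are hypothetical values added for comparison, and rounding the new completes down is an assumption chosen because it reproduces the 296 figure.

```python
import math

NONRESPONDENTS = 1186        # "No Response" total in Tables 1 and 2
COMPLETED_TO_DATE = 366      # completed surveys so far
ELIGIBLE_SAMPLE = 1570       # eligible sample across all 150 communities


def project_follow_up(assumed_rate: float):
    """Return (new completes, total completes, overall response rate in %)."""
    # Round down so that a fractional respondent is not counted.
    new_completes = math.floor(assumed_rate * NONRESPONDENTS)
    total = COMPLETED_TO_DATE + new_completes
    return new_completes, total, 100.0 * total / ELIGIBLE_SAMPLE


# 0.25 is the proposal's assumption; 0.15 and 0.35 are hypothetical alternatives.
for rate in (0.15, 0.25, 0.35):
    new, total, overall = project_follow_up(rate)
    print(f"{rate:.0%} follow-up response: +{new} completes, {total} total, {overall:.1f}% overall")
```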

Revised Burden Estimate

The estimated burden to date on sampled providers is shown in Table 6, which includes the total number of primary health care providers contacted for the mail/web survey, the outcomes, and the burden in minutes. The estimate allows 15 minutes for each sampled provider to read and review the survey materials and decide whether or not to participate and an additional 15 minutes to complete the survey, either online or on paper. The resulting total burden to date for the Health Care Provider Survey is 546.75 hours.

Table 6. Health Care Provider Survey Outcome Totals and Burden to Date

| Outcomes | Number | Average Minutes per Case | Total Minutes | Total Hours |
| --- | --- | --- | --- | --- |
| Not Eligible | 251 | 15 | 3,765 | 62.75 |
| Refused | 18 | 15 | 270 | 4.5 |
| No Response | 1186 | 15 | 17,790 | 296.5 |
| Completed Surveys | 366 | 30¹ | 10,980 | 183.0 |
| TOTAL | 1821 | | 32,805 | 546.75 |

1 The 30-minute time allocation for each Completed Survey includes 15 minutes for reviewing the request and reading enclosed materials and 15 minutes for completing the survey.

The estimated burden on sampled providers after incorporating the non-response follow-up is shown in Table 7. This estimate adds 3 minutes for each of the 366 providers in the Completed Surveys (Spring) group to receive the “thank you” letter with the enclosed gift. Because the non-respondents have already been contacted twice in the past, the burden estimate includes an additional 5 minutes to open the mailing and review the materials, both for those who do not respond and for those who do complete the survey. Completing the survey is still estimated at 15 minutes. The resulting total burden to complete the Health Care Provider Survey, including the proposed non-response follow-up, is 735.38 hours. This is about 60 hours more than the total burden estimated in the ICR for the health care provider surveys (675 hours). However, the ICR also estimated 547.5 hours of burden for the Phase 3 component. Due to timeline constraints, Phase 3 activities will be much more limited than originally planned, so the overall project burden will be less than the ICR estimate. Revised Phase 3 activities will be proposed in a subsequent document.

Table 7. Health Care Provider Survey Outcome Totals and Burden with Non-Response Follow-up

| Outcomes | Number | Average Minutes per Case | Total Minutes | Total Hours |
| --- | --- | --- | --- | --- |
| Not Eligible | 251 | 15 | 3,765 | 62.75 |
| Refused (Spring) | 18 | 15 | 270 | 4.5 |
| Refused (Fall) | 10 | 5 | 50 | 0.8 |
| No Response (Spring & Fall) | 880 | 20 | 17,600 | 293.3 |
| Completed Surveys (Spring) | 366 | 33 | 12,078 | 201.3 |
| Completed Surveys (Fall) | 296 | 35 | 10,360 | 172.7 |
| TOTAL | 1821 | | 44,123 | 735.38 |
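The totals in Table 7 follow from multiplying each outcome count by its average minutes per case. The sketch below recomputes them and compares the result with the 675-hour ICR estimate quoted above; the row list and function name are illustrative and not part of any project system.

```python
# (outcome, number of providers, average minutes per case), from Table 7.
TABLE_7_ROWS = [
    ("Not Eligible", 251, 15),
    ("Refused (Spring)", 18, 15),
    ("Refused (Fall)", 10, 5),
    ("No Response (Spring & Fall)", 880, 20),
    ("Completed Surveys (Spring)", 366, 33),
    ("Completed Surveys (Fall)", 296, 35),
]
ICR_PROVIDER_SURVEY_HOURS = 675  # provider-survey burden estimated in the ICR


def total_burden(rows):
    """Return (total providers, total minutes, total hours) for burden rows."""
    providers = sum(number for _, number, _ in rows)
    minutes = sum(number * per_case for _, number, per_case in rows)
    return providers, minutes, minutes / 60.0


providers, minutes, hours = total_burden(TABLE_7_ROWS)
print(f"{providers} providers, {minutes:,} minutes, {hours:.2f} hours")
print(f"excess over ICR estimate: {hours - ICR_PROVIDER_SURVEY_HOURS:.2f} hours")
```

The recomputed totals match the TOTAL row of Table 7, and the excess over the ICR estimate comes to roughly 60 hours.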

1 Among the best practices recommended by OMB that were implemented in the health care provider survey were to send a personally-addressed advance letter and project brochure to the respondents, which provided clear explanations of the who, what, when, why and how of the survey; indicated how long the survey was expected to take (about 15 minutes); clarified that participation was voluntary; included contact numbers for respondents to verify the legitimacy of the survey or ask questions; indicated that the information collected was to be used for statistical purposes only and provided a confidentiality pledge under CIPSEA. Multiple modes for completing the survey (mail and web) were offered to respondents, and follow-up calls and emails (where possible) were attempted. It is difficult to reach health care providers by telephone, as they usually have administrative staff serving as gate-keepers, and it also proved difficult to obtain their email addresses for the same reason. The survey questionnaire was designed to be as simple and user-friendly as possible, with use of cognitive interviews, pretesting, and pilot phase testing to ensure that the questions were understandable and that the time required was as short as possible and consistent with our estimates.

2 The estimated shares in Table 3 are weighted by the inverse probability of each respondent being sampled, so these estimates are representative of the target population of the study. The Jackknife method was used to compute standard errors, accounting for the primary sampling unit (towns), sample weights and stratification.

3 This assumed 25% response rate among current nonrespondents is based on findings from the literature cited below, and is assumed to occur for each provider type within each region.

4 Although the literature reported in Table 5 suggests that an even higher response rate might be achievable with a larger payment of as much as $50, a $40 payment to current and potential respondents is the most that we can afford with available project funds.

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| Author | Larson, Janice M |


| File Created | 2021-01-24 |
