EDIT Supporting Statement A


Pre-testing of Evaluation Surveys


OMB: 0970-0355

Assessing Early Childhood Teachers’ Use of Child Progress Monitoring to Individualize Teaching Practices

OMB Generic Information Collection

Request for Pre-testing

0970-0355

OMB

Supporting Statement

Part A

September 2015

Submitted By:

Office of Planning, Research and Evaluation

Administration for Children and Families

U.S. Department of Health and Human Services

7th Floor, West Aerospace Building

370 L’Enfant Promenade, SW

Washington, D.C. 20447

Laura Hoard

CONTENTS

OMB SUPPORTING STATEMENT A

A1. Necessity for the data collection

A2. Purpose of survey and data collection procedures

A3. Improved information technology to reduce burden

A4. Efforts to identify duplication

A5. Involvement of small organizations

A6. Consequences of less frequent data collection

A7. Special circumstances

A8. Federal Register Notice and consultation

A9. Incentives for respondents

A10. Privacy of respondents

A11. Sensitive questions

A12. Estimation of information collection burden

A13. Cost burden to respondents or record keepers

A14. Estimate of cost to the federal government

A15. Change in burden

A16. Plan and time schedule for information collection, tabulation, and publication

A17. Reasons not to display OMB expiration date

A18. Exceptions to certification for Paperwork Reduction Act Submissions

References

ATTACHMENTS

OMB SUPPORTING STATEMENT A

A1. Necessity for the data collection

The Administration for Children and Families (ACF) at the U.S. Department of Health and Human Services (HHS) seeks approval for the pretest of the Examining Data Informing Teaching (EDIT) measure, which is part of the “Assessing Early Childhood Teachers’ Use of Child Progress Monitoring to Individualize Teaching Practices” project. The EDIT will provide information about how teachers use ongoing assessments and individualize instruction. The entire scope of the proposed data collection involves nine classrooms participating in EDIT observations. The pretest will be conducted by Mathematica Policy Research and its partners, Judith Carta, Ph.D. (University of Kansas) and Barbara Wasik, Ph.D. (Temple University).

Study background

In 2012, the Office of Planning, Research and Evaluation (OPRE) at ACF engaged Mathematica Policy Research and its partners to undertake a project aimed at identifying key quality indicators of preschool teachers’ use of ongoing assessment to individualize instruction and creating a measure to examine this process. This measure is the EDIT. Initial development of the EDIT measure took place in 2014 through pretesting with nine respondents from preschool classrooms (primarily Head Start programs). The goal of this information collection request is to continue developing and refining the EDIT measure using a combination of new items and items from the initial pretest.

Research demonstrates that teachers who are supported in using ongoing assessment to individualize their instruction design stronger, more effective instructional programs and have students who achieve better outcomes than teachers who do not assess progress (Connor et al. 2009; Fuchs et al. 1984). The use of ongoing assessment data—often merged with other professional development supports, such as mentoring—is also linked to growth in literacy outcomes in preschool through first grade (Ball and Gettinger 2009; Landry et al. 2009; Wasik et al. 2009).

Recent policies have brought about a rising interest in how teachers use ongoing assessment to adjust their teaching to best meet each child’s needs. In recent years, the Office of Head Start has elaborated on its vision for preschool child and family outcomes, strengthened its focus on monitoring program and classroom quality, and developed tools to support ongoing assessment in daily practice (Administration for Children and Families 2015; U.S. Department of Health and Human Services 2010; Atkins-Burnett et al. 2009). Currently, all Head Start Centers are required to implement some form of assessment to monitor children’s progress and collect individual child information three times a year (Administration for Children and Families 2015). In addition, individualized teaching is a requirement in the Head Start Performance Standards (Administration for Children and Families 2015).

Although Head Start emphasizes the importance of using ongoing assessment data to guide instruction, very little information is available on how early education teachers actually collect and use these assessment data to tailor their instruction (Akers et al. 2014; Yazejian and Bryant 2013; Zweig et al. 2015). In pursuit of better educational outcomes, policymakers, practitioners, and researchers continue to stress the need for research in this area (Bambrick-Santoyo 2010; Buysse et al. 2013; Fuchs and Fuchs 2006; Hamilton et al. 2009; Marsh et al. 2006). To determine whether teachers are implementing ongoing assessments as intended and using the resulting data to inform instruction tailored to children’s individual needs and skills, researchers and policymakers need a measure to assess teacher implementation and use of ongoing assessment. ACF is developing the EDIT measure to meet this need.

Legal or administrative requirements that necessitate the collection

There are no requirements that necessitate the collection. ACF is undertaking the collection at the discretion of the agency.

A2. Purpose of survey and data collection procedures

Overview of purpose and approach

This data collection will be used for the purposes of informing OPRE’s and ACF’s development of the EDIT measure. The EDIT measure is designed to examine how a teacher conducts ongoing assessments and uses them to guide instruction. Pending OMB approval, the EDIT team plans to conduct data collection between October 2015 and March 2016 in nine classrooms to further pre-test the EDIT instrument and teacher interview. The primary goals of this pre-test are to implement and refine the newest EDIT rubrics and ratings, and continue to assess the overall feasibility of the EDIT protocols, procedures, and materials.

Research questions

The purpose of this collection is to further refine and develop the EDIT measure and answer the following research questions:

1. Do the EDIT items capture a range of quality in teacher implementation of recommended practices for ongoing assessment and individualizing instruction?

2. Are the EDIT procedures feasible in a variety of preschool classrooms using different ongoing assessment tools?

3. What variation is evident in how these nine teachers implement ongoing assessment and individualizing instruction and how does the EDIT account for this variation? What types of practices are used more frequently? What types of practices are seldom observed?

4. Are there patterns in teachers’ implementation of ongoing assessment and individualization of instruction, as measured by the EDIT, which vary by teacher characteristics, as measured by a self-administered questionnaire?

5. Does implementation of ongoing assessment and individualization of instruction, as measured by the EDIT, vary by structural support (such as planning time or assessment system) characteristics, as measured by a self-administered questionnaire?

Study design

The EDIT development team will collect data from preschool classrooms that will be purposively selected based on use of English in the classroom,1 use of a curriculum-embedded ongoing assessment system, use of a standard ongoing assessment tool (for example, general outcomes measures), and willingness to participate. The team will send a letter to the program director (Attachment A) before contacting the director by phone. The team will ask a point of contact at each center to help select a teacher in an English-speaking classroom within that center. Attachment B provides the recruitment script. All selected teachers and the parents of all children in the classroom will be asked to give consent for study participation.2 (Attachments C and D include memos describing the consent process to the program and the teacher, respectively. The teacher consent form is provided as Attachment E; the parent consent form is Attachment F.) We will ask each teacher to select two focal children to be video recorded for observation: one child with learning challenges in language and literacy or social-emotional development, and another child who is doing well in these areas. (Attachment G includes a brief memo describing the focal child selection and project activities for the program, and Attachment H includes this description in more detail for the teacher.) We also include illustrated instructions on use of the video equipment for teachers (Attachment I). Prior to our visit, we will call the program to confirm the visit (Attachment J contains the reminder call script).

The focus of the EDIT is on the processes the teacher uses for planning what child assessment information to collect and how to do so, collecting valid data, organizing and interpreting the data, and using the data collected to inform both overall and individualized instruction. EDIT data collection will involve the use of three primary sources—a document review, video-recorded observations, and a teacher interview. The EDIT uses a multimethod approach in gathering evidence, including checklists, ratings, and rubrics that describe how the teacher collects and uses assessment to inform instruction (Attachment K).

EDIT raters will review pre-existing assessment and instructional planning documents gathered by the lead teacher of each classroom, as well as video recordings of classroom assessments and instruction recorded by the teacher. Raters also will conduct a 55-minute individual semi-structured teacher interview to probe for additional explanations about the documents and observations, and obtain information on the teacher’s planning and implementation of instructional adaptations, modifications, and individualized teaching strategies. The protocol for this interview is provided in Attachment L. We also will ask the teacher to complete a 5-minute self-administered questionnaire about teacher and classroom characteristics (Attachment M). Further, the team will invite teachers to debrief for 20 minutes by phone after the visit about the burden and whether the directions were clear. The protocol for this debriefing is provided as Attachment N.

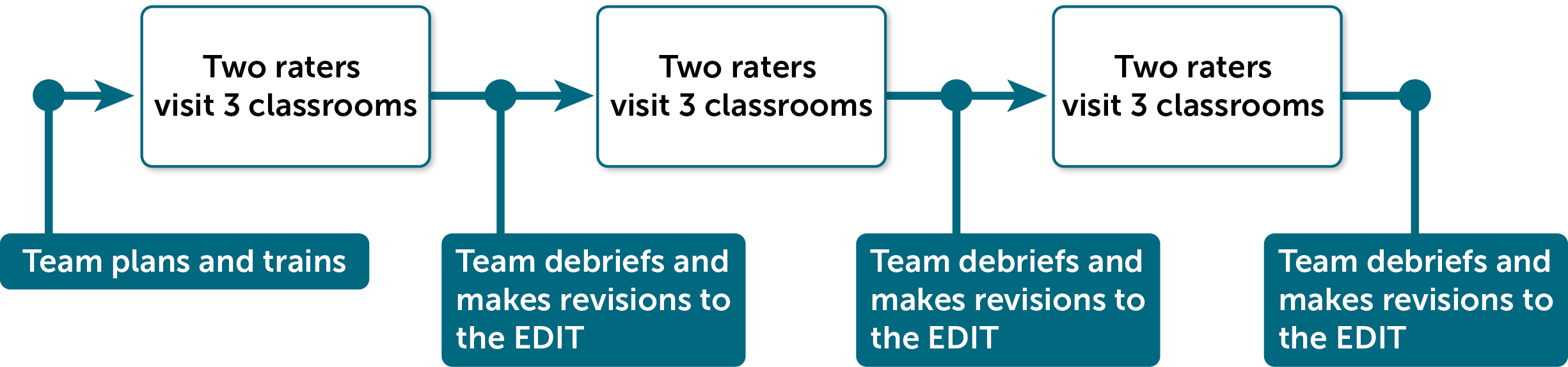

Two EDIT development team members will visit each classroom to score the EDIT; the full development team will debrief between visits to classrooms (see Figure A.1). This iterative process will allow the development team to evaluate the research questions related to the EDIT items and procedures, and to refine the measure as needed. If any changes are made to EDIT items or procedures based on the debriefing sessions, we will send updated materials to OMB as a nonsubstantive change. Collection of the self-administered questionnaire will allow ACF to begin to understand how practices in those classrooms may relate to teacher characteristics and structural supports. Once data collection is complete, the updated EDIT measure will be included in a final report for ACF, which will also propose a plan for future development and testing of the EDIT.

This information collection request (ICR) requests clearance for the following instruments and protocols to be used for pretesting of the EDIT measure in nine additional classrooms:

Examining Data Informing Teaching (EDIT) Measure DRAFT Instrument Package (an observational instrument) (Attachment K)

Examining Data Informing Teaching (EDIT) Semi-Structured Teacher Interview Protocol (Attachment L)

Examining Data Informing Teaching (EDIT) Caregiver Questionnaire (Attachment M)

Examining Data Informing Teaching (EDIT) Participant Debrief Protocol (Attachment N)

Universe of data collection efforts

The team will conduct the proposed pre-test with nine English-speaking Head Start or preschool teachers; it will include document review, video-recorded observations, and individual interviews. Teachers may be bilingual, and classrooms may include children from households with Spanish speakers; however, the majority of video-recorded instruction should be in English. Video-recorded observations will provide examples of how the teachers typically collect ongoing assessment information and provide individualized instruction for the consented children in their classrooms. We will tell teachers which children have consent and which do not so that only consented children are included in the video recordings. In addition, we will ask each teacher to participate in an individual interview (providing additional clarification and reflecting on assessment and individualization practices), complete a self-administered questionnaire, and debrief about the process by phone after the visit.

The EDIT is an observational instrument completed by the EDIT raters. Two raters will visit each site. (See Figure A.1.) Ultimately, the pretest will include visiting one classroom in each of nine different centers (a total of nine classrooms). Because the goal is to develop the best possible measure, the EDIT instrument is refined through an iterative process, and the EDIT development team will review and discuss observations in periodic team debriefings to refine the EDIT items further.

Figure A.1. Phases of data collection for the iterative EDIT pretest

The EDIT draws from three data sources generated by respondents. The teacher assembles documents for review, records videos of classroom activities, and participates in a semi-structured teacher interview. Data collected from the EDIT measure as well as a subsequent phone call with the teacher (the teacher debriefing) are intended to help determine the answers to the research questions depicted in Table A.1.

Table A.1. Research questions addressed by the study instruments

| | EDIT measure activities | | | | |
| --- | --- | --- | --- | --- | --- |
| Research questions | Gather documents | Video record classroom activities | In-person interview | Teacher questionnaire | Phone debrief |
| 1. Do the EDIT items capture a range of quality in teacher implementation of recommended practices for ongoing assessment and individualizing instruction? | X | X | X | | |
| 2. Are the EDIT procedures feasible in a variety of preschool classrooms using different ongoing assessment tools? | X | X | X | | X |
| 3. What patterns are evident in how these nine teachers implement ongoing assessment and individualizing instruction? What types of practices are used more frequently? What types of practices are seldom observed? | X | X | X | | |
| 4. Are there patterns in teachers’ implementation of ongoing assessment and individualization of instruction, as measured by the EDIT, which vary by teacher characteristics, as measured by a self-administered questionnaire? | X | X | X | X | |
| 5. Does implementation of ongoing assessment and individualization of instruction, as measured by the EDIT, vary by structural support (such as planning time or assessment system) characteristics, as measured by a self-administered questionnaire? | X | X | X | X | |

The team drew all of the questions on the EDIT teacher questionnaire from a previously approved self-administered questionnaire used in a different project, the Quality of Caregiver–Child Interactions for Infants and Toddlers (Q-CCIIT) (OMB # 0970-0392). Approximately half of the items had minor revisions; these are identified in the teacher questionnaire.

A3. Improved information technology to reduce burden

The team plans to use a multimethod approach to minimize burden on teachers, including the following: limiting the request for assessment and instruction documentation to a specified time frame and requesting only documents the teacher already has; replacing in-person observations with video recordings of typical classroom activities that can be collected at the teacher’s convenience; minimizing the frequency of video-based observations to six total observations over a two-week period; limiting the self-administered questionnaire to 5 minutes; limiting the teacher interview to 55 minutes, scheduled at the teacher’s convenience; and limiting our debrief call to 20 minutes, scheduled at the teacher’s convenience. Because of the size of the sample and the need to implement changes to the EDIT continually in the interest of producing a more refined measure, it is not feasible to develop a computer-assisted telephone interview (CATI) or web instrument.

A4. Efforts to identify duplication

This research does not duplicate any other work being done by ACF. The purpose of this clearance is to better inform and improve the quality of the EDIT measure. The literature review for this data collection indicated that no similar measure exists.

A5. Involvement of small organizations

The research to be completed under this clearance is not expected to impact small businesses.

A6. Consequences of less frequent data collection

This request involves a one-time data collection. If it were not carried out, the EDIT measure would not be able to undergo necessary refinement. This would significantly limit ACF’s ability to consider the measure for future use in studies of Head Start classrooms.

A7. Special circumstances

There are no special circumstances for the proposed data collection efforts.

A8. Federal Register Notice and consultation

Federal Register Notice and comments

In accordance with the Paperwork Reduction Act of 1995 (Pub. L. 104-13) and Office of Management and Budget (OMB) regulations at 5 CFR Part 1320 (60 FR 44978, August 29, 1995), ACF published a notice in the Federal Register announcing the agency’s intention to request an OMB review of this information collection activity. This notice was published on September 15, 2014, Volume 79, Number 178, page 54985, and provided a 60-day period for public comment. During the notice and comment period, the government did not receive any comments in response to the Federal Register notice.

Consultation with experts outside of the study

During the development of the EDIT measure, team members received valuable input from members of an expert panel. Members of the expert panel are listed in Table A.2.

Table A.2. EDIT expert panel membership

| Name | Affiliation |
| --- | --- |
| Stephen Bagnato | University of Pittsburgh |
| Linda Broyles | Southeast Kansas Community Action Program |
| Virginia Buysse | FPG Child Development Institute |
| Lynn Fuchs | Vanderbilt University |
| Leslie Nabors Oláh | Educational Testing Service |
| Sheila Smith | Columbia University |
| Patricia Snyder | University of Florida |

A9. Incentives for respondents

We have attempted to minimize burden through our data collection procedures. Nevertheless, we acknowledge the burden that participation entails. Our plan to provide tokens of appreciation is based on the one used effectively in the previous pretest of the EDIT with nine participants. We propose offering a $50 gift card to centers and a $75 gift card to lead teachers as a token of appreciation. Teachers will also receive a $20 gift card as a token of appreciation after participating in a debrief call following the EDIT site visit. These amounts were determined based on the contractor’s experience with data collection activities in other preschool settings, and the estimated burden to teachers and centers who participate. For example, in FACES 2009, teachers received ten dollars for every report about children that they completed, and each report was estimated to take an average of ten minutes. Teachers completed an average of eight reports. Teachers in this proposed study will be asked to videotape their classrooms, gather data, and participate in a one-hour in-person interview and a follow-up call. The gift cards are expected to encourage participation in this important pre-testing activity.

A10. Privacy of respondents

Information collected will be kept private to the extent permitted by law. Respondents will be informed of all planned uses of data, that their participation is voluntary, and that their information will be kept private to the extent permitted by law. We are submitting an Institutional Review Board package to the New England Institutional Review Board. The team will ask teachers and all parents to provide consent for the video-based observations and ask the teacher to share documents about the focal children (Attachments E and F). We will obtain consent from teachers and parents before beginning data collection with each classroom.

The Contractor shall protect respondent privacy to the extent permitted by law and will comply with all Federal and Departmental regulations for private information. The Contractor has developed a Data Safety and Monitoring Plan that assesses all protections of respondents’ personally identifiable information. Procedures for maintaining strict data security include a system for protecting and shipping paper copies of documents with personally identifiable information, such as consent forms, to the Contractor’s office. Videos are completely removed from their recording devices when collected from each site. Study data and video files are housed on secure servers behind the Contractor’s firewall, thereby enhancing data security. The Contractor shall ensure that all of its employees and subcontractors who perform work under this contract/subcontract are trained on data privacy issues and comply with the above requirements. All contractor staff are required to sign a confidentiality pledge as a condition of employment and are aware that any breach of confidentiality has serious professional and/or legal consequences.

As specified in the evaluator’s contract, the Contractor shall use Federal Information Processing Standard (currently, FIPS 140-2) compliant encryption (Security Requirements for Cryptographic Modules, as amended) to protect all instances of sensitive information during storage. The Contractor shall securely generate and manage encryption keys to prevent unauthorized decryption of information, in accordance with the Federal Information Processing Standard. The Contractor shall ensure that this standard is incorporated into the Contractor’s property management/control system and shall establish a procedure to account for all laptop computers, desktop computers, and other mobile devices and portable media that store or process sensitive information. Any data stored electronically will be secured in accordance with the most current National Institute of Standards and Technology (NIST) requirements and other applicable Federal and Departmental regulations.

A11. Sensitive questions

There are no sensitive questions in this data collection.

A12. Estimation of information collection burden

In total, over three weeks, the lead teacher for each selected classroom is expected to spend approximately three hours participating in activities related to the EDIT. This time includes one hour collecting classroom documents and one hour recording videos of classroom activities; in addition, on-site interviews with the lead teacher and completion of the self-administered questionnaire are expected to last 55 minutes and 5 minutes, respectively. A debrief phone call after the site visit is expected to last 20 minutes. Table A.3 summarizes burden and costs for instruments associated with the EDIT data collection, which are calculated for respondents during one year of data collection. We calculated costs of the time for teachers using the Department of Labor statistics for full-time workers 25 years and older with a Bachelor’s degree only.

Table A.3. Total burden requested under this information collection

| Activity/instrument | Total/Annual number of respondents | Number of responses per respondent | Average burden hours per response | Annual burden hours | Average hourly wage | Total annual cost |
| --- | --- | --- | --- | --- | --- | --- |
| Attachment B: EDIT – recruitment call | 27 | 1 | 0.17 | 5 | $28 | $140 |
| Attachment H: EDIT – assembling documents for review | 9 | 1 | 1 | 9 | $28 | $252 |
| Attachment I: EDIT – recording videos | 9 | 1 | 1 | 9 | $28 | $252 |
| Attachment L: EDIT teacher interview protocol | 9 | 1 | 1 | 9 | $28 | $252 |
| Attachment M: EDIT teacher questionnaire | 9 | 1 | 0.08 | 1 | $28 | $28 |
| Attachment N: EDIT teacher debriefing protocol | 9 | 1 | 0.33 | 3 | $28 | $84 |
| Estimated Annual Burden Total | | | | 36 | | $1,008 |

Total annual cost

Data collection with nine pretest classrooms is proposed over a period of one year. For center directors and teachers, we used the median salary for full-time employees over age 25 with a bachelor’s degree ($28.28 per hour, rounded to $28.00). The total estimated time for center director respondents during recruitment calls for this data collection is 5 hours. The total estimated time for teacher respondents to this data collection is 31 hours. The total estimated annual cost is $1,008.00.
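The burden arithmetic in Table A.3 can be checked with a short sketch. The Python below is illustrative only and is not part of the submission: the row values come from Table A.3, while the names `ROWS`, `HOURLY_WAGE`, and `burden` are hypothetical. It assumes that each row's hours are rounded to the nearest whole hour before being multiplied by the rounded $28 wage, which is the reading that matches the figures in the table.

```python
# Rows of Table A.3:
# (activity, respondents, responses per respondent, burden hours per response)
ROWS = [
    ("Recruitment call", 27, 1, 0.17),
    ("Assembling documents", 9, 1, 1.0),
    ("Recording videos", 9, 1, 1.0),
    ("Teacher interview", 9, 1, 1.0),
    ("Teacher questionnaire", 9, 1, 0.08),
    ("Teacher debriefing", 9, 1, 0.33),
]
HOURLY_WAGE = 28  # dollars per hour; $28.28 rounded down, as stated in the text


def burden(rows, wage):
    """Return per-row (activity, hours, cost) plus total hours and total cost."""
    detail = []
    for activity, respondents, responses, hours_each in rows:
        # Assumption: hours are rounded to whole hours per row before costing.
        hours = round(respondents * responses * hours_each)
        detail.append((activity, hours, hours * wage))
    total_hours = sum(hours for _, hours, _ in detail)
    total_cost = sum(cost for _, _, cost in detail)
    return detail, total_hours, total_cost


detail, total_hours, total_cost = burden(ROWS, HOURLY_WAGE)
for activity, hours, cost in detail:
    print(f"{activity:<24}{hours:>3} h  ${cost:,}")
print(f"{'Total':<24}{total_hours:>3} h  ${total_cost:,}")

# Teacher burden excludes the recruitment calls made to center directors.
teacher_hours = total_hours - detail[0][1]
```

Under these assumptions the totals agree with the text: 36 burden hours overall, of which 5 are recruitment calls and 31 are teacher time, at a total cost of $1,008.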

A13. Cost burden to respondents or record keepers

There are no additional costs to respondents.

A14. Estimate of cost to the federal government

The total cost for the data collection activities under the current request will be $240,527. Annual costs to the federal government will be $240,527. These costs include recruitment, data collection, revision of the EDIT, and reporting.

A15. Change in burden

This is an additional information collection under generic clearance 0970-0355.

A16. Plan and time schedule for information collection, tabulation, and publication

a. Plans for tabulation

With a small purposive sample and refinements to the EDIT items throughout the data collection, we will not conduct any statistical analysis of the scores for the nine classrooms. Instead, we will examine the availability of evidence for making ratings on the instrument, identify the need for additional clarifications or behavioral descriptors in the measure, and consider whether the observed variation is captured in the current items (e.g., by looking at descriptive item-level statistics such as the range and mean). The EDIT raters’ experiences using the EDIT will be used to refine the items. Information collected in the teacher self-administered questionnaire and themes that emerge from the debriefing calls will be summarized for ACF in a methodological report with recommended changes for the EDIT instrument. The final EDIT instrument will be included in this report.

b. Time schedule and publications

This study is expected to be conducted over a one-year period, beginning September 2015. This ICR is requesting burden for one year.

Table A.4. Schedule for the data collection

| Activity | Date |
| --- | --- |
| Selection of sites and teachers for inclusion in the data collection | September 2015–March 2016 |
| EDIT site visits (assembling documents, video recordings, teacher interviews) | October 2015–March 2016 |
| Collection of teacher questionnaires | October 2015–March 2016 |
| Debrief phone calls | October 2015–April 2016 |

A methodological report describing the pretest and summarizing recommended changes to the EDIT measure will be submitted to ACF in May 2016.

A17. Reasons not to display OMB expiration date

All instruments will display the expiration date for OMB approval.

A18. Exceptions to certification for Paperwork Reduction Act Submissions

No exceptions are necessary for this information collection.

References

Administration for Children and Families. “Head Start Performance Standards.” 45 CFR Sec 1307.2. Federal Register, 2015.

Administration for Children and Families. “Head Start Early Learning Outcomes Framework,” 2015. Available at http://eclkc.ohs.acf.hhs.gov/hslc/hs/sr/approach/pdf/ohs-framework.pdf.

Akers, L., P. Del Grosso, S. Atkins-Burnett, K. Boller, J. Carta, and B.A. Wasik. “Tailored Teaching: Teachers’ Use of Ongoing Child Assessment to Individualize Instruction (Volume II).” Washington, DC: U.S. Department of Health and Human Services, Administration for Children and Families, Office of Planning, Research & Evaluation, 2014.

Atkins-Burnett, Sally, Emily Moiduddin, Louisa Tarullo, Margaret Caspe, Sarah Prenovitz, and Abigail Jewkes. “The Learning from Assessment Toolkit.” Washington, DC: Mathematica Policy Research, March 2009.

Ball, C., and M. Gettinger. “Monitoring Children’s Growth in Early Literacy Skills: Effects of Feedback on Performance and Classroom Environments.” Education and Treatment of Children, vol. 32, 2009, pp. 189–212.

Bambrick-Santoyo, P. Driven by Data: A Practical Guide to Improve Instruction. San Francisco, CA: Wiley, 2010.

Buysse, V., E.S. Peisner-Feinberg, E. Soukakou, D.R. LaForett, A. Fettig, and J.M. Schaaf. “Recognition & Response.” In Handbook of Response to Intervention in Early Childhood, edited by V. Buysse and E. Peisner-Feinberg. Baltimore, MD: Paul H. Brookes Publishing, 2013, pp. 69–84.

Buzhardt, J., C. Greenwood, D. Walker, J. Carta, B. Terry, and M. Garrett. “A Web-Based Tool to Support Data-Based Early Intervention Decision-Making.” Topics in Early Childhood Special Education, vol. 29, no. 4, 2010, pp. 201–213.

Buzhardt, J., C. Greenwood, D. Walker, R. Anderson, W. Howard, and J. Carta. “Effects of Web-Based Support on Early Head Start Home Visitors’ Use of Evidence-Based Intervention Decision-Making and Growth in Children’s Expressive Communication.” NHSA Dialog: A Research-to-Practice Journal for the Early Childhood Field, vol. 14, no. 1, 2011, pp. 121–146.

Connor, C., S. Piasta, B. Fishman, S. Glasney, C. Schatschneider, E. Crowe, and F. Morrison. “Individualizing Student Instruction Precisely: Effects of Child X Instruction Interactions on First Graders’ Literacy Development.” Child Development, vol. 80, no. 1, 2009, pp. 77–100.

Fuchs, L., and D. Fuchs. “What Is Science-Based Research on Progress Monitoring?” Washington, DC: National Center for Progress Monitoring, 2006.

Fuchs, L., S. Deno, and P. Mirkin. “The Effects of Frequent Curriculum-Based Measurement and Evaluation on Student Achievement, Pedagogy, and Student Awareness of Learning.” American Educational Research Journal, vol. 21, 1984, pp. 449–460.

Hamilton, L., R. Halverson, S. Jackson, E. Mandinach, J. Supovitz, J. Wayman, C. Pickens, E. Sama Martin, and J. Steele. “Using Student Achievement Data to Support Instructional Decision-Making.” NCEE 2009–4067. Washington, DC: U.S. Department of Education, Institute of Education Sciences, National Center for Education Evaluation and Regional Assistance, September 2009.

Landry, S., J. Anthony, P. Swank, and P. Monseque-Bailey. “Effectiveness of Comprehensive Professional Development for Teachers of At-Risk Preschoolers.” Journal of Educational Psychology, vol. 101, no. 2, 2009, pp. 448–465.

Landry, Susan H., Paul R. Swank, Jason L. Anthony, and Michael A. Assel. “An Experimental Study Evaluating Professional Development Activities Within a State-Funded Pre-Kindergarten Program.” Reading and Writing: An Interdisciplinary Journal, vol. 24, no. 8, 2011, pp. 971–1010.

Marsh, J., J. Pane, and L. Hamilton. Making Sense of Data-Driven Decision-Making in Education: Evidence from Recent RAND Research. (OP-170). Santa Monica, CA: RAND Corporation, 2006.

National Center on Student Progress Monitoring. “Common Questions for Progress Monitoring.” 2012. Available at http://studentprogress.org/progresmon.asp#2. Accessed December 11, 2014.

U.S. Department of Health and Human Services. “Improving School Readiness and Promoting Long-Term Success: The Head Start Roadmap to Excellence.” Washington, DC: U.S. Department of Health and Human Services, Administration for Children and Families, Office of Head Start, January 2010. Available at http://eclkc.ohs.acf.hhs.gov/hslc/hs/sr/class/Head_Start_Roadmap_to_Excellence.pdf. Accessed December 12, 2014.

Wasik, B., A. Hindman, and A. Jusczyk. “Using Curriculum-Specific Progress Monitoring to Determine Head Start Children’s Vocabulary Development.” NHSA Dialog: A Research-to-Practice Journal for the Early Intervention Field, vol. 12, no. 32, 2009, pp. 257–275.

Yazejian, N., and D. Bryant. “Embedded, Collaborative, Comprehensive: One Model of Data Utilization.” Early Education and Development, vol. 24, no. 1, 2013, pp. 68–70.

Zweig, J., C.W. Irwin, J.F. Kook, and J. Cox. “Data Collection and Use in Early Childhood Education Programs: Evidence from the Northeast Region.” REL 2015–084. Washington, DC: U.S. Department of Education, Institute of Education Sciences, National Center for Education Evaluation and Regional Assistance, Regional Educational Laboratory Northeast & Islands, 2015. Available at http://ies.ed.gov/ncee/edlabs.

ATTACHMENTS

Attachment A EDIT advance letter

Attachment B EDIT recruitment script

Attachment C Consent packet memo for program

Attachment D Lead teacher consent memo

Attachment E Consent form for program staff

Attachment F Parent consent form

Attachment G Video and document collection instructions for program

Attachment H EDIT pretest letter

Attachment I EDIT video equipment instructions

Attachment J EDIT reminder call scripts

Attachment K EDIT measure instrument package

Attachment L EDIT teacher interview protocol

Attachment M EDIT teacher questionnaire

Attachment N EDIT teacher debriefing protocol

1 The members of the research team need to be able to review and discuss the assessment evidence collected during the visits, and the researchers are not all fluent in Spanish.

2 We will let the teacher know who has consented and who has not. The teacher will structure the small groups so that s/he only records those with permission. In prior pretesting, teachers did not report difficulty in video recording only those with consent.

