Research Goals, Recruiting, Question Wording

Attachment A ACS Cognitive Testing to Reduce Burden Research Goals, Recruiting Requirements, Question Wording.docx

Generic Clearance for Questionnaire Pretesting Research

OMB: 0607-0725

American Community Survey (ACS) Cognitive Testing to Reduce the Burden and Difficulty of Questions

Research Goals, Recruiting Requirements, and Question Wording

April 23, 2018

Introduction

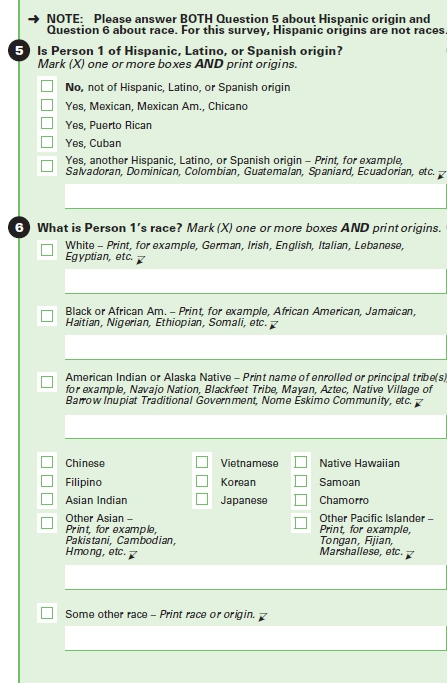

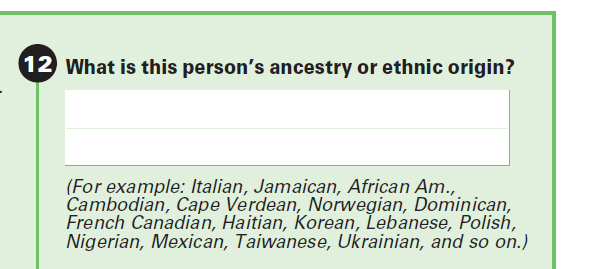

This round of cognitive testing will test the Hispanic Origin/Race and Class of Worker questions using the self-administered (paper) mode.

The 2020 Census will contain two separate questions on Hispanic origin and race. The questions will be revised to add write-in areas for White and Black, along with some changes to the instructions (the form now allows more than one Hispanic origin) and examples (revised examples for Hispanic origin and American Indian/Alaska Natives). The 2020 ACS will use the same questions as the 2020 Census. The ACS currently asks a question about ancestry later in the survey. This testing should focus on whether the ancestry question will now be perceived as repetitive, and on how information gathered from the new Hispanic origin and race questions compares to information gathered from the ancestry question.

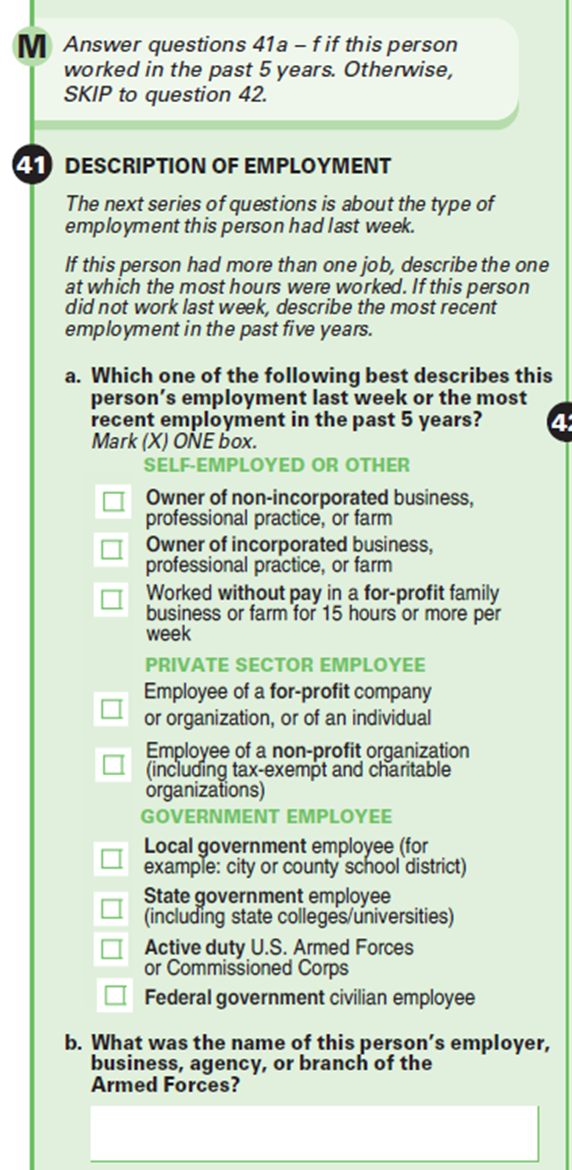

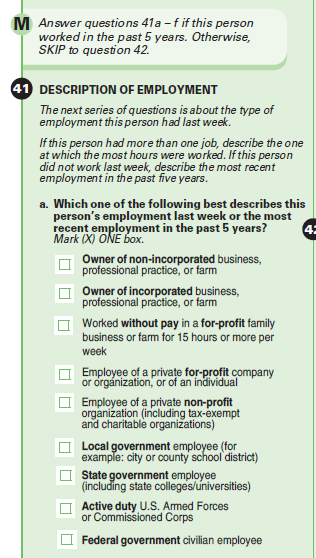

For the 2016 ACS Content Test, the Class of Worker (COW) question grouped response categories under three general headers (private sector employee, government employee, and self-employed or other). The wording of the question and response categories was also revised. The revised format and wording of the COW question produced notable improvements. However, one area we would like to improve is the item missing data rate. For the mail mode, where multiple marked checkboxes are treated as a nonresponse, the missing data rate for the 2016 test treatment was about 3.6 percentage points higher than the rate for the control (14.0 percent and 10.4 percent, respectively). Cognitive testing could shed light on why respondents select more than one COW category.
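The mail-mode rule described above can be illustrated with a minimal sketch. It assumes each returned form is represented as the list of COW boxes marked, and that a blank item counts as nonresponse alongside multiple marks (the blank-item rule is an assumption, not stated in the source). The function name, the category labels, and the toy data are hypothetical; only the 14.0 and 10.4 percent rates come from the text.

```python
from typing import Iterable, Sequence


def item_missing_rate(responses: Iterable[Sequence[str]]) -> float:
    """Return the percent of forms counted as item nonresponse.

    Each response is the collection of boxes marked on one form.
    Multiple marks count as nonresponse (the mail-mode rule in the text);
    a blank item also counts as nonresponse (an assumption of this sketch).
    """
    forms = list(responses)
    if not forms:
        raise ValueError("at least one form is required")
    missing = sum(1 for marks in forms if len(marks) != 1)
    return 100.0 * missing / len(forms)


# Hypothetical toy data: four forms with made-up category labels.
toy_forms = [
    ["private_for_profit"],
    ["self_employed", "private_for_profit"],  # two marks: nonresponse
    [],                                       # blank: nonresponse
    ["local_government"],
]
toy_rate = item_missing_rate(toy_forms)

# Rates reported in the text for the 2016 Content Test (mail mode).
test_rate, control_rate = 14.0, 10.4
point_gap = round(test_rate - control_rate, 1)
relative_gap = round(100.0 * (test_rate - control_rate) / control_rate, 1)

print(f"toy missing rate: {toy_rate} percent")
print(f"gap: {point_gap} percentage points ({relative_gap} percent relative)")
```

The sketch separates the percentage-point gap from the relative increase, since the two are easy to conflate when comparing rates.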

Hispanic Origin/Race and Ancestry

Research Goals

How will respondents react and respond to the ancestry question after answering the Hispanic origin and race questions with write-in areas for White and Black added?

What were respondents’ overall reactions to the Hispanic origin, race, and ancestry questions?

Were they confused, or did they have any difficulty reporting any of the three questions?

Did their response to one question affect their response to the others?

For the race question, did they notice they could provide detailed groups under the White and Black categories?

How do respondents’ answers to the Hispanic origin and race questions differ from their responses to the ancestry question?

Did respondents report the same number of groups for the ancestry question as they did for the Hispanic origin and race questions?

Did respondents report certain groups for the ancestry question that they did not report for the Hispanic origin and race questions, and vice versa?

Did respondents give the same level of detail?

Why did respondents answer differently or similarly?

Recruiting Requirements

For this project, we need to recruit people with specific racial/ancestral identities.

We want to identify persons with specific heritages. See Table 1 below.

The ancestry project is a joint effort between Westat (24 interviews) and Census (15 interviews). The Final Target numbers are the targets for all 39 Westat and Census interviews combined.

Westat is responsible for filling the “Westat Minimum” targets. After minimums are met, Westat should help Census meet final targets.

Multi-person households with related and unrelated members.

Table 1. Recruitment Goals for Each Race and Hispanic Origin Group

| Recruitment Group | Heritage Group | Westat Minimum | Final Target |
| --- | --- | --- | --- |
| White European, Non-Hispanic | Any | 6 | 6 |
| Black, African American, African, Caribbean | Any | 6 | 6 |
| Multiracial | Multiracial (must be part white or black) | 2 | 2 |
| “Difficult” to recruit groups | | 3 of any | 3 2 4 |
| Remaining Interviews – Anyone (after minimums are met, anyone can participate) | Specific European Heritage: / Other: | 0 0 / 0 0 0 0 | 4 2 / 0 0 0 0 |

Proposed Wording – Paper:

Class of Worker (COW)

Research Goals

How do respondents react and respond to the COW question?

What message do the headers (Version 1) convey to the respondent?

Do respondents take longer to read/process the question with/without additional headers?

Are respondents confused by the question or the response options? Are respondents who receive the header version (Version 1) less confused by the question or response options?

If a respondent selected more than one COW category, why?

Do respondents understand they should only select one category from all categories and not one category from under each header? “Did you have trouble deciding which box to mark, and can you tell me more about that?”

Are self-employed respondents selecting more than one category?

How do self-employed respondents categorize themselves?

Do respondents understand that they must be self-employed and not that they work for someone who is self-employed?

If a respondent works more than one job, how do they answer?

Do respondents read the instructions on how to answer? (Both M and 41) If read, were they clear/understood? If not read, why were they skipped?

Do respondents correctly categorize their main or most recent employment?

Verify if instructions were read/understood (Both M and 41)

What do respondents think the purpose of this question is?

Do respondents understand the purpose of the question? Do they think of their work within these “Class of work” categories?

Recruiting Requirements

All recruited participants must be currently employed for pay. The goal is to recruit people who are more likely to report multiple COWs. See Table 2 below for recruitment goals by specific job.

Multi-person households with related and unrelated members.

Table 2. Recruitment Goals/Minimums by Occupation for COW

| Occupation | Minimum |
| --- | --- |
| Self-employed | 10 |
| Owner/Operator Truck Drivers (Backup: Any big-rig or 18-wheel truck driver not owner/operator; Avoid: Delivery drivers, UPS, Uber, etc.) | 3 of self-employed |
| Real estate brokers and sales agents (Backup: Any employed real-estate agent or broker) | 3 of self-employed |
| Other | 4 |
| Teachers from charter schools | 2 of 4 other |
| Registered Nurses (Backup: All other nurses; Avoid: Personal care aides) | 2 of 4 other |
| Multiple jobs (these can overlap with other jobs) | 6 |

Proposed Wording – Paper:

Version 1: (12 participants)

Version 2: (12 participants)

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| Author | Agnes S Kee (CENSUS/ACSO FED) |

| File Created | 2021-01-21 |
