Part A EDSCLS Benchmarking Study 2017 Updated 2

ED School Climate Surveys (EDSCLS) Benchmark Study 2017 Update

OMB: 1850-0923

Table of Contents

A.1 Circumstances Making Collection of Information Necessary

A.1.1 Purpose of This Submission

A.1.2 Legislative Authorization

A.1.3 Prior Related Studies

A.1.4 Study Design for the EDSCLS National Benchmark Study

A.1.5 EDSCLS Cognitive Laboratory Testing and Pilot Test

A.1.6 Nonresponse Follow-up Study

A.2 Purpose and Uses of the Data

A.3 Use of Information Technology

A.4 Efforts to Identify Duplication

A.5 Method Used to Minimize Burden on Small Businesses

A.6 Frequency of Data Collection

A.7 Special Circumstances of Data Collection

A.8 Consultants outside the Agency

A.9 Provision of Payments or Gifts to Respondents

A.10 Assurance of Confidentiality

A.11 Sensitive Questions

A.12 Estimates of Response Burden

A.13 Estimates of Cost to Respondents

A.14 Costs to the Federal Government

A.15 Reasons for Changes in Response Burden and Costs

A.16 Publication Plans and Time Schedule

A.17 Approval to Not Display Expiration Date for OMB Approval

A.18 Exceptions to Certification for Paperwork Reduction Act Submissions

Appendices:

Appendix A – Communication Materials

Appendix B – Questionnaires

Appendix C – Platform and Administration Instructions

A.1 Circumstances Making Collection of Information Necessary

A.1.1 Purpose of This Submission

The ED School Climate Surveys (EDSCLS) are a suite of survey instruments being developed for schools, districts, and states by the U.S. Department of Education’s (ED) National Center for Education Statistics (NCES). This national effort extends current activities that measure school climate. These include the state-level efforts of the Safe and Supportive Schools (S3) grantees, which ED’s Office of Safe and Healthy Students (OSHS) funded in 2010 to improve school climate. Through the EDSCLS, schools nationwide will have access to survey instruments and a survey platform for collecting and reporting school climate data from stakeholders at the local level. The surveys can be used to produce school-, district-, and state-level scores on various indicators of school climate. These scores reflect the perspectives of students, teachers, noninstructional school staff and principals, and parents and guardians.

NCES is requesting approval to conduct a national EDSCLS benchmark study. The study will collect data from a nationally representative sample of schools across the United States to create a national comparison point for users of the ED School Climate Surveys. The proposed data collection follows cognitive laboratory testing conducted in 2014 (OMB #1850-0803 v.102) and a pilot test conducted in 2015 (OMB #1850-0803 v.129). The benchmark study data collection will be carried out on behalf of NCES under contract by the American Institutes for Research (AIR) and its subcontractor, Sanametrix.

A.1.2 Legislative Authorization

The EDSCLS national benchmark study is conducted by NCES within the Institute of Education Sciences (IES). NCES will conduct this study in close consultation with offices and organizations within and outside ED. The EDSCLS benchmark study is authorized by the Education Sciences Reform Act of 2002 (ESRA; 20 U.S. Code §9543).

A.1.3 Prior Related Studies

This is the first time NCES is collecting benchmark data on a series of school climate topics from multiple perspectives at public schools across the nation.

A.1.4 Study Design for the EDSCLS National Benchmark Study

A nationally representative sample of 500 schools serving students in grades 5–12 will be selected to participate in the national benchmark study in spring 2017. Three web-based surveys (student, instructional staff, and principal/noninstructional staff) will be administered through the EDSCLS platform hosted on the NCES server. The data collected from the sampled schools will be used to produce national school climate scores on the topics covered by EDSCLS. The national scores will be released in the updated EDSCLS platform. They will provide a basis for comparing the school climate data collected by schools and school systems with national school climate.

A.1.5 EDSCLS Cognitive Laboratory Testing and Pilot Test

The development of the EDSCLS survey instruments began in 2013 with a review of the existing school climate literature and survey items. A Technical Review Panel (TRP) met in early 2014 to recommend items for the EDSCLS. Draft EDSCLS items were then created, building on the existing items and the TRP’s recommendations. In summer 2014, one-on-one cognitive interviews on the new and revised items were conducted with 78 participants from the District of Columbia, Texas, and California. These participants were students, parents, teachers, principals, and noninstructional staff. In addition, usability testing of the survey platform was performed with 32 participants from the District of Columbia, Maryland, and Virginia, drawn from the same respondent groups. Both the survey items and the survey platform were revised based on these interviews and tests. The report on the cognitive interviews and usability testing was included as Attachment 5 in the submission approved in December 2014 (OMB# 1850-0803 v.119).

The pilot test of the EDSCLS took place from March to May 2015. It was an operational test, under “live” conditions, of all components of the survey system (e.g., survey instruments and data collection, processing, and reporting tools). A convenience sample of 50 public schools participated in the pilot test. These schools varied across key characteristics: region, locale, and racial composition. The EDSCLS platform was tested at three levels:

- state level (multiple districts in one platform);
- district level (multiple schools in one platform);
- school level (only one school in the platform).

All questionnaires were administered online through the EDSCLS platform. The pilot test data were used to refine the EDSCLS survey items, and about one-third of the pilot items were dropped. The final EDSCLS instruments have 74 items for students; 83 items for instructional staff and noninstructional staff (excluding 21 principal-only items); and 40 items for parents.

In addition to the items used in the pilot test, the benchmark survey instruments include one new item requested by OSHS in June 2015. OSHS requested the item because school communities nationwide have identified sexual violence and/or teen dating violence as an issue that students and schools increasingly face. If schools are to address this issue, they need data on its prevalence. Such data can help them prevent escalation and create appropriate supports and protective factors that improve the learning environment and student success. Preventing teen dating and gender-based violence is part of creating a safe and healthy learning environment. The item “The following types of problems occur at this school often: sexual assault or dating violence” was added to the two staff surveys (Isafpsaf143, Nsafpsaf147). Cognitive interviews were conducted in 2015 to test this new item with teachers and instructional staff.

A.1.6 Nonresponse Follow-up Study

During the 2015–16 data collection, recruitment could not begin until the end of October, which proved too late in the school year to recruit successfully. For the 2016–17 data collection, recruitment began in April 2016. Many schools did not respond to the 2015–16 data collection. A nonresponse follow-up study of schools in the 2015–16 sample will therefore be conducted to inform the 2016–17 data collection.

The study will involve telephone interviews with personnel at small subsamples of schools in three groups:

(a) schools that agreed to participate in the 2015–16 data collection;
(b) schools that declined to participate;
(c) schools that never responded to the survey request.

The interview protocols are tailored to each group. A supplementary survey instrument is used when personnel at schools that never responded do not remember receiving the request. Personnel at schools in each group will be asked to provide:

- the reasons for their response or nonresponse;
- their assessment of the burden the EDSCLS entails;
- examples of any barriers to their participation;
- suggestions for improving the school recruitment process.

The results will inform the school recruitment procedures for the 2016–17 data collection.

A.2 Purpose and Uses of the Data

The U.S. Department of Education has prioritized research on school climate and the use of such research to develop more effective policy and school practices. Many school climate surveys have been developed. However, there is no consensus on precise definitions of the factors that make up school climate, nor is there a common national reference for comparison. The data from the national benchmark study will be used to create national school climate scale scores for students, instructional staff, and principals on 12 of the 13 topics covered by EDSCLS. To reduce the response burden on each participating school, only principals will be surveyed using the noninstructional staff survey. Most pilot schools did not administer the parent survey[1], and those that did experienced low parent response rates. For this reason, NCES will not include the parent survey in the benchmark study. Figure 1 shows the EDSCLS domains and topics.

Figure 1. EDSCLS model of school climate

A.3 Use of Information Technology

EDSCLS will be administered as self-administered web-based surveys. For the benchmark study, the instruments will be hosted on NCES servers. Respondents will be provided with log-in credentials and the web address for the survey. The surveys can be taken on computers as well as on mobile devices such as tablets or smartphones.

A.4 Efforts to Identify Duplication

The EDSCLS benchmark study will not duplicate other studies. Three federal surveys cover related ground:

- the School Survey on Crime and Safety (SSOCS) collects school-level information about victimization, crime, and safety;
- the School Crime Supplement (SCS) survey collects information on the same topics;
- the Youth Risk Behavior Survey (YRBS) collects data on health risk behaviors in middle and high schools.

None of these covers what the benchmark study covers. The benchmark study is the only nationally representative federal study to collect information on multiple aspects of school climate from multiple stakeholders. These stakeholders are students in grades 5–12 and teachers and principals in schools serving these grades. The benchmark study will collect data on the 13 school climate topics shown in Figure 1. It will open up new research possibilities on school climate and will allow important comparisons between local and national school climates.

A.5 Method Used to Minimize Burden on Small Businesses

EDSCLS does not impose burden on small businesses or other small entities.

A.6 Frequency of Data Collection

Currently, the national EDSCLS benchmark study is planned to be administered only once, in spring 2017. The nonresponse follow-up study of schools sampled for the 2015–16 data collection will also be administered only once.

A.7 Special Circumstances of Data Collection

No special circumstances of data collection are anticipated.

A.8 Consultants outside the Agency

Given the significance of the EDSCLS benchmark study data collection, NCES has implemented several strategies to obtain critical review of planned project activities and of interim and final products. These strategies include consultations with persons and organizations both internal and external to NCES, ED, and the federal government. To date, consultations have focused on the TRP, combined with two forms of testing: cognitive laboratory testing of instrument content, and pilot testing of both the content and the operational aspects of EDSCLS.

The EDSCLS TRP members were consulted again before the survey items for the national benchmark study were finalized. The TRP membership (listed below) represents a broad spectrum of researchers in the field as well as representatives from school districts and schools. The nonfederal members serve as expert reviewers of item content and format.

EDSCLS Technical Review Panel members:

Elaine Allensworth

Lewis-Sebring Director

University of Chicago Consortium on Chicago School Research

1313 East 60th Street

Chicago, IL 60637

Catherine Bradshaw

Professor and Associate Dean for Research and Faculty Development

Curry School of Education, University of Virginia

Bavaro Hall, Room 139B

Charlottesville, VA 22904

Russell Brown

Chief Accountability and Performance Management Officer

Baltimore County Public Schools

9611 Pulaski Park Drive, Suite 305

Baltimore, Maryland 21220

Joyce Epstein

Director of the Center on School, Family, and Community Partnerships

Johns Hopkins University

National Network of Partnership Schools

2701 N. Charles Street, Suite 300

Baltimore, MD 21218

Miguel Ferguson

Associate Professor

School of Social Work

University of Texas at Austin

1925 San Jacinto Boulevard D3500

Austin, TX 78712

Sabrina Oesterle

Research Associate Professor

University of Washington

4101 15th Avenue NE

Seattle, WA 98105

David Osher

Vice President & Institute Fellow, Health and Social Development Program

American Institutes for Research

1000 Thomas Jefferson Street, NW

Washington, DC 20007

Amanda J. Rose

Associate Professor, Psychological Sciences

University of Missouri

McAlester Hall, Room 212E

Columbia, MO 65201

Veronica Thomas

Professor, Human Development and Psychoeducational Studies

Howard University School of Education

Room 320

Washington, DC 20059

Roger Weissberg

President and Chief Executive Officer

Collaborative for Academic, Social and Emotional Learning

815 West Van Buren Street, Suite 210

Chicago, IL 60607

John Yore

Principal, Meade High School

Anne Arundel County Public Schools

1100 Clark Road

Fort Meade, MD 20755

A.9 Provision of Payments or Gifts to Respondents

There is no monetary payment to respondents in the EDSCLS national benchmark study or the nonresponse follow-up study. Each school that participates in the benchmark study will receive a tablet valued at up to $500 to help with the data collection. Some participating schools may also choose to conduct a universe survey of their students in grades 5–12, teachers, and noninstructional staff. After the national EDSCLS study is completed, each such school will receive a report with measures of school climate perceptions from its respondents, along with comparisons to the perceptions expressed in the EDSCLS national sample.

The report incentive may encourage schools to survey all of their students and staff. The tablet serves two purposes: it lets schools see how the instruments display on mobile devices, and it allows survey participation during school meetings and events. More importantly, a tablet will give easy access to the online surveys for staff who do not regularly use computers for their work. Some districts that participated in the pilot test reported that tablets were great incentives for obtaining their principals’ buy-in to the pilot data collection.

ED released the free-to-use EDSCLS platform in spring 2016. Some sampled schools are in states or districts that want to conduct their own data collection. For these schools, the two incentives will be used to encourage participation in the EDSCLS 2017 benchmark study. The incentive tablet will be delivered to a school once the school provides a range of dates for data collection.

A.10 Assurance of Confidentiality

NCES assures participating individuals and institutions that all identifiable information collected under EDSCLS may be used only for statistical purposes and may not be disclosed, or used, in identifiable form for any other purpose except as required by law [Education Sciences Reform Act of 2002 (ESRA 2002), 20 U.S.C. § 9573].

School coordinators will receive materials that both describe the study and clearly indicate that participation is voluntary. The data collection will be hosted on the NCES server. All NCES staff, AIR staff, and any school staff with access to data collection files will sign an affidavit of nondisclosure attesting to the fact that the data will only be used for statistical purposes, and no attempt will be made to identify any individual in the file. Violations are a class E felony with penalties of up to a $250,000 fine and/or up to 5 years in prison.

A school may request its data. The raw staff response data can be transferred to the school without any directly identifying personally identifiable information (PII), because the EDSCLS platform does not retain directly identifying PII for the staff surveys. Student response data can be transferred either with or without directly identifying PII. The school must choose which type of student data file it would like to receive when it confirms its participation in the benchmark study. This choice must be made at that point because it determines the informed consent language shown to students.

For schools that are not requesting individual student data, the following text is shown on the informed consent page for the student survey:

“The experiences of students are critical to understanding school climate, and the best way to understand those experiences is to ask students themselves. Apart from improving your school climate, the information that you provide will be combined with responses from students in other schools like yours and will be used by the National Center for Education Statistics (NCES) to provide national data that can be compared to data of individual schools, districts, and states. Data provided to NCES may be used only for statistical purposes and will not be disclosed or used in identifiable form for any other purpose, except as required by law (Education Sciences Reform Act (ESRA) of 2002, 20 U.S.C., § 9573).

The data you provide may also be used by your school, district, and/or state to better understand the current climate in your school. Your answers will be combined with the answers of other students at your school and district and used to create records about the climate of your school. These reports will not identify any person or their responses.”

For schools that request student data without directly identifying PII, the following text is shown on the informed consent page for the student survey:

“The experiences of students are critical to understanding school climate, and the best way to understand those experiences is to ask students themselves. Apart from improving your school climate, the information that you provide will be combined with responses from students in other schools like yours and will be used by the National Center for Education Statistics (NCES) to provide national data that can be compared to data of individual schools, districts, and states. Data provided to NCES may be used only for statistical purposes and will not be disclosed or used in identifiable form for any other purpose, except as required by law (Education Sciences Reform Act (ESRA) of 2002, 20 U.S.C., § 9573).

The data you provide may also be used by your school, district, and/or state to better understand the current climate in your school. Answers to individual questions will not identify any person and the only people who may see answers to individual questions are authorized personnel at your school and district (20 U.S.C. § 1232g; 34 CFR Part 99). Your answers will be combined with the answers of other students at your school and district and used to create records about the climate of your school. These reports will not identify any person or their responses.”

For schools that request student data with directly identifying PII for linkage with other data the schools may have, the following text is shown on the informed consent page for the student survey:

“The experiences of students are critical to understanding school climate, and the best way to understand those experiences is to ask students themselves. Apart from improving your school climate, the information that you provide will be combined with responses from students in other schools like yours and will be used by the National Center for Education Statistics (NCES) to provide national data that can be compared to data of individual schools, districts, and states. Data provided to NCES may be used only for statistical purposes, except as required by law or described here (Education Sciences Reform Act (ESRA) of 2002, 20 U.S.C., § 9573).

The data you provide may also be used by your school, district, and/or state to better understand the current climate in your school. The only people who may see your answers to individual questions are authorized personnel at your school and district (20 U.S.C. § 1232g; 34 CFR Part 99). Your answers will be combined with the answers of other students at your school and district and used to create records about the climate of your school. Although these reports will not identify any person or their responses, your data may be combined with other data about you to help improve the climate of your school.”

The following text is shown for the staff surveys:

“The experiences of students and staff are critical to understanding school climate, and the best way to understand those experiences is to ask all members of the school community. Apart from improving your school climate, the information that you provide will be combined with responses from staff in other schools like yours and will be used by the National Center for Education Statistics (NCES) to provide national data that can be compared to data of individual schools, districts, and states. Data you provide to NCES may be used only for statistical purposes and may not be disclosed or used in identifiable form for any other purpose, except as required by law (Education Sciences Reform Act (ESRA) of 2002, 20 U.S.C., § 9573).

The results of this survey are confidential. The data you provide may also be used by your school and district to better understand the current climate in your school. Your answers will be combined with the answers of other respondents in your school and district and used to create reports about the climate of your school. These reports will not identify any person or their responses.”

Appendix C provides all instructions that respondents will see when accessing the data collection system, including the text above. It also provides the instructions to survey administrators. Because NCES will conduct the benchmark study, the instructions to survey administrators include only guidance on supporting the data collection at the school level. The guide used in the 2015 pilot test covered additional topics that are not directly relevant to the benchmark study, such as platform installation, data collection management, and nonresponse bias analysis. These topics are omitted from the current instructions.

AIR will adhere to the NCES Statistical Standards, as described at https://nces.ed.gov/statprog/2012/. All data transfers will be encrypted using Federal Information Processing Standard (FIPS) 140-2 validated encryption tools.

Furthermore, to secure the confidentiality of EDSCLS respondents, ED has established a policy on personnel security screening requirements for all contractor and subcontractor employees. The contractor must comply with these requirements throughout the life of the contract. The requirements are identified and summarized in ED directive OM: 5-101, last updated on January 29, 2008. The contractor must meet several requirements for each employee working on the contract for 30 days or more. Among them, each person working on the contract must be assigned a position risk level (high, moderate, or low). The risk level is based on the level of harm that a person in the position could cause to ED’s interests. Each person working on the contract must also complete the requirements for a “Contractor Security Screening.” Depending on the risk level assigned to each person’s position, background investigations may be conducted for project staff members.

A.11 Sensitive Questions

The EDSCLS national benchmark study and the nonresponse follow-up study are voluntary surveys. No one is required to respond to them, and respondents may decline to answer any question in either survey. The items in the EDSCLS for students, instructional staff, and noninstructional staff/principals are not of a sensitive nature and should not raise sensitivity concerns for respondents. The same applies to the items in the nonresponse follow-up study. All survey items focus on respondents’ perceptions of various aspects of school climate. Respondents are not asked to report any personal incidents or behaviors.

A.12 Estimates of Response Burden

EDSCLS data will be collected from respondents through a self-administered web survey, with support from school coordinators. The data collection procedures for the national benchmark study include contacting the principals of sampled schools and the superintendents of their districts to request their support. Once a school agrees to participate, it will be asked to designate a school coordinator to assist with materials distribution and data collection at the school. Some school districts require additional application processes or materials to be sent to the district for consideration. For schools in these districts, we will obtain the district’s approval for the research before soliciting its schools’ participation. Detailed information about recruitment and other data collection procedures can be found in Part B, section B.2.

Table 1 shows the possible range of burden for the national benchmark study. The study targets 500 participating schools, although the number of participating schools could be as high as 700 under the current contact strategy. The estimates below assume this maximum of 700 schools.

- Minimum scenario: all schools choose the referent grade option and elect to survey one sampled class per referent grade. Approximately 18,840 students, 1,880 teachers, and 700 principals would then be asked to participate. This estimate assumes one class of 20 students and two teachers per 5th, 7th, or 11th grade.
- Maximum scenario: all participating schools choose to administer the EDSCLS surveys to all of their students in grades 5–12, teachers, and noninstructional staff. We estimate that approximately 365,690 students in grades 5–12, 27,520 teachers, 13,340 noninstructional staff other than principals, and 700 principals would then be asked to take part in the EDSCLS benchmark study[2].

To request the most conservative estimate of response burden, Table 1 shows ranges for the numbers of respondents, responses, and burden hours, while the table totals show the maximum possible burden.

Based on reported response rates of similar surveys[3], NCES estimates an 80 percent response rate for each of the student, teacher/instructional staff, and principal/noninstructional staff surveys. The response burden is expected to be about 40 minutes for each participating student. For each participating teacher, noninstructional staff member, and principal, it is expected to be about 30 minutes. These estimates include the time to read instructions and complete all items.

The nonresponse follow-up study covers schools sampled for the EDSCLS 2016. It will conduct an interview of approximately 10 minutes with personnel from:

- 10 schools that agreed to participate in the EDSCLS 2016 (from a subsample of 20);
- 20 schools that declined to participate (from a subsample of 60);
- 30 schools that never responded to the survey request (from a subsample of 100).

The initial contact asking school personnel to participate in the interview is expected to take about 3 minutes.
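The nonresponse follow-up rows of Table 1 follow from the subsample counts above:

- 180 schools contacted;
- 60 interviews;
- a 0.33 response rate;
- 9 hours of contact burden and 10 hours of interview burden.

The short Python sketch below traces that arithmetic. It uses exact fractions so that the minute-to-hour conversions are not rounded. The group labels and variable names are illustrative, not taken from any study system.

```python
from fractions import Fraction

# (group, schools in subsample, interviews targeted), as stated in the text
groups = [
    ("agreed to participate", 20, 10),
    ("declined to participate", 60, 20),
    ("never responded", 100, 30),
]

CONTACT_MINUTES = 3     # initial request to take part in the interview
INTERVIEW_MINUTES = 10  # approximate interview length

contacted = sum(size for _, size, _ in groups)
interviewed = sum(target for _, _, target in groups)
response_rate = Fraction(interviewed, contacted)

# Convert total minutes to hours exactly
contact_hours = Fraction(contacted * CONTACT_MINUTES, 60)
interview_hours = Fraction(interviewed * INTERVIEW_MINUTES, 60)

print(contacted, interviewed, response_rate, contact_hours, interview_hours)
# prints: 180 60 1/3 9 10
```

Table 1 shows the same quantities in rounded decimal form: 0.33 for one-third, 0.05 hours for 3 minutes, and 0.17 hours for 10 minutes.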

The hourly rates are $28.45 for teachers/instructional staff, $44.68 for principals, and $21.34 for noninstructional staff/coordinators. They are based on the Bureau of Labor Statistics (BLS) May 2015 National Occupational Employment and Wage Estimates[4], assuming 2,080 work hours per year. The federal minimum wage of $7.25 is used as the hourly rate for students. Across the benchmark study and the nonresponse follow-up study, a maximum of 241,331 burden hours is anticipated, resulting in a cost to respondents of approximately $2,489,293.

Table 1. Estimate of hourly burden for recruitment and participation in EDSCLS Benchmark Study[5]

| Type | Sample size | Expected response rate | Number of respondents | Number of responses | Hours per respondent | Total hours |
|---|---|---|---|---|---|---|
| Recruitment | | | | | | |
| School – initial contact | 1,000 | -- | 1,000 | 1,000 | 0.05 | 50 |
| School – follow-up via phone or e-mail | 1,000 | -- | 1,000 | 1,000 | 0.15 | 150 |
| School – confirmation | 1,000 | 0.5 | 500 | 500 | 0.05 | 25 |
| Special handling districts – initial contacts | 108 | -- | 108 | 108 | 0.05 | 5 |
| Special handling districts – follow-up | 50 | -- | 50 | 50 | 0.15 | 8 |
| Special handling districts – approval | 78 | 0.7 | 55 | 55 | 8 | 440 |
| School contact (nonresponse follow-up survey) | 180 | -- | 180 | 180 | 0.05 | 9 |
| Maximum estimated subtotal | -- | -- | 1,393 | 2,893 | -- | 687 |
| Participation | | | | | | |
| Students | 18,840–365,690 | 0.8 | 15,072–292,552 | 15,072–292,552 | 0.67 | 10,098–196,010 |
| Teachers (instructional staff survey) | 1,880–27,520 | 0.8 | 1,504–22,016 | 1,504–22,016 | 0.5 | 752–11,008 |
| Principals (noninstructional staff survey) | 700 | 0.8 | 560 | 560 | 0.5 | 280 |
| Noninstructional staff survey (not principals) | 13,340 | 0.8 | 10,672 | 10,672 | 0.5 | 5,336 |
| Coordinators | 700 | 1 | 700 | 700 | 20–40 | 14,000–28,000 |
| Principals (nonresponse follow-up survey) | 180 | 0.33 | 60 | 60 | 0.17 | 10 |
| Maximum estimated subtotal | -- | -- | 326,560 | 326,560 | -- | 30,476–240,644 |
| Maximum estimated total burden | -- | -- | 327,953 | 329,453 | -- | 241,331 |
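Each row total in Table 1 is the number of respondents multiplied by the hours per respondent. The respondent cost is each row's hours multiplied by the applicable hourly rate.

The Python sketch below recomputes the maximum totals and the cost from those inputs. It rests on two assumptions:

- Hours are rounded to whole numbers, with halves rounded up.
- All recruitment time is valued at the principal rate. The text does not state which rate applies to recruitment, but this assumption reproduces the reported $2,489,293.

Row labels are abbreviated from the table, and the helper names are hypothetical.

```python
from decimal import Decimal, ROUND_HALF_UP

# Hourly rates stated in the text
STUDENT = Decimal("7.25")            # federal minimum wage
TEACHER = Decimal("28.45")
PRINCIPAL = Decimal("44.68")
NONINSTRUCTIONAL = Decimal("21.34")  # noninstructional staff and coordinators


def whole(x: Decimal) -> int:
    """Round to a whole number with halves rounded up (assumed Table 1 convention)."""
    return int(x.quantize(Decimal("1"), rounding=ROUND_HALF_UP))


def row_hours(respondents: int, hours_each: str) -> int:
    """Total hours for one row: respondents x hours per respondent, rounded."""
    return whole(Decimal(respondents) * Decimal(hours_each))


# (row, respondents, hours per respondent, hourly rate)
# ASSUMPTION: all recruitment time is valued at the principal rate.
recruitment = [
    ("School - initial contact", 1000, "0.05", PRINCIPAL),
    ("School - follow-up", 1000, "0.15", PRINCIPAL),
    ("School - confirmation", 500, "0.05", PRINCIPAL),
    ("Districts - initial contacts", 108, "0.05", PRINCIPAL),
    ("Districts - follow-up", 50, "0.15", PRINCIPAL),
    ("Districts - approval", 55, "8", PRINCIPAL),
    ("Nonresponse follow-up - school contact", 180, "0.05", PRINCIPAL),
]

# Maximum scenario: respondents = universe sample size x 0.8 response rate
participation = [
    ("Students", whole(Decimal(365690) * Decimal("0.8")), "0.67", STUDENT),
    ("Teachers", whole(Decimal(27520) * Decimal("0.8")), "0.5", TEACHER),
    ("Principals", whole(Decimal(700) * Decimal("0.8")), "0.5", PRINCIPAL),
    ("Noninstructional staff", whole(Decimal(13340) * Decimal("0.8")), "0.5", NONINSTRUCTIONAL),
    ("Coordinators", 700, "40", NONINSTRUCTIONAL),  # upper end of the 20-40 hour range
    ("Nonresponse follow-up - interviews", 60, "0.17", PRINCIPAL),
]

recruit_hours = sum(row_hours(n, h) for _, n, h, _ in recruitment)
participate_hours = sum(row_hours(n, h) for _, n, h, _ in participation)
total_hours = recruit_hours + participate_hours
total_cost = whole(sum(row_hours(n, h) * rate for _, n, h, rate in recruitment + participation))

print(recruit_hours, participate_hours, total_hours, total_cost)
# prints: 687 240644 241331 2489293
```

The code uses `Decimal` rather than floats so that products such as 50 × 0.15 = 7.5 round exactly as the table does (to 8).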

A.13 Estimates of Cost to Respondents

Respondents will incur no costs for participation in the EDSCLS 2017 benchmark study or the nonresponse follow-up study beyond the time to respond.

A.14 Costs to the Federal Government

The total cost to the government of the EDSCLS National Benchmark Study data collection is approximately $1.3 million, including all direct and indirect costs of the data collection. The cost increase over the previously approved estimate reflects three changes:

- additional recruitment efforts for special handling districts;
- more intensive school recruitment (two more rounds of phone contact and sending field staff to schools near AIR);
- keeping staff on the recruitment team longer to respond to questions from schools and districts (eight months planned for 2017, up from three months planned in 2016).

There will be no cost to the government for the nonresponse follow-up study.

A.15 Reasons for Changes in Response Burden and Costs

Since the last approval in May 2016 (OMB# 1850-0923 v.4), NCES has decided to expand follow-up recruitment efforts to all 1,000 schools, rather than only the primary batch of approximately 714 schools. This change is intended to offset the low school response rates experienced to date. The number of corresponding special handling districts has also been updated. NCES’s burden tables continue to reflect the maximum possible respondent burden. This maximum assumes that 700 schools participate and that all of them choose to survey all of their grade 5–12 students and staff.

A.16 Publication Plans and Time Schedule

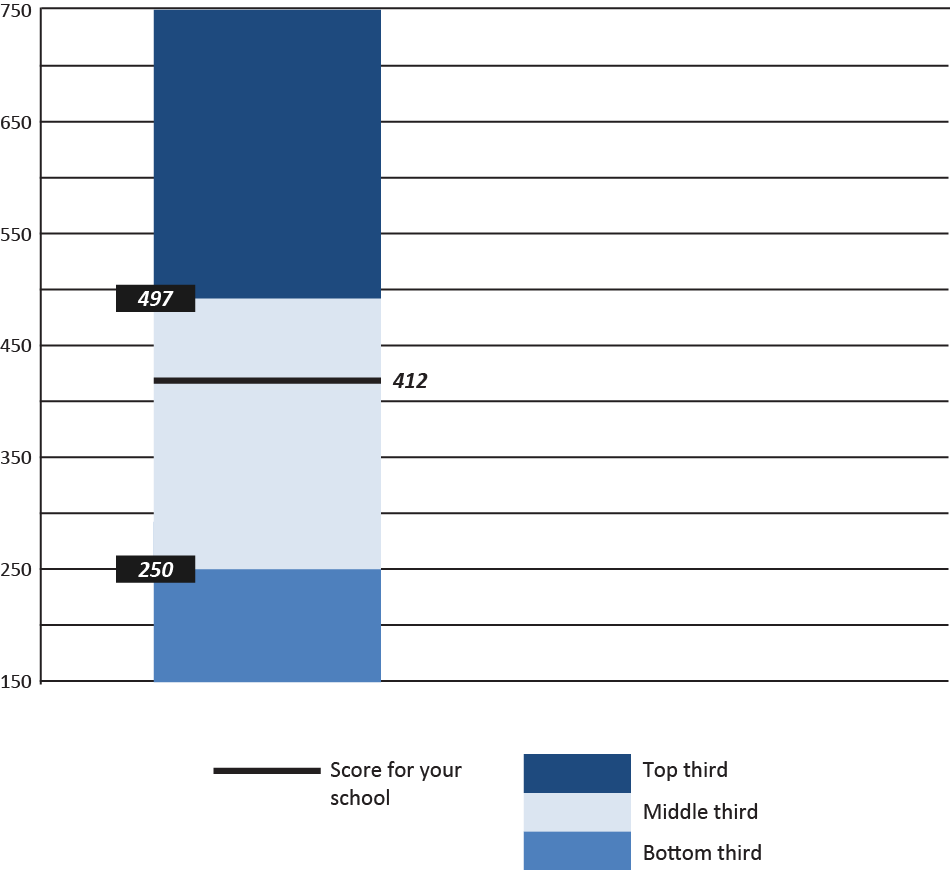

The EDSCLS national benchmark data will be made publicly available through the second release of the EDSCLS platform in fall 2017. These national data will provide context for school scores. For example, figure 2 shows one possible way of displaying tertiles based on the national data alongside a school’s score. For more information, please see appendix E. We also plan to provide respondent-level subgroup reporting by grade (students only), sex, and race/ethnicity. If the number of respondents in a racial group does not meet reporting standards, that group will be collapsed into an “other” category.

Figure 2. SCLS scale report: Bar display with national information

After analyzing the results, NCES will publish a report with national benchmark results in fall 2017.

The anticipated schedule for the 2017 EDSCLS benchmark study is as follows:

April – December 2016: Submission of research applications to special handling districts and recruitment of sampled schools.

December 2016 – June 2017: National benchmark data collection from 500 schools.

Fall 2017: Release of a revised school climate platform with national benchmark reporting, available for download, and a report with national benchmark results.

The nonresponse follow-up study will be conducted in June of 2016.

A.17 Approval to Not Display Expiration Date for OMB Approval

No special exception is being requested.

A.18 Exceptions to Certification for Paperwork Reduction Act Submissions

No exceptions to the certification statement identified in the Certification for Paperwork Reduction Act Submissions of OMB Form 8 are being sought.

1 In the pilot study, of 50 participating schools, 46 fielded the student survey, 37 fielded the instructional staff survey, 30 fielded the noninstructional staff survey, and only eight fielded the parent survey. During the debriefing meetings, some host sites said they were unsure how to contact parents who did not have e-mail addresses or internet-capable devices and how to administer the surveys to them. These schools primarily contacted parents by phone. In the eight schools where parents were surveyed, log-in credentials were mailed to parents or sent home with students.

2 Based on 2013-14 Common Core of Data (CCD) data, which indicate the following: the 700 participating schools enroll approximately 440,340 students in total, the average student-to-teacher ratio is 16 to 1, and the ratio of students to administrative and all other support staff is 33 to 1.

3 These surveys include the Conditions for Learning Survey in Cleveland, the Safe and Supportive Schools Survey in Iowa, the Maryland Youth Risk Behavior Survey, and the Albuquerque Public Schools Staff School Climate Survey.

4 The wage rates below are from the May 2015 National Occupational Employment and Wage Estimates sponsored by the Bureau of Labor Statistics (BLS). The average hourly earnings of teachers/instructional staff are $28.45, the average of the hourly rates for Elementary and Middle School Teachers ($27.91) and Secondary School Teachers ($28.98). The rate for noninstructional staff is $21.34, and the rate for principals/education administrators is $44.68. Where a mean hourly wage was not provided, it was computed from the annual wage assuming 2,080 hours per year. The exception is the student wage rate, which is based on the federal minimum wage. Source: BLS Occupational Employment Statistics, http://data.bls.gov/oes/; data type: occupation codes: Elementary and Middle School Teachers (25-2020) and Secondary School Teachers (25-2030); Education, Training, and Library Workers, All Other (Elementary and Secondary Schools) (25-9099); and Education Administrators, Elementary and Secondary Schools (11-9032); accessed on April 5, 2016.

5 To request the most conservative estimate of response burden, table 1 shows estimated ranges for the numbers of respondents, responses, and burden hours, while the table totals show the maximum possible burden.
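The teacher rate in footnote 4 is consistent with a simple mean of the two quoted BLS hourly rates: $27.91 and $28.98 average to $28.445, which rounds to $28.45. The 2,080-hour rule converts an annual wage into an hourly one. The minimal sketch below reproduces both steps with Python's `decimal` module, which avoids binary floating-point rounding. Three things are assumptions: that the mean is unweighted, that halves round up to the nearest cent, and the annual wage used in the example, which is a hypothetical value and not a BLS figure. `to_cents` and `hourly_from_annual` are hypothetical helpers.

```python
from decimal import ROUND_HALF_UP, Decimal

CENT = Decimal("0.01")
HOURS_PER_YEAR = Decimal(2080)  # full-time year assumed in footnote 4


def to_cents(amount):
    """Round a dollar amount to the nearest cent, halves rounded up (assumed)."""
    return amount.quantize(CENT, rounding=ROUND_HALF_UP)


def hourly_from_annual(annual_wage):
    """Derive an hourly wage from an annual wage using 2,080 hours per year."""
    return to_cents(annual_wage / HOURS_PER_YEAR)


# Teacher rate: unweighted mean of the two BLS hourly rates in footnote 4.
elementary_middle = Decimal("27.91")
secondary = Decimal("28.98")
teacher_rate = to_cents((elementary_middle + secondary) / 2)
print(teacher_rate)  # 28.45

# Hypothetical annual wage, for illustration only (not a BLS figure).
print(hourly_from_annual(Decimal("59176")))  # 28.45
```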


© 2026 OMB.report | Privacy Policy