2558ss01 revised 03-28-18

Willingness to Pay Survey to Evaluate Recreational Benefits of Nutrient Reductions in Coastal New England Waters (New)

OMB: 2080-0084

Supporting Statement for Information Collection Request for

Willingness to Pay Survey to Evaluate Recreational Benefits of Nutrient Reductions in Coastal New England Waters

January 26, 2017

Revision in response to OMB Comments: March 28, 2018

TABLE OF CONTENTS

PART A OF THE SUPPORTING STATEMENT

1. Identification of the Information Collection

1(a) Title of the Information Collection

1(b) Short Characterization (Abstract)

2. Need for and Use of the Collection

2(a) Need/Authority for the Collection

2(b) Practical Utility/Users of the Data

3. Non-duplication, Consultations, and Other Collection Criteria

3(b) Public Notice Required Prior to ICR Submission to OMB

3(d) Effects of Less Frequent Collection

4. The Respondents and the Information Requested

5(b) Collection Methodology and Information Management

5(c) Small Entity Flexibility

6. Estimating Respondent Burden and Cost of Collection

6(a) Estimating Respondent Burden

6(b) Estimating Respondent Costs

6(c) Estimating Agency Burden and Costs

6(d) Respondent Universe and Total Burden Costs

6(e) Bottom Line Burden Hours and Costs

6(f) Reasons for Change in Burden

PART B OF THE SUPPORTING STATEMENT

1. Survey Objectives, Key Variables, and Other Preliminaries

2(a) Target Population and Coverage

2(c) Precision Requirements

2(d) Questionnaire and Mail Materials Design

4. Collection Methods and Follow-up

4(b) Survey Response and Follow-up

List of Attachments

Attachment 1 – Draft survey instrument: general recreation

Attachment 2 – Draft invitation letter

Attachment 3 – Responses to public comments on Federal Register Notices

PART A OF THE SUPPORTING STATEMENT

1. Identification of the Information Collection

1(a) Title of the Information Collection

Willingness to Pay Survey to Evaluate Recreational Benefits of Nutrient Reductions in Coastal New England Waters

1(b) Short Characterization (Abstract)

New England’s coastal social-ecological systems are subject to chronic environmental problems, including water quality degradation that results in important social and ecological impacts. Researchers at the U.S. Environmental Protection Agency’s (EPA) Office of Research and Development (ORD), Atlantic Ecology Division (AED) are piloting an effort to better understand how reduced water quality due to nutrient enrichment affects the economic prosperity, social capacity, and ecological integrity of coastal New England communities. This research is part of two major research efforts within the EPA: (1) Task 4.61 of ORD’s Sustainable and Healthy Communities Research Program (Integrated Solutions for Sustainable Communities: Social-Ecological Systems for Resilience and Adaptive Management in Communities - A Cape Cod Case Study), and (2) Task 3.04A of the Safe and Sustainable Waters Research Program (National Water Quality Benefits: Economic Case Studies of Water Quality Benefits), which is part of a three-office effort within EPA (Office of Research and Development, Office of Policy, and Office of Water) to quantify and monetize the benefits of water quality improvements across the nation.

As part of these two research efforts, we propose to conduct a survey that will allow us to estimate changes in recreation demand and values due to changes in nutrients in northeastern U.S. coastal waters. Our initial geographic focus will be Cape Cod, Massachusetts (“the Cape”; Barnstable County), and New England residents within 100 miles of the Cape. We focus on Cape Cod and its surrounding coastal areas both to keep the scope of the work feasible within our research budget and to coordinate this socio-economic analysis with extensive ecological research being conducted on the Cape by ORD researchers, researchers at EPA’s Region 1 office, and other external research groups. Cape Cod is also in the midst of an extensive regional planning effort related to its coastal waters, and this research can provide helpful socio-economic information to decision makers about the use of those waters. Because the area within 100 miles of Cape Cod, to which we will generalize results, includes a large part of southern New England and the largest population centers in New England, the results will be relevant to understanding the coastal recreation and water quality perceptions of a large share of southern New England residents.

One of the key water quality concerns on Cape Cod, and throughout New England, is nitrogen from nonpoint sources, which leads to ecological impairments in estuaries with resulting socio-economic impacts. The towns on the Cape are currently creating plans to address their total maximum daily load (TMDL) thresholds for nitrogen-impaired coastal embayments. There are over 40 coastal embayments and subembayments on the Cape. To date, the EPA has approved 12 TMDLs for embayments on Cape Cod, with others pending review (Cape Cod Commission, 2015). The Massachusetts Estuaries Project estimates that wastewater accounts for 65% of the nitrogen sources on the Cape (Cape Cod Commission, 2015). Because Cape Cod’s wastewater is primarily handled by onsite septic systems (85% of total Cape wastewater flows), the main nitrogen sources are dispersed across the Cape and are affected by individual household-level decisions as well as community-level decisions. Coordinated through the Cape Cod Commission and based on the Cape’s Clean Water Act Section 208 Plan, communities across the Cape have been tasked with developing a watershed-based approach to addressing water quality in order to improve valued socio-economic and ecological conditions. The decisions needed to meet water quality standards are highly complex and involve significant cross-disciplinary challenges in identifying, implementing, and monitoring social and ecological management needs. We will focus on understanding recreational uses as valued ecosystem services on the Cape (including beachgoing, swimming, fishing, shellfishing, and boating).

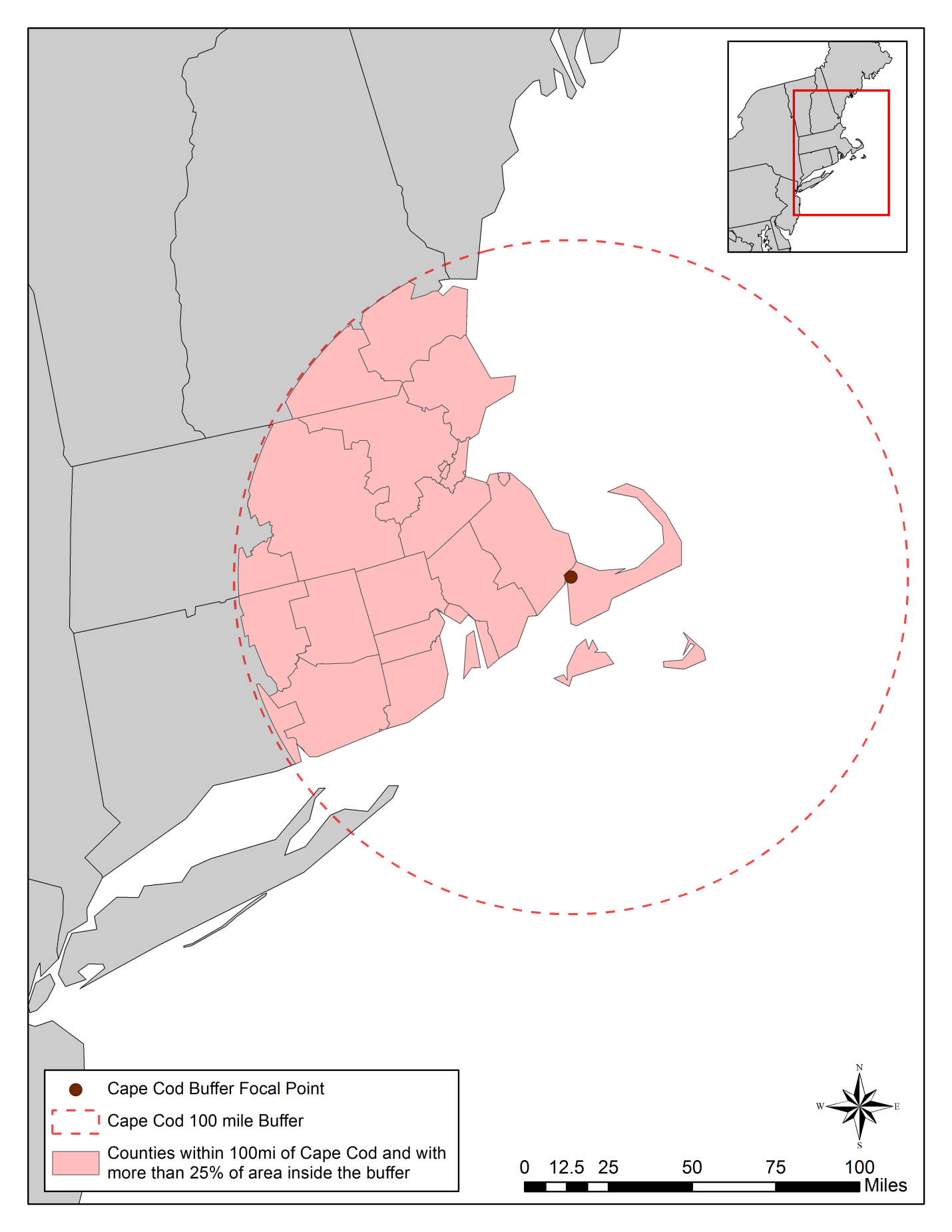

As part of these efforts, EPA’s ORD/AED is seeking approval to conduct a revealed preference survey to collect data on people’s saltwater recreational activities; how recreational values are related to water quality; how perceptions of water quality relate to objective measures; the connections between perceptions of water quality, recreational choices and values, and sense of place; and demographic information. If approved, the survey will be administered using a mixed-mode approach: a mailed invitation to a web survey, with an optional paper survey for people who are unable or unwilling to answer the web survey. The survey will be sent to 8,400 households (in addition to a pretest of 370 households) in counties where more than 25% of the county’s geographic area falls within 100 miles of the Cape, as measured from a beach in Bourne, Massachusetts, which is the first town on Cape Cod heading east. This area includes coastal counties of New Hampshire, the eastern half of Massachusetts, all of Rhode Island, and the eastern part of Connecticut. Table A1 lists the included counties, and Figure A1 shows the sample area on a map. In addition, we will oversample residents of Cape Cod by sending 750 surveys to this group. Thus, the total sample for the main survey will be 9,150 (8,400 plus 750).
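The county inclusion rule can be approximated numerically. The sketch below is an illustration only, not the procedure used to build Table A1. It assumes approximate coordinates for Bourne (41.74° N, 70.60° W) in place of the specific beach used as the reference point, assumes that a set of points sampled uniformly over each county's area is available (hypothetical input), and treats the share of those points within 100 miles (great-circle distance) as the share of county area within the radius.

```python
import math

# Assumption: approximate coordinates for Bourne, MA. The supporting statement
# measures from a specific beach in Bourne whose coordinates are not given.
BOURNE_REF = (41.74, -70.60)
EARTH_RADIUS_MILES = 3958.8  # mean Earth radius


def haversine_miles(a, b):
    """Great-circle distance in miles between two (lat, lon) points in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat = lat2 - lat1
    dlon = lon2 - lon1
    h = (math.sin(dlat / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2)
    return 2 * EARTH_RADIUS_MILES * math.asin(math.sqrt(h))


def share_within_radius(points, ref=BOURNE_REF, radius_miles=100.0):
    """Share of points within radius_miles of ref.

    If `points` are sampled uniformly over a county's area (hypothetical
    input), this approximates the share of county area within the radius.
    """
    if not points:
        raise ValueError("points must be non-empty")
    inside = sum(1 for p in points if haversine_miles(p, ref) <= radius_miles)
    return inside / len(points)


def county_included(points, threshold=0.25, **kwargs):
    """Inclusion rule: more than `threshold` of the county lies within the radius.

    Use >= instead of > if the "at least 25 percent" wording is intended.
    """
    return share_within_radius(points, **kwargs) > threshold


# Usage with made-up points: one at the reference, three far to the west.
example = [BOURNE_REF, (40.0, -80.0), (40.0, -80.0), (40.0, -80.0)]
print(share_within_radius(example), county_included(example))  # 0.25 False
```

A real implementation would work from county boundary polygons in an equal-area projection; the point-sampling approach is used here only because it needs nothing beyond the standard library.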

ORD will use the survey responses to estimate willingness to pay for changes related to reductions in nutrient and pathogen loadings to coastal New England waters. The analysis relies on state-of-the-art theoretical and statistical tools for non-market welfare analysis. A non-response bias analysis will also be conducted to inform the interpretation and validation of survey responses.

The total national burden estimate for all components of the survey is 563 hours, based on 90 responses to 370 pretest surveys and 2,163 responses to 9,150 main surveys. Assuming each respondent needs 15 minutes to complete the survey, the total respondent cost for the pretest and main survey combined is $19,618, using an average New England wage rate of $34.83 per hour (United States Department of Labor, 2016).
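The burden and cost figures can be checked with simple arithmetic. The sketch below uses only the inputs stated above; it shows that the 563-hour figure is 563.25 hours rounded, and that the $19,618 cost is computed from the unrounded hours.

```python
# Inputs as stated in the supporting statement.
PRETEST_COMPLETES = 90
MAIN_COMPLETES = 2_163
MINUTES_PER_RESPONSE = 15
WAGE_PER_HOUR = 34.83  # average New England wage rate, USD per hour


def burden_hours(completes, minutes_per_response):
    """Total respondent burden in hours."""
    return completes * minutes_per_response / 60


def burden_cost(hours, wage_per_hour):
    """Respondent cost in dollars: burden hours times the hourly wage."""
    return hours * wage_per_hour


total_completes = PRETEST_COMPLETES + MAIN_COMPLETES
hours = burden_hours(total_completes, MINUTES_PER_RESPONSE)
cost = burden_cost(hours, WAGE_PER_HOUR)
print(f"{total_completes} responses, {hours:.2f} hours, ${cost:,.0f}")
# 2253 responses, 563.25 hours, $19,618
```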

2. Need for and Use of the Collection

2(a) Need/Authority for the Collection

Within EPA, this work will provide data to two of ORD’s research programs: the Safe and Sustainable Water Resources Research (SSWR) Program and the Sustainable and Healthy Communities (SHC) Research Program. One of the four objectives identified in the SSWR research plan is to quantify the benefits of water quality, because the values of many ecosystem services of water systems have not been estimated, or existing estimates are not up to date or comprehensive with regard to geographic and policy scope. More effective valuation of the benefits of water quality improvements is therefore expected to aid in the protection or restoration of water quality (U.S. EPA, 2015a). This research also falls within the fourth objective of the SHC research plan: to develop the causal relationships between human well-being and environmental conditions (U.S. EPA, 2015b). Recreation benefits are a cultural ecosystem service obtained from the protection of natural resources, including coastal systems, that contribute to human well-being (Millennium Ecosystem Assessment, 2005). This research will improve EPA’s ability to characterize the recreational benefits of improved water quality in coastal communities.

Currently, very little is known about recreational uses of, values for, and attitudes towards waterbodies in New England coastal communities that, like Cape Cod, face problems of nutrient overenrichment driven primarily by non-agricultural nonpoint sources. This limits EPA’s ability to assess the full economic and social impacts of nutrient overenrichment. Little is also known about how people’s perceptions of water quality relate to actual water quality measures. The proposed survey will focus on recreational uses of coastal waters; in particular, it will focus on estimating economic values for, and attitudes and perceptions towards, water quality and water-contact recreation. Specifically, the survey instrument will elicit revealed preferences that can be used to estimate non-market economic values associated with recreational uses of coastal and estuarine waterbodies, as well as related attitudes towards those waterbodies that could affect the elasticity of demand for, and future use of, those waterbodies. Data obtained by the survey are intended to be analyzed in a random-utility model for valuing water-contact recreation, along with ancillary modeling of water quality perceptions and of hypotheses related to people’s sense of place.

The primary purpose of this study is to conduct research on several topics: participation in coastal recreation, including water-contact recreation; people’s perceptions of water quality and the factors that most strongly influence those perceptions; the relationship of water quality perceptions to objective measures; and the use of sense of place metrics in economic valuation models. The survey is being proposed by the EPA Office of Research and Development and is not associated with any EPA regulatory ruling. Design decisions were therefore made with the aim of making research contributions. We do not intend that results from this study will be used for any specific policy or rule, although we understand that they may provide valuable input to future decisions. While the primary purpose of the study is to examine these research questions and contribute to understanding of the connections between people and coastal water quality, we anticipate that the findings on the potential recreational benefits of water quality policies and actions for coastal waters (for example, implementation of required TMDLs, green infrastructure solutions, or other actions) will be of interest to regional and state partners and to communities implementing such policies and actions.

Specifically, the survey will be used to estimate recreational users’ values for changes in water quality in coastal New England waters. Water quality models will be used to predict how water quality is likely to change under various policy scenarios and baseline conditions. Model predictions and valuation survey data will be combined to estimate recreational economic benefits under different hypothetical policy scenarios. In sum, the primary objective of the collection is to conduct research on important topics regarding coastal recreation and water quality. We are not conducting this research to inform decisions about a specific policy or policies, but to provide information that will further the understanding of our research questions and potentially provide value to future decisions.
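One standard way to combine water quality model predictions with a random utility model is the log-sum (expected maximum utility) welfare measure. The sketch below assumes a conditional logit site-choice model whose utility is linear in trip cost and a single water quality index; the coefficients, costs, and quality values in the usage example are hypothetical placeholders, not estimates from this study, whose actual specification is detailed in Part B.

```python
import math


def utilities(trip_costs, quality, beta_cost, beta_quality):
    """Deterministic utility of each site: V_j = beta_cost*c_j + beta_quality*q_j."""
    return [beta_cost * c + beta_quality * q for c, q in zip(trip_costs, quality)]


def choice_probabilities(v):
    """Conditional logit choice probabilities, computed stably by subtracting max(v)."""
    m = max(v)
    expv = [math.exp(x - m) for x in v]
    total = sum(expv)
    return [e / total for e in expv]


def log_sum(v):
    """Expected maximum utility up to a constant: log(sum_j exp(V_j))."""
    m = max(v)
    return m + math.log(sum(math.exp(x - m) for x in v))


def wtp_per_trip(trip_costs, q_base, q_policy, beta_cost, beta_quality):
    """Per-trip compensating variation from a change in site quality.

    Uses (LS_policy - LS_base) / (-beta_cost), which assumes utility is
    linear in trip cost with beta_cost < 0 (constant marginal utility of income).
    """
    if beta_cost >= 0:
        raise ValueError("beta_cost must be negative")
    v0 = utilities(trip_costs, q_base, beta_cost, beta_quality)
    v1 = utilities(trip_costs, q_policy, beta_cost, beta_quality)
    return (log_sum(v1) - log_sum(v0)) / (-beta_cost)


# Hypothetical inputs: three sites and a policy scenario that improves site 1 only.
costs = [10.0, 25.0, 40.0]
q_base = [0.5, 0.6, 0.4]
q_policy = [0.8, 0.6, 0.4]
print(choice_probabilities(utilities(costs, q_base, -0.05, 2.0)))
print(wtp_per_trip(costs, q_base, q_policy, -0.05, 2.0))
```

Because this sketch has no stay-at-home alternative, the result is a value per trip, conditional on a trip being taken; estimating seasonal values would require a participation component or a no-trip alternative.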

We will integrate the economic model with information about differences in respondents’ perceptions of water quality and sense of place in order to better understand how people respond to and benefit from nutrient reductions. Sense of place is the imbuement of meaning into a physical setting. It is often characterized for a particular setting in terms of a) physical characteristics, b) patterns of interactions and behaviors, c) non-evaluative descriptive meaning, and d) evaluative meaning of attachment, dependence, satisfaction, and identity (Stedman et al., 2006). By combining valuation methods from environmental economics with social science approaches, specifically sense of place, we seek to characterize the social-ecological system more richly and to test whether sense of place elicitation methods help explain differences in economic values associated with changes in water quality.
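One common way to let sense of place shift economic values is to interact a respondent-level sense of place score with the water quality attribute in the utility function, so that marginal WTP for water quality varies with the score. The sketch below is a hypothetical specification for illustration only; the score, the coefficient names, and their values are assumptions, not part of the study design described here.

```python
def marginal_wtp_quality(beta_cost, beta_quality, gamma, sop_score):
    """Marginal WTP per unit of water quality for one respondent.

    Hypothetical utility: V_ij = beta_cost*c_ij + (beta_quality + gamma*S_i)*q_j,
    where S_i is respondent i's sense of place score. Marginal WTP is the
    quality coefficient divided by the negative of the cost coefficient.
    """
    if beta_cost >= 0:
        raise ValueError("beta_cost must be negative")
    return (beta_quality + gamma * sop_score) / (-beta_cost)


# Hypothetical coefficients: a positive gamma means respondents with a
# stronger sense of place value water quality more.
for score in (0.0, 1.0, 2.0):
    print(score, marginal_wtp_quality(-0.05, 2.0, 0.5, score))
```

Testing whether gamma differs from zero is one way such a model could assess whether sense of place explains differences in values.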

2(b) Practical Utility/Users of the Data

The proposed survey is primarily exploratory research. A continuing problem for communities dealing with natural resource management problems is how to integrate natural resource valuation into a feasible decision-making process. One of the primary reasons for conducting economic valuation studies is to improve the way communities frame choices regarding the allocation of scarce resources and to clarify the trade-offs between alternative outcomes. This problem is particularly relevant for coastal recreation in New England, especially on Cape Cod. Despite the deep cultural importance of coastal recreation to New England residents, there is a remarkable lack of valid empirical economic and social studies quantifying this importance to the general public who live in the region.

There are many challenges to managing water quality in New England waters, and decision-makers often face trade-offs when allocating resources among competing uses. The goal of this project is to obtain estimates of economic values for, and attitudes and perceptions towards, water quality and water-contact recreation. These estimates of public values and attitudes will be useful in numerous policy contexts and will support government agencies and community organizations that seek to integrate the value of recreation into their strategic water quality policy and financial decisions. Analysis of the revealed preference survey results, detailed in Part B of this Supporting Statement, will follow standard practices outlined in the literature (Parsons, 2014; Phaneuf & Smith, 2005).
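Travel cost models of the kind referenced above typically construct each respondent's trip cost from travel distance and travel time. The sketch below is a common textbook form, not necessarily the specification this study will use; the per-mile vehicle cost and the valuation of travel time at one-third of the wage rate are assumptions chosen for illustration.

```python
def trip_cost(one_way_miles, one_way_hours, cost_per_mile, hourly_wage,
              time_value_share=1 / 3):
    """Round-trip travel cost: vehicle operating cost plus the value of travel time.

    Assumptions (illustrative only): a constant per-mile vehicle cost, and
    travel time valued at a fixed share of the hourly wage.
    """
    round_trip_miles = 2 * one_way_miles
    round_trip_hours = 2 * one_way_hours
    vehicle_cost = round_trip_miles * cost_per_mile
    time_cost = time_value_share * hourly_wage * round_trip_hours
    return vehicle_cost + time_cost


# Hypothetical trip: 50 miles and 1 hour each way at $0.20 per mile, using the
# $34.83 average New England wage rate cited in Section 1(b).
print(round(trip_cost(50, 1.0, 0.20, 34.83), 2))  # 43.22
```

The share of the wage used to value travel time is a modeling choice that strongly affects welfare estimates, so analysts usually report sensitivity to it.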

The results of the study will be made available to EPA regional offices and to state and local governments, which may use them to better understand the preferences of households in their jurisdictions and the benefits they can expect from actions to improve coastal water quality. Additionally, stakeholders and the general public will be able to use this information to better understand the social benefits of improving water quality in coastal waters.

3. Non-duplication, Consultations, and Other Collection Criteria

3(a) Non-duplication

To the best of our knowledge, this study is unique and does not duplicate other efforts. This is the first revealed preference recreational use study related to water quality for Cape Cod/southern New England in over 20 years, and the research will be important to address similar issues in other coastal communities (e.g., Long Island, NY, which is facing similar issues and decisions). This research intends to design a survey for developing a random-utility model for recreation site choice in New England waterbodies that integrates the differences in sense of place and water quality for coastal recreation users.

There are many studies in the environmental economics literature that quantify benefits, or willingness to pay (WTP), associated with water recreation, but few that address WTP as a function of nutrient impacts in coastal waters and fewer still that are recent and relevant for New England. In addition to EPA’s own survey of the literature, EPA contracted a literature review including valuation studies of recreation and water quality (WA 2-35, Contract EP-C-13-039). Of the revealed preference studies of recreation that include water quality, many are freshwater based (Murray et al., 2001; Yeh et al., 2006; Egan, 2009; Feather, 1994; Melstrom & Jayasekera, 2016). Melstrom & Jayasekera (2016) and Feather (1994) deal only with fishing, while the others address more general freshwater beach or lake visits.

A set of papers addresses bacteria and beach closures in saltwater (Bockstael et al., 1987; Parsons et al., 2009; Hilger & Hanemann, 2008). Bockstael et al. (1987) was conducted in coastal Massachusetts and is a seminal work in recreation demand modeling, but it is dated at this point and, like the others, does not address nutrient-related water quality issues. Kaoru et al. (1995) addresses nutrients in coastal waters, but again estimates changes in WTP for fishing only. That study uses nutrient loading from point sources in the National Coastal Pollutant Discharge Inventory within ten miles of a fishing site as the proxy for water quality. While it grapples with issues similar to those in our study, it is over 20 years old, covers a different geographic region, and addresses only one specialized coastal activity.

The two works closest to ours in the economic valuation literature are Opaluch et al. (1999) and Phaneuf (2002). The latter estimates changes in WTP for water quality improvements in watersheds as a function of watershed-scale water quality metrics, including EPA’s Index of Watershed Indicators as well as direct measures of pH, phosphorus, dissolved oxygen, and ammonia. The study includes both inland and coastal watersheds and a range of activities, although the results are reported only in terms of WTP for a trip of any activity type to a given watershed in North Carolina under improvement and access scenarios. Opaluch et al. (1999) estimated per-trip values for swimming, boating, fishing, and wildlife viewing on the East End of Long Island. They also estimated changes in WTP for swimming trips with changes in nitrogen, bacteria, brown tides, and Secchi depth. Their estimates are based not on a random utility model (RUM) approach but on a multiple-site count-data model, which relied on assumptions that make welfare measures inconsistent with economic demand theory (Phaneuf and Smith, 2005). Although they are dated, these estimates remain the most relevant values for coastal recreation trip days as a function of nutrient-related water quality changes in the region. Our planned study will update these estimates in time, methods, and geographic extent.

Costs and benefits of water quality improvements or impairments will accrue to different populations. While statistical methods exist to handle these issues in theory (mixed logit and latent variable techniques, for example), using sense of place methods to test differences in demand across the varying populations of a community in a policy-relevant application would allow us to quantify the disparate impacts of water quality on non-market benefits in a formal way. This location-specific study will be compared to benefit transfer, functional benefit transfer, and other higher-level approximation methods in water quality benefit estimation in order to assess the appropriateness and transferability of these methods.

Sense of place is an important metric for understanding the implications of recreation and attitudes towards natural resources. Sense of place refers to the meaning that individuals attach to a particular geographic area. That meaning is sometimes defined by natural resources, culture, or both (Stedman et al., 2006; Stedman et al., 2004). Sense of place can be a useful indicator for determining the sustainable use of different types of ecosystem services, as it captures landscape features that may be culturally important to people (de Groot et al., 2010). The United Nations Millennium Ecosystem Assessment (2005) lists it specifically as one of the nonmaterial benefits people obtain from ecosystem services.

Other studies have investigated the sense of place or place attachment of different communities related to natural resources, including permanent and seasonal residents in Utah (Matarrita-Cascante et al., 2010), second home owners on northern Wisconsin lakes (Jorgenson & Stedman, 2001; 2006), and Arctic residents in Norway (Kaltenborn, 1998). An existing body of literature also connects sense of place or place attachment to recreational opportunities, including studies of users in wilderness areas (Williams et al., 1992) and on the Appalachian Trail (Kyle et al., 2003; 200), as well as Smith et al.’s (2016) use of place attachment to explore shifting demand for winter recreation in Minnesota. This body of work provides important insights for survey design and for the use of sense of place to improve understanding of attitudes towards natural resources and recreation, but only Kaltenborn (1998) focuses on coastal communities, whose socio-economic and ecological typology differs from that of the settings in the other studies. Kaltenborn’s (1998) work focuses specifically on the residents of an archipelago in the high Arctic, which is geographically and culturally very different from our study area. Moreover, there has been no effort to connect sense of place with recreational use or water quality in coastal New England. The only related Cape Cod-specific work is that of Cuba and Hummon (1993a; 1993b), who studied how Cape Cod residents who had recently moved constructed a sense of home based on their dwelling, community, and region. While this work provides important context for some of the user groups, it does not connect with the Cape’s waterbodies, recreation, water quality, or other valued commodities.

Two other works are relevant to New England coastal recreation but do not address water quality or non-market benefits. NOAA’s National Marine Fisheries Service (NMFS) is currently processing the results from a national coastal recreation survey concerned with the market impacts of recreation spending in coastal counties (Steinback & Kosaka, 2016, OMB Control Number: 0648-0652). In designing our survey, we contacted the economists overseeing the effort, Scott Steinback and Rosemary Kosaka (personal communication, 2016), and reviewed the data collected. The NMFS survey complements ours by collecting detailed participation rates and effort estimates about which water-based activities people engage in and how often. It also collects recreational expenditure data to estimate the market economic impacts of coastal recreation. Access to these data will allow us to avoid re-collecting detailed effort estimates, saving space and time on our survey instrument, so that our survey can focus on collecting data for a single-choice-occasion RUM of recreation demand that includes water quality as a determinant. Our survey results will complement the market impacts calculated from the NOAA survey with non-market values for water recreation trips.

The other related work is based on an opt-in sample of coastal recreationists surveyed by Point 97, SeaPlan, and the Surfrider Foundation (Bloeser et al., 2015). This study used a web-mapping survey instrument to collect the locations of recreational activities along the New England coast. These data may help identify geographic areas to use as site choices for our RUM, but they do not contain the information needed to estimate non-market benefits of coastal recreation that would be representative of the general population.

To conclude, while recreation and water quality have been studied, our study adds important information to the literature for the following reasons:

It is relevant to nutrient pollution in coastal waters.

It estimates values for multiple recreational activities beyond fishing.

It collects New England regional estimates.

It provides up-to-date WTP estimates for coastal activities.

It incorporates sense of place concepts to explain varying preferences.

3(b) Public Notice Required Prior to ICR Submission to OMB

The first of the two Federal Register Notices opened on November 9, 2016 and closed on January 9, 2017. The second Federal Register Notice opened on November 13, 2017 and closed on December 13, 2017. See responses to public comments in Attachment 3.

3(c) Consultations

Preliminary consultations have been conducted with several stakeholder organizations related to this effort. Information collected with the survey may be of interest to other federal, state, and local agencies that regulate water quality, promote tourism, and engage with coastal communities. Further, the collection may be of interest to non-profit organizations and other researchers focused on the economy, communities, and environment of Cape Cod and the greater New England area. AED has made concerted efforts to keep interested parties informed of the progress of this project and to solicit feedback, and will continue to do so going forward.

AED has contracted Professor George Parsons of the University of Delaware, a topic expert, to review the survey instrument and research design. We are also working closely with the recreation group of EPA’s three-office national water quality benefits effort, which includes ORD, EPA’s National Center for Environmental Economics, and EPA Office of Water economists. We have also consulted with local environmental economics professors Kathleen Bell (University of Maine), Stephen Swallow (University of Connecticut), and Emi Uchida (University of Rhode Island).

In addition to consultations with local experts, two early presentations have been given on this work to solicit feedback from experts in the environmental economics and social science fields. The presentations were given at the International Symposium for Society and Resource Management (ISSRM) and the Northeast Agricultural and Resource Economics Association (NAREA) Annual Meetings. ISSRM is the annual symposium of the International Association for Society and Natural Resources (IASNR). As described on its website (IASNR, 2016), IASNR is a professional association that brings together diverse social sciences to focus on research of the environment. NAREA is an affiliate organization of the Agricultural and Applied Economics Association that promotes education and research on economic and social problems related to natural resource use and the environment (NAREA, 2016).

As part of the planning and design process for this collection, EPA conducted a series of seven focus groups within the study area: four in Rhode Island, two in Massachusetts, and one in Connecticut. While early focus group sessions were used to learn about people’s coastal recreational activities, the attributes of locations they care about, and the kinds of information respondents would need to answer the questions, later sessions were used to test the draft survey materials. These consultations with potential respondents were critical in identifying sections of the questionnaire that were redundant or unclear and in producing a survey instrument meaningful to respondents. The later focus group sessions were also helpful in estimating the expected amount of time respondents would need to complete the survey instrument. The focus group sessions were conducted under EPA ICR # 2205.17, OMB # 2090-0028.

As noted in the non-duplication section, EPA reached out to NOAA/NMFS. In designing our survey, we contacted the economists, Scott Steinback and Rosemary Kosaka, who are overseeing NMFS’ coastal recreation data collection effort and reviewed the data collected. We determined that the NMFS survey complements ours by collecting detailed participation rates and effort estimates.

Survey Design Team: Dr. Marisa Mazzotta at the U.S. Environmental Protection Agency, Office of Research and Development, serves as the project manager for this study. Dr. Mazzotta is assisted by Dr. Nathaniel Merrill, Dr. Kate Mulvaney, and Ms. Sarina Lyon, all with the U.S. EPA’s Office of Research and Development. Dr. George Parsons, Professor at the School of Marine Science and Policy, University of Delaware, provided review of the draft survey. Mr. Matthew Anderson, Senior Analyst at Abt Associates, provides contractor support.

Dr. George Parsons, a professor at the School of Marine Science and Policy, University of Delaware, specializes in travel cost, hedonic price, contingent valuation, and choice experiments. His work includes two summary works on travel cost methods and a number of studies on recreation RUM methods and applications. He has worked extensively valuing coastal resources using revealed preference methods.

Mr. Matthew Anderson, a senior analyst at Abt Associates, specializes in data collection and survey implementation. Mr. Anderson is trained in quantitative and behavioral research in the social sciences and has a strong background in survey research design and analysis. Mr. Anderson is currently directing a mixed-mode survey for the EPA dealing with the removal and repair of lead paint in commercial buildings. He has worked with the EPA to finalize survey instruments, create a project timeline and implementation schedule, refine sampling parameters, and coordinate the field effort. He was previously the deputy survey director for a large-scale mixed-mode national mental health survey for SAMHSA, which collected data from over 22,000 mental health facilities. He has extensive experience managing operations staff, project budgets, and instrument design and programming, and creating data cleaning specifications.

3(d) Effects of Less Frequent Collection

The survey is a one-time activity. Therefore, this section does not apply.

3(e) General Guidelines

The survey will not violate any of the general guidelines described in 5 CFR 1320.5 or in EPA’s ICR Handbook.

3(f) Confidentiality

All responses to the survey will be kept confidential to the extent provided by law. To allow us to assess whether the final survey sample is representative and diverse, the survey questionnaire will elicit basic demographic information, such as age, race and ethnicity, number of children under 18, type of employment, and income. However, the survey questionnaire will not ask respondents for personal identifying information, such as names or phone numbers. Instead, each survey response will receive a unique identification number. Prior to taking the survey, respondents will be informed that their responses will be kept confidential to the extent provided by law. The name and address of the respondent will not appear in the resulting database, preserving the confidentiality of the respondents’ identities. The survey data will be made public only after they have been thoroughly vetted to ensure that all other potentially identifying information has been removed. After data entry is complete, the surveys themselves will be destroyed.

The U.S. EPA office location (AED) and the U.S. EPA electronic file system used by the principal investigator are highly secure. A keycard possessed only by U.S. EPA employees and contractors is required to enter the building. The principal investigators work in a separate keyed office space within the secure building. During data entry, the file linking personal names and addresses to respondent numeric codes will be stored on a secure server that requires the principal investigator's personal login username and password. At the conclusion of data entry, this file will be destroyed (along with the hard-copy survey responses themselves), and only respondent codes will remain.

3(g) Sensitive Questions

The survey questionnaire will not include any sensitive questions pertaining to private or personal information, such as sexual behavior or religious beliefs.

4. The Respondents and the Information Requested

4(a) Respondents

Eligible respondents for the survey are individuals 18 years of age or older who reside in counties where at least 25 percent of the county’s geographic area falls within a 100-mile radius of Cape Cod. Table A1 lists the states and counties included, and Figure A1 maps this area. The sample will be stratified by geography, with Barnstable County, MA sampled at a rate 3.06 times higher than the rest of the population in the study area. The sample will be drawn from general population addresses of the U.S. Postal Service Delivery Sequence File (DSF).
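Because Barnstable County is sampled at a rate 3.06 times higher than the rest of the study area, unweighted estimates would over-represent Cape Cod residents. The sketch below shows one way base (design) weights could offset this, assuming each stratum's selection probability is proportional to its relative sampling rate. The stratum labels and example responses are hypothetical, and the estimator shown (a ratio, or Hájek, estimator) is a common choice rather than the Agency's stated method.

```python
# Relative sampling rates by stratum: Barnstable County is sampled at 3.06x
# the rate of the rest of the study area (reference stratum rate = 1.0).
# Stratum labels are hypothetical.
RELATIVE_RATES = {"barnstable": 3.06, "rest_of_area": 1.0}


def design_weight(stratum: str) -> float:
    """Base weight is inversely proportional to the stratum's sampling rate."""
    return 1.0 / RELATIVE_RATES[stratum]


def weighted_mean(values, strata):
    """Ratio (Hajek) estimator of a population mean using design weights."""
    weights = [design_weight(s) for s in strata]
    return sum(w * v for w, v in zip(weights, values)) / sum(weights)


# Hypothetical example: 1 = took a saltwater recreation trip, 0 = did not.
values = [1, 1, 0, 1, 0]
strata = ["barnstable", "barnstable", "barnstable", "rest_of_area", "rest_of_area"]
print(round(weighted_mean(values, strata), 3))  # 0.555 (vs. 0.6 unweighted)
```

Down-weighting the oversampled stratum pulls the estimate toward the value for the rest of the study area, which is where most of the population lives.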

Households will be selected randomly from the DSF, which covers over 97 percent of residences in the United States. The DSF includes city‐style addresses and post office boxes, and covers single‐unit, multi‐unit, and other types of housing structures. As described in Part B of this Supporting Statement, we assume that 90% of the addresses will be valid and will receive the survey. EPA will request participation from a stratified random sample of 9,520 households in two phases. The first phase, a pretest, will be sent to 370 addresses. In the pretest, we will test the process of administering the survey and evaluate whether respondents are able to answer all questions as intended. To evaluate the pretest, we will calculate summary statistics for important variables, including water recreation participation, activities, distance traveled, and demographics (see Part B Section 2(c)(iii) for specific questions). We will also examine item nonresponse for each survey question, and will examine response rates for the online and paper surveys and whether there are any major differences between the two modes. We will estimate a basic travel cost model using the pretest results. The second phase, full survey administration, will be sent to an additional 9,150 addresses. In each phase, we anticipate a response rate of 27 percent, resulting in approximately 90 and 2,163 completed surveys, respectively, after correcting for expected undeliverable rates for each county.
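The relationship between mailed addresses, deliverable addresses, and completed surveys can be checked with simple arithmetic. The sketch below applies the uniform 90 percent deliverable rate and 27 percent response rate stated above. It reproduces the pretest figure of about 90 completes; for the main phase, the uniform-rate result (about 2,223) is higher than the 2,163 target because that target reflects county-specific undeliverable rates, which are not listed here, so the sketch also backs out the implied average deliverable rate.

```python
def expected_completes(mailed: int, deliverable_rate: float, response_rate: float) -> float:
    """Expected completed surveys = addresses mailed x share deliverable x response rate."""
    return mailed * deliverable_rate * response_rate


DELIVERABLE = 0.90  # study-wide assumption; actual rates vary by county
RESPONSE = 0.27     # anticipated response rate in each phase

pretest = expected_completes(370, DELIVERABLE, RESPONSE)   # about 89.9
main = expected_completes(9_150, DELIVERABLE, RESPONSE)    # about 2,223

# Average deliverable rate implied by the 2,163 main-survey target once
# county-level undeliverable rates are applied.
implied_deliverable = 2_163 / (9_150 * RESPONSE)           # about 0.876
print(round(pretest), round(main), round(implied_deliverable, 3))
```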

Table A1 shows the included counties and anticipated completed survey sample sizes for the geographic regions included in this study. More detail on planned sampling methods and the statistical design of the survey can be found in Part B of this supporting statement.

Table A1: Anticipated Sample Sizes by State and County

| State | Counties Included | Phase 1: Pretest Sample Size¹ | Phase 1: Pretest Percentage of Sample | Phase 2: Full Survey Sample Size¹ | Phase 2: Full Survey Percentage of Sample |
|---|---|---|---|---|---|
| New Hampshire | Hillsborough, Rockingham | 7 | 8% | 166 | 8% |
| Massachusetts | Barnstable², Bristol, Dukes, Essex, Hampden, Middlesex, Nantucket, Norfolk, Plymouth, Suffolk, Worcester | 64 | 71% | 1,581 | 73% |
| Rhode Island | Bristol, Kent, Newport, Providence, Washington | 11 | 12% | 250 | 12% |
| Connecticut | Middlesex, New London, Tolland, Windham | 8 | 9% | 166 | 8% |
| Total | | 90 | 100% | 2,163 | 100% |

¹ Sample sizes presented in this table reflect total expected completed surveys, accounting for expected undeliverable rates by county.
² Includes oversampling of Barnstable County.

Figure A1. Counties included in sampling area.

4(b) Information Requested

(i) Data items, including recordkeeping requirements

EPA developed the survey based on the findings of a series of seven focus groups conducted as part of survey instrument development (EPA ICR # 2205.17, OMB # 2090-0028). Focus groups provided valuable feedback which allowed EPA to iteratively edit and refine the questionnaire, and eliminate or improve imprecise, confusing, and redundant questions. In addition, later focus groups provided useful information on the approximate amount of time needed to complete the survey instrument. This information informed our burden estimates. Focus groups were conducted following standard approaches in the literature (Desvousges et al., 1984; Desvousges & Smith, 1988; Johnston et al., 1995).

EPA has determined that all questions in the survey are necessary to achieve the goal of this information collection, i.e., to collect data that can be used to support an analysis of recreation and water quality. The draft survey is included as Attachment 1 and is described in more detail in Part B of this document. The survey has 5 sections: (1) Your Saltwater Recreation in New England, which gathers participation and effort data for saltwater recreation; (2) Your Most Recent Saltwater Recreation in New England, which gathers information on the last saltwater recreation trip; (3) Other Places for Saltwater Recreation, which elicits water quality perceptions for other locations where the respondent goes for saltwater recreation, asks about the farthest the respondent would travel in a single day for saltwater recreation, and asks how respondents respond to bacteria and beach closures; (4) Your Opinions on Coastal Water Quality in New England, which asks for the respondent's opinions about a set of impacts of water quality issues in New England; and (5) About Your Household, which asks for demographic information, the residence zip code and the zip code where the respondent works, and whether the respondent owns a second home and, if so, its zip code.

(ii) Respondent activities

EPA expects individuals to engage in the following activities during their participation in the survey:

Go online to answer a web survey, or answer a paper survey that will be mailed to those who do not respond to the web survey within 14 days of mailing the second web survey invitation.

Review the brief background information provided in the beginning of the survey document.

Complete the survey questionnaire, either online or on paper, and, if completing the paper version, return it by mail.

A typical subject participating in the survey is expected to take 15 minutes to complete the survey. This estimate is derived from focus groups in which respondents were asked to complete a survey of similar length and detail to the current survey.

5. The Information Collected - Agency Activities, Collection Methodology, and Information Management

5(a) Agency Activities

The survey is being developed, conducted, and analyzed by EPA’s Office of Research and Development with contract support provided by Abt Associates Inc. (EPA contract No. EP-C-13-039).

Agency activities associated with the survey consist of the following:

Developing the survey questionnaire, related materials, and sampling design.

Randomly selecting survey participants from the U.S. Postal Service DSF database.

Programming the web survey.

Printing the paper survey.

Mailing the initial web survey invitation.

Mailing the second web survey invitation.

Sending the paper survey to households that did not respond to the web survey.

Entering and cleaning data.

Analyzing survey results.

Conducting the non-response bias analysis based on available data. This will compare results of questions in Section 1 to existing national studies of participation in coastal recreation, present the geographic distribution of the respondents, and compare demographics to census data. See Part B, Section 2(c)(iii) for additional details.

If necessary, EPA will use results of the non-response bias analysis to adjust weights of respondents to account for non-response and minimize the bias.
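Weight adjustment for non-response can take several forms; post-stratification is one common choice, in which each respondent's weight is the population share of their demographic cell divided by that cell's share of the respondents. The sketch below assumes a single set of hypothetical age cells with hypothetical census shares; the document does not specify which variables or which adjustment method (for example, raking across several margins) EPA would use.

```python
from collections import Counter


def poststratification_weights(sample_cells, population_shares):
    """Weight each respondent so weighted cell shares match known population shares.

    Cells with no respondents are dropped and the remaining population shares are
    renormalized, so the weights always sum to the number of respondents.
    """
    n = len(sample_cells)
    counts = Counter(sample_cells)
    missing = set(counts) - set(population_shares)
    if missing:
        raise ValueError(f"no population share for cells: {sorted(missing)}")
    observed_share = sum(population_shares[c] for c in counts)
    cell_weight = {
        c: (population_shares[c] / observed_share) / (counts[c] / n) for c in counts
    }
    return [cell_weight[c] for c in sample_cells]


# Hypothetical age-group shares (e.g., from census data) and respondent cells.
shares = {"18-44": 0.40, "45-64": 0.35, "65+": 0.25}
sample = ["18-44"] * 2 + ["45-64"] * 5 + ["65+"] * 3
weights = poststratification_weights(sample, shares)
print([round(w, 3) for w in weights])
```

Under-represented cells (here, ages 18 to 44) receive weights above 1, and over-represented cells receive weights below 1, while the total weight still equals the number of respondents.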

EPA will primarily use the survey results to estimate the social value of changes in ecosystem quality for recreational uses of coastal waters. EPA will also model water quality perceptions relative to objective measures, and explore the use of sense of place measures in conjunction with economic measures and relative to water quality perceptions.

5(b) Collection Methodology and Information Management

EPA plans to implement the proposed survey using a mixed-mode approach, which will invite respondents to answer the questionnaire on the internet. Offering the survey on the internet will allow respondents to select locations from interactive maps and enable them to identify their destination sites more accurately. An internet survey will also use checks and prompts to minimize missing and/or incorrectly entered information. Those who do not reply to the internet survey will be mailed a paper survey to complete. After finalizing the survey instrument, EPA will program the instrument using Confirmit web software. EPA will then use the U.S. Postal Service DSF database to identify households that will receive the survey invitation. The survey invitation letter (Attachment 2), which contains an explanation of the survey’s purpose and a URL to access the web survey, will be mailed to the selected households. The reminder letter will be similar to the initial invitation letter, with modifications to the introductory text.

Our main reason for selecting the mixed-mode approach is to avoid the potential inaccuracies associated with entering data from paper surveys on which people write in the location of their last day of recreation. Our review of the literature on relevant mixed-mode surveys indicates that overall response rates are slightly lower than for a mail-only survey (Berzelak et al., 2015; Edwards et al., 2014; Brennan, 2011; Messer & Dillman, 2011; Schmuhl et al., 2010; Hohwu et al., 2013). We expect that the improvements in data accuracy, which lead to more usable responses and lower data handling costs, will compensate for a small decrease in response rate: under a mail-only approach, a comparable number of paper responses may be unusable because the recreation location cannot be identified precisely enough to link it to water quality data. As part of our research, we intend to report on this trade-off by comparing the time and cost of preparing the data, and the loss of usable responses, for the paper surveys with the time, cost, and usable responses for the web surveys. Our review of relevant mixed-mode studies that used a mail invitation to a web survey, followed by a paper survey, indicates that 61% to 76% of total responses to these surveys are completed on the web (Berzelak et al., 2015; Edwards et al., 2014; Brennan, 2011; Messer & Dillman, 2011). Messer and Dillman (2011) also found that respondent demographics for the mixed-mode sample were similar to those for a mail-only sample.
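Applying the 61 to 76 percent web share reported in these studies to the 2,163 target completes gives a rough sense of how many responses may arrive on paper, where location data are hardest to code. This is an illustrative sketch that assumes the published range carries over to this study population.

```python
TARGET_COMPLETES = 2_163
WEB_SHARE_LOW, WEB_SHARE_HIGH = 0.61, 0.76  # range reported in the cited mixed-mode studies


def split_by_mode(total: int, web_share: float) -> tuple[int, int]:
    """Split expected completes into (web, paper) for a given web share."""
    web = round(total * web_share)
    return web, total - web


low = split_by_mode(TARGET_COMPLETES, WEB_SHARE_LOW)    # (1319, 844)
high = split_by_mode(TARGET_COMPLETES, WEB_SHARE_HIGH)  # (1644, 519)
print(low, high)
```

Under this assumption, roughly 500 to 850 completes would be paper responses that require manual location coding.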

We anticipate that there could be differences between the paper and internet responses, and we intend to test for this in our statistical modeling. We hypothesize, based on existing literature, that people who respond by paper may have different demographics (e.g., an older population) and, as a result, possibly different preferences. However, we do not expect the willingness to pay estimates for these respondents to be biased. What we do expect is possibly lower accuracy in identifying the location of their last recreation trip. As a result, our travel cost estimates for these respondents may be slightly less accurate if, for example, we can identify only the town where they recreated rather than a specific beach. We may also lose some observations if we cannot identify the location accurately enough to link it to water quality measures.
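One simple descriptive check of whether paper and web respondents differ, before a mode indicator enters the statistical models, is a two-proportion z-test on a demographic characteristic such as the share aged 65 or older. The sketch below uses hypothetical counts; the document does not specify which test EPA will use.

```python
import math


def two_proportion_z_test(x1: int, n1: int, x2: int, n2: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for H0: p1 == p2, using the pooled proportion."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        raise ValueError("pooled proportion is 0 or 1; the test is undefined")
    z = (p1 - p2) / se
    # Two-sided p-value from the standard normal: P(|Z| > |z|) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: respondents aged 65+ among paper vs. web completes.
z, p = two_proportion_z_test(x1=300, n1=700, x2=350, n2=1_450)
print(f"z = {z:.2f}, p = {p:.4f}")
```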

EPA will take multiple steps to promote response. Respondents will be sent a reminder letter approximately one week after the initial letter mailing. Approximately three weeks after the request to complete the web survey, all households that have not responded will receive a copy of the paper questionnaire with a cover letter. The cover letter will remind households to complete the survey. Based on this approach to mixed-mode data collection, it is anticipated that approximately 27 percent of the selected households that received the survey invitation will either complete the web survey or return a completed paper survey (Brennan, 2011; Edwards et al., 2014; Messer & Dillman, 2011).

Since the desired number of completed surveys for the general population is 2,163, it will be necessary to mail survey invitations to 9,150 households, assuming that a portion of the addresses will not be valid (with county-level variations).

Data quality will be monitored by checking submitted surveys for completeness and consistency. Responses to the survey will be stored in an electronic database. This database will be used to generate a data set for a RUM model of recreational values for ecosystem improvements, and regression models to compare water quality perceptions to objective measures and to explore how water quality perceptions and sense of place are related. To protect the confidentiality of survey respondents, the survey data will be released only after it has been thoroughly vetted to ensure that all potentially identifying information has been removed.

5(c) Small Entity Flexibility

This survey will be administered to individuals, not businesses. Thus, no small entities will be affected by this information collection.

5(d) Collection Schedule

The schedule for implementation of the survey is shown in Table A2.

Table A2: Schedule for Survey Implementation

| Activity | Timing |
|---|---|
| Pretest Activities | |
| Printing of invitation and reminder letters and questionnaires | Weeks 1 to 3 |
| Mailing of invitation letters | Week 4 |
| Mailing of reminder letters | Week 5 |
| Survey packet mailing (two weeks after reminder letters) | Week 7 |
| Data entry | Weeks 6 to 8 |
| Cleaning of data file | Week 9 |
| Delivery of data | Week 10 |
| Full Survey Implementation | |
| Printing of invitation and reminder letters and questionnaires | Weeks 13 to 15 |
| Mailing of invitation letters | Week 16 |
| Mailing of reminder letters | Week 17 |
| Survey packet mailing (one week after reminder letter mailing) | Week 18 |
| Data entry | Weeks 18 to 22 |
| Cleaning of data file | Week 23 |
| Delivery of data | Week 24 |

6. Estimating Respondent Burden and Cost of Collection

6(a) Estimating Respondent Burden

Subjects who participate in the survey and follow-up interviews during the pre-test and main surveys will expend time on several activities. EPA will use similar materials in both the pre-test and main stages; it is reasonable to assume the average burden per respondent activity will therefore be the same for subjects participating during either pre-test or main survey stages.

Based on focus groups, EPA estimates that on average each respondent mailed the survey will spend 15 minutes (0.25 hours) reviewing the introductory materials and completing the survey questionnaire. EPA will administer the pre-test survey to 370 households; assuming that 90 respondents will complete and return the survey, the national burden estimate for respondents to the pre-test survey is 23 hours. During the main survey stage, EPA will administer the survey to 9,150 households; assuming that 2,163 respondents will complete and return the survey, the national burden estimate for these survey respondents is 541 hours. These burden estimates reflect a one-time expenditure in a single year.

6(b) Estimating Respondent Costs

Estimating Labor Costs

According to the Bureau of Labor Statistics, the average hourly wage for private sector workers in the northeast region of the United States is $34.83 (2016$) (U.S. Department of Labor, 2016). Assuming an average per-respondent burden of 0.25 hours (15 minutes) for individuals mailed the survey and an average hourly wage of $34.83, the average cost per respondent is $8.71. Of the 9,520 individuals invited to participate in the survey during either pre-test or main implementation, 2,253 are expected to complete a survey. The total cost for all individuals who complete surveys would be $19,618.
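The respondent burden figures in Sections 6(a), 6(b), and 6(d) follow directly from the completed-survey counts, the 15-minute response time, and the $34.83 wage. The sketch below reproduces them; note that the tables round the pretest's 22.5 hours up to 23.

```python
MINUTES_PER_RESPONSE = 15
HOURLY_WAGE_2016 = 34.83  # BLS average private-sector wage, Northeast region (2016$)

completes = {"pretest": 90, "main": 2_163}

hours_per_response = MINUTES_PER_RESPONSE / 60             # 0.25 hours
cost_per_response = hours_per_response * HOURLY_WAGE_2016  # about $8.71

for phase, n in completes.items():
    hours = n * hours_per_response
    print(f"{phase}: {hours:.2f} hours, ${hours * HOURLY_WAGE_2016:,.0f}")

total_n = sum(completes.values())  # 2,253 respondents
total_hours = total_n * hours_per_response
total_cost = total_n * cost_per_response
print(f"total: {total_hours:.2f} hours, ${total_cost:,.0f}")
# One-time collection spread over the 3-year ICR period.
print(f"annual: {total_hours / 3:.2f} hours, ${total_cost / 3:,.0f}")
```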

EPA does not anticipate any capital or operation and maintenance costs for respondents.

6(c) Estimating Agency Burden and Costs

Agency costs arise from staff costs, contractor costs, and printing costs. EPA staff are expected to expend approximately 3,520 hours for survey development and implementation, analyzing data, and writing reports. Total labor costs for EPA staff time are estimated as $140,985.

Abt Associates will be providing contractor support for this project with funding of $203,507 from EPA contract EP-C-13-039, which provides funds for the purpose of coastal recreation survey development and support. Abt Associates Inc. staff and its consultants are expected to spend 1,228 hours pre-testing the survey questionnaire and sampling methodology, conducting the mixed-mode survey, and tabulating and analyzing the survey results. The cost of this contractor time is $203,507.

Agency and contractor burden is 4,748 hours, with a total cost of $344,492 excluding the costs of survey printing.

Printing of the survey is expected to cost $21,681. Thus, the total Agency and contractor burden would be 4,748 hours and would cost $366,173.

6(d) Respondent Universe and Total Burden Costs

EPA expects the total cost for survey respondents to be $19,618 (2016$), based on a total burden estimate of 563 hours (across both pre-test and main stages) at an hourly wage of $34.83.

6(e) Bottom Line Burden Hours and Costs

The following tables present EPA's estimate of the total burden and costs of this information collection for the respondents and for the Agency. The combined bottom-line cost for respondents and the Agency is $385,791.

Table A4: Total Estimated Bottom Line Burden and Cost Summary for Respondents

| Affected Individuals | Burden (hours) | Cost (2016$) |
|---|---|---|
| Pre-test Survey Respondents | 23 | $784 |
| Main Survey Respondents | 541 | $18,834 |
| Total for All Survey Respondents | 563 | $19,618 |
| Annual Respondent Burden and Cost* | 188 | $6,539 |

* One-time collection divided over 3 years.

Table A5: Total Estimated Burden and Cost Summary for Agency

| Component | Burden (hours) | Cost (2016$) |
|---|---|---|
| EPA Staff | 3,520 | $140,985 |
| Survey Printing | | $21,681 |
| EPA's Contractors for the Survey | 1,228 | $203,507 |
| Total Agency Burden and Cost | 4,748 | $366,173 |
| Annual Agency Cost* | | $122,058 |

* One-time collection divided over 3 years.
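The Agency totals and the bottom-line figure in Section 6(e) are simple sums. The sketch below reproduces them from the component costs in Table A5 and the respondent total in Table A4, using the same annualization (one-time collection divided over 3 years).

```python
# Agency-side components from Table A5 and the respondent total from Table A4 (2016$).
epa_staff = {"hours": 3_520, "cost": 140_985}
contractor = {"hours": 1_228, "cost": 203_507}
printing_cost = 21_681
respondent_cost = 19_618

agency_hours = epa_staff["hours"] + contractor["hours"]           # 4,748
agency_labor_cost = epa_staff["cost"] + contractor["cost"]        # 344,492 (excl. printing)
agency_total_cost = agency_labor_cost + printing_cost             # 366,173
bottom_line = agency_total_cost + respondent_cost                 # 385,791
annual_agency_cost = agency_total_cost / 3                        # about 122,058

print(agency_hours, agency_labor_cost, agency_total_cost, bottom_line,
      round(annual_agency_cost))
```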

6(f) Reasons for Change in Burden

This is a new collection. The survey is a one-time data collection activity.

6(g) Burden Statement

EPA estimates that the public reporting and record keeping burden associated with the survey will average 15 minutes (0.25 hours) per respondent (i.e., a total of 563 hours of burden across 2,253 respondents: 23 hours for 90 pre-test respondents and 541 hours for 2,163 main survey respondents). Burden means the total time, effort, or financial resources expended by persons to generate, maintain, retain, or disclose or provide information to or for a Federal agency. This includes the time needed to review instructions; develop, acquire, install, and utilize technology and systems for the purposes of collecting, validating, and verifying information, processing and maintaining information, and disclosing and providing information; adjust the existing ways to comply with any previously applicable instructions and requirements; train personnel to be able to respond to a collection of information; search data sources; complete and review the collection of information; and transmit or otherwise disclose the information. An agency may not conduct or sponsor, and a person is not required to respond to, a collection of information unless it displays a currently valid OMB control number. The OMB control numbers for EPA's regulations are listed in 40 CFR part 9 and 48 CFR chapter 15.

To comment on the Agency's need for this information, the accuracy of the provided burden estimates, and any suggested methods for minimizing respondent burden, including the use of automated collection techniques, EPA has established a public docket for this ICR under Docket ID No. EPA-HQ-ORD-2016-0632, which is available for online viewing at www.regulations.gov, or for in-person viewing at the Office of Research and Development (ORD) Docket in the EPA Docket Center (EPA/DC), EPA West, Room 3334, 1301 Constitution Ave., NW, Washington, DC. The EPA/DC Public Reading Room is open from 8:30 a.m. to 4:30 p.m., Monday through Friday, excluding legal holidays. The telephone number for the Reading Room is 202-566-1744, and the telephone number for the Office of the Administrator Docket is 202-566-1752.

Use www.regulations.gov to obtain a copy of the draft collection of information, submit or view public comments, access the index listing of the contents of the docket, and access those documents in the public docket that are available electronically. Once in the system, select "search," then key in the docket ID number, EPA-HQ-ORD-2016-0632.

PART B OF THE SUPPORTING STATEMENT

1. Survey Objectives, Key Variables, and Other Preliminaries

1(a) Survey Objectives

The survey is being proposed by the EPA Office of Research and Development and is not associated with any regulatory ruling of EPA. Because the primary reason for the proposed survey is research, study design decisions were made with a view to making research contributions rather than to conducting a definitive benefits analysis for regulatory purposes. The overall goal of this survey is to understand how reduced water quality due to nutrient enrichment is affecting, and may in the future affect, the economic prosperity and social capacity of coastal New England communities. EPA has designed the survey to provide data to support the following specific objectives:

To estimate the revealed recreational use values that coastal residents of Southern New England place on improving water quality in coastal New England waters.

To understand and connect the social and economic value of improvement in water quality and recreational opportunities.

To estimate use of different types of coastal systems.

To understand how individuals’ perceptions of water quality relate to actual water quality measurements in coastal systems.

To understand how values vary with respect to individuals’ attitudes, awareness, sense of place, and demographic characteristics.

Understanding public values for water quality improvements is necessary to better determine the benefits associated with reductions in nutrients (in this case, nitrogen) to New England coastal waters. Very little data exist on the use of coastal New England waters, and the data that do exist are limited to a few larger beach areas that are rarely exposed to water quality concerns.

1(b) Key Variables

The key questions in the survey ask respondents about the recreation they do and how they perceive and value the water quality in coastal areas. The survey collects data for a Random Utility Model (RUM), a type of travel cost model, by asking respondents about their last coastal recreation trip. Travel cost methods are premised on the idea that the "price" of recreation can be represented by the cost of reaching and entering the location (Parsons, 2003). RUM models are multiple-site models that account for substitution among sites while accommodating quality-change valuation (Parsons, 2003). The questions ask about the costs of a recreational trip, including lodging, distance traveled, hours spent on the activity and in traveling to the site, and entrance fees. To capture substitution and quality differences among sites, the survey also asks about the activities the respondent participated in at that location, sense of place, and perceived water quality. The survey design follows well-established revealed preference recreational valuation methodology and format (Parsons, 2014).
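To make the RUM framework concrete: in the standard conditional logit specification, each site's utility depends on its travel cost (its "price") and its quality, site choice probabilities follow the logit formula, and the welfare effect of a quality change per choice occasion is the change in the log-sum divided by the marginal utility of money (the negative of the travel cost coefficient). The sketch below assumes a linear utility with only travel cost and a single water quality index; the coefficients and site data are hypothetical, and the document does not state the final model specification.

```python
import math


def site_utilities(travel_costs, quality, beta_cost, beta_quality):
    """Hypothetical linear indirect utility: V_j = beta_cost * TC_j + beta_quality * Q_j."""
    return [beta_cost * tc + beta_quality * q for tc, q in zip(travel_costs, quality)]


def choice_probabilities(utilities):
    """Conditional logit: P_j = exp(V_j) / sum_k exp(V_k), computed stably."""
    m = max(utilities)
    exps = [math.exp(v - m) for v in utilities]
    total = sum(exps)
    return [e / total for e in exps]


def log_sum(utilities):
    """Inclusive value ln(sum_k exp(V_k)), computed stably."""
    m = max(utilities)
    return m + math.log(sum(math.exp(v - m) for v in utilities))


def welfare_change(travel_costs, q_before, q_after, beta_cost, beta_quality):
    """Per-choice-occasion WTP: (logsum_after - logsum_before) / (-beta_cost)."""
    if beta_cost >= 0:
        raise ValueError("travel cost coefficient must be negative")
    v0 = site_utilities(travel_costs, q_before, beta_cost, beta_quality)
    v1 = site_utilities(travel_costs, q_after, beta_cost, beta_quality)
    return (log_sum(v1) - log_sum(v0)) / (-beta_cost)


# Hypothetical three-site example: travel costs ($) and a water quality index.
tc = [20.0, 35.0, 50.0]
q0 = [0.4, 0.7, 0.9]
q1 = [0.6, 0.7, 0.9]  # quality improves at the closest site only
b_cost, b_q = -0.05, 2.0
print([round(p, 3) for p in choice_probabilities(site_utilities(tc, q0, b_cost, b_q))])
print(round(welfare_change(tc, q0, q1, b_cost, b_q), 2))
```

The log-sum form is what lets a multiple-site model value a quality change at one site while accounting for visitors' ability to substitute among the others.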

The survey focuses on saltwater recreation in coastal New England. It asks respondents for attributes describing their last saltwater recreation trip. Specifically, it includes questions on the following attributes: activity type and frequency, location, and travel costs. As discussed in Parsons (2014), these attributes are important for modeling recreation demand under changing conditions, such as changes in water quality. Variables for demographic characteristics will also be included in the analysis, both to control for heterogeneity in preferences for recreation sites and to estimate the cost of time spent on recreation for each respondent.
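A travel cost model needs a per-trip "price" for each site, built from the distance and time components the survey collects, with income used to value travel time. The sketch below follows a common convention in the travel cost literature: the wage is approximated as annual income divided by 2,080 work hours, and time is valued at one-third of the wage. The per-mile cost, the one-third share, and the 2,080-hour divisor are all assumptions here, not values stated in this document, and lodging for overnight trips is left out because those costs are handled separately.

```python
WORK_HOURS_PER_YEAR = 2_080  # 52 weeks x 40 hours; a common convention, assumed here


def hourly_wage_from_income(annual_income: float) -> float:
    """Approximate an hourly wage from reported annual income (assumption)."""
    return annual_income / WORK_HOURS_PER_YEAR


def trip_cost(round_trip_miles: float, travel_hours: float, hourly_wage: float,
              cost_per_mile: float = 0.20, time_value_share: float = 1 / 3,
              entrance_fee: float = 0.0) -> float:
    """Per-trip 'price' of a site: driving cost + value of travel time + fees.

    cost_per_mile and time_value_share are hypothetical defaults, not study values.
    """
    driving_cost = round_trip_miles * cost_per_mile
    time_cost = travel_hours * hourly_wage * time_value_share
    return driving_cost + time_cost + entrance_fee


wage = hourly_wage_from_income(72_000)  # hypothetical income, about $34.62/hour
print(round(trip_cost(60, 1.5, wage, entrance_fee=10.0), 2))
```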

The study design includes water quality perception and sense of place questions to better understand how these attributes contribute to site choice, and to explore other research hypotheses (as discussed elsewhere in this document). The water quality perception questions are coastal equivalents of a set of questions developed for use in nutrient-impacted freshwater systems (see Genskow & Prokopy, 2011). Because no single study has covered sense of place related to recreation in coastal waters, the sense of place questions combine questions taken from freshwater studies and recreation studies (Stedman et al., 2006; Mullendore et al., 2015; Smith et al., 2016).

1(c) Statistical Approach

A statistical survey approach in which a randomly drawn sample of households is asked to complete the survey is appropriate for estimating the values associated with improvements in coastal water quality. A census approach is impractical because of the extraordinary cost of contacting all households. Therefore, the statistical survey is the most reasonable approach. Specifically, the target population includes residents living in counties where more than 25 percent of the county falls within 100 miles of Cape Cod, Massachusetts.

EPA developed the survey instrument, and will also analyze the survey results. EPA has retained Abt Associates Inc. (55 Wheeler Street, Cambridge, MA 02138) under EPA contract EP-C-13-039 to assist in the questionnaire design, sampling design, administration of the survey, and data entry and cleaning prior to analysis of the survey results.

1(d) Feasibility

Following standard practice in the non-market valuation literature (Champ et al., 2003), EPA conducted a series of 7 focus groups with 63 people (EPA ICR # 2205.17, OMB # 2090-0028). Based on findings from these activities, EPA made various improvements to the survey instrument to reduce the potential for respondent bias, reduce respondent cognitive burden, and increase respondent comprehension of the survey materials. In addition, EPA solicited input from other experts (see section 3c in Part A), and tested the survey with 10 federal employees at AED. Recommendations and comments received as part of that process have been incorporated into the design of the survey instrument.

Because of the steps taken during the survey development process, EPA does not anticipate that respondents will have difficulty interpreting or responding to any of the survey questions. Furthermore, since the survey will be administered as both a web and a mail survey, it will be easily accessible to all respondents. EPA therefore believes that respondents will not face any obstacles in completing the survey, and that the survey will produce useful results. EPA has dedicated sufficient staff time and resources to the design and implementation of this survey, including funding for contractor assistance under EPA contract No. EP-C-13-039. Given the timetable outlined in Section A 5(d) of this document, the survey results should be available for timely reporting within ORD’s current research cycle (FY16-FY19), with final products due in FY19.

2. Survey Design

2(a) Target Population and Coverage

To assess recreational use values of coastal New England residents for improvements in New England coastal water quality, with a focus on Cape Cod and its surrounding area, the target population comprises individuals 18 years of age or older living in counties where more than 25 percent of the county falls within 100 miles of Cape Cod, Massachusetts. Individuals in these areas are more likely than those farther away to hold use values for improvements to the waters of Cape Cod and surrounding areas. The choice of 100 miles is based on the typical driving distance to recreational sites for single-day or weekend trips (i.e., two hours or 100 miles), which our focus groups supported. In addition, Parsons & Hauber (1998) show that the welfare-relevant coefficients in a RUM model of water recreation are stable once the choice set is extended to around a two-hour travel distance.
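The county eligibility rule can be sketched as a distance test on points spread across each county. The sketch below assumes each county is represented by centroids of equal-area grid cells (for example, from a projected grid; hypothetical inputs) and uses great-circle (haversine) distance to a single reference point for Cape Cod, whose coordinates are also an assumption. It compares the share with `>=`, matching the "at least 25 percent" wording in Part A; a strict `>` would implement the "more than 25 percent" wording.

```python
import math

EARTH_RADIUS_MILES = 3958.8  # mean Earth radius


def haversine_miles(lat1, lon1, lat2, lon2):
    """Great-circle distance in miles between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_MILES * math.asin(math.sqrt(a))


def share_within_radius(cell_centroids, center, radius_miles=100.0):
    """Share of equal-area grid cells whose centroids fall within the radius."""
    inside = sum(1 for lat, lon in cell_centroids
                 if haversine_miles(lat, lon, *center) <= radius_miles)
    return inside / len(cell_centroids)


def county_is_eligible(cell_centroids, center, radius_miles=100.0, min_share=0.25):
    return share_within_radius(cell_centroids, center, radius_miles) >= min_share


# Illustrative reference point and grid-cell centroids (hypothetical values).
cape_cod = (41.7, -70.3)
cells = [(41.7, -70.3), (42.7, -70.3), (43.7, -70.3), (44.7, -70.3)]
print(share_within_radius(cells, cape_cod), county_is_eligible(cells, cape_cod))
```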

While we will miss the portion of trips that originate more than 100 miles from the coast, which are likely to include more overnight trips, our purposes for the survey do not include a precise estimate of the value of overnight trips compared with single-day trips. Instead, the focus is primarily on the value of water quality changes to New England recreationists. Those within 100 miles of the coast who take single-day trips, have a weekend home, or regularly visit the coast for overnight trips are likely to be most sensitive to water quality variations across locations within the region. Those traveling from greater distances are less likely to be aware of water quality variations from place to place within the region, and are also more likely and better able to substitute among locations, making them less affected by the range of water quality variations present within the region.

Based on our focus groups and knowledge of the local tourism economy, we found that many trips within this 100-mile buffer are overnight trips. It is therefore important to collect costs for trips originating within 100 miles of the Cape differently depending on whether they were part of a single-day or an overnight trip (see section 2(d), questions 2.4-2.14b, for the reasoning behind those survey questions). This distinction is standard in the travel cost modeling literature, because the travel costs associated with a recreation trip may be over- or under-estimated if all trips are treated uniformly.

2(b) Sampling Design

(i) Sampling Frame

The sampling frame for this survey is the United States Postal Service Computerized Delivery Sequence File (DSF), the standard frame for address-based sampling (Iannacchione, 2011; Link et al., 2008). The DSF is a non-duplicative list of residential addresses where U.S. postal workers deliver mail; it includes city-style addresses and post office boxes, and covers single-unit, multi-unit, and other types of housing structures, with known businesses excluded. In total, the DSF is estimated to cover 97% of residences in the U.S., with coverage gradually increasing over the last few years as rural addresses are converted to city-style, 911-compatible addresses¹. The universe of sample units is defined as this set of residential addresses, and hence is capable of reaching individuals who are 18 years of age or older living at a residential address in the four target states. Samples from the DSF are taken indirectly, as USPS cannot sell mailing addresses or otherwise provide access to the DSF. Instead, a number of sample vendors maintain their own copies of the DSF and, through verifying them with USPS, update the list quarterly. The sample vendors can also augment the mailing addresses with additional information (household demographics, landline phone numbers, etc.) from external sources.

For a discussion of the techniques EPA will use to minimize non-response and other non-sampling errors in the survey sample, refer to Section 2(c)(iii), below.

(ii) Sample Sizes

The target responding sample size for the main survey is 2,163 completed household surveys. This sample size was chosen to support statistically robust regression modeling while minimizing the cost and burden of the survey. At this sample size, the precision achieved by the analysis will be more than adequate to meet the analytic needs (see Section 2(c)(i) below for a discussion of the level of precision required).

The sample design includes 22 counties selected for their proximity to Cape Cod. EPA plans to oversample residents of Barnstable County on Cape Cod. EPA believes this is appropriate because Cape Cod residents are the most likely to receive significant use benefits from water quality improvements on Cape Cod. The sample will be allocated in proportion to the county-level population within each state. The target number of respondents in each county and state is given in Table B1.

Table B1: Population and Expected Number of Completed Surveys for Each County

| Sampled State and County | Population | Expected Number of Completed Surveys |
|---|---|---|
| Massachusetts Counties | 6,284,793 | 1,581 |
| Barnstable¹ | 215,423 | 176 |
| Bristol | 551,082 | 129 |
| Dukes | 17,041 | 4 |
| Essex | 755,618 | 175 |
| Hampden | 465,923 | 108 |
| Middlesex | 1,537,215 | 357 |
| Nantucket | 10,298 | 2 |
| Norfolk | 681,845 | 158 |
| Plymouth | 499,759 | 110 |
| Suffolk | 744,426 | 178 |
| Worcester | 806,163 | 183 |
| Connecticut Counties | 708,910 | 166 |
| Middlesex | 165,602 | 41 |
| New London | 274,170 | 65 |
| Tolland | 151,539 | 33 |
| Windham | 117,599 | 27 |
| New Hampshire Counties | 700,742 | 166 |
| Hillsborough | 402,922 | 94 |
| Rockingham | 297,820 | 72 |
| Rhode Island Counties | 1,050,292 | 250 |
| Bristol | 49,144 | 12 |
| Kent | 164,843 | 42 |
| Newport | 82,036 | 21 |
| Providence | 628,323 | 145 |
| Washington | 125,946 | 30 |
| Total² | 8,744,737 | 2,163 |

Source: U.S. Census Bureau (2013). Annual Estimates of the Resident Population: April 1, 2010 to July 1, 2012. Retrieved September 22, 2016 from http://factfinder2.census.gov/.
¹ Sample design includes oversampling of Barnstable County residents.
² Total population for selected counties.

(iii) Stratification Variables

The sample will be effectively stratified by geography, with Barnstable County, Massachusetts, being sampled at a rate 3.06 times higher than the rest of the population in the study area. The sample will be drawn from the general population addresses of the USPS DSF.

(iv) Sampling Method

Using the stratification design discussed above, households will be randomly selected from the U.S. Postal Service DSF database. We will send the main survey to 8,400 households from the DSF. In addition, we will administer 750 surveys to Cape Cod residents, for a total sample of 9,150. Assuming undeliverable rates equal to county-level vacancy rates and a 27% response rate, we anticipate 2,163 completed surveys from the DSF.²
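As a rough arithmetic check on these figures, the sketch below backs out the single aggregate undeliverable rate implied by 9,150 mailed surveys, a 27% response rate, and 2,163 expected completes. It assumes, for illustration only, that the response rate applies to delivered addresses; the actual plan uses county-level vacancy rates that are not reproduced here, and the function names are hypothetical.

```python
# Hypothetical check of the mail-out arithmetic in Section 2(b)(iv).
# Assumptions: the 27% response rate applies to delivered (not mailed) addresses,
# and one aggregate undeliverable rate stands in for the county-level vacancy rates.

MAILED = 8_400 + 750          # general DSF sample plus the additional Cape Cod surveys
RESPONSE_RATE = 0.27
TARGET_COMPLETES = 2_163


def expected_completes(mailed: int, undeliverable_rate: float, response_rate: float) -> float:
    """Completed surveys expected after removing undeliverable addresses."""
    delivered = mailed * (1.0 - undeliverable_rate)
    return delivered * response_rate


def implied_undeliverable_rate(mailed: int, completes: int, response_rate: float) -> float:
    """Aggregate undeliverable rate consistent with the stated mail-out and target."""
    return 1.0 - completes / (response_rate * mailed)


rate = implied_undeliverable_rate(MAILED, TARGET_COMPLETES, RESPONSE_RATE)
print(f"Implied aggregate undeliverable rate: {rate:.1%}")
print(f"Expected completes: {expected_completes(MAILED, rate, RESPONSE_RATE):.0f}")
```

Under these assumptions, about 12.4% of mailed addresses would need to be undeliverable for 2,163 completes to result.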

For obtaining population-based estimates of various parameters, each responding household will be assigned a sampling weight. The weights will be used to produce estimates that:

are generalizable to the population from which the sample was selected;

account for differential probabilities of selection across the sampling strata;

match the population distributions of selected demographic variables within strata; and

allow for adjustments to reduce potential non-response bias.

These weights combine:

a base sampling weight, which is the inverse of the household's probability of selection;

a within-stratum adjustment for differential non-response across strata; and

a non-response weight.

Post-stratification adjustments may be made to match the sample to known population values (e.g., from Census data).

There are various models that can be used for non-response weighting. For example, non-response weights can be constructed from estimated response propensities or from weighting class adjustments. The response propensity approach treats non-response as a stochastic process in which the likelihood of non-response and the value of the survey variable share common causes. The weighting class approach assumes that within a weighting class (typically demographically defined), non-respondents and respondents have the same or very similar distributions on the survey variables. If this assumption holds, then applying weights to the respondents reduces the bias in the estimator that is due to non-response. Several factors, including the differences between the sample and population distributions of demographic characteristics and the plan for how weights will be used in the regression models, will determine which approach is most efficient both for estimating population parameters and for the revealed-preference modeling.
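The weighting class approach described above can be sketched as follows. The class labels, unit identifiers, and response pattern are hypothetical, and the base weights simply reuse the two probability weights from Table B3 for illustration. The response propensity alternative and any later post-stratification to Census totals are not shown.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class SampledUnit:
    unit_id: str
    weighting_class: str   # hypothetical demographic class label
    base_weight: float     # inverse of the household's selection probability
    responded: bool


def weighting_class_adjustment(units: list[SampledUnit]) -> dict[str, float]:
    """Return non-response-adjusted weights for respondents only.

    Within each weighting class, respondents' base weights are multiplied by
    (sum of base weights of all sampled units) / (sum of base weights of respondents),
    so that respondents also represent the non-respondents in their class.
    """
    total = defaultdict(float)
    responding = defaultdict(float)
    for u in units:
        total[u.weighting_class] += u.base_weight
        if u.responded:
            responding[u.weighting_class] += u.base_weight

    adjusted = {}
    for u in units:
        if u.responded:
            factor = total[u.weighting_class] / responding[u.weighting_class]
            adjusted[u.unit_id] = u.base_weight * factor
    return adjusted


# Hypothetical sample: two weighting classes with different response rates.
sample = [
    SampledUnit("a1", "age_18_44", 442.00, True),
    SampledUnit("a2", "age_18_44", 442.00, False),
    SampledUnit("a3", "age_18_44", 442.00, True),
    SampledUnit("a4", "age_18_44", 442.00, False),
    SampledUnit("b1", "age_45_plus", 144.46, True),
    SampledUnit("b2", "age_45_plus", 144.46, False),
    SampledUnit("b3", "age_45_plus", 144.46, False),
]
weights = weighting_class_adjustment(sample)
print(weights)
```

Because each class's respondents absorb the weight of that class's non-respondents, the adjusted weights sum to the same total as the base weights of the full sample.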

To estimate recreational use values for changes in coastal water quality, data will be analyzed statistically using a standard random utility model framework. Additional regression models will be estimated to examine other research hypotheses regarding the relationship between actual and perceived water quality and the relationship between sense of place and perceived water quality.
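For readers unfamiliar with the random utility framework, the sketch below shows a conditional (multinomial) logit site-choice model and the standard log-sum measure of the per-trip value of a water quality change. The document does not specify the model's functional form; the linear utility, coefficient values, and site attributes below are hypothetical.

```python
import math


def site_utilities(travel_costs, water_quality, b_cost, b_wq):
    """Deterministic utility of each site: V_j = b_cost * cost_j + b_wq * wq_j (hypothetical form)."""
    return [b_cost * c + b_wq * q for c, q in zip(travel_costs, water_quality)]


def logsum(v):
    """Numerically stable log(sum(exp(v)))."""
    m = max(v)
    return m + math.log(sum(math.exp(x - m) for x in v))


def choice_probabilities(v):
    """Conditional logit probability of choosing each site."""
    ls = logsum(v)
    return [math.exp(x - ls) for x in v]


def welfare_change_per_trip(v_before, v_after, b_cost):
    """Log-sum compensating variation per choice occasion, in dollars.

    b_cost is the (negative) travel-cost coefficient, so -b_cost is the
    marginal utility of income.
    """
    return (logsum(v_after) - logsum(v_before)) / (-b_cost)


# Hypothetical coefficients and attributes for three coastal sites.
B_COST, B_WQ = -0.05, 0.30
costs = [20.0, 35.0, 50.0]
wq_before = [3.0, 5.0, 4.0]
wq_after = [4.0, 5.0, 4.0]     # one-unit improvement at the first site only

v0 = site_utilities(costs, wq_before, B_COST, B_WQ)
v1 = site_utilities(costs, wq_after, B_COST, B_WQ)
print([round(p, 3) for p in choice_probabilities(v0)])
print(f"Value per trip: ${welfare_change_per_trip(v0, v1, B_COST):.2f}")
```

If water quality improved by the same amount at every site, the log-sum would rise by exactly B_WQ times that amount, so the per-trip value would reduce to B_WQ / (-B_COST), or $6.00 for a one-unit improvement with these hypothetical coefficients.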

(v) Multi-Stage Sampling

Multi-stage sampling will not be necessary for this survey.

2(c) Precision Requirements

(i) Precision Targets

Table B2 presents expected sample sizes for each state. The maximum acceptable sampling error for predicting response probabilities (i.e., the likelihood of choosing a given alternative) in the present case is ±10%, assuming a true response probability of 50% associated with a utility indifference point. Precision of the survey estimates will be affected by the design effect due to unequal weights (i.e., weights assigned to the general population versus residents of Barnstable County). The estimated design effect is 1.32, which is the product of the design effect due to unequal selection probabilities, equal to 1.04, and the design effect due to calibration and non-response adjustments, projected to be 1.27 (see Table B3 below). The effective sample size for this survey (i.e., the equivalent sample size of an independent, identically distributed sample that provides the same precision as this survey) is approximated by dividing the nominal sample size by the total design effect. Thus, a sample of 2,163 respondents (completed surveys) will provide an effective sample size of 1,642. The margins of error for the estimates of population percentages range from 2.4 percent at the 50 percent population incidence level to 1.5 percent at the 10 percent population incidence level (Table B2). Assuming 30 percent of New England residents participate in coastal recreation (based on a conservative interpretation of participation numbers for counties in our sample from NOAA's national-scale ocean recreation survey, provided to us by NOAA), the projected number of recreational users completing this survey is 649, which provides an effective sample size of 493. For recreational users, the corresponding margins of error range from 4.4 percent at the 50 percent population incidence level to 2.6 percent at the 10 percent population incidence level.
The projected sample size for recreational users is expected to be sufficient to ensure large sample properties for developing regression models, as it will safely exceed the common rule of thumb of 20 observations per parameter (Harrell, 2015).
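The effective sample size and margin-of-error calculations described above can be sketched as follows, using the total design effect of 1.317 from Table B3 and the 1.96 multiplier stated in the notes to Table B2. The function names are hypothetical; the 30 percent participation share is the assumption stated in the text.

```python
from math import sqrt

Z_95 = 1.96          # margin of error = 1.96 x standard error (Table B2, note 3)
DEFF_TOTAL = 1.317   # total design effect from Table B3


def effective_sample_size(n: float, deff: float = DEFF_TOTAL) -> float:
    """Equivalent i.i.d. sample size: nominal completes divided by the design effect."""
    return n / deff


def margin_of_error(p: float, n: float, deff: float = DEFF_TOTAL) -> float:
    """Half-width of a 95% interval for a population percentage p."""
    return Z_95 * sqrt(p * (1 - p) / effective_sample_size(n, deff))


full_n = 2_163
rec_n = round(0.30 * full_n)   # assumed 30% coastal recreation participation

for label, n in [("Full sample", full_n), ("Recreational users", rec_n)]:
    print(f"{label}: n_eff = {effective_sample_size(n):.0f}, "
          f"MOE(50%) = {margin_of_error(0.5, n):.1%}, "
          f"MOE(10%) = {margin_of_error(0.1, n):.1%}")
```

Applying the same two functions to each state's expected completes reproduces the state rows of Table B2.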

Table B2: Sample size and accuracy projections

| State | Population size for selected counties¹ | Expected sample size (completed surveys) | Effective sample size² | Margin of error, 50% incidence³ | Margin of error, 10% incidence³ |
|---|---|---|---|---|---|
| Massachusetts | 6,284,793 | 1,581⁴ | 1,200 | 2.8% | 1.7% |
| Connecticut | 708,910 | 166 | 126 | 8.7% | 5.2% |
| New Hampshire | 700,742 | 166 | 126 | 8.7% | 5.2% |
| Rhode Island | 1,050,292 | 250 | 190 | 7.1% | 4.3% |
| Overall | 8,744,737 | 2,163 | 1,642 | 2.4% | 1.5% |

Source for population size: U.S. Census Bureau (2013). Annual Estimates of the Resident Population: April 1, 2010 to July 1, 2012. Retrieved September 22, 2016 from http://factfinder2.census.gov/.
¹ Includes individuals who reside in counties where at least 25% of the county's geographic area falls within a 100-mile radius of Cape Cod.
² The equivalent sample size of an independent, identically distributed sample that provides the same precision as the complex design survey at hand.
³ The margin of error is 1.96 times the standard error. The standard errors are based on the effective sample size.
⁴ Includes oversampling of Barnstable County.

(ii) Power analysis

Power analysis in this section is performed for a one-sample z-test of proportions for the study as a whole. The accuracy of the WTP estimates, and hence the power to detect differences in WTP, depends on the true values of the parameters of the logistic model used in WTP estimation, and hence can only be conducted post hoc, after the parameter estimates are obtained. Given the nature of the survey, the variance of the estimated proportion $\hat{p}$ underlying the z-test statistic when the population incidence is equal to $p$ is given by

$$\operatorname{Var}(\hat{p}) = \frac{p(1-p)}{n}\left(1 + CV^2\right),$$

where CV is the coefficient of variation of the final weights, $1 + CV^2$ is the design effect due to variable weights, or 'DEFF', and n is the nominal target number of completed surveys. The design effect due to unequal weighting is computed as demonstrated in Table B3.

Table B3: Design effect

| Subsample | Probability weight | Expected count | Sum of weights | Sum of squared weights (thousands) |
|---|---|---|---|---|
| Cape Cod/Barnstable County | 144.46 | 176 | 24,894 | 3,596 |
| Rest of population | 442.00 | 1,987 | 858,070 | 388,984 |
| Total | | 2,163 | 905,803 | 392,581 |
| DEFF due to unequal selection probabilities, n·Σw²/(Σw)² | | | | 1.037 |
| DEFF due to weight calibration | | | | 1.270 |
| Total DEFF | | | | 1.317 |
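As a check on Table B3, the sketch below computes Kish's design effect for unequal weights, n·Σw²/(Σw)², directly from the two probability weights and expected counts, and confirms that it equals the 1 + CV² form used in the text when CV uses the population (divisor n) variance. The function names are hypothetical. The result, about 1.038, agrees closely with the tabulated 1.037, and the weight ratio reproduces the 3.06 oversampling rate for Barnstable County.

```python
def kish_deff(strata):
    """Kish design effect from unequal weights: n * sum(w^2) / (sum(w))^2.

    strata: iterable of (weight, count) pairs; every unit in a pair has the same weight.
    """
    n = sum(count for _, count in strata)
    sum_w = sum(w * count for w, count in strata)
    sum_w2 = sum(w * w * count for w, count in strata)
    return n * sum_w2 / sum_w ** 2


def one_plus_cv_squared(strata):
    """Equivalent form 1 + CV^2, with CV computed from the population (1/n) variance."""
    n = sum(count for _, count in strata)
    mean = sum(w * count for w, count in strata) / n
    var = sum(count * (w - mean) ** 2 for w, count in strata) / n
    return 1 + var / mean ** 2


# Probability weights and expected counts from Table B3.
strata = [(144.46, 176), (442.00, 1_987)]
deff_selection = kish_deff(strata)
deff_total = deff_selection * 1.270      # times the DEFF due to weight calibration

print(f"Oversampling ratio: {442.00 / 144.46:.2f}")
print(f"DEFF (unequal selection): {deff_selection:.3f}")
print(f"DEFF (total): {deff_total:.3f}")
```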

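For a given power level (e.g., 80%), the detectable effect size $p_1 - p_0$ can be determined by solving

$$p_1 - p_0 = z_{1-\alpha/2}\sqrt{\frac{\mathrm{DEFF}\,p_0(1-p_0)}{n}} + z_{1-\beta}\sqrt{\frac{\mathrm{DEFF}\,p_1(1-p_1)}{n}}$$

for $p_1$, where $p_0$ is the population incidence under the null hypothesis, $\alpha$ is the significance level, and $1-\beta$ is the power. This is an extension of the standard power analysis to weighted stratified samples.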

Table B4 lists effect sizes using the most typical values for significance level (α = 5%) and power (1 − β = 80%), and for various scenarios concerning variability of weights within strata (which would be caused by differential non-response).

Table B4: Power analysis

| Effect size detectable with power 80% by a test of size 5% | p0 = 50% | p0 = 10% |
|---|---|---|
| Full sample (n = 2,163) | 3.5% | 2.1% |
| Recreational users (n = 649) | 6.3% | 4.0% |
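A minimal sketch of how the detectable effect sizes in Table B4 can be obtained: it solves the power condition p1 − p0 = z(1−α/2)·sqrt(DEFF·p0(1−p0)/n) + z(1−β)·sqrt(DEFF·p1(1−p1)/n) for p1 > p0 by bisection, using the total design effect of 1.317 from Table B3 and Python's standard-library normal quantiles. The function name and the choice of bisection are illustrative assumptions, not the agency's documented procedure.

```python
from math import sqrt
from statistics import NormalDist

DEFF_TOTAL = 1.317  # total design effect from Table B3


def detectable_p1(p0, n, deff=DEFF_TOTAL, alpha=0.05, power=0.80, tol=1e-10):
    """Smallest p1 > p0 detectable by a two-sided z-test of size alpha with the given power.

    Solves p1 - p0 = z_{1-alpha/2} * sqrt(deff*p0*(1-p0)/n)
                     + z_{power} * sqrt(deff*p1*(1-p1)/n)
    by bisection on the interval [p0, 1].
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    null_term = z_alpha * sqrt(deff * p0 * (1 - p0) / n)

    def gap(p1):
        return (p1 - p0) - null_term - z_beta * sqrt(deff * p1 * (1 - p1) / n)

    lo, hi = p0, 1.0    # gap(lo) < 0 and gap(hi) > 0 for the sample sizes used here
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if gap(mid) < 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


for label, n in [("Full sample", 2_163), ("Recreational users", 649)]:
    effects = [detectable_p1(p0, n) - p0 for p0 in (0.50, 0.10)]
    print(label, [f"{e:.1%}" for e in effects])
```

Using the alternative-hypothesis variance p1(1 − p1) in the second term is what makes the detectable effect at p0 = 10% slightly larger than a calculation that uses p0(1 − p0) throughout; with it, the sketch reproduces all four entries of Table B4.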

(iii) Non-Sampling Errors

A variety of non-sampling errors may be encountered in revealed preference surveys. Coverage error occurs when some eligible units have zero probability of being selected. For the current survey, the generalizable population is the residents of the 22 counties in the study area; recreational users from other parts of the country are not covered. EPA has determined that surveying populations farther from Cape Cod is economically impractical.

Measurement error occurs when survey answers do not accurately reflect the true events. In the current survey, recall error (i.e., the respondent's inability to correctly recall all of the circumstances of the trip) may lead a respondent to report a trip other than the most recent one, or to report incorrectly the dates of the most recent trip, the location visited, the saltwater activities, or the water quality.

Non-response bias is another type of non-sampling error that can occur in revealed preference surveys. Non-response bias can occur when households do not participate in a survey (in this case, by not completing the web survey or returning the mail survey, whether through a deliberate refusal after the mailing is opened or because the mailing is discarded unopened) or do not answer all relevant questions on the survey instrument (item non-response). EPA has designed the survey instrument to maximize the response rate and will also follow the mixed-mode survey approach of Dillman et al. (2014) (see subsection 4(b) for details). If necessary, EPA will use appropriate weighting or other statistical adjustments to correct for any bias due to non-response.