1. APEC_III_OMB_Part_A (9-27-17) (clean version)

Third Access, Participation, Eligibility and Certification Study Series (APEC III)

OMB: 0584-0530

Supporting Statement – Part A

for

OMB Control Number 0584-0530

Third Access, Participation, Eligibility and Certification Study Series (APEC III)

May 2017

Revised September 2017

Devin Wallace-Williams, PhD

Social Science Research Analyst

Office of Policy Support

Food and Nutrition Service

United States Department of Agriculture

3101 Park Center Drive

Alexandria, Virginia 22302

Phone: 703-457-6791

Email: [email protected]

Table of Contents

PART A. JUSTIFICATION

1. Circumstances That Make the Collection of Information Necessary

2. Purpose and Use of the Information

3. Use of Information Technology and Burden Reduction

4. Efforts to Identify Duplication

5. Impacts on Small Businesses or Other Small Entities

6. Consequences of Collecting the Information Less Frequently

7. Special Circumstances Relating to the Guidelines of 5 CFR 1320.5

8. Comments to the Federal Register Notice and Efforts for Consultation

9. Explanation of Any Decisions to Provide Any Payment or Gift to Respondents

10. Assurances of Confidentiality Provided to Respondents

11. Justification for Any Questions of a Sensitive Nature

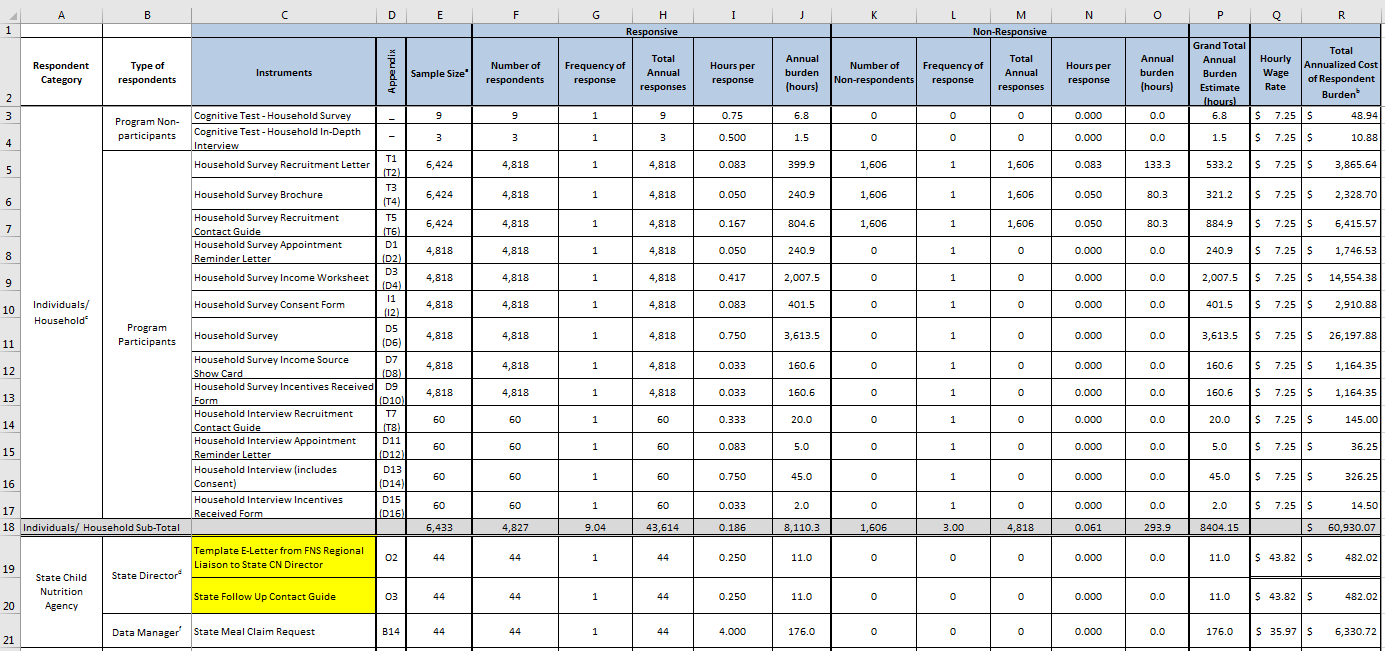

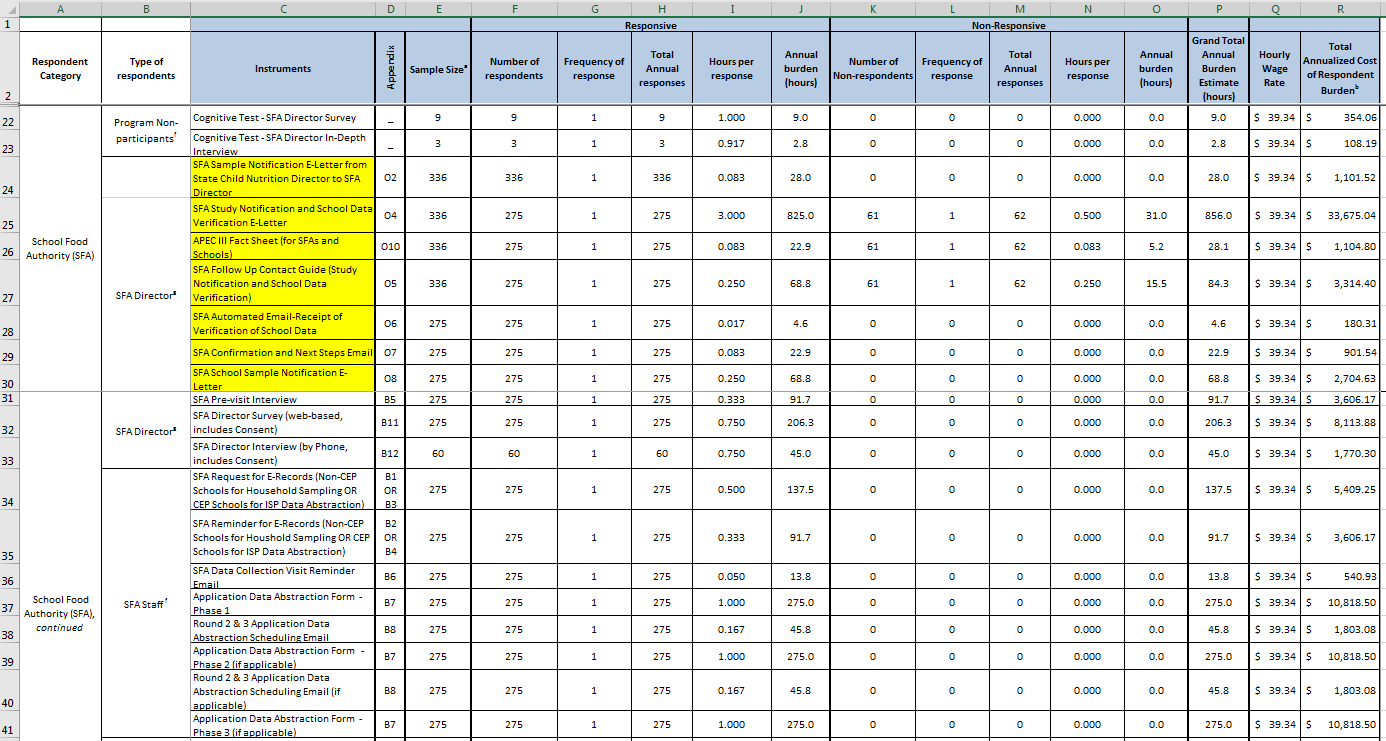

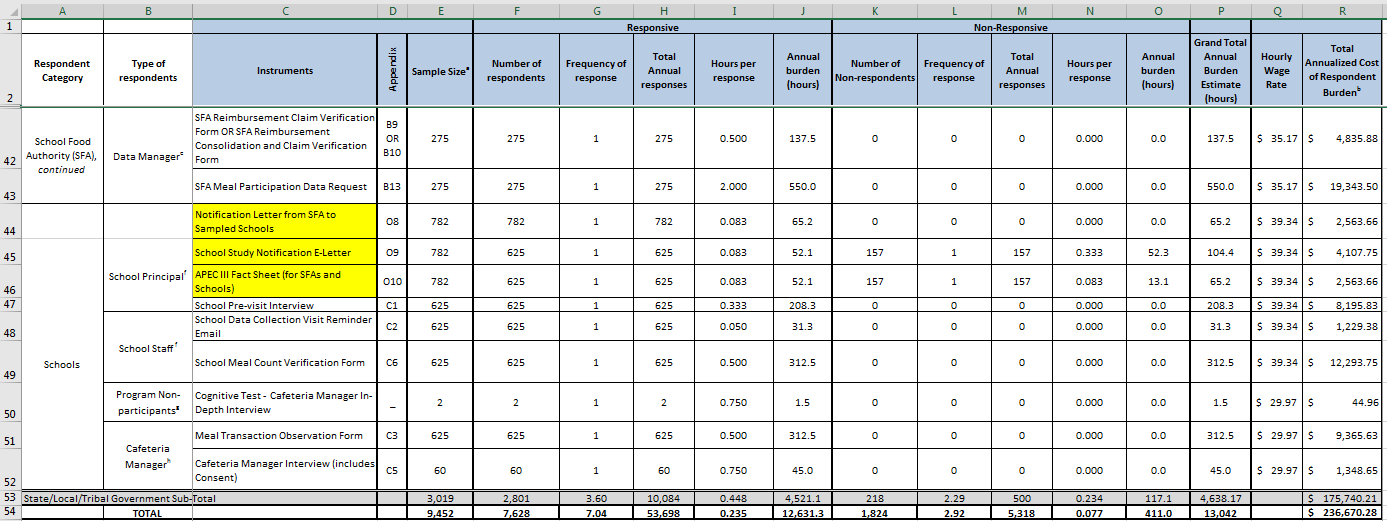

12. Estimates of the Hour Burden of the Collection of Information

13. Estimates of Other Total Annual Cost Burden

14. Estimates of Annualized Cost to the Federal Government

15. Explanation of Program Changes or Adjustments

16. Plans for Tabulations, and Publication and Project Time Schedule

17. Displaying the OMB Approval Expiration Date

18. Exceptions to the Certification Statement Identified in Item 19

Tables

A2-1 Summary of data collection instruments and forms

A12-1 Estimates of respondent burden including annualized hourly cost

A16-1 Proposed study schedule

Figures

A2-1 APEC III Data Collection Schedule

LIST OF APPENDICES TO APEC III OMB SUPPORTING STATEMENT

Appendix

A Applicable Statutes, Regulations, and Reference Documents

A1. Richard B. Russell National School Lunch Act (as amended through P.L. 113–79, Enacted February 07, 2014)

A2. Improper Payments Information Act (IPIA) of 2002 (P.L. 107-300)

A3. 2009 Executive Order 13520—Reducing Improper Payments

A4. Improper Payments Elimination and Recovery Act (IPERA) of 2010 (P.L. 111-204)

A5. Improper Payments Elimination and Recovery Improvement Act (IPERIA) of 2012 (P.L. 112-248)

A6. Office of Inspector General (OIG) USDA’S FY 2014 Compliance With Improper Payment Requirements

A7. M-15-02 – Appendix C to Circular No. A-123, Requirements for Effective Estimation and Remediation of Improper Payments

A8. FNS FY 2014 Research and Evaluation Plan

A9. Healthy Hunger-Free Kids Act 2010 (P.L. 111-296)

B SFA/State Data Collection Forms

B1. SFA Request for E-Records (Non-CEP Schools for Household Sampling)

B2. SFA Reminder for E-Records (Non-CEP Schools for Household Sampling)

B3. SFA Request for E-Records (CEP Schools for ISP Data Abstraction)

B4. SFA Reminder for E-Records (CEP Schools for ISP Data Abstraction)

B5. SFA Pre-Visit Interview

B6. SFA Data Collection Visit Reminder Email

B7. Application Data Abstraction Form

B8. Round 2 & 3 Application Data Abstraction Scheduling Email

B9. SFA Reimbursement Claim Verification Form―Sampled Schools

B10. SFA Reimbursement Consolidation and Claim Verification Form―All Schools

B11. SFA Director Survey (web based)

B12. SFA Director Interview (by phone)

B13. SFA Meal Participation Data Request

B14. State Meal Claim Data Request

C School Data Collection Forms

C1. School Pre-Visit Interview

C2. School Data Collection Visit Reminder Email

C3. Meal Transaction Observation Form

C4. Example of Meal Transaction Sampling

C5. Cafeteria Manager Interview

C6. School Meal Count Verification Form

D Household Data Collection Forms

D1. Household Survey Appointment Reminder Letter

D2. Household Survey Appointment Reminder Letter―Spanish

D3. Household Survey Income Worksheet

D4. Household Survey Income Worksheet―Spanish

D5. Household Survey

D6. Household Survey―Spanish

D7. Household Survey Income Source Show Card

D8. Household Survey Income Source Show Card―Spanish

D9. Household Survey Incentives Received Form

D10. Household Survey Incentives Received Form―Spanish

D11. Household Interview Appointment Reminder Letter

D12. Household Interview Appointment Reminder Letter―Spanish

D13. Household Interview

D14. Household Interview―Spanish

D15. Household Interview Incentives Received Form

D16. Household Interview Incentives Received Form―Spanish

E Summary of Public Comments

E1. School Nutrition Association Comments

E2. Food Research and Action Center Comments

F Response to Public Comments

F1. FNS Response to School Nutrition Association Comments

F2. FNS Response to Food Research and Action Center Comments

G National Agricultural Statistics Service (NASS) Comments

H Response to National Agricultural Statistics Service (NASS) Comments

I Household Survey Consent Forms

I1. Household Survey Consent Form

I2. Household Survey Consent Form―Spanish

J Westat Confidentiality Pledge

K Westat Federal-Wide Assurance

L Westat APEC III IRB Approval Letters

M Westat Information Technology and Systems Security Policy and Best Practices

N APEC III Burden Table

O Sample Frame Development and Selection Process

O1. Sample Frame Development and Selection Procedures

O2. Template E-Letter from FNS Regional Liaison to State Child Nutrition Director

O3. State Follow Up Contact Guide

O4. SFA Study Notification and School Data Verification E-Letter

O5. SFA Follow Up Contact Guide (Study Notification and School Data Verification)

O6. SFA Automated Email―Receipt of Verification of School Data

O7. SFA Confirmation and Next Steps Email

O8. SFA School Sample Notification E-Letter

O9. School Study Notification E-Letter

O10. APEC III Fact Sheet (for SFAs and Schools)

P APEC III SFA Sample Selection Memo

Q APEC III School Sample Selection Memo

R Special School Weights for Non-Certification Errors

S Data Collection Summary

T Household Recruitment

T1. Household Survey Recruitment Letter

T2. Household Survey Recruitment Letter―Spanish

T3. Household Survey Brochure

T4. Household Survey Brochure―Spanish

T5. Household Survey Recruitment Contact Guide

T6. Household Survey Recruitment Contact Guide―Spanish

T7. Household Interview Recruitment Contact Guide

T8. Household Interview Recruitment Contact Guide―Spanish

U APEC III Cognitive Pretest Findings Report

PART A. JUSTIFICATION

1. Circumstances That Make the Collection of Information Necessary

Identify any legal or administrative requirements that necessitate the collection. Attach a copy of the appropriate section of each statute and regulation mandating or authorizing the collection of information.

This information collection request is a reinstatement with change of a previously approved collection [NSLP/SBP Access, Participation, Eligibility, and Certification Study; OMB Number 0584-0530, Discontinued: 08/31/2015]. For this submission, the title has been changed to the Third Access, Participation, Eligibility, and Certification Study Series (APEC III). Appendices A1‒A9 include appropriate statutes, regulations, and reference documents pertaining to the APEC study series, and APEC III in particular.

The National School Lunch Program (NSLP) and the School Breakfast Program (SBP), commonly referred to as the School Meals programs, administered by the Food and Nutrition Service (FNS) of the U.S. Department of Agriculture (USDA), are authorized under sections 10 and 4, respectively, of the Richard B. Russell National School Lunch Act of 1966 (42 U.S.C. 1766) (Appendix A1). The NSLP is the second largest of 15 nutrition assistance programs administered by FNS. The NSLP operates in virtually all public schools and 94 percent of all schools (public and private combined) in the United States.1 More than two-thirds (71.1 percent) of these NSLP lunches were served free or at a reduced price to children from low-income households.2 The SBP is available in approximately 90 percent of all public schools that operate the NSLP. The program serves a greater proportion of children from low-income households, with 84.7 percent of SBP meals served free or at a reduced price in FY 2014.3

The Improper Payments Information Act (IPIA) of 2002 (PL 107-300) (Appendix A2); 2009 Executive Order 13520 - Reducing Improper Payments (Appendix A3); the Improper Payments Elimination and Recovery Act (IPERA) of 2010 (PL 111-204) (Appendix A4); the Improper Payments Elimination and Recovery Improvement Act (IPERIA) of 2012 (Appendix A5); the Office of Inspector General (OIG) USDA FY 2014 Compliance with Improper Payments Requirements (Appendix A6); and the Requirements for Effective Estimation and Remediation of Improper Payments (Appendix A7) set forth the priority, mandate, and requirements for FNS to identify, estimate, and reduce erroneous payments in these programs, including both underpayments and overpayments. Correspondingly, the FNS FY 2014 Research and Evaluation Plan (Appendix A8) includes promoting program integrity by reducing improper payments. FNS relies upon the Access, Participation, Eligibility and Certification (APEC) Study Series to provide reliable, national estimates of erroneous payments made to school districts in which the NSLP and SBP operate. This third study in the APEC series will provide the required information. The process required to provide NSLP and SBP benefits is complicated: the programs have three levels of reimbursement, multiple ways for parents and/or schools to certify children for reimbursement, and multiple standards on the meal components that make a meal reimbursable. While most payments under the programs are correct, approximately 20 percent were made in error in SY 2012-2013.

2. Purpose and Use of the Information

Indicate how, by whom, and for what purpose the information is to be used. Except for a new collection, indicate how the agency has actually used the information received from the current collection.

Based on findings from both APEC I4 and APEC II5, FNS initiated a comprehensive plan to reduce errors in the school meals programs, including (a) providing several approaches to certification that can reduce certification error; (b) implementing training programs and professional certifications; (c) funding investments in State technology improvement; and (d) creating the Office of Program Integrity for Child Nutrition Programs. The objectives of APEC III support FNS’s continuing actions to reduce improper payments in the school meals programs. In order to develop mitigation strategies to reduce errors in the future, FNS needs accurate and precise estimates of the error rates as well as an in-depth understanding of the causes of errors. This collection of primary data during School Year (SY) 2017-2018 will produce national estimates of improper payments (hereafter referred to as erroneous payments) in the NSLP and SBP. Additionally, because conducting large, nationally representative studies each year is cost-prohibitive, these data will also be used to develop models for estimating annual improper payments. The APEC study series is FNS’s only source of nationally representative measures of improper payments in the NSLP and SBP. APEC III will meet previous APEC study series objectives (Objectives 1 and 2) in addition to expanding its scope (Objectives 3 and 4) as follows:

Objective 1: Generate a national estimate of the annual amount of erroneous payments based on School Year 2017-2018 by replicating the APEC methodology.

Objective 2: Provide a robust examination of the relationship of student (household), school, and SFA characteristics to error rates.

Objective 3: Conduct a sub-study on the differences in error rates among SFAs using different implementation strategies in their school meals programs.

Objective 4: Conduct qualitative analyses examining the reasons for erroneous payments.

While participation in this study is part of the Healthy, Hunger-Free Kids Act (HHFKA) (Appendix A9) requirements to improve the management and integrity of child nutrition programs through program evaluation, State, School Food Authority (SFA), school, and household participation is voluntary and will not impact receipt of any benefits. The information collected from this study may be shared with other departments within the USDA and the government (as determined by FNS), and eventually made publicly available (aggregated, with identifying information redacted).

Consistent with APEC II methodology, we propose to implement the study using a multistage clustered-sample design, which will include the following:

A nationally representative sample of SFAs in the contiguous 48 states and the District of Columbia;

A stratified sample of schools (including both schools participating in the Community Eligibility Provision (CEP) and non-CEP schools) within each SFA; and

A random sample of students (households) within each sampled school that applied for free and reduced-price meals, were categorically eligible for free meals, or were directly certified for free meals.6
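The three stages listed above can be illustrated with a short sketch. It is only an illustration under stated assumptions: the frame, the identifiers, the sample sizes, and the function name `multistage_sample` are hypothetical, and simple random selection is used at every stage for brevity. The actual selection procedures are those documented in Appendix O and Supporting Statement Part B.

```python
import random

def multistage_sample(frame, n_sfas, n_schools_per_stratum, n_students, seed=0):
    """Illustrative three-stage selection: SFAs, then schools stratified by
    CEP status, then students (households) within each sampled school.

    frame: {sfa_id: {school_id: {"cep": bool, "students": [student_ids]}}}
    The student lists stand in for students who applied for or were certified
    for free or reduced-price meals. Simple random sampling at each stage is
    an assumption made for this sketch.
    """
    rng = random.Random(seed)
    sample = {}
    # Stage 1: sample SFAs.
    for sfa in rng.sample(sorted(frame), min(n_sfas, len(frame))):
        schools = frame[sfa]
        sample[sfa] = {}
        # Stage 2: sample schools separately within the CEP and non-CEP strata.
        for is_cep in (True, False):
            stratum = sorted(s for s, info in schools.items() if info["cep"] == is_cep)
            for school in rng.sample(stratum, min(n_schools_per_stratum, len(stratum))):
                # Stage 3: sample students (households) within the school.
                students = schools[school]["students"]
                sample[sfa][school] = rng.sample(students, min(n_students, len(students)))
    return sample

# Hypothetical frame: 5 SFAs, 6 schools each (alternating CEP status), 20 students each.
frame = {
    f"SFA{i}": {
        f"SFA{i}-S{j}": {
            "cep": j % 2 == 0,
            "students": [f"SFA{i}-S{j}-H{k}" for k in range(20)],
        }
        for j in range(6)
    }
    for i in range(5)
}

sample = multistage_sample(frame, n_sfas=2, n_schools_per_stratum=1, n_students=3)
```

Because households are nested within schools and schools within SFAs, the resulting sample is clustered, which is why the design calls for weights and design-based variance estimates rather than treating households as independent draws.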

Table A2-1 provides a summary of the data collection instruments that will be used for analyses to meet the study objectives, including (a) from whom the information will be collected; (b) how the information will be collected; (c) what information is collected; and (d) how frequently the information will be collected. These include SFA/State data collection forms (Appendices B1‒B14), school data collection forms (Appendices C1‒C6), and household data collection forms (Appendices D1‒D16). Table A2-1 does not include administrative materials that do not collect key data elements for analysis (e.g., consent forms, reminder letters, etc.); however, these items are included in the full burden table.

Table A2-1. Summary of data collection instruments and forms*

| Respondent category (from whom collected) | Instrument/form (appendix reference) | Mode of data collection (how collected) | Key data elements (what is collected) | Frequency per respondent | Type of error |
|---|---|---|---|---|---|
| SFA | SFA Request and Reminder Letters for E-Records (CEP and Non-CEP) (Appendices B1, B2, B3, and B4) | | | Up to three times | |
| SFA | SFA Pre-Visit Interview (Appendix B5) | | | Once | |
| SFA | Application Data Abstraction Form (Appendix B7) | | | Up to three times | Certification |
| SFA | SFA Reimbursement Claim Verification Form―Sampled Schools (Appendix B9) OR SFA Reimbursement Consolidation and Claim Verification Form―All Schools (Appendix B10) | | | Once | Aggregation |
| SFA | SFA Director Survey (Appendix B11) | | | Once | |
| SFA | SFA Director Interview (Appendix B12) | | | Once | |
| SFA | SFA Meal Participation Data Request (Appendix B13) | | | Once | Meal Claiming |
| State Agency | State Meal Claim Data Request (Appendix B14) | | | Once | Aggregation |
| School | School Pre-Visit Interview (Appendix C1) | | | Once | |
| School | Meal Transaction Observation Form (Appendix C3) | | | Once | Meal Claiming |
| School | Cafeteria Manager Interview (Appendix C5) | | | Once | Meal Claiming |
| School | School Meal Count Verification Form (Appendix C6) | | | Once | Aggregation |
| Households (Parents/Guardians) | Household Survey Income Worksheet (Appendix D3/D4) | | | Once | |
| Households (Parents/Guardians) | Household Survey (Appendix D5/D6) | | | Once | Certification |
| Households (Parents/Guardians) | Household Survey Income Source Show Card (Appendix D7/D8) | | | Once | |
| Households (Parents/Guardians) | Household Interview (Appendix D13/D14) | | | Once | Certification |

*NOTE: Table A2-1 only includes data collection instruments that specifically collect the data needed to respond to the study objectives. It does not include administrative materials and forms that do not collect key data elements for analysis (i.e., Appendices B6, B8, C2, C4, D1, D2, D9-12, D15, D16, I1, I2, T1-T8).

All of the information collection (on individual students/households/records) will be conducted once for APEC III in three phases during school year 2017-2018. The contractor will deliver summary reports (as described in the response to Question 16 of this supporting statement) along with the corresponding data sets and codebook (with de-identified data).

Data Collection Procedures

Table A2-1 provides a description of each data collection instrument by respondent category. As shown in Figure A2-1, which outlines the timeline for data collection, the data will be collected in three phases, with the majority of the household survey data collected during the first phase. Sampling and household surveys will take place three times during the study year to ensure coverage of applications submitted at different times during the year. At the mutually agreed upon time, the data collectors will travel to sampled and recruited households to conduct the in-person household survey as a computer-assisted personal interview (CAPI). The household in-depth interviews will be conducted by phone with a random sample of 60 parents/guardians who completed the household survey. These interviews will include questions on the respondent’s general experience with the application process to identify any areas that were confusing, whether the respondent used a paper application or a web-based version, and whether any school staff or other knowledgeable individuals were available to answer questions. Each household will complete a survey (and an in-depth phone interview if selected) once.

Beginning in Phase 2, SFAs and schools will be contacted by phone to complete the pre-visit interviews (to obtain information to prepare for the data collection visits) and to schedule the data collection visits. SFA and school data collection will commence in Phase 2 and continue in Phase 3. During Phases 2 and 3 of data collection, SFA directors will also be asked to complete the web-based SFA Director Survey (Appendix B11), which will provide relevant SFA characteristics for later analyses and comparisons. An in-depth SFA Director Interview (Appendix B12) will be conducted by phone with a random sample of 60 SFA directors to garner a better understanding of how SFA policies, procedures, and characteristics affect errors, in addition to actions that would be most effective in reducing errors.

Finally, during Phase 3 of data collection, all State-level data will be requested directly from the State via the State Meal Claim Data Request (Appendix B14) for direct, electronic submission. All data will be received and stored in a secure, password-protected computer-based system.

Additional information about recruitment and data collection procedures is presented in Question 2 of the Supporting Statement Part B.

Figure A2-1. APEC III Data Collection Schedule

| Phase 1 | Phase 2 | Phase 3 |
|---|---|---|
| August 2017 to November 2017 | December 2017 to February 2018 | March 2018 to June 2018 |
| Household Wave 1 | SFA/School Visit & Household Survey Wave 2 | SFA/School Visit (continued) & Household Survey Wave 3 |
| Sampling for Household Survey | Sampling for Household Survey | Sampling for Household Survey |
| Household Surveys | Household Surveys | Household Surveys |
| Household In-Depth Interviews | Household In-Depth Interviews | Household In-Depth Interviews |
| | SFA Pre-Visit Interview | SFA Pre-Visit Interview |
| | Application Abstractions | Application Abstractions |
| | CEP Records Reviews | SFA Meal Participation Data Request |
| | SFA Director Web Survey and Phone Interview | SFA Director Web Survey and Phone Interview (continued) |
| | School Visits for Meal Claiming and Aggregation Data Collection | School Visits for Meal Claiming and Aggregation Data Collection (continued) |
| | School Pre-Visit Interview | School Pre-Visit Interview |
| | Meal Observations | Meal Observations |
| | Cafeteria Manager Interview | Cafeteria Manager Interview |
| | School Meal Counts and Claims | School Meal Counts and Claims |
| | | State Meal Claim Data Request |

3. Use of Information Technology and Burden Reduction

Describe whether, and to what extent, the collection of information involves the use of automated, electronic, mechanical, or other technological collection techniques or other forms of information technology, e.g., permitting electronic submission of responses, and the basis for the decision for adopting this means of collection. Also describe any consideration of using information technology to reduce burden.

FNS is committed to complying with the E-Government Act of 2002 to promote the use of technology. Most of the information to be collected for this study will come from existing records and data collector observations rather than relying on SFA and school staff to provide the needed data. Wherever possible, use of technology has been incorporated into the data collection to reduce respondent burden. This includes the electronic submission of data files and electronic data entry (by data collectors) of abstractions from hard-copy records. These efforts are described below.

Field staff will be equipped with mobile internet hot-spot (MiFi) devices to enable them to connect to the internet anywhere mobile service is available. This will allow access to the study management system for electronic data entry. Laptops will be configured with security settings that feature numerous technical controls to protect study data and maintain the laptop’s integrity during the communication sessions. Access to the private data networks is controlled by many security features, including a firewall for port filtering and an SSL/VPN gateway that requires user authentication.

The administration of the in-person household survey (discussed in Question 2 of Supporting Statement Part B) will use computer-assisted personal interviewing (CAPI). Thus, all of the 4,818 household survey responses will be collected electronically. Use of CAPI automates skip patterns, customizes wording, completes response code validity checks, and applies consistent editing checks. The SFA director survey data will also be collected electronically via a web-based survey (275 survey responses). The research team will be prepared to receive and process electronic records from States and SFAs (discussed in Question 2 of Supporting Statement Part B) in place of the production of hard-copy documents or completion of specific forms. We estimate that all (approximately 319)7 of these records requested will be collected electronically.

FNS estimates that out of the 59,016 total responses8 for this collection, approximately 9.2 percent (5,412) of responses will be submitted electronically.9 An additional 7,324 records from 1,175 requests10 will be electronically entered into the study database by data collectors from abstraction from existing hard-copy SFA and school records, and thus present minimal burden on respondents.11
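The electronic-submission share can be checked from the counts quoted in this section. The sketch below is a reader's cross-check, not part of the burden estimate itself; it assumes that the 5,412 electronic responses are the sum of the CAPI household surveys, the web-based SFA director surveys, and the approximately 319 electronic record submissions, which is consistent with the figures given but is not stated explicitly in the text. The variable names are illustrative.

```python
# Counts quoted in Question 3 of this supporting statement.
capi_household_surveys = 4818      # household surveys collected via CAPI
web_director_surveys = 275         # web-based SFA Director Survey responses
electronic_record_requests = 319   # approximate State/SFA electronic record submissions
total_responses = 59016            # total responses for the collection

# Assumption: the electronic total is the sum of the three components above.
electronic_responses = (
    capi_household_surveys + web_director_surveys + electronic_record_requests
)
electronic_share_pct = round(100 * electronic_responses / total_responses, 1)

print(electronic_responses)    # 5412
print(electronic_share_pct)    # 9.2
```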

4. Efforts to Identify Duplication

Describe efforts to identify duplication. Show specifically why any similar information already available cannot be used or modified for use for the purposes described in Question 2.

Every effort has been made to avoid duplication of data collection efforts. These efforts include a review of USDA reporting requirements, State administrative agency reporting requirements, and special studies by government and private agencies. Specifically, we have obtained extant, district-level administrative data from the SFA Verification Summary Reports (Form FNS-742) (approved under OMB # 0584-0594 Food Programs Reporting System (FPRS), expiration date 9/30/2019) and public school district-level data from the National Center for Education Statistics (NCES) Common Core Data (CCD). The additional information required for this study is not currently reported to FNS on a regular basis in a standardized form or available from any other previous, contemporary study.

5. Impacts on Small Businesses or Other Small Entities

If the collection of information impacts small businesses or other small entities (Item 5 of OMB Form 83-I), describe any methods used to minimize burden.

The information requested or required has been held to the minimum needed for the intended use. Although smaller SFAs are involved in this data collection effort, they deliver the same program benefits and perform the same function as any other SFA. FNS estimates that 2 percent of SFA respondents are small entities, which equates to approximately 19 respondents (7 SFA directors, 6 SFA staff, and 6 SFA data managers). FNS estimates that 0.2 percent of the 9,452 total respondents for this study will be small entities.

6. Consequences of Collecting the Information Less Frequently

Describe the consequence to Federal program or policy activities if the collection is not conducted, or is conducted less frequently, as well as any technical or legal obstacles to reducing burden.

If this data collection were not performed, FNS would be unable to meet its Federal reporting requirements under IPERIA to annually measure erroneous payments in the NSLP and SBP and identify the sources of erroneous payments as outlined in M-15-02 - Appendix C to Circular No. A-123, Requirements for Effective Estimation and Remediation of Improper Payments12 (Appendix A7). This is a one-time study. Each household survey respondent will be contacted only once, with contacts spread across the school year. To ensure that the household survey respondents are representative of applicants throughout the school year, the SFA directors will be contacted three times throughout the school year to provide information on additional income eligibility applications submitted since the prior visit.

7. Special Circumstances Relating to the Guidelines of 5 CFR 1320.5

Explain any special circumstances that would cause an information collection to be conducted in a manner:

Requiring respondents to report information to the agency more often than quarterly;

Requiring respondents to prepare a written response to a collection of information in fewer than 30 days after receipt of it;

Requiring respondents to submit more than an original and two copies of any document;

Requiring respondents to retain records, other than health, medical, government contract, grant-in-aid, or tax records, for more than 3 years;

In connection with a statistical survey, that is not designed to produce valid and reliable results that can be generalized to the universe of study;

Requiring the use of a statistical data classification that has not been reviewed and approved by OMB;

That includes a pledge of confidentiality that is not supported by authority established in statute or regulation, that is not supported by disclosure and data security policies that are consistent with the pledge, or which unnecessarily impedes sharing of data with other agencies for compatible confidential use; or

Requiring respondents to submit proprietary trade secret, or other confidential information unless the agency can demonstrate that it has instituted procedures to protect the information’s confidentiality to the extent permitted by law.

The collection of information is conducted in a manner consistent with the guidelines in 5 CFR 1320.5. None of the special circumstances listed are applicable to this data collection effort.

8. Comments to the Federal Register Notice and Efforts for Consultation

If applicable, provide a copy and identify the date and page number of publication in the Federal Register of the agency’s notice, required by 5 CFR 1320.8 (d), soliciting comments on the information collection prior to submission to OMB. Summarize public comments received in response to that notice and describe actions taken by the agency in response to these comments. Specifically address comments received on cost and hour burden.

Describe efforts to consult with persons outside the agency to obtain their views on the availability of data, frequency of collection, the clarity of instructions and recordkeeping, disclosure, or reporting format (if any), and on the data elements to be recorded, disclosed, or reported.

Consultation with representatives of those from whom information is to be obtained or those who must compile records should occur at least every 3 years even if the collection of information activity is the same as in prior years. There may be circumstances that may preclude consultation in a specific situation. These circumstances should be explained.

A notice was published in the Federal Register on August 10, 2016, Volume 81, Number 154, Pages 52814‒52819. The public comment period ended on October 11, 2016. The two public comments received, detailed in Appendices E1 and E2, were related to (a) data collection methods to reduce burden; (b) special considerations for the study such as meal pattern requirements and CEP; and (c) estimating State and national errors. FNS’s response, detailed in Appendices F1 and F2, included (a) a description of specific approaches implemented to streamline the process for SFAs and schools and minimize their burden; (b) enhancements to the APEC III design to incorporate special considerations for NSLP and SBP; and (c) an explanation that supplemental modeling techniques will be added to the data analysis plan.

The research team is supplemented with technical advisors, survey methodologists and sampling experts. In addition, consultations about the research design, sample design, data sources and needs, and study reports occurred during the study’s planning and design phase, and will continue throughout the study. The purpose of these consultations is to ensure the technical soundness of the study and the relevance of its findings and to verify the importance, relevance, and accessibility of the information sought in the study. Other individuals outside the research team who reviewed and commented upon key documents produced by the study include Jennifer Rhorer, 202-720-2616, National Agricultural Statistics Service (NASS), Methods Division. Appendix G provides a summary of NASS comments. Appendix H provides the response to NASS comments.

9. Explanation of Any Decisions to Provide Any Payment or Gift to Respondents

Explain any decision to provide any payment or gift to respondents, other than remuneration of contractors or grantees.

The household survey will include questions of a sensitive nature (income related), including a request for documentation of income, and will take place in respondents’ homes. For this reason, FNS is requesting the use of incentives to household members (i.e., parents/guardians).13 Providing survey participants with a monetary incentive reduces nonresponse bias and improves survey representativeness.14,15,16 Given the sensitive nature of the survey questions and the time required in participants’ homes, incentives will be essential for minimizing nonresponse and ensuring that there are no meaningful differences between respondents and nonrespondents. Finally, incentives improve survey response rates, which in turn reduces nonresponse bias. Having an adequate number of completed surveys is essential to detect statistically significant differences. Incentives are a key component of multi-pronged approaches used to minimize nonresponse bias and the time needed to achieve completion rates without affecting data quality.17

FNS is proposing to offer financial incentives of $30 or $50 (depending on level of participation) for completing the in-person household survey component of the study. All respondents completing the in-person household survey would be provided $30. Respondents who also obtain and provide documents to verify income during the household survey will receive an additional $20, for a total of $50. The lack of this documentation was a limitation of APEC II and a concern for the quality of the data. The additional $20 incentive reflects the additional activity and level of effort required of respondents to review and complete the income worksheet in advance and to compile the necessary documentation. In another meta-analysis of monetary incentives, Mercer and colleagues used dose-response18 analysis to examine the effects of increasing monetary incentive amounts on response rates.19 They found a strong, nonlinear effect of increasing monetary incentive amounts on response rates.

Respondents (sub-sample of 60) who are randomly selected for and complete the household in-depth interview by phone will receive a separate $20 incentive. All incentives will be provided in the form of a Visa gift card. The incentive amounts were calculated based on the incentive amounts approved for APEC II20 (adjusted for inflation and additional survey burden) and on the time and burden placed on respondents in their homes. There are no participation costs for the respondents.

10. Assurances of Confidentiality Provided to Respondents

Describe any assurance of confidentiality provided to respondents and the basis for the assurance in statute, regulation, or agency policy.

The FNS complies with the Privacy Act of 1974 (5 USC §552a). Study participants will be subject to assurances as provided by the Privacy Act of 1974, which requires the safeguarding of individuals against invasion of privacy. These assurances will be documented in an informed consent form (Appendix I1 and I2). In addition, all project staff, field data collectors, and subcontractors have signed or will sign a Westat Confidentiality Pledge (Appendix J). All procedures planned for the study will ensure the privacy and security of electronic data during the data collection and processing period. FNS published a system of records notice (SORN) titled FNS-8 USDA/FNS Studies and Reports in the Federal Register on April 25, 1991, volume 56, pages 19078‒19080, that discusses the terms of protections that will be provided to respondents. Names and phone numbers will not be linked to participants’ responses, survey respondents will have a unique ID number, and analyses will be conducted on data sets that include only respondent ID numbers. Finally, a nondisclosure analysis will be performed on each of the files slated to become public use files, and the appropriate remedial actions will be taken as necessary. In addition, an initial risk analysis will be conducted by evaluating all the potential personal, geographic, and other identifiers in the files that could be disseminated using a rigorous step-by-step process. The outcomes and implications will be summarized, and a disclosure mitigation plan will be developed.

All collected data will be securely transmitted using closed and secure data transmission. Any hard-copy materials will be stored in locked file cabinets. Electronic data can only be accessed using a logon routine with approved user identification and a strong password. Access to records is limited to those persons who process the records for the specific uses stated in the Privacy Act notice. FNS does not have any connection to the personal data collected and will not handle any data containing identifying information. The compiled report for FNS (from the contractor) will contain no personal information and will be publicly posted. Data will be presented in aggregate statistical form only. Names and phone numbers will be destroyed two years after the completion of analysis and delivery of the contractual reports.

Westat’s Institutional Review Board (IRB) serves as the contractor’s administrative body overseeing all research involving human subjects. Westat holds a Federal-Wide Assurance (FWA) of compliance from the U.S. Department of Health and Human Services’ Office of Human Research Protections (DHHS/OHRP) (Appendix K). The study protocol was submitted for review and approval in phases. The initial submission was to obtain approval to conduct the cognitive testing of the instrument. This approval was obtained on February 19, 2016. Subsequent submissions for the main study data collection and amendments to study materials were approved on August 8, 2016, August 9, 2016, and August 12, 2016 (see Appendix L).

11. Justification for Any Questions of a Sensitive Nature

Provide additional justification for any questions of a sensitive nature, such as sexual behavior or attitudes, religious beliefs, and other matters that are commonly considered private. This justification should include the reasons why the agency considers the questions necessary, the specific uses to be made of the information, the explanation to be given to persons from whom the information is requested, and any steps to be taken to obtain their consent.

The household survey includes sensitive questions, including questions on demographics, household composition, income, and receipt of Federal or State public assistance. The consent forms (Appendix I1 and I2) will inform all respondents of their right to decline to participate or answer any question they do not wish to answer without consequences. Similar sensitive questions were asked in the previous APEC studies, with no evidence of harm to the respondents. Participating in this study will not affect any USDA benefits received by programs or families.

Questions on income and the receipt of public assistance are necessary to establish the family’s actual eligibility for free or reduced-price NSLP/SBP meal benefits and will be used to estimate certification error and derive estimates of erroneous payments, which are key objectives of this study. The study letter, brochure, consent form, and the data collector (using the recruitment call guide/protocol) will inform participants that their participation, and any information they provide, will not affect any benefits they are receiving.

The data that will be obtained from the household survey are not accessible to FNS, as the survey requires an independent and one-on-one review of respondents’ household information as reported on their income eligibility application to confirm their current eligibility status for free or reduced-priced meals. The data that will be obtained from the SFAs and schools will be obtained from existing records review.

This study will adhere to Westat’s Information Technology and Systems Security Policy and Best Practices (Appendix M). These protections include management (e.g., certification, accreditation, and security assessments, planning, risk assessment), operational (e.g., awareness and training, configuration management, contingency planning), and technical (e.g., access control, audit and accountability, identification, and authentication) controls that are implemented to secure study data. This research will fully comply with all Government-wide guidance and regulations as well as USDA Office of the Chief Information Officer (OCIO) directives, guidelines, and requirements.

12. Estimates of the Hour Burden of the Collection of Information

Provide estimates of the hour burden of the collection of information. Indicate the number of respondents, frequency of response, annual hour burden, and an explanation of how the burden was estimated. The statement should:

A) Indicate the number of respondents, frequency of response, annual hour burden, and an explanation of how the burden was estimated. If this request for approval covers more than one form, provide separate hour burden estimates for each form and aggregate the hour burdens in Item 13 of OMB Form 83-I.

With this reinstatement, there are 9,452 respondents, 59,016 responses, and 13,042 burden hours. The estimated burden for this information collection, including the number of respondents, frequency of response, average time to respond, and annual hour burden, is provided in Appendix N. A summary of the burden table appears below (Table A12-1). The 9,452 respondents include 7,628 respondents and 1,824 nonrespondents. The average frequency of response per year for respondents and nonrespondents is 7.04 and 2.92, respectively. The total annualized hour burden to the public is 13,042 hours (including 12,631 for respondents and 411 for nonrespondents). The estimates are based on prior experience with comparable instruments on APEC I and II, as well as estimates from cognitive testing of instruments.
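The totals in this paragraph can be cross-checked with simple arithmetic. The sketch below is illustrative only: it uses the respondent, nonrespondent, and burden-hour figures quoted above together with the response counts given in footnote 8, and the variable names are our own.

```python
# Figures quoted in the paragraph above and in footnote 8.
respondents, nonrespondents = 7_628, 1_824
responses_resp, responses_nonresp = 53_698, 5_318
hours_resp, hours_nonresp = 12_631, 411

total_respondents = respondents + nonrespondents
total_responses = responses_resp + responses_nonresp
total_hours = hours_resp + hours_nonresp

# Average frequency of response = responses divided by people in the group.
freq_resp = round(responses_resp / respondents, 2)
freq_nonresp = round(responses_nonresp / nonrespondents, 2)

print(total_respondents, total_responses, total_hours)  # 9452 59016 13042
print(freq_resp, freq_nonresp)                          # 7.04 2.92
```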

Table A12-1. Estimates of respondent burden including annualized hourly cost (See Appendix N)

B) Provide estimates of annualized cost to respondents for the hour burdens for collections of information, identifying and using appropriate wage rate categories.

The estimates of respondent cost are based on the burden estimates and use the U.S. Department of Labor, Bureau of Labor Statistics, May 2016 National Occupational and Wage Statistics. Both Occupational Group (999200) State Government (excluding schools and hospitals) and Occupational Group (611000) Educational Services (including private, state, and local government schools) were used to estimate annualized costs for managers or directors at the State agencies, SFAs, and schools. Annualized costs were based on the mean hourly wage for each job category. The hourly wage rate used for the State CN director is $43.82 (Occupation Code 11-9030, State Government-999200).21 The hourly wage rate used for the State CN data manager is $35.97 (Occupation Code 15-1141, Database Administrator-999200). The hourly wage rate used for the SFA director and the school principal or other school administrator is $39.34 (Occupation Code 11-9039-611000). The hourly wage rate used for the SFA-level data manager is $35.17 (Occupation Code 15-1141, Database Administrator-611000). The hourly wage rate used for the food service (cafeteria) manager in schools is $29.97 (Occupation Code 11-9051, Food Service Manager-611000).22 The estimated annualized cost for the household survey respondent uses the Federal minimum wage of $7.25.23 The total estimated annualized cost is $236,670.28.
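As a rough illustration of how the annualized cost is built up, each respondent category's burden hours are multiplied by the corresponding hourly wage and the products are summed. In the sketch below the wage rates are those stated above, but the role labels and the example hours are hypothetical placeholders; the actual hours by category are in Appendix N.

```python
from decimal import Decimal

# Hourly wage rates stated in the text (role labels are our own).
WAGE = {
    "state_cn_director": Decimal("43.82"),
    "state_cn_data_manager": Decimal("35.97"),
    "sfa_director_or_principal": Decimal("39.34"),
    "sfa_data_manager": Decimal("35.17"),
    "cafeteria_manager": Decimal("29.97"),
    "household": Decimal("7.25"),
}

def annualized_cost(hours_by_role):
    """Sum of burden hours times hourly wage across respondent roles."""
    return sum(
        (WAGE[role] * Decimal(str(hours)) for role, hours in hours_by_role.items()),
        Decimal("0"),
    )

# Hypothetical hours, for illustration only (not taken from Appendix N).
example_hours = {"household": 100, "cafeteria_manager": 10}
print(annualized_cost(example_hours))  # 1024.70
```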

13. Estimates of Other Total Annual Cost Burden

Provide estimates of the total annual cost burden to respondents or record keepers resulting from the collection of information (do not include the cost of any hour burden shown in items 12 and 14). The cost estimates should be split into two components: (a) a total capital and start-up cost component annualized over its expected useful life; and (b) a total operation and maintenance and purchase of services component.

There are no capital/startup or ongoing operation/maintenance costs associated with this information collection.

14. Estimates of Annualized Cost to the Federal Government

Provide estimates of annualized cost to the Federal government. Provide a description of the method used to estimate cost and any other expense that would not have been incurred without this collection of information.

The total estimated cost of the study to the Federal government is $11,820,923.60,24 including contractor and Federal government employee costs. The average25 annual cost to the Federal government, including contractor and Federal government employee cost, is $2,364,184.72.

The total estimated cost to the contractor is $11,728,218.0026 over 5 years (September 2015 through September 2020), representing an average27 annualized cost of $2,345,643.60. This represents the contractor’s costs for labor, other direct costs, and indirect costs.

The total estimated cost to the Federal government for the FNS employee, social science research analyst/project officer, involved in project oversight with the study is estimated at $92,705.60 over 5 years. This represents an estimated annual cost of $18,541.12 (GS-12, step 6 at $44.57 per hour, 416 hours per year). Federal employee pay rates are based on the Office of Personnel Management (OPM) salary table for 2017 for the Washington, DC, metro area locality (for the locality pay area of Washington-Baltimore-Arlington, DC-MD-VA-WV-PA).28
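The cost figures in this item are internally consistent, as the following illustrative check shows. It uses only the amounts stated above (contract value, hourly rate, hours per year, and the 5-year period); the variable names are our own.

```python
from decimal import Decimal

YEARS = 5
contract_total = Decimal("11728218.00")   # Westat total contract value
hourly_rate = Decimal("44.57")            # GS-12, step 6
hours_per_year = 416

fns_annual = hourly_rate * hours_per_year   # 18541.12
fns_total = fns_annual * YEARS              # 92705.60
grand_total = contract_total + fns_total    # 11820923.60
avg_annual = grand_total / YEARS            # 2364184.72
contract_annual = contract_total / YEARS    # 2345643.60

print(fns_annual, fns_total, grand_total, avg_annual, contract_annual)
```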

15. Explanation of Program Changes or Adjustments

Explain the reasons for any program changes or adjustments reported in Items 13 or 14 of the OMB Form 83-I.

This collection is a reinstatement of a previously approved information collection29 as a result of program changes, and will add 13,042 hours of annual burden and 59,016 responses to OMB’s inventory. When this collection was discontinued, it had 5,363 burden hours and 7,974 responses, so this reinstatement reflects an increase from APEC II of 7,679 burden hours and 51,042 responses. This substantial increase from APEC II is due to enhancements to the APEC III design to increase precision for estimating errors; collect qualitative data30 to provide information on the causes of error to help guide policy decisions; address the concern about sample seasonality; and have more accurate measures of improper payments. These design enhancements required the following changes (that in turn increased the burden hours and number of responses): (1) an increased sample of SFAs; (2) an increased sample of schools due to the increase of SFAs; (3) expansion of the SFA director survey; (4) addition of an SFA director qualitative interview (for a sub-sample only); (5) addition of a cafeteria manager interview (for a sub-sample only); (6) an increased sample of households due to the increased sample of schools; (7) expansion of the household survey to better understand reasons for errors; and (8) addition of a household interview (with a sub-sample only).

16. Plans for Tabulations, and Publication and Project Time Schedule

For collections of information whose results are planned to be published, outline plans for tabulation and publication.

Table A16-1 below provides the schedule for APEC III activities.

Table A16-1. Proposed study schedule

| Project activity | Schedule |
| --- | --- |
| Pre Data Collection Activities (Sample Frame Development)* | Feb 2016 – Apr 2017 |
| Train Data Collectors | Aug 2017 |
| Conduct Data Collection** | Aug 2017 – Jun 2018 |
| Create Study Database | Feb 2017 – Aug 2017 |
| Analyze Data | Aug 2018 – Mar 2019 |
| Create and Validate National & State-Level Estimation Models | Apr 2019 – Sep 2019 |
| Draft, Revised, and Final Reports | Jun 2019 – Jun 2020 |
| Presentation of Preliminary Findings | Jan 2020 |
| Draft, Revised, and Final Briefing Materials | Jun 2020 – Aug 2020 |
| Additional Reports and Analyses | May 2020 – Sep 2020 |

* These pre data collection activities include the use of FNS administrative data and public records to develop the sample frame

** The data collection and analysis schedule will be adjusted as needed if OMB approval is not received by August 2017.

Generate a national estimate of the annual amount of erroneous payments based on School Year 2017-2018. (Objective 1). The weighted statistical tables will be used to derive the national estimates of erroneous payments due to certification, meal claiming, and aggregation error. We will estimate erroneous payment rates and associated dollar amounts of erroneous payments, including overpayments, underpayments, and gross and net erroneous payments separately for NSLP and SBP, as well as provide a combined school meals estimate of erroneous payments.

Calculation of Certification Error Rates. Certification error occurs when students are certified for levels of benefits for which they are not eligible (e.g., certified for free meals when they should be certified for reduced-priced meals, or vice versa). For non-CEP schools, program eligibility and the associated reimbursement rate are determined separately for each student. In CEP schools, the CEP group’s eligibility is determined jointly, resulting in one reimbursement rate for all the schools in the group.

For non-CEP schools, once the applicant’s true certification status is independently determined, it will be compared with his or her current certification status. For CEP schools, we will identify certification errors by independently estimating the variation between the verified identified student percentage (ISP) and the current ISP being used for reimbursement purposes. Once we estimate the certification errors for each meal type, we will compute the per-meal erroneous payments for a given student. The next steps are to (a) calculate the erroneous payment per meal; (b) sum over students to get national level error estimates; (c) estimate student or school level erroneous payments; (d) budget calibrate the weights; and (e) estimate national erroneous payments.
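The non-CEP steps described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the study's estimation code: the per-meal reimbursement rates are hypothetical values in cents (not actual USDA rates), the record layout is our own, and the budget-calibration step for the weights is omitted.

```python
# Hypothetical per-meal reimbursement rates in cents (not actual USDA rates).
RATE_CENTS = {"free": 300, "reduced": 260, "paid": 30}

def per_meal_error(certified, true_status):
    """Erroneous payment per meal: positive = overpayment, negative = underpayment."""
    return RATE_CENTS[certified] - RATE_CENTS[true_status]

def national_estimates(students):
    """students: iterable of (certified, true_status, meals, weight) tuples."""
    over = under = 0
    for certified, true_status, meals, weight in students:
        err = per_meal_error(certified, true_status) * meals * weight
        if err > 0:
            over += err
        else:
            under += -err
    return {"over": over, "under": under, "gross": over + under, "net": over - under}

# Three hypothetical sampled students, each with a sampling weight of 50.
sample = [
    ("free", "reduced", 100, 50),   # over-certified
    ("reduced", "free", 80, 50),    # under-certified
    ("paid", "paid", 120, 50),      # correctly certified
]
print(national_estimates(sample))
# {'over': 200000, 'under': 160000, 'gross': 360000, 'net': 40000}
```

Gross erroneous payments add overpayments and underpayments, while net erroneous payments subtract underpayments from overpayments, consistent with the distinction drawn under Objective 1.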

Calculation of Aggregation Error Rates. Aggregation errors occur in the process of tallying the number of meals served each month by claiming category (which occurs at the point of sale within the school) and transferring it from school to SFA, from SFA to State, and from State to USDA for reimbursement. For non-CEP schools, at each stage there can be meal overcounts or undercounts within each reimbursement category, leading to potentially six types of errors at each of the four aggregation stages. For CEP schools, all reimbursable meals are free, so they only report the total number of reimbursable meals. As such, they can only make two types of errors (under- or over-reporting) at each aggregation stage.
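The count of error types follows from crossing reimbursement categories with the direction of the miscount. The sketch below assumes the three non-CEP reimbursement categories are free, reduced-price, and paid, and labels the four stages according to our reading of the paragraph above; both are illustrative assumptions.

```python
from itertools import product

# Stage labels are our reading of the text; category names are assumed.
STAGES = ["point of sale", "school to SFA", "SFA to State", "State to USDA"]
DIRECTIONS = ["overcount", "undercount"]
NON_CEP_CATEGORIES = ["free", "reduced-price", "paid"]
CEP_CATEGORIES = ["total reimbursable meals"]

def error_types(categories):
    """All (category, direction) combinations possible at one aggregation stage."""
    return list(product(categories, DIRECTIONS))

print(len(error_types(NON_CEP_CATEGORIES)))  # 6 per stage
print(len(error_types(CEP_CATEGORIES)))      # 2 per stage
print(len(STAGES))                           # 4 stages
```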

We will identify errors from daily meal count totals not being summed correctly at each of the four stages of aggregation. Because the meals for this analysis are reported at the school level, a school-level analysis weight will be derived for each sampled school. Once the errors are identified, the next steps are (a) estimate the national error rate by applying school weights; (b) estimate school level erroneous payments; and (c) estimate national erroneous payments by applying budget-calibrated school weights.
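One way to picture the identification step and step (a) is shown below. This is a simplified sketch, not the study's estimator: it assumes a single aggregation stage, defines the error rate as weighted absolute miscounts divided by weighted recomputed totals (our assumption), and uses hypothetical counts and school weights.

```python
def school_discrepancy(daily_counts, reported_total):
    """Reported total minus the recomputed sum of daily counts (positive = overcount)."""
    return reported_total - sum(daily_counts)

def weighted_error_rate(schools):
    """schools: iterable of (daily_counts, reported_total, school_weight) tuples."""
    numerator = denominator = 0.0
    for daily_counts, reported_total, weight in schools:
        numerator += weight * abs(school_discrepancy(daily_counts, reported_total))
        denominator += weight * sum(daily_counts)
    return numerator / denominator

# Two hypothetical schools: the first sums correctly, the second overcounts by 5.
schools = [
    ([100, 110, 90], 300, 10.0),
    ([50, 50], 105, 20.0),
]
print(round(weighted_error_rate(schools), 4))  # 0.02
```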

Calculation of Meal Claiming Errors. These errors occur when a meal is incorrectly classified as reimbursable or not, based on whether the meal served meets the specific meal patterns required for NSLP or SBP. This error occurs in school cafeterias at the point of sale, with the unit of analysis being a “meal.” That is, through observation we can determine if a cashier correctly classifies meals, but we will not know the student’s eligibility status. We will assume that errors are randomly distributed and affect the reimbursement for each eligibility type proportionally. Once the errors are identified, the next steps are (a) estimate the national error rate by applying school weights; (b) estimate school level erroneous payments; and (c) estimate national erroneous payments by applying budget-calibrated school weights.
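The proportionality assumption can be illustrated with a short sketch. Because the student's eligibility status is unknown at the point of observation, the same observed error rate is applied to the reimbursement claimed under each eligibility type. The error rate and dollar amounts below are hypothetical, and the function name is our own.

```python
def allocate_claiming_error(error_rate, reimbursement_by_type):
    """Apply one observed meal-claiming error rate proportionally to each eligibility type."""
    return {
        eligibility: error_rate * amount
        for eligibility, amount in reimbursement_by_type.items()
    }

# Hypothetical inputs: a 5 percent error rate and claimed reimbursements in dollars.
claimed = {"free": 1000.0, "reduced-price": 200.0, "paid": 100.0}
allocated = allocate_claiming_error(0.05, claimed)
print({k: round(v, 2) for k, v in allocated.items()})
# {'free': 50.0, 'reduced-price': 10.0, 'paid': 5.0}
```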

The analyses will also compare key findings of APEC III to those found in the previous two APEC studies using tests of significance. These key comparisons include certification and non-certification (meal claiming and aggregation) error rates as well as improper payment rates by each category (i.e., under- and over-payment) and source of error (e.g., point-of-sale, SFA meal counts) for NSLP and SBP separately. A regression model will be used to estimate both the effects of procedural changes and effects of trends on the certification error measures for APEC III compared to APEC II. These estimates will be used to adjust the data over time to create a consistent series. T-tests will be implemented to test the significance of variation in certification errors across APEC studies. Non-certification errors and rate of improper payments are computed as means or proportions. Standard statistical procedures such as the SURVEY PROCs in SAS can be used to conduct adjusted Wald F tests comparing such outcomes across studies in different years (i.e., APEC I, II, and III).

Provide a robust examination of the relationship of student, school, and SFA characteristics to error rates (Objective 2). FNS will collect information on the administrative and operational structure of SFAs and schools sampled for the study. After applying the appropriate weights, data will be tabulated to provide descriptive summaries that are representative of SFAs and schools participating in the school meals programs nationally during SY 2017-2018. The relationship between student, school, and SFA characteristics and each of the key types and sources of error will be examined. Before starting the analyses, the distribution of program error data at all levels will be examined, and outliers will be flagged, especially those associated with unusual contextual factors that may be contributing to the program errors observed on the data collection day. Analyses will be conducted on program errors with and without outliers.

Conduct a sub-study on the differences in error rates among SFAs using different implementation strategies in their school meals programs (Objective 3). Sub-studies will include analyses to determine whether there is a statistically significant difference in error rates that may be associated with SFA characteristics (e.g., implementation strategies in school meals programs). Bivariate and multivariate regression analyses at the SFA level will be used to answer this research question. Bivariate analyses will examine the relationship between an error rate and a specific SFA characteristic (e.g., SFA size, location). In contrast, multivariate analysis will use regression techniques to examine the relationship between a set of independent variables and the error rate. The coefficients in the regression will indicate how much and in what direction the error rate changes with a one-unit increase in the independent variable, controlling for other variables in the model. Additionally, we will use the findings from the qualitative analysis of the semi-structured interview to assist in interpreting the implications of the findings. The data will be weighted as appropriate given the sampling plan and nonresponse rates. Chi-square tests will be used to test if differences in SFA characteristics and policies are related to the error rates.
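To make the interpretation of a regression coefficient concrete, the sketch below fits a weighted bivariate regression of an error rate on a single SFA characteristic using only the Python standard library. It is illustrative only: the data and weights are hypothetical, the function name is our own, and the multivariate models described above would require a statistical package that also accounts for the survey design.

```python
def weighted_ols(x, y, w):
    """Weighted least-squares fit of y = intercept + slope * x."""
    total_w = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, x)) / total_w
    y_bar = sum(wi * yi for wi, yi in zip(w, y)) / total_w
    s_xy = sum(wi * (xi - x_bar) * (yi - y_bar) for wi, xi, yi in zip(w, x, y))
    s_xx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, x))
    slope = s_xy / s_xx
    intercept = y_bar - slope * x_bar
    return intercept, slope

# Hypothetical SFA-level data: x is a characteristic, y an error rate (percent).
x = [1, 2, 3, 4]
y = [2.0, 4.0, 6.0, 8.0]
w = [1, 2, 1, 2]   # hypothetical analysis weights
intercept, slope = weighted_ols(x, y, w)
print(round(intercept, 6), round(slope, 6))
```

Here the slope is the estimated change in the error rate associated with a one-unit increase in the characteristic, which is the quantity the paragraph above describes.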

Provide qualitative analyses examining the reasons for erroneous payments (Objective 4). Data collection efforts will provide qualitative data from in-depth interviews with households and SFA directors along with the in-person interviews with cafeteria managers regarding their perceptions of the possible causes of errors. Additionally, there will be data from a few open-ended questions from the household survey and SFA director survey. Analyses of the qualitative data will involve conducting a thorough examination of the data to detect patterns and relationships related to the reasons for erroneous payments. Qualitative analysts will conduct content analysis of open-ended responses using thematic analysis guided by principles of iterative emergent coding (Silverman, 1993).31 This approach involves comparing concepts or categories and finding similarities and differences between them (Glaser, 1992),32 identifying themes that recur across households, schools, and SFAs.

Estimate Future Errors and State Level Estimates. Models will be developed for estimating future error rates at the national and State level. First, we will use econometric forecasting models similar to those used in the previous APEC studies to estimate future error rates and produce State-level estimates. In addition, we will employ a Bayesian estimation approach with Markov Chain Monte Carlo (MCMC) methods to model the error rates. Unlike the econometric model, the Bayesian MCMC model allows the way variables affect errors to change over time.
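For readers unfamiliar with MCMC, the following minimal sketch shows the general idea using a random-walk Metropolis sampler for a single error rate. It is not the model planned for APEC III: it assumes a binomial likelihood with a uniform prior, uses hypothetical counts, and does not include time-varying effects or State-level structure.

```python
import math
import random

def log_posterior(p, errors, n):
    """Log posterior (up to a constant) for an error rate p under a uniform prior."""
    if not 0.0 < p < 1.0:
        return -math.inf
    return errors * math.log(p) + (n - errors) * math.log(1.0 - p)

def metropolis(errors, n, draws=20000, burn_in=2000, step=0.05, seed=0):
    """Random-walk Metropolis sampler; returns post-burn-in draws of p."""
    rng = random.Random(seed)
    p = 0.5
    lp = log_posterior(p, errors, n)
    samples = []
    for i in range(draws + burn_in):
        candidate = p + rng.gauss(0.0, step)
        lc = log_posterior(candidate, errors, n)
        # Accept with probability min(1, posterior ratio).
        if rng.random() < math.exp(min(0.0, lc - lp)):
            p, lp = candidate, lc
        if i >= burn_in:
            samples.append(p)
    return samples

# Hypothetical data: 30 erroneous cases observed among 200 sampled cases.
samples = metropolis(errors=30, n=200)
posterior_mean = sum(samples) / len(samples)
print(round(posterior_mean, 2))
```

With a uniform prior the exact posterior is Beta(31, 171), whose mean is 31/202 (about 0.153), so the sampled mean should land close to that value.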

17. Displaying the OMB Approval Expiration Date

If seeking approval to not display the expiration date for OMB approval of the information collection, explain the reasons that display would be inappropriate.

The agency plans to display the expiration date for OMB approval of the information collection on all instruments.

18. Exceptions to the Certification Statement Identified in Item 19

Explain each exception to the certification statement identified in Item 19 of the OMB 83-I Certification for Paperwork Reduction Act.

There are no exceptions to the certification statement. The agency is able to certify compliance with all provisions under Item 19 of OMB Form 83-I.

1 Food and Nutrition Service, Measuring and Reducing Errors in the School Meal Programs (Summary) May 2015, http://www.fns.usda.gov/sites/default/files/ops/APECII-Summary.pdf.

2 U.S. Department of Agriculture, Food and Nutrition Service, Office of Policy Support, Program Error in the National School Lunch Program and School Breakfast Program: Findings from the Second Access, Participation, Eligibility and Certification Study (APEC II) Volume 1: Findings by Quinn Moore, Judith Cannon, Dallas Dotter, Esa Eslami, John Hall, Joanne Lee, Alicia Leonard, Nora Paxton, Michael Ponza, Emily Weaver, Eric Zeidman, Mustafa Karakus, Roline Milfort. Project Officer Joseph F. Robare. Alexandria, VA: May 2015.

3 U.S. Department of Agriculture, Food and Nutrition Service, Office of Policy Support, Program Error in the National School Lunch Program and School Breakfast Program: Findings from the Second Access, Participation, Eligibility and Certification Study (APEC II) Volume 1: Findings by Quinn Moore, Judith Cannon, Dallas Dotter, Esa Eslami, John Hall, Joanne Lee, Alicia Leonard, Nora Paxton, Michael Ponza, Emily Weaver, Eric Zeidman, Mustafa Karakus, Roline Milfort. Project Officer Joseph F. Robare. Alexandria, VA: May 2015.

4 U.S. Department of Agriculture, Food and Nutrition Service, Office of Analysis, Nutrition, and Evaluation. Access, Participation, Eligibility and Certification Study (APEC): Erroneous Payments in the NSLP and SBP. Volume 1: Findings by Michael Ponza, Philip Gleason, Lara Hulsey, Quinn Moore. Project Officer John Endahl. Alexandria, VA: October 2007.

5 U.S. Department of Agriculture, Food and Nutrition Service, Office of Policy Support, Program Error in the National School Lunch Program and School Breakfast Program: Findings from the Second Access, Participation, Eligibility and Certification Study (APEC II) Volume 1: Findings by Quinn Moore, Judith Cannon, Dallas Dotter, Esa Eslami, John Hall, Joanne Lee, Alicia Leonard, Nora Paxton, Michael Ponza, Emily Weaver, Eric Zeidman, Mustafa Karakus, Roline Milfort. Project Officer Joseph F. Robare. Alexandria, VA: May 2015.

6 Direct certification is a process in which States and/or local education agencies (LEAs) directly certify students for free meals without an application through an automatic process (i.e., computer/electronic match) using records of program participation (SNAP, TANF, etc.). Categorically eligible refers to students who are eligible for free meals because of their participation in assistance programs (e.g., SNAP, TANF) or who are migrant, runaway, homeless, etc. Directly certified students are categorically eligible (unless certified from WIC Medicaid), whereas categorically eligible students are not necessarily directly certified.

7 This is the sum of 275 SFA Meal Participation Data Requests plus 44 State Meal participation Data Requests.

8 This is the sum of 53,698 responses from respondents plus 5,318 responses from nonrespondents.

9 The total responses submitted electronically equals 5,412 (4,818 household survey + 275 SFA director survey + 319 State/SFA electronic records).

10 This includes requests for 6,424 applications for abstraction from 275 SFA staff, requests for 625 school meal counts from 625 school staff, and requests for 275 SFA meal claims from 275 SFA staff. This totals 1,175 requests and 7,324 records.

11 These additional 7,480 records are not included in the estimated 9.2 percent of responses submitted electronically.

12 Accessed May 4, 2016, at: http://www.whitehouse.gov/sites/default/files/omb/memoranda/2015/m-15-02.pdf.

13 Incentives will only be provided to individuals and not to State, SFA, or school staff for their participation.

14 Singer, E. (2002). The use of incentives to reduce non response in household surveys. In Groves, R., Dillman, D., Eltinge, J., Little, R. (Eds.). Survey Non Response. New York: Wiley, pp 163-177.

15 Groves, R., Fowler, F., Couper, M., Lepkowski, J., Singer, E., and Tourangeau, R. (2009). Survey Methodology. John Wiley & Sons, pp 205-206.

16 Singer, E., and Ye, C. (2013). The use and effects of incentives in surveys. Annals of the American Academy of Political and Social Science, 645(1):112-141.

17 Singer, E. (2006). Introduction: Nonresponse Bias in Household Surveys. Public Opinion Quarterly, 70(5): 637-645.

18 Dose-response analysis refers to the measurement of the relationship between the dose (in this case dollar amount of incentive) and the response (in this case response rates). Such analysis provides data for the expected improvement in response rate per additional dollar of incentive.

19 Mercer, A., Caporaso, A., Cantor, D., and Townsend, R. (Spring 2015). How much gets you how much? Monetary incentives and response rates in household surveys. Public Opinion Quarterly, 79(1): 102-129.

20 Approval # 0584–0530 NSLP/SBP Access, Participation, Eligibility, and Certification Study, Discontinued 08/31/2015.

24 This amount reflects the total Westat contract value plus FNS oversight over the 5-year period ($11,728,218 + $92,705.60).

25 The average annual cost is over a 5-year period.

26 This amount is the Westat total contract value.

27 This average annual cost is over a 5-year period.

28 Office of Personnel Management, General Schedule, accessed January 24, 2017, at: https://www.opm.gov/policy-data-oversight/pay-leave/salaries-wages/salary-tables/pdf/2017/DCB_h.pdf.

29 Approval # 0584–0530 NSLP/SBP Access, Participation, Eligibility, and Certification Study, Discontinued 08/31/2015.

30 This includes qualitative interviews with a sub-sample of SFA directors, cafeteria managers, and household respondents.

31 Silverman, D. (1993). Beginning Research. Interpreting Qualitative Data. Methods for Analysing Talk, Text and Interaction. London: Sage Publications.

32 Glaser, B.G. (1992). Basics of Grounded Theory Analysis: Emergence vs. Forcing. Mill Valley, CA: Sociology Press.

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| Author | Wallace-Williams, Devin - FNS |

| File Modified | 0000-00-00 |

| File Created | 2021-01-22 |
