2018EndtoEndCensusTest_Response Rates_Study Plan


2018 End-to-End Census Test – Peak Operations


OMB: 0607-0999


Study Plan for the 2018 End-to-End Census Test Response Rates Assessment

Draft Pending Final Census Bureau Executive Review and Clearance.


Version 0.2


Table of Contents

IV. Questions To Be Answered

IX. Division Responsibilities

XI. Issues That Need to be Resolved

XIII. Document Revision and Version Control History

Appendix A: Demographics of Respondents and Non-respondents

Appendix B: Response Rates by Panel and Cohort

Appendix C: Internet Response Rates by Device, Panel, and Cohort

One of the goals of the 2020 Census is to generate the largest possible self-response rate, which will reduce the need to conduct expensive, in-person follow-up with nonresponding households. Contact strategies will encourage the use of the internet as the primary response mode through a sequence of invitations and postcard mailings. Households will be able to respond using multiple modes (i.e., internet, paper, or telephone, if they call the Census Questionnaire Assistance [CQA] Center). Furthermore, respondents will be able to submit a questionnaire without a unique identification code.

The 2018 End-to-End Census Test is an important opportunity for the Census Bureau to ensure an accurate count of the nation’s increasingly diverse and rapidly growing population. It is the first opportunity to apply much of what has been learned from census tests conducted throughout the decade in preparation for the nation’s once-a-decade population and housing census. The 2018 End-to-End Census Test will be held in three locations, covering more than 700,000 housing units: Pierce County, Washington; Providence, Rhode Island; and the Bluefield-Beckley-Oak Hill, West Virginia area.

The 2018 End-to-End Census Test will be a dress rehearsal for most of the 2020 Census operations, procedures, systems, and field infrastructure to ensure there is proper integration and conformance with functional and non-functional requirements. The test also will produce prototypes of geographic and data products. Note that 2018 End-to-End Census Test results are based on three sites that were purposely selected and cannot be generalized to the entire United States.

This study plan documents how the response rates from the 2018 End-to-End Census Test will be assessed, as guided by questions to be answered.

The following section summarizes the contact strategies tested throughout this decade and the response rates associated with them. Data are presented for the 2012 National Census Test, the 2014 Census Test, the 2015 National Content Test, the 2016 Census Test, and the 2017 Census Test. The section concludes with the contact strategy plans for the 2018 End-to-End Census Test.

2012 National Census Test

The 2012 National Census Test (NCT) played an early role in 2020 Census planning. The sample was selected from housing units in mailout/mailback areas in the 50 states and the District of Columbia. This sample of 80,000 housing units was randomly assigned to one of six contact and notification strategy panels to determine which “Internet Push” methodology best encouraged respondents to complete the test census online (Bentley and Meier, 2012). These six panels are outlined in Table 1.

The 2012 NCT results showed that self-response rates varied across panels and modes (see Table 2). Panel 6 had the highest overall self-response rate, while Panel 3 had the highest internet response rate.

Table 1: 2012 NCT Contact Strategy Panels

| Panel | Thursday, August 23 | Thursday, August 30 | Tuesday, September 4 | Friday, September 14* | Friday, September 21* |
|---|---|---|---|---|---|
| 1) Advance Letter (n=13,334) | Advance letter | Letter + Internet instructions | Reminder postcard | | Mail questionnaire (w/choice) |
| 2) Absence of Advance Letter (n=13,334) | | Letter + Internet instructions | Reminder postcard | | Mail questionnaire (w/choice) |
| 3) 2nd Reminder prior to questionnaire (n=13,333) | | Letter + Internet instructions | Reminder postcard | 2nd Reminder Postcard (blue) | Mail questionnaire (w/choice) |
| 4) Accelerated Q followed by 2nd reminder (n=13,333) | | Letter + Internet instructions | Reminder postcard | Accelerated Mail questionnaire (w/choice) | 2nd Reminder Postcard (blue) |
| 5) Telephone number at initial contact, accelerated Q and 2nd reminder (n=13,333) | | Letter + Internet instructions with telephone number | Reminder postcard | Accelerated Mail questionnaire (w/choice) | 2nd Reminder Postcard (blue) |
| 6) Accelerated Q, content tailored to nonrespondents, and 2nd Reminder (n=13,333) | | Letter + Internet instructions | Reminder postcard | Accelerated Mail questionnaire (w/choice) with content tailored to nonrespondents | 2nd Reminder Postcard (blue) with content tailored to nonrespondents |

Source: 2012 National Census Test. *These mailings were targeted to nonrespondents.

Table 2: 2012 National Census Test Weighted Response Rates by Panel and Response Mode

| Panel | Total | Internet | Mail | TQA |
|---|---|---|---|---|
| 1. Advance letter | 60.3 (0.66) | 38.1 (0.68) | 17.2 (0.53) | 5.1 (0.33) |
| 2. Absence of advance letter | 58.0 (0.62) | 37.2 (0.62) | 16.5 (0.48) | 4.3 (0.25) |
| 3. 2nd reminder prior to questionnaire | 64.8 (0.65) | 42.3 (0.70) | 13.6 (0.46) | 8.9 (0.40) |
| 4. Accelerated questionnaire followed by 2nd reminder | 63.7 (0.60) | 38.1 (0.61) | 20.3 (0.51) | 5.3 (0.28) |
| 5. Telephone number at initial contact, accelerated questionnaire, and 2nd reminder | 64.5 (0.65) | 37.4 (0.64) | 17.6 (0.49) | 9.4 (0.40) |
| 6. Accelerated questionnaire, content tailored to nonrespondents, and 2nd reminder | 65.0 (0.63) | 37.6 (0.64) | 22.2 (0.59) | 5.2 (0.32) |

Source: 2012 National Census Test data. Note: Estimates are weighted, with standard errors in parentheses.

2014 Census Test

The 2014 Census Test was a site test conducted in selected census blocks in the District of Columbia and Montgomery County, Maryland. It examined eight contact strategies for optimizing self-response, including preregistration, internet response without an ID, and email invitations in lieu of mail. The goal was to determine which panel best encouraged internet response. Table 3 summarizes the experimental panel design.

Table 3: 2014 Census Test Contact Strategy Panels |

||||||

Panel |

Prenotice |

#1 (June 23) |

#2 (July 1) |

#3* (July 8) |

#4* (July 15) |

#5* (July 22) |

1) Notify Me (Preregistration) |

Postcard (June 5) |

Email/Text |

Email/Text |

Email/Text |

Mail Questionnaire |

|

2) Internet Push Without ID |

|

Letter (no ID) |

Postcard (no ID) |

Postcard (no ID) |

Mail Questionnaire |

|

3) Internet Push (Control) |

|

Letter |

Postcard |

Postcard |

Mail Questionnaire |

|

4) Internet Push with Email as 1st Reminder |

|

Letter |

Postcard |

Mail Questionnaire |

|

|

5) Internet Push with AVI as 3rd Reminder |

|

Letter |

Postcard |

Postcard |

Mail Questionnaire |

AVI |

6) Cold Contact Email Invite, and 1st Reminder |

|

Postcard |

Mail Questionnaire |

|

||

7) Letter Prenotice, Email Invite, and 1st Reminder |

Letter (June 17) |

Postcard |

Mail Questionnaire |

|

||

8) AVI Prenotice, Email Invite, and 1st Reminder |

AVI (June 17) |

Postcard |

Mail Questionnaire |

|

||

Source: 2014 Census Test core response data and sample file * Targeted only to nonrespondents |

||||||

AVI = Automated Voice Invitations |

||||||

Note: Households in Panel 1 that do not preregister by 6/18 will receive the M1 panel materials. |

||||||

Response rates were the primary analytical measure used to evaluate the contact strategy panels. Panel 5 produced the highest overall self-response rate and the highest internet response rate. Table 4 shows the response rates, overall and by mode, for each panel.

Table 4: 2014 Census Test Weighted Response Rates by Panel and Response Mode

| Panel | Total | Internet | TQA | Mail |
|---|---|---|---|---|
| 1) Notify Me (Preregistration) | 60.0 (0.49) | 44.2 (0.50) | 5.9 (0.24) | 10.0 (0.30) |
| 2) Internet Push Without ID | 58.9 (0.49) | 40.6 (0.49) | 6.7 (0.25) | 11.5 (0.32) |
| 3) Internet Push (Control) | 61.4 (0.49) | 46.3 (0.50) | 6.5 (0.25) | 8.6 (0.28) |
| 4) Internet Push with Email as 1st Reminder | 62.0 (0.49) | 44.6 (0.50) | 5.4 (0.23) | 12.1 (0.33) |
| 5) Internet Push with AVI as 3rd Reminder | 64.1 (0.48) | 46.9 (0.50) | 7.3 (0.26) | 9.9 (0.30) |
| 6) Cold Contact Email Invite, and 1st Reminder | 53.6 (0.50) | 28.6 (0.45) | 2.9 (0.17) | 22.1 (0.42) |
| 7) Letter Prenotice, Email Invite, and 1st Reminder | 54.9 (0.50) | 31.2 (0.46) | 2.7 (0.16) | 21.0 (0.41) |
| 8) AVI Prenotice, Email Invite, and 1st Reminder | 54.1 (0.50) | 28.5 (0.45) | 2.6 (0.16) | 22.9 (0.42) |

Source: 2014 Census Test core response data and sample file. Note: Includes all sample housing units, regardless of whether the unit was matched to a landline phone number or email address on the supplemental contact frame. Estimates are weighted, with standard errors in parentheses.

2015 National Content Test

The 2015 National Content Test (NCT) was conducted with a nationwide sample of 1.2 million housing units, including Puerto Rico. The 2015 NCT focused on content testing, testing different contact strategies aimed at optimizing self-response (OSR), and testing different approaches for offering language support in mail materials.

The OSR research conducted in the 2015 NCT compared nine contact strategies that differed in the timing and content of contacts. It also compared three language panels with different designs for bilingual mail materials. For the OSR sample, each housing unit's probability of selection depended on two tract-level measures: the number of residential fixed high-speed Internet connections reported by the Federal Communications Commission (FCC), and the Low Response Score (LRS) from the Planning Database (PDB) (Mathews, 2015). Table 5 summarizes the contact strategy panel design, and Table 6 summarizes the three language panels.
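Table 7 reports weighted estimates for this test. When selection probabilities vary across strata, weighting keeps the more heavily sampled strata from being over-represented in the result. The Python sketch below illustrates the idea under a simplifying assumption: each sampled housing unit's base weight is the inverse of its selection probability, and no nonresponse or post-stratification adjustment is applied. The class and function names are hypothetical, and the weighting actually used for the 2015 NCT may differ.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SampledUnit:
    selection_prob: float  # probability that this housing unit was selected
    responded: bool        # True if a sufficient self-response was received


def weighted_response_rate(units: List[SampledUnit]) -> float:
    """Weighted self-response rate, in percent.

    Each unit's base weight is 1 / selection probability, so a unit from a
    lightly sampled stratum represents more housing units in the estimate.
    """
    total_weight = sum(1.0 / u.selection_prob for u in units)
    responding_weight = sum(1.0 / u.selection_prob for u in units if u.responded)
    return 100.0 * responding_weight / total_weight


# Illustrative data only: two strata sampled at different rates.
units = [
    SampledUnit(0.01, True), SampledUnit(0.01, False),  # stratum A, sampled 1 in 100
    SampledUnit(0.04, True), SampledUnit(0.04, True),   # stratum B, sampled 1 in 25
]
print(round(weighted_response_rate(units), 1))  # 60.0 (the unweighted rate is 75.0)
```

In this illustrative data each stratum contributes two sampled units, so the unweighted rate is 75 percent. The weighted rate is 60 percent because each stratum A unit represents four times as many housing units as each stratum B unit.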

Table 5: Contact Strategy Panel Design

| Panel | #1 (August 24) | #2 (August 31) | #3* (September 8) | #4* (September 15) | #5* (September 22) |
|---|---|---|---|---|---|
| 1. Internet Push (Control) | Letter | Postcard | Postcard | Mail Questionnaire | |
| 2. Internet Push with Early Postcard | Letter | Postcard (August 28) | Postcard | Mail Questionnaire | |
| 3. Internet Push with Early Questionnaire | Letter | Postcard | Mail Questionnaire | Postcard | |
| 4. Internet Push with Even Earlier Questionnaire | Letter | Mail Questionnaire | Postcard | Postcard | |
| 5. Internet Choice | Mail Questionnaire | Postcard | Postcard | Mail Questionnaire | |
| 6. Internet Push with Postcard as 3rd Reminder | Letter | Postcard | Postcard | Mail Questionnaire | Postcard |
| 7. Internet Push Postcard | Postcard | Postcard | Letter | Mail Questionnaire | |
| 8. Internet Push with Early Postcard and 2nd Letter Instead of Mail Questionnaire | Letter | Postcard (August 28) | Postcard | Letter | |
| 9. Internet Push with Postcard and Email as 1st Reminder (Same Time) | Letter | Postcard and Email (August 28) | Postcard | Mail Questionnaire | |

Source: 2015 National Content Test. *Targeted only to nonrespondents.

Table 6: Language Panel Design

| Panel | Treatment |
|---|---|
| 1 | Control: English materials with Spanish sentence (similar to 2014) directing to website/telephone number |
| 2 | Dual-sided letter: Cover letter redesigned with English on front and Spanish on back, with languages (English and Spanish) on outside of envelope |
| 3 | Swim-lane letter: Cover letter redesigned to include both English and Spanish content on the same side, with languages (English and Spanish) on outside of envelope |

Source: 2015 National Content Test

Table 7 shows the self-response rates, overall and by mode, for each of the contact strategy panels in the stateside portion of the NCT sample. Panel 6 had a significantly higher overall response rate than all other panels. Panel 8, which did not offer a mail response option, had a significantly higher internet response rate than the other panels, but its overall response rate was significantly lower than that of every other Internet Push variation except Panel 4.

Table 7: 2015 National Content Test Response Rates by Panel and Response Mode for Stateside Sample

| Panel | Total | Internet | TQA | Mail |
|---|---|---|---|---|
| 1. Internet Push (Control) | 53.6 (0.18) | 37.5 (0.19) | 6.5 (0.09) | 9.5 (0.11) |
| 2. Internet Push with Early Postcard | 53.4 (0.18) | 37.1 (0.16) | 6.5 (0.09) | 9.8 (0.11) |
| 3. Internet Push with Early Questionnaire | 53.1 (0.17) | 33.7 (0.17) | 5.1 (0.08) | 14.3 (0.12) |
| 4. Internet Push with Even Earlier Questionnaire (Low OSR Stratum Only) | 37.5 (0.28) | 16.8 (0.23) | 3.2 (0.10) | 17.5 (0.23) |
| 5. Internet Choice (Low OSR Stratum Only) | 42.6 (0.29) | 10.8 (0.17) | 2.1 (0.09) | 29.8 (0.28) |
| 6. Internet Push with Postcard as 3rd Reminder | 55.2 (0.18) | 38.1 (0.18) | 6.8 (0.09) | 10.4 (0.10) |
| 7. Internet Push Postcard | 52.1 (0.18) | 36.1 (0.17) | 6.1 (0.09) | 9.9 (0.11) |
| 8. Internet Push with Early Postcard and 2nd Letter instead of Mail Questionnaire | 48.5 (0.17) | 41.0 (0.18) | 7.4 (0.10) | N/A |
| 9. Internet Push with Postcard and Email as 1st Reminder (Same Time) | 53.9 (0.19) | 37.8 (0.18) | 6.3 (0.09) | 9.8 (0.10) |

Source: 2015 National Content Test data. Estimates are weighted, with standard errors in parentheses.
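The significance statements for Table 7 compare differences between panels with their standard errors. As a rough check only, the sketch below computes a two-sample z statistic for Panel 6 versus Panel 1 from the published estimates. It assumes the two panel estimates are independent and makes no multiple-comparison adjustment. The function name is hypothetical, and the test used in the original analysis may differ.

```python
import math


def z_statistic(est1: float, se1: float, est2: float, se2: float) -> float:
    """z statistic for the difference between two independent estimates."""
    return (est1 - est2) / math.sqrt(se1 ** 2 + se2 ** 2)


# Overall self-response rates and standard errors taken from Table 7.
panel_6 = (55.2, 0.18)  # Internet Push with Postcard as 3rd Reminder
panel_1 = (53.6, 0.18)  # Internet Push (Control)

z = z_statistic(*panel_6, *panel_1)
print(f"z = {z:.2f}")
```

The statistic is roughly 6.3. That is well beyond the conventional two-sided 5 percent critical value of 1.96, which is consistent with the reported significant difference.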

2016 Census Test

The 2016 Census Test was a site test, so all sampled housing units were geographically clustered in Harris County, Texas, and Los Angeles County, California. One objective of the 2016 Census Test was to experiment with methods of maximizing self-response. Each strategy was designed to increase the number of households that responded online and to gain the cooperation of respondents who speak languages other than English. Four of the five panels used the Internet Push approach, in which sampled housing units did not receive a paper questionnaire in the first mailing. The fifth panel used the Internet Choice approach, in which the first mailing included both a paper questionnaire and an explanation of the option to respond online. The full contact strategy panel design is summarized in Table 8.

All housing units in the two selected sites were assigned to a language stratum and to a response stratum. The language stratum was assigned using a special tabulation of 2010-2014 American Community Survey (ACS) five-year data. The three response strata represented an estimated low, medium, or high likelihood of responding. This likelihood was calculated by combining FCC data with 2010 Census mail return rates. More details about how the sample for the 2016 Census Test was selected can be found in the sample design specification (Konya, 2016).

Table 8: Contact Strategy Panel Design

| Panel | #1 (March 21) | #2 (March 24) | #3* (April 4) | #4* (April 11) | #5** (May 12) |
|---|---|---|---|---|---|
| 1. Internet Push | Letter | Postcard | Postcard | Mail Questionnaire and Letter | Postcard (to AdRec addresses) |
| 2. Internet Push with Reminder Letter | Letter | Letter | Postcard | Mail Questionnaire and Letter | |
| 3. Internet Push with Multilingual Brochure | Brochure | Postcard | Postcard | Mail Questionnaire and Brochure | |
| 4. Internet Push with Language Insert | Letter and Insert | Postcard | Postcard | Mail Questionnaire and Letter/Insert | |
| 5. Internet Choice | Mail Questionnaire and Letter | Postcard | Postcard | Mail Questionnaire and Letter | |

Source: U.S. Census Bureau, 2016 Census Test. *Targeted only to nonrespondents. **Sent to addresses identified by administrative records (AdRec).

Note: Materials were bilingual (English/Spanish, English/Korean, or English/Chinese) based on ACS estimates for each census tract, including estimates from the “Ability to Speak English” question.

Table 9 shows the combined response rates for both sites. Overall, the 2016 Census Test had a response rate of 45.9 percent and an internet response rate of 30.3 percent. The Internet Push with language insert panel had both the highest overall response rate (47.8 percent) and the highest internet response rate (34.7 percent). The Internet Push panel had the lowest overall response rate, at 43.8 percent.

Table 9: 2016 Census Test Response Rates by Panel and Response Mode

| Panel | Total | Internet | CQA | Mail |
|---|---|---|---|---|
| Total | 45.9 | 30.3 | 2.3 | 13.4 |
| 1. Internet Push | 43.8 | 31.9 | 2.4 | 9.5 |
| 2. Internet Push with reminder letter | 44.6 | 32.8 | 2.4 | 9.4 |
| 3. Internet Push with multilingual brochure | 46.6 | 32.5 | 2.6 | 11.5 |
| 4. Internet Push with language insert | 47.8 | 34.7 | 2.6 | 10.5 |
| 5. Internet Choice | 45.8 | 16.9 | 1.0 | 27.9 |

Source: U.S. Census Bureau, 2016 Census Test

2017 Census Test

The 2017 Census Test sampled 80,000 housing units across the nation with a Census Day of April 1, 2017. One objective of the 2017 Census Test was to measure consistency and accuracy of responses to questions asking about household members’ enrollment in American Indian or Alaska Native entities. A household was sampled into one of four panels and received up to five mail packages inviting response to the 2017 Census Test. Table 10 summarizes the mailout strategy for the 2017 Census Test.

Table 10: 2017 Census Test National Sample Contact Strategy

| Panel | Mailing 1 (Monday, March 20) | Mailing 2 (Thursday, March 23) | Mailing 3* (Monday, April 3) | Mailing 4* (Monday, April 10) | Mailing 5* (Monday, April 20) |
|---|---|---|---|---|---|
| Internet First English Only | Letter + CQA Insert | Letter | Postcard | Letter + CQA Insert + Questionnaire | Postcard |
| Internet First Bilingual | Letter + CQA Insert + FAQ Insert | Letter | Postcard | Letter + CQA Insert + FAQ Insert + Questionnaire | Postcard |
| Internet Choice English Only | Letter + CQA Insert + Questionnaire | Letter | Postcard | Letter + CQA Insert + Questionnaire | Postcard |
| Internet Choice Bilingual | Letter + CQA Insert + FAQ Insert + Questionnaire | Letter | Postcard | Letter + CQA Insert + FAQ Insert + Questionnaire | Postcard |

Source: 2017 National Content Test data. *Sent only to nonresponding households.

Table 11 shows the weighted response rates by panel and mode. Overall, the 2017 Census Test had a response rate of 50.3 percent and an internet response rate of 31.7 percent. The Internet First panel had a higher overall response rate than the Internet Choice panel (53.2 versus 38.5 percent), with most of its responses coming from the internet. Most of the responses from the Internet Choice panel came from paper questionnaires.

Table 11: 2017 Census Test Weighted Response Rates by Panel and Response Mode

| Panel | Total | Internet | CQA | Mail |
|---|---|---|---|---|
| Total | 50.3 (0.28) | 31.7 (0.27) | 2.4 (0.09) | 16.2 (0.20) |
| 1. Internet First | 53.2 (0.34) | 37.4 (0.33) | 2.8 (0.11) | 13.0 (0.24) |
| 2. Internet Choice | 38.5 (0.36) | 9.0 (0.21) | 0.6 (0.06) | 28.9 (0.34) |

Source: 2017 National Content Test data. Estimates are weighted, with standard errors in parentheses.

2018 End-to-End Census Test

The 2018 End-to-End Census Test will be held in three locations, covering more than 700,000 housing units: Pierce County, Washington; Providence, Rhode Island; and the Bluefield-Beckley-Oak Hill, West Virginia area. Although they are not part of a designed experiment, the test will include two mail panels, three different “mail cohorts” that will be mailed the Internet Push mailings at different times, as well as bilingual and monolingual mail panels. See Table 12 for a description of mail panels and mail cohorts.

Table 12: 2018 End-to-End Census Test Self-Enumeration Panel Descriptions

| Panel | Cohort | Mailing 1: Letter | Mailing 2: Letter | Mailing 3*: Postcard | Mailing 4*: Letter + Questionnaire | Mailing 5*: “It’s not too late” Postcard |
|---|---|---|---|---|---|---|
| Internet First | 1 | March 16 | March 20 | March 30 | April 12 | April 23 |
| | 2 | March 20 | March 23 | April 3 | April 16 | April 26 |
| | 3 | March 23 | March 27 | April 6 | April 19 | April 30 |
| Internet Choice | N/A | March 16 (Letter + Questionnaire) | March 20 | March 30 | April 12 | April 23 |

Source: U.S. Census Bureau, 2018 End-to-End Census Test. *Targeted only to nonrespondents. Cohorts and dates are tentative and subject to change. All dates are in 2018.
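Research Question #2 asks what impact each mailing had on daily response rates. Answering it requires knowing which mailing a cohort had most recently been sent on a given day. The sketch below encodes the tentative Internet First dates from Table 12 and returns the most recent mailing and the number of days since it was mailed. The names are hypothetical, the dates are subject to change, and the offset is measured from the mail date rather than the date the mailing arrived in the home.

```python
from datetime import date
from typing import Dict, List, Optional, Tuple

# Tentative Internet First mailing dates from Table 12 (all in 2018, subject to change).
MAILING_NAMES = [
    "Letter",
    "Letter",
    "Postcard",
    "Letter + Questionnaire",
    "\"It's not too late\" Postcard",
]
COHORT_MAIL_DATES: Dict[int, List[date]] = {
    1: [date(2018, 3, 16), date(2018, 3, 20), date(2018, 3, 30), date(2018, 4, 12), date(2018, 4, 23)],
    2: [date(2018, 3, 20), date(2018, 3, 23), date(2018, 4, 3), date(2018, 4, 16), date(2018, 4, 26)],
    3: [date(2018, 3, 23), date(2018, 3, 27), date(2018, 4, 6), date(2018, 4, 19), date(2018, 4, 30)],
}


def latest_mailing(cohort: int, day: date) -> Optional[Tuple[int, int]]:
    """Return (mailing number, days since it was mailed) for a cohort on a given day.

    Returns None if the cohort's first mailing had not yet gone out on that day.
    Dates are assumed to be in ascending order within each cohort.
    """
    latest = None
    for number, mailed in enumerate(COHORT_MAIL_DATES[cohort], start=1):
        if mailed <= day:
            latest = (number, (day - mailed).days)
    return latest


# Example: cohort 2 on April 5, 2018.
result = latest_mailing(2, date(2018, 4, 5))
if result is not None:
    number, days = result
    print(f"Mailing {number} ({MAILING_NAMES[number - 1]}), mailed {days} days earlier")
```

The Internet Choice dates in Table 12 match Cohort 1, so the same lookup would apply to Internet Choice households.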

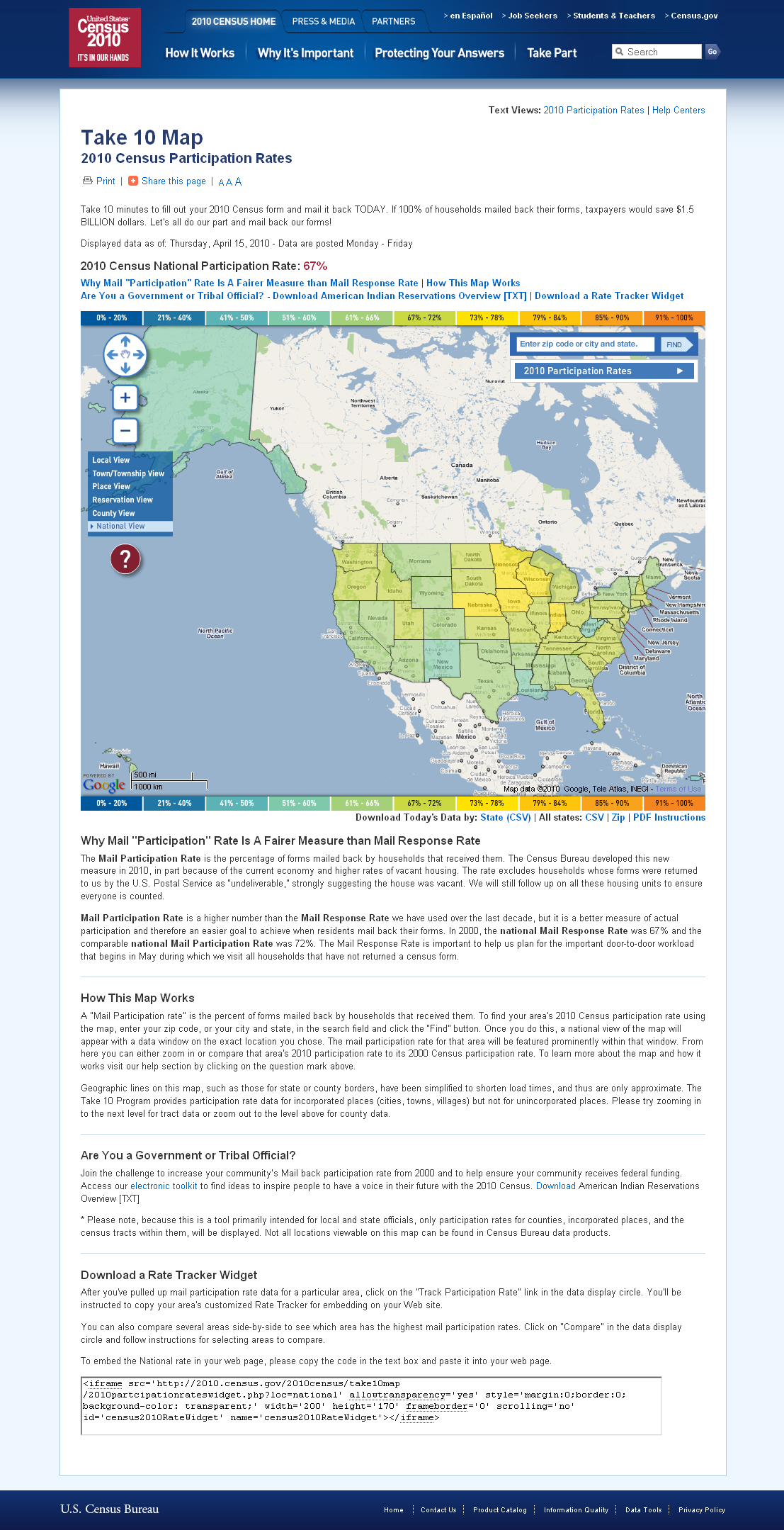

Response Rate Mapping Application

In 2010, the Census Bureau used the Take 10 Map to communicate to the public daily decennial self-response rates at different geographic levels. The 2010 Census Take 10 Map was used by the public to promote census participation, for example, by fostering competition between communities. Figure 1 displays a screenshot of this map.

While a similar application will not be available for the 2018 End-to-End Census Test, the Census Bureau plans to build an online, interactive mapping tool for use during the 2020 Census. The planned application will track self-response rates by mode across geographies as well as provide underlying self-response rate data to the public via an application programming interface (API). An internal test after the 2018 End-to-End Census Test will be conducted to ensure all systems for displaying public response rates on a daily basis during the 2020 Census are working properly.

Figure 1. Screenshot of 2010 Census Take 10 Map

The assumptions for the Response Rates Assessment are:

The 2018 End-to-End Census Test will consist of three test sites, but only the Providence County, Rhode Island site will have a self-response option.

Self-response data collection will occur between March 16, 2018 and July 31, 2018.

Update Leave will be conducted between April 9, 2018 and May 4, 2018.

All mail returns and Undeliverable as Addressed (UAA) mailings will have a check-in date.

Field work for the Nonresponse Followup (NRFU) operation will be conducted between May 9, 2018 and July 24, 2018.

Questions To Be Answered

The questions to be answered in the Response Rates Assessment are:

Research Question #1: What were the response rates by mode and overall?

Research Question #2: What were the daily response rates by panel, cohort, mode and overall? What impact did each mailing have on the daily response rates?

Research Question #3: What were the response rates by mail panel, mail cohort, and language of mailing? How did response rates vary across self-response modes?

Research Question #4: What were the demographic and housing characteristics of respondents across mail panel, mail cohort, and language of mailing?

Research Question #5: What device types did Internet respondents use to complete the form?

Research Question #6: Is the API prepared to provide data for the response rate mapping application in the 2020 Census?
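Research Question #2 calls for daily response rates. One way to compute them is sketched below under stated assumptions. The sketch takes each responding housing unit's first sufficient check-in date and counts units by day. It then divides both the daily count and the running total by the sample size. The function name and input layout are hypothetical. Rates by panel, cohort, or mode would come from filtering the input before calling the function.

```python
from collections import Counter
from datetime import date, timedelta
from typing import Dict, List, Tuple


def daily_response_rates(
    first_checkin: Dict[str, date], sample_size: int, start: date, end: date
) -> List[Tuple[date, float, float]]:
    """Return (day, daily rate, cumulative rate) rows, in percent, for start..end.

    first_checkin maps each responding housing unit to the check-in date of its
    first sufficient response, so every unit is counted once.
    """
    per_day = Counter(first_checkin.values())
    rows = []
    cumulative = 0
    day = start
    while day <= end:
        cumulative += per_day[day]  # Counter returns 0 for days with no check-ins
        rows.append((day, 100.0 * per_day[day] / sample_size,
                     100.0 * cumulative / sample_size))
        day += timedelta(days=1)
    return rows


# Illustrative data only: 3 responding units out of a sample of 10.
checkins = {"HU1": date(2018, 3, 19), "HU2": date(2018, 3, 19), "HU3": date(2018, 3, 21)}
rows = daily_response_rates(checkins, 10, date(2018, 3, 19), date(2018, 3, 21))
for day, daily, cumulative in rows:
    print(day, f"{daily:.1f}", f"{cumulative:.1f}")
```

Check-ins dated before `start` are not counted. For that reason, `start` should be the first day of self-response data collection (March 16, 2018, under the assumptions above).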

The primary measure calculated for this study will be the self-response rate. The overall self-response rate is a measure of respondent cooperation. It reflects the share of sample housing units that respond by one of three modes: (1) responding online at the internet survey site, (2) providing information to a telephone interviewer through Census Questionnaire Assistance (CQA), or (3) completing and returning the mail questionnaire. By definition, the self-response rate is the number of responses received by any self-response mode divided by the number of sampled housing units.

$$\text{Overall self-response rate} = \frac{\text{Unduplicated sufficient responses (internet, CQA, or mail)}}{\text{Total sample size}} \times 100\%$$

Households providing more than one self-response are counted in the response rate calculation only once. A response is sufficient if at least two household-level items or at least two of the following person-level items are provided: name, age/date of birth, sex, race/Hispanic origin, or relationship.
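The sketch below shows one way to apply the sufficiency and unduplication rules before computing the overall self-response rate. It assumes that each response carries a housing unit identifier, a count of household-level items answered, and a list of person records. It also interprets the person-level test as requiring two items for the same person. The field names and that interpretation are hypothetical, not the production specification.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Person-level items named in the sufficiency rule (field names are hypothetical).
PERSON_ITEMS = ("name", "age_or_dob", "sex", "race_hispanic_origin", "relationship")


@dataclass
class Response:
    unit_id: str          # housing unit identifier (hypothetical field)
    mode: str             # "internet", "cqa", or "mail"
    household_items: int  # number of household-level items answered
    persons: List[Dict[str, str]] = field(default_factory=list)


def is_sufficient(response: Response) -> bool:
    """At least two household-level items, or two person-level items for one person."""
    if response.household_items >= 2:
        return True
    return any(
        sum(1 for item in PERSON_ITEMS if person.get(item)) >= 2
        for person in response.persons
    )


def overall_self_response_rate(responses: List[Response], sample_size: int) -> float:
    # A set keeps each housing unit once, however many sufficient responses it sent.
    responding_units = {r.unit_id for r in responses if is_sufficient(r)}
    return 100.0 * len(responding_units) / sample_size


responses = [
    Response("HU1", "internet", household_items=3),
    Response("HU1", "mail", household_items=2),  # second response from HU1
    Response("HU2", "cqa", household_items=0, persons=[{"name": "A", "sex": "F"}]),
    Response("HU3", "mail", household_items=1, persons=[{"name": "B"}]),  # insufficient
]
print(overall_self_response_rate(responses, sample_size=4))  # 50.0
```

HU1 counts once despite two responses, and HU3 does not count because its response is not sufficient. The rate is therefore 2 of 4 sampled units.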

The self-response rate by mode is similar to the overall self-response rate, but it focuses on each individual response method rather than combining them together.

$$\text{Internet response rate} = \frac{\text{Unduplicated sufficient internet responses}}{\text{Total sample size}} \times 100\%$$

$$\text{CQA response rate} = \frac{\text{Unduplicated sufficient CQA responses}}{\text{Total sample size}} \times 100\%$$

$$\text{Mail response rate} = \frac{\text{Unduplicated sufficient mail responses}}{\text{Total sample size}} \times 100\%$$
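The mode-specific rates differ from the overall rate only in how responses are grouped. The sketch below follows the formulas literally and counts each housing unit at most once within each mode. The function name and input layout are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple


def mode_response_rates(
    sufficient: List[Tuple[str, str]], sample_size: int
) -> Dict[str, float]:
    """Per-mode self-response rates, in percent, from (unit_id, mode) pairs.

    Each housing unit is counted at most once within a mode, but a unit that
    responded by two modes is counted in both.
    """
    units_by_mode: Dict[str, Set[str]] = defaultdict(set)
    for unit_id, mode in sufficient:
        units_by_mode[mode].add(unit_id)
    return {mode: 100.0 * len(units) / sample_size for mode, units in units_by_mode.items()}


# Illustrative sufficient responses from a sample of 10 housing units.
sufficient = [
    ("HU1", "internet"), ("HU1", "mail"),
    ("HU2", "internet"), ("HU2", "internet"),
    ("HU3", "cqa"),
]
print(mode_response_rates(sufficient, 10))
```

In this example the mode rates sum to 40 percent, while the overall rate is 30 percent, because HU1 responded by both internet and mail. In the historical response-rate tables (Tables 2, 4, 7, 9, and 11), the mode rates add up to the total within rounding. If this assessment follows that convention, it would need a rule for assigning each household to a single mode, and this sketch does not assume one.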

Examples of demographic, response rate, and device type tables appear in Appendix A, Appendix B, and Appendix C.

The limitations for the Response Rates Assessment are:

The response rates from the 2018 End-to-End test are not generalizable to the entire country or to the 2020 decennial census environment.

Timing of mailed responses may not accurately reflect respondent behavior on a daily basis.

The expected outcomes of the Response Rates Assessment are:

A higher percentage of respondents will use the internet rather than CQA or the paper questionnaire to respond.

Increases in the daily response rates will occur around the time the mailings arrive in potential respondents' homes.

Peak response rates will be spread evenly across mail cohorts.

Respondents who complete a questionnaire by internet, CQA, or paper questionnaire will not be enumerated in NRFU.

The following list describes data requirements that will be needed to answer the research questions in the Response Rates Assessment. These requirements are specific to this assessment and are in addition to any global data requirements.

Denote on the response data file the check-in date of all paper questionnaire returns received through the end of check-in processing.

Denote on the response data file the check-in date of all internet returns received through the end of check-in processing.

Denote on the response data file the check-in date of all CQA returns received through the end of check-in processing.

Denote on the response data file the check-in date of all UAAs received through the end of check-in processing.

Denote on the response data file the type of device used to complete an internet return.

Denote MAFID on the response data file of all forms completed with an ID.

Denote on the response data file all forms completed without an ID.

Denote the panel for each record on the response data file.

Denote the cohort for each record on the response data file.

Denote the type of enumeration area on the response data file for each housing unit.

Denote on the response data file records that were in the NRFU universe.

Denote the date on the response data file of all housing units enumerated in NRFU.

Denote the sex of each person on the response data file.

Denote the race and ethnicity for each person on the response data file.

Denote the tenure of the household for each person on the response data file.

Denote the age for each person on the response data file.

Denote on the response data file whether or not a household received a bilingual (English/Spanish) questionnaire during the mailout of questionnaires.
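The unit-level requirements above can be pictured as one record per housing unit. The sketch below is a hypothetical layout, not the actual response data file specification. Person-level items (sex, race and ethnicity, age) and household tenure would more naturally sit on a separate person-level structure linked by the same identifier, so they are omitted here for brevity. The sketch also tabulates device types for internet returns, which Research Question #5 requires.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date
from typing import Dict, List, Optional


@dataclass
class ResponseRecord:
    """One housing unit on the response data file (all field names are hypothetical)."""
    mafid: Optional[str]               # None for forms completed without an ID
    panel: str                         # "Internet First" or "Internet Choice"
    cohort: Optional[int]              # 1-3 for Internet First; None for Internet Choice
    enumeration_area: str              # type of enumeration area
    bilingual_mailing: bool            # received an English/Spanish questionnaire
    mode: Optional[str] = None         # "internet", "cqa", or "mail"; None if no response
    checkin_date: Optional[date] = None
    device_type: Optional[str] = None  # recorded for internet returns only
    uaa: bool = False                  # mail piece Undeliverable as Addressed
    in_nrfu_universe: bool = False
    nrfu_date: Optional[date] = None   # date enumerated in NRFU, if any


def device_shares(records: List[ResponseRecord]) -> Dict[str, float]:
    """Percent of internet returns completed on each device type."""
    counts = Counter(r.device_type or "unknown" for r in records if r.mode == "internet")
    total = sum(counts.values())
    if total == 0:
        return {}
    return {device: 100.0 * n / total for device, n in counts.items()}


records = [
    ResponseRecord("0001", "Internet First", 1, "self-response", False, "internet",
                   device_type="smartphone"),
    ResponseRecord("0002", "Internet First", 2, "self-response", True, "internet",
                   device_type="desktop"),
    ResponseRecord(None, "Internet First", 3, "self-response", False, "internet",
                   device_type="smartphone"),
    ResponseRecord("0004", "Internet Choice", None, "self-response", False, "mail"),
]
print(device_shares(records))
```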

The following divisions will contribute to the completion of the Response Rates Assessment:

Decennial Information Technology Division (DITD)

Acquire response data to compile analysis files.

Provide analysis file.

Decennial Statistical Studies Division (DSSD)

Determine panel and cohort for the mailing materials in the test sites.

Conduct data analysis.

Create Response Rates Assessment report.

Field Division (FLD)

Conduct Update Leave (UL) and Nonresponse Followup (NRFU) operations.

National Processing Center (NPC)

Conduct check-in processing of all mail returns and UAAs.

Provide housing unit level response data file with check-in results of all mail returns and UAAs for analysis.

Below are the standard schedule activities for the development of the research study plan and report. The durations appearing below are suggested durations. Authors may alter them as they deem necessary. Definitions of acronyms are noted in the glossary section.

Activity ID |

Activity Name |

Original Duration |

Start |

Finish |

Response Rates Assessment Study Plan |

||||

First Draft of Response Rates Assessment Study Plan |

|

|

|

|

|

Prepare First Draft of Response Rates Assessment Study Plan

|

15 |

06/12/17 |

06/27/17 |

|

Distribute

First Draft of Response Rates Assessment Study Plan to the

Assessment Sponsoring DCMD ADC and Other Reviewers |

1 |

06/28/17 |

07/05/17 |

|

Incorporate

DCMD ADC and Other Comments to Response Rates Assessment Study

Plan |

5 |

07/06/17 |

07/12/17 |

Initial Draft of Response Rates Assessment Study Plan |

|

|

|

|

|

Prepare

Initial Draft Response Rates Assessment Study Plan |

5 |

07/13/17 |

07/19/17 |

|

Distribute

Initial Draft Response Rates Assessment Study Plan to Evaluations

& Experiments Coordination Brach (EXC) |

1

|

07/20/17 |

07/20/17 |

|

EXC

Distributes Initial Draft Response Rates Assessment Study Plan to

the DROM Working Group for Electronic Review |

1 |

07/21/17 |

07/21/17 |

|

Receive

Comments from the DROM Working Group on the Initial Draft

Response Rates Assessment Study Plan |

5 |

08/10/17 |

08/10/17 |

|

Schedule the Response Rates Assessment Study Plan for the IPT Lead to Meet with the DROM Working Group

|

17 |

08/08/17 |

08/08/17 |

|

Discuss

DROM Comments on Initial Response Rates Assessment Study Plan |

1 |

08/10/17 |

08/23/17 |

Final Draft of Response Rates Assessment Study Plan |

|

|

|

|

|

Prepare

Final Draft of Response Rates Assessment Study Plan |

15 |

08/24/17 |

09/14/17 |

|

Distribute

Final Draft Response Rates Assessment Study Plan to the DPMO and

the EXC |

1 |

09/15/17 |

09/15/17 |

|

Schedule

and Discuss Final Draft Response Rates Assessment Study Plan with

the 2020 PMGB |

14 |

09/18/17 |

10/05/17 |

|

Incorporate

2020 PMGB Comments for Response Rates Assessment Study Plan |

5 |

10/06/17 |

10/12/17 |

|

Prepare

FINAL Response Rates Assessment Study Plan |

5 |

10/13/17 |

10/19/17 |

|

Distribute

FINAL Response Rates Assessment Study Plan to the EXC |

1 |

10/20/17 |

10/20/17 |

|

EXC Staff Distributes the Response Rates Assessment Study Plan and 2020 Memorandum to the DCCO

|

3 |

10/23/17 |

10/25/17 |

|

DCCO Staff Process the Draft 2020 Memorandum and the Response Rates Assessment Study Plan to Obtain Clearances (DCMD Chief, Assistant Director, and Associate Director)

|

30 |

10/26/17 |

12/08/17 |

|

DCCO

Staff Formally Release the Response Rates Assessment Study Plan

in the 2020 Memorandum Series |

1 |

12/09/17 |

12/09/17 |

Response Rates Assessment Report |

||||

First Draft of Response Rates Assessment Report |

||||

|

Receive, Verify, and Validate Response Rates Assessment Data

|

10 |

09/05/18 |

09/19/18 |

|

Examine Results and Conduct Analysis

|

10 |

09/20/18 |

10/04/18 |

|

Prepare First Draft of Response Rates Assessment Report

|

15 |

10/05/18 |

10/26/18 |

|

Distribute First Draft of Response Rates Assessment Report to the Assessment Sponsoring DCMD ADC and Other Reviewers

|

1 |

10/29/18 |

10/29/18 |

|

Incorporate DCMD ADC and Other Comments Response Rates Assessment Report

|

7 |

10/30/18 |

11/08/18 |

Initial Draft of Response Rates Assessment Report |

Prepare Initial Draft of Response Rates Assessment Report | 8 | 11/09/18 | 11/27/18 |

Distribute Initial Draft of Response Rates Assessment Report to the Evaluations & Experiments Coordination Branch (EXC) | 1 | 11/28/18 | 11/28/18 |

EXC Distributes Initial Draft of Response Rates Assessment Report to the DROM Working Group for Electronic Review | 1 | 11/28/18 | 11/28/18 |

Receive Comments from the DROM Working Group on the Initial Draft of Response Rates Assessment Report | 10 | 11/29/18 | 12/13/18 |

Schedule a Meeting Between the IPT Lead and the DROM Working Group on the Response Rates Assessment Report | 10 | 12/14/18 | 01/04/19 |

Discuss DROM Comments on Initial Draft of Response Rates Assessment Report | 1 | 01/07/19 | 01/07/19 |

Final Draft of Response Rates Assessment Report |

Prepare Final Draft of Response Rates Assessment Report | 25 | 01/08/19 | 02/13/19 |

Distribute Final Draft of Response Rates Assessment Report to the DPMO and the EXC | 1 | 02/14/19 | 02/14/19 |

Schedule and Discuss the Final Draft of Response Rates Assessment Report with the 2020 PMGB | 14 | 02/15/19 | 03/07/19 |

Incorporate 2020 PMGB Comments into the Response Rates Assessment Report | 10 | 03/08/19 | 03/22/19 |

Final Response Rates Assessment Report |

Prepare FINAL Response Rates Assessment Report | 10 | 03/25/19 | 04/08/19 |

Deliver FINAL Response Rates Assessment Report to the EXC | 1 | 04/09/19 | 04/09/19 |

EXC Staff Distribute the FINAL Response Rates Assessment Report and 2020 Memorandum to the DCCO | 3 | 04/10/19 | 04/12/19 |

DCCO Staff Process the Draft 2020 Memorandum and the FINAL Response Rates Assessment Report to Obtain Clearances (DCMD Chief, Assistant Director, and Associate Director) | 30 | 04/15/19 | 05/28/19 |

DCCO Staff Formally Release the FINAL Response Rates Assessment Report in the 2020 Memorandum Series | 1 | 05/29/19 | 05/29/19 |

EXC Staff Capture Recommendations from the FINAL Response Rates Assessment Report in the Census Knowledge Management SharePoint Application | 1 | 05/29/19 | 05/29/19 |

Identify the exact dates for each mailing within the panels and cohorts.

Obtain a finalized schedule of operation dates for the Self-Response and Update Leave operations.


Role | Electronic Signature | Date |

Fact Checker or Independent Verifier | | |

Author’s Division Chief (or designee) | | |

DCMD ADC | | |

DROM DCMD co-executive sponsor (or designee) | | |

DROM DSSD co-executive sponsor (or designee) | | |

Associate Director for R&M (or designee) | | |

Associate Director for Decennial Census Programs (or designee) and 2020 PMGB | | |

VERSION/EDITOR | DATE | REVISION DESCRIPTION | EAE IPT CHAIR APPROVAL |

v. 0.1/Earl Letourneau | 07/12/2017 | First Draft | |

v. 0.2/Earl Letourneau | 08/10/2017 | Initial Draft | |

v. 0.3/Earl Letourneau | 08/23/2017 | Final Draft | |


Acronym | Definition |

ADC | Assistant Division Chief |

API | Application Programming Interface |

CQA | Census Questionnaire Assistance |

DCCO | Decennial Census Communications Office |

DITD | Decennial Information Technology Division |

DPMO | Decennial Program Management Office |

DROM | Decennial Research Objectives and Methods Working Group |

DSSD | Decennial Statistical Studies Division |

EXC | Evaluations & Experiments Coordination Branch |

FCC | Federal Communications Commission |

FLD | Field Division |

IPT | Integrated Project Team |

LRS | Low Response Score |

NCT | National Census Test |

NPC | National Processing Center |

NRFU | Nonresponse Followup |

OSR | Optimizing Self-Response |

PDB | Planning Database |

PMGB | Portfolio Management Governance Board |

R&M | Research & Methodology Directorate |

UAA | Undeliverable as Addressed |

References

Bentley, M. and Meier, F. (2012). “Sampling Specifications for 2012 National Census Test.” DSSD 2020 Decennial Census R&T Memorandum Series #G-01, U.S. Census Bureau. May 29, 2012.

Konya, S. (2016). “Sample Selection for the 2016 Census Test.” DSSD 2020 Decennial Census R&T Memorandum Series #R-13, U.S. Census Bureau. January 7, 2016.

Mathews, K. (2015). “Sample Design Specifications for the 2015 National Content Test.” DSSD 2020 Decennial Census R&T Memorandum Series #R-11, U.S. Census Bureau. November 18, 2015.

Appendix A: Demographics of Respondents and Non-respondents

Demographics of Respondents in the 2018 End-to-End Census Test by Panel and Cohort |

Demographic Group | Internet First: Cohort 1 | Internet First: Cohort 2 | Internet First: Cohort 3 | Internet Choice | |

Sex | | | | | |

Male | | | | | |

Female | | | | | |

Blank | | | | | |

Race and Ethnicity | | | | | |

White Alone | | | | | |

Black Alone | | | | | |

Asian Alone | | | | | |

American Indian or Alaska Native Alone | | | | | |

Middle Eastern or North African Alone | | | | | |

Native Hawaiian or Other Pacific Islander Alone | | | | | |

Some Other Race Alone | | | | | |

Hispanic Alone or In Combination | | | | | |

Multiple Responses (Non-Hispanic) | | | | | |

Blank or Invalid | | | | | |

Not Available* | | | | | |

Tenure | | | | | |

Owned with Mortgage | | | | | |

Owned without Mortgage | | | | | |

Rented | | | | | |

Occupied without Payment | | | | | |

Blank | | | | | |

Age | | | | | |

0-4 | | | | | |

5-9 | | | | | |

10-14 | | | | | |

15-19 | | | | | |

20-24 | | | | | |

25-29 | | | | | |

30-34 | | | | | |

35-39 | | | | | |

40-44 | | | | | |

45-49 | | | | | |

50-54 | | | | | |

55-59 | | | | | |

60-64 | | | | | |

65+ | | | | | |

Blank or Invalid | | | | | |

Source: U.S. Census Bureau, 2018 End-to-End Census Test

* No race or ethnicity question was asked of Persons 7-10 on the paper questionnaire.

Appendix B: Response Rates by Panel and Cohort

Response Rates in the 2018 End-to-End Census Test by Panel and Cohort |

Panel | Cohort | Total | Internet | CQA | |

Total | | | | | |

Internet Push | 1 | | | | |

Internet Push | 2 | | | | |

Internet Push | 3 | | | | |

Internet Choice | | | | | |

Source: U.S. Census Bureau, 2018 End-to-End Census Test

Appendix C: Internet Response Rates by Device, Panel, and Cohort

Internet Response by Device in the 2018 End-to-End Census Test by Panel and Cohort |

Panel | Cohort | Total | Mobile Phone | Tablet/Laptop | Desktop |

Total | | | | | |

Internet Push | 1 | | | | |

Internet Push | 2 | | | | |

Internet Push | 3 | | | | |

Internet Choice | | | | | |

Source: U.S. Census Bureau, 2018 End-to-End Census Test

1 Mailout/Mailback was the primary means of census taking in the 2010 Census. Cities, towns, and suburban areas with city-style addresses (house number and street name) as well as rural areas where city-style addresses are used for mail delivery comprised the Mailout/Mailback areas.

2 The Planning Database assembles a range of housing, demographic, socioeconomic, and census operational data that can be used for survey and census planning.

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| File Title | 2020 Census REsearch Study Plan Template DRAFT |

| Author | douglass Abramson |

| File Modified | 0000-00-00 |

| File Created | 2021-01-22 |
