QRS CACFP ADCC - OMB Clearance Memo Final 12.3.18

Special Nutrition Programs Quick Response Surveys

OMB: 0584-0613

Memorandum

Date: December 3, 2018

To: Stephanie Tatham, OMB Desk Officer, Office of Information and Regulatory Affairs, Office of Management and Budget

Through: Ruth Brown, Desk Officer, United States Department of Agriculture, Office of the Chief Information Officer

From: Christina Sandberg, Information Collection Clearance Officer, Food and Nutrition Service, Planning & Regulatory Affairs C.S.

Re: Under Approved Generic OMB Clearance No. 0584-0613 – Special Nutrition Programs Quick Response Surveys: CACFP Adult Day Care Center Food Safety Needs

The U.S. Department of Agriculture (USDA) Food and Nutrition Service (FNS) is requesting approval to conduct research under Approved Generic OMB Clearance Number 0584-0613, Special Nutrition Programs (SNP) Quick Response Surveys (QRS), expiration date 02/28/2021.

This request is to acquire clearance to conduct a survey with a nationally representative sample of Child and Adult Care Food Program (CACFP) – Adult Day Care Center (ADCC) program directors to identify the food safety education needs (i.e., training and resources) of ADCC program directors and their staff. The following information is provided for your review:

Title of the Project: CACFP ADCC Food Safety Needs

Control Number: 0584-0613, Expires 02/28/2021

Public Affected by this Project:

State, Local, or Tribal Government

State Agencies

Business or Other For-Profit and Non-profit Institutions

ADCC Sponsoring Organizations

ADCC Program Directors for the CACFP Program

See section 7, Project Purpose, Methodology, and Research Design, for a description of the number of participants for each audience (State Agencies, ADCC sponsoring organizations, and ADCC program directors for the CACFP Program) by research methodology (survey).

Number of Respondents and Research Activities:

Exhibit 1 | Assumptions on Number of Respondents

| Respondent Group | Respondents | Research Activity | # of Participants |
|---|---|---|---|
| State, Local and Tribal Government | CACFP State Agencies | Email notification from Regional Offices to CACFP State Agencies | 51* |
| | | Frequently Asked Questions (FAQs) | 51 |
| Business or other for-profit and non-profit institutions | ADCC Sponsoring Organization | Pretest - Email notification about the study for pretest recruitment | 3 |
| | | Email notification about the study | 400** |
| | | Frequently Asked Questions (FAQs) | 403 |
| | ADCC Program Directors | Pretest - recruitment emails | 6 |
| | | Pretest - recruitment follow-up phone calls | 3 |
| | | Pretest - survey testing | 66 |
| | | Initial survey recruitment emails# | 700 |
| | | Initial hard copy survey recruitment mails# | 100 |
| | | Survey Reminders Emails 1 | 560 |
| | | Survey Reminders Emails 2 | 448 |
| | | Survey Reminders Emails 3 | 280 |
| | | Survey Reminders Emails 4 | 224 |
| | | Hard Copy Survey Reminder (for those who never click the survey link) | 112 |
| | | Hard Copy Survey Reminder (for those who received an initial hard copy survey) | 80 |
| | | Phone Call Reminder 1 | 448 |
| | | Phone Call Reminder 2 | 280 |
| | | Post-Survey Response Clarification Email | 64 |
| | | Post-Survey Response Clarification Phone Call | 13 |
| | | Survey | 800 |
| | | Frequently Asked Questions (FAQs)### | 806 |
| Total | | | 1,260## |

Notes:

* 50 States and District of Columbia.

** 50 percent of the total sample of 800 centers will be from sponsored ADCCs.

# For those participants without email addresses (n=100), hard copy invitation packets will be sent by postal mail.

## Total unique # of participants: 51 State Agencies, 403 ADCC sponsoring organizations, and 806 ADCC program directors.

### All the respondents and non-respondents are sent the FAQs (even for pretesting)

It is estimated that 80 percent of the ADCC program directors contacted will complete the survey; the remaining 20 percent comprises non-responders and those who choose not to participate.

Time Needed Per Response:

The estimated times needed for the pretest, survey, email notification/recruitment, and follow-up reminders are shown in Exhibit 2. The estimated times for the email and follow-up reminders are the same for all respondent types.

Exhibit 2 | Time Needed for the Pretest, Survey, Email Notification and Reminders for All Respondents

| Research Activity | Time (minutes) | Time (hours) |
|---|---|---|
| Email notification from Regional Offices to CACFP State Agencies | 8 | 0.13 |
| Email notification to ADCC Sponsoring Organization Point of Contact | 8 | 0.13 |
| Pretest - email notification to ADCC Sponsoring Organization Point of Contact for pretest recruitment | 8 | 0.13 |
| Pretest - recruitment email to ADCC Program Operators* | 3 | 0.05 |
| Pretest - recruitment phone calls to ADCC Program Operators | 5 | 0.08 |
| Pretest - survey testing for ADCC Program Operators (Hard copy survey) | 60 | 1 |
| Initial survey recruitment emails to ADCC Program Directors (Web survey with link) | 3 | 0.05 |
| Initial hard copy survey recruitment to ADCC Program Directors (Hard copy survey mailed) | 3 | 0.05 |
| Survey reminder email 1 to ADCC Program Directors (Web survey with link) | 3 | 0.05 |
| Survey reminder email 2 to ADCC Program Directors (Web survey with link) | 3 | 0.05 |
| Survey reminder email 3 to ADCC Program Directors (Web survey with link) | 3 | 0.05 |
| Survey reminder email 4 to ADCC Program Directors (Web survey with link) | 3 | 0.05 |
| Hard copy survey reminders to ADCC Program Directors (for those who never clicked the link) | 3 | 0.05 |
| Hard copy survey reminders to ADCC Program Directors (for those who received an initial hard copy) | 3 | 0.05 |
| Telephone reminder 1 to ADCC Program Directors (Web survey) | 5 | 0.08 |
| Telephone reminder 2 to ADCC Program Directors (Web survey) | 5 | 0.08 |
| Post-Survey response clarification email to ADCC Program Directors | 5 | 0.08 |
| Post-Survey response clarification phone call to ADCC Program Directors | 7 | 0.12 |
| Survey (completed by web or paper hard copy) | 20 | 0.33 |
| Survey (completed by phone) | 25 | 0.42 |
| Frequently Asked Questions (FAQs) | 5 | 0.08 |

* During the pretesting of the survey, the respondents suggested that the most appropriate person for the survey would be the program director. As a result, the study team has updated the text from program operators to program directors. For the survey, the study team plans to recruit the person who knows the most about foodservice operations and the food safety training and education needs of center staff. However, the pretesting was conducted with program operators.

Total Burden Hours on Public:

The estimated total burden in terms of hours is 491.79 hours (10.71 hours for State government and 481.08 hours for Businesses). The complete burden table is enclosed as Appendix A.1. This collection has a web survey (although respondents may complete the survey in paper hard copy format or over the phone). Out of a total 6,096 responses for this collection, FNS estimates that 572 (9.3%) will be submitted electronically.
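As a rough illustration of how the burden figures are built up, the sketch below multiplies the number of participants in Exhibit 1 by the hours per response in Exhibit 2 for the two State Agency activities. The helper name `burden_hours` is hypothetical, and the sketch assumes one response per participant per activity; the full calculation is in Appendix A.1.

```python
# Minimal sketch: burden hours = participants x hours per response, summed.
# Assumption: each participant responds once to each activity listed.

def burden_hours(activities):
    """Sum participants * hours over (participants, hours) pairs, to 2 decimals."""
    return round(sum(n * hours for n, hours in activities), 2)

# State Agency rows taken from Exhibit 1 (participants) and Exhibit 2 (hours).
state_activities = [
    (51, 0.13),  # email notification from Regional Offices
    (51, 0.08),  # Frequently Asked Questions (FAQs)
]

state_burden = burden_hours(state_activities)
print(state_burden)  # 10.71
```

Under these assumptions the result, 10.71 hours, matches the State government figure reported above.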

Project Purpose, Methodology, and Research Design:

Background

Foodborne illness adversely impacts millions of Americans each year, resulting in more than 128,000 hospitalizations and 3,000 deaths.1 However, foodborne illness is often preventable when proper food safety practices are employed, especially by those who are responsible for procuring and preparing food. Food safety is a particularly important consideration for ADCC program directors2 because many older and functionally impaired adults often have health conditions and suppressed immune systems that make them more susceptible to acquiring and less able to recover from foodborne illness.3 Maintaining food preparation and safety standards can prove challenging because food preparation and operations vary across ADCCs.

ADCCs that participate in CACFP must follow State and local health and sanitation regulations.4 The FNS Office of Food Safety (OFS), as part of FNS’s Supplemental Nutrition and Safety Programs, is committed to expanding food safety training in FNS programs to meet the needs of nutrition assistance program staff. Since 2011, OFS has been funded through Child Nutrition appropriations and has historically focused its food safety training and technical resources toward individuals within school meal and child care programs. OFS5 is working to expand its efforts to include CACFP, including ADCC program directors and their staff. OFS aims to determine the specific food safety education needs of these program directors using this QRS.

Purpose

The objective of this study is to identify the food safety education needs (i.e., training and resources) of ADCC program directors. To achieve this research objective, this study will address the following three research questions:

What are the food safety knowledge gaps among ADCC program directors?

How do site-level directors want to receive food safety education?

What is the best way to communicate the availability of food safety education training and resources?

The answers to these research questions will help FNS better understand the food safety education needs among ADCC program directors and how these program directors want to receive the needed information. This data will help OFS develop training and technical resources to meet the needs of program directors and their staff.

Methodology/Research Design

The study team will survey a nationally representative sample of ADCC program directors across the United States using a mixed-mode data collection effort. Collaborating with FNS, the study team has developed recruitment materials for State Agencies, ADCC sponsoring organizations, and ADCC program directors. These materials describe the purpose of the study, the potential benefits of participating, the data collection time frame, and the data request. The study team will tailor letters to each audience. Participants will be informed of the voluntary nature of the study and the anonymity of participation. Contact information for the FNS liaison will be provided as part of the Frequently Asked Questions (FAQs) (Appendix A.19) developed for the study.

Design/Sampling Procedures

The study team will select a nationally representative sample of 800 ADCCs. An 80 percent response rate will provide an analytical sample of approximately 640 ADCCs to satisfy the precision targets required—namely, a 5 percent level of precision with a 95 percent level of confidence for national estimates, and a 7 percent to 10 percent level of precision with 90 percent confidence level for subgroup estimates, according to FNS region and urbanicity. The study team will select a stratified, systematic, random sample of ADCCs, where strata are defined according to the seven FNS regions and urbanicity. Prior to sampling, ADCCs will be sorted within FNS regions by urbanicity and center size according to the number of adults in the center. This approach ensures that enough ADCCs are selected for each region and urbanicity category and a balanced number of smaller and larger centers are represented in the sample.
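The precision targets can be sanity-checked with a back-of-the-envelope calculation. The sketch below is not the study team's design computation: it assumes simple random sampling, a worst-case proportion of 0.5, no finite population correction, and no design effect from stratification or weighting. The function names are hypothetical.

```python
import math

def margin_of_error(n, p=0.5, z=1.96):
    """Half-width of a normal-approximation confidence interval for a proportion."""
    return z * math.sqrt(p * (1 - p) / n)

def required_completes(moe, p=0.5, z=1.645):
    """Smallest number of completes giving the requested half-width."""
    return math.ceil(z * z * p * (1 - p) / moe ** 2)

sample_size = 800
response_rate = 0.80
completes = round(sample_size * response_rate)  # 640 expected completes

# National estimates: 95 percent confidence (z = 1.96).
moe_national = margin_of_error(completes)

# Subgroup estimates: 90 percent confidence (z = 1.645).
subgroup_n_at_10 = required_completes(0.10)
subgroup_n_at_7 = required_completes(0.07)

print(completes, round(moe_national, 4), subgroup_n_at_10, subgroup_n_at_7)
```

Under these simplifying assumptions, 640 completes give a national margin of error of just under 4 percentage points, inside the 5 percent target, and a subgroup needs roughly 68 to 139 completes to reach the 10 percent to 7 percent range.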

Recruitment and Consent

Recruitment for Pretesting

The study team conducted cognitive pretests of the survey with five ADCC program operators. FNS staff contacted one FNS Regional Office (Western Regional Office), which identified nine ADCCs from one State (California), with a mix of sponsored and independent centers. The study team contacted three ADCC sponsoring organizations (Appendix A.1 in Appendix A.18 - Cognitive Pretest Memo) and six ADCC program operators via emails (Appendix A.2 in Appendix A.18 - Cognitive Pretest Memo) and phone calls (Appendix A.3 in Appendix A.18 - Cognitive Pretest Memo) to explain the pretest and schedule a 1-hour pretest interview. To minimize the burden of participating in this pretest, program operators completed a hard copy survey (Appendix A.4 in Appendix A.18 - Cognitive Pretest Memo) and answered cognitive interview questions during a single call. The cognitive interview questions focused on whether respondents understood the meaning of the questions; whether they had difficulty answering the questions; and whether the response options were applicable, clear, and comprehensive. Pretest questions also focused on whether the ordering of the survey questions was logical and whether the skip patterns were clear, which is especially important for hard copy survey administration. Each respondent received a $30 incentive for participating in the pretest because they spent an additional 40 minutes providing detailed responses to the cognitive interview questions. The study team updated the final survey instrument (Appendix A.15 through A.17) and recruitment materials (Appendix A.2 through A.14) to reflect the findings from the cognitive pretest.

Recruitment for Survey

The study team will recruit ADCC program directors according to the following steps:

FNS Regional Office liaisons will notify the State Agencies about the study (Appendix A.2). The study team will notify the sponsoring organizations that one of their ADCCs was selected for participation (Appendix A.3).

The study team will send an invitation packet by email to the sampled ADCC program directors (Appendix A.4). The packet will contain background information on the study, answers to FAQs (Appendix A.19), and instructions for accessing the survey online (web survey - Appendix A.16) and online help systems to provide guidance for unanswered questions.

For those ADCCs without email addresses, the study team will send a hard copy invitation packet by postal mail (Appendix A.5). The packet will include a hard copy of the survey (Appendix A.15) and a prepaid postage return envelope to return the survey to the study team, along with answers to FAQs (Appendix A.19) and instructions for accessing the help systems (either online or by phone).

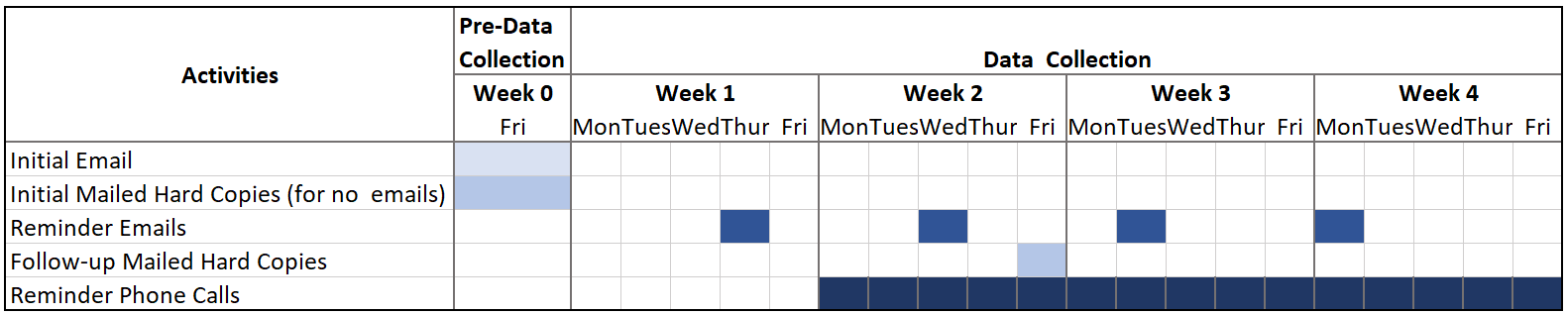

Exhibit 3 presents a Gantt chart that details our data collection activities and efforts. The schedule allows for a total of 4 weeks for data collection to reach the target number of completed surveys (N = 640). To help achieve that goal, after 1 week the study team will conduct telephone follow-up with those respondents who still have not completed the survey. The study team will implement the following strategies:

Every 4 days, send reminder emails to complete the survey to respondents with email addresses (Appendix A.6-A.8, A.10)

Initiate weekly follow-up telephone calls for all respondents, starting 1 week after the initial email or mailed hard copy is sent, for those who have not completed the survey. During the telephone call, the study team will offer the option to complete the survey by telephone because of the short duration of the survey (Appendix A.11). We estimate that completing the survey over the phone will take 25 minutes.

Send hard copies of the surveys with prepaid postage return envelopes, 2 weeks after sending the initial emails, to those who have not logged on to the survey online (Appendix A.5) and those without email addresses who have not returned the initial hard copy of the survey (Appendix A.9)

Exhibit 3 | Schedule of Data Collection Activities

Survey support staff will address technical issues with the survey immediately and will respond to substantive questions within a few hours. They will also be available to administer the survey over the phone. We will provide in-depth training to the survey support staff. The study team will analyze the web/mailed responses and identify any responses that require follow up. These responses will be passed to the survey support staff to initiate contact with the respondents to clarify questionable responses. The clarifications will be made via email (Appendix A.12), or in the case of urgent clarifications, by phone (Appendix A.13).

A weekly data collection memo will detail survey response rates (e.g., number of completed surveys [web, mail, and phone], surveys in progress, or surveys not started/accessed/returned); recruitment and follow-up activities; and any challenges.

Compensation

There will be no compensation given to the respondents of the survey.

Data Analysis

Upon completion of data collection, the study team will review the survey data for inconsistent data and reporting errors.6 After this review, the dataset will be considered clean and ready for post-survey adjustments (nonresponse adjustments). The study team’s statisticians will weight the survey data to adjust for unit nonresponse, using a propensity modeling procedure to predict the probability of responding to the survey based on the characteristics available on the sampling frame file (to be determined). Under this approach, the responding cases will be weighted by the inverse of the predicted probability of response, using a weighting class methodology that divides the propensity scores into classes and assigns the average score within the class to each case. This approach helps avoid large adjustments to the survey weights, which increases the precision of the survey estimates.7
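The weighting class step described above can be sketched as follows. This is an illustrative sketch only: it assumes the response propensities have already been estimated (for example, by a logistic regression on frame characteristics), and the function name, field names, equal-sized classes, and example values are all hypothetical.

```python
from statistics import mean

def weighting_class_adjust(cases, n_classes=5):
    """Return nonresponse-adjusted weights for responding cases.

    cases: list of dicts with 'base_weight', 'propensity', and 'responded'.
    Cases are sorted by propensity, split into n_classes groups of roughly
    equal size, and each respondent's base weight is divided by the average
    propensity of its class.
    """
    order = sorted(range(len(cases)), key=lambda i: cases[i]["propensity"])
    adjusted = {}
    for c in range(n_classes):
        lo = c * len(order) // n_classes
        hi = (c + 1) * len(order) // n_classes
        members = order[lo:hi]
        if not members:
            continue  # more classes than cases
        class_propensity = mean(cases[i]["propensity"] for i in members)
        for i in members:
            if cases[i]["responded"]:
                adjusted[i] = cases[i]["base_weight"] / class_propensity
    return adjusted

# Made-up example: four sampled centers, two weighting classes.
cases = [
    {"base_weight": 10.0, "propensity": 0.60, "responded": True},
    {"base_weight": 10.0, "propensity": 0.80, "responded": True},
    {"base_weight": 10.0, "propensity": 0.90, "responded": False},
    {"base_weight": 10.0, "propensity": 0.70, "responded": True},
]
adjusted = weighting_class_adjust(cases, n_classes=2)
```

Because every case in a class shares the class-average propensity, a single very low individual score cannot produce an extreme weight, which is the smoothing effect the paragraph above refers to.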

If an 80 percent response rate is not achieved, the study team will analyze and adjust for nonresponse bias using the available characteristics. To conduct this analysis, the study team will carry out the following steps:

Code sampled records as respondents or non-respondents

Use a logistic regression model to identify subgroups that are significantly different between respondents and non-respondents

Report model results and potential non-response bias to FNS

Use a jackknife variance replication method to simplify the statistical significance testing of the descriptive statistics and regression-adjusted estimates8

This methodology also will help account for the non-sampling errors associated with the non-response adjustment and any subsequent post-stratification or calibration.
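For readers unfamiliar with replication methods, the sketch below shows the basic delete-one jackknife for a single estimator. It is a simplified illustration, not the study's procedure: a production analysis of a stratified sample would typically use stratum-aware replicate weights, and the function name and example values here are made up.

```python
from statistics import mean

def jackknife_variance(values, estimator):
    """Delete-one jackknife variance estimate for estimator(values)."""
    n = len(values)
    # Recompute the estimate n times, leaving out one observation each time.
    replicates = [estimator(values[:i] + values[i + 1:]) for i in range(n)]
    center = sum(replicates) / n
    return (n - 1) / n * sum((r - center) ** 2 for r in replicates)

# Made-up example values.
values = [1.0, 2.0, 3.0, 4.0, 5.0]
variance_of_mean = jackknife_variance(values, mean)
print(variance_of_mean)  # 0.5
```

For the sample mean, this reproduces the familiar sample variance divided by n (here 2.5 / 5 = 0.5); the same function works unchanged for estimators such as ratios, where no simple closed form is available.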

Descriptive findings from the survey will be presented in data tables as weighted percentages. Data tables will include weighted and unweighted counts of respondents. The study team will provide descriptive cross-tabulations by ADCC characteristics for survey questions, if appropriate, such as FNS region, urbanicity, and type of center (sponsor vs. independent; affiliated vs. unaffiliated).

Outcomes/Findings

OFS is committed to expanding food safety training in FNS programs to meet the needs of nutrition assistance program staff, including ADCC program directors in CACFP. The findings from this study will help FNS better understand the food safety education needs among the ADCC program directors and how these program directors want to receive the needed information. This data will help OFS develop training and technical resources to meet the needs of program directors and their staff.

Confidentiality:

The survey does not ask any sensitive questions. All information gathered from the survey is for research purposes only and will be kept private to the full extent allowed by law. Respondents will be assured that their information and responses will remain private and will only be used for research purposes and reported in aggregate. In addition, a unique identifier will link the respondent information to the survey responses. The study team will ensure that the spreadsheet linking the unique identifier with the respondent information (name, job title, work e-mail address, and other work contact information) is stored electronically, is accessible only to the study team, and is kept separate from the survey responses. This linking file will only be transmitted using encryption. All returned paper copies of completed surveys will be entered electronically and shredded immediately afterward.

Data confidentiality will be considered a continuous process during the life of the study and will be monitored and controlled by the study team. 2M will report any improper disclosure or unauthorized use of FNS data to the Contracting Officer’s Representative within 24 hours of discovery or loss of data.

FNS published a system of records notice (SORN) titled FNS-8 USDA/FNS Studies and Reports in the Federal Register on April 25, 1991, volume 56, pages 19078-19080, that discusses the terms of protections that will be provided to respondents. FNS and the study team will comply with the requirements of the Privacy Act of 1974.

Federal Costs:

It is estimated that federal employees will spend approximately 208 hours overseeing this study in 2018. Using the hourly wage rate of $45.59 for a GS-12, step 6 federal employee from the 2018 Washington, DC, locality pay table, the estimated 2018 costs equal $9,482.72. In addition, we assume 20 hours annually for the Branch Chief, at GS-14, Step 1, at $54.91 per hour for a total of $1,098.20.

Contractor costs associated with this study will total $288,900.06 to the Federal Government in 2018. The combined federal employee and contractor costs are estimated at $299,480.98. Adding $98,828.72 to this total to account for fully loaded wages ($299,480.98 x 0.33), FNS estimates that the total annual cost to the Federal Government for this information collection will be $398,309.70.
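The cost arithmetic in this section can be checked with a short calculation. The sketch below uses the figures stated above and treats the fully loaded wage factor as a multiplier of 0.33 (that is, 33 percent), which is the reading that reproduces the stated totals; the variable names are illustrative.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")

# Federal staff time: hours x hourly wage rate.
staff_cost = Decimal("208") * Decimal("45.59")        # GS-12, step 6
branch_chief_cost = Decimal("20") * Decimal("54.91")  # GS-14, step 1
contractor_cost = Decimal("288900.06")

subtotal = staff_cost + branch_chief_cost + contractor_cost

# Assumption: the loading factor is applied to the combined subtotal.
loading = (subtotal * Decimal("0.33")).quantize(CENT, rounding=ROUND_HALF_UP)
total = subtotal + loading

print(staff_cost, branch_chief_cost, subtotal, loading, total)
# 9482.72 1098.20 299480.98 98828.72 398309.70
```

`Decimal` is used instead of floating point so that the cent-level figures compare exactly with those in the text.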

Appendices (including Research Tools/Instruments):

Appendix A.1 Respondent Burden Table

Materials Related to Recruitment for the Survey

Appendix A.2 Email Notification from Regional Offices to CACFP State Agencies

Appendix A.3 Email Notification to ADCC Sponsoring Organization Point of Contact

Appendix A.4 Initial Survey Notification Email to ADCC Program Directors

Appendix A.5 Initial Survey Hard Copy Notification to ADCC Program Directors (Hard Copy Survey Reminders to ADCC Program Directors who never clicked the link)

Appendix A.6 Survey Reminder Email 1 to ADCC Program Directors

Appendix A.7 Survey Reminder Email 2 to ADCC Program Directors

Appendix A.8 Survey Reminder Email 3 to ADCC Program Directors

Appendix A.9 Hard Copy Survey Reminders to ADCC Program Directors (for those who received an initial hard copy)

Appendix A.10 Survey Reminder Email 4 to ADCC Program Directors

Appendix A.11 Telephone Reminder 1 and 2 to ADCC Program Directors

Appendix A.12 Post-Survey Response Clarification Email to ADCC Program Directors

Appendix A.13 Post-Survey Clarification Telephone Script

Appendix A.14 Email Notification to Regional Offices

Survey Instruments

Appendix A.15 Survey of Food Safety Education Needs of CACFP ADCC - Hard Copy

Appendix A.16 Survey of Food Safety Education Needs of CACFP ADCC - Web Version

Appendix A.17 Survey of Food Safety Education Needs of CACFP ADCC - Screenshots of the Web Version

Additional Materials

Appendix A.18 Cognitive Pretest Memo

Appendix A.1 Pretest - Recruitment Email to ADCC Sponsoring Organizations

Appendix A.2 Pretest - Recruitment Email to ADCC Program Operators

Appendix A.3 Pretest - Recruitment Phone Calls to ADCC Program Operators

Appendix A.4 Pretest - Survey of Food Safety Education Needs of ADCC (Hard Copy Survey)

Appendix A.19 Frequently Asked Questions (FAQs) about the study

1 Centers for Disease Control and Prevention. (2016). Estimates of foodborne illness in the United States. Retrieved from https://www.cdc.gov/foodborneburden/2011-foodborne-estimates.html

2 During the pretesting of the survey, the respondents suggested that the most appropriate person for the survey would be the program director. As a result, the study team has updated the text from program operators to program directors. For the survey, the study team plans to recruit the person who knows the most about foodservice operations and food safety training and education needs of center staff.

3 U.S. Department of Health and Human Services. (2018). Food safety for older adults. Retrieved from: https://www.foodsafety.gov/risk/olderadults/index.html

4 U.S. Food and Drug Administration. (2017). Food code: 2017 recommendations of the United States Public Health Service Food and Drug Administration. Retrieved from https://www.fda.gov/downloads/Food/GuidanceRegulation/RetailFoodProtection/FoodCode/UCM595140.pdf

5 Currently, educational materials from the Institute of Child Nutrition (ICN) are promoted, as OFS and ICN have a cooperative agreement in place. Materials can be found at: https://theicn.org/icn-resources-a-z/food-safety-in-child-care/

6 Inconsistent reporting errors can occur for several reasons and in different forms. For example, a respondent might rush through questions and select the first response to each question.

7 Wun, L., Ezzati-Rice, T. M., Baskin, R., Greenblatt, J., Zodet, M., Potter, F., . . . Touzani, M. (2004). Using propensity scores to adjust weights to compensate for dwelling unit level nonresponse in the Medical Expenditure Panel Survey (Agency for Healthcare Research and Quality Working Paper No. 04004). Retrieved from https://meps.ahrq.gov/data_files/publications/workingpapers/wp_04004.pdf

8 Shao, J., & Wu, C. F. J. (1989). A general theory for jackknife variance estimation. Annals of Statistics, 17(3), 1176–1197.

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| File Title | Generic OMB 0584-0613: SNP Quick Response Surveys |

| Subject | 0584-0613 |

| Author | Gerad O'Shea |

| File Modified | 0000-00-00 |

| File Created | 2021-01-20 |
