National TPM Implementation Review Survey Design and Questions

Assessment of Transportation Performance Management Needs, Capabilities and Capacity


OMB: 2125-0655

This collection of information is voluntary and will be used to gather information about the application of transportation performance management and performance-based planning and programming principles at State Departments of Transportation (State DOTs) and Metropolitan Planning Organizations (MPOs). Public reporting burden is estimated to average 22 hours per response, including the time for reviewing instructions, searching existing data sources, gathering and maintaining the data needed, and completing and reviewing the collection of information. Please note that an agency may not conduct or sponsor, and a person is not required to respond to, a collection of information unless it displays a currently valid OMB control number. The OMB control number for this collection is 2125-XXXX (state OMB #). Send comments regarding this burden estimate or any other aspect of this collection of information, including suggestions for reducing this burden, to: Michael Howell, Information Collection Clearance Officer, Federal Highway Administration, 1200 New Jersey Avenue, SE, Washington, DC 20590.

Overview

The primary goal of the National Transportation Performance Management (TPM) Implementation Review is to gather information about the application of transportation performance management and performance-based planning and programming principles at State Departments of Transportation (State DOTs) and Metropolitan Planning Organizations (MPOs). The National TPM Implementation Review seeks to provide quantitative data, along with coded qualitative data from open-ended questions, that can be summarized to spur further discussion of the resource and guidance needs of transportation agencies. It is believed that State DOTs and MPOs have a general understanding of TPM practices and have begun implementation, but it will be beneficial to have a better understanding of specific capabilities, progress, and challenges. The review will collect data from State DOT and MPO staff regarding:

Self-assessments of their capabilities to implement performance management practices;

Identification of key challenges of TPM implementation from the perspective of the Partner Organizations;

Assessment of interest in receiving or reviewing training, guidance resources, and implementation assistance;

Preferences among alternative means for providing capacity building and training; and

This document was revised to reflect comments submitted by stakeholders. Those changes are described in detail in Appendix A.

The web survey instrument for the National TPM Implementation Review consists of the following sections.

A. TPM General

B. Performance-based Planning and Programming

C. Asset Management

D. TPM by Performance Area: Safety

E. TPM by Performance Area: Bridge

F. TPM by Performance Area: Pavement

G. TPM by Performance Area: Freight

H. TPM by Performance Area: Congestion/Mobility/System Performance

I. TPM by Performance Area: On-road Mobile Source Emission

J. TPM by Performance Area Supplement: Transit Safety and Transit State of Good Repair (directed only at State DOTs and MPOs with Transit Oversight)

For each of the performance area sections listed above (D-J), a set of 18 common questions is used and organized into the following subsections:

| Subsection | Example questions |
| --- | --- |
| Staffing | |
| Data & Analysis | |
| Performance Measures | |
| Target Setting | |
| Planning and Programming | |
| Monitoring & Reporting | |

In addition to the transit questions in Section J, Section A also contains a transit supplement section aimed at capturing additional transit TPM information.

In addition to the common set of questions, a limited number of performance-area-specific questions are also included.

For each question, respondents will also have the option to provide additional comments to clarify their responses. Please note that, to conserve space, the option to provide additional comments is not explicitly shown next to the questions in this document.

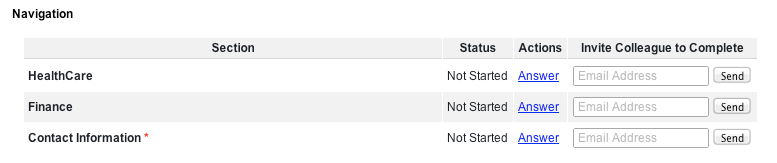

In the administered online survey, each set of performance area questions, along with a set of common questions, is “self-contained” so that it can be delegated to the appropriate subject matter experts if an agency desires. A responding agency has the option to delegate sections of the survey by performance area via email. Those who are delegated survey sections must have their responses reviewed and submitted by the designated survey point of contact. Only the designated survey point of contact for that agency can submit the completed, aggregated survey (including all sections and delegated responses) to FHWA. This is discussed in more detail in Part 2 of this document.

The remainder of this document is divided into two parts:

Part 1 lists the draft questions proposed for the National TPM Implementation Review Survey and Part 2 outlines the Data Collection and Analysis Design of the survey. Use the following table of contents to navigate through the document.

Table of Contents

A4. If your AGENCY has a reporting website please provide the URL:_________________________

Transit Safety and Transit State of Good Repair Supplement

B1. How does your agency incorporate PBPP into its LRTP? (check all that apply)

B3. How does your agency incorporate PBPP into its STIP/TIP? (check all that apply)

B10. On a scale of 1 to 5 how effective has the PBPP process been as a tool for:*

C. Highway Asset Management

Sections D to J: Common and Specific Performance Area Questions

CommonQ4. Does your agency mostly outsource the analysis of data for PERFORMANCE AREA X?

D. SPECIFIC TO SAFETY: DATA & ANALYSIS

D2. Which agencies do you cooperate with to gather crash data (check all that apply)?

D4. To what extent does your agency have current or projected railroad traffic?

E. SPECIFIC TO BRIDGE: DATA & ANALYSIS

F. Specific to PAVEMENT: DATA & Analysis

F1. Is pavement data collected in both directions?*

F2. How often is pavement data collected on the National Highway System?

F3. Who acquires pavement data on non‐State owned NHS Routes?

G. SPECIFIC TO FREIGHT: DATA & ANALYSIS

H. SPECIFIC TO CONGESTION/MOBILITY/SYSTEM PERFORMANCE: DATA & ANALYSIS

J. SPECIFIC TO TRANSIT STATE OF GOOD REPAIR SUPPLEMENT: DATA AND ANALYSIS

G. SPECIFIC TO FREIGHT: PERFORMANCE MEASURES

G2. Does your freight performance measurement include truck parking?

G3. Have you developed freight performance measure in the following modes?*

H. SPECIFIC TO Congestion/Mobility/System Performance: PERFORMANCE MEASURES

D. SPECIFIC TO SAFETY: PLANNING AND PROGRAMMING

D8. How effective is your agency at interacting and collaborating with the SHSO on HSIP efforts?

E. SPECIFIC TO BRIDGE: PLANNING AND PROGRAMMING

E3. Describe impact of expansion of National Highway System on the State agency Bridge programs.

F. SPECIFIC TO PAVEMENT: PLANNING & PROGRAMMING

G. SPECIFIC TO FREIGHT: PLANNING AND PROGRAMMING

G4. Does your agency have a MAP‐21 compliant Statewide Freight Plan?

I. SPECIFIC TO ON-ROAD MOBILE SOURCE: PLANNING AND PROGRAMMING

I2. How do you plan to transition to quantitative emissions estimates?

MONITORING, ANALYSIS, AND REPORTING

Outline of the National TPM Implementation Review Data Collection and Analysis Design

National TPM Implementation Participants

Respondent Selection within Partner Organizations:

Advantages of this approach:

Disadvantages of this approach:

National TPM Implementation Assessment Process

State DOT and MPO Assessment Results Analyses & Report:

Follow-up State DOT and MPO Assessments:

Follow-up State DOT and MPO Assessment Analysis & Report

Selection of data collection mode

Selection of survey data collection software

National TPM Implementation Assessment and Follow-up Assessment Content

Survey Question Construction

Bias limitation and detection

Survey Data Files and Tabulation

FHWA’s National TPM Implementation Assessment Report

Summary of Comments on Docket FHWA-2016-0010 and resulting edits to Information Collection

Length of Survey, Timing, Wording, and Responses Options

Approach to Administrating the Survey

Protect Confidentially and Survey Reporting

Section A: TPM General

FHWA defines Transportation Performance Management (TPM) as a strategic approach that uses system information to make investment and policy decisions to achieve national performance goals. In short, Transportation Performance Management (TPM):

Is systematically applied in a regular ongoing process.

Provides key information to help decision makers -- allowing them to understand the consequences of investment decisions across multiple markets.

Supports the improvement of communications between decision makers, stakeholders and the traveling public.

Encourages the development of targets and measures in cooperative partnerships and based on data and objective information.

A1. Based on your agency’s understanding of finalized or proposed changes to the federal-aid program, which of the following Performance Areas do you expect will be the biggest challenge for your agency to carry out?

*Please rate how challenging each TPM component will be for your agency from 0 to 10, where "10" (Biggest Challenge) means that you feel your agency does not have the skills or resources to address that aspect of TPM at all and "0" (Not a Challenge) means that your agency sees no challenge in fulfilling that TPM component.

| Performance Area | (0) Not a Challenge to Biggest Challenge (10) |
| --- | --- |
| Highway Safety | 0 1 2 3 4 5 6 7 8 9 10 |
| Transit Safety | 0 1 2 3 4 5 6 7 8 9 10 |
| Pavement | 0 1 2 3 4 5 6 7 8 9 10 |
| Bridge | 0 1 2 3 4 5 6 7 8 9 10 |
| Transit State of Good Repair | 0 1 2 3 4 5 6 7 8 9 10 |
| Congestion/Mobility/System Performance | 0 1 2 3 4 5 6 7 8 9 10 |
| CMAQ On-Road Mobile Source Emissions | 0 1 2 3 4 5 6 7 8 9 10 |
| Freight | 0 1 2 3 4 5 6 7 8 9 10 |

A2. Select and describe the option(s) that best align with how your agency is staffed or planning to staff to support transportation performance management. (Check all that apply)

| | TPM Staffing (non-transit) |
| --- | --- |
| ( ) | No specifically dedicated staff or unit (ad hoc) |
| ( ) | Dedicated performance management staff |
| ( ) | Temporary implementation group |
| ( ) | Committee structure |
| ( ) | Other |

Please elaborate on how your organization is staffed or plans to staff itself to support transportation performance management: ____________________________________________________

A3. How many Full-Time Equivalents (FTEs) would you estimate are focused on performance management activities? ______

A4. If your AGENCY has a reporting website, please provide the URL: _________________________

A5. For each of the performance management functions listed below, please indicate: 1) your agency’s interest in reviewing available tools and resources and 2) on a scale of 1 to 5, your agency’s current capacity (availability of staff, skills, resources, and tools).

| Function | Interest in Reviewing Tools/Resources (y/n) | Agency’s Current Capacity (1 to 5) |
| --- | --- | --- |
| Producing graphical and map displays | (y/n) | 1 2 3 4 5 |
| Conducting project level benefit-cost alternative analysis | (y/n) | 1 2 3 4 5 |
| Conducting system level investment scenario analyses | (y/n) | 1 2 3 4 5 |
| Comparing trade-offs across projects, investment scenarios, and performance areas | (y/n) | 1 2 3 4 5 |
| Creating internal operational dashboards | (y/n) | 1 2 3 4 5 |
| Creating externally facing dashboards | (y/n) | 1 2 3 4 5 |
| Reporting progress and performance outcomes on websites | (y/n) | 1 2 3 4 5 |
| Communicating/Messaging performance results to public and stakeholders | (y/n) | 1 2 3 4 5 |
| Identifying performance areas in need of improvement | (y/n) | 1 2 3 4 5 |

A6. Does your agency have oversight of Transit and Public Transportation entities? (Yes/No) If Yes, please answer the question(s) in the Transit Safety and Transit State of Good Repair Supplement Questions and throughout the remainder of the survey.

A7. What capacity-building format would benefit you and other agency staff members the most? (Check all that apply)

Workshops

Courses (NHI or similar)

Guidance

Guidebooks and technical papers

Webinars

Document templates

Analytical Tool demonstrations

Other:

A8. Please indicate the areas that your agency would be interested in FHWA researching further. (Check all that apply)

Data Analysis

Data management

Performance measure development

Target setting

Connecting system performance information to various transportation plans

Linking performance information to programming decisions

Performance monitoring

Performance reporting & communication

External collaboration

Organizational and cultural resistance to TPM practices

Coordination across performance measure areas (e.g. safety, congestion, freight, etc.)

Other

Transit Safety and Transit State of Good Repair Supplement

A9. Select and describe the option that best aligns with how your agency is staffed or planning to staff to support transportation performance management in the areas of Transit Safety and Transit State of Good Repair.

| TPM Staffing focused on Transit | Transit Safety | Transit State of Good Repair |
| --- | --- | --- |
| No specifically dedicated staff or unit (ad hoc) | ( ) | ( ) |
| Dedicated performance management staff | ( ) | ( ) |
| Temporary implementation group | ( ) | ( ) |
| Committee structure | ( ) | ( ) |
| Other | ( ) | ( ) |

Section B: PBPP Questions

The following questions pertain specifically to PBPP and to your agency’s use of PBPP in the transportation planning process.

PBPP Definition: Performance-based planning and programming (PBPP) refers to the application of performance management within the planning and programming processes of transportation agencies to achieve desired performance outcomes for the multimodal transportation system. This includes processes to develop a range of planning products undertaken by a transportation agency with other agencies, stakeholders, and the public as part of a 3C (cooperative, continuing, and comprehensive) process.

It includes development of these key elements:

Long range transportation plans (LRTPs) or Metropolitan Transportation Plans (MTPs),

Other plans and processes: e.g., Strategic Highway Safety Plans, Asset Management Plans, the Congestion Management Process, CMAQ Performance Plan, Freight Plans, Transit Agency Asset Management Plans, and Transit Agency Safety Plan,

Programming documents, including State and metropolitan Transportation Improvement Programs (STIPs and TIPs).

PBPP attempts to ensure that transportation investment decisions in long-term planning and short-term programming of projects are based on an investment’s contribution to meeting established goals.

[Source: FHWA PBPP guidebook; http://www.fhwa.dot.gov/planning/performance_based_planning/]

B1. How does your agency incorporate PBPP into its LRTP? (Check all that apply)

The LRTP includes performance measures linked to the plan’s vision, goals, or objectives

The LRTP includes performance measures corresponding to MAP-21 national goals

The LRTP includes performance measures corresponding to goals in addition to the national goals specified under MAP-21

The LRTP performance measures are linked to project selection or screening criteria for STIP/TIP programming

The LRTP evaluates multiple scenarios based on established performance measures

The LRTP sets performance targets for goals

The LRTP includes a monitoring plan for evaluating the results of LRTP investments using performance measures

All of the above

None of the above

Not sure

B2. Indicate the degree to which your agency can link the LRTP to actual investment decisions for the following performance areas. (Please rate the level of linkage between program investments and the performance outcomes they are intended to achieve using a scale of 1 (No Link) to 5 (Strong Link).)

| Performance Area | 1-Cannot link investment decisions to LRTP | 2-Can link very few investment decisions to LRTP | 3-Can link several but not most investment decisions to LRTP | 4-Can link most investment decisions to LRTP | 5-Can link all investment decisions to LRTP |
| --- | --- | --- | --- | --- | --- |
| Highway Safety | ( ) | ( ) | ( ) | ( ) | ( ) |
| Transit Safety | ( ) | ( ) | ( ) | ( ) | ( ) |
| Pavement | ( ) | ( ) | ( ) | ( ) | ( ) |
| Bridge | ( ) | ( ) | ( ) | ( ) | ( ) |
| Transit State of Good Repair | ( ) | ( ) | ( ) | ( ) | ( ) |
| Congestion/Mobility/System Performance | ( ) | ( ) | ( ) | ( ) | ( ) |
| CMAQ On-Road Mobile Source Emissions | ( ) | ( ) | ( ) | ( ) | ( ) |
| Freight | ( ) | ( ) | ( ) | ( ) | ( ) |

B3. How does your agency incorporate PBPP into its STIP/TIP? (Check all that apply)

The LRTP goals and performance measures are reflected in STIP/TIP project selection or screening

Priorities or rating of proposed STIP/TIP investments are determined or informed by performance measures

The STIP/TIP evaluates alternative investment scenarios based on LRTP goals and performance measures

The results of STIP/TIP investments are monitored using performance measures

STIP/TIP project selection or screening includes a discussion as to how the investment program will achieve targets

B4. Indicate the degree to which you agree with the following statement: “Your agency has a plan that identifies the strategies and/or investments that will be made to achieve specific targets in the following performance areas:”

| Performance Area | 1-Strongly Disagree | 2-Somewhat Disagree | 3-Neutral | 4-Somewhat Agree | 5-Strongly Agree |
| --- | --- | --- | --- | --- | --- |
| Highway Safety | ( ) | ( ) | ( ) | ( ) | ( ) |
| Transit Safety | ( ) | ( ) | ( ) | ( ) | ( ) |
| Bridge | ( ) | ( ) | ( ) | ( ) | ( ) |
| Pavement | ( ) | ( ) | ( ) | ( ) | ( ) |
| Transit State of Good Repair | ( ) | ( ) | ( ) | ( ) | ( ) |
| Congestion/Mobility/System Performance | ( ) | ( ) | ( ) | ( ) | ( ) |
| CMAQ On-Road Mobile Source Emissions | ( ) | ( ) | ( ) | ( ) | ( ) |
| Freight | ( ) | ( ) | ( ) | ( ) | ( ) |

B5. Does your agency use measures to evaluate performance in any of the following areas? (Check all that apply)

Amtrak/Freight Rail

Transit Rail

Transit Bus

Aviation

Waterways/Shipping

Passenger/ Auto Ferries

Hiking/Biking Trails (recreational)

Bike/Pedestrian (mobility)

Other areas: __________________

B6. How does your agency evaluate the outcomes of its transportation planning and programming processes? (Check all that apply)

The agency regularly monitors the effect of projects and strategies funded in the STIP/TIP

The agency reports on progress towards achieving performance targets

The agency applies the evaluation of investment effectiveness in future programming decisions

Congestion Management Program annual reporting

The Agency DOES NOT identify the outcomes they want from the transportation planning and programming process

B7. Using the scale below, to what degree does your agency collaborate and communicate with other planning organizations (the State DOT, MPO(s), Rural Transportation Planning Organization(s) (RTPO[s]), Tribal Organizations, operators of public transportation, and local agencies) on the use of performance measures and targets to align performance expectations? (Select one)

| Rating | Scale |
| --- | --- |
| ( ) | Nonexistent – State DOT and other planning organizations/agencies do not communicate effectively |
| ( ) | Ineffective – State DOT and other planning organizations/agencies communicate but are not aware of each other's view of performance expectations for the region |
| ( ) | Somewhat Effective – State DOT and other planning organizations/agencies share their respective performance expectations but do not collaborate on a shared vision for the region |
| ( ) | Highly Effective – State DOT and other planning organizations/agencies collaboratively work together to program investments that support generally shared performance expectations. Absent agreements, each implements programs based on shared expectations. |
| ( ) | Very Highly Effective – State DOT and other planning organizations/agencies work together in a collaborative manner to decide on performance expectations for a region. All agree to program investments in support of this shared expectation of performance |

B8. Related to your answer provided in the previous question, does your agency interact or collaborate on any of the following specific topics:

Data sharing;

Data management;

Setting goals/objectives/performance measures;

Target-setting;

Project selection.

Other __________.

B9. Have you realized any benefits (quantitative or qualitative) in using performance-based planning and programming principles? (Please check all that apply)

Not Applicable

The planning process is improved

The planning process has a greater influence on decisions

New or enhanced focus on measurable results

Improved results – “better decisions”

Improved transparency and credibility of process

Improved understanding of process by partners, public, and stakeholders

Greater acceptance of plans and projects by partners, public, and stakeholders

Other (describe):_______________________________________

B10. On a scale of 1 to 5 how effective has the PBPP process been as a tool for:*

| PBPP process | 1-Nonexistent | 2-Ineffective | 3-Somewhat Effective | 4-Highly Effective | 5-Very Highly Effective |
| --- | --- | --- | --- | --- | --- |
| Guiding transportation investments | ( ) | ( ) | ( ) | ( ) | ( ) |
| Encouraging interaction between the MPO and state DOT, public transit, and other partner agencies | ( ) | ( ) | ( ) | ( ) | ( ) |
| Setting direction in the LRTP (strategic direction, goals, priorities, policies) | ( ) | ( ) | ( ) | ( ) | ( ) |
| Selecting or screening alternative projects for STIP/TIP | ( ) | ( ) | ( ) | ( ) | ( ) |
| Communicating results | ( ) | ( ) | ( ) | ( ) | ( ) |
| Evaluating the results of policies, investments, and strategies | ( ) | ( ) | ( ) | ( ) | ( ) |
| Improving understanding and support for the planning process | ( ) | ( ) | ( ) | ( ) | ( ) |
| Encouraging participation by stakeholders and the public in the planning process | ( ) | ( ) | ( ) | ( ) | ( ) |

C. Highway Asset Management

FHWA defines asset management as a strategic and systematic process of operating, maintaining, and improving physical assets, with a focus on engineering and economic analysis based upon quality information, to identify a structured sequence of maintenance, preservation, repair, rehabilitation, and replacement actions that will achieve and sustain a desired state of good repair over the lifecycle of the assets at minimum practicable cost.

Each State is required to develop a risk-based asset management plan for the National Highway System (NHS) to improve or preserve the condition of the assets and the performance of the system. While the required risk-based asset management plan specifies pavements and bridges on the NHS in 23 U.S.C. § 119(e)(4), 23 U.S.C. § 119(e)(3) (MAP-21 § 1106) requires USDOT to encourage States to include all infrastructure assets within the highway right-of-way in the asset management plan. Examples of such infrastructure assets include pavement markings, culverts, guardrail, signs, traffic signals, lighting, Intelligent Transportation Systems (ITS) infrastructure, and rest areas.

C1. On a scale of 1 to 5, please indicate to what extent your agency has documented the following Asset Management Plan activities (1-Not at all Documented to 5-Completely Documented):

| Asset Management activities | 1-Not at all Documented | 2-Beginning to Document | 3-Somewhat Documented | 4-Significantly Documented | 5-Completely Documented |
| --- | --- | --- | --- | --- | --- |
| Summary listing of the pavement and bridge assets on the National Highway System in the State, including a description of the condition of those assets | ( ) | ( ) | ( ) | ( ) | ( ) |
| Asset management objectives and measures | ( ) | ( ) | ( ) | ( ) | ( ) |
| Performance gap identification | ( ) | ( ) | ( ) | ( ) | ( ) |
| Lifecycle cost and risk management analysis | ( ) | ( ) | ( ) | ( ) | ( ) |
| A financial plan | ( ) | ( ) | ( ) | ( ) | ( ) |
| Investment strategies | ( ) | ( ) | ( ) | ( ) | ( ) |

C2. On a scale of 1 to 5, please indicate the degree to which your planning practices align with each of the following Asset Management practices in your agency (1-No Alignment to 5-Strong Alignment):

| Asset Management practices | 1-No Alignment with planning practices | 2-Minor Alignment with planning practices | 3-Some Alignment with planning practices | 4-Moderate Alignment with planning practices | 5-Strong Alignment with planning practices |
| --- | --- | --- | --- | --- | --- |
| Long-range plan includes an evaluation of capital, operational, and modal alternatives to meet system deficiencies. | ( ) | ( ) | ( ) | ( ) | ( ) |
| Capital versus maintenance expenditure tradeoffs are explicitly considered in the preservation of assets like pavements and bridges. | ( ) | ( ) | ( ) | ( ) | ( ) |
| Capital versus operations tradeoffs are explicitly considered in seeking to improve traffic movement. | ( ) | ( ) | ( ) | ( ) | ( ) |
| Long-range plan provides clear and specific guidance for the capital program development process. | ( ) | ( ) | ( ) | ( ) | ( ) |
| Criteria used to set program priorities, select projects, and allocate resources are consistent with stated policy objectives and defined performance measures. | ( ) | ( ) | ( ) | ( ) | ( ) |
| Preservation program budget is based upon analyses of least-life-cycle cost rather than exclusive reliance on worst-first strategies. | ( ) | ( ) | ( ) | ( ) | ( ) |
| A maintenance quality assurance study has been implemented to define levels of service for transportation system maintenance. | ( ) | ( ) | ( ) | ( ) | ( ) |

C3. Please check the management systems your agency/organization currently has, along with the status of each system within an overall Asset Management framework (please check all that apply):

| Stand-alone management system | Integrated within Asset Management framework |
| --- | --- |
| [ ] Pavement (PMS) | ( ) Yes ( ) No ( ) Planned ( ) Don't know |
| [ ] Bridge (BMS) | ( ) Yes ( ) No ( ) Planned ( ) Don't know |
| [ ] Highway Safety (SMS) | ( ) Yes ( ) No ( ) Planned ( ) Don't know |
| [ ] Traffic Congestion (CMS) | ( ) Yes ( ) No ( ) Planned ( ) Don't know |
| [ ] Public Transportation Facilities and Equipment (PTMS) | ( ) Yes ( ) No ( ) Planned ( ) Don't know |
| [ ] Intermodal Transportation Facilities and Systems (ITMS) | ( ) Yes ( ) No ( ) Planned ( ) Don't know |
| [ ] Maintenance Management (MMS) | ( ) Yes ( ) No ( ) Planned ( ) Don't know |

Please list any other management systems used by your agency/organization: __________

C4. On a scale of 1 to 5, how effectively has your agency used your Asset Management practices to guide transportation investments (1-Nonexistent to 5-Very Highly Effective)?

Not Applicable

1: Nonexistent

2: Ineffective

3: Somewhat Effective

4: Highly effective

5: Very Highly Effective

C5. On a scale of 1 to 5, how effectively has your agency used your Asset Management practices to improve data collection (1-Nonexistent to 5-Very Highly Effective)?

| AM process | Not Applicable | 1-Nonexistent | 2-Ineffective | 3-Somewhat Effective | 4-Highly Effective | 5-Very Highly Effective |
| --- | --- | --- | --- | --- | --- | --- |
| Completing and keeping an up-to-date inventory of your major assets. | ( ) | ( ) | ( ) | ( ) | ( ) | ( ) |
| Collecting information on the condition of your assets. | ( ) | ( ) | ( ) | ( ) | ( ) | ( ) |
| Collecting information on the performance of your assets (e.g. serviceability, ride quality, capacity, operations, and safety improvements). | ( ) | ( ) | ( ) | ( ) | ( ) | ( ) |
| Improving the efficiency of data collection (e.g., through sampling techniques, use of automated equipment, other methods appropriate to our transportation system). | ( ) | ( ) | ( ) | ( ) | ( ) | ( ) |
| Establishing standards for geographic referencing that allow us to bring together information for different asset classes. | ( ) | ( ) | ( ) | ( ) | ( ) | ( ) |

Sections D to J: Common and Specific Performance Area Questions

Common questions that will be asked for all 8 performance areas (including the transit supplementary questions) are grouped in this section. Questions in this section are also grouped thematically by the following subsections:

Staffing

Data & Analysis

Performance Measures

Target Setting

Programming

Monitoring & Reporting

In the administered online survey, each set of performance area questions is “self-contained” so that it can be delegated to the appropriate subject matter experts. While the questions can be delegated to subject matter experts, only the designated survey point of contact can submit responses to FHWA. Additionally, the final survey instrument will filter questions based on whether the respondent is a State DOT or an MPO.

TPM STAFFING

CommonQ1. On a scale of 1 to 5, rate the impact that implementing federal performance management requirements (either proposed or final) related to PERFORMANCE AREA X will have on staff resources (1-No Impact to 5-High Impact).

| 1-No Impact | 2-Minor Impact | 3-Some Impact | 4-Moderate Impact | 5-High Impact |
| --- | --- | --- | --- | --- |
| ( ) | ( ) | ( ) | ( ) | ( ) |

Please explain: ______________

DATA & ANALYSIS

CommonQ2. Rate the level of data availability and data quality to support performance management for PERFORMANCE AREA X with regard to the following items (scale of 1-available to 5-high support):

| | Data availability | Data quality |
| --- | --- | --- |
| Timeliness | 1 2 3 4 5 | 1 2 3 4 5 |
| Accuracy | 1 2 3 4 5 | 1 2 3 4 5 |
| Completeness | 1 2 3 4 5 | 1 2 3 4 5 |

CommonQ3. How do you obtain data relevant for PERFORMANCE AREA X performance management (select all that apply)?

| No data | Collect own data | Purchase data | Provided by 3rd party | Collaboration with Partner agency |
| --- | --- | --- | --- | --- |
| [ ] | [ ] | [ ] | [ ] | [ ] |

CommonQ4. Does your agency mostly outsource the analysis of data for PERFORMANCE AREA X?

Yes, my agency mostly outsources data analysis

No, my agency does not mostly outsource data analysis

Both, my agency uses a relatively equal share of in-house and outsourced data analysis

CommonQ5. With respect to your answer to the previous question, what criteria did your agency use to determine whether or not to outsource PERFORMANCE AREA X data analysis? (Select all that apply)

Cost‐effectiveness

Scope of data analysis requirements

Availability of qualified contractors

Capability of in‐house data analysis teams

Experiences of other agencies that have out‐sourced data analysis

Coordination with other agencies

Not applicable

Other (please specify): _________________________________________________

CommonQ7. For PERFORMANCE AREA X, for each of the performance management functions listed below please indicate: 1) your agency’s interest in reviewing available tools and resources and 2) on a scale of 1 to 5, your agency’s current capacity (availability of staff, skills, resources, and tools).

Function | Interest in reviewing Tools/resources (y/n) | Agency’s Current Capacity (1 low – 5 very high)

Collecting, processing, reviewing, and managing data | (y/n) | 1 2 3 4 5

Developing performance models and forecasting trends | (y/n) | 1 2 3 4 5

Assessing and developing system-wide targets | (y/n) | 1 2 3 4 5

Selecting and programming projects | (y/n) | 1 2 3 4 5

Monitoring and analysis of performance results | (y/n) | 1 2 3 4 5

CommonQ8. For PERFORMANCE AREA X, what specific limitations may constrain your agency’s capacity to conduct the functions listed in the previous question? (Check all that apply)

Available staff

Available data

Lack of staff skills

Funding

Limited time or resources for training

Availability of Final Rules

Available tools

Organizational structure

Political concerns/existing methods for project prioritization

All of the above

D. SPECIFIC TO SAFETY: DATA & ANALYSIS

D1. Typically how long does it take for crash data from all public roads to be entered into your statewide crash database?

Over 1 year

9 – 12 months

6 – 9 months

3 – 6 months

0 – 3 months

D2. Which agencies do you cooperate with to gather crash data (check all that apply)?

Counties

Cities

Federal agencies

Tribes

Other States

Other state agencies

Transit agencies

Other (please specify): _________________________________________________

D3. Does your agency collect and analyze data to assess overall program‐level benefits of the HSIP?

Yes

No

Not Sure

D4. To what extent does your agency have data on current or projected railroad traffic?

The State has extensive data on the current railroad traffic and extensive data on the projected railroad traffic.

The State has extensive data on the current railroad traffic, but little to no data on the projected railroad traffic.

The State has little to no data on the current railroad traffic, but extensive data on the projected railroad traffic.

The State has little to no data on the current railroad traffic and little to no data on the projected railroad traffic.

E. SPECIFIC TO BRIDGE: DATA & ANALYSIS

E1. Who conducts the National Bridge Inspection Standards safety inspections of non-State owned NHS bridges?

State

Owner Agency

Not Sure

E2. How does your agency handle the National Bridge Inspection Standards responsibilities for border bridges (bridges that cross State borders)?

Written agreement

Periodic meetings

Do Nothing

Not Sure

41 – 60%

61 – 80%

81 – 100%

F. SPECIFIC TO PAVEMENT: DATA & ANALYSIS

F1. Is pavement data currently collected in both directions?

Route Location | Yes, full extent | Yes, partial extent | No | Not sure

On Interstate Routes? | ( ) | ( ) | ( ) | ( )

On other Routes? | ( ) | ( ) | ( ) | ( )

F2. How often is pavement data collected on the National Highway System?

Annually

Biennially

Varies by data item

F3. Who acquires pavement data on non‐State owned NHS Routes?

State

Owner Agency

Don’t Know

G. SPECIFIC TO FREIGHT: DATA & ANALYSIS

G1. What data do you use or plan to use in the freight performance measurement and performance-based planning processes?

Probe data

NPMRDS

FAF

AADT/HPMS

Other (please specify): _________________________________________________

H. SPECIFIC TO CONGESTION/MOBILITY/SYSTEM PERFORMANCE: DATA & ANALYSIS

H1. Do you have any programs in place to count the number of pedestrians and cyclists that use your transportation system?

Yes

No

Not Sure

H2. What data do you use or plan to use in the Congestion/Mobility/System Performance measurement and performance-based planning processes?

Probe data

NPMRDS

FAF

AADT/HPMS

Other (please specify): _________________________________________________

J. SPECIFIC TO TRANSIT STATE OF GOOD REPAIR SUPPLEMENT: DATA AND ANALYSIS

J1. Do you have ready access to data to understand the physical condition of transit assets in your area? If yes, describe and explain.

Yes

No

J2. Does your agency collect data on the physical condition of transit assets outside the National Transit Database?

Yes, Annually

Yes, Biennially

Yes, ______________

No

PERFORMANCE MEASURES

CommonQ9. Are the PERFORMANCE AREA X measures used by your agency incorporated into the following activities?

Activity | Yes | No

Included in LRTP | ( ) | ( )

Prioritizing Projects | ( ) | ( )

Monitoring and Analysis | ( ) | ( )

Reporting | ( ) | ( )

CommonQ10. The AGENCY tracks leading PERFORMANCE AREA X indicators (leading indicators are metrics that often correlate to a change in performance before a trend can be detected using a performance measure) on a regular basis to assess progress in the achievement of longer term outcomes.

Strongly Disagree | Somewhat Disagree | Neutral | Somewhat Agree | Strongly Agree

( ) | ( ) | ( ) | ( ) | ( )

CommonQ11. When establishing your chosen PERFORMANCE AREA X performance measures, did current data availability factors influence what measures were established? If yes, please describe briefly if your agency is planning new measures in the future when data becomes more readily available.

Yes

No

Not Sure

G. SPECIFIC TO FREIGHT: PERFORMANCE MEASURES

G2. Does your freight performance measurement include truck parking?

Yes

No

Not Sure

G3. Have you developed freight performance measures in the following modes?*

Mode | Yes | No | Not sure

Highway | ( ) | ( ) | ( )

Rail | ( ) | ( ) | ( )

Marine | ( ) | ( ) | ( )

Air | ( ) | ( ) | ( )

H. SPECIFIC TO CONGESTION/MOBILITY/SYSTEM PERFORMANCE: PERFORMANCE MEASURES

H3. Which Congestion/Mobility/System Performance related performance measure areas does your agency track?

Congestion

Reliability

Delay

Incident management

Signal system

Other (please specify): _________________________________________________

TARGET SETTING

CommonQ11. On a scale of 1(low) to 5(high), when establishing targets for PERFORMANCE AREA X, what is the level of coordination with other entities in selecting targets?

Rating | Scale

( ) | Low – State DOT and other organizations/agencies have not previously coordinated to select targets for PERFORMANCE AREA X

( ) | Moderately low – State DOT and other organizations/agencies communicate to select targets for PERFORMANCE AREA X but have not coordinated to select targets that align with performance expectations for the region

( ) | Moderate – State DOT and other organizations/agencies coordinate to select targets for PERFORMANCE AREA X that align with performance expectations for the region

( ) | Moderately high – State DOT and other organizations/agencies impacted by PERFORMANCE AREA X work collaboratively to program investments that support selected performance targets and generally shared performance expectations. Absent agreements, each implements programs based on shared expectations.

( ) | High – State DOT and other organizations/agencies impacted by PERFORMANCE AREA X work together in a collaborative manner to select targets and decide on performance expectations for a region. All agree to program investments in support of this shared expectation of performance.

CommonQ12. Your agency has experience developing short-term, quantifiable PERFORMANCE AREA X performance targets that can be used to guide program investment decision making.

Strongly Disagree | Somewhat Disagree | Neutral | Somewhat Agree | Strongly Agree

( ) | ( ) | ( ) | ( ) | ( )

Please Explain_______

PLANNING AND PROGRAMMING

CommonQ13. Select your current capability to predict the outcome of PERFORMANCE AREA X programming decisions on the following scale:

1 – unable to predict outcomes | 2 – predictions based on historical trends | 3 – empirical based models | 4 – accurate data driven models

( ) | ( ) | ( ) | ( )

CommonQ14. Does your agency evaluate the before-and-after performance outcomes of completed PERFORMANCE AREA X projects?

Never | Rarely | Sometimes | Often | Always

( ) | ( ) | ( ) | ( ) | ( )

CommonQ15. Have you been able to successfully use a performance-based justification to acquire additional funds to support PERFORMANCE AREA X transportation needs? Please explain.

( ) No

( ) Yes

( ) Partially

D. SPECIFIC TO SAFETY: PLANNING AND PROGRAMMING

D7. What criteria are used to prioritize safety projects for programming and implementation? (Check all that apply)

Effectiveness assessment of similar program/strategy (e.g., HSIP evaluation affects future project selection)

Cost

Project readiness

SHSP

All crashes with no indication of severity

Only fatal crashes

Only fatal and serious injury crashes

All crashes with weighting to reflect severity

None

Other (please specify): _________________________________________________

D8. How effective is your agency at interacting and collaborating with the SHSO on HSIP efforts?

Rating | Scale

( ) | Low – State DOT and SHSO do not communicate effectively

( ) | Moderately low – State DOT and SHSO communicate but are not aware of each other's view of Safety performance expectations for the region

( ) | Moderate – State DOT and SHSO share their respective safety performance expectations but do not collaborate on a shared vision for the region

( ) | Moderately high – State DOT and SHSO work collaboratively to program investments that support generally shared Safety performance expectations. Absent agreements, each implements programs based on shared expectations.

( ) | High – State DOT and SHSO work together in a collaborative manner to decide on Safety performance expectations for a region. All agree to program investments in support of this shared expectation of performance.

E. SPECIFIC TO BRIDGE: PLANNING AND PROGRAMMING

E3. Describe the impact of the expansion of the National Highway System on the State agency bridge programs.

Massive – Major changes to funding and project prioritization efforts.

Significant – Changes to planning and management but little impact on funding.

Moderate – Minor adjustments to State programs; funding program essentially unchanged.

F. SPECIFIC TO PAVEMENT: PLANNING & PROGRAMMING

F4. What criteria are used to prioritize pavement projects for programming and implementation? (Check all that apply)

Greatest need of attention

Scheduled treatment interval

Single year prioritization

Multi-year prioritization

Incremental cost benefit

Other

F5. Describe the impact of the expansion of the National Highway System on the State agency pavement programs.

Massive – Major changes to funding and project prioritization efforts.

Significant – Changes to planning and management but little impact on funding.

Moderate – Minor adjustments to State programs; funding program essentially unchanged.

G. SPECIFIC TO FREIGHT: PLANNING AND PROGRAMMING

G4. Does your agency have a MAP‐21 compliant Statewide Freight Plan?

Yes

No

Not Sure

I. SPECIFIC TO ON-ROAD MOBILE SOURCE: PLANNING AND PROGRAMMING

I1. Do you currently or regularly develop quantitative emissions estimates for your CMAQ projects?

Yes

Sometimes

No

Not Sure

I2. How do you plan to transition to quantitative emissions estimates?

I am waiting for FHWA to develop a toolkit for estimating emissions

I have a contractor on board to help develop emissions estimates

My staff has the technical capabilities to develop quantitative estimates

I have no plan to transition from the current qualitative analyses

Other (please specify): _________________________________________________

I3. Some project types have historically never had a quantitative emissions estimate, such as public education, marketing, and operating assistance. How do you plan to quantify these benefits?

I am waiting for FHWA to tell me how to estimate emissions for these types of projects

I have a contractor on board to help develop emissions estimates for these types of projects

My staff has the technical capabilities to determine the best way to quantify emissions for these types of projects

I have no plan to start developing quantitative emissions estimates for these types of projects

I have no plan to transition from the current qualitative analyses

Other (please specify): _________________________________________________

I4. How do you capture benefits and report emissions benefits for a group of projects or bundle of projects? (Select the most applicable response)

I didn’t know we could group projects

Only report qualitative benefits

Based on some assumptions about co‐benefits from the group of projects

Other (please specify): _________________________________________________

MONITORING, ANALYSIS, AND REPORTING

CommonQ18. How are the PERFORMANCE AREA X performance results (outcomes, progress meeting targets, etc.) communicated?

Method | Internal | External

Management Meetings | ( ) | ( )

Quarterly Reports | ( ) | ( )

Dashboards | ( ) | ( )

Annual Reports | ( ) | ( )

Fact Sheets | ( ) | ( )

Action Plans | ( ) | ( )

Newsletters | ( ) | ( )

Other | ( ) | ( )

Outline of the National TPM Implementation Review Data Collection and Analysis Design

The primary goal of the National Transportation Performance Management (TPM) Implementation Review is to gather information about the application of performance management, performance-based planning and programming principles, and other MAP-21 performance provisions at State Departments of Transportation (State DOTs) and Metropolitan Planning Organizations (MPOs). This data collection effort helps identify training and capacity-building resources to support the implementation of TPM practices across the transportation industry. The data collection effort will be administered twice: first in 2016 and again in 2017, or in 2018 and later, so that progress in the development and application of TPM capabilities may be measured and additional capacity building tools can be created. As stated in the 60-day Federal Register Notice published 6/23/2015, the intention of the National TPM Implementation Review is to establish a baseline to assess:

The implementation of MAP–21 performance provisions and related TPM best practices; and

The effectiveness of performance-based planning and programming processes and transportation performance management.

The second National TPM Implementation Review will be conducted several years later and will be used to assess the progress of FHWA and its partners in addressing any gaps or issues identified during the first review. The findings from the first review will be used in a pair of statutory reports to Congress due in 2017 on the effectiveness of performance-based planning and programming processes and transportation performance management (23 U.S.C. 119, 134(l)(2)– 135(h)(2)). The findings from the second review will be used in a subsequent follow-up report. It is important to note that this is not a compliance review. The overall focus of the National TPM Implementation Review is on the TPM and performance-based planning processes used by State DOTs and MPOs, not the outcomes of those processes. 1

TPM implementation requires State DOTs and MPOs to collaborate with FHWA on the development of transportation performance measures related to national goals. The State DOTs and MPOs will then need to work with FHWA to operationalize these performance measures by developing performance targets and determining what constitutes significant progress. Transportation agencies will also be required to report on and explain performance results. Across all aspects of TPM, the State DOTs and MPOs will need to work collaboratively with each other and with FHWA, and they will need to collect, maintain, and manage the performance data.

The National TPM Implementation Review seeks to provide quantitative and coded qualitative data from open-ended questions that can be summarized to spur further discussion of the resource and guidance needs of transportation agencies. It is believed that State DOTs and MPOs have a general understanding of TPM practices and have begun implementing them, but it will be beneficial to have a better understanding of specific implementation capabilities, progress, challenges, and needs. The assessment will collect data from State DOT and MPO staff regarding:

Self-assessments of their capabilities to implement performance management practices;

Identification of key challenges of TPM implementation from the perspective of the Partner Organizations;

Assessment of interest in receiving or reviewing training, guidance resources, and implementation assistance;

Preferences among alternative means for providing capacity building and training; and

The analysis of the assessment results will provide quantitative assessments and comparative analyses of the:

Partner Organizations’ readiness to implement TPM;

Partner Organizations’ perceived usage of the performance-based planning and programming process and their perception of its effectiveness;

Gap analysis identifying disconnects between TPM principles and agency capabilities; and

Partner Organizations’ prioritization of potential capacity building and training efforts.

The following is an outline of the assessment data collection plan.

National TPM Implementation Participants

Survey Sampling: The assessment will be based on:

A census (100 percent sample) of 52 State Departments of Transportation (DOTs),

A census (100 percent sample) of urbanized areas from which metropolitan planning organizations (MPOs) will be drawn, and

Follow-up data collection with the same respondent organizations in 2017, or in 2018 and later.

No attempt will be made to draw inferences to any population other than the set of units that responded to the data collection effort.

State DOT Data Collection: As the assessment will seek to include all State DOTs, no formal sampling strategy will be required for this respondent group. A recent preliminary assessment of the state transportation agencies by FHWA had full participation, so we expect that we will have a high response rate of 80 percent or more. With a response rate of 80 percent (42 agencies), the 90 percent confidence level margin-of-error for population proportion estimates would be at most plus or minus 6 percent. With a response rate of 90 percent (47 agencies), the 90 percent confidence level margin-of-error for population proportion estimates would be less than plus or minus 4 percent. We believe this minimum response would adequately enable FHWA to identify and quantify state transportation agency levels of readiness, areas of concern, and training and resource needs.
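The margins of error quoted for the State DOT census are consistent with a worst-case proportion (p = 0.5) and a finite population correction for 52 agencies. The source does not state the formula that was used, so the following is only a minimal sketch under that assumption. The function name `margin_of_error` and the z-value of 1.645 for a 90 percent confidence level are illustrative choices, not part of the survey design.

```python
import math


def margin_of_error(n, population, z=1.645, p=0.5):
    """Margin of error for a proportion, with finite population correction.

    Assumptions (not stated in the source): respondents behave like a simple
    random sample of the population, z=1.645 corresponds to a 90 percent
    confidence level, and p=0.5 is the worst case.
    """
    standard_error = math.sqrt(p * (1 - p) / n)
    # The correction shrinks the error as respondents approach the full census.
    fpc = math.sqrt((population - n) / (population - 1))
    return z * standard_error * fpc


STATE_DOTS = 52  # census size given in the sampling plan

for rate in (0.80, 0.90):
    respondents = round(rate * STATE_DOTS)
    moe = margin_of_error(respondents, STATE_DOTS)
    print(f"{rate:.0%} response ({respondents} agencies): +/- {moe:.1%}")
```

Under these assumptions the 80 percent case (42 agencies) comes out just under 6 percent and the 90 percent case (47 agencies) under 4 percent, which matches the figures in the text.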

MPO Data Collection: As the assessment will seek to include all MPOs, no formal sampling strategy will be required for this respondent group. The MPOs will be sorted into urbanized area strata based on the represented metropolitan areas’ population, air quality characteristics (including ozone non-attainment), and planning organization representation. Since many regulatory requirement thresholds are related to area population and Environmental Protection Agency (EPA) air quality conformity assessments, these thresholds are likely to reflect differences in the surveyed agencies’ level of sophistication and exposure to performance management based planning concepts.

The urbanized area strata will include:

Stratum 1: Areas of more than one million population;

Stratum 2: Areas of less than one million population that have air quality non-attainment issues;

Stratum 3: Areas of between 200,000 and one million population that do not have air quality non-attainment issues;

Stratum 4: Areas represented by MPOs with populations of less than 200,000 that do not have air quality non-attainment issues.
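The four strata can be expressed as a simple classification rule. The sketch below is illustrative only: the function name `assign_stratum` and its arguments are hypothetical, and the treatment of areas at exactly 200,000 or exactly one million population is an assumption, since the stratum definitions leave those boundaries open.

```python
def assign_stratum(population, has_nonattainment):
    """Return the urbanized area stratum (1-4) for one region.

    Assumptions: an area of exactly 1,000,000 is treated as "less than one
    million" territory, and an area of exactly 200,000 falls in Stratum 3.
    """
    if population > 1_000_000:
        return 1  # large areas, regardless of air quality status
    if has_nonattainment:
        return 2  # under one million with non-attainment issues
    if population >= 200_000:
        return 3  # 200,000 to one million, no non-attainment issues
    return 4      # under 200,000, no non-attainment issues


# Hypothetical regions, not drawn from the Census, FHWA, or EPA lists.
examples = [
    ("Region A", 2_400_000, True),
    ("Region B", 650_000, True),
    ("Region C", 650_000, False),
    ("Region D", 90_000, False),
]
for name, population, nonattainment in examples:
    print(name, "-> Stratum", assign_stratum(population, nonattainment))
```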

The final strata will be refined through the combination of several available federal databases:

Census Bureau Urbanized Area List;

the MPO database maintained by FHWA; and

EPA Greenbook, which records air quality conformity issues by region.

Based on our preliminary processing of these sources, the populations of urbanized areas by strata are about the following:

Stratum 1 – 50 regions;

Stratum 2 – 63 regions;

Stratum 3 – 112 regions; and

Stratum 4 – 183 regions.

These population estimates will be reviewed and corrected to ensure that the assignment of regions by type is accurate. We propose to sort MPO participants as follows:

Stratum 1 – include all 50 regions

Stratum 2 – include all 63 regions

Stratum 3 – include all 112 regions

Stratum 4 – include all 183 regions

The MPOs from the selected regions in strata 1 to 4 will be contacted to complete the survey. A recent web-based survey of Census data specialists at MPOs conducted for AASHTO yielded a response rate of 27 percent. Another recent survey of MPOs conducted for FHWA regarding the organizational structure of the agencies had a response rate of 36 percent. The National TPM Implementation Assessment is expected to have a comparatively strong response rate because of the importance of the data collection topic to the mission of the MPOs and because of the full range of survey design measures, described in later sections, that will be employed to minimize non-response bias. Most notably:

The survey topic will be of greater importance to the target respondents, the agencies’ Executive Directors, as the topic will affect many of the agencies’ business practices;

The survey invitation will come from a more prominent sender from FHWA;

We will seek to have pre-notification, and hopefully endorsement, of the data collection effort be provided by national planning organizations, such as NARC and AMPO, and by State DOTs;

The survey pre-notification and follow-up protocols will be robust and will include both email and telephone contact.

Because of these survey data collection features, we expect that the MPO survey response rate will be in the 35 to 45 percent range. For planning, we assume a response rate of 35 percent, though we will seek to achieve the highest possible rate. The 35 percent rate would yield about 117 valid responses. No attempt will be made to draw inferences to any population other than the set of units that responded to the data collection effort.

At this level of return, the 90 percent confidence level margin-of-error for population proportion estimates would be at most plus or minus 6 percent. We believe this minimum response would adequately enable FHWA to identify and quantify MPO levels of readiness, areas of concern, and training and resource needs.

Follow-Up Data Collection: A follow-up survey of the same partner organizations will be conducted in 2017 or in 2018 and later. Respondents from the initial State DOT and MPO assessments will be re-contacted for the follow-up assessment. When organizations that complete the initial assessment do not respond to the follow-up assessment, we will seek to identify and recruit similar organizations that did not participate in the initial data collection (either because they were not sampled or because they refused to be included in the initial effort) to participate in the follow-up. The resulting follow-up assessment sample will allow for longitudinal analyses (with attrition replacement).

Respondent Selection within Partner Organizations: One of the important challenges of the National TPM Implementation Assessment will be to identify the best people within the agencies from whom to collect information. The initial State DOT contacts will be the individuals previously identified by FHWA for the earlier initial assessments.

The default MPO principal points-of-contact will be the Executive Directors. We will also ask for input from AMPO.

Each of the partner organization assessments will be seeking information that may reside with multiple staff members at the State DOTs and MPOs. Consequently, a survey strategy that involves multiple points of contact will be required. The approach envisioned is to send the main survey invitation to the key points-of-contact, described above, and allow them to complete the subsections of the survey themselves or to identify others in the Agency or Department that should complete the program topic area specific subsections of the survey.

Advantages of this approach:

More likely to capture data from the staff members that are knowledgeable of specific Agency or Department capabilities

Multiple perspectives from each Agency or Department can better identify specific issues and concerns

Increased interest in the TPM implementation and in the Assessment effort throughout the Agencies and Departments

Disadvantages of this approach:

Potential biases may be introduced by letting the primary respondents select the subsection respondents

Multiple perspectives from each Agency or Department could be contradictory

Potential difficulty in gaining perspectives on prioritization between different roles and responsibilities to implement TPM requirements within program areas

In our view, the benefit of reaching the most knowledgeable staff outweighs any potential biases introduced by having the main respondents select the subsection respondents. The multiple perspective approach also reflects the fact that TPM touches on many disciplines within an Agency or State DOT. To address prioritization across the many roles and responsibilities associated with TPM requirements within the system performance areas, the survey will include general prioritization questions for the main respondent to answer, and more specific subsection questions for other sub-respondents.

National TPM Implementation Assessment Process

The data collection effort will consist of the following steps:

State DOT Assessment:

FHWA Office of TPM and Division Office staff will alert State DOT Point of Contact (POC) that a web-based survey is being developed that will help with determining needs and priorities for TPM training, guidance resources, and technical assistance

The FHWA will develop an invitation email with a link to the State DOT survey. The FHWA TPM Director will send the email invitation with a link to the main survey to the State DOT POCs

If no response is received after seven days, an automated reminder email invitation with a link to the survey will be sent to the State DOT contacts

After seven more days, a second automated reminder email invitation with a link to the survey will be sent to non-respondents

If still no response is received, the project team will place a telephone call reminder asking the State DOT contact to either complete the web-based survey or to set up an appointment to complete it by phone

As part of the main survey, the State DOT POC will be given the option to identify the best person within their agencies to complete each of the subsections of the survey, which will be based on the anticipated State DOT’s TPM roles

The survey software will then automatically email the referenced people invitations to complete surveys with the identified survey subsections

The same follow-up protocols will be followed for the subsection survey respondents as for the main surveys

Only the single State DOT POC can review, edit, and submit final approved responses to FHWA.
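The contact protocol above amounts to a small escalation schedule keyed to the number of days since the invitation. The sketch below illustrates it with hypothetical names (`contact_step` and the day constants). The two seven-day intervals come from the steps above; the timing of the telephone reminder is not specified in the protocol, so the 21-day threshold is purely an assumption.

```python
from datetime import date

FIRST_REMINDER_DAY = 7    # "after seven days"
SECOND_REMINDER_DAY = 14  # "after seven more days"
PHONE_REMINDER_DAY = 21   # assumption: the protocol does not give this interval


def contact_step(invitation_date, today, responded):
    """Return the most recent contact step that applies to a point of contact."""
    if responded:
        return None  # no further contact needed
    days_elapsed = (today - invitation_date).days
    if days_elapsed >= PHONE_REMINDER_DAY:
        return "telephone reminder"
    if days_elapsed >= SECOND_REMINDER_DAY:
        return "second automated reminder email"
    if days_elapsed >= FIRST_REMINDER_DAY:
        return "first automated reminder email"
    return "invitation email"


# Hypothetical dates, for illustration only.
sent = date(2016, 3, 1)
print(contact_step(sent, date(2016, 3, 9), responded=False))
print(contact_step(sent, date(2016, 3, 16), responded=False))
print(contact_step(sent, date(2016, 3, 16), responded=True))
```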

MPO Assessment:

FHWA Office of TPM and Division Office staff will alert the MPO POCs of an upcoming web-based assessment. If an MPO contact cannot be identified, the MPO Executive Director will be the point-of-contact

The FHWA will develop invitation emails with links to the MPO survey. The FHWA OPM Director will send the email invitation with a link to the surveys to the MPO contacts

If no response is received after seven days, an automated reminder email invitation with a link to the survey will be sent to the MPO contacts

After seven more days, a second automated reminder email invitation with a link to the survey will be sent to non-respondents

As part of the main surveys, the MPO contacts will be given the option to identify the best person within their agencies to complete each of the subsections of the survey, which will be based on their agencies’ anticipated TPM roles

The survey software will then automatically email the referenced people invitations to complete surveys with the identified survey subsections

The same follow-up protocols will be followed for the subsection survey respondents as for the main surveys

Only the single MPO POC can delegate, review, edit, and submit final approved responses to FHWA.

State DOT and MPO Assessment Results Analyses & Report:

Responses will be monitored throughout the data collection process to identify any issues as promptly as possible and to track data collection progress

Upon completion of the web survey data collection, we will code open-ended question responses and identify any responses that require telephone follow-up clarification

The first output of the readiness assessment effort will be topline tabulations and cross-tabulations of the web survey questions

A report of the assessment results will then be prepared for review and approval by FHWA. The report shall include detailed quantitative and qualitative analysis of the survey results

The raw assessment data will also be submitted to FHWA in a simple and appropriately formatted Excel workbook and other appropriate formats.
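As an illustration of what topline tabulations and cross-tabulations involve, the sketch below counts answers to one closed-ended question overall and by agency type. The records, field names, and helper functions (`topline`, `crosstab`) are hypothetical; the actual analysis would run on the exported survey data.

```python
from collections import Counter

# Hypothetical coded responses; field names are illustrative only.
responses = [
    {"agency_type": "State DOT", "before_after_evaluation": "Often"},
    {"agency_type": "State DOT", "before_after_evaluation": "Sometimes"},
    {"agency_type": "MPO", "before_after_evaluation": "Sometimes"},
    {"agency_type": "MPO", "before_after_evaluation": "Rarely"},
    {"agency_type": "MPO", "before_after_evaluation": "Sometimes"},
]


def topline(records, question):
    """Count how many respondents chose each answer to one question."""
    return Counter(record[question] for record in records)


def crosstab(records, row_field, column_field):
    """Count respondents for each (row value, column value) pair."""
    return Counter((record[row_field], record[column_field]) for record in records)


print(topline(responses, "before_after_evaluation"))
for cell, count in sorted(crosstab(responses, "agency_type", "before_after_evaluation").items()):
    print(cell, count)
```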

Follow-up State DOT and MPO Assessments:

FHWA Office of TPM and Division Office staff will alert the State DOT and MPO respondents from the initial assessments of the upcoming web-based follow-up assessments.

The project team will develop invitation emails with links to the State DOT and MPO follow-up assessments. The FHWA OPM Director will send the email invitation with a link to the follow-up assessments to the State DOT and MPO contacts

If no response is received after seven days, an automated reminder email invitation with a link to the survey will be sent to the State DOT and MPO contacts

After seven more days, a second automated reminder email invitation with a link to the survey will be sent to non-respondents

If still no response is received, the project team will place a telephone call reminder asking the contact to either complete the web-based survey or to set up an appointment to complete it by phone

As for the initial assessments, the State DOT and MPO contacts will be given the option to identify the best person within their agencies to complete each of the subsections of the survey, which will be based on their agencies’ anticipated TPM roles

The survey software will then automatically email the referenced people invitations to complete surveys with the identified survey subsections

The same follow-up protocols will be followed for the subsection survey respondents as for the main surveys

Follow-up State DOT and MPO Assessment Analysis & Report:

Responses will be monitored throughout the data collection process to identify any issues as promptly as possible and to track data collection progress

Upon completion of the web survey data collection, we will code open-ended question responses and identify any responses that require telephone follow-up clarification

The first output of the readiness assessment effort will be topline tabulations and cross-tabulations of the follow-up assessment web survey questions

In addition, a comparative analysis of the initial assessment and follow-up assessment results will be developed

A report of the follow-up assessment results will then be prepared for review and approval by FHWA. The report shall include detailed quantitative and qualitative analysis of the survey results.

The raw follow-up assessment data will also be submitted to FHWA in a simple Excel workbook.
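One simple form the comparative analysis could take is the average change in a 1 to 5 rating among agencies that answered in both rounds. The sketch below assumes that approach; the function `mean_change`, the agency labels, and the ratings are all hypothetical, and agencies that appear in only one round (including attrition replacements) are left out of the paired comparison.

```python
def mean_change(initial, follow_up):
    """Average rating change among agencies present in both rounds.

    Returns None when no agency answered both the initial and the
    follow-up assessment.
    """
    paired = [agency for agency in initial if agency in follow_up]
    if not paired:
        return None
    return sum(follow_up[agency] - initial[agency] for agency in paired) / len(paired)


# Hypothetical capacity ratings on the 1-5 scale.
initial_ratings = {"Agency A": 2, "Agency B": 3, "Agency C": 4}
follow_up_ratings = {"Agency A": 3, "Agency B": 3, "Agency D": 5}

print(mean_change(initial_ratings, follow_up_ratings))
```

Here only Agency A and Agency B are paired, so the result reflects their two changes (+1 and 0).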

Selection of data collection mode

The National TPM Implementation Assessment efforts lend themselves to a web-based survey approach with in-person follow-up because:

the survey audiences are well-connected to the Internet and reachable via email,

the objective of the assessments is to collect largely quantitative data, which leads to the use of primarily closed-ended question types that are well suited to web surveys,

data consistency checks can be performed as the data are collected, rather than in a separate post-survey cleaning task, and

although the assessment will not have a large sample size, the multiple point-of-contact survey data collection protocol will require extra care that can be better managed through an online approach.

Selection of survey data collection software

The proposed survey software platform is Survey Gizmo.

Specific advantages of this platform compared to other online survey data collection options:

Wider range of question types than most online survey options, including group questions, matrix questions, and experimental design choice exercises

Custom scripting capabilities

Flexible page and question logic and skipping

Style themes by device type

Email campaign tools

Response tracking, reporting, and multiple data export formats (CSV, Excel, SPSS, etc.)

Greater range of respondent access controls than other online products

Allowance of save-and-continue

Duplicate protection

Anonymous responses

Quota setting

Restrictions on going backward

Section navigation

Greater range of administrator roles and collaboration features than other products

The Section Navigator is particularly critical for the Partner Organization assessment because it will enable the primary points-of-contact to separate the assessment into sections, making it easy for different respondents to complete different parts without interrupting or overwriting one another. Simply stated, the Section Navigator enables one Partner Organization's assessment to be completed by multiple people. For example:

Source: Survey Gizmo documentation, 2014.

An example of a recent survey conducted in Survey Gizmo:

http://www.surveygizmo.com/s3/1775738/AASHTO-CTPP-Survey-a

National TPM Implementation Assessment and Follow-up Assessment Content

The initial and follow-up assessments will include questions about TPM in general, performance-based planning and programming (PBPP), and Asset Management. In addition, a set of questions related to data, measures, targets, programming, and reporting will be asked for six performance areas (safety, bridge, pavement, freight, congestion/mobility/system performance, and on-road mobile source emissions). As warranted by each performance area, additional questions will be included. Questions about capacity building needs will be included in the general TPM section, the PBPP section, and the performance area sections.

Assessment questions are based on:

Draft survey questions developed by FHWA staff

Comments from FHWA staff on PBPP

Comments from FHWA staff on Asset Management

Comments from FHWA staff on Safety

Comments from FHWA staff on Bridge

Comments from FHWA staff on Congestion/Mobility/System Performance

Comments received from external stakeholders via federal register notices and information sessions

The Assessment and follow-up Assessment will include:

Scale questions regarding State DOT and MPO levels of preparedness, relative importance, and challenges with implementing TPM.

Scale questions regarding State DOT and MPO levels of preparedness, relative importance, and challenges with the implementation of PBPP

Scale questions regarding staffing, levels of preparedness, relative importance, and challenges with implementing TPM practices for specific performance areas.

Open-ended questions regarding the need for training, guidance resources, and technical assistance, for example: “What specific training, guidance resources, and technical assistance activities would benefit your agency the most?”

Prioritization of general technical assistance activities.

Given the estimated length of the assessment, the number of open-ended questions will be kept as low as possible.

The web survey instruments for the assessments are envisioned to consist of:

A main survey directed at the principal contacts at the State DOTs and MPOs regarding TPM in general

A survey section dedicated to PBPP

A survey section dedicated to Asset Management

Sub-sections based on six performance areas:

Safety,

Bridge,

Pavement,

Freight,

Congestion/Mobility/System Performance and

On-road mobile source emissions.

Survey Question Construction

The development of the survey instrument will be an interactive process, beginning with FHWA review and editing of the data elements listed above. As data elements are settled, specific question wording will be developed. Each question and associated response categories will be evaluated along the following dimensions:

Lack of focus

Bias

Fatigue

Miscommunication

Bias limitation and detection

It will be important to limit the amount of time respondents need to complete the National TPM Implementation Assessment. Fatigue and loss of interest affect survey completion rates, data quality, and the completeness and thoughtfulness of open-ended responses. We will seek to limit the entire survey process to no more than 22 burden hours. The burden hours estimate acknowledges and accounts for the time often needed to collect information; coordinate and input responses; and review and approve final responses.

Where possible, response category orders will be randomized to limit bias.

Survey page timers (not visible to respondents) will be used to identify potential understanding problems (unusually long page dwell times) and potential loss-of-interest problems (unusually short page dwell times)
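As an illustration of how page-timer data could be screened, the following minimal sketch flags dwell times on a single page that are unusually short or long relative to the median for that page. The function name, the ratio thresholds, and the sample timings are hypothetical assumptions for illustration. They are not part of the assessment design or of the survey software.

```python
from statistics import median

def flag_dwell_times(seconds_by_respondent, short_ratio=0.25, long_ratio=4.0):
    """Flag respondents whose dwell time on one page is far from the median.

    The ratio thresholds are illustrative assumptions, not project values.
    """
    typical = median(seconds_by_respondent.values())
    flags = {}
    for respondent, seconds in seconds_by_respondent.items():
        if seconds < short_ratio * typical:
            flags[respondent] = "short"  # possible loss of interest
        elif seconds > long_ratio * typical:
            flags[respondent] = "long"   # possible comprehension problem
    return flags

# Hypothetical dwell times (in seconds) on a single survey page.
page_times = {"r1": 60, "r2": 75, "r3": 8, "r4": 70, "r5": 400}
print(flag_dwell_times(page_times))
```

In practice the thresholds would need to be set per page, because pages that require gathering agency data can legitimately take much longer than others.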

Testing the Draft Survey

Survey instrument diagnostics: Survey software includes built-in capabilities to evaluate the web-based survey instrument:

Fatigue / survey timing scores

Language and graphics accessibility scores

Generation of survey test data: Once the survey is drafted, we will generate hypothetical synthetic output datasets. This will enable us to correct response category problems and to ensure that the output data will support the tabulations and analyses we expect to perform on the actual data set.
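A minimal sketch of how synthetic test data might be generated and tabulated is shown below. The question identifiers and response categories are hypothetical placeholders, and random sampling is only one assumed way to produce test records. The survey software may offer its own test-data facility.

```python
import random
from collections import Counter

# Hypothetical question definitions: question id -> allowed response categories.
QUESTIONS = {
    "tpm_preparedness": ["1", "2", "3", "4", "5"],
    "pbpp_importance": ["Low", "Medium", "High"],
}

def generate_synthetic_responses(n, seed=0):
    """Create n fake survey records by sampling each question's categories."""
    rng = random.Random(seed)  # fixed seed so test runs are repeatable
    return [
        {question: rng.choice(categories) for question, categories in QUESTIONS.items()}
        for _ in range(n)
    ]

def tabulate(rows, question):
    """Count responses per category, keeping categories that received no answers."""
    counts = Counter(row[question] for row in rows)
    return {category: counts.get(category, 0) for category in QUESTIONS[question]}

rows = generate_synthetic_responses(200)
print(tabulate(rows, "pbpp_importance"))
```

Running the planned tabulations against such records helps confirm that every response category appears in the output and that the export layout supports the intended analyses.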

Office pretest: Prior to engaging the Partner Organizations, we will generate an email invitation link to a test survey and distribute it to Spy Pond Partners and FHWA staff who are knowledgeable about the survey topics but were not involved in the survey development. We will seek their input on the survey questions and identify potential improvements to the survey.

Field pretest: Because the National TPM Implementation Assessment will be distributed to all State DOTs and most MPOs, a full dry-run survey field pretest cannot be used.

Instead, we will schedule the assessments for about five of the FHWA Partner agencies (State DOTs and/or MPOs) to be delivered earlier than the rest. We will review the results of the early assessments as they are completed to evaluate comprehension and cooperation levels. We will contact early respondents by phone to ask whether they encountered any specific issues that could be fixed. We will make necessary changes for the full assessment release and, if necessary, re-contact early respondents to collect any data elements that were not in the early survey.

Analysis of Results

Data review

As the data are collected, we will review responses for validity:

Survey response patterns (such as straightlining)

Page completion times

Completion of closed-ended and open-ended survey responses

Internal consistency checks

Data outlier review
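Two of the review checks listed above, response patterns and response completion, can be sketched as follows. This is an illustrative example only. The function names, the minimum item count, and the sample ratings are hypothetical assumptions, and unanswered items are assumed to be recorded as None.

```python
def is_straightlined(ratings, min_items=4):
    """Return True when every answered item in a rating grid has the same value.

    Grids with fewer than min_items answers are not flagged, because a short
    run of identical answers is weak evidence of straightlining.
    """
    answered = [r for r in ratings if r is not None]
    return len(answered) >= min_items and len(set(answered)) == 1

def completion_rate(ratings):
    """Share of items in the grid that received an answer."""
    if not ratings:
        return 0.0
    return sum(r is not None for r in ratings) / len(ratings)

# Hypothetical 5-item scale grids for three respondents.
grids = {
    "agency_a": [3, 3, 3, 3, 3],
    "agency_b": [4, 2, 5, 3, None],
    "agency_c": [5, 5, None, None, None],
}
for agency, ratings in grids.items():
    print(agency, is_straightlined(ratings), completion_rate(ratings))
```

A flagged grid is a prompt for manual review rather than proof of a problem, since an agency may genuinely rate every item the same.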

Tabulations

Topline results

Cross-tabulations

Cluster analysis to group partner organizations by similarities, if feasible
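For illustration, a cross-tabulation of the kind listed above can be computed as in the sketch below. The field names and sample records are hypothetical, and this is an assumed minimal approach, not the tabulation tooling the project will use.

```python
from collections import Counter

def cross_tabulate(rows, row_key, col_key):
    """Count records for each combination of two categorical fields."""
    counts = Counter((r[row_key], r[col_key]) for r in rows)
    row_values = sorted({r[row_key] for r in rows})
    col_values = sorted({r[col_key] for r in rows})
    # Counter returns 0 for combinations that never occur.
    return {rv: {cv: counts[(rv, cv)] for cv in col_values} for rv in row_values}

# Hypothetical responses: agency type versus self-rated preparedness.
responses = [
    {"agency_type": "State DOT", "preparedness": "High"},
    {"agency_type": "State DOT", "preparedness": "Low"},
    {"agency_type": "MPO", "preparedness": "High"},
    {"agency_type": "MPO", "preparedness": "High"},
    {"agency_type": "MPO", "preparedness": "Low"},
]
table = cross_tabulate(responses, "agency_type", "preparedness")
for agency_type, row in table.items():
    print(agency_type, row)
```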

Analyses

MaxDiff priority measurement

Gap analysis (training needs versus capabilities)

Open response coding

(Follow-up assessment only) Longitudinal (before-after) comparisons of initial assessments and follow-up assessments

The MaxDiff priority measurement approach is a discrete choice data collection and analysis method in which respondents are asked to select the most important and least important priorities among several experimentally designed lists. The respondent selections will be used to model the relative prioritization of roles and responsibilities, as well as potential capacity building strategies. More direct rating scale questions appeal to respondents because they are easily understood, but the ratings are commonly affected by response effects, such as respondents scoring many potential responses as the highest priority. In addition, the use of scales can vary from person to person. Consequently, relying only on scale questions can be problematic. Choice exercises, such as MaxDiff, help to alleviate many of the problems of scale questions.
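The idea behind MaxDiff scoring can be illustrated with a simple count-based summary: for each item, the number of times it was chosen as most important minus the number of times it was chosen as least important, divided by the number of times it was shown. This is a descriptive sketch under stated assumptions, not the choice model the analysis will use. The item names and the task data are hypothetical.

```python
from collections import Counter

def maxdiff_count_scores(tasks):
    """Compute (best - worst) / times shown for each item.

    tasks is a list of (shown_items, best_item, worst_item) tuples,
    one per choice task answered by a respondent.
    """
    shown, best, worst = Counter(), Counter(), Counter()
    for items, best_item, worst_item in tasks:
        shown.update(items)
        best[best_item] += 1
        worst[worst_item] += 1
    return {item: (best[item] - worst[item]) / shown[item] for item in shown}

# Hypothetical choice tasks over four capacity building options.
options = ["training", "guidance", "peer exchange", "tools"]
tasks = [
    (options, "training", "tools"),
    (options, "guidance", "tools"),
    (options, "training", "peer exchange"),
]
scores = maxdiff_count_scores(tasks)
ranking = sorted(scores, key=scores.get, reverse=True)
print(ranking)
```

Scores range from -1 (always chosen as least important) to 1 (always chosen as most important). A model-based analysis would normally replace these counts, but they provide a quick check on the collected choices.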

Survey Data Files and Tabulation