UoF Supporting Statement Part B 082218_PostPilot Final


National Use of Force Data Collection

OMB: 1110-0071

B. Statistical Methods

1. Universe and Respondent Selection

Respondents to the National UoF Data Collection include law enforcement agencies which employ sworn officers that meet the definition as set forth by the Law Enforcement Officers Killed and Assaulted (LEOKA) Program. The LEOKA definition and additional criteria are as follows:

All local, county, state, tribal, and federal law enforcement officers (such as municipal officers, county police officers, constables, state police, highway patrol officers, sheriffs, their deputies, federal law enforcement officers, marshals, special agents, etc.) who are sworn by their respective government authorities to uphold the law and to safeguard the rights, lives, and property of American citizens. They must have full arrest powers and be members of a public governmental law enforcement agency, paid from government funds set aside specifically for payment to sworn police law enforcement organized for the purposes of keeping order and for preventing and detecting crimes, and apprehending those responsible.

General Criteria

The data collected by the Federal Bureau of Investigation’s (FBI’s) LEOKA Program pertain to felonious deaths, accidental deaths, and assaults of duly sworn city, university and college, county, state, tribal, and federal law enforcement officers who, at the time of the incident, met the following criteria. These law enforcement officers:

Wore/carried a badge (ordinarily),

Carried a firearm (ordinarily),

Were duly sworn and had full arrest powers,

Were members of a public governmental law enforcement agency and were paid from government funds set aside specifically for payment to sworn law enforcement, and

Were acting in an official capacity, whether on or off duty, at the time of the incident.

Exception to the above-listed criteria

Beginning January 1, 2015, the LEOKA Program effected an exception to its collection criteria to include the data of individuals who are killed or assaulted while serving as a law enforcement officer at the request of a law enforcement agency whose officers meet the current collection criteria. (Special circumstances are reviewed by LEOKA staff on a case-by-case basis to determine inclusion.)

Addition to the LEOKA Program’s Data Collection

Effective March 23, 2016, the LEOKA Program expanded its collection criteria to include the data of military and civilian police and law enforcement officers of the Department of Defense (DoD), while performing a law enforcement function or duty, who are not in a combat or deployed (sent outside of the U.S. for a specific military support role mission) status. This includes DoD police and law enforcement officers who perform policing and criminal investigative functions while stationed (not deployed) on overseas bases, just as if they were based in the U.S.

Exclusions from the LEOKA Program’s Data Collection

Examples of job positions not typically included in the LEOKA Program’s statistics (unless they meet the above exception) follow:

Corrections or correctional officers

Bailiffs

Parole or probation officers

Federal judges

U.S. and Assistant U.S. Attorneys

Bureau of Prisons officers

The Uniform Crime Reporting (UCR) Program's records contain 18,444 local, state, and tribal law enforcement agencies that meet these criteria. In addition, up to 114 federal law enforcement agencies may also meet the criteria for submitting use-of-force incidents to the National UoF Data Collection. The first six months of data collection focused on a recruited set of law enforcement agencies. Potential participants were agencies with at least 750 sworn law enforcement officers, the four Department of Justice (DOJ) agencies, and agencies recruited from two to five additional states willing to participate. The pilot focused on data quality and data completeness. More information on the pilot can be found in the response to Supporting Statement Part B, Question 4.

Agency Type | Pilot (minimum number of agencies) | Full Collection (number of agencies)
Municipal | 52 | 11,708
County | 30 | 3,031
Colleges/Universities | 0 | 788
Other Agencies | 0 | 500
Other State Agencies | 3 | 1,075
State Police | 6 | 1,134
Tribal | 0 | 207
Federal | 4 | 114
Total | 95 | 18,558

Because the National Use-of-Force Data Collection is intended to collect information on any use of force by law enforcement in the U.S. or on U.S. territory that meets one of three criteria (death of a person, serious bodily injury to a person, or discharge of a firearm at or in the direction of a person), sampling methodologies are not used. Instead, the FBI UCR Program relies on a complete enumeration of these incidents to make statements about the relative frequency and characteristics of the use of force by law enforcement in the U.S. However, because participation in the UCR Program is voluntary, some agencies report incomplete information and others do not participate in the data collection at all.
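The scope rule above is a simple disjunction: an incident is reportable if it meets any one of the three criteria. The minimal Python sketch below assumes a hypothetical `Incident` record with one boolean flag per criterion; the field names are illustrative and are not the collection's actual data elements.

```python
from dataclasses import dataclass


@dataclass
class Incident:
    # Hypothetical flags, one per scope criterion; not the collection's real data elements.
    death: bool
    serious_bodily_injury: bool
    firearm_discharged_at_person: bool


def in_scope(incident: Incident) -> bool:
    """An incident is within scope if it meets at least one of the three criteria."""
    return (
        incident.death
        or incident.serious_bodily_injury
        or incident.firearm_discharged_at_person
    )


if __name__ == "__main__":
    print(in_scope(Incident(False, False, True)))   # True
    print(in_scope(Incident(False, False, False)))  # False
```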

Statistical information on law enforcement use of force reported under the definition of justifiable homicide has historically had a low response rate. For the years 2012 to 2014, UCR contributors reported a total of 1,341 justifiable homicides by law enforcement, an average of 447 per year. This figure represents less than 40 percent of the expected reports of justifiable homicide based on the findings of the Bureau of Justice Statistics (BJS). In its 2015 report,¹ the BJS found that approximately 1,200 incidents of justifiable homicide per year are reported through the media and corroborated by law enforcement or the medical examiner. The FBI intends to address the response rate problem vigorously, with the goal of raising the response rate to at least 80 percent once the data collection is established.
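The response-rate figures above can be checked with a few lines of arithmetic. This sketch uses only the numbers stated in the text; the last line is an illustration of what the stated 80 percent goal would imply against the BJS estimate, not an FBI projection.

```python
# Figures stated in the text.
REPORTED_2012_2014 = 1_341      # justifiable homicides reported by UCR contributors
YEARS = 3                       # 2012, 2013, 2014
BJS_EXPECTED_PER_YEAR = 1_200   # BJS estimate of incidents per year
TARGET_RATE = 0.80              # stated minimum response-rate goal

average_per_year = REPORTED_2012_2014 / YEARS
coverage = average_per_year / BJS_EXPECTED_PER_YEAR
needed_at_target = TARGET_RATE * BJS_EXPECTED_PER_YEAR  # illustrative only

print(f"average reported per year: {average_per_year:.0f}")       # 447
print(f"share of BJS estimate: {coverage:.0%}")                   # 37%
print(f"reports per year at 80 percent: {needed_at_target:.0f}")  # 960
```

On these figures the coverage ratio is about 37 percent, and an 80 percent response rate would correspond to roughly 960 reported incidents per year.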

2. Procedures for Collecting Information

Information on law enforcement use of force will be collected initially by law enforcement agencies which employ law enforcement officers who meet the same definition and criteria as the LEOKA Program (see response to Supporting Statement Part B, Question 1). Agencies record information on the use-of-force incident for their own purposes in case files which may or may not be housed in automated systems. This information is translated or recoded into standardized answers which correspond to the 41 questions asked in the National UoF Data Collection.

Translating agency information into standardized responses for a UCR data collection more closely resembles the coding process used in content analysis than traditional survey design. Responses about a use of force by law enforcement will usually be provided by the supervisor of a unit charged with investigating uses of force or by a member of that unit's staff. The questionnaire would rarely be completed by the individual officer(s) involved in the incident. The FBI provided both user guides and “just in time” information to help respondents answer the questions in a standardized fashion.
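Because the task resembles content-analysis coding, one way to picture it is as a codebook lookup that maps an agency's local terms onto standardized values. The sketch below is hypothetical throughout: the codebook entries, the `recode` helper, and the `NEEDS_REVIEW` flag are illustrative assumptions, not part of the National UoF Data Collection.

```python
# Hypothetical codebook: the local terms and standardized codes below are
# illustrative only and are not the collection's actual data values.
LOCATION_CODEBOOK: dict[str, str] = {
    "residence": "RESIDENCE",
    "apartment": "RESIDENCE",
    "street": "STREET",
    "sidewalk": "STREET",
    "parking lot": "PARKING",
}


def recode(local_value: str, codebook: dict[str, str]) -> str:
    """Translate an agency's local value into a standardized value.

    Values the codebook does not cover are flagged for human review rather
    than guessed, much as a coder would set aside an unclear case.
    """
    key = local_value.strip().lower()
    return codebook.get(key, "NEEDS_REVIEW")


if __name__ == "__main__":
    for term in ["Apartment", "Parking Lot", "boat ramp"]:
        print(term, "->", recode(term, LOCATION_CODEBOOK))
```

Flagging unknown values instead of forcing a match keeps ambiguous cases visible, which is where shared definitions matter most.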

Agencies are encouraged to begin completing the questions about a use-of-force incident as soon as possible. All work can be saved within the system and retrieved later for completion. Once an agency has completed the questions related to an incident, a designated individual within the agency indicates that the information is ready for the next stage in the workflow. At this point, states can directly manage the collection of use-of-force information at the state level, much as they do for other UCR data collections. Alternatively, states can allow their agencies to report use-of-force data directly to the FBI. Regardless of whether the state UCR Program or the FBI receives the data, all incidents are reviewed for logical inconsistencies by FBI or state UCR Program staff. If questions arise about the information provided, the originating agency is asked to resolve the data quality issues.
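The workflow described above can be sketched as a small state machine. The status names (`DRAFT`, `READY_FOR_REVIEW`, `RETURNED`, `ACCEPTED`) and the `advance` and `reviewer` helpers are hypothetical labels chosen for illustration; the text does not name the system's actual statuses.

```python
from enum import Enum, auto


class Status(Enum):
    # Hypothetical status labels, not the system's actual values.
    DRAFT = auto()             # saved by the agency; may be incomplete
    READY_FOR_REVIEW = auto()  # released by the agency's designated individual
    RETURNED = auto()          # reviewer found a logical inconsistency
    ACCEPTED = auto()          # passed state UCR Program or FBI review


# Allowed moves between the hypothetical statuses.
TRANSITIONS: dict[Status, set[Status]] = {
    Status.DRAFT: {Status.DRAFT, Status.READY_FOR_REVIEW},
    Status.READY_FOR_REVIEW: {Status.RETURNED, Status.ACCEPTED},
    Status.RETURNED: {Status.DRAFT, Status.READY_FOR_REVIEW},
    Status.ACCEPTED: set(),
}


def advance(current: Status, new: Status) -> Status:
    """Move an incident to a new status, rejecting moves the workflow does not allow."""
    if new not in TRANSITIONS[current]:
        raise ValueError(f"cannot move from {current.name} to {new.name}")
    return new


def reviewer(state_manages_collection: bool) -> str:
    """Return who reviews a released incident under the state's chosen arrangement."""
    return "state UCR Program" if state_manages_collection else "FBI"


if __name__ == "__main__":
    status = Status.DRAFT
    for step in (Status.READY_FOR_REVIEW, Status.RETURNED,
                 Status.READY_FOR_REVIEW, Status.ACCEPTED):
        status = advance(status, step)
        print(status.name)
```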

In addition to use-of-force incident information, agencies can indicate each month that they had no use-of-force incidents within the scope of the data collection. These “zero report” submissions follow the same general workflow as use-of-force incident information.

Some agencies and states have automated systems in place to capture information on law enforcement use of force or plan to build such systems in the near future. Within the first quarter of 2017, the FBI built the capability to “ingest” file submissions from these systems via Secure File Transfer Protocol (SFTP). The FBI also provided a means for agencies and states to submit data through Extensible Markup Language (XML) web services. In addition to the instructions, the FBI provided technical specifications to agencies and states wishing to submit data as a bulk file.
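As an illustration of what automated checking of a bulk file might involve, the sketch below parses an XML submission with Python's standard `xml.etree.ElementTree` module and lists required elements that are missing. The element names, the `ori` attribute value, and the list of required fields are all hypothetical; the FBI's actual technical specification is not reproduced here, and file transport (SFTP or web services) is out of scope.

```python
import xml.etree.ElementTree as ET

# Hypothetical bulk-file layout; not the FBI's actual technical specification.
SAMPLE = """<submission ori="XX0000000">
  <incident id="2017-001">
    <date>2017-01-15</date>
    <criterion>firearm_discharge</criterion>
  </incident>
  <incident id="2017-002">
    <criterion>death</criterion>
  </incident>
</submission>"""

REQUIRED = ("date", "criterion")  # hypothetical required elements


def check_bulk_file(xml_text: str) -> dict[str, list[str]]:
    """Return, per incident id, the required elements that are missing or empty."""
    root = ET.fromstring(xml_text)
    problems: dict[str, list[str]] = {}
    for incident in root.iter("incident"):
        # findtext returns None when the element is absent, so fall back to "".
        missing = [tag for tag in REQUIRED if not (incident.findtext(tag) or "").strip()]
        if missing:
            problems[incident.get("id", "?")] = missing
    return problems


if __name__ == "__main__":
    print(check_bulk_file(SAMPLE))  # {'2017-002': ['date']}
```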

3. Methods to Maximize Response

Addressing Nonresponse

Analysis of Patterns of Missing Values

To determine whether a nonresponse bias study was needed, the FBI analyzed overall reporting patterns of agencies in the pilot states and of the pilot agencies for the first six months of data collection, and will continue to monitor response rates once the data collection is operational. The analysis looks for patterns of unit missing data (i.e., nonparticipating agencies) and item missing data (e.g., not reporting within-scope incidents of firearm discharges), by agency type, that fall below a threshold of 80 percent. A further consideration is that the data collection is structured so that agencies can leave most data elements as “pending further investigation.” This data value accommodates both legal and contractual obligations regarding the release of information: agencies are frequently bound by local statute, local policy, or collective bargaining agreements in terms of what information can be released and when. After the first full year of reporting, the FBI UCR Program will reassess nonresponse patterns and work with the BJS and external experts to develop a methodology for arriving at national estimates.
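The unit-level part of this analysis amounts to computing, for each agency type, the share of agencies that reported and flagging types below the 80 percent threshold. The records below are invented for illustration, and `unit_response_rates` and `below_threshold` are hypothetical helpers; item-level missingness could be tallied the same way, per data element.

```python
from collections import defaultdict

THRESHOLD = 0.80  # reporting threshold named in the text

# Invented example records (hypothetical): (agency type, reported during the period?)
AGENCIES = [
    ("Municipal", True),
    ("Municipal", True),
    ("Municipal", False),
    ("County", True),
    ("County", True),
]


def unit_response_rates(records):
    """Share of agencies of each type that reported at all (unit response)."""
    totals: dict[str, int] = defaultdict(int)
    responders: dict[str, int] = defaultdict(int)
    for agency_type, reported in records:
        totals[agency_type] += 1
        responders[agency_type] += int(reported)
    return {t: responders[t] / totals[t] for t in totals}


def below_threshold(rates, threshold=THRESHOLD):
    """Agency types whose response rate falls below the threshold."""
    return sorted(t for t, r in rates.items() if r < threshold)


if __name__ == "__main__":
    rates = unit_response_rates(AGENCIES)
    print(rates)
    print(below_threshold(rates))  # ['Municipal']
```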

Technical Response to Address Agency Nonresponse

The recommendation of the Criminal Justice Information Services (CJIS) Advisory Policy Board (APB) to create an FBI-sponsored and FBI-maintained tool responds directly to issues that have repeatedly impeded the adoption of changes to the UCR Program. Traditionally, the UCR Program has provided both agencies and state UCR programs with a set of technical specifications for submitting data to any part of the UCR data collections. However, this approach presumes that agencies and state UCR programs take responsibility for building and maintaining a data system to collect the data. For the use-of-force data collection, the FBI sponsors and maintains a data collection tool accessible through the Law Enforcement Enterprise Portal (LEEP). Through this portal, agencies can contribute their data directly to the FBI, or state UCR Program Managers can use the tool to manage the data collection for their states. The tool is constructed so that state UCR Program Managers have enhanced privileges to monitor reporting status and other data quality elements.

The LEEP data collection tool assumes that agencies have consistent Internet connectivity and maintain an active LEEP account. However, it is unlikely that all agencies will proactively enroll in LEEP. The FBI therefore proactively recruited agencies to participate in the pilot study. After an agency agreed to participate, the FBI worked with a point of contact within that agency to resolve connectivity issues and problems accessing LEEP and the National UoF Data Collection Portal. This effort allowed the FBI to identify the most common access problems and develop mitigations for them.

Confirming a Report of Zero versus Nonresponse

The final aspect of the needs assessment and technical review addresses the current limitations of the justifiable homicide data that prevent the FBI from quantifying the level of reporting by UCR contributing agencies. The current systems provide no way for agencies to report that they recorded no justifiable homicides as part of the UCR data collections. The use-of-force data collection, by contrast, asks agencies to affirm each month that they had no use of force that resulted in a fatality, serious bodily injury to a person, or a firearm discharge at or in the direction of a person.
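Requiring a monthly affirmation is what lets an analyst separate a true zero from a missing report. A minimal sketch, assuming each agency-month is summarized by an incident count and a flag for whether a zero report was filed (both hypothetical inputs), might classify months as follows; a month with both incidents and a zero report is treated as a logical inconsistency for review.

```python
from enum import Enum


class MonthStatus(Enum):
    INCIDENTS_REPORTED = "incidents reported"
    ZERO_REPORT = "zero report"
    NONRESPONSE = "nonresponse"


def classify_month(incident_count: int, zero_report_filed: bool) -> MonthStatus:
    """Classify one agency-month from hypothetical summary inputs."""
    if incident_count > 0 and zero_report_filed:
        # An incident and a zero report for the same month cannot both be right.
        raise ValueError("zero report filed for a month with reported incidents")
    if incident_count > 0:
        return MonthStatus.INCIDENTS_REPORTED
    if zero_report_filed:
        return MonthStatus.ZERO_REPORT
    # No incidents and no affirmation: without a zero report this month
    # would be indistinguishable from a true zero.
    return MonthStatus.NONRESPONSE
```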

In addition, the BJS will continue to work with the FBI in researching open-source data on law enforcement fatalities to assist in the determination of nonresponse by agencies.

4. Testing of Procedures

Formal Testing

The testing process in the development of the National UoF Data Collection used a multi-stage approach. The initial process included a needs assessment and conceptualization of key estimates and data elements. This process involved assessing agency record systems, working through the FBI’s APB process, holding focus groups with key law enforcement components, and developing key data elements through the FBI’s Use of Force Task Force. In addition, the FBI consulted with other agencies, such as the BJS and DOJ, on collections related to force. Given the significant overlap in data elements, the DOJ’s Death in Custody Reporting Act data can provide insight into the quality and completeness of agency reporting. Finally, a description of the formal testing process follows. This testing addressed six questions:

What information on use of force do agencies have readily available?

Will agencies provide this information?

Do agencies correctly interpret the scope of the collection?

Do agencies provide the correct information from their record systems?

What procedures are optimal for minimizing agency nonresponse and maximizing data quality?

Do agencies find the National UoF Data Collection application intuitive and easy to use?

Testing proceeded iteratively, with each round informing later testing panels in an effort to expedite the full clearance process. The testing plan consisted of a set of generic, small-scale tests building to a full implementation. Permission for the pretesting activities was sought under the existing UCR Generic Clearance (Office of Management and Budget [OMB] Number 1110-0057).

Pre-testing

Pre-testing activities were conducted before the pilot study began. These activities provided the preliminary information needed both to construct the sample of targeted agencies for the pilot study and to identify early problem areas that could be resolved before formal testing. The pre-testing consisted of three parts: cognitive testing, a canvass of state UCR Program Managers and CJIS Systems Officers, and testing of questionnaire design and usability.

Cognitive Testing

Purpose of the Research

The purpose of the cognitive testing was to investigate how the law enforcement community understood the language and wording of the questions in the proposed data collection on law enforcement use of force, as well as the associated instructions. The ultimate goal of the development research activities was to ensure participants clearly understood what information was requested, even in complex law enforcement situations. This aided the UCR Program in its efforts to increase the overall validity and reliability of its data collections. The cognitive testing was a first step toward understanding the extent to which the law enforcement community shared a common understanding of key concepts in the data collection. In addition, some questions asked participants to indicate what records are readily available for certain key pieces of information, such as time and location.

The cognitive testing instrument was developed with input from the law enforcement community (through the Use of Force Task Force membership), the BJS, and William Bozeman, M.D. Dr. Bozeman is a physician in the Department of Emergency Medicine at Wake Forest University. He has published extensively on injury and law enforcement use of force and is a member of the Police Physicians Section of the International Association of Chiefs of Police. We continued our collaboration with all three parties for both the pre-testing activities and the pilot study. Based on input from all parties, draft questions were revised to reflect the final version attached to this document.

Methodological Plan

The cognitive testing primarily focused upon the language and construction of the response categories rather than the usability of the Web-form or other questions on mode of collection. These usability tests were conducted as a part of system development prior to the beginning of the pilot study. The purpose of the cognitive test was to identify key concepts which may have the potential for a high amount of variability in their interpretation. These areas required thorough explanation to promote the reliability of the information measured.

The content and scope of the National UoF Data Collection was constructed based upon the consensus of representatives from the law enforcement community. Through the CJIS APB and the work of the Use of Force Task Force, the law enforcement community indicated this information was valuable for understanding the circumstances surrounding a use of force by law enforcement and the information existed in local records on these events. However, there was a lack of information to understand the extent to which the law enforcement community applies certain terms on a consistent basis.

The purpose of the questions on the cognitive testing instrument was to identify areas where there might not be a common understanding of the same terminology. In essence, the results of the test provided a general assessment of whether there was an existing normative understanding of some concepts in the National UoF Data Collection. This questionnaire was not designed to show how the terminology may be applied to complex law enforcement scenarios. The FBI performed further analysis on the application of definitions and guidance during the pilot study.

The areas addressed in the cognitive testing included the following:

The assignment at the time of the incident

The selection of the location and location type (because many location types are not mutually exclusive)

Further exploration of the request identifying aggression

The application of the legal definition of serious bodily injury

On each of these particular concepts, the participants were presented with a series of questions. Some of the questions involved a simple “yes” or “no” response based on how information was recorded by law enforcement. Other questions presented an array of responses for their ranking or interpretation. For example, on the question of serious bodily injury, a list of potential injuries was offered to participants. Each participant indicated the injuries he or she understood to be “serious” based upon the definition provided.

Participants were recruited through a solicitation sent to the 280 members of the current FBI National Academy class, who made up the full pool of potential participants. The FBI National Academy is a 10-week training program for leaders and managers of state, local, county, tribal, military, federal, and international law enforcement agencies. The questionnaire was administered during a group assembly in November 2016, and 149 completed surveys were returned.

Analysis

Of the 149 participants in the survey, the majority were law enforcement representatives from municipal police agencies (61.4 percent), followed by county sheriffs (12.4 percent) and state police (10.3 percent). The largest share of participants described themselves as “mid-level management” (72.2 percent). The regional representation of participants generally mirrored the regional representation of the UCR Program with most participants indicating they are from the South and the fewest indicating they are from the Northeast. In most cases, the results of the testing led to improvements in instructions which were provided as part of the National UoF Data Collection. For questions on both injury and resistance/weapons, results indicated the need for additional data values. For more detailed results, a technical report is available upon request.

Canvass of State UCR Programs

Working with the Association of State UCR Programs (ASUCRP) and the BJS, the FBI constructed a survey for state UCR Program Managers. The primary purpose of this survey was to identify potential participants in the pilot study, which began in January 2017. The National UoF Data Collection allows states or other domains (for example, federal or tribal agencies) to choose one of three primary paths to submit and manage their data. States or domains that have their own data systems to collect and maintain use-of-force data can submit their data as a bulk submission. In the absence of a state or domain solution, states or domains can use the data collection tool built and maintained by the FBI on LEEP. LEEP is a restricted-access environment, reachable over the Internet, that hosts law enforcement services, including the use-of-force data collection tool. Law enforcement agencies within the state access the data collection tool on LEEP directly to submit their information. The state or domain UCR program indicates whether it will manage the data collection, as is the case with other UCR data collections, or allow agencies to submit data as direct contributors.
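One reading of the three submission paths described above is a two-question decision: does the state or domain have its own system, and, if not, will it manage the collection or let agencies contribute directly? The enum and `choose_path` function below are a hypothetical illustration of that reading, not a description of FBI logic.

```python
from enum import Enum


class SubmissionPath(Enum):
    # Hypothetical labels for the three paths described in the text.
    BULK_SUBMISSION = "state/domain system submits a bulk file"
    STATE_MANAGED_LEEP = "state/domain manages collection in the FBI tool on LEEP"
    DIRECT_CONTRIBUTOR = "agencies submit directly to the FBI through LEEP"


def choose_path(has_own_system: bool, state_manages: bool) -> SubmissionPath:
    """Pick a path under one reading of the text (assumption, not FBI logic)."""
    if has_own_system:
        return SubmissionPath.BULK_SUBMISSION
    if state_manages:
        return SubmissionPath.STATE_MANAGED_LEEP
    return SubmissionPath.DIRECT_CONTRIBUTOR
```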

Both phases of the pilot study depended on selecting a few states for targeted recruitment. The canvass helped the FBI distinguish state UCR programs that would use the data collection tool on LEEP to fully manage their use-of-force data collection from those able to capture the data in a state system. Additional considerations for selecting pilot participants included the following:

Are there any statutory obligations to collect UCR data?

Are there any statutory obligations to collect data on law enforcement use of force? If so, what is the scope of that collection?

Does the state have or anticipate building a system to capture information on law enforcement use of force?

What are the technical capabilities of existing or proposed state systems to collect information on law enforcement use of force?

Will the state program retain management authority over the use of force data collection, or will the state allow all law enforcement agencies to provide data directly to the FBI?

Will the state fully validate data prior to submission to the FBI?

Background Research on Survey Instrument

The survey was constructed with input from various entities within and outside the FBI that are involved with aspects of UCR data management, including the ASUCRP and the CJIS APB. Based on this input, the past experience of FBI personnel, and early communications with potential data contributors, six primary areas were identified as most likely to influence participation in the National UoF Data Collection and the resulting data completeness and quality.

The areas covered in the survey include the following:

How management of the National UoF Data Collection will be organized (2 questions)

Technical capabilities with state or domain systems (9 questions)

State statutes regarding UCR or UoF data collection (4 questions)

Data quality, training, and auditing capabilities of state or domain systems (7 questions)

Publication of use-of-force data by the state or domain (4 questions)

Use of the LEEP by personnel with the state or domain program (6 questions)

Methodological Plan and Selection of Participants

The survey was distributed to state and domain points of contact via email as a fillable Portable Document Format (PDF) file. These individuals are usually program managers or CJIS Systems Officers (CSOs), and the FBI relies on them as the main conduit for the collection of UCR data. The distribution included all 50 states and the territories, as well as tribal and federal domain managers. This was not a sample-based survey; it had 104 potential participants, based on two points of contact per state and one per territory. Federal domains, including the four DOJ law enforcement entities, the Bureau of Indian Affairs, and other federal law enforcement agencies, were also given the option of completing the survey. One survey was returned from each of 41 states and outlying areas.

Analysis Plan

The survey responses were analyzed to identify areas the FBI needs to address to ensure that data submissions to the National UoF Data Collection are complete and of high quality. In addition, pilot states were selected primarily on their responses to two variables: whether the state would manage its own use-of-force data collection and whether it would use the FBI-constructed collection application on LEEP. The pilot states did both.

The analysis of the responses involved descriptive statistics. Since the variables measured on the survey are mostly categorical in nature, these statistics involved frequency distributions and cross-tabulations. This survey did not rely upon a statistical sample or seek to test any hypotheses, so there was no need for statistical tests of significance. An internal report was completed with the results of the analysis and shared with the participants of the survey and others upon request.
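With mostly categorical variables, the frequency distributions and cross-tabulations mentioned above reduce to counting. The sketch below uses Python's `collections.Counter` on invented responses (the state labels and field names are hypothetical) and then applies the two selection variables from the Analysis Plan to list pilot candidates.

```python
from collections import Counter

# Invented canvass responses; field names and state labels are hypothetical.
responses = [
    {"state": "A", "manages_collection": True,  "uses_leep_tool": True},
    {"state": "B", "manages_collection": True,  "uses_leep_tool": False},
    {"state": "C", "manages_collection": False, "uses_leep_tool": True},
    {"state": "D", "manages_collection": True,  "uses_leep_tool": True},
]

# Frequency distribution of one categorical variable.
freq = Counter(r["manages_collection"] for r in responses)

# Cross-tabulation of the two selection variables, keyed by (manages, uses LEEP).
crosstab = Counter((r["manages_collection"], r["uses_leep_tool"]) for r in responses)

# Pilot candidates: states that manage their own collection and use the LEEP tool.
candidates = [r["state"] for r in responses
              if r["manages_collection"] and r["uses_leep_tool"]]

if __name__ == "__main__":
    print(freq)
    print(crosstab)
    print(candidates)  # ['A', 'D']
```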

Testing of Questionnaire Design/Usability Testing

Background Research

The FBI’s approach to questionnaire design and usability testing was a multistage, iterative approach commonly associated with agile development. Final usability testing was conducted as part of Operational Evaluation testing in January and February 2017. In addition to Operational Evaluation testing, the FBI conducted thirteen separate demonstrations of the application for 111 different individuals. Some individuals attended more than one demonstration, which gave the development team the opportunity to receive feedback on adjustments and modifications.

A demonstration was conducted after each development sprint, for a total of six. The audience for these demonstrations was the Product Owner and at least one representative each from Contracts, Configuration Management, Testing, Crime Data Modernization, LEEP, and Security, each with particular expertise in web application development. In addition, audience members represented the sworn and civilian law enforcement community and provided feedback on how intuitive and easy to use the application was. Attendance at a sprint demonstration ranged from 11 to 22. Each sprint demonstration consisted of a walk-through of the user stories agreed to for that sprint; a demonstration of the application's abilities, with particular emphasis on new capabilities related to those stories; a discussion of whether the new capabilities fulfilled the agreed-to stories; and an agreement on which stories were completed and which (if any) still needed to be completed to address the usability of the application.

Of the thirteen total demonstrations, there were two internal demonstrations (one after the third sprint and one after the sixth) conducted for the project stakeholders. These demonstrations were conducted for various unit and section chiefs throughout CJIS as well as the Deputy Assistant Directors of the Operational Programs Branch and the Information Services Branch. The format in both cases was a PowerPoint slideshow defining the system and relaying the approved stories followed by a live demonstration of the application. The rest of the time was spent taking feedback from the attendees for possible incorporation into later iterations of the product. Average attendance was 22.

The FBI conducted a series of five demonstrations with external stakeholders. A demonstration was conducted for the FBI Inspection Division at FBI Headquarters to familiarize the division with the upcoming application. The demonstration was witnessed by an audience of executives, including four FBI Special Agents, and subject matter experts at FBI Headquarters. There was an active give-and-take session throughout the demonstration, and several action items were captured to investigate for possible inclusion in future releases of the application. A similar demonstration was later held at FBI Headquarters for representatives from the Drug Enforcement Administration; the Bureau of Alcohol, Tobacco, Firearms and Explosives; and the United States Marshals Service. Finally, three demonstrations were held via Skype with state UCR Program managers and state criminal justice information services managers. In all five demonstrations, attendees had the opportunity to provide feedback and ask questions about how the application was designed to function.

Methodology

The Operational Evaluation consisted of three testing sessions, each one hour long. Two sessions were conducted on the same day in a classroom at the FBI CJIS Division facility, and a third session was conducted approximately one month later. The first session consisted of nine participants who were each assigned two scenarios. Scenario A consisted of entering a use-of-force incident into the system for review, and Scenario B consisted of creating and submitting a Zero Report. A Zero Report is simply a record submitted by an agency stating that it had no use-of-force incidents that month; it is expected to be the most common type of submission.

The second session consisted of fourteen participants who were assigned two scenarios each. Scenarios were a mixture of the earlier Scenarios A and B, as well as new Scenarios C and D. Scenario C consisted of going into the system, locating the incidents created in Scenario A, and approving those incidents for submission. Scenario D was the review of a Zero Report.

Each volunteer was asked to complete a System Usability Scale (SUS) questionnaire, and the results were evaluated for possible changes in future releases of the product. Based on the twenty-eight participants who provided complete responses in the Operational Evaluation, the average score was 79.464, indicating that participants generally found the application easy to use. Any system scoring above 68 on the SUS is deemed above average in usability; the ratings of four participants resulted in individual scores below 68. More detailed information on the results is available upon request.

Participant Selection

Volunteers were drawn from sources both internal and external to the CJIS Division: FBI Special Agents assigned to the CJIS Division, the FBI Police force assigned to the CJIS Division, various record clerks within the CJIS Division (because record clerks are likely to be the individuals entering incident information, especially in larger law enforcement organizations), and local (city, county, or state) police. Nonfederal volunteers were limited to nine or fewer, partly because of the difficulty of obtaining the clearances necessary to enter the facility.

Evaluation/Analysis

The sessions were evaluated using the SUS.²

The SUS consists of the following ten items:

1. I think that I would like to use this system frequently.

2. I found the system unnecessarily complex.

3. I thought the system was easy to use.

4. I think that I would need the support of a technical person to be able to use this system.

5. I found the various functions in this system were well integrated.

6. I thought there was too much inconsistency in this system.

7. I would imagine that most people would learn to use this system very quickly.

8. I found the system very cumbersome to use.

9. I felt very confident using the system.

10. I needed to learn a lot of things before I could get going with this system.

The SUS uses a five-point response format, with each item rated from 1 (strongly disagree) to 5 (strongly agree).

The SUS is scored as follows:

For odd-numbered items, subtract 1 from the user's response.

For even-numbered items, subtract the user's response from 5.

This scales all values from 0 to 4 (with 4 being the most positive response).

Add up the converted responses for each user and multiply that total by 2.5. This converts the range of possible values from 0 to 40 into 0 to 100.
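The scoring steps above can be sketched in Python. This is a minimal illustration, assuming each participant's answers are stored as a list of ten integers from 1 to 5 in item order; the function names `sus_score` and `summarize` are hypothetical and are not part of the FBI's evaluation materials. The `summarize` helper also applies the 68-point benchmark mentioned earlier to count below-average individual scores.

```python
from statistics import mean


def sus_score(responses: list[int]) -> float:
    """Convert one participant's ten SUS responses (each 1-5) to a 0-100 score."""
    if len(responses) != 10:
        raise ValueError("the SUS requires exactly ten responses")
    total = 0
    for item_number, response in enumerate(responses, start=1):
        if not 1 <= response <= 5:
            raise ValueError(f"response {response} is outside the range 1-5")
        if item_number % 2 == 1:
            total += response - 1  # odd-numbered (positively worded) items
        else:
            total += 5 - response  # even-numbered (negatively worded) items
    # Each converted item is 0-4, so total is 0-40; scale to 0-100.
    return total * 2.5


def summarize(all_responses: list[list[int]], benchmark: float = 68.0) -> tuple[float, int]:
    """Return the mean SUS score and how many participants scored below the benchmark."""
    scores = [sus_score(r) for r in all_responses]
    below = sum(1 for s in scores if s < benchmark)
    return mean(scores), below
```

For example, a participant who answers 3 (the neutral midpoint) on every item earns 2 points per item, for a total of 20 × 2.5 = 50.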

Pilot

The pilot study consisted of two phases. Each phase included a set of target agencies and states, allowing for sufficient data to evaluate intercoder reliability in the application of definitions and guidance. While survey design "best practices" can inform the process of eliciting information from individuals providing law enforcement statistics for the UCR Program, the data collection more closely resembles an extensive process of content analysis: information captured within law enforcement records and narratives serves as the basis for the statistical information forwarded to the FBI. The challenge for the FBI UCR Program is therefore to communicate coding schemes based upon a common set of definitions. Instructions and manuals, as well as training modules and curricula, were developed to help individuals at LEAs translate their local records in a uniform manner. While basic instructions were provided during the pilot study, its results identified concepts with less consensus across locations and types of LEAs; these concepts will guide the future development of in-depth instructions, manuals, training modules, and curricula.

Phase I

Phase I of the pilot focused on a prospective comparison of incidents submitted to the UoF data collection through the data collection tool on LEEP with the original records voluntarily provided to the FBI by the reporting agency. Recruited agencies agreed to participate in the pilot study with the understanding that local records would be forwarded to the FBI upon submission of statistical information to the UoF data collection tool. Local case information was redacted of any personally identifiable information before being forwarded to the FBI, and the FBI destroyed all local records upon completion of the pilot study.

Three groups of agencies were targeted for participation in the pilot study; the FBI also accepted agencies of any size that voluntarily approached it to provide their information:

The largest local LEAs, those with a workforce of 750 or more sworn officers, were targeted. Based on information submitted to the UCR Program, this group included at least 68 agencies across 23 states. Each state/local agency was approached through its UCR Program Manager for its voluntary agreement to provide data to the UoF data collection and to participate in the pilot study activities.

The FBI identified state UCR Programs to participate on a voluntary basis.

All four Department of Justice (DOJ) LEAs were asked to participate voluntarily.

These state UCR Programs were selected based on the results of the canvass of the states conducted during pre-testing and on subsequent conversations with state representatives about the pilot study. The identified states represented UCR Programs that use the data collection tool on LEEP to manage their data collection.

The Phase I assessment consisted of an administrative review and a data quality review. As a prelude to the review of local agency records, the FBI asked each LEA specific questions about its participation in the National UoF Data Collection in order to assess its understanding of, and capability to comply with, data collection guidelines. These questions were asked when the agency submitted its first incident to the National UoF Data Collection pilot. Agencies that opted to participate in the pilot study were provided an overview of the intent of the collection and expressed interest in assisting the FBI with its development. In addition, the reasons for refusal given by agencies opting not to participate in the pilot were systematically recorded and reviewed, and these data were analyzed for detectable patterns by type of agency, region, or any other agency characteristic.

After reviewing the documentation each LEA provided on a UoF incident, the FBI independently and blindly completed the fields in the Incident, Subject, and Officer sections of the data collection. This independent coding helped the FBI assess whether data collection guidelines were consistently interpreted and applied. The FBI will share a copy of the pilot study report with all participating agencies following OMB approval.

The overall objectives of Phase I of the pilot study were:

To measure the extent to which LEAs interpret the variables in the National UoF Data Collection consistently with the FBI, as measured by the intercoder reliability statistic Cohen's kappa.

To measure the extent to which LEAs interpret serious bodily injury consistently with the FBI, as measured by intercoder reliability between the FBI and each agency.

For the FBI to make corresponding recommendations regarding coding schemes and definitions.

To identify what additional concepts may need to be explored with the subset of LEAs for Phase II.

To systematically record reasons for refusals to participate in the pilot study.

To work with UCR Program Managers in the pilot states to identify any potential problems with record-keeping impeding the ability to provide the UoF information to the FBI.
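Cohen's kappa, named in the objectives above, corrects the observed agreement between two coders for the agreement expected by chance: kappa = (p_o - p_e) / (1 - p_e), where p_o is the share of items coded identically and p_e is the chance agreement implied by each coder's category frequencies. The sketch below assumes the agency's and the FBI's codes for a single data element (for example, a hypothetical yes/no serious bodily injury flag) are held in two parallel Python lists. The document does not describe how kappa was actually computed in the pilot, so this is an illustration only, and the function name is hypothetical.

```python
from collections import Counter


def cohens_kappa(coder_a: list[str], coder_b: list[str]) -> float:
    """Cohen's kappa for two coders assigning nominal codes to the same items."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must code the same non-empty set of items")
    n = len(coder_a)
    # Observed agreement: share of items both coders coded identically.
    p_o = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Expected (chance) agreement from each coder's marginal frequencies.
    freq_a = Counter(coder_a)
    freq_b = Counter(coder_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_o - p_e) / (1 - p_e)
```

With agency codes `["yes", "yes", "no", "no"]` and FBI codes `["yes", "no", "no", "no"]`, observed agreement is 0.75 and chance agreement is 0.5, giving a kappa of 0.5.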

Phase I of the pilot study lasted three months, beginning July 1, 2017, and concluding September 30, 2017.

Phase II

LEAs participating in Phase I served as the sampling frame for Phase II. Phase II extended the records review and comparison with targeted, on-site visits to a sample of agencies. The FBI worked with the Bureau of Justice Statistics (BJS) to develop a statistically defensible sampling strategy. LEA participation in this phase was also voluntary and occurred during the three-month period following the conclusion of Phase I.

The original set of agencies recruited for the first phase served as a basis for the selection of agencies in the second phase. The FBI also continued to accept agencies providing data voluntarily to the data collection.

Because of the small number of incidents submitted to the data collection and the wide dispersion of pilot agencies, the FBI used a purposively chosen sample of pilot agencies for on-site visits by FBI personnel. The FBI, in consultation with the BJS, selected six agencies in four locations to represent key areas of variation and diversity among reporting agencies: three major metropolitan police departments, two county agencies, and a state agency with primary responsibility for reporting data for all agencies in the state.

The primary objective of the on-site visits was to understand the factors that contributed to underreporting of within-scope incidents, especially those involving serious bodily injury or firearm discharges. The on-site visits also allowed for an assessment of local record-keeping capabilities. Although the visits did not support an estimate of the level of underreporting by agencies, the information and insight they provided allowed the FBI to develop appropriate responses to mitigate its effects.

The objectives of Phase II were:

To ascertain the factors that contributed to either underreporting or over-reporting of incidents.

To assess whether any incidents occurred which should have been reported to the National UoF Data Collection, based upon the definition of serious bodily injury or firearm discharges, but were not.

To further explore factors negatively impacting the reliable recording of characteristics of incidents of law enforcement UoF as measured in the National UoF Data Collection.

To allow for an assessment of local record-keeping capabilities and for testing of any adjustments to the language of instructions and data elements, or changes to the data collection, that may have been implemented during Phase I.

Phase II of the pilot study lasted three months, beginning at the conclusion of Phase I on October 1, 2017, and concluding December 31, 2017. The results of Phase II were provided to OMB at its conclusion and did not include any substantive changes. The full collection of the data will not proceed until clearance from OMB is received.

Analytical Plan and Publication from Pilot Study

At the conclusion of the pilot study and following OMB approval, the FBI will release a report, at the request of law enforcement, detailing the results of its pilot study data collection and analysis. The report will be released to the public and will consist primarily of three sections. The first section will provide the results of the on-site assessment regarding underreporting and completeness, as well as an assessment of the reliability of reported data from the Phase I records review. All results in this section will be pooled, and no individual agency will be identified. The second section will provide results of the analysis of nonresponse and missing data, including refusals to participate in the pilot study. This section will also identify whether a clear need exists for a nonresponse bias study and a proposed methodology for such a study. Again, all results will be pooled, and no individual agency will be identified in the second section. Because the pilot study has only two phases, the third section will detail the data collection policies and procedures that will help maintain data quality and completeness once the collection becomes permanent. The third section will also detail any ongoing collaboration and partnership between the FBI and the BJS to achieve and maintain a high level of data quality. Finally, an optional fourth section will list basic agency-level counts of reported data from all participating agencies as a showcase of item completeness and quality. In addition to the public report, the FBI will hold teleconferences so that agencies that participated in either phase of the pilot study can hear the results directly and ask questions.

Terms of Clearance

The FBI recognizes the importance of response rates and population coverage to the ability of the National UoF Data Collection to generate valid national estimates of UoF by police officers. After consultation with OMB, the FBI agrees to the following terms of clearance, which describe the quality standards that will apply to the dissemination of the results. For the purpose of these conditions, “coverage rate” refers to the percentage of the total law enforcement officer population covered by the UoF data collection, and it will be considered on both a state-by-state and a national basis. “Key variables” include subject injuries received and type of force used. “Item non-response” refers to the percentage of respondents who either do not answer the question associated with a key variable or answer “unknown and unlikely to ever be known.”
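The two rates defined above reduce to simple percentages. This is a minimal sketch, assuming the coverage rate is computed as the number of officers employed by agencies reporting to the collection divided by all officers in the population under consideration (one state or the nation), and that an unanswered key-variable question is stored as `None`. The `UNKNOWN` code string and the function names are hypothetical.

```python
from typing import Optional

# Hypothetical stored value for the "unknown and unlikely to ever be known" answer.
UNKNOWN = "unknown and unlikely to ever be known"


def coverage_rate_pct(covered_officers: int, total_officers: int) -> float:
    """Percentage of the officer population covered by reporting agencies."""
    if total_officers <= 0:
        raise ValueError("total_officers must be positive")
    return 100 * covered_officers / total_officers


def item_nonresponse_pct(answers: list[Optional[str]]) -> float:
    """Percentage of respondents who left a key variable blank or answered UNKNOWN."""
    if not answers:
        raise ValueError("no respondents")
    missing = sum(1 for a in answers if a is None or a == UNKNOWN)
    return 100 * missing / len(answers)
```

Both functions return percentages on a 0 to 100 scale so they can be compared directly with the thresholds in the terms of clearance.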

For the first year of collection:

1. If the coverage rate is 80 percent or greater and the item non-response is 30 percent or less, then no conditions apply to the dissemination of the results.

2. If the coverage rate is between 60 percent and 80 percent or the item non-response is greater than 30 percent, then the FBI will not release counts or totals, but may release ratios or percentages.

3. If the coverage rate is between 40 percent and 60 percent, then the FBI may release only the response percentages for the key variables across the entire population and for subpopulations that represent 20 percent or more of the total population.

4. If the coverage rate is less than 40 percent, the FBI will not disseminate results.
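The four first-year conditions can be expressed as a single decision function. This sketch assumes that when more than one condition applies (for example, 50 percent coverage with 40 percent item non-response), the most restrictive one governs, and that a value exactly on a boundary such as 60 or 40 percent falls in the less restrictive band; the text does not settle either point. The class and function names are hypothetical.

```python
from enum import IntEnum


class Release(IntEnum):
    NO_CONDITIONS = 1         # condition 1: no restrictions on dissemination
    RATIOS_ONLY = 2           # condition 2: no counts or totals; ratios/percentages allowed
    KEY_PERCENTAGES_ONLY = 3  # condition 3: key-variable percentages, whole population and >=20% subpopulations
    NO_RELEASE = 4            # condition 4: results are not disseminated


def first_year_release(coverage_pct: float, item_nonresponse_pct: float) -> Release:
    """Map first-year coverage and item non-response (both 0-100) to a condition.

    Checks run from most to least restrictive, so the most restrictive
    applicable condition is returned.
    """
    if coverage_pct < 40:
        return Release.NO_RELEASE
    if coverage_pct < 60:
        return Release.KEY_PERCENTAGES_ONLY
    if coverage_pct < 80 or item_nonresponse_pct > 30:
        return Release.RATIOS_ONLY
    return Release.NO_CONDITIONS
```

Using `IntEnum` keeps the condition numbers (1 to 4) available for the multi-year rule while giving each outcome a readable name.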

In subsequent years, if conditions three and four, in any combination, are met for three consecutive years, or if condition four is met for two consecutive years, then the FBI will discontinue the collection and explore alternative approaches for collecting the information, for example by working cooperatively with the Bureau of Justice Statistics to expand its current efforts to collect information on deaths in custody to include law enforcement.
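The discontinuation rule amounts to tracking runs of consecutive years. This is a minimal sketch, assuming each year's outcome is recorded as the number (1 to 4) of the first-year-style condition that applied, oldest year first, and that every year in the history counts toward a run; the function name is hypothetical.

```python
def should_discontinue(yearly_conditions: list[int]) -> bool:
    """Return True if the history of yearly conditions triggers discontinuation."""
    run_3_or_4 = 0  # consecutive years in which condition 3 or 4 was met
    run_4 = 0       # consecutive years in which condition 4 was met
    for condition in yearly_conditions:
        run_3_or_4 = run_3_or_4 + 1 if condition in (3, 4) else 0
        run_4 = run_4 + 1 if condition == 4 else 0
        # Three straight years of 3/4 in any mix, or two straight years of 4.
        if run_3_or_4 >= 3 or run_4 >= 2:
            return True
    return False
```

For example, the history `[3, 4, 3]` triggers discontinuation through the three-year rule, while `[4, 1, 4]` does not, because neither run is ever long enough.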

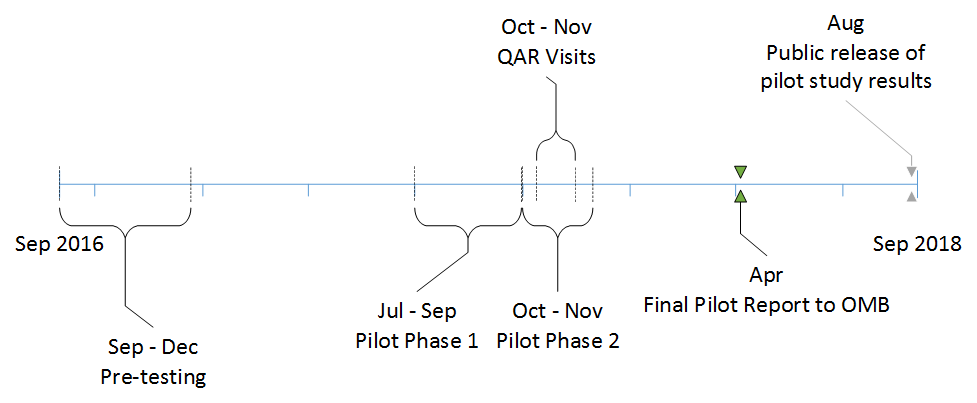

Timeline

5. Contacts for Statistical Aspects and Data Collection

The following individuals were consulted on statistical aspects of the design:

Jeri Mulrow, Ph.D.

Acting Director

Bureau of Justice Statistics

(202) 514-9283

William Sabol

Director (past)

Bureau of Justice Statistics

Michael Planty, Ph.D.

Deputy Director

Bureau of Justice Statistics

(202) 514-9746

Shelley Hyland, Ph.D.

Statistician

Bureau of Justice Statistics

(202) 616-1706

The FBI UCR Program does not have immediate plans to use contractors, grantees, or other persons to collect and analyze the information on behalf of the UCR Program.

1 Banks, D., et al. (2015). Arrest-Related Deaths Program Assessment: Technical Report. RTI International. http://www.bjs.gov/index.cfm?ty=pbdetail&iid=5259

2 Information on the SUS is available at https://www.usability.gov/how-to-and-tools/methods/system-usability-scale.html (accessed December 15, 2016).

| File Type | application/msword |

| File Modified | 2018-09-04 |

| File Created | 2018-09-04 |
