Supporting Statement A_6.11.19(final)


SMARTool Pilot Replication Project

OMB: 0937-0207

Supporting Statement A for the Office of the Assistant Secretary for Health

SMARTool Pilot Replication Project

Submitted to

Office of Management and Budget

Office of Information and Regulatory Affairs

Submitted by

Department of Health and Human Services

Office of the Assistant Secretary for Health

June 2019

Contents

1. Circumstances Making the Collection of Information Necessary

2. Purpose and Use of Information Collection

3. Use of Improved Information Technology and Burden Reduction

4. Efforts to Identify Duplication and Use of Similar Information

5. Impact on Small Businesses or Other Small Entities

6. Consequences of Not Collecting the Information or of Collecting Less Frequently

7. Special Circumstances Relating to the Guidelines of 5 CFR 1320.5

8. Comments in Response to the Federal Register Notice, and Outside Consultation

9. Explanation of Any Payment/Gifts to Respondents

10. Assurance of Confidentiality Provided to Respondents

11. Justification for Sensitive Questions

12. Estimates of Annualized Hour and Cost Burden

14. Annualized Cost to Federal Government

15. Program Changes or Adjustments

16. Plans for Tabulation and Publication and Project Time Schedule

17. Display of Expiration Date for OMB Approval

Exhibits

Exhibit 1. Details about the Two Participating Implementing Organizations (IOs)

Exhibit 2. Overview of the 10 SMARTool Targets

Exhibit 4. SRA Logic Model Factors that Will Be Examined in This Study

Exhibit 5. Proximal Outcomes That Will Be Examined in This Study

Exhibit 6. Quasi-Experimental Study Design: Within-School Comparison Group

Exhibit 7. Outside Consultation with Technical Experts

Exhibit 8. RTI Studies Involving Incentives for Class-Level Return of Consent Forms

Exhibit 9. RTI Studies Involving Incentives to Youth for Return of Consent Forms

Exhibit 10. Estimated Annualized Burden Hours

Exhibit 11. Estimated Annualized Cost to Respondents

Exhibit 12. Adjusted Hourly Wages Used in Burden Estimates

Supporting Statement for the Office of the Assistant Secretary for Health

SMARTool Pilot Replication Project

A. Justification

1. Circumstances Making the Collection of Information Necessary

This is a request for Office of Management and Budget (OMB) approval for new data collection to evaluate sexual risk avoidance (SRA) curricula that are aligned with the Systematic Method for Assessing Risk Avoidance Tool (SMARTool).1 The SMARTool is a technical assistance guide for use by schools, youth-serving organizations, and other agencies interested in delivering SRA education to youth.2 It was developed by the Center for Relationship Education (CRE) as part of a five-year cooperative agreement with the Centers for Disease Control and Prevention and was released in 2008. The Office of the Assistant Secretary for Health (OASH) is sponsoring a project to update the SMARTool to include emerging themes important to achieving optimal health outcomes within the adolescent SRA approach.3 OASH also plans to conduct an evaluation to assess the impact of two SRA curricula that are aligned with the updated SMARTool. The aims of the evaluation are to (1) produce preliminary evidence regarding the effects of two SMARTool-aligned SRA curricula on proximal youth outcomes,4 and (2) derive lessons learned to improve SRA program implementation. The evaluation will use quantitative and qualitative methods. OASH is authorized to conduct this study by the Public Health Service Act (42 U.S.C. 241) and the FY2017 Consolidated Appropriations for General Departmental Management to evaluate and refine programs that reduce teen pregnancy, including programs that implement SRA education.

To implement this project, OASH contracted with the MITRE Corporation, the operator of the Health Federally Funded Research and Development Center (FFRDC). The Health FFRDC team also includes two MITRE subcontractors, RTI International (RTI) and Atlas Research, Inc. Under the auspices of this project, MITRE has awarded subcontracts to two implementing organizations (IOs) that have established partnerships with approximately 14 secondary schools in 11 school districts to implement SRA education programs. For the purposes of this project, the FFRDC team defines IOs to be organizations focused on health, education, and social services that have experience working with schools or school districts to implement SRA programming. One IO has established partnerships with approximately 4 schools in 1 school district for this project, and the other has established partnerships with 10 schools in 10 different school districts. Each IO will be teaching a different SMARTool-aligned SRA curriculum to youth. Accordingly, results for each IO will be analyzed and reported separately, rather than pooled. Exhibit 1 displays these details.

Exhibit 1. Details about the Two Participating Implementing Organizations (IOs)

| IO and SRA curriculum | Length of curriculum* | Expected # of districts | Expected # of schools | Estimated # of classrooms | Estimated # of students |
| --- | --- | --- | --- | --- | --- |
| IO A (REAL Essentials) | 6 sessions (7.5 hours) | 1 | 4 | 17-20 classrooms (30-35 students per classroom) | 1,200 |
| IO B (Represent®) | 5 lessons (3.75 hours) | 10 | 10 | 56-60 classrooms | 1,200 |

*The REAL Essentials curriculum used in IO A may require 1-3 weeks to complete, depending on school scheduling constraints (e.g., longer class block schedules vs. shorter class period schedules). The Represent® curriculum used in IO B is approximately 1 week long.

The proposed formative evaluation will seek evidence of the effect of SMARTool-aligned SRA curricula on proximal youth outcomes (i.e., SRA-related knowledge, attitudes, intentions, skills, and peer and parental influences) that shape short- and longer-term sexual health behaviors. These proximal outcomes are aligned with (a) the 10 SMARTool “targets” or themes that correspond to protective factors associated with the sexual behaviors of youth, and (b) an SRA logic model developed as part of this project. A list of the 10 SMARTool targets is presented in Exhibit 2.

Exhibit 2. Overview of the 10 SMARTool Targets

| Target Number | Description |
| --- | --- |
| 1 | Enhance knowledge of physical development and sexual risks |
| 2 | Healthy relationship development |
| 3 | Support personal attitudes and beliefs that value sexual risk avoidance |
| 4 | Acknowledge and address common rationalizations for sexual activity |
| 5 | Improve perception of and independence from negative peer and social norms |
| 6 | Build personal competencies and self-efficacy to avoid sexual activity |
| 7 | Strengthen personal intention and commitment to avoid sexual activity |
| 8 | Identify and reduce the opportunities for sexual activity |
| 9 | Strengthen future goals and opportunities |
| 10 | Partner with parents |

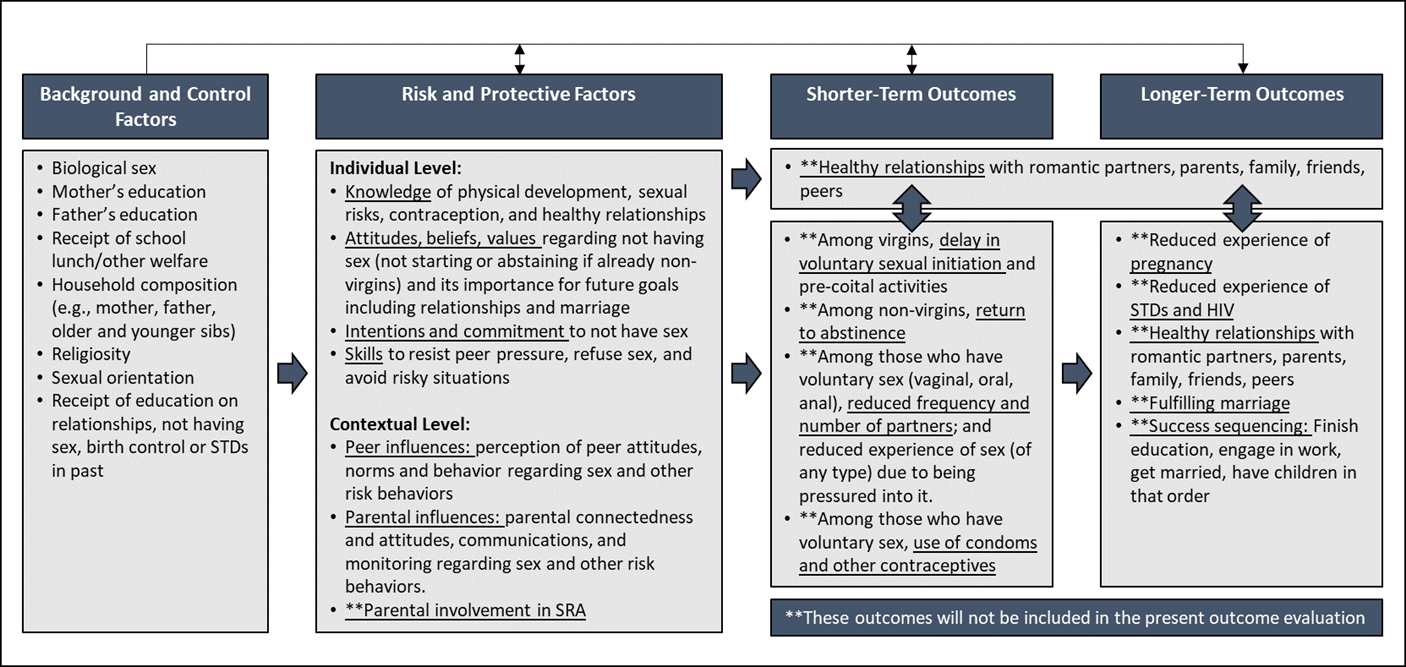

The SRA logic model is presented in Exhibit 3, with annotation to indicate which factors will be measured in the evaluation.

Factors from the SRA logic model that will be examined in this study are shown in Exhibit 4.

Exhibit 4. SRA Logic Model Factors that Will Be Examined in This Study

| Background and Control Factors | Proximal Outcomes (Risk and Protective Factors) |
| --- | --- |

The baseline and follow-up data collection instruments for youth in this evaluation are designed to measure these proximal outcomes. The data collection instruments are also designed to measure background (control or covariate) variables that have been theorized (in the SRA logic model) or empirically shown to account for some variance in youth proximal outcomes. Appendices A-J provide copies of the data collection instruments, including the Baseline and Follow-up outcome surveys (i.e., the Youth Outcome Questionnaire), which include measures of the proximal outcome constructs described above.

This formative evaluation will focus on examining changes in proximal outcomes, or individual risk and protective factors (e.g., short-term SRA-related knowledge, attitudes, and skills that may result from the SMARTool-aligned SRA curricula). Exhibit 5 shows the proximal outcome variables we will examine in this study, drawn from the 10 SMARTool targets and the SRA logic model.

Exhibit 5. Proximal Outcomes That Will Be Examined in This Study

| From 10 SMARTool Targets | From SRA Logic Model |
| --- | --- |

Given the short time frame between the baseline and follow-up youth surveys, we do not anticipate observing changes in longer-term outcomes such as youth sexual behavior, so this formative evaluation does not include research questions related to the impact of the SRA curricula on youth sexual behavior. The major drivers of this decision are the available funding and the period of performance of MITRE’s contract for this project. Given the timeline for data collection and analysis, it will not be feasible to conduct a second, longer-term follow-up data collection to assess outcomes such as behavior change. Limitations associated with conducting an impact analysis that focuses on youth attitudes and beliefs, but not behaviors, will be discussed in the final report.

Overview of Study Design

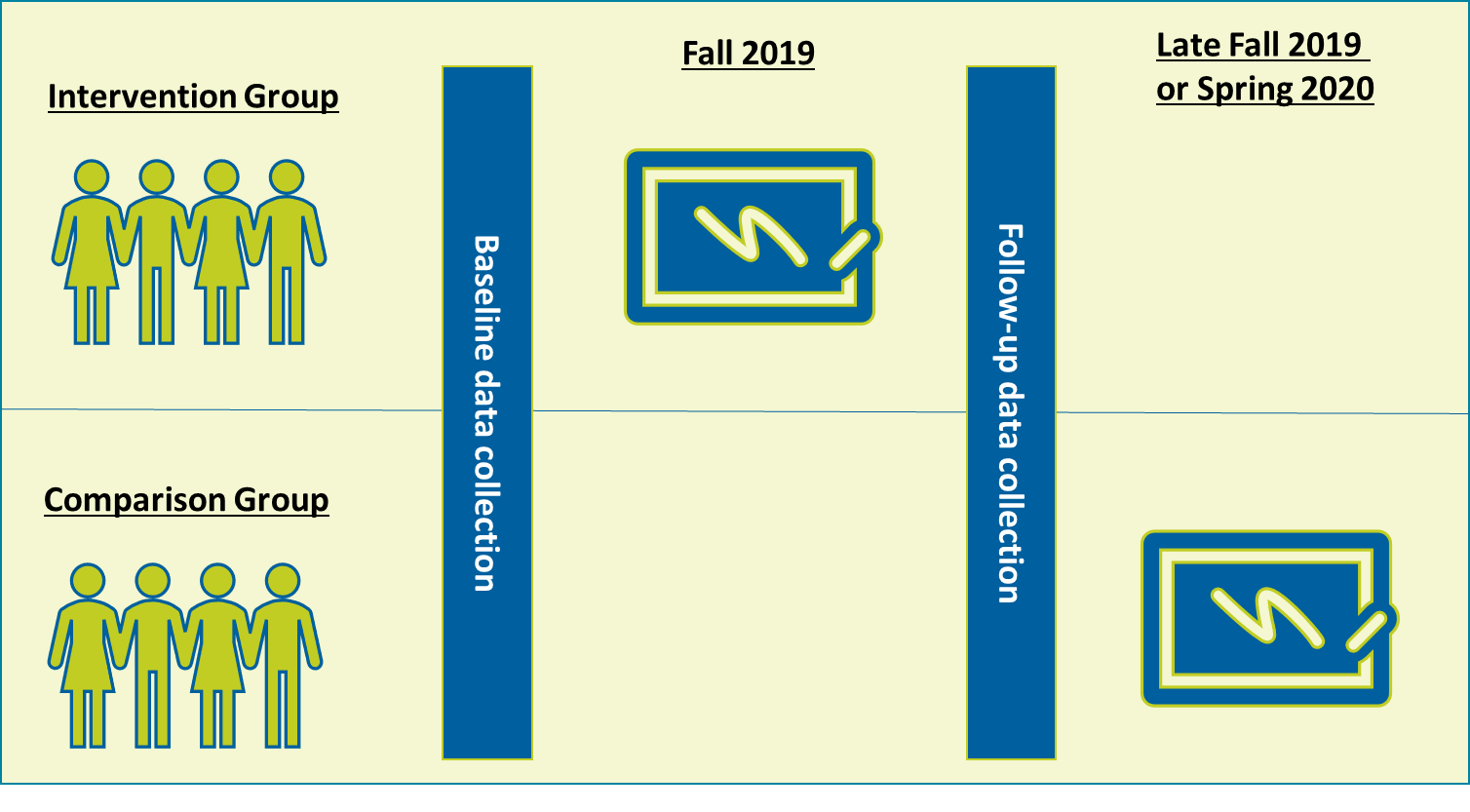

The study design to assess whether such changes have occurred is depicted in Exhibit 6.

Exhibit 6. Quasi-Experimental Study Design: Within-School Comparison Group

This study design is known as a quasi-experimental design with a within-school comparison group. Classes of students at each school will be assigned to either the intervention or comparison (i.e., non-intervention) group. The intervention group classes will receive the curriculum in Fall 2019, and the comparison group classes will not. The project team will collect data on proximal outcomes and background characteristics from youth in both the intervention and comparison groups at two time points: (a) baseline, immediately before the intervention group receives the curriculum, and (b) follow-up, immediately after the intervention group receives the curriculum.

The curriculum will be provided to the comparison group after follow-up data collection is complete, but this curriculum delivery is not part of the study design.

The primary research question being tested in this design is as follows:

What are the effects of SRA curricula on participants’ SRA-related proximal outcomes (e.g., knowledge, attitudes, intentions, skills, and perceptions or experiences of peer and parental influences)?

As noted above, our study design examines the effects of SRA curricula on SRA participants’ proximal outcomes relative to a comparison group that did not receive the SRA curricula during the field period of data collection.

The analytic approach to answer this question will involve a multilevel analysis that accounts for the clustering (nesting) of students within classrooms, because classrooms are the level at which groups are assigned to the intervention or comparison condition. The analysis will compare the change in youth outcomes over time in the intervention group with the change over time in the comparison group. If the change in the intervention group is larger than the change in the comparison group, controlling for key covariates (e.g., baseline sexual experience, demographics), then the study can be said to support the hypothesis that SRA curricula have some effect on the proximal youth outcomes measured in this study.
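One way to write the comparison of change described above is as a multilevel difference-in-change model. This is an illustrative specification, not the model stated by the project team, and the notation is introduced here as an assumption: $Y_{tij}$ is an outcome score at time $t$ for student $i$ in classroom $j$; $\mathrm{Post}_t$ equals 1 at follow-up and 0 at baseline; $T_j$ equals 1 if classroom $j$ is in the intervention group; and $\mathbf{X}_{ij}$ holds covariates such as baseline sexual experience and demographics.

```latex
Y_{tij} = \beta_0 + \beta_1\,\mathrm{Post}_t + \beta_2\,T_j + \beta_3\,(\mathrm{Post}_t \times T_j)
        + \boldsymbol{\gamma}^{\top}\mathbf{X}_{ij} + u_j + v_{ij} + \varepsilon_{tij},
\qquad u_j \sim N(0,\tau^2),\quad v_{ij} \sim N(0,\sigma_v^2),\quad \varepsilon_{tij} \sim N(0,\sigma^2)
```

Under this sketch, $\beta_3$ is the difference between the intervention group's baseline-to-follow-up change and the comparison group's change, which corresponds to the "larger change" criterion above. The classroom random effect $u_j$ carries the clustering at the level of assignment, and the student random effect $v_{ij}$ links each youth's two survey waves.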

In addition to this research question, the process evaluation will seek evidence of potential explanations for youth proximal outcome findings and generate descriptive data and qualitative insights on promising practices for IOs in delivering SRA programs. Process evaluation data will be collected on adherence (what is being taught), quality (how the content is taught), and implementation (logistics that support a conducive learning environment, such as context, dosage, and participant responsiveness).5 Descriptive and qualitative data will also be collected on IOs and their implementation to inform the collection and dissemination of good practices for SRA program providers and the broader field of adolescent pregnancy prevention. Process evaluation data will be collected from youth participants in the program, facilitators who deliver the curriculum, and observers who monitor the fidelity and quality of program delivery.

The IOs participating in the evaluation may use the results of the evaluation to improve their SRA curriculum and program implementation. The results of the evaluation will also inform HHS about the extent to which the two curricula affect youth proximal outcomes that are aligned with the SMARTool targets.

The data collection instruments may be shared with program providers or researchers who want to evaluate the impact of SRA curricula on the SMARTool targets. If resources permit, the project team will also conduct psychometric analysis (e.g., Cronbach’s alpha, factor analysis) and report basic psychometrics on the youth data collection instruments to inform HHS.
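For reference, Cronbach's alpha for a scale of k items is α = k/(k − 1) × (1 − Σσ²ᵢ / σ²ₓ), where σ²ᵢ is the variance of item i and σ²ₓ is the variance of the summed scale score. The sketch below computes it in Python, assuming complete numeric responses with no missing items; it illustrates the statistic and is not the project's psychometric code.

```python
from statistics import pvariance


def cronbach_alpha(responses):
    """Cronbach's alpha for a respondents-by-items score matrix.

    responses[r][i] is respondent r's score on item i. Population
    variances are used in both the numerator and the denominator, so the
    choice of variance estimator cancels out of the ratio.
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_columns = list(zip(*responses))  # transpose: one tuple per item
    item_var_sum = sum(pvariance(col) for col in item_columns)
    total_var = pvariance([sum(row) for row in responses])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - item_var_sum / total_var)
```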

Limitations of the study design.

The limitations of a within-school comparison group design measuring short-term effects on proximal outcomes are described here and will be described in the final report for the evaluation.

One limitation of a within-school comparison group design is the potential for unmeasured spillover or contamination effects: students in intervention group classes may communicate with students in comparison group classes in a way that influences comparison students’ attitudes, beliefs, or intentions before follow-up data are collected. Spillover and contamination effects could lead to underestimating the impact of the SRA curricula.6

The study team explored the possibility of mitigating the potential for spillover or contamination effects by employing a study design with a between-school comparison group (rather than a within-school comparison group). However, power calculations for a between-school design revealed that a prohibitively large number of schools (at least 22 intervention and 22 comparison schools) would be required to adequately power this design, given the project constraints (e.g., funding, time).7 Further, designs with a between-school comparison group typically have their own limitations, such as “business as usual” conditions in which schools in the comparison group implement their own pregnancy prevention curricula.8 Nonetheless, the limitations of the within-school comparison group design will be discussed in the final report for the evaluation.

3. Use of Improved Information Technology and Burden Reduction

Where feasible, available technology will be used to reduce burden and improve efficiency and accuracy. For example, student-level school records (such as gender, race/ethnicity, free and reduced-price meal eligibility, and English language learner status) will be provided to the study team in electronic format via a secure file transfer protocol (SFTP) environment that is approved by the MITRE Privacy Office for PII storage and transfer and that is only accessible to the project team. Data submitted in hard copy or via fax or PDF file will be manually keyed for storage in this secure environment.

Because of variable access to computers and the Internet in school settings, all surveys will be administered with paper-and-pencil questionnaires.

4. Efforts to Identify Duplication and Use of Similar Information

The evaluation does not duplicate other studies. Several studies have evaluated SRA curricula, including evaluation of curricula such as “Big Decisions” (Realini et al., 2010), “Choosing the Best” (Lieberman & Su, 2012), “Yes You Can!” (Donnelly et al., 2016), and others (Borawski et al., 2005; Jemmott et al., 2010; Kirby, 2008; Markham et al., 2012; Piotrowski & Hedeker, 2016; Weed et al., 2008). However, no studies have specifically evaluated the impact of the SRA curricula on youth outcomes that are aligned with the SMARTool targets.

5. Impact on Small Businesses or Other Small Entities

Schools included in this study may be part of small school districts. Burden will be minimized wherever possible. Contractor staff will conduct all data collection activities and will assist with parental consent and other study tasks as much as possible within each school. Schools will be able to provide student data (e.g., attendance data) in the format that is most efficient for them.

For the purposes of this information collection request, we make the conservative assumption that 100 percent of the schools providing student rosters and administrative data are part of districts that meet OMB’s definition of small entities: a small government jurisdiction, that is, the government of a city, county, town, township, school district, or special district with a population of less than 50,000.

6. Consequences of Not Collecting the Information or of Collecting Less Frequently

If the youth surveys were not conducted, it would not be possible to assess changes in program participants’ proximal outcomes.

7. Special Circumstances Relating to the Guidelines of 5 CFR 1320.5

The proposed data collection is consistent with the guidelines set forth in 5 CFR 1320.5.

8. Comments in Response to the Federal Register Notice, and Outside Consultation

Federal Register Notice Comments. A 60-Day Federal Register Notice was published in the Federal Register on July 2, 2018, in Volume 83, Number 127, pages 30942–30943 (see Appendix N). There were three public comments. A summary of public comments received in response to the 60-Day Federal Register Notice and a response to these comments are in Appendix R. No changes to this information collection request were made as a result of the public comments.

A 30-Day Federal Register Notice was published in the Federal Register on February 8, 2019, in Volume 84, Number 27, pages 2884-2885 (see Appendix L). There was one public comment, requesting copies of the data collection instruments, which were provided to the requestor. No changes to this information collection request were made as a result of the public comment.

Changes were made to this information collection after the publication of the 30-Day Federal Register Notice, based on consultation with IOs on community standards for sensitive questions and a follow-on expert review to minimize youth burden in completing the updated instruments. IOs provided guidance regarding acceptable language in their communities for youth surveys on sensitive topics such as sexual and pre-coital behaviors. This guidance was solicited to help increase parental consent and youth response rates in the communities where the study will be conducted. After IOs provided guidance, the youth surveys were updated to reflect community standards, and the updated surveys underwent expert review to minimize youth burden (e.g., by clarifying instructions and question wording).

Outside Consultation. To inform the design of the data collection, OASH consulted with a panel of technical experts on SRA education; the organization responsible for conducting the data collection, the MITRE Corporation; and MITRE’s subcontractors, RTI and Atlas Research. Exhibit 7 presents the name, affiliation, and contact information of members of the technical expert panel.

Exhibit 7. Outside Consultation with Technical Experts

| Name | Title and affiliation |
| --- | --- |
| Nanci Coppola, D.P.M., M.S. | Principal, Coppola and Company |
| William Jeynes, Ph.D. | Professor, California State University, Long Beach; Senior Fellow, Witherspoon Institute |
| Alma Golden, M.D. | Deputy Assistant Administrator, Bureau for Global Health, United States Agency for International Development |
| Peggy Pecchio | Executive Director |
| Wade F. Horn, Ph.D. | Managing Director, Deloitte Consulting |
| Lisa Rue, Ph.D. | Vice President, Marketing and Strategic Partnerships, Preventative Technology Solutions |

9. Explanation of Any Payment/Gifts to Respondents

A particular challenge to conducting research about youth is obtaining parental consent to participate in the data collection activities. To increase response rates, we will offer small incentives to individual students, classes or groups of students, and/or public school teachers for the return of signed consent forms (regardless of whether the parents grant consent to participate). The type of incentive most appropriate for each school will be determined in collaboration with the schools and the IOs. Options include:

A $25 gift card to each public school teacher for each classroom in which at least 90% of the parental consent forms are returned. Gift cards may be used to purchase classroom supplies or to pay for a class party. (These gift cards will go to school employees, not employees of the IO administering the curriculum.)

Eligibility to participate in two $25 gift card drawings for all youth who return a signed parental consent form.

A small incentive, equivalent to $1 or less in value (e.g., pen, notepad), for each youth who returns a signed form.

$50 for a party for the class from each grade at the school with the highest return rate.
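Two of the options above have rules precise enough to check mechanically: the 90% classroom return threshold (a returned form counts whether or not the parent granted consent) and the two $25 gift card drawings. The sketch below is a minimal illustration; the function names are hypothetical, and drawing two distinct winners (so that one youth cannot win both drawings) is an assumption the text does not state.

```python
import random

GIFT_CARD_THRESHOLD_PCT = 90  # from the text: at least 90% of forms returned


def teacher_card_earned(forms_returned, students_enrolled):
    """True if a classroom returned at least 90% of parental consent forms.

    Integer arithmetic avoids floating-point edge cases at exactly 90%.
    """
    if students_enrolled <= 0:
        raise ValueError("classroom must have at least one student")
    return forms_returned * 100 >= GIFT_CARD_THRESHOLD_PCT * students_enrolled


def draw_winners(returned_ids, n_drawings=2, seed=None):
    """Draw distinct winners among youth who returned a signed form.

    Assumption: sampling without replacement, so no youth wins twice.
    """
    rng = random.Random(seed)
    return rng.sample(list(returned_ids), k=min(n_drawings, len(returned_ids)))
```

For example, a class of 30 earns the card with 27 returned forms but not with 26.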

Exhibits 8 and 9 list studies that have employed similar individual and school-level incentives.

Exhibit 8. RTI Studies Involving Incentives for Class-Level Return of Consent Forms

| RTI study | Incentive provided |
| --- | --- |
| Safe Schools/Healthy Students Initiative (1999–2004) | $25 per classroom achieving a minimum of 70% active parental consent forms signed and returned |
| Middle School Coordinator Initiative (1999–2004) | $25 per classroom achieving a minimum of 70% active parental consent forms signed and returned |
| Impact Evaluation of a School-Based Violence Prevention Program (2004–2009) | $25 per classroom achieving a minimum of 90% active parental consent forms signed and returned |

Exhibit 9. RTI Studies Involving Incentives to Youth for Return of Consent Forms

10. Assurance of Confidentiality Provided to Respondents

Parental consent and youth assent forms are provided in Appendices A and B, respectively. These forms discuss participants’ rights and privacy protections.

Personally identifiable information (PII) about participating youth—including name, date of birth, and student identification number—will be collected from their school or school district office along with school records data (e.g., gender, race/ethnicity, grade, homeroom teacher, eligibility for free and/or reduced-price lunches, and English as a Second Language (ESL) designation). PII will be used to construct youth rosters for the purpose of tracking parental consent forms for the youth surveys, distributing survey booklets to youth, and tracking attrition. This latter issue of tracking attrition is particularly important in program evaluation, so that researchers and other stakeholders can understand any differences between youth who participated in the study versus those who did not. Without receiving rosters of all eligible students in the school, researchers would only have demographic data from the youth who did participate in the study and would be unable to determine whether these youth differed from those who did not participate in the study.9 Some PII (e.g., demographics) from the rosters will also be used as covariates or control variables for the analysis.

Schools will share these PII with the project team via a secure file transfer protocol (SFTP) environment that is approved by the MITRE Privacy Office for PII storage and transfer and that is only accessible to the project team. Privacy training and controlled administrative access approvals will be required for project team members to access this environment.

To better protect youth identities, each youth will be assigned a unique study identification number that corresponds with information they provide in the surveys and data from school records. Before the roster data is merged with the survey data, analysts will assign this unique study identification number to each student. They will then delete student names and other identifiers (e.g., student ID assigned by school) from the roster and survey data files. Date of birth will also be transformed into a simple “age” variable (e.g., 14, 15) and then date of birth will be deleted from the roster file. After the student names, student ID from the school, and date of birth are deleted from the roster file, the roster data will be merged with the survey data. Personal identifiers such as name, date of birth, and student ID number will be stripped from the datasets and destroyed.
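The de-identification steps above can be sketched as follows. This is a minimal Python illustration, not the project's procedure: the field names (`name`, `school_student_id`, `dob`), the `study_id_for` mapping from school student ID to study ID, and the reference date used to compute age are all hypothetical.

```python
from datetime import date

# Hypothetical field names for the direct identifiers on a school roster.
DIRECT_IDENTIFIERS = ("name", "school_student_id", "dob")


def age_on(dob, ref):
    """Completed years of age on the reference date."""
    before_birthday = (ref.month, ref.day) < (dob.month, dob.day)
    return ref.year - dob.year - int(before_birthday)


def deidentify_roster(roster, study_id_for, ref_date):
    """Assign study IDs, convert date of birth to age, and drop identifiers.

    study_id_for maps school student ID -> study ID; in this sketch it
    stands in for the crosswalk held only by the data collection team.
    """
    cleaned = []
    for student in roster:
        record = {k: v for k, v in student.items() if k not in DIRECT_IDENTIFIERS}
        record["study_id"] = study_id_for[student["school_student_id"]]
        record["age"] = age_on(student["dob"], ref_date)
        cleaned.append(record)
    return cleaned


def merge_with_surveys(cleaned_roster, surveys_by_study_id):
    """Attach survey responses using only the study ID as the join key."""
    return [{**r, **surveys_by_study_id.get(r["study_id"], {})} for r in cleaned_roster]
```

Merging happens only after the identifiers are gone, mirroring the order described above, so no merged record ever carries a name, school ID, or date of birth.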

All other data will be collected in paper form.

To protect youth privacy, a barcode label will be affixed to the cover of each youth survey booklet (baseline, follow-up, and process evaluation participant surveys), on which students will either write their name or find their name pre-assigned to that specific barcode. After the survey booklets are distributed, youth will be instructed to remove the entire cover sheet containing their name, or the part of the label containing their name, leaving only the ID and barcode on the cover sheet and/or on all subsequent pages of the survey booklet. This enables the study team to link each youth’s baseline and follow-up data for analysis without using PII to do so.
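Because the barcode study ID is the only key shared across survey waves, linking baseline and follow-up responses, and measuring attrition among baseline completers, needs no PII. A minimal sketch, assuming each wave has been keyed into a dictionary from study ID to item responses; the function name and the `bl_`/`fu_` column prefixes are hypothetical.

```python
def link_waves(baseline, follow_up):
    """Link baseline and follow-up responses on the barcode study ID.

    baseline, follow_up: dicts mapping study ID -> dict of item responses.
    Returns (linked records keyed by study ID, attrition rate among
    baseline completers). Follow-up IDs with no baseline record are ignored.
    """
    if not baseline:
        raise ValueError("no baseline records")
    linked = {}
    for study_id in sorted(baseline.keys() & follow_up.keys()):
        record = {f"bl_{item}": v for item, v in baseline[study_id].items()}
        record.update({f"fu_{item}": v for item, v in follow_up[study_id].items()})
        linked[study_id] = record
    attrition = 1 - len(linked) / len(baseline)
    return linked, attrition
```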

No PII will be stored with the data collected from the youth surveys or associated with the school records data. Only the study identification number will be stored with the data. Only members of the project team conducting the data collection will have a crosswalk that links study IDs to youth names. Once data collection has been completed and the final analytic datasets produced, the crosswalk will be destroyed. No study participant names or other identifying information will be included in any reports or data sets.

The RTI Research Operations Center (ROC) will process data from all paper forms. The SFTP environment will be used to store and analyze all data. The ROC complies with the confidentiality and data security guidelines set forth by the National Institute of Standards and Technology (NIST) in Recommended Security Controls for Federal Information Systems and Organizations. The ROC can accommodate both low- and moderate-risk projects, as defined by NIST’s Federal Information Processing Standards Publication 199, Standards for Security Categorization of Federal Information and Information Systems. After ROC processing is complete, the ROC will produce final datasets that are stripped of identifiers (e.g., name, DOB) and include only the study identification number. The ROC will then transfer these datasets to MITRE using the SFTP environment. All data will be stored and analyzed within this secure environment at MITRE. For paper forms, access to the keys to locked cabinets where paper data is stored will be formally controlled to ensure that only authorized individuals are allowed access to the paper data.

To further protect student and school identities, school names and locations will not be shared with the Agency sponsoring the study (OASH) or with anyone other than the Contractors performing the research. The data will be aggregated and reported for each of the two organizations delivering the two different curricula. The aggregated results may be shared with the organizations delivering the curricula for program improvement purposes.

Procedures for maintaining privacy will include: notarized nondisclosure affidavits obtained from all study personnel who will have access to individual identifiers; personnel training regarding the meaning of privacy; controlled and protected access to computer files; built-in safeguards concerning status monitoring and receipt control systems; and a secure, staffed, in-house computing facility.

Per the Protection of Pupil Rights Amendment (PPRA) (https://studentprivacy.ed.gov/topic/protection-pupil-rights-amendment-ppra), copies of the surveys will be made available in the school (or site) office for parents to review before they sign the consent form.

Data will be kept private to the extent allowed by law. Student-level records for participating youth will be requested from school districts and/or schools under the Family Educational Rights and Privacy Act (FERPA) of 1974 (34 CFR Part 99) exception to the general consent requirement that permits disclosures to “organizations conducting studies for, or on behalf of, educational agencies or institutions” (34 CFR §§ 99.31(a) and 99.35). This information will be securely destroyed when no longer needed for the purposes specified in 34 CFR §99.35.

Data from key informant interviews and facilitator surveys will be provided to IOs and schools only at the aggregate level to protect respondents’ privacy. Because the facilitator Session Logs are part of the IOs’ ongoing program monitoring efforts, the IOs will have access to the data from the Session Logs at the individual level, but the schools will not.

Per HHS 45 CFR 46, all data will be retained for 3 years after MITRE submits its final expenditure report and then destroyed. A de-identified dataset may be provided to OASH upon completion of the study. Before delivering any de-identified dataset to OASH, the project team will conduct a privacy analysis and will aggregate reporting categories for demographic variables to ensure that no fewer than 5 individuals are in any combination of key demographic categories (e.g., Black female 15-year-olds), to mitigate the risk of re-identification.
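The minimum-cell-size rule above can be checked mechanically before a dataset is released. The sketch below counts records in each combination of the chosen demographic variables and lists combinations with fewer than 5 individuals; the variable names and the `age_band` coarsening rule are hypothetical examples of the aggregation the text describes.

```python
from collections import Counter

MIN_CELL_SIZE = 5  # from the text: no fewer than 5 individuals per combination


def small_cells(records, keys, min_size=MIN_CELL_SIZE):
    """Return combinations of the given variables with fewer than min_size records."""
    counts = Counter(tuple(r[k] for k in keys) for r in records)
    return {combo: n for combo, n in counts.items() if n < min_size}


def age_band(age):
    """Hypothetical coarsening rule: collapse single years of age into two bands."""
    return "15 or younger" if age <= 15 else "16 or older"


records = (
    [{"gender": "F", "age": 14}] * 2
    + [{"gender": "F", "age": 15}] * 3
    + [{"gender": "F", "age": 16}] * 5
)
print(small_cells(records, ("gender", "age")))  # {('F', 14): 2, ('F', 15): 3}
coarsened = [{**r, "age": age_band(r["age"])} for r in records]
print(small_cells(coarsened, ("gender", "age")))  # {}
```

In this toy example, single-year ages leave cells of 2 and 3 youth; collapsing age into bands brings every cell to at least 5.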

RTI IRB has determined that the evaluation is not research involving human subjects as defined by DHHS regulations. We have also submitted the study to the MITRE Privacy Office and MITRE IRB for review, and we plan to submit an application for a Certificate of Confidentiality after receiving the MITRE IRB’s determination. As required by school districts, we will also submit the study to individual school district IRBs for review.

11. Justification for Sensitive Questions

The primary purpose of SRA curricula is to reduce adolescent sexual risk behaviors and related outcomes such as pregnancy and sexually transmitted diseases. Although this study is not assessing behavioral outcomes due to timeline constraints, it is necessary to ask sensitive questions about these behavioral constructs to contextualize the findings and to include them as covariates, or control variables, for the study. Questions in the youth baseline and follow-up surveys will include whether youth have ever engaged in voluntary sexual intercourse. The questionnaire will not ask about any non-voluntary sexual experiences they may have had. Youth who respond that they have never engaged in sexual intercourse will be skipped out of all further questions about sexual activity; youth who respond that they have engaged in sexual intercourse will be asked additional questions about age at first sexual intercourse and number and sex of partners. Questions about sexual activity are necessary to assess risk factors for sexually transmitted diseases and pregnancy.

12. Estimates of Annualized Hour and Cost Burden

Calculation of the estimated annualized hour burden of responding to this information collection is presented in Exhibit 10 and the estimated annualized cost to participants is presented in Exhibit 11. Evaluation data collection will be conducted in one year (May 2019–January 2020), so the total burden and costs are the same as the annualized burden and costs. The total estimated annualized burden hours are 2,243, and the total estimated cost is $30,983.30.

The 2,243 burden hours are lower than the 3,135 estimated in the 30-day FRN. This is because we finalized the number of IOs (2 rather than the 4 previously assumed) and provided an updated estimate of the number of participating schools that would provide class rosters and administrative data (14). We also updated the number of teachers, facilitators, and sessions based on these final numbers of IOs and schools.

For the youth proximal outcome evaluation, parents will be asked to sign a parental consent form, youth will be asked to review and sign a youth assent form, and youth will complete a baseline and follow-up survey. We will also collect class rosters and administrative data from sites. The numbers in the estimated annualized burden hours table (Exhibit 10) represent the burden hours for intervention and comparison groups for both IOs. Exhibit 11 provides the estimated annualized cost to respondents.

Parental consent. An anticipated 1,680 parents will complete a 5-minute parental consent form, for a total burden of 140 hours. Using the U.S. Department of Labor, Bureau of Labor Statistics (BLS) May 2017 estimate of the average hourly wage for all occupations ($24.34), the total cost will be $3,407.60.

Youth assent. An anticipated 1,680 youth will complete a 5-minute assent form, for a total burden of 140 hours and a cost of $1,015.00. The federal minimum wage of $7.25 per hour (an approximation of the hourly wage that students could earn) is used to value the time cost of participation. However, because the assent and surveys will be administered during the school day, the real cost to students is the opportunity cost of completing the survey instruments in lieu of formal classroom instruction.

Baseline youth survey. The baseline survey will use Teleform scannable questionnaires and will take 30 minutes on average to complete. An anticipated 1,596 youth will complete the survey, for a burden of 798 hours. Based on the figure of $7.25 per hour, the estimated annual cost to students for the baseline survey is $5,785.50.

Follow-up youth survey. The follow-up survey will use Teleform scannable questionnaires and will take 30 minutes on average to complete. After consulting with IOs, we estimate that 5% of youth who complete the baseline survey will not complete the follow-up survey (attrition); the burden estimate nonetheless assumes that all 1,596 baseline respondents complete the follow-up survey, for a burden of 798 hours. Based on the figure of $7.25 per hour, the estimated annual cost to students for the follow-up survey is $5,785.50.

Classroom roster report. An anticipated 14 schools will provide administrative data and rosters of youth in the school who are eligible for the SRA program. We estimate that producing administrative data and rosters will take an average of two hours per school, for a total burden of 28 hours. Based on an adjusted hourly wage of $45.38 for school administrative staff, the total cost for the rosters will be $1,270.64.

For the process evaluation, data will be collected through youth and facilitator surveys, key informant interviews, a Session Log completed by program facilitators, and an attendance form.

Facilitator Session Logs. To obtain information about the fidelity with which the curriculum is administered, and to document the level of youth engagement, facilitators (employed by the IOs) will complete a Session Log for each session they facilitate. We anticipate that each of the 8 facilitators will complete approximately 75 Session Logs (assuming 120 classes of 20 students each at 5 sessions per class, or 600 sessions divided among 8 facilitators), and that each Session Log will take approximately 15 minutes to complete. The total burden will be 150 hours. Using the adjusted hourly wage for high school teachers of $60.12, the total cost will be $9,018.00.
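The Session Log counts follow directly from the class and session assumptions stated above. The short Python sketch below reproduces that arithmetic as an illustrative check; the function name `session_log_burden` is hypothetical, and the sketch assumes one log per class session with sessions divided evenly among facilitators.

```python
def session_log_burden(classes, sessions_per_class, facilitators, minutes_per_log):
    """Return (logs per facilitator, total burden hours) for the Session Log.

    Illustrative assumptions: one Session Log per class session, and
    sessions split evenly across facilitators.
    """
    total_logs = classes * sessions_per_class          # 120 x 5 = 600 sessions
    logs_per_facilitator = total_logs / facilitators   # 600 / 8 = 75 logs each
    total_hours = total_logs * minutes_per_log / 60    # 600 x 15 min = 150 hours
    return logs_per_facilitator, total_hours


if __name__ == "__main__":
    per_facilitator, hours = session_log_burden(120, 5, 8, 15)
    print(f"{per_facilitator:.0f} logs per facilitator, {hours:.0f} hours total")
```

With the stated inputs this yields 75 logs per facilitator and 150 total hours, the figures used in Exhibits 10 and 11.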

Facilitator surveys. An anticipated 8 facilitators will complete a 25-minute survey about their SRA knowledge and attitudes and their experiences implementing the SRA program. The total burden is expected to be approximately 3 hours (8 × 25 minutes = 200 minutes, rounded to whole hours). Using the adjusted hourly wage for high school teachers of $60.12, the total cost will be $180.36.

Process evaluation participant surveys. An anticipated 756 youth (approximately half of the youth completing the follow-up survey, i.e., those in the intervention group) will complete a 10-minute survey about their experience with the SRA course and facilitator. The total burden is expected to be 126 hours. Based on the figure of $7.25 per hour, the estimated annual cost to students for the participant surveys is $913.50.

Key informant interviews. To obtain a more in-depth understanding of the implementation process, the contractor will conduct in-person key informant interviews with facilitators as well as representatives of the study sites. For each of the two IOs, two representatives of the sites and three program facilitators/staff will be interviewed, for a total of 10 key informant interviews across both IOs. Each interview is anticipated to last one hour, so the total burden is 10 hours. Using the adjusted hourly wage for high school teachers of $60.12, the total cost will be $601.20.

Attendance forms. We anticipate that 120 teachers will each provide attendance data for 5 class sessions and that each attendance form will take 5 minutes to complete, for a total burden of 50 hours. Using the adjusted hourly wage for high school teachers of $60.12, the total cost will be $3,006.00.

Exhibit 10. Estimated Annualized Burden Hours

| Respondents | Form name | No. of respondents | No. of responses per respondent | Average burden per response (in hours) | Total burden (hours) |
|---|---|---|---|---|---|
| Parents | Parental consent | 1,680 | 1 | 5/60 | 140 |
| High school students | Youth assent | 1,680 | 1 | 5/60 | 140 |
| High school students | Baseline survey | 1,596 | 1 | 30/60 | 798 |
| High school students | Follow-up survey | 1,596 | 1 | 30/60 | 798 |
| School or school district administrative staff | Classroom roster report | 14 | 1 | 120/60 | 28 |
| Program facilitators | Process evaluation facilitator Session Log | 8 | 75 | 15/60 | 150 |
| Program facilitators | Process evaluation facilitator survey | 8 | 1 | 25/60 | 3 |
| High school students | Process evaluation participant survey | 756 | 1 | 10/60 | 126 |
| Program facilitators, site representatives | Key informant interviews | 10 | 1 | 60/60 | 10 |
| Teachers | Attendance form | 120 | 5 | 5/60 | 50 |
| Total burden | | | | | 2,243 |
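As a cross-check on Exhibit 10, the Python sketch below recomputes each row as respondents × responses per respondent × minutes per response ÷ 60 and sums the rows. The values are transcribed from the exhibit; the names `BURDEN_ROWS`, `row_hours`, and `total_hours` are illustrative only. One assumption is labeled in the code: each row appears to be rounded to whole hours before totaling (the facilitator survey's 8 × 25/60 ≈ 3.33 hours is reported as 3), so exact fractions are used and rounding happens only at that step.

```python
from fractions import Fraction

# Rows transcribed from Exhibit 10:
# (form name, number of respondents, responses per respondent, minutes per response)
BURDEN_ROWS = [
    ("Parental consent", 1680, 1, 5),
    ("Youth assent", 1680, 1, 5),
    ("Baseline survey", 1596, 1, 30),
    ("Follow-up survey", 1596, 1, 30),
    ("Classroom roster report", 14, 1, 120),
    ("Process evaluation facilitator Session Log", 8, 75, 15),
    ("Process evaluation facilitator survey", 8, 1, 25),
    ("Process evaluation participant survey", 756, 1, 10),
    ("Key informant interviews", 10, 1, 60),
    ("Attendance form", 120, 5, 5),
]


def row_hours(respondents: int, responses: int, minutes: int) -> int:
    """Burden hours for one row: respondents x responses x minutes / 60.

    Assumption: the exact value is rounded to whole hours per row, which
    reproduces the 3-hour facilitator survey figure (exactly 10/3 hours).
    """
    exact = Fraction(respondents * responses * minutes, 60)
    return round(exact)


def total_hours(rows) -> int:
    """Sum of the rounded per-row burden hours."""
    return sum(row_hours(n, k, m) for _, n, k, m in rows)


if __name__ == "__main__":
    for form, n, k, m in BURDEN_ROWS:
        print(f"{form:<45} {row_hours(n, k, m):>6,}")
    print(f"{'Total burden':<45} {total_hours(BURDEN_ROWS):>6,}")
```

Running it prints one line per form and a total of 2,243 hours, matching the figure stated in the text.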

Exhibit 11. Estimated Annualized Cost to Respondents

| Respondents | Form name | Total burden (hours) | Hourly wage (adjusted where applicable) | Total respondent costs |
|---|---|---|---|---|
| Parents | Parental consent | 140 | $24.34 | $3,407.60 |
| High school students | Youth assent | 140 | $7.25 | $1,015.00 |
| High school students | Baseline survey | 798 | $7.25 | $5,785.50 |
| High school students | Follow-up survey | 798 | $7.25 | $5,785.50 |
| School or school district administrative staff | Classroom roster report | 28 | $45.38 | $1,270.64 |
| Program facilitators | Facilitator Session Log | 150 | $60.12 | $9,018.00 |
| Program facilitators | Facilitator survey | 3 | $60.12 | $180.36 |
| High school students | Process evaluation participant survey | 126 | $7.25 | $913.50 |
| Program facilitators, site representatives | Key informant interviews | 10 | $60.12 | $601.20 |
| Teachers | Attendance form | 50 | $60.12 | $3,006.00 |
| Total cost | | 2,243 | | $30,983.30 |
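Each respondent cost in Exhibit 11 is the row's burden hours multiplied by the applicable hourly wage. The sketch below, assuming the hours and wages shown in the exhibit, recomputes the rows and the total with Python's standard `decimal` module so that dollar amounts are not distorted by binary floating point. `COST_ROWS`, `row_cost`, and `total_cost` are illustrative names, and rounding each row to the cent before summing is an assumption.

```python
from decimal import Decimal, ROUND_HALF_UP

# Rows transcribed from Exhibit 11:
# (form name, total burden hours, hourly wage in dollars as a string)
COST_ROWS = [
    ("Parental consent", 140, "24.34"),
    ("Youth assent", 140, "7.25"),
    ("Baseline survey", 798, "7.25"),
    ("Follow-up survey", 798, "7.25"),
    ("Classroom roster report", 28, "45.38"),
    ("Facilitator Session Log", 150, "60.12"),
    ("Facilitator survey", 3, "60.12"),
    ("Process evaluation participant survey", 126, "7.25"),
    ("Key informant interviews", 10, "60.12"),
    ("Attendance form", 50, "60.12"),
]

CENT = Decimal("0.01")


def row_cost(hours: int, wage: str) -> Decimal:
    """Respondent cost for one row: burden hours x hourly wage, to the cent."""
    return (Decimal(hours) * Decimal(wage)).quantize(CENT, rounding=ROUND_HALF_UP)


def total_cost(rows) -> Decimal:
    """Sum of the per-row respondent costs."""
    return sum((row_cost(h, w) for _, h, w in rows), Decimal("0.00"))


if __name__ == "__main__":
    for form, hours, wage in COST_ROWS:
        print(f"{form:<40} ${row_cost(hours, wage):>10,.2f}")
    print(f"{'Total cost':<40} ${total_cost(COST_ROWS):>10,.2f}")
```

Under these assumptions the total comes to $30,983.30, the figure reported in the text.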

Respondents will include youth participants in each of the IOs’ SRA programs (9th- or 10th-grade students), their parent(s), and samples of the program facilitators and of representatives from participating sites (e.g., teachers, school principals, administrative staff). There will be no cost to participants other than their time.

Wage Estimates. To derive wage estimates, we used data from the U.S. Bureau of Labor Statistics’ (BLS) May 2017 National Occupational Employment and Wage Estimates. We have increased the school employee hourly wage estimates by 100 percent (i.e., doubled them) to reflect current HHS department-wide guidance on estimating the cost of fringe benefits and overhead (see Exhibit 12). For school administrative staff, we use the adjusted hourly wage for the BLS occupation title Education, Training, and Library Workers, All Other; for teachers, program facilitators, and site representatives, we use the adjusted hourly wage for Secondary School Teachers.

To calculate parent time costs, we used the mean hourly wage for all occupations from the same BLS data. To calculate youth time costs, we used the federal minimum wage. We have not adjusted these wages for fringe benefits and overhead because direct wage costs represent the “opportunity cost” to parents and youth of time spent completing the forms.

Exhibit 12. Adjusted Hourly Wages Used in Burden Estimates

| Occupation Title | Occupational Code | Mean Hourly Wage ($/hr.) | Fringe Benefits and Overhead ($/hr.) | Adjusted Hourly Wage ($/hr.) |
|---|---|---|---|---|
| Education, Training, and Library Workers, All Other | 25-9099 | $22.69 | $22.69 | $45.38 |
| Secondary School Teachers | 25-2030 | $30.06* | $30.06 | $60.12 |
| All Occupations | 00-0000 | $24.34 | N/A | $24.34 |
| Not applicable (youth wage) | N/A | $7.25 (federal minimum wage) | N/A | $7.25 |

Source: “Occupational Employment and Wage Estimates May 2017,” U.S. Department of Labor, Bureau of Labor Statistics. http://www.bls.gov/oes/current/oes_nat.htm

* BLS reports wages for some occupations that do not generally work year-round, full time, either as hourly wages or as annual salaries, depending on how they are typically paid. The annual mean wage for Secondary School Teachers is $62,730. Dividing this by the Office of Personnel Management’s 2,087 annual standard work hours gives a mean hourly wage for Secondary School Teachers of $30.06.
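The footnote's salary-to-hourly conversion and the 100 percent fringe benefits and overhead adjustment described under Wage Estimates can be sketched as follows. This is an illustrative check rather than the agency's procedure: the function names are hypothetical, and rounding to the cent after each step is an assumption chosen because it reproduces the published $30.06, $60.12, and $45.38 figures.

```python
from decimal import Decimal, ROUND_HALF_UP

OPM_ANNUAL_HOURS = 2087                 # OPM annual standard work hours
FRINGE_OVERHEAD_RATE = Decimal("1.00")  # 100 percent adjustment for school employees
CENT = Decimal("0.01")


def to_cents(amount: Decimal) -> Decimal:
    """Round a dollar amount to the nearest cent (halves round up)."""
    return amount.quantize(CENT, rounding=ROUND_HALF_UP)


def hourly_from_annual(annual_salary: str) -> Decimal:
    """Convert an annual mean wage to an hourly wage using 2,087 hours."""
    return to_cents(Decimal(annual_salary) / OPM_ANNUAL_HOURS)


def adjusted_hourly(mean_hourly: Decimal) -> Decimal:
    """Add fringe benefits and overhead at FRINGE_OVERHEAD_RATE of the base wage."""
    return to_cents(mean_hourly * (1 + FRINGE_OVERHEAD_RATE))


if __name__ == "__main__":
    teacher_hourly = hourly_from_annual("62730")
    print("Secondary School Teachers:", teacher_hourly, "->", adjusted_hourly(teacher_hourly))
    print("Education, Training, and Library Workers, All Other:",
          adjusted_hourly(Decimal("22.69")))
```

Parent and youth wages ($24.34 and $7.25) are deliberately not passed through `adjusted_hourly`, consistent with the text's treatment of them as direct opportunity costs.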

Estimate of Other Total Annual Cost Burden to Respondents or Recordkeepers and Estimate of Capital Costs

This information collection entails no respondent costs other than the cost associated with response time burden, and no non-labor costs for capital, startup or operation, maintenance, or purchased services.

The estimated cost for the design and conduct of the evaluation is $2.85 million annually. This total covers all evaluation activities, including coordination among OASH, the contractors, and the IOs; compensation to schools for school records data; project planning and schedule development; Institutional Review Board applications; study design; technical assistance to IOs; data collection; data analysis and reporting; and progress reporting.

This will be a new data collection.

Data will be collected between May 2019 and January 2020. Data will be tabulated and presented to OASH in May 2020. See Exhibit 13 for the anticipated schedule.

Exhibit 13. Estimated Schedule for the Evaluation

| Activity | Start date | End date |
|---|---|---|
| Research approvals from participating schools, as needed | December 2018 | May 2019 |
| Collection of parental consent | May 2019 | September 2019 |
| Youth data collection | September 2019 | December 2019 |
| Data analysis | October 2019 | April 2020 |
| Summary report | ‒ | May 2020 |

The expiration date for OMB approval will be displayed on all data collection instruments.

There are no exceptions to the certification.

References

Borawski, E. A., Trapl, E. S., Lovegreen, L. D., Colabianchi, N., & Block, T. (2005). Effectiveness of abstinence-only intervention in middle school teens. American Journal of Health Behavior, 29, 423–434. http://dx.doi.org/10.5993/AJHB.29.5.5

Coyle, K. K., & Glassman, J. R. (2016). Exploring alternative outcome measures to improve pregnancy prevention programming in younger adolescents. American Journal of Public Health, 106(Suppl 1), S20–S22. https://doi.org/10.2105/AJPH.2016.303383

Donnelly, J., Horn, R. R., Young, M., & Ivanescu, A. E. (2016). The effects of the Yes You Can! curriculum on the sexual knowledge and intent of middle school students. Journal of School Health, 86(10), 759–765. https://doi.org/10.1111/josh.12429

Family and Youth Services Bureau. (n.d.). Making Adaptations Tip Sheet. https://www.acf.hhs.gov/sites/default/files/fysb/prep-making-adaptations-ts.pdf.

Francis, K., Woodford, M., & Kelsey, M. (2015). Evaluation of the Teen Outreach Program in Hennepin County, MN: Findings from the Replication of an Evidence-Based Teen Pregnancy Prevention Program. https://www.hhs.gov/ash/oah/sites/default/files/ash/oah/oah-initiatives/evaluation/grantee-led-evaluation/reports/hennepin-final-report.pdf.

Jemmott III, J. B., Jemmott, L. S., & Fong, G. T. (2010). Efficacy of a theory-based abstinence-only intervention over 24 months: A randomized controlled trial with young adolescents. Archives of Pediatrics and Adolescent Medicine, 164(2), 152–159. https://doi.org/10.1001/archpediatrics.2009.267

Kirby, D. B. (2008). The impact of abstinence and comprehensive sex and STD/HIV education programs on adolescent sexual behavior. Sexuality Research and Social Policy, 5(3), 18–27. https://doi.org/10.1525/srsp.2008.5.3.18

Lieberman, L., & Su, H. (2012). Impact of the Choosing the Best program in communities committed to abstinence education. SAGE Open, 2(1), 2158244012442938. https://doi.org/10.1177/2158244012442938

Markham, C. M., Tortolero, S. R., Peskin, M. F., Shegog, R., Thiel, M., Baumler, E. R., & Robin, L. (2012). Sexual risk avoidance and sexual risk reduction interventions for middle school youth: A randomized controlled trial. Journal of Adolescent Health, 50, 279–288. https://doi.org/10.1016/j.jadohealth.2011.07.010

Office of Adolescent Health, U.S. Department of Health and Human Services. (2017). Should Teen Pregnancy Prevention Studies Randomize Students or Schools? The Power Tradeoffs Between Contamination Bias and Clustering. Evaluation Technical Assistance Brief for OAH Teenage Pregnancy Prevention Grantees. https://www.hhs.gov/ash/oah/sites/default/files/contamination-bias-and-clustering-brief.pdf.

Office of the Assistant Secretary for Planning and Evaluation, Office of Human Services Policy, U.S. Department of Health and Human Services. (2015). Improving the Rigor of Quasi-Experimental Impact Evaluations. ASPE Research Brief. https://tppevidencereview.aspe.hhs.gov/pdfs/rb_TPP_QED.pdf.

Piotrowski, Z. H., & Hedeker, D. (2016). Evaluation of the Be the Exception sixth-grade program in rural communities to delay the onset of sexual behavior. American Journal of Public Health, 106(S1), S132–S139. https://doi.org/10.2105/ajph.2016.303438

Realini, J. P., Buzi, R. S., Smith, P. B., & Martinez, M. (2010). Evaluation of “Big Decisions”: An abstinence-plus sexuality curriculum. Journal of Sex and Marital Therapy, 36(4), 313–326. https://doi.org/10.1080/0092623x.2010.488113

Schochet, P. Z. (2005). Statistical Power for Random Assignment Evaluations of Education Programs. https://cire.mathematica-mpr.com/~/media/publications/pdfs/statisticalpower.pdf.

Schochet, P. Z. (2008). Statistical Power for Random Assignment Evaluations of Education Programs. Journal of Educational and Behavioral Statistics, 33(1), 62–87. https://journals.sagepub.com/doi/abs/10.3102/1076998607302714.

Weed, S. E., Ericksen, I. E., Lewis, A., Grant, G. E., & Wibberly, K. H. (2008). An abstinence program’s impact on cognitive mediators and sexual initiation. American Journal of Health Behavior, 32(1), 60–73. https://doi.org/10.5993/ajhb.32.1.6

What Works Clearinghouse. (2018). What Works Clearinghouse Standards Handbook, Version 4.0. Washington DC: National Center for Education Evaluation and Regional Assistance, Institute of Education Sciences, US Department of Education.

1 The original SMARTool was developed in 2010 by the Center for Relationship Education through a cooperative agreement with the Centers for Disease Control and Prevention, Division of Adolescent School Health: 1-U58-DP000452.

2 The SMARTool defines SRA education as follows: “Sexual risk avoidance education is defined in legislation and while there is some variance in the language, there are several core elements: (1) SRA education must focus on the benefits of voluntarily refraining from nonmarital sexual activity. Within that context SRA education must, (2) present medically-accurate and age-appropriate information; (3) implement an evidence-based approach; (4) provide skills on healthy relationship development; (5) help students develop personal responsibility, self-regulation, and healthy decision making; (6) include teaching on other health risk behaviors such as substance use; and (7) promote parent involvement and communication.” (p. 6).

3 The updated SMARTool is available on CRE’s website: https://www.myrelationshipcenter.org/getmedia/dbed93af-9424-4009-8f1f-8495b4aba8b4/SMARTool-Curricula.pdf.aspx

4 Note that this study is not comparing the SMARTool-aligned SRA curricula vs. curricula that are not aligned with the SMARTool. The comparison of different SRA curricula could potentially be part of a broader research agenda for OASH.

6 https://www.hhs.gov/ash/oah/sites/default/files/contamination-bias-and-clustering-brief.pdf

7 https://cire.mathematica-mpr.com/~/media/publications/pdfs/statisticalpower.pdf; https://journals.sagepub.com/doi/abs/10.3102/1076998607302714.

8 See for example: https://www.hhs.gov/ash/oah/sites/default/files/ash/oah/oah-initiatives/evaluation/grantee-led-evaluation/reports/hennepin-final-report.pdf

9 What Works Clearinghouse. (2018). What Works Clearinghouse Standards Handbook, Version 4.0. Washington DC: National Center for Education Evaluation and Regional Assistance, Institute of Education Sciences, US Department of Education.

| Author | Bernstein, Shampa |

| File Created | 2021-01-15 |