Distress Screening SSB

The Centers for Disease Control and Prevention (CDC) Study on Disparities in Distress Screening among Lung and Ovarian Cancer Survivors

OMB: 0920-1270

Information Collection Request

New

Supporting Statement: Part B

Program Official/Contact

Elizabeth A. Rohan, PhD, MSW

Division of Cancer Prevention and Control

Centers for Disease Control and Prevention

Atlanta, GA

Telephone: (770) 488-3053

Fax: (770) 488-4760

E-mail: [email protected]

January 7, 2019

B.1 Respondent Universe and Sampling Methods

B.2 Procedures for the Collection of Information

B.3 Methods to Maximize Response Rates and Deal with No Response

B.4 Test of Procedures or Methods to be Undertaken

B.5 Individuals Consulted on Statistical Aspects and Individuals Collecting and/or Analyzing Data

B.1 Respondent Universe and Sampling Methods

A purposive sampling strategy will be employed to identify the sampling frame, which will consist of cancer healthcare facilities (hereafter referred to as facilities) within those states that have fully adopted the use of electronic health records (EHRs). These facilities will be stratified by Commission on Cancer (CoC) accreditation status, geographic urbanicity, and other facility demographic information.

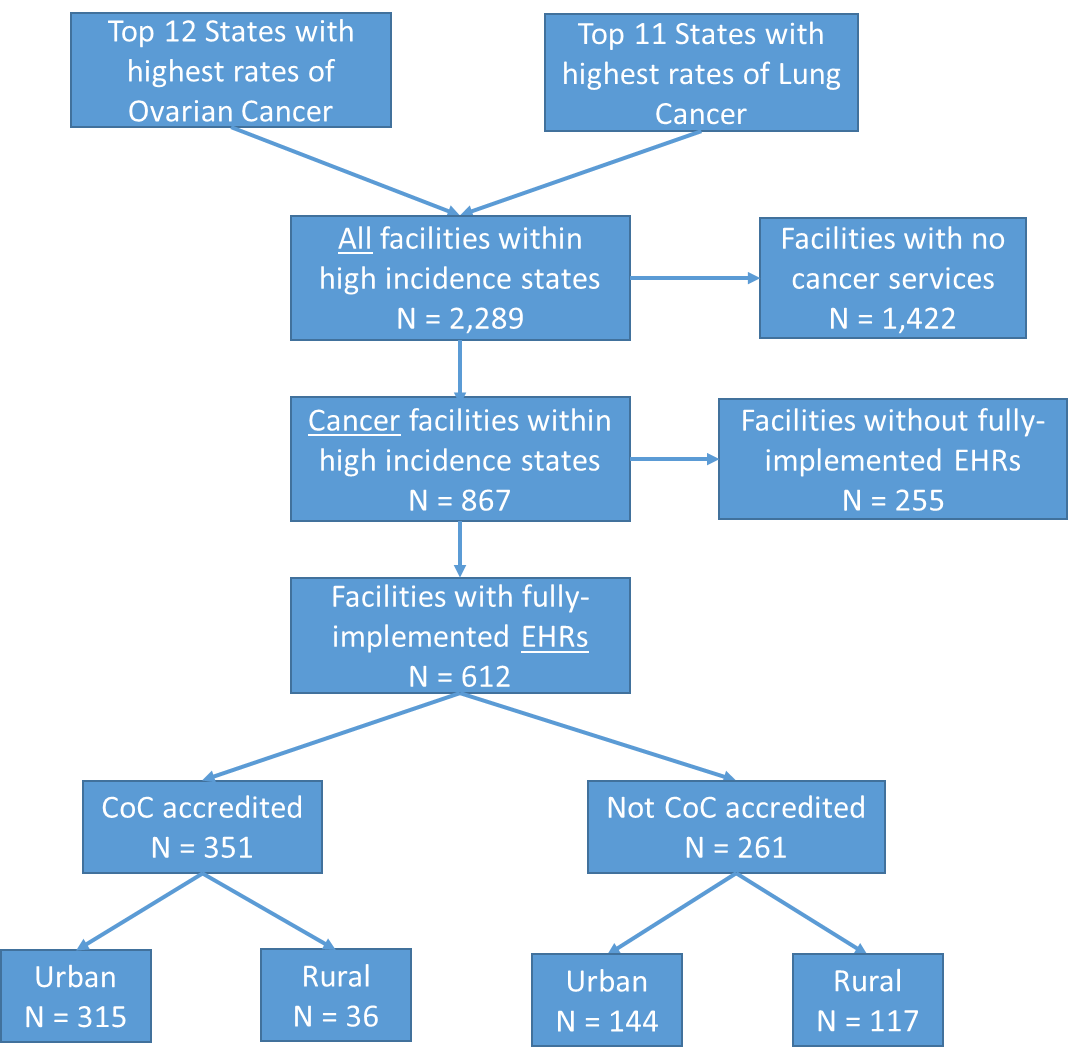

The respondent universe for the proposed study includes 612 facilities that provide cancer services and have fully adopted the use of EHRs in the states with the highest reported incidence rates of lung and ovarian cancers. High-incidence states were determined from the U.S. Cancer Statistics Cancer Incidence and Mortality Data.1 The resulting facilities make up the sampling frame, which was stratified into four facility types based on CoC-accreditation status and geographic urbanicity. These sampling and stratification steps are based on data supplied through the American Hospital Association (AHA) Annual Survey database2 and are displayed in Figure 1.

Figure 1. Preliminary Selection of Facilities for EHR Abstraction and Qualitative Interviews3

Following this stratification, we will select the facilities that allow for an optimal representation of additional factors, such as geographic location and service to patients who are racially and ethnically diverse, have low socioeconomic status, or traditionally experience disparities in care. Facility-level demographic data, such as ZIP Code, bed size, facility ownership (government, non-government), and whether the facility has been in operation for a full 12 months at the end of its reporting period, will be obtained through the AHA Annual Survey Database. These and other variables from the AHA database will be used to make final determinations for screening and prioritizing the selection of 50 facilities for this study. Table 2 provides an example of the facility count information that we will obtain for determining the final sample of facilities.

Table 2. Sample List of Facility Counts within Selected Universe, by subgroup4

| | CoC-accredited metro | CoC-accredited rural | non-CoC-accredited metro | non-CoC-accredited rural |
|---|---|---|---|---|
| Total Count | 315 | 36 | 144 | 117 |
| Chemotherapy Services | 306 | 36 | 124 | 104 |
| Palliative Care Program | 251 | 22 | 87 | 41 |
| Inpatient Palliative Care Unit | 71 | 4 | 23 | 11 |
| Women’s health Center/Services | 276 | 29 | 116 | 85 |
| Psychiatric Care | 180 | 18 | 70 | 34 |
| Psychiatric Consultation/Liaison Services | 218 | 15 | 75 | 35 |
| Psychiatric Education Services | 153 | 10 | 50 | 24 |
| Ownership | | | | |
| Gov't-non-Federal | 40 | 6 | 19 | 33 |
| Gov't-Federal | 6 | 0 | 2 | 1 |
| For-profit | 28 | 3 | 20 | 5 |
| Not-for-profit | 241 | 27 | 103 | 78 |
| Bed size | | | | |
| 25-49 | 3 | 4 | 14 | 43 |
| 50-99 | 11 | 9 | 24 | 29 |
| 100-199 | 68 | 14 | 43 | 25 |
| 200-299 | 65 | 8 | 27 | 8 |
| 300-399 | 63 | 0 | 17 | 1 |
| 400-499 | 22 | 0 | 9 | 2 |
| 500 or more | 81 | 1 | 8 | 0 |
| Teaching | 229 | 8 | 88 | 16 |

In addition to the described sampling strategy, we will also solicit participation from the study’s partner organizations to promote the study’s visibility. Professional organizations of interest are those that can promote the study through their dissemination channels, such as the organization’s listserv, Web site, and social media platforms such as LinkedIn or Facebook. Such organizations will include current study partners (American College of Surgeons (ACS) Commission on Cancer (CoC), and the Patient Centered Research Collaborative (PCRC)), and may include others such as the Ovarian Cancer Research Fund Alliance (OCRFA), American Institute for Cancer Research (AICR), the National Association of Social Workers (NASW), Association of Oncology Social Workers (AOSW), Academy of Oncology Nurse & Patient Navigators (AONN), Oncology Nursing Society (ONS), or the American Psychosocial Oncology Society (APOS).

Westat will first email all identified facilities. The email will be sent from a project mailbox and will contain a brief description of the study, highlight the benefits of participation, and provide study contact information. Westat will also recruit facilities through professional organization contacts or listservs. Project staff will review facilities that express interest in the study and conduct follow-up phone calls to determine each facility’s eligibility, confirm its participation in the study, identify a facility point of contact (POC) for the study, and review the study FAQs and IRB application information. Once facilities have received clearance to participate in the study, project staff will establish data sharing parameters with the facility and provide instructions and training for healthcare facility IT departments to extract and abstract data based on the agreed-upon parameters.

The final study sample will include 50 facilities that implement distress screening and follow-up care for lung and/or ovarian cancer patients. From the 50 selected facilities, we will extract a total of 2,000 patient records. Case selection criteria will be based on age (<90 years old), primary site of cancer (lung and/or ovary), and year of diagnosis (≥ 2017). Within each of the four subgroups, we will prioritize facilities with larger bed sizes and the highest number of ovarian and lung cancer cases. This prioritization is intended to maximize efficiency and reduce the burden of obtaining the number of records needed for statistical hypothesis testing of the variation in screening rates across subgroups.
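The case selection criteria above can be expressed as a simple record filter. The following is a minimal illustrative sketch only, not the study's abstraction tool: the `PatientRecord` type, its field names, and the site labels `"lung"` and `"ovary"` are hypothetical, and an actual facility EHR extract would carry its own field names and site coding.

```python
from dataclasses import dataclass


@dataclass
class PatientRecord:
    """Hypothetical, simplified view of one abstracted EHR record."""
    age: int             # age in years
    primary_site: str    # primary site of cancer, e.g. "lung" or "ovary"
    diagnosis_year: int  # calendar year of diagnosis


# Assumed labels for the two eligible primary sites (illustrative only).
ELIGIBLE_SITES = {"lung", "ovary"}


def is_eligible(record: PatientRecord) -> bool:
    """Apply the three stated criteria: age < 90, lung/ovary site, diagnosed 2017 or later."""
    return (
        record.age < 90
        and record.primary_site.strip().lower() in ELIGIBLE_SITES
        and record.diagnosis_year >= 2017
    )


def select_cases(records: list[PatientRecord]) -> list[PatientRecord]:
    """Keep only the records that meet every case selection criterion."""
    return [r for r in records if is_eligible(r)]
```

For example, a 72-year-old patient diagnosed with lung cancer in 2018 would be retained, while a record with a 2016 diagnosis or an age of 90 or above would be excluded.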

Two levels of analysis were used to estimate the optimal sample sizes for testing the extent to which disparities in screening rates exist across facility types, by patient sociodemographic characteristics, or by cancer type. Specifically, power analyses were conducted to determine (1) the number of facilities required to detect statistically significant differences in distress screening rates across four facility categories (CoC-accredited – urban; CoC-accredited – rural; non-CoC-accredited – urban; non-CoC-accredited – rural); and (2) the total number of records required to detect patient-level differences across age groups, across race/ethnic groups, and between the two cancer types (ovarian and lung). Table 3 provides sample power calculations based on one-way ANOVA hypothesis tests of association, across standard deviations ranging from 25 percent to 200 percent of the pooled average mean screening rates5 for each subgroup. The results are based on assumptions of equal group sizes, a statistical significance level of α = .05, and power of 0.8.

Since the effect sizes will largely vary by each sociodemographic characteristic and distribution of these characteristics across the selected cancer facilities, we anticipate the need to refine the power calculations based on demographic categories and cut points from preliminary analyses.

Table 3: Power analysis to determine number of patients needed to detect differences across subgroups

Level 1: Sample Size Needed - Number of Patient Records (EHR)

| Comparison | Mean screening rate by group | Standard deviation | Sample size per group (assuming equal group size) |
|---|---|---|---|
| Age - Middle vs. Older | 40 - 64: 0.64; 65 and above: 0.62 | 0.25 * pooled avg mean = 0.16 | 1006 |
| | | 0.50 * pooled avg mean = 0.32 | 4020 |
| | | 0.75 * pooled avg mean = 0.47 | 8671 |
| | | 1 * pooled avg mean = 0.63 | 15578 |
| | | 2 * pooled avg mean = 1.26 | 62306 |
| Race - White vs. Black | White: 0.63; Black: 0.70 | 0.25 * pooled avg mean = 0.17 | 94 |
| | | 0.50 * pooled avg mean = 0.33 | 350 |
| | | 0.75 * pooled avg mean = 0.50 | 802 |
| | | 1 * pooled avg mean = 0.67 | 1440 |
| | | 2 * pooled avg mean = 1.33 | 5668 |
| Race - White vs. Non-White | White: 0.63; Non-White: 0.66 | 0.25 * pooled avg mean = 0.16 | 448 |
| | | 0.50 * pooled avg mean = 0.32 | 1788 |
| | | 0.75 * pooled avg mean = 0.48 | 4020 |
| | | 1 * pooled avg mean = 0.65 | 7371 |
| | | 2 * pooled avg mean = 1.29 | 29027 |
| Cancer type - Ovarian vs. Lung | Ovarian cancer: 0.71; Lung cancer: 0.61 | 0.25 * pooled avg mean = 0.17 | 47 |
| | | 0.50 * pooled avg mean = 0.33 | 172 |
| | | 0.75 * pooled avg mean = 0.50 | 392 |
| | | 1 * pooled avg mean = 0.66 | 685 |
| | | 2 * pooled avg mean = 1.32 | 2737 |

Level 2: Sample Size Needed - Number of Facilities

| Group | Mean screening rates | Standard deviation | Sample size per group (assuming equal group size) |
|---|---|---|---|
| CoC-accredited - urban; CoC-accredited - rural; non-CoC-accredited - urban; non-CoC-accredited - rural | 0.43; 0.61; 0.70; 0.76 | 0.25 * pooled avg mean = 0.16 | 6 |
| | | 0.50 * pooled avg mean = 0.31 | 18 |
| | | 0.75 * pooled avg mean = 0.47 | 40 |
| | | 1 * pooled avg mean = 0.63 | 71 |
| | | 2 * pooled avg mean = 1.25 | 276 |

* alpha=0.05, power = 0.8
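To illustrate how the Level 1 (two-group) figures in Table 3 scale with the standard deviation and the difference in mean screening rates, the sketch below uses the standard normal-approximation formula for comparing two means with equal group sizes. This is an assumption made for illustration: the table itself was produced with one-way ANOVA power calculations, which for two groups yield nearly the same result, so the approximation lands within a record or two of the tabled values rather than matching them exactly. The function name `records_per_group` is hypothetical, and the sketch does not cover the four-group Level 2 calculation.

```python
from math import ceil
from statistics import NormalDist


def records_per_group(mean_a: float, mean_b: float, sd: float,
                      alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate per-group sample size for a two-sided, two-group comparison.

    Normal approximation: n = 2 * (z_{1-alpha/2} + z_{power})^2 * sd^2 / delta^2,
    where delta is the difference between the two group means.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for two-sided alpha
    z_power = NormalDist().inv_cdf(power)          # quantile matching the target power
    delta = abs(mean_a - mean_b)
    if delta == 0:
        raise ValueError("group means must differ to compute a sample size")
    return ceil(2 * (z_alpha + z_power) ** 2 * sd ** 2 / delta ** 2)


# Inputs taken from the first row of two Table 3 comparisons (rounded SD values as tabled).
age_n = records_per_group(0.64, 0.62, sd=0.16)     # Age: 40-64 vs. 65 and above
cancer_n = records_per_group(0.71, 0.61, sd=0.17)  # Cancer type: ovarian vs. lung
print(age_n, cancer_n)
```

Because the required sample size grows with the square of the standard deviation and shrinks with the square of the difference in means, the small age-group difference (0.02) drives far larger record counts than the cancer-type difference (0.10) at the same standard deviation.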

Qualitative Data Collection.

Westat will collect names and contact information (e.g., email addresses) of facility administrators, providers, and staff for qualitative data collection. The purpose is to understand each participating facility’s cancer distress screening program, organizational policies, and processes, as well as facilitators and barriers related to the implementation of distress screening. Westat staff will use email to invite and follow up with these candidate respondents to confirm their participation in the interview or the focus group. Upon recruitment of facility administrators, Westat will conduct one 60-minute key informant interview by telephone about the administrative decisions related to the implementation of the distress screening policies.

Similarly, Westat will conduct one 90-minute focus group at the same selected facilities. Focus group participants will consist of a mix of medical providers, social workers, mental health professionals, physicians, nurses, and consult staff who are involved in one or more stages of the screening. Together, they will provide multiple perspectives and more nuanced information associated with the screening and follow-up processes. Guiding questions will elicit information about screening tools, modes of administration, healthcare providers providing assessment, availability and accessibility of psycho-social services (onsite or through referrals), settings of administration, and follow-up processes for each patient.

Key informant interviews and focus groups will be conducted by Westat staff through telephone or WebEx. The informed consent will be built into the interview and focus group protocols and will be administered orally at the beginning of the interviews. All participants will be given the opportunity to provide or decline consent to the interview or focus group, as well as to the audio recording of the interview or focus group. Each interviewee who completes the key informant interview will receive an incentive of $100 in the form of an electronic gift card or a check, as reimbursement for their time. Focus group participants will receive an incentive of $60 in the form of an electronic gift card or a check, as reimbursement for their time.

B.2 Procedures for the Collection of Information

Data collection procedures for patient electronic health records, key informant interviews, and focus groups are outlined below. Within three weeks of OMB approval, the study team will distribute the initial recruitment email to the identified facilities and study partners. The study team will follow up only with facilities that respond with an interest in participating in the study. Given the proposed mixed use of purposive and convenience sampling, response rates will vary based on the tiered strategies outlined in section B.1. We anticipate that response rates from facility lists provided by study partners will be fairly high given the initial buy-in from the partner organization, while response rates from facilities identified through the commercial listing will be fairly low. The study team understands that while lower response rates from the commercial list of facilities may limit the generalizability of study findings, this will not impede or compromise the original intent and goals of the study design.

EHR data collection procedures are described below and presented in Table B.2-1:

Table B.2-1 Procedures for EHR Data Collection

| Target Dates | Activities |
|---|---|
| Within 3 weeks after OMB approval | Recruitment emails sent to all potential participating facilities and partner lists. |
| 2 weeks later | Follow-up recruitment emails re-sent to non-responding facilities |
| Beginning 5 weeks after OMB approval, to occur on a rolling basis | Email and follow-up phone calls to interested facilities to initiate the onboarding process, which will entail the following: |
| 2 weeks after initiation of onboarding process | |
| 6 weeks after onboarding | Westat and facility will confirm EHR point of contact(s) and establish parameters for sharing data. |
| 8 weeks after onboarding | Facilities will submit facility IRB approval letters to Westat. |
| 10-15 weeks after onboarding | Facilities will share EHR data components for this study through Westat Secure File Transfer Protocol (SFTP) site. |

Data collection for the interviews and focus groups applies only to a subset of the 50 participating facilities. This segment of data collection will begin only after EHR data collection has been completed for the selected facilities to minimize burden on facility POCs. Reminder and follow-up contacts occur only with non-responders. Assuming a high response rate among PIs and Co-PIs, we expect reminder and follow-up contacts to be minimal. Data collection procedures for the telephone interview are described below and presented in Table B.2-2:

Table B.2-2 Procedures for Interview and Focus Group Data Collection

| Target Dates | Activities |
|---|---|
| 16 weeks after onboarding | Email invitation letter sent to potential participants for key informant and focus group interviews. The letter requests the individual’s participation, introduces the purpose of the evaluation, requests an email reply with suggestions of 2-3 convenient times to be contacted for the interview, and provides contact information in case of queries. |
| 17 weeks after onboarding | Follow-up email invitation letter sent to potential participants for key informant and focus group interviews. If a potential participant does not reply to schedule the interview, the scheduler will call the individual to schedule the interview. If there is no response received within two weeks from either the telephone follow-up or the second reminder email, the potential participant will be considered a non-respondent and removed from the sample. |
| 18-22 weeks after onboarding | Westat will conduct qualitative focus groups and key-informant interviews with selected facility staff and providers. |

B.2.1. Quality Control

The contractor for this study will establish and maintain quality control procedures to ensure standardization and high standards of data collection and data processing. The contractor will maintain a log of all decisions that affect sample enrollment and data collection. The contractor will monitor response rates and completeness of acquired data and provide CDC with progress reports at agreed-upon intervals.

B.3 Methods to Maximize Response Rates and Deal with No Response

Several steps will be taken to maximize response rates and reduce nonresponse bias for all data collection efforts. The contractor will lead and/or be available to support each data collection process, providing ongoing technical assistance, answering questions from facility staff, and providing clarification and guidance whenever needed. Qualitative data collection activities will be restricted to participants involved in the planning and implementation of cancer distress screening. Efforts to maximize response rates are presented here by type of data collection method.

Professional quality recruitment materials that can catch the eye of a potential facility administrator and answer questions can be a significant factor in successful facility recruitment. Westat will create professional recruitment materials such as a list of FAQs to distribute along with the initial email.

Based on the identified facility universe as described, Westat will prepare recruitment protocols to optimize the recruitment of facilities to the study. Westat will purchase an industry-standard contact list of potential facility administrators, such as Oncology Service Line Directors or Administrators and Directors of Quality Improvement. Other lists of key personnel, such as CoC Committee members who serve in the role of Psychosocial Service Coordinator (i.e., oncology social worker, clinical psychologist, or mental health professional), Quality Improvement Coordinator, Director of Nursing, or Certified Tumor Registrar, may also be obtained and used for initial contact with the selected facilities.

To increase interest from recipients of our recruitment invitation, we will include a statement to indicate that this study has been approved to meet compliance for Standard 4.7 (Study of Quality) by the American College of Surgeons (ACS) Commission on Cancer (CoC). Furthermore, this study has been endorsed by the American Society of Clinical Oncology (ASCO) and the Patient Centered Research Collaborative (PCRC).

With the above provisions and top-down recruitment strategy (identifying respondents only from fully participating facilities that have completed EHR data submissions), we anticipate that the rates of non-response or non-cooperation to invitations for key informant interviews and focus groups will be minimal. For each facility selected for the qualitative data collection component of the study, facility POCs will organize one key informant interview and a focus group of six staff members who are familiar with distress screening protocols and clinical staff who are involved and invested in the process. Response rates are expected to be about 80% for key informants and 90% for focus group participants.

As stated above, the study team understands and anticipates a varying degree of response levels from potential identified facilities for recruitment, and that this may limit the generalizability of study findings. However, given the exploratory nature and mixed purposive and convenience sampling strategy for recruitment, response rates will not impede or compromise the original intent and goals of the study design. The accuracy and reliability of information collected will be adequate for intended uses.

B.4 Test of Procedures or Methods to be Undertaken

An interactive EHR abstraction tool and EHR abstraction data entry guide will be used for the collection of EHR data elements. These tools have been reviewed by study partners who are familiar with various facility EHR systems. Based on review feedback, we have made provisions for varying data formats based on the facilities’ EHR data parameters. We intend to conduct pilot testing of the recruitment and EHR data collection protocol with fewer than 10 sites. We believe that this will greatly inform the feasibility of our proposed approach and the operationalization of data elements, and provide insight into areas to refine and/or streamline.

Qualitative data collection instruments include the key informant and focus group interview guides. These were reviewed by the internal project partners, who provided feedback on measurement quality, potential burden, feasibility, and ease of administration. We intend to conduct pilot testing of the key informant and focus group interviews with fewer than 10 members of the Patient Centered Research Collaborative (PCRC) who have similar on-the-ground experiences with distress screening practices for cancer survivors in facility settings. Given the small number of total interview respondents for this study (approximately 80-100 individuals), we believe that a pretest involving fewer than 10 respondents is adequate. The study team will inform OMB of any changes resulting from the pilot test and provide the office with the final data collection instrument(s).

B.5 Individuals Consulted on Statistical Aspects and Individuals Collecting and/or Analyzing Data

The following individuals were critical in developing the research plan, the conceptual framework, survey questions, and sampling strategies underlying this study.

| Name, Title, Affiliation, Contact Information | Project Role |
|---|---|
| Grace C. Huang, PhD MPH; Senior Study Director; Westat; 1600 Research Boulevard, Rockville, MD 20850; (301) 517-4047 | Project director of the data collection agent, Westat |
| Diane Ng, MPH; Westat; 1600 Research Boulevard, Rockville, MD 20850; (301) 279-4518 | Project manager; Medical records specialist |
| Theresa Famolaro, MPS, MS, MBA; Senior Study Director; Westat Center for Healthcare Delivery Research and Evaluation; 1600 Research Blvd RB1182, Rockville, MD 20850; (301) 738-3547 | Recruitment specialist |
| Nanmathi Manian, PhD; Sr. Study Director; Westat; 1500 Research Blvd., TB364, Rockville, MD 20850; (301) 294-2863 | Qualitative researcher – oversee all qualitative data collection and preliminary analysis |
| Brad Zebrack, PhD, MSW, MPH, FAPOS; Professor; University of Michigan School of Social Work; University of Michigan Comprehensive Cancer Center, Health Behaviors and Outcomes Research Program; (734) 615-5940 | Consultant |
| Nina Miller, MSSW, OSW-C; Cancer Liaison Initiatives Manager; American College of Surgeons Commission on Cancer; (312) 202-5592 | Consultant |
| Jennifer E. Boehm, MPH; Public Health Advisor; Program Services Branch, Division of Cancer Prevention and Control; Centers for Disease Control and Prevention; (770) 488-4806 | Contribute to study design; Conduct data analysis |
| Michael Shayne Gallaway, PhD; Epidemiologist; Division of Cancer Prevention and Control; Centers for Disease Control and Prevention; Phone: 404-498-0491 | Contribute to study design; Conduct data analysis |
| Elizabeth A. Rohan, PhD, MSW; Health Scientist; Centers for Disease Control and Prevention, Division of Cancer Prevention and Control; 4770 Buford Highway, NE, MS F76, Chamblee, GA 30341-3717; (770) 488-3053 | Primary Investigator; Receive and approve of contract deliverables; Oversee study design; Conduct data analysis |

1 National Program of Cancer Registries and Surveillance, Epidemiology, and End Results SEER*Stat Database: NPCR and SEER Incidence – U.S. Cancer Statistics 2005–2015 Public Use Research Database, United States Department of Health and Human Services, Centers for Disease Control and Prevention and National Cancer Institute. Released June 2018, based on the November 2017 submission. Accessed at www.cdc.gov/cancer/npcr/public-use.

2 American Hospital Association (AHA) Hospital Statistics http://www.aha.org/products-services/aha-facility-statistics.shtml; AHA Annual Survey IT Database for 2015 https://www.ahadataviewer.com/additional-data-products/EHR-Database/

3 Sources: U.S. Cancer Statistics (USCS), https://nccd.cdc.gov/uscs/; American Hospital Association (AHA) Hospital Statistics http://www.aha.org/products-services/aha-facility-statistics.shtml; AHA Annual Survey IT Database for 2015 https://www.ahadataviewer.com/additional-data-products/EHR-Database/

4 AHA Annual Survey Database™ https://www.ahadataviewer.com/additional-data-products/AHA-Survey/

5 Zebrack, B., Kayser, K., Bybee, D., Padgett, L., Sundstrom, L., Jobin, C., & Oktay, J. (2017). A Practice-Based Evaluation of Distress Screening Protocol Adherence and Medical Service Utilization. Journal of the National Comprehensive Cancer Network, 15(7), 903-912.
