NAWS Summary of Nonresponse and Design Studies

National Agricultural Workers Survey

OMB: 1205-0453

National Agricultural Workers Survey (NAWS)

Summary of Nonresponse and Design Studies

Submitted: September 30, 2019

Submitted to:

Daniel Carroll, USDA/DOL/OPDR

U.S. Department of Labor

Employment and Training Administration

Office of Policy Development and Research

Room N-5637

200 Constitution Ave., N.W.

Washington, D.C. 20210

Submitted by:

Susan Gabbard

JBS International, Inc.

555 Airport Boulevard Suite 400

Burlingame, CA 94010

This report was prepared for the U.S. Department of Labor, Employment and Training Administration, Office of Policy Development and Research by JBS International, Inc., under contract 47QRAA18D00AE; Task Contract 1630DC-19-F-00019. Since contractors conducting research and evaluation projects under government sponsorship are encouraged to express their own judgment freely, this report does not necessarily represent official opinion or policy of the U.S. Department of Labor.

Table of Contents

Nonresponse Study 1 – NAWS Item Nonresponse Rates

Nonresponse Study 2 – NAWS Unit (Employer) Nonresponse

Nonresponse Study 4 – Examining Employer Eligibility Over Time and NAWS Response Rates

Design Study A – Efficiency of the NAWS Sampling Design

Design Study B – Optimal Interview Allocations for NAWS Sampling

In Progress or Potential Future Studies

Nonresponse Study 5 – Comparison of the Characteristics of Respondents to National Data

Nonresponse Study 6 – Comparing Worker Data of Employers Who Change Response States

Design Study C – Extending the Optimal Allocation Study

Appendix A: Nonresponse Study 1 – NAWS Item Nonresponse Rates

Appendix B: Nonresponse Study 2 – NAWS Unit (Employer) Nonresponse

Nonresponse follow-up attempts

Data Preparation and the Analytic Sample

Appendix E: Design Study A – Efficiency of the NAWS Sampling Design

Appendix F: Design Study B – Optimal Interview Allocations for NAWS Sampling

Introduction

JBS has undertaken a series of small studies to examine possible nonresponse bias in the National Agricultural Workers Survey (NAWS) and to assess the efficiency of the survey’s design. These comprise four nonresponse studies that assessed employer and item response rates and two design studies that assessed the survey’s design and ways it might be improved. This document summarizes each of these studies. The full report for each study is attached in Appendices A–F. The final section of this document describes studies that are in progress or are potential future studies.

The nonresponse studies included:

Nonresponse Study 1 examined the nonresponse rates among items of the NAWS questionnaire.

Nonresponse Study 2 examined nonresponse bias by comparing employers who allowed interviews, eligible employers who refused to allow interviews, and employers whose eligibility could not be determined.

Nonresponse Study 3 attempted further contact with employers who were not successfully screened during the regular data collection cycle to determine whether further contact attempts would result in finding eligible employers who might improve the NAWS response rate.

Nonresponse Study 4 used a Markov chain analysis that incorporated prior data to examine whether an employer’s eligibility status (i.e., eligible, ineligible, or unable to be determined) affects response rates.

The design studies covered the following:

Design Study A examined the efficiency of the NAWS sampling design by using a series of nested ANOVAs to look for interactions between levels of sampling and key survey variables.

Design Study B examined the tradeoffs in the efficiency of interview allocations.

Each of these studies is summarized below.

COMPLETED STUDIES

Nonresponse Study 1 – NAWS Item Nonresponse Rates

This study examined nonresponse for questionnaire items. Calculating item nonresponse is one of the survey standards outlined in OMB’s Standards and Guidelines for Statistical Surveys (2006). The full report for the item nonresponse study can be found in Appendix A.

Analysis

Item nonresponse was examined for 86 items on the 2011–2016 NAWS questionnaire that covered all sections answered by the respondents, except for items in the household and work grid. For the 58 items asked of all respondents, the denominator of the nonresponse rate was the count of respondents. For the 28 items asked only if certain criteria were fulfilled (i.e., items with a skip pattern), the denominator was the number of respondents who met the criteria for being asked the question. For both kinds of items, the nonresponse rate was the share of that denominator without a valid response.
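The calculation described above can be sketched in a few lines of Python. This is an illustration only: the data layout, the `MISSING` sentinel, and the example values are hypothetical and are not taken from the NAWS data files. The rate for an item is the share of respondents who were asked the item and have no valid response.

```python
MISSING = None  # hypothetical sentinel meaning "no valid response recorded"


def item_nonresponse_rate(responses, eligible=None):
    """Return the nonresponse rate (in percent) for one questionnaire item.

    responses: one value per respondent; MISSING marks no valid answer.
    eligible:  optional booleans marking who met the skip-pattern criteria;
               None means the item was asked of every respondent.
    """
    if eligible is None:
        eligible = [True] * len(responses)
    # Denominator: only respondents who should have been asked the item.
    asked = [r for r, e in zip(responses, eligible) if e]
    if not asked:
        return 0.0  # nobody was asked, so nothing can be missing
    missing = sum(1 for r in asked if r is MISSING)
    return 100.0 * missing / len(asked)


# Illustrative data only: five respondents.
all_item = ["yes", "no", MISSING, "yes", "no"]       # asked of everyone
skip_item = ["a", MISSING, MISSING, "b", MISSING]    # asked of a subset
skip_eligible = [True, True, False, True, False]

rate_all = item_nonresponse_rate(all_item)
rate_skip = item_nonresponse_rate(skip_item, skip_eligible)
print(f"no skip pattern: {rate_all:.1f}%, skip pattern: {rate_skip:.1f}%")
```

Restricting the denominator to eligible respondents keeps legitimately skipped items from being counted as nonresponse.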

Results

For the 58 items asked of all respondents, across fiscal years 2011 to 2016, the average nonresponse rate was less than 0.5 percent. Certain items had higher nonresponse rates than others. For example, the item “When was the last time your parents did hired farm work in the U.S.A?” had a nonresponse rate of up to 3.5 percent.

For the 28 items with skip patterns, across the years, the average nonresponse rate was less than two percent. The item “Does this employer keep in contact with you about future employment before leaving at the end of the season?” had the highest annual nonresponse rate of up to 9.4 percent.

Overall, the NAWS items showed very low item nonresponse, with most items exceeding 95 percent valid answers and a few items having 90–94 percent valid responses. The Office of Management and Budget (OMB) requires additional analysis of item nonresponse for items with less than 70 percent valid responses. No additional analysis was undertaken because all items exceeded the OMB criterion of 70 percent.

Nonresponse Study 2 – NAWS Unit (Employer) Nonresponse

This study assessed nonresponse bias by comparing information in the sampling frame on eligible respondents and nonrespondents. While the sampling data is somewhat sparse for nonrespondents, three pieces of information are useful: geographic location, North American Industry Classification System (NAICS) code, and the source used to obtain employer names. The NAWS uses three sources to acquire employer names: a) the Bureau of Labor Statistics (BLS) Quarterly Census of Employment and Wages (QCEW) microdata on employers paying unemployment insurance (UI) taxes, b) marketing lists, and c) internet searches and contacts with knowledgeable local individuals. Geographic area and source lists are available for all employers, while NAICS codes are available for all employers who pay UI taxes, marketing list employers, and some additional employers. The full report can be found in Appendix B.

Analysis

This study examined three characteristics (source of the employer list, NAICS, and geography) and made three comparisons:

A. Employers allowing interviews compared to sampled employers that refused or were unable to be screened (i.e., excluding employers who are ineligible).

B. Employers allowing interviews compared to eligible employers who refused.

C. Employers who are eligible compared to employers whose eligibility could not be determined.

Nonresponse bias was calculated using the bias calculation formula from OMB’s Standards and Guidelines for Statistical Surveys (2006). The formula defines bias for an estimate, $\bar{y}_r$, as the following:

$$B(\bar{y}_r) = \bar{y}_r - \bar{y}_t = \left(\frac{n_{nr}}{n}\right)\left(\bar{y}_r - \bar{y}_{nr}\right)$$

where:

$\bar{y}_t$ = the mean based on all sample cases;

$\bar{y}_r$ = the mean based only on respondent cases;

$\bar{y}_{nr}$ = the mean based only on the nonrespondent cases;

$n$ = the number of cases in the sample; and

$n_{nr}$ = the number of nonresponding cases.
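As a worked illustration of this bias calculation, the following Python sketch computes the bias as the nonrespondent share of the sample multiplied by the difference between the respondent and nonrespondent means, and checks that this equals the respondent mean minus the full-sample mean. All numbers are hypothetical and are not NAWS results; the function and variable names are chosen for this example.

```python
def nonresponse_bias(mean_resp, mean_nonresp, n, n_nonresp):
    """Bias of the respondent mean: (n_nr / n) * (y_r - y_nr)."""
    return (n_nonresp / n) * (mean_resp - mean_nonresp)


# Hypothetical example: proportion of employers drawn from one list source.
n = 1000        # all sampled cases
n_nr = 600      # nonresponding cases
y_r = 0.70      # proportion among respondents
y_nr = 0.65     # proportion among nonrespondents

# The full-sample mean is the size-weighted average of the two groups.
n_r = n - n_nr
y_t = (n_r * y_r + n_nr * y_nr) / n

bias = nonresponse_bias(y_r, y_nr, n, n_nr)
print(f"bias = {bias:.3f}, respondent mean minus full-sample mean = {y_r - y_t:.3f}")
```

The two printed quantities agree, which shows why a high nonresponse rate produces little bias when respondents and nonrespondents look alike on the characteristic being compared.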

Results

The results show that the nonresponse rate by source was 83–89 percent, 55–57 percent, and 61–75 percent for comparisons A, B, and C, respectively. Bias was low (2–4 percent) across the three comparisons and sources. Nonresponse rates varied more across NAICS codes, but bias remained low at less than 10 percent. For the six NAWS regions, the nonresponse rate was 80–87 percent, 49–62 percent, and 57–66 percent for comparisons A, B, and C, respectively, and bias was low at less than 7 percent across the three comparisons. For the 12 NAWS regions, the nonresponse rate was 75–87 percent, 46–63 percent, and 54–66 percent for comparisons A, B, and C, respectively, and bias was similarly low (less than 7 percent).

JBS also conducted regression analyses to determine the association between employer characteristics (source, NAICS, and geography) and participation in each of the three comparisons. The results show that in comparisons A and C, employers selected from the InfoUSA source were significantly less likely to participate than BLS-sourced employers. In all three comparisons, employers with NAICS 1114 (Greenhouse, Nursery, and Floriculture Production) had the highest likelihood of participating in the NAWS, compared to NAICS 1119 (Other Crop Farming). In terms of geography, in all three comparisons, employers in four of the six regions (East, Southeast, Midwest, and Northwest), and 10 of the 12 regions, were significantly more likely to participate in the NAWS than employers in California.

Overall, the results showed that although unit nonresponse rates were high, there was little nonresponse bias between responding and nonresponding employers, both in aggregate and across NAICS codes, sampling regions, and list sources.

Nonresponse Study 3 – Follow Up with Employers Who Were Not Successfully Screened During the Initial NAWS Data Collection

This study repeated a 2009 study in which JBS attempted further contact with employers who were not successfully screened during data collection. NAWS staff made additional attempts via mail and telephone calls to contact 779 unscreened employers from the Fall 2017 data collection cycle to determine their eligibility status. The goal of this additional nonresponse follow-up (NRFU) was to determine whether further contact attempts or lengthening the onsite data collection period would improve the employer response rate. The full report can be found in Appendix C.

Analysis

The NRFU resulted in 30 percent of previously unscreened employers responding and being screened. The mail-only response rate was 17 percent, and the mail-plus-telephone response rate was 35 percent. Respondents in the NRFU study were coded as Eligible, Ineligible, or Unscreened using coding categories similar to the Fall 2017 sample’s response codes. Response rates were calculated for the Fall 2017 cycle with and without the additional NRFU data using the formula for the unweighted response rate (RRU) from OMB’s Standards and Guidelines for Statistical Surveys (2006).

Results

The initial response rate for the Fall 2017 cycle was 25 percent. The lower bound on the response rate with the NRFU data was calculated assuming all eligible NRFU respondents refused to allow interviews; under this assumption, the response rate decreased to 20 percent. The upper bound, calculated assuming all NRFU respondents agreed to interviews, was 37 percent. Assuming the same proportion of the NRFU respondents allowed interviews as the original Fall 2017 responding employers, the response rate was 27 percent. The additional NRFU did not substantially change the response rate. This result was the same as in the 2009 study. That is, additional effort at finding and screening employers provides information on more employers but does not improve the NAWS response rate.
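The bounding logic can be sketched as follows. The sketch assumes the unweighted response rate takes the form RRU = C / (C + R + NC + O + e(U)), where C is completed cases, R refusals, NC eligible noncontacts, O other eligible nonrespondents, U cases of unknown eligibility, and e the estimated share of unknown cases that are eligible. All counts below, the value of e, and the choice to hold e fixed after follow-up are assumptions made for illustration; they are not the study’s figures and will not reproduce the reported rates.

```python
def rru(completes, refusals, noncontacts, other, unknown, e):
    """Unweighted response rate: C / (C + R + NC + O + e * U)."""
    denominator = completes + refusals + noncontacts + other + e * unknown
    return completes / denominator


# Hypothetical counts for one data collection cycle.
C, R, NC, O, U = 250, 350, 0, 0, 800
E = 0.5  # assumed share of unknown-eligibility employers that are eligible

initial = rru(C, R, NC, O, U, E)

# Hypothetical follow-up outcome: some unknown cases are now screened.
newly_eligible, newly_ineligible = 100, 140
U_after = U - newly_eligible - newly_ineligible

# Lower bound: every newly eligible employer refuses interviews.
lower = rru(C, R + newly_eligible, NC, O, U_after, E)

# Upper bound: every newly eligible employer allows interviews.
upper = rru(C + newly_eligible, R, NC, O, U_after, E)

# Middle case: newly eligible employers cooperate at the original rate.
share = C / (C + R)
middle = rru(C + share * newly_eligible,
             R + (1 - share) * newly_eligible, NC, O, U_after, E)

print(f"initial={initial:.3f} lower={lower:.3f} "
      f"middle={middle:.3f} upper={upper:.3f}")
```

The three scenarios differ only in how the newly screened eligible employers are split between completes and refusals, which is why they bracket the plausible response rate.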

Nonresponse Study 4 – Examining Employer Eligibility Over Time and NAWS Response Rates

In 2019, JBS repeated the Markov analysis that was completed in 2007. A small number of agricultural employers appear on the survey’s sampling list in multiple administrations of the survey. Attempts to contact these employers may have had different outcomes at different time periods. This study used Markov chain analysis to incorporate information from prior data periods about employers’ states – whether eligible, ineligible, or unable to be determined – and looked at the impact on response rates. The full report can be found in Appendix D.

Analysis

The analysis used contact data on agricultural employers contacted from FY 2006–2017 (cycles 53–88). Some employers were contacted in as many as six different cycles, for a total of 34,774 contacts. Each contact was coded in response category 1–8 (Yes, Yes but, Qualified refusal, Don’t know, Incomplete, Not in sample, Skipped, Office codes), and the probability of an employer moving from one of these categories in a particular cycle, to each of the eight possible categories in the next cycle, was found. The study also calculated the expected percentage of employers in each of the eight categories after a large number of cycles.
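The two calculations described here, estimating cycle-to-cycle transition probabilities and finding the expected long-run share in each category, can be sketched in plain Python. For brevity the sketch uses three hypothetical states rather than the study’s eight response categories, and the contact histories are invented for illustration.

```python
from collections import Counter


def transition_matrix(histories, states):
    """Estimate P[a][b], the probability of moving from state a to state b."""
    counts = {s: Counter() for s in states}
    for history in histories:
        # Count each pair of consecutive contacts for the same employer.
        for current, following in zip(history, history[1:]):
            counts[current][following] += 1
    matrix = {}
    for s in states:
        total = sum(counts[s].values())
        # A state never observed as a starting point is treated as absorbing.
        matrix[s] = {t: (counts[s][t] / total if total else float(t == s))
                     for t in states}
    return matrix


def long_run_distribution(matrix, states, start, steps=1000):
    """Apply the transition matrix repeatedly to approximate the long-run mix."""
    dist = dict(start)
    for _ in range(steps):
        dist = {t: sum(dist[s] * matrix[s][t] for s in states) for t in states}
    return dist


STATES = ["yes", "refusal", "unknown"]  # hypothetical, simplified categories
histories = [                           # invented contact histories
    ["yes", "yes", "refusal"],
    ["refusal", "yes", "yes"],
    ["unknown", "yes"],
    ["unknown", "unknown", "refusal"],
    ["refusal", "refusal", "unknown"],
]

P = transition_matrix(histories, STATES)
start = {"yes": 0.0, "refusal": 0.0, "unknown": 1.0}
long_run = long_run_distribution(P, STATES, start)
print({state: round(share, 3) for state, share in long_run.items()})
```

Repeated multiplication is used instead of solving for the stationary distribution directly so that the example needs no external libraries; for a chain like this one, the result does not depend on the starting distribution.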

Results

The results of the analysis showed a five-percentage-point gain in the CASRO response rate, from 15 to 20 percent. The overall expected response rate after a large number of cycles is 20 percent.

Design Study A – Efficiency of the NAWS Sampling Design

To better understand the survey’s design effects, JBS’s statistical team at Portland State University conducted multivariate analyses to identify whether the survey’s sampling design was efficient. The study used a series of nested ANOVAs to identify whether there were significant interactions between the various levels of sampling and key survey variables. An efficient design has homogeneous strata and heterogeneous clusters. If the design is efficient, the analysis should show that the homogeneous strata vary from each other significantly and that the heterogeneous clusters do not vary significantly from each other. The full report on this study can be found in Appendix E.
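The intuition can be illustrated with a one-way ANOVA F statistic, which is a deliberate simplification of the nested ANOVAs used in the study. The F statistic compares variation between groups with variation within groups, so groups that behave like good strata (internally similar, different from each other) give a large F, while groups that behave like good clusters (internally varied, similar to each other) give a small F. The data are invented for illustration.

```python
def one_way_f(groups):
    """One-way ANOVA F statistic: between-group over within-group mean square."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n
    means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    return (ss_between / (k - 1)) / (ss_within / (n - k))


# Hypothetical hourly wages grouped two different ways.
as_strata = [[10, 11, 12], [20, 21, 22], [30, 31, 32]]    # homogeneous groups
as_clusters = [[10, 20, 30], [11, 21, 31], [12, 22, 32]]  # heterogeneous groups

f_strata = one_way_f(as_strata)
f_clusters = one_way_f(as_clusters)
print(f"F for strata-like grouping:  {f_strata:.2f}")
print(f"F for cluster-like grouping: {f_clusters:.2f}")
```

The same nine values produce very different F statistics depending on how they are grouped, which is the property the nested analyses test at each sampling level.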

Analysis

The ANOVA analyses looked at nine different key variables and their relations to the sampling levels. The key variables were hourly or hourly-equivalent wage, employed by a farm labor contractor, indigenous, unauthorized, number of farm employers, paid hourly or by the piece, number of farm work days, and number of children in the household. The sampling levels were fiscal year, cycle, region, farm labor area, county, ZIP Code region, and agricultural employer. The analysis was conducted separately on 2011–2012 data and on 2013–2014 data. A third set of ANOVA analyses was conducted using the combined 2011–2014 data. This third analysis compared the current NAWS weight with a proposed change to the employer weight that would include a more complex employer nonresponse calculation.

Results

The analyses on the 2011–2012 data and on 2013–2014 data showed similar findings:

Region (or the cycle/region interaction) is consistently found to be a significant effect for all except two variables. This suggests that, for most variables, stratification by geographic location divides farm workers into groups that differ significantly from one another and is, therefore, an effective design strategy.

Clustering at the county level and at the employer level is also consistently a significant effect, indicating that farm workers within one cluster of employers (or county) are significantly different from farm workers in another cluster of employers (or county) for a particular combination of higher-level clustering and stratification. This is not an optimal design element, but it is likely necessary for efficient data collection.

Outside of Region, County, and Employer, there is little consistency in significant effects across the sampling level variables.

The analysis of the 2011–2014 data using the more complex employer nonresponse calculation showed little difference between the weights.

Design Study B – Optimal Interview Allocations for NAWS Sampling

The purpose of this study was to see how interview allocations would change if they were optimized for statistical efficiency and/or cost reduction. The current interview allocation is proportional to the distribution of crop workers across geographic areas. The result is that crop worker allocations are concentrated in a small number of sampling regions with large numbers of crop workers, resulting in small allocations and potentially larger variances for estimates in the other regions. The NAWS statisticians calculated optimal interview allocations for each of the three cycles and 12 sampling regions used to stratify the NAWS sample. The goal was to gain more information about how to reduce interviewing costs and improve the precision of point estimates. The full report can be found in Appendix F.

Analysis

The optimal allocations were calculated for nine variables that are considered key findings from the NAWS:

The worker’s hourly wage or hourly equivalent wage if a piece rate worker;

Number of farm employers in the past 12 months;

Number of farm work days in the past 12 months;

Number of children in the household;

The employer was an agricultural producer and not a labor contractor;

The worker lacked work authorization;

The worker had only one farm employer;

The worker was paid an hourly wage as opposed to a piece rate or salary; and

The number of children in household was three or fewer.

Two types of allocations were calculated. The optimal allocation achieved both statistical and cost efficiency. The Neyman allocation was a special case of optimal allocation that assumed the cost of each stratum was approximately equal and thus calculated statistical efficiency only.
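The two allocation rules can be sketched with the standard textbook formulas for stratified sampling: under optimal allocation the sample in stratum h is proportional to N_h S_h / sqrt(c_h), where N_h is the stratum population, S_h the stratum standard deviation of the variable, and c_h the per-interview cost; Neyman allocation is the same rule with equal costs. The stratum sizes, standard deviations, and costs below are hypothetical, and the study’s actual computations may differ in detail.

```python
from math import sqrt


def allocate(n, sizes, sds, costs=None):
    """Split n interviews across strata in proportion to N_h * S_h / sqrt(c_h).

    With costs=None every stratum is given the same cost, which yields the
    Neyman allocation.
    """
    if costs is None:
        costs = [1.0] * len(sizes)
    weights = [N * S / sqrt(c) for N, S, c in zip(sizes, sds, costs)]
    total = sum(weights)
    return [n * w / total for w in weights]


# Hypothetical inputs for three regions.
n = 1000
sizes = [5000, 3000, 2000]   # crop workers per region
sds = [4.0, 2.0, 1.0]        # standard deviation of the key variable
costs = [1.0, 4.0, 4.0]      # relative cost per interview

proportional = [n * N / sum(sizes) for N in sizes]
neyman = allocate(n, sizes, sds)
optimal = allocate(n, sizes, sds, costs)

for name, alloc in [("proportional", proportional),
                    ("Neyman", neyman),
                    ("optimal", optimal)]:
    print(name, [round(a, 1) for a in alloc])
```

In this invented example the largest region also has the most variable responses and the lowest cost, so both rules shift interviews toward it relative to proportional allocation. Which regions gain or lose in practice depends on the actual variances and costs.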

Results

The results show that both optimal allocation and Neyman allocation would increase interview allocations in the larger crop labor regions in all cycles. Regions with currently small interview allocations would have even smaller allocations if allocations were optimized for statistical and/or cost efficiency.

IN PROGRESS OR POTENTIAL FUTURE STUDIES

Nonresponse Study 5 – Comparison of the Characteristics of Respondents to National Data

JBS anticipated comparing the characteristics of respondents to national data on NAICS and geographic distribution separately, and where sample size and data allowed, on NAICS and geographic region combined. While there are no exact matches to the NAWS employer universe in a single Federal data source, it was expected that some comparisons could be made.

The first anticipated comparison was between NAWS NAICS 1151 employers allowing interviews and QCEW data on NAICS 1151 employers. However, the vast majority of the NAICS 1151 employers on the list come from the UI microdata, which is used to generate the QCEW results. This portion of the study was therefore redundant with the analysis done in Nonresponse Study 2.

The second anticipated comparison was between NAICS 111 employers allowing interviews and the 2017 Census of Agriculture (CoA) data on farms with hired farm labor. The initial attempt to compare the data sources revealed that a direct comparison is not straightforward. One concern was the difference in the definitions of a hired farm worker between the CoA and the NAWS, particularly the possibility that in some regions the CoA data may include large numbers of family workers who are not eligible for the NAWS. Further examination is planned, including an examination of USDA’s Farm Costs and Returns Survey to better understand family labor on farms.

Nonresponse Study 6 – Comparing Worker Data of Employers Who Change Response States

The goal of this study is to gain insight into whether workers of eligible growers who refuse to participate in the NAWS differ from workers of employers who consent to participate. While it is not possible to interview workers whose employers refuse, the Markov analysis done in Nonresponse Study 4 allows NAWS staff to look at farm worker data from agricultural employers who were in the survey multiple times and allowed interviews at least once. This study will compare workers of agricultural employers who change categories from allowing interviews to refusing to participate (and vice versa) with workers whose employers always allow interviews. The analysis will focus on the key variables used in Design Study B above.

NAWS staff will first identify two groups of agricultural employers: 1) those that have consented at every contact, and 2) those that have sometimes consented and sometimes refused. After reviewing the data, the second group may be further subdivided into those that initially refused and then participated and those that participated and later refused. The analysis will compare groups by analyzing survey responses of the farm workers, using t-tests and ANOVA for numerical items and chi-squared tests for categorical items.

Design Study C – Extending the Optimal Allocation Study

After reviewing the results of the optimal allocation study, ETA asked that the study be extended to examine the variables and the number of years of data used for National Farmworker Jobs Program (NFJP) population estimates. A goal of the NAWS is to provide accurate regional estimates for crop workers for calculating three factors that are part of the NFJP population estimate: NFJP eligibility, time in residence, and annual employment. This study will follow the same methods described for Design Study B above.

Appendix A: Nonresponse Study 1 – NAWS Item Nonresponse Rates

NAWS Item Nonresponse Rates

Introduction

Item nonresponse is nonresponse to individual questionnaire items. Calculating item nonresponse is one of the survey standards outlined in OMB’s Standards and Guidelines for Statistical Surveys (2006). Item nonresponse is calculated as the percent of respondents for whom no valid response was recorded. If that rate is above 30 percent for an item, OMB standards call for additional analysis to further identify the potential for bias.

Sample

The sample for the item nonresponse study consisted of 12,602 agricultural worker interviews from NAWS fiscal years 2011–2016 (cycles 68–85).

Data Preparation

Prior to analysis, several steps were taken to prepare the data. First, new dichotomous variables were created for “Mark all that apply” items so that 1=Answered and 9=Missing. Second, all variables were examined to determine which values should be considered truly missing. Values that were truly missing (i.e., “Not answered”) were recoded to -1 to separate them from non-missing values (i.e., “Not applicable” and “Don’t know”). Finally, a dataset was created that indicates the number of respondents with valid responses and the number missing for each item and fiscal year.

Analysis

Item nonresponse rates were analyzed by fiscal year and whether the item depended on the answer of a previous item. The numerator for each nonresponse rate consisted of the number of agricultural workers who did not answer the item. For items that did not depend on how a previous item was answered (i.e., no skip pattern; farmworker was required to answer all of these items), the denominator was the total sample size for that item. For items that depended on the answer to a previous item (i.e., skip pattern), the denominator was the number of agricultural workers eligible to respond to that item.

Results

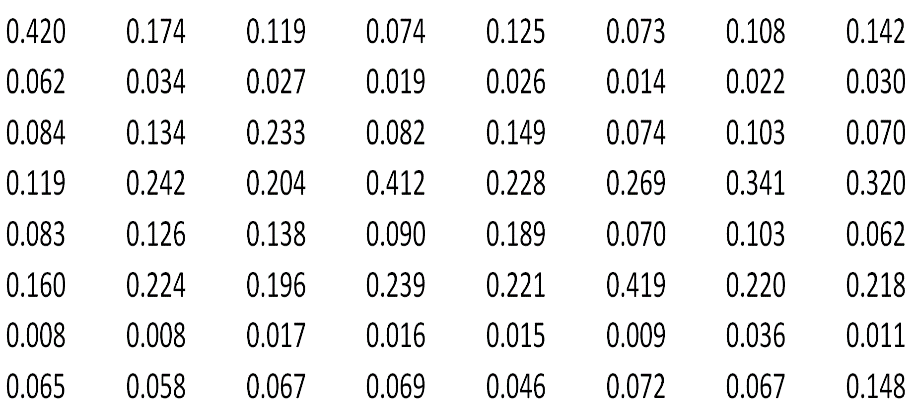

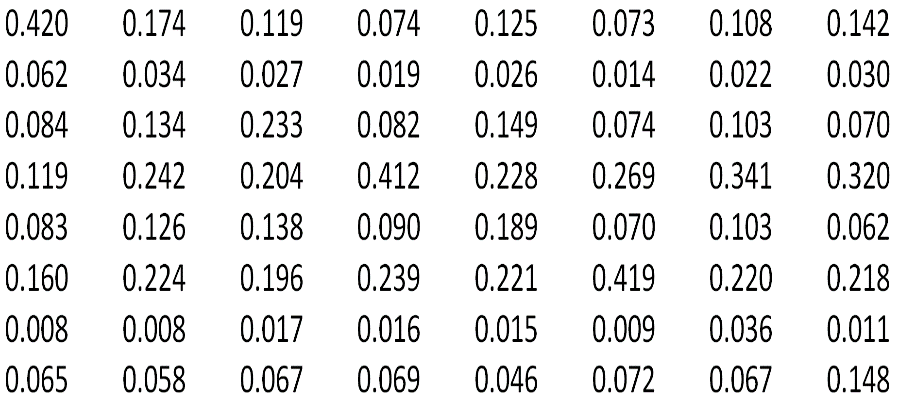

Table 4 shows the item nonresponse rate for items that do not have a skip pattern and the average nonresponse rate for each fiscal year. Overall, the average nonresponse rate in each fiscal year was less than 0.5 percent. The nonresponse rates for all items are low in all fiscal years, from 0 percent to 3.5 percent. Item 15 (“When was the last time your parents did hired farm work in the U.S.A?”) had the highest nonresponse rate, ranging from 1.1 percent in fiscal years 2014 and 2016 to 3.5 percent in fiscal year 2012.

Table 4. Item Nonresponse for Items Without Skip Patterns, by Fiscal Year.

| Item | FY 2011 | FY 2012 | FY 2013 | FY 2014 | FY 2015 | FY 2016 |
|---|---|---|---|---|---|---|
| Item 1 | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% |
| Item 2 | 0.1% | 0.3% | 0.1% | 0.2% | 0.3% | 0.2% |
| Item 3 | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% |
| Item 4 | 0.1% | 0.1% | 0.0% | 0.0% | 0.0% | 0.0% |
| Item 5 | 0.1% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% |
| Item 6 | 0.1% | 0.5% | 0.6% | 0.1% | 0.1% | 0.3% |
| Item 7 | 0.0% | 0.1% | 0.1% | 0.0% | 0.0% | 0.0% |
| Item 8 | 0.4% | 0.1% | 0.2% | 0.2% | 0.3% | 0.2% |
| Item 9 | 0.9% | 0.7% | 1.5% | 0.4% | 2.1% | 1.4% |
| Item 10 | 2.6% | 0.7% | 0.8% | 0.5% | 0.5% | 0.6% |
| Item 11 | 3.2% | 0.4% | 0.6% | 0.6% | 0.5% | 0.4% |
| Item 12 | 0.4% | 0.1% | 0.2% | 0.1% | 0.2% | 0.1% |
| Item 13 | 0.6% | 0.1% | 0.1% | 0.3% | 0.4% | 0.6% |
| Item 14 | 0.7% | 0.5% | 0.3% | 0.3% | 0.5% | 0.8% |
| Item 15 | 2.8% | 3.5% | 2.1% | 1.1% | 1.4% | 1.1% |
| Item 16 | 0.0% | 0.1% | 0.0% | 0.0% | 0.0% | 0.0% |
| Item 17 | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% |
| Item 18 | 0.4% | 0.8% | 0.4% | 0.0% | 0.0% | 0.2% |
| Item 19 | 0.0% | 0.1% | 0.3% | 0.1% | 0.6% | 0.3% |
| Item 20 | 0.1% | 0.1% | 0.2% | 0.1% | 0.4% | 0.2% |
| Item 21 | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% |
| Item 22 | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% |
| Item 23 | 0.1% | 0.4% | 0.5% | 0.9% | 0.8% | 1.1% |
| Item 24 | 0.1% | 0.2% | 0.1% | 0.2% | 0.1% | 0.2% |
| Item 25 | 0.0% | 0.2% | 0.1% | 0.2% | 0.1% | 0.0% |
| Item 26 | 0.0% | 0.1% | 0.0% | 0.1% | 0.1% | 0.2% |
| Item 27 | 0.1% | 0.1% | 0.2% | 0.2% | 0.1% | 0.1% |
| Item 28 | 0.1% | 0.1% | 0.1% | 0.2% | 0.2% | 0.2% |
| Item 29 | 0.3% | 0.1% | 0.3% | 0.2% | 0.3% | 0.2% |
| Item 30 | 0.2% | 0.2% | 0.4% | 0.5% | 0.3% | 0.1% |
| Item 31 | 0.1% | 0.1% | 0.4% | 0.1% | 0.0% | 0.1% |
| Item 32 | 0.1% | 0.1% | 0.1% | 0.1% | 0.2% | 0.1% |
| Item 33 | 0.0% | 0.1% | 0.1% | 0.2% | 0.2% | 0.1% |
| Item 34 | 0.3% | 0.6% | 0.6% | 0.6% | 0.5% | 0.4% |
| Item 35 | 0.3% | 0.3% | 0.1% | 0.2% | 0.9% | 0.4% |
| Item 36 | 0.4% | 0.2% | 0.2% | 0.3% | 0.1% | 0.1% |
| Item 37 | 0.1% | 0.1% | 0.1% | 0.3% | 0.2% | 0.2% |
| Item 38 | 0.4% | 0.5% | 0.2% | 0.7% | 0.8% | 1.0% |
| Item 39 | 1.1% | 0.4% | 0.7% | 0.8% | 0.8% | 1.4% |
| Item 40 | 1.7% | 1.9% | 2.4% | 2.2% | 2.3% | 2.3% |
| Item 41 | 0.7% | 1.3% | 0.6% | 0.5% | 0.5% | 0.4% |
| Item 42 | 0.3% | 0.5% | 0.7% | 0.1% | 0.3% | 0.3% |
| Item 43 | 0.2% | 0.4% | 0.6% | 0.3% | 0.4% | 0.3% |
| Item 44 | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% |
| Item 45 | 0.8% | 0.7% | 0.5% | 0.4% | 0.2% | 1.2% |
| Item 46 | 1.2% | 0.8% | 0.8% | 0.6% | 0.2% | 1.2% |
| Item 47 | 0.8% | 0.8% | 0.6% | 0.6% | 0.3% | 1.0% |
| Item 48 | 0.1% | 0.1% | 0.2% | 0.0% | 0.1% | 0.7% |
| Item 49 | 0.1% | 0.0% | 0.0% | 0.0% | 0.1% | 0.0% |
| Item 50 | 0.5% | 0.5% | 0.4% | 0.2% | 0.2% | 0.1% |
| Item 51 | 0.2% | 0.1% | 0.1% | 0.2% | 0.0% | 0.0% |
| Item 52 | 0.3% | 0.3% | 0.2% | 0.2% | 0.5% | 0.4% |
| Item 53 | 0.5% | 0.5% | 0.1% | 0.7% | 0.3% | 0.4% |
| Item 54 | 0.6% | 1.6% | 0.8% | 0.5% | 0.6% | 0.6% |
| Item 55 | 0.1% | 0.1% | 0.1% | 0.2% | 0.1% | 0.0% |
| Item 56 | 0.1% | 0.1% | 0.1% | 0.2% | 0.1% | 0.0% |
| Item 57 | 0.1% | 0.1% | 0.1% | 0.3% | 0.1% | 0.0% |
| Item 58 | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% |
| Average for Items 1–58 | 0.4% | 0.4% | 0.3% | 0.3% | 0.3% | 0.4% |

Table 5 shows the item nonresponse rate for items that have skip patterns and the average nonresponse rate for each fiscal year. Overall, the average nonresponse rate in each fiscal year was less than 2 percent. Fiscal years 2013 and 2014 had the lowest average nonresponse rates (1.1 percent), while fiscal year 2012 had the highest average nonresponse rate (1.7 percent). Item-level nonresponse rates were low in all fiscal years, ranging from 0 percent to 9.4 percent. Item 24 (“Does this employer keep in contact with you about future employment before leaving at the end of the season?”) had the highest nonresponse rate overall, ranging from 5.1 percent in fiscal year 2016 to 9.4 percent in fiscal year 2013. Other items with higher nonresponse rates relative to the remaining items with skip patterns were Item 4 (2.7–5.1 percent, “And in your home country, do you own or are you buying any of the following items?”); Item 22 (0–6.0 percent, “Are you paid as an individual or by the crew?”); Item 23 (2.2–8.3 percent, “How and when do you receive the money bonus?”); and Item 25 (3.3–7.1 percent, “Do you pay a fee to the grower/contractor ‘raiteros’ for rides to work?”).

All items included in the item nonresponse study had nonresponse rates lower than 30 percent. So, none of the NAWS variables met the OMB criteria for further analysis of bias.

Table 5. Item Nonresponse for Items with Skip Patterns, by Fiscal Year.

| Item | FY 2011 | FY 2012 | FY 2013 | FY 2014 | FY 2015 | FY 2016 |
|---|---|---|---|---|---|---|
| Item 1 | 1.7% | 2.3% | 0.6% | 1.4% | 1.5% | 1.1% |
| Item 2 | 0.2% | 0.3% | 0.3% | 0.1% | 0.0% | 0.1% |
| Item 3 | 0.4% | 0.5% | 0.5% | 0.5% | 0.0% | 0.3% |
| Item 4 | 2.7% | 4.8% | 2.9% | 3.9% | 5.1% | 4.9% |
| Item 5 | 1.1% | 1.5% | 1.0% | 0.2% | 0.8% | 0.3% |
| Item 6 | 0.6% | 1.6% | 0.3% | 0.7% | 0.9% | 0.9% |
| Item 7 | 0.6% | 0.8% | 1.1% | 0.4% | 0.8% | 0.5% |
| Item 8 | 0.3% | 0.3% | 0.7% | 0.2% | 0.1% | 0.3% |
| Item 9 | 0.5% | 0.7% | 1.1% | 0.5% | 0.2% | 0.5% |
| Item 10 | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.1% |
| Item 11 | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.1% |
| Item 12 | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.2% |
| Item 13 | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.2% |
| Item 14 | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.1% |
| Item 15 | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.1% |
| Item 16 | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.1% |
| Item 17 | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.1% |
| Item 18 | 0.1% | 0.0% | 0.0% | 0.3% | 0.0% | 0.2% |
| Item 19 | 0.1% | 0.0% | 0.0% | 0.4% | 0.0% | 0.2% |
| Item 20 | 1.1% | 1.7% | 1.0% | 1.6% | 1.7% | 1.4% |
| Item 21 | 0.9% | 1.3% | 1.2% | 1.1% | 1.0% | 1.0% |
| Item 22 | 3.9% | 5.7% | 0.0% | 3.2% | 2.9% | 6.0% |
| Item 23 | 3.8% | 8.3% | 5.0% | 3.7% | 3.8% | 2.2% |
| Item 24 | 7.7% | 7.1% | 9.4% | 8.0% | 7.6% | 5.1% |
| Item 25 | 4.4% | 6.2% | 3.6% | 3.3% | 7.1% | 4.8% |
| Item 26 | 0.8% | 1.2% | 0.2% | 0.3% | 0.3% | 0.6% |
| Item 27 | 0.9% | 2.8% | 1.5% | 0.2% | 0.2% | 0.9% |
| Item 28 | 0.6% | 0.2% | 0.7% | 0.2% | 0.3% | 0.5% |
| Average for Items 1–28 | 1.2% | 1.7% | 1.1% | 1.1% | 1.2% | 1.2% |

Conclusions

The purpose of this study was to determine the share of agricultural workers who did not answer each item (item nonresponse). The study indicated that the average nonresponse rate was less than 0.5 percent for items without a skip pattern and less than 2 percent for items with a skip pattern. Since the average and individual item nonresponse rates are all less than 30 percent, further analysis of bias is not necessary.

Appendix B: Nonresponse Study 2 – NAWS Unit (Employer) Nonresponse

NAWS Unit (Employer) Nonresponse

Introduction

The first analysis will assess NAWS nonresponse bias by comparing information in the sampling frame on eligible respondents and nonrespondents. This study was described in Part B of the OMB submission as follows: “While the sampling data is somewhat sparse for nonrespondents, three pieces of information are useful: geographic location, NAICS code, and the source used to obtain employer names. The NAWS will use three sources of employer names: a) the BLS UI list, b) marketing lists, and c) internet searches and contacts with knowledgeable local individuals. Geographic area and source lists are available for all employers, while NAICS codes are available for all employers who pay UI taxes, marketing list employers, and some additional employers.”

Using all three variables (source, NAICS, and geography), we made the following comparisons:

Employers allowing interviews compared to sampled employers that refused or were unable to be screened (i.e., excluding the ineligible),

Employers allowing interviews compared to eligible employers that refused, and

Eligible employers compared to unscreened sample members (employers whose eligibility could not be determined).

Nonresponse bias was calculated using the bias calculation formula from OMB’s Standards and Guidelines for Statistical Surveys (2006).

Sample

The sample for the unit nonresponse study consisted of 26,151 agricultural employers from NAWS fiscal years 2013–2017 (cycles 74–88) that were contacted by JBS. The source lists of employers were obtained primarily from BLS and were supplemented with data from a commercial list (InfoUSA), as well as other sources (e.g., consultant and internet searches).

Data Preparation

Prior to analysis, several steps were taken to prepare the data. Response codes were collapsed to allow for easier interpretation. All response codes (1–46 and 97–99 for fiscal years 2013–2016, and 1–18 for fiscal year 2017) were recoded into five response categories (1=Interviewed, 2=Refused, 3=Eligibility unknown, 4=Not in sample/Not eligible, 5=Cannot assign category or no need to contact). All sources were collapsed into 1=BLS, 2=InfoUSA, and 3=Other.

Data cleaning and recoding were conducted for incorrect or missing NAICS codes. One hundred thirty-four employers from the BLS source did not have the expected NAICS codes 1111 (Oilseed and Grain Farming), 1112 (Vegetable and Melon Farming), 1113 (Fruit and Tree Nut Farming), 1114 (Greenhouse, Nursery, and Floriculture Production), 1119 (Other Crop Farming), or 1151 (Support Activities for Crop Production). The NAICS codes for these employers were examined across quarters and years to find the correct NAICS codes. For example, an employer’s first-quarter NAICS code might be 112, while its codes in other quarters are 111 or 1151. Of the 134 employers, 44 were recoded to NAICS 111 or 1151. Specifically, 1 employer was recoded to NAICS 1111, 7 were recoded to NAICS 1112, 9 were recoded to NAICS 1113, 8 were recoded to NAICS 1114, 7 were recoded to NAICS 1119, and 12 were recoded to NAICS 1151. Another 89 employers could not be recoded because their codes in all quarters and years were NAICS 1121–1129. The one remaining employer was recoded to missing due to inconsistent NAICS code information.

An additional 585 employers from the InfoUSA source did not have the expected NAICS codes 1111–1119 or 1151. All of these employers have secondary NAICS codes that are 1111–1119 or 1151. Specifically, 34 employers were recoded to NAICS 1111, 1 was recoded to NAICS 1112, 120 were recoded to NAICS 1113, 123 were recoded to NAICS 1114, 141 were recoded to NAICS 1119, and 166 were recoded to NAICS 1151.

Of the 1643 employers that do not have NAICS codes, 385 have SIC codes. The SIC codes for the 385 employers were converted to the appropriate NAICS codes using NAICS Identification Tools (https://www.naics.com/search/). Of the 385 employers, 31 employers were recoded to NAICS 1111, 53 were recoded to NAICS 1112, 141 were recoded to NAICS 1113, 60 were recoded to NAICS 1114, 79 were recoded to NAICS 1119, 20 were recoded to NAICS 1151, and 1 was recoded to NAICS 1122.

Due to the small number of employers (N = 102 across fiscal years 2013–2017) with NAICS 1121–1129, they were collapsed into NAICS 112.
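The collapsing step described above can be illustrated with a minimal sketch. The function name `collapse_naics` and the sample codes are hypothetical and are not taken from the NAWS processing code; the sketch only assumes that NAICS codes are stored as four-digit strings.

```python
def collapse_naics(code: str) -> str:
    """Collapse the animal-production codes 1121-1129 into the group 112.

    Hypothetical helper: all other codes are returned unchanged.
    """
    code = code.strip()
    if len(code) == 4 and code.startswith("112") and code[3] in "123456789":
        return "112"
    return code


# Illustrative codes only (not actual employer records).
codes = ["1113", "1121", "1129", "1151"]
collapsed = [collapse_naics(c) for c in codes]
print(collapsed)
```

Keeping the crop and crop-support codes at four digits while pooling only the 112x codes mirrors the seven NAICS categories used in the analysis.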

Analysis

Nonresponse rate, differences between respondents and nonrespondents, and nonresponse bias analyses were conducted to examine the differences between respondents and nonrespondents across different characteristics. The following characteristics were examined: source of the employer list (BLS, InfoUSA, or Other), NAICS (1111, 1112, 1113, 1114, 1119, 1151, and 112), and geography (divided into six and 12 regions). Using all three characteristics, the following comparisons were made:

Employers allowing interviews were compared to sampled employers that refused or were unable to be screened (i.e., excluding ineligible employers).

Employers allowing interviews compared to eligible employers that refused.

Eligible employers compared to employers whose eligibility could not be determined.

Nonresponse bias was calculated using the bias calculation formula from OMB’s Standards and Guidelines for Statistical Surveys (2006):

$$B(\bar{y}_r) = \bar{y}_r - \bar{y}_t = \left(\frac{n_{nr}}{n}\right)\left(\bar{y}_r - \bar{y}_{nr}\right)$$

where:

$\bar{y}_t$ = the mean based on all sample cases;

$\bar{y}_r$ = the mean based only on respondent cases;

$\bar{y}_{nr}$ = the mean based only on nonrespondent cases;

$n$ = the number of cases in the sample; and

$n_{nr}$ = the number of nonrespondent cases.

The formula provides a measure of nonresponse bias, which depends on the nonresponse rate and the difference between the means of respondents and nonrespondents on the three key variables. The smaller each of these components is, the smaller the nonresponse bias.
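As a rough illustration of how the two components combine, the sketch below computes the bias as the nonresponse rate multiplied by the difference between the respondent and nonrespondent means, and checks that this equals the respondent mean minus the full-sample mean. The function names, counts, and means are hypothetical; they were chosen only so that the nonresponse rate (83 percent) and the difference (4 percentage points) resemble one row of the results tables.

```python
def nonresponse_bias(n_sample, n_nonrespondents, mean_respondents, mean_nonrespondents):
    """Bias of the respondent mean: (n_nr / n) * (mean_r - mean_nr)."""
    nonresponse_rate = n_nonrespondents / n_sample
    return nonresponse_rate * (mean_respondents - mean_nonrespondents)


def bias_from_full_sample_mean(n_sample, n_nonrespondents, mean_respondents, mean_nonrespondents):
    """Same quantity computed as mean_r - mean_t, where mean_t pools all cases."""
    n_respondents = n_sample - n_nonrespondents
    mean_total = (n_respondents * mean_respondents
                  + n_nonrespondents * mean_nonrespondents) / n_sample
    return mean_respondents - mean_total


# Hypothetical inputs: 830 of 1,000 cases are nonrespondents (83 percent),
# and the respondent and nonrespondent means differ by 4 percentage points.
bias = nonresponse_bias(1000, 830, 0.72, 0.68)
check = bias_from_full_sample_mean(1000, 830, 0.72, 0.68)
print(f"bias = {bias:.3f}")  # about 0.033, i.e., roughly 3 percent
```

Because the bias is a product, a high nonresponse rate produces little bias when respondents and nonrespondents look alike on the characteristic being compared.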

In addition to the nonresponse bias analysis, logistic regressions were conducted to examine the effect of each characteristic while holding the other characteristics constant.

Results

Table 1 shows the distribution of the entire sample by source, NAICS, and geography. The majority (69 percent) of the employers were obtained from the BLS list. Almost one-third of the employers had NAICS 1119 (Other Crop Farming), followed by almost a quarter (22 percent) of employers with NAICS 1113 (Fruit and Tree Nut Farming). The largest share of employers (31 percent) was from California.

Table 2 shows the nonresponse rate and bias for the three comparisons, for source and NAICS. The nonresponse rate for source was 83–89, 55–57, and 61–75 percent for comparisons A, B, and C, respectively. Bias was low (no more than 4 percent in absolute value) across the three comparisons and sources. Nonresponse rates varied more for NAICS: 70–95, 47–81, and 43–74 percent for comparisons A, B, and C, respectively. Despite these larger variations and higher nonresponse, the bias remained low (0–10 percent in absolute value). NAICS 1114 (Greenhouse, Nursery, and Floriculture Production) had the largest difference between respondents and nonrespondents (15 percent) and the largest bias (10 percent) in comparison A, even though its nonresponse rate was the lowest (70 percent).

Table 3 shows the nonresponse rate and bias for the three comparisons, for geography (six regions and 12 regions). The nonresponse rate for the six regions was 80–87, 49–62, and 57–66 percent for comparisons A, B, and C, respectively. The bias was low (no more than 7 percent in absolute value) across the three comparisons. California had one of the highest nonresponse rates and one of the highest biases. The nonresponse rates for the 12 regions were 75–87, 46–63, and 54–66 percent for comparisons A, B, and C, respectively. Similar to the 6-region analysis, the 12 regions also had low bias (0–7 percent in absolute value) across the three comparisons, and California had one of the highest nonresponse rates and biases.

Table 1. Distribution of Source, NAICS, and Geography (Entire Sample).

Variable | Sample size | Percent
Source | |
BLS | 17981 | 69%
InfoUSA | 6897 | 26%
Other | 1271 | 5%
NAICS | |
1111 (Oilseed and Grain Farming) | 2326 | 9%
1112 (Vegetable and Melon Farming) | 1851 | 8%
1113 (Fruit and Tree Nut Farming) | 5373 | 22%
1114 (Greenhouse, Nursery, and Floriculture Production) | 3240 | 13%
1119 (Other Crop Farming) | 7379 | 30%
1151 (Support Activities for Crop Production) | 4235 | 17%
112 (Cattle Ranching and Farming, Hog and Pig Farming, Poultry and Egg Production, Sheep and Goat Farming, Aquaculture, or Other Animal Production) | 102 | <1%
Region 6 | |
East | 3829 | 15%
Southeast | 3255 | 12%
Midwest | 4664 | 18%
Southwest | 2978 | 11%
Northwest | 3365 | 13%
California | 8059 | 31%
Region 12 | |
AP12 | 1723 | 7%
CA | 8059 | 31%
CBNP | 2977 | 11%
DLSE | 1691 | 6%
FL | 1564 | 6%
LK | 1687 | 6%
MN12 | 1302 | 5%
MN3 | 986 | 4%
NE1 | 968 | 4%
NE2 | 1138 | 4%
PC | 2063 | 8%
SP | 1992 | 8%

AP12 = KY, NC, TN, VA, WV. CA = CA only. CBNP = IA, IL, IN, KS, MO, ND, NE, OH, SD. DLSE = AL, AR, GA, LA, MS, SC. FL = FL only. LK = MI, MN, WI. MN12 = CO, ID, MT, NV, UT, WY. MN3 = AZ, NM. NE1 = CT, MA, ME, NH, NY, RI, VT. NE2 = DE, DC, MD, NJ, PA. PC = OR, WA. SP = OK, TX.

East = AP12, NE1, NE2. Southeast = DLSE, FL. Midwest = CBNP, LK. Southwest = MN3, SP. Northwest = MN12, PC. California = California only.
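The two regional groupings defined in the notes above can be expressed as a simple lookup. This is only a sketch of one way to encode the note; the dictionary and function names are hypothetical, and the state lists are copied from the table notes.

```python
# State-to-region lookup built from the table notes (names are hypothetical).
REGION12_STATES = {
    "AP12": ["KY", "NC", "TN", "VA", "WV"],
    "CA": ["CA"],
    "CBNP": ["IA", "IL", "IN", "KS", "MO", "ND", "NE", "OH", "SD"],
    "DLSE": ["AL", "AR", "GA", "LA", "MS", "SC"],
    "FL": ["FL"],
    "LK": ["MI", "MN", "WI"],
    "MN12": ["CO", "ID", "MT", "NV", "UT", "WY"],
    "MN3": ["AZ", "NM"],
    "NE1": ["CT", "MA", "ME", "NH", "NY", "RI", "VT"],
    "NE2": ["DE", "DC", "MD", "NJ", "PA"],
    "PC": ["OR", "WA"],
    "SP": ["OK", "TX"],
}

REGION6_GROUPS = {
    "East": ["AP12", "NE1", "NE2"],
    "Southeast": ["DLSE", "FL"],
    "Midwest": ["CBNP", "LK"],
    "Southwest": ["MN3", "SP"],
    "Northwest": ["MN12", "PC"],
    "California": ["CA"],
}

# Invert both mappings so a state abbreviation can be looked up directly.
STATE_TO_REGION12 = {
    state: region for region, states in REGION12_STATES.items() for state in states
}
REGION12_TO_REGION6 = {
    region12: region6 for region6, members in REGION6_GROUPS.items() for region12 in members
}


def region6_for_state(state: str) -> str:
    """Return the six-region label for a two-letter state abbreviation."""
    return REGION12_TO_REGION6[STATE_TO_REGION12[state]]


print(STATE_TO_REGION12["NC"], region6_for_state("NC"))
```

Nesting the 12 regions inside the six regions in this way keeps the two geographic analyses consistent with each other.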

Table 2. Unit Nonresponse Rate and Bias by Source and NAICS.

Comp. A = Nonresponse among all eligible and unscreened employers. Comp. B = Nonresponse rate among eligible employers. Comp. C = Eligibility rate. Difference = difference between respondents and nonrespondents.

Variable | Comp. A: Nonresponse rate | Comp. A: Difference | Comp. A: Bias¹ | Comp. B: Nonresponse rate | Comp. B: Difference | Comp. B: Bias¹ | Comp. C: Nonresponse rate | Comp. C: Difference | Comp. C: Bias¹
Source | | | | | | | | |
BLS | 83% | 4% | 3% | 57% | -2% | -1% | 61% | 7% | 4%
InfoUSA | 86% | -3% | -2% | 55% | 1% | 1% | 69% | -5% | -3%
Other | 89% | -2% | -1% | 55% | 0% | 0% | 75% | -2% | -2%
NAICS | | | | | | | | |
111 or 1151 (vs 112) | 84% | 0% | 0% | 57% | 0% | 0% | 62% | 0% | 0%
1111 | 89% | -4% | -3% | 67% | -3% | -2% | 68% | -2% | -1%
1112 | 82% | 1% | 1% | 56% | 0% | 0% | 58% | 2% | 1%
1113 | 84% | -1% | -1% | 56% | 1% | 1% | 64% | -2% | -1%
1114 | 70% | 15% | 10% | 47% | 9% | 4% | 43% | 12% | 5%
1119 | 86% | -5% | -5% | 60% | -3% | -2% | 66% | -4% | -3%
1151 | 89% | -6% | -5% | 63% | -4% | -2% | 69% | -5% | -3%
112 | 95% | 0% | 0% | 81% | 0% | 0% | 74% | 0% | 0%

Comparison A = Employers allowing interviews compared to sampled employers that refused or were unable to be screened (i.e., excluding the ineligible).

Comparison B = Employers allowing interviews compared to eligible employers who refused.

Comparison C = Eligible employers compared to employers whose eligibility could not be determined.

NAICS 1111 = Oilseed and Grain Farming. NAICS 1112 = Vegetable and Melon Farming. NAICS 1113 = Fruit and Tree Nut Farming. NAICS 1114 = Greenhouse, Nursery, and Floriculture Production. NAICS 1119 = Other Crop Farming. NAICS 1151 = Support Activities for Crop Production. NAICS 112 = Cattle Ranching and Farming, Hog and Pig Farming, Poultry and Egg Production, Sheep and Goat Farming, Aquaculture, or Other Animal Production.

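¹ Bias = nonresponse rate × difference between respondents and nonrespondents, that is, $\frac{n_{nr}}{n}\left(\bar{y}_r - \bar{y}_{nr}\right)$.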

Table 3. Unit Nonresponse Rate and Bias by Geography.

Comp. A = Nonresponse among all eligible and unscreened employers. Comp. B = Nonresponse rate among eligible employers. Comp. C = Eligibility rate. Difference = difference between respondents and nonrespondents. Bias is calculated as in Table 2.

Variable | Comp. A: Nonresponse rate | Comp. A: Difference | Comp. A: Bias | Comp. B: Nonresponse rate | Comp. B: Difference | Comp. B: Bias | Comp. C: Nonresponse rate | Comp. C: Difference | Comp. C: Bias
Region 6 | | | | | | | | |
East | 80% | 4% | 3% | 49% | 4% | 2% | 60% | 1% | 1%
Southeast | 80% | 3% | 3% | 49% | 4% | 2% | 61% | 1% | 1%
Midwest | 85% | -1% | -1% | 58% | -1% | 0% | 65% | -1% | -1%
Southwest | 86% | -2% | -2% | 60% | -2% | -1% | 66% | -1% | -1%
Northwest | 80% | 4% | 3% | 53% | 2% | 1% | 57% | 3% | 2%
California | 87% | -8% | -7% | 62% | -8% | -5% | 65% | -3% | -2%
Region 12 | | | | | | | | |
AP12 | 84% | 0% | 0% | 53% | 1% | 0% | 65% | -1% | 0%
CA | 87% | -8% | -7% | 62% | -8% | -5% | 65% | -3% | -2%
CBNP | 86% | -2% | -1% | 61% | -2% | -1% | 65% | -1% | -1%
DLSE | 81% | 2% | 1% | 46% | 3% | 1% | 64% | 0% | 0%
FL | 80% | 2% | 1% | 51% | 2% | 1% | 59% | 1% | 1%
LK | 83% | 0% | 0% | 52% | 1% | 1% | 64% | 0% | 0%
MN12 | 82% | 1% | 1% | 54% | 0% | 0% | 60% | 1% | 0%
MN3 | 84% | 0% | 0% | 55% | 0% | 0% | 65% | 0% | 0%
NE1 | 75% | 2% | 1% | 46% | 2% | 1% | 54% | 1% | 1%
NE2 | 77% | 2% | 1% | 46% | 2% | 1% | 57% | 1% | 1%
PC | 79% | 3% | 2% | 53% | 2% | 1% | 56% | 3% | 2%
SP | 87% | -2% | -2% | 63% | -2% | -1% | 66% | -1% | -1%

Comparison A = Employers allowing interviews compared to sampled employers that refused or were unable to be screened (i.e., excluding the ineligible).

Comparison B = Employers allowing interviews compared to eligible employers who refused.

Comparison C = Eligible employers compared to employers whose eligibility could not be determined.

AP12 = KY, NC, TN, VA, WV. CA = CA only. CBNP = IA, IL, IN, KS, MO, ND, NE, OH, SD. DLSE = AL, AR, GA, LA, MS, SC. FL = FL only. LK = MI, MN, WI. MN12 = CO, ID, MT, NV, UT, WY. MN3 = AZ, NM. NE1 = CT, MA, ME, NH, NY, RI, VT. NE2 = DE, DC, MD, NJ, PA. PC = OR, WA. SP = OK, TX. East = AP12, NE1, NE2. Southeast = DLSE, FL. Midwest = CBNP, LK. Southwest = MN3, SP. Northwest = MN12, PC. California = California only.

Tables 4 and 5 show the regression results with the 6 regions and 12 regions, respectively. The estimate (B), standard error, statistical significance (p-value), and odds ratio are presented. The odds ratio shows the odds of employers participating in the NAWS relative to the reference category (BLS, NAICS 1119, and California) when holding all other variables constant.

In comparisons A and C, employers selected from the InfoUSA source were significantly less likely to participate than employers from the BLS list, holding all other variables (NAICS and region) constant. There were no significant differences among the three sources in comparison B.

In all three comparisons, employers with NAICS 1114 (Greenhouse, Nursery, and Floriculture Production) had the highest likelihood of participating in NAWS, compared to NAICS 1119 (Other Crop Farming). For example, in comparison A, the odds of being interviewed were about 2.5 times as high for employers with NAICS 1114 as for those with NAICS 1119 (Table 4).
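The link between a logistic regression estimate and its odds ratio can be sketched as follows: the odds ratio is the exponential of the estimate B. The Wald confidence interval shown here is an added illustration and is not reported in the study; the function names are hypothetical, and because the published estimates are rounded to two decimals, recomputed values may differ slightly from the tabled odds ratios.

```python
import math


def odds_ratio(b: float) -> float:
    """Odds ratio implied by a logistic regression coefficient."""
    return math.exp(b)


def wald_interval(b: float, std_error: float, z: float = 1.96):
    """Approximate 95 percent interval for the odds ratio (illustrative only)."""
    return math.exp(b - z * std_error), math.exp(b + z * std_error)


# Comparison A estimates as reported in Table 4: NAICS 1114 and InfoUSA.
or_1114 = odds_ratio(0.93)
or_infousa = odds_ratio(-0.32)
low, high = wald_interval(0.93, 0.07)

print(f"NAICS 1114 vs 1119: odds ratio = {or_1114:.2f}")
print(f"InfoUSA vs BLS: odds ratio = {or_infousa:.2f}")
```

An odds ratio above 1 indicates higher odds of participation than the reference category, and a value below 1 indicates lower odds.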

In all three comparisons, employers in four of the six regions (East, Southeast, Midwest, and Northwest) were significantly more likely to participate in NAWS compared to California. Employers in the Southwest region also had higher odds of participating than employers in California, but the difference was significant only in comparison A. In terms of the 12 regions, employers in 10 of the regions had significantly higher odds of participating compared to California. Region SP was not statistically different compared to California. Florida in comparison C was also not significantly different compared to California.

Table 4. Regression with Source, NAICS, and Six Regions.

Comp. A = Nonresponse among all eligible and unscreened employers. Comp. B = Nonresponse rate among eligible employers. Comp. C = Eligibility rate.

Variable | Comp. A: B | Comp. A: Std error | Comp. A: Sig | Comp. A: Odds ratio | Comp. B: B | Comp. B: Std error | Comp. B: Sig | Comp. B: Odds ratio | Comp. C: B | Comp. C: Std error | Comp. C: Sig | Comp. C: Odds ratio
Source | | | | | | | | | | | |
BLS¹ | -- | -- | -- | -- | -- | -- | -- | -- | -- | -- | -- | --
InfoUSA | -0.32 | 0.07 | <.0001 | 0.73 | 0.03 | 0.09 | 0.7016 | 1.04 | -0.46 | 0.06 | <.0001 | 0.63
Other | 0.37 | 0.67 | 0.5838 | 1.45 | 0.46 | 1.10 | 0.6752 | 1.59 | -0.04 | 0.68 | 0.9551 | 0.96
NAICS | | | | | | | | | | | |
1119¹ | -- | -- | -- | -- | -- | -- | -- | -- | -- | -- | -- | --
1111 | -0.39 | 0.11 | 0.0003 | 0.68 | -0.26 | 0.13 | 0.0396 | 0.77 | -0.26 | 0.07 | 0.0005 | 0.77
1112 | 0.31 | 0.10 | 0.0011 | 1.37 | 0.22 | 0.11 | 0.0548 | 1.24 | 0.19 | 0.07 | 0.0087 | 1.21
1113 | 0.26 | 0.08 | 0.0007 | 1.30 | 0.38 | 0.09 | <.0001 | 1.47 | -0.01 | 0.06 | 0.8867 | 0.99
1114 | 0.93 | 0.07 | <.0001 | 2.53 | 0.55 | 0.09 | <.0001 | 1.73 | 0.82 | 0.06 | <.0001 | 2.26
1151 | -0.16 | 0.09 | 0.0652 | 0.85 | 0.05 | 0.10 | 0.666 | 1.05 | -0.22 | 0.06 | 0.0002 | 0.80
112 | -1.11 | 0.59 | 0.0588 | 0.33 | -1.00 | 0.63 | 0.1129 | 0.37 | -0.51 | 0.29 | 0.079 | 0.60
Region | | | | | | | | | | | |
California¹ | -- | -- | -- | -- | -- | -- | -- | -- | -- | -- | -- | --
East | 0.64 | 0.08 | <.0001 | 1.90 | 0.62 | 0.10 | <.0001 | 1.86 | 0.31 | 0.07 | <.0001 | 1.37
Southeast | 0.49 | 0.08 | <.0001 | 1.63 | 0.54 | 0.10 | <.0001 | 1.72 | 0.16 | 0.06 | 0.0116 | 1.17
Midwest | 0.39 | 0.09 | <.0001 | 1.48 | 0.31 | 0.10 | 0.0023 | 1.37 | 0.22 | 0.07 | 0.001 | 1.25
Southwest | 0.19 | 0.09 | 0.0306 | 1.21 | 0.19 | 0.10 | 0.0731 | 1.21 | 0.06 | 0.06 | 0.3115 | 1.07
Northwest | 0.56 | 0.07 | <.0001 | 1.76 | 0.41 | 0.09 | <.0001 | 1.51 | 0.38 | 0.06 | <.0001 | 1.46

1 Reference category.

Table 5. Regression with Source, NAICS, and 12 Regions.

Comp. A = Nonresponse among all eligible and unscreened employers. Comp. B = Nonresponse rate among eligible employers. Comp. C = Eligibility rate.

Variable | Comp. A: B | Comp. A: Std error | Comp. A: Sig | Comp. A: Odds ratio | Comp. B: B | Comp. B: Std error | Comp. B: Sig | Comp. B: Odds ratio | Comp. C: B | Comp. C: Std error | Comp. C: Sig | Comp. C: Odds ratio
Source | | | | | | | | | | | |
BLS¹ | -- | -- | -- | -- | -- | -- | -- | -- | -- | -- | -- | --
InfoUSA | -0.38 | 0.08 | <.0001 | 0.68 | -0.02 | 0.09 | 0.7965 | 0.98 | -0.50 | 0.06 | <.0001 | 0.61
Other | 0.29 | 0.72 | 0.6940 | 1.33 | 0.53 | 1.14 | 0.6419 | 1.70 | -0.07 | 0.71 | 0.9185 | 0.93
NAICS | | | | | | | | | | | |
1119¹ | -- | -- | -- | -- | -- | -- | -- | -- | -- | -- | -- | --
1111 | -0.40 | 0.11 | 0.0002 | 0.67 | -0.24 | 0.13 | 0.0558 | 0.78 | -0.27 | 0.07 | 0.0003 | 0.76
1112 | 0.28 | 0.10 | 0.0042 | 1.32 | 0.20 | 0.12 | 0.0881 | 1.22 | 0.17 | 0.07 | 0.0243 | 1.18
1113 | 0.26 | 0.08 | 0.0012 | 1.30 | 0.39 | 0.09 | <.0001 | 1.48 | -0.02 | 0.06 | 0.7084 | 0.98
1114 | 0.96 | 0.08 | <.0001 | 2.61 | 0.59 | 0.09 | <.0001 | 1.80 | 0.83 | 0.06 | <.0001 | 2.29
1151 | -0.17 | 0.09 | 0.0483 | 0.84 | 0.05 | 0.11 | 0.6688 | 1.05 | -0.23 | 0.06 | 0.0001 | 0.79
112 | -1.14 | 0.59 | 0.052 | 0.32 | -1.06 | 0.64 | 0.0984 | 0.35 | -0.53 | 0.29 | 0.0656 | 0.59
Region | | | | | | | | | | | |
CA¹ | -- | -- | -- | -- | -- | -- | -- | -- | -- | -- | -- | --
AP12 | 0.45 | 0.11 | <.0001 | 1.56 | 0.48 | 0.14 | 0.0004 | 1.61 | 0.15 | 0.09 | 0.0819 | 1.16
CBNP | 0.35 | 0.10 | 0.0008 | 1.41 | 0.23 | 0.12 | 0.0517 | 1.26 | 0.23 | 0.08 | 0.0031 | 1.26
DLSE | 0.75 | 0.10 | <.0001 | 2.11 | 0.79 | 0.13 | <.0001 | 2.21 | 0.27 | 0.08 | 0.0011 | 1.31
FL | 0.28 | 0.10 | 0.0041 | 1.33 | 0.33 | 0.12 | 0.0062 | 1.39 | 0.06 | 0.08 | 0.4795 | 1.06
LK | 0.52 | 0.12 | <.0001 | 1.68 | 0.49 | 0.15 | 0.0008 | 1.63 | 0.24 | 0.09 | 0.01 | 1.27
MN12 | 0.61 | 0.12 | <.0001 | 1.84 | 0.48 | 0.14 | 0.0008 | 1.61 | 0.36 | 0.09 | <.0001 | 1.43
MN3 | 0.48 | 0.13 | 0.0002 | 1.61 | 0.45 | 0.16 | 0.0044 | 1.56 | 0.20 | 0.10 | 0.0383 | 1.22
NE1 | 1.07 | 0.14 | <.0001 | 2.92 | 0.91 | 0.18 | <.0001 | 2.48 | 0.68 | 0.12 | <.0001 | 1.98
NE2 | 0.64 | 0.12 | <.0001 | 1.90 | 0.64 | 0.15 | <.0001 | 1.90 | 0.30 | 0.10 | 0.0027 | 1.35
PC | 0.55 | 0.08 | <.0001 | 1.74 | 0.39 | 0.10 | <.0001 | 1.48 | 0.39 | 0.06 | <.0001 | 1.48
SP | 0.05 | 0.11 | 0.6372 | 1.05 | 0.06 | 0.12 | 0.6316 | 1.06 | 0.00 | 0.08 | 0.9542 | 1.00

¹ Reference category.

Conclusions

The purpose of the unit nonresponse study was to examine nonresponse among agricultural employers sampled by the NAWS to determine whether there was any systematic bias between employers granting permission for their workers to be interviewed compared with nonrespondents, those refusing interviews, those unable to be screened, or those for which survey staff could not determine eligibility. Potential bias was examined across source, NAICS code, and geographic region.

The results of the unit nonresponse study indicated that nonresponse rates were between 43 and 95 percent, depending on whether employers allowing interviews were compared to those refusing or unable to be screened (70–95 percent) or only to eligible employers that refused (46–81 percent), or whether eligible employers were compared to those for which eligibility could not be determined (43–75 percent). There were small variations in nonresponse rates between BLS, InfoUSA, and other sources, and between the 6 or 12 regions. There were larger variations in nonresponse rates between the NAICS codes. Although nonresponse rates were high, the bias did not exceed 10 percent in absolute value, which indicates that there were small differences between respondents and nonrespondents across the three sources, seven NAICS codes, and 6 or 12 geographic locations.

Appendix C: Nonresponse Study 3 – Follow Up with Employers Who Were Not Successfully Screened During the Initial NAWS Data Collection

Follow Up with Employers Who Were Not Successfully Screened During the Initial NAWS Data Collection

Introduction

As part of efforts to understand agricultural employer nonresponse in the National Agricultural Workers Survey, JBS conducted a nonresponse follow-up (NRFU) study. The additional contact was focused on identifying whether nonresponding employers were eligible or ineligible. The goal was to see if further efforts could improve the NAWS response rate.

JBS used both mail and telephone attempts to contact nonresponding agricultural employers whose survey eligibility was unable to be determined by interviewer contacts during the Fall 2017 interview cycle (October 2017–February 2018). The additional contact efforts were carried out from May 2018 through August 2018. The gap between the end of the cycle and the follow-up time period was deliberate. Contacting employers at another time in the agricultural cycle was done to see if employers that might have been too busy to respond during the fall would respond if contacted during another season.

NRFU screening

The additional NRFU focused on screening nonresponding employers for eligibility using questions similar to those that interviewers would ask employers to determine eligibility when carrying out the survey. Employers were asked whether there had been employees actively working during the time period when NAWS interviewers were on site (the reference period) and whether these workers had been doing qualifying tasks on qualifying crops. Employers were also asked how many qualifying workers they had during the reference period.

Interviewers obtain this screening information during their initial contacts with the employers and ask these questions as part of a conversation, probing when needed for additional information. For the NRFU study, the eligibility contacts were standardized and distilled to a set of four questions that were included in a) a telephone script for contacting employers by phone, and b) a letter from the survey director that asked the employers to return their answers to the questions by mail. Both the script and the letter included the same explanation of the survey and the reasons for contacting the employers. The letter also included a JBS contact name and telephone number that the employer could call with questions or concerns about responding. The letter text can be found at the end of this appendix.

The Fall 2017 contact attempts with a nonresponding employer generally happened during a single interviewer trip. In a few counties with large interview allocations, interviewers made more than one trip and continued to contact nonresponding employers from earlier trips. To standardize the reference period, these employers were asked for information about their operations during the first interviewer trip to their county.

Sampling Universe

The universe for the Nonresponse Follow-up (NRFU) Study included 779 growers in 67 counties across 23 states. The list included each employer’s name and contact information along with documentation of the contact attempts made by the NAWS interviewer.

The criteria used to select nonresponding agricultural employers for the NRFU study were 1) the employers had been randomly selected for inclusion in the NAWS employer sampling list for Fall 2017, and 2) response codes indicated that the agricultural employer’s eligibility had not been determined because: a) the employer had not responded to the NAWS interviewers’ outreach during Fall 2017; b) the employer outreach was incomplete and eligibility for survey inclusion had not been determined; or c) the response code for that employer was missing in the interviewer documentation.

Nonresponse follow-up attempts

The nonresponse follow-up was carried out in four waves that included different combinations of mail and telephone contact attempts. Table 1 shows each wave and the number of employers who responded, the number of employers who did not respond at each wave, and the response rate.

The initial mailing consisted of 779 agricultural employers. A second mailing was sent to 448 NRFU sample members who had not responded to the first letter, who were not in the wave receiving only one mailing plus phone calls, and whose first letters had not been returned as undeliverable. Of the 779 agricultural employers, a random subset of 268 employers also received phone contact attempts. One group of 127 received only the first mailing and one or more telephone calls, while the remaining 141 received both mailings and one or more telephone calls.

Data collection began April 1, 2018 with the first mailing. The first set of NRFU calls took place from May 22 to June 14. The second mailing was sent out beginning June 14, and the second set of telephone calls was conducted from July 16 to August 17, 2018.

Table 1. Results of each wave of nonresponse follow up.

Mailing | N | Response | Non Response | Response Rate | Cumulative response | Cumulative response rate
First mailing* | 779 | 83 | 696 | 11% | 83 | 11%
Second mailing | 448 | 53 | 395 | 12% | 136 | 17%
Total for both rounds of mail response | 779 | 136 | | 17% | |
Phone follow up after mailing(s) | | | | | |
One mailing and phone follow up | 127 | 51 | 76 | 40% | 51 | 40%
Two mailings and phone follow up | 141 | 44 | 97 | 31% | 95 | 35%
Total phone response | 268 | 95 | | 35% | |
Mail and Phone Response Combined | 779 | 231 | 548 | 30% | |

*Nonresponse to the first mailing included 121 letters that were returned as undeliverable.

A total of 231 follow-up screenings were completed. One hundred and thirty-six employers responded to the mailings and returned their screening information by mail. Another 95 screenings were completed by telephone. The response rate was 30 percent at the completion of data collection (231 of 779 employers).
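The response rates by wave can be recomputed from the response counts and sample sizes in Table 1, as in the short sketch below. The variable and function names are hypothetical; the counts are taken from the table.

```python
# (label, responses, sample size) for each row of the NRFU wave table.
waves = [
    ("First mailing", 83, 779),
    ("Second mailing", 53, 448),
    ("Both mailings", 136, 779),
    ("One mailing and phone follow up", 51, 127),
    ("Two mailings and phone follow up", 44, 141),
    ("Total phone response", 95, 268),
    ("Mail and phone combined", 231, 779),
]


def response_rate_pct(responses: int, n: int) -> int:
    """Response rate as a whole-number percentage."""
    return round(100 * responses / n)


rates = {label: response_rate_pct(responses, n) for label, responses, n in waves}
for label, rate in rates.items():
    print(f"{label}: {rate}%")
```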

Data Preparation and the Analytic Sample

Prior to analysis, steps were taken to prepare the NRFU data and the Fall 2017 data for analysis. The first step was to review the responses to the screening questions and determine if, at the end of the NRFU data collection, the contacted employers could be classified as eligible, ineligible, or eligibility unknown. This was done by examining the responses to the four eligibility questions. For example, responses to the question about whether the employer had active workers during the reference period included answers such as “Yes” or “Yes, they’re year-round.” These were coded as eligible provided the crops and tasks were qualifying. Responses such as “No, don’t hire workers” or “No…do not farm or hire farmworkers” were coded as ineligible.

Seven agricultural employers were found to have been ineligible for the NRFU study. Further cleaning of the response data at the end of FY2018 resulted in one agricultural employer being removed from the sample because the employer had been interviewed in Fall 2017. Six additional agricultural employers were removed from analysis because they were not contacted during Fall 2017 and should not have been included in the survey. The final sample size for analysis was 772 agricultural employers receiving additional NRFU; 229 of them were successfully screened. The final samples consisted of 1,721 agricultural employers in Fall 2017 and 772 agricultural employers in the NRFU study.

Finally, the 2018 NRFU data was merged with the full Fall 2017 NAWS employer sample to calculate employer response rates. A new set of response codes was created that updated the fall 2017 response codes using the NRFU data. For example, if an agricultural employer’s eligibility was unknown in Fall 2017 but found to be eligible in the NRFU data, the final response code was eligible.

Analysis

Table 2 shows the response codes of the NRFU sample after coding the screened NRFU employers. An important issue for the analysis was that the employers in the NRFU sample did not have the opportunity to agree or refuse to participate in the NAWS survey because the survey period had passed. Employers contacted in Fall 2017 had response codes that included whether eligible employers had agreed or refused to participate in the NAWS.

To address this issue, the response rate was calculated for four scenarios:

The initial Fall 2017 cycle without the NRFU data.

All eligible agricultural employers screened during the NRFU are assumed to have refused to allow interviews.

The proportion of eligible agricultural employers screened during the NRFU that allowed interviews is assumed to be the same as among eligible employers in Fall 2017.

All eligible agricultural employers screened during the NRFU are assumed to have allowed interviews.

Based on Table 2, the number for Scenario 3 is derived from the number of interviews (N = 142) and refusals (N = 191) in Fall 2017 and the number of eligible agricultural employers in the NRFU sample (N = 123). The estimated number of interviews in the NRFU sample was 52 [142/(142+191)*123 = 52.45].
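The scenario counts can be sketched by adding the NRFU screening results to the Fall 2017 counts. The dictionary and function names below are hypothetical; the inputs are the Fall 2017 counts (142 interviews, 191 refusals, 570 ineligible, 818 of unknown eligibility) and the NRFU results (123 eligible, 106 ineligible) reported in this appendix.

```python
FALL_2017 = {"interviewed": 142, "refused": 191, "ineligible": 570, "unknown": 818}
NRFU = {"eligible": 123, "ineligible": 106}


def scenario_counts(nrfu_interviews: int) -> dict:
    """Combine Fall 2017 counts with NRFU results, assuming that
    `nrfu_interviews` of the eligible NRFU employers allow interviews
    and the remaining eligible NRFU employers refuse."""
    nrfu_refusals = NRFU["eligible"] - nrfu_interviews
    screened = NRFU["eligible"] + NRFU["ineligible"]
    return {
        "interviewed": FALL_2017["interviewed"] + nrfu_interviews,
        "refused": FALL_2017["refused"] + nrfu_refusals,
        "ineligible": FALL_2017["ineligible"] + NRFU["ineligible"],
        "unknown": FALL_2017["unknown"] - screened,
    }


# Scenario 3: apply the Fall 2017 share of eligible employers allowing interviews.
fall_share = FALL_2017["interviewed"] / (FALL_2017["interviewed"] + FALL_2017["refused"])
expected_interviews = round(fall_share * NRFU["eligible"])  # 52.45 rounds to 52

all_refuse = scenario_counts(0)
same_share = scenario_counts(expected_interviews)
all_allow = scenario_counts(NRFU["eligible"])
print(all_refuse, same_share, all_allow, sep="\n")
```

In every scenario the four categories still sum to the 1,721 employers in the Fall 2017 sample, since the NRFU only moves employers out of the eligibility-unknown category.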

Table 2. Result of Nonresponse Follow Up with a Sample of Employers of Unknown Eligibility.

Response code (eligibility after the nonresponse follow up) | Employers
Eligible | 123
Ineligible | 106
Eligibility Unknown | 543
Total | 772

Response rates were calculated using the formula for the unweighted response rate from the Office of Management and Budget’s Standards and Guidelines for Statistical Surveys.1

$$RRU = \frac{C}{C + R + NC + O + e(U)}$$

Where:

C = number of completed cases or sufficient partials;

R = number of refused cases;

NC = number of noncontacted sample units known to be eligible;

O = number of eligible sample units not responding for reasons other than refusal;

U = number of sample units of unknown eligibility, not completed; and

e = estimated proportion of sample units of unknown eligibility that are eligible.
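A minimal sketch of the unweighted response rate calculation, using the quantities defined above, is shown below. The function name is hypothetical, and the example inputs are made-up round numbers rather than NAWS data. The study does not report the value of e it used, so this sketch is not meant to reproduce the rates in the results table.

```python
def unweighted_response_rate(c, r, nc, o, u, e):
    """Completed cases divided by the estimated number of eligible sample units.

    c, r, nc, o, and u are counts; e is the estimated proportion of
    unknown-eligibility units that are eligible (between 0 and 1).
    """
    if not 0.0 <= e <= 1.0:
        raise ValueError("e must be a proportion between 0 and 1")
    denominator = c + r + nc + o + e * u
    if denominator == 0:
        raise ValueError("no eligible sample units")
    return c / denominator


# Made-up illustration: 50 completes, 30 refusals, 40 units of unknown
# eligibility, half of which are assumed to be eligible.
example_rate = unweighted_response_rate(c=50, r=30, nc=0, o=0, u=40, e=0.5)
print(f"{example_rate:.0%}")
```

The choice of e matters when many units have unknown eligibility: a larger assumed e increases the denominator and lowers the response rate.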

Results

Table 3 shows the response rates for the four scenarios. Of the 1,721 agricultural employers in the Fall 2017 cycle, 142 allowed interviews, resulting in a response rate of 25 percent. If all 123 eligible agricultural employers in the NRFU refused to allow interviews, the resulting response rate would be 20 percent (a decrease of 5 percentage points compared to Fall 2017). On the other hand, if all 123 eligible NRFU respondents agreed to allow interviews, the response rate would be 37 percent. If the proportion of the NRFU respondents allowing interviews was the same as among the Fall 2017 eligible employers, there would be an additional 52 employers allowing interviews and the response rate would be 27 percent.

Table 3. Number of Agricultural Employers from Fall 2017 NAWS Sample.

| Category | Fall 2017, All NRFU Coded as Eligibility Unknown | All Eligible NRFU Refusing Interviews | NRFU Share of Refusals Same as Fall 2017 | All Eligible NRFU Allowing Interviews |
|---|---|---|---|---|
| Interviewed | 142 | 142 | 194 | 265 |
| Refused | 191 | 314 | 262 | 191 |
| Ineligible | 570 | 676 | 676 | 676 |
| Eligibility Unknown | 818 | 589 | 589 | 589 |
| Total | 1721 | 1721 | 1721 | 1721 |
| Response Rate | 25% | 20% | 27% | 37% |
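The counts in Table 3 follow from reclassifying the employers whose eligibility the NRFU resolved (123 eligible and 106 ineligible, per Table 2). The sketch below reproduces the counts only; it does not compute the response rates, since the report does not state the value of e used. The dictionary keys and the helper name `scenario` are illustrative.

```python
# Fall 2017 dispositions (Table 3, first column).
fall_2017 = {"interviewed": 142, "refused": 191, "ineligible": 570, "unknown": 818}

# NRFU outcomes for employers of previously unknown eligibility (Table 2).
nrfu_eligible, nrfu_ineligible = 123, 106


def scenario(extra_interviews):
    """Reclassify NRFU-resolved employers, given how many of the
    eligible NRFU employers are assumed to allow interviews."""
    return {
        "interviewed": fall_2017["interviewed"] + extra_interviews,
        "refused": fall_2017["refused"] + (nrfu_eligible - extra_interviews),
        "ineligible": fall_2017["ineligible"] + nrfu_ineligible,
        "unknown": fall_2017["unknown"] - nrfu_eligible - nrfu_ineligible,
    }


# Eligible NRFU employers allowing interviews at the Fall 2017 share (52).
same_share = round(142 / (142 + 191) * nrfu_eligible)

scenarios = {
    "fall_2017": dict(fall_2017),
    "all_refuse": scenario(0),
    "same_share": scenario(same_share),
    "all_allow": scenario(nrfu_eligible),
}

for name, counts in scenarios.items():
    print(name, counts, "total =", sum(counts.values()))
```

Every scenario keeps the total at 1,721 because the NRFU only moves employers out of the eligibility-unknown category into the other three.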

Conclusions

The purpose of this follow-up study was to determine whether additional effort to contact nonresponding agricultural employers would improve the NAWS response rate. NAWS interviewers contacted 779 nonresponding employers via mail and follow-up telephone calls. At the end of the NRFU data collection period, 231 agricultural employers had responded. Employers responded to both the mail and telephone contact attempts. The mail-only response rate over both mailings was 17 percent, and the response rate in the sample that received mailings combined with telephone follow-up was 35 percent, for an overall NRFU response rate of 30 percent.

Response rates were calculated for four possible scenarios: 1) the initial Fall 2017 cycle without additional effort to contact nonresponding agricultural employers, 2) all eligible agricultural employers who responded to the NRFU refused to allow interviews, 3) eligible agricultural employers who responded to the NRFU allowed interviews in the same proportion as the Fall 2017 eligible respondents, and 4) all eligible agricultural employers who responded to the NRFU allowed interviews.

The results show that a likely lower bound on the response rate is 20 percent, when all eligible NRFU employers refuse, and that the upper bound is 37 percent, when all are assumed to allow interviews. A more probable outcome is that the proportion of eligible NRFU employers allowing interviews would be similar to that of eligible employers in Fall 2017, which yields a response rate of 27 percent.

Comparing the probable NRFU-adjusted response rate of 27 percent to the actual Fall 2017 response rate of 25 percent shows that the NRFU had only a small impact on the NAWS response rate. A similar study done in 2009 found the same result. That is, further efforts to contact nonresponding employers do not substantially affect the response rate.

The persistence of nonresponding employers is likely an artifact of the NAWS employer list construction. The main component of the NAWS employer sampling frame is the BLS list of employers participating in the Federal unemployment insurance (UI) system. In most states, only large employers participate in the UI system. To overcome this bias, JBS enriches the sampling frame with administrative and commercial lists of employers as well as through internet searches. The quality of these lists is not as high as that of the UI list, resulting in large numbers of potentially eligible employers who cannot be contacted.

Sample of the Nonresponse Mail-out Survey

Date

«NawsId» / «ListOrder»

«TradeName»

«Address»

«City», «State» «ZipCode»

To Whom It May Concern:

JBS International is conducting a private follow-up to verify the accuracy of our field operations. A field representative from the National Agricultural Workers Survey attempted to contact you last fall about our survey.

The main objective of the survey sponsored by the U.S. Department of Labor is to identify trends in the make-up of the hired farm workforce. The information obtained helps agricultural employers and grower organizations stay informed about the characteristics of the hired farm workforce and helps public and private agencies better plan programs for farm workers.

JBS International, Inc. is a private research firm that provides professional, technical, and management services for policy analysis and program evaluation to government agencies, education agencies, and the private sector. JBS International, Inc. has no connection to any union organization.