Attachment 2: Logic Model Evaluation Plan

Attachment 2_Logic Model Evaluation Plan_10.29.18.docx

Drug Free Communities Support Program National Evaluation


OMB: 3201-0012

Attachment 2:

Drug-Free Communities Support Program National Evaluation

Logic Model and Evaluation Plan


ICF 9300 Lee Highway Fairfax, VA 22031


Introduction to Evaluation Plan

Collaborative Information Sharing

Logic Model Review and Revision

DFC National Evaluation Research Questions

Evaluation Methods Appropriate to the DFC National

Design Components of the DFC National Evaluation

Data Collection, Instruments, and Measures

Quantitative Data Collection.

Qualitative Data Collection.

Data Storage and Protection

DFC Evaluation Data Management Systems and Tools.

Analysis and Interpretation

Analyzing DFC Recipient Feedback from Technical Assistance Activities

Introduction to Evaluation Plan

The Drug-Free Communities (DFC) Support Program is the largest sustained community youth substance use prevention initiative in the nation. DFC community coalitions were initiated in 1997 with passage of the Drug-Free Communities Act. That support commitment has continued for more than two decades. The Office of National Drug Control Policy (ONDCP) funded 724 DFC community coalition grants in fiscal year 2018; 155 were first-year awards, and 569 were continuation awards. Each DFC recipient is monitored by a government project officer (GPO). The DFC program has supported evidence-based development and success of the DFC coalitions through training and technical assistance (TA), and has supported evaluation of the national program.

To evaluate the work of the DFC coalitions, ONDCP has engaged ICF in a cross-site national evaluation project. The evaluation plan presented here identifies how the ICF evaluation team will work to accomplish the following broad evaluation objectives:

Conduct focused and comprehensive evaluation analyses aligned with key research questions in line with the broad goals of the DFC program;

Conduct ongoing assessment of reporting requirements and measures (i.e., progress reports, core measures and coalition classification tool [CCT]) and propose new requirements/measures when appropriate;

Identify potential best practices to build community capacity for positive environmental and individual change in substance use perceptions and behaviors;

Disseminate practical evaluation information utilizing a variety of formats and understandable and impactful summaries of important information (e.g., reports, presentations, infographics, briefs, dashboards, data visualization);

Provide DFC recipients with technical assistance that supports the provision of high-quality data for the national evaluation and helps DFC recipients make linkages between local and national evaluation.

Collaborative Information Sharing

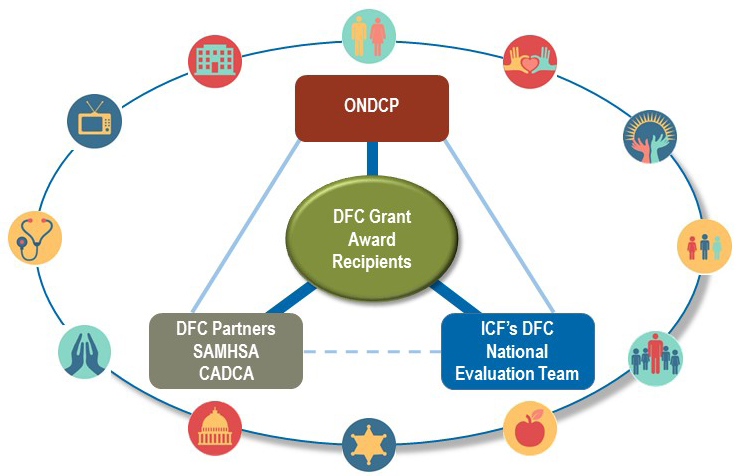

The DFC National Evaluation is grounded in collaboration, in line with how ONDCP has structured the grant. Exhibit 1 provides a broad visual overview of how ONDCP has constructed the DFC program to mobilize community involvement as an essential tool for addressing environmental influences on youth substance use and how, in turn, DFC coalitions change their communities through their work. Community stakeholders are represented in the exhibit by the twelve sectors that must be represented in DFC coalitions (e.g., parents, youth, schools, media, law enforcement).

Exhibit 1: DFC Grant Award Recipients Receive ONDCP Funding and Supports to Facilitate Success and Make a Community-Wide Difference

As shown in the exhibit, ONDCP has invested in the success of DFC coalitions by providing them with a broad spectrum of supports. Government project officers (GPO) monitor the grant and provide leadership to DFC coalitions on the use of SAMHSA’s Strategic Prevention Framework (SPF). The Community Anti-Drug Coalitions of America (CADCA) provides a broad range of training and technical assistance to DFC coalitions intended to increase knowledge, capacity, and accountability of DFC coalitions, in part through the provision of their National Coalition Institute. ICF, as the DFC National Evaluation Team, provides ONDCP, the DFC partners, and DFC coalitions with ongoing support and training with regard to evaluation as well as with high-quality evaluation reports. To realize ONDCP’s vision and priorities, the DFC coalitions must be kept at the center of the program and the supports it provides, including the evaluation team. Indeed, the DFC coalitions and their mobilized community members are the heart of the program, and DFC’s community-by-community success depends on their strength. Accordingly, ONDCP, the DFC Partners (CADCA and GPO) and the DFC National Evaluation Team work together to support coalition and community stakeholder success. ICF’s evaluation plan is grounded in implementing an evaluation that is a) responsive to ONDCP, GPO, DFC recipients, the prevention research and practice community, and public need; and b) accountable to evaluation quality and product objectives through systematic review and revision procedures.

One key aspect of this collaboration is continued participation by the National Evaluation team at monthly DFC Coordination meetings. Our experience suggests that increased opportunities to share findings and seek input regarding the DFC National Evaluation contribute to improved utility of evaluation products and increased dissemination.

An additional aspect of collaboration of importance to the evaluation is ONDCP’s new online system, DFC Management and Evaluation (Me). Over time, DFC Me serves as a communication tool, provides a Learning Center to DFC coalitions, and serves as the environment for DFC coalitions to submit all data required by the grant. DFC Me also provides tools that allow ONDCP and GPO to better monitor DFC recipients’ compliance with grant terms and conditions requirements. DFC National Evaluation Team members are and will continue to be engaged with DFC Me team members in preparing to build data collection systems and will collaborate closely in data quality processes such as data validation checks to be incorporated into DFC Me. DFC Me may also include potential new sources of data through polling or submitting success stories associated with addressing specific issues or utilizing certain types of strategies. Our TA team works closely with the DFC Me team to ensure that DFC coalition members entering data into the system feel confident in their ability to use DFC Me and are able to stay focused on local efforts while feeling confident in their ability to meet grant requirements. DFC coalitions first used DFC Me in 2016.
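The kind of data validation check described above can be illustrated with a minimal sketch. This is not the DFC Me schema or its actual validation logic: the record fields, the allowed vocabularies (drawn from the core substances and measures named in this plan), and the `validate` function are all hypothetical and shown only to make the idea concrete.

```python
from dataclasses import dataclass


@dataclass
class CoreMeasureRecord:
    """Hypothetical shape of one core-measure submission (not the DFC Me schema)."""
    coalition_id: str
    substance: str      # e.g., "alcohol"
    measure: str        # e.g., "past_30_day_use"
    percent: float      # percentage of surveyed youth, expected in 0-100
    sample_size: int    # number of youth surveyed
    survey_year: int


# Assumed vocabularies, based on the core substances and measures named in this plan.
SUBSTANCES = {"alcohol", "tobacco", "marijuana", "prescription drugs"}
MEASURES = {"past_30_day_use", "perceived_risk",
            "parental_disapproval", "peer_disapproval"}


def validate(record: CoreMeasureRecord, current_year: int) -> list[str]:
    """Return a list of readable problems; an empty list means the record passed."""
    problems = []
    if record.substance not in SUBSTANCES:
        problems.append(f"unknown substance: {record.substance!r}")
    if record.measure not in MEASURES:
        problems.append(f"unknown measure: {record.measure!r}")
    if not 0.0 <= record.percent <= 100.0:
        problems.append(f"percent out of range: {record.percent}")
    if record.sample_size <= 0:
        problems.append(f"sample size must be positive: {record.sample_size}")
    if record.survey_year > current_year:
        problems.append(f"survey year in the future: {record.survey_year}")
    return problems


# Illustrative records with made-up values.
good = CoreMeasureRecord("C001", "alcohol", "past_30_day_use", 18.5, 420, 2017)
bad = CoreMeasureRecord("C002", "alcohol", "past_30_day_use", 185.0, 0, 2017)

for rec in (good, bad):
    print(rec.coalition_id, validate(rec, current_year=2018))
```

Returning a list of problems, rather than stopping at the first one, would let a data entry screen show a coalition every issue with a submission at once.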

Outline of Evaluation Plan

Discussion is organized by the following sections:

Overview of Evaluation: A broad overview and graphic representation of evaluation components and integration with the National DFC Support Program.

Evaluation Conceptual Framework: Introduction to a) basic characteristics of the evaluation setting (e.g., coalitions as unit of analysis, developmental process, local adaptation) and features of an evaluation approach appropriate to this setting; b) the complementary design approaches (i.e., empowerment and natural variation designs) that guide our evaluation methods; c) the current logic model, how it is used, and how it will be continuously modified to fit developments in evaluation findings and coalition practice; and d) an initial set of research questions that will link the conceptual framework with data and analysis.

Data Collection and Management: Includes a) data collection procedures; b) collaboration with the DFC Management and Evaluation (Me) system (e.g., data cleaning requirements, data transfer); c) data file documentation, storage, and retrieval; d) links between data sets (e.g., links to legacy data; integration of performance monitoring, CCT, and external sources); and e) creation and management of analysis-ready data sets (e.g., addition, labeling, storage, and retrieval of newly constructed measures; data sets created to support specific analysis approaches such as longitudinal or path analysis tasks). This section also discusses instrumentation and measures, including a) development of instruments and how that process is being improved; b) procedures for balancing continuity, efficiency, and sustained relevance of measures in the evaluation database; c) specific exploratory and confirmatory scaling techniques; and d) mixed-method, natural variation techniques for developing measures with high correspondence to reported coalition experience.

Analysis and Interpretation: Describes analysis tasks and techniques proposed to a) provide findings for process and outcome performance monitoring, including dashboards and annual reports; b) develop multi-component latent measures that describe and allow analysis of different coalition experiences with membership, collaboration, intervention strategies and activities, and capacity building; c) clarify the relationships among use of different substances and between youth perceptions of substance use and their substance use behaviors; d) produce evidence-based findings on coalition processes and actions that effect change in community capacity and youth substance use; and e) understand longitudinal change in coalition processes and outcomes (retrospective and current).

Reporting and Dissemination: Describes ways in which the ICF team will develop and disseminate evaluation findings that a) contribute to coalition and national DFC program decision-making and accountability; b) present clear evidence-based lessons that empower coalitions to organize and implement activities effective for achieving their goals in their community environments; c) clearly disseminate information on how DFC benefits communities and youth; and d) effectively reach diverse community, policy-making, and research audiences.

Routine Data Management Systems and Data Sharing Processes: Provides a summary of steps taken to manage data files and to address requests to use DFC data by outside entities.

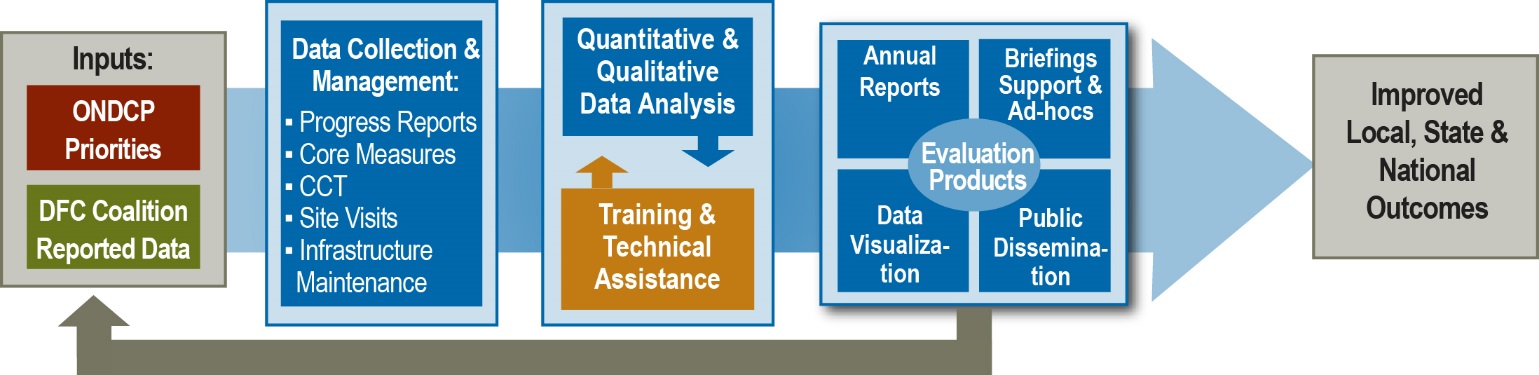

Overview of Evaluation

The evaluation plan framework depicted in Exhibit 2 reflects ICF’s four-phase vision to implement the National Evaluation by supporting DFC coalitions while objectively evaluating the program. Effectively providing this support means a) working closely with ONDCP by providing monitoring and evaluation data and findings on DFC coalition and program performance to ensure accountability and inform program improvement, and b) providing evaluation information to DFC Partners that informs these partners on how to strengthen their monitoring and TA. The framework is intended to result in improved local, state, and national outcomes based on DFC coalitions’ local revisions informed by evaluation products. The ICF evaluation will also develop evaluation products that contribute to the larger community prevention field through evidence-based lessons. Each phase of the DFC National Evaluation Framework is summarized below.

Exhibit 2: Drug-Free Communities National Evaluation Framework

Assess/Clarify ONDCP Priorities and Understanding of DFC Coalitions and DFC Data. Evaluation inputs begin with a careful assessment and understanding of the current and evolving context of ONDCP priorities and DFC coalition data. Establishing clarity on ONDCP’s priority of research questions and the extent to which these prioritized research questions can be addressed with available DFC data is central to ensuring an appropriate evaluation of the DFC program. It is also important to place the evaluation in the context of our understanding of DFC coalitions and their leaders who vary greatly in their experience and comfort level with data collection and data reporting. The combination of new grants awarded each year and a subset of DFC coalitions experiencing leadership turnover each year means that the evaluation team must consistently be prepared to provide basic information regarding DFC data and data entry associated with the evaluation.

Engage in Mixed Method Data Collection. The evaluation uses a mixed-methods approach, including both quantitative and qualitative data and both required reporting data and site visit data. The progress reports submitted every six months through DFC Me include a range of data including budget, membership, and activities/strategies engaged in by the coalition. As part of the progress reports, DFC recipients submit new core measures outcomes data at least every two years. Finally, once each year, DFC recipients complete a survey, the Coalition Classification Tool (CCT), which collects perceptions associated with coalition structure and activity, coalition functioning, and member involvement. While much of this data is quantitative, DFC recipients have ample opportunity to describe their challenges and successes qualitatively. ICF conducts nine site visits each year to obtain more in-depth qualitative overviews of coalition functioning, as well as detailed information on a targeted coalition topic. ONDCP priorities determine which sites to focus on in each year of the evaluation.

Conduct Qualitative and Quantitative Data Analysis and Plan and Deliver Evaluation Relevant TA. In Phase 3, data cleaning and analyses of both quantitative and qualitative data occur in conjunction with the provision of evaluation-relevant TA. Supporting DFC leaders across their range of expertise and comfort levels is crucial to the provision of data that meets National Evaluation needs and standards. The goal is to help DFC grant award recipients understand how quality data can contribute to their own local successes, rather than viewing data reporting requirements as a burden that takes away from coalition efforts. This is part of our empowerment evaluation design. A summary of issues identified during the data cleaning process will be provided to the TA team, who will use it to identify potential areas of needed training. Issues will be updated throughout Phase 3 as analyses identify any additional potential challenges based on how DFC coalitions are reporting data. The intention is to improve the quality of data used in future analyses. At the same time, the TA team will proactively provide training that prepares those new to data submission to successfully complete this task. Our natural variation design (NVD) approach will support rich analysis of the relation of differences in coalition structure and procedures to community prevention capacity and youth substance use and attitude outcomes. Analysis will include a broad range of quantitative and qualitative analytic techniques (e.g., descriptives, t-tests, chi-square/Mann-Whitney U, path analysis, thematic coding).
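As one illustration of the quantitative techniques listed above, the sketch below computes a paired t statistic for the change in a core measure between each coalition's first and most recent report. It is a minimal example under stated assumptions, not the evaluation team's analysis code: the function name `paired_t` is hypothetical, the percentages are made up, and a real analysis would also need to handle weighting, missing reports, and differing survey years.

```python
import math
import statistics


def paired_t(first: list[float], recent: list[float]) -> tuple[float, float, int]:
    """Paired t statistic for change from first to most recent report.

    Returns (mean change, t statistic, degrees of freedom). Each position in
    the two lists must refer to the same coalition.
    """
    if len(first) != len(recent):
        raise ValueError("both lists must cover the same coalitions")
    if len(first) < 2:
        raise ValueError("at least two coalitions are needed")
    diffs = [r - f for f, r in zip(first, recent)]
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation of the changes
    if sd_diff == 0:
        raise ValueError("all changes are identical; t is undefined")
    t_stat = mean_diff / (sd_diff / math.sqrt(len(diffs)))
    return mean_diff, t_stat, len(diffs) - 1


# Made-up percentages of youth reporting past 30-day alcohol use, one per coalition.
first_report = [20.0, 25.0, 30.0, 22.0, 28.0]
most_recent = [18.0, 24.0, 26.0, 21.0, 25.0]

mean_change, t_stat, df = paired_t(first_report, most_recent)
print(f"mean change = {mean_change:.1f} points, t = {t_stat:.2f}, df = {df}")
```

A negative mean change here indicates lower reported use at the most recent report. To obtain a p-value, the t statistic would be compared against a t distribution with `df` degrees of freedom, for example with a statistics package.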

Develop, Finalize and Disseminate Evaluation Products. In Phase 4, evaluation products addressing the key research questions are developed, refined, and disseminated. ICF understands the importance of developing evaluation products that are meaningful to community stakeholders, including policymakers for whom DFC is of interest, while also grounding the products in strong analytic approaches. Annual End-of-Year Reports support a primary ICF mission to increase the usefulness of evaluation information as a tool for policy, accountability, and community prevention practice. Annual report data keeps ONDCP, GPOs, and DFC coalitions informed on individual and overall program progress, and supports data-driven decision-making. ICF’s Data Visualization Team will advance the communication of relevant evaluation outputs to coalitions and the prevention community through continually updated dashboards, infographics, and other visual displays. ICF’s expanded natural variation process analyses will strengthen lessons grounded in DFC coalition experience. We anticipate ongoing and increased public dissemination of these evidence-based lessons, and other DFC products, to the prevention community through social media, conference presentations, published articles, and DFC events. Our ICF team brings the expertise and flexibility to respond efficiently and effectively to ongoing, ad hoc requests related to emerging ONDCP priorities, and to provide support in preparation for briefings or testimony.

DFC Evaluation Logic Model

Development of a coherent evaluation plan begins with understanding of the problem to be solved, typically laid out in the form of a logic model. More concretely, this means identifying the concepts that will fit the problem, and provide a bridge to appropriate data collection and analysis. In this section, we present an overview of the logic model that shapes the conceptual framework for this evaluation plan. Early in implementation of the 2010–2015 evaluation, ICF worked with ONDCP and DFC recipients to review and assess the logic model developed in the preceding evaluation.

The major reason for revision was that the legacy (pre-2010) model was organized around a four-stage classification of coalition maturation (Table 1), which assumed that more mature programs would be more effective.1 This was, in part, a solution for addressing some of the longitudinal challenges associated with the program. Rather than assuming that a DFC recipient in Year 10 of funding would be associated with more positive outcomes, this model sought to describe some DFC recipients as more mature than others and to associate maturation with outcomes. However, the description of these stages was not related to specific measures of coalition activity; neither were the associated levels of competency related to any clear set of criteria. In summary, this concept from prior literature did not empirically encompass or organize coalition data gathered in the evaluation itself. Indeed, the prior evaluation team concluded that the four-stage classification of coalition maturity could not be validated, or reliably measured, using the empirical data gathered through the evaluation.

Table 1: Prior Drug-Free Communities Prevention Coalition Maturation Stages of Development2

| Stage of Development | Establishing | Functioning | Maturing | Sustaining |
| --- | --- | --- | --- | --- |
| Description | Initial formation with small leadership core working on mobilization and direction | Follows the completion of initial activities, focus on structure and more long range programming | Stabilized roles, structures, and functions; Confronted with conflicts to transform and “growing pains” | Established organization and operations, focus on higher level changes and institutionalizing efforts |
| Level of Competency to Perform Functions | Primarily learner | Achieving proficiency; still learning and developing mastery | Achieved mastery; learning new areas; proficient in others | Mastery in primary functions; capacities in the community are sustainable and institutionalized |

In summary, the maturation concept developed in the legacy evaluation model did not reflect an assessment of correspondence to coalition activities, nor did it guide the development of specific measures. The ICF evaluation team will re-examine the conceptualization of four stages of development that was used in this earlier work. Decisions will be finalized with regard to addressing both year of recipient and perceived maturation as measured by required reporting. If deemed appropriate, a revised stage model more closely aligned with DFC coalition data and experience will be proposed. Our model will be grounded in DFC coalition experience, and in research on similar community coalitions.

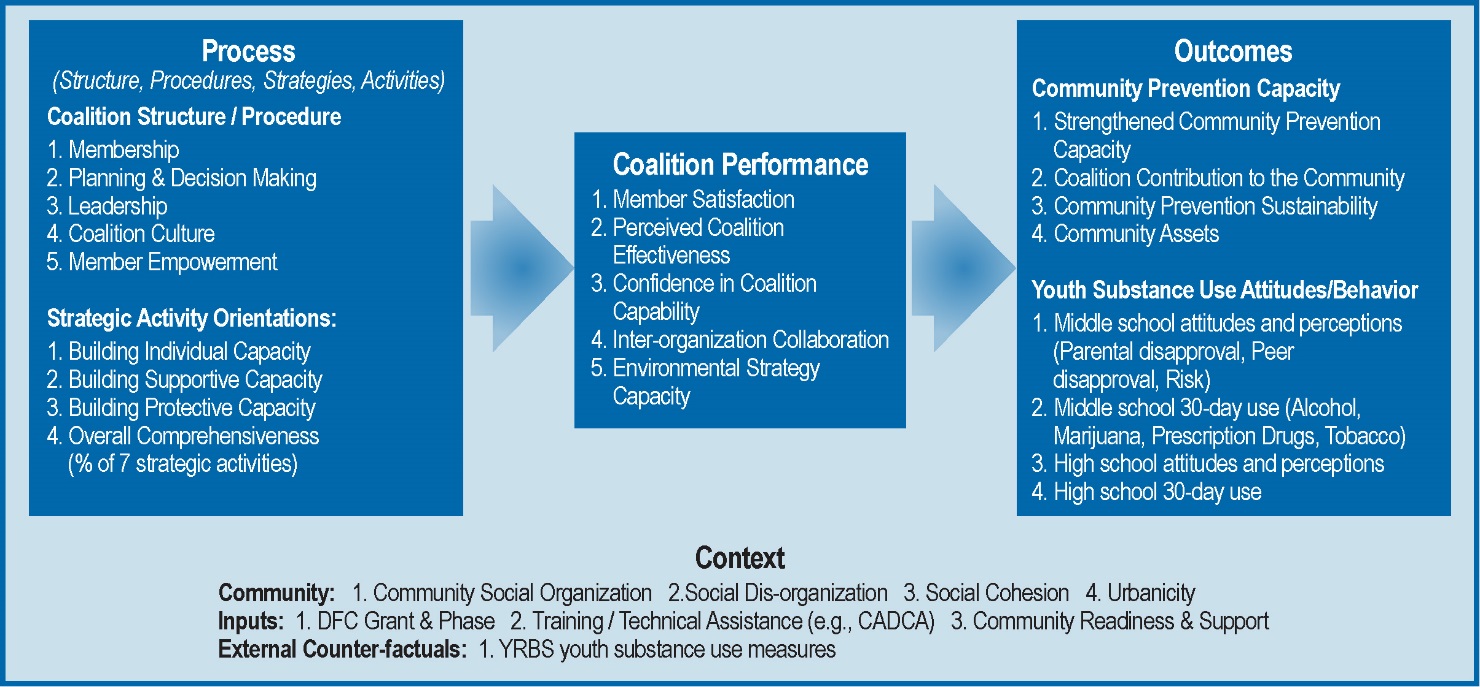

A new logic model (Exhibit 3) resulted from ICF’s discussions with ONDCP, GPO, and DFC recipients. Drafts were reviewed at recipient meetings, and feedback was incorporated into the final model. This model made important improvements including: 1) incorporating current evidence-based understanding of major components of coalition function; 2) using a broad and comprehensive format with clear line logic; and 3) using language that facilitated communication with recipients, policy makers, and practitioners. This creates a conceptual framework in which all DFC coalitions can fit; an emphasis on generating evidence-based lessons based on coalition experience; and overall attention to ensuring correspondence to coalition experience. The logic model provides a central reference point for the evaluation. It can be used for communicating an understandable evaluation framework to recipient and other stakeholder audiences in briefs and presentations. It also serves to link the concepts in the model to measurement and analysis plans. The Measurement section included in this plan was developed based on specifying operational concepts within domains (e.g. context, coalition structure and processes, implementation of strategies and activities, community and population level outcomes), and within dimensions (member capacity, coalition structures, coalition processes, information and support, policies/environmental change, programs and services, community environment, behavior and consequences). Similarly, proposed analyses were aligned with the logic model.

Exhibit 3: The 2010–2020 Evaluation Logic Model

Theory of Change: Well-functioning community coalitions can stage and sustain a comprehensive set of interventions that mitigate the local conditions that make substance use more likely.

Logic Model Review and Revision

The ICF Evaluation Team will make revisions to the logic model as appropriate. The objective is to maintain continuity with the existing model, but improve and update (as necessary) details within it. The need for revisions will be determined through continuous review of emerging findings that have implications for specification of dimensions (e.g., member capacity) and constructs (e.g., member competencies) within major domains (e.g., Coalition Structures and Processes). These constructs may be reorganized to better represent the actual structures, procedures, and strategies put in place by DFC coalitions and documented through the evaluation. The evaluation team will also revise the measurement and analysis models as necessary to guide and document the need for new measures and analyses.

The DFC National Evaluation Team will use the logic model to guide, review, and track evaluation activities and products. Exhibit 4 provides a beginning point for this development based on our understanding of currently developed DFC evaluation measures. The team will develop revised Measurement Logic Models and Analysis Logic Models that link validated measures with high correspondence to coalition experience.

Analysis Logic Models will also be developed to clearly represent the relationship of planned analyses to the descriptive needs and relational line logic of the DFC National Evaluation Logic Model. These products, and the process of producing them, will help the evaluation team systematically optimize relevance and rigor in implementing a useful evaluation. For example, current data collection relies largely on perceptual self-report for characterizing community context (i.e., responses to CCT). Contrary to expectation, few inter-relations of contextual characteristics and other logic model constructs have been found and reported in analyses to date. As discussed further below, ICF will develop more adequate measurement of community context in the ongoing DFC National Evaluation, plausibly through archival and census data.

Exhibit 4: Measurement Logic Model

DFC National Evaluation Research Questions

Revising and agreeing on explicit research questions is a priority task upon contract award. As an integrating component of the evaluation plan, the operational research questions will be used as a link between new or revised requirements that were included in the ONDCP request for proposals for a National Evaluation (e.g., revisions in research questions, data requirements), the logic model proposed by ICF, proposed changes in data collection and instrumentation, and reporting. Table 2 provides an overview of key proposed evaluation research questions.

Table 2. Key Evaluation Questions

To what extent do communities with DFC coalitions experience reduced underage substance use, improved protective perceptions concerning substance use among youth, and improved community prevention capacity?

| Analysis Logic Model Domain | Operational Question | Measurement |
| --- | --- | --- |
| Substance Use Outcomes | To what extent are there changes in reported past 30-day use over time for each of the core substances (alcohol, tobacco, marijuana, prescription drugs): Across all DFC coalition communities ever funded? Within currently funded DFC recipients? From first to most recent report? From most recent to next most recent report? | Core Outcomes; Year of Coalition; Time of Collection |
| | To what extent is change in past 30-day use of one substance related to change in use of other substances? | |
| | What is the trend in substance use outcomes over time across coalition communities? Are changes sustained? Do changes peak and then level off? Are changes associated with year of data collection? Associated with year of DFC recipient? | |
| Protective Perceptions Outcomes | To what extent are there changes in reported youth perceptions of substance use (risk of use, parental disapproval, peer disapproval) over time for each of the core substances (alcohol, tobacco, marijuana, prescription drugs): Across all DFC coalition communities ever funded? Within currently funded DFC recipients? From first to most recent report? From most recent to next most recent report? What are the differences in improvements for different protective perceptions (health risk, parental disapproval, peer disapproval) across coalition communities? | Core Outcomes (Perceived Risk, Perceived Peer Disapproval, Perceived Parent Disapproval); Year of Coalition; Time of Collection |
| | To what extent are changes in perceptions associated with one another? Associated with past 30-day use? | |
| | What are the trends in perception outcomes over time across coalition communities? Are changes sustained? Do changes peak and then level off? Are changes associated with year of data collection? Associated with year of DFC recipient? | |
| Community Capacity Outcomes | To what extent does change occur across components of community prevention capacity (e.g., policy assets, other assets, improved organizational collaboration, strengthened coalition, community readiness)? | Capacity building activities, Membership numbers and level of involvement, site visit interviews |
| | To what extent is change in one community capacity component associated with change in others? | |
| | What are trends in community capacity outcomes over time? | Capacity building activities, Membership numbers and level of involvement, site visit interviews |
| Relation Between Components Of Outcomes Effectiveness | To what extent do coalition communities that experience improvement in one domain of outcomes also experience improvement in others? | Core Outcomes; Membership Capacity |

What Structures, Procedures, Capacities, And Strategies Characterize Coalitions In Communities That Experience Positive Substance Abuse And Community Capacity Outcomes? |

|||

Analysis Logic Model Domain |

Operational Question |

Measurement |

|

Structure And Procedure |

What are the patterns of membership (e.g., breadth and diversity, numbers, member activity level, member capacity) across coalitions? |

membership rosters, capacity building activities |

|

What are the patterns of coalition organizational structure (e.g. fiscal agent; funding sources and amount; degree of formalization, steering committee structure, role, membership, and level of activity; committee / workgroup structure and role) across coalitions? |

budget, membership, membership rosters, capacity building activities, qualitative interviews |

||

What are the patterns of organizational procedure (e.g. leadership style; degree of decision centralization; degree of planning emphasis; communication adequacy) across coalitions? |

|||

Relation Between Components Of Structure And Process |

Are there identifiable types of coalitions (defined by structure and process components that tend to vary together)? |

budget, membership, membership rosters, capacity building activities, qualitative interviews |

|

Relation Of Structure And Procedure To Outcomes |

To what extent do specific components or patterns of structure and process characterize coalitions in communities that experience more positive outcomes? |

budget, membership, membership rosters, capacity building activities, qualitative interviews, outcome measures |

|

Planning And Implementation Of Coalition Structures And Procedures |

How do coalitions plan and implement structures and procedures? What are perceived to be important challenges, opportunities and successes? |

budget, membership, membership rosters, capacity building activities, qualitative interviews |

|

Strategies And Activities |

What are the patterns of use and emphasis for each of the CADCA-identified community prevention strategies across coalitions? |

planning, efforts allocation, implementation, strategy activity details |

|

What are the patterns of activities and outputs associated with each of these strategies across coalitions? |

|||

What is the degree of emphasis (effort, resources, implementation quality) that coalitions place on each of the strategies? |

efforts allocation, implementation, strategy activity details, budget, qualitative interviews |

||

To what extent do coalitions that use / emphasize one strategy also emphasize others (e.g., are there larger strategic approaches that differentiate coalitions)? |

|||

Relation Between Components Of Strategies And Activities |

Are there identifiable types of strategic approaches (defined by strategy and/or activity components that tend to vary together)? |

implementation, strategy activity details, budget, qualitative interviews |

|

Relation Of Strategies And Activities To Outcomes |

To what extent do specific (or specific patterns of) uses and emphases on strategies and activities characterize coalitions in communities that experience more positive outcomes? |

implementation, strategy activity details, budget, qualitative interviews, outcome measures |

|

Planning And Implementation Of Coalition Strategies And Activities |

How do coalitions plan and implement strategies and activities? What are perceived to be important challenges, opportunities and successes? |

strategy activity details, budget, qualitative interviews |

|

Coalition Capacity |

What are the patterns of coalition climate (e.g., coherence, inclusiveness, empowerment) across coalitions? |

CCT, qualitative interviews |

|

To what extent are coalitions strong in one component of coalition climate also strong in others? |

|||

To what extent do coalitions use and emphasize coalition capacity building activities (CADCA training, internal training, resources) for staff / members / collaborators? |

membership, membership rosters, capacity building activities, qualitative interviews, CCT |

||

To what extent do coalitions that use or emphasize capacity building activity also use or emphasize others? |

|||

To what extent do coalitions use systematic continuous improvement techniques (needs assessment, monitoring and evaluation, data based decision making, action plans, evidence-based practices)? |

capacity building activities, qualitative interviews, CCT |

||

To what extent do coalitions that use one continuous improvement technique also use others? |

|||

To what extent do coalitions maintain positive external relations (e.g., resources, shared tasks, attendance at community events, “go to” organization) in the community? |

membership, membership rosters, capacity building activities, qualitative interviews, CCT |

||

To what extent do coalitions that maintain positive external relations in one area also maintain them in others? |

membership, membership rosters, qualitative interviews, CCT |

||

Relation Between Components Of Capacity |

To what extent are coalitions strong in one component of capacity also strong in others (defined by strategy and/or activity components that tend to vary together)? |

membership, membership rosters, capacity building activities, qualitative interviews, CCT |

|

Relation Of Coalition Capacity To Outcomes |

To what extent do specific components (or specific patterns) of coalition capacity characterize coalitions in communities that experience positive outcomes? |

membership, membership rosters, capacity building activities, qualitative interviews, CCT, outcome measures |

|

Planning And Implementation Of Coalition Capacity |

How do coalitions plan and implement capacity building activities? What are perceived to be important challenges, opportunities and successes? |

capacity building activities, qualitative interviews, CCT |

|

How Do Coalition Structure, Procedure, Strategies, Capacity, And Outcomes Differ Across Communities And Coalitions With Different Context Characteristics? |

|||

Analysis Logic Model Domain |

Operational Question |

Measurement |

|

Context |

What are the patterns of community characteristics (e.g., urbanicity, population size, diversity, SES, severity/type of substance use problems, strength of community identity, existing prevention assets) across coalitions? |

income, budget, SES, Census data, outcome measures, qualitative interviews |

|

To what extent do communities that have one characteristic also have others? Are there clear community types with shared characteristics? |

|||

What are the patterns of coalition history and context characteristics (time in existence, years of DFC funding, other sources of funding, established place in community, strong institutional relations, prior non-DFC federal funding, existing programs) across coalitions? |

year in grant, budget, outcome measures, qualitative interviews |

||

To what extent do coalitions that have one characteristic also have others? Are there clear types of coalition history with shared characteristics? |

year in grant, strategy detail, outcome measures, qualitative interviews |

||

Relation of Context to Coalition Structure, Process, Strategies, Capacity, and Community Outcomes |

To what extent are components (or patterns) of context related to components (or patterns) of coalition structure, process, strategies, capacity, and to community outcomes? |

year in grant, strategy detail, outcome measures, qualitative interviews |

|

Evaluation questions are an integral part of the conceptual glue that binds evaluation concepts (theory), coalition experience (empirical reality), study purpose (usefulness) and evaluation method and techniques. The ICF team will map questions to the revised logic model, DFC evaluation data, and recipient / ONDCP priorities and develop a revised list as necessary.

Evaluation Framework

Our approach to this evaluation is to move ONDCP to a progressively stronger evidence base, while identifying best practices and providing more practical results for the field. Ultimately, a central feature of the evaluation plan is to address the extent to which the DFC grant recipients are achieving the goals of the program:

Establish and strengthen collaboration among communities, public and private non-profit agencies, as well as Federal, State, local, and Tribal governments to support the efforts of community coalitions working to prevent and reduce substance abuse among youth.

Reduce substance abuse among youth and, over time, reduce substance abuse among adults by addressing the factors in a community that increase the risk of substance abuse and promoting the factors that minimize the risk of substance abuse.

Traditional evaluation designs are not adequate to meet the information objectives of the National Evaluation of the DFC Support Program. Several characteristics of the DFC setting create a need for innovative techniques. The evaluation needs to generate findings and lessons about complex systems (coalitions, communities, behavioral health systems, educational systems, and more), not simply individual behavior. This systems focus is central to the advances in prevention thinking associated with the environmental perspective, and to community prevention itself. In addition, these systems are intended to be adaptive, developing collaborative procedures and intervention strategies that fit community need and context. The systemic complexity of coalitions as units of analysis, combined with the intentional variation in interventions across units of analysis (coalitions and communities), presents serious challenges for conducting experimental and quasi-experimental designs. Furthermore, as the introduction to this plan emphasizes, the National DFC Support Program has been designed as a “learning system” that combines evidence-based generation of lessons (evaluation) with clear messaging and delivery of evidence-based information and lessons to DFC coalitions and the full community prevention audience. This supports building the capacity of community participants to adapt and apply information and lessons to fit local conditions. This evaluation plan outlines procedures and methods that will provide information and lessons consistent with this learning system concept. This section summarizes these methods and the reasons for using natural variation design (NVD) and empowerment evaluation.

Evaluation Methods Appropriate to the DFC National Evaluation

Evaluation methods and products that meet the information needs of DFC recipients must be firmly grounded in the real world in which coalitions operate. These real world environments are characterized by complexity and diversity, which means the evaluation reality they represent is: a) Nested: contexts (e.g., community) condition the pattern and meaning of what happens within them (e.g., coalition barriers, opportunities, and actions); b) Multi-variate: social interventions have multiple components and their application is impacted by multiple contextual factors; c) Inter-connected: presence or strength of some conditions alter the state or influence of others; procedures and actions must consider multiple factors and complex chains of influence; and d) Evolving: problems and available solutions change over time, adaptively, at multiple, inter-related levels. Many evaluation approaches are not well designed to accommodate this reality.

Evaluation experience with community prevention stretching back to SAMHSA’s Community Partnerships demonstration more than 25 years ago has provided lessons that establish additional premises upon which this plan builds. Evaluation lessons from Community Partnerships evaluations, both national and local, were summarized as the “three R’s of learning system evaluation” (Springer & Phillips, 1994). The R’s stood for establishing a new and appropriate evaluation role that met the needs of community prevention; an emphasis on producing information and lessons relevant to coalition needs and action environments; and, importantly, developing and applying rigorous evaluation methods that are suited to the community prevention and coalition environment. Close attention to each of these R’s will be evident throughout this plan. Each R is briefly elaborated below.

Evaluation Role: Traditionally, objective evaluation required an arm’s-length relation between evaluation and practice, particularly the program or initiative being evaluated. In a learning system, as envisioned in the National DFC Support Program, evaluation must instead be involved from the input and data collection end of the evaluation (e.g., collaboration in defining information priorities, developing feasible instrumentation, understanding the program environment) through to the dissemination and utilization stage (technical assistance, training, useful lessons).

Evaluation Relevance: Relevance in evaluation implies usefulness: evaluation products that can actually be put into effective practice. Evidence-based practices (EBP) are one manifestation of the recognized need for relevance. However, this plan represents a specific perspective on EBP products. Specifically, the ICF team will produce lessons that are empowering rather than prescriptive. One of the missteps in EBP work has been an overly prescriptive orientation that defined interventions that should be adopted with strict fidelity, rather than identifying important principles, considerations, and foundations that guide adaptation of interventions to specific environments. Our orientation to developing evidence-based lessons is to give practitioners the skills and tools they need to make effective decisions rather than to tell them what decisions they should make. This is again in line with the DFC perspective that local problems require local solutions.

Evaluation Rigor: In many ways, evaluation method is the most fundamentally challenging reorientation needed to meet the evaluation needs of community prevention and other systems-level change strategies (e.g., whole school reforms, justice system reforms). Rigor here means evaluation that systematically produces verifiable and applicable lessons, as opposed to defining rigor entirely as adherence to a research design logic and technique. The principles of NVD are used throughout this plan to ensure rigor in producing useful information.

Design Components of the DFC National Evaluation

ICF’s proposed evaluation design combines two perspectives: empowerment evaluation (Fetterman, 1994; Fetterman, 2012; Fetterman, Rodriguez-Campos, Wandersman, & O’Sullivan, 2014) and natural variation (Calder et al., 1981; Springer and Porowski, 2012).

Empowerment Evaluation

ICF is committed to an empowerment evaluation approach in line with the use of the Strategic Prevention Framework (SPF). Empowerment evaluation aims to increase the probability of achieving program success by providing training and technical assistance to stakeholders, equipping them with tools for assessment, implementation, self-evaluation, and sustainability. It encourages the integration of evaluation as a key component of program planning and management.

Relying upon an empowerment framework is not simply a conceptual idea; it is integrated into the entire evaluation model. Through hands-on technical assistance, training, and comprehensive data analysis and reporting, the ICF team will ensure that all stakeholders (local coalitions, project officers, and the academic community) have valid and reliable ways to monitor their progress, report to funders, and assess results. This approach both increases support for the evaluation within an organization and builds capacity to sustain evaluation activities beyond the evaluation period. In general, our intent in all activities is to create the highest evaluation output with the lowest burden possible. The goal is always to get to the point where an organization can sustain its own local evaluation processes while meeting its federal reporting requirements.

Natural Variation Design

Natural Variation Design (NVD) is grounded in the reality that social interventions operating in their natural environments are characterized by complexity and diversity. A basic premise of NVD evaluation is that failure to develop adequate correspondence between the data and the natural setting weakens the applicability of findings. Evaluation design has typically treated this fundamental reality as a threat to generating knowledge. In contrast, NVD treats this complexity as an opportunity to generate findings that are more relevant to real-world application. Accordingly, our methods account for and explain the effects of complexity, rather than attempt to remove its consideration through experimental control (Springer and Porowski, 2012; Sambrano, Springer, et al., 2005). Natural variation thinking is not an explicit set of design criteria and research techniques. Rather, it is an evaluation perspective that focuses on the fit between technique (data collection, measurement, analysis, interpretation) and the configuration of the real-world phenomena under study. As stated in a seminal treatment of comparative systems research, “analysis of natural variation across different systems offers an alternative logic more suited to the over-determined variation in naturally occurring environments, and the adaptation of interventions to different settings” (Przeworski & Teune, 1978).

The application of empowerment evaluation and natural variation design approaches to evaluation guides our plan for effectively fulfilling the three R’s: an appropriate evaluation role, relevance in evaluation questions and findings, and rigor in the evaluation method best suited to the purpose and utility of the DFC National Evaluation.

Longitudinal Design

Finally, the design of the DFC National Evaluation must take into account the longitudinal nature of the DFC Grant program. DFC grant award recipients receive an initial five-year grant in most cases. Some DFC recipients then receive a second five-year award (ten years total). The evaluation must address this longitudinal aspect of the data provided by these recipients. Issues associated with the longitudinal design include the following:

Each year, new DFC recipients are added to the active list while the period of award ends for some existing DFC grants. In any given cohort, there are DFC recipients in each year of the grant award. However, at any given time the number of DFC recipients in a specific year of grant award is relatively small.

Some Year 6 awards are made continuously from Year 5, while other DFC recipients experience a lag in time between Year 5 and Year 6.

Many recipients experience attrition in DFC leadership and staff, as well as in sector membership, over the course of the grant. In some cases, leadership changes may result in a coalition losing ground (e.g., a Year 4 recipient perceives itself as taking steps similar to those of a Year 1 recipient).

The youth population that DFC recipients seek to influence is constantly changing. Recipients collect DFC Core Measure data from students every two years, with data collected primarily in Grade 6 to Grade 12. Grade 6 students would reach Grade 12 in Year 7 if a DFC recipient is continuously awarded.

Core Measure data are required to be collected every two years from at least three grade levels. DFC recipients vary in the year in which data are collected as well as the grade levels data are collected from. This means that not all recipients have comparable data from the same year.

Local, state, and national context with regard to substance issues each can change in any given year in ways that may impact outcomes as well as the focus of implementation by DFC recipients (e.g., changing marijuana laws).

In some cases, the longitudinal design is simply something that must be explained in order to interpret data. However, some longitudinal questions can also be addressed. For example, do outcomes improve continuously in communities over time, or are there peaks and valleys in changes in the core outcome measures?

Data Collection, Instruments, and Measures

The empowerment and NVD evaluation approaches rest on designing and implementing appropriate data collection, instrumentation, and measurement tools and procedures. Data provide the link between concepts and observation, and to support useful evaluation that link must produce strong correspondence with the real-world setting. Accordingly, DFC evaluation data collection must emphasize: a) capture of data broadly inclusive of process and outcome observation for the policy, program, and/or practice setting(s) being evaluated; b) instrumentation that produces indicators reflecting relevant variance in these concepts and processes; c) data that support measurement of context; and d) strategic application of mixed data collection methods. Data collection and management are critical to supporting timely, accurate, and useful analysis in a large, multi-level, multi-site study such as the DFC National Evaluation. In this section, we present our plans for data collection (the procedures through which data are created, cleaned, and managed); instrumentation (the surveys, interview questions, on-line monitoring instruments, and coding procedures through which raw data are gathered); and measurement (data manipulation and analysis procedures that transform raw data into reliable and valid representations of concepts relevant to the evaluation logic model and analysis).

Data Collection

Data currently being reported by DFC coalitions includes twice annual progress reports, core measure data (new data required to be reported every two years in at least three grades), and the annual Coalition Classification Tool. One of our primary goals is to obtain high quality data while minimizing reporting burden and improving user-friendliness of the data submission experience.

Response burden is a serious issue in any evaluation. After all, if a coalition is overburdened with data collection, it will lose focus on its core mission of reducing substance use and its consequences among youth. We also believe that additional response burden is only acceptable when it produces data that are manageable, measurable, and, most importantly, meaningful. Prior to adding any new data collection, the DFC National Evaluation Team will first determine whether needed data are available through public use data files. In the absence of public use data, data needs will be addressed through the progress report.

Quantitative data are collected primarily through a web-based data collection system developed and operated by an external contractor. The system uses instrumentation developed by the evaluator in collaboration with ONDCP, and with review and approval of OMB. In 2015, the external contractor and the online system changed from the legacy COMET system to DFC Me. Qualitative data will be provided through open-ended responses to items in DFC progress reports and site visits to nine DFC coalitions each year. In 2018, revisions to data collection measures will be included in the OMB submission for renewed approval.

Quantitative Data Collection.

The DFC National Evaluation Team provides the DFC Me team with appropriate data quality checks to incorporate into the online system, by using our analyses to define appropriate validation points for setting these checks (e.g., setting cut points for outliers in various items). The DFC National Evaluation Team also works alongside the DFC Me team to ensure all submitted data are provided in a format that supports quickly moving into routine data management steps.
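As an illustration of how cut points for outliers might be derived from prior analyses, the following is a minimal sketch only. The plan does not specify the rule used to set validation points; the Tukey fence rule (1.5 times the interquartile range) and the function names `outlier_cut_points` and `is_outlier` are assumptions made for this example.

```python
import statistics

def outlier_cut_points(historical_values, k=1.5):
    """Derive lower and upper cut points from previously reported values.

    Assumption: Tukey fences (quartiles +/- k * IQR) stand in for whatever
    rule the evaluation team actually applies.
    """
    q1, _median, q3 = statistics.quantiles(historical_values, n=4)
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr

def is_outlier(value, cut_points):
    """Return True when a newly submitted value falls outside the cut points."""
    lower, upper = cut_points
    return value < lower or value > upper

# Hypothetical history: number of participants reported per activity.
history = list(range(1, 101))
cuts = outlier_cut_points(history)
for submitted in (50, 500):
    status = "flag for review" if is_outlier(submitted, cuts) else "accept"
    print(submitted, status)
```

A flagged value would prompt a confirmation message in the online system rather than an automatic rejection, since unusual values can be legitimate.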

The DFC National Evaluation Team will also be directly involved in data collection through training and TA that supports recipients in understanding the data requests and content. The primary goal in this area will be to maintain the quality of the current system and make incremental improvements in areas that may require attention. For example, training and TA materials regarding representative sampling techniques, and other ways of improving the representativeness of the data, will be developed.

Survey Review

During the previous DFC National Evaluation, the ICF team established the survey review process to facilitate the collection of more accurate data for the National Evaluation by guiding coalitions through core measures data reporting. DFC coalitions are required to submit surveys into DFC Me before they are able to enter core measure data into progress reports. Upon receiving surveys, the team will create individualized survey review guides, walking coalitions through which questions were approved, and providing instruction on how to submit data for each core measure based on the submitted survey. Each survey review goes through a quality control process where a more experienced reviewer checks the guide for any errors before it is sent to the recipient. This process ensures that all survey review guides are complete and accurate, and helps the TA team identify areas of needed training for survey reviewers. The survey review process helps coalition staff ensure that they maintain compliance with grant requirements and, beyond compliance, the process helps them identify typos and issues before survey implementation.

Core Measures

The main focus of this evaluation is on results from the core measures (i.e., 30-day use, perception of risk or harm, perception of parental disapproval, and perception of peer disapproval) for alcohol, tobacco, marijuana, and illicit prescription drug use (using prescription drugs not prescribed to you). Prescription drugs were first introduced as a core measures substance in 2012. DFC recipients are required to report new data for these measures every two years by school grade and gender. The preferred population is school-aged youth in grades 6 through 12, including at least one middle school and at least one high school grade level. Beginning in 2019, DFC recipients will also have the option to report core measure data relevant to heroin and methamphetamines. In addition, we have recommended that core measure data no longer be submitted by gender. This requirement placed a burden on grant recipients as local data are often by grade level and not gender, and the data proved to have little added value to the DFC National Evaluation.

Progress Report

Progress Report data are collected twice annually (in February and again in August). A broad range of elements are collected in the Progress Report (including some data utilized by GPOs outside of the DFC National Evaluation). Broadly, these data are aligned with the Strategic Prevention Framework. Data include information on budget, membership, coalition structure, community context, strategies to build capacity, and planning. In addition, a large focus of the Progress Report is on strategy implementation. Implementation data include information about number of activities by strategy type, number of participants (youth and/or adult as appropriate), substance(s) targeted by the activity, and which sectors were engaged in the activity.

Coalition Classification Tool

The CCT is a survey collected annually. A major revision to the CCT is being proposed in the 2018 OMB submission. In the revised CCT, the majority of the questions (65) ask the DFC coalition to think over their work in the past year and to indicate how strongly they agree with each of the statements. The scale for these items is Strongly Agree, Agree, Disagree, Strongly Disagree, or Not Applicable. Proposed items fit in a range of subscales including building capacity, Strategic Prevention Framework utilization, data and outcomes utilization, youth involvement, member empowerment, and building sustainability.

Coalition Structure will be assessed in the CCT by nine items asking the DFC coalition to indicate across a range of activities who is responsible for carrying out the activity: Primarily Staff, Staff and Coalition Members Equally, or Primarily Coalition Members. These items are intended to build on our understanding of the extent to which DFC recipients are building community capacity through engagement of coalition members.

The CCT will continue to assess the extent to which the DFC grant has enabled the recipient to put into place Community Assets. Based on analyses of prior responses, the ICF team has reduced the number of assets identified from 45 to 22. However, the proposed revision also includes the option to add up to 10 community assets to be assessed annually. This flexibility will allow the DFC National Evaluation Team and ONDCP to identify innovative practices, through site visits and/or review of qualitative responses, and then to better understand the extent to which a broad range of DFC recipients engage in those practices.

Qualitative Data Collection.

The DFC National Evaluation includes conducting nine annual site visits with individual DFC coalitions. A first step for the site visits will be a discussion with ONDCP regarding current priorities that may guide site visit selection. As noted in the PWS, DFC coalitions that engage in work with special populations are a priority to ONDCP. ICF’s next step will be to assess available data to identify potential sites to include in the visits. When appropriate, this list will be shared with ONDCP for final approval. ICF successfully engaged in this process in the past to select coalitions based on indicators of being a high-performing DFC coalition, work in states with legalized marijuana laws, and work in states on international borders.

DFC coalition participation in site visits is voluntary. Therefore, the proposed list of DFC coalitions will be longer than nine so that the team can quickly move to the next DFC coalition on the list following any refusals. Each site visit will last approximately 1-2 days in order to meet with the broad range of sector members engaged with the DFC coalition. ICF provides DFC coalitions that agree to participate in site visits with a sample schedule to facilitate their assistance in setting up the visit.

Data Management

ICF maintains an integrated database that is a single, horizontally organized system that supports ready output to custom SAS and SPSS analysis data sets. Data sets are updated each time new data collection occurs. The data set is accompanied by a Data Manual that includes: 1) a standard set of variable IDs and labels that will facilitate team data development and analysis tasks; 2) a visual map of the full data set contents by year and cohort; 3) a summary of changes made in data over time, and the time points at which these changes were implemented; and 4) instructions on requesting output of data sets for particular purposes. Appropriate data will be updated in software that supports data visualization (Tableau). These systems improve capacity to quickly meet study needs, including quick turnaround of special analysis requests, providing regular dashboard updates, producing annual reports on an accelerated schedule, and providing timely presentations of recent DFC data.

Data Storage and Protection

DFC data are housed on ICF’s servers, and only the analysis team has authorized access to these data. The data collected as part of this evaluation are the property of ONDCP, and data will be handed back to ONDCP or destroyed at their request. In data reporting, the confidentiality of respondents will be protected, and cell sizes of less than 10 will not be reported to further protect respondents from identification. While we consider this a low-risk project from a human subjects protection perspective, we are nonetheless taking strong precautions to ensure that data are not mishandled or misused in any way.
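The small-cell reporting rule described above can be sketched as follows. This is an illustrative example only: the function name `suppress_small_cells`, the dictionary layout, and the use of `None` to mark a suppressed cell are assumptions, not the evaluation team's actual reporting code.

```python
MIN_CELL_SIZE = 10  # cells with fewer than 10 respondents are not reported

def suppress_small_cells(cell_counts, min_cell_size=MIN_CELL_SIZE):
    """Return a copy of the table with small cells replaced by None.

    cell_counts maps a cell label (hypothetical, e.g. a grade level) to the
    number of respondents in that cell.
    """
    return {
        label: (count if count >= min_cell_size else None)
        for label, count in cell_counts.items()
    }

# Hypothetical respondent counts by grade for one report table.
counts = {"Grade 6": 42, "Grade 7": 9, "Grade 8": 10}
print(suppress_small_cells(counts))
```

Under this rule a cell of exactly 10 is still reported, because only cell sizes of less than 10 are withheld.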

DFC Evaluation Data Management Systems and Tools.

In general, data will undergo quality checks and cleaning processes. Data management also addresses data storage and data security. Finally, data management processes address development of a data dictionary and data codebooks documenting all processes for managing data over time. Key to the data management task is communication: issues identified during data management processes will be shared with the TA team so that they can potentially be addressed, and with the DFC Me team where additional validation checks may appropriately be added to the system to further support data quality.

Analysis and Interpretation

The proposed analyses will build on findings that DFC-funded communities have experienced significant reductions in youth substance use (ICF International, 2018). We will also continue to conduct analyses of long and short-term change in DFC core measures as well as descriptives associated with sector participation and strategy implementation. This will maintain the continuous record of DFC outcomes over time.

In addition to maintaining continuity with the record of past DFC coalition accomplishments and community experience, the ICF Evaluation Team regularly expands analysis of data based on key evaluation research questions. Priority analysis objectives are a) to better understand how coalitions are organized and the similarities and differences between them, in particular the configuration of coalition membership and the networks of active members that drive their activities; b) to better understand the intervention strategies that coalitions use, the differences between them, and their relative degree of use by coalitions; and c) to better understand the decision-making and leadership processes that link coalition membership and activities. Lessons generated concerning these topics, why alternative processes and strategies are selected, and how they are implemented will provide practical information useful to community prevention practitioners.

The analyses utilized in the DFC National Evaluation include numerous statistical techniques, including: a) t-tests between weighted means as a standard for identifying substantial change in core outcomes over different time periods; b) parametric (mean, standard deviation), non-parametric (median, range), and standardized (e.g., percentage) summaries of value distribution; c) cluster analysis for identifying linearly defined patterns of coalitions, and principal components analysis and factor analysis for exploratory identification of linear dimensions of variables; and d) correlation, multiple correlation, and non-parametric measures of association to assess associations between variables and identify patterns of relationship.

Analysis of Core Measures

Our primary impact analyses will be characterized by their simplicity. Given that there are inherent uncertainties in the survey sampling process (e.g., we do not know how each coalition sampled their target population for reporting the core measures, we do not know the exact number of youth served by each coalition), the most logical and transparent method of analyzing the data will be to develop simple averages of each of the core measures. Each average will be weighted by the reported number of respondents. In the case of 30-day use, for example, this will intuitively provide the overall prevalence in 30-day use for all youth surveyed in a given year. The formula for the weighted average is:

$$\bar{x}_w = \frac{\sum_{i=1}^{n} w_i x_i}{\sum_{i=1}^{n} w_i}$$

where $w_i$ is the weight (in this case, the outcome sample size) and $x_i$ is the mean of the $i$th observation. Simply put, each average is multiplied by the sample size on which it is based, summed, and then divided by the total number of youth sampled across all coalitions.

One key challenge in the weighting process is that some coalitions have reported means and sample sizes from surveys that are partially administered outside the catchment area (e.g., county-wide survey results are reported for a coalition that targets a smaller area within the county). Since means for 30-day use are weighted by their reported sample size, this situation would result in a much higher weight for a coalition that has less valid data (i.e., the number of youth surveyed is greater than the number of youth targeted by the coalition). To correct for this, we will cap each coalition’s weight at the number of youth who live within the targeted zip codes. By merging zip codes (catchment areas) reported by coalitions with 2010 Census data, we can determine the maximum possible weight a coalition should have.
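The weighted average and the weight cap described above can be illustrated with a short sketch. The class `CoalitionReport` and its field names are hypothetical, and the catchment-area youth count is assumed to have already been looked up from Census data for the reported zip codes; this is not the evaluation team's production code.

```python
from dataclasses import dataclass

@dataclass
class CoalitionReport:
    mean: float               # reported core measure, e.g. 30-day use as a proportion
    n_respondents: int        # reported survey sample size
    youth_in_catchment: int   # assumed: youth living in the targeted zip codes

def capped_weight(report):
    """Weight is the sample size, capped at the catchment-area youth count."""
    return min(report.n_respondents, report.youth_in_catchment)

def weighted_average(reports):
    """Sum of weight * mean across coalitions, divided by the sum of weights."""
    total_weight = sum(capped_weight(r) for r in reports)
    if total_weight == 0:
        raise ValueError("no respondents to weight by")
    return sum(capped_weight(r) * r.mean for r in reports) / total_weight

# Hypothetical data: the second coalition reports a county-wide survey of 5,000
# youth but targets an area with only 1,500 youth, so its weight is capped.
reports = [
    CoalitionReport(mean=0.10, n_respondents=500, youth_in_catchment=1000),
    CoalitionReport(mean=0.20, n_respondents=5000, youth_in_catchment=1500),
]
print(round(weighted_average(reports), 3))
```

Without the cap, the county-wide survey would carry ten times the weight of the first coalition; with the cap, it carries three times the weight.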

To measure the effectiveness of DFC coalitions on the core measures for alcohol, tobacco, marijuana, and prescription drugs, we will conduct the following related analyses:

Annual Prevalence Figures: First, we will compare data on each core measure by year and school level (i.e., middle school [grades 6–8] and high school [grades 9–12]). These results provide a snapshot of DFC grantees’ outcomes for each year; however, since coalitions are not required to report core measures each year, they should not be used to interpret how core measures are changing across time.

Long-Term Change Analyses: Second, we will calculate the average total change in each coalition, from the first outcome report to the most recent results. By standardizing time points, we are able to measure trajectories of change on core measures across time. This provides the most accurate assessment of whether DFC coalitions are improving or not on the core measures. This analysis will be run once using data on all DFC grantees ever funded and then a second time using current fiscal year grantees only.

Short-Term Change Analyses: This analysis will include only current fiscal year grantees and will compare their two most recent times of data collection. This analysis will help to identify any potential shifts in outcomes that may be occurring.

Benchmarking Results: Finally, where possible, results will be compared to national-level data from YRBS. These comparisons provide basic evidence to determine what would have happened in the absence of DFC, and allow us to make inferences about the effectiveness of the DFC Program as a whole.

Together, these four analyses provide robust insight into the effectiveness of DFC from a cross-sectional (snapshot), longitudinal (over time), and inferential (comparison) perspective.
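The long-term and short-term change analyses described above can be sketched in Python as follows. The data structure, names, and values are hypothetical, and the sketch assumes a simple unweighted average of coalition-level changes, skipping coalitions with fewer than two reports; the actual analysis may weight or filter coalitions differently.

```python
def long_term_change(reports):
    """Most recent reported value minus the first reported value."""
    ordered = sorted(reports, key=lambda r: r[0])  # sort by year
    return ordered[-1][1] - ordered[0][1]


def short_term_change(reports):
    """Most recent reported value minus the one immediately before it."""
    ordered = sorted(reports, key=lambda r: r[0])
    return ordered[-1][1] - ordered[-2][1]


def average_change(coalitions, change_fn):
    """Average a change score over coalitions with at least two reports."""
    changes = [change_fn(r) for r in coalitions.values() if len(r) >= 2]
    if not changes:
        raise ValueError("no coalition has two or more reports")
    return sum(changes) / len(changes)


# Hypothetical (year, past-30-day use in percent) reports per coalition
coalitions = {
    "A": [(2012, 20.0), (2014, 18.0), (2016, 15.0)],
    "B": [(2013, 30.0), (2015, 31.0)],
    "C": [(2016, 12.0)],  # single report: excluded from change analyses
}

long_avg = average_change(coalitions, long_term_change)    # (-5.0 + 1.0) / 2
short_avg = average_change(coalitions, short_term_change)  # (-3.0 + 1.0) / 2
print(long_avg, short_avg)
```

Because coalitions report in different years, each change score is anchored to the coalition's own first and latest (or two latest) reports rather than to fixed calendar years.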

Qualitative Data

Qualitative data are also analyzed in a range of ways. In some cases, data are scanned for key quotes that exemplify a practice or strategy that DFC coalitions utilize. A second approach is to search for key terms (e.g., opioids) and then to code the ways in which coalitions mention or discuss each term in order to identify trends. Finally, site visit data are entered into qualitative analysis software and then coded in order to identify themes.

Analyzing DFC Recipient Feedback from Technical Assistance Activities

Technical assistance for the DFC National Evaluation has been designed to accomplish two major objectives: (1) increase the reliability and validity of the data collected from coalition grantees through various technical assistance approaches; and (2) provide “give backs” (i.e., Evaluation Summary Results) to DFC recipients for their use in performance improvement and to support sustainability planning. By providing DFC recipients with “give backs” that they can use throughout the course of the evaluation, we increase the likelihood that they will provide meaningful, valid, and reliable data during data collection.

The TA Team has worked to achieve the first objective by working with the Evaluation Team to draft clear and concise definitions for all data elements to be collected. Consequently, DFC recipients have uniform information for data elements when they are entering progress report data. To further increase the quality of the data collected from grantees, an Evaluation Technical Assistance Hotline (toll-free phone number) and email address have been established. Technical Assistance Specialists provide responsive evaluation support to grantees as questions arise when they are entering the required data. DFC recipients’ queries are logged and analyzed to develop topics for online technical assistance webinars. Following each webinar, participants have an opportunity to provide feedback on the webinar, including open-ended responses. The TA Team discusses these findings and uses them to make improvements in future webinars.

The second objective is designed to produce materials that grantees will find useful in their everyday operations, in stakeholder briefings, and when they apply for funding for future coalition operations. Since 2016, each DFC grant recipient has received a coalition snapshot following receipt and cleaning of the progress report and core measure data. Recipients are encouraged to review their snapshots and to contact the TA Team if they identify any issues or require further feedback. This process has at times led DFC grant recipients to identify problems with their data submissions, such as an incorrectly entered year of data collection.

Overall, these technical assistance activities help to ensure buy-in for evaluation activities, reduce response burden, improve response rates, and ultimately improve the quality of the data. They also provide DFC grant recipients with evaluation data they can use to strengthen their prevention strategies and secure additional funding.

1 The former evaluation was conducted by Battelle Centers for Public Health Research and Evaluation from 2005–2009.

2 Battelle (2009), Development of a classification rule for the drug free communities evaluation.

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| Author | ODonnel, Barbara |

| File Created | 2021-01-14 |
