Ssa _ 0822 Nisvs _3.17.20


The National Intimate Partner and Sexual Violence Survey (NISVS)

OMB: 0920-0822

SUPPORTING STATEMENT: PART A


Point of Contact:

Sharon G. Smith, PhD

Behavioral Scientist

Contact Information:

Centers for Disease Control and Prevention

National Center for Injury Prevention and Control

4770 Buford Highway NE, MS S106-10

Atlanta, GA 30341-3724

phone: 770.488.1363

email: [email protected]

CONTENTS

Summary table

Justification

A.1. Circumstances Making the Collection of Information Necessary

A.2. Purpose and Use of Information Collection

A.3. Use of Improved Information Technology and Burden Reduction

A.4. Efforts to Identify Duplication and Use of Similar Information

A.5. Impact on Small Businesses or Other Small Entities

A.6. Consequences of Collecting the Information Less Frequently

A.7. Special Circumstances Relating to the Guidelines of 5 CFR 1320.5(d)(2)

A.8. Comments in Response to the Federal Register Notice and Efforts to Consult Outside the Agency

A.9. Explanation of Any Payment or Gift to Respondents

A.10. Protection of the Privacy and Confidentiality of Information Provided by Respondents

A.11. Institutional Review Board (IRB) and Justification for Sensitive Questions

A.12. Estimates of Annualized Burden Hours and Costs

A.13. Estimates of Other Total Annual Cost Burden to Respondents or Record Keepers

A.14. Annualized Cost to the Government

A.15. Explanation for Program Changes or Adjustments

A.16. Plans for Tabulation and Publication and Project Time Schedule

A.17. Reason(s) Display of OMB Expiration Date is Inappropriate

A.18. Exceptions to Certification for Paperwork Reduction Act Submissions

Attachments

A NISVS Background Information

B Authorizing Legislation: Public Health Service Act

C.1 Published 60-Day Federal Register Notice

C.2 Public Comment

D Consultation on the Initial Development of NISVS

E Institutional Review Board (IRB) Approval

F.1 – F.3 Survey - National Intimate Partner and Sexual Violence Survey (NISVS)

F.4A-4B Screeners for paper survey

F.5 – F.6 FAQs for ABS and paper surveys

G Security Agreement

H Privacy Impact Assessment (PIA)

I.1 – I.6 Advance Letters and Reminders

J Thank You Incentive Letter

K Crosswalk of Survey Revisions

L.1 – L.2 NISVS Workgroup Participants & Summary

M Cognitive Testing Report

This revision request describes the planned testing of a redesign of the National Intimate Partner and Sexual Violence Survey (NISVS) and the approach for collecting NISVS data using multiple data collection modes and sampling strategies. More specifically, this revision request details the second and third phases of the NISVS redesign project, which include (1) feasibility testing of the revised survey instruments and data collection modes and (2) pilot testing of the new design.

Goals of the current revision request:

Conduct feasibility testing to assess a number of alternative design features, including the sample frame (address-based sample [ABS], random digit dial [RDD]) and mode of response (telephone, web, paper), that help garner participation and reduce nonresponse.

Conduct experiments that inform the development of a protocol for alternative sampling and weighting methods for multi-modal data collection, so that accurate and reliable national and state-level estimates of sexual violence (SV), intimate partner violence (IPV), and stalking can be calculated in the future.

Conduct a pilot data collection to ensure that the selected optimal alternative sampling methods and multi-modal data collection approaches for NISVS are ready for full-scale implementation.

Intended use of the resulting data.

These data will be used only to inform future NISVS data collections. Results from the feasibility phase experiments may be prepared for publication, as the findings related to optimal data collection modes and sampling frames are likely to be useful to other federal agencies currently conducting national data collections. No national prevalence estimates will be generated from the data collected during the NISVS redesign project.

Methods to be used to collect data.

This revision request is to conduct (1) a feasibility study that tests the computer-assisted telephone interview (CATI), paper, and web versions of the NISVS survey using a variety of sampling frames and single vs. multiple modes, all for the purpose of determining a new design for NISVS, and (2) a pilot test of the new design.

The subpopulation to be studied.

Non-institutionalized, English-speaking women and men aged 18 years or older in the United States.

How data will be analyzed.

Data will be analyzed using statistical software that accounts for the complex survey design to compute weighted counts, percentages, and confidence intervals using national-level data.
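The analysis plan does not name the software or the variance method. As a rough illustration of what accounting for a complex survey design involves, the Python sketch below computes a weighted percentage and a 95% confidence interval using Taylor-series linearization under a with-replacement approximation, one common approach for stratified, clustered samples. The `Record` fields (stratum, PSU, weight, outcome) and the toy data are hypothetical; they are not NISVS variables or results.

```python
"""Weighted percentage with a Taylor-linearized 95% confidence interval.

A minimal sketch, assuming a stratified, clustered sample with final
analysis weights. Field names are hypothetical, not NISVS variable names.
"""
import math
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Record:
    stratum: str   # hypothetical design stratum identifier
    psu: str       # hypothetical primary sampling unit identifier
    weight: float  # final analysis weight
    y: int         # 1 if the respondent reports the outcome, else 0


def weighted_percent_ci(records, z=1.96):
    """Return (percent, lower, upper) for a weighted proportion."""
    total_w = sum(r.weight for r in records)
    p = sum(r.weight * r.y for r in records) / total_w

    # Linearized score of the ratio estimator, summed within each PSU.
    psu_totals = defaultdict(float)
    for r in records:
        psu_totals[(r.stratum, r.psu)] += r.weight * (r.y - p) / total_w

    # Collect the PSU totals belonging to each stratum.
    by_stratum = defaultdict(list)
    for (stratum, _psu), value in psu_totals.items():
        by_stratum[stratum].append(value)

    # With-replacement approximation: n_h / (n_h - 1) times the
    # within-stratum sum of squared deviations, summed over strata.
    variance = 0.0
    for values in by_stratum.values():
        n_h = len(values)
        if n_h < 2:
            raise ValueError("each stratum needs at least two PSUs")
        mean_h = sum(values) / n_h
        variance += n_h / (n_h - 1) * sum((v - mean_h) ** 2 for v in values)

    se = math.sqrt(variance)
    return 100 * p, 100 * (p - z * se), 100 * (p + z * se)


# Toy data: two strata, two PSUs each.
sample = [
    Record("A", "1", 1.0, 1), Record("A", "1", 1.0, 0),
    Record("A", "2", 2.0, 0), Record("A", "2", 2.0, 1),
    Record("B", "3", 1.0, 0), Record("B", "4", 3.0, 1),
]
print(weighted_percent_ci(sample))  # percent is 60.0; CI roughly 24.5 to 95.5
```

In practice, established survey software would be used rather than hand-written code; the sketch only shows why the design information (strata and PSUs), and not just the weights, is needed to compute the interval.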

A. JUSTIFICATION

A.1. Circumstances Making the Collection of Information Necessary

Background

The National Intimate Partner and Sexual Violence Survey (NISVS) is an ongoing, nationally representative survey of U.S. adults and their experiences of sexual violence, intimate partner violence, and stalking. The survey was first launched in 2010, and data were collected again in 2011, 2012, 2015, 2016/17, and 2018. Additional background information is included in Attachment A. Selected estimates are presented in Table 1. These data and other reports and factsheets are available at: https://www.cdc.gov/violenceprevention/datasources/nisvs/index.html

Table 1. Selected Estimates of Lifetime Prevalence (%) Over Time: U.S. Women and Men, NISVS

| | Women 2010 | Women 2012 | Women 2015 | Men 2010 | Men 2012 | Men 2015 |
|---|---|---|---|---|---|---|
| Rape | 18.3 | 19.3 | 21.3 | 1.4 | 1.3 | 2.6 |
| Made to penetrate | -- | 0.8 | 1.2 | 4.8 | 6.3 | 7.1 |
| Unwanted sexual contact | 27.2 | 28.0 | 37.1 | 11.7 | 10.4 | 17.9 |
| Stalking | 16.2 | 16.1 | 16.0 | 5.2 | 5.2 | 5.8 |
| Physical violence by an intimate partner | 32.9 | 32.9 | 30.6 | 28.2 | 29.2 | 31.0 |

-- Estimate is not reported: relative standard error > 30% or cell size ≤ 20.
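The suppression rule in the note to Table 1 can be expressed as a small check. The Python sketch below assumes that the estimate and its standard error are on the same (percent) scale and that "cell size" means the unweighted number of respondents in the cell. The function names and example inputs are hypothetical.

```python
def is_reportable(estimate, standard_error, cell_size,
                  max_rse=0.30, min_cell=20):
    """Table 1 rule: suppress if RSE > 30% or cell size <= 20.

    Assumes `estimate` and `standard_error` are on the same scale
    (e.g., both in percent) and `cell_size` is the unweighted count.
    """
    if cell_size <= min_cell:
        return False
    if estimate <= 0:
        return False  # relative standard error is undefined at zero
    return standard_error / estimate <= max_rse


def format_cell(estimate, standard_error, cell_size):
    """Render a table cell, printing '--' for suppressed estimates."""
    if is_reportable(estimate, standard_error, cell_size):
        return f"{estimate:.1f}"
    return "--"


# Hypothetical inputs, not actual NISVS standard errors or cell sizes.
print(format_cell(12.5, 0.9, 1200))  # 12.5
print(format_cell(0.6, 0.25, 35))    # -- (RSE is about 42%)
print(format_cell(3.0, 0.4, 18))     # -- (cell size 18 <= 20)
```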

The CDC is the lead federal agency for public health objectives related to injury and violence. The Healthy People 2020 report (Healthy People, 2020) lists several objectives that pertain directly to IPV, SV, and stalking. Applicable objectives include IVP39: “reduce the rate of physical assault by current or former intimate partners”; “reduce sexual violence by a current or former intimate partner”; “reduce psychological violence by a current or former intimate partner”; and “reduce stalking by a current or former intimate partner.” Also applicable are the IVP40 objectives: “reduce the annual rate of rape or attempted rape”; “reduce sexual assault other than rape”; and “reduce non-contact sexual abuse.” Authority for CDC’s National Center for Injury Prevention and Control to collect these data is granted by Section 301 of the Public Health Service Act (42 U.S.C. 241) (Attachment B). This act gives federal health agencies, such as CDC, broad authority to collect data and carry out other public health activities, including this type of study.

Current Request

To address concerns about generally declining RDD survey response rates and the resulting potential for non-response bias in data collected through the historical RDD NISVS methodology (described in further detail below), the current OMB revision request proposes a series of tests and experiments to understand the response rates, non-response bias, and prevalence rates produced by alternative data collection methods. Specifically, we aim to conduct:

1) Experimentation and feasibility testing to assess a number of alternative design features, including the sample frame (address-based sample (ABS), random-digit-dial (RDD)) and mode of response (telephone, web, paper), that help garner participation and reduce nonresponse. The purpose of this phase is to conduct experiments to help us understand response rates by sample frame; the extent to which each sample frame represents key population groups; differences in key outcomes by sample frame and interview mode; and the costs associated with each frame and mode.

The ultimate goal of the experimentation and feasibility testing phase is to use findings from these experiments to develop a novel sampling frame and data collection design for future NISVS survey administrations.

2) Pilot testing of a new design, procedures, and a final set of survey instruments to ensure that the newly selected approaches to data collection and sampling are ready for full-scale national data collection. This phase will begin after the completion of feasibility testing. The goal of this phase is to understand how long it takes to implement the new design and modes, whether questions and training materials worked as expected, whether there are any unanticipated glitches with respect to implementation, and to ensure that the survey administration programming works well.

This request revises the currently approved National Intimate Partner and Sexual Violence Survey (OMB# 0920-0822, expiration date 02/29/2020) to cover Phase 2 (experimentation and feasibility testing) and Phase 3 (pilot testing of the new design) of developmental activity. We are removing the burden associated with the national implementation of NISVS that was approved under this control number, which expires 02/29/2020. A non-substantive change request for the pilot design will be submitted once the design is finalized.

Rationale for the Current Request

Declining RDD Response Rates and Historical Efforts to Understand Potential Impact of Non-response Bias on NISVS Data

In late 2017, CDC learned that the NISVS survey’s response rates had declined substantially during the 2016/17 data collection compared to the 2015 data collection period. The low response rates, combined with an observed increase in some key NISVS prevalence estimates, raised concerns about the potential impact of non-response bias. Thus, we explored several factors to assess non-response bias, including comparing the demographics of the sample with those of the target population, comparing prevalence estimates for certain outcomes to available external benchmarks, internal benchmark comparisons, and assessing whether survey content on violence served as a reason for participating or failing to participate in the survey (i.e., the leverage saliency theory).

Demographic Comparisons: A critical question when considering non-response bias is whether the sample differs from the target population it was selected to represent. That is, did the individuals who chose not to participate in the NISVS survey differ in important ways from NISVS respondents? While there were some differences between the sample and the general population in terms of demographics, these represent ignorable biases to the degree that they can be adjusted for by weighting.
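To make the demographic reasoning concrete, the Python sketch below shows two standard ideas under stated assumptions. First, the nonresponse bias of a respondent mean equals the nonrespondent share times the difference between the respondent and nonrespondent means. Second, post-stratification rescales weights so that weighted group shares match known population shares. All inputs are hypothetical, and this is not the NISVS weighting procedure, which this section does not describe.

```python
"""Two small weighting ideas behind the demographic comparison.

A minimal sketch with hypothetical inputs; it is not the NISVS
weighting procedure.
"""
from collections import defaultdict


def respondent_mean_bias(n_resp, mean_resp, n_nonresp, mean_nonresp):
    """Bias of the respondent mean relative to the full-sample mean.

    Equals (nonrespondent share) * (respondent mean - nonrespondent mean):
    zero if nonrespondents do not differ, or if everyone responds.
    """
    share_nonresp = n_nonresp / (n_resp + n_nonresp)
    return share_nonresp * (mean_resp - mean_nonresp)


def poststratify(weights, groups, population_shares):
    """Rescale weights so each group's weighted share matches the population.

    `population_shares` maps group -> share (summing to 1); the total
    weight is preserved.
    """
    total = sum(weights)
    group_totals = defaultdict(float)
    for w, g in zip(weights, groups):
        group_totals[g] += w
    return [w * population_shares[g] * total / group_totals[g]
            for w, g in zip(weights, groups)]


# Hypothetical: 10% respond with mean 0.30; nonrespondents' mean is 0.25.
print(respondent_mean_bias(100, 0.30, 900, 0.25))  # about 0.045

# Hypothetical: women are 75% of respondents but 50% of the population.
adjusted = poststratify([1.0, 1.0, 1.0, 1.0], ["F", "F", "F", "M"],
                        {"F": 0.5, "M": 0.5})
print(adjusted)  # each F weight about 0.667, the M weight 2.0
```

Post-stratification removes bias only to the extent that, within each weighting group, respondents resemble nonrespondents on the outcome. That is the sense in which demographic differences that weighting can adjust for are "ignorable."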

External Benchmark Comparisons: We identified three surveys external to NISVS for comparison: the National Health and Nutrition Examination Survey (NHANES), the National Health Interview Survey (NHIS), and the household component of the Medical Expenditure Panel Survey (MEPS). All three are in-person national surveys with higher response rates (range: 46.0% to 65.3%) than RDD telephone surveys. We identified three specific health conditions that could serve as benchmarks: asthma, diabetes, and hypertension. Results were mixed, even among the three in-person surveys. However, the NISVS estimate for hypertension (30.0%) fell within the range of estimates provided by the other surveys (NHIS: 24.5% to MEPS: 33.6%), and NISVS estimates for both asthma and diabetes were similar to those from NHANES.

The National Survey of Family Growth (NSFG) collects data on a variety of topics, including non-voluntary sexual experiences. The weighted response rate for the NSFG for data collected between July 2015 and June 2017 was 65.3% (NCHS 2018). Although the NSFG is an in-person survey, the questions on sexual victimization are asked in a self-administered part of the interview (audio computer-assisted self-interviewing), with the interviewer turning over the laptop to the respondent and providing headphones. This makes it more similar to how NISVS-3 is administered than other nationally representative in-person surveys on violence victimization (e.g., NCVS). We computed estimates of non-voluntary sexual intercourse for females and males, and of non-voluntary oral and/or anal sex for males, limited to respondents aged 18 to 49 years. Estimates for these forms of violence were not significantly different across the two surveys. Although we were able to identify only a few violence victimization estimates to compare with NISVS outcomes, these results provide some assurance that, even with a low response rate, NISVS estimates for sexual violence are comparable to an external benchmark with a higher response rate.

Internal Benchmark Comparisons: We examined NISVS data from 2015 and 2016/2017, identifying selected health conditions generally thought to remain relatively stable over time to understand whether such outcomes also increased and, if so, by how much. We considered four health conditions diagnosed by a doctor, nurse, or other health professional (asthma, irritable bowel syndrome, diabetes, and high blood pressure) and three current health conditions (frequent headaches, chronic pain, and difficulty sleeping). There were no significant increases in medically diagnosed conditions for females or males from 2015 to 2016/2017. Similarly, no increases were seen in frequent headaches or difficulty sleeping across the two survey periods for women or men. The only significant increase observed across the two survey periods was among women for chronic pain, and that increase (from 24.2% to 28.0%) was far smaller in magnitude than the increases seen for violence victimization. In summary, we found few increases in the health conditions studied, and the increases observed were small relative to those seen for violence. Thus, even with a sharp decline in response rates over time, the NISVS surveys provided similar estimates of these health conditions across adjacent periods.
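Whether an increase such as 24.2% to 28.0% is statistically significant depends on the standard errors of both estimates. The Python sketch below is a simplified two-sample z-test. It treats the two survey periods as independent samples and inflates the variance by an assumed design effect (`deff`). The sample sizes in the example are hypothetical, and a full analysis would use design-based standard errors instead.

```python
"""Approximate test for a change in a prevalence between two survey periods.

A minimal sketch: treats the periods as independent samples and inflates
the variance by an assumed design effect (`deff`). A full analysis would
use design-based standard errors from survey software instead.
"""
import math


def change_test(p1, n1, p2, n2, deff=1.0):
    """Return (z, two-sided p-value) for H0: the two prevalences are equal."""
    se = math.sqrt(deff * (p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2))
    z = (p2 - p1) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Hypothetical proportions and sample sizes; not NISVS figures.
z, p = change_test(0.50, 100, 0.60, 100)
print(round(z, 3), round(p, 3))  # 1.429 0.153
```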

Leverage Saliency: We considered the possibility that the NISVS sample included an unusually high proportion of adults who were particularly interested in the violence topics or who had experienced some forms of violence examined in NISVS. However, no one knew about the violence topics before consenting to take the survey. Rather, all sampled individuals were invited to participate in a survey about “health and injuries they may have experienced.” NISVS implemented a graduated consent process, through which the general health and violence-specific questions were disclosed only to individuals who were determined to be eligible and who had already agreed to take part in the survey. Further, nearly all eligible respondents who ended the survey prematurely (approximately 96.3%) did so before being read the introduction to the victimization questions. Therefore, there is no evidence that survey content on violence served as a reason for participating or failing to participate in the survey.

Taken as a whole, this assessment provided at least some assurance that the 2016/2017 NISVS data were not greatly affected by non-response bias. However, RDD survey response rates have continued to decline with no indication of leveling off or rebounding, prior NISVS data collections offered relatively limited ability to understand non-response bias, and an OMB-required methodology workgroup raised concerns about the NISVS methodology. For these reasons, CDC funded a contract beginning in September 2018 to explore the feasibility and cost of implementing alternative methods for collecting NISVS data in a manner that would result in increased response rates and reductions in nonresponse bias.

Declining RDD Response Rates & Potential Impact of Non-Response Bias Serve as Impetus for Exploring Alternative Data Collection Methods for NISVS

Although it is well known that RDD survey response rates have declined dramatically and the industry response largely has been to move away from this methodology, the goal of this contract is to compare a novel data collection approach (i.e., an address-based sample [ABS] design with data collection via web, paper, or telephone) to the RDD approach that has historically been used to collect NISVS data in order to ensure that CDC has an established comparison from which to draw conclusions that will inform future data collection efforts. For the feasibility study, in addition to comparing the demographics of the RDD sample, the ABS sample and the US population, CDC will seek out external benchmarks with which to compare our estimates (e.g., from the National Survey of Family Growth) and will also compare non-response bias across sample frames and data collection modes.

Under a non-substantive change to the existing OMB number, CDC recently completed the cognitive testing of a revised computer-assisted telephone interview (CATI) instrument (shortened to reduce respondents’ burden but not altering the core sexual violence (SV), intimate partner violence (IPV), and stalking content of the survey), as well as cognitive testing of web and paper versions of the survey. The results of cognitive testing are presented in Attachment M.

Review of the Literature

One of the primary motivators for redesigning NISVS is to address the declining response rates associated with RDD surveys. The declining quality of random-digit-dial (RDD) surveys and the emergence of a national address-based sample (ABS) frame have led to a number of alternative methodologies for conducting general population surveys. Olson et al. (2019) review a number of these studies. Below we review a few key studies that have informed the design proposed for the NISVS feasibility study.

The initial attempts to use an address-based frame in this way occurred in the mid-2000s. Link et al. (2008) experimented with several different methods using ABS and a paper mail survey to conduct the Behavioral Risk Factor Surveillance System (BRFSS). They compared the results to the ongoing RDD BRFSS and found that the mail survey yielded higher response rates in the states that had the lowest response rates. The Health Information National Trends Survey (HINTS) was conducted by RDD in its first several iterations (starting in 2000). However, as response rates declined, the survey experimented with a mail ABS methodology (Cantor et al., 2008). The mail ABS approach achieved a significantly higher response rate than the RDD survey and produced equivalent results. The survey transitioned to the ABS methodology and continues to use it.

Similarly, the National Household Education Survey (NHES) was a long-running RDD survey that was experiencing significant drops in response rates around 2000. To address this, a redesign was carried out that experimented with converting to a mail survey (Montaquila et al., 2013). Overall, the findings indicated that higher response rates could be achieved with the ABS methodology than with RDD, while maintaining overall data quality. As part of the redesign, the NHES conducted a number of experiments related to incentives and delivery method. With respect to incentives, a $5 prepaid incentive to complete the household screening interview was found to significantly increase response rates compared with a $2 incentive, although both achieved relatively high rates (66% and 71%). They also found that an additional prepaid incentive of at least $5 to complete the NHES topical survey significantly increased response rates, by around 14 percentage points.

As web surveys became more common and internet penetration increased, researchers experimented with integrating the web into the ABS methodology. The web offers a number of advantages over a paper survey. First among them for NISVS is that it is a computerized instrument and can incorporate many, if not most, of the complex skip patterns that are needed for the core estimates. A second advantage is that it is potentially less costly than a paper survey. Several papers published by Don Dillman and his students (Millar and Dillman, 2011; Messer and Dillman, 2011) examined different methods for integrating the web into surveys of the general public. Chief among their results was that offering the web and paper surveys sequentially, with web first and then paper, maximized the overall number of individuals who completed a web survey. One experimental condition offered respondents a choice between web and paper at the first mailing; this resulted in a lower overall response rate. In a later review of other studies that compared the use of choice, Medway and Fulton (2012) came to the same conclusion. An exception to the results on giving respondents a choice is Matthews et al. (2012), who conducted an experiment for the American Community Survey (ACS) comparing a choice design with a sequential design. They found that prominently displaying the web alternative in the choice mode produced response rates equivalent to offering it sequentially.1

More recently, Biemer et al. (2017) also found that providing a choice produced response rates comparable to those of the sequential design. Biemer et al. also tested whether providing an extra incentive to use the internet in the choice mode affected results. They found that the response rate with the incentive did not differ from that of the sequential or choice option. However, the incentive did significantly increase the proportion of respondents who used the web to complete the survey: in the choice mode, one-third of respondents used the web without a bonus, compared with two-thirds when offered a bonus for doing so.

Use of the web for NISVS has a number of advantages. The web can accommodate the complex skip patterns associated with the current instrument, and it offers the confidentiality that is important for the NISVS topics. Following up web nonrespondents with a paper survey does introduce a switch in mode, but, as is evident from the literature, the mode effects for web versus paper are not large. Nonetheless, using paper as a follow-up mode does require simplifying the information that is collected. Consequently, it is worth testing an alternative follow-up mode, such as call-in CATI, which does not require reducing the amount of information collected on the survey.

While there is a substantial literature on mixed-mode ABS methodologies, none of it has examined how a mixed-mode methodology works for a survey as sensitive as NISVS. For this reason, it is important to test an ABS design. The first critical questions are the response rate and the associated non-response bias for the NISVS estimates. By testing an RDD design against the proposed ABS design, it will be possible to compare the two and assess whether the ABS design is working in ways similar to other surveys that have adopted the methodology.
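The sequential (web first) and choice designs discussed in this review differ mainly in when a second mode is offered. The Python sketch below encodes that difference. The mailing number at which the follow-up mode is added (`switch_at`) and the function names are hypothetical and are not part of the NISVS protocol. The follow-up mode can be paper or call-in CATI.

```python
"""Which response modes an ABS mailing offers, by design.

A minimal sketch of the sequential ("web first, then follow-up mode")
and choice designs. The `switch_at` mailing number is a hypothetical
parameter, not part of the NISVS protocol.
"""
from enum import Enum


class Mode(Enum):
    WEB = "web"
    PAPER = "paper"
    CALL_IN_CATI = "call-in CATI"


def offered_modes(design, mailing, follow_up=Mode.PAPER, switch_at=3):
    """Return the set of modes offered at a 1-based mailing number."""
    if mailing < 1:
        raise ValueError("mailing numbers start at 1")
    if design == "choice":
        # Both modes are offered from the first mailing.
        return {Mode.WEB, follow_up}
    if design == "sequential":
        # Web only at first; the follow-up mode is added for nonrespondents.
        if mailing < switch_at:
            return {Mode.WEB}
        return {Mode.WEB, follow_up}
    raise ValueError(f"unknown design: {design!r}")


def next_mailing(responded, design, last_mailing, **options):
    """Respondents are not contacted again; nonrespondents get the next mailing."""
    if responded:
        return None
    return offered_modes(design, last_mailing + 1, **options)


print(offered_modes("sequential", 1))  # {<Mode.WEB: 'web'>}
print(next_mailing(False, "sequential", 2, follow_up=Mode.CALL_IN_CATI))  # web plus call-in CATI
```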

A.2. Purpose and Use of Information Collection

The National Intimate Partner and Sexual Violence Survey (NISVS) was designed as an RDD survey to collect consistent and reliable data on the incidence, prevalence, and nature of SV, IPV, and stalking at the national and state levels among U.S. women and men on an ongoing basis. NISVS data are widely used in many settings, such as state public health departments, state coalitions, federal partners, universities, and local community programs, for a variety of purposes such as training materials, factsheets, policy briefs, and violence prevention campaign materials. NISVS data have been used by CDC, its state grantees, federal partners, and the Office of the Vice President (Biden). Additionally, NISVS data were collected for the Department of Defense (DoD) in 2010 and 2016/17 to understand the prevalence of these types of violence among active duty females and males and wives of active duty males, and for the National Institute of Justice (NIJ) to examine SV, IPV, and stalking in the American Indian/Alaska Native population. In addition to federal and state use of these data, public use data sets are developed to promote the use of these data by external researchers.

Assuming RDD survey response rates will continue to decline and costs associated with this methodology will continue to increase, the purpose of the current information collection request is to identify an optimal approach for collecting NISVS data in the future. Prior research has demonstrated the strengths of an ABS with a push to web-based data collection, suggesting that this methodology can result in lower costs and higher response rates. However, questions remain about the use of this frame and the web-based mode for collecting data on sensitive experiences involving violence. Research to understand response rates and non-response for a survey that includes sensitive and detailed questions, particularly about sexual violence and intimate partner violence, is therefore warranted. Indeed, some of the questions we aim to answer concern how perceptions of confidentiality and privacy, and general reactions to answering sensitive questions, differ between RDD and ABS.

As such, this revision request involves:

Experimentation and feasibility testing to assess a number of alternative design features, including the sample frame (address-based sample (ABS), random-digit-dial (RDD)) and mode of response (telephone, web, paper), that help garner participation and reduce nonresponse. The purpose of this phase is to conduct experiments to help us understand response rates by sample frame; the extent to which each sample frame represents key population groups; differences in key outcomes by sample frame and interview mode; and the costs associated with each frame and mode.

The ultimate goal of the experimentation and feasibility testing phase is to use findings from these experiments to develop a novel sampling frame and data collection design for future NISVS survey administrations.

Pilot testing of a new design, procedures, and a final set of survey instruments to ensure that the newly selected approaches to data collection and sampling are ready for full-scale national data collection. This phase will begin after the completion of feasibility testing. The goal of this phase is to understand how long it takes to implement the new design and modes, whether questions and training materials worked as expected, whether there are any unanticipated glitches with respect to implementation, and to ensure that the survey administration programming works well.

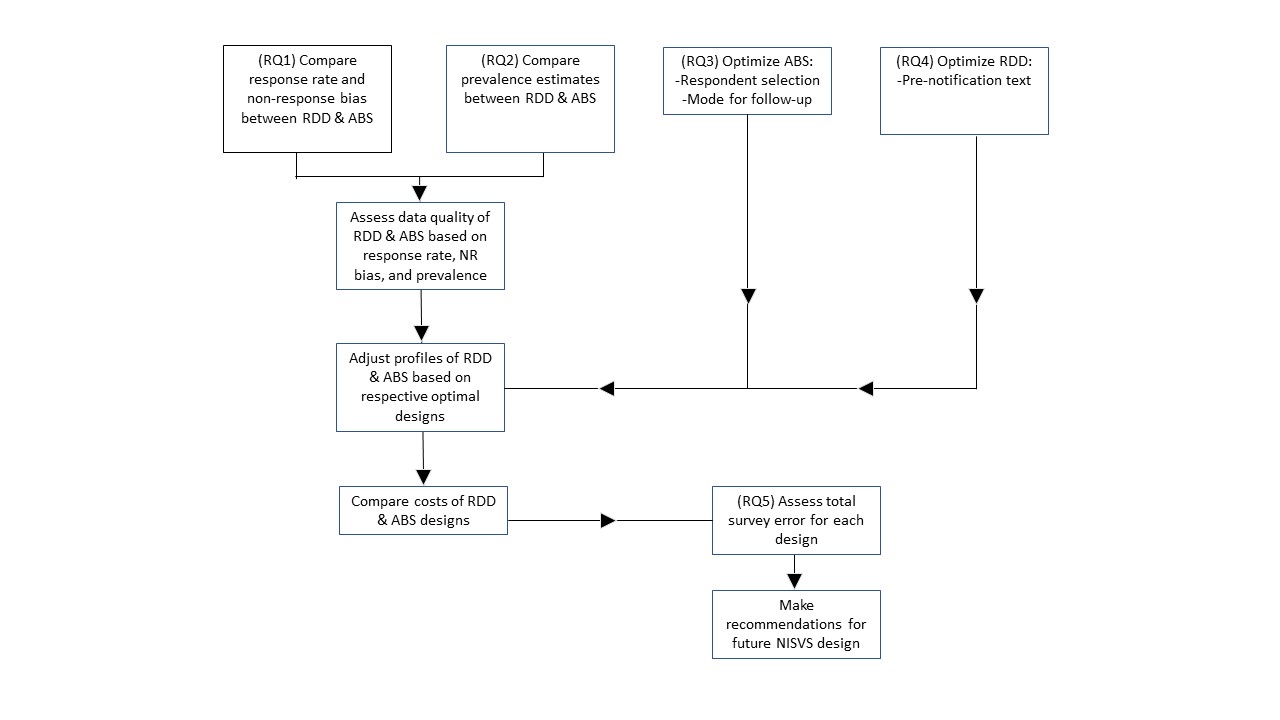

Primary research questions to be addressed during feasibility testing are:

For the NISVS survey, which assesses very personal and sensitive topics, does a multi-modal data collection approach result in improved response rates and reduced nonresponse bias compared to an RDD data collection approach?

For the NISVS survey, does an ABS approach result in different prevalence estimates compared to an RDD approach? If so, does one approach yield better-quality data than the other?

What are the optimal methods for collecting NISVS data with an ABS sample, with respect to respondent selection and follow-up mode?

What is the effect of prenotification in the RDD design on the response rate and non-response bias for NISVS?

What is the total survey error for the ABS and RDD designs?

The diagram above illustrates the overall plan for the project. Supporting Statement Part B (SSB) provides detailed information on how these research questions will be answered in order to address the overarching question of which data collection approach should be used moving forward.

Pilot Phase

The pilot phase will be designed based on recommendations from the feasibility phase and will replicate methods implemented in the feasibility phase using a smaller sample. The goal of the pilot phase is to field the survey over a short period of time using the features anticipated for the full-scale NISVS collection (to be completed at a future time under a future OMB submission).

A.3. Use of Improved Information Technology and Burden Reduction

Through 2018, all interviews were conducted over the telephone using computer-assisted telephone interviewing (CATI) software. Historically, CATI has been used because it reduces respondent burden and coding errors and increases efficiency and data quality. The CATI program involves a computer-based sample management and reporting system that incorporates sample information, creates an automatic record of all dial attempts, tracks the outcome of each interview attempt, documents sources of ineligibility, records the reasons for refusals, and identifies where mid-questionnaire terminations occur.

The CATI system includes the actual interview program (including the question text, response options, interviewer instructions, and interviewer probes). The CATI data quality and control program includes skip patterns, rotations, range checks, and other online consistency checks and procedures during the interview, ensuring that only relevant and applicable questions are asked of each respondent. Data collection and data entry occur simultaneously within the CATI data entry system. Data quality is also improved because the CATI system automatically detects errors and administers questions in the programmed order. Data can be extracted directly from the system and analyzed using existing statistical packages, which significantly decreases the amount of time required to process, analyze, and report the data.
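The skip patterns and range checks described above can be illustrated with a toy routing engine. The Python sketch below uses hypothetical question IDs, item wording, and ranges; it is not the NISVS instrument or any CATI product's API. Each answer is checked against its allowed range before it is accepted, and a routing function decides which question comes next, so questions that do not apply are never asked.

```python
"""Skip patterns and range checks, as a CATI or web instrument applies them.

A minimal sketch with hypothetical question IDs and items; it is not the
NISVS instrument or any CATI vendor's API.
"""
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Question:
    qid: str
    text: str
    min_value: int
    max_value: int
    route: Callable[[int], Optional[str]]  # next question id, or None to end


QUESTIONS = {
    "Q1": Question("Q1", "Age in years", 18, 97, lambda a: "Q2"),
    "Q2": Question("Q2", "Ever experienced event X? (1=yes, 2=no)", 1, 2,
                   lambda a: "Q3" if a == 1 else None),  # skip Q3 on "no"
    "Q3": Question("Q3", "Times event X occurred", 1, 99, lambda a: None),
}


def administer(entered_answers, start="Q1"):
    """Walk the routing, re-asking whenever an entry fails its range check.

    Returns (accepted, rejected) lists of (question id, value) pairs.
    """
    entries = iter(entered_answers)
    accepted, rejected = [], []
    qid = start
    while qid is not None:
        q = QUESTIONS[qid]
        while True:
            value = next(entries, None)
            if value is None:
                raise ValueError(f"interview ended before {qid} was answered")
            if q.min_value <= value <= q.max_value:
                break
            rejected.append((qid, value))  # out of range: ask again
        accepted.append((qid, value))
        qid = q.route(value)
    return accepted, rejected


print(administer([45, 2]))          # Q3 is skipped
print(administer([150, 45, 1, 3]))  # 150 fails the Q1 range check
```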

In an effort to improve response rates and reduce nonresponse, the current revision request proposes to explore the performance of two new data collection modes – a web-based instrument and a paper-and-pencil instrument (PAPI).

The web-based instrument capitalizes on improved information technology, allowing respondents to complete the survey on a personal computer, laptop, tablet, or smartphone. Like the CATI version, the web instrument includes skip patterns, rotations, range checks, and other online consistency checks and procedures, ensuring that only relevant and applicable questions are asked of each respondent. Data can be extracted directly from the system and analyzed using existing statistical packages, which significantly decreases the amount of time required to process, analyze, and report the data.

Finally, a shortened paper version of the survey will be mailed as a data collection option when initial requests for respondents to complete the full survey on the web are not successful. This paper option gives potential respondents an opportunity to complete the survey with reduced burden, as the shortened survey assesses only the basic screening items related to the primary types of victimization measured by NISVS and does not collect the same level of detailed information as the web and CATI versions. To facilitate completion on paper, difficult skip patterns are avoided and less information is collected. The shortened survey thus greatly reduces respondent burden while still allowing its data to be combined with the more detailed data on SV, IPV, and stalking experiences collected in the web and CATI versions of the survey.

A.4. Efforts to Identify Duplication and Use of Similar Information

Prior to NISVS, the most recent national health survey on SV, IPV, and stalking (National Violence Against Women Survey, NVAWS), jointly sponsored by NIJ and CDC (conducted by Schulman, Ronca, Bucuvalas, Inc. (SRBI)), and was completed in 1996 (Tjaden and Thoennes, 1998). Prior to NVAWS, there had been no similar national health surveys with a specific focus on SV, IPV, and stalking. These are also the types of outcomes that are least likely to be disclosed in crime surveys.

When NISVS was originally designed, CDC consulted with other federal agencies (e.g., the National Institute of Justice (NIJ) and the Department of Defense (DoD)) and with other leading experts and stakeholders in the fields of IPV, SV, and stalking. NCIPC convened a workshop, “Building Data Systems for Monitoring and Responding to Violence Against Women” (CDC, 2000). Recommendations from its attendees are reflected in the design of NISVS. As discussed at that workshop, surveys that ask behaviorally specific questions and are framed in a public health context elicit much higher levels of disclosure than those framed in a crime context. The National Crime Victimization Survey (NCVS), conducted by the Bureau of Justice Statistics (BJS), is an example of the latter.

Although NISVS and NCVS collect similar information, they are complementary in nature. Key characteristics of both systems are listed below. Additional information can be found in Basile, Langton, and Gilbert (2018).

NISVS

Public health context.

Eligible respondents are non-institutionalized adults aged 18 and older.

Respondents are selected using random-digit-dial methodology.

Interviews are conducted by telephone.

Employs behaviorally-specific language as recommended by the National Research Council (National Research Council, 2014).

Focused on sexual violence, intimate partner violence, and stalking.

Questions cover a range of behaviors experienced by victims.

Timeframe of victimization is lifetime and the 12 months preceding the survey.

Data provide lifetime and 12-month prevalence estimates that can be used to generate national and state-specific estimates.

Data provide information on the characteristics of victims and perpetrators.

Data are used to describe associations between victimization and health conditions.

Data on age at first victimization can be used to understand and guide prevention efforts among children and adolescents.

NCVS

Crime-based context.

Eligible respondents are all members, age 12 or older, of U.S. households and of non-institutional group living facilities.

Respondents are selected using a stratified, multi-stage cluster sample.

Interviews are conducted in person and by telephone.

Employs criminal justice terminology.

Focused on nonfatal violent and property crime.

Timeframe of victimization is past calendar year.

Data provide counts and rates of victims, incidents, and victimizations.

Data provide information on the characteristics of victims and perpetrators.

Data can be used to measure trends over time.

In our ongoing assessment of NISVS, CDC worked with BJS to discuss the complementary nature of NISVS and NCVS. This work included demonstrating how these systems provide unique data on victimization and its consequences, exploring options for collaboration, and continuing to enhance both systems. CDC and BJS met regularly to discuss lessons learned and implications for continued improvement of the systems. CDC and BJS also collaborated to develop a summary document that explains the unique and complementary nature of these and other systems for measuring sexual violence. The summary helps data users understand the available survey options and decide which data source to use for specific questions. The document is available on the CDC website (Basile, Langton & Gilbert, 2018).

Although the Behavioral Risk Factor Surveillance System (BRFSS) included optional IPV and SV modules in 2005, 2006, and most recently 2007, fewer than half of the states administered the modules in any one year. Furthermore, the optional modules contained only a small number of relatively simple questions (IPV, n = 7; SV, n = 8) and were limited to physical and sexual violence. CDC’s Division of Violence Prevention no longer provides financial support for the optional modules. As a result, few (if any) states continue to collect IPV or SV data.

A.5. Impact on Small Businesses or Other Small Entities

No small businesses will be involved in this data collection.

A.6. Consequences of Collecting the Information Less Frequently

Because of resource constraints and concern about declining response rates in RDD surveys, we propose to delay nationwide collection of NISVS data until the feasibility and pilot studies of the new data collection approach are completed. The primary consequence of collecting these data less frequently is that stakeholders would have less timely access to national and state prevalence estimates and other data on SV, IPV, and stalking victimization. State-level estimates require combining data across multiple data collection years. Less frequent data collection therefore greatly impairs the nation’s and states’ ability to track trends in these outcomes over time and to use timely data to inform prevention and program evaluation efforts. NCIPC staff are committed to identifying an optimal data collection approach by June 2021 and to restoring national data collection as quickly as possible thereafter.

A.7. Special Circumstances Relating to the Guidelines of 5 CFR 1320.5

The request fully complies with 5 CFR 1320.5.

A.8. Comments in Response to the Federal Register Notice and Efforts to Consult Outside the Agency

A.8.a) Federal Register Notice

A 60-day Federal Register Notice was published in the Federal Register on October 9, 2019 (Vol. 84, No. 196, pp. 2019) (Attachment C.1). CDC received two anonymous, non-substantive public comments (Attachment C.2).

A.8.b) Efforts to Consult Outside the Agency

In the past, CDC participated in discussions with federal researchers who study violence against women (documentation included in Attachment D). NCIPC convened a workshop, “Building Data Systems for Monitoring and Responding to Violence Against Women” (CDC, 2000). Recommendations from its attendees are reflected in the design of NISVS.

When NISVS was originally designed in 2007, CDC consulted with other federal agencies (e.g., NIJ, DoD) and other leading experts and stakeholders in the fields of SV, IPV, and stalking. Additionally, NCIPC invited a panel of experts to attend a meeting in November 2007 to discuss preliminary findings from the 2007 methodological study (referred to as the NISVS Pilot, although it was not a pilot test of the NISVS survey itself) and to discuss the planned directions for NISVS. The review panel consisted of federal and non-federal subject matter experts with expertise in SV, IPV, and stalking.

In 2008, staff within DOJ and DoD served as technical reviewers for the proposals submitted in response to CDC’s Funding Opportunity Announcement for NISVS. As part of the review team, they participated in selecting the contractor and approved the proposed statement of work. DOJ and DoD were also integrally involved in the design of the interview instrument. As described in Section A.4, CDC worked closely with DoD, NIJ, and other federal agencies in developing NISVS. Numerous presentations were made in 2008, 2009, and 2010 to vet the proposed NISVS with a range of interested stakeholders. These included victim advocates, family advocacy programs, the Title IX Task Force authorized under the 2005 VAWA, and attendees at other conferences and public meetings. Further, CDC staff remain engaged in ongoing discussions with Federal colleagues from DoD about collecting special population data from military personnel. Collaboration between CDC and DoD was initiated to facilitate collection of military subpopulation data during 2017. In 2015 and 2016, DoD staff collaborated with CDC in the development, review, and approval of the proposed statement of work for the 2016-2017 data collection contract. Data collection for DoD was conducted from February through August 2017.

NCIPC recruited a panel of experts to attend a meeting in February 2017 to begin discussions regarding the NISVS study design and to discuss the planned directions for current and future NISVS surveys. The review panel consisted of federal and non-federal subject matter experts with expertise in survey methodology, statistics, IPV and SV research, survey question design, and respondent safety concerns. Attachment L.1 provides a list of those individuals who participated in the meeting and provided recommendations regarding survey design and administration during three webinars and one 2-day in-person meeting between February and July 2017. The summary of recommendations is presented in Attachment L.2.

For the 2016-2018 survey, NCIPC staff actively engaged grantees of NCIPC’s Rape Prevention and Education (RPE) and Domestic Violence Prevention Enhancements and Leadership Through Alliances (DELTA) Impact programs, along with other stakeholders. The goal was to obtain feedback on processes implemented to help NISVS provide timely data that are easier to access and use. These data are intended for the groups with the greatest potential to take action to prevent SV, IPV, and stalking, particularly grantees and state-level prevention partners.

In compliance with OMB guidance, NISVS staff have been engaged in the OMB Sexual Orientation and Gender Identity Working Group to ensure that NISVS is using appropriate measures to identify sexual minority populations.

Further, in response to the workgroup’s recommendations to maximize collaborative opportunities across Federal surveys, CDC has engaged a number of Federal partners for three purposes: to learn about ongoing experiments to improve response rates in Federal surveys, to assess the feasibility of partnering on mutually beneficial experiments, and to learn from methods being implemented by other Federal surveys. Since July 2017, CDC has consulted with, or referred to publications and work from, other Federal and non-Federal partners to learn more about studies that are in the field or pending and that could have implications for NISVS. These partners include BJS, CDC–BRFSS, CDC–National Survey of Family Growth (NSFG), CDC–National Health Interview Survey (NHIS), the National Highway Traffic Safety Administration (NHTSA), the National Science Foundation (NSF), the Census Bureau, the National Center for Health Statistics (NCHS), the American Association for Public Opinion Research (AAPOR), the Office of Juvenile Justice and Delinquency Prevention’s redesign of the National Survey of Children’s Exposure to Violence, and Research Triangle Institute (RTI). For instance, CDC has engaged BRFSS staff to better understand BRFSS RDD calling methods. Examples include how many follow-up calls BRFSS makes before considering a phone number “fully worked”, and its treatment of cell phones as personal devices, which immediately excludes minors under age 18 who answer a cell phone number. CDC also discussed methods for calculating response rates (e.g., whether other Federal survey statisticians use survival methods to calculate response rates), as well as experiments involving address-based sampling and efforts to push potential respondents to a web-based survey, to return a phone call, or to reply by mail. Additionally, CDC has engaged with a number of federal agencies currently conducting research on methods to enhance participation and reduce nonresponse. For instance, CDC has engaged with NCHS staff working on the National Health and Nutrition Examination Survey to understand results from recent experiments on optimal incentive structures for garnering participation. CDC has also engaged with BJS staff to understand results related to the redesigns of the National Survey of Children’s Exposure to Violence.

Further, CDC engaged a number of partners, including AAPOR members, RTI, NHTSA, and NHIS staff, in discussions about novel technologies that may be substantially affecting response rates. For example, at the 2017 Annual AAPOR meeting, survey methodologists discussed technological advances that have led to a proliferation of phone applications that block repeated calls from 800 numbers. After discussions with RTI, AAPOR scientists, CDC staff, and NCIPC’s BSC, CDC added outbound calling numbers local to the Atlanta CDC area (770/404). These numbers allowed outbound phone numbers to be changed more frequently, so they were less likely to be inadvertently blocked by applications that flag repeated calls from suspected marketers. This may have reduced erroneous flagging and blocking of the study phone number as spam by cell phone carrier applications and increased the number of survey participants.

CDC also engaged Federal partners to learn more about incentives offered to survey respondents and how a range of incentive types and reminder letters, postcards, and other materials may be used to improve response rates. For instance, CDC engaged in conversations with NHIS, NHTSA, BRFSS, and RTI to learn about relatively inexpensive options that could be mailed with an advance letter to potential respondents, which would serve as a reminder to participate in the survey.

The suggestions from the methodology panel and CDC’s efforts to consult with Federal and non-Federal partners outside the agency resulted in a number of ideas for activities that were integrated into the data collection period beginning in March 2018. The impact of these activities on response rates and reductions in nonresponse bias is still being assessed. Consultation with outside entities strengthened our partnerships and improved our ability to call on our partners to discuss opportunities for collaboration and to learn from each other’s research and investments. These discussions further strengthened our ability to develop a contract aimed at determining an optimal data collection approach for NISVS moving forward, which is described herein.

Finally, during conversations with OMB, CDC learned that the Bureau of Justice Statistics (BJS) might offer insight from its own redesign of the National Crime Victimization Survey (NCVS). We therefore consulted with BJS staff to understand lessons learned from their initial redesign testing. Their primary concern was how seriously respondents complete a web-based survey (i.e., how much thought and time are respondents putting into it, and are they simply clicking through to finish?). This is a valid concern, which CDC discussed with our Contractor. To address it, we plan to examine the paradata associated with the web-based surveys (e.g., time to complete the survey, average time spent per item answered). We will also add data quality indicator items throughout the survey (e.g., “If you’re paying attention to the survey questions, click yes.”) to assess whether respondents are carefully considering their responses to each question. We also discussed with BJS and their OMB statistician the possibility of adding a multi-step victimization classification process to NISVS, as is implemented in NCVS. Unfortunately, adding such a process to NISVS at the current stage of the design contract would be difficult and would require extensive reprogramming of data collection software. However, in discussion with the NCVS OMB statistician, it was determined that the weights applied to the NISVS data are independent of the victimization classifications determined in the screening process. As a result, OMB currently has minimal concern about the NISVS classification system. Finally, our BJS colleagues concurred with the general consensus in the survey field that RDD data collection has become increasingly challenging and that the industry is moving away from this methodology.
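The paradata and attention-check review described above could be operationalized roughly as follows. This is a minimal sketch, not the Contractor's procedure. The `WebCase` record, the `flag_low_quality` function, and the rule of flagging a case whose seconds per item fall below 30% of the median are all hypothetical assumptions chosen for illustration.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class WebCase:
    """Hypothetical paradata record for one completed web survey."""
    case_id: str
    total_seconds: float          # elapsed time from first to last screen
    items_answered: int           # number of items the respondent answered
    attention_check_passed: bool  # answered "yes" to the "click yes" item


def flag_low_quality(cases, speed_fraction=0.3):
    """Return {case_id: [reasons]} for cases that may need review.

    A case is flagged as "speeding" when its seconds per item fall below
    speed_fraction times the median seconds per item across all cases
    (an assumed, illustrative threshold). It is flagged separately when
    it failed the attention-check item. Case IDs are assumed unique.
    """
    per_item = {
        c.case_id: c.total_seconds / c.items_answered
        for c in cases
        if c.items_answered > 0
    }
    if not per_item:
        return {}
    cutoff = speed_fraction * median(per_item.values())

    flagged = {}
    for c in cases:
        reasons = []
        if c.case_id in per_item and per_item[c.case_id] < cutoff:
            reasons.append("speeding")
        if not c.attention_check_passed:
            reasons.append("failed attention check")
        if reasons:
            flagged[c.case_id] = reasons
    return flagged


# Illustrative, made-up cases (not NISVS data)
example_cases = [
    WebCase("A", 1200, 100, True),
    WebCase("B", 150, 100, True),
    WebCase("C", 1100, 100, False),
    WebCase("D", 1000, 100, True),
]
print(flag_low_quality(example_cases))
```

The sketch uses a relative cutoff (a fraction of the median) rather than a fixed number of seconds. This keeps the rule meaningful if the length of the web instrument changes.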
Given our discussion with OMB on this issue, the NCVS OMB statistician agreed on a testing strategy. Because CDC is making major changes to the sampling frame and modes relative to the traditional NISVS design, it makes sense to thoroughly test the novel data collection approach and compare it with the historical approach. This comparison will provide a stable basis for estimating how response rates and nonresponse differ between these frames and modes.

A.9. Explanation of Any Payment or Gift to Respondents

Past NISVS data collections used an incentive structure (see the previously approved information collection requests for 2010, 2011, 2012, 2015, and 2016-2018 under OMB# 0920-0822).

As shown in Table 2, the current study uses both prepaid and promised incentives. For telephone and mail-paper surveys, a small pre-incentive of $1 to $5 has been shown to significantly increase response rates (Cantor, et al., 2008; Mercer, et al., 2015). For Phase 1 of the RDD frame, a $2 pre-incentive will be included in letters mailed to matched households. A promised $10 incentive will be offered to respondents who complete the survey, and a $40 promised incentive will be offered to sampled non-respondents.

For the ABS frame, a $5 pre-incentive will be included in the first mailing to the household asking an adult member to complete the screener on the web. Sampled non-respondents will also receive a $5 pre-incentive. Prior research has also found that offering a bonus incentive for completing by web increases the proportion of respondents who use this mode (Biemer, et al., 2017). Promised incentives will be offered to respondents who complete the household screener ($10 by web, $5 by paper). For those who complete the survey, promised incentives will be offered as follows: $15 for web, $10 for in-bound CATI, and $5 for paper. Sampled non-respondents will be offered a promised incentive of $40.

Table 2. Use of Incentives by Sample Size

Incentive group by sample frame | Offered incentive | Sample size*

RDD

Pre-paid incentive with letter to matched households | $2 | 2,114

Promised incentive to complete the NISVS interview, for initial requests | $10 | 10,746

Promised incentive to complete the NISVS interview, for sampled non-respondents | $40 | 5,800

ABS

Pre-paid incentive, for initial request | $5 | 11,310

Pre-paid incentive, for sampled non-respondents | $5 | 5,146

Promised incentive to complete household screener | $10 to complete by web | 11,310

Promised incentive to complete household screener | $5 to complete by paper | 10,122

Promised incentive to complete NISVS | $15 to complete on web | 3,958

Promised incentive to complete NISVS | $15 for call-in CATI | 1,394

Promised incentive to complete NISVS | $5 to complete paper version | 1,394

Promised incentive, for sampled non-respondents | $40 | 6,254

*Number of eligible sampled persons who are offered the incentive. Estimate contingent on response rates assumed in the sample design.

A.10. Protection of the Privacy and Confidentiality of Information Provided by Respondents

Privacy and Confidentiality

The CDC Office of the Chief Information Officer has determined that the Privacy Act applies. The applicable System of Records Notice (SORN) is 0920-0136, Epidemiologic Studies and Surveillance of Disease Problems, published in the Federal Register on December 31, 1992 (Vol. 57, No. 252, pp. 62812-62813). The Privacy Impact Assessment (PIA) is attached (Attachment H).

A number of procedures will be used to maintain respondent privacy. The advance letter (Attachments I.1-I.6) and subsequent information provided to respondents will describe the study as being about health and injuries. For the web survey, the selected respondent will be required to change their password upon logging on to the survey. The respondent will also be given instructions on how to delete the browsing history from the computer.

Participant personally identifiable information (PII) will be collected for both the RDD and ABS samples. For the RDD sample, this includes phone numbers and some addresses. For the ABS sample, it includes addresses and some names. Names, addresses, phone numbers, and e-mail addresses will never be associated or directly linked with the survey data. PII will be stored securely in password-protected files, separate from the survey data, to which only project staff will have access. The PII will be destroyed at the conclusion of the current contract.

The measures used to ensure confidentiality during the feasibility testing phase follow the approved IRB protocol (Attachment E). The contract is covered by a Certificate of Confidentiality from the CDC. Under the Certificate, contractor employees working on the NISVS contract cannot disclose information or documents pertaining to NISVS to anyone not connected with the research. Such information may not be disclosed in any federal, state, or local civil, criminal, administrative, legislative, or other action, suit, or proceeding. The only exceptions are when a federal, state, or local law requires disclosure (such as reporting child abuse or communicable diseases) or when a respondent reports plans to harm him/herself or others.

All data will be maintained securely throughout the data collection and data processing phases in accordance with NIST standards and OCISO requirements. Only contractor personnel conducting the study will have study-specific access to the temporary information that could potentially identify a respondent (i.e., telephone number and address). While under review, data will reside in directories to which only the project director can grant access. All computers reside in a building with electronic security and are ID- and password-protected (Attachment G).

Informed Consent

The surveys will use a graduated consent procedure. When initially introducing the study, the interviewer will describe it as a survey on “health and injuries”. Once a respondent is selected and reaches the stalking section of the survey, he/she will be given more information about the content of the remaining questions (“physical injuries, harassing behaviors, and unwanted sexual activity”). Prior to each of the remaining sections, additional descriptions are given that are appropriate to the content of the items (e.g., use of explicit language). Respondents will be encouraged to take the survey in a private location. They will also be informed that they can terminate the interview at any time.

A.11. Institutional Review Board (IRB) and Justification for Sensitive Questions

IRB Approval

CDC’s IRB has deferred to the contractor’s IRB. The IRB approval obtained through the study contractor is presented in Attachment E. IRB approval is updated annually; the most current expiration date is 2/5/2020. As approved in the study protocol, CDC will have no contact with study participants and no access to PII.

Justification for Sensitive Questions

Because very few people report SV, IPV, or stalking to officials and very few related injuries are reported to health care providers, survey data are the best source of information on the prevalence of SV, IPV, and stalking. Respondent safety must remain the primary concern for any data collection asking about violence, particularly SV, IPV, and stalking. Measures to protect respondent safety have been well described (Sullivan & Cain, 2004) and are addressed in the interviewer training.

Attachments F.1-F.3 contain the full NISVS survey instruments. Questions included in the current NISVS are closely modeled after questions that were used in the NVAWS, earlier NISVS, and other studies regarding SV, IPV, and stalking.

A.12. Estimates of Annualized Burden Hours and Costs

Estimates of Annualized Burden Hours.

Previously Approved Burden and Costs

In February 2018, OMB approved the plans for the 2018 NISVS data collection, along with plans for developmental testing of new data collection procedures. The currently approved burden for NISVS survey administration is therefore 22,700 hours. This total includes 10,200 hours for a 3-minute screening of 204,000 households during the 2018-19 survey administration. It also includes 12,500 hours for 30,000 participating households completing a 25-minute survey.

Additionally, we calculated burden for developmental testing related to NISVS. This estimate included the following components:

- as many as 5 focus groups of 10 people each for 90 minutes (75 hours);
- up to 3 waves of cognitive testing with up to 50 respondents per wave for 90 minutes each (225 hours);
- 5,000 web survey respondents at 25 minutes each (2,083 hours);
- 200 phone surveys at 25 minutes each (83 hours); and
- 300 text-back questions at 10 minutes each (50 hours).

The total was 2,516 burden hours.
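The burden figures in the two preceding paragraphs follow one rule: hours = respondents × minutes per response ÷ 60. Each component is rounded to whole hours before summing, which is why the developmental testing total is 2,516 rather than the unrounded sum of about 2,516.7. A short Python check, assuming that rounding convention (the helper name `burden_hours` is illustrative):

```python
from fractions import Fraction


def burden_hours(respondents: int, minutes: int) -> int:
    """Burden in whole hours: respondents x minutes per response / 60, rounded."""
    return round(Fraction(respondents * minutes, 60))


# Previously approved survey administration
screening = burden_hours(204_000, 3)   # 3-minute screener
survey = burden_hours(30_000, 25)      # 25-minute survey
approved_total = screening + survey

# Developmental testing components: (people, minutes each), as listed in the text
developmental = {
    "focus groups (5 groups x 10 people)": (5 * 10, 90),
    "cognitive testing (3 waves x 50 people)": (3 * 50, 90),
    "web survey respondents": (5_000, 25),
    "phone survey respondents": (200, 25),
    "text-back questions": (300, 10),
}
dev_hours = {name: burden_hours(n, m) for name, (n, m) in developmental.items()}
dev_people = sum(n for n, _ in developmental.values())

print(screening, survey, approved_total)    # 10200 12500 22700
print(sum(dev_hours.values()), dev_people)  # 2516 5700
```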

For the general population, the annual burden cost was estimated at $665,810 for 36,000 completed interviews. This cost was derived from three components: 204,000 as the expected number of non-participating households screened, an additional 30,000 eligible households completing the survey, and an additional 5,700 people engaging in developmental testing related to NISVS.

During Phase 1 of the current contract, the contractor conducted a total of 120 cognitive interviews in July-October 2019. Each respondent completed a 60-minute cognitive test of the survey, so the total burden for the cognitive testing phase was 120 hours. The cognitive testing report is presented in Attachment M.

Current Request:

Table 3 describes respondent burden for the current data collection over a one-year period. In the experimentation and feasibility testing phase (Phase 2), 7,371 respondents will complete the 3-minute screener (Attachments F.2A and F.2B). In the same phase, 4,752 respondents will complete a 25- to 40-minute survey (depending on the mode). This results in 368 burden hours for screening and 2,599 burden hours for survey completion (2,967 total burden hours).

For Phase 3 (pilot testing), 240 respondents will complete the screener (12 burden hours). In addition, 200 respondents will complete a 25- to 40-minute survey (depending on the mode), resulting in 101 annualized burden hours (113 total burden hours for pilot screening and questionnaire). Total burden for Phases 2 and 3 is 3,080 hours per year.

Table 3. Estimated Annualized Burden Hours for 2019-2020 Data Collection

Type of Respondent | Mode | Form Name | Number of Respondents | Number of Responses per Respondent | Average Burden per Response (in hours) | Total Burden (in hours)

Phase 2: Experimentation and Feasibility Testing

Individuals and Households | RDD (CATI) | Screener (Att. F.1) | 3,412 | 1 | 3/60 | 171

Individuals and Households | ABS, web | Screener (Att. F.2A) | 1,188 | 1 | 3/60 | 59

Individuals and Households | ABS, paper – Roster method | Screener (Att. F.4A) | 1,385 | 1 | 3/60 | 69

Individuals and Households | ABS, paper – YMOF method | Screener (Att. F.4B) | 1,386 | 1 | 3/60 | 69

Individuals and Households | RDD (CATI) | Questionnaire (Att. F.1) | 2,375 | 1 | 40/60 | 1,583

Individuals and Households | ABS, web | Questionnaire (Att. F.2A) | 1,520 | 1 | 25/60 | 633

Individuals and Households | ABS, paper | Questionnaire (Att. F.3) | 752 | 1 | 25/60 | 313

Individuals and Households | ABS, in-bound CATI | Questionnaire (Att. F.1) | 105 | 1 | 40/60 | 70

Phase 3: Pilot Testing

Individuals and Households | RDD (CATI) | Screener (Att. F.1) | 80 | 1 | 3/60 | 4

Individuals and Households | ABS, web | Screener (Att. F.2A) | 80 | 1 | 3/60 | 4

Individuals and Households | ABS, paper – Roster method | Screener (Att. F.4A) | 40 | 1 | 3/60 | 2

Individuals and Households | ABS, paper – YMOF method | Screener (Att. F.4B) | 40 | 1 | 3/60 | 2

Individuals and Households | RDD (CATI) | Questionnaire (Att. F.1) | 66 | 1 | 40/60 | 44

Individuals and Households | ABS, web | Questionnaire (Att. F.2A) | 86 | 1 | 25/60 | 36

Individuals and Households | ABS, paper | Questionnaire (Att. F.3) | 43 | 1 | 25/60 | 18

Individuals and Households | ABS, in-bound CATI | Questionnaire (Att. F.1) | 5 | 1 | 40/60 | 3

Total Annualized Burden Hours | | | | | | 3,080
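The phase and grand totals in Table 3 can be reproduced from the respondent counts and per-response times. The sketch below assumes, as the table appears to, that each row's hours are rounded to the nearest whole hour before summing. The data layout and the `row_hours` helper are illustrative only.

```python
from fractions import Fraction

# (phase, mode, form, respondents, minutes per response), transcribed from Table 3
ROWS = [
    ("Phase 2", "RDD (CATI)", "Screener", 3412, 3),
    ("Phase 2", "ABS, web", "Screener", 1188, 3),
    ("Phase 2", "ABS, paper (roster)", "Screener", 1385, 3),
    ("Phase 2", "ABS, paper (YMOF)", "Screener", 1386, 3),
    ("Phase 2", "RDD (CATI)", "Questionnaire", 2375, 40),
    ("Phase 2", "ABS, web", "Questionnaire", 1520, 25),
    ("Phase 2", "ABS, paper", "Questionnaire", 752, 25),
    ("Phase 2", "ABS, in-bound CATI", "Questionnaire", 105, 40),
    ("Phase 3", "RDD (CATI)", "Screener", 80, 3),
    ("Phase 3", "ABS, web", "Screener", 80, 3),
    ("Phase 3", "ABS, paper (roster)", "Screener", 40, 3),
    ("Phase 3", "ABS, paper (YMOF)", "Screener", 40, 3),
    ("Phase 3", "RDD (CATI)", "Questionnaire", 66, 40),
    ("Phase 3", "ABS, web", "Questionnaire", 86, 25),
    ("Phase 3", "ABS, paper", "Questionnaire", 43, 25),
    ("Phase 3", "ABS, in-bound CATI", "Questionnaire", 5, 40),
]


def row_hours(respondents: int, minutes: int) -> int:
    """Hours for one table row, rounded to the nearest whole hour."""
    return round(Fraction(respondents * minutes, 60))


# Accumulate (respondents, hours) for each phase and form type
totals = {}
for phase, _mode, form, n, minutes in ROWS:
    count, hours = totals.get((phase, form), (0, 0))
    totals[(phase, form)] = (count + n, hours + row_hours(n, minutes))

for (phase, form), (count, hours) in sorted(totals.items()):
    print(f"{phase} {form}: {count:,} respondents, {hours:,} hours")

grand_total = sum(hours for _, hours in totals.values())
print(f"Total annualized burden hours: {grand_total:,}")
```

The per-phase sums match the text (368 + 2,599 = 2,967 hours for Phase 2; 12 + 101 = 113 hours for Phase 3).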

Estimates of Annualized Burden Cost

For the data collection to be completed in 2019-2020, it is estimated that the annual burden cost will be $81,417 (Table 4). For the feasibility phase, this annualized cost was derived by using 7,371 as the expected number of households screened and 4,752 completed interviews. This results in costs of $9,737 for screening and $68,692 for survey completion, for a total cost of $78,429 for the feasibility phase. For pilot testing, the annualized cost was derived by using 240 as the expected number of households screened and 200 completed interviews. This results in costs of $318 for screening and $2,670 for survey completion, for a total cost of $2,988 for pilot testing.

The estimates of individual annualized costs are based on the number of respondents interviewed and the time required of individuals who were reached by telephone and agreed to the one-time interview (Table 4). The average hourly wage was obtained from 2017 U.S. Bureau of Labor Statistics data. For the RDD survey, it takes up to 3 minutes to determine whether a household is eligible and to complete the verbal informed consent. For those who agree to participate, the total time required is approximately 25-40 minutes, on average, including screening and verbal informed consent. Average hourly earnings for employees in private, non-farm positions were $26.42 (U.S. Department of Labor, 2017).

Table 4. Estimated Annualized Burden Costs for 2019-2020 Data Collection

Type of Respondent | Mode | Form Name | Number of Respondents | Number of Responses per Respondent | Average Burden per Response (in hours) | Average Hourly Wage | Total Cost

Phase 2: Experimentation and Feasibility Testing

Individuals and Households | RDD (CATI) | Screener (Att. F.1) | 3,412 | 1 | 3/60 | $26.42 | $4,507

Individuals and Households | ABS, web | Screener (Att. F.2A) | 1,188 | 1 | 3/60 | $26.42 | $1,569

Individuals and Households | ABS, paper – Roster method | Screener (Att. F.4A) | 1,385 | 1 | 3/60 | $26.42 | $1,830

Individuals and Households | ABS, paper – YMOF method | Screener (Att. F.4B) | 1,386 | 1 | 3/60 | $26.42 | $1,831

Individuals and Households | RDD (CATI) | Questionnaire (Att. F.1) | 2,375 | 1 | 40/60 | $26.42 | $41,832

Individuals and Households | ABS, web | Questionnaire (Att. F.2A) | 1,520 | 1 | 25/60 | $26.42 | $16,733

Individuals and Households | ABS, paper | Questionnaire (Att. F.3) | 752 | 1 | 25/60 | $26.42 | $8,278

Individuals and Households | ABS, in-bound CATI | Questionnaire (Att. F.1) | 105 | 1 | 40/60 | $26.42 | $1,849

Phase 3: Pilot Testing

Individuals and Households | RDD (CATI) | Screener (Att. F.1) | 80 | 1 | 3/60 | $26.42 | $106

Individuals and Households | ABS, web | Screener (Att. F.2A) | 80 | 1 | 3/60 | $26.42 | $106

Individuals and Households | ABS, paper – Roster method | Screener (Att. F.4A) | 40 | 1 | 3/60 | $26.42 | $53

Individuals and Households | ABS, paper – YMOF method | Screener (Att. F.4B) | 40 | 1 | 3/60 | $26.42 | $53

Individuals and Households | RDD (CATI) | Questionnaire (Att. F.1) | 66 | 1 | 40/60 | $26.42 | $1,162

Individuals and Households | ABS, web | Questionnaire (Att. F.2A) | 86 | 1 | 25/60 | $26.42 | $947

Individuals and Households | ABS, paper | Questionnaire (Att. F.3) | 43 | 1 | 25/60 | $26.42 | $473

Individuals and Households | ABS, in-bound CATI | Questionnaire (Att. F.1) | 5 | 1 | 40/60 | $26.42 | $88

Total Annualized Burden Cost | | | | | | | $81,417
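Table 4 applies the $26.42 average hourly wage to each row. Matching the published dollar amounts requires multiplying the unrounded hours by the wage and then rounding to whole dollars, rather than using the rounded hours shown in Table 3. For example, 3,412 × 3/60 × $26.42 = $4,507.25, which rounds to $4,507, whereas 171 rounded hours × $26.42 would give $4,517.82. A sketch under that assumption, with an illustrative data layout and a hypothetical `row_cost` helper:

```python
from fractions import Fraction

WAGE = Fraction("26.42")  # average hourly wage applied in Table 4

# (phase, mode, form, respondents, minutes per response), transcribed from Table 4
ROWS = [
    ("Phase 2", "RDD (CATI)", "Screener", 3412, 3),
    ("Phase 2", "ABS, web", "Screener", 1188, 3),
    ("Phase 2", "ABS, paper (roster)", "Screener", 1385, 3),
    ("Phase 2", "ABS, paper (YMOF)", "Screener", 1386, 3),
    ("Phase 2", "RDD (CATI)", "Questionnaire", 2375, 40),
    ("Phase 2", "ABS, web", "Questionnaire", 1520, 25),
    ("Phase 2", "ABS, paper", "Questionnaire", 752, 25),
    ("Phase 2", "ABS, in-bound CATI", "Questionnaire", 105, 40),
    ("Phase 3", "RDD (CATI)", "Screener", 80, 3),
    ("Phase 3", "ABS, web", "Screener", 80, 3),
    ("Phase 3", "ABS, paper (roster)", "Screener", 40, 3),
    ("Phase 3", "ABS, paper (YMOF)", "Screener", 40, 3),
    ("Phase 3", "RDD (CATI)", "Questionnaire", 66, 40),
    ("Phase 3", "ABS, web", "Questionnaire", 86, 25),
    ("Phase 3", "ABS, paper", "Questionnaire", 43, 25),
    ("Phase 3", "ABS, in-bound CATI", "Questionnaire", 5, 40),
]


def row_cost(respondents: int, minutes: int) -> int:
    """Unrounded hours x wage, rounded to whole dollars (no exact ties occur here)."""
    hours = Fraction(respondents * minutes, 60)
    return round(hours * WAGE)


# Sum row costs by (phase, form), then by phase
costs = {}
for phase, _mode, form, n, minutes in ROWS:
    costs[(phase, form)] = costs.get((phase, form), 0) + row_cost(n, minutes)

phase_totals = {}
for (phase, _form), cost in costs.items():
    phase_totals[phase] = phase_totals.get(phase, 0) + cost

total_cost = sum(phase_totals.values())
print(costs)
print(phase_totals)
print(f"Total annualized burden cost: ${total_cost:,}")
```

The results match the text: $9,737 + $68,692 = $78,429 for the feasibility phase, $318 + $2,670 = $2,988 for pilot testing, and $81,417 overall.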

New/Revised Data Instruments

A revised version of the CATI survey is included in Attachment F.1. New versions of the web-based and paper (i.e., mail-in) survey instruments are included in Attachments F.2A and F.3, respectively. A crosswalk of survey revisions is presented in Attachment K.

A.13. Estimates of Other Total Annual Cost Burden to Respondents or Record Keepers

This data collection activity does not include any other annual cost burden to respondents, nor to any record keepers.

A.14. Annualized Cost to the Government

The contract to conduct the study was awarded to Westat through competitive bid in September of 2018. The total cost for the 2019-2020 data collection is $3,725,698, including $2,845,988 in contractor costs and $879,710 in annual costs incurred directly by the federal government (Table 5).

Costs for this study include personnel costs for the following activities:

- designing the study
- developing, programming, and testing the survey instrument
- drawing the sample
- training the recruiters/interviewers
- collecting and analyzing the data
- reporting the study results

Government costs consist of personnel costs for federal staff involved in study oversight, design, and analysis, as detailed in Table 5.

Table 5. Estimated Cost to the Government for 2019-2020 Data Collection

| Type of Cost | Description of Services | Annual Cost |
|---|---|---|
| Government Statistician (2 FTEs) | • Project oversight, study and survey design, sample selection, data analysis, and consultation • Review of and input into all statistical aspects of study design and conduct, including but not limited to sample selection, weighting, total survey error, nonresponse bias, and response rate • Survey instrument testing, data analysis and consultation, and oversight of the QA process | $296,283 |
| Government Computer Programmer (0.5 FTE) | Process data; produce code for complex quality assurance checks | $73,948 |
| Government Data Manager (0.5 FTE) | • Data storage, documentation, and quality assurance checking and reporting • Suggests timetables for the data collection and analysis plan • Collaborates with investigators to write plans for the design of data collection and analysis • Develops plans to ensure quality control of data collection and analysis processes | $37,607 |
| Government Behavioral Scientist (1.6 FTEs) | • Project oversight, study and survey design, sample selection, data analysis, and consultation • Discusses different data collection methods and statistical approaches • Applies theories of psychology, sociology, and other behavioral sciences to the development of data collection instruments and methodological approaches • Designs tools and materials for data collection • Communicates research findings to professional audiences and agency staff using appropriate methods (e.g., manuscripts, peer-reviewed journals, conferences) | $259,590 |
| Government Epidemiologist (0.9 FTE) | • Describes sources, quality, and limitations of surveillance data • Defines and monitors surveillance system parameters (e.g., timeliness, frequency) • Defines the functional requirements of the supporting information system • Tests data collection, data storage, and analytical methods • Evaluates surveillance systems using national guidance and methods • Recommends and implements modifications to surveillance systems based on evaluation results • Communicates research findings to professional audiences and agency staff using appropriate methods (e.g., reports, manuscripts, peer-reviewed journals, conferences) | $112,812 |
| Government Public Health Analyst (0.6 FTE) | • Project management, including oversight of budget and administration • Applies knowledge of the acquisition and grants lifecycle • Manages and monitors the implementation of interagency agreements and contracts • Applies methods and procedures for funding acquisitions | $99,470 |
| Subtotal, Government Personnel | | $879,710 |
| Contracted Personnel and Services¹ | Study design, interviewer/recruiter training, data collection and analysis | $2,845,988 |
| Total cost | | $3,725,698 |
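As a consistency check on Table 5, the six government personnel line items should sum to the stated subtotal, and adding the contractor cost should give the total cost reported in Section A.14. The following minimal sketch performs that check. The dictionary keys and the `cost_summary` function are hypothetical names used only for illustration; the dollar amounts are taken directly from Table 5.

```python
from __future__ import annotations

# Annual government personnel costs from Table 5 (USD).
GOVERNMENT_PERSONNEL = {
    "Statistician (2 FTEs)": 296_283,
    "Computer Programmer (0.5 FTE)": 73_948,
    "Data Manager (0.5 FTE)": 37_607,
    "Behavioral Scientist (1.6 FTEs)": 259_590,
    "Epidemiologist (0.9 FTE)": 112_812,
    "Public Health Analyst (0.6 FTE)": 99_470,
}

# Contracted personnel and services (USD), from Table 5.
CONTRACTOR_COST = 2_845_988


def cost_summary(personnel: dict[str, int], contractor: int) -> tuple[int, int]:
    """Return (government personnel subtotal, total cost) in dollars."""
    subtotal = sum(personnel.values())
    return subtotal, subtotal + contractor


if __name__ == "__main__":
    subtotal, total = cost_summary(GOVERNMENT_PERSONNEL, CONTRACTOR_COST)
    print(f"Government personnel subtotal: ${subtotal:,}")
    print(f"Total cost: ${total:,}")
```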

A.15. Explanation for Program Changes or Adjustments

CDC requests a revision to conduct Phases 2 and 3 of the NISVS developmental activities (Phase 2: experimentation and feasibility testing; Phase 3: pilot testing).

CDC requests a revision to complete the 2019-2020 data collection cycle using the current survey instrument with the changes described in Section A.1.

A.16. Plans for Tabulation and Publication, and Project Time Schedule

The schedule for data collection, analysis, and reporting is shown in Table 6 below. Data from each phase of data collection will be stored in password-protected files. Results from the experiments and feasibility studies will be prepared for publication and disseminated to stakeholders and other federal agencies.

Table 6. Data Collection and Report Generation Time Schedule

| Data Collection Period | Activities | Time Schedule |
|---|---|---|
| Phase 2: Experimentation and feasibility testing | Initiate data collection using CATI, paper, and web surveys (Att. F) | Beginning 3/30/2020 (after OMB approval of this ICR) |
| | Clean, edit, and analyze Phase 2 dataset | Beginning 6/9/2020 |
| | Complete report documenting results and recommendations related to experiments and feasibility testing | To be completed 8/24/2020 |
| Phase 3: Pilot testing | Data collection | Beginning 1/18/2021 (after OMB approval of a subsequent non-substantive change request) |
| | Clean, edit, and analyze Phase 3 dataset | Beginning 4/5/2021 |
| | Prepare report documenting results and recommendations related to pilot testing | Beginning 5/2/2021 |
| | Complete report documenting pilot testing results | To be completed 5/31/2021 |
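The planned milestones in Table 6 can be converted into elapsed calendar days. This gives a rough sense of how long each phase runs from the start of data collection. The sketch below computes two intervals for each phase: from the start of data collection to the start of data cleaning and analysis, and from the start of data collection to the final report.

Two cautions apply. First, these are planned dates that depend on OMB approval. Second, the sketch assumes that data collection runs until cleaning begins, although the two activities may overlap in practice. The names `MILESTONES` and `phase_spans` are hypothetical.

```python
from datetime import date

# Planned milestone dates from Table 6.
MILESTONES = {
    "Phase 2": {"start": date(2020, 3, 30), "analysis": date(2020, 6, 9),
                "report": date(2020, 8, 24)},
    "Phase 3": {"start": date(2021, 1, 18), "analysis": date(2021, 4, 5),
                "report": date(2021, 5, 31)},
}


def phase_spans(milestones: dict) -> tuple[int, int]:
    """Days from start of data collection to (start of analysis, final report)."""
    start = milestones["start"]
    return (milestones["analysis"] - start).days, (milestones["report"] - start).days


if __name__ == "__main__":
    for phase, m in MILESTONES.items():
        to_analysis, to_report = phase_spans(m)
        print(f"{phase}: {to_analysis} days to analysis, {to_report} days to final report")
```

Under these dates, the pilot phase spans 133 days (19 weeks) from the start of data collection to the completed report.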

The pilot phase will be designed based on recommendations from the feasibility phase and will replicate the methods implemented in that phase with a smaller sample. Its goal is to field the survey, over a short period, using the features anticipated for the full-scale NISVS collection (to be conducted under a future OMB submission). The plan is to collect 200 completed surveys using the recommended design.

A.17. Reason(s) Display of OMB Expiration Date is Inappropriate

Display of the OMB expiration date is not inappropriate, and no exception is requested.

A.18. Exceptions to Certification for Paperwork Reduction Act Submissions

There are no exceptions to the certification.

REFERENCES

Basile KC, Langton L, & Gilbert LK. (2018). Sexual Violence: United States Health and Justice Measures of Sexual Victimization. Retrieved October 29, 2019, from https://www.cdc.gov/violenceprevention/sexualviolence/sexualvictimization.html

Biemer PP, Murphy J, Zimmer S, Berry C, Deng G, & Lewis K. (2017). Using bonus monetary incentives to encourage web response in mixed-mode household surveys. Journal of Survey Statistics and Methodology, 6, 240–261.

Cantor D, Han D, & Sigman R. (2008). Pilot of a Mail Survey for the Health Information National Trends Survey. Annual Meeting of the American Association for Public Opinion Research, New Orleans, LA.

Centers for Disease Control and Prevention (CDC). (2000). Building data systems for monitoring and responding to violence against women: Recommendations from a workshop. MMWR, 49(RR-11).

Healthy People 2020 [internet]. Washington, DC: U.S. Department of Health and Human Services, Office of Disease Prevention and Health Promotion. [Accessed June 20, 2017]. Available at: https://www.healthypeople.gov/2020/topics-objectives/topic/injury-and-violence-prevention

Link MW, Battaglia MP, Frankel MR, Osborn L, & Mokdad AH. (2008). A Comparison of Address-Based Sampling (ABS) versus Random-Digit Dialing (RDD) for General Population Surveys. Public Opinion Quarterly, 72(1), 6–27.

Matthews B, Davis M, Tancreto J, Zelenak MF, & Ruiter M. (2012). 2011 American Community Survey Internet Tests: Results from Second Test in November 2011. American Community Survey Research and Evaluation Program, #ACS12-RER-21, May 14, 2012. https://www.census.gov/content/census/en/library/working-papers/2012/acs/2012_Matthews_01.html

Medway R, & Fulton J. (2012). When More Gets You Less: A Meta-Analysis of the Effect of Concurrent Web Options on Mail Survey Response Rates. Public Opinion Quarterly, 76, 733–746.

Mercer A, Caporaso A, Cantor D, & Townsend R. (2015). How much gets you how much? Monetary incentives and response rates in household surveys. Public Opinion Quarterly, 79(1), 105–129.

Messer BL & Dillman DA. (2011). Surveying the general public over the internet using address-based sampling and mail contact procedures. Public Opinion Quarterly, 75(3), 429–457.

Millar MM & Dillman DA. (2011). Improving response to web and mixed-mode surveys. Public Opinion Quarterly, 75(2), 249–269.

Montaquila JM, Brick JM, Williams D, Kim K, & Han D. (2013). A Study of Two-Phase Mail Survey Data Collection Methods. Journal of Survey Statistics and Methodology, 1, 66–87.

National Research Council. (2014). Estimating the incidence of rape and sexual assault. Panel on Measuring Rape and Sexual Assault in Bureau of Justice Statistics Household Surveys, C. Kruttschnitt, W.D. Kalsbeek, and C.C. House, Editors. Committee on National Statistics, Division of Behavioral and Social Sciences and Education. Washington, DC: The National Academies Press.

Sullivan CM & Cain D. (2004). Ethical and safety considerations when obtaining information from or about battered women for research purposes. Journal of Interpersonal Violence, 19, 603–618.

Tjaden P & Thoennes N. (1998). Prevalence, incidence, and consequences of violence against women: Findings from the National Violence Against Women Survey. (NCJ Publication No. 172837). U.S. Department of Justice, Office of Justice Programs, Washington, DC.

U.S. Department of Labor, Bureau of Labor Statistics. (2017). Table B-8. Average hourly and weekly earnings of production and nonsupervisory employees on private nonfarm payrolls by industry sector, seasonally adjusted. Retrieved from https://www.bls.gov/news.release/empsit.t24.htm

1 Despite these results, ACS still uses a sequential design.

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| Author | Sharon Smith |


| File Created | 2021-05-27 |
