SPPC-II Dem Supporting Statement Part B Revised after OMB Comments - 12.16.20 clean with highlights


Safety Program in Perinatal Care (SPPC)-II Demonstration Project

OMB: 0935-0246

SUPPORTING STATEMENT

Part B

Safety Program in Perinatal Care (SPPC)-II Demonstration Project

Version #2: December 16, 2020

Agency for Healthcare Research and Quality (AHRQ)

Table of contents

B. Collections of Information Employing Statistical Methods

1. Respondent universe and sampling methods

2. Information Collection Procedures

3. Methods to Maximize Response Rates

4. Tests of Procedures

5. Statistical Consultant

B. Collections of Information Employing Statistical Methods

1. Respondent universe and sampling methods

All study respondents for the SPPC-II Demonstration Project are clinical staff working in obstetric units in birthing hospitals that voluntarily enrolled in perinatal quality collaboratives (PQCs) in Oklahoma (48 of 48 birthing hospitals enrolled in the state PQC) and Texas (210 of 238 hospitals enrolled in the state PQC). Each of these units has an assigned clinical lead for activities associated with implementation of patient safety bundles. These bundles are implemented through the state PQC model with technical assistance from the national Alliance for Innovation on Maternal Health (AIM) program; clinical leads for bundle implementation are therefore designated “AIM Team Leads”. These individuals are responsible not only for coordinating the implementation of the AIM bundles but also for the mandatory reporting of related data to the AIM program. For these reasons, they are our target population for the in-person training workshops, workshop evaluation forms, baseline hospital surveys, and qualitative interviews. Based on PQC experience in several US states, AIM Team Leads are generally very responsive (personal communications with AIM program leadership and JHU experience participating in the Maryland PQC), especially at the beginning of a new AIM activity and when the activity involves receiving support with bundle implementation (i.e., training in our case). AIM Team Leads are also expected to be the most knowledgeable about AIM bundle implementation, so no other category of respondents is as well placed to provide information on hospitals’ baseline characteristics. We aim to work in ~8 hospitals willing to participate in the SPPC-II program. One AIM Team Lead and up to 4 frontline staff in each hospital will be selected for qualitative phone interviews in the summer/fall of 2021; the actual number will depend on the time needed to reach saturation on salient themes.
Eight focus group discussions, one in each of the ~8 hospitals, will be conducted with approximately 1 AIM Team Lead and 4 frontline staff per hospital. JHU will aim to interview staff from hospitals offering all levels of maternity care and from both teaching and non-teaching hospitals, including some hospitals with very high (>3,000) and very low (<500) annual deliveries. Discussions with state PQC leadership and Demonstration Project Stakeholder Panel members will further inform selection of respondents for the qualitative interviews.

We estimate that, on average, there are about 60 full-time, hospital-employed clinical staff in obstetric units in Demonstration Project hospitals. Given project objectives, all of them will be eligible to receive online training with the SPPC-II e-modules on teamwork and communication. They therefore represent our target population for the baseline and implementation surveys with clinical staff, and for assessing teamwork, communication, and safety culture outcomes at baseline (i.e., pre-training) and at 6, 12, and 18 months of program implementation (i.e., post-training). Expected baseline levels for teamwork, communication, and safety culture outcomes are in line with the published literature. For the key composite outcome measure derived from the 16-item validated Mayo High Performance Teamwork Scale, we expect a baseline level similar to that found in the validation study by Malec et al.,20 i.e., a pre-training mean of 20-22 on a scale whose score range is 0-32. This level would reflect mostly “inconsistently” (score of 1) rather than “never” (score of 0) or “consistently” (score of 2) reports regarding practices corresponding to the 16 scale dimensions of teamwork. For the safety culture grades, we expect a baseline level similar to that reported for the most recent Hospital Survey on Patient Safety Culture:7 on average, across reporting hospitals, 33% and 43% of respondents gave their unit a patient safety grade of “excellent” and “very good”, respectively.
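The scoring arithmetic described above can be sketched as follows. This is a minimal illustration assuming each of the 16 Mayo items is coded 0 (“never”), 1 (“inconsistently”), or 2 (“consistently”) and summed, and that each respondent’s unit patient safety grade is stored as a text label; the function names and labels are hypothetical and not taken from the survey instruments.

```python
from collections import Counter

N_ITEMS = 16  # items in the Mayo High Performance Teamwork Scale
# Hypothetical response labels mapped to the 0/1/2 item codes described above
ITEM_CODES = {"never": 0, "inconsistently": 1, "consistently": 2}


def mayo_total(responses):
    """Sum 16 item responses into a total score between 0 and 32."""
    if len(responses) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} responses, got {len(responses)}")
    return sum(ITEM_CODES[r] for r in responses)


def grade_shares(grades):
    """Share of respondents giving each patient safety grade."""
    counts = Counter(grades)
    total = len(grades)
    return {grade: n / total for grade, n in counts.items()}


# A respondent reporting 12 practices "inconsistently" and 4 "consistently"
print(mayo_total(["inconsistently"] * 12 + ["consistently"] * 4))
print(grade_shares(["excellent", "very good", "very good", "good"]))
```

A respondent who answers “inconsistently” on 12 items and “consistently” on the other 4 scores 12 + 8 = 20, the lower end of the expected baseline range; the hospital-level 33% and 43% grade figures are shares computed in the same way as `grade_shares`.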


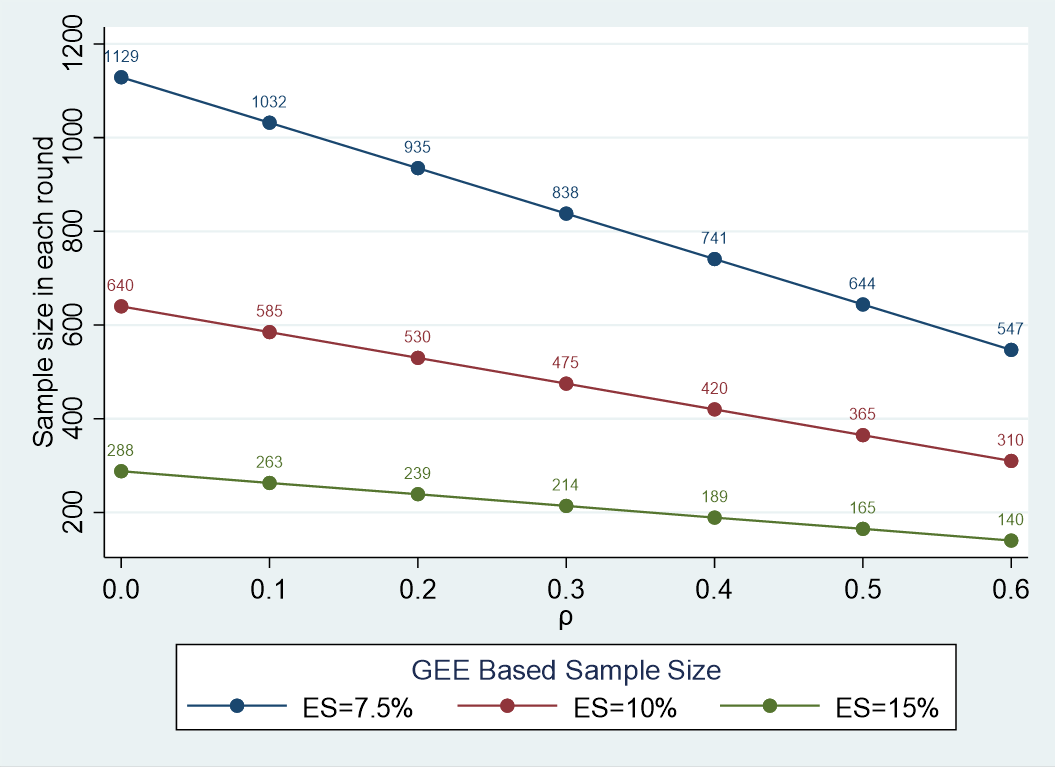

Sample size requirements for these four surveys are shown graphically in Figure 1 and discussed here. Sample sizes needed to detect conservative effect sizes of 7.5%, 10%, and 15% between any two of the four assessment time points were calculated using a GEE approach for pre- and post-intervention experiments with dropout.18 The GEE approach is used because survey respondents are selected from the same hospitals at all four time points and responses regarding teamwork and communication are expected to be correlated within hospitals. Calculations account for a 25% decline in response rate between the baseline and 30-month post-implementation surveys and for multiple comparisons across the four data collection time points. Assuming hospital-level correlations of around 0.3, we would need to survey 475 to 838 clinical staff to detect a 10% to 7.5% change, respectively, in teamwork and communication and safety culture outcomes between baseline and the 30-month assessment, using a two-sided type I error of 0.01 and 0.80 power; larger effect sizes require smaller samples.

Figure 1. Sample size requirements for each round of clinical staff surveys

Note: ES, effect size; , hospital-level correlation.
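The two adjustments named above, clustering of respondents within hospitals and the expected 25% decline in response, can be illustrated with a simplified calculation. This sketch is not the Zhang et al. GEE method cited above and is not expected to reproduce the 475-838 figures; it assumes a standard normal-approximation sample size for comparing two independent proportions, inflates it by the common design effect 1 + (m - 1)ρ for clusters of m respondents with intraclass correlation ρ, and divides by the share of respondents retained. All function names and the example proportions are hypothetical.

```python
import math
from statistics import NormalDist


def n_two_proportions(p1, p2, alpha=0.01, power=0.80):
    """Unadjusted respondents per time point to detect p1 vs. p2.

    Two-sided test, normal approximation, independent samples.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for two-sided alpha
    z_beta = NormalDist().inv_cdf(power)           # standard normal quantile for target power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return (z_alpha + z_beta) ** 2 * variance / (p1 - p2) ** 2


def design_effect(cluster_size, icc):
    """Variance inflation when respondents are clustered within hospitals."""
    return 1 + (cluster_size - 1) * icc


def adjusted_n(p1, p2, cluster_size, icc, attrition, alpha=0.01, power=0.80):
    """Inflate for clustering, then for the expected decline in response; round up."""
    base = n_two_proportions(p1, p2, alpha, power)
    return math.ceil(base * design_effect(cluster_size, icc) / (1 - attrition))


# Hypothetical example: detect a rise from 33% to 43% of staff grading the unit
# "excellent", with 3 respondents per hospital, correlation 0.3, and 25% attrition.
print(adjusted_n(0.33, 0.43, cluster_size=3, icc=0.3, attrition=0.25))
```

Because the unadjusted n grows roughly with the inverse square of the detectable difference, shrinking the target change from 10 to 7.5 percentage points raises the requirement by a factor of about (10/7.5)², which is why smaller effect sizes need markedly larger samples.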

Given AIM’s PQC-based model of implementing the safety bundles, comprehensive lists of clinical staff in each of the 258 hospitals will be developed and used to randomly and systematically select 3 staff at each hospital for each survey. Staff will be asked to consent to participate in our study and to complete the surveys online or on paper. Table 1 summarizes the expected eligible sample sizes, response rates, and numbers of respondents for each data collection activity in the Demonstration Project.

Table 1. Sample size and response rates for SPPC-II Demonstration Project

| Data collection component | Sample size | Response rate (%) | Number of respondents/completed forms |
|---|---|---|---|
| Evaluation form for training of AIM Team Leads | 258 | 75.00 | 193 |
| Self-administered baseline surveys with AIM Team Leads | 258 | 95.00 | 245 |
| Self-administered baseline surveys with clinical staff | 774 | 85.00 | 697 |
| Qualitative semi-structured interviews with AIM Team Leads | 8 | 100.00 | 8 |
| Qualitative semi-structured interviews with frontline staff | 32 | 100.00 | 32 |
| Focus group discussions with AIM Team Leads and frontline staff | 40 | 100.00 | 40 |
| Self-administered implementation surveys with clinical staff at 6 months | 774 | 80.00 | 658 |
| Self-administered implementation surveys with clinical staff at 18 months | 774 | 77.50 | 619 |
| Self-administered implementation surveys with clinical staff at 30 months | 774 | 75.00 | 581 |

For examining changes in key maternal health outcomes, we will analyze the data reported to the AIM program separately for each state, given differences in PQC experience and in the specific AIM bundles being implemented in OK (an “older” AIM state, since 2015) versus TX (a “newer” AIM state, since 2018). For both states, analyses will employ interrupted time series (ITS), considered the strongest quasi-experimental research design for evaluating the impact of health interventions when randomization is not feasible.19 Statistical power increases with the number of time points included in ITS analyses and with larger effect sizes.19 For our study, the underlying standard deviation of the data (i.e., expected pre-post outcome changes) is small given the short, 30-month timeline of the Demonstration Project. With outcome data reported quarterly to AIM, we will have about 20 pre-intervention data points in OK and 5 in TX, and 10 post-intervention data points in both states. These data will provide adequate power to detect effects in balanced data series.
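A minimal segmented-regression sketch of the ITS design is shown below. It assumes quarterly outcome values, ordinary least squares, and the common specification Y_t = β0 + β1·t + β2·D_t + β3·(t - T0)·D_t, where D_t marks post-intervention quarters and T0 is the first post-intervention quarter; it ignores autocorrelation and seasonality, which a full analysis would need to address. The function name and the simulated series are hypothetical; 20 pre- and 10 post-intervention quarters mirror the OK series described above.

```python
import numpy as np


def segmented_regression(y, n_pre):
    """OLS fit of Y_t = b0 + b1*t + b2*D_t + b3*(t - n_pre)*D_t.

    y: quarterly outcome values in time order; the first n_pre are pre-intervention.
    Returns (b0 baseline level, b1 pre-intervention trend,
             b2 immediate level change, b3 change in trend).
    """
    y = np.asarray(y, dtype=float)
    t = np.arange(len(y), dtype=float)                  # quarter index 0, 1, 2, ...
    post = (t >= n_pre).astype(float)                   # D_t: 1 in post-intervention quarters
    time_since = np.where(post == 1.0, t - n_pre, 0.0)  # quarters since intervention start
    X = np.column_stack([np.ones_like(t), t, post, time_since])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return tuple(coef)


# Hypothetical noise-free OK-like series: 20 pre- and 10 post-intervention quarters.
t = np.arange(30)
rate = 10 + 0.1 * t + np.where(t >= 20, -1.5 - 0.2 * (t - 20), 0.0)
print(np.round(segmented_regression(rate, n_pre=20), 3))
```

With real AIM data, β2 and β3 would be the quantities of interest (immediate level change and change in quarterly trend), and the short 5-quarter TX pre-period would make the pre-intervention trend β1 less precisely estimated than in OK.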

2. Information Collection Procedures

Establish Contact with AIM Team Leads – State PQC leadership in OK and TX will inform AIM Team Leads in all 258 birthing hospitals about this Demonstration Project by email, using a brief, half-page summary developed jointly by AHRQ, AIM, JHU, and state PQC leadership. This summary will be sent with all other information about the PQC meetings scheduled for mid-September 2019 in both OK and TX. AIM Team Leads will be informed about the teamwork and communication training workshops (one in OK and five in TX) to be offered by JHU the day after the state PQC meeting and will be asked whether they would like to participate in the training workshops and the Demonstration Project. State PQC leadership will generate lists of participants, including names, email addresses, and phone numbers, which JHU will use to create the AIM Team Lead Roster (Appendix A).

JHU will subsequently send a welcome email to all those who expressed interest in the training workshop and the Demonstration Project. The email will include the following:

invitation to complete a 20-minute baseline survey either online or on paper;

information about how to access and complete this baseline survey (Attachment E) online;

a soft copy of the survey (Attachment E) and the corresponding written consent form approved by the JHU Institutional Review Board (IRB) for those who want to complete the survey on paper;

mail and email information for JHU if respondents complete the survey on paper;

a request to generate a comprehensive list of clinical staff in their unit (Appendix G) and share only staff IDs and email addresses with JHU.

AIM Team Leads Baseline Survey – As noted above, AIM Team Leads will be asked to complete the baseline survey either online or on paper before the training workshop. If they choose to complete the survey on paper, they will be given the option of bringing the completed survey to the training workshop or sending it to JHU by mail or email using the addresses provided. The online survey platform will be programmed to send reminder emails once per week during the survey administration period to those who have not completed the survey. If surveys are not completed by the time of the training workshop, a hard copy survey will be given to non-respondents at the workshop. Respondents will be offered a $10 incentive as described in Statement A. Thank-you emails will be automatically generated and sent by the online survey platform upon survey completion. This survey captures key information about hospital infrastructure, human resources, staff’s past experience with teamwork and communication trainings, any use of teamwork and communication tools and strategies by clinical staff, and experience with AIM bundle implementation.

Establish Contact with Clinical Staff for Baseline Surveys – Using the complete listing of staff IDs from each hospital’s AIM Team Lead, we will randomly select 3 clinical staff in each hospital to complete the baseline survey. Staff whose IDs are selected will be sent an email with the following information:

invitation to complete a 25-minute baseline survey either online or on paper;

information about how to access and complete this baseline survey (Attachment F) online;

a soft copy of the survey (Attachment F) and the corresponding written consent form approved by the JHU Institutional Review Board (IRB) for those who want to complete the survey on paper;

mail and email information for JHU if respondents complete the survey on paper.

Clinical Staff Baseline Survey – As noted above, 3 randomly selected clinical staff per hospital will be asked to complete the baseline survey either online or on paper before the training workshop. Those who complete the survey on paper will need to return it to JHU by mail or email using the addresses provided. The online survey platform will be programmed to send reminder emails once per week during the survey administration period to those who have not completed the survey. Surveys will need to be completed before the training workshop attended by each hospital’s AIM Team Lead. Respondents will be offered a $10 incentive as described in Statement A. Thank-you emails will be automatically generated and sent by the online survey platform upon survey completion. This survey captures key information about staff’s past experience with teamwork and communication trainings, any use of teamwork and communication tools and strategies by clinical staff, and experience with AIM bundle implementation; most importantly, the survey includes the 16-item validated Mayo High Performance Teamwork Scale,20 several items adapted from the CUSP Team Check-up Tool,21 and the overall patient safety grade used in the Hospital Survey on Patient Safety Culture.22

Tracking of Project’s Training Activities – JHU will record who attends each training workshop at the time of the workshop. During the workshop, AIM Team Leads will be reminded about the template used to generate a comprehensive list of clinical staff in their unit (Appendix G) and will be encouraged to use it to track their staff’s attendance at facilitation sessions and to update it every 6 months to capture new hires and staff who have left the unit. A training workshop evaluation form (Attachment B) will be distributed at the end of the training. Completion will be voluntary; the form is intended to capture trainees’ perceptions of the quality and utility of the training.

Using the online platform that hosts the training e-modules for clinical staff, JHU will extract key information about clinical staff’s completion and retakes of the training e-modules (Appendix C). To record the key topics covered during the monthly calls held in the first 18 months of program implementation, JHU will keep track of call participants and the topics addressed during each call (Appendix D).

Qualitative Interviews and Focus Group Discussions with AIM Team Leads and frontline staff – Their timing, expected number, and the selection of interviewees were discussed in Section 1. After the initial email request to participate in these interviews, JHU will send up to three interview request reminders by email, 5-7 days apart, to all those who do not respond. An interview guide (Appendix J3) will be used to obtain data on the hospitals’ progress with implementing the SPPC-II training e-modules and facilitation sessions, and on any needs AIM Team Leads may have. An oral consent form approved by the JHU IRB will be used for these interviews. Respondents will be offered $50 and $25 Amazon gift card incentives as described in Statement A, and JHU will send thank-you emails to all interviewees immediately after the interviews are completed. The information collected will make it possible to adjust the intervention if certain components do not work or could be strengthened; it will also be used to inform the selection of topics for coaching calls and to complement findings from the implementation surveys to be conducted with all types of clinical staff at 6 months. Analysis of the qualitative data will take place in parallel with data collection.
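The interview reminder protocol above (up to three email reminders, 5-7 days apart, sent only to those who have not yet responded) can be expressed as a small scheduling helper. This is a hypothetical sketch assuming a fixed gap chosen within the 5-7 day window; the function, its parameters, and the example dates are illustrative and do not describe any JHU system.

```python
from datetime import date, timedelta


def reminder_dates(first_request, responded_on=None, max_reminders=3, gap_days=7):
    """Dates on which reminders would be sent after the initial request.

    Reminders stop once a response has been received (responded_on)
    and never exceed max_reminders.
    """
    if not 5 <= gap_days <= 7:
        raise ValueError("gap_days must fall within the 5-7 day window")
    dates = []
    for k in range(1, max_reminders + 1):
        send_on = first_request + timedelta(days=k * gap_days)
        if responded_on is not None and responded_on <= send_on:
            break  # respondent already replied; no further reminders
        dates.append(send_on)
    return dates


# Hypothetical request sent July 1, 2021 to someone who replies on July 10
print(reminder_dates(date(2021, 7, 1), responded_on=date(2021, 7, 10)))
```

In this example only the first reminder (July 8) is sent, because the reply arrives before the second one would go out.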

Establish Contact with Clinical Staff for Implementation Surveys – Using the updated complete listing of staff IDs from each hospital’s AIM Team Lead, we will randomly select 3 clinical staff in each hospital to complete implementation surveys at 6, 12, and 18 months after the training workshop attended by that hospital’s AIM Team Lead. Staff whose IDs are selected will be sent an email with the following information:

invitation to complete a 30-minute implementation survey either online or on paper;

information about how to access and complete this survey (Attachment I, J, or K) online;

a soft copy of the survey (Attachment I, J, or K) and the corresponding written consent form approved by the JHU Institutional Review Board (IRB) for those who want to complete the survey on paper;

mail and email information for JHU if respondents complete the survey on paper.
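The selection step (3 clinical staff per hospital for each survey round, with different staff selected for each round as described in Section 3) can be sketched as simple random sampling without replacement within each hospital, excluding IDs already chosen in earlier rounds. The document does not specify how its “random and systematic” selection is implemented, so this swaps in plain random sampling; the roster structure, the function name, and the assumption that staff IDs are unique across hospitals are all hypothetical.

```python
import random


def select_round(rosters, already_selected, per_hospital=3, seed=None):
    """Randomly pick staff IDs for one survey round.

    rosters: {hospital_id: [staff_id, ...]} from the updated staff listing.
    already_selected: set of staff IDs chosen in earlier rounds (excluded);
        updated in place with this round's picks.
    Returns {hospital_id: [selected staff IDs]}.
    """
    rng = random.Random(seed)  # seeded generator makes a round reproducible
    selection = {}
    for hospital, staff in sorted(rosters.items()):
        eligible = [s for s in staff if s not in already_selected]
        k = min(per_hospital, len(eligible))  # small units may have fewer eligible staff
        picked = rng.sample(eligible, k)
        selection[hospital] = picked
        already_selected.update(picked)
    return selection


# Hypothetical rosters for two units; baseline plus three implementation rounds
rosters = {"H001": [f"H001-{i:02d}" for i in range(60)],
           "H002": [f"H002-{i:02d}" for i in range(45)]}
used = set()
rounds = [select_round(rosters, used, seed=s) for s in range(4)]
print(rounds[0])
```

Because `already_selected` accumulates across rounds, a staff member surveyed at baseline cannot be drawn again at 6, 12, or 18 months; new hires added to the updated roster become eligible automatically.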

Clinical Staff Implementation Surveys – As noted above, 3 randomly selected clinical staff per hospital will be asked to complete the implementation survey at each of the three time points, either online or on paper, within a month of receiving the invitation. Those who complete the survey on paper will need to return it to JHU by mail or email using the addresses provided. The online survey platform will be programmed to send reminder emails once per week during the survey administration period to those who have not completed the survey. Respondents will be offered a $10 incentive as described in Statement A. Thank-you emails will be automatically generated and sent by the online survey platform upon survey completion. This survey captures key information about staff’s past experience with teamwork and communication trainings, any use of teamwork and communication tools and strategies by clinical staff, and experience with AIM bundle implementation; most importantly, the survey includes the 16-item validated Mayo High Performance Teamwork Scale,20 several items adapted from the CUSP Team Check-up Tool,21 and the overall patient safety grade used in the Hospital Survey on Patient Safety Culture.22

AIM Data – A data use agreement (DUA; Attachment L) allowing JHU to access the data each hospital submits to AIM will be shared with AIM Team Leads at the in-person training workshop. They will be asked to coordinate signature of the DUA by hospital leadership and to return it to JHU either by mail or by scan and email (JHU mail and email addresses will be provided). We expect to receive signed DUAs from all participating hospitals before the end of 2019. These will be shared with the AIM program using an AHRQ password-protected shared drive, after which AIM will be able to share process and maternal health outcome data submitted by hospitals in OK and TX. Data sharing will occur electronically on the following schedule:

on January 15th, 2020, OK data submitted between January 1st, 2015 and December 31st, 2019, and TX data submitted between July 1st, 2018 and December 31st, 2019;

every three months thereafter until July 15th, 2022.
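The data-sharing schedule above can be enumerated with a short helper, assuming “every three months thereafter” means the 15th of every third month from January 15, 2020 through July 15, 2022 inclusive. The function name is hypothetical.

```python
from datetime import date


def sharing_dates(start=date(2020, 1, 15), end=date(2022, 7, 15), step_months=3):
    """List data transfer dates from start to end inclusive, step_months apart."""
    dates = []
    year, month = start.year, start.month
    while True:
        current = date(year, month, start.day)
        if current > end:
            break
        dates.append(current)
        month += step_months
        if month > 12:  # roll over into the next calendar year
            month -= 12
            year += 1
    return dates


schedule = sharing_dates()
print(len(schedule), schedule[0], schedule[-1])
```

Under this reading there are 11 transfers in total: the initial January 15, 2020 transfer of historical data followed by 10 quarterly transfers ending July 15, 2022.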

Data sharing will involve providing a data analyst at the AIM Data Center with access to the AHRQ password-protected shared folder described in Statement A. JHU will verify the data and follow up on any concerns or questions regarding missing data or data quality.

3. Methods to Maximize Response Rates

Response rates are discussed in Statement B, Section 1. JHU has processes in place to reach or even exceed these response rates, as discussed below.

Based on experience with similar data collection in the SPPC-II Planning Phase, we expect a 100% response rate for the qualitative phone interviews with AIM Team Leads. JHU obtained a 90% response rate for qualitative interviews during the Planning Phase by sending up to three interview request reminders by email, about 5-7 days apart, to all those who did not respond to the first request. The same email reminder procedure will be used for the Demonstration Project. Moreover, for the Demonstration Project, the interviews will be conducted 3-4 months after the training workshops, after the study team has established direct rapport with potential interviewees. This situation is more favorable than that encountered in the Planning Phase, when interviews were conducted with clinical staff and with SPPC-I and AIM program stakeholders with whom JHU had no previous interaction.

A high (95%) response rate is also expected for the baseline surveys of AIM Team Leads, which will be self-administered online or on paper before the training workshop. The online survey platform will be programmed to send a reminder email once per week during the survey administration period to staff who have not completed the survey. Although respondents will be offered only a modest $10 incentive, they will be offered much-desired training (per SPPC-II Planning Phase qualitative interview findings) by a reputable JHU team. If surveys are not completed by the time of the training workshop, a hard copy survey will be given to non-respondents at the workshop. Respondents will be told that training certificates will be offered only to those who complete the baseline surveys.

An 80% response rate is expected for the baseline surveys with clinical staff. They will be given the option to complete the survey online or on paper and will be offered a modest $10 incentive for completing it. The online survey platform will be programmed to send a reminder email once per week during the survey administration period to staff who have not completed the survey. For comparison, a 69% response rate was obtained with paper surveys for the latest (2014) Hospital Survey on Patient Safety Culture (HSPSC).7 Of note, the HSPSC is administered to general clinical staff rather than to staff recently trained as part of a statewide learning collaborative, as is the case in our Demonstration Project. At their training workshop and during time-aligned monthly coaching calls, AIM Team Leads will be provided with information to promote awareness of this survey among their clinical staff, coordinate implementation of the survey, encourage staff to complete it, and provide staff time to do so. The state PQC team will also remind and encourage AIM Team Leads by email about the timing of the clinical staff baseline surveys.

For the three clinical staff implementation surveys, we expect response rates of 80%, 77.5%, and 75% at 6, 12, and 18 months after the initial training workshops, respectively. Different clinical staff will be selected to complete the baseline survey and each of these surveys, so that no exceptional burden is placed on any clinical staff member. Respondents will be given the option to complete the survey online or on paper and will be offered a modest $10 incentive for completing it. The online survey platform will be programmed to send a reminder email once per week during the survey administration period to staff who have not completed the survey. As noted for the baseline survey, the 69% response rate obtained for the 2014 HSPSC7 was achieved among general clinical staff rather than staff recently trained as part of a statewide learning collaborative. At their training workshop and during time-aligned monthly coaching calls, AIM Team Leads will be provided with information to promote awareness of these surveys among their clinical staff, coordinate their implementation, encourage staff to complete them, and provide staff time to do so. The state PQC team will also remind and encourage AIM Team Leads by email about the timing of the clinical staff implementation surveys.

4. Tests of Procedures

The procedures for this specific Demonstration Project have not been tested. However, AHRQ and JHU have conducted similar projects and are using well-established research methods for this project. Specifically, qualitative data from AIM Team Leads will be coded using NVivo 10 (QSR International) and thematically analyzed to study organizational elements of successful implementation. Survey data will be used in a variety of well-established descriptive analyses, estimation of changes in key process and outcome measures between the different time points, and regression analyses.

5. Statistical Consultant

Dr. Saifuddin Ahmed, MBBS, PhD

Professor

Departments of Population, Family and Reproductive Health and Biostatistics

Johns Hopkins Bloomberg School of Public Health

615 N. Wolfe St., Room E4642, Baltimore MD 21205

Email: [email protected]

Phone: 410-614-4952

References

Creanga AA. Maternal Mortality in the United States: A Review of Contemporary Data and Their Limitations. Clin Obstet Gynecol. 2018;61(2):296-306.

American College of Obstetricians and Gynecologists and the Society for Maternal–Fetal Medicine, Kilpatrick SK, Ecker JL. Severe maternal morbidity: screening and review. Am J Obstet Gynecol. 2016;215(3):B17-22.

Agency for Healthcare Research and Quality. Safety Program in Perinatal Care. Available at: https://www.ahrq.gov/professionals/quality-patient-safety/hais/tools/perinatal-care/index.html

Sorensen, A.V., Webb, J., Clare, H.M., Banger, A., Jacobs, S., McArdle, J., Pleasants, E., Burson, K., Lasater, B., Gray, K., Poehlman, J., Kahwati, L.C., AHRQ Safety Program for Perinatal Care: Summary Report. Prepared under Contract No. 290201000024I (RTI International). AHRQ Publication No. 17-0003-24-EF. Rockville, MD: Agency for Healthcare Research and Quality. May 2017. www.ahrq.gov/perinatalsafety.

Kahwati LC, Sorensen AV, Teixeira-Poit S, Jacobs S, Sommerness SA, Miller KK, Pleasants E, Clare HM, Hirt CL, Davis SE, Ivester T, Caldwell D, Muri JH, Mistry KB. Impact of the Agency for Healthcare Research and Quality's Safety Program for Perinatal Care. Jt Comm J Qual Patient Saf. 2019 Jan 10. pii: S1553-7250(18)30249-6. doi: 10.1016/j.jcjq.2018.11.002. [Epub ahead of print]

American College of Obstetricians and Gynecologists. Alliance for Innovation on Maternal Health. Available at: https://www.acog.org/About-ACOG/ACOG-Departments/Patient-Safety-and-Quality-Improvement/What-is-AIM

Agency for Healthcare Research and Quality. 2014 Hospital SOPS Database Report. Available at: https://www.ahrq.gov/sops/databases/hospital/2014/ch2.html

Riley W, Davis S, Miller K, Hansen H, Sainfort F, Sweet R. Didactic and simulation nontechnical skills team training to improve perinatal patient outcomes in a community hospital. Jt Comm J Qual Patient Saf. 2011;37(8):357-64.

Magill ST, Wang DD, Rutledge WC, Lau D, Berger MS, Sankaran S, Lau CY, Imershein SG. Changing Operating Room Culture: Implementation of a Postoperative Debrief and Improved Safety Culture. World Neurosurg. 2017; 107:597-603.

Safety culture proven to improve quality, must be monitored and measured. Hospital Peer Review, May 2016;41(5):49-60.

The Joint Commission. Sentinel Event Alert. Issue 57, March 1, 2017. The essential role of leadership in developing a safety culture. Available at: https://www.jointcommission.org/assets/1/18/SEA_57_Safety_Culture_Leadership_0317.pdf

The Joint Commission. High Reliability Healthcare. Available at: https://www.jointcommission.org/high_reliability_healthcare/is_patient_safety_culture_improving_repeating_organizational_assessment_at_18-24_months/

Main EK. Reducing Maternal Mortality and Severe Maternal Morbidity Through State-based Quality Improvement Initiatives. Clin Obstet Gynecol. 2018;61(2):319-331.

Kost RG, DeRosa JC. Impact of survey length and compensation on validity, reliability, and sample characteristics for Ultrashort-, Short-, and Long-Research Participant Perception Surveys. J Clin Transl Sci. 2018;2(1):31-37.

Yu S, Alper HE, Nguyen AM, Brackbill RM, Turner L, Walker DJ, et al. The effectiveness of a monetary incentive offer on survey response rates and response completeness in a longitudinal study. BMC Med Res Methodol. 2017;17(1):77.

Cook DA, Wittich CM, Daniels WL, West CP, Harris AM, Beebe TJ. Incentive and Reminder Strategies to Improve Response Rate for Internet-Based Physician Surveys: A Randomized Experiment. J Med Internet Res. 2016;18(9):e244.

Kirkpatrick DL. Evaluating Training Programs. San Francisco, CA: Berrett-Koehler Publishers, Inc.; 1994.

Zhang S, Cao J, Ahn C. A GEE Approach to Determine Sample Size for Pre- and Post-Intervention Experiments with Dropout. Comput Stat Data Anal. 2014;69. doi: 10.1016/j.csda.2013.07.037.

Penfold RB, Zhang F. Use of interrupted time series analysis in evaluating health care quality improvements. Acad Pediatr. 2013;13(6 Suppl):S38-44.

Malec JF, Torsher LC, Dunn WF, Wiegmann DA, Arnold JJ, Brown DA, Phatak V. The Mayo High Performance Teamwork scale: reliability and validity for evaluating key crew resource management skills. Simul Healthc. 2007;2(1):4-10.

Agency for Healthcare Research and Quality. CUSP Toolkit. CUSP Team Check-up Tool. 2019. Available at: https://www.ahrq.gov/professionals/education/curriculum-tools/cusptoolkit/toolkit/teamcheckup.html

Sorra J, Gray L, Streagle S, et al. AHRQ Hospital Survey on Patient Safety Culture: User’s Guide. (Prepared by Westat, under Contract No. HHSA290201300003C). AHRQ Publication No. 15-0049-EF (Replaces 04-0041). Rockville, MD: Agency for Healthcare Research and Quality. January 2016. http://www.ahrq.gov/professionals/quality-patient-safety/patientsafetyculture/hospital/index.html

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| File Title | Supporting Statement Part B Template |

| Subject | (Supporting Statement Template and Instructions; instructions are in italics) SUPPORTING STATEMENT Part B |

| Author | Heather Nalls |

| File Modified | 0000-00-00 |

| File Created | 2021-06-03 |
