Appendices A-C NAEP 2022 Supplemental Documents

NAEP 2022 Appendix A-C.docx

National Assessment of Educational Progress (NAEP) 2022 Materials Update #2

OMB: 1850-0928

NATIONAL CENTER FOR EDUCATION STATISTICS NATIONAL ASSESSMENT OF EDUCATIONAL PROGRESS

National Assessment of Educational Progress (NAEP) 2022

Materials Update #2

Appendix A-C

External Advisory Committees

OMB# 1850-0928 v.25

September 2021

Table of Contents

Appendix A - Committees and Panels (Approved v.23)

Appendix B - Weighting Procedures (Approved v.10)

The 2013 Weighting Procedures documentation is the most current version available to the public. At this time, there is not a timeline for when the details for later assessment years will be publicly available.

Appendix C - 2022 Sampling Memo (New)

Appendix A

Appendix A-1: NAEP Design and Analysis Committee (DAC)

Name

Affiliation

Betsy Becker

Florida State University, FL

Peter Behuniak

Criterion Consulting, LLC, CT

Dan Bolt

University of Wisconsin, Madison, WI

Lloyd Bond

University of North Carolina, Greensboro, NC (Emeritus)/Carnegie Foundation (retired)

Derek Briggs

University of Colorado, Boulder, CO

Richard Duran

University of California, Santa Barbara, CA

Ben Hansen

University of Michigan, MI

Brian Junker

Carnegie Mellon University, PA

David Kaplan

University of Wisconsin-Madison, WI

Kenneth Koedinger

Carnegie Mellon University, PA

Sophia Rabe-Hesketh

University of California, Berkeley, CA

Michael Rodriguez

University of Minnesota, MN

S. Lynne Stokes

Southern Methodist University, TX

Chun Wang

University of Washington, WA

Appendix A-2: NAEP Validity Studies Panel (NVS)

Name

Affiliation

Keena Arbuthnot

Office of Research and Economic Development, Louisiana State University, LA

Peter Behuniak

Criterion Consulting, LLC, CT

Jack Buckley

Panel Chair American Institutes for Research, Washington, DC

Jim Chromy

RTI International (Emeritus Fellow), Raleigh, NC

Phil Daro

Strategic Education Research Partnership (SERP) Institute, Washington, DC

Richard Duran

Gevirtz Graduate School of Education University of California, Santa Barbara, CA

David Grissmer

Curry School of Education University of Virginia, VA

Larry Hedges

Institute for Policy Research Northwestern University, IL

Gerunda Hughes

Washington, DC

Ina Mullis

TIMSS & PIRLS International Study Ctr, Boston College, MA

Scott Norton

Deputy Executive Director, Programs Council of Chief State School Officers, Washington, DC

Jim Pellegrino

University of Illinois at Chicago/Learning Sciences Research Institute, IL

Gary Phillips

Cambium Assessment, Washington, DC

Lorrie Shepard

School of Education, University of Colorado at Boulder, CO

David Thissen

Dept. of Psychology University of North Carolina at Chapel Hill, NC

Gerald Tindal

University of Oregon, Eugene, OR

Sheila Valencia

Washington, WA

Denny Way

College Board, Washington, DC

Appendix A-3: NAEP National Indian Education Study Technical Review Panel (NIES)

Name Affiliation

Mandy Smoker Broaddus Education Northwest, Portland, OR

Doreen E. Brown ASD Education Center, Anchorage, AK

Robert B. Cook Teach for America, Summerset, SD

Steve Andrew Culpepper University of Illinois at Urbana-Champaign, IL

Dr. Susan C. Faircloth Panel Chair, Colorado State University, Fort Collins, CO

Dr. Chris Gordon JKL Bahweting Anishinaabe PSA, Sault Ste. Marie, MI

Dr. Jameson D. Lopez University of Arizona, Tucson, AZ

Jeremy MacDonald Rocky Boy Elementary, Box Elder, MT

Dr. Holly Jonel Mackey North Dakota State University, ND

Jeannette Muskett Miller Central Consolidated School District, Gallup, AZ

Debora Norris Arizona School Facilities Board, Phoenix, AZ

Sedelta Oosahwee National Education Association, DC

Dr. Martin Reinhardt Northern Michigan University, MI

Appendix A-4: NAEP Mathematics Standing Committee

Name

Affiliation

June Ahn

University of California, Irvine, CA

Scott Baldridge

Louisiana State University, Baton Rouge, LA

Joe Garofalo

University of Virginia, Charlottesville, VA

Mark Howell

Gonzaga College High School, Washington, DC

Jennifer Langer-Osuna

Stanford University, Stanford, CA

Michele Mailhot

Maine Department of Education, Augusta, ME

Kayonna Pitchford

University of North Carolina, Pembroke, NC

Angelica Niño

De Zavala Elementary School, San Antonio, TX

Allan Rossman

California Polytechnic State University, College of Mathematics and Science, San Luis Obispo, CA

Amy Roth McDuffie

Washington State University, Pullman, WA

Edward Silver

University of Michigan, Ann Arbor, MI

Lya Snell

Georgia Department of Education, Atlanta, GA

Zalman Usiskin

University of Chicago, Chicago, IL

Vivian Valencia

Eutimio “Tim” Salazar III Elementary, Española Public Schools, Española, NM

Appendix A-5: NAEP Reading Standing Committee

Name

Affiliation

Patricia Alexander

University of Maryland, College Park, MD

Alison Bailey

University of California, Los Angeles, CA

Jensa Bushey

Shelburne Community Schools, Shelburne, VT

Bridget Dalton

University of Colorado, Boulder, CO

Christy Howard

East Carolina University, Greenville, NC

Jeanette Mancilla-Martinez

Vanderbilt University, Nashville, TN

Pamela Mason

Harvard Graduate School of Education, Cambridge, MA

Frank Serafini

Arizona State University, Phoenix, AZ

Kris Shaw

Kansas State Department of Education, Topeka, KS

Ana Taboada Barber

University of Maryland, College Park, MD

Diana Townsend

University of Nevada, Reno, NV

Brandon Wallace

Montgomery College, Takoma Park, MD

Appendix A-6: NAEP Survey Questionnaire Standing Committee

Name

Affiliation

Flávio Cunha

Rice University, Houston, TX

Hunter Gehlbach

Johns Hopkins School of Education, Baltimore, MD

Cassandra Guarino

University of California, Riverside, CA

Jori Hall

University of Georgia, Athens, GA

Henry Levin

Teachers College, Columbia University, NY, NY

Laura Moore

Obama Foundation, Chicago, IL

Stanley Presser

University of Maryland, College Park, MD

Leslie Rutkowski

Indiana University Bloomington, IN

Akane Zusho

Fordham University, NY, NY

Appendix A-7: NAEP Mathematics Translation Review Committee

Name

Affiliation

Mayra Aviles

Puerto Rico Department of Education, Hato Rey, PR

David Feliciano

The Melrose School (P.S./M.S 29), Bronx, NY

Yvonne Fuentes

University of West Georgia, Carrollton, GA

Marco Martinez-Leandro

Sandia High School, Albuquerque, NM

Jose Antonio (Tony) Paulino

Nathan Straus Preparatory School (P.S. 140M), New York, NY

Evelisse Rosado Rivera

Puerto Rico Department of Education, Hato Rey, PR

Myrna Rosado-Rasmussen

Austin Independent School District, Austin, TX

Gloria Rosado Vazquez

Puerto Rico Department of Education, Hato Rey, PR

Enid Valle

Kalamazoo College, Kalamazoo, MI

Appendix A-8: NAEP Grade 4 and 8 Survey Questionnaires and eNAEP DBA System Translation Committee

Name

Affiliation

Daniel Berdugo

Wilton Elementary School (PS 30X), Bronx, NY

Yvonne Fuentes

University of West Georgia, Carrollton, GA

Marco Martinez-Leandro

Sandia High School, Albuquerque, NM

Jose Antonio (Tony) Paulino

Nathan Straus Preparatory School (P.S. 140M), New York, NY

Evelisse Rosado Rivera

Puerto Rico Department of Education, Hato Rey, PR

Myrna Rosado-Rasmussen

Austin Independent School District, Austin, TX

Gloria M. Rosado Vazquez

Puerto Rico Department of Education, Hato Rey, PR

Enid Valle

Kalamazoo College, Kalamazoo, MI

Appendix A-9: NAEP Principals’ Panel Standing Committee

Name

Affiliation

David Atherton

Clear Creek Middle School, Gresham, OR

William Carozza

Harold Martin Elementary School, Hopkinton, NH

Rita Graves

Pin Oak Middle School, Bellaire, TX

Stephen Jackson

Principal Consultant and Chair, NAEP Principals’ Panel

Anthony Lockhart

Fulton-Holland Educational Services Center, West Palm Beach, FL

Lillie McMillan

Porter Elementary School, San Diego, CA

Kourtney Miller

Chavez Prep Middle School, Washington, DC

Jason Mix

Howard Lake–Waverly–Winsted High School, Howard Lake, MN

Leon Oo-Sah-We

Saint Francis Indian School, Saint Francis, SD

Ardith Bates Stanford

Murray County Schools, Chattanooga, TN

Sylvia Rodriguez Vargas

The Hill School, Pottstown, PA

Appendix B

NATIONAL CENTER FOR EDUCATION STATISTICS NATIONAL ASSESSMENT OF EDUCATIONAL PROGRESS

National Assessment of Educational Progress (NAEP)

2022

Appendix B

NAEP 2013 Weighting Procedures

OMB# 1850-0928 v.25

August 2021

No changes since v.10

The 2013 Weighting Procedures documentation is the most current version available to the public. At this time, there is not a timeline for when the details for later assessment years will be publicly available.

NAEP Technical Documentation Weighting Procedures for the 2013 Assessment

NAEP assessments use complex sample designs to create student samples that generate population and subpopulation estimates with reasonably high precision. Student sampling weights ensure valid inferences from the student samples to their respective populations. In 2013, weights were developed for students sampled at grades 4, 8, and 12 for assessments in mathematics and reading.

Computation of Full-Sample Weights

Computation of Replicate Weights for Variance Estimation

Quality Control on Weighting Procedures

Each student was assigned a weight to be used for making inferences about students in the target population. This weight is known as the final full-sample student weight and contains the following major components:

the student base weight;

school nonresponse adjustments;

student nonresponse adjustments;

school weight trimming adjustments;

student weight trimming adjustments; and

student raking adjustment.

The student base weight is the inverse of the overall probability of selecting a student and assigning that student to a particular assessment. The sample design that determines the base weights is discussed in the NAEP 2013 sample design section.

The student base weight is adjusted for two sources of nonparticipation: school level and student level. These weighting adjustments seek to reduce the potential for bias from such nonparticipation by

increasing the weights of students from participating schools similar to those schools not participating; and

increasing the weights of participating students similar to those students from within participating schools who did not attend the assessment session (or makeup session) as scheduled.

Furthermore, the final weights reflect the trimming of extremely large weights at both the school and student level. These weighting adjustments seek to reduce variances of survey estimates.

An additional weighting adjustment was implemented in the state and Trial Urban District Assessment (TUDA) samples so that estimates for key student-level characteristics were in agreement across assessments in reading and mathematics. This adjustment was implemented using a raking procedure.

In addition to the final full-sample weight, a set of replicate weights was provided for each student. These replicate weights are used to calculate the variances of survey estimates using the jackknife repeated replication method. The methods used to derive these weights were aimed at reflecting the features of the sample design, so that when the jackknife variance estimation procedure is implemented, approximately unbiased estimates of sampling variance are obtained. In addition, the various weighting procedures were repeated on each set of replicate weights to appropriately reflect the impact of the weighting adjustments on the sampling variance of a survey estimate. A finite population correction (fpc) factor was incorporated into the replication scheme so that it could be reflected in the variance estimates for the reading and mathematics assessments. See Computation of Replicate Weights for Variance Estimation for details.
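The role of the replicate weights can be illustrated with a small sketch. The source does not state the variance formula here, so the code below assumes the commonly used jackknife form in which the variance is the sum of squared differences between each replicate estimate and the full-sample estimate. The function names, scores, and weights are hypothetical, and the finite population correction is ignored.

```python
def weighted_mean(values, weights):
    """Weighted mean of values using the supplied weights."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


def jackknife_variance(full_estimate, replicate_estimates):
    """Assumed jackknife form: sum of squared deviations of the
    replicate estimates from the full-sample estimate."""
    return sum((r - full_estimate) ** 2 for r in replicate_estimates)


# Hypothetical scores with one full-sample weight and two replicate weights each.
scores = [250.0, 260.0, 240.0, 270.0]
full_weights = [10.0, 10.0, 10.0, 10.0]
replicate_weights = [
    [20.0, 0.0, 10.0, 10.0],  # replicate 1: one unit dropped, its pair doubled
    [10.0, 10.0, 20.0, 0.0],  # replicate 2
]

full_mean = weighted_mean(scores, full_weights)
replicate_means = [weighted_mean(scores, w) for w in replicate_weights]
variance = jackknife_variance(full_mean, replicate_means)
```

The same estimator is recomputed once per replicate weight set, which is why each student record carries the full set of replicate weights alongside the full-sample weight.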

Quality control checks were carried out throughout the weighting process to ensure the accuracy of the full-sample and replicate weights. See Quality Control for Weighting Procedures for the various checks implemented and main findings of interest.

In the linked pages that follow, please note that Vocabulary, Reading Vocabulary, and Meaning Vocabulary refer to the same reporting scale and are interchangeable.

http://nces.ed.gov/nationsreportcard/tdw/weighting/2013/naep_assessment_weighting_procedures.aspx

NAEP Technical Documentation Computation of Full-Sample Weights for the 2013 Assessment

The full-sample or final student weight is the sampling weight used to derive NAEP student estimates of population and subpopulation characteristics for a specified grade (4, 8, or 12) and assessment subject (reading or mathematics). The full-sample student weight reflects the number of students that the sampled student represents in the population for purposes of estimation. The summation of the final student weights over a particular student group provides an estimate of the total number of students in that group within the population.

Computation of Base Weights

School and Student Nonresponse Weight Adjustments

School and Student Weight Trimming Adjustments

Student Weight Raking Adjustment

The full-sample weight, which is used to produce survey estimates, is distinct from a replicate weight that is used to estimate variances of survey estimates. The full-sample weight is assigned to participating students and reflects the student base weight after the application of the various weighting adjustments. The full-sample weight for student k from school s in stratum j (FSTUWGTjsk) can be expressed as follows:

$$\mathrm{FSTUWGT}_{jsk} = \mathrm{STU\_BWT}_{jsk} \times \mathrm{SCH\_NRAF}_{js} \times \mathrm{STU\_NRAF}_{jsk} \times \mathrm{SCH\_TRIM}_{js} \times \mathrm{STU\_TRIM}_{jsk} \times \mathrm{STU\_RAKE}_{jsk}$$

where

STU_BWTjsk is the student base weight;

SCH_NRAFjs is the school-level nonresponse adjustment factor;

STU_NRAFjsk is the student-level nonresponse adjustment factor;

SCH_TRIMjs is the school-level weight trimming adjustment factor;

STU_TRIMjsk is the student-level weight trimming adjustment factor; and

STU_RAKEjsk is the student-level raking adjustment factor.
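As a rough illustration, the full-sample weight can be computed from the six components defined above. This minimal Python sketch assumes the components combine multiplicatively. The class name, function name, and numeric values are hypothetical and are not part of the NAEP documentation.

```python
from dataclasses import dataclass


@dataclass
class StudentWeightComponents:
    stu_bwt: float   # student base weight
    sch_nraf: float  # school-level nonresponse adjustment factor
    stu_nraf: float  # student-level nonresponse adjustment factor
    sch_trim: float  # school-level weight trimming adjustment factor
    stu_trim: float  # student-level weight trimming adjustment factor
    stu_rake: float  # student-level raking adjustment factor


def full_sample_weight(c: StudentWeightComponents) -> float:
    """Product of the base weight and the five adjustment factors (assumed form)."""
    return (c.stu_bwt * c.sch_nraf * c.stu_nraf
            * c.sch_trim * c.stu_trim * c.stu_rake)


# Hypothetical student: base weight 100, modest nonresponse and raking adjustments.
example = StudentWeightComponents(
    stu_bwt=100.0, sch_nraf=1.25, stu_nraf=1.1,
    sch_trim=1.0, stu_trim=1.0, stu_rake=0.98,
)
weight = full_sample_weight(example)
```

A trimming factor of 1.0 means the weight was not trimmed; summing such weights over a student group gives the estimated number of students in that group.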

School sampling strata for a given assessment vary by school type and grade. See the links below for descriptions of the school strata for the various assessments.

Public schools at grades 4 and 8

Public schools at grade 12

Private schools at grades 4, 8 and 12

NAEP Technical Documentation Computation of Base Weights for the 2013 Assessment

Every sampled school and student received a base weight equal to the reciprocal of its probability of selection. Computation of a school base weight varies by

type of sampled school (original or substitute); and

sampling frame (new school frame or not).

School Base Weights

Student Base Weights

Computation of a student base weight reflects

the student's overall probability of selection accounting for school and student sampling;

assignment to session type at the school- and student-level; and

the student's assignment to the reading or mathematics assessment.

NAEP Technical Documentation School Base Weights for the 2013 Assessment

The school base weight for a sampled school is equal to the inverse of its overall probability of selection. The overall selection probability of a sampled school differs by

type of sampled school (original or substitute); and

sampling frame (new school frame or not).

The overall selection probability of an originally selected school in a reading or mathematics sample is equal to its probability of selection from the NAEP public/private school frame.

The overall selection probability of a school from the new school frame in a reading or mathematics sample is the product of two quantities:

the probability of selection of the school's district into the new-school district sample, and

the probability of selection of the school into the new school sample.

Substitute schools are preassigned to original schools and take the place of original schools that refuse to participate. For weighting purposes, substitute schools are treated as if they were the original schools that they replaced, so each substitute school is assigned the school base weight of the original school.
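The school base weight rules above can be sketched in a few lines of Python. This is only an illustration: the function name, parameter names, and probabilities are hypothetical, and the sketch assumes the selection probabilities are already known.

```python
from typing import Optional


def school_base_weight(school_prob: float,
                       district_prob: Optional[float] = None) -> float:
    """Inverse of the overall selection probability of a sampled school.

    school_prob: probability of selecting the school from its frame
        (or into the new school sample, for new-school-frame schools).
    district_prob: probability of selecting the school's district into
        the new-school district sample; None for schools from the main frame.
    """
    overall = school_prob if district_prob is None else district_prob * school_prob
    if not 0.0 < overall <= 1.0:
        raise ValueError("overall selection probability must be in (0, 1]")
    return 1.0 / overall


# Originally selected school drawn from the main frame.
original_weight = school_base_weight(0.05)

# School from the new school frame: district stage times school stage.
new_school_weight = school_base_weight(0.1, district_prob=0.5)

# A substitute school inherits the base weight of the original school it replaces.
substitute_weight = original_weight
```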

Learn more about substitute schools for the 2013 private school national assessment and substitute schools for the 2013 twelfth grade public school assessment.

NAEP Technical Documentation Student Base Weights for the 2013 Assessment

Every sampled student received a student base weight, whether or not the student participated in the assessment. The student base weight is the reciprocal of the probability that the student was sampled to participate in the assessment for a specified subject. The student base weight for student k from school s in stratum j (STU_BWTjsk) is the product of seven weighting components and can be expressed as follows:

$$\mathrm{STU\_BWT}_{jsk} = \mathrm{SCH\_BWT}_{js} \times \mathrm{SCHSESWT}_{js} \times \mathrm{WINSCHWT}_{js} \times \mathrm{STUSESWT}_{jsk} \times \mathrm{SUBJFAC}_{jsk} \times \mathrm{SUBADJ}_{js} \times \mathrm{YRRND\_AF}_{js}$$

where

SCH_BWTjs is the school base weight;

SCHSESWTjs is the school-level session assignment weight that reflects the conditional probability, given the school, that the particular session type was assigned to the school;

WINSCHWTjs is the within-school student weight that reflects the conditional probability, given the school, that the student was selected for the NAEP assessment;

STUSESWTjsk is the student-level session assignment weight that reflects the conditional probability, given that the particular session type was assigned to the school, that the student was assigned to the session type;

SUBJFACjsk is the subject spiral adjustment factor that reflects the conditional probability, given that the student was assigned to a particular session type, that the student was assigned the specified subject;

SUBADJjs is the substitution adjustment factor to account for the difference in enrollment size between the substitute and original school; and

YRRND_AFjs is the year-round adjustment factor to account for students in year-round schools on scheduled break at the time of the NAEP assessment and thus not available to be included in the sample.

The within-school student weight (WINSCHWTjs) is the inverse of the student sampling rate in the school.

The subject spiral adjustment factor (SUBJFACjsk) adjusts the student weight to account for the spiral pattern used in distributing reading or mathematics booklets to the students. The subject factor varies by grade, subject, and school type (public or private), and it is equal to the inverse of the booklet proportions (reading or mathematics) in the overall spiral for a specific sample.

For cooperating substitutes of nonresponding original sampled schools, the substitution adjustment factor (SUBADJjs) is equal to the ratio of the estimated grade enrollment for the original sampled school to the estimated grade enrollment for the substitute school. The student sample from the substitute school then "represents" the set of grade-eligible students from the original sampled school.

The year-round adjustment factor (YRRND_AFjs) adjusts the student weight for students in year-round schools who do not attend school during the time of the assessment. This situation typically arises in overcrowded schools. School administrators in year-round schools randomly assign students to portions of the year in which they attend school and portions of the year in which they do not attend. At the time of assessment, a certain percentage of students (designated as OFFjs) do not attend school and thus cannot be assessed. The YRRND_AFjs for a school is calculated as 1/(1 - OFFjs/100).
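The component definitions above translate directly into small helper functions. The sketch below is illustrative only: the function names and all input values are hypothetical, and the final product assumes the seven components are multiplied together.

```python
def within_school_weight(sampling_rate: float) -> float:
    """WINSCHWT: inverse of the student sampling rate in the school."""
    return 1.0 / sampling_rate


def subject_factor(booklet_proportion: float) -> float:
    """SUBJFAC: inverse of the subject's booklet proportion in the spiral."""
    return 1.0 / booklet_proportion


def substitution_factor(original_enrollment: float,
                        substitute_enrollment: float) -> float:
    """SUBADJ: original school's estimated grade enrollment over the substitute's."""
    return original_enrollment / substitute_enrollment


def year_round_factor(off_percent: float) -> float:
    """YRRND_AF: 1 / (1 - OFF/100), where OFF is the percent of students off track."""
    return 1.0 / (1.0 - off_percent / 100.0)


def student_base_weight(sch_bwt, schseswt, winschwt, stuseswt,
                        subjfac, subadj, yrrnd_af):
    """Product of the seven components (assumed multiplicative form)."""
    return sch_bwt * schseswt * winschwt * stuseswt * subjfac * subadj * yrrnd_af


# Hypothetical student in a substitute, year-round school.
base_weight = student_base_weight(
    sch_bwt=20.0,
    schseswt=1.0,
    winschwt=within_school_weight(0.5),      # half of eligible students sampled
    stuseswt=1.0,
    subjfac=subject_factor(0.5),             # half of booklets are this subject
    subadj=substitution_factor(120.0, 100.0),
    yrrnd_af=year_round_factor(25.0),        # 25 percent of students on break
)
```

For a school that is neither a substitute nor year-round, the last two factors are simply 1.0.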

NAEP Technical Documentation School and Student Nonresponse Weight Adjustments for the 2013 Assessment

Nonresponse is unavoidable in any voluntary survey of a human population. Nonresponse leads to the loss of sample data that must be compensated for in the weights of the responding sample members. This differs from ineligibility, for which no adjustments are necessary. The purpose of the nonresponse adjustments is to reduce the mean square error of survey estimates. While the nonresponse adjustment reduces the bias from the loss of sample, it also increases variability among the survey weights leading to increased variances of the sample estimates. However, it is presumed that the reduction in bias more than compensates for the increase in the variance, thereby reducing the mean square error and thus improving the accuracy of survey estimates. Nonresponse adjustments are made in the NAEP surveys at both the school and the student levels: the responding (original and substitute) schools receive a weighting adjustment to compensate for nonresponding schools, and responding students receive a weighting adjustment to compensate for nonresponding students.

School Nonresponse Weight Adjustment

Student Nonresponse Weight Adjustment

The paradigm used for nonresponse adjustment in NAEP is the quasi-randomization approach (Oh and Scheuren 1983). In this approach, school response cells are based on characteristics of schools known to be related to both response propensity and achievement level, such as the locale type (e.g., large principal city of a metropolitan area) of the school. Likewise, student response cells are based on characteristics of the schools containing the students and student characteristics, which are known to be related to both response propensity and achievement level, such as student race/ethnicity, gender, and age.

Under this approach, sample members are assigned to mutually exclusive and exhaustive response cells based on predetermined characteristics. A nonresponse adjustment factor is calculated for each cell as the ratio of the sum of adjusted base weights for all eligible units to the sum of adjusted base weights for all responding units. The nonresponse adjustment factor is then applied to the base weight of each responding unit. In this way, the weights of responding units in the cell are "weighted up" to represent the full set of responding and nonresponding units in the response cell.
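The cell-based calculation described above can be sketched as follows. This is a minimal illustration, not the NAEP production procedure: the function name, the tuple layout, and the example weights are all hypothetical.

```python
from collections import defaultdict


def nonresponse_factors(units):
    """Compute one adjustment factor per response cell.

    units: iterable of (cell, adjusted_base_weight, responded) tuples,
        one per eligible sampled unit.
    Returns {cell: eligible weight total / responding weight total}.
    Cells with no responding units are omitted (they would need collapsing).
    """
    eligible = defaultdict(float)
    responding = defaultdict(float)
    for cell, weight, responded in units:
        eligible[cell] += weight
        if responded:
            responding[cell] += weight
    return {cell: eligible[cell] / responding[cell]
            for cell in eligible if responding[cell] > 0}


# Hypothetical eligible units in two response cells.
units = [
    ("A", 10.0, True),
    ("A", 10.0, True),
    ("A", 20.0, False),  # nonrespondent: its weight is spread over cell A respondents
    ("B", 5.0, True),
]
factors = nonresponse_factors(units)

# Applying the factor to respondents reproduces the eligible weight total per cell.
adjusted_total_a = sum(w * factors[c] for c, w, r in units if r and c == "A")
```

Because the respondents in cell A are "weighted up" by a factor of 2.0, their adjusted weights sum to the full eligible weight of the cell.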

The quasi-randomization paradigm views nonresponse as another stage of sampling. Within each nonresponse cell, the paradigm assumes that the responding sample units are a simple random sample from the total set of all sample units. If this model is valid, then the use of the quasi-randomization weighting adjustment will eliminate any nonresponse bias. Even if this model is not valid, the weighting adjustments will eliminate bias if the achievement scores are homogeneous within the response cells (i.e., bias is eliminated if there is homogeneity either in response propensity or in achievement levels). See, for example, chapter 4 of Little and Rubin (1987).

NAEP Technical Documentation School Nonresponse Weight Adjustment

The school nonresponse adjustment procedure inflates the weights of cooperating schools to account for eligible noncooperating schools for which no substitute schools participated. The adjustments are computed within nonresponse cells and are based on the assumption that the cooperating and noncooperating schools within the same cell are more similar to each other than to schools from different cells. School nonresponse adjustments were carried out separately by sample; that is, by

sample level (state, national),

school type (public, private), and

grade (4, 8, 12).

Development of Initial School Nonresponse Cells

Development of Final School Nonresponse Cells

School Nonresponse Adjustment Factor Calculation

NAEP Technical Documentation Development of Initial School Nonresponse Cells

The cells for nonresponse adjustments are generally functions of the school sampling strata for the individual samples. School sampling strata usually differ by assessment subject, grade, and school type (public or private). Assessment subjects that are administered together by way of spiraling have the same school samples and stratification schemes. Subjects that are not spiraled with any other subjects have their own separate school sample. In NAEP 2015, all operational assessments were spiraled together.

The initial nonresponse cells for the various NAEP 2015 samples are described below.

Public School Samples for Reading and Mathematics at Grades 4 and 8

For these samples, initial weighting cells were formed within each jurisdiction using the following nesting cell structure:

Trial Urban District Assessment (TUDA) district vs. the balance of the state for states with TUDA districts,

urbanicity (urban-centric locale) stratum; and

race/ethnicity classification stratum, or achievement level, or median income, or grade enrollment.

In general, the nonresponse cell structure used race/ethnicity classification stratum as the lowest level variable. However, where there was only one race/ethnicity classification stratum within a particular urbanicity stratum, categorized achievement, median income, or enrollment data were used instead.

Public School Sample at Grade 12

The initial weighting cells for this sample were formed using the following nesting cell structure:

census division stratum,

urbanicity stratum (urban-centric locale), and

race/ethnicity classification stratum.

Private School Samples at Grades 4, 8 and 12

The initial weighting cells for these samples were formed within each grade using the following nesting cell structure:

affiliation,

census division stratum,

urbanicity stratum (urban-centric locale), and

race/ethnicity classification stratum.

NAEP Technical Documentation Development of Final School Nonresponse Cells

Limits were placed on the magnitude of cell sizes and adjustment factors to prevent unstable nonresponse adjustments and unacceptably large nonresponse factors. All initial weighting cells with fewer than six cooperating schools or adjustment factors greater than 3.0 for the full sample weight were collapsed with suitable adjacent cells. Simultaneously, all initial weighting cells for any replicate with fewer than four cooperating schools or adjustment factors greater than the maximum of

or two times the full sample nonresponse adjustment factor were collapsed with suitable adjacent cells. Initial weighting cells were generally collapsed in reverse order of the cell structure; that is, starting at the bottom of the nesting structure and working up toward the top level of the nesting structure.
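The full-sample collapsing rule stated above (fewer than six cooperating schools, or an adjustment factor greater than 3.0) can be expressed as a simple check. The sketch below covers only that full-sample rule; the replicate rule is not implemented. The function and parameter names are hypothetical, and treating a cell with no responding weight as needing collapse is an assumption.

```python
def needs_collapsing(eligible_weight: float,
                     responding_weight: float,
                     cooperating_schools: int,
                     min_schools: int = 6,
                     max_factor: float = 3.0) -> bool:
    """Return True if an initial cell violates the full-sample limits.

    eligible_weight: weighted total for all eligible schools in the cell.
    responding_weight: weighted total for cooperating schools in the cell.
    """
    if cooperating_schools < min_schools:
        return True
    if responding_weight <= 0:
        # Assumption: a cell with no responding weight cannot be adjusted.
        return True
    return eligible_weight / responding_weight > max_factor


# Hypothetical cells.
ok_cell = needs_collapsing(100.0, 80.0, cooperating_schools=8)
too_few_schools = needs_collapsing(100.0, 80.0, cooperating_schools=5)
factor_too_large = needs_collapsing(100.0, 30.0, cooperating_schools=8)
```

Cells flagged by such a check would then be merged with adjacent cells, starting from the bottom of the nesting structure, and the check repeated on the merged cell.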

Public School Samples at Grades 4 and 8

For the grade 4 and 8 public school samples, cells with the most similar race/ethnicity classification within a given jurisdiction/Trial Urban District Assessment (TUDA) district and urbanicity (urban-centric locale) stratum were collapsed first. If further collapsing was required after all levels of race/ethnicity strata were collapsed, cells with the most similar urbanicity strata were combined next. Cells were never permitted to be collapsed across jurisdictions or TUDA districts.

Public School Sample at Grade 12

For the grade 12 public school sample, race/ethnicity classification cells within a given census division stratum and urbanicity stratum were collapsed first. If further collapsing was required after all levels of race/ethnicity classification were collapsed, cells with the most similar urbanicity strata were combined next. Any further collapsing occurred across census division strata but never across census regions.

Private School Samples at Grades 4, 8, and 12

For the private school samples, cells with the most similar race/ethnicity classification within a given affiliation, census division, and urbanicity stratum were collapsed first. If further collapsing was required after all levels of race/ethnicity strata were collapsed, cells with the most similar urbanicity classification were combined. Any further collapsing occurred across census division strata but never across affiliations.

NAEP Technical Documentation School Nonresponse Adjustment Factor Calculation

In each final school nonresponse adjustment cell c, the school nonresponse adjustment factor SCH_NRAFc was computed as follows:

![]()

where

Sc is the set of all eligible sampled schools (cooperating original and substitute schools and refusing original schools with noncooperating or no assigned substitute) in cell c,

Rc is the set of all cooperating schools within Sc,

SCH_BWTs is the school base weight,

SCH_TRIMs is the school-level weight trimming factor,

SCHSESWTs is the school-level session assignment weight, and

Xs is the estimated grade enrollment corresponding to the original sampled school.
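The definitions above can be illustrated with a minimal Python sketch. It assumes the factor is the ratio of the weighted enrollment total over all eligible schools in the cell to the same total over cooperating schools only; the `School` class, its field names, and the example values are hypothetical and are not part of the NAEP production system.

```python
from dataclasses import dataclass


@dataclass
class School:
    base_weight: float     # SCH_BWT_s
    trim_factor: float     # SCH_TRIM_s
    session_weight: float  # SCHSESWT_s
    enrollment: float      # X_s, estimated grade enrollment
    cooperating: bool      # True if the school belongs to R_c


def school_nraf(cell):
    """Return the nonresponse adjustment factor for one final cell (S_c)."""
    def weighted_enrollment(s):
        return s.base_weight * s.trim_factor * s.session_weight * s.enrollment

    eligible_total = sum(weighted_enrollment(s) for s in cell)
    cooperating_total = sum(weighted_enrollment(s) for s in cell if s.cooperating)
    if cooperating_total == 0:
        raise ValueError("cell has no cooperating schools; collapse it first")
    return eligible_total / cooperating_total


# Hypothetical cell: two cooperating schools and one refusal.
cell = [
    School(10.0, 1.0, 1.0, 100.0, True),
    School(10.0, 1.0, 1.0, 100.0, False),
    School(20.0, 1.0, 1.0, 50.0, True),
]
factor = school_nraf(cell)  # 3000 / 2000 = 1.5
```

In this example the cooperating schools carry two thirds of the weighted enrollment, so their weights are inflated by 1.5 to represent the whole cell.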

NAEP Technical Documentation Student Nonresponse Weight Adjustment

The student nonresponse adjustment procedure inflates the weights of assessed students to account for eligible sampled students who did not participate in the assessment. These inflation factors offset the loss of data associated with absent students. The adjustments are computed within nonresponse cells and are based on the assumption that the assessed and absent students within the same cell are more similar to one another than to students from different cells. Like its counterpart at the school level, the student nonresponse adjustment is intended to reduce the mean square error and thus improve the accuracy of NAEP assessment estimates. Also, like its counterpart at the school level, student nonresponse adjustments were carried out separately by sample; that is, by

grade (4, 8, 12),

school type (public, private), and

assessment subject (mathematics, reading, science, meaning vocabulary).

NAEP Technical Documentation Development of Initial Student Nonresponse Cells for the 2013 Assessment

Initial student nonresponse cells are generally created within each sample as defined by grade, school type (public, private), and assessment subject. However, when subjects are administered together by way of spiraling, the initial student nonresponse cells are created across the subjects in the same spiral. The rationale behind this decision is that spiraled subjects are in the same schools, and the likelihood that an eligible student participates in an assessment is related more to the student's school than to the subject of the assessment booklet. In NAEP 2013, there was only one spiral, with the reading and mathematics assessments spiraled together. The initial student nonresponse cells for the various NAEP 2013 samples are described below.

Nonresponse adjustment procedures are not applied to excluded students because they are not required to complete an assessment.

Public School Samples for Reading and Mathematics at Grades 4 and 8

The initial student nonresponse cells for these samples were defined within grade, jurisdiction, and Trial Urban District Assessment (TUDA) district using the following nesting cell structure:

students with disabilities (SD)/English language learners (ELL) by subject,

school nonresponse cell,

age (classified into "older"¹ student and "modal age or younger" student), gender, and

race/ethnicity.

The highest level variable in the cell structure separates students who were classified either as having disabilities (SD) or as English language learners (ELL) from those who are neither, since SD or ELL students tend to score lower on assessment tests than non-SD/non-ELL students. In addition, the students in the SD or ELL groups are further broken down by subject, since rules for excluding students from the assessment differ by subject. Non-SD and non-ELL students are not broken down by subject, since the exclusion rules do not apply to them.

Public School Samples for Reading and Mathematics at Grade 12

The initial weighting cells for these samples were formed hierarchically within state for the state-reportable samples and the balance of the country for remaining states as follows:

SD/ELL,

school nonresponse cell,

age (classified into "older"¹ student and "modal age or younger" student), gender, and

race/ethnicity.

Private School Samples for Reading and Mathematics at Grades 4, 8, and 12

The initial weighting cells for these private school samples were formed hierarchically within grade as follows:

SD/ELL,

school nonresponse cell,

age (classified into "older"¹ student and "modal age or younger" student), gender, and

race/ethnicity.

Although exclusion rules differ by subject, there were not enough SD or ELL private school students to break out by subject as was done for the public schools.

¹ Older students are those born before October 1, 2002, for grade 4; October 1, 1998, for grade 8; and October 1, 1994, for grade 12.

NAEP Technical Documentation Development of Final Student Nonresponse Cells for the 2013 Assessment

Similar to the school nonresponse adjustment, cell and adjustment factor size constraints are in place to prevent unstable nonresponse adjustments or unacceptably large adjustment factors. All initial weighting cells with either fewer than 20 participating students or adjustment factors greater than 2.0 for the full sample weight were collapsed with suitable adjacent cells. Simultaneously, all initial weighting cells for any replicate with either fewer than 15 participating students or an adjustment factor greater than the maximum of 2.0 or 1.5 times the full sample nonresponse adjustment factor were collapsed with suitable adjacent cells.

Initial weighting cells were generally collapsed in reverse order of the cell structure; that is, starting at the bottom of the nesting structure and working up toward the top level of the nesting structure. Race/ethnicity cells within SD/ELL groups, school nonresponse cell, age, and gender classes were collapsed first. If further collapsing was required after collapsing all race/ethnicity classes, cells were next combined across gender, then age, and finally school nonresponse cells. Cells are never collapsed across SD and ELL groups for any sample.
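The size and factor constraints for student nonresponse cells can be expressed as a simple check. The following Python sketch is illustrative only; the function name and arguments are hypothetical, and the thresholds are those stated above for the full sample (20 students, factor 2.0) and for replicates (15 students, the larger of 2.0 and 1.5 times the full-sample factor).

```python
def needs_collapsing(n_participating, factor, full_sample_factor=None):
    """Return True if an initial student nonresponse cell must be collapsed.

    Pass full_sample_factor=None to apply the full-sample rule; pass the
    cell's full-sample adjustment factor to apply the replicate rule.
    """
    if full_sample_factor is None:
        # Full-sample rule: fewer than 20 students or factor above 2.0.
        return n_participating < 20 or factor > 2.0
    # Replicate rule: fewer than 15 students or factor above
    # max(2.0, 1.5 x full-sample factor).
    return n_participating < 15 or factor > max(2.0, 1.5 * full_sample_factor)


# Hypothetical cells.
small_cell = needs_collapsing(19, 1.2)                              # too few students
large_factor = needs_collapsing(25, 2.1)                            # factor too large
replicate_ok = needs_collapsing(15, 2.4, full_sample_factor=1.7)    # limit is 2.55
replicate_bad = needs_collapsing(15, 2.6, full_sample_factor=1.7)   # exceeds 2.55
```

A cell flagged by this check would then be merged with an adjacent cell following the collapsing order described above, which the sketch does not implement.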

NAEP Technical Documentation Student Nonresponse Adjustment Factor Calculation

In each final student nonresponse adjustment cell c for a given sample, the student nonresponse adjustment factor STU_NRAFc was computed as follows:

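$$\text{STU\_NRAF}_c = \frac{\sum_{k \in S_c} \text{STU\_BWT}_k \times \text{SCH\_TRIM}_k \times \text{SCH\_NRAF}_k \times \text{SUBJFAC}_k}{\sum_{k \in R_c} \text{STU\_BWT}_k \times \text{SCH\_TRIM}_k \times \text{SCH\_NRAF}_k \times \text{SUBJFAC}_k}$$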

where

Sc is the set of all eligible sampled students in cell c for a given sample,

Rc is the set of all assessed students within Sc,

STU_BWTk is the student base weight for a given student k,

SCH_TRIMk is the school-level weight trimming factor for the school associated with student k,

SCH_NRAFk is the school-level nonresponse adjustment factor for the school associated with student k, and

SUBJFACk is the subject factor for a given student k.

The student weight used in the calculation above is the adjusted student base weight, without regard to subject, adjusted for school weight trimming and school nonresponse.

Nonresponse adjustment procedures are not applied to excluded students because they are not required to complete an assessment. In effect, excluded students were placed in a separate nonresponse cell by themselves and all received an adjustment factor of 1. While excluded students are not included in the analysis of the NAEP scores, weights are provided for excluded students in order to estimate the size of this group and its population characteristics.

NAEP Technical Documentation School and Student Weight Trimming Adjustments for the 2013 Assessment

Weight trimming is an adjustment procedure that involves detecting and reducing extremely large weights. "Extremely large weights" generally refer to large sampling weights that were not anticipated in the design of the sample. Unusually large weights are likely to produce large sampling variances for statistics of interest, especially when the large weights are associated with sample cases reflective of rare or atypical characteristics. To reduce the impact of these large weights on variances, weight reduction methods are typically employed. The goal of employing weight reduction methods is to reduce the mean square error of survey estimates. While the trimming of large weights reduces variances, it also introduces some bias. However, it is presumed that the reduction in the variances more than compensates for the increase in the bias, thereby reducing the mean square error and thus improving the accuracy of survey estimates (Potter 1988). NAEP employs weight trimming at both the school and student levels.

NAEP Technical Documentation Trimming of School Base Weights

Large school weights can occur for schools selected from the NAEP new-school sampling frame and for private schools. New schools that are eligible for weight trimming are schools with a disproportionately large student enrollment in a particular grade from a school district that was selected with a low probability of selection. The school base weights for such schools may be large relative to what they would have been if they had been selected as part of the original sample.

To detect extremely large weights among new schools, a comparison was made between a new school's school base weight and its ideal weight (i.e., the weight that would have resulted had the school been selected from the original school sampling frame). If the school base weight was more than three times the ideal weight, a trimming factor was calculated for that school that scaled the base weight back to three times the ideal weight. The calculation of the school-level trimming factor for a new school s is expressed in the following formula:

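$$\text{SCH\_TRIM}_s = \begin{cases} \dfrac{3 \times \text{EXP\_WT}_s}{\text{SCH\_BWT}_s} & \text{if } \text{SCH\_BWT}_s > 3 \times \text{EXP\_WT}_s \\ 1 & \text{otherwise} \end{cases}$$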

where

EXP_WTs is the ideal base weight the school would have received if it had been on the NAEP public school sampling frame, and

SCH_BWTs is the actual school base weight the school received as a sampled school from the new school frame.

Thirty-seven (37) schools out of 377 selected from the new-school sampling frame had their weights trimmed: eight at grade 4, 29 at grade 8, and zero at grade 12.
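The new-school trimming rule described above can be sketched in a few lines of Python. The function name and the example weights are hypothetical; the cap of three times the ideal weight is taken from the text.

```python
def school_trim_factor(base_weight, ideal_weight, cap=3.0):
    """Return SCH_TRIM_s for a school with base weight SCH_BWT_s and
    ideal weight EXP_WT_s."""
    if base_weight > cap * ideal_weight:
        # Scale the base weight back to exactly cap x ideal weight.
        return cap * ideal_weight / base_weight
    return 1.0


# Hypothetical new school whose base weight is four times its ideal weight.
trimmed = school_trim_factor(base_weight=40.0, ideal_weight=10.0)    # 0.75
untrimmed = school_trim_factor(base_weight=25.0, ideal_weight=10.0)  # 1.0
```

Multiplying the first school's base weight of 40 by its factor of 0.75 gives 30, which is three times the ideal weight of 10.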

Private schools eligible for weight trimming were Private School Universe Survey (PSS) nonrespondents who were found subsequently to have either larger enrollments than assumed at the time of sampling, or an atypical probability of selection given their affiliation, the latter being unknown at the time of sampling. For private school s, the formula for computing the school-level weight trimming factor SCH_TRIMs is identical to that used for new schools. For private schools,

EXP_WTs is the ideal base weight the school would have received if it had been on the NAEP private school sampling frame with accurate enrollment and known affiliation, and

SCH_BWTs is the actual school base weight the school received as a sampled private school.

No private schools had their weights trimmed.

NAEP Technical Documentation Trimming of Student Weights

Large student weights generally come from compounding nonresponse adjustments at the school and student levels with artificially low school selection probabilities, which can result from inaccurate enrollment data on the school frame used to define the school size measure. Even though measures are in place to limit the number and size of excessively large weights—such as the implementation of adjustment factor size constraints in both the school and student nonresponse procedures and the use of the school trimming procedure—large student weights can occur due to compounding effects of the various weighting components.

The student weight trimming procedure uses a multiple median rule to detect excessively large student weights. Any student weight within a given trimming group greater than a specified multiple of the median weight value of the given trimming group has its weight scaled back to that threshold. Student weight trimming was implemented separately by grade, school type (public or private), and subject. The multiples used were 3.5 for public school trimming groups and 4.5 for private school trimming groups. Trimming groups were defined by jurisdiction and Trial Urban District Assessment (TUDA) districts for the public school samples at grades 4 and 8; by dichotomy of low/high percentage of Black and Hispanic students (15 percent and below, above 15 percent) for the public school sample at grade 12; and by affiliation (Catholic, Non-Catholic) for private school samples at grades 4, 8 and 12.

The procedure computes the median of the nonresponse-adjusted student weights in the trimming group g for a given grade and subject sample. Any student k with a weight more than M times the median received a trimming factor calculated as follows:

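$$\text{STU\_TRIM}_{gk} = \begin{cases} \dfrac{M \times \text{MEDIAN}_g}{\text{STUWGT}_{gk}} & \text{if } \text{STUWGT}_{gk} > M \times \text{MEDIAN}_g \\ 1 & \text{otherwise} \end{cases}$$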

where

M is the trimming multiple,

MEDIANg is the median of nonresponse-adjusted student weights in trimming group g, and

STUWGTgk is the weight after student nonresponse adjustment for student k in trimming group g.

In the 2013 assessment, relatively few students had weights considered excessively large. Out of the approximately 840,000 students included in the combined 2013 assessment samples, 226 students had their weights trimmed.
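The multiple median rule can be illustrated with a short Python sketch for a single trimming group. The function name and the example weights are hypothetical; the multiple of 3.5 is the value the text gives for public school trimming groups.

```python
from statistics import median


def student_trim_factors(weights, multiple):
    """Return one trimming factor per student in a trimming group.

    weights  -- nonresponse-adjusted student weights in the group
    multiple -- trimming multiple M (3.5 public, 4.5 private per the text)
    """
    threshold = multiple * median(weights)
    # Weights above the threshold are scaled back to it; others are unchanged.
    return [threshold / w if w > threshold else 1.0 for w in weights]


# Hypothetical public school trimming group with one outlying weight.
group_weights = [10.0, 10.0, 10.0, 10.0, 100.0]
factors = student_trim_factors(group_weights, multiple=3.5)
trimmed_weights = [w * f for w, f in zip(group_weights, factors)]
```

Here the median is 10, so the threshold is 35 and only the weight of 100 is trimmed, receiving a factor of 0.35.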

NAEP Technical Documentation Student Weight Raking Adjustment for the 2013 Assessment

Weighted estimates of population totals for student-level subgroups for a given grade will vary across subjects even though the student samples for each subject generally come from the same schools. These differences are the result of sampling error associated with the random assignment of subjects to students through a process known as spiraling. For state assessments in particular, any difference in demographic estimates between subjects, no matter how small, may raise concerns about data quality. To remove these random differences and potential data quality concerns, a new step was added to the NAEP weighting procedure starting in 2009. This step adjusts the student weights in such a way that the weighted sums of population totals for specific subgroups are the same across all subjects. It was implemented using a raking procedure and applied only to state-level assessments.

Raking is a weighting procedure based on the iterative proportional fitting process developed by Deming and Stephan (1940) and involves simultaneous ratio adjustments to two or more marginal distributions of population totals. Each set of marginal population totals is known as a dimension, and each population total in a dimension is referred to as a control total. Raking is carried out in a sequence of adjustments. Sampling weights are adjusted to one marginal distribution and then to the second marginal distribution, and so on. One cycle of sequential adjustments to the marginal distributions is called an iteration. The procedure is repeated until convergence is achieved. The criterion for convergence can be specified either as the maximum number of iterations or an absolute difference (or relative absolute difference) from the marginal population totals. More discussion on raking can be found in Oh and Scheuren (1987).

For NAEP 2013, the student raking adjustment was carried out separately in each state for the reading and mathematics public school samples at grades 4 and 8, and in the 13 states with state-reportable samples for the reading and mathematics public school samples at grade 12. The dimensions used in the raking process were National School Lunch Program (NSLP) eligibility, race/ethnicity, SD/ELL status, and gender. The control totals for these dimensions were obtained from the NAEP student sample weights of the reading and mathematics samples combined.

NAEP Technical Documentation Development of Final Raking Dimensions

The raking procedure involved four dimensions. The variables used to define the dimensions are listed below along with the categories making up the initial raking cells for each dimension.

National School Lunch Program (NSLP) eligibility: Eligible for free or reduced-price lunch; Otherwise

Race/Ethnicity: White, not Hispanic; Black, not Hispanic; Hispanic; Asian; American Indian/Alaska Native; Native Hawaiian/Pacific Islander; Two or More Races

SD/ELL status: SD, but not ELL; ELL, but not SD; SD and ELL; Neither SD nor ELL

Gender: Male; Female

In states containing districts that participated in the Trial Urban District Assessment (TUDA) at grades 4 and 8, the initial cells were created separately for each TUDA district and the balance of the state. Similar to the procedure used for school and student nonresponse adjustments, limits were placed on the magnitude of the cell sizes and adjustment factors to prevent unstable raking adjustments that could have resulted in unacceptably large or small adjustment factors. Levels of a dimension were combined whenever there were fewer than 30 assessed or excluded students (20 for any of the replicates) in a category, if the smallest adjustment was less than 0.5, or if the largest adjustment was greater than 2 for the full sample or for any replicate.

If collapsing was necessary for the race/ethnicity dimension, the following groups were combined first: American Indian/Alaska Native with Black, not Hispanic; Native Hawaiian/Pacific Islander with Black, not Hispanic; Two or More Races with White, not Hispanic; Asian with White, not Hispanic; and Black, not Hispanic with Hispanic. If further collapsing was necessary, the five categories American Indian/Alaska Native; Two or More Races; Asian; Native Hawaiian/Pacific Islander; and White, not Hispanic were combined. In some instances, all seven categories had to be collapsed.

If collapsing was necessary for the SD/ELL dimension, the SD/not ELL and SD/ELL categories were combined first, followed by ELL/not SD if further collapsing was necessary. In some instances, all four categories had to be collapsed.

NAEP Technical Documentation Raking Adjustment Control Totals for the 2013 Assessment

The control totals used in the raking procedure for NAEP 2013 grades 4, 8, and 12 were estimates of the student population derived from the set of assessed and excluded students pooled across subjects. The control totals for category c within dimension d were computed as follows:

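$$\text{Total}_{c(d)} = \sum_{k \in R_{c(d)} \cup E_{c(d)}} \text{STU\_BWT}_k \times \text{SCH\_TRIM}_k \times \text{SCH\_NRAF}_k \times \text{STU\_NRAF}_k \times \text{SUBJFAC}_k$$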

where

Rc(d) is the set of all assessed students in category c of dimension d,

Ec(d) is the set of all excluded students in category c of dimension d,

STU_BWTk is the student base weight for a given student k,

SCH_TRIMk is the school-level weight trimming factor for the school associated with student k,

SCH_NRAFk is the school-level nonresponse adjustment factor for the school associated with student k,

STU_NRAFk is the student-level nonresponse adjustment factor for student k, and

SUBJFACk is the subject factor for student k.

The student weight used in the calculation of the control totals above is the adjusted student base weight, without regard to subject, adjusted for school weight trimming, school nonresponse, and student nonresponse. Control totals were computed for the full sample and for each replicate independently.

NAEP Technical Documentation Raking Adjustment Factor Calculation for the 2013 Assessment

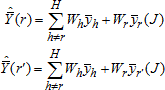

For assessed and excluded students in a given subject, the raking adjustment factor STU_RAKEk was computed as follows:

First, the weight for student k was initialized as follows:

$$\text{STUSAWT}_k^{(0)} = \text{STU\_BWT}_k \times \text{SCH\_TRIM}_k \times \text{SCH\_NRAF}_k \times \text{STU\_NRAF}_k \times \text{SUBJFAC}_k$$

where

STU_BWTk is the student base weight for a given student k,

SCH_TRIMk is the school-level weight trimming factor for the school associated with student k,

SCH_NRAFk is the school-level nonresponse adjustment factor for the school associated with student k,

STU_NRAFk is the student-level nonresponse adjustment factor for student k, and

SUBJFACk is the subject factor for student k.

Then, the sequence of weights for the first iteration was calculated as follows for student k in category c of dimension d:

For dimension 1:

$$\text{STUSAWT}_k^{(1)} = \frac{\text{Total}_{c(1)}}{\sum_{k' \in R_{c(1)} \cup E_{c(1)}} \text{STUSAWT}_{k'}^{(0)}} \times \text{STUSAWT}_k^{(0)}$$

For dimension 2:

$$\text{STUSAWT}_k^{(2)} = \frac{\text{Total}_{c(2)}}{\sum_{k' \in R_{c(2)} \cup E_{c(2)}} \text{STUSAWT}_{k'}^{(1)}} \times \text{STUSAWT}_k^{(1)}$$

For dimension 3:

$$\text{STUSAWT}_k^{(3)} = \frac{\text{Total}_{c(3)}}{\sum_{k' \in R_{c(3)} \cup E_{c(3)}} \text{STUSAWT}_{k'}^{(2)}} \times \text{STUSAWT}_k^{(2)}$$

For dimension 4:

$$\text{STUSAWT}_k^{(4)} = \frac{\text{Total}_{c(4)}}{\sum_{k' \in R_{c(4)} \cup E_{c(4)}} \text{STUSAWT}_{k'}^{(3)}} \times \text{STUSAWT}_k^{(3)}$$

where

Rc(d) is the set of all assessed students in category c of dimension d,

Ec(d) is the set of all excluded students in category c of dimension d, and

Totalc(d) is the control total for category c of dimension d.

If the weighted totals had not converged to the control totals after the fourth dimension, the sequence of adjustments was repeated, starting from the weights produced by the previous iteration, until convergence was reached.

Once the process converged, the adjustment factor was computed as follows:

$$\text{STU\_RAKE}_k = \frac{\text{STUSAWT}_k}{\text{STUSAWT}_k^{(0)}}$$

where STUSAWTk is the weight for student k after convergence and the superscript (0) denotes the initialized weight.

The process was done independently for the full sample and for each replicate.
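The raking procedure can be illustrated with a minimal Python sketch of iterative proportional fitting. It is a simplified illustration, not the NAEP production code: the function names, the data layout, the convergence tolerance `tol`, and the iteration cap `max_iter` are all assumptions, and the sketch assumes every category with a control total contains at least one student.

```python
def _category_sums(weights, cats):
    """Sum the weights within each category of one dimension."""
    sums = {}
    for w, c in zip(weights, cats):
        sums[c] = sums.get(c, 0.0) + w
    return sums


def rake(init_weights, categories, control_totals, tol=1e-6, max_iter=100):
    """Rake weights to the control totals of each dimension in turn.

    init_weights   -- initialized weight for each student
    categories     -- categories[d][k] is student k's category in dimension d
    control_totals -- control_totals[d][c] is the control total for category c
    Returns (raked weights, raking adjustment factors).
    """
    w = list(init_weights)
    for _ in range(max_iter):
        # One iteration: a ratio adjustment to each dimension in sequence.
        for cats, totals in zip(categories, control_totals):
            sums = _category_sums(w, cats)
            w = [wk * totals[c] / sums[c] for wk, c in zip(w, cats)]
        # Largest absolute gap between weighted sums and control totals.
        max_diff = max(
            abs(_category_sums(w, cats)[c] - totals[c])
            for cats, totals in zip(categories, control_totals)
            for c in totals
        )
        if max_diff <= tol:
            break
    factors = [wk / w0 for wk, w0 in zip(w, init_weights)]
    return w, factors


# Hypothetical example with two dimensions and four students.
gender = ["M", "M", "F", "F"]
lunch = ["E", "O", "E", "O"]
raked, rake_factors = rake(
    [1.0, 1.0, 1.0, 1.0],
    [gender, lunch],
    [{"M": 6.0, "F": 4.0}, {"E": 5.0, "O": 5.0}],
)
```

In this small example the first pass already matches both sets of control totals, so the raked weights are 3, 3, 2, and 2 and the adjustment factors equal the raked weights.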


NAEP Technical Documentation Computation of Replicate Weights for the 2013 Assessment

In addition to the full-sample weight, a set of 62 replicate weights was provided for each student. These replicate weights are used in calculating the sampling variance of estimates obtained from the data, using the jackknife repeated replication method. The method of deriving these weights was aimed at reflecting the features of the sample design appropriately for each sample, so that when the jackknife variance estimation procedure is implemented, approximately unbiased estimates of sampling variance are obtained. This section gives the specifics for generating the replicate weights for the 2013 assessment samples. The theory that underlies the jackknife variance estimators used in NAEP studies is discussed in the section Replicate Variance Estimation.

In general, the process of creating jackknife replicate weights takes place at both the school and student level. The precise implementation differs between those samples that involve the selection of Primary Sampling Units (PSUs) and those where the school is the first stage of sampling. Starting in 2011, the procedure for this second kind of sample also differed from that used in all previous NAEP assessments. The change permitted the introduction of a finite population correction factor at the school sampling stage, developed by Rizzo and Rust (2011). In assessments prior to 2011, this adjustment factor was implicitly assumed to equal 1.0, resulting in some overestimation of the sampling variance.

For each sample, the calculation of replicate weighting factors at the school level was conducted in a series of steps. First, each school was assigned to one of 62 variance estimation strata. Then, a random subset of schools in each variance estimation stratum was assigned a replicate factor of between 0 and 1. Next, the remaining subset of schools in the same variance stratum was assigned a complementary replicate factor greater than 1. All schools in the other variance estimation strata were assigned a replicate factor of exactly 1. This process was repeated for each of the 62 variance estimation strata so that 62 distinct replicate factors were assigned to each school in the sample.

This process was then repeated at the student level. Here, each individual sampled student was assigned to one of 62 variance estimation strata, and 62 replicate factors with values either between 0 and 1, greater than 1, or exactly equal to 1 were assigned to each student.

For example, consider a single hypothetical student. For replicate 37, that student’s student replicate factor might be 0.8, while for the school to which the student belongs, for replicate 37, the school replicate factor might be 1.6. Of course, for a given student, for most replicates, either the student replicate factor, the school replicate factor, or (usually) both, is equal to 1.0.

A replicate weight was calculated for each student, for each of the 62 replicates, using weighting procedures similar to those used for the full-sample weight. Each replicate weight contains the school and student replicate factors described above. By repeating the various weighting procedures on each set of replicates, the impact of these procedures on the sampling variance of an estimate is appropriately reflected in the variance estimate.

Each of the 62 replicate weights for student k in school s in stratum j can be expressed as follows:

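$$\text{Replicate weight}_{jsk}(r) = \text{STU\_BWT}_{jsk} \times \text{SCH\_REPFAC}_{js}(r) \times \text{SCH\_NRAF}_{js}(r) \times \text{STU\_REPFAC}_{jsk}(r) \times \text{STU\_NRAF}_{jsk}(r) \times \text{SCH\_TRIM}_{js} \times \text{STU\_TRIM}_{jsk} \times \text{STU\_RAKE}_{jsk}(r)$$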

where

STU_BWTjsk is the student base weight;

SCH_REPFACjs(r) is the school-level replicate factor for replicate r;

SCH_NRAFjs(r) is the school-level nonresponse adjustment factor for replicate r;

STU_REPFACjsk(r) is the student-level replicate factor for replicate r;

STU_NRAFjsk(r) is the student-level nonresponse adjustment factor for replicate r;

SCH_TRIMjs is the school-level weight trimming adjustment factor;

STU_TRIMjsk is the student-level weight trimming adjustment factor; and

STU_RAKEjsk(r) is the student-level raking adjustment factor for replicate r.

Specific school and student nonresponse and student-level raking adjustment factors were calculated separately for each replicate, thus the use of the index (r), and applied to the replicate student base weights. Computing separate nonresponse and raking adjustment factors for each replicate allows resulting variances from the use of the final student replicate weights to reflect components of variance due to these various weight adjustments.

School and student weight trimming adjustments were not replicated, that is, not calculated separately for each replicate. Instead, each replicate used the school and student trimming adjustment factors derived for the full sample. Statistical theory for replicating trimming adjustments under the jackknife approach has not been developed in the literature. Due to the absence of a statistical framework, and since relatively few school and student weights in NAEP require trimming, the weight trimming adjustments were not replicated.
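As an illustration of how the components listed above fit together, the following Python sketch assumes they combine multiplicatively. The function name, argument names, and example values are hypothetical; the replicate factors of 1.6 and 0.8 echo the hypothetical student described earlier for replicate 37.

```python
from math import prod


def replicate_weight(stu_bwt, sch_repfac_r, sch_nraf_r, stu_repfac_r,
                     stu_nraf_r, sch_trim, stu_trim, stu_rake_r):
    """Combine the weight components for one student and one replicate r.

    Arguments ending in _r are recomputed for each replicate; sch_trim and
    stu_trim are the full-sample trimming factors, reused for every replicate.
    """
    return prod([stu_bwt, sch_repfac_r, sch_nraf_r, stu_repfac_r,
                 stu_nraf_r, sch_trim, stu_trim, stu_rake_r])


# Hypothetical student: base weight 50, school replicate factor 1.6,
# student replicate factor 0.8, no trimming, raking factor 1.0.
weight_r37 = replicate_weight(
    stu_bwt=50.0, sch_repfac_r=1.6, sch_nraf_r=1.1, stu_repfac_r=0.8,
    stu_nraf_r=1.2, sch_trim=1.0, stu_trim=1.0, stu_rake_r=1.0,
)
```

Because the trimming factors are not replicated, only the arguments ending in `_r` would change from one replicate to the next for a given student.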

NAEP Technical Documentation Defining Variance Strata and Forming Replicates for the 2013 Assessment

In the NAEP 2013 assessment, replicates were formed separately for each sample indicated by grade (4, 8, 12), school type (public, private), and assessment subject (mathematics, reading). To reflect the school-level finite population corrections in the variance estimators for the two-stage samples used for the mathematics and reading assessments, replication was carried out at both the school and student levels.

The first step in forming replicates was to create preliminary variance strata in each primary stratum. This was done by sorting the appropriate sampling units (schools or students) in the order of their selection within the primary stratum and then pairing off adjacent sampling units into preliminary variance strata. Sorting sample units by their order of sample selection reflects the implicit stratification and systematic sampling features of the sample design. Within each primary stratum with an even number of sampling units, all of the preliminary variance strata consisted of pairs of sampling units. However, within primary strata with an odd number of sampling units, all but one of the preliminary variance strata consisted of pairs of sampling units, while the last one consisted of three sampling units.

The next step was to form the final variance strata by combining preliminary strata if appropriate. If there were more than 62 preliminary variance strata within a primary stratum, the preliminary variance strata were grouped to form 62 final variance strata. This grouping effectively maximized the distance in the sort order between grouped preliminary variance strata. The first 62 preliminary variance strata, for example, were assigned to 62 different final variance strata in order (1 through 62), with the next 62 preliminary variance strata assigned to final variance strata 1 through 62, so that, for example, preliminary variance stratum 1, preliminary variance stratum 63, preliminary variance stratum 125 (if in fact there were that many), etc., were all assigned to the first final variance stratum.

If, on the other hand, there were fewer than 62 preliminary variance strata within a primary stratum, then the number of final variance strata was set equal to the number of preliminary variance strata. For example, consider a primary stratum with 111 sampled units sorted in their order of selection. The first two units were in the first preliminary variance stratum; the next two units were in the second preliminary variance stratum, and so on, resulting in 54 preliminary variance strata with two sample units each (doublets). The last three sample units were in the 55th preliminary variance stratum (triplet). Since there are no more than 62 preliminary variance strata, these were also the final variance strata.
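The pairing and grouping rules above can be sketched in Python as follows. The function names are hypothetical, units are identified by their 0-based position in the order of selection, and the random assignment of variance units within each stratum is not shown.

```python
def preliminary_strata(n_units):
    """Pair adjacent units in selection order; with an odd count, the last
    preliminary variance stratum holds three units."""
    if n_units < 2:
        raise ValueError("need at least two sampling units to form a pair")
    strata = [[i, i + 1] for i in range(0, n_units - 1, 2)]
    if n_units % 2 == 1:
        strata[-1].append(n_units - 1)  # turn the last doublet into a triplet
    return strata


def final_stratum_ids(n_preliminary, n_final=62):
    """Assign preliminary strata to final strata 1..n_final cyclically, so
    that preliminary strata 1, 63, 125, ... share final stratum 1."""
    return [(p % n_final) + 1 for p in range(n_preliminary)]


# The example from the text: 111 sampled units in one primary stratum.
prelim = preliminary_strata(111)
n_doublets = sum(1 for s in prelim if len(s) == 2)
n_triplets = sum(1 for s in prelim if len(s) == 3)
final_ids = final_stratum_ids(len(prelim))

# A hypothetical primary stratum with 130 preliminary variance strata.
wrapped_ids = final_stratum_ids(130)
```

For 111 units this yields 54 doublets and one triplet, and because 55 is fewer than 62, each preliminary variance stratum becomes its own final variance stratum.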

Within each preliminary variance stratum containing a pair of sampling units, one sampling unit was randomly assigned as the first variance unit and the other as the second variance unit. Within each preliminary variance stratum containing three sampling units, the three first-stage units were randomly assigned variance units 1 through 3.
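A minimal sketch of the random assignment of variance units follows. The function name, the school labels, and the fixed seed are illustrative assumptions; the source does not say which random number generator was used.

```python
import random


def assign_variance_units(stratum_units, rng):
    """Randomly assign variance units 1..n within one preliminary stratum.

    n is 2 for a pair and 3 for a triplet.
    """
    labels = list(range(1, len(stratum_units) + 1))
    rng.shuffle(labels)  # random permutation of the variance unit labels
    return dict(zip(stratum_units, labels))


rng = random.Random(2013)  # fixed seed only to make the illustration repeatable
pair = assign_variance_units(["school_A", "school_B"], rng)
triplet = assign_variance_units(["school_C", "school_D", "school_E"], rng)
```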

Reading and Mathematics Assessments

At the school level for these samples, formation of preliminary variance strata did not pertain to certainty schools, since they are not subject to sampling variability, but only to noncertainty schools. The primary stratum for noncertainty schools was the highest school-level sampling stratum variable listed below, and the order of selection was defined by sort order on the school sampling frame.

Trial Urban District Assessment (TUDA) districts, remainder of states (for states with TUDAs), or entire states for the public school samples at grades 4, 8, and 12; and

Private school affiliation (Catholic, non-Catholic) for the private school samples at grades 4, 8, and 12.

At the student level, all students were assigned to variance strata. The primary stratum was school, and the order of selection was defined by session number and position on the administration schedule.

Within each preliminary variance stratum containing a pair of first-stage units, one first-stage unit, designated at random, was assigned as the first variance unit and the other first-stage unit as the second variance unit. Within each triplet preliminary variance stratum, the three schools were randomly assigned variance units 1 through 3.

NAEP Technical Documentation Computing School-Level Replicate Factors for the 2013 Assessment

The replicate variance estimation approach for the mathematics and reading assessments involved finite population corrections at the school level. The calculation of school-level replicate factors for these assessments depended upon whether or not a school was selected with certainty. For certainty schools, the school-level replicate factors for all replicates are set to unity – this is true regardless of whether or not the variance replication method uses finite population corrections – since certainty schools are not subject to sampling variability. Alternatively, one can view the finite population correction factor for such schools as being equal to zero. Thus, for each certainty school in a given assessment, the school-level replicate factor for each of the 62 replicates (r = 1, ..., 62) was assigned as follows:

$$\text{SCH\_REPFAC}_{js}(r) = 1$$

where SCH_REPFACjs(r) is the school-level replicate factor for school s in primary stratum j for the r-th replicate.

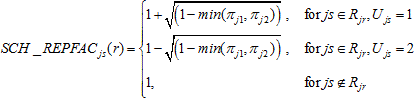

For noncertainty schools, where preliminary variance strata were formed by grouping schools into pairs or triplets, school-level replicate factors were calculated for each of the 62 replicates based on this grouping. For schools in variance strata comprising pairs of schools, the school-level replicate factors, SCH_REPFACjs(r), r = 1, ..., 62, were calculated as follows:

where

$\min(\pi_{j1}, \pi_{j2})$ is the smallest school probability between the two schools comprising $R_{jr}$,

$R_{jr}$ is the set of schools within the r-th variance stratum for primary stratum j, and

$U_{js}$ is the variance unit (1 or 2) for school s in primary stratum j.
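The following Python sketch illustrates how school-level replicate factors for a pair could be computed from the quantities defined above. It assumes the finite population correction enters as sqrt(1 - min(π_j1, π_j2)), added for variance unit 1 and subtracted for variance unit 2. That functional form and sign convention are assumptions made for illustration, as is the function name; the formula itself is not reproduced in the text here.

```python
import math

N_REPLICATES = 62


def school_pair_replicate_factors(r, variance_unit, pi_pair, n_rep=N_REPLICATES):
    """Replicate factors for one school in a paired variance stratum.

    r             : 1-based variance stratum containing the school
    variance_unit : 1 or 2
    pi_pair       : selection probabilities of the two schools in the pair
    ASSUMPTION: the perturbation is sqrt(1 - min(pi)), with + for
    variance unit 1 and - for variance unit 2.
    """
    if variance_unit not in (1, 2):
        raise ValueError("variance_unit must be 1 or 2")
    fpc = math.sqrt(1.0 - min(pi_pair))
    factors = [1.0] * n_rep  # factor is 1 in every other replicate
    factors[r - 1] = 1.0 + fpc if variance_unit == 1 else 1.0 - fpc
    return factors


unit1 = school_pair_replicate_factors(3, 1, (0.75, 0.9))
unit2 = school_pair_replicate_factors(3, 2, (0.75, 0.9))
```

Under this assumed form, a pair whose smaller selection probability approaches 1 receives factors approaching 1 in every replicate, consistent with the statement that certainty schools contribute no school-level sampling variability.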

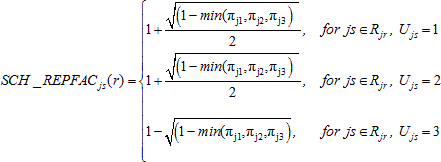

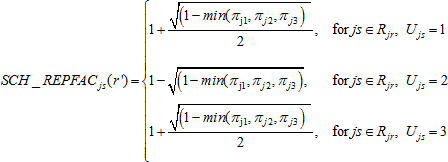

For noncertainty schools in preliminary variance strata comprising three schools, the school-level replicate factors SCH_REPFACjs(r), r = 1,..., 62 were calculated as follows:

For school s from primary stratum j, variance stratum r,

while for r' = r + 31 (mod 62):

and for all other r* other than r and r' :

$$\text{SCH\_REPFAC}_{js}(r^{*}) = 1$$

where

$\min(\pi_{j1}, \pi_{j2}, \pi_{j3})$ is the smallest school probability among the three schools comprising $R_{jr}$,

$R_{jr}$ is the set of schools within the r-th variance stratum for primary stratum j, and

$U_{js}$ is the variance unit (1, 2, or 3) for school s in primary stratum j.

In primary strata with fewer than 62 variance strata, the replicate weights for the “unused” variance strata (the remaining ones up to 62) for these schools were set equal to the school base weight (so that those replicates contribute nothing to the variance estimate).
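A sketch of the triplet case in Python follows. Several details are assumptions for illustration only: the perturbation sqrt(1 - min(π)), the pattern in which two variance units receive 1 + f/2 and the third receives 1 - f, which unit is reduced in replicate r versus r', and the 1-based wrap-around used for r' = r + 31 (mod 62). The function names are hypothetical.

```python
import math

N_REPLICATES = 62


def partner_replicate(r, n_rep=N_REPLICATES):
    """Return r' = r + 31 (mod 62), kept in the 1..62 range (assumed convention)."""
    return (r + n_rep // 2 - 1) % n_rep + 1


def school_triplet_replicate_factors(r, variance_unit, pi_triplet, n_rep=N_REPLICATES):
    """Replicate factors for one school in a three-school variance stratum.

    ASSUMPTION: in replicate r, variance unit 3 is reduced; in replicate r',
    variance unit 2 is reduced; the other two units are raised by half as much.
    """
    if variance_unit not in (1, 2, 3):
        raise ValueError("variance_unit must be 1, 2, or 3")
    f = math.sqrt(1.0 - min(pi_triplet))
    up, down = 1.0 + f / 2.0, 1.0 - f
    factors = [1.0] * n_rep  # all other replicates, including unused ones, stay at 1
    factors[r - 1] = down if variance_unit == 3 else up
    factors[partner_replicate(r, n_rep) - 1] = down if variance_unit == 2 else up
    return factors


unit3 = school_triplet_replicate_factors(40, 3, (0.75, 0.8, 0.9))
```

Because every replicate outside r and r' keeps a factor of 1, the replicate weight there equals the school base weight, which is the behavior described for "unused" variance strata.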


NAEP Technical Documentation Computing Student-Level Replicate Factors for the 2013 Assessment

For the mathematics and reading assessments, which involved school-level finite population corrections, the student-level replication factors were calculated the same way regardless of whether or not the student was in a certainty school.

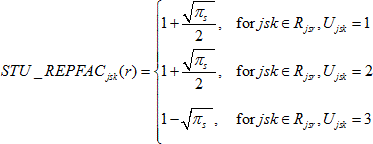

For students in student-level variance strata comprising pairs of students, the student-level replicate factors, STU_REPFACjsk(r), r = 1,..., 62, were calculated as follows:

![]()

where

$\pi_s$ is the probability of selection for school s,

$R_{jsr}$ is the set of students within the r-th variance stratum for school s in primary stratum j, and

$U_{jsk}$ is the variance unit (1 or 2) for student k in school s in stratum j.

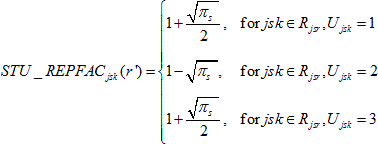

For students in variance strata comprising three students, the student-level replicate factors STU_REPFACjsk(r), r = 1,..., 62, were calculated as follows:

while for r' = r + 31 (mod 62):

and for all other r* other than r and r' :

$$\text{STU\_REPFAC}_{jsk}(r^{*}) = 1$$

where

$\pi_s$ is the probability of selection for school s,

$R_{jsr}$ is the set of students within the r-th replicate stratum for school s in stratum j, and

$U_{jsk}$ is the variance unit (1, 2, or 3) for student k in school s in stratum j.

Note, for students in certainty schools, where πs = 1, the student replicate factors are 2 and 0 in the case of pairs, and 1.5, 1.5, and 0 in the case of triples.
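The certainty-school values quoted in the note can be checked with a small Python sketch. It assumes the student-level perturbation is sqrt(π_s), which reproduces the stated factors of 2 and 0 for pairs and 1.5, 1.5, and 0 for triples when π_s = 1. The functional form, the choice of which variance unit is reduced in each replicate, and the function name are assumptions for illustration.

```python
import math

N_REPLICATES = 62


def student_replicate_factors(r, variance_unit, stratum_size, pi_school,
                              n_rep=N_REPLICATES):
    """Replicate factors for one student in a pair (size 2) or triplet (size 3).

    ASSUMPTION: the perturbation is sqrt(pi_school); for triplets, unit 3 is
    reduced in replicate r and unit 2 in replicate r' = r + 31 (mod 62).
    """
    if stratum_size not in (2, 3) or not 1 <= variance_unit <= stratum_size:
        raise ValueError("invalid stratum size or variance unit")
    scale = math.sqrt(pi_school)
    factors = [1.0] * n_rep
    if stratum_size == 2:
        factors[r - 1] = 1.0 + scale if variance_unit == 1 else 1.0 - scale
        return factors
    r_prime = (r + n_rep // 2 - 1) % n_rep + 1  # 1-based wrap-around
    up, down = 1.0 + scale / 2.0, 1.0 - scale
    factors[r - 1] = down if variance_unit == 3 else up
    factors[r_prime - 1] = down if variance_unit == 2 else up
    return factors


# Students in a certainty school (pi_school = 1), variance stratum r = 5.
pair_values = [student_replicate_factors(5, u, 2, 1.0)[4] for u in (1, 2)]
triple_values = [student_replicate_factors(5, u, 3, 1.0)[4] for u in (1, 2, 3)]
```

With π_s = 1, `pair_values` is `[2.0, 0.0]` and `triple_values` is `[1.5, 1.5, 0.0]`, matching the note. For a noncertainty school, the factors move closer to 1 as π_s decreases.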


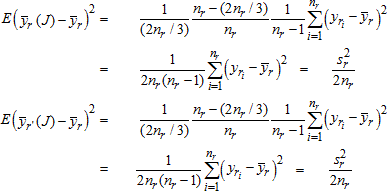

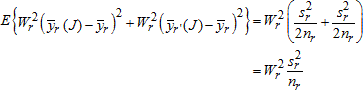

NAEP Technical Documentation Replicate Variance Estimation for the 2013 Assessment

Variances for NAEP assessment estimates are computed using the paired jackknife replicate variance procedure. This technique is applicable for common statistics, such as means and ratios, and differences between these for different subgroups, as well as for more complex statistics such as linear or logistic regression coefficients.

In general, the paired jackknife replicate variance procedure involves initially pairing clusters of first-stage sampling units to form H variance strata (h = 1, 2, 3, ...,H) with two units per stratum. The first replicate is formed by assigning, to one unit at random from the first variance stratum, a replicate weighting factor of less than 1.0, while assigning the remaining unit a complementary replicate factor greater than 1.0, and assigning all other units from the other (H - 1) strata a replicate factor of 1.0. This procedure is carried out for each variance stratum resulting in H replicates, each of which provides an estimate of the population total.

In principle, this process is repeated for subsequent levels of sampling. In practice, this is not practicable for a design with three or more stages of sampling, and the marginal improvement in precision of the variance estimates would be negligible in all such cases in the NAEP setting. Thus in NAEP, when a two-stage design is used – sampling schools and then students – replication has been carried out at both stages beginning in 2011. (See Rizzo and Rust (2011) for a description of the methodology.) When a three-stage design is used, involving the selection of geographic Primary Sampling Units (PSUs), then schools, and then students, the replication procedure is only carried out at the first stage of sampling (the PSU stage for noncertainty PSUs, and the school stage within certainty PSUs). In this situation, the school and student variance components are correctly estimated, and the overstatement of the between-PSU variance component is relatively small.

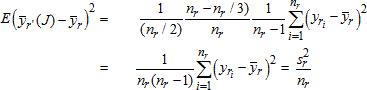

The jackknife estimate of the variance for any given statistic is given by the following formula:

$$v(\hat{t}) = \sum_{h=1}^{H} \left(\hat{t}_h - \hat{t}\right)^2$$

where $\hat{t}$ represents the full sample estimate of the given statistic, and $\hat{t}_h$ represents the corresponding estimate for replicate h.

Each replicate undergoes the same weighting procedure as the full sample so that the jackknife variance estimator reflects the contributions to or reductions in variance resulting from the various weighting adjustments.

The NAEP jackknife variance estimator is based on 62 variance strata, resulting in a set of 62 replicate weights assigned to each school and student.
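As a toy illustration of the paired jackknife, the Python sketch below computes the variance of a weighted mean as the sum of squared differences between each replicate estimate and the full-sample estimate. The data, the two variance strata, and the replicate factors of 2 and 0 (no finite population correction) are made-up assumptions; an actual NAEP analysis would use the 62 replicate weights supplied with the data.

```python
def weighted_mean(values, weights):
    """Weighted mean of `values` using `weights`."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


def jackknife_variance(values, full_weights, replicate_weights):
    """Sum over replicates of (replicate estimate - full-sample estimate)^2."""
    full = weighted_mean(values, full_weights)
    return sum((weighted_mean(values, rw) - full) ** 2 for rw in replicate_weights)


# Hypothetical data: four units forming two variance strata, (0, 1) and (2, 3).
values = [1.0, 3.0, 2.0, 6.0]
full_weights = [1.0, 1.0, 1.0, 1.0]
replicate_weights = [
    [2.0, 0.0, 1.0, 1.0],  # replicate 1 perturbs the first variance stratum
    [1.0, 1.0, 2.0, 0.0],  # replicate 2 perturbs the second variance stratum
]
variance = jackknife_variance(values, full_weights, replicate_weights)
```

Here the full-sample mean is 3.0, the replicate means are 2.5 and 2.0, and the resulting variance estimate is 0.25 + 1.0 = 1.25.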