
Mother and Infant Home Visiting Program Evaluation (MIHOPE): Kindergarten Follow-Up (MIHOPE-K)

OMB: 0970-0402

Alternative Supporting Statement for Information Collections Designed for

Research, Public Health Surveillance, and Program Evaluation Purposes


OMB Information Collection Request

0970 - 0402

Supporting Statement

Part B

November 2021

Submitted By:

Office of Planning, Research, and Evaluation

Administration for Children and Families

U.S. Department of Health and Human Services

4th Floor, Mary E. Switzer Building

330 C Street, SW

Washington, D.C. 20201

Project Officers:

Nancy Geyelin Margie

Laura Nerenberg

Part B

B1. Objectives

Study Objectives

In 2011, the Administration for Children and Families (ACF) and the Health Resources and Services Administration (HRSA) within the U.S. Department of Health and Human Services (HHS) launched the Mother and Infant Home Visiting Program Evaluation (MIHOPE). MIHOPE is providing information about the effectiveness of the Maternal, Infant, and Early Childhood Home Visiting program (MIECHV) in its first few years of operation and providing information to help states and others develop and strengthen home visiting programs in the future. The goals of the study are:

to understand the effects of home visiting programs on parent and child outcomes, both overall and for key subgroups of families,

to understand how home visiting programs were implemented and how implementation varied across programs, and

to understand which features of local home visiting programs are associated with larger or smaller program impacts.

Generalizability of Results

This randomized study is intended to produce internally valid estimates of the causal impact of home visiting, not to promote statistical generalization to other sites or service populations. This study could help ACF, HRSA, and the broader home visiting field understand the long-term impact of home visiting on low-income families.

Appropriateness of Study Design and Methods for Planned Uses

The purpose of this data collection activity is to help us understand the long-term effects of home visiting and the pathways through which home visiting affects families’ long-term outcomes. Evaluating the effect of home visiting on families’ outcomes and the pathways through which home visiting affects families’ long-term outcomes would not be possible without following up with families at multiple time points. For the kindergarten follow-up, data collection methods are similar to those used for the MIHOPE follow-up that occurred when children were 15 months of age. Specifically, information is being gathered from a structured interview conducted with mothers. The study is also drawing on video-recorded interactions of mothers and children playing with toys; direct assessments of children’s language skills, math skills, and executive function; direct assessments of mothers’ executive function; state administrative child welfare data; state school records data; and a survey conducted with children’s teachers.

Measuring children’s cognitive, behavioral, self-regulatory, and social-emotional skills before formal schooling begins or at the outset of formal schooling will provide important data on intermediate effects of home visiting. In addition, a wealth of literature demonstrates that children’s math, language, and social-emotional skills at the time of the transition to formal schooling are predictive of academic and behavioral outcomes over the longer term, and a follow-up during the kindergarten year will allow the study team to measure these key mediators. Consistent with this research evidence, the legislation that authorized MIECHV indicated that home visiting programs are expected to improve school readiness. The study team identified eight areas of adult and child functioning and behavior where effects of home visiting services are most likely to be observed when children are kindergarten age:

Family economic self-sufficiency

Maternal positive adjustment

Maternal behavioral health

Family environment and relationship between parents

Parent-child relationship and interactions

Parental support for child’s cognitive development

Child social, emotional, and cognitive functioning and school readiness

Receipt of and connection to services

A study that follows families over time provides an opportunity to examine child and family outcomes at individual time points as children get older, and to learn about the trajectories of child and family outcomes.

Given the study’s requirements for local programs, the home visiting programs participating in MIHOPE are not representative of all MIECHV local programs. It is not clear how the effects of home visiting where it was studied in MIHOPE would compare with the results for MIECHV as a whole. Key limitations will be included in written products associated with the study. As noted in Supporting Statement A, this information is not intended to be used as the principal basis for public policy decisions and is not expected to meet the threshold of influential or highly influential scientific information.

B2. Methods and Design

Target Population

At baseline, MIHOPE recruited 4,229 families from 88 local programs (sites) in 12 states. Families were randomly assigned either to a program group, which could enroll in one of the home visiting programs being studied, or to a control group, which was provided with referrals to other services in the community. Families were eligible for the study if (1) the mother was pregnant or the family had a child under six months old when they were recruited for the study, (2) the mother was 15 years or older at the time of study entry, and (3) the mother was available to complete the baseline family survey.

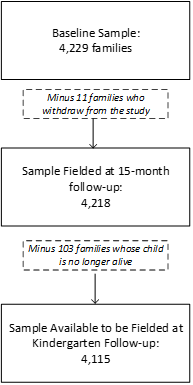

The study is conducting kindergarten follow-up activities with all families who enrolled in the study, not just those who completed previous rounds of follow-up data collection. The sample available to be fielded at the start of MIHOPE-K data collection differed from the baseline MIHOPE sample only because (1) a few families withdrew from the study and (2) in some cases, the child with whom the mother enrolled in MIHOPE is no longer alive. Because the COVID-19 pandemic delayed data collection (see B4), the sample remaining for this ongoing data collection will include a large proportion of first graders.

As shown in Figure B.1, the study enrolled 4,229 families, all of whom completed the baseline interview. Between the baseline interview and the 15-month follow-up, 11 families withdrew from the study, resulting in a fielded sample of 4,218 families at the 15-month follow-up. At the 15-month follow-up, we learned that 103 children had never been born (for example, because of miscarriages) or had died after birth. Therefore, the fielded sample for the kindergarten follow-up includes 4,115 families.
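The sample flow summarized in Figure B.1 reduces to two subtractions. The short Python check below reproduces the reported counts from the figures given in the text; the variable names are illustrative only and do not come from the study's files.

```python
# Illustrative check of the MIHOPE sample flow; counts are taken from the text,
# variable names are hypothetical.
BASELINE_ENROLLED = 4_229       # families enrolled; all completed the baseline interview
WITHDREW_BEFORE_15M = 11        # withdrew before the 15-month follow-up
CHILD_NOT_BORN_OR_DIED = 103    # identified at the 15-month follow-up

fielded_15_month = BASELINE_ENROLLED - WITHDREW_BEFORE_15M
fielded_kindergarten = fielded_15_month - CHILD_NOT_BORN_OR_DIED

print(f"15-month fielded sample: {fielded_15_month:,}")          # 4,218
print(f"Kindergarten fielded sample: {fielded_kindergarten:,}")  # 4,115
```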

Figure B.1

MIHOPE Sample at Baseline, 15-Month Follow-Up, and Kindergarten Follow-Up

Sampling and Site Selection

Local sites were chosen to participate in the study if they met several criteria: (1) they operated a program that had existed for at least two years by the time of study recruitment, (2) there was evidence of enough demand for home visiting services to allow for a control group, (3) there was no evidence of severe implementation problems that would interfere with the program’s ability to participate in the study, and (4) they contributed to the diversity of sites and families for purposes of estimating effects for important subgroups of families.

To estimate the effects of home visiting on family outcomes, MIHOPE enrolled over 4,200 families across 88 sites in 12 states. Families were eligible for the study if they included a pregnant woman or an infant under six months old and the mother was at least 15 years old at the time of study entry. Families were recruited into the study by Mathematica’s survey research staff, who visited families to obtain informed consent when home visitors determined whether a family was eligible for the study or soon after that determination had been made. For state child welfare records, we are following up with 11 of the 12 original MIHOPE states, because the California IRB did not approve the collection of child welfare records from that state.

For power calculations, see Appendix K.

We have completed the direct kindergarten data collection with the two cohorts of participants who entered kindergarten in the 2018-2019 and the 2019-2020 school years, though administrative data collection for these participants is still ongoing.

B3. Design of Data Collection Instruments

Development of Data Collection Instruments

The MIHOPE-K data collection instruments were developed to understand the effects of home visiting programs on parent and child outcomes, both overall and for key subgroups of families (see B1. Study Objectives).

Structured interview with caregivers

The structured interview with caregivers (Instrument 1) collects information on several domains: child health; child development and school performance; relationships and father involvement; maternal health and well-being; parenting practices; intimate partner violence; child maltreatment; family economic self-sufficiency; and the caregiver’s adverse childhood experiences. Interview questions primarily focus on:

outcomes for which previous studies of home visiting have found effects,

outcomes with the greatest potential to be linked to long-term economic benefits,

outcomes that are measures of adverse childhood experiences (ACEs), which are important predictors of poorer socioeconomic and health outcomes as children get older, and

measures that are key mediators of longer-term outcomes.

The information collected from this interview will not be available from other sources (such as administrative records).

Table B.1 lists the constructs that are included in these various domains and the associated measures.

Table B.1

| Construct | Scale name (if applicable) |
|---|---|
| Child development and school performance | |
| Child care setting before kindergarten | N/A |
| Social-emotional skills | Social Skills Improvement System (SSIS) |
| Behavior problems | Social Skills Improvement System (SSIS) |
| Early intervention services | N/A |
| Social support and relationships | |
| Relationship and marital status | N/A |
| Relationship with child’s biological father | N/A |
| Biological father’s involvement | Maternal Social Support Index |
| Caregiver-child separations | N/A |
| Social support | Perceived Social Support Measure |
| Intimate partner violence | |
| Women’s experience of battering | Women’s Experience with Battering Scale (WEB) |
| Physical assault: perpetration and victimization | Conflict Tactics Scale (CTS2) |
| Family conflict | Family Environment Scale |
| Parenting | |
| Learning environment: Home literacy environment | N/A |
| Learning environment: Cognitive stimulation | N/A |
| Parenting stress | Parenting Stress Index - Short Form |
| Household chaos | Chaos, Hubbub, and Order Scale |
| Mobilizing resources | Healthy Families Parenting Inventory |
| Family economic self-sufficiency | |
| Maternal education | N/A |
| Public assistance | N/A |
| Employment | N/A |
| Income | N/A |
| Housing | N/A |
| Food insecurity | USDA U.S. Household Food Security Survey Module - Short Form |
| Material hardship | N/A |
| Maternal health and well-being | |
| Subsequent births and pregnancies and outcomes | N/A |
| Maternal depression | Center for Epidemiologic Studies Depression Scale (CES-D) |
| Drug use | N/A |
| Alcohol use | N/A |
| Mastery | Pearlin Mastery Scale |
| Child health | |
| Emergency department (ED) visits | N/A |
| Hospital admissions | N/A |
| Insurance coverage | N/A |
| Child maltreatment | |
| Abuse: physical and psychological/emotional | Parent-Child Conflict Tactics Scale (CTSPC) |
| Mother’s adverse childhood experiences | |
| Mother’s adverse childhood experiences | N/A |

Direct assessments of children

Maternal stimulation of children’s language development and cognitive functioning is a core component of many home visiting programs. Language development and cognitive functioning in the early years of life are predictors of longer-term school readiness and achievement. Furthermore, there is evidence that home visiting programs have positive effects in these areas. For these reasons, children’s language (particularly their receptive language), early numeracy, and executive functioning are important outcomes to assess. These outcomes are best measured via direct assessments of children (Instrument 2).

A direct assessment of the child’s language development is conducted using the Woodcock-Johnson IV Picture Vocabulary (WJPV) subtest from the Woodcock-Johnson IV Tests of Oral Language (WJOL). The Picture Vocabulary subtest assesses receptive language by having children point to pictures of objects or actions on an easel panel as the assessor names them. A Spanish version of the subtest is available for bilingual Spanish-English speakers.

The Woodcock-Johnson III Applied Problems subtest, from the Woodcock-Johnson III Tests of Achievement, is used to measure children’s early numeracy and math skills by assessing their ability to solve oral math problems (for example, “How many dogs are there in this picture?”). A Spanish version of the subtest is also available. Before conducting the Woodcock-Johnson subtests, we administer the preLAS language screener, which serves as a warmup to the assessments and, for bilingual children, determines which language version of the subtests they should receive.

Children’s executive functioning, including their working memory, inhibitory control, and cognitive flexibility, is assessed using two tasks, Digit Span and Hearts & Flowers:

Digit Span, a measure of working memory, assesses the child’s ability to repeat increasingly long sequences of numbers.

Hearts & Flowers is designed to capture inhibitory control and cognitive flexibility and is administered through an application on a tablet. The task includes three sets of trials: (1) 12 congruent “heart” trials, (2) 12 incongruent “flower” trials, and (3) 33 mixed “heart and flower” trials. Children are presented with an image of a red heart or flower on one side of the screen. For the congruent heart trials, the children are instructed to press the button on the same side as the presented heart. For incongruent flower trials, children are instructed to press the button on the opposite side of the presented flower. Accuracy scores are drawn from the incongruent block and mixed block.
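The Hearts & Flowers response rule and block structure described above can be sketched in Python. This is a minimal illustration, not the tablet application used in the study: the `Trial` record, the side labels, and the scoring of accuracy as the proportion of correct responses within a block are all assumptions, since the text states only that accuracy scores are drawn from the incongruent and mixed blocks.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Literal

Side = Literal["left", "right"]
Stimulus = Literal["heart", "flower"]

# Number of trials per block, as described in the text.
BLOCK_SIZES = {"congruent": 12, "incongruent": 12, "mixed": 33}


@dataclass
class Trial:
    # Hypothetical trial record; the study's actual data format is not described.
    block: str          # "congruent", "incongruent", or "mixed"
    stimulus: Stimulus  # image shown on this trial
    shown_on: Side      # side of the screen where the image appeared
    pressed: Side       # side of the button the child pressed


def correct_side(stimulus: Stimulus, shown_on: Side) -> Side:
    """Heart: press the same side. Flower: press the opposite side."""
    if stimulus == "heart":
        return shown_on
    return "right" if shown_on == "left" else "left"


def block_accuracy(trials: list[Trial], block: str) -> float:
    """Proportion of correct responses in one block (assumed scoring rule)."""
    in_block = [t for t in trials if t.block == block]
    if not in_block:
        raise ValueError(f"no trials recorded for block {block!r}")
    n_correct = sum(t.pressed == correct_side(t.stimulus, t.shown_on) for t in in_block)
    return n_correct / len(in_block)


# Usage with a few made-up trials (not study data).
trials = [
    Trial("incongruent", "flower", "left", "right"),   # correct: opposite side
    Trial("incongruent", "flower", "right", "right"),  # incorrect: same side
    Trial("mixed", "heart", "left", "left"),           # correct: same side
    Trial("mixed", "flower", "left", "right"),         # correct: opposite side
]
print(block_accuracy(trials, "incongruent"))  # 0.5
print(block_accuracy(trials, "mixed"))        # 1.0
```

Separating the response rule (`correct_side`) from the scoring step keeps the assumed scoring easy to swap out if the actual accuracy measure differs.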

Along with the direct assessments of the child’s language skills, early numeracy, and executive functioning, the assessor also observes the family and home to assess parental warmth and the child’s emotion, attention, and behavior. Because it does not impose a burden on families, the observational component of the follow-up is not subject to the Paperwork Reduction Act. Specifically, it (1) does not require the family to provide any information, and (2) is conducted at the same time as other in-home aspects of data collection. This is consistent with 44 USC, 5 CFR Ch. III (1-1-99 Edition), 1320.3, which indicates that “information” does not generally include facts or opinions obtained through direct observation by an employee or agent of the sponsoring agency or through nonstandardized oral communication in connection with such direct observations. The areas covered by the assessor observation component of the in-home visit are parental warmth and the child’s behavior; the specific questions are not provided because they are proprietary.

Survey of the focal children’s teachers

The teacher survey (Instrument 3) is designed to collect information on behaviors that are more commonly demonstrated in a classroom setting than in the home. For these outcomes, such as learning behaviors and approaches to learning, teacher-reported measures have also shown better reliability than parent-reported measures. Other outcomes (such as behavior problems and social-emotional skills) are included in the teacher survey even though they are also part of the structured interview with caregivers. For these outcomes, teachers can report on children’s behavior in a distinct context (for example, parents usually use their communities as a reference point when assessing children, while teachers draw on their school experiences). Further, teachers can provide a different, and presumably less biased, perspective because they were not the targets of the home visiting intervention.

Direct assessments of caregivers

An emerging body of literature has indicated that mothers’ cognitive control capacities are particularly relevant for engaged and responsive caregiving; these skills support caregivers’ ability to be perceptive, responsive, and flexible. Cognitive control capacities are especially important for mothers in stressful conditions including adverse life events and stress related to lower family socioeconomic status (e.g., poverty, unemployment).

Given that many families in MIHOPE experience a variety of risk factors and live in communities that are more disadvantaged than the national average, it is particularly important to directly assess mothers’ cognitive functioning to understand whether mothers possess skills that are theorized to enable them to resist environmental distractions, monitor children’s needs, and flexibly switch focus between competing contextual demands. The study uses the backward Digit Span task to assess maternal cognitive control, specifically working memory, which is one aspect of cognitive control.

As part of the assessment of caregivers, we also include two items about parental warmth from the Home Observation for Measurement of the Environment (HOME). This assessment protocol is included in Instrument 4.

Videotaped caregiver-child interactions

A caregiver-child interaction task is administered to assess the behavior of the mother and of the child during a semi-structured play situation. The interaction task is videotaped and viewed later by trained coders, who rate caregiver and child behavior to assess qualities of parenting (such as parental supportiveness, parental stimulation of cognitive development, parental intrusiveness, parental negative regard, and parental detachment) and of the child’s behavior (such as child engagement of parent, child’s quality of play, and child’s negativity toward parent). These outcomes require independent assessment rather than self-report, because self-reports may be more likely to be influenced by home visiting programs, which can raise parents’ awareness of preferred or desired responses regarding various types of parenting behaviors.

The caregiver-child interaction task involves semi-structured play and consists of tasks that were used in previous longitudinal studies of child development outcomes: the NICHD Study of Early Child Care and Development, a longitudinal study that examined the relationship between child care experiences and characteristics and child development outcomes, and the Early Head Start Research and Evaluation Project, a longitudinal impact evaluation of the Early Head Start program. The activities involve having the parent and child play with toys such as an Etch A Sketch, wooden blocks, animal puppets, and/or Play-Doh. With each toy, the pair is instructed either to complete a specific task or to play with it in whatever way they would like. The activities are designed to be fun and interesting for children to complete with their caregivers. The protocol is included in Instrument 5.

Three of the data collection components (the direct assessments of children, the direct assessments of caregivers, and the videotaped caregiver-child interactions) occur during the in-home visit. Because of the ongoing COVID-19 pandemic, in-home visits may be conducted virtually, without an assessor physically present in families’ homes. The study team has developed a “virtual visit” version of each of these three components; the virtual versions are shown alongside the in-person versions in Instruments 2, 4, and 5.

Caregiver Website

Caregivers are provided with a website they can visit to confirm the focal child’s participation in kindergarten or first grade, update their contact information, schedule a time for the structured interview with caregivers, provide information about the child’s school and teacher, and give consent.

The website is designed to minimize burden for respondents and to avoid asking them unnecessary questions. For example, if caregivers do not provide consent for the teacher survey, we do not ask them about their child’s teacher.

The text for the website is included as Instrument 6.

Administrative Data: Child welfare records and school records

As indicated in Section B2, we plan to continue to obtain child welfare data from state agencies and to request school records data from state and local agencies. The study obtained child welfare records from the 12 MIHOPE states at its 15-month follow-up but plans to obtain them from 11 states for the kindergarten follow-up. For school records, we have assumed that we will obtain data from 11 states and 5 local education agencies.

For both child welfare and school records, we plan to receive two data files from each agency. We have assumed a lower burden per state for child welfare records than for school records. Requests to agencies are included in Instruments 7 and 8.
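Under the planning assumptions stated above, the expected number of administrative data files can be tallied directly. The sketch below additionally assumes one child welfare agency per state; it is a hypothetical planning illustration, not the study's burden calculation.

```python
# Planning assumptions from the text; names are hypothetical.
FILES_PER_AGENCY = 2
child_welfare_agencies = 11       # assumed: one state child welfare agency per state
school_record_agencies = 11 + 5   # 11 states plus 5 local education agencies

child_welfare_files = child_welfare_agencies * FILES_PER_AGENCY
school_record_files = school_record_agencies * FILES_PER_AGENCY
total_files = child_welfare_files + school_record_files

print(child_welfare_files, school_record_files, total_files)  # 22 32 54
```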

MIHOPE-K Pretests

As part of MIHOPE-K, the study team used pretesting to identify revisions to be made to materials, procedures, and instruments for follow-up data collection. All updates resulting from pretesting have been incorporated into the currently approved information collection materials. A description of pretesting efforts follows.

We identified 9 families with a child in kindergarten (including both English- and Spanish-speaking participants) in several locations (including New Jersey, Ohio, and South Carolina) and recruited them to pretest the structured interview. Six families also participated in the pretest of the direct assessments. The study team attempted to recruit participants who represent the diversity of the MIHOPE sample (including linguistic, ethnic, and racial diversity). We also had 4 kindergarten teachers in several locations (New Jersey and North Carolina) pretest the survey of focal children’s teachers. Each of these groups received different measures, and no individual question was asked of more than 9 people.

The pretest included debriefings after the structured interview with caregivers and the survey of focal children’s teachers to investigate caregivers’ and teachers’ understanding of the questions, the ease or difficulty of responding, and any questions or confusion they had. The pretest interviews and surveys were timed to obtain accurate estimates of the length of the interview and the survey.

After completing the kindergarten data collection with the first set of MIHOPE families, we identified a number of updates to improve data collection efforts and submitted the proposed changes as a nonsubstantive change (approved by OMB in August 2019). The updates included minor changes to the following elements of our data collection: direct assessments of children; direct assessments of caregivers; videotaped caregiver-child interaction; structured interview with caregivers; caregiver contact materials; teacher contact materials; and parent website. Specifically, we (1) shortened the structured interview, which was taking slightly longer per family than originally estimated, and cut some of the direct assessments of children and caregivers to reduce burden; (2) changed wording to improve flow and administration in the structured interview with caregivers, direct assessments of children, direct assessments of caregivers, and the videotaped caregiver-child interaction; and (3) revised contact materials to further simplify the language, gain respondents’ attention, and encourage them to participate, and added a few materials tailored to respondents who may be less likely to participate in data collection.

After completing the kindergarten data collection with the second set of MIHOPE families, we identified updates needed to support a virtual option for activities that had previously taken place in person (approved by OMB in September 2021).

B4. Collection of Data and Quality Control

This section describes the collection of data for MIHOPE-K. We are following best practices for conducting the data collection, including training and certifying staff on data collection procedures and monitoring data collection to ensure that high quality data are collected and the target response rate is achieved. Mathematica Policy Research is the subcontracted survey firm for the data collection. Our data collection method builds on the methods used in previous phases of MIHOPE to the greatest extent possible. In particular:

Design and text of respondent contact materials are informed by principles of behavioral science.

Varied methods are used to reach out to respondents (i.e., email, text messages, phone calls).

Tokens of appreciation are provided to increase families’ willingness to respond to the follow-up data collection.

In addition, as mentioned in Supporting Statement A, we have adapted our data collection methods that involve in-person contact so that they can be conducted during the ongoing COVID-19 pandemic. In particular:

Computer-assisted telephone interviewing (CATI) is used to conduct the structured interview with caregivers.

In the virtual version of the caregiver-child interaction task, the direct assessments of children, and the direct assessments of caregivers, we will use Webex to connect with families, administer assessments, and guide families through the “visit.” The study team will provide a laptop or tablet for families to use for the visit. Incentives and tokens of appreciation will be provided to increase families’ willingness to respond to each of the follow-up data collection components.

Contact information gathered during previous rounds of data collection is used to inform the work of locators.


Conducting the Follow-Up Family Data Collection

This sample release includes families in which the focal child reached kindergarten age by the cutoff date for the state in which the family lives. Because the COVID-19 pandemic and associated public health emergencies delayed data collection, the sample also includes a large proportion of first graders. We were not able to collect data from this part of the sample during the 2020-2021 school year, when the children were in kindergarten, so we plan to collect data from them during the upcoming data collection.

The respondent notification plan includes the following (all caregiver-specific contact materials are included in Appendix A):

Pre-outreach package (information letter and gift). Before the sample release, we will mail the families a pre-outreach letter that asks them to update or confirm their contact information. The package will also include a small gift for the child (for example, a small book) as a thank you for their past participation in MIHOPE as well as a newsletter/infographic with information on the current status of the study. Since the newsletter/infographic will contain information about ongoing study activities, it has not yet been developed (however, a shell showing what it would look like and the kind of information that could be included is in Appendix C). Families can update or confirm their contact information either online or by calling the toll-free number to contact the study team, which is listed in the letter. Families for whom we have an email address will also receive a pre-outreach email.

Invitation letter. Once the sample is released, we will send the family an invitation letter with information about the follow-up data collection activities that we would like them to participate in, the gift card amount to be provided for completing the activities, a toll-free number to contact the study team, notification that we will be calling them soon to complete the structured interview with caregivers via telephone, and information about the website they can visit (included as Instrument 6). Participants can also call the toll-free number to complete the structured interview. The invitation letter will also include an FAQ with some more information about the study and study activities. Telephone interviewers at Mathematica’s Survey Operations Center (SOC) will begin trying to contact families who have not yet completed the structured interview about one week after the invitation letter is mailed. Telephone interviewers will call nonresponding families for approximately four weeks.

Email notifications. During outbound dialing and throughout the fielding period, we will also email families for whom we have email addresses. The first email will contain information similar to the invitation letter and will provide a toll-free number participants can call to complete the structured interview with the study team by telephone. It will also provide a toll-free number and website (included as Instrument 6) to schedule the structured interview and in-home visit and to provide consent for the survey of focal children’s teachers. Additional reminder emails will be sent to families who have not yet completed the structured interview.

Text messages. Text reminders to complete the data collection activities will also be sent to participants.

Field advance letter and field locating letter. If the respondent has completed the structured interview before in-person locating begins, we will send them a field advance letter that lets respondents know that field staff will be in their area soon. If the respondent has not completed the structured interview before the in-person locating efforts, their field locating letter will also remind them about completing the structured interview. During the fielding period, we will also send an additional locating letter, which we plan to send via priority mail, to respondents we have not been able to contact.

Refusal conversion letters. If families have firmly refused to participate in the most recent data collection round in which contact has been established (prior to kindergarten), they will receive a tailored letter early in the data collection period. The letter acknowledges their refusal in the earlier data collection round and encourages them to reconsider taking part in the data collection activities. The letter also invites participants to contact the study team to ask any questions or share concerns about their participation. A similar letter will be sent to families who refuse to participate during the kindergarten follow-up data collection period on an as-needed basis.

Reminder postcards. During outbound dialing and at interim periods after transitioning the case to the field for in-person efforts, we will send reminder postcards to families whom we have not yet heard from and have not been able to contact.

Caregiver website. Caregivers will be provided with a website they can visit to confirm the focal child’s participation in kindergarten or first grade, update their contact information, schedule a time for the structured interview with caregivers, provide information about the child’s school and teacher, and give consent. Once caregivers log into the website, they will be asked to confirm whether the focal child will be in kindergarten or first grade in the current or upcoming school year (if contacted before the school year has begun). If the focal child is not in kindergarten or first grade (or will not be at the start of the school year, if contacted in the summer), caregivers will be asked to update their contact information and told that they will be contacted again next year. Caregivers who have an eligible child will be asked to update their contact information, then provide their scheduling preferences for the structured interview and in-home visit, provide consent for the teacher survey, and give their child’s school and teacher contact information. At any point, caregivers can also go to the website to learn about any updates to MIHOPE and learn about the kindergarten data collection activities.
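The screening and skip logic described for the caregiver website, together with the rule that caregivers who decline consent for the teacher survey are not asked about the child’s teacher, can be sketched as a small branching function. The step labels and data structure below are hypothetical; this is an illustration of the described flow, not the study’s website code.

```python
from __future__ import annotations

from dataclasses import dataclass

ELIGIBLE_GRADES = {"kindergarten", "first grade"}


@dataclass
class CaregiverResponse:
    # Grade the focal child is in (or will be in at the start of the school year).
    child_grade: str
    consents_to_teacher_survey: bool = False


def website_steps(response: CaregiverResponse) -> list[str]:
    """Return the ordered screens a caregiver would see (hypothetical labels)."""
    steps = ["confirm_child_grade", "update_contact_info"]
    if response.child_grade not in ELIGIBLE_GRADES:
        # Not yet eligible: the caregiver is told the study will reach out next year.
        steps.append("notify_recontact_next_year")
        return steps
    steps += ["scheduling_preferences", "teacher_survey_consent"]
    if response.consents_to_teacher_survey:
        # Ask about the school and teacher only when consent was given.
        steps.append("school_and_teacher_contact_info")
    return steps


print(website_steps(CaregiverResponse("first grade", consents_to_teacher_survey=True)))
```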

Before administering the structured interview, we will obtain the caregiver’s verbal consent. We will also obtain the teacher’s consent before administering the survey of focal children’s teachers (electronically or on paper, depending on the survey administration method). We will document consent for the home activities, and we will document the caregiver’s consent for the study team to contact the child’s teacher for the survey of focal children’s teachers.

Conducting the Teacher Data Collection

In the aforementioned caregiver contact materials, we will include letters and/or emails for the caregiver to give to the focal child’s teacher that explain the study and inform the teacher that we will be contacting him/her to complete the survey of focal children’s teachers. We will also send the teacher versions of these materials directly because the caregiver may not pass them along to the teacher. All teacher-specific contact materials are included in Appendix B. Specifically, the teacher notification plan will include the following:

Introduction letter/email/text. After we have documented the caregiver’s consent to contact the child’s teacher and have received the teacher’s contact information from the caregiver, we will send the teacher the survey or a link to the survey with an introduction letter/email/text explaining the study. The communication mode will depend on the contact information we have received from the caregiver.

Email notifications. If we have not heard back from teachers for whom we have email addresses, we will also email them a reminder to complete the survey. The first reminder email will contain the link to the survey and will have an FAQ attached with more information about the study and the survey. Additional reminder emails will be sent to teachers who have not yet completed the survey.

Reminder letter. If we have not heard back from teachers whose school addresses we have, we will also send them a reminder letter to follow up. This letter will also contain a link to the survey in case the teacher prefers to complete it online.

Before contacting the teachers, we will send the school districts and principals FAQs with information about the study in case the teachers need district/principal approval before participating in the study.

Quality Assurance

Quality assurance reviewers will review remote assessors’ assessments to ensure that they are complete, that responses were scored correctly, and that families received clear guidance during the assessment. The virtual visit protocol includes checkpoints after each task to collect additional information about any issues that could affect the validity of the data. Following their review, quality assurance reviewers will provide feedback to assessors and answer any questions the assessors might have. This data collection will use the MIHOPE-K quality assurance protocol for in-home assessors. As part of processing the caregiver-child video interaction data, the study team will also review all videos to check audio and picture integrity. All video file transfers will be recorded in a transmission log to minimize errors in the transfer of data.

B5. Response Rates and Potential Nonresponse Bias

Response Rates

We expect a response rate of 75 percent for the structured interview and 70 percent for the in-home activities (i.e., child and caregiver assessments, assessor observation, and videotaped caregiver-child interaction). We anticipate that we may be able to achieve a 75 percent response rate among teachers for whom we have contact information and for whom caregivers have given consent to make contact. These expectations are in line with the response rates from the MIHOPE 15-month data collection, which were 78.7 percent and 70.5 percent for the structured interview and in-home activities, respectively. The response rates for MIHOPE Check-in were lower (51 and 48 percent for the 2.5- and 3.5-year follow-ups, respectively) because, for budget-related reasons, MIHOPE Check-in did not include field locating (also referred to as in-person locating) for most of the sample. Instead, most sample members were encouraged to complete the survey only through a combination of email, text message, and phone call reminders. The current data collection effort will be more similar to the 15-month data collection, both in its locating efforts and in the format of the data collection. We therefore expected the response rates for the kindergarten data collection effort to more closely resemble those of the 15-month data collection. Since 77 percent of the sample completed the caregiver interview at 15 months, we initially predicted that 70-75 percent would complete the interview at kindergarten with the use of in-person locating. For the first two kindergarten cohorts, the caregiver interview response rate was 64 percent (65 percent for cohort 1 and 64 percent for cohort 2).

See Appendix F: Maximizing response rates for additional information.

Nonresponse

As with the MIHOPE-K data collection already completed, a non-response analysis will be conducted following the close of data collection to determine whether the results of the study may be biased by non-response. In particular, two types of bias will be assessed: (1) whether estimated effects among respondents apply to the full study sample, and (2) whether program group respondents are similar to control group respondents. The first type of bias affects whether results from the study can be generalized to the wider group of families involved in the study, while the second affects whether the impacts of the programs are confounded with pre-existing differences between program group and control group respondents.

To assess non-response bias, several tests will be conducted:

The response rates of the program group and the control group will be compared to make sure that the response rate is not significantly higher for one research group.

A logistic regression will be estimated among respondents. The dependent (“left-hand-side”) variable will be research group assignment (program group or control group), and the explanatory variables will include a range of baseline characteristics. An omnibus test, such as a likelihood-ratio (log-likelihood) test, will be used to test the null hypothesis that the baseline characteristics are jointly unrelated to whether a respondent is in the program group. Failing to reject this null hypothesis will provide evidence that program group and control group respondents are similar.

Baseline characteristics of respondents will be compared with those of non-respondents. This will be done using a logistic regression in which the outcome variable is whether someone is a respondent and the explanatory variables are baseline characteristics. An omnibus test, such as a likelihood-ratio (log-likelihood) test, will be used to test the null hypothesis that the baseline characteristics are jointly unrelated to whether someone is a respondent. Failing to reject this null hypothesis will provide evidence that non-respondents and respondents are similar.

Impacts estimated from administrative records sources, which are available for the full sample, will be compared for the full sample and for respondents to determine whether there are substantial differences between the two. This analysis can use early impacts estimated from administrative data from MIHOPE 2 or from new administrative data collected during MIHOPE-LT.
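The first three tests above can be sketched in Python as follows. This is a minimal illustration, not the study team’s analysis code: the function names are hypothetical, the logistic regression is fit by plain Newton-Raphson, and the omnibus test is assumed to be a likelihood-ratio test comparing a model with baseline characteristics against an intercept-only model, referred to a chi-square distribution whose degrees of freedom equal the number of baseline characteristics.

```python
import math
import numpy as np


def two_proportion_test(resp_program, n_program, resp_control, n_control):
    """Test 1 (sketch): pooled two-proportion z-test comparing the response
    rates of the program and control groups. Returns (difference, z, p)."""
    rate_p = resp_program / n_program
    rate_c = resp_control / n_control
    pooled = (resp_program + resp_control) / (n_program + n_control)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_program + 1 / n_control))
    z = (rate_p - rate_c) / se
    return rate_p - rate_c, z, math.erfc(abs(z) / math.sqrt(2))


def chi2_sf(x, df):
    """Upper-tail probability of a chi-square distribution with integer df."""
    if x <= 0:
        return 1.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{i < df/2} (x/2)^i / i!
        term, total = 1.0, 1.0
        for i in range(1, df // 2):
            term *= (x / 2) / i
            total += term
        return math.exp(-x / 2) * total
    # Odd df: the df = 1 tail plus a finite series of density terms
    root = math.sqrt(x)
    tail = math.erfc(root / math.sqrt(2))
    term, acc = root, 0.0
    for r in range(1, (df - 1) // 2 + 1):
        if r > 1:
            term *= x / (2 * r - 1)
        acc += term
    density = math.exp(-x / 2) / math.sqrt(2 * math.pi)
    return tail + 2 * density * acc


def logit_loglik(X, y, max_iter=100, tol=1e-10):
    """Fit a logistic regression by Newton-Raphson; return the maximized log-likelihood."""
    beta = np.zeros(X.shape[1])
    for _ in range(max_iter):
        p = 1.0 / (1.0 + np.exp(-X @ beta))
        gradient = X.T @ (y - p)
        information = X.T @ (X * (p * (1 - p))[:, None])
        step = np.linalg.solve(information, gradient)
        beta += step
        if np.max(np.abs(step)) < tol:
            break
    p = np.clip(1.0 / (1.0 + np.exp(-X @ beta)), 1e-12, 1 - 1e-12)
    return float(np.sum(y * np.log(p) + (1 - y) * np.log(1 - p)))


def omnibus_lr_test(baseline, y):
    """Tests 2 and 3 (sketch): likelihood-ratio test that baseline characteristics
    are jointly unrelated to a 0/1 outcome (research group, or response status).
    Assumes y contains both 0s and 1s. Returns (LR statistic, df, p-value)."""
    baseline = np.asarray(baseline, dtype=float).reshape(len(y), -1)
    y = np.asarray(y, dtype=float)
    n, k = baseline.shape
    X = np.column_stack([np.ones(n), baseline])
    ll_full = logit_loglik(X, y)
    # Intercept-only model: the fitted probability is the sample mean of y
    ybar = y.mean()
    ll_null = n * (ybar * math.log(ybar) + (1 - ybar) * math.log(1 - ybar))
    lr_stat = 2.0 * (ll_full - ll_null)
    return lr_stat, k, chi2_sf(lr_stat, k)
```

Under these assumptions, test 2 would call `omnibus_lr_test(baseline, assignment)` on respondents only, and test 3 would call `omnibus_lr_test(baseline, responded)` on the full sample. A large p-value is consistent with, but does not prove, similarity of the groups being compared.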

If any of these tests indicate that non-response is biasing impact estimates, a standard technique such as multiple imputation or weighting by the inverse probability of response will be used to determine the sensitivity of impact estimates to non-response. Using data already collected from the first two cohorts of participants, we have conducted non-response bias analyses for the caregiver survey and in-home assessment. We did not find evidence that baseline characteristics were significantly related to whether a respondent is in the program group, but we did find evidence that respondents differ significantly from nonrespondents. These findings are consistent with findings from previous rounds of MIHOPE data collection.
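One way to carry out weighting by the inverse probability of response is a weighting-class adjustment, sketched below as an assumption rather than the study’s specified method: response probabilities are estimated as the observed response rate within cells defined by baseline characteristics, each respondent is weighted by the inverse of that rate, and the weighted impact is compared with the unweighted one. The function names and toy data are hypothetical.

```python
from collections import defaultdict


def inverse_response_weights(cells, responded):
    """Weight each respondent by 1 / (response rate in their baseline cell).
    Nonrespondents get weight 0."""
    totals = defaultdict(lambda: [0, 0])  # cell -> [sample size, respondents]
    for cell, r in zip(cells, responded):
        totals[cell][0] += 1
        totals[cell][1] += r
    rate = {cell: n_resp / n for cell, (n, n_resp) in totals.items()}
    return [1.0 / rate[cell] if r else 0.0 for cell, r in zip(cells, responded)]


def weighted_impact(outcomes, program, weights):
    """Program-minus-control difference in weighted mean outcomes among respondents."""
    def weighted_mean(group):
        pairs = [(y, w) for y, t, w in zip(outcomes, program, weights)
                 if t == group and w > 0]
        return sum(y * w for y, w in pairs) / sum(w for _, w in pairs)
    return weighted_mean(1) - weighted_mean(0)


# Hypothetical toy sample: cell "b" has a 50 percent response rate.
cells = ["a", "a", "b", "b", "a", "a", "b", "b"]
program = [1, 1, 1, 1, 0, 0, 0, 0]
responded = [1, 1, 1, 0, 1, 1, 1, 0]
outcomes = [10, 10, 30, None, 5, 5, 15, None]

weights = inverse_response_weights(cells, responded)
unweighted = [1.0 if r else 0.0 for r in responded]
print(weighted_impact(outcomes, program, weights))     # 10.0
print(weighted_impact(outcomes, program, unweighted))  # about 8.33
```

In this toy example, respondents in cell "b" each stand in for two sample members, which moves the estimate from about 8.33 to 10.0. With many baseline characteristics, a logistic model of response could replace the cell rates.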

The study team will use imputation methods to fill in items that are missing as part of the planned missingness design, which was used on the caregiver survey to reduce respondent burden.
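If multiple imputation is used (the section does not specify the imputation method, so this is an assumption), estimates from the m imputed datasets are typically pooled with Rubin’s rules: the point estimate is the average across imputations, and the total variance adds the average within-imputation variance to (1 + 1/m) times the between-imputation variance. A minimal sketch with a hypothetical function name:

```python
import math


def rubin_combine(estimates, variances):
    """Pool one parameter across m imputed datasets using Rubin's rules.
    Returns (pooled estimate, pooled standard error)."""
    m = len(estimates)
    q_bar = sum(estimates) / m                                # pooled point estimate
    u_bar = sum(variances) / m                                # within-imputation variance
    b = sum((q - q_bar) ** 2 for q in estimates) / (m - 1)    # between-imputation variance
    total = u_bar + (1 + 1 / m) * b                           # total variance
    return q_bar, math.sqrt(total)
```

The between-imputation term is what keeps standard errors from overstating precision when some items were never asked of some respondents.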

In previous rounds of data collection, the study team assessed the readability of questions with a high percentage of missing values to determine whether items needed to be rephrased or additional response options needed to be added. The study team will continue to examine item non-response to determine whether items need to be adjusted to minimize further item non-response.

B6. Production of Estimates and Projections

The data will not be used to generate population estimates, either for internal use or dissemination.

The impact estimates produced are for official external release. Given the study’s requirements for local programs, the home visiting programs participating in MIHOPE are not representative of all MIECHV local programs. It is not clear how the effects of home visiting in the programs studied in MIHOPE would compare with the effects of MIECHV as a whole.

We plan to archive this data at the Inter-university Consortium for Political and Social Research (ICPSR). Data from earlier MIHOPE data collection efforts are also being archived at ICPSR, and the team has prepared extensive documentation to guide other researchers in using that data.

Statistical tests and analytical techniques to be used:

Impact Analysis

The impact analysis will assess the effectiveness of early childhood home visiting programs in improving the outcomes of families and children when children are in kindergarten, both overall and across key subgroups of families and programs. Random assignment was used in MIHOPE to create program and control groups that were expected to be similar in all respects when they entered the study. As is standard in random assignment studies, the primary analytical strategy in MIHOPE-LT will be to compare the outcomes of the entire program group with those of the entire control group (an “intent-to-treat” analysis). Doing so preserves the integrity of the random assignment design and means that any differences that emerge after random assignment can be reliably attributed to the program group’s access to evidence-based home visiting.

Information on sample members’ baseline characteristics would be used in the analysis to increase the precision of estimated impacts.
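The intent-to-treat comparison with baseline covariates can be sketched as an ordinary least squares regression of a kindergarten outcome on a program-group indicator and baseline characteristics, where the coefficient on the indicator is the regression-adjusted impact. This is an illustrative sketch under simplifying assumptions (a continuous outcome, conventional rather than robust or clustered standard errors, and a hypothetical function name), not the study’s estimation code.

```python
import numpy as np


def itt_impact(outcome, program, baseline):
    """Regression-adjusted intent-to-treat impact: OLS of the outcome on an
    intercept, a 0/1 program-group indicator, and baseline covariates.
    Returns (impact estimate, conventional standard error)."""
    outcome = np.asarray(outcome, dtype=float)
    n = len(outcome)
    baseline = np.asarray(baseline, dtype=float).reshape(n, -1)
    X = np.column_stack([np.ones(n), np.asarray(program, dtype=float), baseline])
    beta, *_ = np.linalg.lstsq(X, outcome, rcond=None)
    resid = outcome - X @ beta
    sigma2 = resid @ resid / (n - X.shape[1])      # residual variance
    cov = sigma2 * np.linalg.inv(X.T @ X)          # coefficient covariance matrix
    return float(beta[1]), float(np.sqrt(cov[1, 1]))
```

Baseline characteristics that predict the outcome reduce the residual variance, which is why adjusting for them shrinks the standard error of the impact without changing what is being estimated under random assignment.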

To address the question of whether home visiting programs have larger effects for some groups of families, effects can also be compared across key subgroups of families. This approach is consistent with the MIHOPE analysis that was conducted when children were 15 months of age and follows the recommendations of Bloom and Michalopoulos (2013). For example, in estimating the effects for mothers who were pregnant and the effects for those whose children were infants when they entered the study, the impact analysis would investigate whether estimated effects were larger for one group than for the other. If there are no statistically significant differences in the estimated impacts across subgroups and there are statistically significant effects estimated for all families, the presumption would be that home visiting is effective for all subgroups. This approach is proposed because estimated effects for subgroups are less precise than estimated effects for the full sample (because subgroup sample sizes are smaller than the full sample), meaning that it is likely that estimated effects for some subgroups would not be statistically significant even if the program were modestly effective for that subgroup.
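For two mutually exclusive subgroups, such as families enrolled during pregnancy and families enrolled with an infant, the comparison described above can be sketched as a Wald test of the difference between the two subgroup impact estimates, treating them as approximately independent because they come from non-overlapping samples. This is a simplified illustration with a hypothetical function name; Bloom and Michalopoulos (2013) discuss the approach the study follows in more detail.

```python
import math


def subgroup_difference_test(impact_a, se_a, impact_b, se_b):
    """Two-sided Wald test of whether impacts differ between two mutually
    exclusive subgroups. Returns (difference, z, p-value)."""
    diff = impact_a - impact_b
    se_diff = math.sqrt(se_a ** 2 + se_b ** 2)  # independent estimates
    z = diff / se_diff
    return diff, z, math.erfc(abs(z) / math.sqrt(2))
```

With hypothetical impacts of 0.30 and 0.10 (each with a standard error of 0.10), the difference of 0.20 gives z of about 1.41 and a two-sided p-value of about 0.157, so the subgroups would not be judged different even though the first subgroup’s impact alone (z = 3) is statistically significant.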

Mediational Analyses

At the kindergarten follow-up, mediational analyses can be conducted to shed light on the pathways through which home visiting has longer-term effects on families. A model, or description of a hypothetical pathway, could be estimated for each kindergarten outcome of interest. To identify models and their components, outcomes that had evidence of effects at the MIHOPE 15-month follow-up could be chosen as mediators. Theory and prior research could guide the thinking about how these 15-month effects might influence longer-term outcomes. As indicated above, any mediational analyses will draw on data collected from earlier MIHOPE data collection efforts.
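One common way to estimate such a pathway, assumed here for illustration, is the product-of-coefficients approach. First, regress a 15-month mediator on program-group status (path a). Next, regress the kindergarten outcome on the mediator and program-group status (path b and the direct effect). The indirect effect is then a * b. Because the mediator is not randomly assigned, this decomposition has a causal interpretation only under strong additional assumptions. Function names are hypothetical, and baseline covariates are omitted for brevity.

```python
import numpy as np


def ols(y, *columns):
    """OLS coefficients for y on an intercept plus the given columns."""
    X = np.column_stack([np.ones(len(y))] + [np.asarray(c, dtype=float) for c in columns])
    beta, *_ = np.linalg.lstsq(X, np.asarray(y, dtype=float), rcond=None)
    return beta


def product_of_coefficients(program, mediator, outcome):
    """Decompose the program's effect on an outcome into indirect and direct parts."""
    a = ols(mediator, program)[1]                      # program -> mediator
    b, direct = ols(outcome, mediator, program)[1:3]   # mediator -> outcome; program held fixed
    total = ols(outcome, program)[1]                   # program -> outcome
    return {"indirect": a * b, "direct": direct, "total": total}
```

With ordinary least squares on the same sample, the total effect equals the direct effect plus the indirect effect exactly, which is a useful check on the code.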

The team has posted plans at clinicaltrials.gov.

B7. Data Handling and Analysis

Data Handling

To mitigate errors, the computerized virtual visit instrument and structured interview with caregivers are programmed to guide the assessor through the instruments with the participant. The instruments are programmed to allow only certain responses to each item, confirm caregiver and child information, hide response options that are not applicable, pipe in the child’s name and appropriate text for randomization, and remind the remote assessor of the view to display for the child and caregiver. Similarly, the survey of the focal children’s teachers is programmed to allow only certain responses to each item, confirm teacher information, and pipe in the child’s name and school.

Electronic data collection will also allow the research team to track response rates and monitor data quality in real time. The research team will receive weekly reports detailing who has completed the direct assessments, which will allow them to monitor data collection. Given the study’s real-time access to the web-based data, research staff will be able to regularly review item frequencies and cross-tabulations to guard against inconsistent or incorrect values. In addition, all data processing will be carefully programmed by a team member with knowledge of the protocol and instruments and checked by another staff member to minimize errors.
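The item-frequency and cross-tabulation reviews described above can be sketched as follows, assuming records arrive as one dictionary per respondent and each item has a known set of allowed codes. Item names such as `q1` are hypothetical placeholders, not items from the MIHOPE-K instruments.

```python
from collections import Counter


def check_items(records, valid_codes):
    """Tabulate item frequencies and flag values outside each item's allowed
    codes. Missing values (None) are counted but not flagged."""
    frequencies = {item: Counter() for item in valid_codes}
    flags = []
    for row, record in enumerate(records):
        for item, allowed in valid_codes.items():
            value = record.get(item)
            frequencies[item][value] += 1
            if value is not None and value not in allowed:
                flags.append((row, item, value))
    return frequencies, flags


def crosstab(records, item_a, item_b):
    """Two-way frequency table, e.g. to spot answers inconsistent with skip logic."""
    return Counter((r.get(item_a), r.get(item_b)) for r in records)


records = [{"q1": 1, "q2": "yes"}, {"q1": 7, "q2": "no"}, {"q1": None, "q2": "yes"}]
valid = {"q1": {1, 2, 3}, "q2": {"yes", "no"}}
frequencies, flags = check_items(records, valid)
print(flags)  # [(1, 'q1', 7)]
```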

Data Analysis

Because this is an impact study, this information is provided under B6, Production of Estimates and Projections.

Data Use

A report published by the federal government will show estimated long-term effects of home visiting programs through the kindergarten follow-up study. This report will be written to inform ACF and HRSA, as well as to provide information to the broader home visiting field. In particular, stakeholders such as home visiting model developers, states, territories, Tribes, and Tribal organizations that oversee the implementation of home visiting programs, local home visiting programs, and researchers in the area of home visiting will all have potential interest in incorporating the findings into their work. Limitations to the data will be included in the published report.

We plan to archive this data at the Inter-university Consortium for Political and Social Research (ICPSR). Data from earlier MIHOPE data collection efforts are also being archived at ICPSR, and the team has prepared extensive documentation to guide other researchers in using that data. Additional documentation describing the MIHOPE-K data will be prepared as part of the data deposit.

B8. Contact Person(s)

MDRC

Kristen Faucetta

Charles Michalopoulos

Mathematica

Eileen Bandel

Nancy Geyelin Margie (OPRE/ACF)

Laura Nerenberg (OPRE/ACF)

Attachments

Instrument 1_MIHOPE-K_Survey of caregivers

Instrument 2_MIHOPE-K_Direct assessments of children

Instrument 3_MIHOPE-K_Survey of the focal children’s teachers

Instrument 4_MIHOPE-K_Direct assessments of caregivers

Instrument 5_MIHOPE-K_Videotaped caregiver-child interaction

Instrument 6_MIHOPE-K_Caregiver website

Instrument 7_MIHOPE-K_Child welfare records request

Instrument 8_MIHOPE-K_School records request

Appendix A_MIHOPE-K_Caregiver contact materials

Appendix B_MIHOPE-K_Teacher contact materials

Appendix C_MIHOPE-K_Newsletter shell

Appendix D_MIHOPE-K_MDRC IRB Approval

Appendix E_MIHOPE-K_Certificate of Confidentiality

Appendix F_MIHOPE-K_Maximizing response rates

Appendix G_MIHOPE-K_Power calculations

Appendix H_MIHOPE-K FRN Comment

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| Author | OPRE |

| File Modified | 0000-00-00 |

| File Created | 2021-12-01 |
