RUDI Draft OMB Package Supp Statement A Post Pilot FINAL


Rapid Uptake of Disseminated Interventions (RUDI) Evaluation

OMB: 0906-0079

Rapid Uptake of Disseminated Interventions (RUDI) Evaluation: Supporting Statement A


10/20/2023

OMB Control No. 0906-XXXX New Information Collection Request

Terms of Clearance: None.

A. Justification

1. Circumstances making the collection of information necessary

The HIV/AIDS Bureau (HAB), part of the Health Resources and Services Administration, requests approval from the Office of Management and Budget (OMB) for a new information collection effort, the Rapid Uptake of Disseminated Interventions (RUDI) Evaluation. The mixed-methods RUDI evaluation will help HAB systematically assess and understand (1) how, where, and why recipients of Ryan White HIV/AIDS Program (RWHAP) funding access and use HAB's disseminated resources and products and (2) the utility and effectiveness of those resources and products in caring for people with HIV. Findings from the RUDI evaluation will help HAB maximize the uptake and impact of its disseminated resources and products to end the HIV epidemic in the United States. Legislative authorization for this study comes from the Ryan White HIV/AIDS Treatment Extension Act of 2009 (Title XXVI of the Public Health Service Act, as amended).1

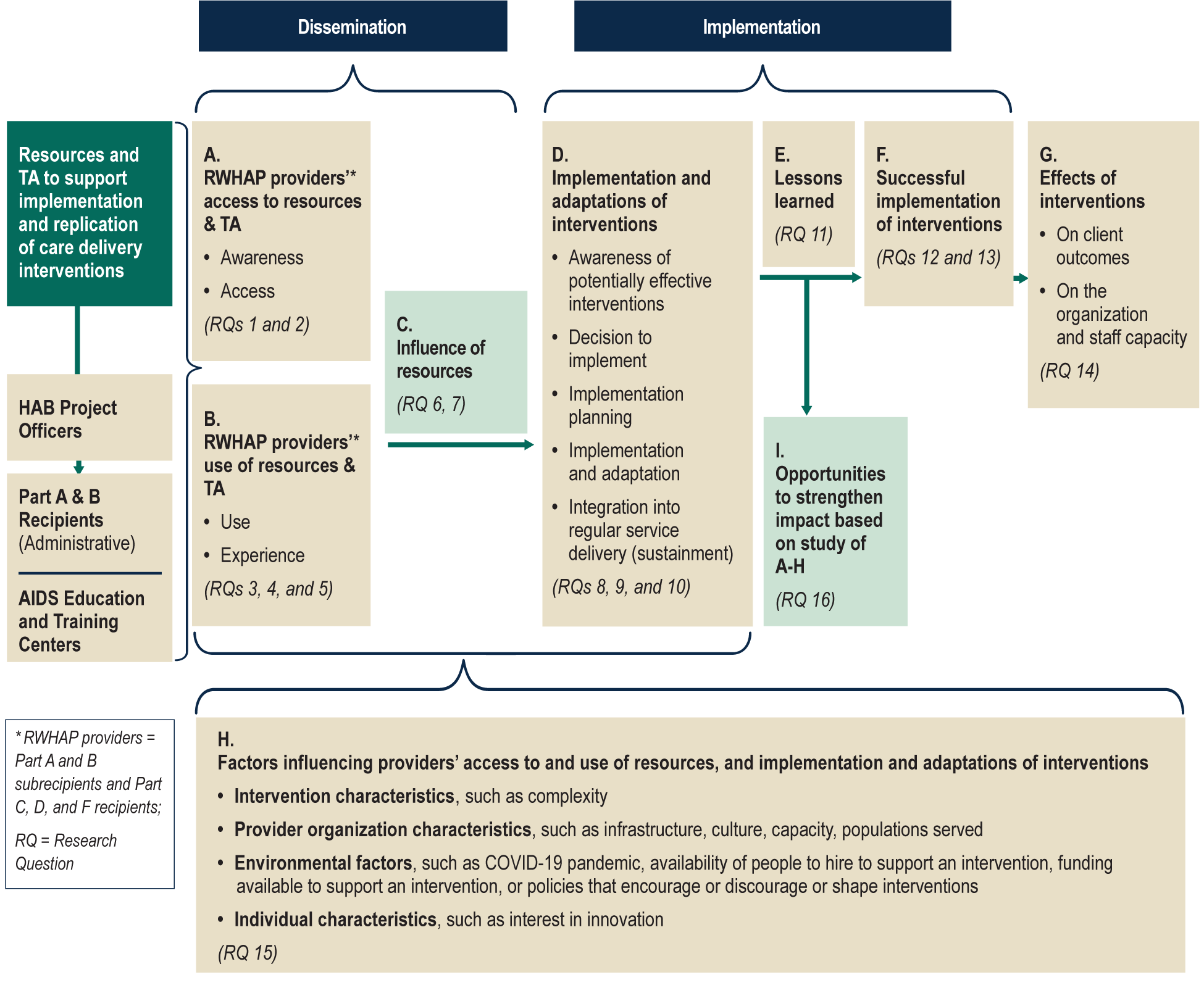

Background. RWHAP, along with the Health Resources and Services Administration’s Health Center Program, plays a critical role in achieving the goals of the U.S. Department of Health and Human Services’ Ending the HIV Epidemic initiative.2 The initiative, launched in 2020, aims to reduce new HIV infections by 75 percent by 2025 and by 90 percent by 2030. HAB has worked closely with RWHAP recipients and subrecipients to develop effective intervention strategies to link and retain clients in high-quality care, particularly for populations that carry the greatest burden of HIV. At the same time, many HIV primary care and supportive service providers have developed and adapted their own intervention strategies to address urgent and emerging needs. The challenge that remains is effectively disseminating and implementing the many intervention strategies available, including adapting them to the contextual and intersectional needs of each at-risk population. HAB’s RUDI evaluation will assess the uptake and impact of its disseminated initiatives through an implementation science framework, as depicted in the logic model shown in Exhibit 1.

Exhibit 1. RUDI evaluation logic model

HAB = HIV/AIDS Bureau; RUDI = Rapid Uptake of Disseminated Interventions; RWHAP = Ryan White HIV/AIDS Program; TA = technical assistance

Note: RQ notations within Exhibit 1 reference the research questions listed in Exhibit 2.

2. Purpose and use of information collection

The Health Resources and Services Administration (HRSA) RWHAP plays a critical role in the U.S. Department of Health and Human Services’ Ending the HIV Epidemic initiative. HRSA’s HAB, which administers the RWHAP, has worked closely with RWHAP recipients and providers to develop and test intervention strategies that link people with HIV to, and retain them in, high-quality care and treatment, particularly for populations least served by existing programs, and that address the most urgent and emerging needs. In recent years, HAB has been a leader in using and advancing implementation science in HIV through its portfolio of Special Projects of National Significance and its Technical Assistance (TA) initiatives.3 This work has produced resources and tools that help other providers replicate successful interventions to improve care quality and health outcomes for people with HIV, particularly those served by the RWHAP. At the same time, many HIV service delivery organizations (hereafter “providers,” since they provide medical services, non-medical services, or both) have developed and adapted their own intervention strategies. To end the HIV epidemic, these effective interventions need to be disseminated widely so they can be replicated, where appropriate, as rapidly and efficiently as possible to achieve optimal health outcomes for all people with HIV. However, the “optimal implementation” of the many intervention strategies available, including adaptation as needed for each at-risk population and its contextual and intersectional needs, remains a challenge.4 To overcome this challenge, effective dissemination strategies are needed.5

Under this study, HAB seeks to learn more about the uptake, utility, and efficacy of the resources and products it disseminates, the effectiveness of its dissemination process, and the reach of its key dissemination channels. HAB also wants to learn more about the non-HAB resources and products that RWHAP recipients and subrecipients access and use to improve care for people with HIV. Exhibit 2 lists the topics and research questions that the RUDI evaluation will address and identifies the sources of information used to answer each research question.

Exhibit 2. Data sources’ use by research question

| Topics and research questions | RUDI-P/S Survey | Virtual site visits (interviews) | RUDI-R Survey | Interviews with Part A & B Recipients | Interviews with AETCs | Google Analytics data | RSR and other sources of secondary data |
|---|---|---|---|---|---|---|---|
| **Topic: Recipients and subrecipients’ access to HAB and non-HAB resources** | | | | | | | |
| | x | | x | | x | x | |
| | x | | x | | x | x | |
| **Topic: Providers’ use of resources and TA** | | | | | | | |
| | | x | | x | x | | |
| | | x | | | | | x |
| | x | x | x | x | x | | |
| **Topic: Influence of resources on implementation and replication of interventions** | | | | | | | |
| | x | x | x | x | x | | |
| | x | x | x | x | x | | |
| **Topic: Implementation and adaptations of the interventions** | | | | | | | |
| | xᵇ | xᵇ | | | | | |
| | x | x | | | | | |
| | | x | | | | | |
| **Topic: Lessons learned from implementation** | | | | | | | |
| | | x | | | | | |
| **Topic: Measuring the outcomes of the interventions** | | | | | | | |
| | | x | | | | | |
| | | x | | | | | |
| **Topic: Effects of intervention implementation on the organization** | | | | | | | |
| | | x | | | | | |
| **Topic: Factors influencing providers’ access and use of resources** | | | | | | | |
| | | x | x | x | x | | x |
| **Topic: Providers’ use of resources** | | | | | | | |
| | x | x | x | x | x | x | x |

ᵃ Note that RWHAP providers include some organizations that are recipients (Parts C, D, and F) and some that are subrecipients (Parts A and B). The broad term “recipients and subrecipients” therefore includes RWHAP providers as well as Part A and B administrative recipients, who do not provide care to clients but who support providers and may also implement system-level initiatives to improve HIV care.

ᵇ The survey will give insight into this question because it will ask all RWHAP providers whether they used HAB or non-HAB resources to help implement an intervention over the past two years. Because providers seem unlikely to implement an intervention without using any resources (although that is possible), we will have an estimate of the number of providers that implemented at least one intervention. Providers who receive virtual site visits will be asked to list all the interventions they implemented in the past two years, so we will know the average number among those interviewed and have a sense of how common it is to implement multiple interventions in a two-year period. However, this average will not be statistically representative of all RWHAP providers, given the nuances of the site selection criteria.

RUDI-P/S = National survey of RUDI providers and subrecipients; RUDI-R = National survey of RUDI Part A and B administrative recipients; HAB = HIV/AIDS Bureau; RSR = RWHAP Services Report file; RWHAP = Ryan White HIV/AIDS Program; TA = technical assistance.

Exhibit 3 shows how each data source will contribute to HAB’s understanding of the key topic areas of the RUDI evaluation. The exhibit also highlights how the five primary data collection activities for which HAB is seeking OMB approval contribute to the study: (1) a one-time national survey of all RWHAP providers, whether funded directly as recipients or indirectly as subrecipients to provide care and support to people with HIV (RUDI-P/S); (2) a one-time national survey of all RWHAP Part A and B administrative recipients (RUDI-R); (3) virtual site visits (interviews) to a sample of 40 RWHAP provider sites; (4) telephone interviews with a sample of 20 RWHAP Part A and B recipients (administrative entity only); and (5) telephone interviews with 8 AIDS Education and Training Center (AETC) recipients. These data collection activities will occur over an 18-month period beginning in December 2023 or January 2024. We discuss each data collection activity separately below.

Exhibit 3. Primary contributions of each data source to the RUDI evaluation

| Data source | Primary contributions to the evaluation |
|---|---|
| **Data sources requiring OMB clearance** | |
| National survey of RWHAP recipients and providers / subrecipients (RUDI-R and RUDI-P/S) | |
| Virtual site visits with a sample of RWHAP providers | |
| In-depth interviews with Part A and B administrative recipients | |
| In-depth interviews with AETCs | |
| **Data sources not requiring OMB approval** | |
| RSR and Dental Services Report (DSR) files | |
| Google Analytics data | |
| Other publicly available data | |
| Reports internal to HAB | |

HAB = HIV/AIDS Bureau; OMB = Office of Management and Budget; RSR = RWHAP Services Report file; RWHAP = Ryan White HIV/AIDS Program.

Data collection activities requiring OMB approval

National Survey of RUDI Providers and Subrecipients (RUDI-P/S). HAB will conduct a web-based survey of all RWHAP providers and subrecipients, whether directly or indirectly funded by the program. Although the term “providers” includes subrecipients in project planning and analysis, we include the word “subrecipients” in the survey title because we have learned that some non-medical HIV service organizations may not recognize themselves as providers. The survey will collect information on the following:

The extent to which RWHAP providers access and use HAB and non-HAB resources to improve the delivery of care

The specific channels and pathways that RWHAP providers use to access and download HAB and non-HAB resources and products

The type and characteristics of the intervention resources RWHAP providers use to improve the delivery of care and treatment

The contribution of the HAB and non-HAB resources and products to implementation success

Opportunities to strengthen the impact of HAB’s resources and products, as well as the way in which HAB disseminates them, on care delivery and health outcomes

The RUDI-P/S survey will be a census, meaning that all providers receiving RWHAP funds either directly or indirectly will receive an invitation to participate. A census is preferred because sampling may yield findings that are representative of only a subset of RWHAP providers and subrecipients, whereas a census will increase our chances of capturing the widest possible range of experiences. We plan to send survey invitations to approximately 2,130 providers that received funding under the RWHAP in 2020 (the latest year for which we have contact information), and we anticipate a response rate no lower than 50 percent (n = 1,065). We expect the web-based survey to take 15 to 20 minutes to complete. In addition to answering several of the study’s key research questions, we will use the survey findings (together with administrative data from the RWHAP Services and Dental Services Reports [RSRs and DSRs]) to select the RWHAP provider sites for participation in virtual site visits.

The draft RUDI-P/S survey instrument is provided in Appendix A.

National Survey of RUDI Part A and B Administrative Recipients (RUDI-R). HAB will conduct a web-based survey of all Part A and B administrative recipients funded by the RWHAP. The primary purpose of the RUDI-R survey is to obtain information on (1) the extent to which RWHAP recipients access and use HAB and non-HAB intervention resources, (2) the channels they use to access them, (3) the contribution the resources make to recipients’ internal knowledge, (4) the extent to which the resources are used to support recipients who are implementing system-level initiatives, (5) the extent to which they are used to support providers as providers implement interventions for clients living with HIV, and (6) opportunities to strengthen the effectiveness of HAB’s dissemination activities. We will field the web-based survey with a census of RWHAP Part A and B administrative recipients, so sampling methods are not applicable. As with the RUDI-P/S Survey, a census is preferred for the RUDI-R Survey: sampling may yield findings that are representative of only a subset of RWHAP Part A and B administrative recipients, whereas a census will increase our chances of capturing the widest possible range of experiences.

We will obtain contact information for the universe of RWHAP Part A and B administrative recipients from an administrative list provided by HAB. RWHAP recipients responding to the survey will form the sample frame for the set of one-hour interviews described below.

The draft RUDI Recipients Survey is provided in Appendix B.

Virtual site visits with RWHAP providers. By virtual site visits, we mean telephonic interviews (usually conducted over several days, depending on the informants’ availability) with a range of staff members who have different roles and perspectives within each provider site. Virtual site visits will provide more in-depth, nuanced information on (1) how RWHAP providers access and use HAB and non-HAB resources and products to improve care, (2) the contribution of those resources and products to implementation success, and (3) opportunities to strengthen the impact of HAB activities on dissemination and implementation of intervention strategies. The research team will virtually visit 40 RWHAP provider sites. During each virtual site visit, the team will engage one to five staff members in semi-structured individual or small-group interviews. Each interview will last 15 to 60 minutes, depending on the informant’s role in accessing and implementing dissemination materials.

The draft virtual site visit discussion guide is provided in Appendix C.

Interviews with RWHAP Part A and B Recipients (administrative entity only). Part A and B recipients use HAB resources in three ways: directly, to improve their own knowledge and help achieve Ryan White program goals; directly, to aid them in implementing system-wide initiatives; and indirectly, to support providers in the providers’ efforts to improve services for their clients. Through these interviews, which will last up to 60 minutes each, the research team will learn how this audience directly accesses and uses HAB resources (which may differ from how providers and AETCs access and use them) and will collect the observations and lessons that recipients report hearing from their subrecipients (providers) about the use of these materials. For a sample of 20 recipients, the research team will interview a key representative of each recipient organization identified by HAB; in most cases, one interview per organization will be sufficient. When the identified contact indicates that others would have complementary and valuable information on our topic, the key contact may invite one or two other staff members to the single interview. We estimate that in five jurisdictions (for example, California, New York, Texas, Florida, and New York City), the Part A and B recipient organizations will be so large that one representative could not provide enough in-depth detail; in those cases, we would interview up to two additional contacts from the same organization in separate interviews.

The draft guide for interviews with RWHAP Part A and Part B administrative recipients is provided in Appendix D.

AETC Interviews. The AETCs are another key user audience for HAB dissemination products, so without these interviews the team would miss the perspectives of another important user group. The team will interview a key contact in each of the eight regional AETCs identified by HAB, for up to 60 minutes each, to learn how they use HAB dissemination products themselves, how they use these resources to support RWHAP providers in their region, what they have heard from providers in their region about the use and usefulness of the resources, and their perspectives on what could be improved to make them more useful.

The draft guide for AETC interviews is provided in Appendix E.

Data collection activities not requiring Office of Management and Budget (OMB) approval

HAB is not seeking OMB approval for the following secondary data sources. We describe each source to provide a comprehensive understanding of the RUDI evaluation.

RSR and DSR files. We will link RWHAP provider characteristics from the 2021 RSR and DSR data files (the most recent version available) to data from the RUDI-P/S Survey to identify variation in the use of HAB and non-HAB resources across different types of providers and patient populations. For example, we might find that medical providers are more likely than support service providers to use disseminated resources, or that providers serving more clients are more likely than those serving fewer clients to use certain resources. Similarly, we might find that providers serving racial and ethnic minority populations access and use resources differently from those serving predominantly White populations. Characteristics of interest available from the RSR and DSR files include provider type, ownership status, type of funded services, source of funding, geographic location, number of clients served, and characteristics of clients served (such as race and ethnicity, gender, HIV risk group, poverty status, and housing status). Health outcome measures from the RSR file (such as the percentage of clients who are virally suppressed) are also of interest.
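As a minimal sketch of the linkage described above, the example below joins survey responses to RSR/DSR provider characteristics and computes the share of providers reporting resource use within each provider type. It assumes both files carry a common provider identifier; the field names (`provider_id`, `provider_type`, `used_hab_resources`) and the records are hypothetical placeholders, not actual RSR or survey data.

```python
from collections import defaultdict

# Hypothetical toy records; field names and values are illustrative only.
survey_responses = [
    {"provider_id": "P001", "used_hab_resources": True},
    {"provider_id": "P002", "used_hab_resources": False},
    {"provider_id": "P003", "used_hab_resources": True},
    {"provider_id": "P004", "used_hab_resources": True},
]
rsr_characteristics = {
    "P001": {"provider_type": "medical"},
    "P002": {"provider_type": "support services"},
    "P003": {"provider_type": "medical"},
    "P004": {"provider_type": "support services"},
}


def link_survey_to_rsr(responses, characteristics):
    """Inner-join survey responses to RSR/DSR characteristics on provider_id."""
    linked = []
    for response in responses:
        traits = characteristics.get(response["provider_id"])
        if traits is None:
            continue  # no administrative record, so excluded from linked analysis
        linked.append({**response, **traits})
    return linked


def share_using_resources(linked, group_field):
    """Proportion of linked providers in each group that report resource use."""
    counts = defaultdict(lambda: [0, 0])  # group -> [users, total]
    for row in linked:
        tally = counts[row[group_field]]
        tally[0] += int(row["used_hab_resources"])
        tally[1] += 1
    return {group: users / total for group, (users, total) in counts.items()}


linked = link_survey_to_rsr(survey_responses, rsr_characteristics)
print(share_using_resources(linked, "provider_type"))
# → {'medical': 1.0, 'support services': 0.5}
```

An inner join is used here so that only providers with both a survey response and an administrative record enter the comparison; in practice, unmatched providers would be reviewed separately.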

Google Analytics data. For the RUDI evaluation, we also plan to use Google Analytics data from two websites (www.TargetHIV.org and www.ryanwhite.hrsa.gov) through which HAB disseminates most of its HIV intervention resources and products. We will primarily focus on the library section of the TargetHIV website, through which users can access several key subpages, such as (1) the Best Practices Compilation page, (2) the Special Projects of National Significance Resource Directory page, and (3) the HIV Care Innovations: Replication Resources page, among others. We will track the most frequently accessed library resources, the most frequently searched library topics, and the most commonly downloaded materials. We will also identify the most used search filters and keywords on the Best Practices Compilation page, the most frequently clicked subpages, and the most commonly clicked hyperlinks on those pages. We will use analytics data to identify the channels or pathways (such as the internal search function, navigation within the website, or external links) that users take to find resources relevant to delivering care to people with HIV. We will also calculate similar access and use metrics from Google Analytics data for resources and pages outside the TargetHIV website that are relevant to the evaluation, such as the data reports and slide decks that HAB prepares from the annual RSR data and makes publicly available online.
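The access metrics described above reduce to simple counts over exported analytics records. The sketch below assumes a hypothetical export in which each row records a page path, an event type, and an acquisition channel; the page paths, event names, and channel labels are illustrative and do not reflect the actual structure of the TargetHIV site or of Google Analytics exports.

```python
from collections import Counter

# Hypothetical export rows; the column layout is an assumption for illustration.
events = [
    {"page": "/library/best-practices", "event": "page_view", "channel": "internal search"},
    {"page": "/library/spns-directory", "event": "page_view", "channel": "site navigation"},
    {"page": "/library/best-practices", "event": "page_view", "channel": "external link"},
    {"page": "/library/best-practices", "event": "file_download", "channel": "internal search"},
    {"page": "/library/replication-resources", "event": "page_view", "channel": "internal search"},
]


def top_pages(rows, event_name, n=3):
    """Return the n pages with the most events of the given type."""
    return Counter(r["page"] for r in rows if r["event"] == event_name).most_common(n)


def channel_shares(rows):
    """Share of page views arriving through each channel or pathway."""
    views = [r["channel"] for r in rows if r["event"] == "page_view"]
    if not views:
        return {}
    return {channel: count / len(views) for channel, count in Counter(views).items()}


print(top_pages(events, "page_view", n=1))  # → [('/library/best-practices', 2)]
print(channel_shares(events))
# → {'internal search': 0.5, 'site navigation': 0.25, 'external link': 0.25}
```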

Other available data. Along with data from the RSR and DSR, we will use publicly available data to understand the extent to which HAB and non-HAB resource utilization is associated with community-level factors, such as HIV prevalence and incidence, and with population characteristics, such as poverty, education, and employment. Potential sources of information on community characteristics include HIV surveillance data, the Area Health Resources Files, and the American Community Survey. In addition, we will use publicly available data to identify Medicaid expansion states, because use of HAB resources may vary with this major difference in state policy environments. We will also review existing data within HAB that pertain to resource use, including (1) Recipient Satisfaction Survey results (which are helpful but much broader and do not overlap with the new data collection proposed here), (2) call volume at the National Clinician Consultation Center, which fields calls from clinicians about HIV treatment (which also does not overlap), and (3) the report from the National Evaluation Contractor for AETCs.

3. Use of improved information technology and burden reduction

We have made efforts to minimize respondent burden while obtaining the essential information needed to answer the RUDI evaluation research questions. Fielding one-time web-based surveys and conducting site visits and interviews virtually will burden respondents less than alternate methods, such as longitudinal paper-based surveys and in-person site visits and interviews. The RUDI evaluation will collect primary data and acquire secondary data through a combination of electronic methods that we describe here.

RUDI surveys. We will program both the provider and recipient web surveys using QuestionPro software, which allows us to design input screens that visually guide respondents through the survey instrument and improve the accuracy of data entry. Pilot testing demonstrated that the web survey functions as intended and provided the data required for accurate burden estimates. Analytic Computing Environment (ACE3). In addition, we will conduct all outreach to survey respondents electronically via email and, if applicable, notifications via listservs and electronic newsletters.

Virtual site visits and interviews. We will conduct the virtual site visits, which consist of individual or small-group interviews (depending on the respondent’s preference), as well as the RWHAP recipient and AETC interviews, over WebEx. We will schedule interviews at times convenient for each respondent. With respondents’ verbal consent, we will audio record all interviews and store the audio recordings and electronic notes on a secure server with need-to-know access.

4. Efforts to identify duplication and use of similar information

This evaluation collects information that is unique to the RUDI evaluation and that, in the absence of this study, would not be available to HAB. With an eye toward minimizing duplication and burden, the survey and interview data generated by this study are not duplicative of, but complementary to, the other evaluation and monitoring activities conducted by HAB. The overall evaluation strategy uses four primary data sources to generate data that do not currently exist: (1) the RUDI surveys, (2) virtual site visit interviews with staff at selected RWHAP provider sites, (3) interviews with RWHAP recipients, and (4) interviews with AETC staff. The RUDI evaluation also leverages the following existing data sources: (1) RSR and DSR data, (2) Google Analytics data, (3) other publicly available data files with information on community characteristics, and (4) existing reports from within HAB pertaining to National Clinician Consultation Center call volume, National Evaluation Contractor information for AETCs, and Recipient Satisfaction Survey data. This approach ensures that evaluation participants are asked only for information that does not currently exist.

The information collected through these activities serves as the only comprehensive source of qualitative and quantitative information for meeting the goals of the RUDI evaluation.

5. Impact on small business or other small entities

Information collection will not have a significant impact on small entities. We will collect data from RWHAP recipients and subrecipients, which typically do not constitute small businesses.

6. Consequences of collecting the information less frequently

The proposed frequency of data collection (one-time surveys and one round of virtual site visit interviews) is the minimum necessary to meet the evaluation objectives. We developed the proposed plans for data collection activities with input from a RUDI work group, HAB project officers, and HAB leaders, and we sought to balance data collection burden against the ability to achieve the evaluation’s aims. The evaluation design includes two one-time web-based surveys, up to 200 interviews with staff across 40 RWHAP provider sites, up to 30 interviews with Part A and B recipient administrative contacts, and up to 8 interviews with AETC contacts. Because relevant data do not currently exist, these data collection activities are required to achieve the evaluation’s objectives. Importantly, HAB has implemented strategies to reduce burden on participants by employing electronic and virtual data collection techniques and by using relevant, readily available secondary data (RSR and DSR files, Google Analytics, publicly available data sets, and existing reports from within HAB).
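The interview maximums above can be reconciled with the sample sizes given in Section 2. The short calculation below is illustrative and assumes that each of up to five staff members per provider site is interviewed individually, and that the 30 recipient interviews comprise one interview for each of the 20 sampled recipients plus up to two additional interviews in each of the five large jurisdictions; the constant names are hypothetical.

```python
# Sample-size parameters as stated in this statement; the breakdown is an assumption.
PROVIDER_SITES = 40
MAX_STAFF_PER_SITE = 5          # one to five staff members per virtual site visit
RECIPIENTS_SAMPLED = 20         # one key contact per sampled Part A/B recipient
LARGE_JURISDICTIONS = 5         # recipients too large for a single informant
EXTRA_CONTACTS_PER_LARGE = 2    # additional separate interviews in each
AETC_CONTACTS = 8               # one key contact per regional AETC


def max_interviews():
    """Upper bound on interviews for each qualitative data collection activity."""
    return {
        "virtual site visits": PROVIDER_SITES * MAX_STAFF_PER_SITE,
        "Part A and B recipients": RECIPIENTS_SAMPLED
        + LARGE_JURISDICTIONS * EXTRA_CONTACTS_PER_LARGE,
        "AETCs": AETC_CONTACTS,
    }


counts = max_interviews()
print(counts, sum(counts.values()))
# → {'virtual site visits': 200, 'Part A and B recipients': 30, 'AETCs': 8} 238
```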

If we do not conduct the RUDI-P/S and RUDI-R Surveys and virtual site visits, HAB will continue to lack important information about the uptake, utility, and efficacy of the products it disseminates, the effectiveness of its dissemination process, and the reach of its key dissemination channels. The lack of information on the uptake, use, and efficacy of HAB’s dissemination resources and products will constrain the implementation of effective care delivery strategies across the country and ultimately impede movement toward the long-term goal of ending the HIV epidemic in the United States.

7. Special circumstances relating to the guidelines of 5 CFR 1320.5

The request fully complies with the regulation.

8. Comments in response to the federal register notice/outside consultation

Section 8A.

A 60-day Federal Register Notice was published on July 12, 2023, Vol. 88, No. 132, pp. 44371-44373. No comments were received. A 30-day Federal Register Notice was published on October 19, 2023, Vol. 88, No. 201, pp. 72085-72086.

Section 8B.

Feedback from HAB staff. The HAB contractor conducting the RUDI evaluation consulted with 17 HAB staff for feedback on the overall evaluation design, the content of the RUDI Survey, the virtual site visit interview protocol, and the approach to integrating secondary data into the evaluation.

We also presented the RUDI evaluation approach and solicited input at HAB division meetings, with a total of 83 participants from the following HAB divisions: Office of Program Support (n=12), Division of Policy and Data (n=28), and Division of Community HIV/AIDS Programs (n=43). An additional 38 HAB staff attended division meetings, but their specific division within HAB was not provided.

HAB staff were consulted because they possessed important knowledge about RWHAP staff, including how those staff engage (or do not engage) with resources and how best to interface with them on the data collection activities included in the RUDI Evaluation. These collections were not subject to the Paperwork Reduction Act (PRA) because feedback was gathered from federal employees rather than from members of the public.

Pilot test. We pilot tested the RUDI-P/S and RUDI-R surveys, the RWHAP provider discussion guide, the Part A and B administrative recipient discussion guide, and the AETC discussion guide with RWHAP providers, subrecipients, administrative recipients, and AETC staff, respectively. Each data collection tool was tested with nine or fewer individuals. Feedback received was used to streamline the various documents to reduce burden and to remove any items that were duplicative across instruments. Detailed pilot test findings can be found in Supporting Statement B, Section D.

9. Explanation of any payment/gift to respondents

RUDI-P/S and RUDI-R surveys. In the context of the RUDI evaluation, a RWHAP provider is an organization with one or more staff members providing RWHAP-funded services to eligible clients. Each provider will submit one survey, reflecting the experience of all staff in the provider site. The first approximately 560 RWHAP providers and the first 28 RWHAP recipients that respond to the survey will receive a $50 Amazon gift card. These numbers represent one-half of the anticipated number of total respondents for each survey. The remaining respondents will receive a $25 Amazon gift card. The provider site can share the incentive with those contributing to the survey, or the provider can choose to use the entire amount for a specific purpose. The Amazon gift card is a token of HAB’s appreciation for providers’ participation. Research on the impact of incentives on survey response rates among RWHAP providers is limited. However, in recent studies of school principals and physicians, $50 incentives outperformed incentives of lower amounts.6,7 Further, in an experiment embedded in the U.S. Panel Study of Income Dynamics, the group offered $150 for early response had higher response rates than the group offered $75, regardless of completion time.8 Maximizing response increases the likelihood of generalizable results and supports disaggregated analyses across different types of providers and communities. This, in turn, will aid in the selection of diverse sites for participation in virtual site visit interviews. Participating providers and recipients can opt out of receiving the gift card, if preferred, at the end of the web survey.
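The tiered survey incentive amounts to a simple rule on completion order. This sketch treats the stated thresholds (approximately 560 providers and 28 recipients) as exact cutoffs, which is an assumption; the function name and its inputs are hypothetical and do not describe how QuestionPro or the contractor actually assigns gift cards.

```python
# Thresholds and amounts from this section; treating "approximately 560" as an
# exact cutoff is an assumption, and the function itself is hypothetical.
EARLY_RESPONDER_CUTOFF = {"provider": 560, "recipient": 28}
EARLY_AMOUNT_USD = 50
STANDARD_AMOUNT_USD = 25


def gift_card_amount(survey, completion_rank, opted_out=False):
    """Gift card value for a respondent, given a 1-based order of survey completion."""
    if opted_out:
        return 0  # respondents may decline the gift card at the end of the survey
    if completion_rank <= EARLY_RESPONDER_CUTOFF[survey]:
        return EARLY_AMOUNT_USD
    return STANDARD_AMOUNT_USD


print(gift_card_amount("provider", 560), gift_card_amount("provider", 561))  # → 50 25
```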

Virtual site visits with RWHAP providers and interviews with RWHAP recipients and AETC staff. We anticipate that, together, the virtual site visit interviews will last up to three hours per provider site and involve one to five people from each site. Participating RWHAP provider sites will receive a $380 Amazon gift card. RWHAP recipients and AETC staff participating in 1-hour virtual interviews will receive a $125 Amazon gift card. These incentives are consistent with the $125 hourly wage for individuals in similar positions (see Exhibit 5); for example, AETC staff participating in a one-hour interview will receive a $125 incentive. For virtual site visits that involve multiple people over multiple hours, a larger incentive is offered. A similar approach was used in a 2021 study that involved interviews with leaders in physician practices, in which incentives ranged from $500 to $1,500 depending on practice size.9 As with the survey, the provider site can choose to share the gift card among the individual informants participating in the interviews or opt to use the entire amount for a specific purpose. The gift card reflects the importance HAB places on input from the individual staff members within its RWHAP-funded provider sites on issues related to the uptake, utility, and efficacy of the products it disseminates, the effectiveness of its dissemination process, and the reach of its key dissemination channels. As with the surveys, providers and recipients participating in the virtual site visits and interviews can opt out of receiving the gift card, if preferred.

10. Assurance of confidentiality provided to respondents

Data will be kept private to the extent allowed by law. We will protect the confidentiality, integrity, and availability of study data in the following ways. First, we will control access to systems and data on a role-based, need-to-know, and least privilege basis, and we will periodically review access rights to ensure only those with the need to access the information can do so. We will secure all project data in transit and at rest using U.S. Federal Information Processing Standard 140-2 compliant encryption. We will also ensure secure external access to information systems by using a virtual private network and an encrypted secure file transfer portal. Only authorized study team members will have access to the secure data transfer application and project folders throughout the life of the project.

As part of the data collection effort, we will do the following:

Provide project staff with role-based security and privacy training to educate them about data protection expectations (such as permitted uses of data), where they can and cannot store and access data, and the processes for authorized data disclosure and prohibitions on data disclosure.

Collect data that reflects the provider sites’ experience rather than the experience of any one individual.

Use HAB-provided contact information for RWHAP providers and recipients to field the surveys and schedule the virtual site visits and interviews. The survey and the virtual site visit/interview protocols will seek a limited set of personally identifiable information to better understand the composition of staff at RWHAP provider and recipient sites.

Store all survey data, including contact information, in access-restricted folders.

Report aggregated study findings only.

Remove contact information from data that the HAB contractor shares with HAB, and transmit the data via a secure data transfer application.

At the end of the project, employ data destruction methods consistent with National Institute of Standards and Technology standards, and require the HAB contractor to provide a certificate of destruction upon request.

The following approaches will be used to collect, transfer, and store project data:

RUDI surveys. We will obtain a census list of eligible survey respondents and their contact information from the 2021 RSR and DSR files. We will transmit this information securely to the HAB contractor via the secure file transfer portal, and the contractor will maintain it on a secure server with limited access. We will send the survey invitations via QuestionPro from within the HAB contractor's secure environment. We will collect and store all survey data in a project-specific restricted folder during the contract. Upon survey completion, we will remove email addresses and names from the corresponding survey responses. Each completed survey will be assigned a unique identification (ID) number; a crosswalk linking the unique ID to the respondent's email and name will be created and stored in a secure project folder with "need to know" access by project staff.
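The separation of survey answers from identifying information described above can be illustrated with a minimal Python sketch. It assumes each survey record arrives as a dictionary with hypothetical `name` and `email` fields (the actual QuestionPro export layout is not described here); the function `deidentify` is likewise our own illustration, not the contractor's procedure.

```python
import secrets

# Hypothetical names of the identifying fields in a survey export; the
# actual QuestionPro export layout is not specified in this statement.
PII_FIELDS = ("name", "email")


def deidentify(responses):
    """Split survey records into de-identified responses and an ID crosswalk.

    Each record receives a random unique study ID. Names and email addresses
    move into the crosswalk, which would be stored in a separate, restricted
    folder; the returned responses keep only the study ID and survey answers.
    """
    deidentified, crosswalk, used_ids = [], [], set()
    for record in responses:
        # Draw random IDs until an unused one is found, so the ID reveals
        # nothing about the respondent or the order of completion.
        study_id = secrets.token_hex(4)
        while study_id in used_ids:
            study_id = secrets.token_hex(4)
        used_ids.add(study_id)

        crosswalk.append({"study_id": study_id, **{f: record[f] for f in PII_FIELDS}})
        answers = {k: v for k, v in record.items() if k not in PII_FIELDS}
        deidentified.append({"study_id": study_id, **answers})
    return deidentified, crosswalk


# Illustrative records with made-up values.
raw = [
    {"name": "Respondent A", "email": "a@example.org", "q1": "Yes"},
    {"name": "Respondent B", "email": "b@example.org", "q1": "No"},
]
data, xwalk = deidentify(raw)
for row in data:
    print(row)
```

Random rather than sequential IDs are a design choice in this sketch: they keep the ID from hinting at when a respondent completed the survey. Only the crosswalk can link an ID back to a person, which is why it would live in its own restricted folder.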

Site visit interview data. We will select RWHAP provider and recipient sites for the virtual interviews based on survey responses and provider characteristics derived from the RSR and DSR. We will maintain both data sources (the survey responses and the RSR/DSR extract file), along with electronic notes, on a secure server with limited access. We will audio-record all interviews with the informant's verbal consent. Immediately after each interview, we will upload the audio recording from the WebEx platform to a project-specific restricted folder, along with any electronic notes taken during the site visit. After the upload, we will delete the audio recording from the WebEx platform.

11. Justification for sensitive questions

The RUDI evaluation focuses on the uptake, utility, and efficacy of HAB and non-HAB disseminated resources and products, the effectiveness of HAB's dissemination process, and the reach of its key dissemination channels. The information that we will collect from RWHAP providers and recipients (via survey or interviews) is not sensitive in nature. Because of the evaluation's focus, we will mostly ask respondents to provide information about their organizations and organizational activities. Information collected in the survey and during virtual site visits will pertain to staff experiences with, and uses of, various dissemination resources and products. Questions are not designed to collect personal or sensitive information.

12. Estimates of annualized hour and cost burden

12A. Estimated annualized burden hours

This section provides annualized burden hours for each RUDI evaluation primary data collection activity described in this OMB package. The primary data collection activities will occur in Year 2 of the RUDI evaluation. As Exhibit 4 shows, total burden hours for this entire OMB package are 543. Each of the RUDI surveys (RUDI-P/S and RUDI-R) will take no more than 20 minutes (0.33 hours) to complete. Virtual site visit interviews with staff at each of the 40 RWHAP provider sites will not exceed three hours in total per site. We anticipate that one to five staff members from each site will participate in the interviews; we used an average of three for the burden estimate. Interviews with RWHAP recipient sites will take 45 hours in total. At 15 of the 20 RWHAP recipient sites, we will conduct one 1-hour interview with an average of two participants; the remaining five sites are large and will average three participants per site. Interviews with eight AETC providers will take one hour each. We based our time estimates for survey completion and interview duration on pilot testing with RWHAP providers and recipients and on our experience with similar efforts in comparable studies.

Exhibit 4. Annualized burden hour estimate associated with data collection

| Respondent | Data collection | Number of sites | Number of staff providing input per site | Number of responses per respondent | Total responses | Average burden per response (in hours) | Total burden hours |
|---|---|---|---|---|---|---|---|
| RWHAP providers | RUDI – Provider Survey | 1,066 | 1 | 1 | 1,066 | 0.33 | 351.78 |
| RWHAP providers | Virtual site visit interviews | 40 | 3 | 1 | 120 | 1.00 | 120.00 |
| RWHAP recipients | RUDI – Recipient Survey | 56 | 1 | 1 | 56 | 0.33 | 18.48 |
| RWHAP recipients | Interviews (small sites) | 15 | 2 | 1 | 30 | 1.00 | 30.00 |
| RWHAP recipients | Interviews (large sites) | 5 | 3 | 1 | 15 | 1.00 | 15.00 |
| AETC providers | Interviews | 8 | 1 | 1 | 8 | 1.00 | 8.00 |
| Total | | | | | 1,295 | | 543.26 |
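As a check on Exhibit 4, the minimal Python sketch below recomputes each row as sites × staff per site × responses per respondent, multiplies by the average burden per response, and sums the rows. The row values are copied from Exhibit 4; the shortened labels and the function `burden_totals` are our own illustration, not HAB's estimation tool. It uses `decimal.Decimal` so that the 0.33-hour survey burdens do not pick up floating-point rounding error.

```python
from decimal import Decimal

# Exhibit 4 rows: (data collection, sites, staff per site,
# responses per respondent, average burden per response in hours).
EXHIBIT_4 = [
    ("RUDI - Provider Survey", 1066, 1, 1, Decimal("0.33")),
    ("Virtual site visit interviews", 40, 3, 1, Decimal("1.00")),
    ("RUDI - Recipient Survey", 56, 1, 1, Decimal("0.33")),
    ("Recipient interviews (small sites)", 15, 2, 1, Decimal("1.0")),
    ("Recipient interviews (large sites)", 5, 3, 1, Decimal("1.0")),
    ("AETC interviews", 8, 1, 1, Decimal("1.0")),
]


def burden_totals(rows):
    """Return per-row (responses, burden hours) and the overall totals."""
    per_row = {}
    for activity, sites, staff, per_respondent, hours_each in rows:
        responses = sites * staff * per_respondent  # total responses for the row
        per_row[activity] = (responses, responses * hours_each)  # row burden hours
    total_responses = sum(r for r, _ in per_row.values())
    total_hours = sum(h for _, h in per_row.values())
    return per_row, total_responses, total_hours


per_row, total_responses, total_hours = burden_totals(EXHIBIT_4)
for activity, (responses, hours) in per_row.items():
    print(f"{activity:<36} {responses:>6} {str(hours):>8}")
print(f"{'Total':<36} {total_responses:>6} {str(total_hours):>8}")
```

The totals reproduce the exhibit's 1,295 responses and 543.26 burden hours.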

12B. Estimated annualized burden costs

As Exhibit 5 shows, the annualized cost burden of the proposed primary data collection activities of the RUDI evaluation is $66,821. There are no direct costs to respondents other than their time spent completing the surveys and participating in the virtual site visits and interviews. Hourly wage rates are based on the mean hourly wage for medical and health services managers reported in the 2022 Occupational Employment and Wage Statistics of the Bureau of Labor Statistics (BLS), available at https://www.bls.gov/oes/current/oes_nat.htm#b29-0000.htm. We chose medical and health services managers based on the BLS job description and typical educational level: these individuals ‘plan, direct, or coordinate medical and health services in hospitals, clinics, managed care organizations, public health agencies, or similar organizations’ and typically have at least a bachelor’s degree.

Exhibit 5. Annualized cost burden associated with data collection

| Type of respondent | Total burden hours | Hourly wage | Total |
|---|---|---|---|
| RWHAP provider staff contributing to RUDI Provider Survey | 352 | $123.06 | $43,317 |
| RWHAP provider staff participating in virtual site visits | 120 | $123.06 | $14,767 |
| RWHAP recipient staff completing RUDI Recipient Survey | 18 | $123.06 | $2,215 |
| RWHAP recipient staff participating in interviews (small and large sites) | 45 | $123.06 | $5,538 |
| AETC provider staff participating in interviews | 8 | $123.06 | $984 |
| Total | | | $66,821 |

b The mean hourly wage of $61.53 for medical and health services managers reported in the 2022 Occupational Employment and Wage Statistics of the Bureau of Labor Statistics was doubled to $123.06 to account for overhead costs.
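The cost arithmetic behind Exhibit 5 can be reproduced in a few lines. The minimal Python sketch below, which uses shortened row labels of our own, doubles the $61.53 mean wage, multiplies the result by each row's burden hours (as rounded in Exhibit 5), and rounds each row to whole dollars before summing. It is an illustrative check, not HAB's costing method.

```python
from decimal import Decimal, ROUND_HALF_UP

# 2022 BLS mean hourly wage for medical and health services managers,
# doubled to account for overhead costs, as described in footnote b.
MEAN_WAGE = Decimal("61.53")
LOADED_WAGE = MEAN_WAGE * 2  # 123.06

# Burden hours as rounded to whole hours in Exhibit 5.
EXHIBIT_5_HOURS = {
    "Provider survey": 352,
    "Provider virtual site visits": 120,
    "Recipient survey": 18,
    "Recipient interviews": 45,
    "AETC interviews": 8,
}


def to_dollars(amount):
    """Round a cost to whole dollars, rounding halves up."""
    return amount.quantize(Decimal("1"), rounding=ROUND_HALF_UP)


row_costs = {name: to_dollars(hours * LOADED_WAGE) for name, hours in EXHIBIT_5_HOURS.items()}
total_cost = sum(row_costs.values())
for name, cost in row_costs.items():
    print(f"{name:<30} ${int(cost):,}")
print(f"{'Total':<30} ${int(total_cost):,}")
```

Summing the rounded row costs reproduces the $66,821 total. Rounding once at the end instead (543 hours × $123.06 = $66,821.58) would give $66,822, so the exhibit's total reflects row-level rounding.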

13. Estimates of other total annual cost burden to respondents or recordkeepers/capital costs

Other than their time, there is no cost to respondents.

14. Annualized cost to federal government

The portion of the 2.5-year, fixed-price contract that supports survey- and interview-related activities is $2,362,280, annualized to $944,952. This includes labor costs to design and manage the evaluation; convene and facilitate the RUDI work group meetings; develop and pilot test the survey instrument and interview protocol; recruit survey and interview respondents; collect, clean, and analyze the data; and prepare a final report and manuscripts. In addition, a HAB official at the GS-13, Step 6 level (Washington-Baltimore-Arlington, DC-MD-VA-WV-PA locality) will monitor the project at 30 percent time; adjusting by 1.5 to account for benefits, the cost would be $58,807.50. The average annual total cost of the project is $114,779, and the total cost of the 2.5-year RUDI evaluation is $3,443,365.

15. Explanation for program changes or adjustments

This is a new information collection.

16. Plans for tabulation, publication, and project time schedule

At the end of the project, we will prepare a final report and manuscripts that are appropriate for, and actionable by, stakeholders. We will make all final materials Section 508 compliant before dissemination. The contractor will also collaborate with HAB to develop materials of interest to the TargetHIV, RWHAP, and AIDS Education and Training Center National Coordinating Resource Center websites, as well as to other venues, to be determined during the period of performance, that fit within the project's budget. These venues may include peer-reviewed journals, as appropriate. Exhibit 6 presents a timeline for data collection and reporting benchmarks for the RUDI evaluation.

Exhibit 6. Schedule for conducting the RUDI evaluation

| Activity/deliverable | Target timeline |
|---|---|
| Evaluation design and development of web surveys, virtual site visit guide, and interview protocol | December 2022–August 2023 |
| Pilot test surveys and virtual site visit protocols | August 2023–September 2023 |
| Access secondary data (RSR, DSR, Google Analytics, public data sets) | November 2022–March 2025 |
| Field surveys and conduct virtual site visit interviews | December 2023–March 2024 |
| Data analyses and reporting | |

17. Reason(s) display of OMB expiration date is inappropriate

The OMB number and expiration date will be displayed on the entry page of the web-based RUDI surveys and on any respondent-facing electronic documents.

18. Exceptions to certification for Paperwork Reduction Act submissions

There are no exceptions to the certification.

1 http://hab.hrsa.gov/abouthab/legislation.html.

2 Information on Ending the HIV Epidemic initiative is available at https://www.hrsa.gov/ending-hiv-epidemic.

3 Psihopaidas, D., C.M. Cohen, T. West, A. Latham, A. Dempsey, K. Brown, C. Heath, A. Cajina, H. Phillips, S. Young, A. Stubbs-Smith, and L.W. Cheever. “Implementation Science and the Health Resources and Services Administration’s Ryan White HIV/AIDS Program’s Work Towards Ending the HIV Epidemic in the United States.” PLOS Medicine, vol. 17, no. 11, 2020. https://doi.org/10.1371/journal.pmed.1003128.

4 Eisinger, R.W., C.W. Dieffenbach, and A.S. Fauci. “Role of Implementation Science: Linking Fundamental Discovery Science and Innovation Science to Ending the HIV Epidemic at the Community Level.” Journal of Acquired Immune Deficiency Syndromes, vol. 82, no. 3, December 1, 2019, pp. S171–S172. https://doi.org/10.1097/qai.0000000000002227.

5 Psihopaidas et al. 2020 (see footnote 3).

6 Coopersmith, J., L.K. Vogel, T. Bruursema, and K. Feeney. “Effects of Incentive Amount and Type on Web Survey Response Rates.” Survey Practice, vol. 9, no. 1, 2016.

7 Keating, N.L., A.M. Zaslavsky, J. Goldstein, D.W. West, and J.Z. Ayanian. “Randomized Trial of $20 Versus $50 Incentives to Increase Physician Survey Response Rates.” Medical Care, vol. 46, no. 8, 2008, pp. 878–881.

8 McGonagle, K.A., N. Sastry, and V.A. Freedman. “The Effects of a Targeted ‘Early Bird’ Incentive Strategy on Response Rates, Fieldwork Effort, and Costs in a National Panel Study.” Journal of Survey Statistics and Methodology, 2022, smab042.

9 Khullar, D., A.M. Bond, Y. Qian, E. O’Donnell, D.N. Gans, and L.P. Casalino. “Physician Practice Leaders’ Perceptions of Medicare’s Merit-Based Incentive Payment System (MIPS).” Journal of General Internal Medicine, 2021, pp. 1–7.

Mathematica® Inc.

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| File Title | Supporting Statement A |

| Author | OMB |

| File Modified | 0000-00-00 |

| File Created | 2023-11-01 |
