Att E_Feasibility Study Report

[NCIPC] The National Intimate Partner and Sexual Violence Survey (NISVS)

OMB: 0920-0822

Attachment E

Study of the Feasibility Survey for Redesign of the National Intimate Partner and Sexual Violence Survey

July 21, 2021

Authors

David Cantor Mina Muller

Ting Yan Darby Steiger

Eric Jodts Stephanie Mendoza

J. Michael Brick

Submitted to:

Centers for Disease Control and Prevention

Atlanta, GA

Submitted by:

Westat

An Employee-Owned Research Corporation®

1600 Research Boulevard

Rockville, Maryland 20850-3129

(301) 251-1500

Table of Contents

Chapter 1. Introduction

Research Questions and What Is Covered in This Report

Chapter 2. Methodology

Methods for the Random Digit Dial Survey

Methods for the Address-Based Sample

Variance Estimation and Significance Test

Chapter 3. What Are Response Rates for RDD and ABS Surveys?

Definition of a Completed Survey

Chapter 4. How Do Prevalence Estimates Compare Between ABS and RDD Surveys?

Lifetime Prevalence Estimates

12-Month Prevalence Estimates

Chapter 5. How Do ABS and RDD Compare with Respect to Nonresponse Bias?

Comparing Demographic and Socioeconomic Characteristics to National Benchmarks

Comparing Selected Health Measures to the National Health Interview Survey

Comparing by Level of Effort to Complete the Survey

Comparing to Prior NISVS with Different Response Rates

Chapter 6. How Do ABS and RDD Compare with Respect to Other Measures of Data Quality and Two Key Survey Outcomes?

Comparison to the National Survey of Family Growth (NSFG)

Comparing the Relationship Between Age and Victimization for ABS and RDD

Comparison of ABS and RDD on Intimate Partners and Consequences of Victimization

Chapter 7. How Do ABS and RDD Compare on Burden, Privacy, and Confidentiality?

Privacy Conditions Surrounding the Survey Administration

Chapter 8. Results of ABS experiments

Chapter 9. Recommendations for the Next NISVS

Appendix

National Intimate Partner and Sexual Violence Survey (NISVS) Redesign – Weighting Plan

List of Tables

Table

2-1 Incentives for the screener by frame and NRFU status

2-2 Incentives for the extended interview by frame, mode and NRFU status

3-1 Disposition of sampled telephone numbers

3-2 ABS and RDD survey results by final disposition codes

3-3 Response, cooperation, refusal, contact, and yield rates for ABS and RDD samples

3-4 Last section completed for RDD and ABS by completion status

4-1 Lifetime prevalence estimates for selected measures of sexual, physical, and emotional abuse

4-3 12-month prevalence estimates for selected measures of sexual, physical, and emotional abuse

5-1 Comparison of demographic distributions for RDD, ABS, and American Community Survey (ACS)

5-3 Comparison of final weighted health indicators for RDD, ABS, and national benchmarks

5-4 Lifetime prevalence estimates by Phase 1 and NRFU stages for ABS and RDD surveys

5-5 Male lifetime prevalence estimates by Phase 1 and NRFU stages for ABS and RDD surveys

5-6 Female lifetime prevalence estimates by Phase 1 and NRFU stages for ABS and RDD surveys

5-7 Lifetime prevalence estimates for NISVS 2010 to 2017, ABS, RDD by sex

5-8 Comparison of lifetime prevalence estimates by sex for ABS and RDD to NISVS 2015 and 2016-17

6-4 Comparison of estimates of forced sex for those age 18-49 for RDD, ABS, and NSFG by sex

6-5 NSFG lifetime measures for respondents age 18-49 of forced sex by age and survey

6-6 Non-intimate partner 12-month prevalence estimates by age and sample frame

6-7 Non-intimate partner lifetime prevalence estimates by age, sex, and sample frame

6-9 Percentage of victims reporting selected consequences

7-1 Number of surveys and timing of RDD and web surveys by type of device for web

7-3 Perceptions of burden for RDD and ABS by type of web device (percent)

7-4 Perceived sensitivity of the survey for RDD and ABS by type of web device (percent)

7-5 Privacy conditions when taking the survey for RDD and ABS by type of web device (percent)

8-7 Demographic distributions for ABS sample by mode of response

Acronyms

AAPOR American Association for Public Opinion Research

ABS address-based sampling

ACASI audio computer-assisted self-interviewing

ACS American Community Survey

CATI computer-assisted telephone interviewing

IPPA intimate partner psychological aggression

IPPV intimate partner physical violence

IPV intimate partner violence

NCVS National Crime Victimization Survey

NHIS National Health Interview Survey

NISVS National Intimate Partner and Sexual Violence Survey

NRB nonresponse bias

NRFU nonresponse follow-up

NSFG National Survey of Family Growth

PTSD post-traumatic stress disorder

RBP Rizzo-Brick-Park probability method

RDC Research Data Center

RDD random digit dial

YMOF Youngest Male Oldest Female

1. Introduction

This report summarizes findings from the Feasibility Study testing two alternative designs for the National Intimate Partner and Sexual Violence Survey (NISVS). One design uses random digit dial (RDD) as a sample frame and computer-assisted telephone interviews (CATI) as the mode of interviewing. The second design uses an address-based sample (ABS) that pushes respondents to the web and follows up with multimode alternatives. One alternative gave respondents a choice between the web and calling in to do a CATI. The second gave the choice between web and filling out an abbreviated paper survey. The goal of this report is to summarize results that are key for deciding the design for the next NISVS.

Chapter 2 provides an overview of the methodology used in the data collection, weighting, and analysis. Chapters 3-8 summarize results addressing each research question. The final chapter makes a recommendation on the design for the next NISVS.

Research Questions and What Is Covered in This Report

The analysis of the Feasibility Study is organized around six research questions:

How do response rates compare between the RDD and ABS surveys?

How do prevalence estimates compare between ABS and RDD surveys?

How do ABS and RDD compare on bias due to nonresponse?

How do ABS and RDD compare on other measures of data quality (item missing data) as well as measures of other outcomes (comparison to other surveys measuring sexual violence; relationship of victimization with age; measures of consequences of victimization)?

How do ABS and RDD compare on respondent burden, privacy, and confidentiality?

What are the results of the experiments for ABS and RDD?

These cover the critical issues needed to decide on whether RDD, ABS, or a combination of these frames should be part of the NISVS design moving forward.

The results of the analyses (Chapters 3–8) begin with a summary of the major findings for each research question. This is followed by more specific descriptions of the results in the form of highlights. The tables are included at the end of each chapter.

2. Methodology

The Feasibility Study was designed to compare random digit dial (RDD) and address-based sample (ABS) methodologies for the National Intimate Partner and Sexual Violence Survey (NISVS). This chapter provides a brief overview of the methodology used for the study.

Methods for the Random Digit Dial Survey

The RDD portion of the Feasibility Study is a dual-frame national telephone survey with approximately 77 percent of the sample being cellphone numbers and 23 percent landline. The cellphone frame comprises the majority of the sample because of its superior coverage and because young adults and males tend to be better represented on it than on a landline frame. The cell numbers were prescreened to take out businesses and numbers identified as not likely to be active. For the landline frame, the numbers were matched to an address list. For those that matched to an address, a letter was sent alerting the household about the survey. The letter contained a $2 bill to encourage the respondent to read the letter. Respondents were offered an incentive to complete the survey, with the amount depending on the stage of the survey. One experiment tested whether sending a text prior to calling a cellphone had an effect on the response rate.

For the cellphone component, the person who answered the telephone was considered the eligible respondent, as long as that person was 18 years old or over. For the landline survey, a screening interview was conducted to randomly select an adult in the household using the Rizzo-Brick-Park method.1

Calling for the RDD survey began on May 4, 2020 and ended on July 12, 2020. There were two phases to the survey. The first phase occurred from May 4 to June 21, 2020 and consisted of calling and following up all eligible telephone numbers. Follow-up calls were placed when no one answered the phone or the person eligible for the interview was not available. Refusal conversion was attempted for those who refused but did not express hostility. Those who completed the survey were paid $10. The second phase, or nonresponse follow-up (NRFU), began June 29 and ended on July 12, 2020. For the NRFU, the nonrespondents who had not displayed hostility were sampled and called back. A $40 incentive was offered to these individuals to complete the survey.

Once the survey was completed, the data were weighted2 by first computing the probabilities of selection. After that, an adjustment was made for nonresponse within the landline and cellphone frames. After this was done, the two frames were combined using information collected on the survey about those who could have been selected in both of the sample frames. The final weights were created by raking to national totals from the American Community Survey for sex; age; race-ethnicity; marital status; education; and telephone status (cell, landline, both).
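The raking step described above can be illustrated with a minimal sketch. This is not the study's weighting code: the function name `rake`, the toy respondents, and the control totals are all hypothetical, and only two raking dimensions are used instead of the six listed above. It shows the general technique (iterative proportional fitting), in which weights are repeatedly scaled so that the weighted totals match each set of control totals in turn.

```python
def rake(weights, categories, targets, iterations=50):
    """Rake weights to marginal control totals (iterative proportional fitting).

    weights    -- starting weights, one per respondent
    categories -- dict: variable name -> list of category labels, one per respondent
    targets    -- dict: variable name -> dict of category label -> control total
    All inputs in this sketch are hypothetical illustrations.
    """
    w = list(weights)
    for _ in range(iterations):
        for var, labels in categories.items():
            # Current weighted total in each category of this variable.
            totals = {}
            for wi, label in zip(w, labels):
                totals[label] = totals.get(label, 0.0) + wi
            # Scale each weight so the category totals match the controls.
            w = [wi * targets[var][label] / totals[label]
                 for wi, label in zip(w, labels)]
    return w


# Toy example: four respondents, two raking dimensions.
categories = {
    "sex": ["M", "F", "F", "M"],
    "age": ["young", "young", "old", "old"],
}
targets = {
    "sex": {"M": 50.0, "F": 50.0},
    "age": {"young": 40.0, "old": 60.0},
}
raked = rake([1.0, 1.0, 1.0, 1.0], categories, targets)
print(raked)
```

In this toy case the raked weights reproduce both sets of control totals; with real data, convergence is usually checked against a tolerance rather than assumed after a fixed number of iterations.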

Methods for the Address-Based Sample

The sampling frame for the ABS was drawn from a database of addresses provided by the sample vendor MSG. The MSG database is derived from the Computerized Delivery Sequence File (CDSF), which is a list of addresses from the United States Postal Service (USPS). The CDSF is estimated to cover approximately 98 percent of all households in the country. All nonvacant residential addresses in the United States present on the MSG database, including post office (P.O.) boxes; throwbacks (i.e., street addresses for which mail is redirected by the USPS to a specified P.O. box); and seasonal addresses were considered eligible.

Data collection began on May 4 and ended October 6, 2020. As with the RDD, data collection was conducted in two phases. Phase 1 began on May 4 and NRFU began on August 17, 2020. Unlike RDD, data collection for the two phases continued concurrently and both ended on October 6, 2020.

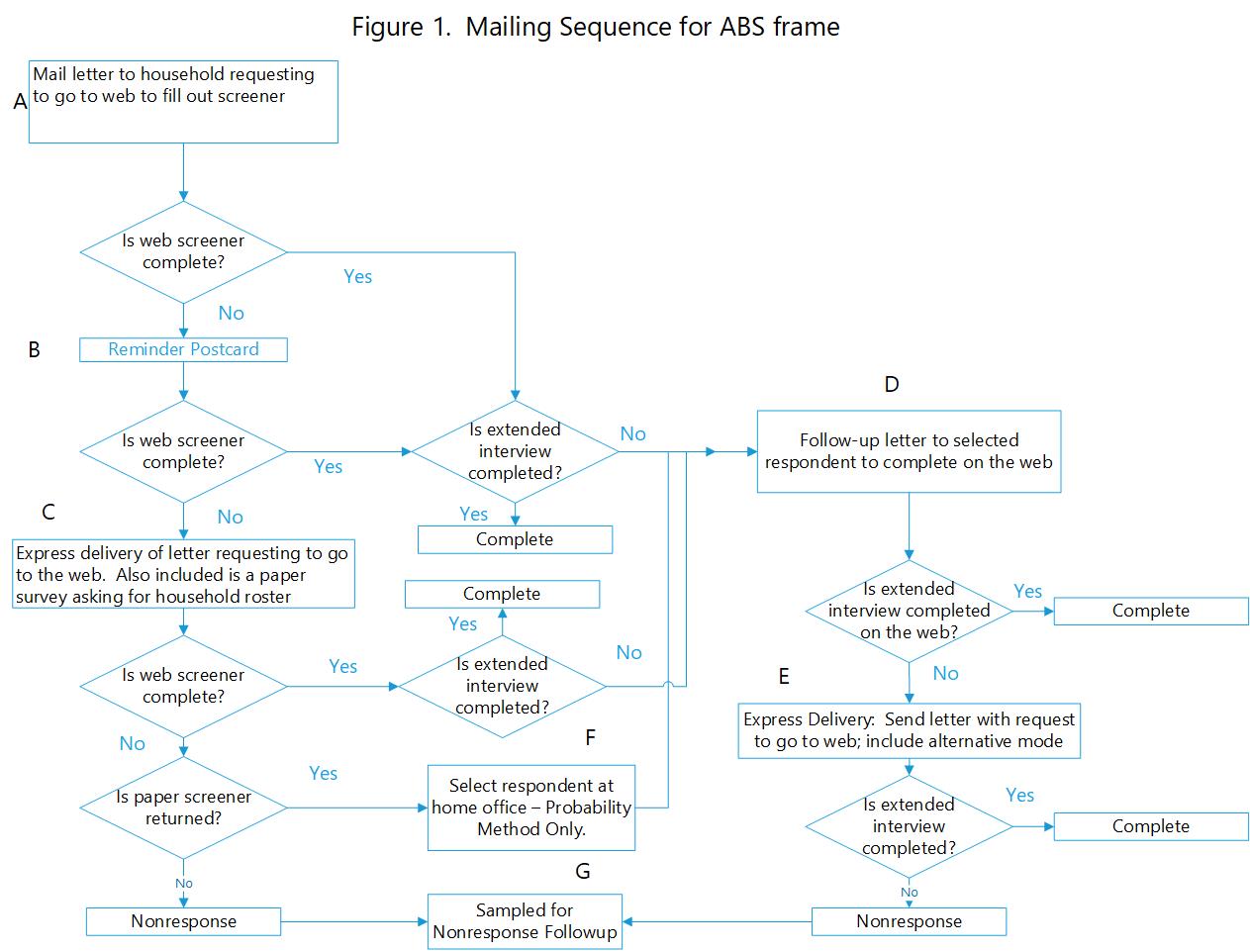

The procedures for the ABS frame are shown in Figure 2-1. The first step was to send a letter asking an adult to complete the screening survey on the web (Figure 2-1 - Box A). The letter contained a monetary incentive of $5 cash and a promised $10 Amazon gift code upon completing the web screener. Letters included a unique PIN for each household and the URL to launch the survey. The letter also included a helpdesk toll-free number for any questions about the study. The web screener included questions about the household needed to select an individual to be the respondent for the NISVS survey. If the person selected for the extended interview was the screener respondent, then that person was instructed to proceed directly to the extended interview. If the screener respondent was not selected for the extended survey, the screener respondent was instructed to ask the selected adult to log in to the website and complete the survey.

A reminder postcard was sent approximately one week after the first mailing. If the screener was not completed, a letter was sent by express delivery asking the respondent to either fill out the screener on the web or complete a paper version of the screener (Figure 2-1 – Box C). If the web screener was still not completed and the paper screener was not returned after this mailing, the household was considered for subsampling for the nonresponse follow-up (Figure 2-1 – Box G).

Additional follow-up contacts were attempted for those households that completed the screener, but the selected respondent did not complete the extended interview. This included two groups. One group was those who completed the web screener, but there was no response for the extended survey. The second group was those who returned the paper screener. For this second group, Westat home office staff selected a respondent from the household roster (Figure 2-1 – Box F). In the case of both of these groups a follow-up letter was sent inviting the selected individual to complete the survey on the web (Figure 2-1 – Box D). The person was promised an incentive of $15 to complete the survey. If there was no response, a letter was sent express delivery to the selected respondent (Figure 2-1 – Box E), asking the person to complete the extended survey by web or by an alternative mode. Half of these were given the choice between the web and a paper version of the questionnaire. The other half were given the choice between the web or to call in and complete the survey over the phone.

To provide the incentives for those who completed the survey on the web, an Amazon gift code was provided at the end of the survey. If respondents completed the survey by paper or computer-assisted telephone interviewing (CATI), a letter thanking them for completing the survey and the cash incentive were mailed by USPS First Class.

Once all attempts were exhausted, a subsample of the nonrespondents was selected. These nonrespondents were contacted using the same procedures as described above but were offered a larger incentive ($40 for completing the survey by web or CATI; $30 for completing it by paper).

Fifty percent of the screener nonrespondents were subsampled for NRFU. Those who were selected received a letter encouraging them to go to the web to complete the screener or complete the enclosed paper screener. The mailing also included a $5 cash prepaid incentive, but there were no promised incentives for completing the screener whether by web or by paper.

Additionally, 50 percent of the extended survey nonrespondents who completed the Phase 1 protocol were selected for NRFU3. This group was split into two mode options: web/paper or web/CATI. They were offered $40 for completing the extended survey on the web or on the phone and offered $30 for completing it by paper.

The incentives provided at each stage for each frame are shown in Tables 2-1 and 2-2.

Table 2-1. Incentives for the screener by frame and NRFU status

| | ABS | RDD |
|---|---|---|
| With first invitation letter | $5 Cash | $2* Cash |
| Complete by web | $10 AC | NA |
| Complete by paper | $5 CM | NA |
| NRFU with Invitation Letter | $5 Cash | NA |

NA – Not applicable.

NRFU - Nonresponse follow-up.

AC - Amazon code available online after completing survey.

CM - Cash sent by mail after completing survey.

* Sent to landline telephone numbers where an address was found.

Table 2-2. Incentives for the extended interview by frame, mode and NRFU status

| | ABS: Web | ABS: CATI | ABS: Paper | RDD: CATI |
|---|---|---|---|---|
| Complete | $15 AC | $15 CM | $5 CM | $10 CM |
| NRFU - Complete | $40 AC | $40 CM | $30 CM | $40 CM |

NRFU - Nonresponse follow-up.

AC - Amazon code available online after completing survey.

CM - Cash sent by mail after completing survey.

Figure 2-1. Mailing sequence for ABS frame

The weights for the ABS were first computed by calculating the probability that a respondent was selected into the sample. The weights were then adjusted for nonresponse, with a final raking adjustment using the same characteristics as those for the RDD except for telephone status (sex, age, race-ethnicity, marital status, and education).4

Variance Estimation and Significance Test

To compute standard errors, a stratified jackknife (JK2) variance estimator5 was used. This involves creating a set of replicate weights by applying to each replicate the same adjustments made to the full sample weight. The JK2 method has good statistical properties. The analysis uses the JK2 method with 100 replicates.
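As a rough illustration of how replicate weights turn into a standard error, the sketch below assumes the commonly used JK2 form, in which the variance is the sum of squared deviations of the replicate estimates from the full-sample estimate with a multiplier of 1. The function name and the numbers are hypothetical; the study used 100 replicates, each with its own fully adjusted weight.

```python
import math


def jk2_standard_error(full_estimate, replicate_estimates):
    """Standard error from JK2 replicate estimates.

    Assumes the usual JK2 variance form:
        v = sum over replicates of (replicate estimate - full estimate)^2
    with no additional scaling factor (an assumption of this sketch).
    """
    variance = sum((rep - full_estimate) ** 2 for rep in replicate_estimates)
    return math.sqrt(variance)


# Hypothetical example with only two replicates, for readability.
se = jk2_standard_error(10.0, [10.3, 9.6])
print(se)
```

Each replicate estimate would be computed by re-running the same estimator (for example, a weighted prevalence) with that replicate's weight in place of the full sample weight.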

For purposes of the discussion in the report, we have selectively used the standard errors to test for significant differences. These tests were carried out using a two-sample z-test. The discussion generally makes the distinction between differences that are statistically significant at least at the 5 percent level (two-tailed test) and those that are different but do not reach statistical significance.
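A minimal sketch of a two-sample z-test of this kind is shown below. It assumes the two estimates come from independent samples, so that the variance of the difference is the sum of the two squared standard errors. The function name and the input values are hypothetical and are not taken from the study's results.

```python
import math


def two_sample_z_test(est1, se1, est2, se2):
    """Two-tailed z-test for the difference between two independent estimates.

    Returns (z, p), where p is the two-tailed p-value under a standard
    normal reference distribution.
    """
    z = (est1 - est2) / math.sqrt(se1 ** 2 + se2 ** 2)
    # For a standard normal Z, P(|Z| > |z|) = erfc(|z| / sqrt(2)).
    p = math.erfc(abs(z) / math.sqrt(2))
    return z, p


# Hypothetical estimates (percent) and standard errors.
z, p = two_sample_z_test(10.0, 3.0, 6.0, 4.0)
print(z, p)
significant_at_5_percent = p < 0.05
```

With estimates and JK2 standard errors for the RDD and ABS samples, the same function would give the kind of p-value reported in the prevalence tables.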

3. What Are Response Rates for RDD and ABS Surveys?

Summary

Definition of a Completed Survey

The definition of a complete and partial complete survey was different across collection modes. For surveys completed by CATI and paper, a partial complete was defined as any survey that was completed through the stalking questions but did not qualify for a full complete. The stalking section is the first violence section. A full completed interview was any survey that had responses through the consequences section, the last section asking about violent victimization. Both full and partial completes from the web, CATI, and paper surveys were used in the final weighting and in the analyses for this report. There were a total of 3,526 ABS and 1,461 RDD completes and partial completes (Table 3-4).6

A different definition of a complete and partial was used for the web survey. This was in recognition that web respondents tend to drop out of the survey more often than those completing by CATI or paper. There also tends to be more item-missing data on the web. For the web, if a partial survey was defined as early as completion of the stalking section, there would be a significant amount of missing data for measures that rely on later sections of the questionnaire. Of particular concern were the sections asking about rape and made to penetrate, which come relatively late in the survey. These definitions also considered a minimum level of data quality, such as providing data for the basic measures used on NISVS.

A web survey was considered a full complete if it had responses through the consequences section and met the following criteria:

There had to be substantive answers to at least half of the items that screen for lifetime victimization.

The respondent had to correctly answer at least one of the two questions designed to catch those who were not paying attention. One of these items was placed at the beginning of the questionnaire, in the Health section, which asked the respondent how carefully they were reading the survey:

[SAT1] I am reading this survey carefully.

Yes 1

No 2

The second item is in the last section of the survey. This instructed the respondent to select a particular response category.

[SAT2] We just want to see if you are still awake. Please select “Neutral” to this question.

Strongly Disagree 1

Disagree 2

Neutral 3

Agree 4

Strongly Agree 5

A partial complete was a web survey that met all of the following criteria:

The respondent provided answers through the rape/made to penetrate section, but did not get through the consequences section.

There were substantive answers to at least half of the items that screen for lifetime victimization.

The respondent correctly answered at least one of the two questions designed to catch those who were not paying attention.
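The web completion rules above can be summarized in a short sketch. The class and field names are hypothetical and are not taken from the study's processing code; the logic simply restates the criteria for full and partial web completes.

```python
from dataclasses import dataclass


@dataclass
class WebResponse:
    """Hypothetical summary of one web survey for classification purposes."""
    through_rape_mtp: bool       # answered through the rape/made to penetrate section
    through_consequences: bool   # answered through the consequences section
    screen_items_answered: int   # lifetime screening items with substantive answers
    screen_items_total: int      # lifetime screening items asked
    sat1_correct: bool           # reported reading the survey carefully
    sat2_correct: bool           # selected "Neutral" as instructed


def classify_web(resp):
    """Return 'full', 'partial', or 'not complete' for a web survey."""
    # Quality criteria shared by full and partial completes.
    enough_answers = resp.screen_items_answered * 2 >= resp.screen_items_total
    paid_attention = resp.sat1_correct or resp.sat2_correct
    if not (enough_answers and paid_attention):
        return "not complete"
    if resp.through_consequences:
        return "full"
    if resp.through_rape_mtp:
        return "partial"
    return "not complete"


example = WebResponse(True, False, 12, 20, True, False)
print(classify_web(example))
```

A respondent who fails both attention checks is classified as not complete regardless of how far they got, which matches the one case removed from the analytic dataset.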

There were relatively few people who failed the two questions designed to check on attention. In total, there were six individuals who marked not reading the survey carefully on SAT1. There were 20 who did not pick the neutral category in SAT2. Only one respondent picked both “not reading carefully” and a non-neutral category. This one individual was taken out of the analytic dataset (n=3,526, Table 3-4).

Survey Results

1. For the RDD sample, the total number of phone numbers purchased was 58,838 (Table 3-1). Approximately half of these were taken out after pre-screening. A total of 27,476 were dialed.

2. Response rates were calculated using American Association for Public Opinion Research (AAPOR) Response Rate 4 (RR4) (Tables 3-2 and 3-3). They include both the Phase 1 and the NRFU stages of the surveys. The response rate is weighted to account for the sampling at the NRFU stage.

3. The overall ABS response rate was 33.1 percent (Table 3-3). The screener response rate was 50.3 percent and the extended response rate was 65.3 percent.

4. The RDD response rate was 10.8 percent. The landline sample had a response rate of 18.4 percent compared to the cellphone sample with a rate of 8.5 percent.

5. For RDD, there is a large difference in the response rate between landline and cell at the screener level (28.5% for landline vs. 14.9% for cellphone). A large percentage of the RDD nonresponse is in the unknown category (UH), primarily from no one answering the phone (58%). The second biggest category is refusals (21%).

6. For the ABS, many of those who dropped out did so at the beginning of the survey (Table 3-4). There were also increases in dropouts at the sexual harassment section and the first section on rape.

7. For the ABS sample, a large portion of the surveys were completed on the web (3,306 out of 3,526, or 93.7%; data not shown). There were 187 completed by paper and 33 by call-in CATI.

Table 3-1. Disposition of sampled telephone numbers

| | Frame: Landline | Frame: Cell | Frame: Combined |
|---|---|---|---|
| Total numbers purchased | 29,158 | 29,680 | 58,838 |
| Ineligible pre-screened out | 23,015 | 8,347 | 31,362 |
| Nonresidential | 2,014 | | |
| Nonworking | 20,663 | | |
| Inactive and Unknown | | 8,347 | |
| LL ported to CP | 338 | | |
| Total numbers released | 6,143 | 21,333 | 27,476 |
| Phone numbers released in predictor and main | 4,143 | 13,333 | 17,476 |
| Phone numbers in reserve | 2,000 | 8,000 | 10,000 |
| Total dialed | 6,143 | 21,333 | 27,476 |

Table 3-2. ABS and RDD survey results by final disposition codes*

| Classification | ABS: Screener | ABS: Extended | ABS: Overall | RDD overall: Screener | RDD overall: Extended | RDD overall: Overall | RDD landline: Screener | RDD landline: Extended | RDD landline: Overall | RDD cell: Screener | RDD cell: Extended | RDD cell: Overall |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| I: Complete | 5,765 | 3,630 | 3,630 | 3,014 | 1,606 | 1,606 | 872 | 500 | 500 | 2,141 | 1,106 | 1,106 |
| P: Partial | 0 | 131 | 131 | 0 | 132 | 132 | 0 | 42 | 42 | 0 | 90 | 90 |
| R: Refusal | 50 | 183 | 233 | 4,465 | 500 | 4,965 | 762 | 146 | 908 | 3,703 | 354 | 4,057 |
| O: Other Nonresponse | 1 | 0 | 1 | 1,227 | 247 | 1,474 | 164 | 70 | 234 | 1,063 | 176 | 1,240 |
| NC: Noncontact | 0 | 1,814 | 1,814 | 0 | 33 | 33 | 0 | 6 | 6 | 0 | 27 | 27 |
| UO: Unknown Other | 5,996 | 0 | 5,996 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| UH: No answer/call blocking | 0 | 0 | 0 | 13,593 | 458 | 14,051 | 2,612 | 93 | 2,705 | 10,982 | 365 | 11,346 |
| SO: Ineligible | 755 | 7 | 762 | 229 | 4 | 233 | 3 | 0 | 3 | 226 | 4 | 230 |

* Data weighted to account for the subsampling at the nonresponse follow-up. RDD counts exclude those numbers taken out in the pre-screening.

Table 3-3. Response, cooperation, refusal, contact, and yield rates for ABS and RDD samples

| Preliminary measures | ABS: Screener | ABS: Extended | ABS: Overall | RDD overall: Screener | RDD overall: Extended | RDD overall: Overall | RDD landline: Screener | RDD landline: Extended | RDD landline: Overall | RDD cell: Screener | RDD cell: Extended | RDD cell: Overall |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Response Rate AAPOR RR4 | 50.3% | 65.3% | 33.1% | 18.1% | 58.5% | 10.8% | 28.5% | 63.3% | 18.4% | 14.9% | 56.6% | 8.5% |
| Cooperation Rate: (I+P)/(I+P+R+O) | 99.1% | 95.3% | 94.1% | 40.3% | 77.7% | 25.9% | 53.4% | 78.8% | 37.4% | 36.6% | 77.2% | 22.8% |
| Refusal Rate: R/((I+P)+(R+NC+O)+UH+UO) | 0.4% | 3.2% | 2.0% | 25.6% | 16.8% | 29.4% | 24.9% | 17.0% | 30.7% | 25.8% | 16.8% | 29.0% |
| Contact Rate: ((I+P)+R+O)/((I+P)+R+O+NC+(UH+UO)) | 49.2% | 68.5% | 33.8% | 50.6% | 83.7% | 48.8% | 58.7% | 88.6% | 57.0% | 48.2% | 81.7% | 46.4% |
| Yield Rate: I/Released Cases | 45.9% | 63.0% | 28.9% | 11.0% | 53.3% | 5.8% | 13.8% | 57.3% | 7.9% | 10.1% | 51.6% | 5.2% |

For ABS:

Response Rate 4 = (I+P)/[(I+P) + (R+NC+O) + e(UH+UO)]

e calculated using methods in DeMatteis, J. (2019). Computing “e” in self-administered address-based sampling studies. Survey Practice, 12(1). Available at: https://www.surveypractice.org/api/v1/articles/8282-computing-e-in-self-administered-address-based-sampling-studies.pdf

For RDD:

Response Rate 4 = (I+P)/[(I+P+R+NC+O) + (e1 × UO) + (e1 × e2 × UH)]

e1 is the proportion calculated as the number of eligibles divided by the number of eligibles and known ineligibles for the household screener. e2 is the same proportion but for the extended interview.

Overall response rate calculated by combining the cell and landline samples in proportion to the numbers that were dialed for each frame (23% for landline; 77% for cell).
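The response rate formulas in the notes above can be written out as a short sketch. The function names are hypothetical, and the eligibility factors (e, e1, e2) must be supplied by the caller because their values are not given here. The last function reproduces the overall RDD rate in Table 3-3 from the landline and cell rates, using the 23/77 split stated in the note.

```python
def rr4_abs(i, p, r, nc, o, uh, uo, e):
    """RR4 as written for the ABS sample; e discounts cases of unknown eligibility."""
    return (i + p) / ((i + p) + (r + nc + o) + e * (uh + uo))


def rr4_rdd(i, p, r, nc, o, uh, uo, e1, e2):
    """RR4 as written for the RDD sample, with separate factors e1 and e2."""
    return (i + p) / ((i + p + r + nc + o) + e1 * uo + e1 * e2 * uh)


def combine_frames(landline_rate, cell_rate, landline_share=0.23, cell_share=0.77):
    """Combine frame-level rates in proportion to the numbers dialed in each frame."""
    return landline_share * landline_rate + cell_share * cell_rate


# Overall RDD response rate from the landline (18.4%) and cell (8.5%) rates.
overall_rdd = combine_frames(18.4, 8.5)
print(round(overall_rdd, 1))  # 10.8
```

The combined value rounds to the 10.8 percent reported for the overall RDD sample.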

Table 3-4. Last section completed for RDD and ABS by completion status X

| Section | RDD: Total | RDD: Complete or Partial | RDD: Not complete | ABS: Total | ABS: Complete or Partial | ABS: Not complete+ |
|---|---|---|---|---|---|---|
| Health characteristics | 23 | 0 | 23 | 188 | 0 | 188 |
| Stalking | 27 | 0 | 27 | 72 | 0 | 72 |
| Sexual harassment, unwanted touching | 50 | 50 | 0 | 138 | 0 | 138 |
| Completed alcohol/drug-facilitated rape and made to penetrate | 24 | 24 | 0 | 87 | 0 | 87 |
| Unwanted sex due to threats of harm/physical force | 3 | 3 | 0 | 67 | 1 | 66 |
| Attempted physically forced sex | 12 | 12 | 0 | 26 | 1 | 25 |
| Sexual violence: outcomes of rape and made to penetrate | 0 | 0 | 0 | 2 | 2 | 0 |
| Psychological aggression | 4 | 4 | 0 | 48 | 48 | 0 |
| Physical violence | 7 | 7 | 0 | 32 | 31 | 1 |
| Consequences and follow-up | 1 | 1 | 0 | 16 | 14 | 2 |
| Debriefing questions | 5 | 4 | 0 | 103 | 76 | 27 |
| Completed all applicable questions | 1,356 | 1,356 | 0 | 3,409 | 3,353 | 56 |
| Total | 1,512 | 1,461 | 50 | 4,188 | 3,526 | 662 |

X Unweighted counts

+ Surveys that are not complete but dropped out after attempted forced sex are those that did not meet the other criteria related to completed survey (e.g., too much missing data; did not answer trap questions correctly).

4. How Do Prevalence Estimates Compare Between ABS and RDD Surveys?

Summary

Lifetime Prevalence Estimates

1. Estimates for the total population for all types of victimization are higher for RDD than ABS. All but one of the differences are statistically significant at least at the 5 percent level (Table 4-1). The one exception is for stalking by an intimate partner.

2. For the total population, most of the ABS estimates range from 58 percent to 75 percent of the RDD estimate. For example, the ratios of the ABS to RDD estimates for unwanted touching, sexual coercion, and rape are .58, .66, and .65, respectively. The ratio gets somewhat larger for victimizations involving intimate partner violence, ranging from .73 to .80.

3. The pattern is similar for the sex-specific estimates (Table 4-2). All ABS estimates are nominally lower than RDD estimates. Many of the differences are statistically significant at least at the 5 percent level.

4. For females, all but one of the comparisons between ABS and RDD are significant at least at the 5 percent level. Using the ratio of ABS to RDD, the biggest difference is for unwanted touching, where the ABS estimate is about 60 percent of the RDD estimate (35.0% vs. 58.5%). The ratios of the ABS to RDD estimates for other types of contact sexual violence are .65 (sexual coercion) and .62 (rape).

5. For males, while all ABS estimates are lower than RDD, not all differences are statistically significant. Part of this may be the lower estimates for males, which require larger sample sizes to have the power to detect statistical significance.

6. For females, the differences between ABS and RDD are larger for contact sexual violence/stalking relative to intimate partner victimizations of the same type. Ratios of ABS to RDD for contact sexual violence/stalking are between .60 and .66, while they are between .74 and .85 for intimate partner victimizations. It is more mixed for males, with no clear pattern.

7. For both RDD and ABS, the estimates for females are generally higher than for males. Many differences between males and females are statistically significant, most well beyond the 5 percent level (test not shown). For RDD there are two exceptions to this. The male RDD estimate for intimate partner physical violence (IPPV) is about the same as for females (33.7% vs. 33.8%). The estimate for male IPPA is less than for females, but it is not statistically different (44.4% vs. 49.6%; p>.10).
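The ABS-to-RDD ratios quoted for the total population can be reproduced directly from the lifetime prevalence estimates in Table 4-1. The sketch below is only an arithmetic check; the dictionary and function names are hypothetical.

```python
# Lifetime prevalence estimates (percent) copied from Table 4-1: (RDD, ABS).
table_4_1 = {
    "Unwanted touching": (41.8, 24.4),
    "Sexual coercion": (18.4, 12.1),
    "Rape": (18.1, 11.7),
}


def abs_to_rdd_ratio(rdd_estimate, abs_estimate):
    """Ratio of the ABS estimate to the RDD estimate."""
    return abs_estimate / rdd_estimate


ratios = {
    measure: round(abs_to_rdd_ratio(rdd, abs_est), 2)
    for measure, (rdd, abs_est) in table_4_1.items()
}
print(ratios)  # {'Unwanted touching': 0.58, 'Sexual coercion': 0.66, 'Rape': 0.65}
```

The same calculation applied to the sex-specific estimates in Table 4-2 gives the ratios cited for females, for example 35.0/58.5 for unwanted touching.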

12-Month Prevalence Estimates

8. For the total population 12-month prevalence estimates, there is not a consistent difference between ABS and RDD (Table 4-3). RDD is larger for some of the estimates, but only one difference reaches statistical significance (unwanted touching: 4.0% vs. 2.3%; p<.05). For several types of victimization, the ABS estimate is larger, but the differences do not reach statistical significance. The 12-month estimates are generally less than 5 percent, which reduces the statistical power when testing for differences, relative to lifetime estimates. However, with a few exceptions, the nominal differences between the estimates are not large.

9. The estimates for males are generally larger for RDD than for ABS (Table 4-4), with a few differences reaching, or approaching, statistical significance, including unwanted touching (3.2% vs. 1.1%; p<.06) and IPPA (6.6% vs. 3.6%; p<.05).

10. For females, none of the differences between ABS and RDD are statistically significant. The differences are not consistently in one direction.

11. The relationship between sex and victimization differs between ABS and RDD. There is a strong relationship for ABS. All ABS estimates for females are higher than for males. All differences are statistically significant, except for IPPV (3.2% vs. 2.0%; p<.10; significance test not shown). For RDD, males have higher estimates than females for IPPV and IPPA. All other RDD estimates are higher for females, although not all of these differences are statistically significant.

Table 4-1. Lifetime prevalence estimates for selected measures of sexual, physical, and emotional abuse*

| | RDD: Number of victims | RDD: Prevalence estimate | ABS: Number of victims | ABS: Prevalence estimate | Sig. testing p‑value |
|---|---|---|---|---|---|
| Contact sexual violence | 113,677,147 | 47.1 | 73,337,937 | 30.4 | 0.000 |
| Unwanted touching | 100,827,179 | 41.8 | 58,266,012 | 24.4 | 0.000 |
| Sexual coercion | 44,361,636 | 18.4 | 28,932,456 | 12.1 | 0.000 |
| Rape | 43,781,903 | 18.1 | 28,281,935 | 11.7 | 0.000 |
| Made to penetrate+ | n/a | n/a | n/a | n/a | n/a |
| Stalking | 33,892,785 | 14.0 | 22,136,378 | 9.2 | 0.000 |
| Intimate partner violence | 94,033,171 | 38.9 | 69,125,448 | 28.6 | 0.000 |
| Contact sexual violence by intimate partner | 35,373,223 | 14.6 | 28,514,862 | 11.8 | 0.050 |
| Stalking by intimate partner | 16,253,714 | 6.7 | 12,417,282 | 5.2 | 0.114 |
| Intimate partner physical violence | 81,443,393 | 33.7 | 54,232,032 | 22.7 | 0.000 |
| Intimate partner psychological aggression | 113,621,584 | 47.0 | 79,080,932 | 33.1 | 0.000 |

n/a – Not applicable. Not asked of female respondents

* Combines estimates for males and females

+ Made to penetrate only asked of males.

Table 4-2. Lifetime prevalence estimates for selected measures of sexual, physical, and emotional abuse by sex+

| | Males, RDD: Number of victims | Males, RDD: Prevalence estimate (%) | Males, ABS: Number of victims | Males, ABS: Prevalence estimate (%) | Males: p-value | Females, RDD: Number of victims | Females, RDD: Prevalence estimate (%) | Females, ABS: Number of victims | Females, ABS: Prevalence estimate (%) | Females: p-value |
|---|---|---|---|---|---|---|---|---|---|---|
| Contact sexual violence | 36,028,444 | 30.3 | 20,215,501 | 17.5 | 0.000 | 77,648,703 | 63.3 | 53,122,435 | 42.2 | 0.000 |
| Unwanted touching | 29,097,986 | 24.5 | 14,723,766 | 12.9 | 0.000 | 71,729,193 | 58.5 | 43,542,246 | 35.0 | 0.000 |
| Sexual coercion | 10,832,375 | 9.1 | 6,609,717 | 5.8 | 0.066 | 33,529,261 | 27.3 | 22,322,739 | 17.9 | 0.000 |
| Rape | 5,025,974 | 4.2 | 3,638,372 | 3.1 | 0.261 | 38,755,929 | 31.6 | 24,643,563 | 19.7 | 0.000 |
| Made to penetrate | 8,802,790 | 7.4 | 5,831,723 | 5.0 | 0.130 | n/a | n/a | n/a | n/a | n/a |
| Stalking | 8,427,334 | 7.1 | 5,615,161 | 4.9 | 0.104 | 25,465,451 | 20.8 | 16,521,217 | 13.2 | 0.001 |
| Intimate partner violence | 42,182,620 | 35.5 | 23,853,762 | 20.6 | 0.000 | 51,850,551 | 42.3 | 45,271,686 | 36.0 | 0.032 |
| Contact sexual violence by intimate partner | 7,030,991 | 5.9 | 5,968,605 | 5.2 | 0.343 | 28,342,232 | 23.1 | 22,546,256 | 17.9 | 0.051 |
| Stalking by intimate partner | 4,214,824 | 3.5 | 2,493,065 | 2.2 | 0.168 | 12,038,890 | 9.8 | 9,924,217 | 7.9 | 0.203 |
| Intimate partner physical violence | 40,021,937 | 33.7 | 20,896,232 | 18.3 | 0.000 | 41,421,457 | 33.8 | 33,335,799 | 26.8 | 0.009 |
| Intimate partner psychological aggression | 52,770,530 | 44.4 | 33,525,608 | 29.2 | 0.000 | 60,851,053 | 49.6 | 45,555,325 | 36.7 | 0.000 |

n/a – Not applicable. Not asked of female respondents.

+ p-value is the result from a two-sample difference of means z-test.

Table 4-3. 12-month prevalence estimates for selected measures of sexual, physical, and emotional abuse+#

| | RDD: Number of victims | RDD: Prevalence estimate (%) | ABS: Number of victims | ABS: Prevalence estimate (%) | p-value+ |
|---|---|---|---|---|---|
| Contact sexual violence | 12,725,988 | 5.3 | 11,672,415 | 4.8 | 0.353 |
| Unwanted touching | 9,570,647 | 4.0 | 5,601,152 | 2.3 | 0.042 |
| Sexual coercion | 5,065,328 | 2.1 | 6,025,207 | 2.5 | 0.318 |
| Rape | 2,621,355 | 1.1* | 2,824,323 | 1.2 | 0.391 |
| Made to penetrate (x) | 535,783 | 0.5* | 705,739 | 0.6* | 0.379 |
| Stalking | 8,044,867 | 3.3* | 7,052,414 | 2.9 | 0.347 |
| Intimate partner violence | 12,183,906 | 5.0 | 11,863,637 | 4.9 | 0.395 |
| Contact sexual violence by intimate partner | 4,332,759 | 1.8* | 5,696,394 | 2.4 | 0.245 |
| Stalking by intimate partner | 3,272,433 | 1.4* | 2,561,998 | 1.1 | 0.339 |
| Intimate partner physical violence | 8,153,303 | 3.4 | 6,298,749 | 2.6 | 0.256 |
| Intimate partner psychological aggression | 13,907,769 | 5.8 | 11,707,172 | 4.9 | 0.259 |

# Estimates combine males and females

* CV>30%

+ p-value is the result from a two-sample difference of means z-test.

x Made to penetrate only asked of males.

Table 4-4. 12-month prevalence estimates for selected measures of sexual, physical, and emotional abuse by sex+

| | Males, RDD: Number of victims | Males, RDD: Prevalence estimate (%) | Males, ABS: Number of victims | Males, ABS: Prevalence estimate (%) | Males: p-value | Females, RDD: Number of victims | Females, RDD: Prevalence estimate (%) | Females, ABS: Number of victims | Females, ABS: Prevalence estimate (%) | Females: p-value |
|---|---|---|---|---|---|---|---|---|---|---|
| Contact sexual violence | 4,275,526 | 3.6 | 2,409,339 | 2.1 | 0.159 | 8,450,462 | 6.9 | 9,263,076 | 7.4 | 0.381 |
| Unwanted touching | 3,813,563 | 3.2* | 1,314,381 | 1.1 | 0.058 | 5,757,084 | 4.7 | 4,286,771 | 3.4 | 0.246 |
| Sexual coercion | 1,151,793 | 1.0* | 656,891 | 0.6* | 0.315 | 3,913,535 | 3.2 | 5,368,316 | 4.3 | 0.251 |
| Rape | 189,379 | 0.2* | 452,275 | 0.4* | 0.208 | 2,431,975 | 2.0* | 2,372,049 | 1.9 | 0.397 |
| Made to penetrate | 535,783 | 0.5* | 705,739 | 0.6* | 0.375 | n/a | n/a | n/a | n/a | n/a |
| Stalking | 2,532,928 | 2.1* | 2,132,029 | 1.9 | 0.380 | 5,511,940 | 4.5 | 4,920,385 | 3.9 | 0.353 |
| Intimate partner violence | 5,495,404 | 4.6 | 3,265,312 | 2.8 | 0.131 | 6,688,503 | 5.5 | 8,598,325 | 6.8 | 0.249 |
| Contact sexual violence by intimate partner | 1,658,230 | 1.4* | 796,062 | 0.7 | 0.228 | 2,674,529 | 2.2* | 4,900,331 | 3.9 | 0.089 |
| Stalking by intimate partner | 798,304 | 0.7* | 392,260 | 0.3* | 0.318 | 2,474,129 | 2.0 | 2,169,738 | 1.7 | 0.379 |
| Intimate partner physical violence | 4,854,894 | 4.1 | 2,332,904 | 2.0 | 0.083 | 3,298,409 | 2.7 | 3,965,845 | 3.2 | 0.349 |
| Intimate partner psychological aggression | 7,828,448 | 6.6 | 4,108,231 | 3.6 | 0.035 | 6,079,320 | 5.0 | 7,598,941 | 6.1 | 0.265 |

n/a – Not applicable. Not asked of female respondents.

* CV>30%

+ p-value is the result from a two-sample difference of means z-test.
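The table footnotes refer to a two-sample difference of means z-test. A minimal sketch of that kind of test is shown here for orientation only. The function name, the standard errors, and the choice of a two-sided p-value are assumptions, since the tables report neither standard errors nor the sidedness of the test, so this sketch is not expected to reproduce the published p-values.

```python
import math

def two_sample_z(est1, se1, est2, se2=0.0):
    """Two-sample z-test for the difference between two estimates.

    est1, est2: point estimates (for example, prevalence in percent).
    se1, se2:   their standard errors (hypothetical inputs here).
    se2=0.0 treats the second estimate as a fixed benchmark with no
    sampling error.
    Returns (z, two-sided p-value); two-sided is an assumption.
    """
    z = (est1 - est2) / math.sqrt(se1 ** 2 + se2 ** 2)
    # erfc(|z| / sqrt(2)) equals the two-sided normal tail probability.
    p = math.erfc(abs(z) / math.sqrt(2))
    return z, p

# Hypothetical illustration: two estimates of 30% and 24%, each with SE 2.
z, p = two_sample_z(30.0, 2.0, 24.0, 2.0)
print(round(z, 3), round(p, 3))
```

In practice the standard errors would come from the survey's variance estimation procedure rather than from assumed values.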

5. How Do ABS and RDD Compare with Respect to Nonresponse Bias?

Summary

ABS. The most significant differences were for females in the ABS sample, where the NRFU estimates were higher than the Phase 1 estimates. Interpreted as a measure of nonresponse bias, this indicates a negative bias (estimates are too low) for all types of victimization. There was no indication of bias for males.

RDD. For males, the Phase 1 estimates were lower than the NRFU estimates for selected measures. This is indicative of a negative bias for unwanted touching, sexual coercion, rape, IPPV, and IPPA. For females, the Phase 1 estimates were higher than the NRFU estimates for selected measures. This is indicative of a positive bias for contact sexual violence by intimate partner and stalking by intimate partner.

This chapter assesses nonresponse bias (NRB) using four different methods: 1) comparing demographic estimates to national benchmarks, 2) comparing health indicators to the NHIS, 3) comparing key NISVS outcome measures by the amount of effort needed to complete the survey, and 4) comparing estimates to prior NISVS data.

Comparing Demographic and Socioeconomic Characteristics to National Benchmarks

One method to assess NRB is to compare the distribution of key demographic and socioeconomic indicators from the survey to national benchmarks before the full population weights are applied. The extent to which these characteristics differ from those of the national population is an indication of possible NRB.

Table 5-1 provides this comparison for age, sex, marital status, race-ethnicity, education, income, born in the U.S., access to internet, and homeownership. For these comparisons, the ABS and RDD surveys were weighted to reflect their probabilities of selection. For ABS this consists of weighting for selection within the household and selection for the NRFU. For RDD this adjustment had three components: 1) for the landline sample an adjustment was made for selection within the household; 2) for both frames an adjustment for selection for the NRFU; and 3) for both frames, an adjustment for the probability of selection into the landline or cellphone frame.

Many of the above demographic characteristics were used when raking to control totals to compute the final population weights. Comparisons using the above selection weights provide a profile of respondents before the totals are forced to equal the control totals used in the raking, while still controlling for the differential probabilities of selection built into the different survey designs.
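The selection weights described above are inverse probabilities of selection. The sketch below illustrates the idea under stated assumptions: the function name and all probabilities are hypothetical, and it assumes one adult is chosen with equal probability from the eligible adults in a household. It is not the study's weighting code.

```python
def selection_weight(p_frame=1.0, n_eligible_adults=1, p_nrfu=1.0):
    """Base weight as the inverse of the overall selection probability.

    p_frame:           probability of selection into the frame sample
                       (landline or cellphone for RDD); hypothetical value.
    n_eligible_adults: eligible adults in the household; one is assumed to
                       be selected at random. Use 1 when no within-household
                       selection applies.
    p_nrfu:            probability of being subsampled for the nonresponse
                       follow-up (NRFU); 1.0 if not subsampled.
    """
    if not (0.0 < p_frame <= 1.0 and 0.0 < p_nrfu <= 1.0):
        raise ValueError("probabilities must be in (0, 1]")
    if n_eligible_adults < 1:
        raise ValueError("need at least one eligible adult")
    p_within = 1.0 / n_eligible_adults
    return 1.0 / (p_frame * p_within * p_nrfu)

# Hypothetical case: two eligible adults, NRFU subsampled at a rate of 1 in 2.
print(selection_weight(n_eligible_adults=2, p_nrfu=0.5))
```

Each factor corresponds to one of the adjustments listed in the text, so a component that does not apply to a given case is simply left at its default of 1.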

1. Both the ABS and RDD surveys were close to the American Community Survey (ACS) distribution by sex and income (Table 5-1). The RDD survey had slightly more males than the national benchmark (50.0% vs. 48.7%; p=.288), while the ABS had slightly fewer (47.1% vs. 48.7%; p=.076). With respect to income, both samples are close to the national distribution as measured by the ACS.

2. Both surveys underrepresent individuals who have low education. Both the RDD and ABS surveys are well below the national benchmark for those with a high school education or less (RDD 24.6%, ABS 21.1%, ACS 36.4%). The two surveys differ somewhat for the other two education groups. The RDD has significantly more persons in the highest level of education (Bachelor’s degree or higher) than the benchmark (46.6% vs. 28.9%) and fewer among those with some college (28.8% vs. 34.8%). The ABS survey also over-represents those in the highest education group (44.6% vs. 28.9%) but is very close for those who have some college (34.3% vs. 34.8%).

3. Both surveys over-represent non-Hispanic Whites (RDD 68.7%, ABS 71.3%, and ACS 64.1%).

4. Both RDD and ABS underrepresent Hispanics. RDD underrepresents by a larger amount than ABS (8.8% RDD, 12.2% ABS, and 15.7% ACS). RDD has good representation of non-Hispanic Blacks (12.5% RDD and 12.0% ACS), while ABS underrepresents this group (6.6% ABS).7

5. Both surveys over-represent married individuals and underrepresent those who have never been married. The ABS had the highest proportion of married individuals (57.3% ABS, 53.5% RDD, and 50.4% ACS) and the lowest proportion of never-married individuals (20.0% ABS, 25.6% RDD, and 30.0% ACS).

6. Both RDD and ABS over-represent those who have access to the internet by a considerable amount. With respect to those with any type of access, almost all of the RDD (96.4%) and ABS (98.6%) have access. This is about 10 percentage points higher than the ACS estimate (87.4%).

7. Table 5-2 provides the distributions for the same characteristics after applying the final population weights. The final population weights were created by raking to ACS distributions for age, race-ethnicity, marital status, and education. Given this, it is not surprising that both RDD and ABS are very close to the ACS distributions for these characteristics. The distributions for the other characteristics shift a bit. For example, there is now an overrepresentation in the lower income groups.
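Raking, mentioned in item 7, repeatedly rescales weights so that the weighted margins match control totals on each dimension in turn. The following is a minimal sketch of that general technique (iterative proportional fitting), not the study's weighting code. The four toy respondents, the function names, and the education split are hypothetical; the 48.7% male target is the ACS figure shown in Table 5-1.

```python
def rake(weights, categories, targets, max_iter=50, tol=1e-8):
    """Iterative proportional fitting on one or more dimensions.

    weights:    starting weight per respondent.
    categories: {dimension: [category label per respondent]}.
    targets:    {dimension: {category label: target proportion}};
                proportions within a dimension are assumed to sum to 1.
    """
    w = list(weights)
    for _ in range(max_iter):
        max_change = 0.0
        for dim, labels in categories.items():
            total = sum(w)
            # Current weighted total in each category of this dimension.
            current = {}
            for wi, lab in zip(w, labels):
                current[lab] = current.get(lab, 0.0) + wi
            # Scale each category so it hits its target share.
            factors = {lab: targets[dim][lab] * total / current[lab]
                       for lab in current}
            w = [wi * factors[lab] for wi, lab in zip(w, labels)]
            max_change = max(max_change,
                             max(abs(f - 1.0) for f in factors.values()))
        if max_change < tol:
            break
    return w

def weighted_share(weights, labels, label):
    """Weighted proportion of respondents with the given label."""
    return sum(w for w, l in zip(weights, labels) if l == label) / sum(weights)

# Toy data: four hypothetical respondents with equal starting weights.
sex = ["M", "M", "F", "F"]
educ = ["low", "high", "low", "high"]
targets = {"sex": {"M": 0.487, "F": 0.513},
           "educ": {"low": 0.4, "high": 0.6}}  # education split is made up
raked = rake([1.0] * 4, {"sex": sex, "educ": educ}, targets)
print(round(weighted_share(raked, sex, "M"), 3))
```

Because the raked margins are forced to the control totals, comparing them to the same benchmark carries no information about bias, which is why only the non-raked characteristics are informative after final weighting.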

Comparing Selected Health Measures to the National Health Interview Survey

One shortcoming of using demographics to assess NRB is that the analysis does not account for the fact that the population weights compensate for differences from the national distribution for many of the characteristics of interest. It only provides an indication of differences before the final weights are applied. A second method to assess the representation of the sample is to examine the alignment for characteristics that might be related to nonresponse but are not used to develop the weights. To apply this method, the analysis compares several different measures of health and injuries to the NHIS. These comparisons use the final NISVS weights, which adjust to align the population distributions to national totals. The assumption is that since the NHIS has a high response rate, it can be used as a standard against which to assess potential NRB of the Feasibility Study surveys. To do this, several questions from the NHIS were placed on the Feasibility Study surveys.

There are differences between the NISVS surveys and NHIS that may confound the comparisons. One is that not all questions were worded exactly as on the NHIS. A second difference is the mode of interviewing. The NHIS is a mix of both in-person and telephone interviewing. A third difference is who is selected as the respondent. For some questions the NHIS respondent is a household respondent, while it is a randomly selected person for the Feasibility Study surveys.

Nonetheless, it is still useful to compare the ABS and RDD surveys to the NHIS when assessing the extent of NRB.

8. The surveys included a question on whether any household member had a physical, mental, or emotional problem preventing them from working (Table 5-3). The RDD estimate is virtually the same as the NHIS estimate (12.9% RDD and 12.8% NHIS). The ABS estimate (14.8%) is higher than both, but only by about two percentage points (ABS vs. RDD p=.200).

9. The surveys included a question on whether an adult in the household had been hospitalized overnight in the last 12 months. The RDD estimate (19.4%) is above the NHIS estimate (15.6%). The ABS estimate is below (12.3%).

10. All surveys included a question on whether the respondent had been told he or she had asthma. The RDD estimate (13.2%) is virtually the same as the NHIS (13.4%). The ABS estimate is slightly above the NHIS estimate (14.1% vs. 13.4%). The difference between the RDD and ABS is not statistically significant (p=.325).

11. The NISVS estimates for depression are higher than the NHIS estimate. The RDD estimate is 27.7 percent compared to 24.2 percent for the ABS, both of which are significantly higher than the NHIS estimate of 15.7 percent. The RDD estimate is significantly higher than the ABS estimate (p<.05).

12. Both surveys included a question, originally taken from the NHIS, on whether any adult in the household had been injured in the last 3 months. Since fielding the surveys we have learned that this particular question has been taken off the public use file. To date, we have been unable to process the data to retrieve an equivalent estimate from the NHIS. To derive the estimate, it will be necessary to get access through the NCHS restricted use enclave.

The ABS estimate is higher than the RDD estimate (27.2% ABS vs. 20.7% RDD; p<.001).

Comparing by Level of Effort to Complete the Survey

Using demographics and health characteristics to assess NRB does not directly test whether the outcomes of interest are biased. There will only be bias if the characteristic is correlated with both nonresponse and the particular outcome. For example, both the ABS and RDD over-represent those with an internet connection. For this to lead to NRB there would need to be a correlation between internet access and a particular type of victimization. Another method to test for NRB that does not rely on this assumption is to compare the outcomes of interest by how much effort the survey operations take to complete the survey. This type of analysis, called a continuum of resistance model, assumes those respondents who require more effort to complete the survey resemble those who do not respond at all. For the Feasibility survey, this can be accomplished by comparing those who responded after the initial attempts were made to get a completed survey to those who responded during the NRFU. To motivate nonrespondents to participate as part of the NRFU, respondents were offered more money to complete the survey.

The logic of this analysis is that if the NRFU estimates are higher than the Phase 1 estimates, this indicates that the estimates discussed in the prior chapter are too low (a negative bias). That is, if the NRFU respondents represent the nonrespondents, then getting a 100 percent response would entail adding individuals who have higher estimates. The opposite is the case if the NRFU estimates are lower than the Phase 1 estimates.

One disadvantage of the continuum of resistance methodology is the assumption that those who responded to the NRFU resemble the nonrespondents. This assumption can be particularly problematic for surveys with response rates as low as 10 to 30 percent. In this case the assumption is that those responding to the NRFU adequately represent the 70 to 90 percent who did not respond. Nonetheless, it is still useful to assess how those who exhibited some reluctance to participate but eventually cooperated differ on the main outcomes of interest to the NISVS.
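The reading of the before-NRFU versus NRFU comparison can be sketched as a small helper. The function name is hypothetical and the 5 percent threshold follows the significance level used in the text; the three example rows use the lifetime estimates and p-values reported in Table 5-4. Differences recomputed from the rounded published estimates can differ by 0.1 from the published difference column.

```python
def nrb_indication(before_nrfu, nrfu, p_value, alpha=0.05):
    """Return (difference, reading) for one measure.

    The difference is the before-NRFU estimate minus the NRFU estimate,
    in percentage points. A negative, significant difference is read as
    a negative bias (estimates too low); a positive, significant
    difference is read as a positive bias.
    """
    diff = round(before_nrfu - nrfu, 1)
    if p_value >= alpha:
        reading = "no significant indication of bias"
    elif diff < 0:
        reading = "negative bias (estimates too low)"
    else:
        reading = "positive bias (estimates too high)"
    return diff, reading

# (before NRFU, NRFU, p-value) taken from Table 5-4.
examples = {
    "ABS rape": (11.0, 16.8, 0.002),
    "ABS sexual coercion": (11.8, 14.6, 0.092),
    "RDD contact sexual violence by intimate partner": (15.5, 10.6, 0.033),
}
for label, args in examples.items():
    print(label, nrb_indication(*args))
```

This reading holds only under the continuum of resistance assumption that NRFU respondents resemble those who never responded.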

13. The ABS prevalence estimates for the total population before NRFU are largely less than the NRFU estimates, indicating a negative bias (rates are too low) (Table 5-4). Many of the differences are statistically significant at least at the 5 percent level. However, this pattern is not consistent across males and females (Tables 5-5, 5-6).

Males (Table 5-5). The differences between the before-NRFU and NRFU estimates are a mix of negative and positive. None of these differences is statistically significant.

Females (Table 5-6). All of the differences are negative, indicating a negative bias in the estimates (i.e., survey estimates are too low). All of these are statistically significant.

14. The differences for RDD are a mixture of both negative and positive signs (Table 5-4). The differences for rape and IPPV are negative and statistically significant. The differences for contact sexual violence by intimate partner and stalking by an intimate partner are positive and statistically significant. The patterns differ by sex.

Males (Table 5-5). The differences between the before-NRFU and NRFU estimates are primarily negative. Many of the significant effects are for the components of contact sexual violence, including unwanted touching, sexual coercion, and rape. In addition, for IPPV and IPPA the differences are negative and statistically significant.

Females (Table 5-6). None of the differences for contact sexual violence or stalking are statistically significant. The differences for contact sexual violence by intimate partner and stalking by intimate partner are positive and significant.

Comparing to Prior NISVS with Different Response Rates

Like virtually all RDD studies, the NISVS response rate has declined between 2010 (33.1%) and 2016/2017 (8.5%). At the same time, the lifetime victimization estimates have increased. Between the 2015 and 2016/2017 administrations, the response rate dropped from 26.4 percent to 8.5 percent. At the same time, the lifetime prevalence estimates increased (Table 5-7). For example, the female estimate of contact sexual violence increased from 43.6 percent to 54.3 percent. One would not expect the lifetime estimates to change this much in a 1- or 2-year time-period. The age-cohorts are not shifting dramatically. Theoretically, women age 18+ in 2015 would be between ages 19 and 20+ in 2016/2017. Lifetime experiences may change a bit, but it would be unusual that it would change as much as indicated.

A second explanation is NRB. Prior studies have not found a strong correlation between measures of NRB and the survey’s response rate (Groves and Peytcheva, 2008).8 Nonetheless, theoretically there are reasons to believe that as response rates go down, the chances of NRB go up. In a re-analysis of data from Groves and Peytcheva (2008), Brick and Tourangeau (2017)9 found study-level correlations of between -0.4 and -0.5 between the response rate and the average NRB for a study. The co-occurrence of the drop in response rate and the spike in prevalence rate nominally suggests this explanation may apply.

An extensive analysis of NRB was conducted by CDC when assessing the quality of the 2016/2017 data set.10 This included an assessment of changes in the demographic distributions of survey respondents across the three time periods in which the NISVS has been collected. In addition, the analysis compared measures of medical conditions (e.g., asthma) collected on NISVS to the same measures from surveys with higher response rates. The consistency of these same health measures was also measured over the NISVS time series. The theory was that if there was significant NRB, it may also have affected these health measures. None of these analyses suggests significant NRB for the 2016/2017 data. As noted above, however, these types of analyses do not directly test whether there is NRB for the measures of victimization.11

Another possible explanation discussed in the CDC report is an increased willingness to report victimization on the part of the general public. As indicated by social media searches,12 a number of highly publicized incidents boosted consciousness of sexual violence during this time period. Google trends show high interest on topics like rape or sexual assault during the 2012 – 2019 period. This is a logical explanation, but still not a direct link to the trends. Social media does not provide a clear measure of the views of the general public, so it is not clear how to generalize the observed trends to how respondents would answer questions on a survey. The trends of the NISVS during this time period run counter to several other surveys that have been conducted over the same time period and had relatively stable response rates. For example, the estimates of forced intercourse for females and males measured by the National Survey of Family Growth (NSFG) did not change for the time periods 2011-15 and 2015-17. A survey of 21 colleges in 2015 and 2019 did not find significant increases in estimates for the large majority of the schools.13 The rape and sexual assault estimate from the National Crime Victimization Survey (NCVS) has remained stable over the 2015-17 time period.

A third explanation that may account for the jump in the lifetime estimates for the 2016/2017 data is the set of changes made to the questionnaire between 2015 and 2016/2017. The changes streamlined the questionnaire:

by doing less follow-up in the 2016/2017 instrument. Specifically, rather than asking about perpetrators after each ‘yes’ to a victimization screening item, the follow-ups were done after each section (e.g., all stalking screening items; all physically forced rape screening items, etc.).

by changing the follow-up perpetrator questions. In 2015 the initials of perpetrators were collected and these were used to check overlap between perpetrators across different types of victimizations. This was not done in 2016/2017.

The more detailed follow-up in 2015 may have led some respondents to learn that more follow-up questions were being asked once they endorse an item. This could depress victimization estimates relative to 2016/2017 if respondents do not endorse a screening item to avoid the follow-ups.14

For purposes of the discussion below, we assume this change was not primarily responsible for changes in estimates between 2015 and 2016/2017. Neither the 2015 nor the 2016/2017 instrument is a ‘grouped’ design. A grouped design holds all follow-up questions until after all screening items are completed. Grouped designs have been shown to lead to higher reports of events relative to interleaved designs.15 Both 2015 and 2016/2017 are interleaved designs. Both have follow-up questions in between screening items. The difference between 2015 and 2016/2017, from this perspective, is a matter of degree.

With the above caveats in mind, this section compares the 2015 and 2016/2017 results to the ABS and RDD surveys. The analysis makes the assumption that the 2015 survey was subject to less NRB than the 2016/2017 survey. If this is true, then significant deviations from 2015 are indicative of NRB. Similarly, estimates close to the 2016/2017 survey are interpreted as a sign of NRB. As discussed above, attributing the change in 2016/2017 to NRB is subject to debate, and NRB may be only part of the explanation for the increase.

To make the comparison, the NISVS estimates for 2015 were taken from the published report for that year. The CDC provided the 2016/2017 estimates.16

16. The difference between ABS and 2015 is consistently smaller than the difference between ABS and 2016/2017 (Table 5-8). For females, the gap is very large for most types of victimization. For example, for contact sexual violence the absolute difference with 2015 is 1.4 percentage points. This compares to 12.1 percentage points for the difference with 2016/2017. For males the differences are mostly in the same direction, but not as large.

17. The RDD estimates for females are generally closer to 2016/2017. This is most evident for sexual coercion, and intimate partner psychological aggression (IPPA). The results are more mixed for males where the RDD and ABS estimates are not consistently above or below the 2016/2017 estimates.

18. The RDD estimates for males are uniformly closer to the 2016/2017, by a larger amount than for females.
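The absolute-difference comparison in item 16 can be checked with a short calculation. The helper name is hypothetical. The inputs are the female lifetime contact sexual violence estimates quoted earlier in this report: 43.6 percent (2015), 54.3 percent (2016/2017), 42.2 percent (ABS, Table 4-2), and 63.3 percent (RDD, Table 4-2).

```python
def closer_benchmark(estimate, est_2015, est_2016_17):
    """Absolute gaps (percentage points) to each prior NISVS estimate."""
    gap_2015 = round(abs(estimate - est_2015), 1)
    gap_2016_17 = round(abs(estimate - est_2016_17), 1)
    if gap_2015 < gap_2016_17:
        closer = "2015"
    elif gap_2016_17 < gap_2015:
        closer = "2016/2017"
    else:
        closer = "tie"
    return gap_2015, gap_2016_17, closer

# Female lifetime contact sexual violence (percent).
NISVS_2015, NISVS_2016_17 = 43.6, 54.3
print("ABS:", closer_benchmark(42.2, NISVS_2015, NISVS_2016_17))
print("RDD:", closer_benchmark(63.3, NISVS_2015, NISVS_2016_17))
```

Under the assumption stated above, that 2015 was subject to less NRB, an estimate closer to 2015 is read as showing less sign of NRB than one closer to 2016/2017.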

Table 5-1. Comparison of demographic distributions for RDD, ABS, and American Community Survey (ACS)*#

| | RDD (%) | ABS (%) | ACS (%) | p-value: RDD-ACS | p-value: ABS-ACS | p-value: ABS-RDD |
|---|---|---|---|---|---|---|
| Age | | | | | | |
| 18-29 | 13.9 | 19.5 | 21.5 | 0.000 | 0.020 | 0.000 |
| 30-44 | 19.7 | 25.7 | 25.1 | 0.000 | 0.302 | 0.000 |
| 45-64 | 25.9 | 24.4 | 25.7 | 0.396 | 0.116 | 0.262 |
| 65+ | 40.4 | 30.4 | 27.7 | 0.000 | 0.001 | 0.000 |
| Sex | | | | | | |
| Male | 50.0 | 47.1 | 48.7 | 0.288 | 0.076 | 0.118 |
| Female | 50.0 | 52.9 | 51.3 | 0.288 | 0.076 | 0.118 |
| Marital status | | | | | | |
| Married | 53.5 | 57.3 | 50.4 | 0.074 | 0.000 | 0.054 |
| Never married | 25.6 | 20.0 | 30.0 | 0.000 | 0.000 | 0.000 |
| Other | 20.8 | 22.8 | 19.5 | 0.277 | 0.000 | 0.205 |
| Race | | | | | | |
| Hispanic | 8.8 | 12.2 | 15.7 | 0.000 | 0.000 | 0.003 |
| NH-White | 68.7 | 71.3 | 64.1 | 0.003 | 0.000 | 0.120 |
| NH-Black | 12.5 | 6.6 | 12.0 | 0.351 | 0.000 | 0.000 |
| NH-Multiracial | 3.5 | 2.6 | 1.7 | 0.006 | 0.003 | 0.176 |
| NH-Other | 6.5 | 7.3 | 6.6 | 0.398 | 0.125 | 0.292 |
| Education | | | | | | |
| High school or less | 24.6 | 21.1 | 36.4 | 0.000 | 0.000 | 0.121 |
| Some college | 28.8 | 34.3 | 34.8 | 0.012 | 0.374 | 0.044 |
| Bachelor’s or higher | 46.6 | 44.6 | 28.9 | 0.000 | 0.000 | 0.293 |
| Income | | | | | | |
| Less than $25,000 | 18.1 | 17.3 | 15.7 | 0.127 | 0.030 | 0.359 |
| $25,000 - $49,999 | 18.4 | 18.6 | 20.0 | 0.254 | 0.063 | 0.398 |
| $50,000 - $74,999 | 16.5 | 15.9 | 18.3 | 0.095 | 0.000 | 0.356 |
| $75,000+ | 47.0 | 48.3 | 46.0 | 0.343 | 0.022 | 0.332 |
| Born in United States | | | | | | |
| Yes | 90.1 | 87.5 | 81.6 | 0.000 | 0.000 | 0.029 |
| No | 9.9 | 12.5 | 18.4 | 0.000 | 0.000 | 0.029 |
| Access to internet not including through cellphone | | | | | | |
| Yes | 90.4 | 94.2 | 75.6 | 0.000 | 0.000 | 0.000 |
| No | 9.6 | 5.8 | 24.4 | 0.000 | 0.000 | 0.000 |
| Any access to internet | | | | | | |
| Yes | 96.4 | 98.6 | 87.4 | 0.000 | 0.000 | 0.000 |
| No | 3.6 | 1.4 | 12.5 | 0.000 | 0.000 | 0.000 |
| Home ownership | | | | | | |
| Owned | 72.8 | 73.5 | 66.8 | 0.000 | 0.000 | 0.370 |
| Rented | 25.4 | 25.6 | 31.6 | 0.000 | 0.000 | 0.395 |
| Other | 1.9 | 0.9 | 1.6 | 0.337 | 0.000 | 0.047 |

# Except where noted, estimates combine males and females.

* Unweighted with an adjustment for probability of selection. Significance tests assume no sampling error for the ACS.

For ABS, this consists of weighting for selection within the household and selection for the nonresponse follow-up (NRFU). For RDD, this adjustment had three components: 1) for the landline sample an adjustment was made for selection within the household; 2) for both landline and cellphone frames an adjustment for selection for the Phase 2 NRFU; and 3) for both frames, an adjustment for the probability of selection into the landline or cellphone frame.

Table 5-2. Comparison of final weighted demographic distributions for RDD, ABS, and American Community Survey (ACS)+#

| | RDD (%) | ABS (%) | ACS (%) | p-value: RDD-ACS | p-value: ABS-ACS | p-value: ABS-RDD |
|---|---|---|---|---|---|---|
| Age X | | | | | | |
| 18-29 | 21.5 | 21.6 | 21.5 | | | |
| 30-44 | 25.1 | 25.2 | 25.1 | | | |
| 45-64 | 25.7 | 25.7 | 25.7 | | | |
| 65+ | 27.7 | 27.5 | 27.7 | | | |
| Sex X | | | | | | |
| Male | 49.2 | 48.4 | 48.7 | | | |
| Female | 50.8 | 51.6 | 51.3 | | | |
| Marital status X | | | | | | |
| Married | 50.6 | 50.5 | 50.4 | | | |
| Never Married | 30.1 | 30.0 | 30.0 | | | |
| Other | 19.3 | 19.5 | 19.5 | | | |
| Race X | | | | | | |
| Hispanic | 15.8 | 15.8 | 15.7 | | | |
| NH-White | 64.0 | 64.1 | 64.1 | | | |
| NH-Black | 12.0 | 12.0 | 12.0 | | | |
| NH-Multiracial | 1.7 | 1.7 | 1.7 | | | |
| NH-Other | 6.5 | 6.4 | 6.6 | | | |
| Education X | | | | | | |
| High school or less | 32.9 | 36.2 | 36.4 | | | |
| Some college | 38.3 | 34.8 | 34.8 | | | |
| Bachelor’s or higher | 28.9 | 29.0 | 28.9 | | | |
| Income | | | | | | |
| Less than $25,000 | 21.5 | 23.4 | 15.7 | 0.001 | 0.000 | 0.246 |
| $25,000 - $49,999 | 19.2 | 21.6 | 20.0 | 0.343 | 0.080 | 0.148 |
| $50,000 - $74,999 | 17.9 | 15.6 | 18.3 | 0.385 | 0.000 | 0.137 |
| $75,000+ | 41.4 | 39.5 | 46.0 | 0.003 | 0.000 | 0.203 |
| Born in United States | | | | | | |
| Yes | 89.5 | 86.3 | 81.6 | 0.000 | 0.000 | 0.011 |
| No | 10.5 | 13.7 | 18.4 | 0.000 | 0.000 | 0.011 |
| Access to internet not including through cellphone | | | | | | |
| Yes | 89.1 | 92.2 | 87.4 | 0.123 | 0.000 | 0.017 |
| No | 10.9 | 7.8 | 12.6 | 0.123 | 0.000 | 0.017 |
| Access to internet | | | | | | |
| Yes | 96.4 | 98.6 | 87.4 | 0.000 | 0.000 | 0.011 |
| No | 3.6 | 1.4 | 12.6 | 0.000 | 0.000 | 0.011 |
| Home ownership | | | | | | |
| Owned | 72.8 | 73.5 | 66.8 | 0.341 | 0.007 | 0.352 |
| Rented | 25.4 | 25.6 | 31.6 | 0.220 | 0.046 | 0.386 |
| Other | 1.9 | 0.9 | 1.6 | 0.127 | 0.017 | 0.034 |

# Except where noted, estimates combine males and females.

+ p-value is the result from a two-sample difference of means z-test.

X – Characteristic is a raking dimension. There are no statistical significance tests computed because the totals were forced to the national distribution.

Final population weights used for estimates. Significance tests assume ACS has no sampling error.

Table 5-3. Comparison of final weighted health indicators for RDD, ABS, and national benchmarks+#

| | RDD (%) | ABS (%) | NHIS (%) | p-value: RDD-NHIS | p-value: ABS-NHIS | p-value: ABS-RDD |
|---|---|---|---|---|---|---|
| Any adult in the household have physical, mental, or emotional problem preventing from working | | | | | | |
| Yes | 12.9 | 14.8 | 12.8 | 0.397 | 0.015 | 0.200 |
| No | 87.1 | 85.2 | 87.2 | 0.397 | 0.015 | 0.200 |
| Any adult in the household been hospitalized overnight in last 12 months | | | | | | |
| Yes | 19.4 | 12.3 | 15.6 | 0.005 | 0.000 | 0.000 |
| No | 80.6 | 87.7 | 84.3 | 0.007 | 0.000 | 0.000 |
| Doctor, nurse, or other health professional told you that you have asthma | | | | | | |
| Yes | 13.2 | 14.1 | 13.4 | 0.391 | 0.279 | 0.325 |
| No | 86.8 | 85.9 | 86.5 | 0.383 | 0.308 | 0.325 |
| Doctor, nurse, or other health professional told you that you have any type of depression | | | | | | |
| Yes | 27.7 | 24.2 | 15.7 | 0.000 | 0.000 | 0.022 |
| No | 72.3 | 75.8 | 84.2 | 0.000 | 0.000 | 0.022 |
| Any adult injured in household in last 3 months | | | | | | |
| Yes | 20.7 | 27.2 | n/a | n/a | n/a | 0.000 |
| No | 79.3 | 72.8 | n/a | n/a | n/a | 0.000 |

n/a – Not applicable. Estimate could not be computed because the public use dataset did not have the relevant variables.

# Estimates combine males and females

+ p-value is the result from a two-sample difference of means z-test.

Table 5-4. Lifetime prevalence estimates by Phase 1 and NRFU stages for ABS and RDD surveys+#

| | ABS: Before NRFU | ABS: NRFU | ABS: Difference | ABS: p-value | RDD: Before NRFU | RDD: NRFU | RDD: Difference | RDD: p-value |
|---|---|---|---|---|---|---|---|---|
| Contact sexual violence | 29.7 | 34.9 | -5.2 | 0.016 | 46.8 | 48.3 | -1.4 | 0.353 |
| Unwanted touching | 23.5 | 30.6 | -7.1 | 0.001 | 41.4 | 43.6 | -2.2 | 0.290 |
| Sexual coercion | 11.8 | 14.6 | -2.8 | 0.092 | 18.1 | 19.7 | -1.6 | 0.322 |
| Rape | 11.0 | 16.8 | -5.8 | 0.002 | 17.1 | 23.1 | -6.0 | 0.014 |
| Made to penetrate* | 2.5 | 2.0 | 0.5 | 0.350 | 3.7 | 3.2 | 0.5 | 0.375 |
| Stalking | 8.9 | 12.6 | -3.7 | 0.034 | 14.5 | 11.9 | 2.6 | 0.212 |
| Intimate partner violence | 27.7 | 35.2 | -7.6 | 0.001 | 38.4 | 41.5 | -3.1 | 0.211 |
| Contact sexual violence by intimate partner | 11.6 | 13.1 | -1.4 | 0.270 | 15.5 | 10.6 | 4.9 | 0.033 |
| Stalking by intimate partner | 4.8 | 7.4 | -2.7 | 0.083 | 7.5 | 3.1 | 4.4 | 0.013 |
| Intimate partner physical violence | 22.0 | 28.0 | -6.0 | 0.004 | 32.4 | 39.9 | -7.5 | 0.007 |
| Intimate partner psychological aggression | 32.1 | 40.2 | -8.1 | 0.000 | 46.5 | 49.7 | -3.3 | 0.221 |

# Estimates combine males and females

+ p-value is the result from a two-sample difference of means z-test.

* Made to penetrate only asked of males.

Table 5-5. Male lifetime prevalence estimates by Phase 1 and NRFU stages for ABS and RDD surveys+

|  | ABS: Before NRFU | ABS: NRFU | ABS: Difference | ABS: p-value | RDD: Before NRFU | RDD: NRFU | RDD: Difference | RDD: p-value |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Contact sexual violence | 18.0 | 13.8 | 4.3 | 0.077 | 29.2 | 35.6 | -6.4 | 0.084 |
| Unwanted touching | 12.8 | 13.5 | -0.7 | 0.380 | 23.0 | 31.8 | -8.8 | 0.017 |
| Sexual coercion | 5.8 | 5.6 | 0.2 | 0.396 | 7.7 | 15.8 | -8.1 | 0.005 |
| Rape | 2.8 | 5.4 | -2.6 | 0.120 | 2.6 | 12.2 | -9.6 | 0.000 |
| Made to penetrate | 5.2 | 3.9 | 1.3 | 0.275 | 7.5 | 6.7 | 0.8 | 0.377 |
| Stalking | 5.2 | 4.1 | 1.0 | 0.320 | 7.5 | 5.2 | 2.3 | 0.236 |
| Intimate partner violence | 20.5 | 21.6 | -1.1 | 0.360 | 33.1 | 47.0 | -13.8 | 0.000 |
| Contact sexual violence by intimate partner | 5.5 | 3.0 | 2.5 | 0.101 | 5.7 | 6.8 | -1.1 | 0.358 |
| Stalking by intimate partner | 2.0 | 1.9 | 0.1 | 0.398 | 3.8 | 2.2 | 1.6 | 0.278 |
| Intimate partner physical violence | 18.0 | 20.2 | -2.2 | 0.265 | 31.4 | 44.5 | -13.1 | 0.000 |
| Intimate partner psychological aggression | 29.1 | 30.4 | -1.3 | 0.350 | 42.6 | 53.2 | -10.6 | 0.007 |

+ p-value is the result from a two-sample difference of means z-test.

Table 5-6. Female lifetime prevalence estimates by Phase 1 and NRFU stages for ABS and RDD surveys+

|  | ABS: Before NRFU | ABS: Nonresponse Follow-up (NRFU) | ABS: Difference | ABS: p-value | RDD: Before NRFU | RDD: Nonresponse Follow-up (NRFU) | RDD: Difference | RDD: p-value |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Contact sexual violence | 40.3 | 56.1 | -15.8 | 0.000 | 64.1 | 59.7 | 4.4 | 0.196 |
| Unwanted touching | 33.3 | 47.5 | -14.2 | 0.000 | 59.4 | 54.3 | 5.2 | 0.145 |
| Sexual coercion | 17.2 | 23.3 | -6.1 | 0.010 | 28.3 | 23.2 | 5.1 | 0.131 |
| Rape | 18.5 | 28.3 | -9.8 | 0.000 | 31.3 | 33.0 | -1.8 | 0.352 |
| Made to penetrate | n/a | n/a | n/a | n/a | n/a | n/a | n/a | n/a |
| Stalking | 12.3 | 21.0 | -8.7 | 0.000 | 21.4 | 18.0 | 3.4 | 0.239 |
| Intimate partner violence | 34.2 | 48.9 | -14.7 | 0.000 | 43.6 | 36.5 | 7.1 | 0.051 |
| Contact sexual violence by intimate partner | 17.2 | 23.2 | -6.0 | 0.011 | 25.1 | 14.1 | 11.1 | 0.002 |
| Stalking by intimate partner | 7.3 | 12.9 | -5.6 | 0.009 | 11.1 | 3.9 | 7.3 | 0.003 |
| Intimate partner physical violence | 25.6 | 35.8 | -10.2 | 0.000 | 33.3 | 35.7 | -2.3 | 0.308 |
| Intimate partner psychological aggression | 34.9 | 50.3 | -15.5 | 0.000 | 50.3 | 46.6 | 3.7 | 0.243 |

n/a – Not applicable. Not asked of female respondents.

+ p-value is the result from a two-sample difference of means z-test.

Table 5-7. Lifetime prevalence estimates for NISVS 2010 to 2017, ABS, and RDD, by sex

|

NISVS |

Feasibility |

|||

2010-2012 |

2015 |

2016/2017 |

ABS |

RDD |

|

Response rate |

33.0% |

26.4% |

8.5% |

33.1% |

10.3% |

Female |

|||||

Contact sexual violence |

36.3 |

43.6 |

54.3 |

42.2 |

63.3 |

Unwanted sexual contact |

27.5 |

37.0 |

47.6 |

35.0 |

58.5 |

Sexual coercion |

13.2 |

16.0 |

23.6 |

17.9 |

27.3 |

Rape |

19.1 |

21.3 |

26.8 |

19.7 |

31.6 |

Made to penetrate |

0.5 |

1.2 |

n/a |

n/a |

n/a |

Contact sexual violence by intimate partner |

16.4 |

18.3 |

19.5 |

17.9 |

23.1 |

Intimate partner physical violence |

32.4 |

30.6 |

42.0 |

26.8 |

33.8 |

Intimate partner psychological aggression |

47.1 |

36.4 |

49.4 |

36.7 |

49.6 |

Male |

|||||

Contact sexual violence |

17.1 |

24.8 |

30.7 |

17.5 |

30.3 |

Unwanted sexual contact |

11.0 |

17.9 |

23.3 |

12.9 |

24.5 |

Sexual coercion |

5.8 |

9.6 |

10.9 |

5.8 |

9.1 |

Rape |

1.5 |

2.6 |

3.8 |

3.1 |

4.2 |

Made to penetrate |

5.9 |

7.1 |

10.7 |

5.0 |

7.4 |

Contact sexual violence by intimate partner |

7.0 |

8.2 |

7.5 |

5.2 |

5.9 |

Intimate partner physical violence |

28.3 |

31.0 |

42.3 |

18.3 |

33.7 |

Intimate partner psychological aggression |

47.3 |

34.2 |

45.1 |