Att G_NISVS Redesign_Pilot Test Final Report

[NCIPC] The National Intimate Partner and Sexual Violence Survey (NISVS)

OMB: 0920-0822

ATTACHMENT G

Task 6.3: Results of the Pilot Test for the National Intimate Partner and Sexual Violence Survey

March 21, 2022

Submitted to:

Centers for Disease Control and Prevention

Atlanta, GA

Submitted by:

Westat

An Employee-Owned Research Corporation®

1600 Research Boulevard

Rockville, Maryland 20850-3129

(301) 251-1500

Table of Contents

Chapter

2. The Design of the Pilot Survey

5. National Prevalence Estimates

1. Introduction

The purpose of this report is to describe the results of the Pilot Survey (PS) conducted for the National Intimate Partner and Sexual Violence Survey (NISVS). As part of the redesign of the survey, a Feasibility Survey (FS) was completed to test several different designs for the survey going forward. The FS administered parallel surveys using different sample frames (Random Digit Dial (RDD) and Address Based Sample (ABS)) and different modes of collection (telephone vs. multi-mode, including web, paper, and telephone).1 Several experiments were completed on methods for selecting the appropriate respondent and on different combinations of modes. The FS led to the recommendation to move forward with an ABS design that pushed respondents to the web, with paper and telephone modes used at different points in the process (see description below).

The PS was intended to be an operational test of the recommended procedures. The goal was to collect 200 surveys. This report provides a summary of the results of the PS with respect to response rates (Section 3), sample composition (Section 4), prevalence estimates (Section 5), item missing data (Section 6), and measures of burden, confidentiality, and privacy (Section 7). The final section of the report summarizes the results and briefly discusses the implications for moving forward with the NISVS on a larger scale. The full set of recommendations is provided in another report, which integrates the results of the FS and PS into one set of more detailed recommendations.

2. The Design of the Pilot Survey

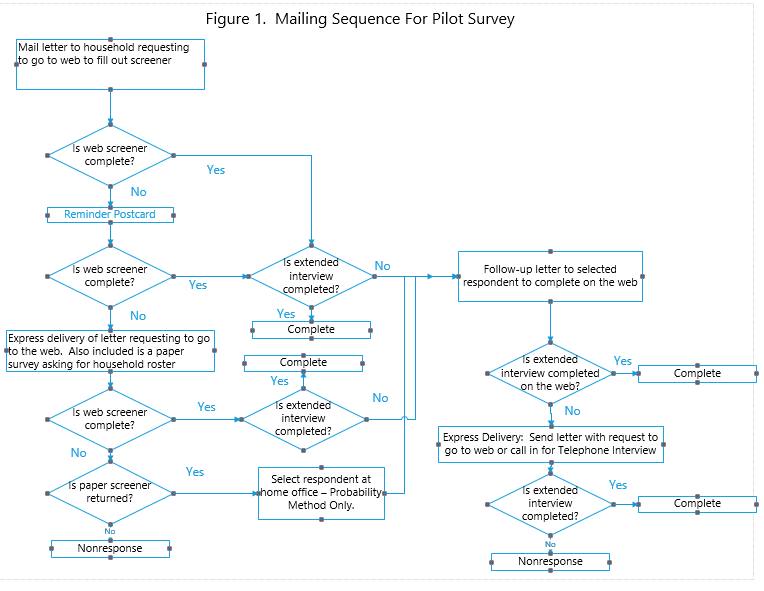

Based on the results of the FS, the PS adopted a design that was restricted to the ABS frame. The RDD survey was not recommended for the full-scale implementation because of very low response rates and high costs (relative to ABS) in the FS. There are also signs that the response rates for this method of data collection will continue to decline and that the costs will continue to increase. The PS started with a mailing that asked the randomly selected household to fill out a screening survey on the web to select a respondent (Figure 1). If there was no response, the household was sent a package that included a paper screener. The person filling out the web screener could go directly to the extended NISVS survey if that person was selected as the respondent. If the person was not selected, he or she was asked to pass the information along to the selected individual. If the screener was completed on paper, a separate request to do the extended survey was mailed to the selected respondent.

If the screener was completed, but there was no response to the extended survey, a mailing was sent to the selected respondent. If there was no response, a request was sent that gave the respondent a choice between doing the survey on the web or calling in to do it on the telephone.

Incentives were offered at each stage. A $5 cash incentive was included in the very first mailing. Respondents were offered a $10 Amazon gift code to complete the screener on the web. A $5 cash incentive was offered if the paper screener was filled out. For the extended interview, respondents were promised a $15 Amazon gift code for completing the survey on the web. If it was completed on the telephone, they were provided a $15 Amazon gift code.

3. Response Rate

The PS was in the field from September 27, 2021 to December 6, 2021. There was a total of 285 completed screening surveys (Tables 1 and 2), for a screener response rate of 36.9 percent (AAPOR RR4).2 For the extended survey, 162 surveys were fully completed and 6 were partially completed but provided enough data to be counted as a partial complete (response rate 58.9%). Surveys were defined as a complete or partial complete using the same definition as for the FS. A partial complete was defined as a respondent who (1) responded at least through the section on attempted rape (females) or made to penetrate (males), (2) had substantive responses to at least one of the screening items to measure victimization in at least half of the sections, and (3) dropped out before completing the survey. The sections were stalking, harassment, unwanted touching, coercion, alcohol related rape, alcohol related made to penetrate (males), physically forced rape, physically forced made to penetrate (males), attempted forced rape, and attempted forced made to penetrate (males). A complete met the same criteria, except that the respondent made it to the end of the survey. The final response rate, considering both stages, is 21.8 percent (36.9% x 58.9% = 21.8%).
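The completion rule described above can be sketched in a few lines of Python. This is an illustration only, not the production logic used for the PS: the function name, the argument names, and the representation of a case as a dictionary of per-section flags are all assumptions made for the example.

```python
def classify_case(reached_attempted_section: bool,
                  answered_by_section: dict,
                  reached_end: bool) -> str:
    """Classify an extended-survey case as 'complete', 'partial', or 'breakoff'.

    reached_attempted_section: respondent got at least through the attempted
        rape (females) or made to penetrate (males) section.
    answered_by_section: hypothetical mapping of each applicable victimization
        section to True if at least one screening item in that section has a
        substantive response.
    reached_end: respondent made it to the end of the survey.
    """
    if not reached_attempted_section:
        return "breakoff"
    n_sections = len(answered_by_section)
    n_answered = sum(1 for ok in answered_by_section.values() if ok)
    # "At least half of the sections" means n_answered >= n_sections / 2.
    if n_sections == 0 or 2 * n_answered < n_sections:
        return "breakoff"
    return "complete" if reached_end else "partial"


# Hypothetical case: 3 of 4 sections have a substantive response.
example = {"stalking": True, "harassment": True,
           "unwanted_touching": False, "coercion": True}
print(classify_case(True, example, reached_end=False))  # partial
```

The only difference between a complete and a partial complete in this sketch is the `reached_end` flag, which mirrors the definition in the text.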

Table 1. Dispositions and Response Rate for Pilot Survey

| | Screener | Extended |
|---|---|---|
| I - Complete | 285 | 162 |
| P - Partial | 0 | 6 |
| R - Refusal | 4 | 0 |
| O - Other Nonresponse | 18 | 1 |
| NC - Noncontact | 0 | 116 |
| UH - Unknown Households | 0 | 0 |
| UO - Unknown Other | 510 | |
| SO - Ineligible | 38 | |
| Total | 855 | 285 |
| Proportion eligible (‘e’) | .953 | 100% |
| Response rate | 36.9% | 58.9% |
| Final Response rate: Screener x Extended | 21.8% | NA |

e calculated using methods in DeMatteis, J. (2019). Computing “e” in self-administered address-based sampling studies. Survey Practice, 12(1). Available at: https://www.surveypractice.org/api/v1/articles/8282-computing-e-in-self-administered-address-based-sampling-studies.pdf

Table 2. Detailed Response and Cooperation Rates Screener and Extended Surveys

| | Screener | Extended | Final |
|---|---|---|---|
| Response Rate AAPOR RR4 | 36.9% | 58.9% | 21.8% |
| Cooperation Rate: (I+P) / (I+P+R+O) | 92.8% | 99.4% | 92.3% |
| Refusal Rate: R / ((I+P) + (R+NC+O) + UH + UO) | 0.5% | 0.0% | 0.0% |
| Contact Rate: ((I+P)+R+O) / ((I+P) + R + O + NC + (UH+UO)) | 37.6% | 59.3% | 22.3% |
| Yield Rate: I / Released Cases | 33.3% | 58.9% | 19.6% |

For ABS:

Response Rate 4 = (I+P) / [(I+P) + (R+NC+O) + e(UH+UO)]

e calculated using methods in DeMatteis, J. (2019). Computing “e” in self-administered address-based sampling studies. Survey Practice, 12(1). Available at: https://www.surveypractice.org/api/v1/articles/8282-computing-e-in-self-administered-address-based-sampling-studies.pdf

e=.953 for screener; 1.0 for extended survey
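The rate formulas in Table 2 can be applied directly to the disposition counts in Table 1. The sketch below is illustrative: the class and function names are assumptions, and the blank "Unknown Other" cell for the extended survey is treated as zero. It recomputes the extended-survey rates (where e = 1.0) and the screener rates that do not involve e. The screener RR4 is not recomputed here because it also depends on how e is estimated and applied under the DeMatteis (2019) method.

```python
from dataclasses import dataclass


@dataclass
class Dispositions:
    complete: int           # I
    partial: int            # P
    refusal: int            # R
    other: int              # O
    noncontact: int         # NC
    unknown_household: int  # UH
    unknown_other: int      # UO

    @property
    def respondents(self) -> int:
        return self.complete + self.partial

    @property
    def known_nonrespondents(self) -> int:
        return self.refusal + self.noncontact + self.other

    @property
    def unknown(self) -> int:
        return self.unknown_household + self.unknown_other


def rr4(d: Dispositions, e: float) -> float:
    """Response Rate 4 = (I+P) / [(I+P) + (R+NC+O) + e(UH+UO)]."""
    return d.respondents / (d.respondents + d.known_nonrespondents + e * d.unknown)


def cooperation_rate(d: Dispositions) -> float:
    """(I+P) / (I+P+R+O)."""
    return d.respondents / (d.respondents + d.refusal + d.other)


def refusal_rate(d: Dispositions) -> float:
    """R / ((I+P) + (R+NC+O) + UH + UO)."""
    return d.refusal / (d.respondents + d.known_nonrespondents + d.unknown)


def contact_rate(d: Dispositions) -> float:
    """((I+P)+R+O) / ((I+P) + R + O + NC + (UH+UO))."""
    contacted = d.respondents + d.refusal + d.other
    return contacted / (d.respondents + d.known_nonrespondents + d.unknown)


# Counts from Table 1 (blank extended UO cell assumed to be 0).
screener = Dispositions(285, 0, 4, 18, 0, 0, 510)
extended = Dispositions(162, 6, 0, 1, 116, 0, 0)

print(f"Extended RR4:         {rr4(extended, e=1.0):.1%}")
print(f"Extended cooperation: {cooperation_rate(extended):.1%}")
print(f"Extended contact:     {contact_rate(extended):.1%}")
print(f"Screener cooperation: {cooperation_rate(screener):.1%}")
print(f"Screener refusal:     {refusal_rate(screener):.1%}")
print(f"Screener contact:     {contact_rate(screener):.1%}")
```

With these inputs, the six printed rates round to the corresponding entries in Table 2 (58.9%, 99.4%, 59.3%, 92.8%, 0.5%, and 37.6%).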

The survey included several questions to detect whether a respondent might not have been reading the survey carefully. One such question was placed at the very end of the sexual harassment section. It asked respondents to choose a particular response category:

[SAT1] Paying attention and reading the instructions carefully is critical. If you are paying attention, please choose Silver below. [RANDOMIZE RESPONSES]

Red 1

Yellow 2

Blue 3

Green 4

Silver 5

Orange 6

There was a programming error, and only a small number of respondents received this question. As a result, neither this question nor the other ‘trap’ question was used to define a complete or partially completed survey. The intent had been to exclude from the completes and partial completes those who did not pass both trap items. Since one question could not be used, this criterion was not applied. We note that when a similar criterion was used for the FS, no respondents were removed from the survey.

The response rate for the PS is quite a bit below that of the FS. The FS had a response rate of 27 percent, compared to 21.8 percent for the PS using the equivalent protocol. One possible reason the rate dropped is the timing of the two surveys. The FS was in the field at the very beginning of the pandemic (May of 2020). The visibility of the CDC at the time was very high. In combination with the fact that many people were restricting their activities to their houses, this may have artificially boosted the response rate relative to the time when the PS was in the field.

A total of 192 individuals started the extended survey in the PS (Table 3). Of those, 30 did not complete the whole survey. Six of the 30 completed enough to be considered a partially completed survey. About one-fourth (8 of 30) dropped out before getting to the stalking section and did not answer any of the victimization questions. Another two-fifths (12 of 30) dropped out between the stalking and sexual coercion sections. Of the ten remaining individuals, six completed the survey at least through the attempted rape questions. The dropout rates for the PS were comparable to the FS (19% for FS vs. 16% for PS).

As noted above, the respondents were able to complete the screener by paper if they did not do it on the web. Of the 285 completed screeners, 63 were filled out by paper (about one-fifth). Nine of the 63 paper screeners resulted in a completed extended interview. Four of the 168 completed and partially completed NISVS extended surveys were by telephone. With respect to device types, 62 percent (n=103) were done on a desktop computer, 34 percent (n=55) on a phone and four percent (n=7) were done on a tablet.

Table 3. Last section completed by survey completion status

| Sections | Total | Complete or Partial | Not complete |
|---|---|---|---|
| Started | 5 | 0 | 5 |
| Personal characteristics | 2 | 0 | 2 |
| Health characteristics | 1 | 0 | 1 |
| Stalking | 5 | 0 | 5 |
| Sexual harassment, unwanted touching | 1 | 0 | 1 |
| Coerced Sex | 6 | 0 | 6 |
| Completed alcohol/drug-facilitated rape and made to penetrate | 1 | 0 | 1 |
| Unwanted sex due to threats of harm/physical force | 3 | 0 | 3 |
| Attempted physically forced sex | 1 | 1 | 0 |
| Psychological aggression | 3 | 3 | 0 |
| Debriefing questions | 2 | 2 | 0 |
| Completed all applicable questions | 162 | 162 | 0 |
| Total | 192 | 168 | 24 |
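The dropout figures quoted in the text can be tallied from the Table 3 counts. The short sketch below is only a consistency check on the published numbers; the variable names are illustrative.

```python
# (last section completed, total, complete or partial, not complete) from Table 3
TABLE_3 = [
    ("Started", 5, 0, 5),
    ("Personal characteristics", 2, 0, 2),
    ("Health characteristics", 1, 0, 1),
    ("Stalking", 5, 0, 5),
    ("Sexual harassment, unwanted touching", 1, 0, 1),
    ("Coerced Sex", 6, 0, 6),
    ("Completed alcohol/drug-facilitated rape and made to penetrate", 1, 0, 1),
    ("Unwanted sex due to threats of harm/physical force", 3, 0, 3),
    ("Attempted physically forced sex", 1, 1, 0),
    ("Psychological aggression", 3, 3, 0),
    ("Debriefing questions", 2, 2, 0),
    ("Completed all applicable questions", 162, 162, 0),
]

started = sum(row[1] for row in TABLE_3)
complete_or_partial = sum(row[2] for row in TABLE_3)
not_counted = sum(row[3] for row in TABLE_3)
fully_complete = TABLE_3[-1][1]

partials = complete_or_partial - fully_complete  # counted, but did not finish
breakoffs = started - fully_complete             # everyone who did not finish
dropout_rate = breakoffs / started

print(started, complete_or_partial, not_counted)  # 192 168 24
print(partials, breakoffs)                        # 6 30
print(f"{dropout_rate:.1%}")
```

The 30 respondents who did not finish split into the 6 partial completes and the 24 cases excluded from the estimates, and 30 of 192 corresponds to the roughly 16 percent dropout rate reported for the PS.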

4. Sample Composition

One assessment of the quality of the survey is how well it represents the national population with respect to key characteristics. The PS respondents were compared to the FS and the national population with respect to socio-demographics. The distribution for the FS was derived by taking out those who were in the alternative mode group that included the paper option. It also excludes the responses from the non-response follow-up. This was done so the FS estimates are based on the same set of procedures as the PS. The distributions shown below for the PS and FS account for their probability of selection. For the PS, this includes the probability of being selected within the household. For the FS, this includes selection within the household as well as being selected to be in the experimental mode choice group that included the telephone call-in option.
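As a rough illustration of how a selection-probability weight enters these distributions, the sketch below weights each respondent by the inverse of the within-household selection probability. It assumes, for the example only, that one adult is selected with equal probability among the adults in the household, so the weight equals the number of adults. The records and field names are hypothetical and are not PS data.

```python
from collections import defaultdict


def weighted_distribution(records, key):
    """Weighted percent distribution of `key`.

    Assumes one adult is selected with equal probability among k adults,
    so the selection probability is 1/k and the weight is k.
    """
    totals = defaultdict(float)
    for rec in records:
        weight = rec["adults_in_household"]  # inverse of 1/k
        totals[rec[key]] += weight
    grand_total = sum(totals.values())
    return {cat: 100.0 * w / grand_total for cat, w in totals.items()}


# Hypothetical respondents (not survey data).
records = [
    {"marital": "Married", "adults_in_household": 2},
    {"marital": "Married", "adults_in_household": 2},
    {"marital": "Never married", "adults_in_household": 1},
    {"marital": "Other", "adults_in_household": 1},
]

for category, pct in weighted_distribution(records, "marital").items():
    print(f"{category}: {pct:.1f}")
```

In this toy example the unweighted share of married respondents is 50 percent, while the weighted share is about 67 percent, because respondents from larger households represent more adults. For the FS, an additional factor for selection into the mode group would multiply into the weight.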

The composition of the PS respondents with respect to socio-demographics is similar to that of the FS, with a few exceptions (Table 4). None of the differences between the PS and FS are statistically significant at the ten percent level.3 With this in mind, the proportions by age, race-ethnicity, and born in the US are very similar between the PS and FS. As with the FS, there is overrepresentation, relative to the American Community Survey (ACS), of married individuals (62.4% PS; 58.1% FS; 50.4% ACS). The PS underrepresented those with low education and Black respondents, exhibiting the same pattern as the FS. Nominally, the PS has a lower proportion of those in the low education group than the FS. For example, 16.8 percent of those in the PS have a high school education or less. This compares to 19.7 percent for the FS and 36.4 percent for the ACS. The drop in these categories for the PS could be due to its somewhat lower response rate relative to the FS. Everyone in the PS reported having internet access, compared to 98.8 percent for the FS and 87.4 percent for the ACS.

Table 4. Comparison of demographic distributions for Pilot Survey, Feasibility Survey and American Community Survey (ACS).

| | Pilot Survey (n=168) | Feasibility Survey (n=3009) | ACS |
|---|---|---|---|
| Age | | | |
| 18-29 | 17.1 | 18.5 | 21.5 |
| 30-44 | 23.5 | 26.3 | 25.1 |
| 45-64 | 26.9 | 24.5 | 25.7 |
| 65+ | 32.4 | 30.7 | 27.7 |
| Sex | | | |
| Male | 48.9 | 46.8 | 48.7 |
| Female | 51.1 | 53.3 | 51.3 |
| Marital Status | | | |
| Married | 62.4 | 58.1 | 50.4 |
| Never married | 19.0 | 19.8 | 30.0 |
| Other | 18.7 | 22.2 | 19.5 |
| Race - Ethnicity | | | |
| Hispanic | 12.7 | 11.8 | 15.7 |
| NH-White | 66.4 | 71.2 | 64.1 |
| NH-Black | 4.6 | 6.4 | 12.0 |
| NH-Multiracial | 5.3 | 2.7 | 1.7 |
| NH-Other | 11.1 | 7.9 | 6.6 |
| Education | | | |
| High school or less | 16.8 | 19.7 | 36.4 |
| Some college | 28.8 | 34.5 | 34.8 |
| Bachelor’s or higher | 54.4 | 45.8 | 28.9 |
| Income | | | |
| Less than $25,000 | 11.6 | 15.5 | 15.7 |
| $25,000 - $49,999 | 17.5 | 18.6 | 20.0 |
| $50,000 - $74,999 | 20.9 | 16.5 | 18.3 |
| $75,000+ | 50.0 | 49.5 | 46.0 |
| Born in the US | | | |
| Yes | 84.4 | 87.6 | 81.6 |
| No | 15.6 | 12.4 | 18.4 |
| Any Access to Internet | | | |
| Yes | 100.0 | 98.8 | 87.4 |
| No | 0.0 | 1.2 | 12.5 |
| Home Ownership | | | |
| Owned | 74.2 | 73.4 | 66.8 |
| Rented | 24.5 | 24.8 | 31.6 |
| Other | 1.2 | 0.9 | 1.6 |

The Pilot and Feasibility data are weighted for selection within the household. The Feasibility data are also weighted for selection to the web/CATI follow-up group.

ACS are 2014 – 2018 5-year average data.

Table 5. Comparison of health indicators for the Pilot Survey, Feasibility Survey, and the National Health Interview Survey (NHIS)

| | Pilot Survey (n=168) | Feasibility Survey (n=3009) | NHIS |
|---|---|---|---|
| Any adult in the household have physical, mental, or emotional problem preventing from working | | | |
| Yes | 14.7 | 12.0 | 12.8 |
| No | 85.3 | 88.0 | 87.2 |
| Any adult in the household been hospitalized overnight in last 12 months | | | |
| Yes | 14.1 | 13.2 | 15.6 |
| No | 85.9 | 86.9 | 84.3 |
| Doctor, nurse, or other health professional told you that you have asthma | | | |
| Yes | 13.8 | 12.3 | 13.4 |
| No | 86.2 | 87.7 | 86.5 |
| Doctor, nurse, or other health professional told you that you have any type of depression | | | |
| Yes | 20.6 | 23.5 | 15.7 |
| No | 79.5 | 76.5 | 84.2 |

The Pilot and Feasibility data are weighted for selection within the household. The Feasibility data are also weighted for selection in the web/CATI follow-up group.

There are small differences between the PS and FS with respect to health characteristics, but the differences are not statistically significant at the ten percent level (Table 5). The percentage who reported a physical, mental, or emotional problem is nominally higher for the PS than for the FS (14.7% vs. 12.0%; not significant) and is also above the NHIS (12.8%). The PS is also nominally higher than the FS for whether the person has been hospitalized overnight (14.1% vs. 13.2%; not significant) and whether the person was told by a health professional they had asthma (13.8% vs. 12.3%; not significant). The opposite is the case for whether the person was told they had any type of depression (20.6% vs. 23.5%; not significant). In one case the PS is further from the NHIS estimate than the FS (physical, mental, or emotional problems), and in the other cases the PS is closer to the NHIS. Given the small sample sizes, the variation around the NHIS is partly attributable to random variation.

5. National Prevalence Estimates

Prevalence estimates were calculated using the PS and compared to the estimates from the FS. As with the data in the previous section, the FS data were restricted to those that participated using the same procedures as in the PS (i.e., web/CATI follow-up group; no non-response follow-up). The data are weighted in the same way as described above for the demographic and health comparisons. Some caution should be taken when interpreting these results because of the small number of completed surveys in the PS. Significance tests were conducted assuming the samples were simple random samples and making a global adjustment for the design effect related to the above weighting, using an approximation provided by Kish.4 Tests were conducted using a z-test for difference of proportions. A ten percent level of significance was used.
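A minimal sketch of this kind of test is shown below. It is not the code used for the report. It assumes the commonly cited Kish approximation for the design effect due to unequal weights, deff = n Σw² / (Σw)², deflates each sample size by its design effect, and uses a pooled-variance two-sided z-test. The function names and the design effect of 1.2 used in the example are hypothetical.

```python
import math


def kish_deff(weights):
    """Kish approximation: deff = n * sum(w^2) / (sum(w))^2."""
    n = len(weights)
    total = sum(weights)
    return n * sum(w * w for w in weights) / (total * total)


def z_test_two_proportions(p1, n1, deff1, p2, n2, deff2):
    """Two-sided z-test for p1 - p2 using effective sample sizes n / deff.

    Assumes the pooled proportion is strictly between 0 and 1.
    """
    m1 = n1 / deff1  # effective sample size, sample 1
    m2 = n2 / deff2  # effective sample size, sample 2
    pooled = (p1 * m1 + p2 * m2) / (m1 + m2)
    se = math.sqrt(pooled * (1.0 - pooled) * (1.0 / m1 + 1.0 / m2))
    z = (p1 - p2) / se
    p_value = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided normal p-value
    return z, p_value


# Equal weights give a design effect of 1; unequal weights give more than 1.
print(kish_deff([1, 1, 1, 1]))  # 1.0
print(kish_deff([1, 2]))        # 10/9, about 1.11

# Contact sexual violence, total: 29.4% (PS, n=168) vs. 31.5% (FS, n=3009).
# The design effects of 1.2 are hypothetical placeholders.
z, p = z_test_two_proportions(0.294, 168, 1.2, 0.315, 3009, 1.2)
print(f"z = {z:.2f}, p = {p:.2f}, significant at 10%: {p < 0.10}")
```

Because the actual weights and subgroup sample sizes are not given in this report, the example is not expected to reproduce the published significance results; it only shows the mechanics of the adjustment.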

The prevalence estimates for contact sexual violence are close (29.4% for PS vs. 31.5% for FS; not significant). For unwanted touching, the estimates are almost identical. For the other three components (sexual coercion, rape, and made to penetrate), the PS estimates are slightly below the FS. None of these differences are statistically significant at the ten percent level. The possible exception is sexual coercion among males, for which the PS estimate is 0. However, the FS estimate is itself low (5.5%), and it would take only 3 unweighted cases in the PS to equal it. For stalking, the PS estimate is below the FS for males (1.9% for PS vs. 4.5% for FS; not significant) and higher for females (16.8% for PS vs. 12.8% for FS; not significant).

There are larger differences for intimate partner violence, with several being significant at the ten percent level. For contact sexual violence by an intimate partner, the PS estimates are lower for both males and females, and both differences are significant at the ten percent level (males: 1.9% for PS vs. 4.9% for FS; p<.10) (females: 12.0% for PS vs. 18.5% for FS; p<.10). The PS is also lower for both males and females for intimate partner physical violence. For males, this difference is statistically significant at the two percent level (10.0% for PS vs. 17.6% for FS; p<.02). Although the difference for females is similar in size, it is not statistically significant at the ten percent level (18.6% vs. 25.3%; not significant). For intimate partner psychological aggression, the prevalence estimates are very close.

The tendency for the PS to be somewhat lower, for selected estimates, than the FS may be related to the lower response rate of the PS. As discussed in the report for the FS, there were indications that the estimates were biased in a negative direction because of non-response. The lower response rate may have exacerbated this negative bias. However, given the small sample size of the PS, it isn’t clear how much of this difference would persist with a larger sample. This is discussed further in the summary section.

Table 6. Lifetime Prevalence Estimates for Pilot Survey and Feasibility Survey by Sex

| | Total: Pilot | Total: Feasibility | Male: Pilot | Male: Feasibility | Female: Pilot | Female: Feasibility |
|---|---|---|---|---|---|---|
| Contact sexual violence | 29.4 | 31.5 | 16.3 | 17.9 | 41.9 | 43.4 |
| Unwanted touching | 25.4 | 25.8 | 12.5 | 12.5 | 37.7 | 37.5 |
| Sexual coercion | 8.3 | 12.2 | 0 | 5.5 | 16.2 | 18.1 |
| Rape | 9.2 | 11.7 | 1.3 | 2.5 | 16.8 | 19.9 |
| Made to penetrate | 3.8 | 4.8 | 3.8 | 4.8 | NA | NA |
| Stalking | 9.5 | 9.0 | 1.9 | 4.5 | 16.8 | 12.8 |
| Intimate partner violence | 19.9 | 28.0 | 11.3 | 20.2 | 28.1 | 34.8 |
| Contact sexual violence by intimate partner | 7.0 | 12.1 | 1.9 | 4.9 | 12.0 | 18.5 |
| Stalking by intimate partner | 6.1 | 4.8 | 0.6 | 1.6 | 11.4 | 7.6 |
| Intimate partner physical violence | 14.4 | 21.7 | 10.0 | 17.6 | 18.6 | 25.3 |
| Intimate partner psychological aggression | 31.8 | 33.1 | 26.9 | 29.1 | 36.5 | 36.7 |

6. Item Missing Data

To qualify as a complete or partial complete, the respondent had to answer at least one of the screening items related to victimization for at least half of the sections on the survey. Respondents who skipped particular screening items were coded as non-victims, as is currently done on the NISVS. The amount of missing data for the primary victimization questions among the 168 individuals who were counted as a complete or partial complete is low (Table 7).5 Levels of missing information are similar to those of the FS, with the largest number skipping at least one item within the multiple sections that ask about rape (4.2%).

Table 7. Percent missing at least one required item for non-intimate partner prevalence measures for the Pilot and Feasibility Surveys

| | Pilot (%) | Feasibility (%) |
|---|---|---|
| Contact sexual violence | 7.1 | 5.1 |
| Unwanted touching | 0.6 | 0.6 |
| Sexual coercion | 1.2 | 0.9 |
| Rape | 4.2 | 5.1 |
| Made to penetrate | 1.2 | 6.1 |
| Stalking | 0.6 | 1.1 |

7. Estimates of Burden, Privacy and Confidentiality

The mean and median times to complete the PS were 20 and 13 minutes, respectively (Table 8). This pattern is similar to what was found on the FS. The variability in the timings is much larger on the PS than on the FS, as indicated by the large standard deviation for the PS. This may be the result of the relatively small sample for the PS (n=168 vs. n=3009 in the FS).

Two subjective items related to burden were collected on the survey (Table 9). One asked about the length of the survey. For the PS, 31.1 percent said the survey was too long, which is comparable to the result for the FS (35.8%). Respondents were also asked if they would be willing to do the survey again. For the PS, 47.5 percent agreed that they would participate again, if asked. This is also comparable to the results from the FS (52.2%).

With respect to the privacy of the interview, virtually all of the interviews were done while the respondent was at home (96.3%). This is slightly higher than for the FS (88.4%). The PS respondents reported that 18.2 percent of the surveys were done while someone else was in the room. This is comparable to the results from the FS (23.1%).

The NISVS makes an effort to reveal the topics of the survey only to the person who is selected as the respondent and agrees to do the interview. For the PS, 14.9 percent of the respondents reported that someone else in the household knew what the survey was about. This is slightly below what occurred on the FS (20.4%).

Table 8. Timing for the Feasibility Survey and Pilot Survey (minutes)

| | Pilot Survey | Feasibility Survey |
|---|---|---|
| Median | 13 | 15 |
| Mean | 20 | 18 |
| Minimum | 4 | 4 |
| 5th percentile | 6 | 7 |
| 25th percentile | 8 | 11 |
| 75th percentile | 18 | 21 |
| 95th percentile | 47 | 37 |
| Maximum | 200 | 111 |
| Standard deviation | 31 | 10 |

Table 9. Measures of Burden, Privacy and Confidentiality for the Pilot and Feasibility Surveys

| | Pilot Survey | Feasibility Survey |
|---|---|---|
| Survey was too long | 31.1 | 35.8 |
| Strongly agree or agree to making choice to participate | 47.5 | 52.2 |
| Did the survey at home | 96.3 | 88.4 |
| Someone else was in the room | 18.2 | 23.1 |
| Someone else in the household knew what the survey was about | 14.9 | 20.4 |

8. Summary

The intent of the PS was to implement the survey using the design recommended from the FS. This design employed a sequential multi-mode strategy with an emphasis on pushing respondents to the web. The first request was a postal mailing to randomly selected households asking an occupant to fill out the screening survey on the web. If that failed, respondents were asked to do it on the web or paper. For the NISVS extended survey, the first request was to do it by web. If that failed, respondents were given a choice between the web and calling in to do it by telephone. In many ways, the results from the PS were similar to those of the FS. Most respondents used the web to respond to the screener and the extended interview. A relatively small number of persons used the alternative modes. About one-fifth of the completed screeners were done by paper (63 of 285) and even fewer did the survey by telephone (4 out of 168 completes). The FS and PS were also very similar with respect to the percentage of missing data, the time it took to complete the survey, and measures of privacy and confidentiality.

However, the PS had a lower response rate than the FS (21.8% vs. 27%). We speculate this is related to when the two surveys were administered relative to the pandemic. The FS was fielded at the very beginning, May to October of 2020, while the PS was administered over a year later, when the pandemic was receding to some degree. The publicity CDC received at the beginning of the pandemic may have made the survey request stand out, especially as many individuals were spending most of their time at home. The higher screener response rate for the FS (38% vs. 50%) reflects this type of attention. The response rate for the extended NISVS survey was also lower for the PS than for the FS, but not to this degree (58.9% vs. 65.3%).

The small sample size for the PS makes it difficult to judge whether the lower response rate affected the prevalence estimates from the survey. With respect to the sample composition, the PS and FS were similar by age, race-ethnicity, and born in the US. The patterns of nonresponse and coverage relative to the national population are very similar between the PS and FS. Both overrepresented those who are married and underrepresented those with low education and Black respondents. Nominally, the PS exacerbated these deficits relative to the FS. The profiles of respondents in the two surveys on several health measures were similar as well.

The non-intimate partner prevalence estimates were close, with few, if any, of the differences being statistically significant at the ten percent level. The estimates for intimate partner related victimization were different for selected types of violence. The biggest differences were for contact sexual violence by an intimate partner and intimate partner physical violence. The PS estimates were consistently lower than those of the FS. A few of these differences were significant at the ten percent level. The estimates for stalking and psychological aggression involving an intimate partner were close to each other.

One possible reason for the lower estimates is the lower response rate. The report on the FS did find evidence that the estimates exhibited a negative bias due to non-response (i.e., estimates are too low). If this is true, then the lower response rate of the PS may have exacerbated this effect. It is also possible that the difference is related to differential measurement error. For example, it may be that the PS respondents were not paying as close attention to the questions as those in the FS. Perhaps this led to more respondents not reporting incidents related to intimate partners. However, it isn’t clear why this would be the case for the PS and not the FS. Both surveys administered essentially the same questionnaires. The indicators of burden, such as timing and item missing data, were very similar between the FS and PS. In addition, several of the intimate partner estimates were not different between the two surveys.

These observations should be taken in light of the very small sample size of the PS, which was about 5 percent of the FS and about 1 percent of what is planned for the larger NISVS implementation. The sample was too small to develop non-response adjusted weights, which could have an effect on the final results. For example, the estimates reported above got a bit closer once the selection probabilities were accounted for. The PS also did not implement a non-response follow-up (NRFU). Based on prior analyses, this would be expected to bring in individuals who report more victimization. On the other hand, the comparisons to the FS were based on the same methodology: they used those who were part of the web/CATI mode option group and did not include the NRFU portion of the collection.

The recommendations for the national implementation of the NISVS (Task 6.4 report) include several suggestions to address the questions raised by both the FS and the PS. These include adding more mailings to the protocol and maintaining the NRFU. Both strategies will bring in more respondents and increase the response rate. Another option to address response rates is to do further experimentation with a paper questionnaire. The FS did find a slightly higher response rate when using this type of design, and it also brought in more respondents with lower education. It was not recommended because the loss of information when using the paper instrument was greater than the gains in response rate. However, given the differences between the PS and FS, this result may be different if the design is tested at a different point in time.

Another recommendation is to implement protocols to slow respondents down as they answer the survey. This would be in the form of checks on how fast respondents are answering the questions and prompting those who are going too fast. The number of prompts could be limited (e.g., 2 or 3). This has been experimented with on several other surveys and has been found to be reasonably successful without incurring a drop in the response rate.6
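A speeding check of this kind could be sketched as follows. The report does not specify a rule, so the per-word reading-time threshold, the default cap of three prompts, and the function and parameter names are all hypothetical choices made for illustration.

```python
def should_prompt(seconds_on_item: float,
                  words_in_item: int,
                  prompts_shown: int,
                  max_prompts: int = 3,
                  min_seconds_per_word: float = 0.3) -> bool:
    """Return True if a 'please slow down' prompt should be shown.

    A respondent is flagged as speeding when the time spent on an item is
    below a minimum reading time (hypothetical: 0.3 seconds per word).
    No further prompts are shown once the cap has been reached.
    """
    if prompts_shown >= max_prompts:
        return False
    minimum_time = words_in_item * min_seconds_per_word
    return seconds_on_item < minimum_time


# A 20-word item answered in 2 seconds triggers a prompt...
print(should_prompt(2.0, 20, prompts_shown=0))   # True
# ...unless the respondent has already seen the maximum number of prompts.
print(should_prompt(2.0, 20, prompts_shown=3))   # False
# A 10-second answer is not treated as speeding.
print(should_prompt(10.0, 20, prompts_shown=0))  # False
```

Capping the number of prompts reflects the suggestion in the text that prompting be limited (e.g., 2 or 3 times) so that the intervention does not itself add burden.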

1 Cantor, D., Yan, T., Jodts, E., Brick, J.M., Muller, M., Steiger, D. and S. Mendoza (2021) Study of the Feasibility Survey for Redesign of the National Intimate Partner and Sexual Violence Survey. Report delivered to the Centers for Disease Control and Prevention, August 23, 2021.

3 All tests were difference of proportions using a z-test. For this report, we use a significance level of 10 percent. A higher level of significance is used than the standard five percent to account for the small sample size of the PS.

4 Kish, L. (1965). Survey Sampling. New York: John Wiley & Sons Inc.

5 Partial completes are those that met the criteria for inclusion in the estimates but did not get to the end of the survey. Completes are those that met all of the criteria and did get to the end of the survey.

6 Conrad, F. G., Couper, M. P., Tourangeau, R., Zhang, C. 2017. Reducing speeding in web surveys by providing immediate feedback. Survey Research Methods, 11(1): 45-61.

Sun, H., Caporaso, A., Cantor, D., Davis, T. and K. Blake (forthcoming). The effects of prompt interventions on web survey response rate and data quality measures. Field Methods.

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| Author | Atiba Hendrickson |

| File Created | 2023-11-10 |