Supporting Statement Part A CLEAN 10.3.24

Subminimum Wage to Competitive Integrated Employment (SWTCIE) Program Evaluation

Tracking and OMB Number: 1820-NEW

Revised: 10/3/2024

SUPPORTING STATEMENT

FOR PAPERWORK REDUCTION ACT SUBMISSION

1. Explain the circumstances that make the collection of information necessary. What is the purpose for this information collection? Identify any legal or administrative requirements that necessitate the collection. Include a citation that authorizes the collection of information. Specify the review type of the collection (new, revision, extension, reinstatement with change, reinstatement without change). If revised, briefly specify the changes. If a rulemaking is involved, list the sections with a brief description of the information collection requirement, and/or changes to sections, if applicable.

The U.S. Department of Education’s (ED) Rehabilitation Services Administration (RSA) requests clearance for new data collection activities to support the evaluation of the Disability Innovation Fund (DIF) Subminimum Wage to Competitive Integrated Employment (SWTCIE) program. The program aims to increase transitions to competitive integrated employment (CIE) among people working in, or considering, subminimum wage employment (SWE) through innovative activities that build systemwide alternatives to SWE. In recent decades, advocacy, policy, and practice have shifted toward CIE, as reflected in the Workforce Innovation and Opportunity Act and in the Consolidated Appropriations Act of 2021, which provides funding for the DIF SWTCIE program awarded in fiscal year 2022. This request covers the evaluation’s data collection activities, including surveys, administrative data, site visits, and focus groups.

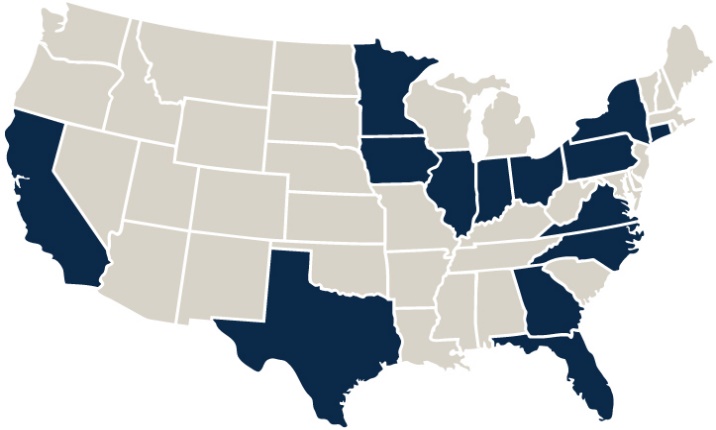

RSA’s Training and Services Program Division (TSPD) contracted with Mathematica to assist RSA in evaluating the Federal fiscal year (FFY) 2022 DIF program. In September 2022, RSA awarded five-year DIF grants (Assistance Listing Number 84.421D) to 14 State vocational rehabilitation (VR) agencies to implement SWTCIE Innovative Model Demonstration projects that decrease SWE and increase CIE among people with disabilities currently employed in or contemplating SWE (Figure 1). To achieve this purpose, the projects are creating innovative models for dissemination and replication that (1) identify strategies for addressing barriers to accessing CIE, (2) provide integrated services that support CIE, (3) support integration into the community through CIE, (4) identify and coordinate wraparound services for project participants who obtain CIE, (5) develop and disseminate evidence-based practices, and (6) provide entities holding Section 14(c) certificates with readily accessible transformative business models for adoption. Under Section 14(c) of the Fair Labor Standards Act, certificate holders may pay less than the minimum wage to workers whose disabilities impair their productivity.

Figure 1. States with vocational rehabilitation agencies that were awarded Subminimum Wage to Competitive Integrated Employment projects.

The intervention models vary across the SWTCIE projects, but all of them work with employers that hold 14(c) certificates, other employers, service providers, and additional community partners to empower transition-age youth and working-age adults with disabilities to pursue CIE. In addition, each project will use part of its funds to engage an independent evaluator to conduct a project-specific evaluation of its activities and outcomes.

This data collection is critical because the evaluation is intended to make findings actionable for practitioners and policymakers and to help ensure that DIF program outcomes can be replicated by VR agencies and their partners. The evaluation will (1) describe the implementation and costs of the federally funded program and strategies; (2) improve the identification of DIF program models and strategies in state VR programs that are intended to improve CIE outcomes for individuals with disabilities, including but not limited to accommodations and services for individuals with disabilities, use of technology, and supports; (3) connect findings across the projects to evaluate the effectiveness of particular strategies in light of how commonly each strategy is used across the projects; (4) analyze trends in CIE outcomes and potential impacts of Federal grants; and (5) examine the effectiveness and cost-effectiveness of the program and strategies that support CIE and reduce SWE.

2. Indicate how, by whom, and for what purpose the information is to be used. Except for a new collection, indicate the actual use the agency has made of the information received from the current collection.

The SWTCIE national evaluation intends to answer a range of research questions and will conduct the following analyses to understand the projects’ implementation and effects:

Participation analysis: The participation analysis will help us understand the people, employers, and service providers that take part in the SWTCIE projects and provide context for the findings of the SWTCIE national evaluation. The participation analysis will describe participants’ characteristics, including whether they represent populations historically underserved by VR agencies and people with high support needs, and compare them with other populations with disabilities. For employers and service providers, the participation analysis will describe the types of partnerships and their roles in the projects.

Implementation analysis: The implementation analysis will explore participants’ experiences and service use, SWTCIE project operations, VR agency engagement with organizations, and the efforts projects make to change systems. The analysis will describe how projects deliver core components of services to participants and will distinguish which activities have been accomplished with project funding, which activities build on prior initiatives, which activities have occurred in parallel to SWTCIE implementation, and how local and state contexts affected implementation.

Outcome analysis: The outcomes analysis will describe the outcomes of participants, employers, providers, and systems potentially affected by the SWTCIE projects. The projects intend to affect a range of outcomes, such as participants’ employment, earnings, health, and well-being as well as VR policies and practices.

Impact analysis: The impact analysis will generate evidence about the effects of the SWTCIE projects on key demonstration-related outcomes. To understand whether any observed changes in these outcomes are attributable to the projects, we will compare the outcomes of participants with those of a comparison group whose outcomes represent what would have happened to the participants had they not enrolled in a project.

Benefit-cost analysis: Using data collected throughout the course of the national evaluation, the benefit-cost analysis will show whether the benefits of each project were large enough to justify its costs. To provide a comprehensive accounting of the consequences of each project, we will report benefits and costs from four perspectives: participants, the VR agency, the state (everything associated with the state except VR), and the federal government.
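The comparison behind the impact analysis above can be pictured as a difference in mean outcomes between participants and the comparison group. In that picture, the comparison group's mean stands in for what participants would have experienced without a project. The sketch below is a simplified illustration only. It assumes a single numeric outcome (here a hypothetical 0/1 indicator of CIE), and the function name `estimate_impact` and the sample values are invented for this example. The evaluation's actual estimator may add covariate adjustment, matching, or other refinements that this sketch omits.

```python
from statistics import mean
from typing import Sequence


def estimate_impact(participant_outcomes: Sequence[float],
                    comparison_outcomes: Sequence[float]) -> float:
    """Hypothetical helper: participant mean minus comparison-group mean.

    The comparison-group mean approximates the counterfactual, that is,
    what participants' outcomes would have been without the project.
    """
    if not participant_outcomes or not comparison_outcomes:
        raise ValueError("both groups need at least one observation")
    return mean(participant_outcomes) - mean(comparison_outcomes)


# Hypothetical outcome: 1 = employed in CIE, 0 = not employed in CIE
participants = [1, 0, 1, 1, 0]
comparison = [0, 0, 1, 0, 0]
print(estimate_impact(participants, comparison))  # roughly a 0.4 (40-point) difference
```

A raw difference like this is credible only if the comparison group resembles participants on characteristics that affect the outcome. That is why the text stresses choosing a comparison group whose outcomes represent what would have happened without the projects.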

The SWTCIE national evaluation team will collect three types of data (qualitative, survey, and administrative) to answer the evaluation research questions and inform our understanding of the projects’ context and outcomes. We will collect qualitative data from grantee meetings, project documents, and site visits. Qualitative data will provide rich insights into SWTCIE project implementation activities that we cannot glean from other data sources. To better understand the experiences of those directly involved with the projects and to assess project outcomes, we will collect survey data from participants and from participants’ parents and guardians. Finally, we will leverage a range of administrative data from the projects and RSA (project staff records, RSA Case Service Report (RSA-911) data, project administrative data, project status charts, and cost data). These administrative data sources will provide information on the backgrounds of SWTCIE project staff, the characteristics and activities of project participants, services, outcomes, and project costs. Table A.1 describes the data collection activities and timelines.

Table A.1. Major data collection activities for the SWTCIE national evaluation, by year

| Data | Data type (primary or administrative) | Source | Mode of data collection | Use(s) in study | Timing |
|---|---|---|---|---|---|
| Qualitative Data | | | | | |
| Project documents | Administrative | Project staff | Electronic | Collect project applications, initial and revised logic models, recruitment flyers, success stories, training manuals, and other project materials to understand project implementation | At least quarterly in Project Years 2–4 and the first two quarters of Project Year 5 |
| On-site observations | Primary | Project staff, 14(c) certificate holder and provider staff, project participants | In-person data collection | Meet with project staff and participants to capture the varied project-specific interactions such as participant enrollment and employer engagement events | Project Years 3 and 5 |
| Interviews and focus groups | Primary | Project staff, 14(c) certificate holder and provider staff, project participants | In-person data collection | Meet with participants, project staff, and 14(c) certificate holder and provider staff to obtain perspectives on implementation | Project Years 3 and 5 |
| Survey Data | | | | | |
| Participant baseline survey | Primary | Project participants | Electronic | Capture key baseline indicators for project participants | On a rolling basis in Project Years 3–5 |
| Participant follow-up survey | Primary | Project participants | Electronic | Capture the experiences of participants | Q1–2 of Project Year 5 |
| Parent and guardian survey | Primary | Parents and guardians of project participants | Electronic | Capture the perspectives of the parents and guardians of participants regarding the project and how it has affected them and participants | Q1–2 of Project Year 5 |
| Administrative Data | | | | | |
| Project staff records | Administrative | Project staff | Electronic | Improve our understanding of project infrastructure such as staffing, training, and collaboration across organizations | Q1 of Project Years 3–5 |
| Project administrative data | Administrative | Project staff | Electronic | Capture key information on project administration, such as participant characteristics, project milestones, services used, costs, and outcomes | Q4 of Project Years 2–5 |
| Project status charts | Administrative | Project staff | Electronic | Collect summary statistics about project participants and outcomes | Q2 of Project Years 2–5 |
| RSA-911 data | Administrative | RSA | Electronic | Provide a comprehensive description of service use and project, employment, and educational outcomes for participants and comparison groups | Quarterly between Q3 of Project Year 2 and Q3 of Project Year 5 |
| Project cost data | Administrative | Project staff | Electronic | Document services, training, and technical assistance offered through the projects | Q4 of Project Year 4 and Q1 of Project Year 5 |

3. Describe whether, and to what extent, the collection of information involves the use of automated, electronic, mechanical, or other technological collection techniques or forms of information technology, e.g., permitting electronic submission of responses, and the basis for the decision of adopting this means of collection. Please identify systems or websites used to electronically collect this information. Also describe any consideration given to using technology to reduce burden. If there is an increase or decrease in burden related to using technology (e.g., using an electronic form, system or website from paper), please explain in number 12.

The data collection plan is designed to obtain information efficiently and to minimize respondent burden, including by using technology when appropriate. Surveys will be designed and administered in Voxco survey software, which complies with Section 508 of the Rehabilitation Act; the Section 508 standards incorporate the Web Content Accessibility Guidelines (WCAG) 2.0. The self-administered surveys will be online and can be completed at the respondent’s convenience on multiple devices, including a smartphone, tablet, or desktop PC. The survey software detects the type of device being used and reorganizes and reformats survey elements for that device, so respondents do not have to resize their screens to see the content. The surveys will be designed with a high degree of visual appeal, an intuitive flow, ease of use, readily apparent functions, and few text instructions. Respondents will have the option to save their progress and continue at a later time. Additionally, the surveys will use drop-down response categories or radio-button choice lists whenever appropriate so respondents can quickly select from a list. Dynamic questions, automated skip patterns, and choice restriction logic will ensure that respondents see only the questions that apply to them (including questions determined by answers provided earlier in the survey) and that their answers are restricted to those intended by the question.
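The skip-pattern and choice-restriction logic described above can be pictured with a small, generic model. Each question carries an optional display condition that is evaluated against earlier answers and a fixed list of allowed responses. This is a minimal sketch, not Voxco's API or configuration format. The `Question` class, the question IDs `EMPLOYED` and `WAGE_TYPE`, and their choices are hypothetical and do not reproduce actual survey items.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

Answers = Dict[str, str]


@dataclass
class Question:
    qid: str            # hypothetical question identifier
    choices: List[str]  # allowed responses (radio buttons or drop-down)
    # Skip condition evaluated against earlier answers; None means always shown.
    show_if: Optional[Callable[[Answers], bool]] = None


def visible_questions(questions: List[Question], answers: Answers) -> List[str]:
    """Skip pattern: keep only questions whose condition holds for earlier answers."""
    return [q.qid for q in questions if q.show_if is None or q.show_if(answers)]


def record_answer(question: Question, answer: str, answers: Answers) -> None:
    """Choice restriction: reject any response the question does not offer."""
    if answer not in question.choices:
        raise ValueError(f"{answer!r} is not a valid choice for {question.qid}")
    answers[question.qid] = answer


# Hypothetical two-item instrument: the wage item appears only for employed respondents.
survey = [
    Question("EMPLOYED", ["Yes", "No"]),
    Question("WAGE_TYPE", ["At or above minimum wage", "Below minimum wage"],
             show_if=lambda a: a.get("EMPLOYED") == "Yes"),
]
answers: Answers = {}
record_answer(survey[0], "No", answers)
print(visible_questions(survey, answers))  # ['EMPLOYED']
```

Keeping the display condition on each question, rather than hard-coding jumps between question numbers, means that adding or reordering items does not break the routing for later questions.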

4. Describe efforts to identify duplication. Show specifically why any similar information already available cannot be used or modified for use for the purposes described in Item 2 above.

Information that is already available from alternative data sources will not be collected again for this evaluation. Information obtained from the qualitative data collection and survey data collections is not available elsewhere. The evaluation team will design all data collection efforts to gather essential data and minimize burden on projects and participants.

5. If the collection of information impacts small businesses or other small entities, describe any methods used to minimize burden. A small entity may be (1) a small business which is deemed to be one that is independently owned and operated and that is not dominant in its field of operation; (2) a small organization that is any not-for-profit enterprise that is independently owned and operated and is not dominant in its field; or (3) a small government jurisdiction, which is a government of a city, county, town, township, school district, or special district with a population of less than 50,000.

The study team expects the burden on small businesses or other small entities to be minimal. Some of the 14(c) certificate holders participating in the site visits may be small entities as defined by OMB Form 83-1. The information requested has been held to the minimum required for the intended use. The semi-structured interviews with 14(c) certificate holders will be scheduled in collaboration with program staff to minimize disruption of daily activities. The site visit team will conduct group discussions to the extent feasible, and no more than 60 minutes will be required of any one individual, with two exceptions: focus group participants, whose sessions will last 90 minutes, and staff who provide project cost data, which may take up to 16 hours.

6. Describe the consequences to Federal program or policy activities if the collection is not conducted or is conducted less frequently, as well as any technical or legal obstacles to reducing burden.

The information collection is necessary for RSA to help VR agencies, disability service providers, and employers develop effective programs that decrease SWE and increase CIE among people with disabilities currently employed in or contemplating SWE. If the data collection is not conducted, RSA will not have up-to-date data to guide programmatic decisions about how to identify strategies for addressing barriers to accessing CIE, provide integrated services that support CIE, support integration into the community through CIE, identify and coordinate wraparound services for project participants who obtain CIE, develop and disseminate evidence-based practices, and provide entities holding Section 14(c) certificates with readily accessible transformative business models for adoption.

There are no known technical or legal obstacles to reducing the burden.

7. Explain any special circumstances that would cause an information collection to be conducted in a manner:

requiring respondents to report information to the agency more often than quarterly;

requiring respondents to prepare a written response to a collection of information in fewer than 30 days after receipt of it;

requiring respondents to submit more than an original and two copies of any document;

requiring respondents to retain records, other than health, medical, government contract, grant-in-aid, or tax records for more than three years;

in connection with a statistical survey, that is not designed to produce valid and reliable results that can be generalized to the universe of study;

requiring the use of a statistical data classification that has not been reviewed and approved by OMB;

that includes a pledge of confidentiality that is not supported by authority established in statute or regulation, that is not supported by disclosure and data security policies that are consistent with the pledge, or that unnecessarily impedes sharing of data with other agencies for compatible confidential use; or

requiring respondents to submit proprietary trade secrets, or other confidential information unless the agency can demonstrate that it has instituted procedures to protect the information’s confidentiality to the extent permitted by law.

In March 2024, the Office of Management and Budget (OMB) announced revisions to Statistical Policy Directive No. 15: Standards for Maintaining, Collecting, and Presenting Federal Data on Race and Ethnicity (SPD 15) and published the revised SPD 15 standard in the Federal Register (89 FR 22182). The present ICR contains no changes to the race and ethnicity items and therefore remains compliant with the 1997 SPD 15 standard. A request to revise this information collection to address the new federal statistical standard for race and ethnicity items will be submitted to OMB by a date to be determined. The Department is currently developing an action plan for compliance with the revised SPD 15 standards, which will fully take effect on March 28, 2029. Early discussions suggest that implementing these standards will be particularly complex and delicate in data collections where race and ethnicity data are both reported by individuals about themselves and provided by third parties that report aggregate data to the Department on the individuals they serve and represent (e.g., state and local education agencies, institutions of higher education).

8. As applicable, state that the Department has published the 60- and 30-day Federal Register notices as required by 5 CFR 1320.8(d), soliciting comments on the information collection prior to submission to OMB.

Include a citation for the 60-day comment period (e.g., Vol. 84 FR ##### and the date of publication). Summarize public comments received in response to the 60-day notice and describe actions taken by the agency in response to these comments. Specifically address comments received on cost and hour burden. If only non-substantive comments are provided, please provide a statement to that effect and that it did not relate or warrant any changes to this information collection request. In your comments, please also indicate the number of public comments received.

For the 30-day notice, indicate that a notice will be published.

Describe efforts to consult with persons outside the agency to obtain their views on the availability of data, frequency of collection, the clarity of instruction and record keeping, disclosure, or reporting format (if any), and on the data elements to be recorded, disclosed, or reported.

Consultation with representatives of those from whom information is to be obtained or those who must compile records should occur at least once every 3 years – even if the collection of information activity is the same as in prior periods. There may be circumstances that may preclude consultation in a specific situation. These circumstances should be explained.

A.8.1. Federal Register announcement

A 60-day notice to solicit public comments was published in the Federal Register, Volume 89, No. 189, page 38112, on May 7, 2024. One public comment was received; it was non-substantive and did not require a response. A 30-day notice will be published to solicit additional public comments.

A.8.2. Consultations outside the agency

In formulating this evaluation design, the study team sought input from a technical working group on May 23 and 24, 2023. The group’s input helps ensure that the study is of the highest quality and that its findings are useful to Federal policymakers. Table A.2 lists the members of the technical working group, their affiliations, and their relevant expertise.

Table A.2. Technical working group members, their affiliation, and relevant expertise

| Member category | Name | Affiliation | Areas of expertise |
|---|---|---|---|
| Researcher | Heinrich Hock | Formerly at American Institutes for Research; currently at Westat | Analysis methods; evaluation of disability-related employment interventions |
| Researcher | Tim Tansey | University of Wisconsin | Multisite evaluations and technical assistance |
| Researcher | John Butterworth | University of Massachusetts-Boston, Institute for Community Inclusion | Subminimum wages; employment for people with intellectual and developmental disabilities |
| VR expert | Steve Wooderson | Council of State Administrators of Vocational Rehabilitation | National VR policy; VR operations across agencies |
| Subminimum employment expert | Brian DeAtley | SourceAmerica | Service delivery issues; implementing changes in services |
| SWTCIE representative | Amanda Jensen-Stahl | Minnesota VR agency | SWTCIE project operations |
| SWTCIE representative | Chip Kenney | San Diego State University | Evaluation and methods |
| People with lived experience | Bill Stumpf | Person with a disability or a family member of a person with a disability | Direct experience with SWE |
| VR expert | Charyl Yarborough | New Jersey Department of Labor | Oversight of service providers |
| Subminimum employment expert | Henry Claypool | American Association of People with Disabilities | National disability advocacy and policy |

SWTCIE = Subminimum Wage to Competitive Integrated Employment; SWE = subminimum wage employment; VR = vocational rehabilitation

There are no unresolved issues.

9. Explain any decision to provide any payment or gift to respondents, other than remuneration of contractors or grantees with meaningful justification.

To encourage survey participation and acknowledge respondent burden, a $30 e-gift card will be provided to those who complete the participant follow-up survey and the parent and guardian survey. Interview and focus group participants will also receive a $30 e-gift card.

All incentives will be delivered as Tango Cards, which allow respondents to select the vendor gift card of their choice. The study team will create a personalized, project-specific email template that includes a thank-you message, instructions, a link for redeeming the e-gift card, a Tango help desk phone number, and an email address and phone number for respondents who need help or have not received their gift cards in a timely manner. After choosing how to redeem the e-gift card, the respondent will receive a second email from the chosen vendor (or vendors) containing the actual gift card, which might include a PIN, a printable bar-coded gift card image, or both. For respondents who lose or cannot access the gift card redemption links, the team can retrieve and forward the links.

10. Describe any assurance of confidentiality provided to respondents and the basis for the assurance in statute, regulation, or agency policy. If personally identifiable information (PII) is being collected, a Privacy Act statement should be included on the instrument. Please provide a citation for the Systems of Record Notice and the date a Privacy Impact Assessment was completed as indicated on the IC Data Form. A confidentiality statement with a legal citation that authorizes the pledge of confidentiality should be provided.1 If the collection is subject to the Privacy Act, the Privacy Act statement is deemed sufficient with respect to confidentiality. If there is no expectation of confidentiality, simply state that the Department makes no pledge about the confidentiality of the data. If no PII will be collected, state that no assurance of confidentiality is provided to respondents. If the Paperwork Burden Statement is not included physically on a form, you may include it here. Please ensure that your response per respondent matches the estimate provided in number 12.

A.10.1. Personally identifiable information

The information provided by or about participants during the data collection activities, including the participant baseline and follow-up surveys, will contain participant-level personally identifiable information (PII). This includes the names and addresses of participants and of one additional person whom the study team may contact if it is unable to reach a participant to complete the follow-up survey. This contact information is needed to ensure that the study team can reach participants to complete their follow-up survey.

The research team will protect the confidentiality of all data collected for the study and will use it for research purposes only. Survey data will be collected by Mathematica’s partner, M. Davis and Company, Inc. (MDAC). All data collection instruments contain a privacy statement as well.

Surveys will be programmed in Voxco, a comprehensive multimodal survey platform. All survey outreach will stress the importance of the potential respondent’s participation and the confidentiality of their responses. Voxco has robust systems in place to manage the security of PII. The system automatically designates certain data points as PII (for example, phone numbers and email addresses), and any user-created variable can be manually flagged as PII. Access to data flagged as PII is restricted to users explicitly granted such access. The system logs each time a user attempts to view, edit, print, or export PII, regardless of whether the user has been granted access. If a user without PII access exports the data, data flagged as PII are excluded from the export. The web survey data will be stored on a platform authorized under the Federal Risk and Authorization Management Program (FedRAMP) and transferred via a secure transfer site to Mathematica’s secure restricted folders for analysis. All electronic data will be stored in Mathematica’s secure restricted folders, to which only approved project team members have access. All respondent materials, including contact emails, letters, and the data collection instruments, contain a notice of confidentiality.
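The PII controls described above combine three behaviors. Fields can be flagged as PII, exports drop flagged fields for users without access, and every export attempt is logged whether or not access was granted. The sketch below illustrates how those behaviors fit together. It is a generic illustration, not Voxco's implementation or API. The class `PiiGuardedStore`, the user names, and the sample record are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Set, Tuple

Record = Dict[str, str]


@dataclass
class PiiGuardedStore:
    """Hypothetical store illustrating PII flagging, access control, and audit logging."""
    records: List[Record]
    pii_fields: Set[str]   # fields flagged as PII, automatically or by a user
    pii_users: Set[str]    # users explicitly granted access to PII
    audit_log: List[Tuple[str, str, str, bool]] = field(default_factory=list)

    def flag_as_pii(self, field_name: str) -> None:
        """Manually flag a user-created variable as PII."""
        self.pii_fields.add(field_name)

    def export(self, user: str) -> List[Record]:
        """Export all records, dropping PII fields for users without PII access."""
        allowed = user in self.pii_users
        # Log the attempt whether or not the user may see PII.
        self.audit_log.append(
            (datetime.now(timezone.utc).isoformat(), user, "export", allowed))
        if allowed:
            return [dict(r) for r in self.records]
        return [{k: v for k, v in r.items() if k not in self.pii_fields}
                for r in self.records]


store = PiiGuardedStore(
    records=[{"id": "A01", "email": "resp@example.org", "q1": "Yes", "nickname": "Sam"}],
    pii_fields={"email"},                # e.g., an automatically flagged contact field
    pii_users={"analyst_with_access"},
)
store.flag_as_pii("nickname")            # a manually flagged user-created variable
print(store.export("analyst_without_access"))  # [{'id': 'A01', 'q1': 'Yes'}]
```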

All members of the study team with data access will be trained and certified on the importance of confidentiality and data security. MDAC and Mathematica routinely use the following safeguards to maintain data confidentiality and will apply them consistently throughout this study:

All employees must sign a confidentiality pledge that emphasizes the importance of confidentiality and describes employees’ obligations to maintain it.

Personally identifiable information is maintained on separate forms and files, which are linked only by random, study-specific identification numbers.

Access to hard-copy documents is strictly limited. Documents are stored in locked files and cabinets. Discarded materials are shredded.

Access to computer data files is protected by secure usernames and passwords, which are available only to specific users who need access to the data and who have the appropriate U.S. Department of Education security clearances.

Sensitive data are encrypted and stored on removable storage devices that are kept physically secure when not in use.
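The safeguard of keeping PII in separate files that are linked only by random, study-specific identification numbers can be sketched as follows. Each record is split into a contact file and an analysis file, and both files share a randomly generated ID that reveals nothing about the person. This is a minimal sketch under stated assumptions. The function `split_pii`, the field names, and the 8-character hex ID format are hypothetical choices for illustration, not the study team's actual procedures.

```python
import secrets
from typing import Dict, List, Tuple

Record = Dict[str, str]


def split_pii(records: List[Record],
              pii_fields: Tuple[str, ...]) -> Tuple[List[Record], List[Record]]:
    """Hypothetical helper: separate PII from analysis data, linked only by a random study ID."""
    pii_file: List[Record] = []
    analysis_file: List[Record] = []
    used_ids = set()
    for record in records:
        # A random ID carries no information about the person (unlike, say, a hash of a name).
        study_id = secrets.token_hex(4)
        while study_id in used_ids:  # regenerate on the rare collision
            study_id = secrets.token_hex(4)
        used_ids.add(study_id)
        pii_file.append({"study_id": study_id,
                         **{k: v for k, v in record.items() if k in pii_fields}})
        analysis_file.append({"study_id": study_id,
                              **{k: v for k, v in record.items() if k not in pii_fields}})
    return pii_file, analysis_file


# Hypothetical record; field names and values are illustrative only.
contacts, analysis = split_pii(
    [{"name": "Example Person", "address": "1 Example Rd", "employed_cie": "1"}],
    pii_fields=("name", "address"),
)
```

In this sketch the two returned lists would be stored separately, for example with the contact file kept under tighter access restrictions. That way, analysts working with the analysis file see only study IDs.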

The study team’s standard for maintaining confidentiality includes training staff on the meaning of confidentiality, particularly as it relates to handling requests for information, and providing assurance to respondents about the protection of their responses. It also includes built-in safeguards on status monitoring and receipt control systems. In addition, all study staff who have access to confidential data must obtain security clearance from the U.S. Department of Education, which requires completing personnel security forms, providing fingerprints, and undergoing a background check.

During data analysis, all names are replaced with identification numbers. All study reports will present data in aggregate form only; no survey or interview participant will be identifiable from the data they provided. Any quotations used in public reporting will be edited to ensure that the respondent’s identity cannot be ascertained.

11. Provide additional justification for any questions of a sensitive nature, such as sexual behavior and attitudes, religious beliefs, and other matters that are commonly considered private. The justification should include the reasons why the agency considers the questions necessary, the specific uses to be made of the information, the explanation to be given to persons from whom the information is requested, and any steps to be taken to obtain their consent.

No questions of a sensitive nature will be included in this study.

12. Provide estimates of the hour burden for this current information collection request. The statement should:

Provide an explanation of how the burden was estimated, including identification of burden type: recordkeeping, reporting or third-party disclosure. Address changes in burden due to the use of technology (if applicable). Generally, estimates should not include burden hours for customary and usual business practices.

Please do not include increases in burden and respondents numerically in this table. Explain these changes in number 15.

Indicate the number of respondents by affected public type (Federal government, individuals or households, private sector – businesses or other for-profit, private sector – not-for-profit institutions, farms, state, local or tribal governments), frequency of response, annual hour burden. Unless directed to do so, agencies should not conduct special surveys to obtain information on which to base hour burden estimates. Consultation with a sample (fewer than 10) of potential respondents is desirable.

If this request for approval covers more than one form, provide separate hour burden estimates for each form and aggregate the hour burden in the table below.

Provide estimates of annualized cost to respondents of the hour burdens for collections of information, identifying and using appropriate wage rate categories. Use this site to research the appropriate wage rate. The cost of contracting out or paying outside parties for information collection activities should not be included here. Instead, this cost should be included in Item 14. If there is no cost to respondents, indicate by entering 0 in the chart below and/or provide a statement.

Provide a descriptive narrative here in addition to completing the table below with burden hour estimates.

The total burden for these data collection activities is 6,672 hours (6,671.75 before rounding). The total annual respondent burden for the data collection effort covered by this clearance request is 2,224 hours (total burden of 6,672 divided by 3 study years). The number of annual responses is 4,866 (14,598 total responses divided by 3 study years).

Table A.3 provides an estimate of burden for the data collection activities included in the current request, broken down by instrument and respondent. In addition, the table presents the estimated annual cost to respondents of the time burden for each data collection instrument. These estimates are based on our prior experience collecting data from participants, grantee directors, and state offices, along with the actual time recorded during pretesting of each instrument.

Table A.3. Estimate of respondent time and cost burden by year for the SWTCIE national evaluation data collection activities

| Instrument | Number of Respondents (total over request period) | Number of Responses per Respondent (total over request period) | Average Burden per Response (in hours) | Total Burden (in hours) | Annual Burden (in hours) | Average Hourly Wage | Total Annual Cost |
|---|---|---|---|---|---|---|---|
| Qualitative data collection | | | | | | | |
| Semi-structured interviews | | | | | | | |
| Participants | 112 | 2 | 0.75 | 168.00 | 56.00 | $7.25 a | $406.00 |
| Parents and guardians | 112 | 2 | 0.75 | 168.00 | 56.00 | $29.76 b | $1,666.56 |
| Providers | 84 | 2 | 1 | 168.00 | 56.00 | $38.13 c | $2,135.28 |
| Focus groups with providers, partners, and families | 84 | 2 | 1.5 | 252.00 | 84.00 | $38.13 c | $3,202.92 |
| Survey data collection | | | | | | | |
| Participant baseline survey | 7,500 | 1 | 0.25 | 1,875.00 | 625.00 | $7.25 a | $4,531.25 |
| Participant follow-up survey | 2,625 d | 1 | 0.50 | 1,312.50 | 437.50 | $7.25 a | $3,171.88 |
| Parent and guardian follow-up survey | 2,625 d | 1 | 0.25 | 656.25 | 218.75 | $29.76 b | $6,510.00 |
| Administrative data collection | | | | | | | |
| Staff records | 56 | 3 | 2 | 336.00 | 112.00 | $38.13 c | $4,270.56 |
| Project administrative data | 140 | 6 | 1 | 840.00 | 280.00 | $38.13 c | $10,676.40 |
| Project cost data | 28 | 2 | 16 | 896.00 | 298.67 | $38.13 c | $11,388.29 |
| Total | | | | 6,671.75 | 2,224 | | $47,959.01 |

a We obtained the Federal minimum wage rate from https://dol.gov/general/topic/wages/minimumwage and state minimum wage rates from https://www.dol.gov/agencies/whd/minimum-wage/state.

b We obtained average hourly wage rates for All Occupations (00-0000) from https://www.bls.gov/oes/current/oes_nat.htm#11-0000.

c We obtained average hourly wage rates for Social and Community Service Managers (11-9151), within Management Occupations (11-0000), from https://www.bls.gov/oes/current/oes119151.htm.

d We assume that 50% of the baseline surveys will be completed by a proxy, who will then complete the parent/guardian follow-up survey.
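The arithmetic behind Table A.3 can be checked with a short script. As a minimal sketch, assuming the 3-year annualization stated above and the wage rates in footnotes a through c, each row's total burden is respondents × responses per respondent × hours per response, its annual burden is that total divided by 3, and its annual cost is the annual burden times the hourly wage. The names `Row`, `STUDY_YEARS`, and the wage constants are illustrative and not part of any RSA or Mathematica system.

```python
from dataclasses import dataclass

STUDY_YEARS = 3  # burden is annualized over 3 study years, as stated in the narrative

# Hourly wage rates from Table A.3 footnotes a, b, and c.
MIN_WAGE, ALL_OCCUPATIONS, SOCIAL_SERVICE_MANAGER = 7.25, 29.76, 38.13


@dataclass
class Row:
    instrument: str
    respondents: int
    responses_each: int
    hours_per_response: float
    hourly_wage: float

    @property
    def responses(self) -> int:
        return self.respondents * self.responses_each

    @property
    def total_hours(self) -> float:
        return self.responses * self.hours_per_response

    @property
    def annual_hours(self) -> float:
        return self.total_hours / STUDY_YEARS

    @property
    def annual_cost(self) -> float:
        # Uses unrounded annual hours.
        return self.annual_hours * self.hourly_wage


ROWS = [
    Row("Interviews: participants", 112, 2, 0.75, MIN_WAGE),
    Row("Interviews: parents and guardians", 112, 2, 0.75, ALL_OCCUPATIONS),
    Row("Interviews: providers", 84, 2, 1.0, SOCIAL_SERVICE_MANAGER),
    Row("Focus groups", 84, 2, 1.5, SOCIAL_SERVICE_MANAGER),
    Row("Participant baseline survey", 7500, 1, 0.25, MIN_WAGE),
    Row("Participant follow-up survey", 2625, 1, 0.50, MIN_WAGE),
    Row("Parent and guardian follow-up survey", 2625, 1, 0.25, ALL_OCCUPATIONS),
    Row("Staff records", 56, 3, 2.0, SOCIAL_SERVICE_MANAGER),
    Row("Project administrative data", 140, 6, 1.0, SOCIAL_SERVICE_MANAGER),
    Row("Project cost data", 28, 2, 16.0, SOCIAL_SERVICE_MANAGER),
]

total_hours = sum(r.total_hours for r in ROWS)
annual_hours = total_hours / STUDY_YEARS
annual_responses = sum(r.responses for r in ROWS) / STUDY_YEARS
annual_cost = sum(r.annual_cost for r in ROWS)

if __name__ == "__main__":
    for r in ROWS:
        print(f"{r.instrument:<40} {r.total_hours:>9.2f} {r.annual_hours:>8.2f} ${r.annual_cost:>10,.2f}")
    print(f"Total burden: {total_hours:,.2f} hours; annual burden: {annual_hours:,.0f} hours")
    print(f"Annual responses: {annual_responses:,.0f}")
    print(f"Total annual cost: ${annual_cost:,.2f}")
```

Summing the unrounded annual cost of each row reproduces the table's $47,959.01 total to within a fraction of a cent. On that basis the project cost data row comes to about $11,388.16; the $11,388.29 shown in the table results from multiplying the rounded 298.67 annual hours by the wage.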

Please ensure the annual total burden, respondents and response match those entered in IC Data Parts 1 and 2, and the response per respondent matches the Paperwork Burden Statement that must be included on all forms.

Provide an estimate of the total annual cost burden to respondents or record keepers resulting from the collection of information. (Do not include the cost of any hour burden shown in Items 12 and 14.)

The cost estimate should be split into two components: (a) a total capital and start-up cost component (annualized over its expected useful life); and (b) a total operation and maintenance and purchase of services component. The estimates should take into account costs associated with generating, maintaining, and disclosing or providing the information. Include descriptions of methods used to estimate major cost factors including system and technology acquisition, expected useful life of capital equipment, the discount rate(s), and the time period over which costs will be incurred. Capital and start-up costs include, among other items, preparations for collecting information such as purchasing computers and software; monitoring, sampling, drilling and testing equipment; and acquiring and maintaining record storage facilities.

If cost estimates are expected to vary widely, agencies should present ranges of cost burdens and explain the reasons for the variance. The cost of contracting out information collection services should be a part of this cost burden estimate. In developing cost burden estimates, agencies may consult with a sample of respondents (fewer than 10), use the 60-day pre-OMB submission public comment process and use existing economic or regulatory impact analysis associated with the rulemaking containing the information collection, as appropriate.

Generally, estimates should not include purchases of equipment or services, or portions thereof, made: (1) prior to October 1, 1995, (2) to achieve regulatory compliance with requirements not associated with the information collection, (3) for reasons other than to provide information or keep records for the government or (4) as part of customary and usual business or private practices. Also, these estimates should not include the hourly costs (i.e., the monetization of the hours) captured above in Item 12.

Total Annualized Capital/Startup Cost: $0

Total Annual Costs (O&M): $0

Total Annualized Costs Requested: $0

There are no direct or start-up costs to respondents associated with the proposed primary data collection.

Provide estimates of annualized cost to the Federal government. Also, provide a description of the method used to estimate cost, which should include quantification of hours, operational expenses (such as equipment, overhead, printing, and support staff), and any other expense that would not have been incurred without this collection of information. Agencies also may aggregate cost estimates from Items 12, 13, and 14 in a single table.

The total cost to the Federal government for this study, which includes the surveys and administrative data collection in this ICR as well as analysis and reporting, is $4,030,695. The average estimated annual cost over the 60-month study is $806,139.

The total cost to the Federal government for this study is $9,988,901. This cost includes the costs incurred for designing and administering all collection instruments, processing and analyzing the data, and preparing reports. The average annual cost over the 5-year study is $1,997,780.

Explain the reasons for any program changes or adjustments. Generally, adjustments in burden result from re-estimating burden and/or from economic phenomenon outside of an agency’s control (e.g., correcting a burden estimate or an organic increase in the size of the reporting universe). Program changes result from a deliberate action that materially changes a collection of information and generally are result of new statute or an agency action (e.g., changing a form, revising regulations, redefining the respondent universe, etc.). Burden changes should be disaggregated by type of change (i.e., adjustment, program change due to new statute, and/or program change due to agency discretion), type of collection (new, revision, extension, reinstatement with change, reinstatement without change) and include totals for changes in burden hours, responses and costs (if applicable).

Provide a descriptive narrative for the reasons of any change in addition to completing the table with the burden hour change(s) here.

| | Program Change Due to New Statute | Program Change Due to Agency Discretion | Change Due to Adjustment in Agency Estimate |
|---|---|---|---|
| Total Burden | 2,005 | | 219 |
| Total Responses | | 4,866 | |
| Total Costs (if applicable) | | | |

This is a request for a new collection of information.

For collections of information whose results will be published, outline plans for tabulation and publication. Address any complex analytical techniques that will be used. Provide the time schedule for the entire project, including beginning and ending dates of the collection of information, completion of report, publication dates, and other actions.

The study team will use descriptive, comparative, and qualitative methods to address the study’s research questions. The SWTCIE national evaluation will conduct five types of analysis: a participation analysis, an implementation analysis, an outcomes analysis, an impact analysis, and a benefit-cost analysis. Each analysis is described below.

Participation analysis. The participation analysis will describe participants’ characteristics, including whether they represent populations historically underserved by VR agencies and people with high support needs, and compare them with other populations with disabilities. It will also analyze participants’ enrollment progress to determine whether the projects met their stated enrollment goals. For employers and service providers, the participation analysis will describe the types of partnerships and the partners’ roles in the projects. Finally, the participation analysis will examine employer and provider recruitment and engagement strategies across projects and highlight lessons and best practices for enrolling 14(c) certificate holders, other employers, and service providers.

Implementation analysis. The implementation analysis will explore participants’ experiences and service use, SWTCIE project operations, engagement with other organizations, and system change efforts across the projects. The analysis will document how projects deliver core service components to participants; engage organizations such as 14(c) certificate holders, other employers, and service providers; and advance system change efforts. The implementation analysis will also examine project structure, including partners, staff, and design. Finally, it will provide insights that RSA can share with project staff as formative feedback to inform potential midcourse corrections in implementation.

Outcomes analysis. The outcomes analysis will describe participant, employer, provider, and system outcomes potentially affected by the SWTCIE projects. The projects intend to affect a wide range of outcomes across categories, including employment and earnings, health and well-being, attitudes and expectations, and VR policies and practices. The SWTCIE national evaluation will describe outcomes potentially affected by the projects regardless of whether a valid comparison group is available to estimate project impacts.

Impact analysis. The impact analysis will generate evidence about the effects of the SWTCIE projects on key demonstration-related outcomes. To determine whether observed changes in these outcomes are attributable to the projects (rather than to other factors, such as the economic climate), we will compare the outcomes of participants with those of a comparison group whose outcomes represent what would have happened to the participants had they not enrolled in a project.
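The simplest form of this comparison is the difference in mean outcomes between participants and the comparison group. The sketch below is illustrative only: it assumes two lists holding a single outcome (for example, earnings) and reports the difference with its unequal-variance (Welch) standard error. The function name `impact_estimate` and the sample values are hypothetical, and the evaluation's actual estimator (for example, regression adjustment for baseline characteristics or matching) is not specified here and may differ.

```python
from math import sqrt
from statistics import mean, variance


def impact_estimate(participants: list[float], comparison: list[float]) -> tuple[float, float]:
    """Return (difference in mean outcomes, Welch standard error of that difference).

    Illustrative only: assumes independent groups, each with at least two observations.
    """
    diff = mean(participants) - mean(comparison)
    se = sqrt(variance(participants) / len(participants)
              + variance(comparison) / len(comparison))
    return diff, se


if __name__ == "__main__":
    # Hypothetical quarterly earnings in dollars; not evaluation data.
    treated = [3200.0, 2800.0, 4100.0, 3600.0, 2900.0]
    untreated = [2500.0, 2700.0, 3000.0, 2200.0, 2600.0]
    diff, se = impact_estimate(treated, untreated)
    print(f"Estimated impact: {diff:,.2f} (SE {se:,.2f})")
```

A raw difference in means is credible only when the comparison group closely resembles participants before enrollment, which is why the comparison group must represent what would have happened to participants without the project.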

Benefit-cost analysis. Using data collected throughout the SWTCIE national evaluation, the benefit-cost analysis will assess whether the benefits of each project were large enough to justify its costs. To provide a comprehensive accounting of each project’s consequences, we will report benefits and costs from four perspectives: participants, the VR agency, the state (everything associated with the state except VR), and the Federal government.
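Reporting by perspective amounts to keeping a separate ledger of benefits and costs for each party. The following is a minimal sketch under stated assumptions: the class `BenefitCostLedger` and all dollar amounts are hypothetical, and only the four perspective names come from the text. Positive amounts are booked as benefits and negative amounts as costs.

```python
from dataclasses import dataclass, field

# The four accounting perspectives named in the text.
PERSPECTIVES = ("participants", "VR agency", "state", "Federal government")


def _zeroed() -> dict[str, float]:
    return {p: 0.0 for p in PERSPECTIVES}


@dataclass
class BenefitCostLedger:
    benefits: dict[str, float] = field(default_factory=_zeroed)
    costs: dict[str, float] = field(default_factory=_zeroed)

    def record(self, perspective: str, amount: float) -> None:
        """Book a positive amount as a benefit and a negative amount as a cost."""
        if perspective not in PERSPECTIVES:
            raise ValueError(f"unknown perspective: {perspective!r}")
        if amount >= 0:
            self.benefits[perspective] += amount
        else:
            self.costs[perspective] += -amount

    def net(self, perspective: str) -> float:
        return self.benefits[perspective] - self.costs[perspective]

    def net_all(self) -> float:
        """Net benefit summed over all four perspectives."""
        return sum(self.net(p) for p in PERSPECTIVES)


if __name__ == "__main__":
    ledger = BenefitCostLedger()
    # Hypothetical per-participant amounts, for illustration only.
    ledger.record("participants", 5000.0)        # earnings gain
    ledger.record("VR agency", -3000.0)          # cost of project services
    ledger.record("participants", -1000.0)       # transfer: reduced public benefits...
    ledger.record("Federal government", 1000.0)  # ...matched by Federal savings
    for p in PERSPECTIVES:
        print(f"{p:<20} net {ledger.net(p):>10,.2f}")
    print(f"{'all perspectives':<20} net {ledger.net_all():>10,.2f}")
```

In this hypothetical example, the transfer appears as a cost to participants and an equal benefit to the Federal government, so it nets to zero across perspectives while still showing who gains and who pays.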

A.16.2. Time schedule and publication plans

Reporting plans include an evaluation report, an internal study brief, a study snapshot, and a how-to guide (Table A.4).

The evaluation report will present the complete set of findings from the SWTCIE national evaluation and will serve as the basis for a related set of Section 508-compliant dissemination materials for a diverse range of audiences.

The internal study brief will consist of a condensed description of evaluation findings, using tables and infographics to convey complex information to people who are not familiar with detailed evaluation methods, such as program staff, employers, and people with disabilities and their families.

The study snapshot will provide a high-level overview of the main evaluation findings and takeaways, using a headline approach with supporting graphics, figures, or bullets. The target audience for this deliverable is people who are not familiar with detailed evaluation methods, such as program staff, employers, and people with disabilities and their families.

The how-to guide will present the effective strategies that the DIF projects used to promote CIE and describe how to implement them, including considerations for costs, facilitators, and challenges. The target audience for this deliverable includes professionals and practitioners interested in replicating these strategies.

Table A.4. Deliverable schedule for evaluation-related reports

| Deliverable | Completion Date |
|---|---|
| Evaluation report | March 31, 2027 |
| Internal study brief | March 31, 2027 |
| Study snapshot | March 31, 2027 |
| How-to guide | March 31, 2027 |

If seeking approval to not display the expiration date for OMB approval of the information collection, explain the reasons that display would be inappropriate.

RSA is not requesting a waiver for the display of the OMB approval number and expiration date. The instruments will display the OMB expiration date.

Explain each exception to the certification statement identified in the Certification of Paperwork Reduction Act.

No exceptions to the certification statement are requested or required.

1 Requests for this information are in accordance with the following ED and OMB policies: Privacy Act of 1974, OMB Circular A-108 – Privacy Act Implementation – Guidelines and Responsibilities, OMB Circular A-130 Appendix I – Federal Agency Responsibilities for Maintaining Records About Individuals, OMB M-03-22 – OMB Guidance for Implementing the Privacy Provisions of the E-Government Act of 2002, OMB M-06-15 – Safeguarding Personally Identifiable Information, OM:6-104 – Privacy Act of 1974 (Collection, Use and Protection of Personally Identifiable Information)

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| File Title | Supporting Statement Part A |

| Author | Authorised User |

| File Modified | 0000-00-00 |

| File Created | 2024-10-28 |
