0648-0744 Supporting Statement Part B

Resident Perceptions of Offshore Wind Energy Development off the Oregon Coast and Along the Gulf of Mexico

OMB: 0648-0744

SUPPORTING STATEMENT

U.S. Department of Commerce

National Oceanic & Atmospheric Administration

Resident Perceptions of Offshore Wind Energy Development off the Oregon Coast and Gulf of Mexico

OMB Control No. 0648-0744

B. Collections of Information Employing Statistical Methods

Describe (including a numerical estimate) the potential respondent universe and any sampling or other respondent selection method to be used. Data on the number of entities (e.g., establishments, State and local government units, households, or persons) in the universe covered by the collection and in the corresponding sample are to be provided in tabular form for the universe as a whole and for each of the strata in the proposed sample. Indicate expected response rates for the collection as a whole. If the collection had been conducted previously, include the actual response rate achieved during the last collection.

Potential Respondent Universe and Response Rate

Oregon:

The potential respondent universe for this study includes residents aged 18 and over living on the Oregon Coast, which includes Clatsop County, Tillamook County, Lincoln County, western Lane County, western Douglas County, Coos County, and Curry County.

The estimated total number of occupied households in the study region is 99,161 (US Census Bureau, 2020) and the estimated total population 18 years and over is 188,063 (US Census Bureau/American Community Survey, 2020).

Gulf of Mexico:

The potential respondent universe for this study includes residents aged 18 and over living in coastal counties in Louisiana and Texas (see map below). The estimated total number of occupied households in the study region is 3,328,119 (US Census Bureau, 2020) and the estimated total population 18 years and over is 9,266,857 (US Census Bureau/American Community Survey, 2020).

Both Collections:

In terms of response rate, previous studies conducted in the regions on similar topics (e.g., environmental issues, alternative energy) and using mail-based survey modes reported response rates ranging from 14% to 56% (Smith et al., 2021; Steel et al., 2015; Needham et al., 2013; Stefanovich, 2011; Swofford and Slattery, 2010; Pierce et al., 2009). To better understand the social context of the issue in the regions of interest, researchers talked with key partners to gather anecdotal information on the level of public knowledge, interest, and awareness of offshore wind energy. Additionally, researchers reviewed local and regional media to determine the nature and degree of media coverage, as a proxy for gauging public interest. There appears to be significant interest in offshore wind energy development on both the Oregon and Gulf Coasts.

Based on the information gathered, researchers conservatively anticipate a response rate of approximately 25%.

Sampling and Respondent Selection Method

Data will be collected using a two-stage stratified random sampling design for each collection. The study regions will be stratified geographically by county and census tract. Details of the strata are explained below. Within each stratum, households will be selected at random, and within each selected household, the individual with the next upcoming birthday who is aged 18 or older will be selected to approximate random selection. Therefore, the primary sampling unit (PSU) is the household, and the secondary sampling unit (SSU) consists of individuals selected within each household.
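The within-household step described above can be illustrated with a minimal sketch. It assumes a hypothetical household roster with known birth dates; the function names, the roster layout, and the choice to count a birthday falling on the reference date as "next upcoming" are assumptions made for illustration, not part of the study protocol.

```python
from datetime import date

def age_on(born: date, today: date) -> int:
    """Age in whole years on `today`."""
    return today.year - born.year - ((today.month, today.day) < (born.month, born.day))

def days_until_birthday(born: date, today: date) -> int:
    """Days from `today` to the next occurrence of the birthday (0 if it is today)."""
    def occurrence(year: int) -> date:
        try:
            return born.replace(year=year)
        except ValueError:  # Feb 29 birthday in a non-leap year
            return date(year, 3, 1)
    nxt = occurrence(today.year)
    if nxt < today:
        nxt = occurrence(today.year + 1)
    return (nxt - today).days

def select_respondent(household, today: date):
    """Pick the adult (18 or older) with the next upcoming birthday, or None."""
    adults = [m for m in household if age_on(m["born"], today) >= 18]
    if not adults:
        return None
    return min(adults, key=lambda m: days_until_birthday(m["born"], today))

# Hypothetical household: two adults and one minor.
household = [
    {"name": "A", "born": date(1980, 3, 15)},
    {"name": "B", "born": date(1975, 11, 2)},
    {"name": "C", "born": date(2012, 7, 1)},
]
chosen = select_respondent(household, today=date(2024, 6, 30))
print(chosen["name"])
```

In practice the selection is made by the household itself from the instruction in the invitation letter, so the sketch only shows the rule being applied, not how it would be administered.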

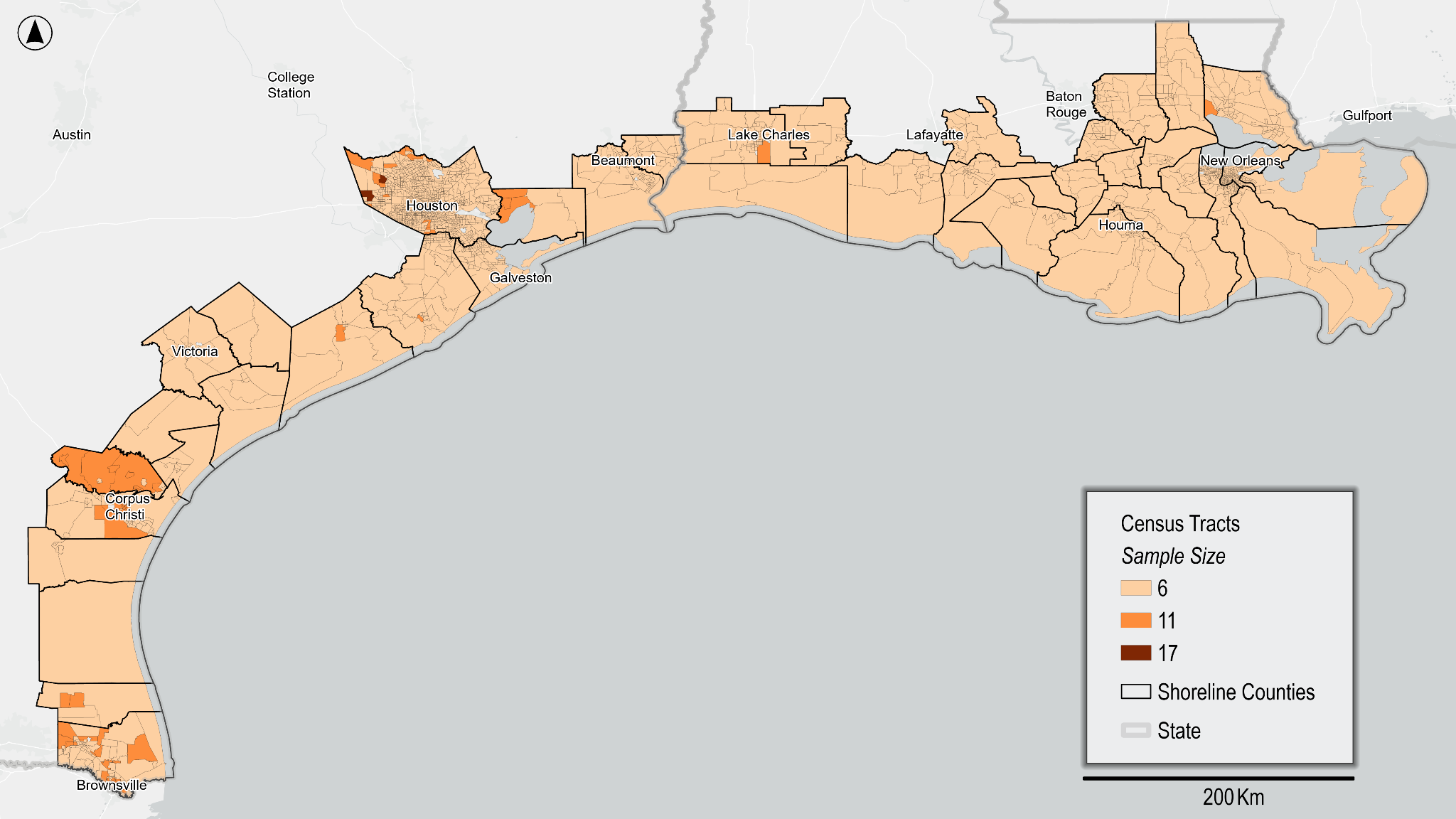

The goals of the proposed strata are to ensure spatial representation and allow researchers to examine the influence of geographic proximity on responses. Additionally, researchers would like to develop estimates for specific Environmental Justice communities. Therefore, tracts with high proportions of those communities will be oversampled. Figure 1 shows the final sample size for each tract. The sample size for the pre-test will be downscaled proportionately to the final sample size.

Figure 1: Final sample size per Census tract across shoreline counties in Texas and Louisiana.

Table 2 provides a breakdown of the tentative estimated number of completed surveys desired for each stratum, along with the sample size per state. To obtain the estimated minimum number of respondents (d), the sample size must be increased to account for both non-response (e) and mail non-delivery (f). Estimated response rates vary by Census tract or block group: those with at least 5% of the population within any Environmental Justice community are estimated to have a 20% response rate, and all other Census tracts or block groups are estimated to have a 25% response rate. Because these rates are applied at the tract or block group level, direct stratum-level calculations cannot be shown in Table 2; however, estimated response rates for each state average 19% to 20%. Note that these response rates assume a $2 incentive (see Section 3). Based on the statistical sampling methodology discussed in detail in Question 2 below, the estimated response rate, and the 10% non-deliverable rate, the sample size for the final collection will be 14,949. See Section 2.iii below for more details on determining the minimum sample size.

Table 2: Estimates of sample size by strata

| Strata | Estimated Population 18 and over (a) | Estimated Occupied Households (b) | Pretest Sample (c) | Estimated Minimum Number of Respondents (d) | Sample Size Adjusted for 25% Response Rate (e) = (d) ÷ 25% | Sample Size Adjusted for 10% Non-Deliverable Rate (f) = (e) ÷ (1−10%) |
| --- | --- | --- | --- | --- | --- | --- |
| Ascension, LA census tracts | 125,289 | 45,195 | 29 | 29 | 157 | 174 |
| Assumption, LA census tracts | 21,366 | 8,289 | 7 | 7 | 38 | 42 |
| Calcasieu, LA census tracts | 212,646 | 76,829 | 54 | 55 | 296 | 329 |
| Cameron, LA census tracts | 5,650 | 2,216 | 4 | 4 | 22 | 24 |
| Iberia, LA census tracts | 70,518 | 26,697 | 22 | 22 | 119 | 132 |
| Jefferson, LA census tracts | 439,402 | 174,954 | 125 | 125 | 675 | 750 |
| Jefferson Davis, LA census tracts | 32,270 | 11,351 | 8 | 8 | 43 | 48 |
| Lafourche, LA census tracts | 97,677 | 36,412 | 26 | 26 | 140 | 156 |
| Livingston, LA census tracts | 141,057 | 48,474 | 34 | 34 | 184 | 204 |
| Orleans, LA census tracts | 383,974 | 156,586 | 182 | 182 | 983 | 1,092 |
| Plaquemines, LA census tracts | 23,536 | 8,039 | 9 | 9 | 49 | 54 |
| St. Bernard, LA census tracts | 43,821 | 15,472 | 17 | 17 | 92 | 102 |
| St. Charles, LA census tracts | 52,411 | 18,640 | 13 | 13 | 70 | 78 |
| St. James, LA census tracts | 20,390 | 7,464 | 7 | 7 | 38 | 42 |
| St. John the Baptist, LA census tracts | 42,704 | 15,109 | 11 | 11 | 59 | 66 |
| St. Martin, LA census tracts | 52,222 | 19,537 | 17 | 17 | 92 | 102 |
| St. Mary, LA census tracts | 49,818 | 18,565 | 16 | 16 | 86 | 96 |
| St. Tammany, LA census tracts | 262,799 | 99,293 | 58 | 59 | 318 | 353 |
| Tangipahoa, LA census tracts | 132,492 | 48,390 | 31 | 31 | 167 | 186 |
| Terrebonne, LA census tracts | 110,100 | 41,960 | 34 | 34 | 184 | 204 |
| Vermilion, LA census tracts | 57,775 | 21,580 | 16 | 16 | 86 | 96 |
| Aransas, TX census tracts | 24,149 | 10,452 | 10 | 10 | 54 | 60 |
| Brazoria, TX census tracts | 368,575 | 124,284 | 77 | 78 | 420 | 467 |
| Calhoun, TX census tracts | 20,367 | 7,748 | 7 | 7 | 38 | 42 |
| Cameron, TX census tracts | 420,554 | 130,030 | 119 | 152 | 791 | 879 |
| Chambers, TX census tracts | 45,257 | 14,905 | 6 | 9 | 46 | 51 |
| Galveston, TX census tracts | 347,084 | 131,877 | 101 | 101 | 545 | 606 |
| Harris, TX census tracts | 4,697,957 | 1,658,503 | 1,113 | 1,129 | 6,082 | 6,758 |
| Jackson, TX census tracts | 14,971 | 5,155 | 3 | 3 | 16 | 18 |
| Jefferson, TX census tracts | 256,755 | 92,751 | 75 | 75 | 405 | 450 |
| Kenedy, TX census tracts | 169 | 48 | 1 | 1 | 5 | 6 |
| Kleberg, TX census tracts | 31,015 | 11,559 | 9 | 9 | 49 | 54 |
| Matagorda, TX census tracts | 36,323 | 13,686 | 12 | 14 | 74 | 82 |
| Nueces, TX census tracts | 353,594 | 129,845 | 95 | 113 | 594 | 660 |
| Orange, TX census tracts | 85,045 | 30,636 | 24 | 24 | 130 | 144 |
| Refugio, TX census tracts | 6,822 | 2,189 | 2 | 2 | 11 | 12 |
| San Patricio, TX census tracts | 68,600 | 23,808 | 16 | 26 | 131 | 146 |
| Victoria, TX census tracts | 91,280 | 34,219 | 24 | 24 | 130 | 144 |
| Willacy, TX census tracts | 20,423 | 5,372 | 5 | 7 | 36 | 40 |
| TOTAL | 9,266,857 | 3,328,119 | 2,419 | 2,506 | 13,454 | 14,949 |
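As a rough check on the arithmetic behind Table 2, the sketch below reproduces column (f) from column (e) for a handful of rows and computes the overall response rate implied by the TOTAL row. The function names are illustrative assumptions. Column (e) is taken as given, since the tract-level response rates behind it are not listed in the table.

```python
# Columns (d), (e), (f) copied from selected rows of Table 2.
TABLE_2 = {
    "Ascension, LA": (29, 157, 174),
    "Orleans, LA": (182, 983, 1092),
    "Harris, TX": (1129, 6082, 6758),
    "Kenedy, TX": (1, 5, 6),
    "TOTAL": (2506, 13454, 14949),
}

def adjust_for_nondelivery(e: int, nondeliverable_rate: float = 0.10) -> int:
    """Column (f): inflate the response-adjusted sample (e) for undeliverable mail."""
    return round(e / (1 - nondeliverable_rate))

def implied_response_rate(d: int, e: int) -> float:
    """Response rate implied by the ratio of columns (d) and (e)."""
    return d / e

for stratum, (d, e, f) in TABLE_2.items():
    print(f"{stratum:15s} f={adjust_for_nondelivery(e):6d} (table: {f:6d}) "
          f"implied rate={implied_response_rate(d, e):.1%}")
```

For the TOTAL row, 2,506 ÷ 13,454 gives an implied overall response rate of roughly 18.6%, and 13,454 ÷ 0.9 rounds to the final mailing size of 14,949.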

Describe the procedures for the collection of information including: statistical methodology for stratification and sample selection; estimation procedure; degree of accuracy needed for the purpose described in the justification; unusual problems requiring specialized sampling procedures; and any use of periodic (less frequent than annual) data collection cycles to reduce burden.

Stratification and Sample Selection

Residential households will be randomly selected from each stratum using an address-based frame procured from the U.S. Postal Service.
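A minimal sketch of the selection step, assuming the address frame is available as (address, stratum) pairs. The frame contents, the function name, and the fixed seed are hypothetical. The per-stratum mailing sizes are taken from column (f) of Table 2, and the frame sizes from column (b).

```python
import random

def draw_stratified_sample(frame, sample_sizes, seed=0):
    """Draw a simple random sample of addresses, without replacement, in each stratum."""
    rng = random.Random(seed)  # fixed seed only so the sketch is reproducible
    by_stratum = {}
    for address, stratum in frame:
        by_stratum.setdefault(stratum, []).append(address)
    sample = {}
    for stratum, n in sample_sizes.items():
        addresses = by_stratum.get(stratum, [])
        # Never request more addresses than the stratum contains.
        sample[stratum] = rng.sample(addresses, min(n, len(addresses)))
    return sample

# Hypothetical frame: placeholder address IDs sized to the occupied-household counts.
frame = (
    [(f"Kenedy-{i}", "Kenedy, TX") for i in range(48)]
    + [(f"Refugio-{i}", "Refugio, TX") for i in range(2189)]
)
sample_sizes = {"Kenedy, TX": 6, "Refugio, TX": 12}
sample = draw_stratified_sample(frame, sample_sizes)
print({stratum: len(addresses) for stratum, addresses in sample.items()})
```

Sampling without replacement within each stratum gives every address in that stratum the same selection probability, which is the property the base sampling weight relies on.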

Estimation Procedures

For obtaining population-based estimates of various parameters, each responding household will be assigned a sampling weight. The weights will be used to produce estimates that:

are generalizable to the population from which the sample was selected;

account for differential probabilities of selection across the sampling strata;

match the population distributions of selected demographic variables within strata; and

allow for adjustments to reduce potential non-response bias.

These weights combine:

a base sampling weight which is the inverse of the probability of selection of the household;

a within-stratum adjustment for differential non-response across strata; and

a non-response weight.

Post-stratification adjustments will be made to match the sample to known population values (e.g., from Census data).
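The base weight and post-stratification steps can be sketched as follows, assuming equal-probability selection of households within a stratum. The function names and the owner/renter control totals are hypothetical and only illustrate the mechanics. The household and mailing counts come from the Refugio, TX row of Table 2.

```python
def base_weight(households_in_stratum: int, households_sampled: int) -> float:
    """Inverse of the selection probability under equal-probability sampling."""
    return households_in_stratum / households_sampled

def poststratify(respondents, population_totals):
    """Rescale weights so weighted totals per group match known population totals."""
    weighted_totals = {}
    for r in respondents:
        weighted_totals[r["group"]] = weighted_totals.get(r["group"], 0.0) + r["weight"]
    return [
        {**r, "weight": r["weight"] * population_totals[r["group"]] / weighted_totals[r["group"]]}
        for r in respondents
    ]

# Refugio, TX: 2,189 occupied households and 12 addresses mailed (Table 2).
w = base_weight(2189, 12)

# Hypothetical respondents and hypothetical control totals that sum to 2,189.
respondents = [{"group": "owner", "weight": w}, {"group": "renter", "weight": w}]
population_totals = {"owner": 1500, "renter": 689}
adjusted = poststratify(respondents, population_totals)
print(round(w, 2), [round(r["weight"], 1) for r in adjusted])
```

After post-stratification the weights in each group sum to that group's control total, so the weighted sample reproduces the known population distribution on the chosen variable.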

There are various models that can be used for non-response weighting. For example, non-response weights can be constructed based on estimated response propensities or on weighting class adjustments. Response propensities are designed to treat non-response as a stochastic process in which there are shared causes of the likelihood of non-response and the value of the survey variable. The weighting class approach assumes that within a weighting class (typically demographically defined), non-respondents and respondents have the same or very similar distributions on the survey variables. If this model assumption holds, then applying weights to the respondents reduces bias in the estimator that is due to non-response. Several factors, including the difference between the sample and population distributions of demographic characteristics and the plan for how to use weights in the regression models, will determine which approach is most efficient for estimating population parameters.
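The weighting class approach can be sketched as below. Within each class, respondents' base weights are inflated so that they also represent the non-respondents in that class. The class labels, base weights, and field names are hypothetical, and this shows only one of the approaches the paragraph mentions.

```python
from collections import defaultdict

def weighting_class_adjust(sampled):
    """Return respondents with weights inflated by (class total / class respondent total)."""
    total = defaultdict(float)      # sum of base weights, all sampled units
    responding = defaultdict(float)  # sum of base weights, respondents only
    for unit in sampled:
        total[unit["cls"]] += unit["base_weight"]
        if unit["responded"]:
            responding[unit["cls"]] += unit["base_weight"]
    return [
        {**unit, "weight": unit["base_weight"] * total[unit["cls"]] / responding[unit["cls"]]}
        for unit in sampled
        if unit["responded"]
    ]

# Hypothetical sample: two weighting classes with different response patterns.
sampled = [
    {"cls": "under_65", "base_weight": 100.0, "responded": True},
    {"cls": "under_65", "base_weight": 100.0, "responded": False},
    {"cls": "under_65", "base_weight": 100.0, "responded": False},
    {"cls": "under_65", "base_weight": 100.0, "responded": False},
    {"cls": "65_plus", "base_weight": 100.0, "responded": True},
    {"cls": "65_plus", "base_weight": 100.0, "responded": True},
]
weighted = weighting_class_adjust(sampled)
print([(r["cls"], r["weight"]) for r in weighted])
```

Here the single respondent under 65 carries a weight of 400 while each respondent aged 65 or over keeps a weight of 100, so each class's weighted total matches its total in the full sample.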

Degree of Accuracy Needed for the Purpose Described in the Justification

The following formula can be used to determine the minimum required sample size, $n$, for analysis:

$$n = \frac{z^{2}\, p\,(1-p)}{c^{2}}$$

where $z$ is the z-value required for a specified confidence level (here, 95%, so $z = 1.96$), $p$ is the proportion of the population with a characteristic of interest (here, p=0.5 conservatively), and $c$ is the confidence interval (here, 0.05). Therefore,

$$n = \frac{1.96^{2} \times 0.5 \times (1-0.5)}{0.05^{2}} \approx 384$$

This means a minimum sample size of 384 is required to be able to test for differences in means at the 95% confidence level with a 5% confidence interval, which is met by our sampling plan.
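The figure of 384 can be reproduced with a short calculation. The sketch assumes the standard sample size formula for a proportion, n = z² · p · (1 − p) / c², with z = 1.96 for 95% confidence, p = 0.5, and c = 0.05. The function and parameter names are illustrative.

```python
def min_sample_size(z: float = 1.96, p: float = 0.5, c: float = 0.05) -> float:
    """Minimum sample size for estimating a proportion p within +/- c at z-level confidence."""
    return z ** 2 * p * (1 - p) / c ** 2

n = min_sample_size()
print(f"{n:.2f} -> {round(n)}")
```

Setting p = 0.5 maximizes p(1 − p), so the result is the most conservative requirement for any proportion.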

Additionally, researchers would like to develop estimates for the following socioeconomic factors related to Environmental Justice:

People of color (defined as individuals who list their racial status as a race other than white alone and/or list their ethnicity as Hispanic or Latino)

Low income (defined as individuals whose ratio of household income to poverty level in the past 12 months was less than 2)

Unemployed

Over 64

Our sampling plan meets the minimum sample sizes for these factors at the 95% confidence level with up to a 7% confidence interval.
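To relate the 7% figure to a respondent count, the same formula can be inverted. The sketch below assumes z = 1.96 and p = 0.5 as above and ignores any finite population correction or design effect. The function name is an illustrative assumption.

```python
from math import sqrt

def margin_of_error(n: int, z: float = 1.96, p: float = 0.5) -> float:
    """Half-width of the confidence interval for a proportion with n respondents."""
    return z * sqrt(p * (1 - p) / n)

for n in (196, 384):
    print(n, f"{margin_of_error(n):.3f}")
```

Under these assumptions, about 196 respondents in a subgroup correspond to a 7% interval, compared with 384 for a 5% interval.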

Describe methods to maximize response rates and to deal with issues of non-response. The accuracy and reliability of information collected must be shown to be adequate for intended uses. For collections based on sampling, a special justification must be provided for any collection that will not yield "reliable" data that can be generalized to the universe studied.

Focus Groups

The first step in achieving a high response rate is to develop a survey that is easy for respondents to complete. Researchers will conduct focus groups to determine 1) whether questions are easy to understand, 2) the survey response process, 3) whether questions and responses are relevant and comprehensive, and 4) whether enough information is provided for individuals to respond confidently. Local partners will assist in a targeted recruitment of up to 48 focus group participants in Oregon and 64 in the Gulf of Mexico, with a goal of ensuring socio-demographic and geographic representation (see Appendix C for the focus group script).

Implementation Techniques

The implementation techniques that will be used are consistent with methods that maximize response rates. Researchers propose a mixed-mode system, employing mail contact and recruitment, following the Dillman Tailored Design Method (Dillman et al., 2014), and online survey administration. To maximize response, potential respondents will be contacted multiple times via postcards and other mailings; this will include a pre-survey notification postcard, a letter of invitation, and follow-up reminders (see Appendix D for postcard and letter text). Final survey administration procedures and design of the survey administration tool will be subject to the guidance and expertise of the vendor hired to provide the data with regard to maximizing response rate, based on their experience conducting similar collections in the region of interest.

Incentives

Incentives are consistent with numerous theories about survey participation (Singer and Ye, 2013), such as the theory of reasoned action (Ajzen and Fishbein, 1980), social exchange theory (Dillman et al., 2014), and leverage-saliency theory (Groves et al., 2000). Inclusion of an incentive acts as a sign of goodwill on the part of the study sponsors and encourages reciprocity of that goodwill by the respondent.

Dillman et al. (2014) recommend including incentives not only to increase response rates but also to decrease nonresponse bias. Specifically, an incentive amount between $1 and $5 is recommended for surveys of most populations.

Church (1993) conducted a meta-analysis of 38 studies that implemented some form of mail survey incentive to increase response rates and found that providing a prepaid monetary incentive with the initial survey mailing increases response rates by 19.1% on average. Lesser et al. (2001) analyzed the impact of financial incentives in mail surveys and found that including a $2 bill increased response rates by 11% to 31%. Gajic et al. (2012) administered a stated-preference survey of a general community population using a mixed-mode approach where community members were invited to participate in a web-based survey using a traditional mailed letter. A prepaid cash incentive of $2 was found to increase response rates by 11.6%.

Given these findings, we believe a small, prepaid incentive will boost response rates by at least 10% and would be the most cost-effective means of increasing response rates. A $2 bill incentive was chosen due to considerations for the population being targeted and the funding available for the project. Because this increase in response rate allows a smaller sample size, the cost per response is expected to increase by only roughly $0.59.

Declining survey response rates are a growing concern due to the increased likelihood of non-response bias, which can limit the ability to develop population estimates from survey data. Non-response bias may still exist even with high response rates if non-respondents differ greatly from respondents; however, information on non-respondents is often unavailable. One approach to estimating non-response bias in the absence of this information is the “continuum of resistance” model (Lin and Schaeffer, 1995), which assumes that those who respond only after repeated contact attempts (delayed respondents) would have been non-respondents if the data collection had stopped early. Under this model, non-respondents are more similar to delayed respondents than to those who respond quickly (early respondents). Researchers will assess the potential for non-response bias by comparing responses across contact waves. If such bias is found, a weighting procedure, as discussed in Section B.1.ii above, can be applied, and the implications for policy outcome preferences will be examined and discussed.
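One way the wave comparison could be carried out is sketched below, using Welch's t statistic to compare early and delayed respondents on a single survey item. The choice of test, the 5-point support scores, and the variable names are assumptions for illustration only. The analysis actually used may differ.

```python
from math import sqrt
from statistics import mean, variance

def welch_t(a, b) -> float:
    """Welch's t statistic for the difference in means of two independent samples."""
    return (mean(a) - mean(b)) / sqrt(variance(a) / len(a) + variance(b) / len(b))

# Hypothetical support scores (1 = strongly oppose, 5 = strongly support).
early_respondents = [4, 5, 4, 3, 5, 4]    # responded after the first mailing
delayed_respondents = [3, 2, 3, 4, 2, 3]  # responded only after the final reminder

t = welch_t(early_respondents, delayed_respondents)
print(f"mean early={mean(early_respondents):.2f}, "
      f"mean delayed={mean(delayed_respondents):.2f}, t={t:.2f}")
```

A large absolute t value would suggest that delayed respondents, and by extension non-respondents under the continuum of resistance model, differ from early respondents on that item.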

Describe any tests of procedures or methods to be undertaken. Testing is encouraged as an effective means of refining collections of information to minimize burden and improve utility. Tests must be approved if they call for answers to identical questions from 10 or more respondents. A proposed test or set of tests may be submitted for approval separately or in combination with the main collection of information.

See response to Part B Question 3 above.

Provide the name and telephone number of individuals consulted on statistical aspects of the design and the name of the agency unit, contractor(s), grantee(s), or other person(s) who will actually collect and/or analyze the information for the agency.

Consultation on the statistical aspects of the study design was provided by Trent Buskirk (tbuskirk@odu.edu).

This project will be implemented by researchers with NOAA’s National Centers for Coastal Ocean Science. The project Principal Investigator is:

Theresa L Goedeke, Supervisory Research Social Scientist

NOAA National Ocean Service

National Centers for Coastal Ocean Science

1305 East West Hwy

Building SSMC4

Silver Spring, MD 20910

Email: theresa.goedeke@noaa.gov

Data collection will be contracted out to an external vendor that has yet to be solicited and selected.

REFERENCES

Ansolabehere, S., Konisky, D.M. (2009). Public attitudes toward construction of new power plants. Public Opinion Quarterly, 73(3): 566-577. doi: 10.1093/poq/nfp041

Bell, D., Gray, T., Haggett, C., Swaffield, J. (2013). Re-Visiting the ‘social gap’: public opinion and relations of power in the local politics of wind energy. Environmental Politics, 22(1): 115-135. doi: 10.1080/09644016.2013.

Bidwell, D., Schweizer, P-J. (2020). Public values and goals for public participation. Environmental Policy and Governance, 31(4): 257-269. doi:10.1002/eet.1913

Boudet, H., Clarke, C., Bugden, D., Maibach, E., Roser-Renouf, C., Leiserowitz, A. (2014). ‘Fracking’ controversy and communication: using national survey data to understand public perceptions of hydraulic fracturing. Energy Policy, 65: 57-67. doi: 10.1016/j.enpol.2013.10.017.

Church, A.H. (1993). Estimating the effect of incentives on mail survey response rates: A meta-analysis. Public Opinion Quarterly, 57(1): 62-80.

Chyung, S.Y., Roberts, K., Swanson, I., Hankinson, A. (2017). Evidence-based survey design: The use of a midpoint on the Likert scale. Performance Improvement, 56(10): 15-23. doi: 10.1002/pfi.21727.

Clarke, E.C., Bugden, D., Hart, P.S., Stedman, R.C., Jacquet, J.B., Evensen, D.T.N., Boudet, H.S. (2016). How geographic distance and political ideology interact to influence public perception of unconventional oil/natural gas development. Energy Policy, 97: 301-309. doi: 10.1016/j.enpol.2016.07.032.

Dillman, D.A., Smyth, J.D., Christian, L.M. (2014). Internet, mail, and mixed-mode surveys: The tailored design method. Hoboken, NJ: Wiley.

Firestone, J., Kempton, W. (2007). Public opinion about large offshore wind power: Underlying factors. Energy Policy, 35(3): 1584-1598. doi: 10.1016/j.enpol.2006.04.010.

Firestone, J., Kempton, W., Lilley, M., Samoteskul, K. (2012). Public acceptance of offshore wind power: Does perceived fairness of process matter? Journal of Environmental Planning and Management, 55(10): 1387-1402. doi: 10.1080/09640568.2012.688658.

Fleming, C.S., Gonyo, S.B., Freitag, A., Goedeke, T.L. (2022). Engaged minority or quiet majority? Social intentions and actions related to offshore wind energy development in the United States. Energy Research and Social Science, 84: 102440. doi: 10.1016/j.erss.2021.102440.

Goedeke, T.L., Gonyo, S.B., Fleming, C.S., Loerzel, J.L., Freitag, A., Ellis, C. (2019). Resident perceptions of local offshore wind energy development: Support level and intended action in coastal North and South Carolina. OCS Study BOEM 2019-054.

Gonyo, S.B., Fleming, C.S., Freitag, A., Goedeke, T.L. (2021). Resident perceptions of local offshore wind energy development: Modeling efforts to improve participatory processes. Energy Policy, 149: 112068.

Groves, R.M., Singer, E., Corning, A. (2000). Leverage-Saliency Theory of Survey Participation: Description and an Illustration. Public Opinion Quarterly, 64(3): 299-308.

Lesser, V.M., Dillman, D.A., Carlson, J., Lorenz, F., Mason, R., Willits, F. (2001). Quantifying the influence of incentives on mail survey response rates and nonresponse bias. Presented at the Annual Meeting of the American Statistical Association, Atlanta, GA.

Hamilton, L.C., Bell, E., Hartter, J., Salerno, J.D. (2018). A change in the wind? US public views on renewable energy and climate compared. Energy, Sustainability and Society, 8(11). doi: 10.1186/s13705-018-0152-5

Innes, J., Booher, D. (2004). Reframing public participation: Strategies for the 21st Century. Planning Theory and Practice, 5(4): 419-436. doi: 10.1080/1464935042000293170

Jepson, W., Brannstrom, C., Persons, N. (2012). “We Don’t Take the Pledge”: Environmentality and environmental skepticism at the epicenter of US wind energy development. Geoforum, 43(4): 851-863.

Jessup, B. (2010). Plural and hybrid environmental values: A discourse analysis of the wind energy conflict in Australia and the United Kingdom. Environmental Politics, 19(1): 21-44. doi: 10.1080/09644010903396069

Lin, I., Schaeffer, N.C. (1995). Using survey participants to estimate the impact of nonparticipation. Public Opinion Quarterly, 59: 236-258. doi: 10.1086/269471.

Marullo, S. (1988). Leadership and membership in the nuclear freeze movement: A specification of resource mobilization theory. The Sociological Quarterly, 29(3): 407-427.

Mukherjee, D., Rahman, M.A. (2016). To drill or not to drill? An econometric analysis of US public opinion. Energy Policy, 91: 341-351.

National Science Foundation. (2012). Chapter 7. Science and Technology: Public Attitudes and Understanding. In Science and Engineering Indicators 2012.

Needham, M.D., Cramer, L.A., Perry, E.E. (2013). Coastal resident perceptions of marine reserves in Oregon. Final project report for Oregon Department of Fish and Wildlife (ODFW). Corvallis, OR: Oregon State University, Department of Forest Ecosystems and Society; and the Natural Resources, Tourism, and Recreation Studies Lab (NATURE).

Pierce, J.C., Steel, B.S., Warner, R.L. (2009). Knowledge, Culture, and Public Support for Renewable-Energy Policy. Comparative Technology Transfer and Society, 7(3): 270-286. doi: 10.1353/ctt.0.0047.

Sierman, J., Cornett, T., Henry, D., Reichers, J., Schultz, A., Smith, R., Woods, M. (2022). 2022 Floating Offshore Wind Study. Oregon Department of Energy. www.oregon.gov/energy/Data-and-Reports/Documents/2022-Floating-Offshore-Wind-Report.pdf

Singer, E., Ye, C. (2013). The Use and Effects of Incentives in Surveys. The Annals of the American Academy of Political and Social Science, 645: 112-141.

Smith, W. E., Kyle, G. T., Sutton, S. (2021). Displacement and associated substitution behavior among Texas inshore fishing guides due to perceived spotted seatrout declines. Marine Policy, 131: 104624.

Steel, B.S., Pierce, J.C., Warner, R.L., Lovrich, N.P. (2015). Environmental Value Considerations in Public Attitudes About Alternative Energy Development in Oregon and Washington. Environmental Management, 55: 634-645. doi: 10.1007/s00267-014-0419-3.

Stefanovich, M.A.P. (2011). Does concern for global warming explain support for wave energy development? A case study from Oregon, U.S.A. OCEANS 2011 IEEE - Spain, 1-9. doi: 10.1109/Oceans-Spain.2011.6003514.

Swofford, J., Slattery, M. (2010). Public attitudes of wind energy in Texas: Local communities in close proximity to wind farms and their effect on decision-making. Energy Policy, 38(5): 2508-2519.

US Census Bureau. (2020). "H1: Occupancy Status." 2020 Census Redistricting Data (Public Law 94-171).

US Census Bureau/American Community Survey. (2020). "B01001: Sex By Age." 2015-2020 American Community Survey.

US Census Bureau. (2021). Computer and internet use in the United States: 2018. Available online: https://www.census.gov/content/dam/Census/library/publications/2021/acs/acs-49.pdf

van Bezouw, J., Kutlaca, M. (2019). What do we want? Examining the motivating role of goals in social movement mobilization. Journal of Social and Political Psychology, 7(1): 33-51. doi: 10.5964/jspp.v7i1.796
