Supporting Statement Part B Template__2024_1_24

Implementation and Testing of Diagnostic Safety Resources

OMB:

SUPPORTING STATEMENT

Part B

Implementation and Testing of Diagnostic Safety Resources

Version: January 1, 2024

Agency for Healthcare Research and Quality (AHRQ)

Table of contents

B. Collections of Information Employing Statistical Methods

1. Respondent Universe and Sampling Methods

2. Information Collection Procedures

3. Methods to Maximize Response Rates

4. Tests of Procedures

5. Statistical Consultants

B. Collections of Information Employing Statistical Methods

The goal of testing in this project will be to understand and establish optimal implementation strategies for the Calibrate Dx, Measure Dx and Patient Toolkit resources, including for training, technical assistance (TA), and sustainment, and to assess the impact of each resource within the broader context of (1) reducing errors; (2) improving diagnostic processes to address harm from missed, delayed, or incorrect diagnoses, overdiagnosis, and over-testing; and (3) communicating diagnosis-related explanations to patients effectively.

The RE-AIM Framework guides the selection of process and outcome measures used to evaluate the impact of each resource along five dimensions: Reach, Effectiveness, Adoption, Implementation, and Maintenance (Table 1). The evaluation will further draw on the recent extension of RE-AIM (Shelton et al., 2020), which adds Sustainability beyond Maintenance, including consideration of adaptation and de-implementation over time, and explicit consideration of equity across RE-AIM dimensions. The testing approach is intentionally designed to be minimally burdensome to sites and participating clinicians/staff while being maximally informative about the impact of each resource on diagnostic excellence.

Table 1. RE-AIM Framework Overview

| Dimension | Example metrics | Equity Considerations |
|---|---|---|
| Reach | Target audience; number and type of individuals/organizations receiving resource | Inclusivity of outreach approaches, diversity of the recruitment sample, reasons for site dropout |
| Effectiveness | Impact of the resource; meaningful change in outcomes; comparisons across groups, unintended consequences | Variation in impact by type of visit, patient, condition, setting, or geographic location |
| Adoption | Number and type of completers, reasons for attrition, participant characteristics and representativeness | Differences in type of staff/sites participating in trainings and adopting the resource |
| Implementation | Feasibility, usefulness, utility, acceptability of the resource to stakeholders; adaptations to context and user needs; fidelity and consistency of resource delivery; standardization of implementation | Equitable engagement of clinicians and staff; appropriate engagement of diverse patient groups; differences in support and resources provided for implementation |
| Maintenance | Spread and adoption of the resource over time; organization-wide interest; extent of de-implementation | Equitable sustainment approach; variation in resources for sustainment; reasons for de-implementation by setting/site type |

As an implementation evaluation aimed at increasing optimal and widespread use of these diagnostic safety resources, rather than a hypothesis-driven outcomes study, the analytic goal is to robustly describe the implementation, use, and sustainment of these resources in practice, to understand factors associated with successful implementation, and to establish guiding principles for implementation beyond this effort.

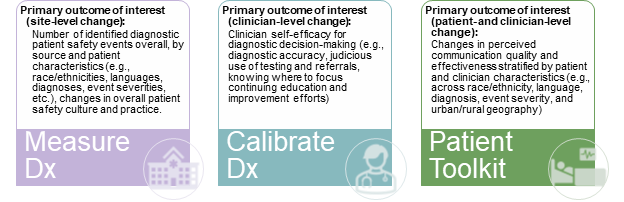

For primary outcomes, each resource will evaluate pre-post changes in the measures most relevant to its diagnostic safety objective at different levels of evaluation (Figure 1).

Figure 1. Evaluation Levels and Primary Outcomes for Each Resource

In addition, data on resource-specific implementation and impact will be collected using targeted metrics. For example, to measure Reach of Calibrate Dx, the number and type of scenarios and patient populations targeted for evaluation will be collected from clinician documentation in the Calibrate Dx Survey (Attachment P). To measure Effectiveness of the Patient Toolkit, we will use the Patient Toolkit Survey-Provider (Attachment S) to assess the number and type of providers who used the 60 Seconds to Improve Diagnostic Safety component and showed improvements in communication effectiveness. To measure Effectiveness of Measure Dx, we will use composite measures from the SOPS surveys (Attachments E and F), such as Leadership Support for Patient Safety, Time Availability, and Testing and Referrals. To measure Adoption of Calibrate Dx, we will capture the number of Calibrate Dx users and the number of cases reviewed (Calibrate Dx Survey, Attachment P).

Quantitative Data Analysis

Changes over time in the outcomes of interest will be assessed, along with how these changes vary by relevant clinician, patient, or site characteristics. The analytic team, led by a PhD-level statistician, will use several methods to do this. For appropriate outcomes, generalized linear models with post-implementation indicators and site-specific fixed effects will be used to estimate average individual-level post-implementation changes by site and by post-treatment time period, as well as averaged across sites and time periods (some versions of these models yield coefficient estimates equivalent to paired t-tests). When appropriate, the Wilcoxon signed-rank test will be used to test for the same changes. Where data are sufficient, covariates and relevant interactions will be included in the models to examine how individual-level effects vary by site characteristics (e.g., urban versus rural, academic versus non-academic). Site-level effects and effect heterogeneity, including improvements in RE-AIM metrics, will also be explored using meta-analytic methods. For example, average site-level changes in an outcome will be modeled as a function of covariates using random-effects models, with the Hartung-Knapp adjustment applied as needed for subanalyses with smaller numbers of sites (e.g., rural sites). Throughout, missing covariate data will be addressed using multiple imputation, and missing outcome data will be addressed using attrition weighting.
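The individual- and site-level steps described above can be sketched in plain Python as follows. This is a simplified illustration under stated assumptions, not the evaluation team's analysis code: it assumes each site supplies matched pre/post scores for the same individuals, ignores covariates, multiple imputation, and attrition weights, and uses hypothetical function names. Stage one computes a site's mean within-person change (the quantity a paired t-test evaluates), its squared standard error, and the Wilcoxon signed-rank statistic W+ (statistic only, no p-value). Stage two pools site-level changes with a random-effects model; the DerSimonian-Laird estimator of between-site variance is an assumption, since the text does not name one, and the pooled standard error uses the Hartung-Knapp formula.

```python
import math
from statistics import mean, variance


def paired_change(pre, post):
    """Mean within-person change (post - pre), its squared standard error, and paired t."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need at least two matched pre/post pairs")
    diffs = [b - a for a, b in zip(pre, post)]
    d_bar = mean(diffs)
    var_mean = variance(diffs) / len(diffs)  # sample variance of changes divided by n
    t_stat = d_bar / math.sqrt(var_mean) if var_mean > 0 else float("nan")
    return d_bar, var_mean, t_stat


def wilcoxon_w_plus(pre, post):
    """Wilcoxon signed-rank statistic W+: sum of ranks of |change| over positive changes.

    Zero changes are dropped; tied absolute changes receive their average rank.
    """
    diffs = [b - a for a, b in zip(pre, post) if b != a]
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    i = 0
    while i < len(order):
        j = i
        # Extend j across the run of tied absolute changes that starts at position i.
        while j + 1 < len(order) and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # positions i..j hold 1-based ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    return sum(r for r, d in zip(ranks, diffs) if d > 0)


def hartung_knapp_pool(effects, variances):
    """Random-effects pooled site-level change with a Hartung-Knapp standard error.

    tau^2 (between-site variance) uses the DerSimonian-Laird estimator (an assumption).
    Returns (pooled_estimate, tau2, hk_standard_error).
    """
    k = len(effects)
    if k < 2 or len(variances) != k:
        raise ValueError("need at least two sites, each with one variance")
    w = [1 / v for v in variances]  # fixed-effect (inverse-variance) weights
    sw = sum(w)
    fixed = sum(wi * y for wi, y in zip(w, effects)) / sw
    q = sum(wi * (y - fixed) ** 2 for wi, y in zip(w, effects))  # Cochran's Q
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - (k - 1)) / c)
    w_re = [1 / (v + tau2) for v in variances]  # random-effects weights
    sw_re = sum(w_re)
    pooled = sum(wi * y for wi, y in zip(w_re, effects)) / sw_re
    hk_var = sum(wi * (y - pooled) ** 2 for wi, y in zip(w_re, effects)) / ((k - 1) * sw_re)
    return pooled, tau2, math.sqrt(hk_var)


if __name__ == "__main__":
    # Hypothetical pre/post scores for two sites (illustrative numbers only).
    site_a = paired_change([1, 2, 3, 4], [2, 4, 5, 6])
    site_b = paired_change([3, 3, 4, 5], [3, 5, 4, 7])
    print(hartung_knapp_pool([site_a[0], site_b[0]], [site_a[1], site_b[1]]))
```

A 95% confidence interval for the pooled change would then be the estimate ± t(k − 1, 0.975) × se, using a t distribution with k − 1 degrees of freedom (k = number of sites); the Python standard library has no t quantile function, so that value would come from a statistics package or table. For production analyses, an established implementation such as the R metafor package's rma() with test = "knha" would be a more reliable choice than hand-rolled code.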

Qualitative Data Analysis

Interviews will be conducted virtually by researchers trained in qualitative data collection in healthcare settings and, following verbal consent from participants, will be audio-recorded and transcribed. Semi-structured interview protocols will be developed deductively from the objectives of each data collection. Dedoose software will be used for all qualitative data coding and analysis. Codebooks will be developed iteratively and applied to the data using thematic analysis. Inter-rater reliability (IRR) will be established by having coders independently code a common set of transcripts and calculating a kappa statistic. Once reliability is established, coding will follow a joint approach in which each unique transcript is coded independently by one coder. Themes will be compared across sites and cohorts to identify common and differing factors affecting implementation. Observations of selected TA and/or training sessions will use a guide to capture field notes on participant engagement and adoption, participant needs related to training and implementation, barriers and facilitators to implementation, and key success factors or implementation success stories.
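The IRR step above can be illustrated with a minimal sketch of Cohen's kappa for two coders. The text does not specify the kappa variant or the unit of analysis, so this assumes two coders who each assign a single code per excerpt; the function name is hypothetical, and the sketch is illustrative rather than a description of the project's Dedoose workflow.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders who each assign one code per excerpt."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must code the same non-empty set of excerpts")
    n = len(coder_a)
    # Observed agreement: share of excerpts where both coders chose the same code.
    p_observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement: product of each coder's marginal code proportions, summed over codes.
    counts_a, counts_b = Counter(coder_a), Counter(coder_b)
    p_expected = sum(counts_a[code] * counts_b[code] for code in counts_a) / n ** 2
    if p_expected == 1:
        return float("nan")  # both coders used one identical code throughout; kappa undefined
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical example: two coders applying "barrier" (B) or "facilitator" (F) to four excerpts.
print(cohens_kappa(["B", "B", "F", "F"], ["B", "F", "F", "F"]))
```

In this hypothetical example the coders agree on 3 of 4 excerpts (75%), chance agreement is 50%, and kappa is 0.5. Kappa is used instead of raw percent agreement because it discounts the agreement expected by chance given each coder's code frequencies.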

1. Respondent Universe and Sampling Methods

Implementing sites may be an adult or pediatric inpatient hospital (either a department/ward within the hospital, the entire hospital, or multiple hospitals within a single health system), or an ambulatory clinic/medical office (either primary or specialty care) or multi-clinic system. To ensure that the evaluation captures the range of locations where the resources are likely to be used, the contractor will attempt to recruit a diverse population of sites with respect to geography, size and type, infrastructure, patient population, and patient safety and quality performance. The contractor will use a multimodal approach to reach and recruit potential sites, including online Listening Sessions, webinars, e-mail blasts, and announcements on the contractor’s and partners’ websites. The contractor will also establish a central project website that will serve as an information portal for interested sites and a means of declaring interest and enrolling.

The contractor will work with geographically and demographically diverse organizations interested in improving diagnostic safety and quality to identify potential sites. Specifically, the contractor will recruit through the national networks of IHI, Convergence, SIDM, and MedStar, which together comprise hundreds of diverse health systems and clinics across the country that vary in size and patient populations served (Table 2).

Table 2. Recruitment Partners

| Recruitment Partner | Partner Activities |
|---|---|
| IHI | IHI conducts 99+ million engagements per year, trains 1.5 million people in QI per year, and has an engaged network of 600+ faculty. IHI has different membership programs and national initiatives that can be leveraged for recruitment, e.g., IHI Leadership Alliance, National Steering Committee for Patient Safety, IHI Strategic Partners, Age-Friendly Health Systems in partnership with AHA. |
| Convergence | Convergence has approximately 500 Tier 1 (strong relationships), 1,000 Tier 2 (good relationships and branding), and 1,000 Tier 3 (partner support and branding) site relationships. Convergence will also recruit via state associations and community organizations, with particular outreach to rural and vulnerable populations. |
| SIDM | SIDM will recruit through its networks, including the Coalition to Improve Diagnosis, a collaboration of 60+ leading healthcare organizations and networks. |
| MedStar | MedStar will provide access to its 10 hospitals and 300+ outpatient service locations and connect RAND to its ACTION IV healthcare organization partners. |

In addition, the contractor will work closely with AHRQ to consider the optimal role of additional Patient Safety Organizations (PSOs) and/or practice-based research networks (PBRNs) in the recruitment plan. The contractor can also recruit through its own networks and partnerships and through outreach to stakeholder organizations such as America’s Essential Hospitals, the American Hospital Association, the American Academy of Family Physicians, and the American Academy of Pediatrics.

The use of a convenience sample, with sites self-selecting into cohorts based on their preferences and capabilities, could lead to selection bias. To mitigate this, progress toward recruitment targets for geographic and patient diversity, as well as for existing engagement with patient safety efforts (e.g., membership in a PSO), will be closely monitored.

During the recruitment and onboarding phase, descriptive data from all participating sites will be collected. These data include: 1) site characteristics, including type (e.g., inpatient hospital, ambulatory clinic, pediatric hospital), size, number of beds if applicable, patient case-mix, geographic region and urban/rural status, public/private status, and teaching status; and 2) an assessment of existing diagnostic safety practices using the Safer Dx Checklist (Attachment C). These data will allow for description and comparison of the sample with regard to basic demographics and maturity in diagnostic excellence.

Based on prior experience recruiting large numbers of sites for a similar project, an estimated 265 sites total will be recruited (or ~88 sites for each tool), assuming 18% attrition between recruitment and implementation start, and another 31% attrition during implementation, to ensure that 50 sites per tool or a total of 150 sites complete implementation.
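The recruitment target follows from applying the two attrition rates in sequence: 150 / ((1 − 0.18) × (1 − 0.31)) ≈ 265, and 50 / ((1 − 0.18) × (1 − 0.31)) ≈ 88 per tool. A minimal Python sketch of that back-calculation is shown below; the function name is hypothetical, and it rounds to the nearest site, which matches the figures above (a more conservative plan would round up).

```python
def sites_to_recruit(target_completers, attrition_rates):
    """Sites to recruit so that target_completers remain after sequential attrition."""
    retention = 1.0
    for rate in attrition_rates:
        retention *= 1 - rate  # share of sites that survive each attrition stage
    return target_completers / retention


overall = sites_to_recruit(150, [0.18, 0.31])  # about 265.1
per_tool = sites_to_recruit(50, [0.18, 0.31])  # about 88.4
print(round(overall), round(per_tool))  # prints 265 88
```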

Site leads, in consultation with the site QI team and clinical leadership, will identify which of the three resources will be implemented and the specific clinic, department, or practice (sites) within the organization that will implement the resource. At large organizations, multiple sites may implement different resources. The site lead will identify clinicians (e.g., physicians, nurse practitioners, physician assistants) to invite to participate in the implementation.

As part of the evaluation of the Patient Toolkit resource, patients will be recruited to complete a survey assessing patient-perceived experience and quality of communication (Attachment U). The survey will be administered to site patients over a 1-week period at five timepoints (baseline, 3, 6, 9, and 12 months) and will be provided to patients at check-out from their visit with a participating provider. A total of 12,500 surveys will be completed during each 1-week period.

2. Information Collection Procedures

Recruitment of Sites. As described in Section 1, an estimated 265 sites (~88 per tool) will be recruited, assuming 18% attrition between recruitment and implementation start and another 31% attrition during implementation, so that 50 sites per tool (150 sites total) complete implementation.

The contractor will advertise the project through posted announcements, e-mail blasts, e-newsletters, online listening sessions, and existing networks. Links to the web-based Site Interest Form (Attachment A) will be posted or sent by email; a version that can be returned by fax, mail, or email will also be available. An estimated 1,060 interested sites will complete this form.

After initial screening, eligible sites will receive by email a project recruitment packet that includes project information and a fillable version of the Site Information Form (Attachment B). This form collects additional contact information, data on patient mix, and information on the organization’s diagnostic safety efforts. Of the 1,060 sites that indicate interest, 265 (25%) will complete the Site Information Form and begin enrollment activities. Contractor staff will follow up by email and/or phone to answer questions, encourage response, and assist in completing the enrollment materials.

An estimated 219 sites (82% of those beginning enrollment activities) will complete the full enrollment process and begin participation in the project. These 219 sites will complete the Safer Dx Checklist (Attachment C) before implementing one of the three resources. This fillable form will be sent by email to site leads and can be returned by fax, mail, or email.

As noted above, of the 219 sites that begin participating, 150 sites will complete implementation. The 69 sites that withdraw during implementation will complete a one-time, 10-minute telephone interview based on the Exit Interview Protocol (Attachment D), which collects information on why the site could not sustain its efforts or participation. Contractor staff will contact sites that wish to stop participating by email and/or phone to schedule the interview.
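The enrollment funnel described in this section can be checked with a short calculation. The sketch below is illustrative only (the function name and stage labels are hypothetical); it uses only the counts stated above and reports each stage as a share of the one before it, giving 25% for the interest-to-enrollment step, about 82.6% for enrollment completion, and about 68.5% for implementation completion, with 69 sites expected to complete the exit interview.

```python
def funnel_rates(stages):
    """Return (label, count, share of previous stage) for each consecutive stage."""
    return [
        (label, count, count / prev_count)
        for (_, prev_count), (label, count) in zip(stages, stages[1:])
    ]


stages = [
    ("Site Interest Form completed", 1060),
    ("Site Information Form completed, enrollment begins", 265),
    ("Enrollment complete, participation begins", 219),
    ("Implementation complete", 150),
]
for label, count, share in funnel_rates(stages):
    print(f"{label}: {count} ({share:.1%} of previous stage)")

exit_interviews = stages[2][1] - stages[3][1]  # sites expected to complete Attachment D
print(f"Exit interviews: {exit_interviews}")
```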

Data Collection Activities. The Testing Period will last 12 months for each resource and will be followed immediately by a 14-month sustainability period. Implementation and effectiveness data will be collected at multiple timepoints across these periods (e.g., at baseline, 3 months, 6 months, 12 months, and 24 months), depending on the specific data collection and analysis plan for each resource.

A baseline assessment of patient safety culture will be completed once by the site lead for each of the 219 participating sites using the SOPS Survey with the Dx Safety Supplemental Set (Attachment E or F; Medical Office or Hospital version, depending on the setting). This survey will be conducted via the web; an email link will be sent to the site lead with a request for completion. The contractor will send additional prompts and follow up by phone as needed to encourage response. Because this is a core component of the evaluation, all 219 sites are expected to complete this survey.

Training on the tools for site participants will be resource-specific and will continue up to eight weeks into the implementation period, as needed. Training will blend online training materials with live virtual training sessions. In addition, two Learning Collaborative (LC) virtual meetings will be held each month during implementation to discuss challenges, identify and share solutions, and facilitate support among project participants. To track the perceived value of the training and LC meetings, a Training Post-evaluation Form (Attachment G) will be administered to 1,500 individuals participating in the project’s training sessions, and the LC Post-evaluation Form (Attachment H) will be administered to 4,500 individuals participating in the project’s LC sessions. Both evaluations will be administered immediately after each session: each attendee will receive an email with a link to the survey, followed by email reminders. An estimated 90% response rate is anticipated, for a total of 1,350 completed Training Post-evaluation Forms and 4,050 completed LC Post-evaluation Forms.
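The expected completion counts above are the number of individuals invited multiplied by the anticipated response rate. A minimal sketch, assuming the 1,500 and 4,500 figures are totals across sessions (the function name is hypothetical):

```python
def expected_completes(invited, response_rate):
    """Expected completed forms: individuals invited x anticipated response rate."""
    if not 0 <= response_rate <= 1:
        raise ValueError("response_rate must be between 0 and 1")
    return round(invited * response_rate)


print(expected_completes(1500, 0.90))  # Training Post-evaluation Form (Attachment G); prints 1350
print(expected_completes(4500, 0.90))  # LC Post-evaluation Form (Attachment H); prints 4050
```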

Each of the 219 participating site leads will complete the web-based Clinical Sustainability Assessment Tool (CSAT) (Attachment I) once, between months 9 and 12, in advance of the sustainment period. An email link will be sent to the site lead with a request for completion, and the contractor will send additional prompts and follow up by phone as needed to encourage response. Because this is a core component of the evaluation, all sites are expected to complete this survey.

Qualitative, semi-structured interviews will be conducted with site leads and frontline staff using the Implementation Interview Protocol (Attachment J). Individuals will be selected randomly from a list of site participants and will be invited to attend by email. Interviews will be conducted virtually and last up to 60 minutes. Interviews with up to 2 individuals from each site will be conducted.

Data Collections Specific to the Measure Dx Tool. In addition to the data collections noted above, Measure Dx testing will include four additional data collections completed by clinical and/or other site staff. All sites that begin testing the Measure Dx Tool will complete several additional fillable forms, which will be sent by email and can be returned by fax, mail, or email; the contractor will send additional prompts and follow up by phone as needed to encourage response. The Measure Dx Organizational Self-Assessment (Attachment K) and the Measure Dx Declaration of Measurement Strategy (Attachment L) will each be completed once. Because these core forms must be completed to begin participation in the evaluation, all 73 sites are expected to complete them. Site leads will also be asked to complete fillable versions of the Diagnostic Safety Event Reports (Attachment M). Each participating site will complete this form three times over the course of the evaluation (at 3, 12, and 24 months), for an anticipated total of 219 reports (73 sites × 3).

Five members of the Measure Dx team at each site will be surveyed using the Omnibus Safety and Culture Survey (Attachment N or O), the Medical Office or Hospital versions depending on the setting. This survey will be conducted by web, with email prompting to non-responders. The expected response rate is 85% at each of the three administration periods.

Data Collections Specific to the Calibrate Dx Tool. In addition to the data collections for all sites noted above, the Calibrate Dx testing will include two additional data collections. Clinicians at participating Calibrate Dx sites will complete the Calibrate Dx Survey (Attachment P). This fillable form will be administered 4 times during implementation/sustainment to up to 5 clinicians per site; with email prompting, an estimated 90% response rate to this collection is anticipated. Up to 5 clinicians per site participating in Calibrate Dx will also be asked to complete a Clinician Self-efficacy Survey (Attachment Q); an estimated 90% response rate to this collection is anticipated.

Data Collections Specific to the Patient Toolkit. In addition to the data collections for all sites noted above, testing of the Patient Toolkit will include five additional data collections. At the beginning of implementation, participating providers at each site will complete the Provider Characteristics Form (Attachment R). A total of 73 sites will begin testing, with 15 providers per site asked to complete this form; with email prompting, an estimated 90% response rate is anticipated. The Patient Toolkit Survey-Provider (Attachment S) will also be administered to up to 15 providers at each site at five timepoints (baseline, 3, 6, 9, and 12 months), with an estimated 90% response rate. A total of 50 qualitative, semi-structured interviews with site clinicians will be conducted during implementation using the Provider Interview Protocol (Attachment T). Interviewees will be selected randomly from a list of site participants and invited by email.

As part of the Patient Toolkit, the Patient Toolkit Survey-Patient (Attachment U) will be administered to patients over a 1-week period at five timepoints (baseline, 3, 6, 9, and 12 months). The survey will be a paper instrument provided to patients at check-out from a healthcare visit. A total of 12,500 surveys will be completed during each 1-week period, for 62,500 patient surveys over the course of implementation. The survey includes a question asking patients whether they are interested in participating in an interview about their experiences (Patient Interview Protocol, Attachment V). Patients will be selected randomly from those who indicate willingness and will be contacted by email or phone. A total of 50 interviews will be completed. Interviews will be conducted virtually and last up to 45 minutes.

3. Methods to Maximize Response Rates

The data collection strategy is intended to prevent attrition of sites and site staff and to maximize response rates for each data collection instrument. Specifically, the contractor will 1) minimize burden as much as possible, 2) emphasize the importance and benefits of the evaluation and provide assurance of confidentiality, 3) provide ongoing support to participant sites and staff to encourage continuation and address concerns, 4) enlist the help of a champion at each site, 5) conduct rigorous follow-up when data collection instruments are not completed, and 6) offer a variety of incentives for participation.

Experience has shown that limiting respondent burden reduces non-response, therefore only items that are critical to the analysis and readily available to respondents will be requested as part of the data collection. Data collection instruments are designed to be easy to interpret and can be completed quickly, further reducing burden. In addition, most instruments will be offered in both paper and electronic form, allowing respondents to choose a mode of response that they find to be least burdensome.

Recruitment materials and discussions will reinforce the importance of evaluating the resources for advancing diagnostic safety, as well as the key benefits of participation, including (1) guided implementation of an already pilot-tested resource; (2) “off the shelf” training materials; (3) multiple opportunities for training with CEU credit; (4) intensive implementation support; (5) synthesized site-specific information on patient safety performance; and (6) a plan for sustainability. Participants will be assured of confidentiality, that is, that the information they provide will not be shared in an identifiable way.

Many sites may be too under-resourced or overcommitted to engage in this multi-year effort. To mitigate this, the contractor will give interested sites the support needed to facilitate enrollment, such as assistance with institutional review board (IRB) and data use agreement (DUA) processes. The contractor will also provide ongoing technical assistance to participating sites through twice-monthly Learning Collaborative virtual meetings to discuss implementation challenges, identify and share solutions, and facilitate support among participants; a TA Help Line where participants can call in with questions or to request information; and a TA Web Page that contains downloadable resources. The contractor’s TA response team will pay particular attention to implementation issues such as leadership support, data issues, and equity among patients, providers, and sites. Guidance will be both systematic and targeted.

During recruitment, sites will identify a champion to serve as the project coordinator at the site and engage with others working at the practice site. Site champions will also serve as “connectors,” by helping to disseminate and reinforce training to interested clinicians and leaders at their site during implementation and sustainability.

An honorarium commensurate with the anticipated complexity of resource implementation, based on prior experience engaging sites in the resource development process, will be provided. As described above, we will socialize the project early in Year 1 to capture interest and attention and will engage in retention activities after enrollment, such as project newsletters, site leader check-in calls, and regular project updates. Beyond these general challenges, each resource may raise its own concerns for sites.

Follow-up with respondents who do not complete data collection forms will be timely and consistent. For instance, the contractor will send prompting emails and conduct telephone follow-ups to selected individuals who do not respond to requests.

A variety of modest incentives targeting different participant groups will be offered. A small honorarium of $1,500 will be provided as a token of appreciation to sites that complete the project. CE credit will be provided to participating clinicians who complete training modules, participate in live (virtual) training sessions, and complete an end-of-program evaluation. A token of appreciation of $25 will be provided to clinicians and site staff who respond to two surveys that are critical to the evaluation (Attachments N and O). To encourage participation in interviews that are key to assessing implementation barriers for all resources (Attachments J, T, and V), clinicians, site staff, and patients who participate in these data collections will receive a token of appreciation of $40.

4. Tests of Procedures

No tests of procedures or methods will be undertaken as part of this data collection. As noted in Supporting Statement A, AHRQ previously supported a contract to develop the Calibrate Dx, Measure Dx, and Patient Toolkit resources. After development, these resources were pilot tested for feasibility and revised. Five of the data collection instruments used in this evaluation (Attachments K, L, P, S, and U) are part of the Calibrate Dx, Measure Dx, and Patient Toolkit resources and thus were previously tested.

This evaluation will also use four previously validated data collection instruments (Attachments C, E, F, and I) and instruments that combine previously developed measures (Attachments N and O). Instruments that have not been tested are based on materials the contractor previously used for similar projects. They include instruments used for recruitment and/or to collect basic demographic information (Attachments A, B, and R), a form to gather data on safety events (Attachment M), short surveys (Attachments G, H, and Q), and interview protocols (Attachments D, J, T, and V).

5. Statistical Consultants

The survey, sampling approach, and data collection procedures were designed by the RAND Corporation under the leadership of:

Courtney Gidengil, MD, MPH

Project Director

RAND Corporation

1776 Main Street

Santa Monica, CA 90407

Tel: (310)-393-0411 ext. 8637

Sangeeta C. Ahluwalia, PhD

Co-Project Director

RAND Corporation

1776 Main Street

Santa Monica, CA 90407

Tel: (310)-393-0411 ext. 7546

The following individual was consulted on the statistical aspects of the sample and analysis of collected data:

Max Rubenstein

Associate Statistician

RAND Corporation

1776 Main Street

Santa Monica, CA 90407

Tel: (310)-393-0411 ext. 4137

References

Shelton RC, Chambers DA, Glasgow RE. An Extension of RE-AIM to Enhance Sustainability: Addressing Dynamic Context and Promoting Health Equity Over Time. Frontiers in Public Health. 2020 May 12;8.

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| File Title | SUPPORTING STATEMENT |

| Author | wcarroll |

| File Modified | 0000-00-00 |

| File Created | 2024-07-22 |
