B. Round 2 Cognitive Testing Report


Generic Clearance for Emergency Economic Information Collections


OMB: 0607-1019

September 26, 2023 (revised 3.11.24)

Cognitive Testing Support Project: Final Report on Development and Cognitive Testing of Emergency Economic Information Collection (EEIC) Question Bank Phase II

Final Report

Prepared for

Measurement & Response Improvement for Economic Programs

Economic Statistics and Methodology Division

Economy-Wide Statistics Division

The U.S. Census Bureau

Prepared by

Y. Patrick Hsieh, Rachel Stenger, Katherine Blackburn,

Jerry Timbrook, and Chris Ellis

RTI International

3040 E. Cornwallis Road

Research Triangle Park, NC 27709

RTI Project Number 0217758.001.003

Contents

1. Introduction

1.1 Goals and research questions

1.1.1 Cognitive interview methodology

2. Public Sector Testing

2.2 Identifying and developing public sector questions for testing

2.3 Cognitive interview protocol development

2.4 Cognitive Testing Data Collection

2.4.1 Population of interest and sampling strategy

2.4.2 Participant recruitment

2.4.3 Cognitive interviewing procedure

2.5 Analytic strategy and assessment process

2.6.1 Common themes regarding organizational differences for the response process

2.6.2 Common themes regarding cross-sector applicability of question wording

2.6.4 Justification for removing items from the Question Bank

2.7 Finalizing the Question Bank for Public Sector Questions

3. Private Sector Testing

3.2 Identifying and revising private sector questions for retesting

3.3 Cognitive interview protocol development

3.4 Cognitive Testing Data Collection

3.4.1 Population of interest and sampling strategy

3.4.2 Participant recruitment

3.4.3 Cognitive interviewing procedure

3.5 Analytic strategy and assessment process

3.6.1 Common themes regarding organizational level burden

3.6.2 Common themes regarding cross-sector applicability of questions

3.6.3 Common themes regarding the utility of examples

3.6.4 Justification for removing items from the Question Bank

3.7 Finalizing the Question Bank for Private Sector Questions

4. Conclusion

5. References

A. Summary of Final Disposition Codes for Surveys by American Association for Public Opinion Research

B. EEIC Cognitive Interview Protocols for testing with the Public Sector

B-1. EEIC Cognitive Interview Protocol for testing with the Public Sector (Round 1, Wave 1)

B-2. EEIC Cognitive Interview Protocol for testing with the Public Sector (Round 1, Wave 2)

B-3. EEIC Cognitive Interview Protocol for testing with the Public Sector (Round 1, Wave 3)

B-4. EEIC Cognitive Interview Protocol for testing with the Public Sector (Round 2, Wave 1)

C. EEIC Cognitive Interview Protocols for testing with the Private Sector

C-1. EEIC Cognitive Interview Protocol for testing with the Private Sector (Wave 1)

C-2. EEIC Cognitive Interview Protocol for testing with the Private Sector (Wave 2)

C-3. EEIC Cognitive Interview Protocol for testing with the Private Sector (Wave 3)

D. Recruitment and Interview Materials

D-1. Template of Initial Contact Email Used During Cognitive Interview Recruitment

D-2. Template of Confirmation Email Used During Cognitive Interview Appointments

D-3. Consent Form Used During Cognitive Interviews

E. Questions Not Included in the Question Bank (Questions Classified as “Not Acceptable”)

Exhibits

2.1. Instrument Arrangement for EEIC Cognitive Testing with the Public Sector

2.2. Recruitment Outcomes by Testing Rounds for the Public Sector

2.3. EEIC Testing Public Sector Participants Descriptive Statistics

2.4. Classification Criteria for Questions Cognitively Tested

2.5. Summary of Testing Results for Public Sector Testing

3.1. Instrument Arrangement for EEIC Cognitive Testing with the Private Sector

3.2. Recruitment Outcomes by Testing Rounds for the Private Sector

3.3. Outcomes from Information Gathering Research

3.4. EEIC Testing Private Sector Participants Descriptive Statistics

3.5. Summary of Testing Results for Private Sector Testing

Research Objective

Through a contract between WhirlWind Technologies, LLC and RTI International (RTI), the Census Bureau commissioned RTI to develop, cognitively test, and finalize a Question Bank of cognitively tested survey questions appropriate for gauging and monitoring the economic impact on U.S. businesses and organizations of regional, national, or international emergencies (research conducted under OMB number 0607-0725). The final product of this work is Emergency Economic Information Collections (EEIC) Question Bank v1.0, which includes 173 unique questions that can be included in federally administered surveys without further OMB approval; the majority of these questions were cognitively tested with employees of businesses in the private sector while some were tested with employees of agencies in the public sector. EEIC Question Bank v1.0 can be found here.

After the conclusion of EEIC Question Bank v1.0, additional cognitive testing was conducted for EEIC Question Bank v2.0 under two main tasks: (1) testing survey questions with employees of agencies in the public sector and (2) testing survey questions with employees of businesses in the private sector. The survey questions tested with agencies in the public sector included existing questions in EEIC Question Bank v1.0 and newly developed questions. The survey questions tested with businesses in the private sector included questions classified as “further testing required” or “not acceptable for inclusion” after EEIC Question Bank v1.0 testing was completed. After cognitive testing with the public and private sectors was complete, the team determined which questions would enter the EEIC Question Bank v2.0.

Methodology and Outcomes

Task 1: Cognitive Testing with the Public Sector

The goal of this first task was to expand the EEIC Question Bank v1.0’s coverage to include additional items tested with public sector agencies. This was accomplished by (1) testing whether relevant items from Question Bank v1.0 work well with employees from public sector agencies and (2) developing and testing new questions specific to the public sector to increase the bank’s topical coverage.

This process resulted in 28 questions for cognitive testing. These questions were tested across two rounds. All 28 questions were tested with public sector agencies in Round 1. Round 2 was reserved for retesting questions that did not perform well in Round 1. These items were revised between rounds based on analysis of Round 1 interview results.

To complete this testing, 60 cognitive interviews were conducted from December 19, 2022, to July 24, 2023, across four waves and two rounds of data collection (three waves in Round 1 and one wave in Round 2). Each wave of data collection included 15 participants and tested between 8 and 11 questions, grouped by topic.

General findings from cognitive testing included the following:

The organization of public sector agencies affected the response process, especially considering differences in agency size, location (i.e., urban vs. rural), and type of agency (e.g., special district, municipality).

“Paid employees” was a difficult construct for public agencies because there are a variety of positions that include no pay (e.g., volunteers) or limited pay (e.g., elected board members who receive a small stipend).

Public sector respondents struggled to understand technical terms about business operations and language used by human resources (HR), such as capital expenditures and overtime.

Some language did not translate well from the private sector to the public sector, such as references to local governments and schools, and the topics of layoffs, recruitment activities, and demand.

It was difficult to develop new questions to measure concepts of interest focused on specialized language from the Census Bureau, such as agency functions, funding, and authority over an agency’s budget.

After cognitive testing from both rounds, 21 questions were classified as acceptable for use with the public sector and 8 questions as unfit for use with the public sector.

Task 2: Cognitive Testing with the Private Sector

At the conclusion of work on Question Bank v1.0, several items did not perform well enough in cognitive testing to be included in the first version of the bank. It was determined that further revisions and testing were required before these items could be included in the bank. The goals of this second task were to (1) revise these questions to address issues discovered during Question Bank v1.0 testing and (2) retest these revised items for potential inclusion into Question Bank v2.0.

This process resulted in 24 questions for cognitive retesting. Unlike the public sector questions, most of these private sector questions had been tested previously. Therefore, they were tested in only one additional round for Question Bank v2.0.

Forty-five cognitive interviews were conducted from May 11, 2023, to June 19, 2023, across three waves of data collection. Each wave of data collection included 15 participants and tested 8 to 10 questions, grouped by topic.

General findings from cognitive testing included the following:

Companies with multiple establishments, or locations, faced increased response burden for questions that asked them to report about differential changes across establishments.

Findings from the public sector Round 1 testing were applicable to the private sector and helped us uncover some additional concerns about these topics.

Comprehension problems for some items existed across sectors even though the reasons for these difficulties were sector-specific.

It was difficult to adapt all questions to work across industries, especially regarding manufacturers and non-profits.

Including examples for certain terms proved useful.

After cognitive testing, 23 questions were classified as acceptable for inclusion in the Question Bank v2.0 and one question as excluded from the question bank.

Since the onset of the Coronavirus pandemic, the Census Bureau has sought to measure the effect of the pandemic on U.S. businesses through supplemental questions added to several of its recurring business surveys. In 2021, the Census Bureau sought a new generic Office of Management and Budget (OMB) clearance for conducting Emergency Economic Information Collections (EEIC). This generic clearance provided an avenue to collect quality data during future unanticipated emergent events (e.g., natural or humanmade disasters, pandemics or other health emergencies, civil unrest or insurrection, or other emergency events). Through a contract between WhirlWind Technologies, LLC and RTI International (RTI), the Census Bureau commissioned RTI to develop, cognitively test, and finalize a Question Bank of cognitively tested survey questions appropriate for gauging and monitoring the economic impact on U.S. businesses and organizations of regional, national, or international emergencies (research conducted under OMB number 0607-0725). The final product of this work is EEIC Question Bank v1.0, which includes 173 unique questions; the majority of these questions were cognitively tested with employees from businesses in the private sector while some were tested with employees from agencies in the public sector. EEIC Question Bank v1.0 can be found here.

After the conclusion of EEIC Question Bank v1.0, additional cognitive testing was conducted for EEIC Question Bank v2.0 under two main tasks: (1) testing survey questions with agencies in the public sector and (2) testing survey questions with businesses in the private sector. The survey questions tested with public sector agencies included existing questions in EEIC Question Bank v1.0 and newly developed questions. The survey questions tested with private sector businesses included questions classified as “further testing required” or “not acceptable for inclusion” after EEIC Question Bank v1.0 testing was completed. After cognitive testing with the public and private sectors was complete, the team determined which questions would enter the EEIC Question Bank v2.0. This report summarizes the scope of, process for, and outcomes from each of these tasks.

The cognitive testing tasks (both with the private and public sectors) explored whether representatives of businesses/government agencies in the United States understood the survey questions as intended and were able to provide the requested data. This research was conducted under the generic clearance for questionnaire pretesting research (OMB number 0607-0978). The findings from the cognitive interviews enabled the Census Bureau to:

Understand participants’ thought processes and memory demands when answering EEIC questions; and

Understand the record-keeping practices and intraorganizational data retrieval process to answer EEIC questions, reflecting response burden at both individual and organizational levels.

The study results informed the final design of the survey questions and determined which questions would be included in Question Bank v2.0 for the proposed OMB generic clearance for EEIC.

Cognitive interviewing is a method for evaluating survey questions that assesses how participants from a population of interest understand, mentally process, and respond to the questions presented to them (Willis, 2004). Cognitive interviewing involves first identifying the questions to test, then creating probes to use during the interview to evaluate the questions, and finally analyzing the qualitative data collected during the interviews to improve the questions’ construction. The number of interviews required to reach saturation (i.e., the point where sufficient data have been collected to draw valid conclusions) can vary depending on the diversity of the population of interest. Because reaching saturation is impractical given resource, time, and budget constraints, the project team for this study agreed that a minimum of 15 completed interviews would suffice, based on Census Bureau experience testing establishment survey questions across businesses/agencies of different sizes and industries/types that complete economic surveys for the Census Bureau. This cognitive testing project used a purposive sample, selected to meet these criteria (i.e., business size and industry or agency type), based on the research questions. Thus, the resulting sample should not be considered statistically representative, and it is inappropriate to use the sample to make statistical inferences about a specific target population. Furthermore, participants completed this study in an artificial, laboratory-like setting with an abbreviated set of questions asking about the effect of an emergency event on their business. Therefore, these findings may not generalize to realistic survey conditions.

Items in Question Bank v1.0 were mainly tested with employees from private sector businesses, with only a small number of questions tested with public agencies that process and grant permits. The goal of this first task was to expand the bank’s coverage to include additional items tested with public sector employees from across a variety of agencies. This was accomplished by (1) testing whether relevant items from Question Bank v1.0 work well with employees from public sector agencies and (2) writing and testing new questions specific to the public sector.

This process resulted in 28 questions for cognitive testing. These questions were tested across two rounds. All 28 questions were tested with public sector agencies in Round 1. Round 2 was reserved for retesting questions that did not perform well in Round 1. These items were revised between rounds based on analysis of Round 1 interview results.

The sections below detail how questions were developed, tested, analyzed, and (when appropriate) added as items to Question Bank v2.0.

Development of the public sector questions began with a collaborative effort between all members of the project team. Census Bureau economic subject matter experts (SMEs) reviewed items in Question Bank v1.0 (i.e., items that had only been cognitively tested with employees in the private sector) and identified questions that might also be applicable to agencies across the public sector. The SMEs also drafted new questions to expand the topical coverage of public sector items in the Question Bank. These new questions covered topics such as contact information, reasons for permanent closures, changes to budget, and changes to the primary function of the agency. Although the questions included for public agency testing in Question Bank v1.0 focused only on permits, the goal of the Question Bank v2.0 public sector testing was to develop public sector questions that could be used on agency-wide surveys.

After compiling the initial set of draft survey questions, an iterative expert review approach was used to assess and improve the draft questions. First, survey methodologists revised the existing items identified from Question Bank v1.0 to ensure they were applicable to the public sector (e.g., replacing phrases like “goods and/or services” with just “services”). Next, survey methodologists reviewed each of the newly drafted questions and provided recommendations for methodological changes (e.g., question wording, response options, formatting) if questions did not conform to best practices in the survey methodology literature (Dillman et al., 2014). SMEs then provided further recommendations for revision and questions were remediated using this feedback, which was followed by one final review. This process resulted in 28 questions (22 from Question Bank v1.0, 6 new) moving on to the next step in the research process.

The 28 questions were organized into three waves (Exhibit 2.1), based on question topic. Most questions were tested in only one wave. However, there were two notable reasons for exceptions.

First, during the identification and development process, small revisions were made to the questions selected from Question Bank v1.0 (i.e., questions originally tested with the private sector) to ensure that they were applicable to the public sector (e.g., replacing phrases like “goods and/or services” with just “services”). However, three of the questions selected from Question Bank v1.0 ask about concepts that differ between the private and public sectors. To maximize our opportunity to collect sufficient participant feedback, we wanted a larger analytic sample for these questions. Therefore, we tested these three questions across two waves rather than just one. The qualitative findings from this larger sample allowed us to draw stronger conclusions about these questions.

Second, two of the newly written questions asked about a complicated concept (i.e., changes to an organization’s funding/finances because of a natural disaster). We similarly desired a larger analytic sample to allow us to make stronger conclusions about results for these questions and tested them across two waves rather than just one.

After Round 1 cognitive testing was completed, eight questions were selected to be retested in Round 2, during one wave of data collection.

Exhibit 2.1. Instrument Arrangement for EEIC Cognitive Testing with the Public Sector

| Testing group | No. of questions tested |
|---|---|
| Round 1, Wave 1 | 8 |
| Round 1, Wave 2 | 7 |
| Round 1, Wave 3 | 8 |
| Round 1, questions tested across multiple waves | 5 |
| Round 1 total | 28 |
| Round 2 | 8 |

Once the draft questions were organized into waves within each round of testing, a protocol was developed for each wave’s cognitive interview. The EEIC cognitive interviews followed a semi-structured interview protocol using the concurrent probing technique. Appendix B includes all cognitive interviewing protocols used with the public sector sample.

When developing the protocols, the fill variables denoted in the draft questions (e.g., “In <time period>” or “<business/agency/etc.>”) had to be operationalized for testing. To keep the operationalization consistent across the study, the wording chosen for each fill depended on the context of the individual question, the projected timeframe within which the interviews would be conducted, and any findings from previous waves of testing.

Questions were also written for testing using the generic phrase “the natural disaster” as the wording for the <event> fill. During the cognitive interview, interviewers explained to participants that this phrase was a placeholder that would be replaced with something more specific once the questions were finalized. This allowed interviewers to guide participants to think about whatever event was most relevant to their agency.
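To illustrate how fills might be operationalized consistently within a wave, the minimal Python sketch below substitutes chosen wording into a question template. Only the <fill> notation and the placeholder “the natural disaster” come from this report; the question text, the fill names, the wording choices, and the `operationalize` helper are hypothetical.

```python
import re

# Matches a fill such as <time period> or <event>; the fill name is captured.
FILL_PATTERN = re.compile(r"<([^<>]+)>")


def operationalize(template: str, fills: dict[str, str]) -> str:
    """Replace every <fill> in a question template with its chosen wording.

    Raises KeyError if the template uses a fill with no wording chosen, so a
    missing decision is caught before the question reaches an instrument.
    """
    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in fills:
            raise KeyError(f"no wording chosen for fill <{name}>")
        return fills[name]

    return FILL_PATTERN.sub(substitute, template)


# Hypothetical question and wording choices for one wave of testing.
template = "In <time period>, did <agency> close any facilities because of <event>?"
wave_fills = {
    "time period": "the past 12 months",
    "agency": "your agency",
    "event": "the natural disaster",  # placeholder wording used during testing
}
print(operationalize(template, wave_fills))
```

With these assumed choices, the sketch prints “In the past 12 months, did your agency close any facilities because of the natural disaster?” Keeping the wording choices in one mapping per wave is one way to apply the same operationalization to every question in that wave.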

Probing questions were written to focus on each step in the survey response process for establishment surveys (Bavdaž, 2010; Bavdaž et al., 2015; Jenkins & Dillman, 1997; Tourangeau et al., 2000; Willimack & Snijkers, 2013):

Perception (Does the respondent see the question?)

Comprehension (Does the respondent understand the meaning of terms and phrases used in the question and response options?)

Retrieval (Does the respondent have difficulty locating the information required to answer the question—either from their own memory, from another employee at the company, or from databases/records?)

Judgment (Does the respondent have any difficulty forming their answer? Do they edit their answer?)

Response (Does the respondent have any difficulty mapping their answer onto the available response options?)

Some probing questions were written to ask respondents about organizational-level burden (Bavdaž, 2010); that is, agency practices, policies, and other record-keeping or information retrieval processes that occur at the agency level (e.g., Does the agency maintain a record of the requested information? How does the respondent retrieve the record, and what level of effort is needed?). These questions were asked hypothetically (e.g., If you were answering this question on a Census Bureau survey, would you know the answer right away, would you make a guess, or would you consult records or other colleagues?). However, during the interviews, some respondents went to the database where the record was stored so that they could provide an actual answer to the survey question. Organizational-level burden was a key concept because the questions being tested might be added to existing Census Bureau surveys, and the goal was to limit respondent burden.

As described in Section 2.1, the questions identified for Round 1 testing with the public sector included both questions from Question Bank v1.0 and newly written questions. For the newly written questions, probes focused on all steps in the response process. For questions from Question Bank v1.0, probes mainly focused on comprehension and individual- and organizational-level burden. For Round 2 testing, probing questions were written to focus on changes made to the question after testing in Round 1.
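The rules above for choosing which parts of the response process to probe can be summarized as a small lookup. The Python sketch below is illustrative only; the source labels `"new"` and `"qb1"` and the `probe_focus` function are hypothetical, while the five steps and the focus areas come from the text.

```python
from enum import Enum


class Stage(Enum):
    # Steps in the establishment-survey response process targeted by probes.
    PERCEPTION = "perception"
    COMPREHENSION = "comprehension"
    RETRIEVAL = "retrieval"
    JUDGMENT = "judgment"
    RESPONSE = "response"


def probe_focus(source: str, round_number: int) -> set[str]:
    """Return the probe focus areas for one question.

    source: "new" for newly written questions or "qb1" for items carried over
    from Question Bank v1.0 (hypothetical labels).
    """
    if round_number == 2:
        # Round 2 probes target the revisions made after Round 1.
        return {"changes since Round 1"}
    if source == "new":
        # Newly written questions: probe every step in the response process.
        return {stage.value for stage in Stage}
    if source == "qb1":
        # Question Bank v1.0 items: comprehension plus both levels of burden.
        return {Stage.COMPREHENSION.value,
                "individual-level burden", "organizational-level burden"}
    raise ValueError(f"unknown question source: {source!r}")
```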

The population of interest for public sector cognitive testing consisted of local government agencies that had previously responded to Census Bureau surveys such as the Government Units Survey (GUS). These local government agencies are of five types: county, municipal, township, special district, and school district. The sampling frame was a list of local agencies on the Government Master Address File, a Census Bureau–maintained universe of state and local governments. The frame included contact information for the past respondent at a given agency, typically name, address, phone number, and email address. However, not every agency record identified a point of contact; some included only more limited information, such as a phone number and/or email address. The frame also included some essential agency information, such as the agency type and, for special districts, the agency function.

To prepare for the agency sample recruitment, the frame was randomly partitioned into replicates of about 100 agencies each, stratified by agency type. After the replicates were created, each replicate was reviewed for duplicate contact persons, which occur when one person is responsible for reporting for multiple agencies. Each contact person was contacted only once, about the agency that had been selected at random when the replicates were created. Additionally, housing authorities were initially included in the sampling file but were later made ineligible for interviews after it was discovered that some housing authorities operate as private businesses. The replicates were then contacted, initially by email and later by phone, and invited to participate in the research study (see Section 2.4.2 for details).
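The replicate preparation described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the procedure actually run on the Government Master Address File: the `Agency` record and its field names are hypothetical, housing authorities are filtered up front for simplicity (whereas in the study they were removed only after the issue was discovered), and stratification is approximated by shuffling each agency type and dealing it round-robin across replicates of about 100. Duplicate contact persons are resolved after the replicates are built, keeping the first randomly placed agency for each person.

```python
import math
import random
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Agency:
    # Hypothetical frame record; field names are illustrative only.
    agency_id: str
    agency_type: str              # e.g., "county", "municipal", "special district"
    contact: Optional[str]        # named point of contact, if the frame lists one
    is_housing_authority: bool = False


def build_replicates(frame: list[Agency], target_size: int = 100,
                     seed: int = 0) -> list[list[Agency]]:
    rng = random.Random(seed)
    eligible = [a for a in frame if not a.is_housing_authority]
    n_replicates = max(1, math.ceil(len(eligible) / target_size))

    # Stratify by agency type: shuffle each stratum, then deal it round-robin so
    # every replicate receives a similar mix of agency types.
    strata: dict[str, list[Agency]] = defaultdict(list)
    for agency in eligible:
        strata[agency.agency_type].append(agency)
    replicates: list[list[Agency]] = [[] for _ in range(n_replicates)]
    offset = 0
    for agency_type in sorted(strata):
        members = strata[agency_type]
        rng.shuffle(members)
        for i, agency in enumerate(members):
            replicates[(offset + i) % n_replicates].append(agency)
        offset += len(members)  # stagger the start so small strata spread out
    for replicate in replicates:
        rng.shuffle(replicate)  # random order within each replicate

    # Contact each named person only once: keep the first (randomly placed)
    # agency for that person and drop the rest.
    seen: set[str] = set()
    deduplicated: list[list[Agency]] = []
    for replicate in replicates:
        kept = []
        for agency in replicate:
            if agency.contact is not None:
                if agency.contact in seen:
                    continue
                seen.add(agency.contact)
            kept.append(agency)
        deduplicated.append(kept)
    return deduplicated
```

Agencies without a named point of contact are never treated as duplicates in this sketch, since there is no person to match on.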

As stated in Section 1.1.1, this project used a purposive sample; therefore, the resulting sample should not be considered statistically representative, and it is inappropriate to use the sample to make statistical inferences about a specific target population.

As described in Section 1.1.1, the goal of participant recruitment was to conduct 15 interviews per wave, with an estimated length of 45 minutes per interview.

The project team recruited participants and conducted cognitive interviews with public sector employees from December 14, 2022, to January 25, 2023, in Round 1 and from June 30, 2023, to July 24, 2023, in Round 2. School districts were excluded from recruitment in Round 2 because data collection took place during the summer months, when points of contact at school districts would be difficult to reach.

Recruitment efforts focused on contacting and recruiting participants from one replicate of sampled agencies at a time. We emailed an invitation to participate to the sampled agencies in a replicate, and within about 1 week of sending the first email, a recruiter called the sampled agency. All recruiters and interviewers used Census Bureau email accounts when contacting sampled participants. We sent a second email to sampled agencies that did not respond to the first email and phone call. All contact attempts were addressed to the contact person identified in the sampling file, when available. Sampled agencies that agreed to participate by phone or email were then scheduled for an interview, and sampled agencies that refused by phone or email were removed from further contact.
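The per-agency contact sequence described above can be sketched as a small decision function. This is a minimal Python illustration, not the project’s recruitment system: the `Outcome` categories, the action labels, and `next_action` are hypothetical, and ending the sequence after an unanswered second email is an assumption, since the text does not say what followed within a round (some nonresponding agencies were contacted again in Round 2).

```python
from enum import Enum, auto
from typing import Optional


class Outcome(Enum):
    # Hypothetical result of a single contact attempt.
    NO_RESPONSE = auto()
    AGREED = auto()
    REFUSED = auto()


# Attempts in the order described: first email, a call within about one week,
# then a second email if neither drew a response.
CONTACT_SEQUENCE = ("first email", "phone call", "second email")


def next_action(outcomes: list[Outcome]) -> Optional[str]:
    """Return the next recruitment action for one sampled agency, or None.

    outcomes holds the result of each attempt made so far, in order.
    """
    if Outcome.REFUSED in outcomes:
        return None  # refusals are removed from further contact
    if Outcome.AGREED in outcomes:
        return "schedule interview"
    if len(outcomes) < len(CONTACT_SEQUENCE):
        return CONTACT_SEQUENCE[len(outcomes)]
    return None  # assumption: sequence ends after an unanswered second email
```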

In cases where the original past respondents were no longer the point of contact for the sampled agency (i.e., the listed contact person was retired, deceased, had changed positions, or was no longer employed at the agency) or no point of contact was provided, interview staff attempted to identify the replacement and seek their cooperation in the cognitive interview. However, some of these new employees indicated that they lacked the knowledge or confidence to answer our questions and declined to participate.

Recruitment for the two rounds of testing overlapped, such that some agencies that were contacted in Round 1 but did not participate in an interview or refused to participate were contacted again in Round 2. Overall, staff were able to establish contact (i.e., completed interview, refused, no-show, withdrawal during interview, and rescheduled) with approximately 16% of the eligible sampled agencies in Round 1 and approximately 24% in Round 2. In addition, we were able to complete interviews with approximately 8% of all eligible sampled agencies in Round 1 and approximately 9% in Round 2. See Exhibit 2.2 and Appendix A for details about the recruitment outcomes for the study.

Exhibit 2.2. Recruitment Outcomes by Testing Rounds for the Public Sector

| Outcome | Round 1 (Waves 1–3) | Round 2 (Wave 1) |
|---|---|---|
| Recruitment start date | 12/14/2022 | 6/30/2023 |
| Interview start and end dates | 12/19/2022–1/25/2023 | 7/10/2023–7/24/2023 |
| Number of sampled units with at least one contact attempt | 565 | 171 |
| Number of unknown eligibility* | 13 | 4 |
| Number of ineligible* | 0 | 0 |
| Total number of eligible cases** | 552 | 167 |
| Number of refused* | 31 | 16 |
| Number of no-shows* | 10 | 5 |
| Number of withdrawals* | 4 | 4 |
| Number of completed interviews* | 45 | 15 |
| Number of eligible cases, non-contact* | 462 | 127 |
| Cooperation rate*** | 8.2% | 9.0% |
| Contact rate**** | 16.7% | |

*See Appendix A for the definition of each final disposition code (AAPOR, 2016).

**For these purposes, a sampled unit was considered eligible if at least one email was sent and the email did not bounce back, regardless of whether the phone number was called.

***AAPOR Cooperation Rate 1 (COOP1), or the minimum cooperation rate, is the percentage of eligible cases with a completed interview.

****AAPOR Contact Rate 3 (CON3) is the percentage of eligible cases in which some responsible member of the sampled unit was reached by the survey (i.e., completed interview, refused, no-show, withdrawal during interview, and rescheduled).

Note: The number of sampled units where contact was attempted was not mutually exclusive by round. As recruitment efforts overlapped between rounds, sampled units may have been contacted during multiple rounds. The number of completed interviews is mutually exclusive by round; each sampled unit could be interviewed only once.
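The cooperation and contact rates defined in the footnotes reduce to simple ratios over the eligible cases. The minimal Python sketch below applies those definitions, as worded in the footnotes, to the counts in Exhibit 2.2; the `Dispositions` container is hypothetical, and this is not AAPOR’s own calculator. Rescheduled cases are not reported separately in the exhibit, so the sketch takes them as an input that defaults to zero; contact rates computed this way may therefore differ slightly from published figures that account for dispositions not shown in the exhibit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dispositions:
    """Final disposition counts for one round (hypothetical container)."""
    attempted: int            # sampled units with at least one contact attempt
    unknown_eligibility: int
    ineligible: int
    refused: int
    no_shows: int
    withdrawals: int
    completes: int
    rescheduled: int = 0      # not reported separately in Exhibit 2.2; assumed 0

    @property
    def eligible(self) -> int:
        # Attempted units minus those of unknown eligibility and those ineligible.
        return self.attempted - self.unknown_eligibility - self.ineligible

    @property
    def reached(self) -> int:
        # Eligible cases in which someone at the sampled unit was reached.
        return (self.completes + self.refused + self.no_shows
                + self.withdrawals + self.rescheduled)

    @property
    def noncontact(self) -> int:
        return self.eligible - self.reached

    def cooperation_rate(self) -> float:
        # COOP1 as worded in the footnote: completed interviews / eligible cases.
        return self.completes / self.eligible

    def contact_rate(self) -> float:
        # CON3 as worded in the footnote: reached cases / eligible cases.
        return self.reached / self.eligible


round1 = Dispositions(attempted=565, unknown_eligibility=13, ineligible=0,
                      refused=31, no_shows=10, withdrawals=4, completes=45)
round2 = Dispositions(attempted=171, unknown_eligibility=4, ineligible=0,
                      refused=16, no_shows=5, withdrawals=4, completes=15)

for label, counts in (("Round 1", round1), ("Round 2", round2)):
    print(f"{label}: eligible={counts.eligible}, non-contact={counts.noncontact}, "
          f"COOP1={counts.cooperation_rate():.1%}, CON3={counts.contact_rate():.1%}")
```

Applied to the exhibit’s counts, the eligible and non-contact totals (552 and 462 for Round 1; 167 and 127 for Round 2) and the cooperation rates (8.2% and 9.0%) match the exhibit.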

Participant recruitment challenges

The sample for the Question Bank v2.0 effort included all types of government agencies, rather than only those that process and grant permits, as in Question Bank v1.0 testing. In Question Bank v1.0, we had an exceptionally high cooperation rate (21.1%) with the permit agencies and expected a similarly high rate for a general agency sample. However, this proved not to be true: cooperation rates were closer to those of the private sector sample than expected (as illustrated in Exhibit 2.2). It is unclear why the general agency sample had a substantially lower cooperation rate than the permit agencies.

In addition to the overall low cooperation rate, there were other challenges experienced with recruiting public sector agencies. When contacting each agency and explaining the topic of the interview as the impacts of natural disasters, many points of contact wanted to direct us to speak with their local government emergency coordinators. In these cases, the point of contact did not believe they were the best fit for the interview topic and wanted to direct us to the appropriate person. After explaining that the goal of the interviews was to speak with the person who normally fills out the Census Bureau surveys, we were able to convert some of these participants to complete an interview, but not all.

Another issue was that some points of contact report for multiple agencies, especially in rural areas, where one person may wear many different hats. As described in Section 2.4.1, we removed instances where multiple agencies had the same point of contact listed to simplify the recruitment process. We contacted each person only once and asked about their experiences reporting for one given agency. During the interview, data were collected on how many agencies each participant reported for, and interviewers asked the participant to focus on how they would report for the given agency. Most of the completed interviews were with participants who reported for only one agency.

All cognitive interviews were conducted remotely (as opposed to in person) using Microsoft Teams. When faced with technical difficulties, some interviews were completed through a direct phone call to the participant. Participants and interviewers used audio only and were not required to turn on their video cameras.

The study consent and the testing instrument for each wave, including an introduction to the study, were programmed as stand-alone web instruments, hosted by Qualtrics. Once a participant was scheduled with an interviewer, the participant was sent an email confirming the appointment. This email contained the link to the consent form and a link to the testing instrument. In addition to the protocol and survey questions themselves, other study materials included invitation and confirmation emails, along with the consent form that had been approved by the Census Bureau’s Policy Coordination Office and OMB and used by Census Bureau researchers. See Appendix D for recruitment and interview materials, including templates of the invitation and confirmation emails that recruiters used (Appendices D-1 and D-2) and the programmed consent form (Appendix D-3).

During each interview, the interviewer went through the following procedure with the participants:

Verified that the participant filled out the consent form and re-verified consent to participate and consent to be recorded.

Began recording the interview (with participant consent) and re-verified consent to participate and consent to be recorded with the recording turned on.

Instructed the participant to navigate to the Qualtrics survey and reviewed the introduction page. During this introduction, the interviewer explained the purpose of the interview and instructed the respondent to “think aloud” as they answered (i.e., tell the interviewer what they are thinking about as they are reading and answering each question, noting if anything is confusing or unclear to them) and provided an example of thinking aloud.

Asked the participant several background information questions, including the type of agency, the participant’s title, and their years of experience at the agency.

Asked the participant about their previous experience with natural disasters or other emergency events and encouraged the participant to either think about that experience or think hypothetically about a future event while answering the questions.

Reviewed questions and probes with the participant, using concurrent probing (i.e., participants reviewed a question, interviewer asked all probing questions for that survey question, then participant moved on to the next question).

Asked concluding questions. These included asking whether participants had any other comments about the survey questions, asking for additional information about organizational-level burden (e.g., whose input they would need to answer the questions and how much effort it would take to coordinate with those people), and asking what device the participant was using to answer the survey.

Thanked the participant for their time and ended the recording and interview.

After the interview concluded, interviewers uploaded the recording of the interview to a secure folder within the Census Bureau’s protected network and entered their notes from the interview into a combined spreadsheet for each wave. Sixty cognitive interviews were conducted across both rounds of testing, with interviews lasting an average of approximately 35–40 minutes. The study did not offer monetary incentives for research participation, which is the Census Bureau’s usual practice for cognitive interviews with agencies. See Exhibit 2.3 for descriptive statistics of participants by round of testing.

Exhibit 2.3. EEIC Testing Public Sector Participants Descriptive Statistics

| | Round 1 (Waves 1–3) | Round 2 (Wave 1) |
|---|---|---|
| Number of interviews | 45 | 15 |
| Length of interview (in minutes) | | |
| Average (Std. Dev.) | 40.0 (9.5) | 35.9 (12.4) |
| Min | 25 | 18 |
| Max | 64 | 60 |
| Agency Type | | |
| County | 2 (4.4%) | 1 (6.7%) |
| Township | 6 (13.3%) | 2 (13.3%) |
| Municipal | 12 (26.7%) | 6 (40.0%) |
| School District | 2 (4.4%) | 0 (0.0%) |
| Special District | 23 (51.1%) | 6 (40.0%) |
| Role/Title of participant | | |
| Management (e.g., Director, Manager, Administrator, Supervisor) | 22 (48.9%) | 7 (46.7%) |
| Accounting/Finance (e.g., Secretary/Treasurer, bookkeeper, payroll) | 9 (20.0%) | 3 (20.0%) |
| Clerk | 8 (17.8%) | 4 (26.7%) |
| Other (e.g., Superintendent, Consultant, Trustee, Commissioner) | 6 (13.3%) | 1 (6.7%) |
| Length of time at agency (in years) | | |
| Average (Std. Dev.) | 9.1 (10.7) | 7.3 (7.2) |
| Median | 4.75 | 6 |
| Mode | 2 | 4 |
| Time Zone (of participant) | | |
| Central | 18 (40.0%) | 8 (53.3%) |
| Eastern | 14 (31.1%) | 3 (20.0%) |
| Mountain | 5 (11.1%) | 1 (6.7%) |
| Pacific | 8 (17.8%) | 3 (20.0%) |
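The round-level means in Exhibit 2.3 can be used to check the approximately 35–40 minute average reported for all 60 interviews. Each round's mean is weighted by its number of interviews. This assumes each round's mean was computed over all of that round's interviews. Because the published means are rounded to one decimal place, the pooled figure is approximate.

$$
\bar{x}_{\text{pooled}} = \frac{n_1\bar{x}_1 + n_2\bar{x}_2}{n_1 + n_2} = \frac{45(40.0) + 15(35.9)}{45 + 15} = \frac{1800 + 538.5}{60} = \frac{2338.5}{60} \approx 39.0 \text{ minutes}
$$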

As explained previously, interviewers wrote detailed notes after each interview and entered them into a centralized Excel spreadsheet, which was used to analyze the data collected about each question. This qualitative analysis used the survey response process (Bavdaž, 2010; Bavdaž et al., 2015; Jenkins & Dillman, 1997; Tourangeau et al., 2000; Willimack & Snijkers, 2013) as a guiding framework for understanding issues that may prevent respondents from easily producing appropriate or accurate answers to the questions (i.e., problems preventing quick completion of the response process). This is the same framework used to develop the cognitive interview protocols.

Using this framework, methodologists analyzed the interview notes of individual questions to reveal issues that may prevent survey respondents from producing appropriate or accurate answers with a reasonable level of effort. Methodologists also considered the type of government agency during analysis to discover if there were any types of agencies that may have more difficulty with the questions. For example, respondent burden may be low for a small government agency with a staff of three but high for a large municipality with a staff of 150.

Based on the assessment of the findings, staff classified each question into one of three categories: few minor issues, some minor and/or moderate issues, or multiple moderate and/or major issues. These classifications reflected both the quantity (i.e., few, some, multiple) and the severity (i.e., minor, moderate, major) of the issues uncovered during testing. Minor issues were generally problems in the response process mentioned by only one participant, or easy-to-fix problems that did not affect burden and still allowed participants to reach a final answer consistent with the question’s intended meaning. Moderate issues were problems in the response process that were mentioned by multiple participants and suggested increased response burden while still allowing participants to reach a final answer; these answers, however, were not always consistent with the intended meaning of the question. Some questions were also classified as having moderate issues when we needed SMEs to weigh in on the problems observed during testing; the SME input helped us decide whether the issues were too severe for the question to be included in Question Bank v2.0. Major issues were problems that stopped the response process and prevented participants from reaching a final answer (e.g., the requested data do not exist in records, or participants’ comprehension of key terms varies widely). The general criteria for classifying questions are summarized in Exhibit 2.4, which details the quantity and severity of problems associated with each classification.

Exhibit 2.4. Classification Criteria for Questions Cognitively Tested

| Few Minor Issues | Some Minor and/or Moderate Issues | Multiple Moderate and/or Major Issues |
|---|---|---|
| Clear to most participants | Not clear to some participants | Not clear to most participants |
| Small number of minor, easy-to-fix issues | Multiple minor issues and/or a small number of moderate issues | Multiple moderate issues and/or at least one major issue |
| Most participants could easily produce and enter a final answer | Some participants had difficulty producing or entering a final answer | Most participants had difficulty producing or entering a final answer |
| | | Question had a high degree of organizational level burden |
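The issue-count row of Exhibit 2.4 can be read as an ordered rule in which severity outranks quantity: any major issue places a question in the most serious category, regardless of how few other issues it has. The Python sketch below encodes only that row, and it is illustrative only. The names `IssueTally` and `classify`, and the cutoff of three for "multiple," are hypothetical. The report's actual classifications were qualitative judgments that also weighed clarity, answer production, organizational burden, and SME input.

```python
from dataclasses import dataclass

# Hypothetical cutoff: the report does not quantify "multiple"; 3 is assumed here.
MULTIPLE = 3


@dataclass
class IssueTally:
    """Counts of issues observed for one tested question (hypothetical structure)."""
    minor: int = 0
    moderate: int = 0
    major: int = 0


def classify(tally: IssueTally, multiple: int = MULTIPLE) -> str:
    # Severity is checked first: at least one major issue, or "multiple"
    # moderate issues, puts the question in the most serious category.
    if tally.major >= 1 or tally.moderate >= multiple:
        return "Multiple moderate and/or major issues"
    # A small number of moderate issues, or multiple minor ones,
    # falls in the middle category.
    if tally.moderate >= 1 or tally.minor >= multiple:
        return "Some minor and/or moderate issues"
    # Otherwise only a small number of minor issues remain.
    return "Few minor issues"
```

For example, `classify(IssueTally(minor=1, moderate=1))` returns `"Some minor and/or moderate issues"`, because the single moderate issue outweighs the low overall count.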

In addition to the testing summaries and the assessment of individual questions, staff proposed recommendations for further improvement for most questions regardless of their classification, including a new draft of the revised version when needed. For some questions, multiple revised versions were provided so that the project team could discuss them and choose which revised version should move forward (either to the Question Bank or to Round 2 testing). The recommendations also included instructions advising users of the Question Bank on how to administer EEIC survey questions for their research.

In each round of testing, the results of the analysis for each tested question and the recommended question changes were reviewed to determine whether any adjustments were needed. The project team met to discuss these results and decide on next steps for each question. The goals for Round 1 were to (1) identify questions that were acceptable for inclusion in the Question Bank for the public sector, either as is or with very minor changes, and could move on to the Question Bank without further testing; (2) identify and diagnose questions that would need further revision and testing in Round 2; (3) identify questions that were not acceptable for use with the public sector even though they worked well for the private sector; and (4) deliberate on and finalize any revisions to be implemented in the next steps.

Round 2 analysis focused on a final decision for each question. Either (1) the implemented changes addressed the issues identified in Round 1 and the question was included in the Question Bank, or (2) the question in its current form continued to exhibit issues such that any recommended revisions would require additional testing, so it was not ready for inclusion in the Question Bank. Because no further testing is planned at this time, we did not recommend changes for items that continued to exhibit severe issues.

This section begins by summarizing the assessment of testing outcomes and the final decisions on the questions by round and wave of testing. It then discusses common themes identified across individual testing waves, followed by a diagnosis of the potential reasons some questions performed too poorly for the team to include them in the Question Bank.

Exhibit 2.5 summarizes the testing results of the questions tested for the public sector for Question Bank v2.0. After Round 1, the team determined that 14 questions had few or no issues and could be included in the Question Bank either as is or with minor revisions. The team also identified seven questions that had varying degrees of issues to be addressed. These questions underwent additional revision and then moved on to Round 2 for retesting. In addition to these seven questions, one question was drawn from the existing private sector questions in Question Bank v1.0 for testing with the public sector, after the question initially chosen for the public sector tested poorly. Seven questions that performed poorly during Round 1 were excluded from the Question Bank. See Section 2.6.4 for justifications for excluding questions from the Question Bank and Appendix E for the details of these questions.

After Round 2, analysis supported including seven additional questions in the Question Bank and excluding one question that continued to perform poorly and would require further revision and testing. This resulted in 21 questions recommended for use with the public sector, in addition to the questions added during Question Bank v1.0.

Some of the issues uncovered during testing with the public sector during Round 1 raised new concerns about three questions already in Question Bank v1.0 after being tested with the private sector. These three questions were retested with the private sector and findings from both private and public sector testing were considered when making final recommendations. See Section 3.6 for further discussion of the private sector testing.

Exhibit 2.5. Summary of Testing Results for Public Sector Testing

| | # Qs tested | Assessment: Few Issues | Assessment: Some Issues | Assessment: Major Issues | Decision: Accepted to Q Bank | Decision: Moved to Round 2 | Decision: Excluded from Q Bank |
|---|---|---|---|---|---|---|---|
| Round 1 | | | | | | | |
| Wave 1 | 8 | 5 | 3 | 0 | 6 | 2 | 0 |
| Wave 2 | 7 | 2 | 5 | 0 | 4 | 2 | 1 |
| Wave 3 | 8 | 2 | 3 | 3 | 3 | 2 | 3 |
| Multiple waves | 5 | 0 | 2 | 3 | 1 | 1 | 3 |
| Round 1 Total | 28 | 9 | 13 | 6 | 14 | 7 | 7 |
| Round 2 | 8 | 1 | 6 | 1 | 7 | n/a | 1 |
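The counts in Exhibit 2.5 can be reconciled with a few lines of arithmetic. In every row, both the assessment classifications and the final decisions sum to the number of questions tested. The Round 1 wave rows sum to the Round 1 total. The 14 questions accepted after Round 1, plus the 7 accepted after Round 2, give the 21 questions recommended for the public sector. The Python sketch below transcribes the exhibit and checks these relationships. Recording the inapplicable Round 2 "moved" cell as 0 is an assumption made so the row can be checked like the others.

```python
# Columns: tested, few, some, major, accepted, moved to Round 2, excluded
ROUND_1_WAVES = {
    "Wave 1": (8, 5, 3, 0, 6, 2, 0),
    "Wave 2": (7, 2, 5, 0, 4, 2, 1),
    "Wave 3": (8, 2, 3, 3, 3, 2, 3),
    "Multiple waves": (5, 0, 2, 3, 1, 1, 3),
}
# "Moved to Round 2" does not apply to Round 2; it is recorded here as 0.
ROUND_2 = (8, 1, 6, 1, 7, 0, 1)


def row_is_consistent(row):
    """Each tested question gets exactly one classification and one decision."""
    tested, few, some, major, accepted, moved, excluded = row
    return few + some + major == tested and accepted + moved + excluded == tested


# Column-wise sum of the wave rows should reproduce the "Round 1 Total" row.
round_1_total = tuple(sum(column) for column in zip(*ROUND_1_WAVES.values()))

# Questions recommended for the public sector and excluded, across both rounds.
accepted_overall = round_1_total[4] + ROUND_2[4]
excluded_overall = round_1_total[6] + ROUND_2[6]
```

Here `round_1_total` evaluates to `(28, 9, 13, 6, 14, 7, 7)` and `accepted_overall` to 21. Round 2's eight questions are the seven moved forward from Round 1 plus the one private sector question added for public sector testing.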

As in the previously completed private sector testing, we observed several essential differences among public sector agencies depending on the size and type of government agency. One stark contrast was between small, rural agencies and large, urban agencies. The organizational structure and functionality of larger, multi-function agencies are similar to those of private businesses or organizations. In contrast, the organizational structures of smaller, rural single- and multi-function agencies are more diverse than those of larger agencies. Many smaller, rural agencies rely on volunteers to run them, and some have no paid employees. Smaller, rural special district agencies typically exist to perform one specific function in the community, such as sourcing ambulances for the county or managing the cemetery. Sometimes the duties of these small agencies are limited, such as monthly meetings or yearly budget reviews.

As mentioned earlier when describing the recruitment process in Section 2.4.1, some special districts have one point of contact serving across multiple small agencies. Meanwhile, larger, multi-function agencies, such as municipalities or townships, act much more like private businesses, with many paid employees operating typically out of a central location. These larger, multi-function agencies provide many different services to the community, such as utilities, parks, public transportation, and other general government services.

These differences by size and agency type mean that asking one question that works well across all public sector agencies can be very difficult. We primarily observed issues for small special district agencies, which are highly specialized and vary significantly in their organizational structure. Findings were discussed with the SMEs to understand how the Census Bureau typically accommodates the varying structure of agencies. The challenges observed with asking questions across agencies were similar to the challenges that the Census Bureau often faces with surveying government agencies. Additionally, the SMEs provided context about the known measurement error that they face in their estimates, especially around topics such as number of paid employees and expenses. Additional notes regarding these findings were added to the accepted public questions in Question Bank v2.0 to ensure survey designers can consider all relevant information when selecting questions.

In addition to differences among the types and sizes of agencies, we also saw key differences from the private sector, primarily around questions about closures. Almost all public agencies interviewed indicated it would be highly unlikely that they would close, even temporarily, during a natural disaster. Instead, these agencies’ role tends to expand during natural disasters as they take on additional functions within the community, such as distributing disaster supplies or converting government buildings (e.g., libraries, office space) into shelters. Public agencies also reported that it was typical for staff (paid or volunteer) to work as much as possible, including significant overtime, to help the community manage the impacts of the disaster and recover. Although there may still be instances when asking about closures is important, these questions likely have limited analytic utility for the public sector; however, this will depend on the type of natural disaster.

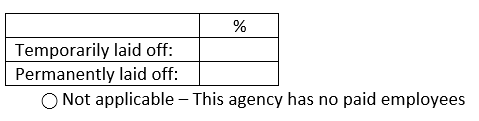

Paid employees as a question construct

Although the concept of paid employees was well-understood for larger, multi-function agencies, small, rural agencies tended to have more confusion about this term. In small, rural agencies, especially special districts, there might only be a handful of staff, some or all of whom operate as volunteers and do not receive any pay. Additionally, when a natural disaster strikes, public agencies in rural areas reported that there was usually an “all hands on deck” reaction, with both staff from the agency and volunteers from the community working together to keep the agency functioning and provide additional community services. This was the case for rural areas regardless of whether there were any paid employees associated with the agency. Some agencies also operate only with elected board members, with each board member receiving a small stipend associated with the position. Some participants at these agencies with board members pushed back on the idea that board members would be considered paid employees because they did not receive an ongoing salary.

Q03_B From April 1, 2023 to June 30, 2023, what percentage of this agency’s paid employees (workers who received a W-2) were temporarily and permanently laid off as a result of the natural disaster? Include:

Exclude:

Temporarily laid off workers are those who have been given a date to return to work or who are expected to return to work within 6 months. Permanently laid off workers have no expectation of being rehired within 6 months. Estimates are acceptable. Enter 0 if no layoffs.


Technical terms about business operations

With public agencies, we saw some additional issues with technical terms that we had not observed as frequently in the private sector. Public sector respondents, especially those at special district agencies in rural areas, are less likely to be knowledgeable about HR and finance terms. Many of these agencies do not have HR, accounting, or finance employees whom respondents could consult when answering Census Bureau survey questions. We did see issues with these terms in the private sector when the point of contact was not in the relevant department (i.e., HR or finance). However, those respondents typically had someone else at their company to consult. Public sector respondents do not always have that option.

Q52 As a result of the natural disaster, how did this agency change its budgeted capital expenditures from July 1, 2022 to September 30, 2022? Select all that apply.

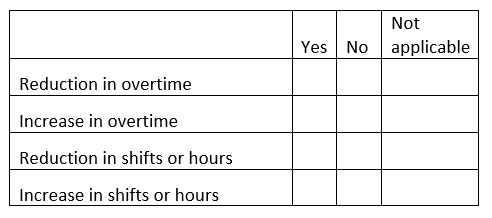

Q20 From July 1, 2022 to September 30, 2022, as a result of the natural disaster, did this agency take the following actions related to shifts or hours?


Another term that public sector participants struggled with was “overtime.” The intent of question Q20 (above) was to ask whether the amount of time staff worked had changed, but public sector participants did not consistently understand the word “overtime.” Several participants mentioned that “overtime” was not the term they would use. Either a more applicable term existed for their agency, or their source of funding excluded them from using overtime. “Overtime” has a very specific definition and applies only to certain employees (i.e., typically those who are non-exempt). We suspect that the lack of traditional HR staff serving as points of contact in the public sector affects comprehension of the term. The points of contact who do respond to public sector Census Bureau surveys may not know the employment status of all agency staff or understand “overtime” as intended. After consulting with the SMEs about these problems, we decided not to recommend this question for use with the public sector.

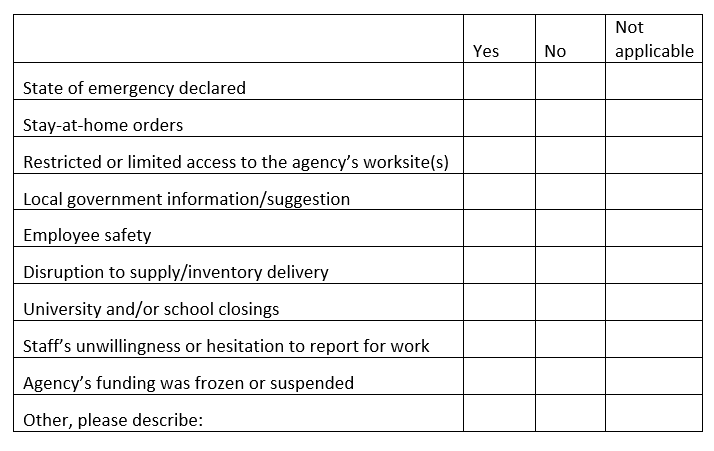

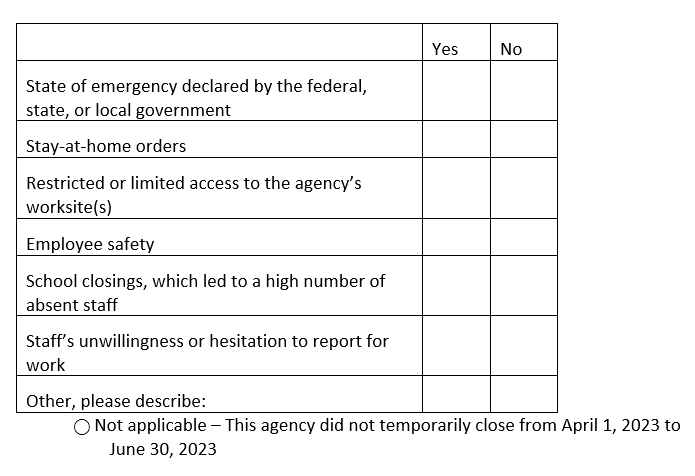

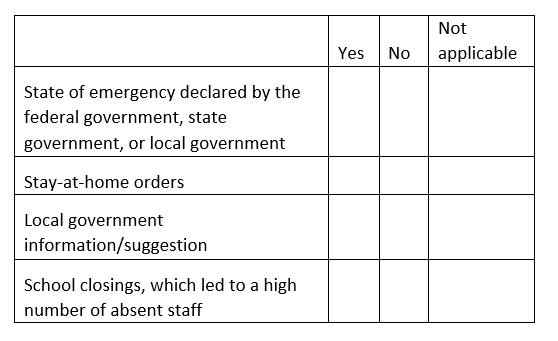

Q101 – Round 1 From July 1, 2022 to September 30, 2022, did the following factors related to the natural disaster influence this agency’s decision to close temporarily?

Q101 – Round 2 From July 1, 2022 to September 30, 2022, did the following factors related to the natural disaster influence this agency’s decision to close temporarily?


This question was retested with changes to these items for both the public and private sectors. We adjusted the wording of several items and dropped those that did not seem to work in the public sector context (see above). In Round 2, comprehension improved for “state of emergency declared by the federal, state, or local government.” However, participants continued to struggle with the item “school closings, which led to a high number of absent staff,” since schools are also part of the public sector. The private sector findings (outlined in Section 3.6.1) also indicated reporting difficulties for large businesses and comprehension issues. Taking both sets of findings into account, we recommended removing this item from the Question Bank for both the public and private sectors.

Q22 From July 1, 2022 to September 30, 2022, did this agency have less difficulty, no change, or more difficulty in recruiting paid employees compared to what was normal before the natural disaster?

Q29 From July 1, 2022 to September 30, 2022, how much did demand for this agency’s services change compared to what was normal before the natural disaster?


Recruitment activities were also not very relevant for public agencies, which do not typically engage in recruitment like private businesses with headhunters, job fairs, and other activities. Instead, public agencies interpreted “recruitment” to include a variety of hiring activities, including posting job ads, interviewing, and retaining employees. For this question about recruitment (Q22, above), we adjusted the concept of the question to instead focus specifically on hiring, and it tested well in the second round of testing and is recommended for use with the public sector in Question Bank v2.0.

Another concept that some participants struggled to understand was “demand” in Q29 (above). This concept is typically well understood in the private sector as a key aspect of business, but some participants from public sector agencies struggled to apply “demand” to the services they provide. These problems were limited, and after Round 1 testing we felt confident we could move forward with “demand.” However, after input from the SMEs, we decided to test the concept of “need” in Round 2. Unfortunately, “need” did not work well because of comprehension challenges around the phrase “did need” in the question stem. After discussion with SMEs and considering the findings from both rounds of testing, we felt comfortable moving forward with the term “demand” for the public sector. We also added notes to Question Bank v2.0 to reflect the potential issues some agencies may encounter.

New Question Q21 – Round 1 From July 1, 2022 to September 30, 2022, did the natural disaster cause this agency’s primary function to change?

New Question Q21 – Round 2 From April 1, 2023 to June 30, 2023, did the natural disaster cause this agency to add additional functions? Examples of functions are Fire Protection, Sewage and Water Supply, Utility, etc.

[IF YES:] Please describe the function(s) added: __________

New Question Q13 From July 1, 2022 to September 30, 2022, did the natural disaster affect this agency’s funding to pay its employees?


New Question Q14 Did the authority over this agency’s budget change as a result of the natural disaster?


Questions were removed from consideration for the Question Bank when testing identified substantial issues. See Appendix E for all questions that were removed from consideration for the Question Bank. Some questions were excluded after Round 1 testing if the question could not be re-written to overcome substantial problems with the response process identified during testing (e.g., construct too complex or vague, construct does not match experience of public sector). Feedback from the Census Bureau confirmed that these questions were not high-priority constructs for the Question Bank v2.0. Examples of questions that were not included after Round 1 testing are outlined in the previous sections (2.6.1, 2.6.2, 2.6.3).

We revised other questions after Round 1 and then re-tested them in Round 2. Questions were eliminated after Round 2 if testing determined that they continued to pose problems for the response process and that further revision would require additional cognitive testing before being added to the Question Bank. The only question that we removed after Round 2 testing was W2Q21 about changes in the primary functions of an agency, as outlined above in Section 2.6.3.

The project team updated the EEIC Question Bank v1.0 by completing additional cognitive testing and using the findings to develop Question Bank v2.0. EEIC Question Bank v2.0 includes a description of the additional cognitive testing that was completed for 28 questions with employees from agencies in the public sector.

For the 23 questions from Question Bank v1.0 (i.e., questions that had already been tested with employees in the private sector) that were tested with public sector agency employees across both rounds, notes from this new testing were added to that question’s entry in the Question Bank. Information included in these notes is described below:

A note indicating that the question was cognitively tested with employees from agencies in the public sector.

Whether cognitive testing supported or did not support using the question on a survey with the public sector.

If cognitive testing did not support using the question on a survey with the public sector, a brief description of the findings from testing.

If cognitive testing did support using the question on a survey with the public sector, instructions about any wording changes necessary when using with the public sector (as opposed to the private sector).

Of the six newly developed public sector questions, three were categorized as “Acceptable for the Question Bank” (as described in Section 2.3.2 and Section 2.4) and a new entry was added to the EEIC Question Bank v2.0 using the same template as Question Bank v1.0.

At the conclusion of work on Question Bank v1.0, several private sector items had not performed well enough in cognitive testing to be included in the first version of the bank. It was determined that further revision and testing were required before these items could be included. The goals of this second task were to (1) revise these questions to address issues discovered during Question Bank v1.0 testing and (2) retest the revised items for potential inclusion in Question Bank v2.0.

This process resulted in 24 questions for cognitive retesting. Unlike the public sector questions, the private sector questions had been tested previously, and some had been tested twice before with varying revisions. Therefore, these 24 private sector questions were tested in only one final round for Question Bank v2.0.

The sections below detail how questions were identified, revised, retested, analyzed, and (when appropriate) added to Question Bank v2.0.

Project staff members collaboratively reviewed 26 questions that were tested as a part of the Question Bank v1.0 project but were classified as either “further testing required” or “not acceptable for inclusion” and not included in the bank. As a part of this review, staff assessed testing and analysis notes that were created as a part of the Question Bank v1.0 project for each question. Census Bureau economic SMEs were also asked to determine whether each question would be applicable to their division, other divisions, or other agencies/groups, if revised. Ten questions that were not applicable to these groups were dropped from further consideration.

Survey methodologists then revised the remaining 16 draft questions to incorporate testing and analysis notes from Question Bank v1.0. As a part of this revision process, four new questions were created to address methodological concerns (e.g., addressing double-barreled questions by creating two new questions). It was also determined that one question under consideration would benefit from being asked after another question already published in Question Bank v1.0, so these questions were tested as a gate and a follow-up.

Most of these 21 questions were applicable to all industries, but two questions required testing with specific industries. One question about inventories was industry-specific and applicable only to manufacturers. Another question about net profits included an instruction for use by non-profits. The inclusion of these two questions for testing required recruiting specifically for manufacturers and non-profits, as described further in Section 3.4.2.

Notably, private sector retesting was conducted after Round 1 of public sector testing (see Section 2). Based on findings from Round 1 public sector testing, project staff revised three additional questions that had been tested for and included in Question Bank v1.0. These revisions allowed the project team to test whether insights gained during public sector testing of these three questions would also apply to the private sector.

This identification and revision process resulted in 24 questions moving on to the next step in the research process.

The 24 questions were organized into three waves based on question topic (Exhibit 3.1). Most questions were tested in only one wave. However, there were three notable reasons for exceptions. First, as described in Section 3.2, one question applied only to the manufacturing industry. We included this question for manufacturers in all three waves to collect sufficient feedback from a larger analytic sample. Second, as described in Section 3.2, one question included an instruction for non-profits. Because this question applied across industries and only its instruction was industry-specific, we included it in two waves to allow for feedback on the instruction specifically.

Third, Question Bank v1.0 contains some phrases, definitions, or instructions that are shared across multiple items in the bank (e.g., multiple items use the same instructions for reporting profit). Two of the 24 questions tested in this task used one of these phrases, definitions, or instructions that are shared across other questions in Question Bank v1.0. During the revision process, these two questions were revised by updating the wording of the shared phrases/definitions/instructions. If the updates to these phrases/definitions/instructions tested well for these two questions, this would support the need to update all questions that use these particular phrases/definitions/instructions in v1.0 of the bank. Therefore, to allow for stronger conclusions derived from a larger analytic sample, these two questions were tested across two waves rather than just one.

Once the draft questions were organized into waves within each round of testing, cognitive interview protocols were developed for each wave using the same approach developed for the public sector testing detailed in Section 2.3. As described in Section 3.1, the questions identified for testing with the private sector included questions excluded from Question Bank v1.0 in previous testing, newly written questions, and questions tested with the public sector. Because most of these questions had been tested previously, probing questions were written to focus on the changes made to each question since it was last tested. For new questions, however, probes focused on all steps of the response process.

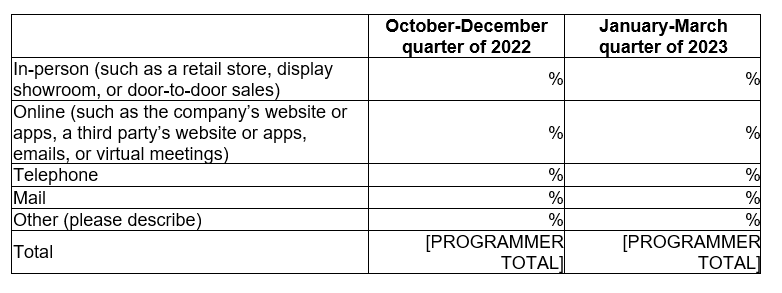

Exhibit 3.1. Instrument Arrangement for EEIC Cognitive Testing with the Private Sector

| Testing Group | No. of Questions Tested |
|---|---|
| Round 1 | |
| Wave 1 | 6 |
| Wave 2 | 7 |
| Wave 3 | 8 |
| Questions tested across multiple waves | 3 |
| Round 1 Total | 24 |

The population of interest for private sector cognitive testing consisted of businesses that had previously responded to one or more of the following Census Bureau business surveys:

Services Annual Survey (SAS17)

Annual Capital Expenditures Survey (ACES)

Annual Wholesale Trade Survey (AWTS17)

Annual Retail Trade Survey (ARTS17)

Manufacturers’ Unfilled Orders Survey (M3UO14)

Annual Survey of Manufactures (ASM)

To recruit private sector employees, we continued to use the sampling file from the Question Bank v1.0 testing. For that earlier testing, Census Bureau staff from the Economy-Wide Statistics Division provided a sampling frame: a list of businesses that had previously responded to at least one of these surveys. The frame included contact information for the past respondent at each business, typically name, address, phone number, and email address. It also included essential business information, such as the business's industry classification under the North American Industry Classification System (NAICS) and payroll size.

During that earlier testing, project staff prepared the sample file for recruitment by removing duplicates and randomly partitioning the frame into replicates of about 100 businesses each, stratified on payroll size category (based on the Census Bureau's classification). Units in payroll size categories A (≥50,000,000) and B (10,000,000–49,999,999) were excluded from the sample for two reasons: first, there were so few companies in these categories that we could not guarantee anonymity; second, the experiences of these large companies are likely not applicable economy-wide.
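The report does not say exactly how the stratified partitioning was carried out. The sketch below shows one plausible way to do it, assuming a simple round-robin allocation: deduplicate, drop categories A and B, shuffle each payroll stratum, and deal records across replicates so that each replicate mirrors the frame's payroll mix. The `FrameRecord` layout, field names, and `make_replicates` function are hypothetical illustrations, not the project's actual code.

```python
import random
from collections import defaultdict
from dataclasses import dataclass


# Hypothetical record layout: the report does not specify the frame's field names.
@dataclass(frozen=True)
class FrameRecord:
    business_id: str
    payroll_category: str  # Census Bureau payroll size category code, e.g. "A", "B", "C"


# Categories A (>=50,000,000) and B (10,000,000-49,999,999) were excluded.
EXCLUDED_CATEGORIES = {"A", "B"}


def make_replicates(frame, replicate_size=100, seed=0):
    """Partition a sampling frame into replicates of roughly `replicate_size`
    businesses, stratified on payroll size category."""
    rng = random.Random(seed)

    # 1. Remove duplicate businesses, keeping the first record for each ID.
    seen = set()
    unique = []
    for rec in frame:
        if rec.business_id not in seen:
            seen.add(rec.business_id)
            unique.append(rec)

    # 2. Drop the largest payroll size categories.
    eligible = [r for r in unique if r.payroll_category not in EXCLUDED_CATEGORIES]
    n_replicates = max(1, round(len(eligible) / replicate_size))

    # 3. Group records by payroll size category (the strata).
    strata = defaultdict(list)
    for rec in eligible:
        strata[rec.payroll_category].append(rec)

    # 4. Shuffle each stratum and deal its records round-robin across replicates,
    #    continuing the rotation between strata so replicate sizes differ by at most one.
    replicates = [[] for _ in range(n_replicates)]
    slot = 0
    for category in sorted(strata):
        members = strata[category]
        rng.shuffle(members)
        for rec in members:
            replicates[slot % n_replicates].append(rec)
            slot += 1
    return replicates
```

Dealing records round-robin within each shuffled stratum keeps every payroll category spread nearly evenly across replicates, so working one replicate at a time draws a comparable mix of business sizes.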

For testing of Question Bank v2.0, staff contacted replicates that had not been used in the Question Bank v1.0 testing. These replicates were contacted first by email and later by phone and invited to participate in the research study (see Section 3.4.2 for details). As stated in Section 1.1.1, this project used a purposive sample; therefore, the resulting sample should not be considered statistically representative, and it is inappropriate to use it to make statistical inferences about a specific target population.

Continuing to use the Question Bank v1.0 sampling list ensured that recruitment for Question Bank v2.0 would not sample and recontact businesses that had already been contacted or had completed a cognitive interview during the Question Bank v1.0 testing. Before data collection began, 50 cases were randomly selected for additional online research to assess the accuracy of the contact information. One staff member researched each company and attempted to establish three key pieces of information: (1) whether the business was closed, (2) whether the listed contact person still worked at the company, and (3) whether a new contact person could be identified. The results of this effort are discussed in Section 3.4.2 under participant recruitment challenges. Ultimately, we concluded that additional online research before calling cases would improve neither contact rates nor, ultimately, cooperation rates. Instead, the typical contact procedure described in Section 3.4.2 was followed.

As described in Section 1.1.1, the recruitment goal was to complete 15 interviews per wave, with an estimated length of 45 minutes per interview.

Project staff began recruiting participants on May 8, 2023, and conducted interviews with private sector employees from May 11, 2023, to June 19, 2023. Recruitment efforts focused on one replicate of sampled businesses at a time. We emailed each business in a replicate an invitation to participate in the interview, and within about 1 week of the first email, a recruiter called the sampled business. All recruiters and interviewers used Census Bureau email accounts when contacting sampled participants. We sent a second email to sampled businesses that did not respond to the first email and phone call. Sampled businesses that agreed to participate by phone or email were then scheduled for an interview, and sampled businesses that refused by phone or email were removed from further contact.
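The contact protocol above is effectively a short decision sequence. The sketch below encodes it, assuming that each attempt ends in one of three outcomes (no response, agreed, refused). The `Outcome` enum, step names, and `next_action` function are hypothetical labels chosen for illustration, and the roughly one-week spacing between steps is not modeled.

```python
from enum import Enum


class Outcome(Enum):
    NO_RESPONSE = "no_response"
    AGREED = "agreed"
    REFUSED = "refused"


# Hypothetical step names for the sequence described above: first email,
# phone call about 1 week later, then a second email to non-responders.
CONTACT_SEQUENCE = ("first_email", "phone_call", "second_email")


def next_action(outcomes):
    """Return the next action for a sampled business, given the outcomes of the
    contact attempts made so far, in order."""
    for outcome in outcomes:
        if outcome is Outcome.AGREED:
            return "schedule_interview"    # agreed by phone or email
        if outcome is Outcome.REFUSED:
            return "remove_from_contact"   # refusals receive no further contact
    if len(outcomes) < len(CONTACT_SEQUENCE):
        return CONTACT_SEQUENCE[len(outcomes)]
    return "end_of_sequence"               # all attempts used without a response
```

For example, a business that ignored the first email but declined on the phone call (`[Outcome.NO_RESPONSE, Outcome.REFUSED]`) is removed rather than sent the second email.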

In cases where the original past respondent was no longer the point of contact for the sampled business (i.e., the listed contact person had retired, was deceased, had changed positions, or was no longer employed at the business), staff attempted to identify the replacement and seek that person's cooperation in the cognitive interview. However, some of these replacements said they lacked the knowledge or confidence to answer our questions and declined to participate.

Because of the content of some questions tested with the private sector, we made a specific effort to recruit employees in the manufacturing and non-profit industries, as described in Section 3.3. Recruitment for these industry-specific cases relied on the NAICS codes in the sampling file (described in Section 3.4.1): codes starting with 31–33 for manufacturers and 813 for non-profits. The goal for manufacturers was to gain cooperation from four manufacturers per wave across the three waves that included the manufacturing-specific inventory question, for a total of 12 manufacturers. The goal for non-profits was to gain cooperation from two non-profits per wave across the two waves that included the question with the non-profit instruction, for a total of four non-profits. During Question Bank v1.0 testing, we had similar recruitment goals for manufacturers but never attempted to recruit non-profits specifically. During Question Bank v2.0 testing, we found that non-profits readily agreed to participate, and we ultimately recruited more non-profits than the original goal. We completed 12 interviews with manufacturers, seven with non-profits, and 26 with businesses in all other industries.
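The industry targeting above reduces to a NAICS prefix check plus per-wave quotas. The sketch below shows one way to express it, assuming NAICS codes are stored as strings; `recruitment_group`, `RECRUITMENT_GOALS`, and `goal_status` are hypothetical names. As in the text, the 813 prefix is used as the recruitment proxy for non-profits.

```python
def recruitment_group(naics_code):
    """Assign a sampled business to a recruitment group by NAICS prefix:
    31-33 for manufacturers and 813 for the non-profit group."""
    code = str(naics_code).strip()
    if code[:2] in {"31", "32", "33"}:
        return "manufacturer"
    if code.startswith("813"):
        return "non-profit"
    return "other"


# Goals from the text: 4 manufacturers in each of 3 waves,
# 2 non-profits in each of the 2 waves with the non-profit instruction.
RECRUITMENT_GOALS = {
    "manufacturer": 4 * 3,
    "non-profit": 2 * 2,
}


def goal_status(completed):
    """Completed interviews minus the goal for each targeted group
    (positive values mean the goal was exceeded)."""
    return {group: completed.get(group, 0) - goal
            for group, goal in RECRUITMENT_GOALS.items()}


completed = {"manufacturer": 12, "non-profit": 7, "other": 26}
print(goal_status(completed))  # {'manufacturer': 0, 'non-profit': 3}
```

With the reported counts, the manufacturer goal is met exactly, the non-profit goal is exceeded by three, and the 45 completed interviews equal the 15-per-wave goal across three waves.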

Overall, staff established contact (i.e., completed interview, refused, no-show, withdrawal during interview, or rescheduled) with approximately 15% of the eligible sampled businesses and completed interviews with approximately 5% of them. See Exhibit 3.2 and Appendix A for details about the recruitment outcomes for the study.

Exhibit 3.2. Recruitment Outcomes by Testing Rounds for the Private Sector

| | Round 1 (Waves 1–3) |
|---|---|
| Recruitment start date | 5/8/2023 |
| Interviews start and end dates | 5/11/2023–6/19/2023 |
| Number of sampled units with at least one contact attempt | 961 |
| Number of unknown eligibility* | 23 |
| Number of ineligible* | 20 |
| Total number of eligible cases** | 918 |
| Number of refused* | 76 |
| Number of no-shows* | 10 |
| Number of withdrawals* | 6 |
| Number of completed interviews* | 45 |
| Number of eligible cases, non-contact* | 781 |
| Cooperation rate*** | 4.9% |
| Contact rate**** | 14.9% |

* See Appendix A for the definitions of each final disposition code (AAPOR 2016)

**For these purposes, a sampled unit was considered eligible if at least one email was sent, and the email did not bounce back, regardless of whether the phone number was called.

***AAPOR Cooperation Rate 1 (COOP1), or the minimum cooperation rate, is the percentage of eligible cases with a completed interview.

****AAPOR Contact Rate 3 (CON3) is the percentage of eligible cases in which some responsible member of the sampled unit was reached by the survey (i.e., completed interview, refused, no-show, withdrawal during interview, and rescheduled).
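The summary figures in Exhibit 3.2 can be recomputed from the disposition counts using the footnote definitions as stated above. The sketch assumes that no cases ended as rescheduled, since Exhibit 3.2 lists no rescheduled count; this assumption is consistent with the reported 781 non-contact cases. `recruitment_rates` is a hypothetical helper.

```python
def recruitment_rates(attempted, unknown_eligibility, ineligible,
                      completed, refused, no_shows, withdrawals, rescheduled=0):
    """Recompute the Exhibit 3.2 summary figures from final disposition counts,
    using the definitions in the exhibit footnotes."""
    eligible = attempted - unknown_eligibility - ineligible
    # Reached = completed interview, refused, no-show, withdrawal, or rescheduled.
    contacted = completed + refused + no_shows + withdrawals + rescheduled
    return {
        "eligible": eligible,
        "contacted": contacted,
        "non_contact": eligible - contacted,
        "cooperation_rate_pct": round(100 * completed / eligible, 1),
        "contact_rate_pct": round(100 * contacted / eligible, 1),
    }


rates = recruitment_rates(attempted=961, unknown_eligibility=23, ineligible=20,
                          completed=45, refused=76, no_shows=10, withdrawals=6)
print(rates)
# {'eligible': 918, 'contacted': 137, 'non_contact': 781, 'cooperation_rate_pct': 4.9, 'contact_rate_pct': 14.9}
```

Both rates use the 918 eligible cases as the denominator. This is why the roughly 5% completion figure in the text is a share of eligible sampled businesses and not of the 137 businesses that were reached.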

Participant recruitment challenges. As outlined in Section 3.4.1, we began recruitment with an experiment to evaluate whether additional upfront online research would improve contact rates and make recruitment calls more productive. We did not request a new sample file because we wanted to avoid contacting any person or business that had been contacted for Question Bank v1.0. However, we had concerns about the accuracy of the information in the sample, especially given the challenges we had already faced with outdated information during testing for Question Bank v1.0. We were concerned that recruiting the needed number of interviews might require more effort and time, primarily because of turnover among the points of contact.

One option we considered was to add a step to the recruitment process: researching each business online to confirm the contact person, and then focusing subsequent recruitment efforts only on contacts we could confirm. To evaluate this approach, one staff member reviewed the information available online for 50 businesses to determine whether the business was still open, whether the listed contact person still worked at the business, and whether a new contact person could be identified. The recruiter then worked these 50 cases as usual: one email, followed by one phone call attempt, followed by one final email. The hypothesis was that businesses whose contact people could be confirmed online would be more productive, which would make the additional upfront research worth the time and effort. However, the contact rate was no better for contacts confirmed online than for contacts that were not confirmed. The outcome of this effort is summarized in Exhibit 3.3. Contacts confirmed online were reached at a rate of 55%, and contacts not confirmed online were reached at a rate of 57%. Additionally, once a business was reached, the percentage of listed contact persons we could confirm were still the point of contact for the business was similar for both groups: 83% for those we confirmed online and 81% for those we did not confirm online.

In addition to the information presented in Exhibit 3.3, all three interviews scheduled from the recruitment of these 50 cases came from contacts that could not be confirmed online, and all three were small businesses. We suspect that businesses whose employees are not listed online are most commonly small operations that may have no online presence at all. We also tend to gain more cooperation from smaller businesses, which may help explain why the interviews we did recruit from these 50 cases all came from small businesses we were unable to confirm online. Another notable outcome of this research was the difference in refusal rates between contacts who could and could not be confirmed online. Among contacts who could not be confirmed (n=28), six refused to participate, a refusal rate of 21%. Among contacts who were confirmed (n=22), only three refused (14%). Given the small sample, we cannot draw firm conclusions from these refusal rates, but the difference is worth noting.

After reviewing all the findings from this effort, we concluded that additional online research before recruitment calls would likely not lead to a higher contact rate.

Exhibit 3.3. Outcomes from Information Gathering Research

| Outcome | n | Percentage |
|---|---|---|
| Number of contacts confirmed online | 22 | 44% |
| Contacted | 12 | 55% |
| Contacted: contact confirmed by phone | 10 | 83% |
| Contacted: contact no longer employed | 1 | 8% |
| Contacted: unknown | 1 | 8% |
| Not contacted | 10 | 45% |
| Number of contacts not confirmed online | 28 | 56% |
| Contacted | 16 | 57% |
| Contacted: contact confirmed by phone | 13 | 81% |
| Contacted: contact no longer employed | 3 | 19% |
| Contacted: unknown | 0 | 0% |
| Not contacted | 12 | 43% |
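Exhibit 3.3 mixes two percentage bases. The contacted, not-contacted, and refusal figures are shares of each group's total. The phone-confirmation outcomes are shares of the businesses actually reached. The sketch below reproduces the exhibit's percentages and the refusal rates quoted in the text from the raw counts. `GroupOutcomes`, `pct`, and `summarize` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GroupOutcomes:
    """Counts for one row group of Exhibit 3.3 (field names are illustrative)."""
    total: int
    contacted: int
    confirmed_by_phone: int
    no_longer_employed: int
    unknown: int
    refused: int


def pct(part, whole):
    """Percentage rounded to a whole number, as in the exhibit."""
    return round(100 * part / whole)


def summarize(group):
    # Contacted/not contacted and refusals use the group total as the base;
    # phone-confirmation outcomes use the number contacted as the base.
    return {
        "contacted": pct(group.contacted, group.total),
        "not_contacted": pct(group.total - group.contacted, group.total),
        "confirmed_by_phone": pct(group.confirmed_by_phone, group.contacted),
        "no_longer_employed": pct(group.no_longer_employed, group.contacted),
        "unknown": pct(group.unknown, group.contacted),
        "refused": pct(group.refused, group.total),
    }


confirmed_online = GroupOutcomes(22, 12, 10, 1, 1, refused=3)
not_confirmed_online = GroupOutcomes(28, 16, 13, 3, 0, refused=6)
for label, group in [("confirmed online", confirmed_online),
                     ("not confirmed online", not_confirmed_online)]:
    print(label, summarize(group))
```

Keeping the two bases separate matters. Because each group has only 12 or 16 reached businesses, a single case shifts the phone-confirmation percentages by roughly 6 to 8 points, which is one reason the comparison between groups is treated cautiously.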