2020/25 Beginning Postsecondary Students (BPS:20/25) Field Test

2020/25 Beginning Postsecondary Students (BPS:20/25) Full-Scale Study

Appendix D Qualitative Testing Summary v20

OMB: 1850-0631

Appendix D

Qualitative Testing Summary

Submitted by

National Center for Education Statistics

U.S. Department of Education

September 2023

Contents

Topic 1: Introduction to Survey 11

Topic 2: Federal Student Loan Forgiveness 11

Ease or Difficulty to Understand or Answer 11

Familiarity with Terminology 12

Awareness of Debt Relief Plan 13

Eligibility Requirements and Qualifications 14

Topic 3: Licensure/Certifications 15

Describing licenses and certifications 15

Defining license and certification 16

Cost of license/certification 16

Familiarity with Terminology 17

Topic 4: Data Collection Text Messages 17

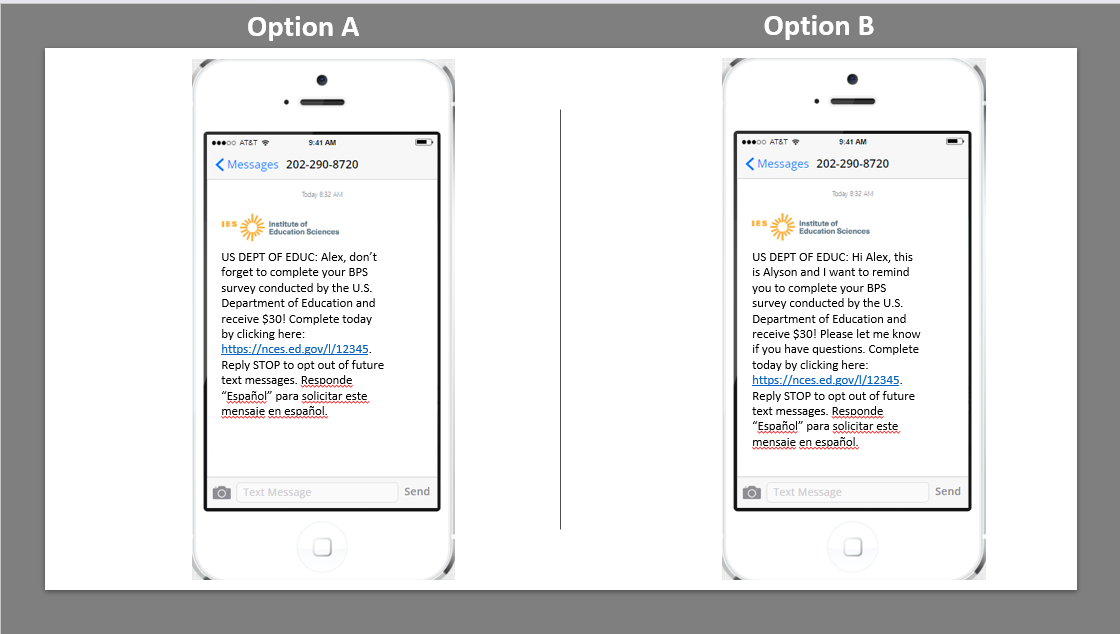

Standardized versus Personalized 17

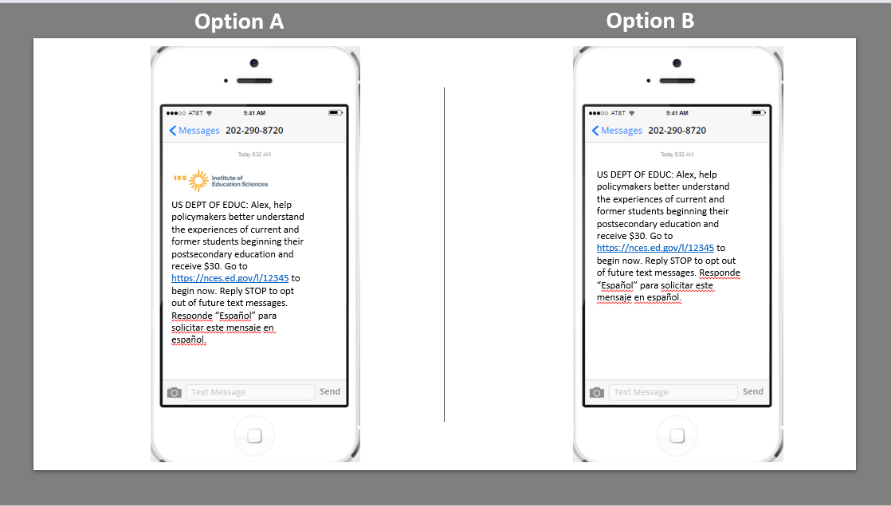

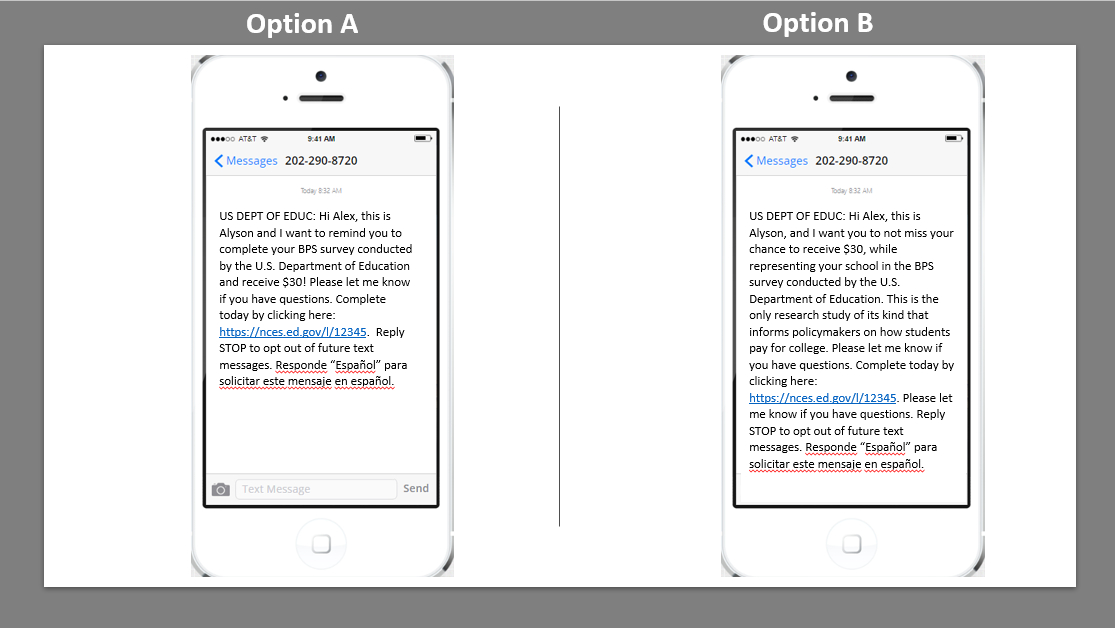

Personalized Regular versus Personalized Tailored Communication Plan 21

Likeliness to Respond to Survey 22

Tables

Figures

Figure 1. Poll – Familiar with One-time Loan Forgiveness Plan Terminology

Figure 2. Standardized versus Personalized text message

Figure 3. Results of first polling question on text message comparison

Figure 4. Logo versus No Logo text message

Figure 5. Results of second polling question on text message comparison

Figure 6. Regular versus Tailored text message

Figure 7. Results of third polling question on text message comparison

Background

The National Center for Education Statistics (NCES) is preparing the second longitudinal follow-up to the cross-sectional 2019-20 National Postsecondary Student Aid Study (NPSAS:20), which examines the characteristics of students in postsecondary education, with special focus on how they finance their education. The Beginning Postsecondary Students Longitudinal Study (BPS:20/25) is the second follow-up survey with a subsample of NPSAS:20 sample members who were identified as first-time beginning college students (FTBs) during the 2019-20 academic year. Data from BPS are used to help researchers and policymakers better understand how financial aid influences persistence and completion, what percentages of students complete various degree programs, the early employment and wage outcomes of certificate and degree attainment, and why students leave postsecondary education.

To improve the quality of the data collected, RTI International, on behalf of NCES, contracted with EurekaFacts to conduct virtual focus group sessions to discuss a subset of questions covered in the BPS:20/25 survey related to either federal loans or licensure and certification. Additionally, each focus group was asked to evaluate contact materials and methodologies related to data collection strategies. Full details of the focus group components were originally approved in April 2023, OMB# 1850-0803 v.336. The focus group results will be used to refine the BPS:20/25 survey questions, maximize the quality of data collected, and provide information on issues with important implications for the survey design, including:

whether respondents can provide accurate data;

the extent to which terms in questions are understood;

whether terminology needs to be updated or added;

the thought processes used to arrive at answers to survey questions;

the appropriateness of response categories to questions; and

sources of burden and respondent stress.

Executive Summary

Introduction

The present study focuses on the Beginning Postsecondary Students Longitudinal Study (BPS:20/25) student survey, investigating issues and preferences regarding item formulation and comprehension as well as pinpointing sources of substantial participant burden.

Sample

Participants were selected from a database of postsecondary students in the United States and were recruited to obtain a diverse sample in terms of gender, ethnicity, race, education level, and socio-economic status. Participants must have been enrolled in a college, university, or trade school for the first time between July 2018 and June 2022. A total of 27 postsecondary students participated in focus group sessions, which were conducted remotely between May 23, 2023 and June 15, 2023. See Study Design below for more information.

Key Findings

Overall, participants were able to answer the subset of questions for their respective survey (i.e., questions on federal loans or on licensure and certification). A list of the questions included for each focus group can be found in Attachment 1 at the end of this document. There were slight difficulties, such as recalling specific details and needing clarification of terminology, but participants completed the surveys without major hurdles.

For the federal loans group, two main themes emerged:

A majority of participants understood the basics of the Debt Relief Plan and were more familiar with names that reference the current administration (e.g., ‘Biden-Harris Administration’s Student Debt Relief Plan’ or ‘Biden Student Loan Forgiveness’) than with more generic phrasing such as ‘White House Student Debt Relief Plan.’

Most participants applied for the Debt Relief Plan, even when they were uncertain whether they would qualify.

As for the licensure and certifications group, three main themes emerged:

Participants expressed that the terms "license" and "certification" were suitable since no other words were provided in the survey to describe them.

Participants were unsure whether the college courses they completed for their certificate should be considered part of the overall cost of their license/certification.

Participants were not familiar with the concepts of "income sharing agreements" or "deferred tuition."

The participants' opinions of the displayed text messages yielded three key takeaways:

The inclusion of a logo was found to enhance the perceived legitimacy of the messages.

Participants favored brevity, so it is important to keep the text short and concise.

Participants emphasized the significance of highlighting the monetary incentive within the text messages, recognizing its role in capturing attention and engagement.

Study Design

Sample

The sample comprised 27 postsecondary students who enrolled in a college, university, or trade school for the first time between July 2018 and June 2022. Participants were recruited to obtain a diverse sample in terms of gender (77.8 percent female, 14.8 percent male, 7.4 percent who preferred not to answer), ethnicity (88.9 percent not Hispanic or Latino, 11.1 percent Hispanic or Latino), race (33.3 percent White, 25.9 percent Asian, 22.2 percent Two or more races, 7.4 percent Black or African American, 11.1 percent who preferred not to answer), education level (85.2 percent Bachelor’s degree, 14.8 percent Associate’s degree), and socio-economic status (as determined by income: 29.6 percent high, 59.3 percent low). Participant demographics are presented in table 1.

Table 1. Participant Demographics

| Participant Demographics | Loan Group (n = 13) | Certification Group (n = 14) | Total (n = 27) |
|---|---|---|---|
| Gender | | | |
| Male | 2 | 2 | 4 |
| Female | 10 | 11 | 21 |
| Prefer not to answer | 1 | 1 | 2 |
| Age | | | |
| 18-24 | 13 | 13 | 26 |
| 25-29 | 0 | 1 | 1 |
| Hispanic/Latino Origin | | | |
| Yes | 2 | 1 | 3 |
| No | 11 | 13 | 24 |
| Race | | | |
| Asian | 4 | 3 | 7 |
| Black or African American | 0 | 2 | 2 |
| White | 4 | 5 | 9 |
| Two or More Races | 5 | 1 | 6 |
| Prefer not to answer | 0 | 3 | 3 |
| Income | | | |
| <$20,000 | 5 | 6 | 11 |
| $20,000 - $49,000 | 1 | 4 | 5 |
| $50,000 - $99,999 | 4 | 3 | 7 |
| $100,000 or more | 0 | 1 | 1 |
| Prefer not to answer | 3 | 0 | 3 |
| Current/Most Recent Degree Program | | | |
| Associate’s | 2 | 2 | 4 |
| Bachelor’s | 11 | 12 | 23 |
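The percentages quoted in the sample description can be recomputed from the Total column of the demographics table. The following is a minimal sketch rather than the procedure the study team used. The variable names are illustrative, and the split of income into "low" (under $50,000) and "high" ($50,000 or more) is an assumption: the report does not state the cut-off, but this split reproduces the reported 59.3 and 29.6 percent. Values are rounded to one decimal place, so they can differ by 0.1 from figures that were truncated.

```python
# Counts copied from the Total column of the participant demographics table (n = 27).
TOTAL = 27

counts = {
    "gender": {"Female": 21, "Male": 4, "Prefer not to answer": 2},
    "ethnicity": {"Not Hispanic or Latino": 24, "Hispanic or Latino": 3},
    "race": {
        "White": 9,
        "Asian": 7,
        "Two or More Races": 6,
        "Black or African American": 2,
        "Prefer not to answer": 3,
    },
    "degree": {"Bachelor's": 23, "Associate's": 4},
    "income": {
        "<$20,000": 11,
        "$20,000 - $49,000": 5,
        "$50,000 - $99,999": 7,
        "$100,000 or more": 1,
        "Prefer not to answer": 3,
    },
}


def percent(count, total=TOTAL):
    """Return count as a percentage of total, rounded to one decimal place."""
    return round(100 * count / total, 1)


# Percentage share of every category within each characteristic.
shares = {
    characteristic: {label: percent(n) for label, n in groups.items()}
    for characteristic, groups in counts.items()
}

# ASSUMPTION (not stated in the report): "low" income means under $50,000
# and "high" income means $50,000 or more.
LOW_BRACKETS = ("<$20,000", "$20,000 - $49,000")
HIGH_BRACKETS = ("$50,000 - $99,999", "$100,000 or more")

low_share = percent(sum(counts["income"][b] for b in LOW_BRACKETS))
high_share = percent(sum(counts["income"][b] for b in HIGH_BRACKETS))

for characteristic, groups in shares.items():
    print(characteristic, groups)
print("income (assumed split): low", low_share, "high", high_share)
```

Each characteristic's counts sum to 27, which is a quick consistency check on the table before any percentages are quoted.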

Recruitment and Screening

For the purposes of recruitment, EurekaFacts used its internal database of postsecondary students within the United States for e-mail outreach efforts. EurekaFacts also used social media postings to reach postsecondary students. In addition to recruiting diverse participants, recruiting efforts focused on students who had federal loans or who held a certification or licensure.

All staff who conducted the recruitment and screening participated in a training to discuss the overall recruitment objectives; a section-by-section review of the screening instrument; and specific instructions on the critical importance of complete adherence to all OMB guidelines, protocols, and restrictions.

During the screening process, participants were informed about the objectives, purpose, and participation requirements of the data collection effort as well as the associated activities. Participants were also informed that participation was completely voluntary, and that responses may be used only for statistical purposes and may not be disclosed, or used, in identifiable form for any other purpose except as required by law [Education Sciences Reform Act of 2002, 20 U.S.C. § 9573].

Participants were screened using the approved screener script programmed in Vovici software to ensure the screening procedure was conducted consistently throughout the recruitment effort. After determining that a participant qualified for the study, EurekaFacts staff reached out to the participant to confirm the time and date of the focus group appointment as well as all necessary contact information. Participants were further informed they would be compensated with a $40 e-gift card for their time and effort.

To improve participation rates, confirmation e-mails were sent to the scheduled participants. Additionally, participants received reminder e-mails, as well as a reminder phone call and text message (if permission was given), at least 24 hours before the scheduled focus group. Informed consent was obtained for all respondents who participated in the data collection efforts, and incentives were given at the completion of each focus group session.

Data Collection Procedures

EurekaFacts conducted seven 60-minute online focus groups with postsecondary students from May 23, 2023 to June 15, 2023. Moderators asked students to review a subset of questions from the BPS:20/25 survey to provide feedback on questions, to understand their familiarity with different concepts and terms, and to ensure that the questions were clear and understandable. Data collection followed standardized policies and procedures to protect participants’ privacy, security, and confidentiality. Digital consent was obtained via Microsoft Forms prior to the focus group for most participants. However, participants who did not return a consent form prior to their scheduled focus group were able to complete the online consent form at the beginning of the focus group. The consent forms were stored separately from participants’ focus group data and secured for the duration of the study.

Prior to each focus group, a EurekaFacts staff member created a Zoom meeting invitation with a unique URL. When participants entered the focus group session, moderators introduced themselves and followed the OMB-approved script and focus group protocol. Prior to starting the survey task, moderators asked for permission to audio and video record the focus group. Once permission was granted, each session was recorded. Participants first worked through a survey with questions related to federal loans or licensure and certification. After the survey was completed, participants discussed their experience completing the survey and related topics. During the final portion, participants reviewed text message communications and gave their feedback and opinions. At the end of the focus group session, participants were debriefed about their incentive.

Coding and Analysis

After each session, a trained and authorized EurekaFacts staff member used NVivo software to upload and review a high-quality transcription of each focus group session’s commentary and behaviors. A datafile was created containing completely anonymized transcriptions and observations that tracked each participant’s contributions from the beginning of the session to its close. One reviewer cleaned the datafile by reviewing the audio/video recording to ensure all relevant contributions were captured. As the first step in data analysis, coders’ documentation of the focus group sessions included only records of verbal reports and behaviors, without any interpretation.

Once all the data was cleaned and reviewed, research analysts began the formal process of data analysis which involved identifying major themes, trends, and patterns in the data and taking note of key participant behaviors. Specifically, analysts were tasked with classifying patterns within the participants’ ideas in addition to documenting how participants justified and explained their actions, beliefs, and impressions. Analysts considered both the individual responses and overarching group responses.

Each topic area was analyzed using the following steps:

Getting to know the data – Several analysts read the moderator guides and viewed the video recordings or transcripts to become familiar with the data. Analysts recorded impressions, considered the usefulness of the presented data, and evaluated any potential biases of the moderators.

Focusing on the analysis – The analysts reviewed the focus group's purpose and research questions, documented key information needs, and focused the analysis by question or topic.

Categorizing information – The analysts gave meaning to participants’ words and phrases by identifying themes, trends, or patterns.

Developing codes – The analysts developed codes based on the emerging themes to organize the data. Differences and similarities between emerging codes were discussed and addressed in efforts to clarify and confirm the research findings.

Identifying patterns and connections within and between categories – Multiple analysts coded and analyzed the data. They summarized each category, identified similarities and differences, and combined related categories into larger ideas/concepts. Additionally, analysts assessed each theme’s importance based on its severity and frequency of recurrence.

Interpreting the data – The analysts used the themes and connections to explain findings and answer the research questions. Credibility was established through analyst triangulation, as multiple analysts cooperated to identify themes and to address differences in interpretation.

Limitations

The key findings of this report were based solely on analysis of postsecondary students' virtual focus group observations and discussions. There are timing constraints due to the nature of the focus group process which can interrupt the flow of participants answering specific follow-up probes. Additionally, every participant may not have responded to each follow-up probe, thus limiting the total number of respondents providing feedback by question and probe.

However, the value of focus groups is demonstrated in their ability to provide unfiltered comments and observations from a segment of the target population. While focus groups cannot provide absolute answers in all conditions, the sessions can play a key role in identifying the areas where participants could encounter potential problems or issues when taking the survey on their own. In addition, participants can give candid feedback on their preferred communication methods.

Findings

The following section of the report provides qualitative results of the focus groups, organized by topic. This testing focused on the portions of the survey related to federal loans and to professional licensure or certification, as well as on obtaining reactions to and impressions of contact materials and messages.

Topic 1: Introduction to Survey

For the first part of the session, both the federal loans and certifications and licensures groups were asked to respond to a subset of the BPS survey prior to engaging in the discussion. A link to the survey was provided via Zoom chat. Upon completing the survey, participants were asked for their overall impressions of the survey. Participants from both groups stated that the survey was "easy," "straightforward," "short," and "simple." As one participant from the federal loans group stated, “I thought it was very easy to take and very easy to understand, and I was able to get it done pretty quickly because of that.”

Participants were further probed on whether it was easy or difficult to recall certain details on any specific survey questions or question types. Note that one participant within the certifications and licensures group was unable to partake in the discussion portion due to work-related issues, bringing the participation sample to 26 participants; however, they did type in the Zoom chat for some parts of the discussion. Eleven participants across the sessions expressed that it was easy to recall the details asked within their respective survey. One participant explained, “Nothing was too difficult. All the questions were pretty in line with money and financials and all that stuff, so nothing was too difficult.”

Topic 2: Federal Student Loan Forgiveness

Next, participants in the federal loan groups (n = 13) discussed federal student loan borrowing and debt relief, including the Biden-Harris Administration Student Debt Relief Plan. These discussions occurred in May and June of 2023, before the Supreme Court struck down the Plan in Biden v. Nebraska on Friday, June 30, 2023. Following that decision, U.S. Secretary of Education Miguel Cardona began a new rulemaking process to consider other ways to provide student debt relief. While some findings presented below are no longer relevant (e.g., those referring to participants’ familiarity with the Biden-Harris Administration plan specifically), other findings are relevant to potential future survey questions about debt relief plans in general (e.g., discussions about the influence of student debt relief on participants’ future plans).

Ease or Difficulty to Understand or Answer

Participants were asked how easy or difficult it was to understand or answer the series of questions about federal student loan borrowing. About three-fourths of the participants (10 out of 13) expressed that it was easy overall to understand and answer the questions. They attributed this ease to past knowledge, following the news, self-education, or simply looking up loan information on the FAFSA website. Two participants emphasized that they would have had difficulty in the past, when they were beginning their postsecondary education, but now have a better understanding of loan terminology.

Nevertheless, some participants conveyed that it was difficult to answer or understand some of the survey questions:

Three participants were uncertain about the income question. One participant explained, “especially with student loans, it was usually a mixture of if they ask for your household income versus asking for your personal income. And I wasn't quite sure, I assumed it was asking for personal income, but in hindsight I don't know if that's actually right.”

Two participants expressed confusion about terminology. One participant explained, “I kind of stumbled over what the federal relief plan was because I had never heard its official name, I had only ever heard just student relief, student aid, things like that.”

Two participants reported taking time to accurately total their federal loans. One participant stated, “The number thing at the end did throw me for a loop. I did have to read the description to make sure I'm giving you the correct number and based off all of that.”

One participant emphasized that it was difficult for them to understand loans in general because they are from a different country; they had to look up YouTube videos and ask questions of financial advisors.

Familiarity with Terminology

Participants were then asked to respond to a polling question on which term they are most familiar with when thinking of the recent one-time loan forgiveness plan. Figure 1 shows participants’ responses to the poll.

Figure 1. Poll - Familiar with One-time Loan Forgiveness Plan Terminology

Following the poll, participants were asked if they thought other students would recognize the term “White House Student Loan Debt Relief Plan” if asked about it in a survey, or if another term would resonate better. Views on the term were firm within each sub-group’s conversation. Participants agreed that the term “White House Student Loan Debt Relief Plan” was very vague. Two participants voiced that the term is interchangeable with “Biden-Harris Administration’s Student Debt Relief Plan.” Two participants expressed that they know it as “student loan forgiveness plan.” The remaining two participants voiced that they were more familiar with a term that includes the current administration’s name. One participant explained, “All the headlines that I've seen for news about this, it refers to it as Biden Student Loan Forgiveness. And I think that's a little bit more specific because the White House could potentially change... Biden is really at the front of this push for the loan forgiveness, so it makes more sense to me.” Overall, the discussion of familiar terms is consistent with the polling question results in that participants are most familiar with “Biden-Harris Administration’s Student Debt Relief Plan” or something similar, such as “Biden Student Loan Forgiveness” or “student loan forgiveness plan.”

Awareness of Debt Relief Plan

Participants were asked if they were aware of the Debt Relief Plan before the focus group. All the participants (n = 13) indicated that they were aware of the plan in advance of the focus group. Participants were then asked how they first learned about it. Participants recalled learning about the Debt Relief Plan through various sources, including social media (e.g., Instagram, TikTok), the news (e.g., Google News, local or national news), word of mouth from relatives (e.g., parents), people at school (e.g., classmates or a professor), or friends, as well as e-mail, a financial advisor, and a loan servicer website.

Participants were then asked to type in the Zoom chat their understanding of the Debt Relief Plan. Note that it may be possible, as these were virtual sessions, that participants could have looked up the definition or meaning on the web browser during the session. It is reasonable to assume that participants might have built off other chat responses. Overall, the vast majority of participants appeared to have a good understanding of the Debt Relief Plan (table 2).

Table 2. Chat Responses on Understanding of Debt Relief Plan

Submission of Application

Participants were later asked if they submitted an application for the one-time loan forgiveness. Ten participants voiced that they did. Participants responded that they applied because “it wouldn’t hurt” or because it “wasn't anything difficult” to fill out. One participant stated that they knew they were eligible, hence the reason for filling out the application. Another participant expressed that they filled out the application due to the legitimacy of the website, “Once you see it's a credible government site and you're not just putting your social [SSN] into somewhere suspicious, I think there's no reason not to try even if you don't get the maximum forgiveness or anything.”

The remaining three participants did not submit an application. Two participants stated that an e-mail notified them an application would be submitted automatically for them if they were eligible. The remaining participant was unable to submit it due to a “very chaotic” time. However, all three responded that they were unsure if an application was automatically submitted on their behalf.

Eligibility Requirements and Qualifications

Participants were then asked how easy it was to answer the survey question about whether they qualified for the Debt Relief Plan. Responses ranged from easy to difficult. Five participants responded that it was easy for them to answer this question as they understood the qualifications and believed they would be eligible. For example, one participant responded with, “For me, it was actually pretty easy just because I figured I would qualify because I had a zero Expected Family Contribution. And I did have a decent amount of loans from school, so I figured I would qualify.” However, four other participants said that it was slightly difficult for them because they were uncertain about the qualifications.

In addition, participants were asked about the total amount they anticipated being forgiven if the plan was approved. Participants within the first group voiced high confidence in receiving loan forgiveness, with three participants expecting to receive the full amount, one of whom stated it is less than the amount that they borrowed. Most, if not all, participants within the second group were hopeful that they would receive loan forgiveness. One participant anticipated that the Debt Relief Plan would cover all their debt, with the others communicating they would still have some loans to pay. One participant captured the tone of the group by stating, “I hope that I get the maximum amount of money that I possibly can. But as somebody that just graduated college, all the loans that I have are done, I can't just not pay them. So even if I get a dollar, it's something.” As for the last group, all the participants were uncertain (low confidence) whether they would receive federal loan forgiveness because of confusion about, and limited knowledge of, the qualifications.

Influence on Future Plans

For the last portion of this topic, participants were asked if the Debt Relief Plan may influence their academic and personal or financial plans. Furthermore, they were asked whether the uncertainty of the Debt Relief Plan being enacted has influenced any of their plans.

Academic Plans

When considering impacts on academic plans, three participants communicated that the Debt Relief Plan affected their desire to further their education (e.g., graduate school). Two participants were more hopeful, whereas one expressed indifference: “I mean I was sort of on the edge about thinking about it (postgraduate degree) and I think if I were more certain that the debt relief would go through that I would more seriously consider it, but at the moment I haven't.” The remaining 10 participants expressed that the Debt Relief Plan would not change their academic plans. Participants communicated that they have already finished their education or would continue with their current field of study. For example, one participant said, “Yeah, I also said it didn't really impact my plan. I'm still in school, I plan to finish, so at the same school, so I'm already a full-time student. I don't really see that changing.”

Personal or Financial Plans

When it came to personal or financial plans, eight participants agreed that the Debt Relief Plan would help them with reducing debt, personal finances (e.g., buying a home), investing, stress relief, or employment. Five participants specified that it would help with their overall personal finances. Two participants commented on employment: one stated that it would influence where they would work (e.g., a nonprofit with a low salary), and the other stated they would see how much their current internship could offer them when they transition to an employee role. The remaining five participants said there would be no change or that they had not put much thought into this topic. One participant expressed, “Yeah, I'm still in school and I'm already drowning in loans, and I'm going to go and get my master's degree after I graduate, so I'll have even more. So, it really doesn't affect me. I know I'm just going to keep getting more loans anyway.”

Topic 3: Licensure/Certifications

Note that one participant within this group was unable to partake in the remaining discussion after the second sub-section due to work-related issues, bringing the participation sample to 13 participants; however, they did participate in the third part of the session (polling questions). There was one other participant who had some outside distractions which caused them not to fully answer the questions directly.

Describing licenses and certifications

Next, participants in the certifications and licensures group (n = 14) were asked to briefly describe their credential and when they received it. They were prompted to type this into the Zoom chat; a few responded verbally. Participants received their license or certification between 2018 and 2023. Table 3 shows a synthesis of participants’ responses grouped by profession:

Table 3. Summary of Chat Responses by Profession

| Education Related | Health Related | Other |
|---|---|---|
| Educational Technician III | Emergency Medical Technician | Cisco Certified Network Associate (n = 2) |
| Teaching License (n = 2) | Certified Nursing Assistant (n = 2) | Tax Professional Certificate |
| Aid | Basic Life Support | |
| | Red Cross Lifeguard | |
| | ServSafe Person In Charge (Food Safety) | |

Defining license and certification

Next, participants (n = 13) were asked to explain, in their own words, what a “state or industry license or professional certification” is.

Several participants (n = 6) included references to the involvement of “state government” in their personalized definitions. Additionally, five participants emphasized the importance of possessing “competency” or “required training and/or skills” to carry out specific tasks or responsibilities. The remaining two participants did not contribute further comments, as they agreed with the viewpoints expressed by their peers. To capture the consensus among participants regarding the explanation of what a “state or industry license or professional certification” is, the following quote can be considered representative, “I agree with the statement that it’s meant to make sure that someone is completely competent to do the professional work in a certain state or area. I think professional certification is probably the only word that I think of.”

Of the five participants who responded to the question about additional terms to describe licenses and certifications in a survey, two did not propose any other terms. One participant suggested that "professional certification" was the only term that came to mind. The remaining two participants offered suggestions. One emphasized the importance of including references to coursework and the required hours needed to qualify for a certification test. The other drew a distinction between a certification and a license, stating that the certification signifies the possession of skills while the license is granted by the state. They emphasized that, in their case, the certification and license were separate entities issued by different authorities.

Cost of license/certification

During the discussion, participants were asked about the overall cost of their license or certification and whether they received any financial assistance. Of the ten participants who responded to this prompt, nine received some form of financial support. One participant mentioned having a voucher, while another participant indicated that their certification was obtained through government funding. Two participants had their employers or family cover the expenses of their courses. Lastly, one participant shared that their course was provided free of charge through their magnet school.

Some participants provided detailed explanations regarding how they calculated the total cost of their license or certification. Although they did not specify the exact dollar amount, they offered examples of the components they considered in determining the total cost. One participant mentioned including the expenses for the courses taken, the application fee, and the renewal fee. Three other participants also factored in the number of courses required for their certification. Additionally, one participant mentioned considering tuition fees, textbook costs, and other miscellaneous expenses related to obtaining their associate degree.

Familiarity with Terminology

Participants were then asked about their knowledge or understanding of “bootcamps.” A majority of participants (8 out of 13) demonstrated a clear understanding of what a bootcamp entails. One participant explained, “I would think a bootcamp is for someone who doesn’t have any knowledge of that specific topic, and just train them to learn the basics and to be able to possibly get a job in that field.” However, one participant expressed a desire for more information, as they lacked knowledge of the subject. Additionally, another participant had some confusion due to associating the term "bootcamp" with military training but was interested in exploring the concept of a bootcamp as it relates to starting a career. Participants were also specifically asked if they had obtained a license or certification from a bootcamp; only two participants responded to this question, and both said “no.”

Participants were also asked about their familiarity with the terms "income sharing agreements" or "deferred tuition." Out of the eight participants who responded, five indicated that they had not heard of either term. One participant mentioned that "income sharing agreements" sounded familiar but had no personal experience with it. On the other hand, two participants demonstrated some knowledge of "deferred tuition." One of these participants said, “Yeah. I am familiar with deferred tuition. As far as I know, that's a payment plan where you can pay in increments. I know that it's something that my community college did offer, which I did not participate in.”

Topic 4: Data Collection Text Messages

The last topic of discussion gathered feedback on various text message reminders and preferences. Participants were presented with three slides, each comparing two text message reminders. For each slide, participants were first instructed to answer a polling question, which asked them to select which text message reminder would more likely prompt them to take part in the BPS:20/25 survey. After completing the polling questions, the participants discussed the messages and gave feedback.

Standardized versus Personalized

The first comparison presented to participants was a standardized versus a personalized text message (see figure 2). After answering the poll question, participants expressed their thoughts and opinions on the ‘personalized’ text message (Option B). Figure 3 shows participants’ votes on the polling question.

Figure 2. Standardized versus Personalized text message

Figure 3. Results of first polling question on text message comparison

In the discussion of the text messages, one participant highlighted that the personalized aspect was lacking in Option A, while another participant preferred Option B due to its greater personalization. The inclusion of the statement, "Please let me know if you have questions," in Option B was appreciated by participants as it provided a clear avenue for addressing concerns directly. Another participant echoed their clear preference for Option B, emphasizing its personalized nature in contrast to the somewhat demanding tone of Option A. They drew a parallel between Option A and reminders from school, while finding Option B cleaner and more personal, as it presented a polite request.

However, about two-thirds of participants reported a preference for Option A over Option B. Many participants expressed their appreciation for shorter text messages. One participant expressed a preference for Option A, citing its brevity as a factor that allowed them to quickly understand the process. They believed that the concise nature of Option A facilitated a faster response. Similarly, another participant favored Option A because of its straightforward and concise approach. They admitted to rarely checking their messages but felt that Option A's direct instructions would be more likely to catch their attention and prompt action. One participant best encapsulated the primary reason participants preferred Option A: “Yeah, I definitely enjoyed all the shorter texts. I feel like they’d get my attention more, and I would be less likely to just skip over it and just ignore it completely.”

Some participants were neutral towards the messages. These participants expressed that neither message felt personalized, giving the impression that they were automated. Additionally, these participants found it challenging to distinguish between messages generated by artificial intelligence (AI) and those composed by actual individuals.

During the focus group, participants were asked whether the personalized text in Option B affected the message's perceived legitimacy. One participant expressed the belief that Option B gave the impression of being sent by an individual rather than an automated machine. In contrast, two participants expressed a neutral stance on the legitimacy of Option B. One participant mentioned that the inclusion of the name 'Alyson' in the message did not affect their motivation to complete the survey. The other participant stated that the presence of the U.S. Department of Education logo was sufficient evidence of the message's legitimacy, rendering the inclusion of a person's name unnecessary, as they understood it to be a mass text message. The appeal of the personalized message for those who favored it is best described by the following quote: “I like B better because it was more personal. And I also like the fact it says, ‘Please let me know if you have questions.’ So, it’s kind of like if you have any issues with it, you can just respond here, and you know where to go if you have issues with it.”

Eight participants mentioned that they would anticipate a response if they were to reply. Of these, seven participants attributed their expectation of a response to the presence of the text "Please let me know if you have any questions" in Option B. They interpreted this as an indication of responsiveness. In contrast, one participant shared a different expectation. They anticipated an initial automated response, followed by a response from a different number or e-mail that would signify communication with an actual person. This participant explained, “I probably would expect a robo response initially of some sort. But maybe someone else contacts me, maybe even from a different number or they e-mail me or something, depending how this is originally sent out.”

Logo versus No Logo

The second comparison was a text message with a logo versus one without a logo. After answering the poll question, participants expressed their thoughts and opinions on the text message with the logo (Option A in figure 4). Figure 5 shows participants’ votes on the polling question.

Figure 4. Logo versus No Logo text message

Figure 5. Results of second polling question on text message comparison

During the discussion, participants were first requested to provide their opinions on Option A. Out of the participants, fifteen individuals specifically commented on the presence of the Institute of Education Sciences (IES) logo in Option A. In their remarks, all of these participants conveyed that the inclusion of the logo enhanced their perception of the text's legitimacy, credibility, and/or official nature. This can best be described by the following quote: “So, I like the logo because it made it seem more legitimate. I think that the other message might just get lost in all of my inbox, so I would prefer it to have a logo.”

Regarding Option B, participants primarily commented on the absence of a logo. The lack of a logo raised concerns among participants, as it left them uncertain about the source of the message and created an impression of dubiousness. One participant noted that Option B could be mistaken for spam since they would not be able to verify its origin. Another participant further elaborated, mentioning that Option B would likely get lost in their e-mail's spam mailbox due to its missing logo. Additionally, another participant described Option B as appearing "weird" without a logo.

Participants were asked about their concerns regarding the potential impact on their data plan when receiving a text message with an included picture. Out of the participants, six individuals reported having unlimited data, indicating that data usage was not a concern for them. Three participants expressed a neutral stance on the matter. Two of these participants mentioned not giving it much thought due to infrequent texting, while another participant simply stated that it was not a significant concern for them. One participant commented on the picture potentially occupying storage on their phone but did not specifically address data usage.

Personalized Regular versus Personalized Tailored Communication Plan

The third and final comparison (figure 6) was a personalized regular text message versus a personalized tailored text message. After answering the poll question, participants expressed their thoughts and opinions on the text messages. Figure 7 shows participants’ votes on the polling question.

Figure 6. Regular versus Tailored text message

Figure 7. Results of third polling question on text message comparison

During the discussion, participants were asked to provide their opinions on Option A and Option B. Figure 7 illustrates that a large majority of the participants (n = 21) expressed a preference for Option A over Option B. Among these participants, thirteen individuals specifically appreciated the short and concise nature of Option A. Two participants remarked that Option B was excessively wordy, and two others agreed, adding that Option B gave the impression of a scam text, appearing "spammy" in their perception. One participant mentioned that Option A felt more personal. Another participant commented that in Option B the monetary incentive could have gotten lost in all of the other information in the text.

Participants who expressed a preference for Option B provided insightful reasons for their choice. One participant acknowledged their appreciation for the sentence "This is the only research study of its kind..." but still found Option B to be excessively wordy. Two other participants highlighted the value of the longer explanation provided by Option B, considering it a helpful reminder of what they had initially signed up for. Additionally, one participant specifically enjoyed the personalized message of "Please let me know if you have questions" included in Option B. One participant best captured why participants favored Option B, “I chose B because I wouldn’t have any questions after reading this, but I feel like they’re trying really hard to persuade the students to take the survey.”

Likeliness to Respond to Survey

At the end of this topic, participants were asked to imagine that they received their favorite text message out of the examples shown earlier and then describe how likely or unlikely they would be to respond to the survey using the link provided in that text message. Six participants identified the monetary incentive as a key motivating factor for their participation in the survey. Four participants noted that including the Institute of Education Sciences (IES) logo on the text message would enhance their sense of security regarding the text message’s authenticity. Three participants suggested that including the survey's duration in the text message would help them decide if they would complete it.

To conclude, the participants recommended that the text message sent to those in the actual study should be brief and incorporate the following elements: the Institute of Education Sciences (IES) logo, a monetary incentive, and an indication of the expected duration of the survey. By including these components, the text message is more likely to capture participants' attention, enhance their perception of legitimacy, and increase their willingness to engage in the survey.

Topic 5: Closing

At the end of the focus group discussion, participants were given the opportunity to share any final thoughts or comments. Although only two participants responded, their feedback provided valuable insights. One participant commented on the importance of an initial e-mail as a reminder to the text message, suggesting that a follow-up message would be more enticing and likely to generate a response to the survey. The other participant emphasized the significance of including a deadline in the text message. By incorporating a sense of urgency, participants would be prompted to take immediate action and ensure completion within the specified timeframe. These suggestions offer valuable considerations for RTI and NCES in optimizing their strategies and enhancing the survey response rate.

Attachment 1 – Focus Group Survey Questions

LICENSURE AND CERTIFICATION FOCUS GROUP SURVEY QUESTIONS

| Question | Label |
|---|---|
| INTROFORM | Survey introduction |
| LICNAME | Name of license or certification |
| NTEWS37 | Field of study for license or certification |
| LICACTIVE | License or certification currently active |
| NTEWS46 | Reasons pursued license or certification |
| NTEWS34_48 | Issuer of license or certification |
| SCHTYPE | School name of issuer |
| LICUGCERT | License or certification also a UG certificate or diploma |
| NTEWS35 | Completion time for license or certification – academic instruction |
| LICDURATION | Completion time for license or certification – non-academic timeframe |
| LICONL | License or certification program online |
| LICCOST | Cost of license or certification |
| NTEWS53 | Financial support for license or certification |
| RELIEFAWARE2 | Awareness of White House Student Loan Debt Relief Plan |

FEDERAL LOAN FOCUS GROUP SURVEY QUESTIONS

| Question | Label |
|---|---|
| INTROFORM | Survey introduction |
| FEDLOANAMT | Total borrowed in federal student loans |
| PELLGRANT | Ever awarded a Pell Grant |
| RELIEFAWARE | Awareness of White House Student Loan Debt Relief Plan |
| RELIEFQUAL | Qualify for White House Student Loan Debt Relief Plan |
| RELIEFAMT | Amount of debt relief anticipated |
| RELIEFAPPLY | Apply for White House Student Loan Debt Relief Plan |
| RELIEFIMPACT | Academic plan changes due to White House Student Loan Debt Relief Plan |
| EINCOM | Income in 2021 |

