ATC21S Field Test Phase 2.4 Memo Revised


NCES Cognitive, Pilot, and Field Test Studies System


OMB: 1850-0803

Memorandum United States Department of Education

Institute of Education Sciences

National Center for Education Statistics


DATE: December 9, 2011

TO: Shelly Martinez, OMB

FROM: Daniel McGrath, NCES

THROUGH: Kashka Kubzdela, NCES

SUBJECT: ATC21S Project Phase 2.4 Field Test Revision (OMB# 1850-0803 v.62)

This is a revision to the OMB# 1850-0803 v.59 clearance package for the ATC21S Project Phase 2.4 Field Test, approved by the Office of Management and Budget on November 23, 2011. The Information and Communication Technology (ICT) tasks have been deleted, and the portions of the original memo’s text that have been revised are highlighted in yellow below.

Submittal-Related Information

The following material is being submitted under the National Center for Education Statistics (NCES) clearance agreement (OMB #1850-0803), which provides for NCES to improve methodologies, question types, and/or delivery methods of its survey and assessment instruments by conducting field tests, focus groups, and cognitive interviews. The request for approval described in this memorandum is to conduct the fourth and final phase of development and field-testing of assessment tasks of 21st century skills for 6th, 8th, and 10th grade students. The four phases of the field test include task validation of the assessment prototype, cognitive labs, a pilot test, and a field test. Attached to this memo please also find Appendix A with the Phase 2.4 field test training and administration materials.

Background

The Assessment and Teaching of 21st Century Skills (ATC21S) project is focused on defining what are considered to be 21st century skills. The two areas to be developed are literacy in information and communication technology (ICT) and collaborative problem solving, both thought to play an important role in preparing students for 21st century workplaces. The project is coordinated by Cisco Systems Inc., Intel Corporation, and Microsoft Corporation and is led by representatives of six “Founder” countries that have agreed to test the assessments developed in the ATC21S project: Australia, Finland, Portugal, Singapore, the United Kingdom, and the United States. Three associated countries, Costa Rica, the Netherlands, and Russia, have recently joined the project.

In 2009, ATC21S convened five working groups of experts to review the state of the art and identify key issues to be resolved in conceptualizing the domain of 21st century skills; developing methodology and technology for assessing these skills; understanding the relationship between these skills, instruction, and other learning opportunities; and developing a policy framework for implementing assessments of the skills. Short descriptions of the resulting five white papers, designed to inform the future stages of the project, were provided in the clearance package for the ATC21S cognitive interviews (OMB# 1850-0803 v.41), approved on February 23, 2011.

In 2010, the ATC21S Executive Board (made up of senior officials from each of the founder countries and representatives from Cisco, Intel, and Microsoft) approved a focus on two 21st century skill domains considered promising for measurement: (1) Collaborative Problem Solving and (2) ICT Literacy – Learning in Digital Communities. Panels of experts were convened to define the constructs to be measured.

The Collaborative Problem Solving panel, under the leadership of Professor Friedrich Hesse (University of Tübingen/Knowledge Media Research Center, Germany) and Professor Eckhard Klieme (German Institute for International Educational Research), defined the construct of collaborative problem solving. The ICT Literacy – Learning in Digital Communities panel, under the leadership of Dr. John Ainley (Australian Council for Educational Research, Australia), defined the construct of digital literacy and social networking. Both panels focused on the theoretical framework for their domain and proposed a series of proficiency levels to guide assessment task developers in the next phase of the project.

Web-based assessments designed to measure literacy in information and communication technology (ICT) were developed in draft form in the United States, and assessments to measure collaborative problem solving were developed in draft form in the United Kingdom. The development work is funded by the Cisco, Intel, and Microsoft consortium under a separate contract.

The end products of web-based assessments and supporting strategies will be made available in the public domain for classroom use. NCES plans to use the web-based assessments in development of items for its longitudinal surveys, international assessments, and, potentially, NAEP.

Design and Context

NCES has contracted with WestEd (henceforth, the contractor) to implement all phases of the U.S. National Feasibility Test project. Specifically, the contractor has been working with the two development contractors, the Berkeley Evaluation and Assessment Research (BEAR) Center at the University of California, Berkeley, and World Class Arena Ltd. in the UK, to test the ICT Literacy and Collaborative Problem Solving assessments in the field through different phases of implementation and to report on the findings. The findings will be used to further refine the assessments until a final version is achieved. Eugene Owen and Dan McGrath are the project leads at NCES.

Phases completed to date

2.1 Phase – Validation of the task concepts was completed on October 28, 2010. WestEd validated the ICT Literacy tasks with 9 teachers (3 at 6th grade, 3 at 8th grade, and 3 at 10th grade). Teachers provided WestEd with feedback on the task concepts as they related to the grade levels they taught.

2.2 Phase – Cognitive Lab think-aloud sessions (approved on February 23, 2011, OMB# 1850-0803 v.41) were completed on March 31, 2011. The contractor observed individual students as they worked through each of the ICT Literacy prototype tasks and collected metacognitive data during the process. Twenty-one students from the 6th, 8th, and 10th grades participated. The Collaborative Problem Solving assessments were not included in the U.S. cognitive lab sessions because they were not yet available; they were used in cognitive labs in other partner countries in the project.

2.3 Phase – The Pilot Test (approved on May 25, 2011, OMB# 1850-0803 v.49) was administered in schools in California in September 2011. The Pilot Test involved the administration of the ICT Literacy tasks in the classrooms of three teachers, one for each grade level (6th, 8th, and 10th grades). Approximately 112 students participated in the pilot (23 students in grade 6, 40 students in grade 8, and 49 students in grade 10). The pilot test produced a data set consisting of the student actions and responses in each of the assessment tasks within the ICT Literacy scenarios, including data on how effectively the assessments measure the targeted skills, the feasibility of administering web-based assessments in a school setting, and the data capture and measurement methods involved in interpreting the complex responses that these types of assessments generate.

The three teachers were trained to administer the assessment to their classes. Training was conducted online using webinar software so that the contractor could walk the teachers through the administration process; the webinar training took approximately one hour. Teachers reserved school computer rooms for the administration. Prior to the pilot, teachers were required to conduct an accessibility test on all student computers to make sure the computers could access web sites and to check upload and download speeds. Personnel from UC Berkeley provided logins and passwords for all students in the pilot test, and the teachers made sure that student logins were set up prior to the administration.

Most students completed Webspiration and Arctic Trek. School district offices blocked the web site Chatzy, which is needed for administration of the Second Language Chat, for liability reasons. As a result, the Second Language Chat will not be administered in the field trials.

Phase for which OMB approval will be sought

2.4 Phase – Approval for administration of the field trials was sought and granted on November 23, 2011 (OMB# 1850-0803 v.59). Due to an unforeseen breakdown in international communications, there has been a change in the administration of the tasks in the field trials. We originally planned, and were approved, to administer two types of assessment tasks: Information and Communication Technology (ICT) tasks and Collaborative Problem Solving (CPS) tasks. A few days ago, while preparing to implement the field tests, we learned that the ICT tasks will not be included in the field trials.

The ATC21S field test is being administered on the University of Melbourne computer platform. The ICT tasks were developed by the BEAR Center at the University of California, Berkeley, on its own computer platform; they run only on the Berkeley platform and will not run on the Melbourne platform. As a result, the ICT tasks have been dropped from the field trials at this point, and we have removed them from this revised OMB package.

The numbers of teachers and students participating in the field trials do not change; we are simply replacing student time on ICT tasks with student time on collaborative problem solving tasks. This will provide more information on the collaborative problem solving tasks, for which we have less U.S.-specific information than for the ICT tasks. In addition, the University of Melbourne CPS modules ask students to respond to two short 15-minute surveys about the CPS tasks at the end of each testing session. The surveys (referred to as “Peer Assessment” and “Self-Assessment”) have been added to Appendix A (pages 14-21).

Recruitment for the field trials was approved in an earlier OMB package (OMB# 1850-0803 v.49) on May 25, 2011. The recruitment activities were described on page 8 of the memo dated May 11, 2011, under the section titled 2.4 Phase—Field Test. These activities have been completed and we have not made any changes to them.

Seven teachers at the 6th grade level, seven teachers at the 8th grade level, and seven teachers at the 10th grade level, representing a geographically and socioeconomically diverse sample of schools, have been selected to administer the assessments. We are ready to administer the field trials of the collaborative problem solving tasks as soon as possible, in December 2011.

The field trials are designed to provide sufficient data to establish empirically based scales that can indicate students’ place and progress on developmental continua associated with each of the 21st century skill sets assessed. The U.S. sample is part of an overall sampling design that spans all of the countries participating in the field trials phase of the project and will involve field-testing of the Collaborative Problem Solving domains. There are eleven CPS assessment tasks, and no student will take all eleven. Instead, students will be allocated linked bundles of tasks administered over two 60-minute class periods. Students will take two 40-minute tasks, a 10-minute practice task, and two 15-minute surveys. The surveys ask students whether they liked the task and how much opportunity they felt they had to collaborate. No student demographic information will be collected.
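As a quick check on the timing described above, the per-student components add up exactly to the two 60-minute class periods. This assumes, as the paragraph implies, that the practice task and both surveys fall within those two periods:

```latex
\underbrace{2 \times 40}_{\text{CPS tasks}}
  + \underbrace{10}_{\text{practice task}}
  + \underbrace{2 \times 15}_{\text{surveys}}
  = 80 + 10 + 30 = 120 \text{ minutes} = 2 \times 60 \text{ minutes}
```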

Students within each age level will be randomly assigned to task bundles. A counterbalanced allocation process ensures that all tasks are administered and that all participating students are linked across tasks, so that there are sufficient data points across specific strands to develop robust scoring. The objective is not to assess the students but to collect sufficient data to calibrate the tasks.
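The memo does not specify the allocation algorithm. Purely as an illustration, the sketch below assumes that each student takes two of the five bundles in Table 1 (one per 60-minute period) and uses a hypothetical cyclic linking design (A with B, B with C, and so on back to E with A), so that every bundle shares students with two others and all bundles are connected. Students are shuffled within a grade level, and the bundle pairs are dealt out in rotation to keep their use balanced. Partner pairing for the collaborative tasks is not modeled, and the function names and the design itself are assumptions, not the ATC21S procedure.

```python
import random
from collections import Counter

# Bundle labels from Table 1 of the memo.
BUNDLES = ("A", "B", "C", "D", "E")


def cyclic_bundle_pairs(bundles):
    """Hypothetical linking design: pair each bundle with the next, wrapping around.

    Every bundle appears in exactly two pairs, so each bundle shares students
    with two other bundles and all bundles form one connected (linked) set.
    """
    n = len(bundles)
    return [(bundles[i], bundles[(i + 1) % n]) for i in range(n)]


def assign_bundles(student_ids, bundles=BUNDLES, seed=None):
    """Randomly assign each student in one grade level a pair of bundles.

    Students are shuffled, then the bundle pairs are dealt out in rotation
    so that each pair is used as evenly as possible (counterbalancing).
    """
    rng = random.Random(seed)
    students = list(student_ids)
    rng.shuffle(students)
    pairs = cyclic_bundle_pairs(bundles)
    return {student: pairs[i % len(pairs)] for i, student in enumerate(students)}


if __name__ == "__main__":
    # 7 teachers x 25 students = 175 students per grade level (the memo's estimate).
    grade6 = [f"G6-{k:03d}" for k in range(175)]
    plan = assign_bundles(grade6, seed=2011)
    # Number of students taking each bundle across both periods.
    print(Counter(bundle for pair in plan.values() for bundle in pair))
```

With 175 students in a grade level, each of the five bundle pairs is used 35 times, so each bundle is taken by 70 students and every bundle is linked to two others through shared students.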

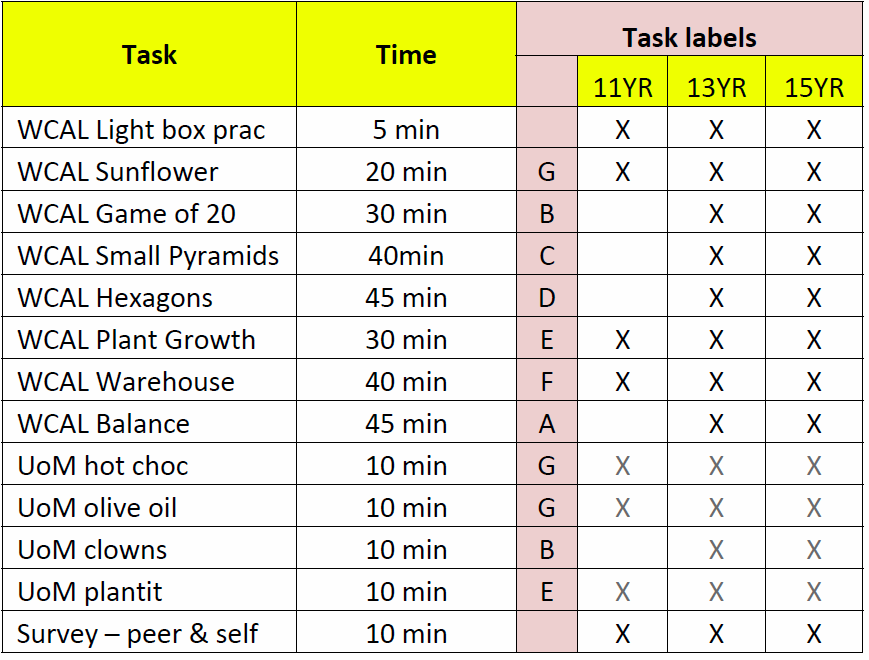

Table 1 shows how the CPS tasks are bundled. Bundle A contains four short tasks administered in a 60-minute period. Bundles B, C, and D each contain two longer tasks administered in a 60-minute period, and Bundle E contains one longer task administered in a 60-minute period. Table 2 shows the CPS task strands by ages 11, 13, and 15.

Table 1: Collaborative Problem Solving Task Bundles

| Bundle | Task 1 | Task 2 | Task 3 | Task 4 |
|---|---|---|---|---|
| A | Clowns | Olive Oil | Hot Chocolate | Sunflower |
| B | Plant Growth | Warehouse | | |
| C | Game of 20 | Hexagons | | |
| D | Shared Garden | Small Pyramids | | |
| E | Balance | | | |

Table 2: Collaborative Problem Solving Task Strand

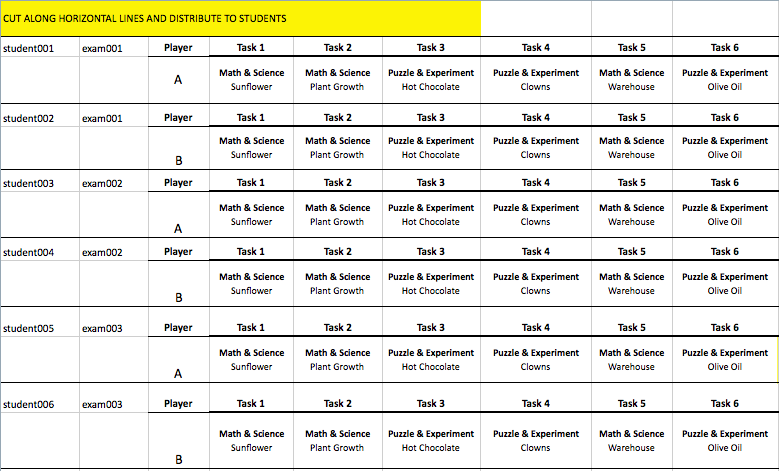

During the field trials, the administration of the tasks will be fully automated and should not require teacher input beyond a supervisory role. A web-based delivery system will deliver the assessments. Training of teachers will be conducted online using webinar software so that the contractor can walk the teachers through the administration process. Prior to the training session, teachers will receive the Collaborative Problem Solving Administration Manual (Appendix A, pages 2-9) and the new CPS Practice Task Guide (Appendix A, pages 10-13). Teachers will reserve school computer rooms for the administration and check each student computer to ensure that it meets the technical requirements and can access the assessment web site.

The University of Melbourne will assign a login and password to each student for administration of the CPS tasks. The logins are provided in a class roster (class rosters were not required for administration of the ICT tasks). Teachers will assign students to individual logins and complete the information on the roster. Table 3 below shows the student logins in a class roster. Teachers will enter a student name for each login, cut along the horizontal lines, and distribute the strips with logins to students.

Table 3: Student Logins in a Class Roster

We estimate that 25 students for each of the 21 teachers (525 students in total) will take the assessments. Student responses will be recorded and stored electronically for analysis of the whole data set.

Teachers will receive a $200 incentive for participating in a training session; conducting accessibility checks of classroom computers to ensure each computer meets technical requirements; completing rosters for class logins; administering the practice task, CPS assessments, and surveys; and fulfilling the role of school coordinator. The $200 teacher incentive was approved in the OMB package (OMB# 1850-0803 v.49) on May 25, 2011 as part of the recruitment materials and activities.

Overview of the Collaborative Problem Solving Tasks

The Collaborative Problem Solving tasks to be administered during the field trials will measure constructs in two areas: (1) social skills, including participation, perspective taking, and social regulation; and (2) cognitive skills, including task regulation and knowledge building. Students will work with a partner to solve the same problem, with each partner holding different pieces of information. Students must communicate via a chat space to share information and solve the problem within a designated period of time. Figures 1 through 6 show examples of the Collaborative Problem Solving tasks.

Figure 1: WCAL Warehouse—students collaborate on the placement of cameras to secure a warehouse

Figure 2: UoM Plant Growth—students log on to the task as partners

Figure 3: UoM Plant Growth—students collaborate on the amount of light and temperature needed for plant growth

Figure 4: UoM Plant Growth—students see different information—e.g., one student will be able to control the amount of light

Figure 5: UoM Plant Growth—students see different information—e.g., another student will be able to control temperature

Figure 6: UoM Plant Growth—although students see different pieces of information, they must collaborate through a chat space to organize their findings on a graph

Final Report

The ATC21S project, originally intended to end by December 31, 2011, has been extended through July 2012. The contractor will produce and deliver a written report summarizing the project, its activities, and its findings by July 31, 2012.

Use of Results

The field test will provide data on the feasibility of administering the assessments in school settings and of collecting data through the test administration system, and will allow for calibration of the scales used to measure the skills. A data set will be collected, and all personally identifying information will be removed from it.

End products of the overall project will be made publicly available for use in classrooms and will be used by NCES for the development of assessment tasks in its longitudinal studies, international assessments, and, potentially, NAEP.

Results and data from the field trials will be compiled by the contractor and provided in usable form to NCES and to the international research coordinator. NCES will provide direction through the international research coordinator.

Assurance of Confidentiality

Field trial teacher participants are asked to sign (1) a Teacher Consent Form provided by the contractor as a condition of participation. Principals are asked to sign (2) a Principal Consent Form and (3) a Memorandum of Understanding for school participation. Teachers are provided with (4) a Parental Opt-Out Form so that parents have the option of having their child’s data removed from field trial results. These four forms were submitted with the OMB memorandum on May 2, 2011 and approved on May 25, 2011. Student response data will be de-identified through the use of unique ID numbers so that individual students cannot be identified in the data set collected for analysis. Additionally, WestEd staff working on the project sign the NCES Affidavit of Nondisclosure.

Students will be reminded before they begin the assessment that they will not receive a grade for taking the computer-based assessment tasks; that the tasks are being field tested to see how well they work; that the performance of the students is not being evaluated; that their names will not be associated with the assessments’ results; that no identifying information will be recorded; and that all information collected will be used only for statistical purposes.

Project Schedule

| Remaining Tasks | Aug 2011 | Sept 2011 | Oct 2011 | Nov 2011 | Dec 2011 | Jan 2012 | Feb 2012 | Mar 2012 | Apr 2012 | May 2012 | Jun 2012 | Jul 2012 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Run ICT Pilot Test | | | | | | | | | | | | |
| (Production of Field Test version of ICT/CPS) | | | | | | | | | | | | |
| Prep OMB pack for Field Test | | | | | | | | | | | | |
| Recruit for Field Test | | | | | | | | | | | | |
| Run Field Test | | | | | | | | | | | | |
| Produce final report | | | | | | | | | | | | |

Estimate of Hour Burden

A purposive sample of teachers and schools across the United States will be recruited to participate in the field trials. While the sample will not be nationally representative, the study will attempt to recruit from all regions of the country and include urban, suburban, and rural schools. For example, the contractor will recruit in Washington, California, Colorado, Texas, Chicago, Florida, the District of Columbia, New York City, and New England. Participants will include seven 6th grade teachers, seven 8th grade teachers, and seven 10th grade teachers. Once recruited, teachers and schools will complete and submit consent forms and confirm technology requirements with the school’s computer network support expert (Appendix A, pages 22-23). All teachers will schedule and participate in a web-based training session, will be provided student logins and passwords for the testing sessions, and will conduct accessibility testing on school computers. Schools will be provided a testing window, and teachers will administer the two 60-minute testing sessions in one day or over a two-week period.

Burden Table for Phase 2.4 Field Trials (assessment administration)

| Activity | Number of Respondents | Number of Responses | Estimated Hours | TOTAL Burden Hours |
|---|---|---|---|---|
| Teacher computer accessibility testing | 21 teachers | 21 | 2 | 42 |
| Teacher Training | 21 teachers | 21 | 1 | 21 |
| Teacher administration of the field test | 21 teachers | 21 | 3 | 63 |
| Student surveys | 525 | 525 | 132 | 132 |
| Total | 546 | 588 | | 258 |
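The totals row of the burden table can be reproduced from the individual rows. The sketch below is a minimal check, assuming (as the memo’s counts imply) that the same 21 teachers perform all three teacher activities, so teachers are counted once in the respondent total while every row contributes to the response and burden-hour totals. The student burden of 132 hours is taken directly from the table; the class and function names are illustrative.

```python
from dataclasses import dataclass

# 3 grade levels x 7 teachers per grade, and 25 students per teacher, per the memo.
TEACHERS = 3 * 7
STUDENTS = TEACHERS * 25


@dataclass(frozen=True)
class BurdenRow:
    activity: str
    group: str          # respondent group; the same group may appear in several rows
    respondents: int
    responses: int
    burden_hours: int   # the "TOTAL Burden Hours" column


ROWS = [
    BurdenRow("Teacher computer accessibility testing", "teachers", TEACHERS, TEACHERS, TEACHERS * 2),
    BurdenRow("Teacher Training", "teachers", TEACHERS, TEACHERS, TEACHERS * 1),
    BurdenRow("Teacher administration of the field test", "teachers", TEACHERS, TEACHERS, TEACHERS * 3),
    BurdenRow("Student surveys", "students", STUDENTS, STUDENTS, 132),
]


def burden_totals(rows):
    # Count respondents once per group: the same 21 teachers appear in three
    # rows, so summing the respondents column would overcount them.
    respondents_by_group = {}
    for row in rows:
        current = respondents_by_group.get(row.group, 0)
        respondents_by_group[row.group] = max(current, row.respondents)
    return {
        "respondents": sum(respondents_by_group.values()),
        "responses": sum(row.responses for row in rows),
        "burden_hours": sum(row.burden_hours for row in rows),
    }


print(burden_totals(ROWS))  # {'respondents': 546, 'responses': 588, 'burden_hours': 258}
```

Summing the respondents column directly would give 588 (the response count) rather than 546, which is why the sketch counts each respondent group once.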

Cost to the Federal Government

| Component | Cost |
|---|---|
| Phase 2.2, Cognitive Labs & Phase 2.3, Pilot Test | $127,741 |
| Phase 2.4, Field Test | $75,201 |
| Final Report | $32,185 |
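For reference, the three cost components listed above can be summed as follows. The combined figure is simple arithmetic on the listed amounts and is not itself stated in the memo; the dictionary name is illustrative.

```python
# Cost components to the Federal Government as listed in the memo (U.S. dollars).
COSTS = {
    "Phase 2.2, Cognitive Labs & Phase 2.3, Pilot Test": 127_741,
    "Phase 2.4, Field Test": 75_201,
    "Final Report": 32_185,
}

total = sum(COSTS.values())
print(f"Total: ${total:,}")  # prints "Total: $235,127"
```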

ATC21S

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| Author | Mike Timms |

| File Modified | 0000-00-00 |

| File Created | 2021-01-31 |
