Form CMS-10398 (#20) User Guide - PERM Round 1 Findings Submission

Generic Clearance for Medicaid and CHIP State Plan, Waiver, and Program Submissions

User Guide Round 1 Findings Submission [rev 9-11-2014 by OSORA PRA]

Bundle: PERM Pilot (#20), Same Sex Marriage (#36), and Managed Care Rate Setting (#37)

OMB: 0938-1148

CMS PERM – PETT 2.0 Round 1 Pilot Findings Submission

User Guide Documentation

June 6, 2014

PRA Disclosure Statement

According to the Paperwork Reduction Act of 1995, no persons are required to respond to a collection of information unless it displays a valid OMB control number. The valid OMB control number for this information collection is 0938-1148. The time required to complete this information collection is estimated to average [Insert Time (hours or minutes)] per response, including the time to review instructions, search existing data resources, gather the data needed, and complete and review the information collection. If you have comments concerning the accuracy of the time estimate(s) or suggestions for improving this form, please write to: CMS, 7500 Security Boulevard, Attn: PRA Reports Clearance Officer, Mail Stop C4-26-05, Baltimore, Maryland 21244-1850.

2.0 User Registration and Login

2.3 Password Retrieval Feature

4.0 Round 1 Pilot Findings Data Submission

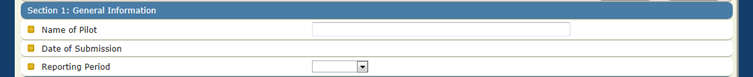

4.1 Section 1 - General Information

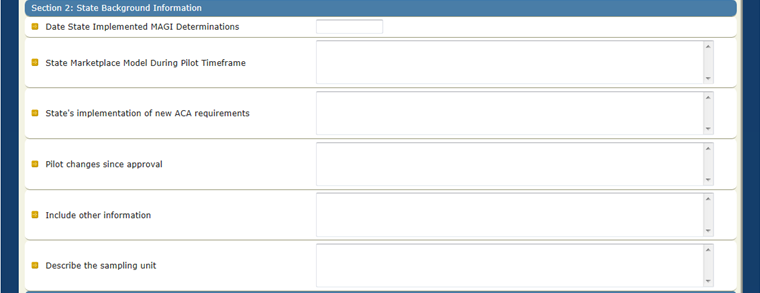

4.2 Section 2 – State Background Information

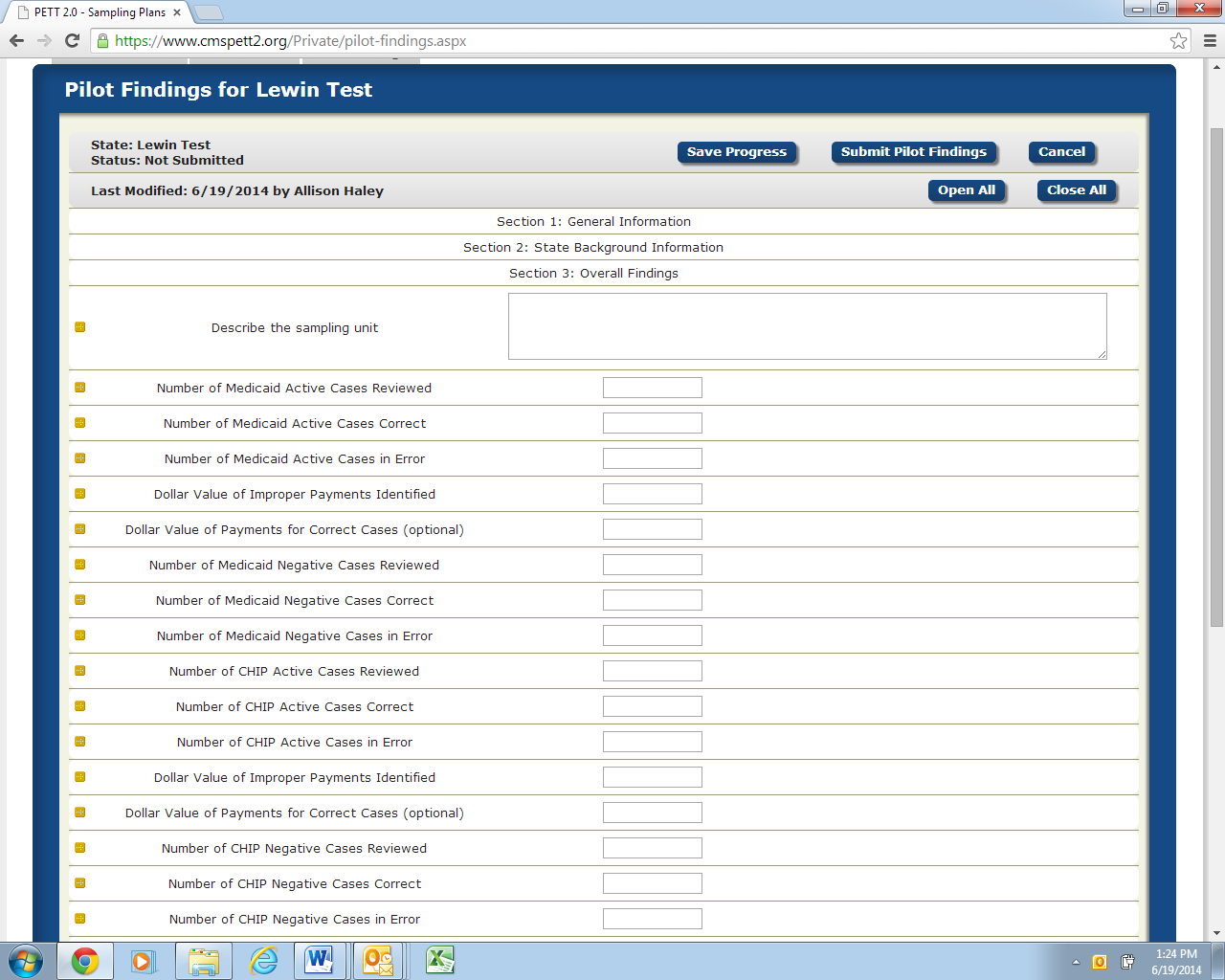

4.3 Section 3 – Overall Findings

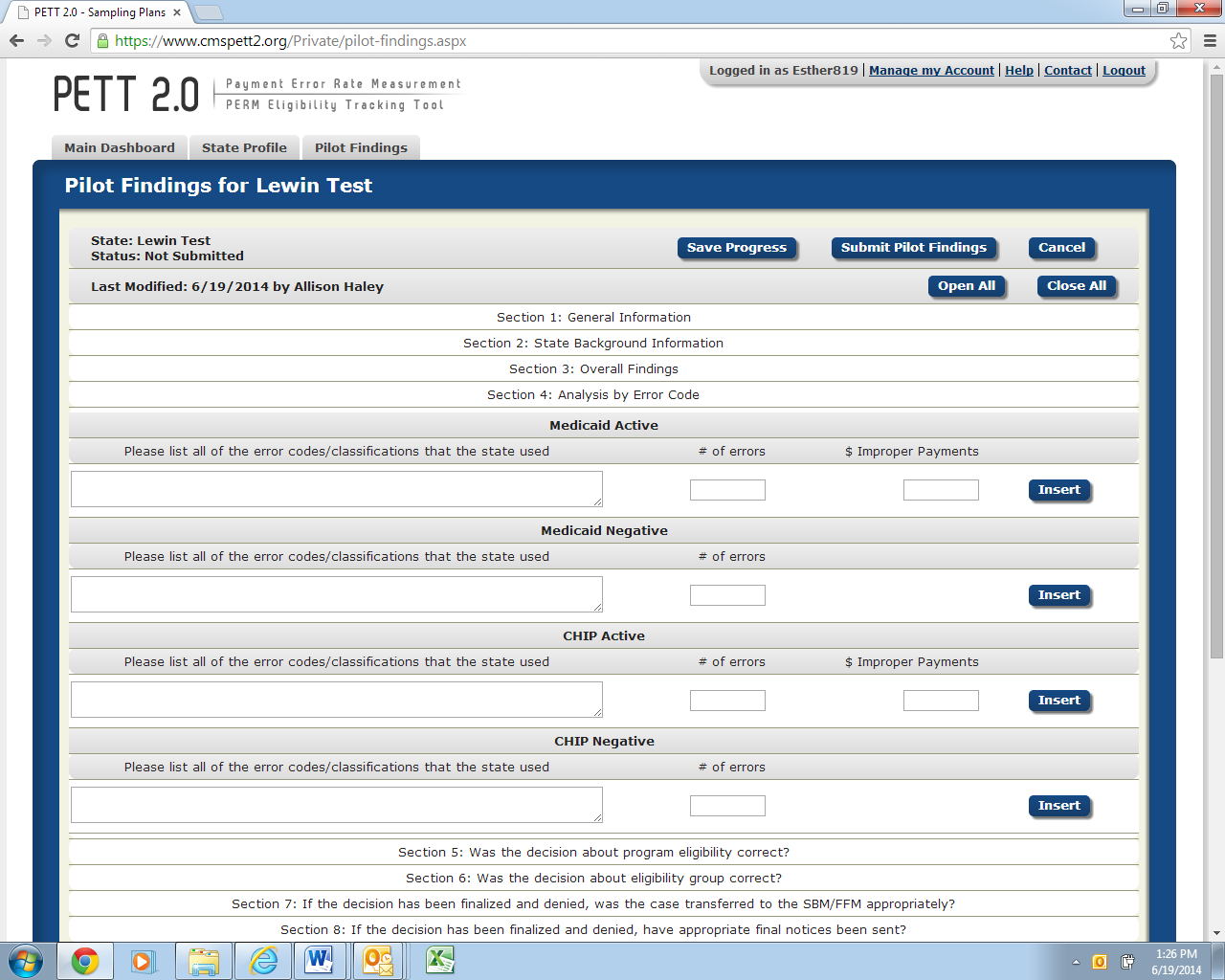

4.4 Section 4 – Analysis by Error Code

4.5 Section 5 – 13 Pilot Findings Analysis

4.6 Sections 14-16 Point of Application, Type of Application, and Channel Analysis

4.7 Section 17 – Additional Analysis/Findings

4.8 Section 18 – Pilot Feedback

5.0 Submission and Review of Round 1 Pilot Findings Data

5.1 Submission of Round 1 Pilot Findings

Each state is required to implement Medicaid and Children’s Health Insurance Program (CHIP) Eligibility Review Pilots in place of the Payment Error Rate Measurement (PERM) and Medicaid Eligibility Quality Control (MEQC) eligibility reviews for fiscal years (FY) 2014 – 2016. States will conduct four streamlined pilot measurements over the three-year period.

The Medicaid and CHIP Eligibility Review Pilots consist of two independent components. States are required to:

Pull a sample of actual eligibility determinations made by the state and perform an end-to-end review

Run test cases

States are required to enter the Medicaid and CHIP Eligibility Review pilot findings from the state’s review of eligibility determinations directly on the PERM Eligibility Tracking Tool (PETT 2.0) website. States will not report test case findings on the PETT website.

States will be able to save the findings as draft before submitting as final to CMS. Once the final report is submitted to CMS, CMS will review and provide comments or approval through the PETT website.

The PETT 2.0 website is accessible to authorized system and program administrators (i.e., CMS and its contractors), state administrators, and state viewers who monitor data submission or provide input to the PERM program. Users will have different website privileges depending on their program roles. During the registration process, state users will be asked to select which level of access they are requesting – state administrator or state viewer. Users will be approved by CMS and Lewin after the state eligibility lead notifies CMS and Lewin regarding which individuals should have access to the website.

The remaining sections of this document describe how to access and use the PETT 2.0 website.

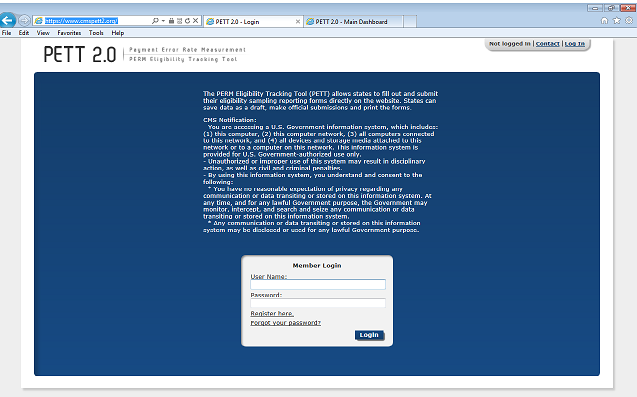

To access this feature: Go to the PETT 2.0 website at https://www.cmspett2.org.

The login screen is the first screen that users will see when they go to the PETT 2.0 website. New users will need to register. If you have already registered, enter your case-sensitive user name and password and click the “Login” button.

If a user is inactive for 15 minutes, the user will have to log in again to continue working. After logging in, the user will be returned automatically to the web section they had been working on.

Figure 1: Login screen

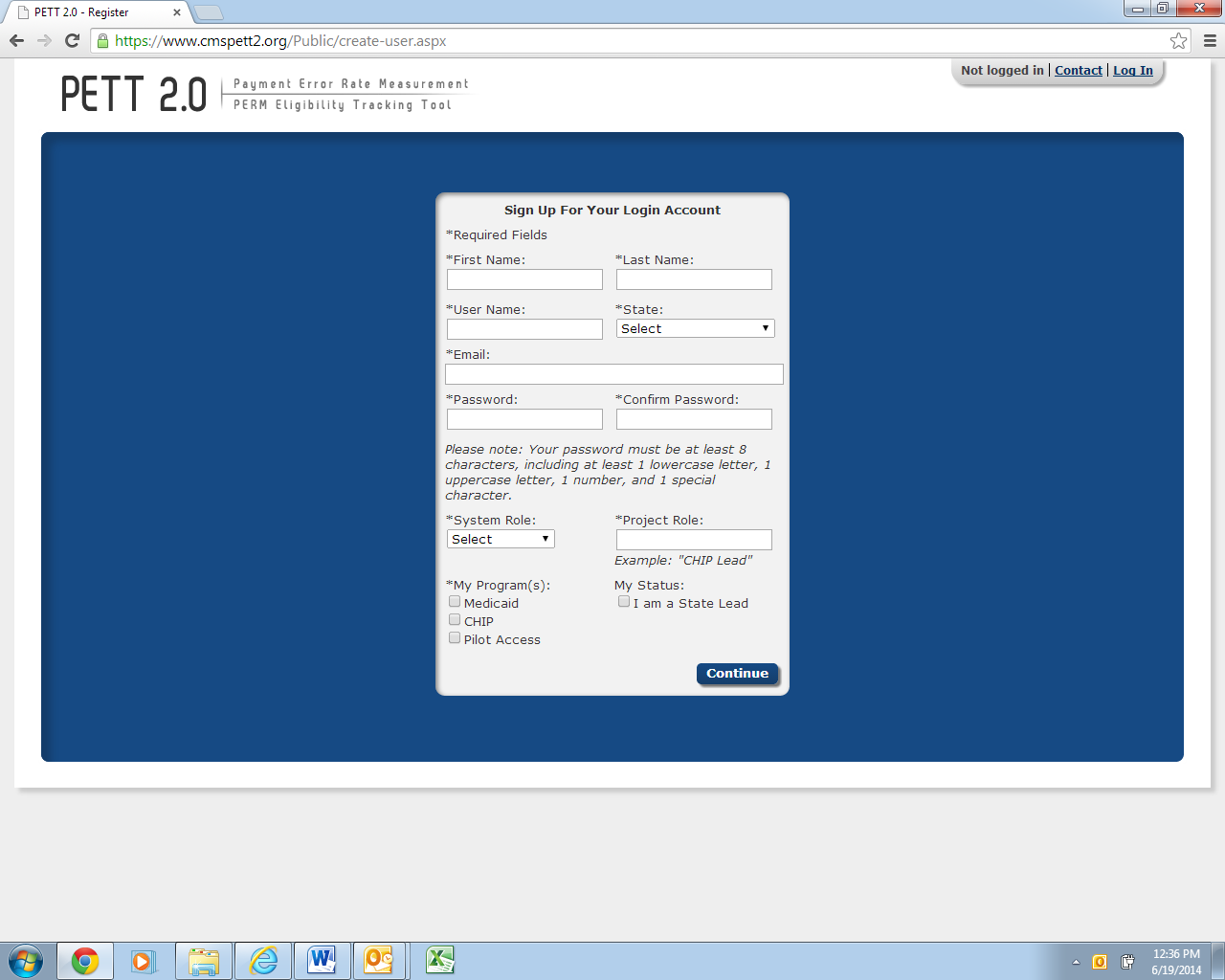

To access this feature: Click the “Register here” link on the login screen.

Figure 2: Registration page

New users must register for access to the PETT 2.0 website. Each state will be allowed to have up to three users. Each state user will be designated as either a state administrator or a state viewer. State administrators will be able to input, edit, and review their state’s pilot review findings, while state viewers will only be able to review their state’s submitted pilot review findings.

During the registration process, state users should select the appropriate level of access from the “System Role” drop-down box. State users should also identify their “Project Role” (e.g., CHIP lead, reviewer, state contractor). The state PERM eligibility lead has the option of selecting the box under “My Status” to indicate their role as the State Lead.

Users need to check “Pilot Access” under “My Program(s).” The Medicaid and CHIP boxes are for FY2013 routine PERM eligibility users only. NOTE: If pilot users currently have access to PETT 2.0 for the FY2013 PERM eligibility cycle, users should submit an email to [email protected] or utilize the Contact feature, described in Section 3.3, to contact system administrators who will update the user’s access to allow the user to view the pilot functionality on the website.

New users will need to create a case-sensitive user name and password. Passwords must be at least eight characters long and contain at least one of each of the following:

A number

An upper case letter

A lower case letter

A special character !#$%&()*+,-./:;<=>?@[\]^_{|}~

Users will need to change their passwords every 60 days; after changing the password, the user will need to log in again.
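The password rules above can be expressed as a short check. The following is a minimal sketch, not the PETT 2.0 implementation: the function name `password_problems` and the wording of the messages are illustrative assumptions, and only the length and character rules come from this guide.

```python
import string
from typing import List

# Special characters copied from the list in this guide.
SPECIAL_CHARACTERS = "!#$%&()*+,-./:;<=>?@[\\]^_{|}~"
MIN_LENGTH = 8


def password_problems(password: str) -> List[str]:
    """Return the documented password rules that `password` does not meet."""
    problems = []
    if len(password) < MIN_LENGTH:
        problems.append("fewer than eight characters")
    if not any(ch in string.digits for ch in password):
        problems.append("no number")
    if not any(ch in string.ascii_uppercase for ch in password):
        problems.append("no upper case letter")
    if not any(ch in string.ascii_lowercase for ch in password):
        problems.append("no lower case letter")
    if not any(ch in SPECIAL_CHARACTERS for ch in password):
        problems.append("no special character")
    return problems
```

An empty list means the candidate password meets every rule listed above. Note that quotation marks and the backtick are not in the documented special character list, so a password that relies on one of them would still be reported as having no special character.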

All new account registrations are sent to system administrators (CMS and Lewin) for review. If they approve the new user, the system administrator will enable the account and send the new user a “User Account Approved” email with login instructions. Confirmation emails will be sent from [email protected] typically within 48 hours of registration. To ensure that emails are received, users should add [email protected] to their contact list and follow up with CMS and Lewin if confirmation is not received within 48 hours.

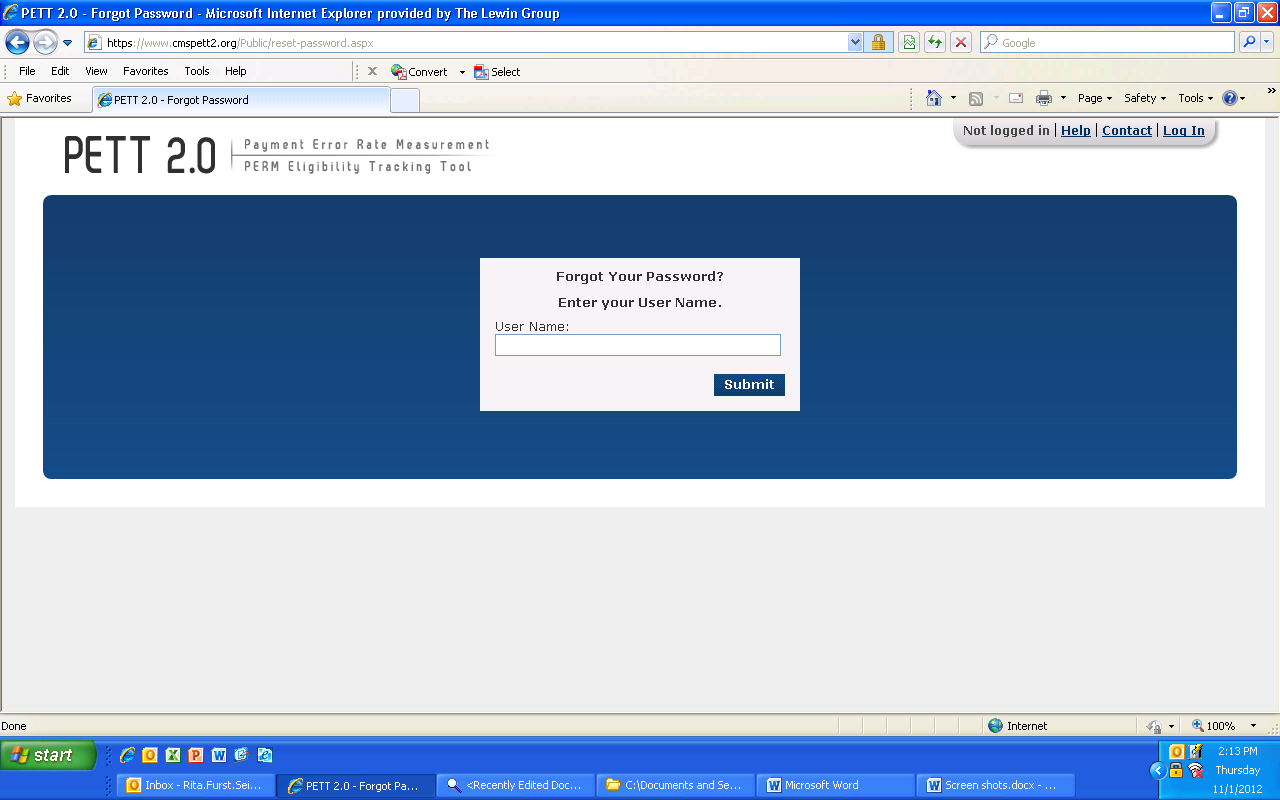

To access this feature: Click the “Forgot your password?” link on the login screen.

Users should enter their user name. A new password will be sent to the email address associated with the user name. Users can only reset their password once per day.

Forgotten user names can only be retrieved by contacting the system administrators through the contact form (described in Section 3.3).

Figure 3: New password dialog box

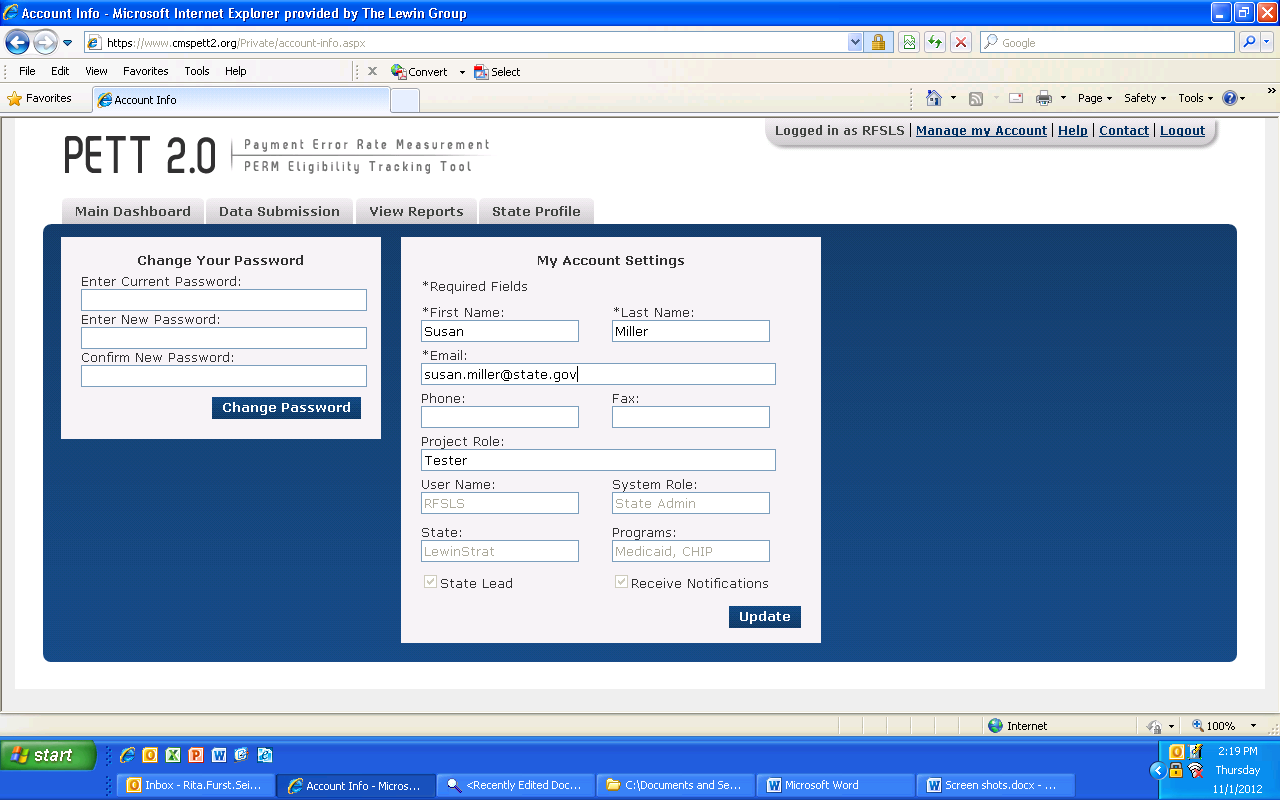

To access this feature: Click the “Manage My Account” link in the upper right navigation bar.

The Manage My Account page allows users to perform account management tasks such as changing their password, name, and contact information.

Users cannot update their user name, system role, state, program, status as state lead, or preference to receive notifications. To change these settings, users should contact the system administrators using the contact form (described in Section 3.3).

Figure 4: Manage My Account screen

The Help area, which would typically provide access to the contents of this user guide, will not be available at this time to support the submission of the pilot findings. If a user selects the Help link, the user will be directed to the PERM FY13 PETT 2.0 user guide.

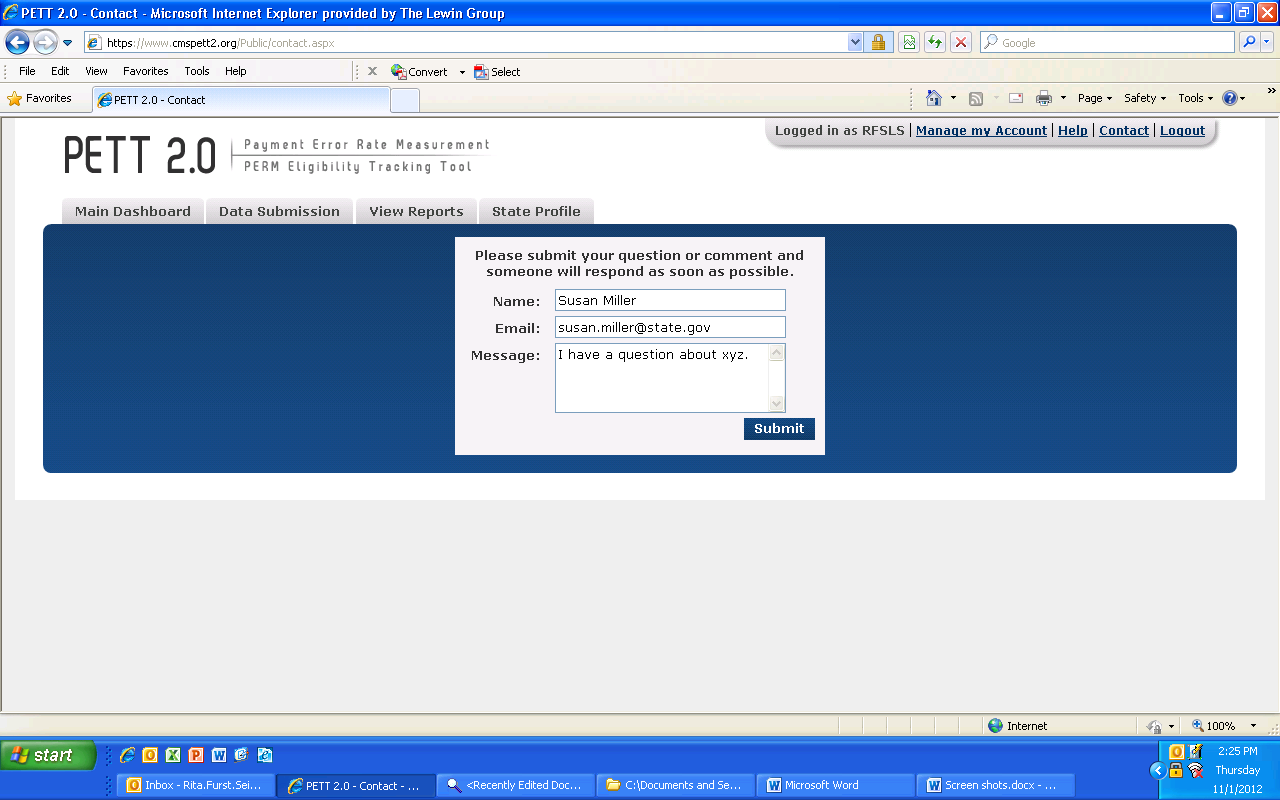

To access this feature: Click the “Contact” link in the upper right navigation bar from any area of the system.

PETT 2.0 contains a Contact feature allowing users to submit questions and comments directly to system administrators. The system administrators will be able to assist users with any issues users may encounter including data submission errors and PETT 2.0 website use. Users will receive a response to their messages typically within 48 hours of submission. To ensure that they receive a response, users should add [email protected] to their contact list. NOTE: System administrators from Lewin will be available for any technical assistance needs. If a user encounters an issue that is not easily resolved via email or if the question is complicated, please request a teleconference and a Lewin administrator will contact the user via telephone, utilizing WebEx functionality, as needed.

The contact form can be accessed even if users are not logged into the PETT 2.0 system.

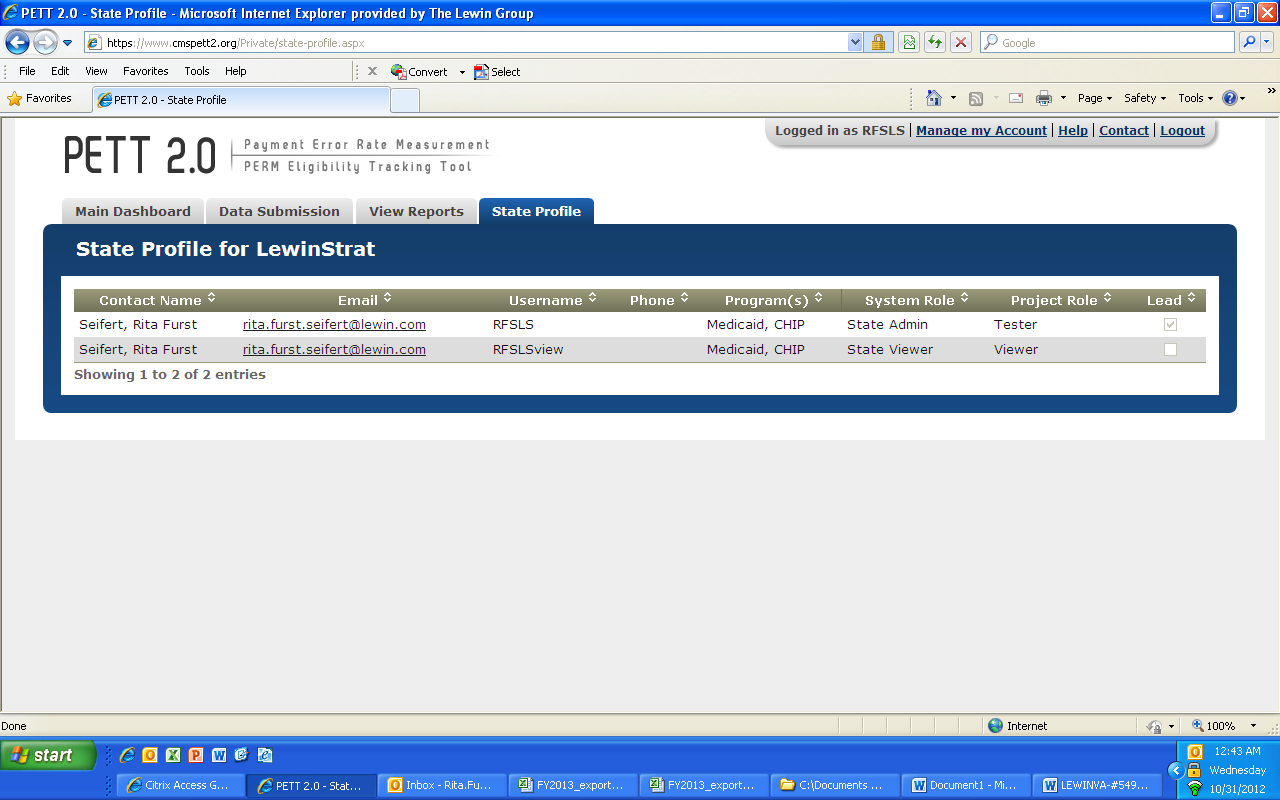

To access this feature: Hover your mouse over the “State Profile” tab and click on “View State Details.”

The State Profile shows the list of users for the selected state. Each row shows the contact name, email address, user name, programs assigned or pilot, system access role, project role and lead.

Figure 5: Contact screen

Figure 6: State Profile

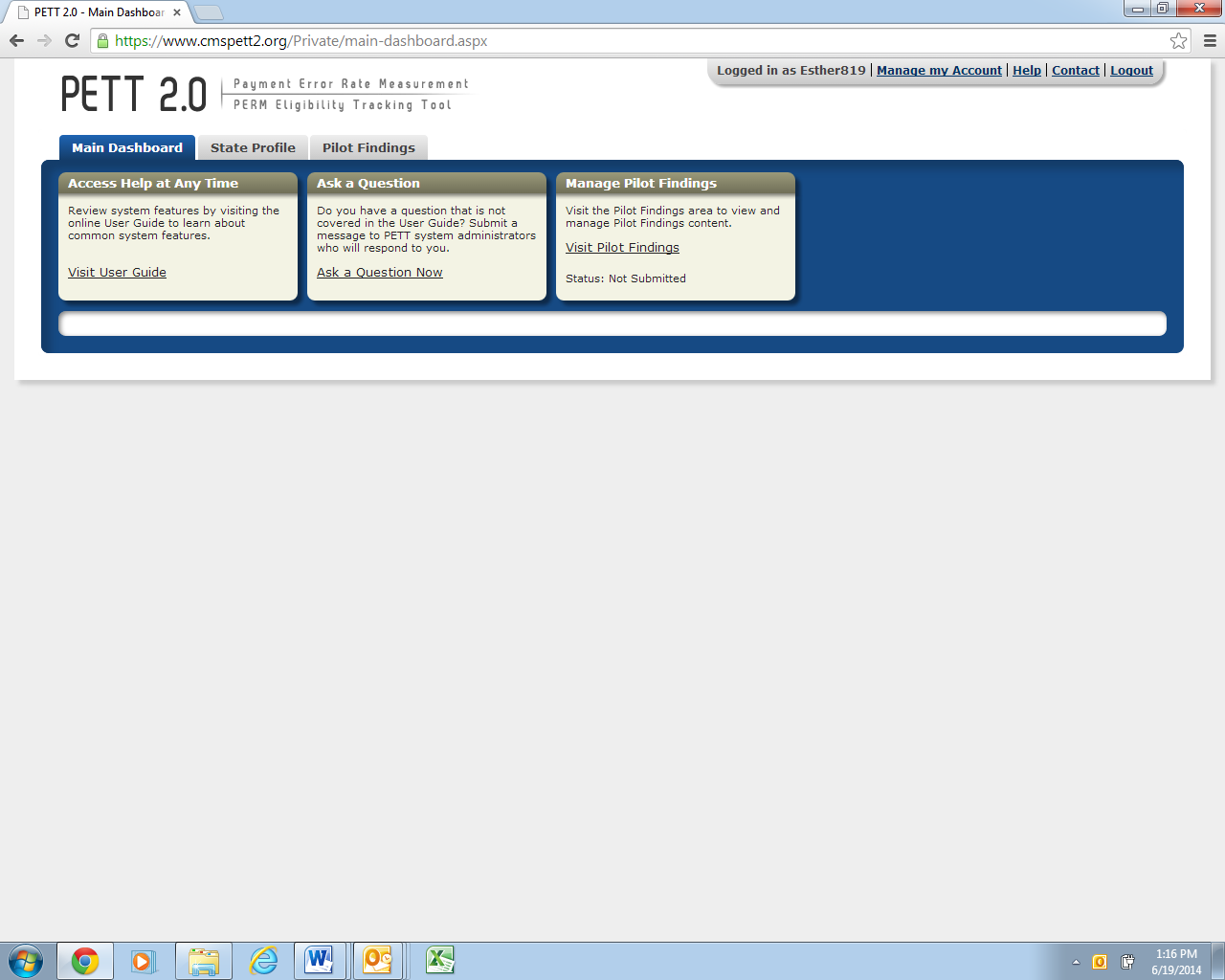

States are required to enter Round 1 Pilot Findings directly on PETT 2.0. After a successful log-in, states are directed to the home page of the PETT 2.0 site (see Figure 7). From the home page, states can navigate to the Pilot Findings reporting section in one of two ways. As indicated in Figure 7, states can select “Visit Pilot Findings” from the third box, labeled “Manage Pilot Findings.” This box also shows the status of the state’s pilot findings report: Not Submitted, Submitted – Under CMS Review, Submitted – State Revising, or Approved.

Figure 7: Round 1 Pilot Findings PETT 2.0 Home Page

Alternatively, states can use the third grey tab, selecting “Pilot Findings” followed by “View and Manage Pilot Findings.” Both approaches will take states to the same location where pilot findings data can be reported.

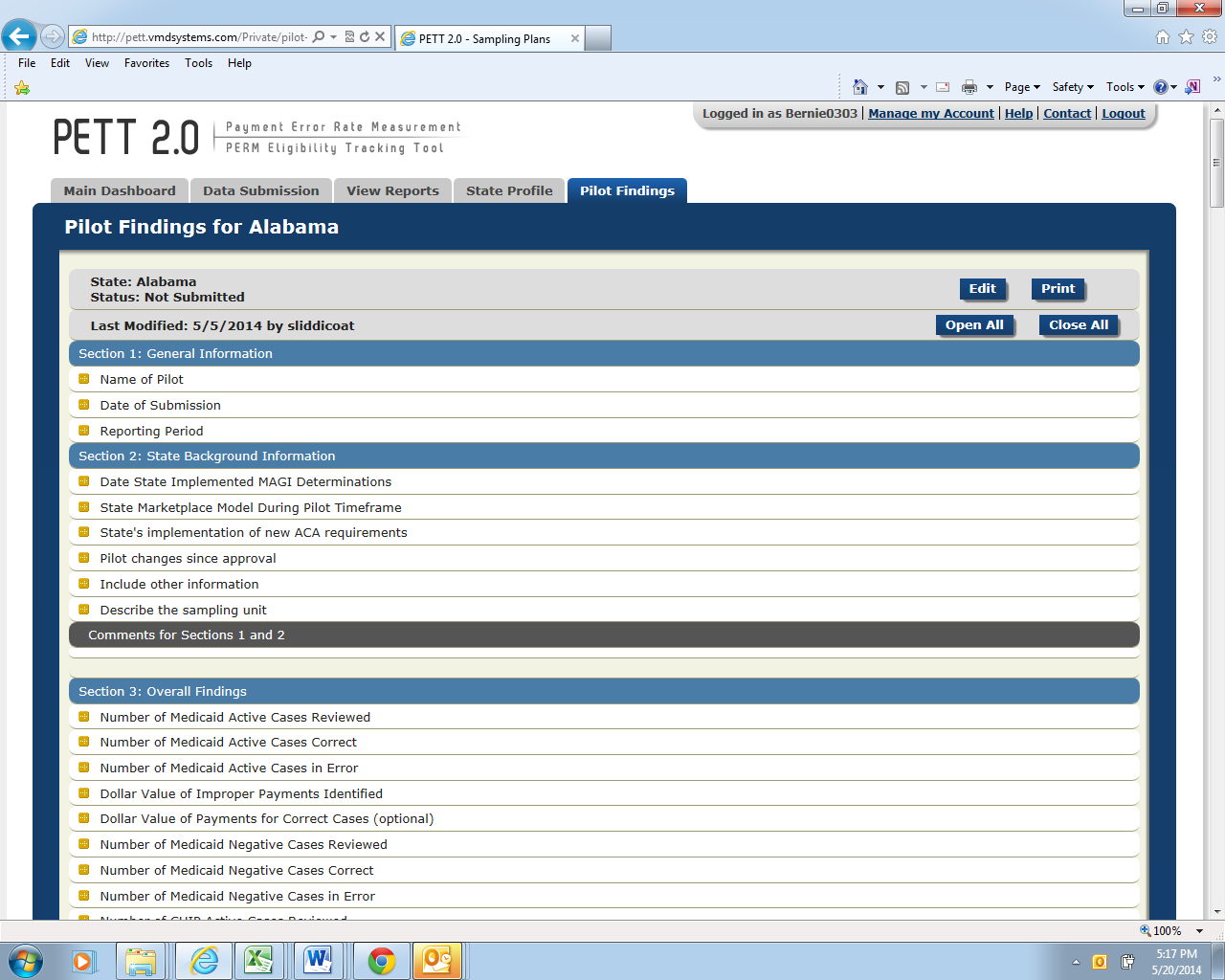

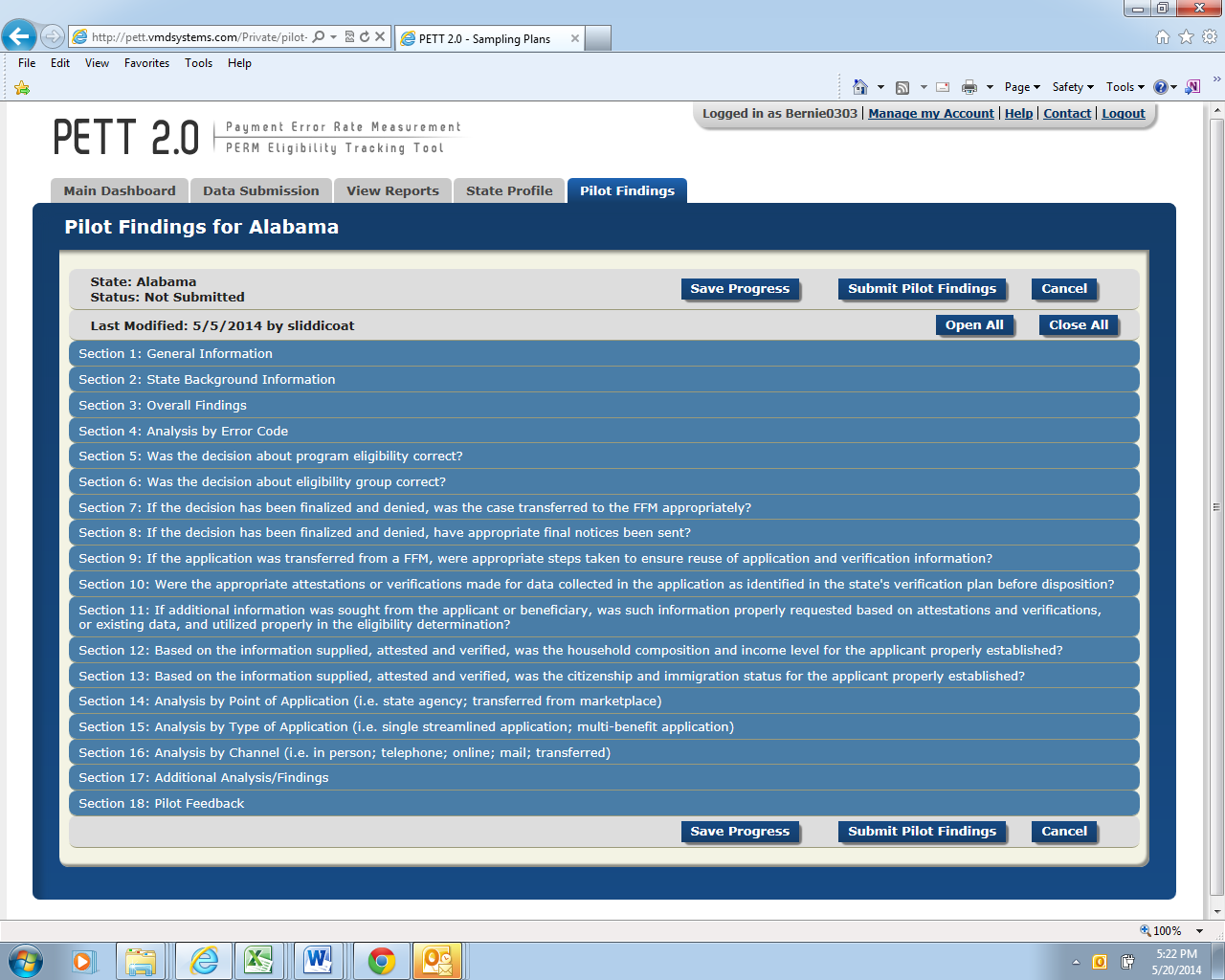

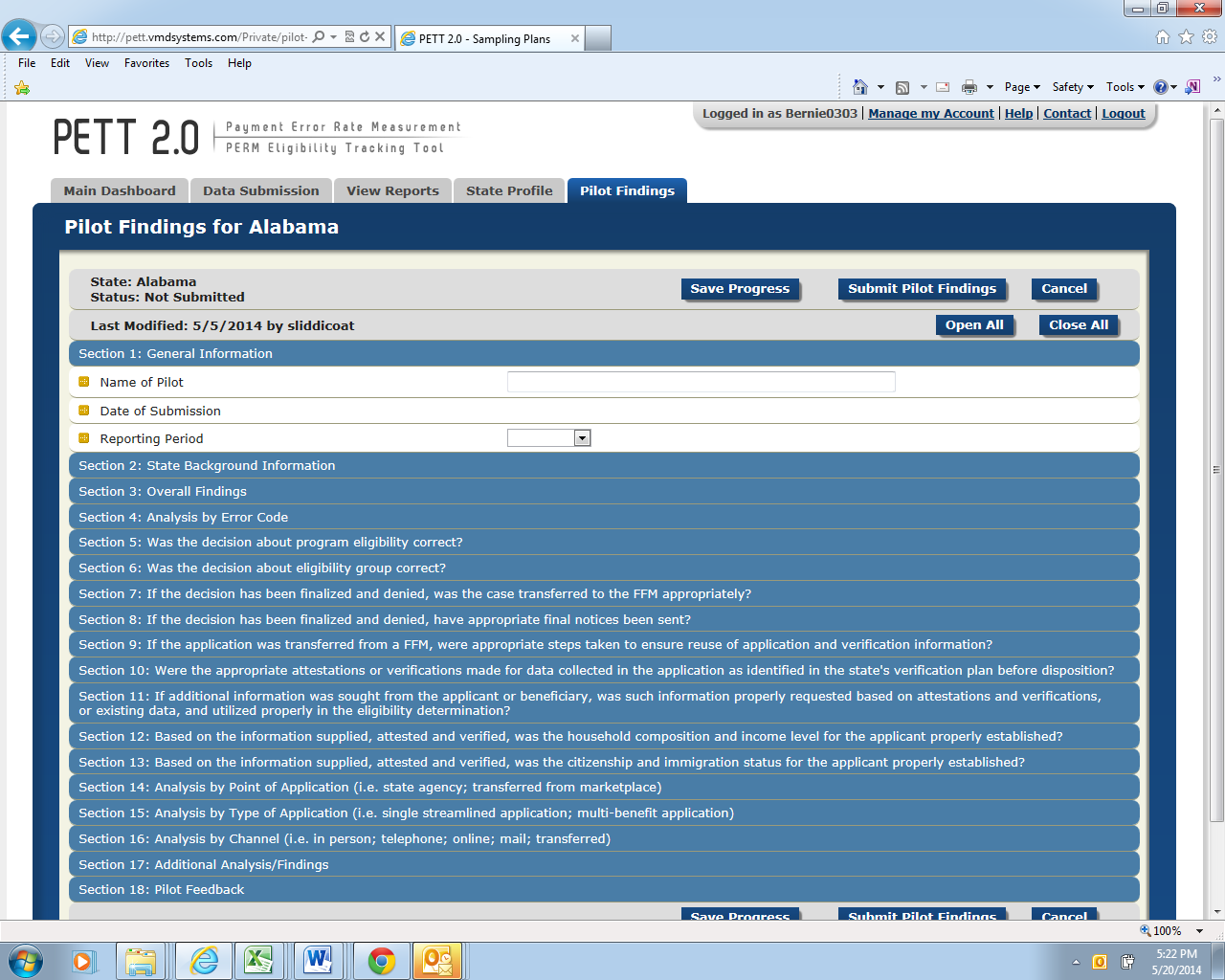

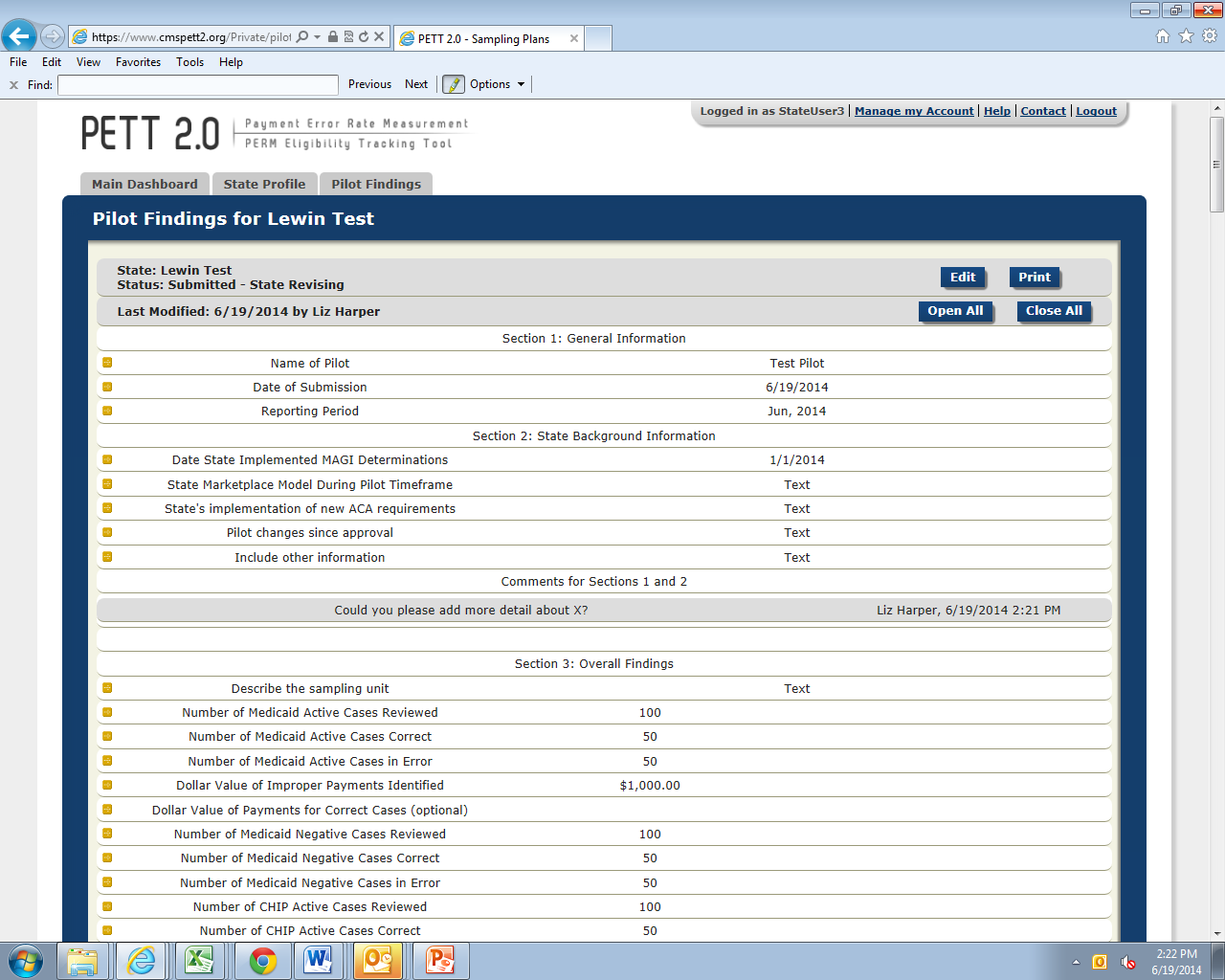

After navigating to the pilot findings report using one of the methods outlined above, states will be taken directly to the reporting tool. Figure 8 displays the initial view of the Pilot Findings Report. Each of the 18 required reporting sections is provided in this single location.

Figure 8: Initial View of the Pilot Findings Report

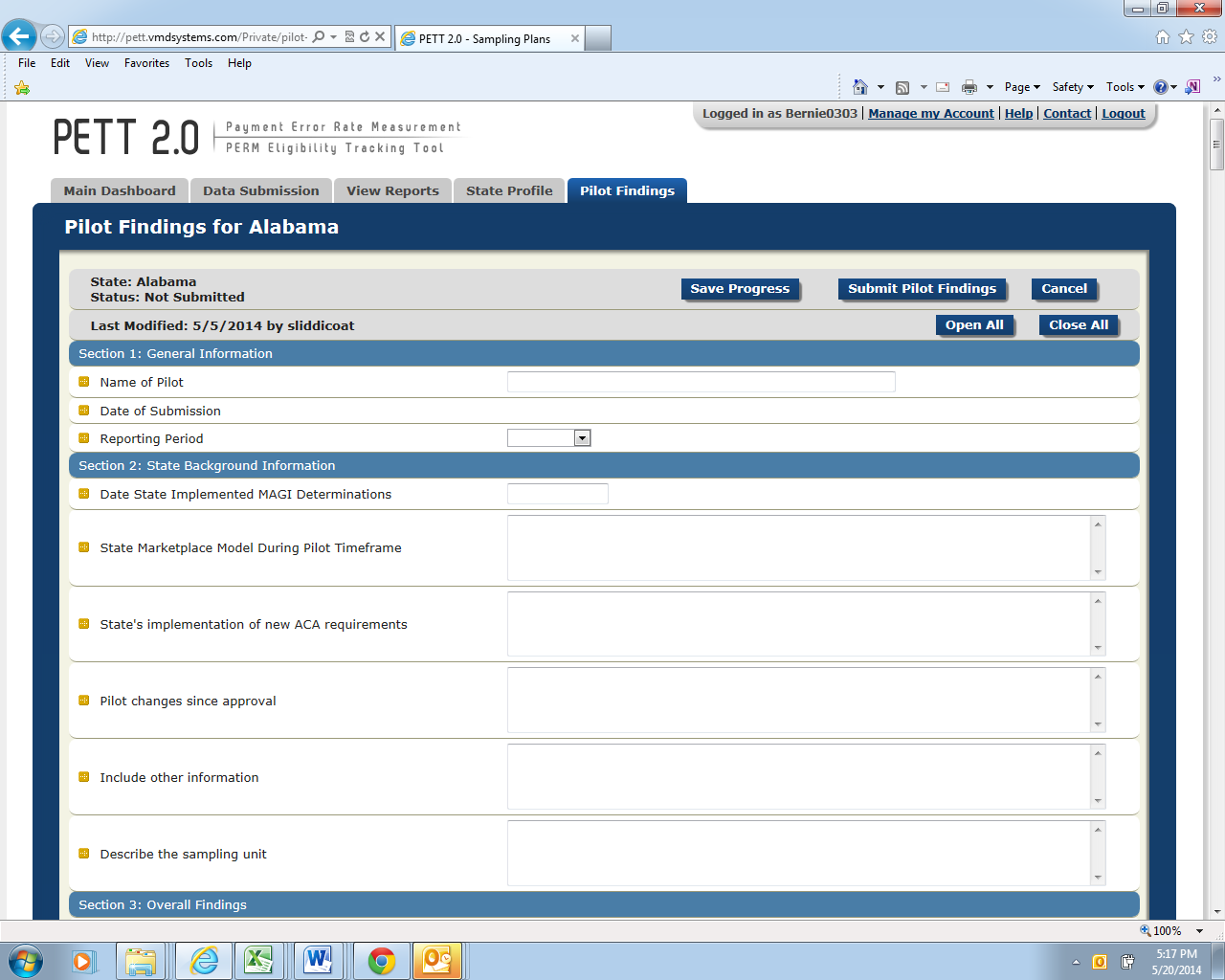

To begin entering findings, states first need to select the “Edit” button located in the top right corner of the report. Once this option is selected, states will be able to enter data in each section, as shown in Figure 9.

While entering findings, states should frequently use the “Save Progress” button, located at the bottom of the page after Section 18, to ensure that entered data is not lost. If states are only entering partial findings during a single user session, please remember to select “Save Progress” before logging out of the site. States should be aware that the PETT 2.0 site will time out after a user is inactive for 15 minutes. NOTE: If PETT 2.0 times out on a user that has not selected the “Save Progress” button, the user’s work will be lost.

Figure 9: View of Pilot Findings Report After Selecting “Edit”

To focus on a single section of the Pilot Findings Report, users should first select the “Close All” button (see Figure 10) and then click on the section they would like to populate (see Figure 11).

Figure 10: View with Each Section Closed

Figure 11: View with a Single Section Open

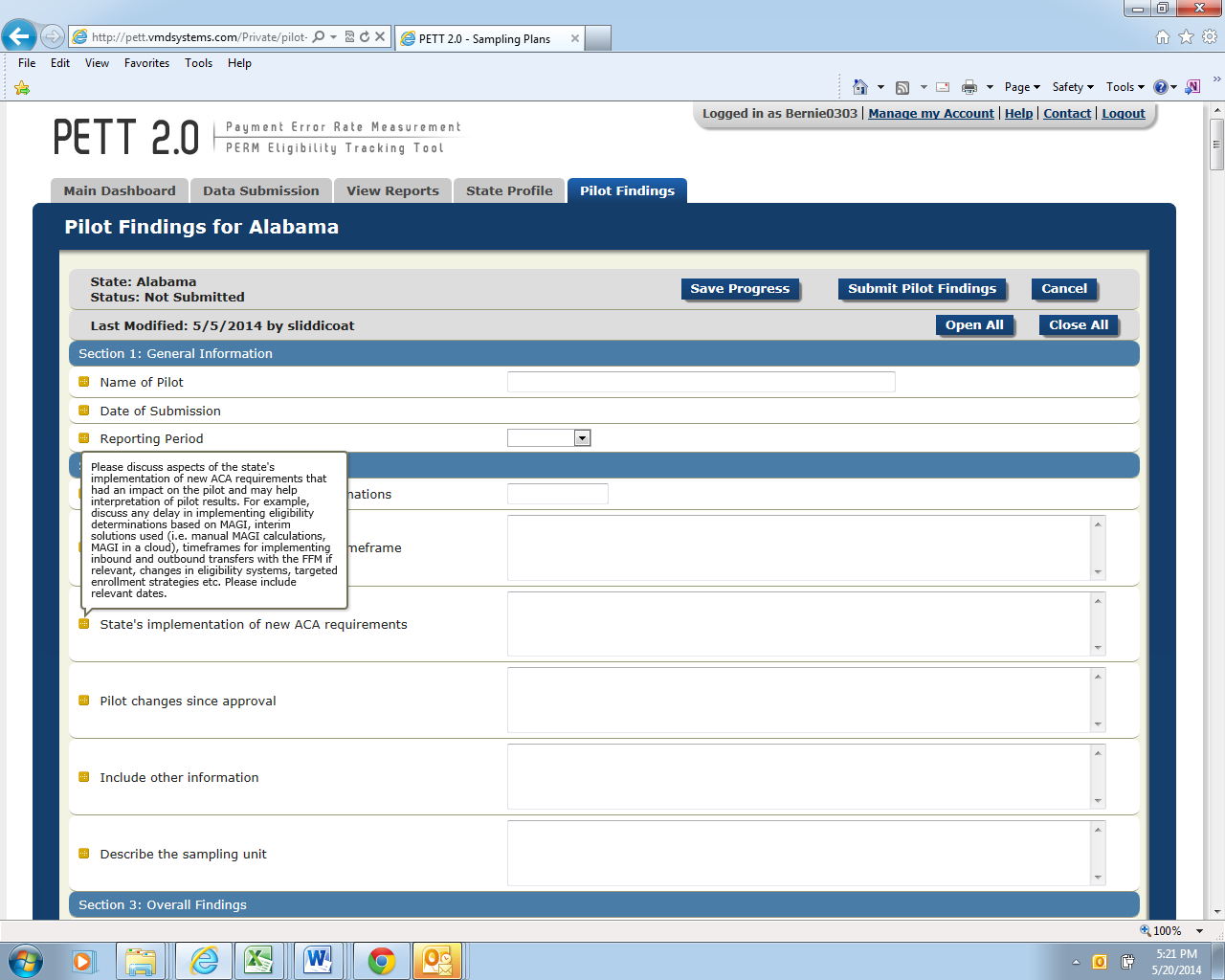

Instructions for populating each field can be accessed by hovering the cursor over the gold square to the left of the field under review, as demonstrated in Figure 12. Entries are typically number values or free-form text. You can use the Tab key on your keyboard to move from one entry box to the next. This is especially useful when there is a series of numeric entries.

As provided in the instructions submitted to states, there are a total of 18 sections that states will need to complete on PETT 2.0 to meet the pilot findings reporting requirements. Guidance for populating each section is provided below. Each question is listed with the type of input required in parentheses (i.e., number, text, calendar, drop down) and a description of the item.

NOTE: All fields in the form are required. If there is a field that the state does not need to populate (e.g., corrective actions for a type of error not found by the state), the user must enter “NA” in the field. If any fields are left blank, the site will not allow the findings to be submitted.
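Because a single blank field blocks submission, states that draft their findings outside PETT 2.0 may find it useful to check for blanks before entering the data. The following is a minimal sketch under stated assumptions: the function `blank_fields`, the dictionary layout, and the `exempt` parameter are hypothetical and are not part of the PETT 2.0 website. The `exempt` parameter is there for fields the guide describes as auto-filled or optional.

```python
from typing import Dict, Iterable, List


def blank_fields(form: Dict[str, str], exempt: Iterable[str] = ()) -> List[str]:
    """Return the names of fields that are empty or contain only whitespace.

    A field holding "NA" counts as populated, matching the NOTE above.
    `exempt` lists field names that should not be checked (an assumption
    made for this sketch, e.g. a value the site fills in automatically).
    """
    skip = set(exempt)
    return [
        name
        for name, value in form.items()
        if name not in skip and not value.strip()
    ]
```

For example, a draft with “NA” entered for corrective actions passes the check for that field, while a root cause field containing only spaces is reported as blank.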

Figure 12: Accessing Pilot Findings Report Instructions

Figure 13: General Information

Name of Pilot: (text)

Include the name of the pilot you are reporting results for in the free form text box; this should match the name of your pilot from the pilot proposal.

Date of Submission: (no entry--autofill)

PETT will automatically populate this value when the form is submitted as final; no state action is required.

Reporting period: (drop down)

Select “June 2014” from the drop down list.

Figure 14: State Background

Date State Implemented MAGI Determinations: (calendar)

Click inside the entry box and a calendar will appear. Select the date your state started determining Medicaid/CHIP eligibility using new MAGI rules (e.g., if the state began making MAGI determinations on November 15, 2013 for applicants applying for Medicaid benefits starting January 1, 2014, the state would enter 11/15/13 in this field).

State Marketplace Model During Pilot Timeframe: (text)

Specify the marketplace model utilized by the state from October 2013 through March 2014. If an FFM or partnership model state, please indicate if the state delegated determination authority to the FFM during the pilot timeframe. If the pilot timeframe includes an overlapping period of both the assessment and determination model, please indicate such and include relevant date ranges. Also, specify if your state changed models during the pilot timeframe.

State’s Implementation of new ACA requirements: (text)

Please discuss aspects of the state's implementation of new ACA requirements that had an impact on the pilot and may help interpretation of pilot results. The state should describe its implementation of ACA requirements for Medicaid and CHIP eligibility, including any delays, systems issues, and relevant mitigation plans. Please also include relevant dates. The type of information CMS intends to collect in this section includes, but is not limited to:

Delays in implementing MAGI eligibility determinations

Interim MAGI solutions used (e.g. manual MAGI calculations, MAGI in a cloud) along with the date span these interim solutions were used

Timeframes for implementing inbound and outbound FFM transfers if relevant to pilot

Changes in eligibility systems and relevant dates

Targeted enrollment strategies utilized

Relevant waivers (i.e. FFM Flat File waiver)

Relevant mitigation plans

CMS understands that information in this section may impact a state’s ability to report on certain elements contained in the reporting form. If the state was timely with implementation, experienced no delays, did not implement any targeted enrollment strategies, etc., please state that in this section.

Pilot changes since approval: (text)

Has anything changed about the pilot since approval of the pilot proposal? If yes, please describe. The approved pilot proposal is CMS’ record of how the state chose to conduct the round 1 pilot. If there is anything in the approved proposal that is no longer accurate or anything missing from the proposal, the state should include a description in this section. If the approved pilot proposal accurately reflects the state’s round 1 pilot, please put “no” for this section.

Include other information: (text)

Please include any other information that may help interpretation of the pilot results. The state should include any other information that someone reviewing the state’s results should know. For example, if your state will be entering “0”s in an entire section of this reporting template because it is not something your state is able to report on, this field can be used to describe that. CMS understands that all states may not be able to provide information for every element and only requires that the state provide reasoning why. This field is optional and may be left blank.

Figure 15 shows the Section 3 required fields. Below, each field is described in detail.

Figure 15: Overall Findings

Describe the sampling unit: (text)

Please describe the sampling unit and the level at which the state is reporting errors (i.e., the level at which the state reports errors is how the state defines a “case” throughout reporting). States should describe whether they sampled at the individual or household level.

If your state sampled at the individual level, describe whether you are reporting on the sampled individual or opted to review the entire household and are reporting on each individual in the household. For reporting purposes, a “case” = an individual.

If your state sampled at the household level, describe whether you are reporting on each individual in the household (for reporting purposes, a “case” = an individual; example: Four individuals in a household. One is in error and three are correct so the state would report one error and three correct cases) or if you are reporting on the household itself (for reporting purposes, a “case” = a household; example: a household is reported as one case. Two out of four household members had an eligibility error so entire household is counted as one error for reporting purposes). Please remember that sampling at the household level and reporting on only one individual in the household is not an option.

Please note that, depending on the sampling level and reporting level, the reporting numbers may not match the sample size numbers. CMS understands this and considers it acceptable because no error rates or other valid statistics are being based on the pilots.
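The two reporting levels described above produce different counts from the same reviewed households. The following is a minimal sketch of that arithmetic, assuming each reviewed household is represented as a list of flags, one per member, where `True` means the member has an eligibility error. The function name `count_cases` and this data layout are illustrative assumptions.

```python
from typing import List, Tuple


def count_cases(households: List[List[bool]], case_is_household: bool) -> Tuple[int, int]:
    """Return (correct, in_error) at the chosen reporting level.

    case_is_household=False: a "case" is an individual, so every member
    is counted separately.
    case_is_household=True: a "case" is a household, and a household with
    any member in error counts as one error (as in the example above).
    """
    correct = 0
    in_error = 0
    for members in households:
        if case_is_household:
            if any(members):
                in_error += 1
            else:
                correct += 1
        else:
            errors = sum(members)
            in_error += errors
            correct += len(members) - errors
    return correct, in_error
```

With the guide's first example, `count_cases([[True, False, False, False]], case_is_household=False)` yields three correct cases and one error. With the second example, `count_cases([[True, True, False, False]], case_is_household=True)` yields zero correct cases and one error.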

Medicaid Active

Number of Medicaid Active Cases Reviewed: (number)

Number of cases reviewed and determined correct or in error (number of cases correct and number of cases in error should add up to this number).

Number of Medicaid Active Cases Correct: (number)

Number of cases found to be correct based on the state-specific definition of what constitutes a “correct” case, per the state’s approved pilot sampling plan.

Number of Medicaid Active Cases in Error: (number)

Number of cases found to be in error based on the state-specific definition of what constitutes an “error,” per the state’s approved pilot sampling plan.

Dollar Value of Improper Payments Identified: (number--do not include “$”)

Total dollars for active cases identified by the state as “errors” based on the state-specific definition of an “error” and collected according to the timeframes specified in the state’s approved pilot sampling plan.
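Dollar fields take a bare number without the “$” sign. The following is a minimal sketch of cleaning a dollar value exported from another system before typing it into the form. The function name `dollar_entry` is hypothetical, and removing thousands separators is an assumption made here; this guide only states that “$” must not be included.

```python
from decimal import Decimal, InvalidOperation


def dollar_entry(raw: str) -> str:
    """Turn a value such as "$1,234.50" into the bare number "1234.50"."""
    # Assumption: commas are stripped along with the dollar sign.
    cleaned = raw.strip().replace("$", "").replace(",", "")
    try:
        value = Decimal(cleaned)
    except InvalidOperation:
        raise ValueError(f"not a dollar amount: {raw!r}") from None
    if not value.is_finite():
        # Decimal accepts "NaN" and "Infinity"; neither is a dollar amount.
        raise ValueError(f"not a dollar amount: {raw!r}")
    return str(value)
```

`Decimal` is used instead of `float` so that the cents are preserved exactly as written.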

Dollar Value of Payments for Correct Cases: (number--do not include “$”)

This field is optional; if you were a state that opted to conduct payment reviews on all sampled cases, you can record the dollar value of correct payments here.

Medicaid Negative

Number of Medicaid Negative Cases Reviewed: (number)

Number of cases reviewed and determined correct or in error (number of cases correct and number of cases in error should add up to this number).

Number of Medicaid Negative Cases Correct: (number)

Number of cases found to be correct based on the state-specific definition of what constitutes a “correct” case, per the state’s approved pilot sampling plan.

Number of Medicaid Negative Cases in Error: (number)

Number of cases found to be in error based on the state-specific definition of what constitutes an “error,” per the state’s approved pilot sampling plan.

CHIP Active

Number of CHIP Active Cases Reviewed: (number)

Number of cases reviewed and determined correct or in error (number of cases correct and number of cases in error should add up to this number).

Number of CHIP Active Cases Correct: (number)

Number of cases found to be correct based on the state-specific definition of what constitutes a “correct” case, per the state’s approved pilot sampling plan.

Number of CHIP Active Cases in Error: (number)

Number of cases found to be in error based on the state-specific definition of what constitutes an “error,” per the state’s approved pilot sampling plan.

Dollar Value of Improper Payments Identified: (number--do not include “$”)

Total dollars for active cases identified by the state as “errors” based on the state-specific definition of an “error” and collected according to the timeframes specified in the state’s approved pilot sampling plan.

Dollar Value of Payments for Correct Cases: (number--do not include “$”)

This field is optional; if you were a state that opted to conduct payment reviews on all sampled cases, you can record the dollar value of correct payments here.

CHIP Negative

Number of CHIP Negative Cases Reviewed: (number)

Number of cases reviewed and determined correct or in error (number of cases correct and number of cases in error should add up to this number).

Number of CHIP Negative Cases Correct: (number)

Number of cases found to be correct based on the state-specific definition of what constitutes a “correct” case, per the state’s approved pilot sampling plan.

Number of CHIP Negative Cases in Error: (number)

Number of cases found to be in error based on the state-specific definition of what constitutes an “error,” per the state’s approved pilot sampling plan.
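In each of the four categories above, the number of cases correct and the number of cases in error should add up to the number of cases reviewed. The following is a minimal sketch of checking that rule before data entry. The `CategoryCounts` class and the `mismatched_categories` function are illustrative assumptions, not part of PETT 2.0.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class CategoryCounts:
    reviewed: int
    correct: int
    in_error: int


def mismatched_categories(findings: Dict[str, CategoryCounts]) -> List[str]:
    """Return the categories where correct + in_error != reviewed."""
    return [
        name
        for name, counts in findings.items()
        if counts.correct + counts.in_error != counts.reviewed
    ]
```

The keys would typically be the four categories in this section (Medicaid Active, Medicaid Negative, CHIP Active, CHIP Negative). An empty result means every category is internally consistent.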

Figure 16 shows the Section 4 required fields. Below, each field is described in detail.

Figure 16: Analysis by Error Code

Separate subsections for Medicaid Active, Medicaid Negative, CHIP Active, and CHIP Negative

Please list all of the error codes/classifications that the state used: (text)

Each error code/classification should be entered in a separate row. The number of rows in PETT will expand to accommodate as many error codes/classifications as the state used. States should list all error classifications/codes used for the pilot (e.g., E – eligible; IE – ineligible; TE – technical errors; V – valid negative action; I – invalid negative action). This should match information from the “Specify how errors will be identified and classified” section of your state’s approved pilot proposal.

Number of errors: (number)

The state should include the number of errors identified for each error code.

Dollar value of improper payments: (number--do not include “$”)

The state should include the dollar value of improper payments associated with each error code. This is not required for eligible codes (i.e., E – eligible) or negative cases.
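The per-code counts and dollar totals requested in this section can be tallied from a list of reviewed cases. The following is a minimal sketch, assuming each case in error is recorded as an (error code, improper payment) pair. The function name `tally_by_error_code` and the sample amounts in the usage note are made up for illustration; the codes themselves are whatever the state defined in its approved pilot proposal.

```python
from decimal import Decimal
from typing import Dict, Iterable, Tuple


def tally_by_error_code(
    cases: Iterable[Tuple[str, str]],
) -> Dict[str, Tuple[int, Decimal]]:
    """Map each error code to (number of errors, total improper payments).

    Each item in `cases` is (error_code, improper_payment), with the
    payment given as a bare number string such as "120.00".
    """
    rows: Dict[str, Tuple[int, Decimal]] = {}
    for code, dollars in cases:
        count, total = rows.get(code, (0, Decimal("0")))
        rows[code] = (count + 1, total + Decimal(dollars))
    return rows
```

For example, two cases coded “IE” with hypothetical payments of 120.00 and 30.50 produce one row for “IE” with a count of 2 and a total of 150.50. Each key in the result corresponds to one row in the PETT error code table.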

Questions for each of these sections are listed below. For Section 5, we have provided more detailed guidance for each field. Sections 6 – 13 are identical to Section 5 in terms of level of information requested with the only difference being that each section addresses a different area of required pilot findings. Please note that states may report a case in more than one section should multiple problems be identified on a case.

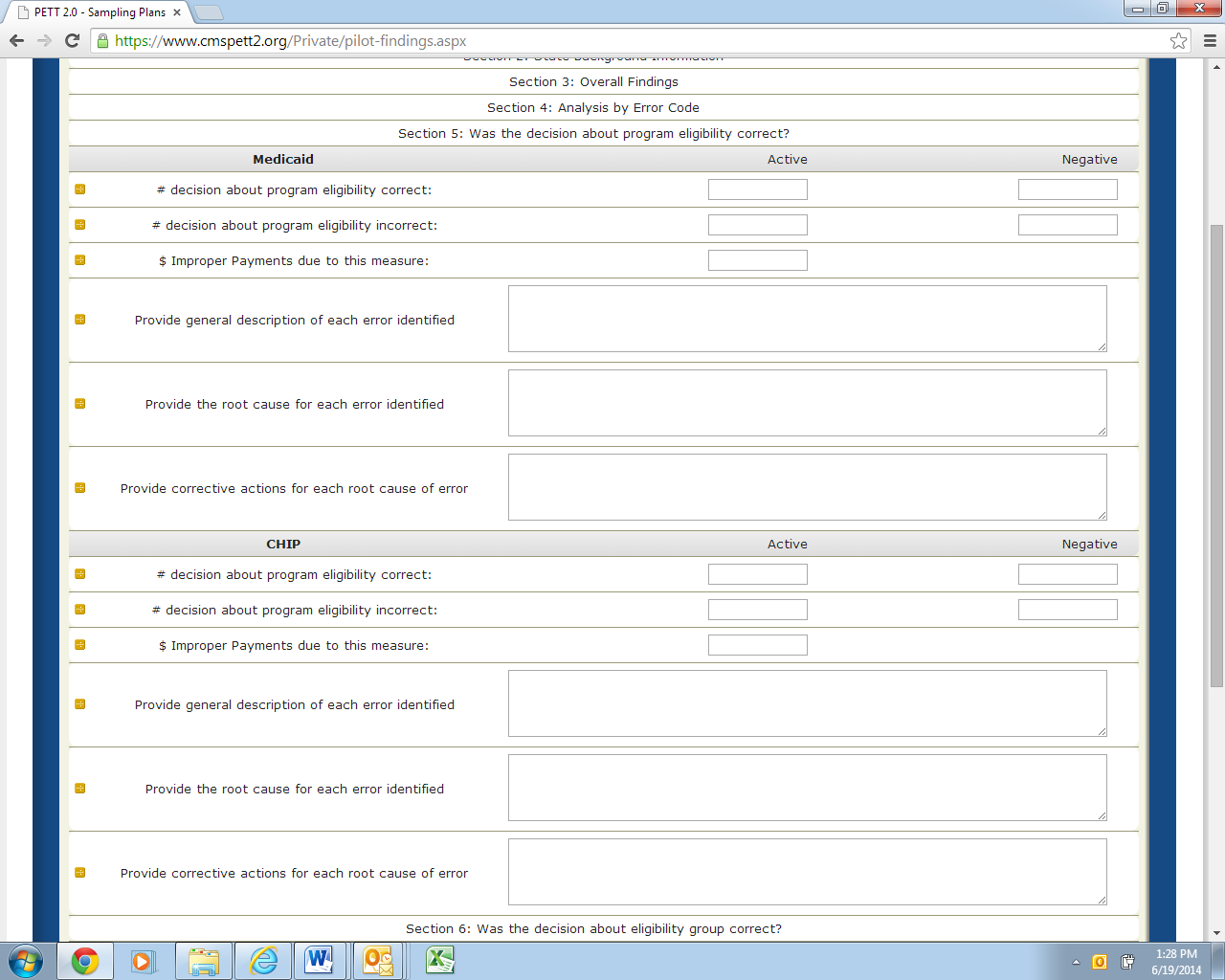

Section 5 – Was the decision about program eligibility correct?

Figure 17 shows the Section 5 required fields. Below, each field is described in detail.

Figure 17: Was the decision about program eligibility correct

Separate subsections for Medicaid and CHIP

Number of decisions about program eligibility correct: Active (number) and Negative (number)

Include the number of Active cases and separately the number of Negative cases for which the decision about program eligibility was correct.

Number of decisions about program eligibility incorrect: Active (number) and Negative (number)

Include the number of Active cases and separately the number of Negative cases for which the decision about program eligibility was incorrect.

Dollar value of improper payments due to this measure: Active (number--do not include “$”)

Include the dollar value of improper payments for cases where the decision about program eligibility was incorrect.

Provide general description of each error identified: (text)

States should enter a general description for each case where the decision about program eligibility was incorrect. The general descriptions should briefly describe the errors (e.g., the state caseworker did not verify income information from the hub as required, and the recipient was over the Medicaid income limit).

Provide the root cause for each error identified: (text)

For each error, states should provide the root cause of the error. States should describe the underlying causes of each error, not just the surface causes, and why a particular program/operational procedure caused the specific errors (e.g., no internal controls in place at the state to ensure the caseworker is completing each required verification; caseworkers not completing all required verifications).

Provide corrective actions for each root cause of error: (text)

For each root cause, states should discuss corrective actions to avoid such errors in the future. States should describe the corrective actions that the state will implement and how these actions will reduce or eliminate errors. For each corrective action the state should discuss, with as much detail as possible (at a minimum):

The state key personnel and components that will be responsible for implementing the corrective action.

How the root cause of the error will be addressed with the corrective action.

Details on the action to be taken, providing a step-by-step process, where applicable. States should identify the specific actions that will be taken (e.g., systems changes, new and/or updated trainings, policy clarifications).

The corrective action implementation dates and the expected due dates for resolving problems.

Expected results of the corrective action and how the state plans to monitor the effectiveness of the corrective action.

Any other corrective action information CMS should know.

While states must discuss corrective actions for errors, it remains the state’s decision on which corrective actions to take to decrease or eliminate errors. States are encouraged to use the most cost-effective corrective actions that can be implemented to best correct and address the root causes of the errors. If the state determines that the cost of implementing a corrective action outweighs the benefits, then the final decision of implementing the corrective action is the state’s decision. In cases where the state chooses not to implement an action, the cost-benefit analysis and the final decision should be included in the corrective action discussion.

States will be required to provide an update on these corrective actions, including an evaluation of the effectiveness of the corrective actions, when reporting on the round 2 pilots in December 2014. Therefore, a thorough and detailed description of the state’s corrective action strategy will benefit states in conducting the second round of pilots later this year.

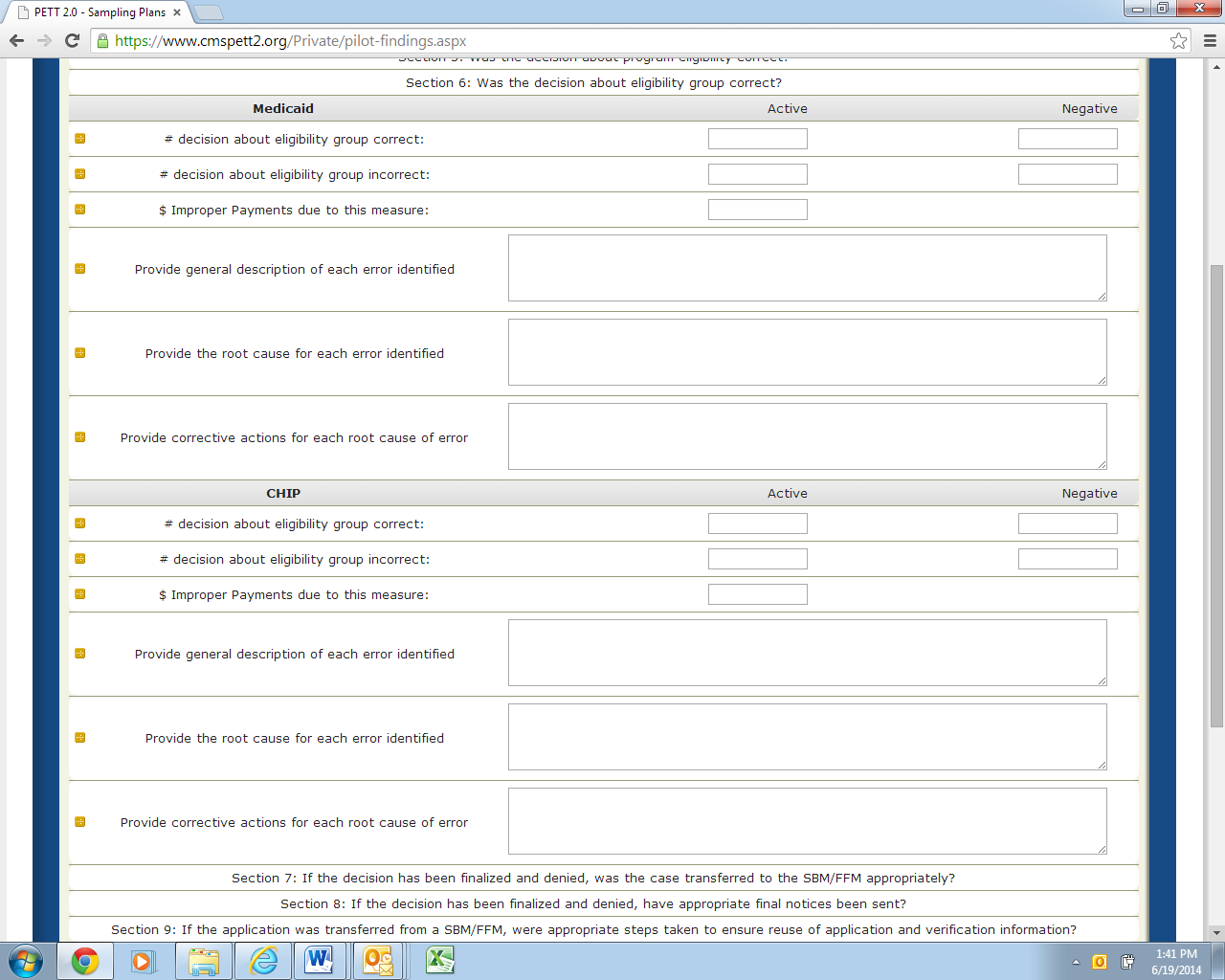

Section 6 – Was the decision about eligibility group correct?

Figure 18 shows the Section 6 required fields. Below, each field is described in detail.

Figure 18: Was the decision about eligibility group correct

Separate subsections for Medicaid and CHIP

Number of decisions about eligibility group correct: Active (number) and Negative (number)

Number of decisions about eligibility group incorrect: Active (number) and Negative (number)

Dollar value of improper payments due to this measure: Active (number--do not include “$”)

Provide a general description of each error identified: (text)

Provide the root cause of each error identified: (text)

Provide corrective actions for each root cause of error: (text)

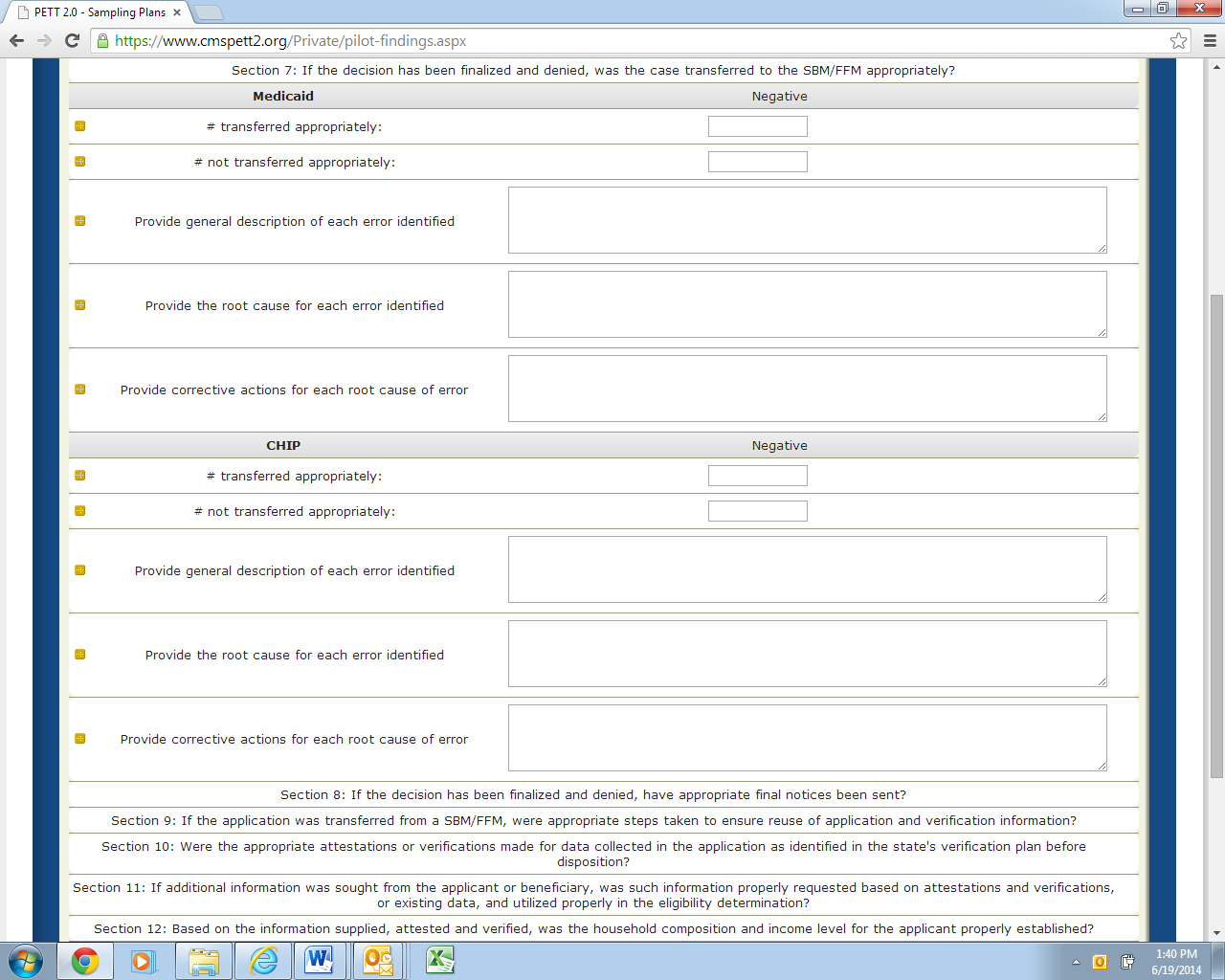

Section 7 – If the decision has been finalized and denied, was the case transferred to the FFM appropriately?

Figure 19 shows the Section 7 required fields. Below, each field is described in detail.

Figure 19: If the decision has been finalized and denied, was the case transferred to the FFM appropriately

Separate subsections for Medicaid and CHIP

Number of cases transferred appropriately: Negative (number)

Number of cases not transferred appropriately: Negative (number)

Provide a general description of each error identified: (text)

Provide the root cause of each error identified: (text)

Provide corrective actions for each root cause of error: (text)

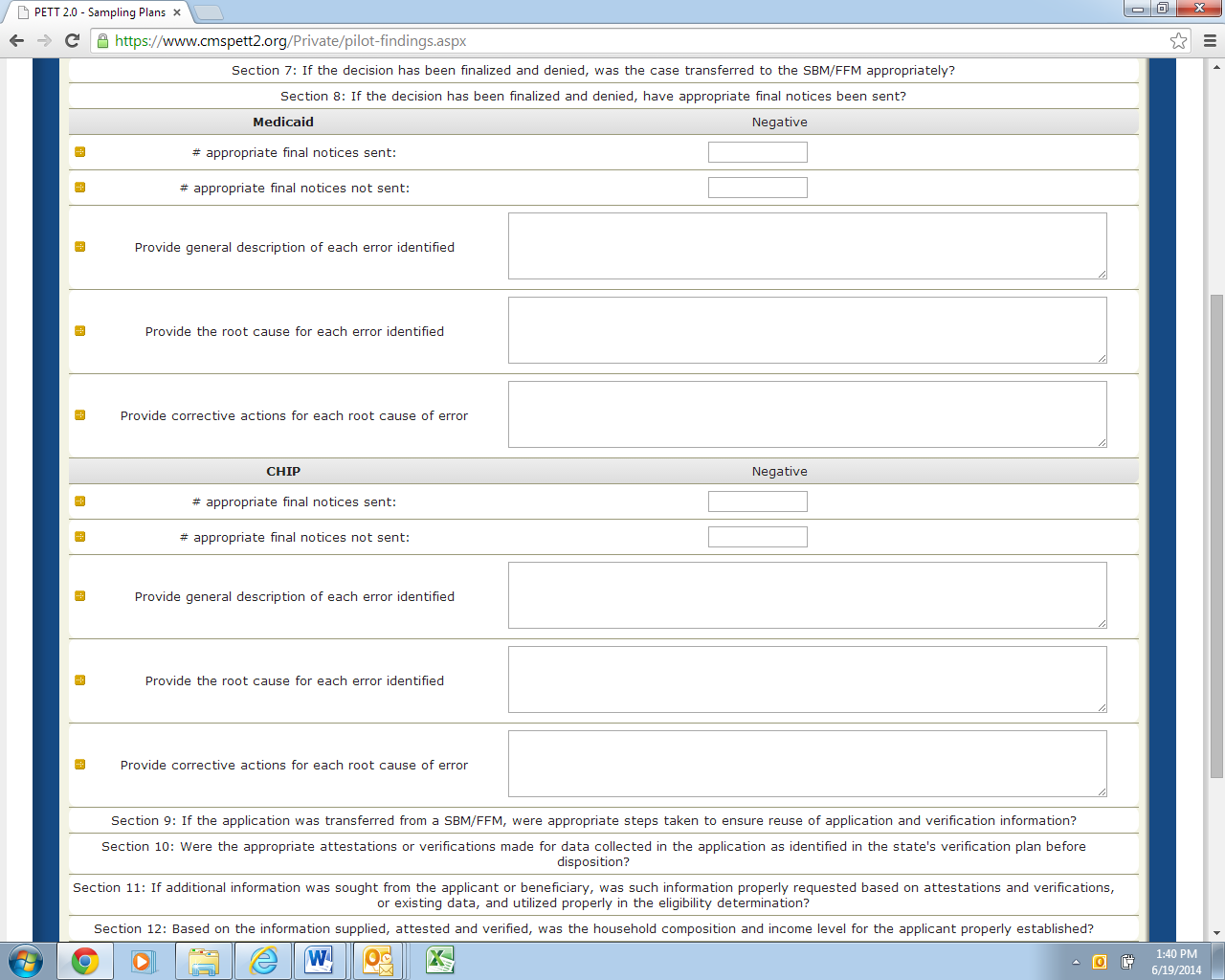

Section 8 – If the decision has been finalized and denied, have appropriate final notices been sent?

Figure 20 shows the Section 8 required fields. Below, each field is described in detail.

Figure 20: If the decision has been finalized and denied, have appropriate final notices been sent

Separate subsections for Medicaid and CHIP

Number of appropriate final notices sent: Negative (number)

Number of appropriate final notices not sent: Negative (number)

Provide a general description of each error identified: (text)

Provide the root cause of each error identified: (text)

Provide corrective actions for each root cause of error: (text)

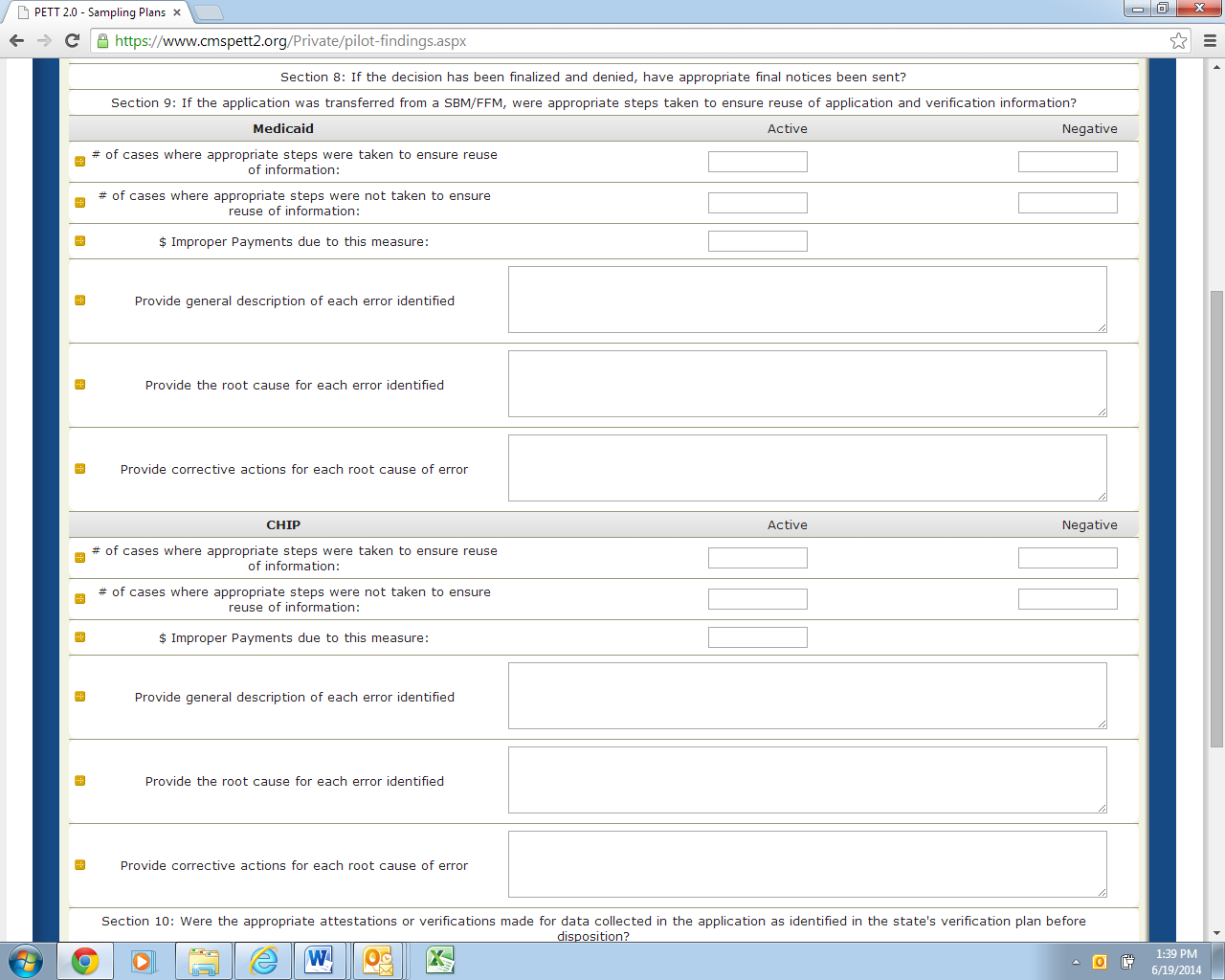

Section 9 – If the application was transferred from a FFM, were appropriate steps taken to ensure reuse of application and verification information?

Figure 21 shows the Section 9 required fields. Below, each field is described in detail.

Figure 21: If the application was transferred from a FFM, were appropriate steps taken to ensure reuse of application and verification information

Separate subsections for Medicaid and CHIP

Number of cases where appropriate steps were taken to ensure reuse of information: Active (number) and Negative (number)

Number of cases where appropriate steps were not taken to ensure reuse of information: Active (number) and Negative (number)

Dollar value of improper payments due to this measure: Active (number--do not include “$”)

Provide a general description of each error identified: (text)

Provide the root cause of each error identified: (text)

Provide corrective actions for each root cause of error: (text)

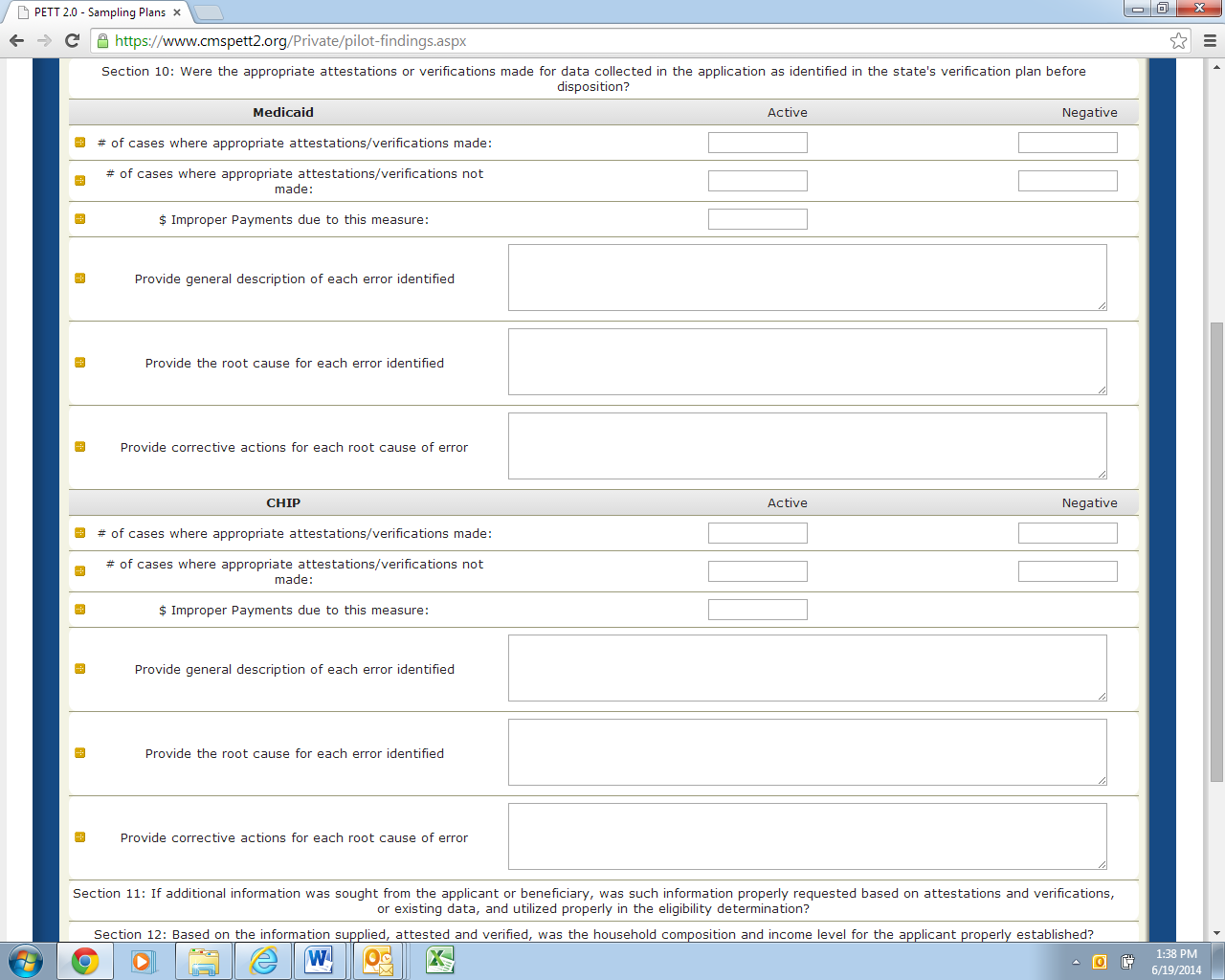

Section 10 – Were the appropriate attestations or verifications made for data collected in the application as identified in the state’s verification plan before disposition?

Figure 22 shows the Section 10 required fields. Below, each field is described in detail.

Figure 22: Were the appropriate attestations or verifications made for data collected in the application as identified in the state’s verification plan before disposition

Separate subsections for Medicaid and CHIP

Number of cases where appropriate attestations/verifications made: Active (number) and Negative (number)

Number of cases where appropriate attestations/verifications not made: Active (number) and Negative (number)

Dollar value of improper payments due to this measure: Active (number--do not include “$”)

Provide a general description of each error identified: (text)

Provide the root cause of each error identified: (text)

Provide corrective actions for each root cause of error: (text)

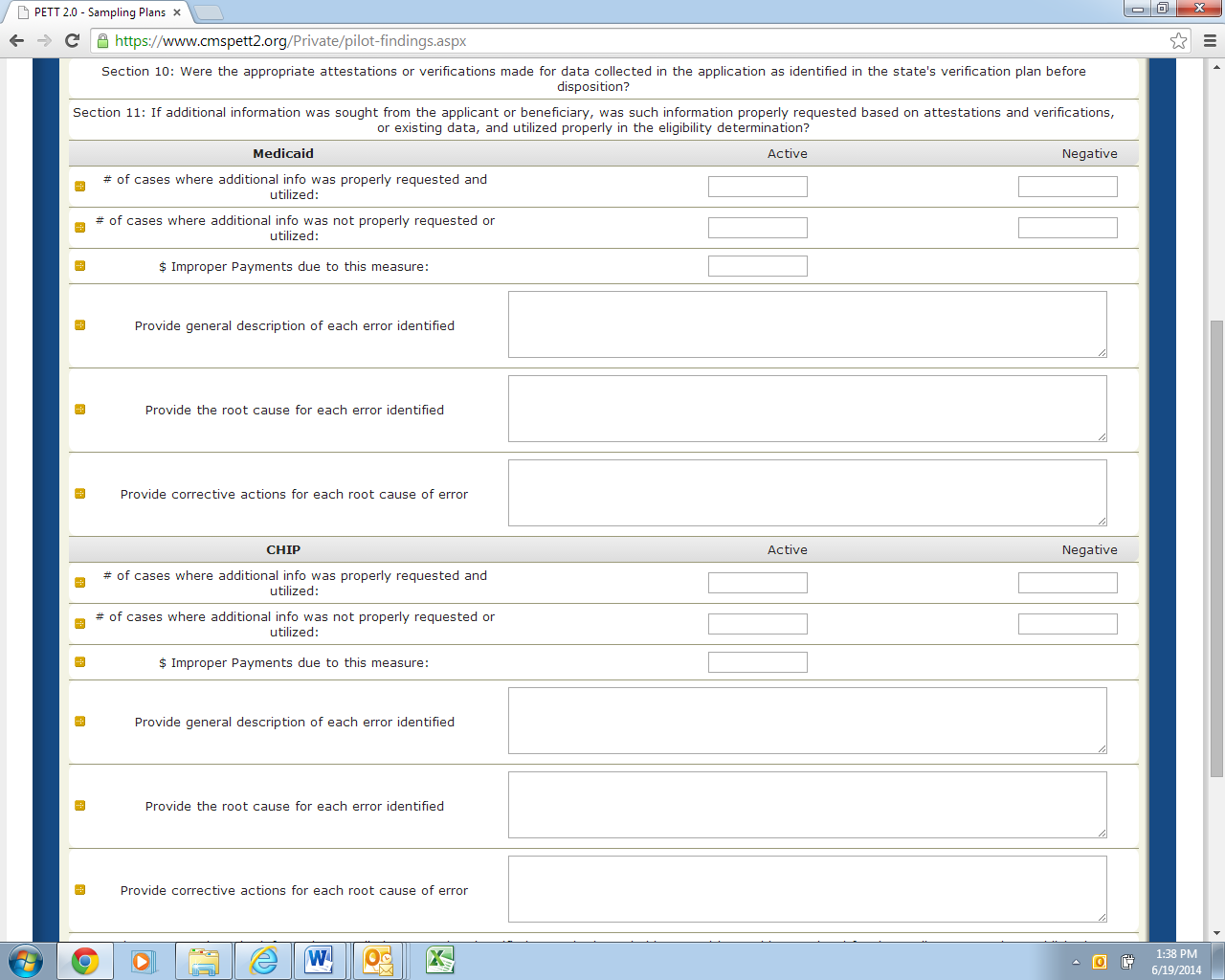

Section 11 – If additional information was sought from the applicant or beneficiary, was such information properly requested based on attestations and verifications, or existing data, and utilized properly in the eligibility determination?

Figure 23 shows the Section 11 required fields. Below, each field is described in detail.

Figure 23: Was additional information sought from the beneficiary properly requested/utilized

Separate subsections for Medicaid and CHIP

Number of cases where additional information was properly requested and utilized: Active (number) and Negative (number)

Number of cases where additional information was not properly requested and utilized: Active (number) and Negative (number)

Dollar value of improper payments due to this measure: Active (number--do not include “$”)

Provide a general description of each error identified: (text)

Provide the root cause of each error identified: (text)

Provide corrective actions for each root cause of error: (text)

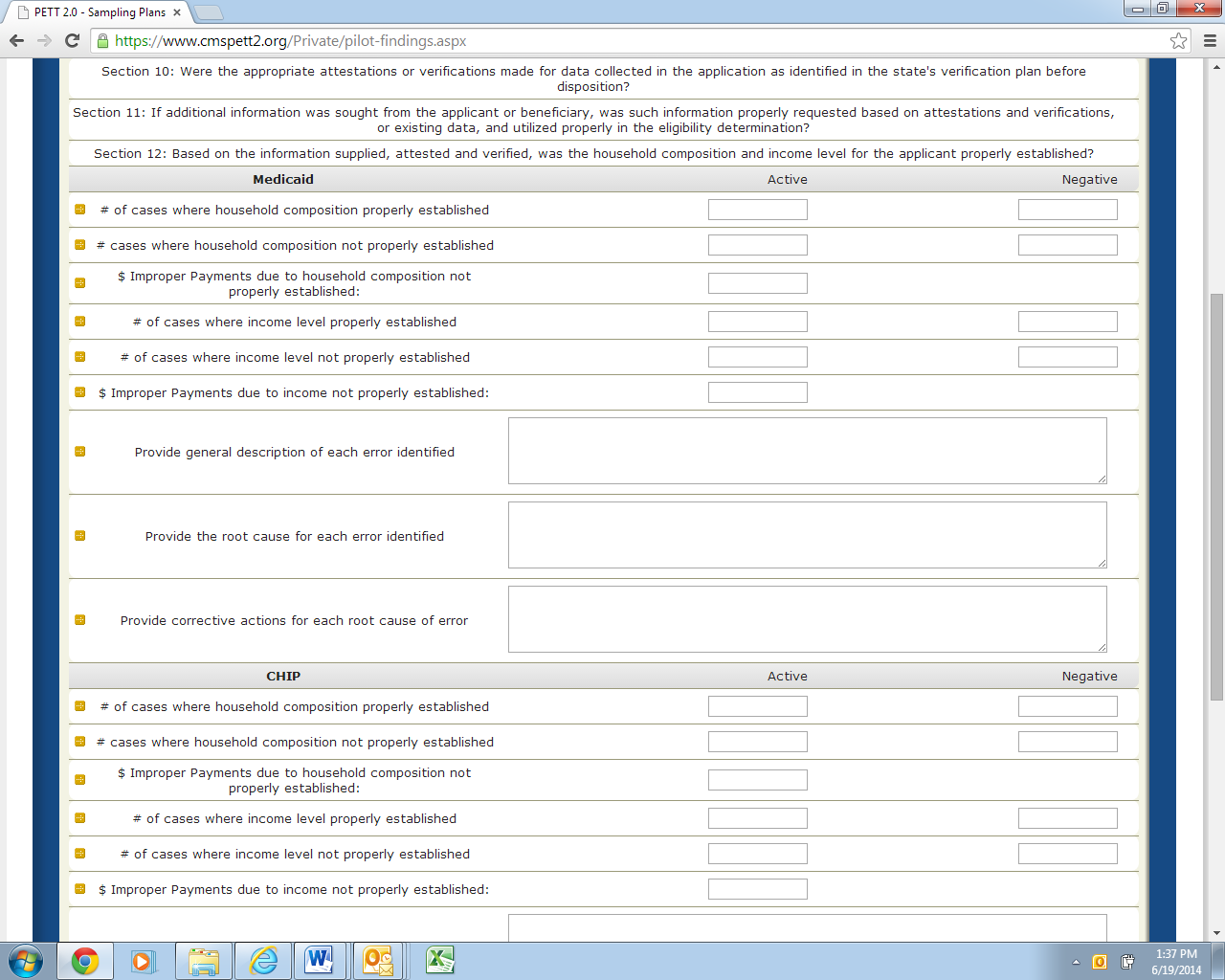

Section 12 – Based on the information supplied, attested and verified, was the household composition and income level for the applicant properly established?

Figure 24 shows the Section 12 required fields. Below, each field is described in detail.

Figure 24: Was the household composition and income level for the applicant properly established

Separate subsections for Medicaid and CHIP

Note: For this measure, states are asked to separately report the number of cases where household composition was/wasn’t properly established (and associated improper payments) and the number of cases where the income level was/wasn’t properly established (and associated improper payments).

Number of cases where household composition properly established: Active (number) and Negative (number)

Number of cases where household composition not properly established: Active (number) and Negative (number)

Dollar value of improper payments due to household composition not properly established: Active (number--do not include “$”)

Number of cases where income level properly established: Active (number) and Negative (number)

Number of cases where income level not properly established: Active (number) and Negative (number)

Dollar value of improper payments due to income not properly established: Active (number--do not include “$”)

Provide a general description of each error identified: (text)

Provide the root cause of each error identified: (text)

Provide corrective actions for each root cause of error: (text)

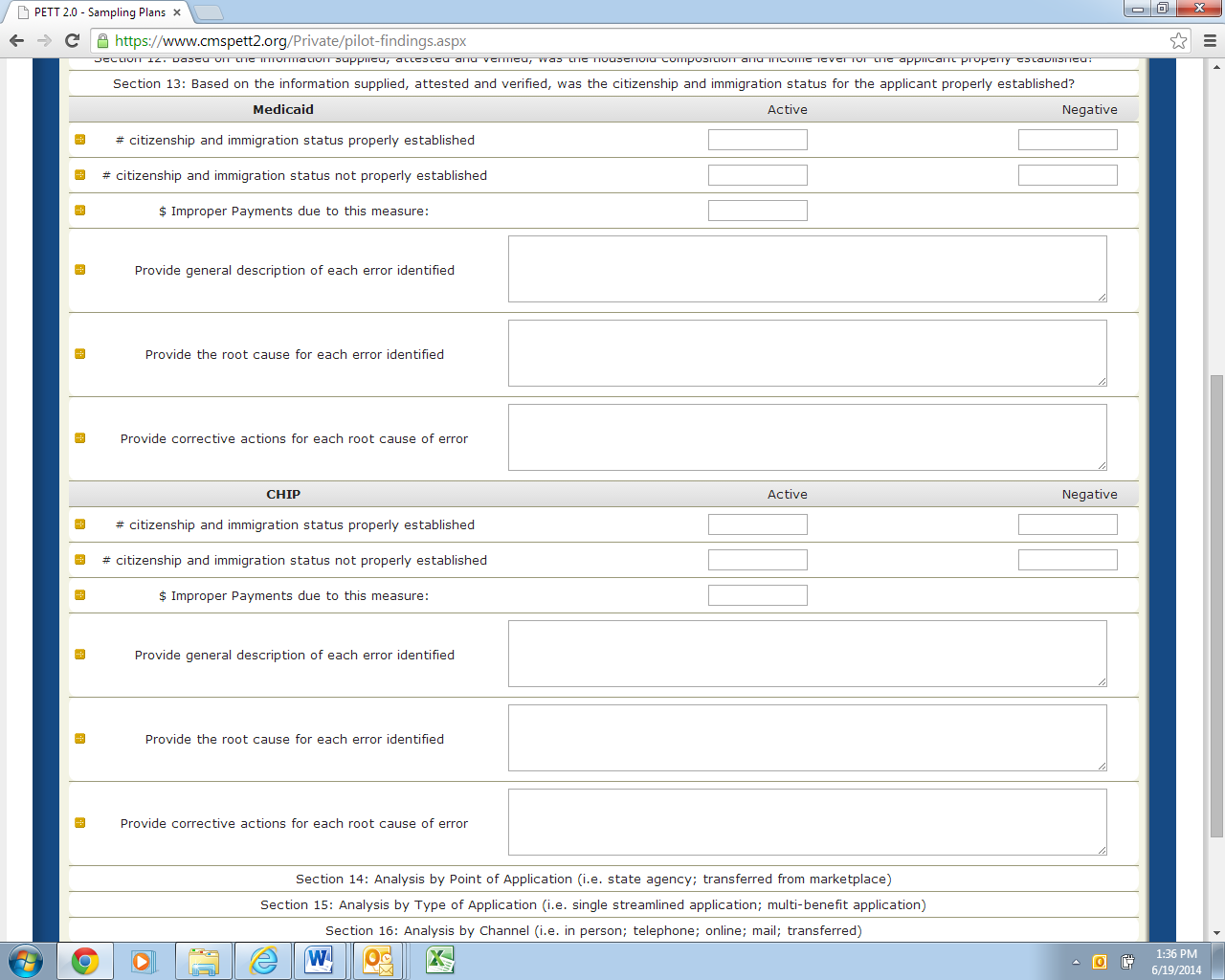

Section 13 – Based on the information supplied, attested and verified, was the citizenship and immigration status for the applicant properly established?

Figure 25 shows the Section 13 required fields. Below, each field is described in detail.

Figure 25: Was the citizenship and immigration status for the applicant properly established

Separate subsections for Medicaid and CHIP

Number of cases where citizenship and immigration status properly established: Active (number) and Negative (number)

Number of cases where citizenship and immigration status not properly established: Active (number) and Negative (number)

Dollar value of improper payments due to citizenship and immigration status not properly established: Active (number--do not include “$”)

Provide a general description of each error identified: (text)

Provide the root cause of each error identified: (text)

Provide corrective actions for each root cause of error: (text)

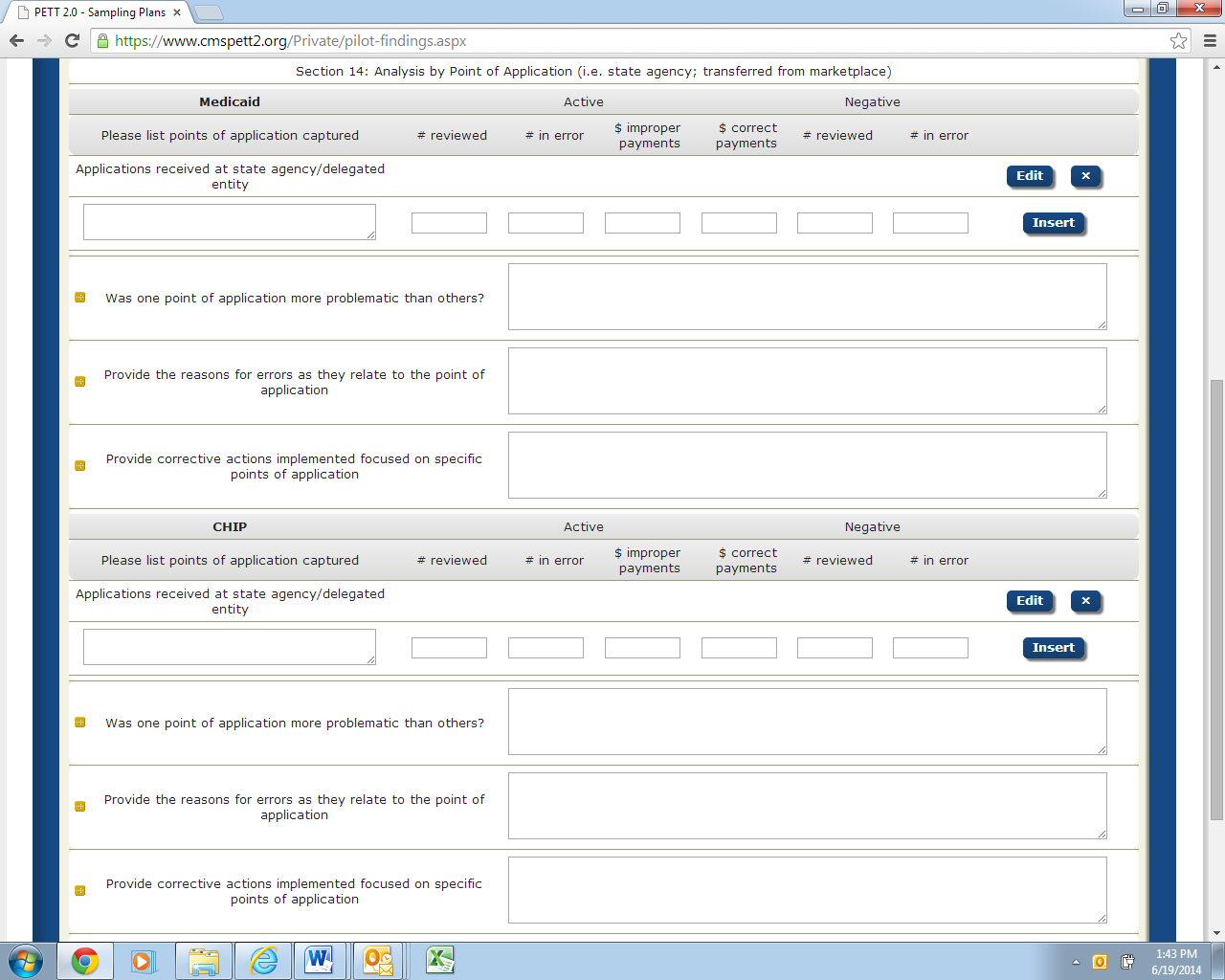

Questions for each of these sections are listed below. Section 14 includes detailed guidance for each field. Sections 15 and 16 request the same level of information as Section 14; the only difference is the area each section addresses. Section 14 asks about Point of Application (i.e., state agency; transferred from marketplace). Section 15 asks about Type of Application (i.e., single streamlined application; multi-benefit application). Section 16 asks about Channel (i.e., in person; telephone; online; mail; transferred). NOTE: In Sections 14-16, states will be reclassifying errors already discussed in Sections 5-13.

Section 14 – Analysis by Point of Application (i.e. state agency; transferred from marketplace)

Figure 26 shows the Section 14 required fields. Below, each field is described in detail.

Figure 26: Analysis by Point of Application

Separate subsections for Medicaid and CHIP

Please list point of application captured: (text)

States should list the points of application (e.g., state agency, transferred from marketplace) that the pilot captured information on. Pilot guidance required that states report analysis on “Applications received at state agency/delegated entity,” so that point of application is prepopulated. Please note that the number of rows in PETT will expand to accommodate as many points of application as the state captured. NOTE: Users should select the “Insert” button for each Point of Application that needs to be entered.

Number of decisions reviewed: Active (number)

The number of cases reviewed for each point of application.

Number of decisions in error: Active (number)

The number of cases in error for each point of application.

Dollar value of improper payments: Active (number--do not include $)

Dollar value of improper payments identified for each point of application.

Dollar value of correct payments: Active (number--do not include $)

States that opted to conduct payment reviews on all sampled cases can record the dollar value of correct payments here for each point of application.

Number of decisions reviewed: Negative (number)

The number of cases reviewed for each point of application.

Number of decisions in error: Negative (number)

The number of cases in error for each point of application.

Was one point of application more problematic than others? (text)

States should provide a discussion of any trends or analysis relevant to the point of application. The state may also indicate that there were no apparent trends.

Provide the reasons for errors as they relate to point of application: (text)

For any error trends or error findings that were associated with the point of application, states should provide a description of the errors and the cause of the errors. If there were no trends or error findings associated with this particular measure, the state can indicate that in this field.

Provide corrective actions implemented focused on specific points of application: (text)

States should provide a general description of any corrective actions implemented that were focused on a particular point of application or any corrective actions implemented to resolve trends or error findings associated with the point of application. If there were no corrective actions implemented relating to this particular measure, the state can indicate that in this field.
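For states that stage their figures before keying them into PETT, the Section 14 row fields described above can be sketched as a small record with basic checks. This is an illustrative sketch only and is not part of PETT 2.0: the class name, the field names, and the rule that decisions in error cannot exceed decisions reviewed are assumptions made for the example.

```python
from dataclasses import dataclass
from decimal import Decimal, InvalidOperation
from typing import List, Optional


def parse_dollars(raw: str) -> Decimal:
    """Parse a dollar field entered as a plain number, without the "$" sign."""
    text = raw.strip()
    if "$" in text:
        raise ValueError('enter the number only; do not include "$"')
    try:
        value = Decimal(text)
    except InvalidOperation:
        raise ValueError(f"not a number: {raw!r}") from None
    if not value.is_finite() or value < 0:
        raise ValueError("dollar value must be a non-negative number")
    return value


@dataclass
class PointOfApplicationRow:
    """One row of the Section 14 table (hypothetical field names)."""
    point_of_application: str
    active_reviewed: int
    active_in_error: int
    improper_payments: Decimal
    negative_reviewed: int
    negative_in_error: int
    # Only for states that conducted payment reviews on all sampled cases.
    correct_payments: Optional[Decimal] = None

    def problems(self) -> List[str]:
        """Return a list of data-entry problems; an empty list means none found."""
        issues = []
        if not self.point_of_application.strip():
            issues.append("point of application is blank")
        counts = (
            ("Active", self.active_reviewed, self.active_in_error),
            ("Negative", self.negative_reviewed, self.negative_in_error),
        )
        for label, reviewed, in_error in counts:
            if reviewed < 0 or in_error < 0:
                issues.append(f"{label}: counts cannot be negative")
            elif in_error > reviewed:
                # Assumed consistency rule, not stated in the guide.
                issues.append(f"{label}: decisions in error exceed decisions reviewed")
        return issues
```

A state would build one such row per point of application, mirroring one use of the “Insert” button in PETT, and review the output of `problems()` before entering the figures.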

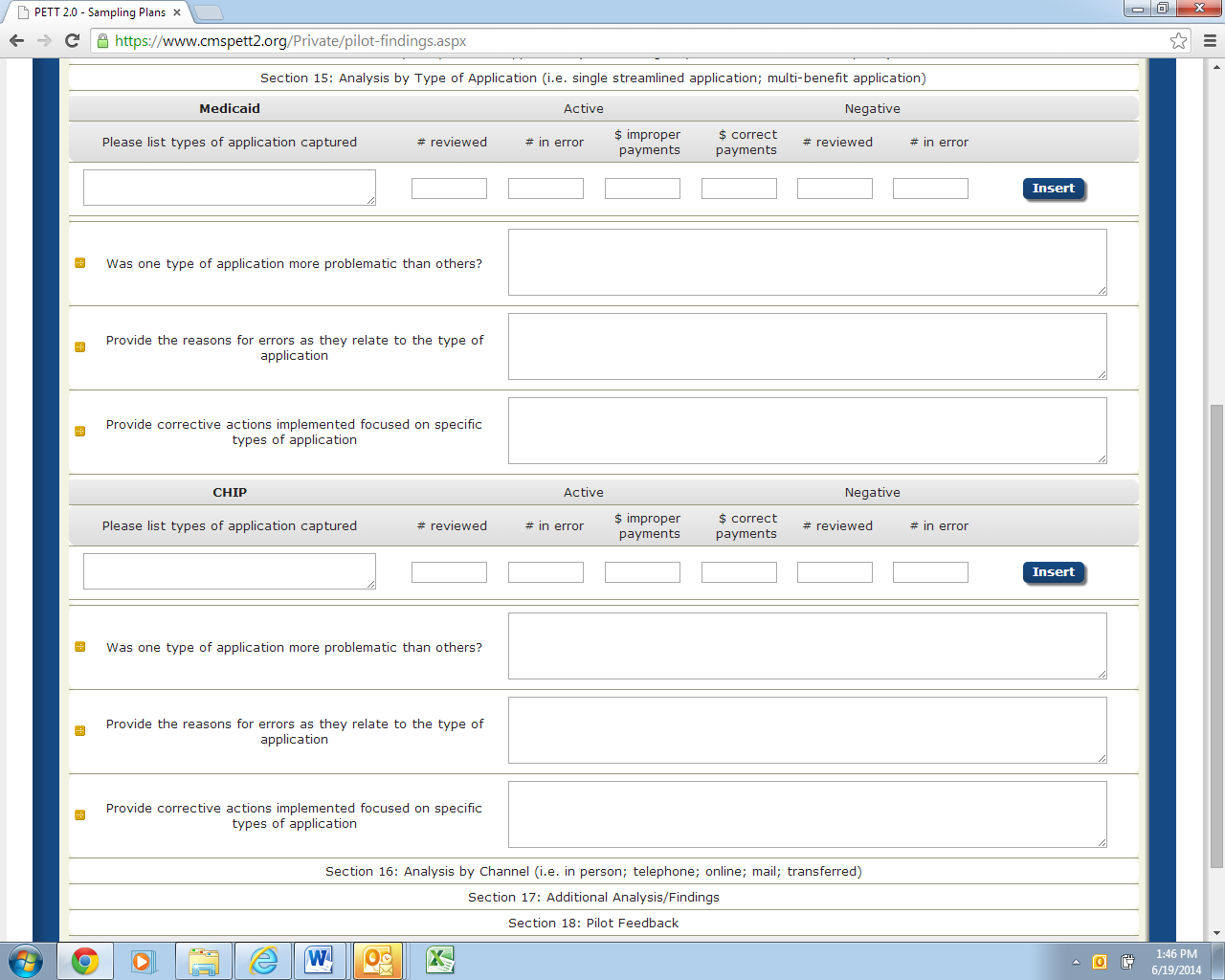

Section 15 – Analysis by Type of Application (i.e. single streamlined application; multi-benefit application)

Figure 27 shows the Section 15 required fields. Below, each field is described in detail.

Figure 27: Analysis by Type of Application

Separate subsections for Medicaid and CHIP

Please list types of application captured: (text)

NOTE: Users should select the “Insert” button for each Type of Application that needs to be entered.

Number of decisions reviewed: Active (number)

Number of decisions in error: Active (number)

Dollar value of improper payments: Active (number--do not include $)

Dollar value of correct payments: Active (number--do not include $)

Number of decisions reviewed: Negative (number)

Number of decisions in error: Negative (number)

Was one type of application more problematic than others? (text)

Provide the reasons for errors as they relate to type of application: (text)

Provide corrective actions implemented focused on specific types of application: (text)

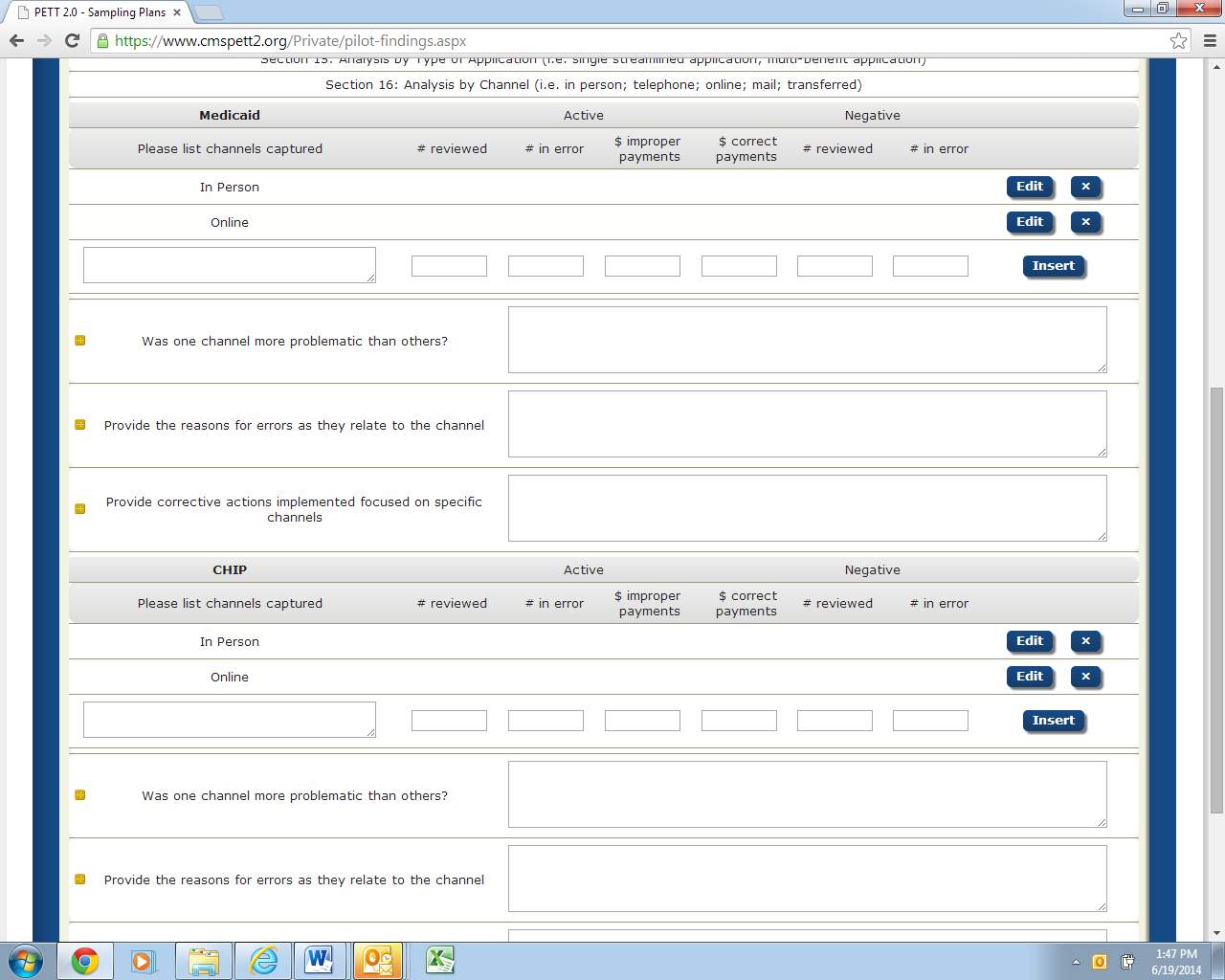

Section 16 – Analysis by Type of Channel (i.e. in person; telephone; online; mail; transferred)

Figure 28 shows the Section 16 required fields. Below, each field is described in detail.

Figure 28: Analysis by Type of Channel

Separate subsections for Medicaid and CHIP

Please list channels captured: (text)

Channels include in person, telephone, online, mail and transferred. The required prepopulated channels are “In Person” and “Online”. NOTE: Users should select the “Insert” button for each Type of Channel that needs to be entered.

Number of decisions reviewed: Active (number)

Number of decisions in error: Active (number)

Dollar value of improper payments: Active (number--do not include $)

Dollar value of correct payments: Active (number--do not include $)

Number of decisions reviewed: Negative (number)

Number of decisions in error: Negative (number)

Was one channel more problematic than others? (text)

Provide the reasons for errors as they relate to channel: (text)

Provide corrective actions implemented focused on specific channels: (text)

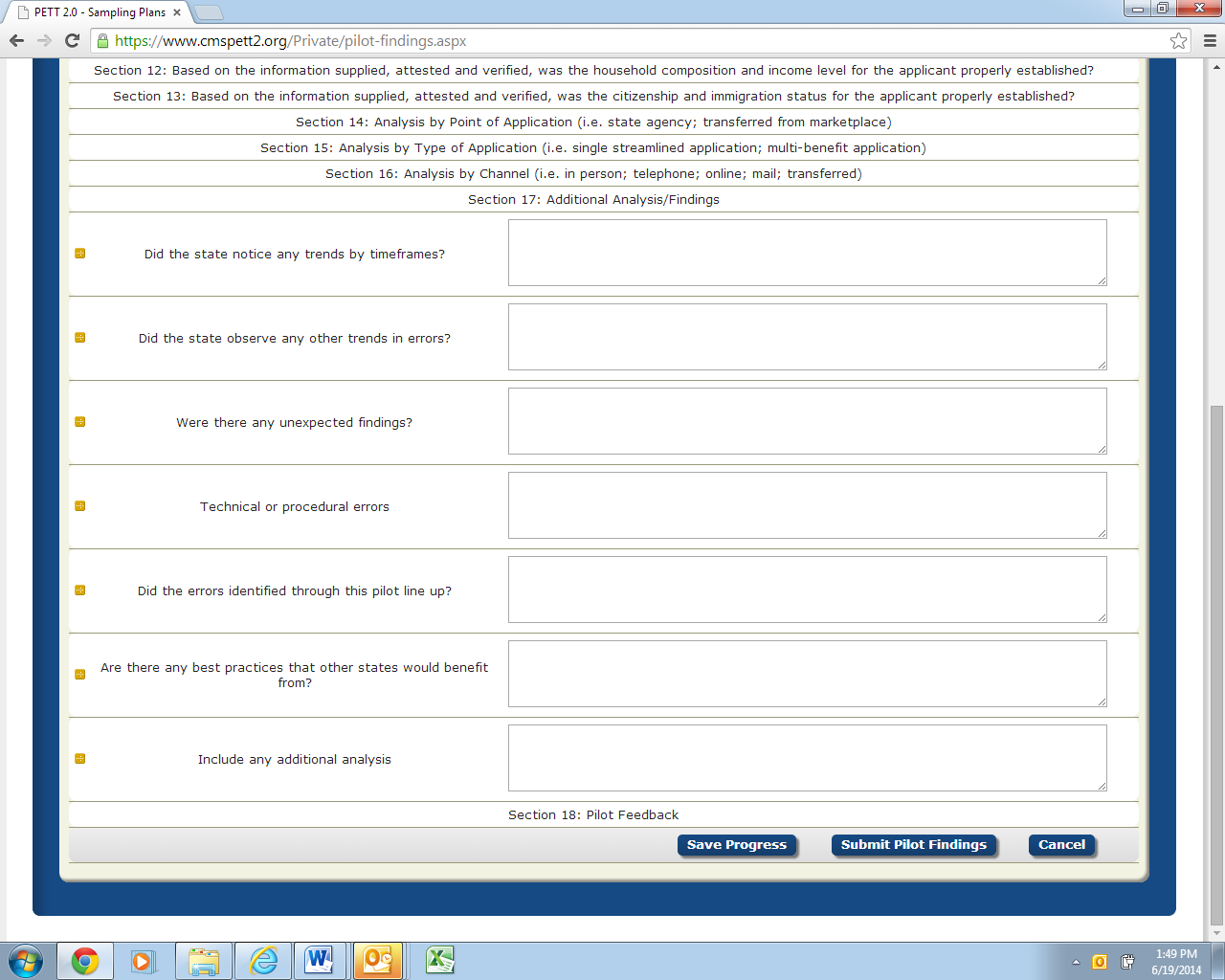

This section is intended to capture any additional information that can be gleaned from the pilot results that was not captured above. States are asked to provide information in each of the fields below. If the question/item is not relevant to the state’s pilot findings, please specify that in the field.

Figure 29 shows the Section 17 required fields. Below, each field is described in detail.

Figure 29: Additional Analysis/Findings

Did the state notice any trends by timeframes? (text)

For example, were October-December findings different than January-March findings? If yes, please describe.

Did the state observe any other trends in errors? (text)

If yes, please describe. Were the trends expected or unexpected?

Were there any unexpected findings? (text)

If yes, please describe.

Technical or procedural errors: (text)

Provide a description of any technical or procedural errors that were identified through the pilot along with actions your state took to address the issues.

Did the errors identified through this pilot line up with previous PERM/MEQC errors identified in your state? (text)

What was different or similar about pilot findings to previous PERM/MEQC findings?

Are there any best practices that other states would benefit from? (text)

For sections that had no or few errors identified, what processes does the state have in place that helped lead to accurate determinations?

Include any additional analysis: (text)

Include any additional analysis, findings and comments the state would like to report.

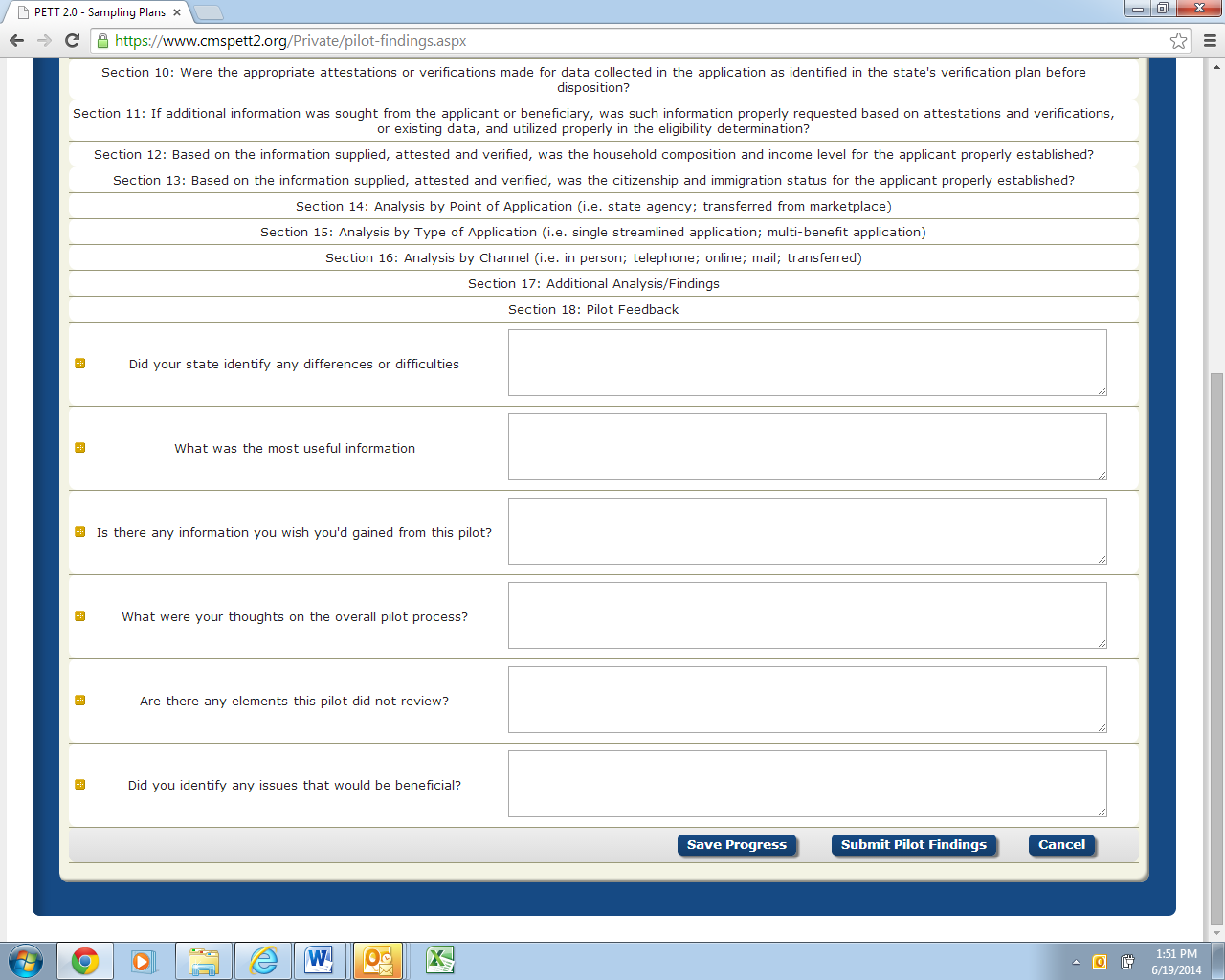

Figure 30 shows the Section 18 required fields. Below, each field is described in detail.

Figure 30: Pilot Feedback

CMS asks that states provide candid feedback on the Round 1 pilots so the pilot process can be improved in future rounds. States are asked to answer the following questions:

Did your state identify any differences or difficulties: (text)

Based on prior experiences with PERM and MEQC, did your state identify any differences or difficulties performing eligibility reviews for MAGI cases?

What was the most useful information? (text)

What was the most useful information you gained from the pilot? What information is most likely to help you improve systems, processes, policies, etc.?

Is there any information you wish you'd gained from this pilot? (text)

What were your thoughts on the overall pilot process? (text)

What did you think about the overall pilot process and what factors would have made the pilot process easier for the state?

Are there any elements this pilot did not review? (text)

Are there any elements this pilot did not review that would be worth including in future pilots?

Did you identify any issues that would be beneficial? (text)

Did you identify any issues that would be beneficial to focus on in future pilots?

Please submit all questions on the content of Pilot Review Findings to [email protected]. Any questions on the use of the PETT 2.0 website should be directed to [email protected].

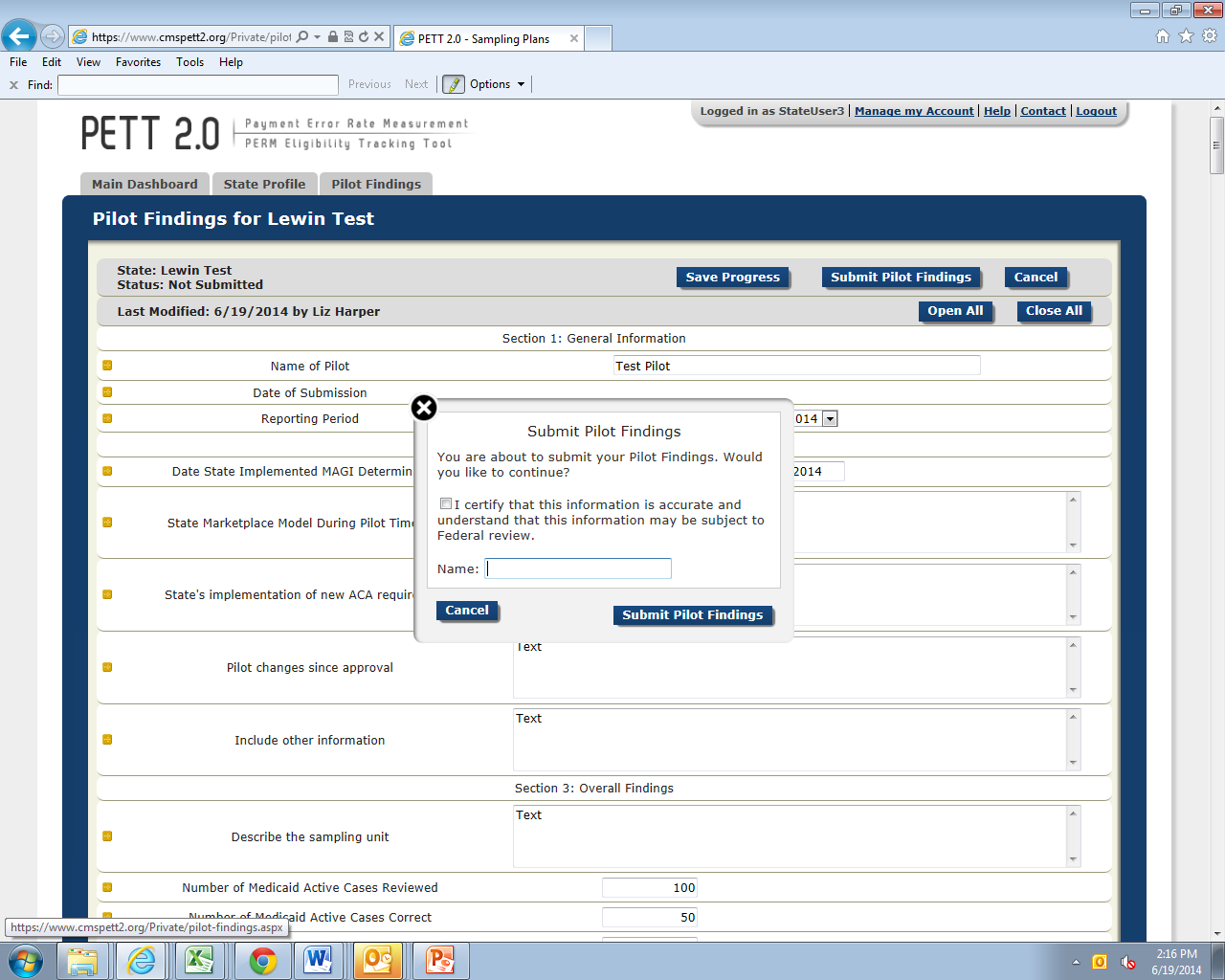

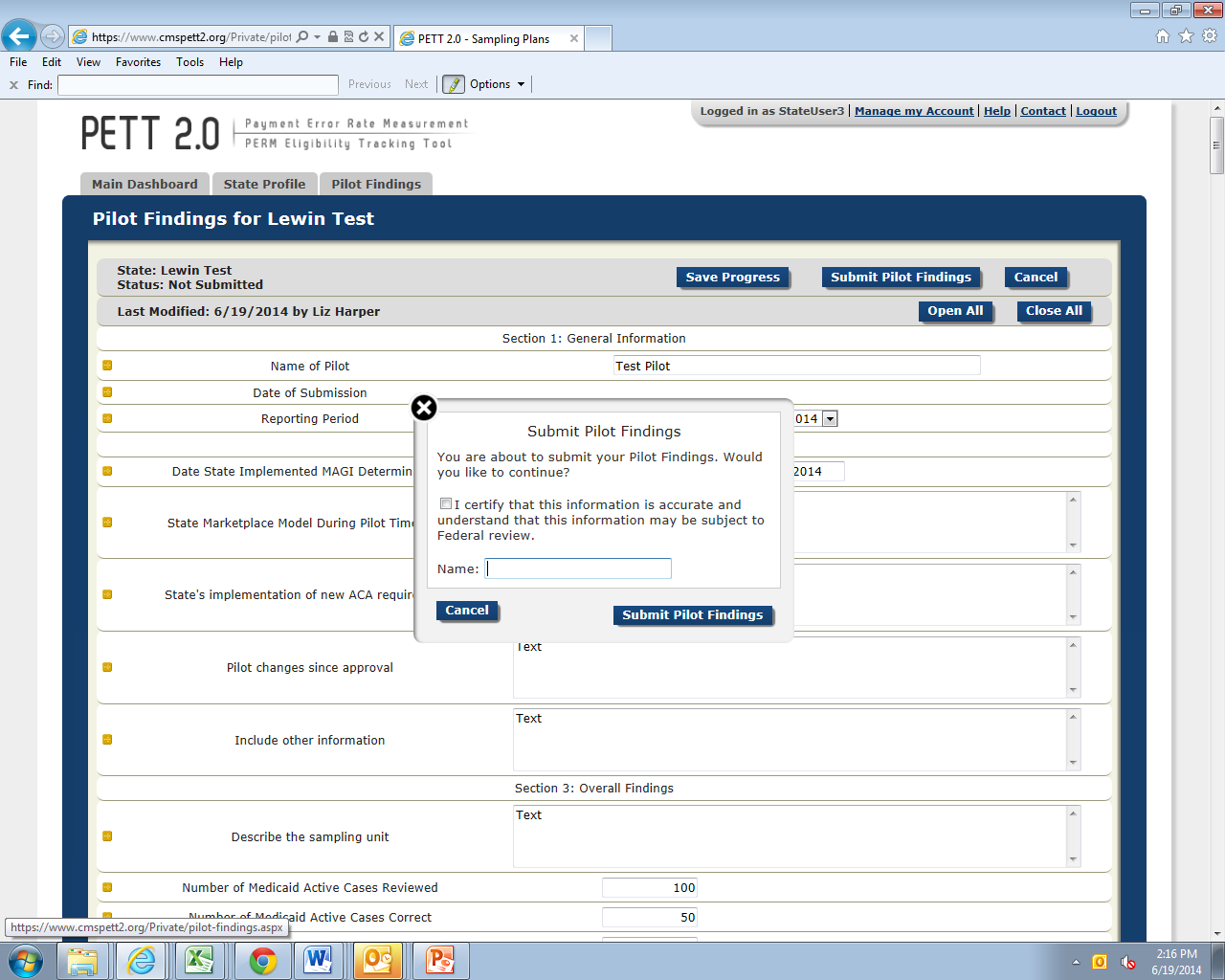

After a state has entered the Round 1 Pilot Findings and confirmed the completeness and accuracy of the reported data, states should click the “Submit Pilot Findings” button, as shown in Figure 31. NOTE: States must be in “Edit” mode to view the “Submit Pilot Findings” button.

Figure 31: Submit Pilot Findings

Prior to selecting the “Submit Pilot Findings” button, users will be required to certify the submitted results as shown in Figure 32. Users must check the box next to the attestation “I certify that this information is accurate and understand that this information may be subject to Federal review.” Users must then enter the name of the person submitting the report. The website will not accept the pilot findings submission unless the results have been certified.

Figure 32: Certification of Results

Upon submission, CMS will be notified that the state has submitted its pilot findings. At that time, the state’s status on the home page will change to “Submitted - Under CMS Review.” CMS will conduct a review of the state’s findings and will provide comments via the PETT 2.0 site.

States will not be able to make any changes to the Round 1 Pilot Findings as long as the status of the report is “Submitted - Under CMS Review.” When CMS has completed its review, CMS will change the status to “Approved” or “Submitted - State Revising” if revisions are needed. State users will receive an email notification when CMS changes the state’s report status.

If CMS approves the state’s pilot findings, users can no longer edit the data. If a state has to make a change to the data, a state user must contact CMS to change the status of the report back to “Submitted - State Revising.”

If CMS has comments on the state’s pilot findings, CMS will change the status of the report back to “Submitted - State Revising.” State users will see the CMS comments in one of five comment boxes as shown in Figure 33. State users should proceed with making updates and edits in response to the CMS comments and resubmit the report for CMS review.

Figure 33: Comments on Round 1 Pilot Findings
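The submission and review cycle described in this section can be summarized as a small status model. The sketch below is illustrative and does not represent the PETT 2.0 implementation. The three quoted status strings come from this guide; the `DRAFT` status name, the actor labels, and the function names are hypothetical, and the sketch assumes that certification is required on resubmission as well as on first submission.

```python
from enum import Enum


class Status(Enum):
    DRAFT = "Draft"  # hypothetical: the guide does not name the pre-submission status
    UNDER_REVIEW = "Submitted - Under CMS Review"
    REVISING = "Submitted - State Revising"
    APPROVED = "Approved"


# Which actor may move a report from a given status, and to which statuses.
TRANSITIONS = {
    ("state", Status.DRAFT): {Status.UNDER_REVIEW},
    ("state", Status.REVISING): {Status.UNDER_REVIEW},
    ("cms", Status.UNDER_REVIEW): {Status.APPROVED, Status.REVISING},
    # After approval, only CMS can reopen the report at the state's request.
    ("cms", Status.APPROVED): {Status.REVISING},
}


def state_can_edit(status: Status) -> bool:
    """State users can edit only before submission or while revising."""
    return status in {Status.DRAFT, Status.REVISING}


def change_status(actor, current, target, certified=False, submitter_name=""):
    """Return the new status, or raise ValueError if the change is not allowed."""
    if target not in TRANSITIONS.get((actor, current), set()):
        raise ValueError(f"{actor} cannot move a report from {current.value} to {target.value}")
    # A state submission is accepted only with the attestation box checked
    # and the name of the person submitting the report entered.
    if actor == "state" and not (certified and submitter_name.strip()):
        raise ValueError("results must be certified and a submitter name entered")
    return target
```

For example, a certified submission moves a report to “Submitted - Under CMS Review,” where `state_can_edit` returns `False` until CMS changes the status to “Submitted - State Revising.”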


1 http://www.cms.gov/Research-Statistics-Data-and-Systems/Monitoring-Programs/Medicaid-and-CHIP-Compliance/PERM/Downloads/Round1MedicaidandCHIPReportingandcorrectiveactionguidance.pdf

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| Author | Kaleigh Beronja |

| File Modified | 0000-00-00 |

| File Created | 2021-01-31 |
