Impact of Driver Compensation on Commercial Motor Vehicle Safety Contract Management Plan

OMB:

The Impact of Driver Compensation on Commercial Motor Vehicle Safety Survey

Contract Management Plan

Scott Fillmon, Perry Jones, and Louis Rabinowitz

Street Legal Industries, Inc.

October 29, 2013

Study Conducted Under Contract to the Federal Motor Carrier Safety Administration

Contract No. DTMC75-12-Q-00022

Abstract

The primary mission of the Federal Motor Carrier Safety Administration (FMCSA) is to reduce crashes, injuries, and fatalities involving large trucks and buses. Toward that end, FMCSA initiated The Impact of Driver Compensation on Commercial Motor Vehicle Safety Survey. The study is being conducted by Street Legal Industries, Inc. This document provides details about the approach that Street Legal proposes to take to complete the study.

Contents

Develop the Concepts, Methods, and Design of the Survey (Survey Planning)

Initial survey design and data collection instrument

Conduct Survey Questionnaire Pilot Study

Obtain IRB approval to collect study data

Prepare collection databases and online collection questionnaire

Determine survey frame and select sample

Complete carrier SMS data to facilitate carrier contact

Submit report describing the carrier frame and carrier safety records

Develop data processing protocol

Distribute survey introductory letter to carriers chosen as part of the sample

Distribute survey participation email

Data collection and follow-up

Submit report on data analysis of survey results

Protocol Cover Sheet

Title: The Impact of Driver Compensation on Commercial Motor Vehicle Safety Survey

Protocol Number: N/A

Date and Version of the Protocol: January 20, 2013, Version 1

IND/IDE Number: N/A

Name and Contact Information:

U.S. Department of Transportation, Federal Motor Carrier Safety Administration

(Sponsoring Organization)

Theresa Hallquist, Research Analyst (FMCSA Contracting Officer Technical Representative)

1200 New Jersey Avenue, SE

Washington, DC 20590

Phone: (202) 366-1064

Street Legal Industries, Inc. (Investigating Organization)

Scott Fillmon, Project Manager

102 Jefferson Court

Oak Ridge, TN 37830

Phone: (210) 284-2298

Names of all institutions that are involved in the research study:

U.S. Department of Transportation, Federal Motor Carrier Safety Administration

Street Legal Industries, Inc.

Introduction

According to the US Department of Transportation’s (DOT) Federal Motor Carrier Safety Administration (FMCSA) Analysis Division publication, Large Truck and Bus Crash Facts 2010 (2012), the number of large trucks involved in fatal crashes, injury crashes, and property-damage-only crashes decreased considerably in the ten-year period from 2000 through 2010. The number of large trucks involved in fatal crashes dropped from 4,995 to 3,484; the number involved in injury crashes decreased from 101,000 to 58,000; and the number involved in property-damage-only crashes dropped from 351,000 to 214,000. During the same ten-year period, the number of buses involved in fatal crashes decreased from 325 to 249 (p. 1).

The significant decline in serious incidents over the period examined in the DOT document is laudable. However, it is noteworthy that “One or more driver-related factors were recorded for 63 percent of the drivers of large trucks involved in single-vehicle fatal crashes and for 27 percent of drivers of large trucks involved in multiple-vehicle fatal crashes” (p. 63). The DOT further reports that the most often cited immediate cause of fatal crashes involving large trucks was speeding (p. 63). These findings suggest that the number of crashes involving large trucks can drop even further if inappropriate driver behaviors can be reduced.

Type of Research

The type of research being conducted by Street Legal Industries, Inc. under contract to the Federal Motor Carrier Safety Administration is social science research.

Problem Statement

Commercial Motor Vehicle (CMV) driver behavior accounts for a large percentage of crashes. In addition, drivers who are involved in crashes and receive citations for unsafe driving are more likely to be involved in future crashes and receive additional citations. While data showing immediate causes of crashes directly attributable to driver behaviors exist, there have been no studies that examine how a variety of underlying variables may lead to the unsafe driving behaviors that cause crashes.

Purpose of the Study

The Federal Motor Carrier Safety Administration (FMCSA) has contracted with Street Legal Industries, Inc. (SLIND, aka the research team), to administer the “Impact of Driver Compensation on Commercial Motor Vehicle Safety Survey” (the study). The primary purpose of the study will be to analyze the possible unintended safety consequences of the various methods by which CMV drivers in the sample are compensated. Should the study show a relationship between the method by which drivers are paid and safe driving performance, a potential benefit of the study will be to identify the method of compensation that minimizes crashes and unsafe driving behaviors, leading to fewer fatalities and injuries. No risks are anticipated as a result of the study.

In addition to the primary purpose of the study, a number of other potentially extraneous variables will be assessed. These variables include the following:

Type of commercial motor vehicle operation (long-haul, short-haul, or line-haul) by size of carrier (very small, small, medium, or large)

Whether for-hire, private, or owner operated and whether the carrier can be characterized as a truckload, less-than-truckload, regional, tanker, or other type of carrier

Number of power units

Average length of haul

Primary commodities carried

Number of regular, full-time drivers the carrier employs

Average driving experience, in years, of drivers working for the companies included in the sample

This data will be used to demonstrate possible relationships of variables as well as determine if the variables may contribute to unintended safety consequences. Unintended safety consequences include driver out-of-service rates, vehicle out-of-service rates, and crash rates. For the purposes of this study, “commercial motor vehicle (CMV)” will refer only to trucks and not include passenger vehicles such as buses.

Definitions of Key Terms

Behavior Analysis and Safety Improvement Categories (BASICS) – Six categories of unsafe driving practices that are used to “rank entities’ performance relative to their peers” (Green & Blower, 2011; John A. Volpe National Transportation Systems Center):

Unsafe Driving BASIC – Operation of CMV in a dangerous or careless manner. Example violations: speeding, reckless driving, improper lane change, and inattention.

Hours of Service (HOS) Compliance BASIC – Operation of CMVs by drivers who are ill, fatigued, or in non-compliance with the hours-of-service (HOS) regulations. This BASIC includes violations of regulations pertaining to records of duty status (RODS) as they relate to HOS requirements and the management of CMV driver fatigue. This BASIC replaces the Fatigued Driving BASIC as of the publication of Carrier Safety Measurement System (CSMS) Methodology in December 2012.

Driver Fitness BASIC – Operation of CMVs by drivers who are unfit to operate a CMV due to lack of training, experience, or medical qualifications. Example violations: failure to have a valid and appropriate commercial driver’s license and being medically unqualified to operate a CMV.

Controlled Substances and Alcohol BASIC – Operation of CMVs by drivers who are impaired due to alcohol, illegal drugs, and misuse of prescription or over-the-counter medications. Example violations: use or possession of controlled substances or alcohol.

Vehicle Maintenance BASIC – CMV failure due to improper or inadequate maintenance. Example violations: brakes, lights, other mechanical defects, and failure to make required repairs.

Improper Loading/Cargo Securement BASIC – CMV incident resulting from shifting loads, spilled or dropped cargo, and unsafe handling of hazardous materials. Example violations: improper load securement, cargo retention, and hazardous material handling.

Commercial Motor Vehicle (CMV) – According to the FMCSA Regulations (2012), Subpart A – General, § 383.5, Definitions, a “motor vehicle or combination of motor vehicles used in commerce to transport passengers or property if the motor vehicle— (1) Has a gross combination weight rating or gross combination weight of 11,794 kilograms or more (26,001 pounds or more), whichever is greater, inclusive of a towed unit(s) with a gross vehicle weight rating or gross vehicle weight of more than 4,536 kilograms (10,000 pounds), whichever is greater; or (2) Has a gross vehicle weight rating or gross vehicle weight of 11,794 or more kilograms (26,001 pounds or more), whichever is greater; or (3) Is designed to transport 16 or more passengers, including the driver; or (4) Is of any size and is used in the transportation of hazardous materials as defined in this section.” For purposes of this study, trucks will be included but buses and other commercial passenger vehicles will not be included.

Crash Indicator – “Histories or patterns of high crash involvement, including frequency and severity… based on information from state-reported crash reports” (John A. Volpe National Transportation Systems Center, Safety Measurement System (SMS) Methodology Draft, 2007).

MCS-150 Form – “The MCS-150 form is technically the application for a USDOT number commonly called a Motor Carrier Identification Report. This is the form a person or entity must use to request a USDOT number before beginning operations and, more importantly, update their MCS-150 information, if they are already in business.” (Morris as cited in North American Transportation Association, 2011). Data provided on MCS-150 forms are maintained in an FMCSA database.

Motor Carrier Management Information System (MCMIS) – According to the Federal Motor Carrier Safety Administration MCMIS Catalog and Documentation webpage, “MCMIS contains information on the safety fitness of commercial motor carriers (truck & bus) and hazardous material (HM) shippers subject to the Federal Motor Carrier Safety Regulations (FMCSR) and the Hazardous Materials Regulations (HMR).”

Pay per Mile System – Compensation system that pays drivers on a per mile basis. Drivers on this system may or may not be paid for loading, unloading, waiting while being loaded, waiting while being unloaded, breakdowns, detention time (waiting to load or unload), or other off-the-road time. Also known as “hub miles,” a reference to a mechanical odometer mounted to an axle that is called a “hubometer.”

Pay per Hour System – Compensation system that pays drivers on a per hour basis. Drivers on this system may or may not be paid for loading, unloading, waiting while being loaded, waiting while being unloaded, breakdowns, detention time (waiting to load or unload), or other off-the-road time.

Pay per Load System – Compensation system that pays drivers a specific amount for each load delivered.

Proportion of Freight Revenue – Compensation system that pays drivers based on a percentage of freight hauled. Typically drivers are not paid for “empty” miles. The driver may receive additional compensation for fuel surcharges, extra pickups or drops, or for “tarping” loads (installing a tarp over cargo).

Safety Consequence – For the purposes of this study, safety consequences include speeding or other traffic law violations or a SAFETYNET reportable crash.

Safety Measurement System – A methodology developed by the John A. Volpe National Transportation Systems Center that “measures the safety of motor carriers and commercial motor vehicle (CMV) drivers to identify and monitor safety problems” (Safety Measurement System (SMS) Methodology Draft, 2007). The SMS “ranks entities’ performance relative to their peers in any of six Behavior Analysis and Safety Improvement Categories (BASICS)” and performs as a “crash indicator.” See definition of Behavior Analysis and Safety Improvement Categories (BASICS) above.

SAFETYNET Reportable Crash – A crash that involves a truck used for commercial purposes, with a gross vehicle weight rating (GVWR) or gross combination weight rating greater than 10,000 pounds, or a commercial bus designed to transport more than eight people, including the driver. The crash must result in at least one fatality, at least one injury involving immediate medical attention away from the crash scene, or at least one vehicle disabled as a result of the crash and transported away from the crash scene. [Ref.: US Department of Transportation, Large Truck and Bus Crash Facts 2010]
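The SAFETYNET reportable crash definition above is rule-based, so it can be expressed as a simple predicate. The following is a minimal sketch only: the `CrashRecord` layout and its field names are hypothetical illustrations and do not reflect the actual SAFETYNET or MCMIS record format.

```python
from dataclasses import dataclass


@dataclass
class CrashRecord:
    """Hypothetical crash record; field names are illustrative assumptions."""
    is_commercial_truck: bool
    weight_rating_lb: int            # GVWR or gross combination weight rating
    is_commercial_bus: bool
    bus_design_capacity: int         # people the bus is designed to carry, driver included
    fatalities: int
    injuries_treated_off_scene: int  # injuries needing immediate care away from the scene
    vehicles_towed_disabled: int     # vehicles disabled and transported from the scene


def is_reportable(crash: CrashRecord) -> bool:
    """Apply the vehicle test and the outcome test from the definition above."""
    qualifying_truck = crash.is_commercial_truck and crash.weight_rating_lb > 10_000
    qualifying_bus = crash.is_commercial_bus and crash.bus_design_capacity > 8
    qualifying_outcome = (
        crash.fatalities >= 1
        or crash.injuries_treated_off_scene >= 1
        or crash.vehicles_towed_disabled >= 1
    )
    return (qualifying_truck or qualifying_bus) and qualifying_outcome
```

Because this study excludes buses, an analysis following this sketch would presumably filter to truck records before applying the outcome test.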

Significance of the Investigation

The study will investigate whether there is a relationship between the way CMV drivers are compensated and incidences of unsafe driving behavior or consequence. In particular, the research team will determine whether the pay-per-mile method of paying drivers is more strongly related to safety issues than other compensation methods. Other characteristics will be assessed to take into account the influence that possible extraneous variables may have on safety. Public policy at the Federal level, particularly related to the Fair Labor Standards Act and how drivers can be compensated, might be affected should such a relationship be shown to exist.

Review of Literature

An ongoing review of related FMCSA studies and related studies, surveys, and reports of Federal and non-Federal sources is being conducted to minimize duplication of efforts, identify best practices of completed projects, identify statistical information and survey questionnaire items that can be repurposed for the current study, and minimize costs to the government and taxpayers. In addition to the review of existing studies, a review of literature in the fields of research design and statistics will inform the design, development, and implementation of the study.

Several resources have informed the research team’s comprehension of the rate of commercial vehicle crashes that has been necessary for framing the current study. These resources reveal the scope of large truck and bus accidents as well as recent safety trends in the CMV industry and common immediate causes of CMV accidents. One such resource is the US Department of Transportation, Federal Motor Carrier Safety Administration, Analysis Division publication Large Truck and Bus Crash Facts 2010 (2012) which provides data such as fatal crash statistics, property damage only (PDO) crash statistics, profiles of drivers involved in crashes, and causes of crashes. A study completed for FMCSA by the University of Michigan Transportation Research Institute, Tracking the Use of Onboard Safety Technologies Across the Truck Fleet (2009), has proven to be a particularly valuable resource. The University of Michigan study uses the Motor Carrier Management Information System (MCMIS) as its survey frame, which is the same frame being employed for the current study. The research team for the current study has also benchmarked the University of Michigan study to conclude that they should anticipate a rather low initial response rate to the survey questionnaire.

Another resource, The Large Truck Crash Causation Study Summary (2007), detailed three major types of critical events leading to truck crashes: running out of the travel lane, loss of vehicle control (for example, due to traveling too fast for conditions), and colliding with the rear end of another vehicle.

The Volpe Center’s Safety Measurement System (SMS) Methodology Draft (2007) and Green and Blower’s “Evaluation of the CSA 2010 Operational Model Test” (2011) both offer valuable definitions of terms and explanations of FMCSA initiatives that will be used in the study. Particularly useful are definitions of the six Behavior Analysis and Safety Improvement Categories (BASICS) and crash indicators, as well as a description of the Safety Measurement System (SMS). Green and Blower also offer evidence that the SMS is an important, reliable tool for mitigating crashes. Further, they constructed scatterplots that show associations between carriers’ BASICs percentiles and crash rates.

Howarth, Alton, Arnopolskay, Barr, and Di Domenico prepared the FMCSA technical report “Driver Issues: Commercial Motor Vehicle Safety Literature Review” (2007). While the review found several studies detailing a relationship between pay and safety, most focused on amount of pay or other wage incentives rather than method of compensating CMV drivers (for example, an article by Daniel Rodriguez, Felipe Targa, and Michael Belzer (2006) titled “Pay Incentives and Truck Driver Safety: A Case Study”). However, the review yielded two notable studies that do offer some insight into the correlation between how drivers are compensated and accident rates. Both of the articles are by Kristen Monaco and Emily Williams. In “Accidents and Hours-of-Service Violations Among Over-the-Road Drivers” (2001), the authors found that “Drivers paid by the hour are roughly half as likely as those paid by the mile to doze or fall asleep at the wheel. Hours of sleep is also negatively related to the likelihood of falling asleep at the wheel. Sleeping an additional hour makes a driver 0.85 times as likely to fall asleep at the wheel.” In the second article authored by Monaco and Williams, “Assessing the Determinants of Safety in the Trucking Industry,” they reported that “Drivers paid by percentage of revenue reported a higher percentage of accidents, moving violations, and logbook violations (18%, 38%, and 63%, respectively) than those paid by the mile (13%, 27%, and 55%, respectively).” While this is counter to the hypothesis for the current FMCSA study, the authors go on to point out that “This is not surprising because a driver who is paid by the mile typically gets paid the same amount per mile regardless of the revenue generated by the load (exceptions being premiums paid for hazardous materials, etc.). Drivers who are paid a percentage of revenue, primarily owner-operators, tend to drive more miles and run more loads in order to compensate for any empty or low-revenue loads.”

The research team has reviewed a number of reports resulting from studies that have been conducted for the FMCSA in order to learn more about research designs, report formats, and other characteristics that are typical of studies completed for the agency. Notable examples include “The Impact of Driving, Non-Driving Work, and Rest Breaks on Driving Performance in Commercial Motor Vehicle Operation” (2011), “Efficacy of Web-Based Instruction to Provide Training on Federal Motor Carrier Safety Regulations” (2011), “Improving Heavy-Duty Diesel Truck Ergonomics to Reduce Fatigue and Improve Driver Health and Performance” (Fu, Calgano, Davis, Boulet, & Wasserman, 2010), and “Weather and Climate Impacts on Commercial Motor Vehicle Safety” (2011).

Insight about the preponderance of the pay-per-mile approach to compensating CMV drivers is provided in The Transportation Research Board of the National Academies Transportation Research Circular No. E-C146, Trucking 101: An Industry Primer (Burks, Belzer, Kwan, Pratt, and Shackelford, 2010). The authors cite both a University of Michigan Trucking Industry Program Truck Driver Survey that found that 67% of all over-the-road drivers earn mileage-based pay and a driver compensation study by ATA that revealed that 82% of all team drivers and 60% of all solo drivers are paid per mile.

A number of Federal Government resources have been reviewed by the research team. For instance, the research team consulted the Final Information Quality Bulletin for Peer Review (2004) issued by the Director of the Office of Management and Budget (OMB) when drafting the approach for the initial and final peer reviews that are required for the study and appear in this document. Another OMB resource, Standards and Guidelines for Statistical Surveys (2006), has also been reviewed to ensure compliance with requirements detailed in the Standards. Other resources consulted by the research team to ensure compliance with regulations regarding research include the Confidential Information Protection and Statistical Efficiency Act of 2002, Privacy Act of 1974, Code of Federal Regulations Title 45, Public Welfare, Department of Health and Human Services, Part 46, Protection of Human Subjects (2009), and E-Government Act of 2002.

Finally, the resources that have been of particular value in developing the management plan for the project include the General Accounting Office’s (GAO) Report to Program and Methodology Division, Quantitative Analysis: An Introduction (1992) and the Statistics Canada publication Survey Methods and Practices (2010).

Hypotheses

Null hypothesis (H0): The proportion of unsafe driving behaviors is the same for all methods of driver compensation.

Alternative hypothesis (H1): The proportion of unsafe driving behaviors varies depending on method of driver compensation.
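One conventional way to test hypotheses of this form is a chi-square test of homogeneity of proportions. The sketch below is offered as an illustration only; the plan does not name a specific test at this point, and the compensation groups and carrier counts shown are hypothetical placeholders, not study data.

```python
def chi_square_homogeneity(counts):
    """Return (statistic, degrees_of_freedom) for a k-by-2 table.

    counts maps each compensation method to a pair
    (carriers_with_unsafe_behavior, carriers_without).
    """
    rows = list(counts.values())
    row_totals = [sum(row) for row in rows]
    col_totals = [sum(row[j] for row in rows) for j in range(2)]
    grand_total = sum(row_totals)

    statistic = 0.0
    for row, row_total in zip(rows, row_totals):
        for j in range(2):
            # Expected count if H0 holds (same proportion in every group)
            expected = row_total * col_totals[j] / grand_total
            statistic += (row[j] - expected) ** 2 / expected
    return statistic, (len(rows) - 1) * (2 - 1)


# Standard chi-square critical values at the 0.05 significance level
CRITICAL_05 = {1: 3.841, 2: 5.991, 3: 7.815, 4: 9.488}

# Hypothetical counts for illustration only
example = {
    "per_mile": (30, 70),
    "per_hour": (20, 80),
    "per_load": (25, 75),
    "pct_revenue": (25, 75),
}
stat, df = chi_square_homogeneity(example)
reject_h0 = stat > CRITICAL_05[df]
```

H0 is rejected in favor of H1 only when the statistic exceeds the critical value for the relevant degrees of freedom. A test of this kind assumes adequately large expected counts in every cell and does not by itself adjust for the extraneous variables listed in the Purpose section.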

Methodology

The review of literature in this document reveals that considerable research has been done to identify and characterize immediate causes of crashes involving commercial motor vehicle drivers, including unsafe driver behaviors that have resulted in crashes and reportable safety violations. The research team will survey a stratified random sample of CMV carriers to collect data related to characteristics (variables) listed in the Purpose section of this document. Once the data are collected from the carriers, they will be correlated with the carriers’ safety performance.

The methodology the research team will use to execute the study includes the tasks in Exhibit I, Tasks to be Administered to Complete the Study. These tasks, and the order in which they are administered, are consistent with the FMCSA Request for Quote No. DTMC75-12-Q-00022 (the RFQ) and Office of Management and Budget (OMB) Standards and Guidelines for Statistical Surveys. Each of the tasks and their requisite subtasks will be described in the following sections of this document.

Exhibit I: Tasks to be Administered to Complete the Study

Develop the concepts, methods, and design of the survey

Conduct initial peer review

Initial survey design and data collection instrument

Conduct survey questionnaire pilot study

Obtain IRB approval to collect study data

Submit application to OMB for approval

Prepare collection databases and online collection tool

Conduct Survey

Determine survey frame and select sample

Complete carrier SMS data to facilitate carrier contact

Submit report describing survey frame and carrier safety records

Develop data processing protocol

Distribute survey introductory letter to carriers chosen as part of the sample

Collect data

Process and edit data

Analyze data

Submit report on data analysis of survey results

Gather feedback from stakeholders

Write draft final report

Conduct final peer review

Provide final briefing

Submit final report

Develop the Concepts, Methods, and Design of the Survey (Survey Planning)

Justification and potential users. FMCSA has initiated this study in order to determine if the pay per mile approach to compensating commercial motor vehicle drivers contributes to safety violations leading to risk of injury or death of CMV drivers and/or other motorists. Specific safety violations that will be examined include speeding and other motor vehicle traffic law violations; reportable crashes that result in fatalities, injuries, or damages to commercial vehicles resulting in their being disabled and having to be removed from the crash scene; violations of hours-of-service regulations; and vehicle out-of-service and/or driver out-of-service status.

Goal and objectives. Consistent with the RFQ authorizing the study, the primary mission of the Federal Motor Carrier Safety Administration (FMCSA) is to reduce crashes, injuries and fatalities involving large trucks and buses. Toward that end, FMCSA commissions studies to identify the causes of crashes and to influence policy to mitigate those causes. The goal of the study described herein is to characterize the industry practices with respect to driver compensation and determine the effect on safety (FMCSA Request for Quote No. DTMC75-12-Q-00022, 2012).

The objectives detailed in the RFQ that support the FMCSA mission include the following:

Objective 1: Complete a survey of trucking companies to determine method of driver compensation and collect other data necessary for executing the study described herein.

Objective 2: Evaluate the impact of variables being studied on CMV safety.

Objective 3: Assess the safety implications of the variables being studied and implications for requirements of the Fair Labor Standards Act.

Decision the study is designed to inform. According to the RFQ, policy decisions may be contingent on the study’s findings:

To explore the relationship between a number of variables and safety, FMCSA will work with other Federal agencies and the Transportation Research Board (TRB) to assess the safety implications of the variables.

Key survey estimates and the precision required of the estimates. The research team will base estimates on a stratified random sample of non-passenger CMV carriers selected from the Motor Carrier Management Information System (MCMIS) database that includes records for more than 730,000 CMV carriers. The estimate will be at a 95% confidence level.
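To illustrate how a sample size for a proportion at a 95% confidence level might be derived and spread across strata, the following minimal sketch applies the standard formula with a finite population correction and then a proportional allocation. Several inputs are assumptions not stated in this plan: the 5-percentage-point margin of error, the conservative proportion p = 0.5, the use of proportional allocation, and the stratum counts, which are hypothetical and merely sum to the approximate MCMIS frame size of 730,000.

```python
import math


def required_sample_size(population, margin=0.05, z=1.96, p=0.5):
    """Completed responses needed to estimate a proportion.

    z = 1.96 corresponds to a 95% confidence level; margin and p are assumed.
    """
    n0 = (z ** 2) * p * (1 - p) / margin ** 2   # infinite-population size
    n = n0 / (1 + (n0 - 1) / population)        # finite population correction
    return math.ceil(n)


def proportional_allocation(strata_sizes, n):
    """Split n across strata in proportion to stratum size (largest remainder)."""
    total = sum(strata_sizes.values())
    exact = {name: n * size / total for name, size in strata_sizes.items()}
    alloc = {name: math.floor(value) for name, value in exact.items()}
    leftover = n - sum(alloc.values())
    # Give the remaining units to the strata with the largest fractional parts
    by_fraction = sorted(exact, key=lambda name: exact[name] - alloc[name], reverse=True)
    for name in by_fraction[:leftover]:
        alloc[name] += 1
    return alloc


# Hypothetical stratum counts by carrier size (illustration only)
strata = {"very_small": 500_000, "small": 150_000, "medium": 60_000, "large": 20_000}
n = required_sample_size(sum(strata.values()))
allocation = proportional_allocation(strata, n)
```

The result is the number of completed responses, not the number of carriers to contact. If a low response rate is anticipated, the number of carriers drawn would need to be inflated accordingly, and smaller strata may warrant oversampling if stratum-level estimates are required.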

Conduct initial peer review

Two peer reviews are required for the study: an initial review to gather input from subject matter experts regarding the study’s methodology, and a second peer review of the draft final report. This section of the plan describes the initial peer review; the second peer review is covered in a later section of this document.

Compliance issues. The study being administered by the research team is covered by the Final Information Quality Bulletin for Peer Review (the Bulletin) issued by the Director of the Office of Management and Budget (OMB) on December 16, 2004. The bulletin “includes guidance to federal agencies on what information is subject to peer review, the selection of appropriate peer reviewers, opportunities for public participation, and related issues. The bulletin also defines a peer review planning process that will permit the public and scientific societies to contribute to agency dialogue about which scientific reports merit especially rigorous peer review.”

The Bulletin distinguishes between “influential scientific information” and “highly influential scientific assessments.” The latter are subject to more stringent peer review requirements. According to the Bulletin, “the term ‘influential scientific information’ means scientific information the agency reasonably can determine will have or does have a clear and substantial impact on important public policies or private sector decisions.” Further, “the term ‘scientific assessment’ means an evaluation of a body of scientific or technical knowledge, which typically synthesizes multiple factual inputs, data, models, assumptions, and/or applies best professional judgment to bridge uncertainties in the available information. These assessments include, but are not limited to, state-of-science reports; technology assessments; weight-of-evidence analyses; meta-analyses; health, safety, or ecological risk assessments; toxicological characterizations of substances; integrated assessment models; hazard determinations; or exposure assessments.” The Bulletin treats a scientific assessment as highly influential if its dissemination “could have a potential impact of more than $500 million in any year” or “is novel, controversial, or precedent-setting or has significant interagency interest.” Peer reviews executed for highly influential scientific assessments must meet all of the guidelines prescribed in the Bulletin for influential scientific information as well as the more stringent guidelines.

While the guidelines in the Bulletin are both prescriptive and rigorous, they also allow for some leeway for draft documents that are not intended as official disseminations: “In cases where a draft report or other information is released by an agency solely for purposes of peer review, a question may arise as to whether the draft report constitutes an official ‘dissemination’ under information-quality guidelines. Section I instructs agencies to make this clear by presenting the following disclaimer in the report:

THIS INFORMATION IS DISTRIBUTED SOLELY FOR THE PURPOSE OF PRE-DISSEMINATION PEER REVIEW UNDER APPLICABLE INFORMATION QUALITY GUIDELINES. IT HAS NOT BEEN FORMALLY DISSEMINATED BY [THE AGENCY]. IT DOES NOT REPRESENT AND SHOULD NOT BE CONSTRUED TO REPRESENT ANY AGENCY DETERMINATION OR POLICY.”

Peer review tasks. A number of valuable resources for conducting peer reviews consistent with the OMB Bulletin have been reviewed by the research team. One document that has been reviewed, Peer Review Process Guide: How to Get the Most out of Your TMIP Peer Review, prepared by the John A. Volpe National Transportation Systems Center, includes a useful table entitled “Tasks in the TMIP Peer Review Process.” While many of the tasks featured in the table are specific to the TMIP (Travel Model Improvement Program) peer reviews, the format of the table and many of the tasks listed provide a template for preparing a table for use in the Impact of Driver Compensation on Commercial Motor Vehicle Safety study. Exhibit II, “Tasks in the Impact of Driver Compensation on Commercial Vehicle Safety Study,” below lists peer review tasks and the parties responsible for completing those tasks.

Exhibit II: Tasks in the Impact of Driver Compensation on Commercial Vehicle Safety Study

| Task | SLIND Research Team | FMCSA Staff | Peer Panelists |
| --- | --- | --- | --- |
| Identify peer review goals | O | R | O |
| Plan peer review meeting | R | R | O |
| Choose panelists | C | R | O |
| Develop agenda | R | A | C |
| Invite attendees | C | R | O |
| Identify specific issues and questions for peer panelists to address | R | A | C |
| Prepare and distribute background material | R | A | O |
| Complete meeting logistics | C | A | O |
| Develop presentations for peer review meeting | R | A | O |
| Conduct pre-peer review conference call | R | C | C |
| Host meeting | TBD | TBD | O |
| Present study details | R | C | O |
| Take notes | R | O | R |
| Develop and present recommendations | O | O | R |
| Document meeting | R | O | O |
| Write first draft of report; distribute to peers and FMCSA for review | R | O | O |
| Send report comments to SLIND | O | R | R |
| Incorporate changes | R | C | C |
| Submit final report to peers and FMCSA for approval | R | A | A |
| Analyze recommendations; develop implementation plan | R | A | C |
| Conduct post-peer review conference call | R | C | C |
| Evaluate progress | R | C | C |

Legend: C = Consult (before decision), A = Approve, R = Responsible, O = No role

Plan peer review meeting. As per the project SOW:

The Contractor’s Research Team will work together with FMCSA to plan the peer review meeting (location, time, duration, and agenda).

It is anticipated that the planning process will be completed via telephone conference calls between the research team and FMCSA.

Choose panelists. FMCSA will select the subject matter experts who will constitute the peer review panel. It is anticipated that the panel will include, but not necessarily be limited to, individuals with expertise in research design, statistical analysis, the Fair Labor Standards Act, and the commercial motor carrier industry.

Develop agenda. The research team and FMCSA will determine whether the panel review meeting can be completed in one day or whether a two-day meeting will be necessary. As detailed in the Volpe Peer Review Process Guide, this decision will be based on “the goals, scope, and panelists’ availability.” Whether the meeting lasts one or two days, the agenda will begin with a presentation by the research team on the study’s goals, proposed methodology, perceived challenges, anticipated approach to statistical analysis, and other such matters.

After the research team’s presentation, peer panelists will meet privately to discuss the proposed approach to the study and to formulate recommendations for improving it. The panelists will then present their recommendations, and the research team will query the panel to clarify those recommendations and open a dialogue about how best to implement them. A preliminary draft agenda appears in Exhibit III, Examples of Peer Review Meeting Agendas for One- and Two-Day Meetings, below.

Exhibit III: Examples of Peer Review Meeting Agendas for One- and Two-Day Meetings

Example of a One-Day Peer Review

| Time | Activity |
|---|---|
| 9:00 ‒ 9:30 | Introductions, review of agenda |
| 9:30 ‒ 10:30 | Presentation of research team’s proposed approach to conducting the study |
| 10:30 ‒ 10:45 | Break |
| 10:45 ‒ 12:00 | Continuation of research team’s presentation |
| 12:00 ‒ 1:00 | Break for lunch (Note: suggest catered lunch for one-day meeting) |
| 1:00 ‒ 3:00 | Peer reviewers convene to review and discuss research team approach and formulate recommendations for improving study |
| 3:00 ‒ 3:15 | Break |
| 3:15 ‒ 5:00 | Peer reviewers present recommendations for improving study to research team; research team queries panelists to clarify and refine recommendations; adjourn |

Example of a Two-Day Peer Review: Day One

| Time | Activity |
|---|---|
| 9:00 ‒ 9:30 | Introductions, review of agenda |
| 9:30 ‒ 10:30 | Presentation of research team’s proposed approach to conducting the study |
| 10:30 ‒ 10:45 | Break |
| 10:45 ‒ 12:00 | Continuation of research team’s presentation |
| 12:00 ‒ 1:30 | Break for lunch |
| 1:30 ‒ 3:30 | Peer reviewers convene to review and discuss research team approach and formulate recommendations for improving study |
| 3:30 ‒ 3:45 | Break |
| 3:45 ‒ 5:00 | Peer reviewers reconvene to complete review and discussion; adjourn for day |

Example of a Two-Day Peer Review: Day Two

| Time | Activity |
|---|---|
| 9:00 ‒ 9:30 | Peer reviewers convene to review previous day’s findings |
| 9:30 ‒ 10:30 | Peer reviewers present recommendations for improving study to research team |
| 10:30 ‒ 10:45 | Break |
| 10:45 ‒ 12:00 | Peer reviewers continue to present recommendations for improving study to research team |
| 12:00 ‒ 1:30 | Break for lunch |
| 1:30 ‒ 2:45 | Research team queries panelists to clarify and refine recommendations; research team and peer reviewers discuss approaches for implementing changes to improve study |
| 2:45 ‒ 3:00 | Break |
| 3:00 ‒ 4:00 | Group concludes implementation discussion; adjourn |

Invite attendees. FMCSA will invite peer reviewers and determine an optimal date or dates for conducting the peer review meeting. FMCSA will also determine how best to resolve any conflicts of interest that might exist for any of the potential panelists.

Identify specific issues and questions for peer panelists to address. The research team will identify specific issues and questions about the study’s proposed research methodology, statistical analyses to be applied to collected data, commercial motor vehicle carrier issues, and other such matters. These issues and questions will be captured in a draft paper to be submitted to FMCSA for review. FMCSA will make recommendations about modifying the issues and questions presented by the research team and/or will suggest additional issues and questions to include in the peer review.

Prepare and distribute background material. Based on the issues and questions identified by the research team and reviewed and approved by FMCSA, the research team will prepare a background document and ancillary background materials to be distributed to the peer reviewers. Included will be information about the goals and purpose of the study, the project management plan, the research proposal, sampling plan, draft survey questionnaire, and other pertinent background documentation.

Complete meeting logistics planning. The research team and SLIND support staff will work with FMCSA to complete logistics planning for the peer review meeting.

Develop presentations for peer review meeting. The research team will develop presentations for the peer review meeting to include any additional materials to be distributed at the meeting and a PowerPoint presentation that will be used to summarize the study’s goals, proposed methodology, perceived challenges, and anticipated approach to statistical analysis.

Conduct pre-peer review conference call. The research team will coordinate a pre-peer review conference call to include members of the research team, peer reviewers, and FMCSA staff. The call will take place approximately one week prior to the peer review meeting. The conference call will introduce items to be covered at the peer review meeting and address last-minute logistical items that may require resolution, such as ground transportation, meeting arrangements, and lodging accommodations.

Host meeting. The decision about meeting location will determine whether the host organization will be SLIND or FMCSA. The host organization will be responsible for coordinating meeting location and space, audio-visual equipment requirements, meals and breaks, and other such logistics.

Present study details. The research team will present study details at the outset of the meeting and answer questions so that the peer reviewers have the information they need to meet and formulate recommendations for improving the study.

Record meeting proceedings. Research team members and SLIND support staff will be responsible for taking notes and documenting the proceedings of those parts of the peer review in which both research team members and peer reviewers take part. One of the peer review members will be designated to document the meeting in which peer reviewers meet as a group to formulate recommendations. Certain portions of meetings may be recorded, should peer reviewers allow.

Initial survey design and data collection instrument

The research team will finalize the survey design based on the input of subject matter experts in the initial peer review. The final design will be submitted to FMCSA for its review and for the review of other subject matter experts or stakeholders that FMCSA deems appropriate. Once the final analysis plan is reviewed and approved, the research team will complete development of the data collection instrument, which for this study will be a survey questionnaire. A comprehensive discussion of the concepts guiding the design and deployment of the survey instrument is provided in the Collect data subsection that appears later in this section of the analysis plan.

Conduct Survey Questionnaire Pilot Study

A limited pilot study of trucking companies will be done to test the effectiveness of the study approach and the validity of the survey questions detailed in this document. No payments or gifts will be offered to pilot study participants.

The research team will extract a list of non-passenger motor carriers located within a 200-mile radius of Oak Ridge, Tennessee from MCMIS. The list will be pared down to include only those with current MCS-150 information. The list will be grouped by peer group size and sorted by number of drivers. The research team will contact carriers by phone and email until at least one carrier in each peer group agrees to participate in the pilot study. The study will include fewer than nine (9) carriers. Exhibit IV, FMCSA CMV Peer Group Categories, shows how carriers are categorized by FMCSA.

Exhibit IV: FMCSA CMV Peer Group Categories

| Peer Group | No. of Drivers |
|---|---|
| Very Small | 1-5 |
| Small | 6-50 |
| Medium | 51-500 |
| Large | >500 |
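The pilot list extraction described above (carriers within 200 miles of Oak Ridge, current MCS-150 information, grouped by peer group, sorted by number of drivers) can be sketched as follows. This is only an illustration: the record fields (`lat`, `lon`, `drivers`, `mcs150_current`), the approximate coordinates for Oak Ridge, and the use of great-circle distance are assumptions and do not reflect the actual MCMIS layout or the team's extraction procedure.

```python
import math

OAK_RIDGE = (36.0104, -84.2696)   # approximate latitude/longitude (assumption)
EARTH_RADIUS_MILES = 3958.8

def haversine_miles(a, b):
    """Great-circle distance in miles between two (lat, lon) points given in degrees."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_MILES * math.asin(math.sqrt(h))

def peer_group(drivers):
    """Peer group by number of drivers, following Exhibit IV."""
    if drivers <= 5:
        return "Very Small"
    if drivers <= 50:
        return "Small"
    if drivers <= 500:
        return "Medium"
    return "Large"

def pilot_candidates(carriers, radius_miles=200):
    """Keep in-radius carriers with current MCS-150 data, grouped by peer group.

    `carriers` is a list of dicts with hypothetical keys:
    lat, lon, drivers, mcs150_current.
    """
    groups = {}
    for carrier in carriers:
        if not carrier["mcs150_current"]:
            continue
        if haversine_miles(OAK_RIDGE, (carrier["lat"], carrier["lon"])) > radius_miles:
            continue
        groups.setdefault(peer_group(carrier["drivers"]), []).append(carrier)
    for members in groups.values():
        members.sort(key=lambda c: c["drivers"])   # sort each group by number of drivers
    return groups
```

Carriers would then be contacted group by group until at least one carrier in each peer group agrees to participate.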

Interviews will be conducted in person at the carriers’ locations, if possible. In cases where in-person interviews cannot be arranged, they will be conducted by telephone.

Companies’ Safety Directors will be asked to participate in interviews. They will be asked to provide feedback about each individual survey item. It is anticipated that interviews will take no longer than one hour to complete. The estimate of the time necessary to complete the survey will be refined based on the interviewers’ pilot experience and feedback from respondents.

Note: The research team conducted pilot interviews during the month of September 2013. A report of this activity is presented in Attachment 1, Pilot Study for The Impact of Driver Compensation on Commercial Motor Vehicle Safety Survey. The research team has revised the survey questionnaire as a result of the pilot study.

Obtain IRB approval to collect study data

Once the data collection package has been approved by FMCSA, the research team will submit the materials to a private institutional review board for review and approval. This is an important and necessary step required by US Department of Health and Human Services regulations (45 CFR 46). An important part of the definition of research in the regulations that applies to this study states that

Research means a systematic investigation, including research development, testing and evaluation, designed to develop or contribute to generalizable knowledge. Activities which meet this definition constitute research for purposes of this policy, whether or not they are conducted or supported under a program which is considered research for other purposes.

Further, the regulations provide criteria for when the approval of an institutional review board (IRB) is necessary. These criteria are applicable for research involving human subjects, and

Human subject means a living individual . . . about whom an investigator conducting research

Obtains data through intervention or interaction with an individual, or

Uses records gathered on human subjects.

Clearly, this study is covered by both the definition of research as well as the criteria that appear in the regulations.

Submit application to the Office of Management and Budget (OMB) for approval and monitor OMB approval process

As required by regulations promulgated under the Paperwork Reduction Act (PRA) of 1995, the research team will work with FMCSA to prepare and submit an OMB application package once IRB approval has been obtained. The package will seek the required authorization for an information collection request (ICR) to administer the survey. The US Department of Agriculture (USDA) website provides a helpful listing of the required contents of an OMB ICR package:

Completed OMB 83-I form

Supporting Statement and Burden Grid

Copy of any forms, surveys, scripts, screens, etc. used in the collection of information

Copy of the 60-day Federal Register notice

Copies of any pertinent statutes or regulations, which reference collection requirements or provide guidance on what or how information should be collected

Copies of any pertinent handbooks, manuals or other program instructional material

Copies of reports

30-day Federal Register Notice

Exhibit V, Items Required for OMB Approval, lists the requirements for completing the OMB package and summarizes the support the research team can provide in working with FMCSA to complete and submit the package.

Exhibit V: Items Required for OMB Approval

Item Description

Description of Research Team Support

(OMB 83-I Form item number appears in parentheses)

Complete OMB 83-I Form

Assist in identifying keywords (9)

Complete first draft of abstract and edit after FMCSA review (10)

Assist with calculation of annual reporting and recordkeeping time burden (13)

Assist with calculation of annual reporting and recordkeeping cost burden (14)

Supporting Statement and Burden Grid

Complete first draft of Supporting Statement for Paperwork Reduction Act Submissions and edit after FMCSA review

Complete first draft of burden grid and edit after FMCSA review

Copies of any forms, surveys, scripts, screens, etc. used in the collection of information

Provide a hard copy of the survey questionnaire

Provide scripts and job performance aids used by research team to collect non-response data in follow-up telephone interviews

Provide screen shots of all pages of the Web based survey questionnaire

Confidentiality and non-disclosure forms

Copy of the 60-day Federal Register notice

Assist FMCSA with drafting of 60-day notice, as appropriate

Copies of any pertinent statutes or regulations, which reference collection requirements or provide guidance on what or how information should be collected

Provide documents such as OMB requirements and guidelines, DHHS requirements, GAO guidelines, and any other such materials used in the administration of the study

Provide a summary table of regulations that are to be met (e.g., Privacy Act of 1974, E-Government Act of 2002, references to various Codes of Federal Regulations, etc.)

Copies of any pertinent handbooks, manuals or other program instructional materials

Provide the training manual for research team members that includes information about collecting, processing and editing data; collecting non-response information via follow-up telephone interviews; processing data (including imputation); and other tasks associated with the study

Provide hard copies of PowerPoint slides to be used in training presentations

Provide copies of forms to be used to critique individuals conducting mock interviews

Provide copies of forms to be used when monitoring individuals collecting information in telephone interviews

Copies of reports

Provide copies of all reports such as the results of initial peer review meeting

30-day Federal Register Notice

Assist FMCSA with drafting of 30-day notice as appropriate

Once the ICR submission package has been completed and submitted to OMB for approval, the research team will monitor the OMB approval process and respond to any additional requests for information.

Prepare collection databases and online collection questionnaire

The research team will use the Motor Carrier Management Information System (MCMIS) to create the survey frame. An initial task will be to review data in the system to determine any omissions, erroneous inclusions, duplications or misclassifications of units in MCMIS records, and other problems that may lead to coverage errors in the administration of the survey. This review of data and the design of the survey frame will take place at the same time that the OMB review of the ICR package is being completed.

The population for this study is defined as non-passenger commercial motor vehicle carrier companies operating legally in the United States. The survey frame will consist of non-passenger CMV carriers in the MCMIS characterized by FMCSA as having an “active” status. The research team will also design and test the online survey questionnaire. The online questionnaire will allow survey respondents to input partial data, save their online form, and return at a later time to complete the questionnaire. Data from online questionnaires will feed directly into the research team’s database for analysis.

Conduct Survey

Introduction

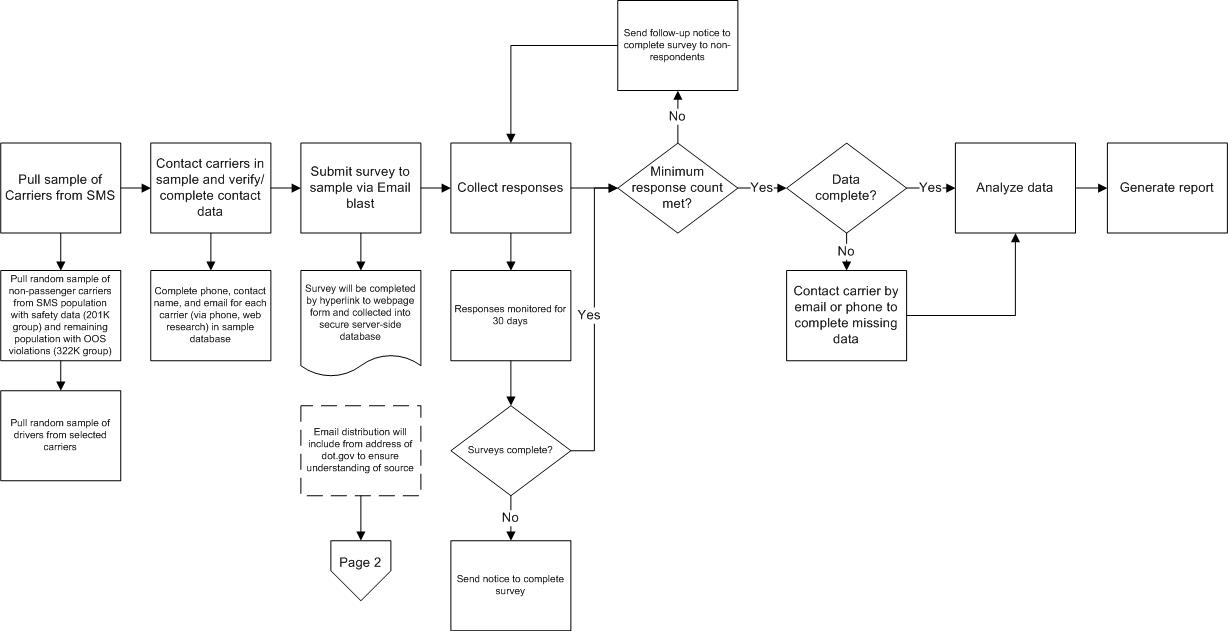

The research team will use surveying to collect data. The procedure to be followed is shown in the flowchart in Exhibit VI, “Flowchart of Data Collection Procedure,” below. Carriers will be asked their type of operation (such as long-haul, short-haul, and line-haul) and how their drivers are compensated. Methods of compensation will be placed in one of the following eight categories:

Pay by the mile

Pay by the hour

Salaried

Pay by percentage of load

Pay by revenue

Pay by delivery or stop

Use of more than one pay method

Other (to include miscellaneous methods that clearly do not fall in one of the other categories)

The research team will assure confidentiality of participant responses. No individual participant will be identified in the survey report or other documentation provided to FMCSA. Data collected will be coded and reported in the aggregate and no individual CMV carrier company’s data will be identifiable.

Exhibit VI: Flowchart of Data Collection Procedure

Surveyed carriers that report compensating drivers using more than one method will be asked to provide additional data, some of which will be about specific drivers. The research team will use the collected data to determine whether a correlation exists between method(s) of driver compensation and safety.

Determine survey frame and select sample

The population for this study is defined as non-passenger commercial motor vehicle carrier companies operating in the US. The survey frame consists of non-passenger CMV carriers listed in the Motor Carrier Management Information System (MCMIS) characterized by FMCSA as having an “active” status. MCMIS is a database of CMV carriers, the majority of which are US non-passenger carriers, although carriers from foreign countries such as Canada and Mexico that operate in the US and have been issued a DOT number are also included in the database and will be part of the survey frame. CMV carrier companies will provide information about drivers in the aggregate (e.g., number of drivers who have been involved in a crash or who have been cited for unsafe driving). The minimum age of drivers covered in the study will be 18. No maximum age is anticipated. Drivers of all racial and ethnic origins will be covered by the research. The study is gender neutral. No vulnerable populations are anticipated to be included in the study.

The research team is using a confidence level of 95% and a 5% margin of error for the study. The 5% margin of error is consistent with the General Accounting Office recommendation. The survey frame will be used to obtain a stratified sample for the study. Strata will be based on peer group categorized by number of power units.

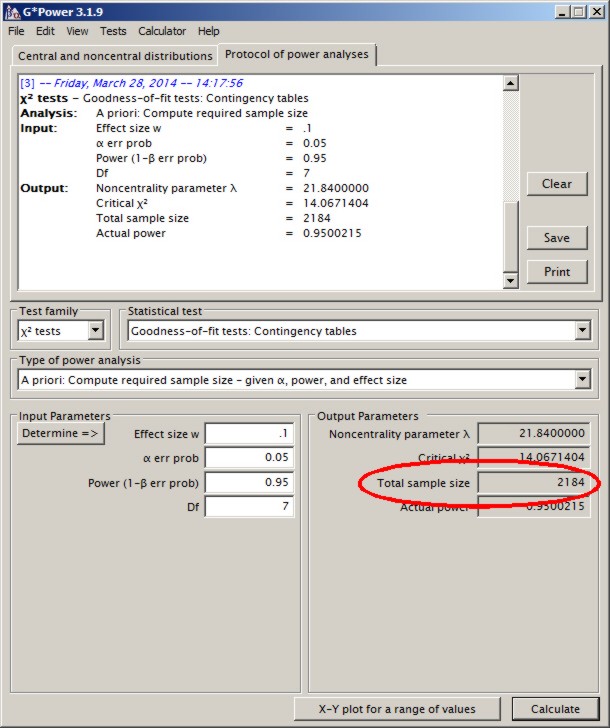

The research team conducted an a priori power analysis with the aid of the GPower software application, which is “a free general power analysis program” (Mayr et al., 2007). To be consistent with a standard advanced by Cohen (1992), the research team used an effect size (ES) of 0.1. The error probability (α) the team used is .05. While Cohen and others recommend using a power level (1 – β error probability) of .80, the research team opted to use a power level of 0.95 to lessen the possibility of a Type II error. Because the study employs eight (8) categories of driver compensation, seven (7) degrees of freedom (df) were entered into the GPower program to calculate the sample size that will be used for the project.

Exhibit VII, “GPower Program Screenshot,” below, is a screenshot of the GPower program form with the effect size, error probability, power level, and degrees of freedom figures entered in the bottom half of the form and the resulting calculation of sample size in the top half of the form. As shown in the bottom right quadrant of the form, the resulting calculated total sample size is 2,184.

Exhibit VII: GPower Program Screenshot
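For readers without access to the GPower program, the sample size calculation can be approximated independently. The sketch below is not the GPower implementation. It assumes the calculation is a chi-square test with Cohen's effect size w, so that the noncentrality parameter is N × w², and it uses only textbook series for the chi-square distributions. The function names are illustrative. Under those assumptions the result should land at or very near the 2,184 shown in Exhibit VII.

```python
import math

def chi2_cdf(x, df):
    """Central chi-square CDF via the series for the regularized incomplete gamma."""
    if x <= 0:
        return 0.0
    a, z = df / 2.0, x / 2.0
    term = total = 1.0 / a
    n = 1
    while term > 1e-17 * total:
        term *= z / (a + n)
        total += term
        n += 1
    return total * math.exp(a * math.log(z) - z - math.lgamma(a))

def chi2_ppf(p, df):
    """Central chi-square quantile found by bisection on the CDF."""
    lo, hi = 0.0, 200.0
    for _ in range(200):
        mid = (lo + hi) / 2
        if chi2_cdf(mid, df) < p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

def ncx2_cdf(x, df, lam):
    """Noncentral chi-square CDF as a Poisson-weighted mixture of central CDFs."""
    half = lam / 2.0
    total = 0.0
    for j in range(400):  # later terms contribute negligibly for the x values used here
        weight = math.exp(-half + j * math.log(half) - math.lgamma(j + 1))
        total += weight * chi2_cdf(x, df + 2 * j)
    return total

def required_n(effect_size, alpha, power, df):
    """Smallest N whose power (noncentrality N * w^2) reaches the requested level."""
    crit = chi2_ppf(1 - alpha, df)
    lo, hi = 1, 1_000_000
    while lo < hi:
        mid = (lo + hi) // 2
        if 1 - ncx2_cdf(crit, df, mid * effect_size ** 2) >= power:
            hi = mid
        else:
            lo = mid + 1
    return lo

# Inputs reported in the text: ES = 0.1, alpha = .05, power = .95, df = 7
print(required_n(0.1, 0.05, 0.95, 7))
```

Bisection over N works here because power increases monotonically with sample size for a fixed effect size.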

The analysis requires 2,184 completed survey questionnaires. To mitigate the influence that the sensitive nature of the study may have on the response rate, and to boost the potential for obtaining that minimum number of completed questionnaires, the team has set a response rate goal of 20%. Therefore, the team will draw an initial random sample of 10,920 carriers (2,184 ÷ 0.20) from the survey frame, stratified by peer group as illustrated below in Exhibit VIII. Peer groups are determined by the number of power units the CMV carriers operate and are consistent with FMCSA carrier size categories. There are four categories of peer groups: (1) very small (1–5 power units); (2) small (6–50 power units); (3) medium (51–500 power units); and (4) large (more than 500 power units). Sample stratification will reflect appropriate proportions of units from each peer group. Should the initial random sample not yield the minimum number of units required, or should appropriate proportions of peer groups not be achieved, a second and, if necessary, third random sample will be drawn. If appropriate proportions of units are still not achieved after three samples have been drawn, the research team will weight sample results for nonresponse. Sampling and weighting calculations are described in the Determining sample size and sample size allocation section of this document.

Exhibit VIII: Survey Frame

| Stratum (Peer Group Categorized by No. of Power Units [PU]) | No. of Carriers in Survey Frame | No. of Drivers (1) | Proportion of Drivers (a_h) (2) | Calculated Sample Size (3) | Actual Sample Size (5X the Calculated Sample Size) (4) |
|---|---|---|---|---|---|
| Very small (1–5 PU) | 517,160 | 941,621 | .254 | 555 | 3,527 |
| Small (6–50 PU) | 187,127 | 1,034,947 | .279 | 609 | 3,871 |
| Med. (51–500 PU) | 24,333 | 358,872 | .097 | 212 | 1,347 |
| Large (>500 PU) | 2,175 | 1,369,164 | .370 | 808 | 2,175 |
| TOTAL | 730,795 | 3,704,604 | 1 | 2,184 | 10,920 |

(1) Obtained from MCS-150 report data.

(2) Proportions are based on numbers of drivers in each peer group in relation to the total number of drivers.

(3) Calculated peer group sample sizes are based on proportions of the total sample of 2,184.

(4) A factor of 5X the calculated sample size exceeds the total available large peer group size of 2,175; therefore all of the large carriers in the stratum will be included in the sample. Actual sample sizes of the other peer groups are adjusted proportionately to equal the total of 10,920 carriers that will be sampled.

The total of 10,920 carriers to be sampled is 5 times the total calculated sample size for the four peer groups. Because 5 times the calculated sample size for the large peer group (4,040) exceeds the 2,175 carriers in that group, the difference of 1,865 is reapportioned proportionately to the other three peer groups. As a result, the actual sample sizes of the three smaller peer groups are more than 6 times their calculated sample sizes, while the actual sample size of the large peer group is less than 3 times its calculated size. However, as the following discussion makes clear, the research team believes that oversampling the three smaller groups by a factor more than twice that of the large group is justified.
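The allocation arithmetic behind Exhibit VIII can be checked with a short sketch. The function names are illustrative, and two details are assumptions rather than stated team procedure: the rounded driver proportions from the exhibit are used as inputs, and leftover units after integer division are assigned by largest remainder. With those assumptions the sketch reproduces the calculated and actual sample sizes in the exhibit.

```python
def proportional_allocation(total, proportions):
    """Calculated stratum sample sizes: total sample times each stratum's proportion."""
    return {k: round(total * p) for k, p in proportions.items()}

def oversample(calculated, frame, factor):
    """Scale the sample by `factor`; strata too small for that are sampled in full.

    The shortfall from fully sampled strata is spread over the remaining strata
    in proportion to their calculated sizes (largest-remainder rounding, an assumption).
    Single pass only: it does not re-check strata after reapportionment.
    """
    target = factor * sum(calculated.values())
    capped = {k: frame[k] for k in calculated if factor * calculated[k] > frame[k]}
    open_strata = [k for k in calculated if k not in capped]
    remaining = target - sum(capped.values())
    base = sum(calculated[k] for k in open_strata)
    shares = {k: divmod(remaining * calculated[k], base) for k in open_strata}
    actual = {k: quotient for k, (quotient, _) in shares.items()}
    leftover = remaining - sum(actual.values())
    by_remainder = sorted(open_strata, key=lambda k: shares[k][1], reverse=True)
    for k in by_remainder[:leftover]:
        actual[k] += 1
    actual.update(capped)
    return actual

# Figures taken from Exhibit VIII
proportions = {"very small": 0.254, "small": 0.279, "medium": 0.097, "large": 0.370}
frame = {"very small": 517_160, "small": 187_127, "medium": 24_333, "large": 2_175}

calculated = proportional_allocation(2_184, proportions)
actual = oversample(calculated, frame, factor=5)
print(calculated)
print(actual)
```

Integer arithmetic (`divmod`) is used for the reapportionment so that the stratum totals always sum exactly to the target sample size.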

Anecdotal information obtained by the research team during its pilot of the survey questionnaire indicates that many commercial motor carriers, particularly very small and small carriers, will likely be reluctant to participate. At the conclusion of piloting events, the research team asked for feedback from participants. Participants from three companies independently offered that they felt that the larger trucking companies would likely participate in the study because they would want to be viewed as cooperative by FMCSA. However, those same participants, some of whom were once over-the-road drivers for very small or small companies, made it clear that many in the industry hold the view that FMCSA acts to burden commercial carriers with regulations and is not to be trusted. Therefore, they pointed out, many in the industry would opt out of participating in the survey.

The distrust of FMCSA research and regulations is apparent in industry literature. For example, in an article appearing on the Truckinginfo.com website entitled “What Happens when the Facts Show FMCSA Goofed?” the author provides a strong indictment of the approach FMCSA took to implement hours-of-service regulations that took effect on July 31, 2013. The author goes so far as to say that FMCSA’s HOS regulation will cause experienced drivers to leave the trucking workforce because of burdensome regulations and be replaced with newer, less experienced drivers who will have more crashes.

Similarly, the American Transportation Research Institute’s recent report, Critical Issues in the Trucking Industry‒2013, ranks HOS as the number one issue of concern. The preface to the report includes the following statement: “Additionally, the industry is still sorting through challenges and conflicts with the Federal Motor Carrier Safety Administration’s (FMCSA) Compliance, Safety, Accountability (CSA) initiative, which is now in its third year of national implementation.” That the industry views the FMCSA in a somewhat adversarial way indicates reluctance to participate in voluntary activities such as data collection for the current and past studies.

The research team’s literature search and review yielded very little research that has already been conducted on the possible relationship among crashes, unsafe driving behaviors, and method of driver compensation. However, the team was able to benchmark its study approach against one undertaken by the University of Michigan Transportation Research Institute for FMCSA that examined the use of onboard safety technologies. Like the study described herein, the University of Michigan study, “Tracking the Use of Onboard Safety Technologies Across the Truck Fleet” (2009), used the MCMIS database to define the frame and used a stratified random sample approach. As the University of Michigan team reported:

The original estimated response rate to the survey was expected to be approximately 30 percent, but as the survey progressed it became clear that the first sample would not generate the target number of cases. Accordingly, additional samples were drawn . . .

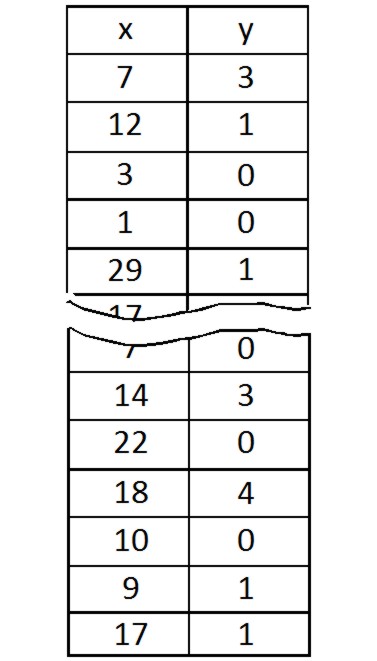

While the research team intends to use a similar approach of multiple sampling to maximize participation, the University of Michigan team’s experience underscores the opinion of those who took part in the current study’s piloting activities: the smaller the company, the lower the response rate. (The University of Michigan study used six levels of carriers characterized by number of power units; as described elsewhere in this document, the current study uses four levels.) Exhibit IX, Survey Statistics, displays a portion of a table that appears in the University of Michigan study.

Exhibit IX: Survey Statistics

| Strata | Refusals | Nonresponse | Number of Companies in Sample | Response Rate |
|---|---|---|---|---|
| Strata 1: 1-3 Trucks | 168 | 1184 | 1500 | 10% |
| Strata 2: 4-20 Trucks | 169 | 1198 | 1500 | 9% |
| Strata 3: 21-55 Trucks | 230 | 1061 | 1500 | 14% |
| Strata 4: 56-100 Trucks | 150 | 982 | 1334 | 15% |
| Strata 5: 101-999 Trucks | 119 | 987 | 1333 | 17% |
| Strata 6: 1000+ Trucks | 32 | 216 | 333 | 26% |
| Total | 868 | 5628 | 7500 | 13% |
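The response rates in Exhibit IX are consistent with treating every sampled company that neither refused nor failed to respond as a respondent. That reading is an inference from the excerpted columns, not a definition quoted from the University of Michigan study. A minimal sketch under that assumption:

```python
# (refusals, nonresponse, companies in sample) per stratum, from Exhibit IX
strata = {
    "1-3 trucks": (168, 1184, 1500),
    "4-20 trucks": (169, 1198, 1500),
    "21-55 trucks": (230, 1061, 1500),
    "56-100 trucks": (150, 982, 1334),
    "101-999 trucks": (119, 987, 1333),
    "1000+ trucks": (32, 216, 333),
}

def response_rate(refusals, nonresponse, sampled):
    """Share of sampled companies that responded (assumed: sampled - refusals - nonresponse)."""
    return (sampled - refusals - nonresponse) / sampled

rates = {name: response_rate(*counts) for name, counts in strata.items()}

# Column totals give the overall rate
totals = tuple(sum(column) for column in zip(*strata.values()))
overall = response_rate(*totals)

for name, rate in rates.items():
    print(f"{name}: {rate:.0%}")
print(f"Total: {overall:.0%}")
```

The rates rise steadily with fleet size, which is the pattern the current study's oversampling of smaller peer groups is meant to offset.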

Nonresponse Bias Study

The research team has already conducted a comprehensive literature search and review that has yielded the University of Michigan study cited in the previous section. The study was a means of benchmarking external data that provides some validation to the approach that the research team for the current study is taking to maximize response rate and minimize sample bias (tantamount to comparing surveys to external data).

The research team also has access to the FMCSA Safety Measurement System (SMS) database. This resource includes crash data and data from reports of different types of driver infractions provided by various law enforcement agencies throughout the United States. While the SMS includes only a fraction of the total active carriers (approximately 201,000 of the 731,000 active carriers), the team can compare relative rates of infractions by drivers in each of the FMCSA CMV Peer Group Categories described in a previous section of this document (very small, small, medium, and large). By comparing the percentages of infractions by drivers in the various CMV Peer Group Categories (which constitute the study’s sample strata) to the results of survey responses and nonresponses, the research team can mitigate possible nonresponse bias by weighting responses for any categories that are under-represented.
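One common way to weight under-represented categories is post-stratification, in which each stratum's responses are scaled so that the weighted sample matches a target distribution. The sketch below is an illustration of that general technique, not the team's weighting procedure. It assumes the target shares are the driver proportions from Exhibit VIII, and the respondent counts are hypothetical placeholders.

```python
def poststratification_weights(target_shares, respondents):
    """Weight per stratum = target share / achieved share of respondents."""
    total = sum(respondents.values())
    weights = {}
    for stratum, count in respondents.items():
        if count == 0:
            raise ValueError(f"no respondents in stratum {stratum!r}; cannot weight")
        weights[stratum] = target_shares[stratum] / (count / total)
    return weights

# Target shares: driver proportions from Exhibit VIII (assumed to be the weighting target)
target_shares = {"very small": 0.254, "small": 0.279, "medium": 0.097, "large": 0.370}

# Hypothetical respondent counts, for illustration only
respondents = {"very small": 300, "small": 600, "medium": 300, "large": 800}

weights = poststratification_weights(target_shares, respondents)
for stratum, weight in weights.items():
    print(f"{stratum}: {weight:.3f}")
```

A weight above 1 marks a stratum that responded at a lower rate than its target share, and a weight below 1 marks one that is over-represented.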

Complete carrier SMS data to facilitate carrier contact

The research team will conduct research to gather email addresses and other contact information for individuals who will be asked to respond to survey questionnaire requests. This information will be added to the carrier data to facilitate contact with potential survey participants.

Submit report describing the carrier frame and carrier safety records

The research team will submit a document to FMCSA summarizing the results of the review of the survey frame and carrier safety records. The report will clearly highlight any data suspected of being inaccurate or otherwise faulty. FMCSA will review the report, and the research team will proceed after receiving FMCSA feedback.

Develop data processing protocol

The research team will develop a protocol for the secure handling of data collected in the study. Exhibit X, Data Processing Protocol, lists the issues related to data processing along with summary statements of the suggested approach the research team will employ to efficiently and effectively deal with those issues. The data processing protocol will be submitted to FMCSA for review and approval once it is fully developed.

Exhibit X: Data Processing Protocol

Issue

Research Team Approach

Format of data

The format of data will be considered as the survey questionnaire is being designed and developed in order to minimize issues after data has been collected.

De-identification of data for main study database

A scheme such as coding will be applied to the data to ensure confidentiality.

Data capture

Data capture is the process of converting information provided by a respondent into an electronic format. Data will be self-enumerated by respondents using the questionnaire. Team members responsible for entering data into electronic forms will be trained to do so.

Securely transmitting the data between research partners

Data will be transmitted between research partners using reliable and secure cloud computing technology. The decision about which cloud computing service the research team will use will be determined by an evaluation of providers on criteria such as ability of vendor to encrypt data, ability of vendor to password protect files that team members share, and the ability to set up folder permissions (authorizations) for team members to share data at various levels.

Storage of the data

Data will be stored in databases on a secure server. The backups of collected data will be completed on a daily basis and stored at a remote site using secure cloud computing technology.

Quality assurance

Senior members of the research team will review completed questionnaires to ensure that data has been entered correctly, edits have been applied appropriately, and that missing information is collected in a timely fashion.

Document control

A robust document control procedure will be implemented to be able to account for each in-process questionnaire. The document control procedure will also be used to track changes made to any data that has been collected.
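The de-identification entry in Exhibit X does not specify a coding scheme. One possible approach, shown purely as a sketch, derives a stable study code from a carrier identifier with a keyed hash, so that the main study database never stores the identifier itself. The function name, the code format, and the choice of HMAC-SHA-256 are assumptions and do not describe the team's selected scheme.

```python
import hashlib
import hmac

def study_code(dot_number, secret_key):
    """Derive a stable pseudonymous study code from a carrier's DOT number.

    `secret_key` is a bytes value held only by the research team; without it,
    a code cannot be recomputed from a known DOT number.
    """
    message = str(dot_number).encode("utf-8")
    digest = hmac.new(secret_key, message, hashlib.sha256).hexdigest()
    return "C-" + digest[:12]

# Hypothetical key and DOT number, for illustration only
key = b"replace-with-a-randomly-generated-secret"
print(study_code(1234567, key))
```

The same carrier always maps to the same code, which supports follow-up contact and document control, while the key can be stored separately from the study data.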

Distribute survey introductory letter to carriers chosen as part of the sample

A copy of a letter issued by FMCSA describing the purpose of the study will be emailed to carriers in the sample prior to the research team’s initial survey deployment. The letter will inform carriers that they will be contacted and asked to provide information about their type of operation and the method(s) they use to pay drivers. Carriers will also be told how the data they provide will be used. They will be told when they can expect to be contacted and that they may be asked to provide information about individual drivers working for their company. Finally, the letter will include a web address that will enable potential study participants to access a page that will provide more comprehensive information about the study, including information that underscores the legitimacy of the project and information about how to contact the FMCSA study project officer for further information.

Distribute survey participation email

An email message announcing the web-based survey, providing instructions for completing the survey questionnaire, and setting the deadline for completing the questionnaire will be distributed to potential participants. No payments or gifts will be offered to potential survey participants. A flowchart illustrating the procedure for completing the web-based questionnaire is shown in Exhibit XI, Procedure for Accessing and Completing Web-based Survey Questionnaire. Data collected will yield estimates of various types of carrier operations such as long-haul, short-haul, and line-haul, types of commodities hauled, number of power units in their fleets, and other information. The survey will also determine the percentages of carriers using various methods to compensate drivers such as pay-per-mile, pay-per-load, and hourly rate. The research team will monitor survey responses and send follow-up email reminders to maximize survey response.

The research team will design and develop training to be delivered to surveyors. Topics covered by the training will include, but not be limited to, surveying best practices, tips for dealing with reluctant subjects, safeguarding personally identifiable information, and how to enter data into computer forms. The research team will also produce a survey script that will ensure that surveyors use a consistent data collection approach and capture all required data.

It is anticipated that a portion of carriers participating in the survey will report that they use only one method of paying drivers. Representatives of carriers that use a variety of ways to pay drivers are likely to need time to do some research about specific drivers. Those participants will have the option of a follow-up call from the surveyor or filling out the on-line form to provide driver-level data. Representatives of carriers using a variety of approaches will be asked to provide information about how specific, randomly selected drivers with a record of one or more safety violations are compensated.

Regardless of the approach that participants choose to provide driver-level data, the burden will be minimal. It is anticipated that the initial survey items will take considerably less than thirty minutes to complete. If the participant can provide driver-level data without having to research the data and opts to provide that data during the initial call, it should take no longer than five minutes to provide that additional information.

The burden to conduct research to provide driver-level data should not be great for most carriers that have sufficient records. In most cases, participants will be able to complete that research in less than one hour. Follow-up phone interviews will take no longer than five minutes and likewise, those who enter data via the on-line form should be able to do so within five minutes.
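The burden figures above can be combined into a rough worst-case total per carrier. The sketch below simply adds the stated limits; the function and constant names are illustrative, and the one-hour research figure applies only "in most cases," so the result is an estimate rather than a guaranteed ceiling.

```python
# Upper-bound figures taken from the text, in minutes.
INITIAL_SURVEY_MAX = 30    # "considerably less than thirty minutes"
DRIVER_DATA_MAX = 5        # driver-level data by phone or on-line form
RECORDS_RESEARCH_MAX = 60  # "less than one hour" in most cases


def max_burden_minutes(needs_research: bool) -> int:
    """Approximate worst-case respondent burden for one carrier."""
    total = INITIAL_SURVEY_MAX + DRIVER_DATA_MAX
    if needs_research:
        total += RECORDS_RESEARCH_MAX
    return total


print(max_burden_minutes(needs_research=False))  # 35
print(max_burden_minutes(needs_research=True))   # 95
```

Under these figures, a carrier that can answer everything during the initial contact spends at most about 35 minutes, and one that must research its records spends at most about 95 minutes.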

Exhibit XII: Auto-Response Logic for Online Questionnaire

Data collection and follow-up

As survey questionnaires are completed by participants, data will automatically be input into the survey database. The system will be designed to measure completeness of entry. Incomplete surveys will automatically generate an email reminder that will be distributed to the participant. Two additional reminders will be sent at three-day intervals to prompt the participant to complete the questionnaire. The emails will contain a link returning the participant to the incomplete portion of the questionnaire. If the participant does not return to complete the questionnaire, they will be contacted directly by phone to request completion and to offer an opportunity to answer the remaining questions over the phone at that time. If after these efforts the participant does not complete the questionnaire, no further contact will be made. The questionnaire will be closed and an email thanking the participant for their effort will be sent. This auto-response logic is illustrated in Exhibit XII, Auto-Response Logic for Online Questionnaire. The research team will perform a rudimentary analysis of completed questionnaires to determine completeness of responses and to identify any potentially erroneous responses. In such cases, the research team will contact specific participants to validate and edit data, as appropriate, to maximize the integrity of collected data.
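The follow-up sequence described above can be sketched as a small decision function. This is a minimal illustration, not the logic of Exhibit XII itself: the `Action` names and the function signature are hypothetical, and the sketch assumes the same three-day wait applies before the first reminder, before the phone call, and before closing the questionnaire, which the text does not specify.

```python
from enum import Enum


class Action(Enum):
    NONE = "no action"
    EMAIL_REMINDER = "send email reminder with link to incomplete portion"
    PHONE_CALL = "contact participant by phone"
    CLOSE_AND_THANK = "close questionnaire and send thank-you email"


MAX_EMAIL_REMINDERS = 3     # one initial reminder plus two additional ones
REMINDER_INTERVAL_DAYS = 3  # spacing between reminders, from the text


def next_action(complete, reminders_sent, phoned, days_since_last_contact):
    """Decide the next follow-up step for one questionnaire."""
    if complete:
        return Action.NONE
    if days_since_last_contact < REMINDER_INTERVAL_DAYS:
        return Action.NONE  # assumed waiting period has not yet elapsed
    if reminders_sent < MAX_EMAIL_REMINDERS:
        return Action.EMAIL_REMINDER
    if not phoned:
        return Action.PHONE_CALL
    return Action.CLOSE_AND_THANK


# An incomplete questionnaire after all three emails, not yet phoned.
print(next_action(complete=False, reminders_sent=3, phoned=False,
                  days_since_last_contact=3).value)
```

Expressing the sequence as a pure function of the questionnaire's state makes it straightforward to run once a day over every open questionnaire.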

Process and edit data

Some carriers in the sample may not have had access to the information needed to respond to items in the second portion of the survey interview. Therefore, follow-up phone calls will be made. An analysis of all units in the sample will be done. Criteria that will be used to characterize “safety” in this analysis include driver out-of-service rates, motor vehicle out-of-service rates, unsafe driving violations (such as speeding or illegal lane changes), and crash rates. A calculation to determine a possible correlation between safety and pay method will then be conducted.

The research team will compare data for how specific drivers with violations are compensated to the way carriers compensate drivers in order to determine if a relationship between compensation method and safety exists.
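As an illustration of the kind of calculation described above, the sketch below tallies violations by pay method and computes a Pearson chi-square statistic for the resulting table. The chi-square test is one possible choice and is not named in this plan; the driver records are made-up counts used only to show the arithmetic.

```python
from collections import defaultdict

# Hypothetical driver records: (pay method, had a safety violation?).
records = (
    [("pay-per-mile", True)] * 4 + [("pay-per-mile", False)] * 6
    + [("hourly", True)] * 2 + [("hourly", False)] * 8
)


def violation_counts(rows):
    """Tally [with violation, without violation] per pay method."""
    counts = defaultdict(lambda: [0, 0])
    for method, violated in rows:
        counts[method][0 if violated else 1] += 1
    return dict(counts)


def chi_square(counts):
    """Pearson chi-square statistic for a pay-method x violation table."""
    total = sum(sum(row) for row in counts.values())
    col_totals = [sum(row[j] for row in counts.values()) for j in range(2)]
    stat = 0.0
    for row in counts.values():
        row_total = sum(row)
        for j in range(2):
            expected = row_total * col_totals[j] / total
            stat += (row[j] - expected) ** 2 / expected
    return stat


counts = violation_counts(records)
rates = {method: c[0] / sum(c) for method, c in counts.items()}
print(rates)               # share of drivers with a violation, per method
print(chi_square(counts))  # larger values suggest a stronger association
```

A statistic like this indicates only whether violations and pay method appear associated in the sample; it does not by itself show that a compensation method causes unsafe driving.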

Approach to maximize response rate. The study team recognizes that it will be collecting information that some carriers in the sample consider sensitive. Therefore, a number of techniques will be used to maximize response rate:

Participant contact information will be verified and completed with formal name, title, phone number, and email address prior to contact to ensure accuracy and maintain formality.

FMCSA will send an introductory letter to participants describing the purpose of the study and encouraging carriers to participate.

The questionnaire will be designed to ensure ease of entry and clarity of questions. Terms will be described in context and defined to ensure understanding.

The questionnaire will be administered online. This will minimize the burden to the participant and allow them to complete the questionnaire during times of convenience.

Participants will be contacted by auto-generated email and reminders to prompt the participant to complete the survey in a timely manner.

For phone contacts, scripts will be used to ensure that interviews are compact and take as little time as possible to conduct. Surveyors will be trained and provided glossaries of terms that can be used should a participant require terms be explained.

Stratified sample populations will be monitored during collection and should results return an unacceptable response rate, the research team will select a second random sample of carriers within the affected population to solicit additional responses.

Respondent privacy and confidentiality of information will be ensured through application of Federal laws and regulations including, but not limited to, the Confidential Information Protection and Statistical Efficiency Act of 2002, the Privacy Act of 1974, and the E-Government Act of 2002. The privacy and confidentiality policy will be reinforced to potential respondents in the written transmittal announcing the survey, which will be signed by an appropriate FMCSA official, and as an opening statement in any other written or oral communication made by members of the research team to respondents.
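The response-rate monitoring and second random sample described in the list of techniques above could be sketched as follows. The stratum names, carrier identifiers, counts, and the 30 percent threshold are all assumptions for illustration; the plan does not state what response rate would be considered unacceptable.

```python
import random


def strata_needing_resample(sent, completed, min_rate):
    """Return strata whose response rate falls below min_rate."""
    return [s for s in sent if completed.get(s, 0) / sent[s] < min_rate]


def supplemental_sample(frame, already_sampled, size, seed):
    """Draw a second simple random sample from carriers not yet contacted."""
    remaining = sorted(set(frame) - set(already_sampled))
    rng = random.Random(seed)  # fixed seed keeps the draw reproducible
    return rng.sample(remaining, min(size, len(remaining)))


# Hypothetical questionnaires sent and completed per stratum.
sent = {"small": 100, "medium": 80, "large": 40}
completed = {"small": 22, "medium": 40, "large": 21}

low = strata_needing_resample(sent, completed, min_rate=0.30)
print(low)  # ['small']

# Hypothetical frame for the affected stratum and its first-wave sample.
frame_small = [f"carrier-{i:03d}" for i in range(500)]
first_wave = frame_small[:100]
extra = supplemental_sample(frame_small, first_wave, size=50, seed=1)
print(len(extra))  # 50
```

Excluding carriers already contacted before drawing the second sample avoids soliciting the same carrier twice.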

Validity and reliability of data collected. There are two types of non-sampling errors that can compromise the quality of the study: random errors and systematic errors. Poor survey questionnaire design and deployment can lead to significant non-sampling error, particularly of the systematic variety. Good discussions of errors that result from poor surveying can be found in the Statistics Canada publication Survey Methods and Practices and in the GAO’s Program Evaluation and Methodology Division publication Developing and Using Questionnaires. The Statistics Canada publication lists four sources of non-sampling errors:

Coverage errors consist of omissions, erroneous inclusions, duplications, and misclassifications of units in the survey frame . . . that affect every estimate produced by the survey.

Measurement error is the difference between the recorded response to a question and the ‘true’ value. One of the main causes of measurement error is misunderstanding on the part of the respondent or interviewer . . . (and) may result from:

the use of technical jargon;

the lack of clarity of the concepts (i.e., use of non-standard concepts);

poorly worded questions;

inadequate interviewer training;

false information given (i.e., recall error, or lack of ready sources of information);

a language barrier;

poor translation (when several languages are used).

Nonresponse error can take two forms: item (or partial) nonresponse and total nonresponse. Item nonresponse occurs when information is provided for only some items, such as when the respondent responds to only part of the questionnaire. Total nonresponse occurs when all or almost all data for a sampling unit are missing.

Processing error can occur at several points during the transformation of data obtained during collection into a form that is suitable for tabulation and analysis. Because processing is time-consuming and resource intensive, it is potentially a source of errors. Another type of processing error can occur when coding is applied to open questions that require interpretation and judgment. Data capture processing errors result when data are not entered into the computer exactly as they appear on the questionnaire. Editing and imputation processing errors can occur when faulty replacement values are assigned to resolve problems of missing, invalid, or inconsistent data.
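The distinction between item and total nonresponse in the list above can be made concrete with a small classifier. This is a sketch under stated assumptions: the item names are hypothetical, and the 90 percent cut-off used to interpret "all or almost all data" is an assumed value, not one given by Statistics Canada or by this plan.

```python
def classify_response(answers, required_items, total_threshold=0.9):
    """Classify one returned questionnaire by how much of it is missing.

    answers: dict mapping item name to a value (None or "" means missing).
    total_threshold: share of missing items at or above which the unit is
        treated as total nonresponse (an assumed cut-off for "almost all").
    """
    missing = [i for i in required_items if answers.get(i) in (None, "")]
    share_missing = len(missing) / len(required_items)
    if share_missing == 0:
        return "complete", missing
    if share_missing >= total_threshold:
        return "total nonresponse", missing
    return "item nonresponse", missing


# Hypothetical required items and one partially completed questionnaire.
items = ["operation_type", "pay_method", "power_units", "commodities"]
partial = {"operation_type": "long-haul", "pay_method": "", "power_units": 12}

status, missing = classify_response(partial, items)
print(status)   # item nonresponse
print(missing)  # ['pay_method', 'commodities']
```

The list of missing items returned alongside the status is what a follow-up contact would need in order to ask only for the unanswered questions.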

The GAO document includes a discussion of non-sampling errors that are introduced in a study as a result of inappropriate questions on a survey. Questions are inappropriate if they

are not relevant to the evaluation goals;

are perceived as an effort to obtain biased or one-sided results;

cannot or will not be answered accurately;

are not geared to the respondent’s depth and range of information, knowledge, and perceptions;

are not perceived by respondents as logical and necessary;

require an unreasonable effort to answer;

are threatening or embarrassing;

are vague or ambiguous; or

are unfair.

Exhibit XIII, Mitigating Risks of Non-sampling Errors in Surveys, lists the errors discussed in this section along with a statement summarizing the strategies that the research team will use to mitigate those risks. Similarly, Exhibit XIV, Mitigating Risks Created by Inappropriate Survey Items, lists inappropriate questions discussed in this section along with a statement summarizing strategies that the research team will use to mitigate those risks.

Exhibit XIII: Mitigating Risks of Non-sampling Errors in Surveys

| Risk | Strategies for Mitigation |
| --- | --- |
| Coverage error | |
| Measurement error | |
| Nonresponse error | |
| Processing error | |

Exhibit XIV: Mitigating Risks Created by Inappropriate Survey Items |

||

Risk |

Strategies for Mitigation |

|

Survey item not relevant to evaluation goals |

|

|

Unbalanced line of inquiry |

|

|

Items that cannot or will not be answered accurately |

|

|

Items that are not geared to respondent’s depth and range of information, knowledge, and perceptions |

|

|

Items that respondents perceive as illogical or unnecessary |

|

|