Supplementary and Part B Questions

08262016_NOAA NWS_OMB Submittal for Impacts Based Warnings.docx

NOAA Customer Surveys

OMB: 0648-0342

NOAA National Weather Service

Impact Based Warnings (IBW) Project

Part A and B DOC/NOAA Customer Survey Clearance

OMB Control No. 0648-0342

Impact Based Warnings Research Project

July 18, 2016

Supplemental Questions for DOC/NOAA Customer Survey Clearance

(OMB Control Number 0648-0342)

Explain who will be conducting this survey. What program office will be conducting the survey? What services does this program provide? Who are the customers? How are these services provided to the customer?

NOAA’s National Weather Service (NWS) monitors, forecasts, and issues weather watches, warnings, and advisories for all weather types across all 50 states. In particular, the NWS local offices issue these warnings and other information products through websites, through NOAA Weather Radio, and through interactions with partners such as the media, government officials, emergency managers, and community groups. These warnings provide lifesaving information, such as when to take shelter during a severe thunderstorm or tornado warning.

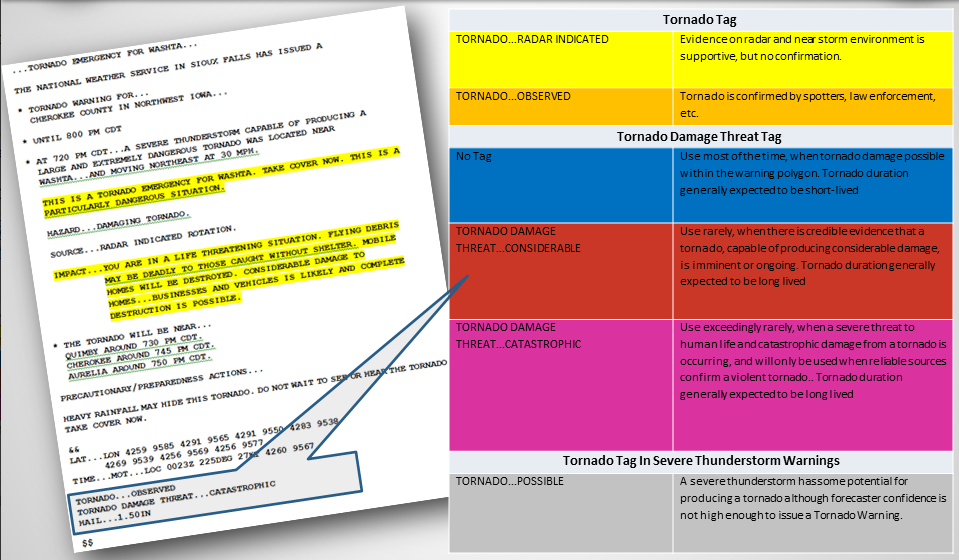

Examples of Impact Based Warning Tags

In the wake of the 2011 Joplin, MO, tornado (the deadliest to strike the United States since modern recordkeeping began in the 1950s), the NWS began considering ways to better alert the public to the risks of such dangerous weather events. The NWS Central Region conducted an experiment with five weather offices in Missouri and Kansas to test whether incorporating impact-based warning (IBW) information in tornado warnings helps people make faster decisions. Along with new wording, threat tags were attached to the warnings based on the severity of the situation (see the illustration above). In 2013, the experiment was extended to 38 weather offices throughout 12 additional states within the NWS Central Region; this expansion continued in 2015 to include more offices in the Southern, Eastern, and Western Regions.

Building on past work with NWS partners, such as emergency managers and broadcast meteorologists, the NWS is now looking for input on how the public interprets IBW threat tags. This evaluation will be useful in helping the NWS develop and evaluate its warning language to ensure it is meeting its mission to save lives and protect property. To accomplish this, the NWS seeks to conduct a public survey to evaluate the effectiveness of the IBW program.

Explain how this survey was developed. With whom did you consult regarding content during the development of this survey? Statistics? What suggestions did you get about improving the survey?

The NWS contracted with Eastern Research Group, Inc. (ERG) on the development of the survey. ERG has significant experience in conducting surveys, as well as evaluating risk messages. To develop the survey, ERG worked with Dr. Joseph Ripberger, Deputy Director for Research at the University of Oklahoma Center for Risk and Crisis Management. Dr. Ripberger has conducted many research projects related to risk communication and efficacy of messages, including work on studying public response to weather threat tags. The survey tests the current IBW messages, as well as new ideas that emerged from focus group partner feedback (OMB Reference number: 201504-0648-015ICR).

Explain how the survey will be conducted. How will the customers be sampled (if fewer than all customers will be surveyed)? What percentage of customers asked to take the survey will respond? What actions are planned to increase the response rate? (Web-based surveys are not an acceptable method of sampling a broad population. Web-based surveys must be limited to services provided by Web.)

The survey will be administered online through a Qualtrics web link. The online survey mimics how people currently see the IBW messages. Although tornado warnings are widely disseminated through a variety of technologies, at this time, online mechanisms are the primary way to see IBW-specific messages.

To narrow the sample locations, the sample will be obtained from the same locations in which the focus groups took place: North Carolina, Iowa, Oklahoma, and Alabama.1 These states were chosen based on their varying degree of experience with severe weather, as well as their diversity of storm types. Using the same locations as the focus groups will allow for a comparative data analysis between the qualitative partner data and the quantitative public data.

ERG will obtain a random, non-probability public sample using Qualtrics panels in each of the four states.2 These panels represent a large convenience sample of the United States. That is, participants voluntarily opt in to participate in Qualtrics surveys.

A sample of approximately 1,800 participants will be needed, with 450 participants from each state, while also varying the urban, suburban, and rural distribution of the sample. Qualtrics will identify respondents who are highly likely to qualify for the panel in each state based on the inclusion requirement, which is the target geography. It will initially randomly select 450 participants within the sample in each state, since that is our target number of completes. Qualtrics will then increase the number of contacts until it reaches the target number of completed surveys. Qualtrics provides all of this sample information, which will enable us to calculate the response rate by comparing how many individuals were asked to take the survey with how many valid responses were provided. If Qualtrics cannot attain the 1,800 completes across all states, then we will broaden our target geography (e.g., add more cities in each state to the sample pool). Qualtrics will also send follow-up reminder emails to participants for up to 2 weeks to increase the response rate.
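The response-rate comparison described above can be sketched as follows. This is a minimal illustration only: the invitation counts are hypothetical placeholders (no such figures appear in this submittal), and the names `StateTally` and `response_rate` are assumptions introduced for the example, not part of any Qualtrics tool.

```python
from dataclasses import dataclass


@dataclass
class StateTally:
    invited: int  # panel members asked to take the survey
    valid: int    # valid completed surveys received


def response_rate(invited: int, valid: int) -> float:
    """Share of invited individuals who provided a valid response."""
    if invited <= 0:
        raise ValueError("invited must be positive")
    return valid / invited


# Hypothetical invitation counts, for illustration only.
# The 450 valid completes per state match the stated target.
tallies = {
    "North Carolina": StateTally(invited=1500, valid=450),
    "Iowa": StateTally(invited=1800, valid=450),
    "Oklahoma": StateTally(invited=1250, valid=450),
    "Alabama": StateTally(invited=2000, valid=450),
}

per_state = {
    state: response_rate(t.invited, t.valid) for state, t in tallies.items()
}

total_invited = sum(t.invited for t in tallies.values())
total_valid = sum(t.valid for t in tallies.values())
overall = response_rate(total_invited, total_valid)

for state, rate in per_state.items():
    print(f"{state}: {rate:.1%}")
print(f"Overall: {overall:.1%} ({total_valid} of {total_invited})")
```

Because contacts are increased until the target is met, the number of valid completes is fixed in advance and the response rate is driven entirely by how many invitations were required.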

Describe how the results of this survey will be analyzed and used. If the customer population is sampled, what statistical techniques will be used to generalize the results to the entire customer population? Is this survey intended to measure a GPRA performance measure? (If so, please include an excerpt from the appropriate document.)

The NWS will use the information resulting from this data collection to help guide refinements in threat tag definitions and messaging to ensure the project is meeting its goal of improving public understanding. ERG is not using any statistical methods to select participants from the population. Rather, we are using a random, non-probability convenience sample from Qualtrics in the following states: North Carolina, Iowa, Oklahoma, and Alabama. Thus, no direct comparison will be made to the population at large.

The data do not directly contribute to a GPRA measure.

Collections of Information Employing Statistical Methods

Describe (including a numerical estimate) the potential respondent universe and any sampling or other respondent selection method to be used. Data on the number of entities (e.g. establishments, State and local governmental units, households, or persons) in the universe and the corresponding sample are to be provided in tabular form. The tabulation must also include expected response rates for the collection as a whole. If the collection has been conducted before, provide the actual response rate achieved.

The potential respondent universe is based on the number of participants contacted by Qualtrics. We are obtaining a random, non-probability public sample using a Qualtrics panel. These panels represent a large convenience sample of the United States. That is, participants voluntarily opt in to participate in Qualtrics surveys. Depending on our inclusion criteria (for example, states and urban-to-rural distribution), Qualtrics will identify respondents who are highly likely to qualify for the panel, and then randomly select within the sample. For example, Qualtrics may have 10,000 participants in Oklahoma, but it will initially randomly select 450 people to participate, since that is our target number per state, and then increase the number of contacts until it reaches the target number of completed surveys.
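The selection procedure in the Oklahoma example can be illustrated with a small simulation. This is a sketch under stated assumptions, not a description of how Qualtrics actually operates: the 30% chance that an invited panelist completes the survey, the follow-up batch size of 100, and the function name `recruit` are all hypothetical.

```python
import random


def recruit(panel_ids, target_completes, respond_prob, batch_size, rng):
    """Invite randomly ordered panelists in batches until the target
    number of completes is reached or the panel is exhausted.

    Returns (number invited, list of panelist ids who completed).
    """
    order = list(panel_ids)
    rng.shuffle(order)  # random selection from the opt-in panel
    invited = 0
    completes = []
    while len(completes) < target_completes and invited < len(order):
        # The first batch equals the target; later batches top it up.
        size = target_completes if invited == 0 else batch_size
        batch = order[invited:invited + size]
        invited += len(batch)
        for pid in batch:
            # Stop accepting completes once the quota is filled.
            if len(completes) < target_completes and rng.random() < respond_prob:
                completes.append(pid)
    return invited, completes


rng = random.Random(0)          # fixed seed so the run is repeatable
panel = range(10_000)           # e.g., 10,000 Oklahoma panelists
invited, completes = recruit(
    panel,
    target_completes=450,
    respond_prob=0.30,          # hypothetical completion probability
    batch_size=100,             # hypothetical follow-up batch size
    rng=rng,
)
print(f"Invited {invited} panelists to obtain {len(completes)} completes")
```

The selection within the panel is random, but the panel itself is opt-in, which is why the resulting sample remains a non-probability sample.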

A sample of approximately 1,800 participants will be needed, with 450 participants from each state, while also varying the urban, suburban, and rural distribution of the sample (see the table below). Qualtrics provides all of this information, which allows ERG to calculate the response rate by comparing how many individuals were asked to take the survey with how many valid responses were provided. If Qualtrics cannot attain the 1,800 completes, then we will add more cities in each state to the sample pool.

Focus Group Sample

| Location | Total Sample | Urban | Suburban | Rural |
| --- | --- | --- | --- | --- |
| North Carolina | 450 | 150 | 150 | 150 |
| Alabama | 450 | 150 | 150 | 150 |
| Oklahoma | 450 | 150 | 150 | 150 |
| Iowa | 450 | 150 | 150 | 150 |
| Totals | 1,800 | 600 | 600 | 600 |
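The row and column totals in the table can be checked with a short script. This is an illustrative sketch only; the variable and function names are assumptions introduced here.

```python
STATES = ["North Carolina", "Alabama", "Oklahoma", "Iowa"]
AREAS = ["Urban", "Suburban", "Rural"]
PER_CELL = 150  # target completes per state-by-area cell

# One quota per (state, area) cell: 4 states x 3 area types = 12 cells.
quotas = {(state, area): PER_CELL for state in STATES for area in AREAS}


def state_total(state: str) -> int:
    """Total target completes for one state across all area types."""
    return sum(n for (s, _), n in quotas.items() if s == state)


def area_total(area: str) -> int:
    """Total target completes for one area type across all states."""
    return sum(n for (_, a), n in quotas.items() if a == area)


grand_total = sum(quotas.values())

for state in STATES:
    print(f"{state}: {state_total(state)}")
for area in AREAS:
    print(f"{area}: {area_total(area)}")
print(f"Total: {grand_total}")
```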

Describe the procedures for the collection, including: the statistical methodology for stratification and sample selection; the estimation procedure; the degree of accuracy needed for the purpose described in the justification; any unusual problems requiring specialized sampling procedures; and any use of periodic (less frequent than annual) data collection cycles to reduce burden.

Statistical Method for Stratification and Sample Selection

NWS is not using statistical methods for stratification or sample selection. The respondents will be randomly selected from the panel maintained by Qualtrics partners, but inclusion of respondents in the panel itself is not probability based.

Estimation Procedure and Accuracy

NWS is not using statistical methods for this data collection. However, to facilitate meaningful data analysis within the sample itself, we estimated needing 150 people per cell. With 4 states and 3 comparative conditions, there are 12 cells, for a total of 150 × 12 = 1,800 people.

Unusual Problems Requiring Specialized Sampling Procedures

None are required.

Periodic Data Collection Cycles

This request is for a one-time data collection.

Describe the methods used to maximize response rates and to deal with nonresponse. The accuracy and reliability of the information collected must be shown to be adequate for the intended uses. For collections based on sampling, a special justification must be provided if they will not yield "reliable" data that can be generalized to the universe studied.

ERG is not conducting a probability-based sample, but rather is using a random, non-probability public sample from a Qualtrics panel. These panels represent a large convenience sample of the United States. That is, participants voluntarily opt in to participate in Qualtrics surveys. A sample of approximately 1,800 participants will be needed, with 450 participants from each state, while also varying the urban, suburban, and rural distribution of the sample. Qualtrics will identify respondents who are highly likely to qualify for the panel in each state based on the inclusion requirements and target geography. It will initially randomly select 450 participants within the sample in each state, since that is our target number of completes. Qualtrics will then increase the number of contacts until it reaches the target number of completed surveys. Qualtrics provides all of this sample information, which will enable us to calculate the response rate by comparing how many individuals were asked to take the survey with how many valid responses were provided. If Qualtrics cannot attain the 1,800 completes across all states, then we will broaden our target geography from a state-level to a regional approach. Qualtrics will also send follow-up reminder emails to participants for up to 2 weeks to increase the response rate.

Describe any tests of procedures or methods to be undertaken. Tests are encouraged as effective means to refine collections, but if ten or more test respondents are involved OMB must give prior approval.

As a prior step in this work, Eastern Research Group, Inc. (ERG) conducted focus groups (OMB Reference number: 201504-0648-015ICR) in the four states where the survey will be deployed. The focus groups helped to refine the messages tested in the survey. The survey also builds on previous work conducted by Dr. Joseph Ripberger, Deputy Director for Research at the University of Oklahoma Center for Risk and Crisis Management. Dr. Ripberger has conducted many research projects related to risk communication and efficacy of messages, including work on studying public response to weather threat tags.

Provide the name and telephone number of individuals consulted on the statistical aspects of the design, and the name of the agency unit, contractor(s), grantee(s), or other person(s) who will actually collect and/or analyze the information for the agency.

The NWS has contracted with Eastern Research Group, Inc. (ERG) of Lexington, MA, to design the survey and implement the data collection. ERG’s project manager for this work is Dr. Gina Eosco (703-841-1705). ERG also had one of its survey experts, Dr. Lou Nadeau (781-674-7316), review this work and retained Dr. Joseph Ripberger, Deputy Director for Research at the University of Oklahoma Center for Risk and Crisis Management (405-325-5872), to assist in developing this survey instrument.

1 OMB Reference number: 201504-0648-015ICR

2 We refer to these as “random, non-probability” samples since the selection of respondents from the panel itself will be random; however, inclusion of respondents in the panel itself is not random since potential respondents must opt into the panel.

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| Author | Gina Eosco |

| File Modified | 0000-00-00 |

| File Created | 2021-01-25 |
