Expert Review for the 2017 Census of Governments - GUS

Attachment G-Cognitive Expert Review.docx

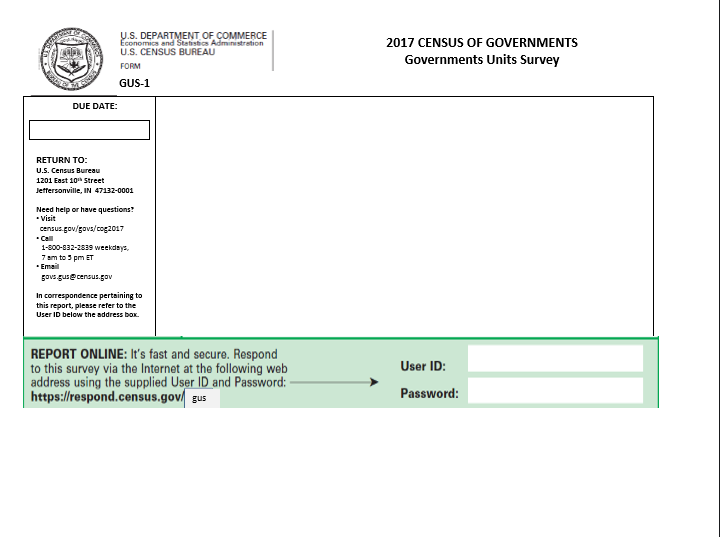

2016 Government Units Survey


OMB: 0607-0930

Expert Review for the 2017 Census of Governments - Government Units Survey

Prepared for:

Joy Pierson, Public Sector Frame & Classification Branch, ESMD

Franklin Winters, Public Sector Statistics R&M, ESMD

Prepared by:

Kristin Stettler, Data Collection Methodology and Research Branch

Jennifer Beck, Data Collection Methodology and Research Branch

Economic Statistical Methods Division

February 12, 2015

Table of Contents

Findings and Recommendations

Economic Directorate Guidelines on Questionnaire Design

Question Specific Recommendations

About the Data Collection Methodology and Research (DCMR) Branch

Executive Summary

Overall, this draft of the revised Government Units Survey is very brief, and should be much simpler for respondents to complete. We make several recommendations in the following sections for improvements.

Findings and Recommendations

This expert review is split into two parts: an assessment against the Economic Directorate Guidelines on Questionnaire Design, and Question Specific Recommendations.

Economic Directorate Guidelines on Questionnaire Design

For further information, see the Economic Directorate Guidelines on Questionnaire Design.

| Guideline | Is the guideline followed? | Recommendation |
| --- | --- | --- |
| Wording | | |
| | | Several items are not phrased as questions, specifically 2A and 2B. See Question Specific Recommendations. |
| | | Questions 7-9 in particular are complex questions, and should be broken down into simpler questions, with follow-on questions as appropriate. See Question Specific Recommendations. |
| | | Several questions use terms that may be unfamiliar to respondents. See Question Specific Recommendations. |
| Response Options and Answer Spaces | | |
| | | We assume consistent formatting will be applied when the final questionnaire design is available. |
| Visual Features and Layout | | |
| | | We assume consistent font variations will be applied when the final questionnaire design is available. |
| Navigation | | |
| | | Question 2 in particular. See Question Specific Recommendations. |
| | | Some of the skip instructions may be easily missed. Stronger visual features should be considered. See Question Specific Recommendations. |
| | | Consider adding a clause after the skip instruction if the item is on another page. For example, “Go to (10) on page xx.” See Question Specific Recommendations. |
| Instructions | | |
| | | We could not evaluate this as an electronic instrument is not yet available. |
| | | Some questions might benefit from additional instructions or examples. See Question Specific Recommendations. |
| Matrices | | |

Question Specific Recommendations

Overall, one major issue is whether there will be an initial web push for the survey or a follow-up push. The mode will be important for some of the design issues. In particular, there were issues with respondents not following skip instructions in 2012. A web instrument can have skip logic programmed into it, mitigating the problem of respondents not filling out necessary information or filling out information they did not need to provide.

Because the draft we reviewed was a rough mockup in PowerPoint, we do not have many comments on the formatting or layout of the paper instrument. We are assuming that the Economic Directorate Guidelines on Questionnaire Design will be followed. We would be pleased to offer additional comments once a more final version is available.

Recommendations: Ensure appropriate skip patterns are programmed into the web instrument. Also make sure the skip patterns on the paper form are as clear and easy to follow as possible. Consider adding a clause after the skip instruction if the item is on another page. For example, “Go to (10) on page xx.”
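To illustrate what programmed skip logic could look like, here is a minimal Python sketch. It is not taken from any Census Bureau instrument. The item IDs, question wording, trigger answers, and the names `Item`, `next_item`, and `drop_inapplicable` are all hypothetical, chosen only to show the idea that each item carries a rule deciding whether it is shown.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

Answers = Dict[str, str]


def always(answers: Answers) -> bool:
    """Default rule: the item is shown to every respondent."""
    return True


@dataclass
class Item:
    item_id: str
    text: str
    # Rule that decides, from the answers so far, whether to show the item.
    applies: Callable[[Answers], bool] = always


# Hypothetical items: wording and trigger answers are placeholders only.
ITEMS: List[Item] = [
    Item("1", "Is the address shown for this entity correct?"),
    Item("1A", "Enter the correct address.",
         applies=lambda a: a.get("1") == "no"),
    Item("2", "Next item on the form."),
]


def next_item(items: List[Item], answers: Answers) -> Optional[Item]:
    """Return the first unanswered item that applies, or None when done."""
    for item in items:
        if item.item_id not in answers and item.applies(answers):
            return item
    return None


def drop_inapplicable(items: List[Item], answers: Answers) -> Answers:
    """Discard answers to items that the skip rules say should not be asked."""
    rules = {item.item_id: item.applies for item in items}
    return {k: v for k, v in answers.items()
            if k in rules and rules[k](answers)}
```

Under these assumptions, a respondent who answers "yes" to item 1 is taken straight to item 2 and never sees the address fields, while a respondent who answers "no" is shown item 1A next. Placing each item on its own screen, as suggested for Question 1, amounts to calling `next_item` after every answer.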

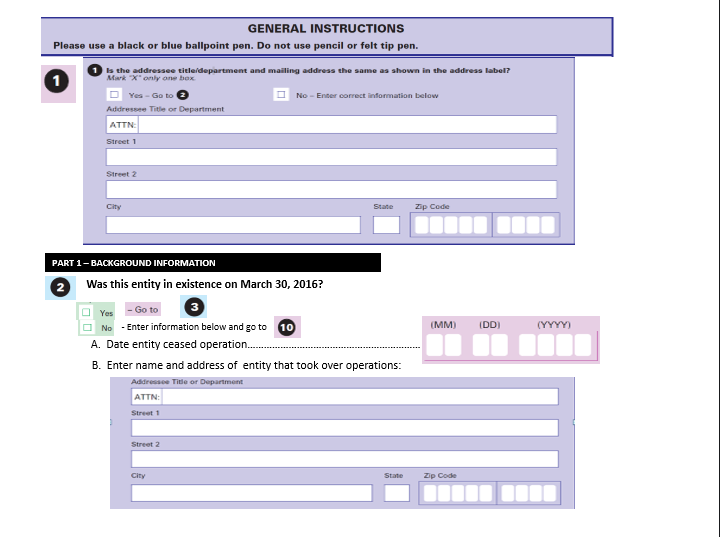

Question 1

This question had problems in 2012. Respondents were filling out the address information even when they had answered “yes”. The skip instruction will help, but with a web instrument, these questions could be put on separate screens so they are only shown to respondents when applicable.

Recommendation: As above, ensure appropriate skip patterns are programmed into the web instrument. Also make sure the skip patterns on the paper form are as clear and easy to follow as possible.

Question 2

This question has a lot going on. The skip instruction for “no” has the potential to get lost, as it occurs before the respondent answers the follow-up. The layout also looks busy.

2A is a sentence fragment and should be a question.

2B is a sentence fragment and should be a question. Also, 2B does not offer respondents a space to provide the name of the new entity.

Recommendations: We recommend using a different numbering scheme and making use of the indent. We also recommend having the questions and response fields aligned vertically for BOTH questions, rather than horizontally for one. 2A should read “On what date did the entity cease operations?” 2B should read “What is the name and address of the entity that took over operations?” Also, 2B should have another line before the ATTN: line for the name of the new agency (perhaps “Name of new entity”?).

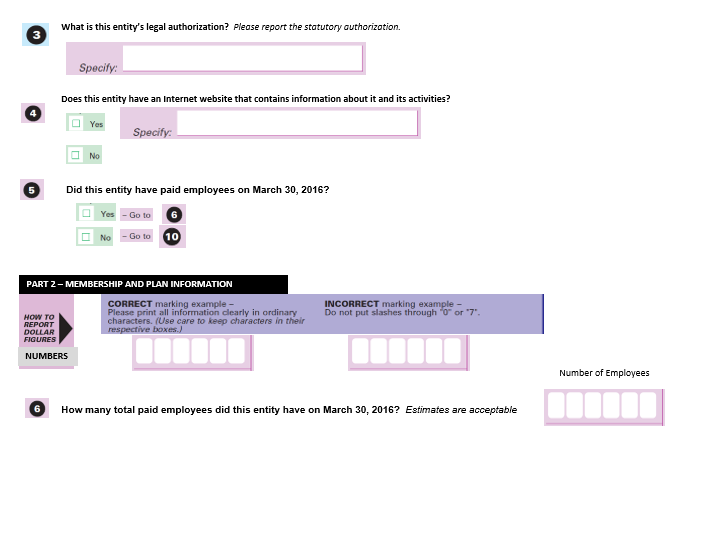

Question 3

The response box does not need “specify”. Are respondents likely to know what this question is asking? Are there examples to provide?

Recommendations: Delete “specify” from the response box. Provide examples if possible.

Question 4

No comments.

Question 5

Do respondents understand what we mean by “paid employees”? Would any examples be helpful?

Recommendation: Define “paid employees” and provide examples, if possible.

Question 6

The answer boxes do not have commas marking the thousands place, which would help respondents know how to enter their numbers.

Recommendations: Add commas to show the thousands place. Also add the thousands place to the two example boxes under Part 2.

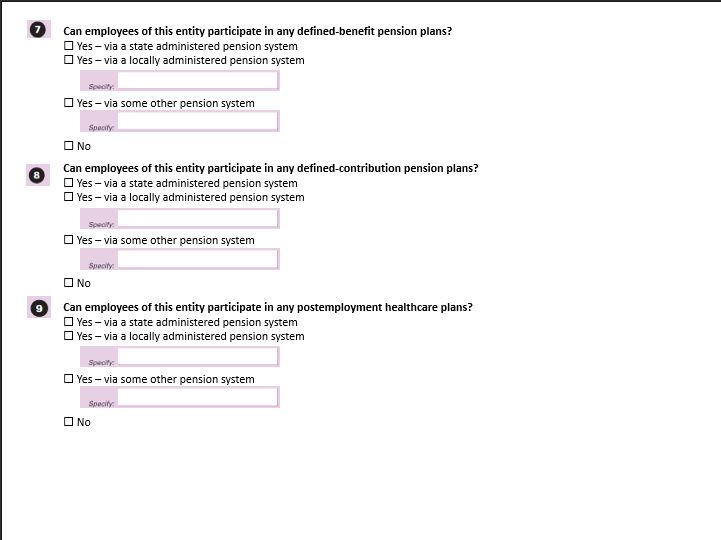

Questions 7-9

All three of these questions are complex. The respondents have to consider if they have the retirement plan at the same time that they decide if it is state or local and its name.

Also, are there examples or definitions for these types of plans? When these questions were tested on the initial GUS, respondents struggled with them. Good examples might be helpful.

Recommendations: For all three questions, make the question a simple “yes/no” question, with follow-ups that elicit the type of plan (state or local) and the name. Define each plan type and provide examples, if possible.

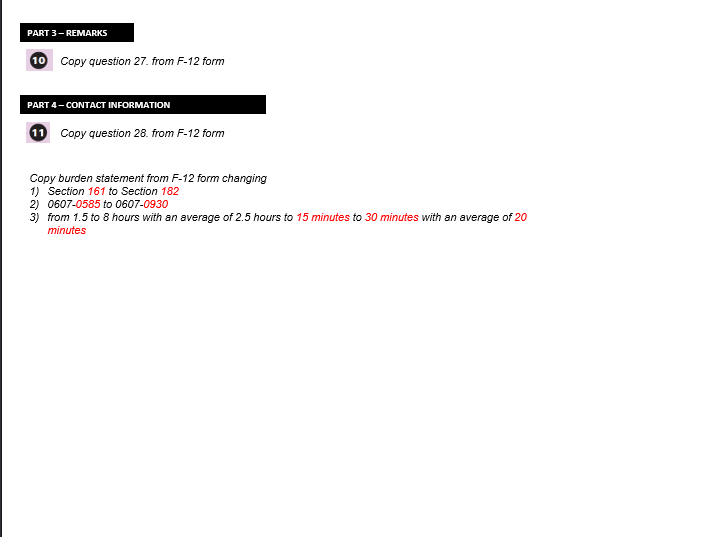

Question 10

No comments.

Question 11

There is an unnecessary word in the revised burden statement.

Recommendation: Delete “minutes” after “15” and before “to” in 3) under Copy burden statement.

About the Data Collection Methodology and Research (DCMR) Branch

The Data Collection Methodology and Research (DCMR) Branch in the Economic Statistical Methods Division assists economic survey program areas and other governmental agencies with research associated with the behavioral aspects of survey response and data collection. The mission of DCMR is to improve data quality in surveys while reducing survey nonresponse and respondent burden. This mission is achieved by:

Conducting expert reviews, cognitive pretesting, site visits and usability testing, along with post-collection evaluation methods, to assess the effectiveness and efficiency of the data collection instruments and associated materials;

Conducting early stage scoping interviews to assist with the development of survey content (concepts, specifications, question wording and instructions, etc.) by getting early feedback on it from respondents;

Assisting program areas with the development and use of nonresponse reduction methods and contact strategies;

And conducting empirical research to help better understand behavioral aspects of survey response, with the aim of identifying areas for further improvement as well as evaluating the effectiveness of qualitative research.

For more information on how DCMR can assist your economic survey program area or agency, please visit the DCMR intranet site or contact the branch chief, Amy Anderson Riemer.

Appendix A

Here is a copy of the PowerPoint draft of the instrument that we reviewed.

