OMB Memo

Generic Clearance for Census Bureau Field Tests and Evaluations

OMB: 0607-0971

2020 Census User Experience Survey

Submitted Under Generic Clearance for Census Bureau Field Tests and Evaluations

Request: The Census Bureau plans to conduct additional research under the generic clearance for Field Tests (OMB #0607-0971). We will be administering an online voluntary survey designed to measure respondent satisfaction and user experience with the online 2020 Census questionnaire.

Purpose: The purpose of this survey is to gather user satisfaction data on the 2020 Census online questionnaire from respondents who used it to complete their census. There were two ways to answer the online questionnaire: with a Census ID, which was linked to a specific address, or without a Census ID (which we refer to internally as the non-ID path). The non-ID questionnaire path is nearly identical to the ID path, except that the non-ID path requests that the respondent enter his or her address. The non-ID online questionnaire is similar to the “Be Counted” forms in past censuses, where the public could pick up a paper form at a local library (for example), fill in their address and their information, and mail it back. The non-ID response rate for 2020 is over 10 times the 2010 rate. For example, there were about 1.28M non-ID forms in 2010 (around 1% of self-response), and we have currently received around 13.9M non-ID responses (around 12.6% of self-response). We have also observed more break-offs with the non-ID path than with the ID path. The overall break-off rate is currently 6.8%, but for non-ID sessions the break-off rate is 17.2%. Most of these break-offs (63%) occur before the respondents reach the demographic questions.

Two goals of the user satisfaction survey are to determine (1) why respondents break off, and (2) why they used the non-ID path. In addition to these goals, we aim to gather an overall satisfaction measure for the 2020 Census online form, both for the ID path and for the non-ID path, and to gather additional information on which specific screens or reasons caused user confusion or dissatisfaction.

The information collected in this survey will be used within the Census Bureau to help make decisions to improve the questions, the UX design, and processes for the inter-censual years. This feedback will also be incorporated into the efforts for the 2030 Census. The results may be reported in Census Bureau working papers or in peer-reviewed journal articles. They may be referenced in the 2020 assessments, including the 2020 Non-ID Processing Operational Assessment and the 2020 ID Processing Operational Assessment.

Population of Interest: Residents of the United States (excluding Puerto Rico) who completed the 2020 Census online form and who provided a cell phone number in the 2020 Census as their contact number. The user experience survey will only be available in English.

While our population of interest does not include 2020 Census online responses that provided a landline or no phone number, we do not expect any differences in user experience between those populations, based on our experience from the usability testing conducted on the online census form. During usability testing, we never witnessed users with the two phone types having different problems. However, to be transparent about any limitations, the final report will include tallies of break-offs and of ID and non-ID cases, comparing cell phone responses with landline (or missing) phone responses.

We are confident that the number provided in the 2020 Census online response is the best number to reach the respondent. In the debriefing during usability testing, we always asked respondents about the phone number provided, and respondents always claimed that it was the best number for them. Based on the success of the Household Pulse survey in using text messages to generate response, we feel that text messages are the best way to contact our population, especially since this will not be a cold-contact text. On the census form where telephone number is collected, the form states “We will only contact you if needed for official Census Bureau business.” This user experience survey is official Census Bureau business.

Timeline: We intend to conduct this survey in four 11-day waves starting in August and running through November with sample drawn from census respondents who answered via the ID or the non-ID path. The frame for the first wave of sample will include cases who reported between March and July; the frame for the second wave will include cases who reported in August; the third wave will include cases from September; and the final wave will include cases from October. The first wave will occur Monday, August 10 through Thursday, August 20, 2020.

Sample: We will select a stratified, systematic sample of 150,000 stateside cell phone numbers that responded to the 2020 Census via the ID and the non-ID online paths. Non-ID and break-off cases will be oversampled. We will also oversample later responders in order to reduce recall bias. The sample will be drawn to represent all cases, from March through October. The sample will be weighted separately for the two populations (ID and non-ID). We assume a response rate of 10% but have budgeted in case the response is higher.
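As an illustration of the sample design described above, the following is a minimal sketch of stratified systematic selection with oversampling. The stratum names, frame sizes, and allocations are hypothetical, and the base weight shown (frame size divided by sample size within each stratum) is a common convention assumed here, not the weighting method specified in this memo.

```python
import random


def systematic_sample(frame, n, rng):
    """Select n cases from an ordered frame using a random start and a fixed interval."""
    if n <= 0 or not frame:
        return []
    n = min(n, len(frame))
    interval = len(frame) / n          # fractional skip interval
    start = rng.random() * interval    # random start within the first interval
    last = len(frame) - 1
    return [frame[min(int(start + i * interval), last)] for i in range(n)]


def stratified_systematic_sample(frame_by_stratum, n_by_stratum, seed=0):
    """Draw an independent systematic sample within each stratum."""
    rng = random.Random(seed)
    return {
        stratum: systematic_sample(cases, n_by_stratum.get(stratum, 0), rng)
        for stratum, cases in frame_by_stratum.items()
    }


def base_weights(frame_by_stratum, sample_by_stratum):
    """Assumed base weight: frame size over sample size, per stratum."""
    return {
        stratum: len(frame_by_stratum[stratum]) / len(selected)
        for stratum, selected in sample_by_stratum.items()
        if selected
    }


if __name__ == "__main__":
    # Hypothetical frames of case identifiers; non-ID cases are oversampled.
    frames = {"ID": list(range(1000)), "non-ID": list(range(1000, 1200))}
    allocation = {"ID": 50, "non-ID": 40}
    sample = stratified_systematic_sample(frames, allocation, seed=1)
    print(base_weights(frames, sample))
```

Because the non-ID stratum is sampled at a higher rate in this sketch, its cases carry a smaller base weight than ID cases, which is how oversampling is typically offset at the estimation stage.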

The cell phone numbers are obtained from the number provided in the 2020 Census online questionnaire. The phone numbers will be matched to a separate database to only select from cell phone numbers.

Recruitment: Sampled 2020 Census respondents will receive up to 3 text messages to their cell phone. Once the user experience survey is completed, they will not receive subsequent text messages. If they partially complete the survey, they will receive subsequent texts. If they opt out, they will not receive any more messages.

The messages for wave 1 are:

Share your feedback about the 2020 Census online form with the U.S. Census Bureau here: URL-LINK Reply STOP to cancel

REMINDER: Census Bureau needs your help to understand how the 2020 Census online form worked for you. URL-LINK Reply STOP to cancel

Last chance: Share your experience with the 2020 Census online form. URL-LINK Reply STOP to cancel

In wave 1, we will experiment with the time of day the texts are sent. We will split the sample in half to test two different times of day for texting. We will attempt to use the time zone associated with the 2020 Census address connected to the cell phone to send the texts at the correct local time.

Condition 1

| 1st text | 2nd text | 3rd text | End of survey (no text) |
| --- | --- | --- | --- |
| Monday | Weds | Tues | Thurs |
| Noon | Noon | Noon | midnight |

Condition 2

| 1st text | 2nd text | 3rd text | End of survey (no text) |
| --- | --- | --- | --- |
| Monday | Weds | Tues | Thurs |
| 6 pm | 6 pm | 6 pm | midnight |
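The wave 1 experiment can be sketched in code as follows. This is an illustration only: the day offsets (0, 2, 8, and 10 days from the wave start) are inferred from the Monday, Weds, Tues, Thurs sequence and the 11-day field period, the random assignment of cases to conditions is an assumption about how the sample would be split in half, and all function names are hypothetical.

```python
import random
from datetime import date, timedelta

# Assumed day offsets from the wave start (a Monday): Monday, Wednesday,
# the following Tuesday, and the following Thursday.
SEND_OFFSETS = {"1st text": 0, "2nd text": 2, "3rd text": 8, "End of survey": 10}

# Local send time for the three texts under each condition.
SEND_TIMES = {"Condition 1": "Noon", "Condition 2": "6 pm"}


def wave_schedule(start):
    """Return the calendar date of each contact for a wave starting on `start`."""
    return {label: start + timedelta(days=offset) for label, offset in SEND_OFFSETS.items()}


def split_conditions(case_ids, seed=0):
    """Randomly split the sampled cases into two halves, one per condition."""
    rng = random.Random(seed)
    shuffled = list(case_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"Condition 1": shuffled[:half], "Condition 2": shuffled[half:]}


if __name__ == "__main__":
    for label, day in wave_schedule(date(2020, 8, 10)).items():
        print(label, day.isoformat())
```

With a wave 1 start of August 10, 2020, these assumed offsets end the field period on August 20, 2020, which matches the wave 1 dates given in the Timeline.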

For waves 2, 3 and 4, we might change the days of the week to test different combinations, learning from each wave of sample to optimize response. We will keep the 11-day field period constant. We do not plan to change the text message content, but will revisit that decision if the response rate is lower than 4%.

Survey Administration: The questionnaire will be administered online only using the survey platform Qualtrics. Qualtrics has a FedRAMP Moderate approval and a Census Authority to Operate to collect T13 data. Respondents will receive a text message with a link to the survey which will then take them to the Qualtrics instrument.

Questionnaire: The full questionnaire is attached (see Attachment 1: Census 2020 User Experience Questionnaire) with branching identified in the comments. This questionnaire underwent two rounds of “hallway” testing with a total of 10 internal Census Bureau employees and five participants who were related either by friendship or family to the researchers. Changes were made after each round of testing to the flow of the questions, the response choices, or the questions themselves. The researchers used their own experience with usability testing of the 2020 Census online questionnaire, and their experience observing calls to the 2020 Census call center to come up with the response choices to many of the questions.

Initially the questionnaire gathers an overall satisfaction measure. If the respondent was dissatisfied with their experience, the questionnaire attempts to gather why the experience was not satisfactory. Then, for non-ID cases, the questionnaire gathers specific information about the screens where the address is collected and where the address is reformatted, as we suspect it is at these screens that the break-offs are occurring. We also gather information about the login screen because in usability testing we observed some participants choosing the non-ID path by mistake or having difficulty finding their Census ID. We then gather information about how many attempts a respondent made to answer the census (again based on call center observations, we found many people trying to help others or identify a vacant property and having difficulty with that process); whether they answered for everyone in their household (which could explain cases where the MAFID has multiple census questionnaires associated with it); and whether they were successful. Finally, we gather any other comments on the census questions or experience. We found during hallway testing that even respondents who were satisfied had comments on particular aspects of their experience.

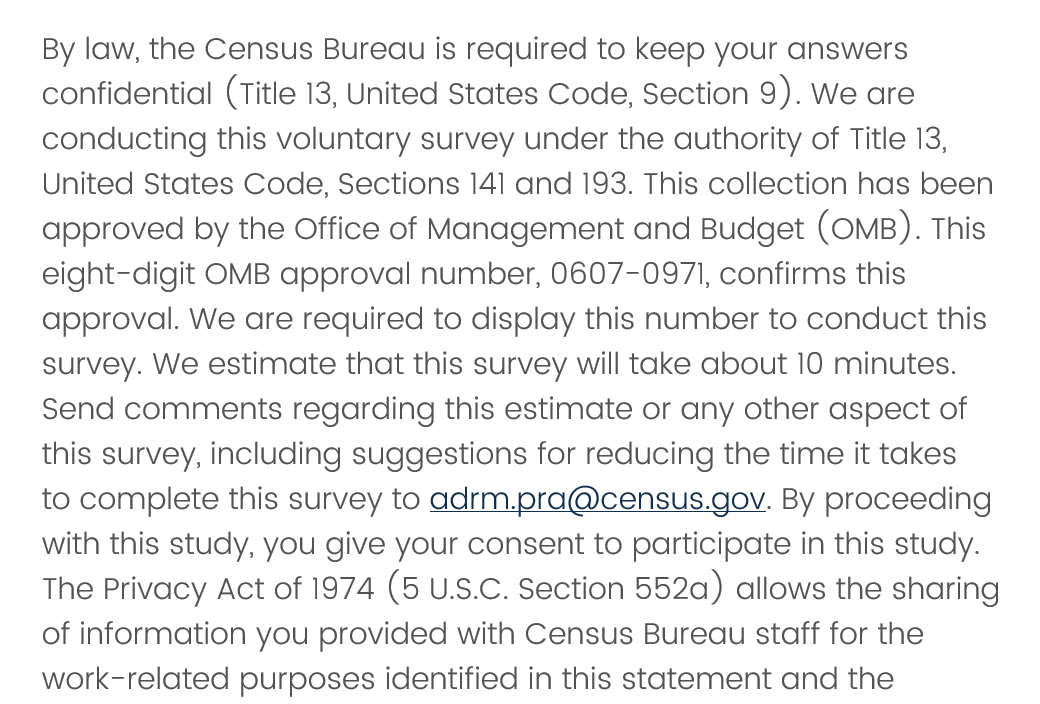

Informed Consent: In the survey introductory text, we inform participants that their response is voluntary and that the information they provide is confidential (protected under Title 13) and will be accessed only by employees involved in the research project. The screen design is shown below.

[Image: survey introductory screen]

Incentive: Participants will receive no incentive.

Length of Interview: We plan to contact a sample of 150,000 cell phone numbers with up to three text messages each. We estimate that each text would take no more than 10 seconds to read, for a maximum burden of 1,250 hours. We estimate that the response rate to the survey could be anywhere between 10% and 33%, with a maximum of 50,000 completes. We estimate that the survey will take an average of 10 minutes for each complete response. The maximum burden for the survey is 8,333 hours. The total estimated burden of this research is 9,583 hours.

Table 1. Total Estimated Burden

| Category of Respondent | Max No. of Respondents | Max No. of Occurrences | Time for Each Occurrence | Burden |
| --- | --- | --- | --- | --- |
| Reading text message invitations | 150,000 | 3 | 10 seconds each | 1,250 hours |
| Survey | 50,000 | 1 | 10 minutes | 8,333 hours |
| Maximum Total Estimate | | | | 9,583 hours |
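The burden figures in Table 1 can be reproduced with the short calculation below. The inputs come from the memo; the constant and function names are illustrative, and rounding each component to whole hours before summing is an assumption about how the total was derived.

```python
# Inputs taken from the memo's burden estimate.
SAMPLE_SIZE = 150_000        # cell phone numbers contacted
MAX_TEXTS = 3                # maximum text messages per number
SECONDS_PER_TEXT = 10        # maximum reading time per text
MAX_COMPLETES = 50_000       # maximum completed surveys
MINUTES_PER_COMPLETE = 10    # average time per completed survey


def text_burden_hours():
    """Maximum hours spent reading text message invitations."""
    return SAMPLE_SIZE * MAX_TEXTS * SECONDS_PER_TEXT / 3600


def survey_burden_hours():
    """Maximum hours spent completing the survey."""
    return MAX_COMPLETES * MINUTES_PER_COMPLETE / 60


def total_burden_hours():
    """Total burden, rounding each component to whole hours before summing."""
    return round(text_burden_hours()) + round(survey_burden_hours())


if __name__ == "__main__":
    print(round(text_burden_hours()), round(survey_burden_hours()), total_burden_hours())
```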

The following documents are included as attachments:

Attachment 1a: Census 2020 User Experience Questionnaire – with no images (this document is an easy-to-read version of the survey flow)

Attachment 1b: Census 2020 User Experience Questionnaire – with images (this document is more difficult to read, but it shows the images that will be on the survey screens)

The contact person for questions regarding data collection and the design of this research is listed below:

Center for Behavioral Science Methods

U.S. Census Bureau

Room 5K419

Washington, D.C. 20233

(301) 763-1724

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| Author | Erica L Olmsted Hawala (CENSUS/CSM FED) |

| File Modified | 0000-00-00 |

| File Created | 2021-01-13 |
