Research Report

Generic Clearance for Questionnaire Pretesting Research

OMB: 0607-0725

Findings and Recommendations from Usability Testing for the Annual Integrated Economic Survey

Prepared for:

Lisa Donaldson, Economy-Wide Statistics Division (EWD)

Melissa A. Cidade, Ph.D., EWD

Prepared by:

Rebecca Keegan, Economic Statistical Methods Division (ESMD)

Hillary Steinberg, Ph.D., ESMD

Demetria Hanna, ESMD

Rachel Sloan, ESMD

Office of Economic Planning & Innovation

Economic Programs Directorate

U.S. Census Bureau

12/01/2023

Table of Contents

USABILITY METRICS AND DATA SCORING

Online Spreadsheet Features

Error Checking Functionality

Related Cognitive Testing Results

About the Data Collection Methodology and Research (DCMR) Branch

Tables

Table 1. Companies by sector. Note, MUs were classified into multiple sectors.

Table 2. Companies by Number of Establishments.

Executive Summary

Researchers in the Data Collection Methodology and Research Branch (DCMRB) of the Economic Statistical Methods Division (ESMD) conducted usability testing with 28 participants to understand whether respondents can navigate and understand the proposed structure of the new Annual Integrated Economic Survey (AIES) instrument, and how that structure would be expected to affect the procedures respondents use to gather and enter their companies’ data.

Respondents completed up to nine tasks designed to ensure that they interacted with the fundamental aspects of the instrument required for survey response. The participants represented a range of company sizes, from single units (SUs) to large companies with hundreds of establishments, from across the United States. These companies were associated with multiple industries. See participant demographics below.

High priority usability findings included:

Users were not aware they needed to take action to verify their establishment information.

Users could not scroll and read questions at the same time, nor could they see which row corresponded to which establishment when scrolling in the online spreadsheet.

Users were not expecting the online spreadsheet to automatically round their entries to the 1000’s.

Users did not understand the meaning of ‘NAPCS’ and manufacturers did not know they needed to complete this tab.

Users did not understand the meaning or purpose of the KAU rows.

Users were very concerned with the inability to go back to previous steps once they submitted them.

Users wanted to be able to download answers to the entire survey before submission.

In addition to these findings, we present and discuss medium- and low-priority usability issues in the report. Recommendations to fix the identified usability problems are also included.

Research Objectives

Usability interviews were conducted to assess the functionality of the prototype instrument by examining whether respondents can successfully complete tasks designed to mimic those they would need to complete when filling out the AIES. Researchers assessed whether the instrument is user friendly by examining respondents’ ability to navigate through the instrument efficiently and to successfully provide data to the AIES.

Objectives for the evaluation of the online AIES instrument included the following:

Evaluate the instrument’s performance in terms of efficiency, accuracy, and user satisfaction.

Identify areas of the instrument that are problematic for users.

Identify instructions/features that are difficult for users to understand.

Evaluate the ability of respondents to complete the basic data collection steps.

Understand how respondents navigate and use the spreadsheet.

Identify whether respondents demonstrate an understanding of establishment versus industry reporting.

Evaluate if respondents can access instrument support documents.

Evaluate how respondents resolve errors.

Evaluate if respondents understand how to submit their data.

Provide recommendations for improvements to the design of the instrument that will enhance its usability.

Research Methodology

Usability testing is used to aid development of online web instruments. In this case the objectives were to discover and eliminate barriers that may keep respondents from navigating and completing an online survey accurately and efficiently with minimal burden.

Usability tests are similar to cognitive interviews – that is, one-on-one interviews that elicit information about the respondent’s thought process. Respondents are given a task, such as “Complete the questionnaire,” or smaller subtasks, such as “Send your data to the Census Bureau.” The think aloud, probing, and paraphrasing techniques are all used as respondents complete their assigned tasks. Early in the design phase, usability testing with respondents can be done using low fidelity questionnaire prototypes (i.e., mocked-up paper screens). As the design progresses, versions of the updated web instrument can be tested to choose or evaluate basic navigation features, error correction strategies, etc.

Using usability tests as a method of evaluation, researchers draw conclusions about the instrument:

Layout & Display

Navigation

Functionality

Geisen and Romano Bergstrom define three measures of evaluation – effectiveness, efficiency, and satisfaction. Effectiveness can be measured in terms of whether or not users are successfully able to complete specified tasks. Efficiency is the number of steps it takes a respondent to complete a task. Satisfaction is often a self-rated measure or qualitative comment elicited during the testing that demonstrates the respondents’ perceived ease of use and level of frustration with the product of interest.

For the purposes of this research, the usability tasks were focused on verifying and updating location data, reporting company level data, and using the spreadsheet. See tasks for more information. The interviews followed a semi-structured interview protocol, found in Appendix B. More information about the methodology used for this project is available in Appendix A.

PARTICIPANTS

Twenty-eight participants took part in the usability evaluation of the Annual Integrated Economic Survey instrument. This included a variety of companies representing single unit firms (SUs), multiunit firms (MUs), manufacturing, and industry. Participants had characteristics of potential respondents to the survey.

Table 1. Companies by sector. Note, MUs were classified into multiple sectors.

| Sector Code | Number of Companies* | Description |
| --- | --- | --- |
| 11, 22 | 3 | Agriculture, Forestry, Fishing and Hunting; Utilities |
| 31-33 | 15 | Manufacturing |
| 42 | 9 | Wholesale Trade |
| 44-45 | 3 | Retail Trade |
| 48-49 | 4 | Transportation and Warehousing |
| 51, 52, 53 | 4 | Information; Finance and Insurance; Real Estate and Rental and Leasing |
| 54 | 3 | Professional, Scientific, and Technical Services |
| 55 | 12 | Management of Companies and Enterprises |
| 56 | 5 | Administrative and Support and Waste Management and Remediation Services |
| 62, 72 | 4 | Health Care and Social Assistance; Accommodation and Food Services |
| 81 | 3 | Other Services (except Public Administration) |

*Companies were associated with multiple NAICS.

Table 2. Companies by Number of Establishments.

| Establishments | Number of Companies |
| --- | --- |
| 1 | 8 |
| 2-20 | 9 |
| 21-60 | 6 |
| 61+ | 5 |

Twenty of the participants were recruited from multi-unit establishments (according to the LMNS code, MU types were classified as S=0, M=3, L=12, N=5), whereas 8 participants were recruited from single-unit business establishments. Participants held positions such as:

President, Controller, CFO, Accountant, Director of Operations, Tax Manager, etc.

The industries these participants represented varied and were reflective of the types of establishments reporting to the survey. These included:

Manufacturing, Management of Companies and Enterprises, Wholesale Trade, Administrative and Support and Waste Management and Remediation Services, and Other Services.

Researchers recruited participants from a file containing a sample of companies that were eligible for the third and final phase of the pilot but would not be asked to take part in it, and that had not participated in either of the first two pilot tests. Preliminary probing during debriefing interviews for the first two pilots explored the feasibility of in-person visits with participants. Based on that probing, we determined that in-person testing would be most appropriate for two reasons: first, researchers would be able to simulate the AIES on Census computers, as a test site was only available internally; and second, researchers would be better able to discern how participants interacted with the instrument. We therefore identified which cities were most represented in the file.

Ultimately, we tested in person in Philadelphia, Chicago, Raleigh, Charlotte, Los Angeles, and New York. We also contacted businesses in the Washington, DC area but, surprisingly, none consented to interviews. We suspect there may be some fatigue in interacting with government entities in that location, whereas businesses in other areas found speaking to us novel.

We identified businesses within a reasonable distance (within an hour’s travel) of a central hotel in each city. We first cold-called contacts using information from the Census Bureau’s Business Register (BR), then followed up with emails. Although we had 29 appointments, we completed 28 usability interviews: one session was marred by an inability to access the instrument, so the researcher conducted general cognitive interviewing instead.

STUDY DESIGN

The objectives of the usability test were to (1) evaluate the instrument’s performance, (2) identify areas of the instrument that are problematic, (3) identify instructions and features that are difficult to understand, and (4) provide recommendations for improvements to the design of the instrument.

Findings derived from the evaluation can serve as a baseline for future iterations of the instrument as a way to benchmark the instrument’s current usability and identify areas where improvements could be made.

During the usability tests, participants interacted with the internal test environment for the AIES production instrument at their establishment using a Census-provided laptop. All participants received the same instructions (see Appendix B for a copy of the testing protocol), and the following measures were collected and analyzed:

Effectiveness - Percentage of successful completions for each task

Verbal and non-verbal behavior

For additional details on these measures, see usability metrics.

TASKS

Tasks were designed to be realistic and representative of what respondents would have to complete while reporting to the AIES. All participants completed these tasks. They fell into the following categories:

Verify locations

Participants were shown Step One, which was a spreadsheet with information on all of the locations of the proxy company. They were asked to verify each location’s information as accurate and move to the next page. They could do so by entering a “1” for operational status for each location. Failure to do so before moving forward would result in an error.

Provide company level data

Participants were asked to fill out the information for each question on each page of Step Two. They were provided numbers to fill in (including some 0’s and numbers that would be rounded). They could access these answers via a printed packet or a Word document on the laptop.

Complete the spreadsheet

Participants were first asked whether they would naturally have picked the upload/download spreadsheet or the online spreadsheet in Step Three. They were then prompted to choose one or the other based on our testing needs. Researchers first asked participants to explore the spreadsheet as they normally would. From there, we asked them to find the question “What were the total capital expenditures for new machinery and equipment in 2022?” in the Capital Expenditures section of the Content tab and to enter $100,000 for each location. The online spreadsheet auto-rounded responses, adding three zeros to each answer.

Submit data

Participants were asked to go through the steps of submitting their survey. They would enter in dummy data, approximate how long this survey would take their company, and tell us what they expected from a submission page.

User tasks differed for multi-unit and single-unit participants, as different reporting options are presented on the site for each user group. For example, single-unit establishments were not asked to use the ‘add a location’ feature. Not every participant completed every task in this section, though most completed at least two. Tasks that not all participants engaged with included:

Add a location (MU only)

Participants from MU companies were asked to add a location in Step One. They were expected to click the “add a location” button. From there, they could make up data to input, including the name. We provided a “major activity code.” Researchers indicated to participants to designate the location as operational.

Locate and understand KAU row(s) (MU only)

Researchers brought the KAU row to the attention of the participant if they did not naturally examine it. Researchers asked the participant what they thought this row represented in the Content tab of the Step Three spreadsheet. If they did not know, we asked them how they would find this information. From there, we asked them to navigate to the question “What were the total operating expenses in 2022?” in the Total Operating Expenses section of the Content tab. We asked them to enter a value of $100,000 in any available KAU row.

Provide data to NAPCS (Manufacturing only)

We first asked participants to locate the NAPCS tab in the spreadsheet in Step Three and tell us what they thought it was. From there, we asked them to choose any product listed in the tab and value it at $100,000.

Error Check

We first asked participants at the end of completing the spreadsheet in Step Three if there were errors. From there, participants were expected to navigate to the error check tab, run the error check, and click on the link to identify errors. We asked participants how they would like to be notified of errors.

Upload Spreadsheet

We asked those participants who completed the upload/download spreadsheet for Step Three to upload the spreadsheet. They were expected to browse or drag and drop their spreadsheet and click the upload button.

A complete listing of tasks used in the evaluation for multi-unit and single-unit establishments can be found in Appendix B.

PROCEDURES

The testing site for the AIES used what is referred to as “dummy” or proxy data, that is, data that approximated a business’s attributes. We matched each company that had signed up for a usability session to a proxy company based on the number of locations, industry, and manufacturing status. Participants completed the testing on Census Bureau laptops, while researchers took notes on a Census Bureau iPad using a Qualtrics protocol.

Each of the usability sessions was conducted at the participant’s business establishment (or, in some cases, a neutral space such as a public library). Sessions lasted approximately 60 minutes. The participant signed a consent form that referenced the OMB control number for this study, the confidentiality of the session, the voluntary nature of the study, and what would be recorded during the session. Once the participant gave consent, audio/video recording was started.

The test administrator explained the “think-aloud” procedure in which participants are asked to verbalize their thoughts and behaviors as they interact with the site to provide a deeper understanding of their cognitive processes. The participant was then asked to complete tasks using the site and probes were administered as needed by the researcher (e.g., keep talking, um-hum?). Participants were given a written copy of each task.

Once the participant finished the tasks, the test administrator asked a set of debriefing questions. Finally, the session concluded.

USABILITY METRICS AND DATA SCORING

Effectiveness was the primary metric used to assess the overall performance of the AIES instrument.

Effectiveness refers to task completion and the accuracy with which participants completed each task assigned to them.

A task was considered successfully completed if the participant was able to navigate through the instrument to complete the task (via the optimal paths) without assistance from the test administrator. If the participant was able to complete the majority of the task with no assistance from the test administrator, the task was coded as a partial success. Otherwise, if the participant failed to complete the task or required assistance, it was coded as a failure.
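The coding rule described above can be sketched as a small function. This is only an illustration of the rule as stated in this section: the function name, the step-count inputs, and the reading of "majority of the task" as more than half of a task's steps are assumptions, not part of the study protocol.

```python
from enum import Enum


class Outcome(Enum):
    SUCCESS = "success"
    PARTIAL = "partial success"
    FAILURE = "failure"


def code_task_outcome(steps_completed: int, steps_total: int, assisted: bool) -> Outcome:
    """Code one participant's attempt at one task.

    Hypothetical inputs: the report does not say how "majority of the
    task" was measured, so counting steps is an assumption made here.
    """
    if steps_total <= 0:
        raise ValueError("steps_total must be positive")
    if assisted:
        # Any assistance from the test administrator is coded as a failure.
        return Outcome.FAILURE
    if steps_completed == steps_total:
        # Whole task completed unaided.
        return Outcome.SUCCESS
    if steps_completed * 2 > steps_total:
        # More than half of the task completed unaided.
        return Outcome.PARTIAL
    return Outcome.FAILURE
```

For example, under these assumptions a participant who completes three of five steps unaided would be coded as a partial success, while one who completes all five steps only after help from the test administrator would be coded as a failure.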

Researchers also paid close attention to verbal and non-verbal behavior, prompting where appropriate to better understand and capture participant reactions to the tasks in real time.

LIMITATIONS

As in any usability study, findings may be limited by the small, non-statistically representative sample used. Since this study was voluntary, those who participated may be more motivated to complete surveys than those who chose not to participate, and so are not necessarily representative of the population.

Additionally, some users had difficulty using the Census laptops instead of their own devices. Using unfamiliar devices caused some users more difficulty going through the instrument than they might have had on their own devices. Furthermore, the small screen of the laptop condensed the online spreadsheet view, potentially adding to some of the navigation difficulties discussed below. The use of proxy establishment data, as opposed to real data, may have made users less likely to notice issues than if the data had been real.

PRIORITIZATION OF FINDINGS

To identify areas of the AIES production instrument that are problematic, user behavior and verbalizations were observed and recorded during usability testing sessions. Usability team members analyzed behaviors across participants to identify usability findings. A summary of the findings and their prioritization can be found in Table 3 below.

Findings were prioritized based on the following criteria:

High priority: These issues can prevent users from accomplishing their goals. The user-system interaction is interrupted, and no work can continue. These also consist of issues that occurred most frequently during testing.

Medium priority: These issues are likely to increase the amount of time it takes users to accomplish their goals. They slow down and frustrate the user, but do not necessarily halt the interaction.

Low priority: These issues negatively influence satisfaction with the application, but do not directly affect performance.

Table 3. Summary of Findings and Prioritization.

| Findings | Prioritization |
| --- | --- |
|  | High Priority |
|  | Medium Priority |
|  | Low Priority |

RESULTS

Results are organized chronologically, as they would be encountered in the survey instrument, within each prioritization category.

EFFECTIVENESS (ACCURACY)

Table 4. Participant success rates for tasks (n=28)

| User tasks | Full Success Rate (%) | Success with Prompting Rate (%) | Failure Rate (%) |
| --- | --- | --- | --- |
| Task 1: Verify Location | 0 | 14 | 86 |
| Task 2: Add location | 70 | 10 | 20 |
| Task 3: Step Two | 75 | 21 | 4 |
| Task 4: Answer by establishment | 26 | 48 | 26 |
| Task 5: NAPCS | 46 | 27 | 27 |
| Task 6.1: KAU | 11 | 0 | 89 |
| Task 6.2: Question Location | 42 | 50 | 8 |
| Task 7: Submit | 96 | 4 | 0 |

HIGH PRIORITY FINDINGS

Users were not aware they needed to take action to verify their establishment information.

Step One of the survey instrument required users to verify their establishment information before moving forward. The first task for usability participants was to complete this verify step. They were told to assume each prelisted establishment was operational. When asked to verify locations, users could typically only see the information for each location, and not the questions, in the online spreadsheet due to the layout of the spreadsheet table.

Users did not naturally scroll to the right, or necessarily notice the horizontal scroll bar. As such, every user assumed they could verify the locations by reading the information and clicking save and continue to go to the next step. Every single participant received the error indicating that they must input a value to demonstrate each establishment’s operational status. Only then did they scroll right and enter the values.

“I’m going to type all this stuff in? Are we waiting for something here? It’s not clear which question to fill out to verify. I’m still confused.”

“You just want me to verify like I was submitting it? I have to scroll through 900 locations. I’ll hit save and continue… [error pops up] I get overwhelmed looking at the errors.”

“I scrolled down to see locations. Do I do anything here? I would look at the name, store plant number. This survey looks like the one real big, like the EC. I usually make sure these are right. [Presses save and continue, gets error] Not clear that you had enter anything to verify. It’s not clear there was more to the survey.”

The error preventing respondents from moving forward was noticed by each respondent, but it remained unclear to respondents exactly how to update the operational status. At this point, some respondents had still not discovered the horizontal scroll, and required assistance to complete the task.

“I don’t know what I’m supposed to verify to move forward.”

Another critical concern regarding the Verify step was that nothing indicated the questions sat to the right of the visible area. Because users did not scroll right to see the rest of the spreadsheet on Step One, they did not know they had to answer questions for each establishment. Many users remained entirely unaware of the survey questions until they received an error after attempting to move forward.

Many participants assumed the first view of the online spreadsheet was complete. Several participants did not see the horizontal scroll bar at all; they often had to click into a cell and then scroll with the arrow keys.

Recommendations:

Position the horizontal scroll bar directly beneath the establishment rows.

Include explicit instructions to input operational status and to scroll right in order to access this column.

Within the error message, indicate which column they need to address (e.g., the last column, or column X, assuming this is consistent regardless of company size).

Highlight the exact column with a bold border, and jump/auto-scroll the table to the relevant column.

Users could not scroll and read questions at the same time, nor could they see which row corresponded to which establishment when scrolling in the online spreadsheet.

Because of the large block of negative space in the top cell, users could not view the key data relevant to their locations and the survey questions on the spreadsheet at the same time. When scrolling to the right, participants could not see which establishment row they were answering for. Users quickly grew frustrated scrolling back and forth to double-check the establishment (or KAU) they were attempting to answer for. This was particularly burdensome for multi-unit companies, which could easily enter erroneous data intended for an establishment above or below the row they were in. Further, once they had scrolled back to check the location, they lost their place among the question columns.

Additionally, users could not view the questions and scroll up and down simultaneously. For those with multiple locations, the spreadsheet requires both up/down scrolling and horizontal left/right scrolling. When scrolling down to input data for locations far down in the location listing, users lost sight of the survey questions, requiring them to scroll up and down to double check the question, and in some cases back over to the left to ensure they were on the correct establishment.

This constant need to reorient is frustrating for users. It will result in lower data quality, not only because it is difficult to locate the correct place to report but also because it is difficult to check answers.

“That’s awful you can’t see the questions and scroll at the same time. Can I make this smaller, the display smaller?... I want a smaller spreadsheet, bigger font. This is awful, awful, awful. I don’t know how I would do that, scroll over at a time. I have to hold my finger to the screen to make sure I’m on the right question.”

“I would prefer to be able to see the questions as I scroll.”

“Here’s my problem. Usually in Excel you can freeze the header. I can’t scroll and look at the question at the same time. At least the header should freeze where it’s visible.”

“[I] want the questions locked on top- to see what I’m actually looking at.”

“It helps if the scroll bar is somewhere visible with the heading AND the location.”

“I’m supposed to scroll back and forth like that to see what I’m supposed to answer?”

Recommendations:

Freeze key establishment data, such as the plant/location ID and an address, so that when scrolling right, users can retain which establishment they are answering for.

Freeze the question row so users can scroll down and have a constant view of the survey questions.

Automatically play a how-to video describing each tab of the spreadsheet, how to scroll, how to click and drag.

Users were not expecting the online spreadsheet to automatically round their entries to the 1000’s.

Users inputted exact values into the online spreadsheet. Figures automatically rounded to the thousands, adding three zeros. There was no instruction for this, so users did not have an indication this would happen. Users were frustrated and felt as though they were not given the tools to report correctly. This unexpected rounding will lead to the inflation of values and lower data quality.

“There was nothing indicated it would be in thousands.”

“It’s not clear that it’s in thousands. I put a period to make sure, it took it away. So, it clears with error if you don’t put in thousands despite no instructions to do so. Put that it’s in thousands in parentheses.”

“It auto changed to thousands. That would be fine if it told you that. It should be an instruction on the front tab, ‘please enter in thousands.’”

“I would rather the column, that every column says in 1000s, like in the EC.”

“I would say that the improvements that could be made would be to be clearer about the numbers in thousands in hundreds, most of your surveys are. It’s surprising this is not.”

This was especially confusing because some answers could be under 1000. Certain cells won’t allow users to input figures less than 100, such as Capital Expenditures.

“When you type in 100 it changes to 1000’s; bizarre functionality. I would call for help. I can’t put $100.”

Recommendation:

Include a line in the instruction section noting that values will automatically be rounded to the nearest thousand.
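The inflation risk described in this finding can be illustrated with a short arithmetic sketch. It assumes, based only on participants' descriptions, that the online spreadsheet reads whatever is typed as a count of thousands of dollars; the function names are hypothetical, and the instrument's actual logic was not examined.

```python
def dollars_recorded_if_entry_is_thousands(typed_value: int) -> int:
    """Assumed instrument behavior: the typed number is read as thousands of dollars."""
    return typed_value * 1000


def thousands_to_type(exact_dollars: int) -> int:
    """Value a respondent would need to type for a non-negative dollar amount,
    rounded to the nearest thousand (halves round up)."""
    return (exact_dollars + 500) // 1000


exact = 100_000  # the $100,000 figure used in the usability task

# Respondent types the exact dollar figure, unaware of the thousands convention.
inflated = dollars_recorded_if_entry_is_thousands(exact)

# Respondent converts to thousands first, as an explicit instruction would prompt.
intended = dollars_recorded_if_entry_is_thousands(thousands_to_type(exact))

print(inflated, intended)
```

Under this assumption, typing the exact figure records a value 1,000 times larger than intended, and an amount such as $100 rounds to zero thousands, which is consistent with the participant who reported being unable to enter $100.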

Users did not understand the meaning of ‘NAPCS’ and manufacturers did not know they needed to complete this tab.

Users were not inclined to open the NAPCS tab in the online spreadsheet. Users of the upload/download Excel spreadsheet also did not open the NAPCS tab. Of the 28 individuals interviewed, not one knew what NAPCS referred to, and it was not a term they had seen before.

This meant manufacturers were not aware they needed to complete these questions or tabs. They knew they had to fill out the main spreadsheet but were not given instructions to enter other tabs. This will lead to missing data on manufacturers.

“NAPCS, what is this? Not clear I have to fill out that tab.”

“I do not know what an NAPCS is. It’s not clear for manufacturing products. Where does it tell me what I’m doing? It’s not clear. The question was not visible.”

“I have no idea what NAPCS is.”

Recommendations:

Change name of tab to a plain English description (such as ‘Product Data’) and do not allow manufacturers to go forward without opening the tab.

Remove the NAPCS tab for respondents in industries that do not require NAPCS data.

Users did not understand the meaning or purpose of the KAU rows.

Multiple issues arose with the addition of the KAU rows. First, users did not necessarily notice the KAU rows as separate from the rest of the establishment listing. This may have been partly because dummy data were used to represent their establishments, so the KAU rows, which were intended to be distinguishable from the rest of the establishments, were not salient. The exact placement of the KAU rows was also unpredictable: at times they were located at the top of the table, sometimes at the bottom, and other times intermixed with establishment data. Generally, researchers had to point out the KAU rows to respondents before they could complete the KAU usability task.

When participants were asked whether they could describe what the KAU row represented, or whether the row was different from the other rows in the listing, they became frustrated attempting to locate information on the KAU’s role.

The next issue participants encountered, once the row had been pointed out, was that no data were listed in its columns, making it appear as though a glitch had prevented the data from being filled in.

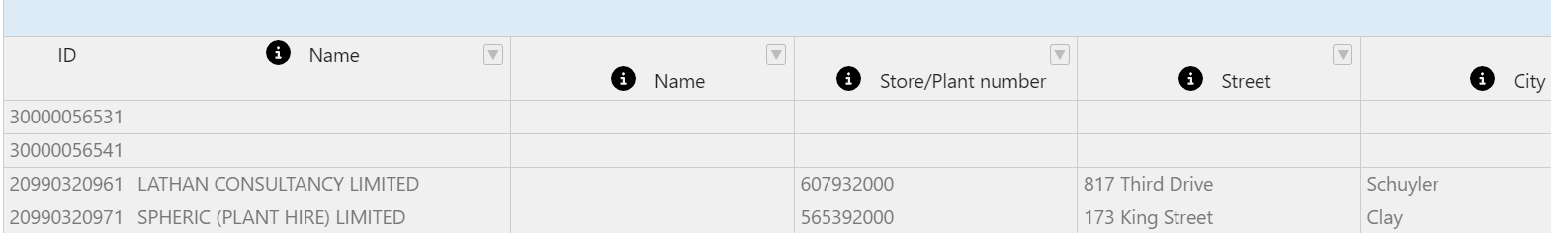

Figure 1. Blank columns in primary visual field contributed to confusion.

The KAU rows do not have any clear language distinguishing them; only numbers suggest that they differ from establishment rows. It is not clear that the ID column marks a KAU row as different, as the ID column is not meaningful to respondents. Respondents assume the ID column contains Census-specific information, and any distinction between IDs beginning with 20 versus 30 is not meaningful or noticeable.

The pertinent column is ‘Record Type’, which contains a plain English explanation of the KAU in its description, but respondents did not locate this column. A single-digit distinction between 20 and 30 in the row is not useful or noticeable to respondents. Users were not familiar with such numbers and did not use them in their records. Further, even for those who read it, the plain English description in the Record Type column was not entirely clear. A better description is required.

Some respondents sought information in the Overview tab, with varying success. The definition of the KAUs was in the overview, which most users did not read. Even those who did read the overview were not familiar with KAU as a term and did not know what these rows signified.

Once they had read the description, users were still mystified. Most did not store information at the industry level, or our industry designations did not match theirs. They thought they could roll up their establishments if they were given an indication of which locations matched which industries. Currently, that mapping is unclear.

“It’s not clear what you’re answering for each row. There are three locations but only two are highlighted, so I don’t know what’s going on there.”

“Obviously the gray you can’t fill it in. It depends on the question. Some rows are gray, and some are not. [I assume they’re] errors…If it’s gray, then I don’t have to answer. It’s not applicable…I don’t like that at all. I read overview tab, and this was not clear.”

“I have no idea what those top two [rows] would be. [Clicks them] I don’t see anything. There’s no name. Super confusing that it’s the industry at top. It’s not clear which locations fall under which row in industry. I guess first I would figure out which industry the location is, working with, and then add up those location. Manually adding up location is adding more work for you.”

“They must not be my location.”

Some users also felt that they could answer at the establishment level, and that the program would roll up their answers. Most worryingly, users interpreted the grayed establishment cells in these questions as meaning that the entire question did not apply to their company. They skipped these questions, and even if they knew they had to answer them, they were not sure how to. This will lead to missing data. See more in the section about color coding.

“I don’t know what a KAU means. I would have to google it…You can do a sum up. If you were giving info by location, it could automatically sum. I would skip questions that are industry only.”

“First two rows are a glitch. I would just skip them and ignore them if it’s just the two rows, that doesn’t give me information of which location you’re referring to.”

“White means I need to populate, gray means not for me [so I don’t have to answer KAU questions].”

“Gray means I don’t need to look at it. I can’t change it either way.”

Recommendations:

Rename KAU rows and make clear which establishments roll up into them.

Include key descriptive data in the left of the table so users do not need to scroll and seek out a description.

Nest relevant establishments under the matching KAU row.

Consider more ways to distinguish the KAU row, such as a different color. Numerical distinctions are not salient.

Users were very concerned with the inability to go back to previous steps once they submitted them.

While it was communicated on title pages that users could not go back to previous steps, users did not read these warnings. Most did not notice the inability to navigate back until prompted. Users were dismayed they could not go back to see or make changes to previous answers.

Participants wanted to be able to triangulate their answers in the current step with the previous step. They pointed out that many users would lack the ability to go over their work, leading to errors and lower data quality. If they could go back and noticed an issue, they would be able to correct it. The inability to go back also made the survey much longer for users who delegated questions: they may need to reach out to others, such as establishment contacts, three separate times (once per step) and wait for answers before they can move on.

Furthermore, a vast number of establishment respondents have reported that in gathering data for establishment surveys, they often want to explore what questions they will be asked ahead of data entry. They may do this either by seeking out a template or PDF listing of the upcoming questions, or by manually clicking around to explore what’s coming. In the interest of exploration, some respondents have indicated they may even input fake data as a mechanism to click forward, then go back and enter real data when they have it in hand. The site does not give sufficient warning that the user will not have the ability to return to make corrections, which could lead to serious data quality issues.

“I don’t like that you can’t return. Sometimes you want to be able to go back, especially as you go further into the survey, you learn what they’re looking for. It’s important to be able to go back and update for changes. You’re going to get invalid and incorrect information if it is what it is and people can’t change it. I might think it’s correct and want to follow up. With gathering the data, you’re waiting on someone else. It’s nice to fill out what you can and then go back for a few minor follow ups, going to give you more freedom to do when you have time.”

“I didn’t notice that you can’t go back. I prefer that you could. It’s more difficult if you don’t know the information.”

“Is there a way to navigate back? What if I want to add a location? Or check my data?”

Recommendations:

Include the ability to go back, as well as the ability to edit data from prior sections. Subsequent data fields should reflect these changes.

In lieu of including this essential backwards navigation, include warnings by way of popups that clearly message to users before moving forward that they cannot go back to view or make changes.

Users wanted to be able to download answers to the entire survey before submission.

Most participants told us they had an expectation of being able to download all of their answers at the end of the survey for all three steps. Many pointed out this was standard for Census Bureau surveys, and that they used this feature every time. For some, this also means printing their data so they can retain a physical copy for their records.

Participants told us this was important for their own internal records and to discuss any errors they may have missed with the Bureau. Large multi-unit firms, especially manufacturers with recent acquisitions, were especially insistent on this as a way to build institutional memory. Users also suggested that the answers to the AIES from previous years should be accessible in the portal to relieve burden in the future. Note that economic respondents also consistently report that they will print the final confirmation notification page.

Users may become distressed without any indication prior to submission that they will be able to retain a copy of the data for their records, as this is a standard and critical practice for economic respondents.

“Normally when I’m done, I’d print the confirmation.”

“I like to have the report, confirmation could have a button of printable. A locally saved report would be very helpful.”

“I’d like to be able to get back to the information I put into the survey. Other forms ask for roll forward from prior year to new year. I would like a way to access the previous year’s form.”

“I would want a way to get the answer you gave. I was expecting that. Maybe a printout of what I submitted, or a PDF or something.”

“I’d like a submission confirmation, to download responses, and then hit submit, instead of a prayer.”

“I want to print everything out.”

“Usually, it will give you an option to print. And I always do that. Might have been on the previous page?”

Recommendations:

Add a download feature in the final submission step.

Add a print feature in the final submission step.

Include a print option on the confirmation of submission notification.

Include a note on the final page prior to submission that participants will be able to print their data before submission.

Feature the company’s previous AIES submissions in the portal prominently.

MEDIUM PRIORITY FINDINGS

Online Spreadsheet Features

Drag, drop and duplicating data

While the spreadsheet table does allow for data to be dragged and dropped, only two savvy users were able to utilize this feature. This may be because a cell or column needs to be selected first before attempting drag and drop. All other participants assumed the data for each row required manual input.

While this was easy for SUs or smaller MUs, this posed an issue for larger companies. If this feature is not made clear, these users would manually fill in sometimes hundreds of operational statuses. In this study, one company with nearly 1,000 locations was provided a hypothetical proxy company with 600 locations. The process of inputting a “1” as operational for each location took about five minutes and was extremely burdensome. This burden would only be magnified in the company’s actual response, with nearly 1,000 locations and the need to look up every location and fill out its operational status.

Particularly for large companies, some said they would like to use familiar spreadsheet features, such as programs that copy columns.

One other feature respondents are familiar with that was lacking is an undo button. One respondent struggled to undo an action and was searching for an undo button above the table.

“I have to manually put 1 for each? I’m going to put 1 and copy it. [tries] I would highlight and paste, that doesn’t work here. We should have the ability to manipulate it as much as we can to make it more user friendly. This is administrative stuff that just wastes a lot of time. If you have one location, it’s not a big deal.”

“I don’t feel good about manual [input of one] for each location. I’m discouraged by only online spreadsheet. If you have a whole bunch of locations, a lot of time it’s a PDF and then plants can manually write in…I usually use drag and copy and paste in Excel. That would be helpful, if it was easy to every location for the full year. I could go and fill it.”

Recommendations:

Include instructions on how to duplicate data with drag and drop.

Include an undo and redo button.

Searching and jumping between question topics

Some users planned to fill out the spreadsheet one column or question at a time because their information was centralized, and they only had one location. This process was particularly specific to small, single unit companies, where often the respondent was an owner or accountant, and had access to all the information.

However, most participants reported they would skip around to pertinent questions as they found or received data. Respondents may want to utilize a search/quick find feature for several reasons. For example, to explore the lengthy spreadsheet topics, to skip over question topics that are irrelevant to them, or to jump to a specific section and enter data they didn’t have in hand initially.

Some participants attempted an alternative way, other than scrolling, to jump easily between sections, but this was made much harder by the lack of a search function. Participants expected the search function and voiced wanting a bar at the top to enter in key words.

“I wish there was like search, like the bar. I can go to that column immediately. I would try ctrl-F. Please, if there’s no search bar, then ctrl-F definitely [needs to be there].”

Several participants attempted to use the keyboard shortcut for find, ‘ctrl-F’, to seek out the relevant question the task referred to, but this did not work. Users would type in the keyword, for example ‘capital expenditures’, expecting the table to jump to questions with this word, but nothing would happen.

“Can I find? Can I search? I can’t search in the cell. Ctrl-F is not working.”

Most respondents resorted to manually scrolling, and as mentioned above, often became frustrated when they lost track visually of which establishment they were reporting for, needing to constantly reorient themselves.

Recommendations:

Add a search bar.

Ensure the ctrl-F function works, or provide instructions on how to use ctrl-F successfully.

Consider a way to differentiate question topics better visually, such as by using subtle shading or colors.

Seeking question-specific clarification

Respondents sometimes sought clarification on a specific question. For example, one participant explained they were confused by the value of product question, asking if the question was referring to the value of the materials, or the value it was sold at.

The info button was not salient. The information in that button can appear duplicative and not relevant to the cell it’s nested under. For example, often the info button displays identical information for an entire section topic. Participants were expecting easy access to an explanation for each cell. The overview tab did not satisfy this need.

Recommendations:

Consider using another icon for the info button that better emphasizes the “i.”

In other areas of the site respondents might click into for information (such as the ‘more information’ link on the Overview screen) include a line that mentions the information icon nested in the cells.

Be sure that the information link is relevant to the specific cell it is associated with and defines any key words, as well as answers any common questions about the topic.

When a mouse hovers over the “i” icon, show text that reads “information”.

Filtering; Sorting Data

Some participants were interested in reorganizing the order of the rows pertaining to each establishment. Generally, this would be because they want to copy information directly from their records into the spreadsheet, matching the organization of their internal records.

Some participants located the filter feature, but this was not what they were looking for, and is a separate function from sort which they are used to having access to in standard spreadsheet programs.

Some respondents clicked in the filter option expecting a dropdown with fillable options and found the filters “misleading”. Others expected the filters to be a drop down with information regarding that specific cell or a drop down with response items to select from.

In general, the filter feature tested poorly. While several participants clicked into it throughout testing, it did not meet expectations for any of them.

To this end, some respondents from larger companies expected there to be a feature which would allow them to bring in their data directly from their records. One participant said she would want to export her data from the survey site to her records.

[In referencing the filter] “The very first line of data is a drop down with an explanation”.

Recommendations:

Include a sort feature.

Some users sought out alternative ways of entering data.

Several participants attempted to “click into” a cell, expecting to make changes in an overlay format, or a pop up that looks like a form view, which would allow them to fill out data specific to the establishment in that row. They perceived this hypothetical form-view layout as easier than scrolling on the spreadsheet and seeking out the cells to fill in data for. This occurred for both single unit and multi-unit companies.

“I don’t like filling it out this way- give me each one [individually]. When I clicked on a company - I was expecting a pop up. I need more guidance.”

“TOO cumbersome”

Recommendations:

Consider incorporating a format which allows for form view entry of data; particularly for single unit companies not accustomed to spreadsheet designs.

Allow upload/download.

Highlight the drag-to-copy mechanism.

Consider adding an undo button.

Users did not read the overview in the online spreadsheet in Step Three.

Users who tested the upload/download spreadsheet often glanced through the overview tab. In the Excel file, the overview tab is the first tab, so it is the first screen users read. On the other hand, the online spreadsheet opens up to the survey itself. Very few participants opened the overview tab before trying to input data. Some went into the tab to look for more information about KAUs. Overall, it was difficult to test the effectiveness of the information there because participants did not click into it naturally.

Recommendation:

When users first see the online spreadsheet, include a short auto-play tutorial which features the overview tab.

Users wanted to be able to preview their questions before starting the survey.

Previews of survey content are a requirement for economic respondents. Many participants like to get their records and thoughts in order using the preview. Further, such a preview makes it much easier for participants coordinating information gathering to identify who to go to within their company and what topics will be covered. One participant suggested that being sent an email in advance of the survey which included a list of topics would be helpful to them.

“The PDF prepares you- I would print out the PFD on the payroll page and go to manager- can you get me that information. How much work; how much time. What pertains to me what doesn’t. Really get a sense of time and work resources.”

“I uploaded the template to see what’s required…Would be helpful to see summary template in advance to see what’s required. Sometimes cannot go forward.”

“Maybe they can- before you fill it out- based on prior data and industry, send me an email to tell me the info you will need. When I was new, I didn’t know what information I was supposed to provide…A guide before you start.”

Recommendation:

Provide access to a preview of the survey in the portal, overview, and pre-notification email.

Error Checking Functionality

The error check functionality did not work as users expected. Note that certain errors, which prompted the red banner with a description of the error at the top of the page, were expected and useful to respondents. The findings below pertain to the error page, which consolidates and notifies users of numerous or duplicative issues in the survey data.

Primary concerns that arose were:

Users expected to be notified of errors automatically.

Economic survey respondents are accustomed to an error check functionality that runs automatically before submission. Respondents generally expected that advancing the page from Step Three would notify them of any errors. They expected to be blocked from continuing if there were issues with their data requiring a fix.

It was unnatural for respondents to be required to run this check of their own accord. Participants did not often click into the “Check Data” button or tab spontaneously.

“I wouldn’t be notified of the error until I [clicked] saved and continue… Show me what I did wrong.”

“It didn’t force me to do a data check- I did submit the screen- never went to the check data tab normally it would throw me into the data check- or error out the column; make it a red column.”

“[The site] wouldn’t let you continue without with errors.”

Recommendations:

Have error check run automatically or stop users from going forward without a prompt to run it.

In lieu of incorporating this automatic push to the error check page, specify in the overview of both Step One and Step Three how to run error checks.

This could also be addressed in an auto-play tutorial.

Some users felt the edit check mechanism was unclear.

When users were instructed to seek out a way to fix errors, or found it on their own, the function of the page was not necessarily clear. The “Check Data” label was not clear. Once on the error page, the mechanism to fix the error was clear, although users had different expectations for how they would be directed to the fix. See below.

“Check data, what is that?”

“I did not know what tab was. I would not have clicked on it naturally. I would want error check to kick in automatically. Was that in the overview? It should say you can check data when finished. I’m sort of used to how it is now, when it gives me errors. And then I can click on the fix.”

“I kind of like the check screen if they would just point this out in the instructions.”

“If there’s a tab to check stuff, make that more obvious to people.”

“Run checks doesn’t seem to do anything is there a button press?”

Recommendations:

Label the error check page so that it includes the word “error.”

Users wanted the edit check link to go directly to the cell with the error.

Users had some confusion about the edit check and how it worked, as discussed. However, while most naturally clicked on the ‘fix’ link that brought them back to the survey, they were dismayed the error check did not indicate specifically what was wrong. It seemed it would point them to the row and not the question. It is especially critical the error checks be precise here to bolster data quality given the massive amount of data the survey asks companies to provide.

“Expect to be brought to the specific cell.”

“The whole row is highlighted. It doesn’t take you right to it? Not that clear.”

“Would expect the error to be in the column. So, at top of page, highlight the relevant question.”

Recommendations:

Ensure the fix button takes respondents to a specific question.

Bold the question, or otherwise make clear which question is in need of fixing.

Use specific language to indicate the exact nature of the error.

Users felt delegation mechanisms in all steps, by both question and topic, would be helpful.

Participants felt that delegation features by company location, topic, and question would be helpful. This was especially true of multi-unit firms, where participants often had the main task of gathering data from others, rather than locating it in accessible records. In fact, some respondents are strictly in a role of data input, utilizing data others have provided to them.

Many participants had access to financial information. However, most were routinely reaching out to payroll, HR, and other staff. This process was burdensome and time consuming. There were times participants recalled where they never received answers and could not input information for those questions.

Participants wanted the ability to pass pieces of the report to other locations to fill out on their behalf. They also wanted to pass pieces of the report as an indicator of what data they need to gather so the responder could input it. More than one participant explained the company’s process of delegation, which involved emailing the acting manager of each of their locations, providing her e-corr password, and then inputting the data once she received the information. This was typical for participants. Some respondents described how it would be helpful to have a button which would allow the primary contact to assign establishments/plants to specific people.

“I would want a way to delegate both parts and questions. I would want to delegate a row to a yard. With the payroll stuff, they don’t want to share that. Payroll part would be good, even questions would be better. That’s the hardest to get information from. They’re reluctant to share anything with confidentiality. I would want to email that portion straight to them. With a button on the section. I already forgot what was in Step One and Two. Yeah, that would be good to delegate too.”

“I would love to delegate by block or email. Then I don’t have to be collectors of data all the time, waiting forever. I give the best data I can. Or it doesn’t get submitted, and I can’t submit anything. So, I could delegate to plants or locations, rather than sending an Excel or pdf…either I could send it by email, or they can log into the portal.”

“This resembles a bunch of plant locations- I would send the report to them to complete. There is a HQ that’s the parent company of the plants.”

“This is all plant specific stuff I cannot do at the HQ here. It would take reaching out to another person at the plant. This should go to specific plant addresses. The data here is too granular - location-specific data we need the person at the location to report that.”

“If one spreadsheet is coming from 15 plant locations- do I have to get the info from 15 people and I fill it out? Or can they fill it out? I would want a delegate button.”

[Regarding a prior survey] “I printed a copy of the survey. Which was 60 pages- scanned it and sent it (to others to fill out).”

Recommendation:

Add delegation mechanisms by email for each topic, unit, and question.

LOW PRIORITY FINDINGS

Users did not read the overview.

Participants would sometimes take in the broad outline of the overview on the first page of the survey but were unlikely to read the specifics. Only two participants noticed the notes informing respondents that they would be unable to return to previous sections of the survey. Once in the tab, many skimmed or skipped past it. This connects to the finding above that participants wanted to be able to go back; most did not notice they could not do so because they did not read this page.

Recommendation:

Do not rely on overview text to convey critical information, such as the inability to navigate to previous steps.

Some users inputted “yes” or tried to use a dropdown menu rather than input numbers in Step One.

Participants universally had trouble locating the questions used to verify locations. Once there, they sometimes struggled to answer using numeric values. While the instructions were in the column, some tried to write in “yes” because the question was a yes/no question. One participant made an error in filling out operational status by typing in “operational status” as opposed to just the number key associated with the status (i.e., 1 = In Operation). Some assumed the list of numeric values in the question column were a drop-down menu. While minor, this does mean time and burden for users, and may frustrate them.

Recommendation:

Have “yes” text automatically convert to the numeric value 1.
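
A minimal sketch of this conversion, in Python, is included for illustration only. The function name `normalize_verification_entry` and the accepted spellings of “yes” are assumptions and do not describe the AIES instrument; only the mapping of “yes” to the numeric value 1 comes from the recommendation above. Any other entry is passed through unchanged so that existing error checks can still flag it.

```python
def normalize_verification_entry(raw: str) -> str:
    """Convert a typed "yes" to the numeric value 1; leave other input as is.

    Hypothetical helper: the accepted spellings below are assumptions.
    """
    cleaned = raw.strip()
    if cleaned.lower() in {"yes", "y"}:
        return "1"
    # Anything else (including text such as "operational status") is returned
    # unchanged so that downstream validation can still report it.
    return cleaned


if __name__ == "__main__":
    for entry in ["yes", " Yes ", "1", "operational status"]:
        print(repr(entry), "->", repr(normalize_verification_entry(entry)))
```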

Some users were confused by unfamiliar terminology in spreadsheet.

Respondents were unfamiliar with the BR ID number (such as 200###). Some respondents wondered if the Store plant ID number was an internal name, determined by their own company, or one the Census Bureau assigned. Some of this confusion may have been due to the dummy data that was preloaded to represent the establishments. More than one respondent noted the two different columns for name of company and was unsure of the purpose of the second column.

Only a small number of participants were familiar with NAICS as an indicator of industry. NAICS specifically was generally unfamiliar; most companies did not know their NAICS designation. While a NAICS code was not required to add a location, the column was confusing for several participants.

Recommendations:

Include information in the NAICS column which describes NAICS in terms of a numerical code representing industry.

Process of adding a location was clear; subsequent page was confusing.

Participants who were asked to add a location easily located the ‘Add a location’ button at the bottom of the table. However, the second page that generated immediately following the verify step was confusing for respondents. It was not clear the page was generated solely for added locations. In fact, the page was still shown to participants who had not added a location. It appeared as a blank table. Respondents assumed this was a glitch in the instrument.

“Feels a little repetitive.”

“Why is it back to Step One?”

“I thought there was additional task but there is no button? No data here for me”

“Not immediately clear that this is the new location on the CONT. page.”

As a note, it was not as clear to respondents what the process to deduct a location would be (i.e., changing the operational status to something other than in operation). One participant wanted to know how to indicate an acquisition that would severely affect their reported revenue. Once the process was described to them, or otherwise discovered, users liked being able to eliminate locations that were not operational for future steps.

“I like the idea of being able to delete in Step One. If a store is closed, it’s taking a whole lot of space. If I can delete that line, it would be less overwhelming if it’s the open locations.”

Recommendations:

Do not generate the second add location screen if no locations have been added.

Communicate more clearly on the second page that it is intended to collect information for added locations.

Users were mixed on whether they liked to answer in the 1000s in Step Two.

Respondents were asked to indicate an annual revenue of “2.5 million” in a textbox with .00 attached. Respondents had varying reactions to the tab trailing the end of the write-in box that indicates the number is already in thousands. Several respondents entered the figure as 2,500,000 (which, when processed in thousands, would read as 2,500,000,000.00). Some respondents were uncomfortable that the commas did not autogenerate and noticed this spontaneously. Some respondents attempted to add in a comma and were not able to.

“It wants thousands? I’d rather put in whole numbers. I don’t want to round. I want what’s in the records.”

“I’m okay with rounding as long as it’s clear, it’s not a big deal.”

“Helpful to have the zeros in thousands.”

“2.5 million: 2500- I’m used to that here. That’s clear.”

“It’s not clear what these zeros in a different bucket as well- It’s easier to have that there but a little bit confusing. I guess it would faster. I prefer it to have the full number. Too confusing.”

“It was supposed to separate it for me by commas…old format it would just separate it for you. If it doesn’t separate it for you - it’s hard to read.”

Recommendations:

Further testing of the design.

Autogenerate commas.
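
The arithmetic behind the thousands confusion, and the comma formatting respondents expected, can be illustrated with a short Python sketch. The function names are hypothetical and this is not a description of how the instrument processes entries; it only assumes, as described above, that the entry is interpreted in thousands of dollars.

```python
def reported_dollars(entry_in_thousands: int) -> int:
    """Interpret a write-in value as thousands of dollars (assumed behavior)."""
    return entry_in_thousands * 1000


def with_commas(value: int) -> str:
    """Format a whole number with thousands separators for readability."""
    return f"{value:,}"


if __name__ == "__main__":
    intended = reported_dollars(2500)       # respondent enters 2500 for 2.5 million
    mistaken = reported_dollars(2500000)    # respondent enters the full dollar figure
    print(with_commas(intended))
    print(with_commas(mistaken))
```

Under this assumption, entering the full dollar figure overstates revenue by a factor of 1,000, which is why the unit indicator needs to be unmistakable.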

Users liked the auto sum on Step Two.

There were multistep questions in Step Two that featured an auto sum mechanism at the bottom of the screen. Some participants did not notice. Those who did, or were prompted to do so, spoke positively of this function. They endorsed the use of auto sum features in general. One recommended that they be more widespread throughout the survey where applicable.

“I love that it calculates for you. That’s actually helpful a lot of the time.”

Recommendation:

Integrate more auto sum mechanisms when possible.

Some users received errors for leaving questions blank rather than inputting 0s.

Several participants assumed they could leave questions in Step Two blank if the value was zero. The distinction between ‘0’ and a blank is important for data quality. Users may be frustrated when receiving an error without being given instructions to input 0’s instead of leaving questions blank.

Recommendations:

Add the instruction that answers in Step Two cannot be left blank.

Keep the error that stops users from moving forward with blank answers.
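
As a rough illustration of the blank-versus-zero distinction, the following Python sketch flags unanswered questions while accepting an explicit 0. The function name, the question labels, and the message wording are all hypothetical and are not taken from the instrument.

```python
from typing import Dict, List


def find_blank_answers(answers: Dict[str, str]) -> List[str]:
    """Return the questions left blank; an explicit "0" counts as an answer."""
    return [question for question, value in answers.items() if value.strip() == ""]


if __name__ == "__main__":
    # Hypothetical Step Two answers: one explicit zero, one blank.
    step_two = {"revenue": "2500", "capital_expenditures": "0", "payroll": ""}
    for question in find_blank_answers(step_two):
        # An error message that also tells the user how to resolve the issue.
        print(f"{question}: please enter a value; enter 0 if the amount is zero.")
```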

Text associated with radio buttons should be clickable.

Radio buttons were not always clickable in the survey. Wherever radio buttons are present, notably in Step Two, ensure the whole line of text is “hot” and clickable, as opposed to just the radio button. At least one participant vocalized being frustrated needing to specifically click the small button target.

“Why is it just this little thing?”

Recommendation:

Ensure text associated with radio buttons is hot.

Users preferred the upload/download function rather than online spreadsheet in Step Three.

More than half of participants (57%) indicated they would prefer to fill out the spreadsheet in Excel. This was especially true of larger multi-unit companies who had data stored in multiple Excel files. Most participants felt that their familiarity with Excel, rather than the unknown of the online spreadsheet, made the upload/download option a more comfortable choice. Further, since there was not a way to download answers, and no way to go back to the steps, this also served as a way to maintain records.

“Because multiple locations easier to do on Excel.”

“Uploading is easier. The other thing is so awful. I can pull up my census folder to check which one was what. I can populate the data, and don’t have to scroll through. I can work on it when I have time. I’m extremely comfortable with Excel.”

“I would want to look at both to decide online or upload spreadsheet. If it allows to filter, copy and paste, highlight. Anything I would be able to do on the Excel spreadsheet I would want to be able to do on online spreadsheet. So, I would do my own spreadsheet and upload. I would stop at 10, go to upload and clear the highlight. Highlight is important for stepping away and not losing my spot. It’s very cumbersome, the big spreadsheet. I would have to freeze frames. I really only need the store I won’t look it up by name, but by location number. I would like it to freeze these frames and highlight a location.”

Recommendations:

Preserve this upload/download option as a method for users to provide data.

Color coding was unclear.

Participants were consistently confused by the color scheme of the spreadsheet in Step Three. It was not clear that the gray cells are not editable. For one, users did not immediately realize the cells were gray. This may be because there was not enough visual distinction between white editable cells (which were often further to the right and not in the immediate visual field) and the gray cells at the far left of the table.

The amount of content on the survey tab further muddied this distinction. Users could scroll to the right, scanning for where to input data, but the white editable cells were sporadic and not very distinct from the gray cells, and thus could easily be passed over.

The connection between Step One and Step Three was not clear to users, as they generally would not read the instructions. Users did not understand that they had already locked in their basic establishment data. They may have assumed they just needed to find the mechanism that would make the gray cells editable. The overview tab references blue cells, and one user thought that clicking on the blue would allow them to edit the gray cells.

“Don’t make it the user’s job to know what they have to do.”

“I thought it was the color scheme now when I click on it; clear I couldn’t edit those fields.”

“Why isn’t the whole line white? Highlight the companies you want the info on? The white starts way over here”

“Having a lot of columns gets confusing. Inputting wise, I’m not sure where I’m entering stuff especially with 20 locations.”

“What is this supposed to mean, this big blank tag [that’s blue]?”

Recommendations:

Remove reference to blue cells in overview tab.

Clarify colors in instructions.

Consider another mechanism to better distinguish editable cells from non-editable ones.

SUs were less intimidated than MUs, but less familiar with spreadsheets.

Many single unit companies assumed the “online spreadsheet” would be a form-view version of the survey. Some expressed unfamiliarity with this format, especially given that the surveys they received in the past were generally in a form-view format. Many Census surveys eliminate the spreadsheet option for response entirely for single units. That being said, most of these participants were not worried about the rollout of the AIES. They had lower estimated burden times and less distress or fewer concerns about the steps laid out.

“I’ve never downloaded a spreadsheet before; I like to see the questions one by one.”

“For us it wouldn’t really work to put it in a spreadsheet- it’s not all in one record- it’s easier to put it in directly. Plus, we don’t know what you’re going to ask us.”

“I would prefer a vertical view (i.e., form view)- even if it’s 10 pages with 10 questions.”

Recommendations:

Test possible different paths or views for SUs.

Related Cognitive Testing Results

In this section, we relay data we received from participants that cannot strictly be categorized as usability testing. Although we did not engage in cognitive testing, some participants volunteered this data. We enumerate the topics covered but do not rigorously explore their meaning here, as it does not fall within the scope of usability testing. We assume these topics will be explored further in future cognitive testing.

The most important cognitive finding that greatly impacts usability is the sheer amount of content. We have heard feedback in every step of respondent research that there is just too much content, and this testing was no exception. Here, the amount of content also affected users’ experiences of the instrument. They were drained by the idea of coordinating answers to so many questions in so many steps, and some worried it was not possible to find and report all the information accurately. The third step, the spreadsheet, was particularly overwhelming. Such a high number of questions made it difficult to navigate, with the long scroll time and lack of search function.

Additional themes are listed below.

Many participants liked the idea of integration. They generally endorsed the AIES.

Many reporters did not have access to payroll and HR records. This is typical for Census Bureau surveys. It is most salient at larger, multi-firm companies.

Some mentioned that foreign ownership was hard to think about for their companies.

In Step Two, there was confusion about “sales shipments.” Specifically, participants wanted definitions for the term domestic asset, clarifications for which subsidiaries to include, and the time frame.

In Step Two, participants were not sure what the gross value additions were, or what “other” referred to in the question. Assets, especially if they were depreciable or acquired in the year, were confusing.

In Step Three, multiple participants did not know what NAPCS meant. This resulted in many manufacturing companies not opening that tab. Similarly, KAUs and NAICS were not familiar terms.

In the NAPCS tab in Step Three, some participants were not sure how to calculate the value of goods.

The KAU concept of being able to roll up data to the industry level was well received but required a specific explanation in order to be understood. The concept was not conveyed effectively and requires substantial communication and messaging to be understood as an option for users.

Recommendation:

Separate cognitive testing.

About the Data Collection Methodology and Research (DCMR) Branch

The Data Collection Methodology and Research (DCMR) Branch in the Economic Statistical Methods Division assists economic survey program areas and other governmental agencies with research associated with the behavioral aspects of survey response and data collection. The mission of DCMR is to improve data quality in surveys while reducing survey nonresponse and respondent burden. This mission is achieved by:

Conducting expert reviews, cognitive pretesting, site visits and usability testing, along with post-collection evaluation methods, to assess the effectiveness and efficiency of the data collection instruments and associated materials.

Conducting early-stage scoping interviews to assist with the development of survey content (concepts, specifications, question wording and instructions, etc.) by getting early feedback on it from respondents.

Assisting program areas with the development and use of nonresponse reduction methods and contact strategies.

And conducting empirical research to help better understand behavioral aspects of survey response, with the aim of identifying areas for further improvement as well as evaluating the effectiveness of qualitative research.

For more information on how DCMR can assist your economic survey program area or agency, please visit the DCMR intranet site or contact the branch chief, Amy Anderson Riemer.

Appendix A

Usability testing is used to aid development of automated questionnaires. Objectives are to discover and eliminate barriers that keep respondents from completing an automated questionnaire accurately and efficiently with minimal burden.

Usability tests are similar to cognitive interviews – that is, one-on-one interviews that elicit information about the respondent’s thought process. Respondents are given a task, such as “Complete the questionnaire,” or smaller subtasks, such as “Send your data to the Census Bureau.” The think aloud, probing, and paraphrasing techniques are all used as respondents complete their assigned tasks. Early in the design phase, usability testing with respondents can be done using low fidelity questionnaire prototypes (i.e., mocked-up paper screens). As the design progresses, versions of the automated questionnaire can be tested to choose or evaluate basic navigation features, error correction strategies, etc.

Appendix B

AIES Usability Testing Protocol

Opening Questions

Display This Question:

If Is this company an MU or SU? = MU

From the recruiting file, how many estabs does this company have?

From the recruiting file, what NAICS is this company in?

From the ecorr, what is the name of the fake company they are responding for?

🎈 Manufac? Y/N Is this a manufacturing company?

🎈 MU SU Status? Is this company an MU or SU?

Display This Question:

If Is this company an MU or SU? = MU

🎈 Add location? Y/N Will they Add a location? [not for SUs]

🎈 Error Check Y/N Will they Check Errors?

🎈 ONLINE V UPLOAD Is the participant taking the ONLINE path or the UPLOAD path?

Introduction

Thank you for your time today. My name is XX and I work with the United States Census Bureau on a research team that evaluates how easy or difficult Census surveys are to use. We conduct these interviews to get a sense of what works well, and what areas need improvement. We recommend changes based on your feedback.

[Confirm they signed the Consent Form; should be sent prior to interview.]

Thank you for signing the consent form. I just want to reiterate that we would like to record the session to get an accurate record of your feedback, but neither your name nor your company name will be mentioned in our final report. Only those of us connected with the project will review the recording and it will be used solely for research purposes. We plan to use your feedback to improve the design of this survey instrument and make sure it makes sense to respondents like you. I'm going to start the recording now, if that's okay with you.

[start /Snagit screen recording, if yes.]

Thank you.

Background

I am going to give you a little background about what we will be working on today. Today you will be helping us to evaluate the design of the online Annual Integrated Economic Survey, or AIES, instrument. The website is in development, so this is an opportunity to make sure it works as smoothly as possible.

To do this, we will have you complete various tasks using the site. These will be consistent with tasks you would normally complete if you were requested to complete the actual AIES in the future. There are no right or wrong answers; we are mainly interested in your impressions, both good and bad, about your experience. I did not create the instrument, so please feel free to share both positive and negative reactions.

There is fake company data preloaded onto the survey site. This fake company is meant to be a proxy for your actual company. We're going to pretend that the data represents your company; what you will see is approximately what you will actually see when you receive the real survey. We will provide you any data you need to fill in on the Answer Key.

I may ask you additional questions about some of the screens you see today and your overall impressions.

Do you have any questions before we begin? Ok, let’s get started. First, I would like to get some information to give me some context about your business.

Q5 Can you tell me about the business, like what types of goods or services it provides? And how it is organized?

Q6 What is your role within the company?

Q7 Are you typically the person responsible for government surveys?

Do you typically have access to all of the data needed?

If not, what areas or positions do you usually reach out to?

Great, thank you.

Now I’m going to read each task question out loud. You can also refer to the Answer Key (the Word document that's open). Then you will use the website/[spreadsheet] to complete the task. While you are completing the tasks, I would like for you to think aloud. It will be helpful for us to hear your thoughts as you move through the survey. Once you have completed each task, just let me know by saying finished or done; then we can move on to the next task.

To get started, click the 'report now' button for the company ${Q61/ChoiceTextEntryValue} to get into the survey website.

Task One: Verify Location(s)

Begin the survey by verifying the location data.

Remember, we're operating as if the data here represents your company. So for this task, pretend all these locations are yours, and they are all operational.

Feel free to take a moment and explore, as you normally would. Remember to think out loud.

Let me know when you feel you’ve accomplished this task.

Any comments about the Overview?

Accuracy Task One: Verify Location(s) Was the participant able to complete the task?

Success

Fail

Success with prompt

Success without prompt

Check if participant:

Clicked for 'More Information' in Overview Screen

Clicked for 'More Information' in Step One

Clicked 'How-To PDFs and videos page'

Maximize table button 🔀

Clicked 'Check Data' Tab

Clicked 'Add additional location(s)'

Clicked 'Save 💾'

Got an error message

Clicks ℹ info in cell

*Special Task; Not all R’s receive*

Task: Add Additional Location

For Non-SUs

Your company opened a new location. First add a new location, then fill in the cells with fake data. For example, feel free to enter 123456.

Tell me when you feel you've completed this task.