VAR Mobile App Rev_8-2016

Generic Clearance for the Collection of Qualitative Feedback on Agency Service Delivery (NCA, VBA, VHA)

VAR3 0_UsabilityStudy_Plan_v1.0a


OMB: 2900-0770


OMB No. 2900-0770

Expiration Date: 08/31/2017

The Paperwork Reduction Act of 1995: This information is collected in accordance with section 3507 of the Paperwork Reduction Act of 1995. Accordingly, we may not conduct or sponsor, and you are not required to respond to, a collection of information unless it displays a valid OMB number. We anticipate that the time expended by all individuals who complete this usability test will average 90 minutes. This includes the time it will take to follow instructions, gather the necessary facts and respond to questions asked. Customer satisfaction is used to gauge customer perceptions of VA services as well as customer expectations and desires. The results of this usability test will lead to improvements in the quality of the Veteran Appointment Request App (VAR 3.0). Participation in this usability testing is voluntary, and failure to respond will have no impact on benefits to which you may be entitled.

VA Form 10-

APR 2014

Veteran Appointment Request (VAR) Mobile App V3.0

Veterans Health Administration

Completion Scheduled for September 6, 2016

Table of Contents

Test Location & Technical Configuration

Findings and Recommendations

Appendix A: Study Materials

Session Day Set Up Checklist

Session Script: Mobile Device Use

Opening Questionnaire (questions asked at the beginning of the session)

Post Task Questionnaire (questions asked after every task)

Closing Questionnaire (questions asked at the end of the session)

Appendix B: HFE System for Ranking Usability Findings

Appendix C: System Usability Scale (SUS)

Appendix D: Human Factors Engineering Questionnaires

HFE Volunteer Tester Information Survey

Version History

Version | Date | Comments |
V0.1 | 5/3/2016 | Initial drafting of plan based on proposal approved by program office. |
V0.2 | 5/16/2016 | Updated scripts based on initial dry runs. Completed configuration diagrams based on initial dry runs. |
V0.3 | 6/13/2016 | Updated materials based on initial access to functioning VAR 3.0 on the SCA environment. |
V0.4 | 7/21/2016 | Updated tasks and script based on dry run activities. |
V0.5 | 7/26/2016 | Dropped notifications task due to lack of functionality in VAR 3.0 and replaced with task for providing feedback to VA, at the request of the program office. |
V1.0 | 7/29/2016 | Made final revisions based on white glove review. Distributed to recruiter and Connected Care. |

Introduction

Study Details

Proposal Author(s): Ashley Cook (VHA OIA HFE, CSRA; [email protected])

John Brown (VHA OIA HFE; [email protected])

HFE Point of Contact: Nancy Wilck (VHA OIA HFE; [email protected])

Application: Veteran Appointment Request (VAR) App

Application Version: v3.0, SQA Environments

https://vet-int.mobilehealth.va.gov/veteran-appointment-requests

Study Sponsor(s): Kathleen L. Frisbee, PhD, MPH (VHA Connected Care; [email protected])

Deyne Bentt, MD (VHA Connected Care; [email protected])

Developer POC(s): Mark Ennis (VHA Connected Care, [email protected])

Kate Wheelbarger (BAH, [email protected])

Device(s): Internet Explorer v11 as installed on an HFE Windows laptop

Default device browsers on the following HFE mobile devices:

iOS (mobile and tablet)

Android (mobile and tablet)

Study Points of Contact

The following table outlines the points of contact to be employed by the study team in executing the study.

Role | Name | Phone |
HFE Point of Contact | Nancy Wilck | 202-330-1818 |
Study Lead | Ashley Cook | 571-643-6909 (m) |
Human Factors Engineer | John Brown | 615-320-6293 |
Human Factors Engineer | Jane Robbins | 571-759-2579 (m) |
Sponsor Point of Contact | Deyne Bentt | 202-745-8000 ext. 52412 |
Recruiter Point of Contact | Brian Moon | 540-414-6710; 540-429-8126 (m) |
Development & Data Team Point of Contact | Kate Wheelbarger | 614-204-3268 |
VA Apps Help Desk | Hotline | 877-470-5947, M-F 7am-7pm CST |

Application Description

The VAR application is a responsive web app built for mobile or desktop/laptop use. It allows Veterans who are in the VA health care system to directly schedule or request primary care and mental health appointments at VA facilities where they already receive care. VA scheduling clerks then book appointments based on a Veteran’s request. The VAR app also allows Veterans who are members of a Primary Care Panel to schedule and cancel their selected primary care appointments directly through the app. Unless the patient is able to book the appointment directly through the app, submitting a request does not mean an appointment has been booked until it has been confirmed by the VA. The VAR app addresses a critical need to facilitate and expedite Veteran appointment scheduling.

Study Objectives

In this coordinated effort, HFE will assist in exploring factors affecting acceptance and adoption of the VAR app by Veterans. These factors include Performance Expectancy, Effort Expectancy, Social Influence, and two constructs that are significant predictors of usage behavior: Intention to Use and Facilitating Conditions.1 Based on direction from Connected Care, HFE will focus on Effort Expectancy, that is, expectations about the ease of use of the system, especially during the initial period of use. HFE will also collect feedback from Veterans on how they feel the VAR app makes the scheduling process easier and how well the app improves their sense of access to care.

The following study objectives reflect an agreement on the purpose of conducting the study, the expected outcomes (i.e. the type of results that will be provided), and how the results are expected to be used. The Connected Care office worked with the VA Human Factors Engineering office to confirm the objectives for this study:

Explore the experience of Veterans in using the app to book appointments and communicate how the use of the app affects their sense of access to care.

Explore impressions of older Veterans who were not adequately studied in the remote usability evaluation conducted on VAR 2.0.

Determine the degree to which Veterans feel that the VAR app makes the scheduling process easier.

Determine the degree to which Veterans feel that the VAR app will be a valuable tool for comparison with initial impressions collected before issues were addressed.

Collect general feedback from participants about their experience using the app, and solicit recommendations for improvements that may help improve Veteran scheduling experiences.

Collect high level metrics on Veteran impressions that can be used in executive reporting. See proposed questionnaires below.

Verify that the VAR app meets basic usability requirements (see objective #3) on the variety of device types intended to be used by Veterans with the app including:

iOS (mobile and tablet)

Android (mobile and tablet)

Laptop/Desktop

Explore the learning curve associated with use and adoption of the app.

Participants will be asked to perform a second and third scheduling task to produce a curve to assess learning against task time and other metrics. (three data points)

A question will be added to the closing questionnaire assessing how Veterans felt they were able to learn the task after repeated attempts.

Verify that Veterans can locate the features to provide feedback to the VA about their use of the app and how it affects their sense of access to care.

Verify that the VAR app meets basic usability requirements in order to be deployed successfully across the VA health system (for example, we expect a Veteran to completely schedule an appointment in “2 minutes or less”, with “0 errors”, and with a mean System Usability Scale (SUS) score above “85”). The following metrics will be captured to support this objective:

Time on Task (reported independently of task success).

Number of Errors per Task.

Task Success or Failure.

Task Satisfaction via post task questionnaires.

Subjective Satisfaction: As a standard practice, the SUS questionnaire will be used to gather and report on subjective satisfaction. This number will be available for comparison against SUS scores collected from testing on VAR 2.0.

Verify that the VAR app displays no “Serious” usability issues as revealed by the evaluation. If serious issues are identified, they should be addressed or mitigated prior to deployment.

Collect information from Veterans regarding their ability to easily identify their clinics for scheduling.

Identify and prepare vignettes that tell a story of how VAR affects individual participants. These vignettes will be scrubbed of any personally identifiable information (PII).

In support of these objectives, this study will uncover areas where the app performed well – that is, effectively, efficiently, and with satisfaction – and areas where the app fails to meet the needs of the participants. These qualitative findings will be identified, documented and ranked for severity and priority. Findings will also be accompanied by actionable recommendations for improvement. The study will use a standard usability scale to evaluate task usability as well as other subjective questionnaires. Findings from this study will be used to improve the usability of the app prior to deployment of the app to additional locations.
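The SUS target mentioned in the objectives can be illustrated with a short scoring sketch. It uses the standard SUS scoring rule (odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5). The function names and the constants below are illustrative assumptions; the thresholds are the example values quoted in the objectives ("2 minutes or less", "0 errors", mean SUS above "85"), not confirmed acceptance criteria.

```python
from statistics import mean


def sus_score(responses):
    """Score one 10-item SUS questionnaire; each response is an integer 1-5."""
    if len(responses) != 10 or any(r not in range(1, 6) for r in responses):
        raise ValueError("SUS needs ten responses, each from 1 to 5")
    # Index 0, 2, 4, ... are the odd-numbered (positively worded) items.
    total = sum((r - 1) if i % 2 == 0 else (5 - r)
                for i, r in enumerate(responses))
    return total * 2.5


# Example targets quoted in the study objectives (assumed names).
MAX_TASK_SECONDS = 120
MAX_ERRORS = 0
MIN_MEAN_SUS = 85


def meets_example_targets(task_seconds, errors, sus_scores):
    """Check one scheduling task and the group's SUS scores against the targets."""
    return (task_seconds <= MAX_TASK_SECONDS
            and errors <= MAX_ERRORS
            and mean(sus_scores) > MIN_MEAN_SUS)
```

For example, a participant who answers 5 on every odd-numbered item and 1 on every even-numbered item scores 100, and a participant who answers 3 throughout scores 50.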

Method Overview

Study Design

HFE will perform a usability test using formative and summative methods to deliver on the study objectives. HFE will meet with 25 Veterans in 1.5-hour one-on-one sessions. This study will be conducted in person at the HFE Informatics Research and Design Center (IRDC) with Veterans scheduling fictional appointments. Participants will use HFE mobile and desktop devices and a functionally tested preliminary production installation of the VAR 3.0 app.

Tasks performed by participants will be designed around one realistic scheduling scenario and will exercise all of the core functionality of the VAR 3.0 app including directly scheduling an appointment, viewing a scheduled appointment, canceling a scheduled appointment, making an appointment request, providing feedback and locating guidance on urgent needs (see Table 1: Session Agenda & Timing Aid). These tasks mirror those included in the VAR 2.0 testing and will allow for comparison against metrics captured during the field usability study. The tasks and scenario will also be developed to explore the learning curve associated with using the app by asking for consecutive direct scheduling of appointments. The test scenario will be developed in collaboration with subject matter experts to ensure accuracy as well as to support appropriately realistic test data with no anomalies that could cause distractions during the test sessions.

Time | On Hour | On Half Hour | Item |
5 min | :00 | :30 | Introduction |
10 min | :05 | :35 | Opening Questionnaire |
40 min | :15 | :45 | Task Completion |
5 min | | | Pre-Task Briefing |
5 min | | | Task 1A: Direct Scheduling: Primary Care #1 |
2 min | | | Post Task Questionnaire |
2.5 min | | | Task 1B: Direct Scheduling: Primary Care #2 |
2 min | | | Post Task Questionnaire |
2.5 min | | | Task 1C: Direct Scheduling: Primary Care #3 |
2 min | | | Post Task Questionnaire |
0.5 min | | | Task 2: View Scheduled Appointment |
2 min | | | Post Task Questionnaire |
0.5 min | | | Task 3: Cancel Appointment |
2 min | | | Post Task Questionnaire |
2.5 min | | | Task 4: Make Appointment Request: Mental Health |
2 min | | | Post Task Questionnaire |
0.5 min | | | Task 5: Send Feedback |
2 min | | | Post Task Questionnaire |
0.5 min | | | Task 6: Locate Guidance on Urgent Needs |
2 min | | | Post Task Questionnaire |
5 min | :55 | :25 | Usability Survey Instrument (SUS) |
15 min | :00 | :30 | Closing Questionnaire |
5 min | :15 | :45 | Closing |
15 min | | | Buffer time to account for in person logistics |

Table 1: Session Agenda & Timing Aid
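The timing aid can be cross-checked with a little arithmetic. The sketch below recomputes the clock labels for the six timed segments and sums the items inside the 40-minute Task Completion block. The list names and the helper function are illustrative assumptions; the durations are copied from Table 1.

```python
# Timed segments from Table 1, in minutes (buffer time is tracked separately).
SEGMENTS = [
    ("Introduction", 5),
    ("Opening Questionnaire", 10),
    ("Task Completion", 40),
    ("Usability Survey Instrument (SUS)", 5),
    ("Closing Questionnaire", 15),
    ("Closing", 5),
]

# Items inside the Task Completion block: briefing, then each task followed
# by its 2-minute post task questionnaire.
TASK_BLOCK_ITEMS = [5,          # Pre-Task Briefing
                    5, 2,       # Task 1A
                    2.5, 2,     # Task 1B
                    2.5, 2,     # Task 1C
                    0.5, 2,     # Task 2
                    0.5, 2,     # Task 3
                    2.5, 2,     # Task 4
                    0.5, 2,     # Task 5
                    0.5, 2]     # Task 6


def start_labels(segments, first_start=0):
    """Return (segment, ':MM') pairs for a session starting at minute first_start."""
    labels, elapsed = [], first_start
    for name, minutes in segments:
        labels.append((name, f":{elapsed % 60:02d}"))
        elapsed += minutes
    return labels


on_hour = start_labels(SEGMENTS, first_start=0)
on_half_hour = start_labels(SEGMENTS, first_start=30)
task_block_minutes = sum(TASK_BLOCK_ITEMS)  # 35.5, inside the 40-minute block
```

With these values, the on-hour labels come out as :00, :05, :15, :55, :00 and :15, and the half-hour labels as :30, :35, :45, :25, :30 and :45, matching the two clock columns of the table.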

Complete study materials can be found in Appendix A.

Test Location & Technical Configuration

Sessions will take place in person at the HFE Informatics Research and Design Center (IRDC) in Nashville, TN. Participants will be greeted and escorted to a usability lab designed to mimic a living room that is equipped with a two-way mirror and video equipment. Each participant will be provided with a pre-tested device similar to one that he or she owns and would use with the VAR app. For example, participants who own and would use iPhones will be provided with an iPhone that has been tested to ensure that the VAR app functions on the device. Participants who would use a laptop will be provided with a laptop that has been tested to ensure that the VAR app functions using the browser as installed on the machine.

The configuration will differ slightly based on mobile device use or laptop use. The following diagram depicts the configuration for the task completion on a mobile device (see Figure 1).

Figure 1. Hardware and Software Configuration for Task Completion on a Mobile Device

Mobile devices will be equipped with a lightweight recording sled (camera #1) to film users as they interact naturally with the devices and app, capturing on-screen use and human behavior (e.g., where and how they tap/swipe, hover, hesitate and hold the device; see Figure 2).

Figure 2: Mr. Tappy Mobile Device Recording Sled 2

A second camera (camera #2) will be positioned to capture the face of the participant to gauge moments of expression and emotion, providing “picture in picture” video capture. Sessions (audio, video and screen actions) will be recorded using Morae™ software (v3.3.3) installed on an HFE laptop. Morae3 is usability software which captures pre-defined metrics for the tasks, including clicks, mouse movement and task time. After the session, the recording file will be transferred to the moderator via a secure File Transfer Protocol (FTP) utility for coding and analysis. SurveyMonkey will be used to capture questionnaire feedback provided by the participant via the device of choice.

Participants who choose to use a laptop to perform their session will interact directly with the HFE laptop running Morae, which will capture screen interactions. A camera (camera #1) will be positioned to capture the face of the participant to gauge moments of expression and emotion, providing “picture in picture” video capture. For consistency, SurveyMonkey will be used to capture questionnaire feedback provided by the participant via a web browser on the HFE laptop. The following diagram depicts the configuration for the task completion on a laptop (see Figure 3).

Figure 3. Hardware and Software Configuration for Task Completion on a Laptop

Due to the device configuration, two Morae recording configuration files will be created to capture screen video input. The configurations will be identical with the exception of the video inputs. Complete study materials, including Morae configuration files, can be found in Appendix A.

Participant Screening

A total of 25 participants will be recruited by HFE using an external recruiting vendor for the usability testing sessions, with additional participants recruited for practice or dry run purposes. Participants will be screened to include only those who would naturally gravitate to using the types of appointment scheduling services provided by VAR (e.g., those who use mobile apps or My HealtheVet regularly). The participants will be carefully recruited to ensure adequate coverage of iOS mobile device use (phone and tablet), Android mobile device use (phone and tablet) as well as desktop browser use. When screening for device types, participants will be asked which device they would use to schedule their appointment using an app like VAR. In this manner, recruited participants will be paired with an HFE device that matches the device that they own and are familiar with, enabling HFE to assess the findings for platform-specific usability issues.

As much as possible within the constraints outlined above, recruited participants should represent a broad sampling of users, with a mix of ages, backgrounds and demographic characteristics conforming to the recruitment screener. In order to appropriately study the Veteran population, participants will be stratified by age (above 65 and below 65), which is correlated with technology skill and allows for simple representation of the Veteran population as a whole (half of which is below 65 and the other half above). The recruiting team will conduct rolling recruiting to ensure that the minimum targets are met, accounting for dropout that may occur mid-study.

The table below illustrates the types of users that may be recruited for this study. Additional types may be added as the testing scenarios are refined.

| Group 1: iPhone Users | Group 2: iPad Users | Group 3: Android Phone Users | Group 4: Android Tablet Users | Group 5: Laptop Web Browser Users |
Participant Demographics | At least 5 participants in each group conforming as closely as possible to the following: |

Table 2: Sample Recruitment Profile

HFE will schedule each participant to attend one individual UT session (90 minutes). Participant names will be replaced with Participant IDs so that an individual’s data cannot be tied back to individual identities.
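During rolling recruiting, the group minimums and the age split could be tracked with a small tally like the one below. This is only a sketch: the function names, the tuple layout, and the treatment of a participant aged exactly 65 (counted in the upper stratum here) are assumptions, since the plan only says "above 65 and below 65".

```python
from collections import Counter

# Device groups from Table 2 and the stated minimum per group.
GROUPS = ["iPhone", "iPad", "Android Phone", "Android Tablet", "Laptop Web Browser"]
MIN_PER_GROUP = 5


def remaining_quota(recruited):
    """recruited: list of (group, age) tuples. Returns seats still needed per group."""
    counts = Counter(group for group, _ in recruited)
    return {g: max(0, MIN_PER_GROUP - counts[g]) for g in GROUPS}


def age_split(recruited, cutoff=65):
    """Count participants below the cutoff and at or above it (assumed boundary)."""
    below = sum(1 for _, age in recruited if age < cutoff)
    return {"below": below, "at_or_above": len(recruited) - below}
```

For example, after recruiting five iPhone users aged 70 and two iPad users aged 40, `remaining_quota` reports 0 seats left for iPhone, 3 for iPad and 5 for each other group, and `age_split` reports 2 below the cutoff and 5 at or above it.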

Additional Demographics Questions

Demographics will be collected as part of the recruiting effort in order to save time during the sessions for more meaningful activities. Eight (8) questions must be asked in addition to the standard screener (herein referred to as the “demographics questionnaire”), which already includes the following information: Veteran/reservist status, VA employee, VA volunteer, gender, year of birth (age), state of residence, smartphone ownership (including OS), tablet ownership (including shared device, data plan, and brand), number of apps installed on smartphone and/or tablet, VA mobile apps installed, preferred platform, MHV usage (including frequency), participation in other studies, VSO membership, military branch, service era, disability status (including rating), use of VA mental health services, MHV Rx refill usage, pharmacies for medication refills, VHA services, pregnancy status, highest level of education, race/ethnicity.

The following additional demographics questions will be added to the opening questionnaire:

How would you rate your comfort level with using technology (such as your computer or mobile device that you will be using in this study)?

Very High (I seek out opportunities to learn and use new technologies)

High (I have no problem using new technologies when they are made available)

Medium (I am not the first person to log in but will eventually get there)

Low (I use technology only when I have to)

Very Low (I do everything I can to avoid the use of technology)

How would you rate your proficiency with using technology (such as your computer or mobile device that you will be using in this study)?

Expert (I bend technology to my will)

Advanced Intermediate (I can master the basics and many of the advanced features)

Intermediate (I can master the basics but rarely use advanced features)

Advanced Beginner (I can use the basics but sometimes need help)

Beginner (I struggle with using technology and often ask for help)

Do you require the use of assistive technology (like screen readers or magnifiers) when using your computer or mobile device?

Yes

No

If yes, which needs do these assistive technologies support? (select all that apply)

Vision

Hearing

Cognitive

Mobility

Other

Have you been diagnosed with Post Traumatic Stress Disorder (PTSD)?

Yes

No

I prefer not to answer

If yes, do you feel that PTSD affects your ability to use technology (including web sites and/or mobile apps)?

Does not affect my use of technology

Mildly affects my use of technology

Affects my use of technology

Significantly affects my use of technology

Prevents my ability to use technology most of the time

I prefer not to answer or Not Applicable

Have you been diagnosed with Traumatic Brain Injury (TBI)?

Yes

No

I prefer not to answer

If yes, do you feel that your TBI affects your ability to use technology (including web sites and/or mobile apps)?

Does not affect my use of technology

Mildly affects my use of technology

Affects my use of technology

Significantly affects my use of technology

Prevents my ability to use technology most of the time

I prefer not to answer or Not Applicable

All participant data collected via this demographics questionnaire and via the screening & scheduling script provided to the recruiting team will be submitted to the study lead within 24 hours after the last participant is scheduled. Each participant record in the spreadsheet must be accompanied by the participant ID.

Participant IDs

Participant IDs will be generated to be unique and to include basic demographic information. This supports the test team in easily understanding key information about the participant for the purposes of ensuring that recruiting goals are met and that correct study configurations are prepared. Participant IDs will be formatted as follows:

SS.PP.DDD.X.YY.C.C (e.g. 02.01.TAB.M.46.C.N)

Session #: SS (static session number, does not change)

Participant #: PP (the number of the participant calculated as number complete plus one, N+1)

Device Type: DDD (IPH = iPhone, IPA = iPad, TAB = Samsung Tab4, GAL = Samsung Galaxy S5, LAP = Laptop)

Gender: X (M or F)

Age: YY (two-digit age)

Participant has a Caregiver: C (C or N)

Participant is a Caregiver: C (C or N)
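The ID layout above can be validated and decoded with a regular expression. The sketch below is one possible reading of the format; the class name, field names and function name are illustrative assumptions, while the device codes and field order come from the list above.

```python
import re
from dataclasses import dataclass

# Device codes as defined in the Participant ID format.
DEVICE_CODES = {
    "IPH": "iPhone",
    "IPA": "iPad",
    "TAB": "Samsung Tab4",
    "GAL": "Samsung Galaxy S5",
    "LAP": "Laptop",
}

# SS.PP.DDD.X.YY.C.C
ID_PATTERN = re.compile(
    r"(\d{2})\.(\d{2})\.(IPH|IPA|TAB|GAL|LAP)\.([MF])\.(\d{2})\.([CN])\.([CN])"
)


@dataclass
class ParticipantId:
    session: int
    participant: int
    device: str
    gender: str
    age: int
    has_caregiver: bool
    is_caregiver: bool


def parse_participant_id(text):
    """Decode an ID such as '02.01.TAB.M.46.C.N'; raise ValueError if malformed."""
    match = ID_PATTERN.fullmatch(text)
    if match is None:
        raise ValueError(f"not a valid participant ID: {text!r}")
    ss, pp, device, gender, age, has_cg, is_cg = match.groups()
    return ParticipantId(int(ss), int(pp), DEVICE_CODES[device], gender,
                         int(age), has_cg == "C", is_cg == "C")
```

Applied to the example ID, `parse_participant_id("02.01.TAB.M.46.C.N")` yields session 2, participant 1, a Samsung Tab4, gender M, age 46, a participant who has a caregiver and is not a caregiver.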

Tasks

All participants will be directed to perform the same eight tasks in the same order. In this manner, HFE will be able to assess the degree to which the Veteran participant gains confidence and experience in using the app over the session. The tasks will exercise all of the major features of the application including directly booking appointments, viewing appointments (booked, cancelled and/or not booked), cancelling appointments, and making appointment requests as well as providing feedback on the app to the VA and determining what they should do if the scheduling were an urgent matter. All tasks will begin from the login screen to standardize for comparison between participants.

Task Descriptions (directions provided during task completion)

The following task information will be used to configure Morae for display of the tasks to the participants. Target Task Time and Success Criteria will be used for coding the results and will not be presented to the participant. Complete study materials can be found in Appendix A.

Task 1A: Direct Scheduling: Primary Care #1

Scenario: You have recently received an appointment reminder in the mail that your annual check-up is due. You see Dr. Kanelos, who works in Dr. Evans’ clinic, at the VA hospital in Cheyenne. You would like to schedule the appointment for the morning of August 22nd.

Task: Using the scheduling feature of this app, completely schedule your appointment directly with your primary care clinic.

Target Task Time: 2.5 minutes (estimate only)

Success Criteria: 1. Date and time is selected.

2. Appointment is successfully submitted.

3. Success message is displayed.

Task 1B: Direct Scheduling: Primary Care #2

Scenario: The reminder that you received about your upcoming checkup also included a reminder to schedule an appointment to have some annual bloodwork completed. According to the letter, you need to have the bloodwork completed at least one week before your annual exam appointment so that the doctor will have the results to review with you at your appointment. You can have the blood drawn by a tech at your primary care clinic.

Task: Using the scheduling feature of this app, completely schedule your lab appointment directly with your primary care clinic at least one week before the annual exam appointment you scheduled for August 22nd.

Target Task Time: 2.5 minutes (estimate only)

Success Criteria: 1. Date and time is selected.

2. Appointment is successfully submitted.

3. Success message is displayed.

Task 1C: Direct Scheduling: Primary Care #3

Scenario: You haven’t been taking one of your medications as well as you should have been and expect poor lab results to come back. So, you expect that Dr. Kanelos will want to follow up with you one month after the appointment that you just scheduled for your annual exam. You’re concerned that his calendar may fill up and decide to go ahead and schedule that additional follow up appointment now.

Task: Use the scheduling feature of this app to select times and completely schedule your follow up appointment directly with your primary care clinic.

Target Task Time: 2.5 minutes (estimate only)

Success Criteria: 1. Date and time is selected.

2. Appointment is successfully submitted.

3. Success message is displayed.

Task 2: View Scheduled Appointment

Scenario: Now that you’ve scheduled all of these appointments, you are writing down the appointments in your personal calendar and can’t remember the exact date and time of your lab work appointment.

Task: Using the features of this app, view the details of your lab work appointment to find the exact date and time of the appointment and to confirm that your submission has been received (i.e., your appointment has been successfully scheduled).

Target Task Time: 30 seconds (estimate only)

Success Criteria: 1. Correct appointment detail page is displayed.

2. Participant correctly identifies date and time of scheduled appointment.

Task 3: Cancel Appointment

Scenario: You are writing down the date and time of the follow up appointment in your personal calendar and realize that you have a vacation planned. You won’t be able to make it to the appointment after all and decide to just cross your fingers and hope that the lab work comes back clear and Dr. Kanelos doesn’t want a follow up.

Task: Use the features of this app to cancel the follow up appointment you made.

Target Task Time: 30 seconds (estimate only)

Success Criteria: 1. Correct appointment is cancelled.

2. Success message for cancellation is displayed.

Task 4: Make Appointment Request: Mental Health

Scenario: You’ve been having trouble sleeping again and, while you’re using the app, you decide to go ahead and try to get an appointment with the mental health clinic you saw last year when you were having difficulty sleeping. You’re hoping that they’ll be able to refill the Ambien prescription that they gave you a year ago. But, you don’t remember the name of the doctor you saw and don’t really care who you see this time around, since you’re hoping for just a prescription.

Task: Use the features of this app to request that the mental health clinic call you to schedule your appointment by phone. For the purposes of this task, you may enter your phone number as 555-555-1212 and select your own preference for best time to call.

Target Task Time: 2.5 minutes (estimate only)

Success Criteria: 1. Date and time options are selected.

2. Appointment request is successfully submitted.

3. Success message is displayed.

Task 5: Send Feedback

Scenario: Since you’ve spent all of this time using the app, you have some ideas that you want to share with the VA about how to make the app better for other Veterans.

Task: Use the app to send your feedback to the VA.

Target Task Time: 30 seconds (estimate only)

Success Criteria: 1. Participant successfully locates the feedback form.

2. Feedback form is completed successfully.

Task 6: Locate Guidance on Urgent Needs

Scenario: Due to your medical conditions, you occasionally experience situations where you need urgent care. You wonder if you could or should use this app in those types of situations.

Task: Use the app to find information on what to do if your appointment is an urgent matter.

Target Task Time: 30 seconds (estimate only)

Success Criteria: 1. Participant correctly identifies what they should do if the appointment is an urgent matter (call 911).

Test Data

The testing will require that participants interact with identical data as they perform the standardized tasks. To accommodate this, data will be identified within the testing environment and the test scenario will be developed for the tasks based on available data. The data will likely need to be augmented or corrected to ensure that it is complete (to support the tasks) and correct. The data will then be modified according to identified requirements and cloned by a member of the development team to produce 25 identical records, one for use by each participant. Because the data will also be used for test setup and validation, it may need to be reset between sessions or at the end of testing days so that each participant starts from the same records.

Assumptions & Constraints

The following are assumptions and constraints of this study method, design or configuration:

The VAR App is available, stable, and properly configured to support tasks defined in this plan no later than 5/9/2016.

The testing data is available to the HFE team no later than 5/9/2016.

Veteran participants will be required to travel to the Informatics Research & Design Center in Nashville, TN. This may limit or constrain recruiting.

Risks & Limitations

The study design imposes a series of risks for consideration by the Program Office. They include:

Incomplete VAR 3.0 build. The build may not be complete prior to the testing sessions. Testing must be scheduled in order to complete the study in a timely manner and inform development prior to deployment. See schedule. As a result, usability findings may be limited or incomplete. Additional usability evaluation is also recommended in conjunction with any field testing.

Stability of Test Environment. The test will be conducted in a technical environment that may become unresponsive at times. Should this occur during a test session, it could result in lost data or the need to reschedule the session. If this occurs, HFE will discuss schedule slippage with the Program Office. Together, the team will decide whether to stop the study or extend the schedule.

Network or System Latency. Because the app is accessed over a network connection to the test environment, participants may experience latency during a session. This lag can affect the quantitative task-time metrics and influence perceived participant satisfaction with the system. It can also result in lost sessions or the need to reschedule sessions. Again, if this occurs, HFE and the Program Office will decide on next steps.

Test Accounts and Simulated Data. Test accounts with test data will be reviewed for relevance and accuracy. However, data will be fictional. HFE will review test data with subject matter experts to perform the due diligence required to ensure data accuracy and avoid any distractions to the participant.

These risks and limitations will be recommunicated to the Program Office in conjunction with the final report.

Findings and Recommendations

The final report will include detailed findings that represent the observations, interpretations, impacts, and recommendations from the usability professionals involved with the study. Findings will be a combination of qualitative and quantitative. Each usability issue will have an assigned ranking and recommendations for improvements. When making recommendations for improvements, more than one will be included wherever possible, so developers have options to consider. Screen captures and/or video clips will be included to contribute to understanding of issues found. See Appendix B for a discussion on usability rankings.

User Performance Measures

The report issued on the results of this study will provide measures as follows:

Effectiveness – Objective measures of task success, task failures, and errors.

Efficiency – Objective measures of time on task and number of clicks/taps to complete each task.

Satisfaction – Subjective measures that express user satisfaction with the ease of use of the system.

Success criteria for each task will be developed in collaboration with the Program Office. Benchmark task times will be calculated by averaging the time for successful completion of the tasks by two expert users from the target audience as identified by the Program Office. Their averaged task times will be doubled to create the task time success thresholds. Task success will be determined by meeting all success criteria within the task time success threshold.
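The threshold rule described above can be sketched in a few lines of Python. This is only an illustration: the function names, the example expert times, and the reading of "within" as "less than or equal to" are assumptions, not part of the study plan.

```python
from statistics import mean


def time_threshold(expert_times_s):
    """Benchmark = mean of the expert task times; threshold = 2 x benchmark."""
    return 2 * mean(expert_times_s)


def task_succeeded(met_all_criteria, time_s, threshold_s, use_time=True):
    """A task succeeds when every success criterion is met and, if task time
    is being applied, the attempt finishes within the threshold.
    Treating "within" as <= is an assumption."""
    if not met_all_criteria:
        return False
    return time_s <= threshold_s if use_time else True


# Hypothetical expert times (seconds) for one task from the two expert users.
threshold = time_threshold([40.0, 50.0])  # benchmark 45.0 s, threshold 90.0 s
print(threshold)
print(task_succeeded(True, 120.0, threshold))                  # over the threshold
print(task_succeeded(True, 120.0, threshold, use_time=False))  # time ignored
```

The `use_time` flag mirrors the request that success and failure be reported both with and without task time as a criterion.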

The table below outlines the user performance measures that will be reported as part of this study. Please note that, because of the small sample size, statistical significance cannot be inferred. However, the usability issue data provided (e.g., number and type of “errors”) will be defensible based on studies defining the prevalence of usability problems in populations of the size in this study. Detailed performance data, for each task and each participant, will be provided in the form of an Excel workbook along with the final report. At the request of Connected Care, measures related to task success and failure will be reported both as calculated with task time as a success criterion and without.

Measure | Units (averages across all tasks and participants, as well as by scheduling type for comparative purposes) |
Effectiveness | |
Task Success | Ratio of Task Success (# of successes/# of attempts). Reported both with task time and without task time criteria. |
Task Failure | Ratio of Task Failure (# of failures/# of attempts). Reported both with task time and without task time criteria. |
Task Errors | Ratio of Errors (# of errors/# of attempts) |
Failed Tasks | Mean # of Failed Tasks per Participant. Reported both with task time and without task time criteria. |
Error Count | Total # of Unique Errors |
Efficiency | |
Task Times | Mean Time (in minutes or seconds) of Successful Attempts (per Task) |
Satisfaction | |
Task Satisfaction | Mean Response to Post Task Satisfaction Questionnaires (per Task) |
SUS | System Usability Scale Score (see Appendix C) |

User Performance Measures
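As a rough illustration of how the effectiveness and efficiency measures might be computed from logged attempts, the sketch below uses a hypothetical `Attempt` record and `summarize` function; the field names and data layout are assumptions and do not describe the actual HFE workbook. Unique error counts and satisfaction scores are left out.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass
class Attempt:
    participant: str    # participant ID
    task: str           # task label
    criteria_met: bool  # all non-time success criteria satisfied
    time_s: float       # time on task, in seconds
    errors: int         # number of errors observed during the attempt


def summarize(attempts, thresholds, use_time=True):
    """Compute ratio and mean measures, with or without the time criterion."""
    def ok(a):
        return a.criteria_met and (not use_time or a.time_s <= thresholds[a.task])

    n = len(attempts)
    successes = [a for a in attempts if ok(a)]
    failed = defaultdict(int)   # failed task count per participant
    for a in attempts:
        failed[a.participant] += 0 if ok(a) else 1
    times = defaultdict(list)   # successful attempt times per task
    for a in successes:
        times[a.task].append(a.time_s)
    return {
        "task_success": len(successes) / n,
        "task_failure": (n - len(successes)) / n,
        "task_errors": sum(a.errors for a in attempts) / n,
        "mean_failed_tasks": mean(list(failed.values())),
        "mean_time_s": {t: mean(v) for t, v in times.items()},
    }
```

Calling `summarize` twice, once with `use_time=True` and once with `use_time=False`, yields the two versions of the success and failure measures requested by Connected Care.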

Schedule & Logistics

The following timeframes are provided to plan, execute and report on the study. This schedule is subject to change.

Milestone/Tasks | Estimated Date | Approx. Duration | Responsible |
Study Proposal (this document) | 4/27/2016 | N/A | HFE |
Dry Run | 7/27/2016 | One 2-hour session | HFE |
Scenarios/Tasks Working Session (to finalize materials) | 7/27/2016 | One 2-hour session | HFE, CC |
Verification of Test Configuration | 7/27/2016 | One 2-hour session | HFE, CC |
Participant Recruiting | 7/28/2016 – 8/10/2016 | 10 days | HFE |
Study Plan Delivery | 7/29/2016 | Within 1 week of test | HFE |
Test Sessions | 8/4/2016 – 8/16/2016 | 10 days | HFE |
Preliminary Findings | 8/12/2016 | 1 day | HFE, CC |
Findings and Data Compilation (includes internal HFE peer review) | 8/17/2016 – 8/31/2016 | 10 days | HFE |
Draft Report Briefing | 9/1/2016 | 2 hours | HFE |
Final Report Delivery | 9/6/2016 | 3 days | HFE |

Table 3: Proposed Study Schedule

This is the most accurate timeline possible at this time and is subject to change, depending on the availability of the VAR 3.0 application, supporting test data, successful completion of verification of testing configuration and the ability to recruit participants. The delivery of draft report and final report is notional and completely dependent on the completion of the minimum number of participants.

Verification of the test configuration will consist of a working session with the Connected Care Office to ensure that the test materials (including task success criteria), technical configuration and roles of all members of the study team (including the moderator and observers) do not require revisions prior to the first session with a participant. This session will last approximately one hour. During this session, the study team will also benchmark the task performance for the purposes of coding the results of the study. A study sponsor will be asked to play the role of the participant (or designate someone else to do so) and complete the tasks. Data collected from this benchmark will be used to calculate ideal task times and success criteria. Inability to conduct this session successfully could seriously jeopardize the study schedule and require all participant interview sessions to be rescheduled.

Daily Updates

Daily updates on the progress of the study will be provided to the program office. The recruiter will provide HFE with daily statistics on the status of the recruiting effort to include the number of participants who have been contacted, the number who have refused participation and the number who have accepted participation. The study team will add statistics on the completion of the study sessions to include high level results from the completed sessions. This daily report will be provided to the program office by HFE for distribution as they see fit.

Interview Schedule Time Slots & Logistics

Sessions will be scheduled with at least 30 minutes in between each, for debrief by the administrator(s) and data logger(s), and to reset HFE test tools to the proper test conditions. A maximum of five participants will be scheduled for each test session day, over a two week testing period. Testing will continue until the recruiting requirements are complete. The following table provides a notional session schedule of available time slots. Please note the following:

Each session will last approximately 1 hour and 30 minutes. All times are CST.

Session time slots are provided for maximum flexibility in scheduling and are not provided so that all slots will be filled on all days.

Session time slots are provided early and late in the day to accommodate the needs of Veterans who work during normal business hours. These time slots are to be used only on an “as needed” basis. The majority of sessions should be scheduled within the core three sessions in the middle of the day.

The session schedule does not allow for a lunch break or other breaks for the facilitation team. As such, recruiters should schedule in such a manner so as to allow for breaks in the day. If it is necessary to schedule all five sessions in a single day, or more than three consecutive sessions, the recruiter must coordinate with the facilitator.

In order to ensure that the devices that are being used stay fully charged, the recruiter should avoid scheduling more than two consecutive sessions where the participant will use the same device (e.g., two sessions in a row where both participants are using iPhone).

When scheduling a participant, the recruiter will assign a participant ID following the guidance outlined in the corresponding participant ID section above. This participant ID will be included in all communications with the participant and with the study team. The participant ID will also be included in the WebEx session name and subsequent recording file name.
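The scheduling rules above (90-minute sessions, 30 minutes between sessions, at most five sessions per day, and no more than two consecutive sessions on the same device) can be checked with a short script. The sketch below is illustrative only; the function names and the 08:00 default start are assumptions drawn from the notional schedule, not an HFE tool.

```python
from datetime import datetime, timedelta


def session_slots(first_start="08:00", count=5, session_min=90, gap_min=30):
    """Return (start, end) pairs in 24-hour time for one test day."""
    start = datetime.strptime(first_start, "%H:%M")
    slots = []
    for _ in range(count):
        end = start + timedelta(minutes=session_min)
        slots.append((start.strftime("%H:%M"), end.strftime("%H:%M")))
        start = end + timedelta(minutes=gap_min)  # debrief and reset time
    return slots


def longest_same_device_run(devices):
    """Length of the longest run of consecutive sessions on one device."""
    longest = run = 0
    previous = None
    for device in devices:
        run = run + 1 if device == previous else 1
        longest = max(longest, run)
        previous = device
    return longest


for start, end in session_slots():
    print(start, "-", end)

# Hypothetical device assignments for one day, in session order.
day = ["iPhone", "iPhone", "Android", "iPhone"]
if longest_same_device_run(day) > 2:
    print("Warning: more than two consecutive sessions on the same device")
```

With the defaults, the generated slots begin at 08:00, 10:00, 12:00, 14:00, and 16:00, which matches the five time slots in the notional schedule.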

Week 1 Sessions | | |
Tues 8/2/2016 | Participant ID | User ID** |
8:00-9:30 am* | 01.PP.DDD.X.YY.C.C | |
10:00-11:30 am | 02.PP.DDD.X.YY.C.C | |
12:00-1:30 pm | 03.PP.DDD.X.YY.C.C | |
2:00-3:30 pm | 04.PP.DDD.X.YY.C.C | |
4:00-5:30 pm* | 05.PP.DDD.X.YY.C.C | |
Wed 8/3/2016 | Participant ID | User ID** |
8:00-9:30 am* | 06.PP.DDD.X.YY.C.C | |
10:00-11:30 am | 07.PP.DDD.X.YY.C.C | |
12:00-1:30 pm | 08.PP.DDD.X.YY.C.C | |
2:00-3:30 pm | 09.PP.DDD.X.YY.C.C | |
4:00-5:30 pm* | 10.PP.DDD.X.YY.C.C | |
Thurs 8/4/2016 | Participant ID | User ID** |
8:00-9:30 am* | 11.PP.DDD.X.YY.C.C | |
10:00-11:30 am | 12.PP.DDD.X.YY.C.C | |
12:00-1:30 pm | 13.PP.DDD.X.YY.C.C | |
2:00-3:30 pm | 14.PP.DDD.X.YY.C.C | |
4:00-5:30 pm* | 15.PP.DDD.X.YY.C.C | |
Fri 8/5/2016 | Participant ID | User ID** |
8:00-9:30 am* | 16.PP.DDD.X.YY.C.C | |
10:00-11:30 am | 17.PP.DDD.X.YY.C.C | |
12:00-1:30 pm | 18.PP.DDD.X.YY.C.C | |
2:00-3:30 pm | 19.PP.DDD.X.YY.C.C | |
4:00-5:30 pm* | 20.PP.DDD.X.YY.C.C | |

Week 2 Sessions | | |
Mon 8/8/2016 | Participant ID | User ID** |
8:00-9:30 am* | 21.PP.DDD.X.YY.C.C | |
10:00-11:30 am | 22.PP.DDD.X.YY.C.C | |
12:00-1:30 pm | 23.PP.DDD.X.YY.C.C | |
2:00-3:30 pm | 24.PP.DDD.X.YY.C.C | |
4:00-5:30 pm* | 25.PP.DDD.X.YY.C.C | |
Tues 8/9/2016 | Participant ID | User ID** |
8:00-9:30 am* | 26.PP.DDD.X.YY.C.C | |
10:00-11:30 am | 27.PP.DDD.X.YY.C.C | |
12:00-1:30 pm | 28.PP.DDD.X.YY.C.C | |
2:00-3:30 pm | 29.PP.DDD.X.YY.C.C | |
4:00-5:30 pm* | 30.PP.DDD.X.YY.C.C | |
Wed 8/10/2016 | Participant ID | User ID** |
8:00-9:30 am* | 31.PP.DDD.X.YY.C.C | |
10:00-11:30 am | 32.PP.DDD.X.YY.C.C | |
12:00-1:30 pm | 33.PP.DDD.X.YY.C.C | |
2:00-3:30 pm | 34.PP.DDD.X.YY.C.C | |
4:00-5:30 pm* | 35.PP.DDD.X.YY.C.C | |
Thurs 8/11/2016 | Participant ID | User ID** |
8:00-9:30 am* | 36.PP.DDD.X.YY.C.C | |
10:00-11:30 am | 37.PP.DDD.X.YY.C.C | |
12:00-1:30 pm | 38.PP.DDD.X.YY.C.C | |
2:00-3:30 pm | 39.PP.DDD.X.YY.C.C | |
4:00-5:30 pm* | 40.PP.DDD.X.YY.C.C | |
Fri 8/12/2016 | Participant ID | User ID** |
8:00-9:30 am* | 41.PP.DDD.X.YY.C.C | |
10:00-11:30 am | 42.PP.DDD.X.YY.C.C | |
12:00-1:30 pm | 43.PP.DDD.X.YY.C.C | |
2:00-3:30 pm | 44.PP.DDD.X.YY.C.C | |
4:00-5:30 pm* | 45.PP.DDD.X.YY.C.C | |

Week 3 Sessions | | |
Mon 8/15/2016 | Participant ID | User ID** |
8:00-9:30 am* | 21.PP.DDD.X.YY.C.C | |
10:00-11:30 am | 22.PP.DDD.X.YY.C.C | |
12:00-1:30 pm | 23.PP.DDD.X.YY.C.C | |
2:00-3:30 pm | 24.PP.DDD.X.YY.C.C | |
4:00-5:30 pm* | 25.PP.DDD.X.YY.C.C | |
Tues 8/16/2016 | Participant ID | User ID** |
8:00-9:30 am* | 26.PP.DDD.X.YY.C.C | |
10:00-11:30 am | 27.PP.DDD.X.YY.C.C | |
12:00-1:30 pm | 28.PP.DDD.X.YY.C.C | |
2:00-3:30 pm | 29.PP.DDD.X.YY.C.C | |
4:00-5:30 pm* | 30.PP.DDD.X.YY.C.C | |

If possible, sessions may begin on Tuesday, 8/2 and Wednesday, 8/3. All sessions should be completed by Tuesday, 8/16.

* Early morning and late afternoon timeslots should be scheduled only as necessary.

** User IDs, used by participants for logging into the system, will be entered by the testing team after participants are scheduled.

Appendix A: Study Materials

Session Day Set Up Checklist

Lab preparation:

Coordinate front desk coverage for dates of study and procedure for welcoming participants and notifying facilitator of participant arrival. Prepare for early arrival of participants.

Place sign downstairs indicating usability testing location.

Ensure living room lab is clean and temperature and lighting are set to comfortable levels.

Remove any unnecessary cameras or other A/V equipment that is not being used for the study.

Prepare water bottles for offering to participants.

Mobile device preparation. Ensure all devices are:

Fully charged.

Successfully connected to the Wi-Fi.

Successfully connected to VA VPN (AnyConnect).

Successfully connected to the VAR app.

Placed adjacent to the facilitator’s seating in the living room lab for easy access during the session.

Set up with the four questionnaires bookmarked (saved as shortcuts) for easy access.

Laptop preparation:

Close all open applications.

Connect computer to Wi-Fi and VPN.

Open Morae Recorder and study config file. Ensure that the recording is configured to save to your desktop (Modify Recording Details > Recording Folder field).

Camera preparation:

Ensure Mr. Tappy camera is functioning with Morae Recorder and positioned properly on the set up to capture full mobile screen and to minimize glare on the device screen.

Ensure secondary camera is functioning with Morae Recorder and positioned properly to capture facial expressions of participant (not positioned to capture the facilitator).

Paperwork preparation:

Retrieve a stack of business cards & notepad with pen.

Retrieve printed script and timing aid.

Retrieve copies of the A/V Consent Forms for completion by the participant in person.

Retrieve the task cards.

Retrieve list of logins.

Session Script: Mobile Device Use

Pre-Session Checklist

Place an A/V Consent Form on the clipboard and place with a pen (in the waiting area up front).

If applicable, move the coffee table back in front of the couch for easy access to mobile devices.

Confirm participant number and login/account information assigned to participant.

Place the task description cards in the correct order.

Create a login information card for the session and place on top of task cards.

Check recruiting information to determine which device the participant will be using and double check charge and connectivity on the device.

Queue up the Opening Questionnaire, select the participant ID in the first question and mount the iPad on Mr. Tappy.

On the laptop:

Ensure Morae Recorder is still running and has correct config file loaded. The config file should be for mobile device use.

Kick off WebEx as Host and start the conference phone audio.

Share the laptop screen.

Start WebEx recorder and pause.

Immediately prior to the session, start the Morae recorder and un-pause the WebEx recorder.

Introduction (5 minutes)

[Escort the participant to the living room lab, providing restroom information along the way, and ask if he or she would care for a bottle of water. Once the participant is seated and comfortable, proceed with the script.]

I will be reading from a script in order to standardize the session for all participants.

I’d like to start by thanking you for participating in this study. We rely heavily on volunteers like you to make the VA’s systems better for Veterans. As you know, my name is [insert moderator name] and I’m from Human Factors Engineering and I am your facilitator. We also have [insert names of observers, note takers, etc.] who are on the phone and viewing us via remote technology. They will be observing today, taking notes and helping with the technology. Periodically, one of these people may interrupt us if there is an issue with the technology, for example, if they notice that you experience a technical difficulty or a camera stops functioning. If we have extra time at the end of the session, I may open for questions from these people. But, more than likely, you will only be interacting with me today.

[If participant is accompanied by a caregiver, add the following:

We’d like to thank you for accompanying your Veteran to his/her session today. We have incorporated several questions into our session today specifically to gather information from you, as his/her caregiver. However, we’d like to remind you that our Veteran is our primary participant today and we would like to receive his/her unbiased feedback. At the same time, we do want to learn about how well the app works for you all as a team. In your relationship, who would be the most likely person to operate the device to use the Veteran Appointment Request app to manage appointments?

If the caregiver is the primary user, add the following:

When we start using the devices, I will ask you to operate them.

If so, adjust the facial camera to capture both the Veteran and the caregiver.

NOTE: Attempt to defer any discussion on the caregiver relationship to collect the information within the opening questionnaire.]

Our session today will last no longer than 1 hour and 30 minutes. There are three sections to our session. First, we’d like to learn about your experiences scheduling appointments with the VA. In the second portion of our session, we would like you to try using a new VA appointment scheduling mobile app. During this portion, we will give you a device to use that can access a trial version of the app. The device should be similar to the one that you own and use. We’ll then sit with you while you use it to complete some fictional but realistic tasks using the app. We will wrap up with a final questionnaire designed to gather your impressions on the usability of the app and your potential use of it in the future.

Your participation is completely voluntary and you may withdraw at any time. All of the information that you provide will be kept confidential, and your name will not be associated with any feedback that you provide, verbally or via questionnaires.

Do you have any questions or concerns before we get started? [Address questions.]

Opening Questionnaire (10 minutes)

We will begin with questions about your experience scheduling appointments at the VA. Here is a [device being used] that you can use to complete the questionnaire.

[Double check that the opening questionnaire is loaded on the device and that the correct participant ID is selected in the first question in the questionnaire. Hand the setup to the participant. The device should be mounted to Mr. Tappy so that the observers can observe and take notes on responses.]

You can see that it is equipped with a camera that captures video of the screen while you work. You’ll be using this set up throughout the session. It is a little awkward but is the best way for us to capture your use of mobile devices for our analysis later. If we have any issues with the device or the cameras, I may have to ask you to hand it back to me so that I can make adjustments. Do you have any questions about how this set up works?

[Address any questions.]

[If the participant is accompanied by a caregiver, add the following:

As you input information on behalf of our Veteran, please make sure that you respond to questions with responses from our Veteran. I’d like you both to use the device as you would if you were using it together at home.]

Please go ahead and complete the questionnaire. Please speak out loud as you complete the questions by reading the question and stating your response out loud so that we can be sure to accurately capture your responses. We can discuss your responses as you work, if you would like.

Task Completion (40 minutes)

Thank you for completing the questionnaire.

[Make sure that the opening questionnaire is submitted.]

Please place the device on the table while we discuss the next part of the session. The VA has built a mobile app to help you book and request appointments. We are interested in how easy the app is for Veterans like you to use. We’d like to watch you try out the app based on some realistic scenarios. Hopefully, we’ll find out if there are elements that are frustrating or confusing. As you work, please let us know if you find anything hard to follow or if you’re not sure what to do next. We call this a usability test, but we are testing the app. So if you have trouble understanding something, that’s an indication that there’s a problem with the app and this is not a reflection on you. The purpose of our time together is to uncover usability problems with the app before it is released to other Veterans. You’re coming in with your white glove to look for our dust, not the other way around. So, there are no wrong answers to any questions we may ask. No one observing today was involved in the design of the app you are using, so please feel free to be as open and honest as possible.

We appreciate any comments you have about the app and ask that you “think out loud” as you are presented with the screens. In other words, I’d like for you to give me a “blow by blow” of what you are thinking as you work. For example, explain why you are tapping on what you are tapping on, why you are entering the information that you enter and, basically, what is going through your head as you work. It may be a bit awkward at first but it’s really very easy once you get used to it.

Basically we’d like any thought that comes into your head to come out your mouth. For example, if I were driving to work I might say as I get in my car, “I’m opening the car door and sitting down, my seat is really hot from sitting in the sun… I should put it in the shade tomorrow. I’m putting on my seat belt. I’m almost out of gas. So, I need to remember to go to the gas station tonight on my way home.” Hearing this type of narration helps usability experts know your concerns, any surprises or issues that might come up in the process and why you’re going through the process the way that you do.

[If the participant is accompanied by a caregiver, add the following:

We would like for both of you to “think out loud,” as you work. In this way, our usability experts can understand concerns of each of you as individuals as well as the two of you as a team.]

Do you have any questions about how to “think out loud”?

[Address any questions regarding think aloud protocol.]

We will ask you to perform 8 tasks using the app today. The tasks are based on a reasonable, but fictional set of scenarios. We need you to play along as if you were experiencing the scenario, even if parts of it do not apply to you personally. For each task, I will hand you a card describing the task. Before you start the task, I’d like to ask you to read the task description and then describe it to me so that we can make sure that you understand the task. This is important because we are not testing whether you understand the task. When you confirm that you understand, you will start. When you feel like you have completed the task or that you’d like to stop, please tell me. You may review the task card at any time while you work. After each task, you will hand me the device and I will bring up a questionnaire to gather information about your experience using the app to accomplish the task. We will then move on to the next task. We will complete all 8 tasks in this way.

Do you have any questions before we start? [Address any questions.]

[Hand the participant the task card.]

Here is the card for the [first, second, third, etc.] task. Please go ahead and read the task description. I’ll wait a moment while you read.

[While the participant reads, bring up VAR on the device and login using the participant account for the respective session. NOTE: You must manually enter the test URL each time due to system redirects to Launchpad (https://vet-int.mobilehealth.va.gov/var-web-3.0/).]

Can you please summarize the task for me?

[Address any questions or misunderstandings to ensure that the participant can recount an accurate understanding of the scenario and the task. For Task 1B, confirm that the participant understands that he/she should be scheduling another appointment with the primary care clinic.]

[Hand the participant the setup.]

Please go ahead and proceed with completing the task. When you feel like you have completed the task, please tell me that you are done. Don’t forget to speak aloud and give me a “blow by blow” of what you are thinking as you work.

[Avoid affecting the participant’s behavior and/or task time as much as possible. Prompt only for think aloud, if the participant stops narrating. Make notes of any questions for follow up after the task completion so as to avoid influencing use. If the participant asks for help, ask for them to show you what they would do if you were not there.]

Thank you for showing me how you would use the app for this task. Now, please hand me the device so that I can bring up some questions about the task.

[Queue up the post task questionnaire. Select the participant ID and the task #. Hand the setup back to the participant.]

Please go ahead and complete the questionnaire. As a reminder, please read aloud as you complete the questions so that we can be sure to accurately capture your responses. We can discuss your responses as you work, if you would like.

Thank you for completing the questionnaire.

[Take the setup from the participant and make sure that the questionnaire is submitted. Load up VAR and login and/or return to the home screen. NOTE: All tasks must start from the home screen for accurate comparison between participants.]

We will now move on to the next task.

[Repeat the script above (from the instruction “Hand the participant the task card.”) for each of the 8 tasks.]

SUS (5 minutes) & Closing Questionnaire (15 minutes)

Thank you for showing me how you would use the app. Please hand the device to me. We’ll wrap up today with a questionnaire about your impression of the app and any overall thoughts you have about it.

[Queue up the closing questionnaire and select the participant ID. Hand the setup back to the participant.]

Here’s the device that you can use to complete the questionnaire. As a reminder, please read aloud as you complete the questions so that we can be sure to accurately capture your responses.

[If the participant is accompanied by a caregiver, add the following:

As you input information on behalf of our Veteran, please make sure that you respond to questions with responses from our Veteran. I’d like you both to use the device as you would if you were using it together at home.]

We can discuss your responses as you work, if you would like.

[Take the setup from the participant and make sure that the questionnaire is submitted.]

Closing

[If time permits, open the call for questions from observers.]

[Remember to speak slowly.] This concludes our session today. We will be combining your feedback with that of other people who participate in this study. When all of the sessions are complete, we will be delivering a final report on the usability of the VAR app based on the combined feedback.

Once again, thank you for participating. Your feedback is invaluable in helping us to understand if the Veteran Appointment Request app is ready to be used by other Veterans. In fact, if you have any additional feedback after our session today that you would like me to consider for inclusion in the final report, please feel free to email me.

[Hand the participant facilitator’s business card.]

I would like to ask you for one more favor before we wrap up. Later today you will receive an email from me with a link to one more questionnaire. In an effort to continually improve how VHA Human Factors Engineering conducts studies, we would like your feedback on the session carried out by our team today, including my facilitation. The email from me will include a link to a questionnaire to gather that feedback. It is very short, including only a handful of questions, and should only take a couple of minutes. There will be a code in the email that you should use in the questionnaire to differentiate this study from other studies currently underway by Human Factors Engineering. It won’t identify your responses individually.

Do you have any remaining questions or comments for me today?

[Address any remaining questions.]

We are now going to stop the recorder and save the recording file. For the recording, this is Session [state participant ID for the recorder; save the Morae recording with the participant ID as a suffix in the file name and stop the WebEx recorder].

Thank you again and have a fantastic day! I will go ahead and walk you out.

[Escort the participant back to the waiting room.]

Post-Session Checklist

Stop the WebEx recorder. Close the WebEx and hang up the phone.

Ensure that the Morae Recording File is saved with participant ID as the suffix to the file name.

Send the Morae Recording File via the CSRA Secure FTP utility (https://sfta.sra.com/).

Session Script: Laptop Use

Pre-Session Checklist

Move the coffee table so that the laptop desk is more accessible.

Place an A/V Consent Form on the clipboard and place with a pen (in the waiting area up front).

Place the task description cards in the correct order.

Create a login information card for the session and place on top of task cards.

On the laptop:

Queue up the Opening Questionnaire, select the participant ID in the first question.

Ensure Morae Recorder is still running and has correct config file loaded. The config file should be for laptop use.

Kick off WebEx as Host and start the conference phone audio.

Share the laptop screen.

Start WebEx recorder and pause.

Immediately prior to the session, start the Morae recorder and un-pause the WebEx recorder.

Introduction (5 minutes)

[Escort the participant to the living room lab, providing restroom information along the way, and ask if he or she would care for a bottle of water. Once the participant is seated and comfortable, proceed with the script.]

I will be reading from a script in order to standardize the session for all participants.

I’d like to start by thanking you for participating in this study. We rely heavily on volunteers like you to make the VA’s systems better for Veterans. As you know, my name is [insert moderator name] and I’m from Human Factors Engineering and I am your facilitator. We also have [insert names of observers, note takers, etc.] who are on the phone and viewing us via remote technology. They will be observing today, taking notes and helping with the technology. Periodically, one of these people may interrupt us if there is an issue with the technology, for example, if they notice that you experience a technical difficulty or a camera stops functioning. If we have extra time at the end of the session, I may open for questions from these people. But, more than likely, you will only be interacting with me today.

[If participant is accompanied by a caregiver, add the following:

We’d like to thank you for accompanying your Veteran to his/her session today. We have incorporated several questions into our session today specifically to gather information from you, as his/her caregiver. However, we’d like to remind you that the Veteran is our primary participant today and we would like to receive his/her unbiased feedback. At the same time, we do want to learn about how well the app works for you all as a team. In your relationship, who would be the most likely person to operate the computer to use the Veteran Appointment Request app to manage appointments?

If the caregiver is the primary user, add the following:

When we start using the computer, I will ask you to operate it.

If so, adjust the facial camera to capture both the Veteran and the caregiver.

NOTE: Attempt to defer any discussion on the caregiver relationship to collect the information within the opening questionnaire.]

Our session today will last no longer than 1 hour and 30 minutes. There are three sections to our session. First, we’d like to hear about your experiences scheduling appointments with the VA. In the second portion of our session, we would like you to try using a new VA appointment scheduling app. During this portion, we will ask you to use this laptop to access a trial version of the app. We’ll sit with you while you use it to complete some fictional but realistic tasks using the app. We will wrap up with a final questionnaire designed to gather your impressions on the usability of the app and your potential use of it in the future.

Your participation is completely voluntary and you may withdraw at any time. All of the information that you provide will be kept confidential, and your name will not be associated with any feedback that you provide, verbally or via questionnaires.

Do you have any questions or concerns before we get started? [Address questions.]

Opening Questionnaire (10 minutes)

We will begin with questions about your experience scheduling appointments at the VA. You should see that this laptop has the questionnaire up on the screen.

[Double check that the opening questionnaire is loaded on the laptop and that the correct participant ID is selected in the first question in the questionnaire.]

[If the participant is accompanied by a caregiver, add the following:

As you input information on behalf of our Veteran, please make sure that you respond to questions with responses from our Veteran. I’d like you both to use the computer as you would if you were using it together at home.]

Please go ahead and complete the questionnaire. As you work, please read each question and state your response out loud so that we can be sure to accurately capture your responses. We can discuss your responses as you work, if you would like.

Task Completion (40 minutes)

Thank you for completing the questionnaire.

[Make sure that the opening questionnaire is submitted.]

Let’s pause for a few minutes while I explain the next part of the session. The VA has built a mobile app to help you book and request appointments. We are interested in how easy the app is for Veterans like you to use. We’d like to watch you try out the app based on some realistic scenarios. Hopefully, we’ll find out if there are elements that are frustrating or confusing. As you work, please let us know if you find anything hard to follow or if you’re not sure what to do next. We call this a usability test, but we are testing the app. So if you have trouble understanding something, that’s an indication that there’s a problem with the app and this is not a reflection on you. The purpose of our time together is to uncover usability problems with the app before it is released to other Veterans. You’re coming in with your white glove to look for our dust, not the other way around. So, there are no wrong answers to any questions we may ask. No one observing today was involved in the design of the app you are using, so please feel free to be as open and honest as possible.

We appreciate any comments you have about the app and ask that you “think out loud” as you are presented with the screens. In other words, I’d like for you to give me a “blow by blow” of what you are thinking as you work. For example, explain why you are clicking on what you are clicking on, why you are entering the information that you enter and, basically, what is going through your head as you work. It may be a bit awkward at first but it’s really very easy once you get used to it.

Basically we’d like any thought that comes into your head to come out your mouth. For example, if I were driving to work I might say as I get in my car, “I’m opening the car door and sitting down, my seat is really hot from sitting in the sun… I should put it in the shade tomorrow. I’m putting on my seat belt. I’m almost out of gas. So, I need to remember to go to the gas station tonight on my way home.” Hearing this type of narration helps usability experts know your concerns, any surprises or issues that might come up in the process and why you’re going through the process the way that you do.

[If the participant is accompanied by a caregiver, add the following:

We would like for both of you to “think out loud,” as you work. In this way, our usability experts can understand concerns of each of you as individuals as well as the two of you as a team.]

Do you have any questions about how to “think out loud”?

[Address any questions regarding think aloud protocol.]

We will ask you to perform 8 tasks using the app today. The tasks are based on a reasonable but fictional set of scenarios. We need you to play along as if you were experiencing the scenario, even if parts of it do not apply to you personally. For each task, I will hand you an index card describing the task. Before you start the task, I’d like to ask you to read the task description and then describe it to me so that we can make sure that you understand the task. This is important because we are not testing whether you understand the task. When you confirm that you understand the task, you will start. When you feel like you have completed the task or that you’d like to stop, please tell me. You may review the task card at any time while you work. After each task, I will bring up a questionnaire to gather information about your experience using the app to accomplish the task. We will then move on to the next task. We will complete all 8 tasks in this way.

Do you have any questions before we start? [Address any questions.]

[Hand the participant the task card.]

Here is the card for the [first, second, third, etc.] task. Please go ahead and read the task description. I’ll wait a moment while you read.

[While the participant reads, bring up VAR on the device and login using the participant account for the respective session. NOTE: You must manually enter the test URL each time due to system redirects to Launchpad (https://vet-int.mobilehealth.va.gov/var-web-3.0/).]

Can you summarize the task for me?

[Address any questions or misunderstandings to ensure that the participant can recount an accurate understanding of the scenario and the task. For Task 1B, confirm that the participant understands that he/she should be scheduling another appointment with the primary care clinic.]

Please go ahead and proceed with completing the task. When you feel like you have completed the task, please tell me that you are done. Don’t forget to speak aloud and give me a “blow by blow” of what you are thinking as you work.

[Avoid affecting the participant’s behavior and/or task time as much as possible. Prompt only for think aloud, if the participant stops narrating. Make notes of any questions for follow up after the task completion so as to avoid influencing use. If the participant asks for help, ask them to show you what they would do if you were not there.]

Thank you for showing me how you would use the app for this task. Now, I’m going to bring up a questionnaire so that you can answer a few questions about the task.

[Queue up the post task questionnaire on the laptop, selecting the participant ID and the task #.]

Please go ahead and complete the questionnaire. As a reminder, please read aloud as you complete the questions so that we can be sure to accurately capture your responses. We can discuss your responses as you work, if you would like.

Thank you for completing the questionnaire.

[Make sure that the questionnaire is submitted.]

We will now move on to the next task.

[Repeat the script above (from the instruction “Hand the participant the task card.”) for each of the 8 tasks.]

SUS (5 minutes) & Closing Questionnaire (15 minutes)

Thank you for showing me how you would use the app. We’ll wrap up today with a questionnaire about your impression of the app and any overall thoughts you have about it.

[Queue up the closing questionnaire, selecting the participant ID.]

Please complete the questionnaire. As a reminder, please read aloud as you complete the questions so that we can be sure to accurately capture your responses.

[If the participant is accompanied by a caregiver, add the following:

As you input information on behalf of our Veteran, please make sure that you respond to questions with responses from our Veteran. I’d like you both to use the computer as you would if you were using it together at home.]

We can discuss your responses as you work, if you would like.

[Make sure that the questionnaire is submitted.]

Closing

[If time permits, open the call for questions from observers.]

[Remember to speak slowly.] This concludes our session today. We will be combining your feedback with that of other people who participate in this study. When all of the sessions are complete, we will be delivering a final report on the usability of the VAR app based on the combined feedback.

Once again, thank you for participating. Your feedback is invaluable in helping us to understand if the Veteran Appointment Request app is ready to be used by other Veterans. In fact, if you have any additional feedback after our session today that you would like me to consider for inclusion in the final report, please feel free to email me.

[Hand the participant facilitator’s business card.]

I would like to ask you for one more favor before we wrap up. Later today you will receive an email from me with a link to one more questionnaire. In an effort to continually improve how VHA Human Factors Engineering conducts studies, we would like your feedback on the session carried out by our team today, including my facilitation. The email from me will include a link to a questionnaire to gather that feedback. It is very short, including only a handful of questions, and should only take a couple of minutes. There will be a code in the email that you should use in the questionnaire to differentiate this study from other studies currently underway by Human Factors Engineering. It will not identify your responses individually.

Do you have any remaining questions or comments for me today?

[Address any remaining questions.]

We are now going to stop the recorder and save the recording file. For the recording, this is Session [state participant ID for the recorder; save the Morae recording with the participant ID as a suffix in the file name and stop the WebEx recorder].

Thank you again and have a fantastic day! I will go ahead and walk you out.

[Escort the participant back to the waiting room.]

Post-Session Checklist

Stop the WebEx recorder. Close the WebEx and hang up the phone.

Ensure that the Morae Recording File is saved with participant ID as the prefix to the file name.

Send the Morae Recording File via the CSRA Secure FTP utility (https://sfta.sra.com/).

Send follow up email to participant.

Morae Configuration

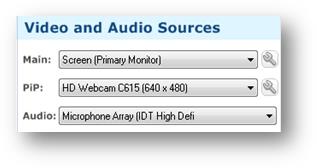

There are two VAR 3.0 Morae study configurations (see attachments). They are very basic in terms of “tasks” or, in this case, “task.” The difference between the configurations lies in the capture properties. The “laptop” version captures the laptop’s screen as the “Main” capture and the participant’s face as the Picture in Picture (PIP), or secondary, capture. The “mobile” version instead uses two external cameras: one to capture the mobile device via the Mr. Tappy mount and the other to capture the participant’s face. The resolution settings for the configurations are as follows:

Figure 4: Morae Input Configuration for Mobile Device Use

Figure 5: Morae Input Configuration for Laptop Use