CAPSA Pilot Memo


Generic Clearance for Cognitive, Pilot and Field Studies for Bureau of Justice Statistics Data Collection Activities


OMB: 1121-0339

MEMORANDUM

MEMORANDUM TO: Shelly Wilkie Martinez

Office of Statistical and Science Policy

Office of Management and Budget

THROUGH: Lynn Murray

Clearance Officer

Justice Management Division

Daniela Golinelli, Ph.D.

Chief, Corrections Statistics Program

Bureau of Justice Statistics

FROM: Lauren Glaze

Statistician and CAPSA Project Manager

Corrections Statistics Program

Bureau of Justice Statistics

DATE: May 23, 2013

SUBJECT: BJS Request for OMB Clearance for Pilot Test of the Census of Adult Probation Supervising Agencies (CAPSA), 2014, through the generic clearance agreement, OMB Number 1121-0339

The Bureau of Justice Statistics (BJS) currently plans to conduct the Census of Adult Probation Supervising Agencies (CAPSA) in July of 2014. Prior to conducting the 2014 CAPSA national study, BJS is seeking approval under this generic clearance to conduct a pilot test of the CAPSA instrument to determine whether the instrument correctly screens adult probation supervising agencies into the survey, to test the instrument’s functionality, and to assess burden associated with completing the survey. The findings from the pilot test will be used to make the necessary changes to the survey instrument to enhance data quality, reduce burden, and increase efficiencies for the national study. BJS will submit to OMB a full clearance package for the 2014 CAPSA national study by January of 2014.

Introduction

The CAPSA will supplement and enhance BJS’s portfolio of community corrections surveys, which form an important part of the Bureau’s mandate under the Omnibus Crime Control and Safe Streets Act of 1968, as amended (42 U.S.C. 3732); the Act authorizes BJS, within the Department of Justice (DOJ), to collect this information. Specifically, the CAPSA will achieve the following four goals:

1. Develop a frame of all public and private adult probation supervising agencies. Currently, there is no comprehensive listing of all independent public and private entities that conduct probation supervision in the U.S. Through the Annual Probation Survey (APS), BJS has tracked the population of adults under probation supervision since 1980. However, the APS is designed to collect aggregate counts and therefore relies on central reporters (some of which are not supervising agencies) when possible to provide the population data for all or part of the state.

The CAPSA will go beyond the scope of the APS to identify all independent probation supervising agencies as defined by the following criteria: 1) supervises at least one probationer disposed or sentenced by an adult criminal court for a felony; 2) supervises at least one adult probationer who is required to regularly report to the agency either in person, via telephone, mail, or electronic means; and 3) is independent, meaning the agency has authority and/or operational responsibility to administer adult probation. The CAPSA roster of probation supervising agencies is still being developed by reviewing and compiling data from available resources (e.g., current APS list, commercial databases and directories; membership lists of professional associations; state and local government publications, websites, and other sources; U.S. Census Bureau’s Government Integrated Directory); the roster currently contains about 1,700 agencies. Once finalized, the roster of agencies will be screened to determine eligibility status for inclusion in the final CAPSA frame of adult probation supervising agencies.1 The CAPSA frame will also serve as an independent source to systematically assess the potential for coverage error in the APS, and potentially improve coverage. A comparison of the two lists could identify agencies (e.g., new agencies, completely independent agencies) that fall within the scope of the APS but for which their population counts are currently excluded.
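The three eligibility criteria above amount to a simple screening rule: an agency enters the final frame only if all three hold. The following is a minimal sketch of that rule, not the CAPSA instrument itself; the class name, field names, and example agencies are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class RosterAgency:
    """One entry on the initial roster (all field names are hypothetical)."""
    name: str
    supervises_adult_felony_probationer: bool   # criterion 1
    has_regularly_reporting_probationer: bool   # criterion 2
    is_independent: bool                        # criterion 3


def is_capsa_eligible(agency: RosterAgency) -> bool:
    """Screen an agency in only when all three criteria are met."""
    return (
        agency.supervises_adult_felony_probationer
        and agency.has_regularly_reporting_probationer
        and agency.is_independent
    )


# Hypothetical roster entries, for illustration only.
roster = [
    RosterAgency("Hypothetical County Probation Department", True, True, True),
    RosterAgency("Hypothetical Central Reporter", False, False, False),
]

# The final frame keeps only the agencies that pass the screen.
frame = [agency.name for agency in roster if is_capsa_eligible(agency)]
print(frame)
```

Failing any single criterion screens an agency out, which is why the screening items need standardized definitions for each one.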

2. Provide accurate and reliable statistics that describe the characteristics of probation supervising agencies.

Under Title 42, U.S.C. Section 3732, BJS is directed to collect and analyze statistical information concerning the operations of the criminal justice system at the federal, state and local levels. BJS periodically collects information about the organization and operations of correctional facilities (e.g., jails and prisons) and parole agencies, but BJS has not collected this type of information about probation supervising agencies since 1991, primarily due to the complexities of the organization and amorphous nature of probation supervision.2 For example, the administration of probation varies, with its loci in either executive or judicial branches, and authority residing at state, county, judicial district, municipal, or other levels, often mixed within a single state. In addition, jurisdictions sometimes establish contracts with private agencies to supervise adult probationers. The practice of probation supervision also varies across and within states, with agencies implementing different methods of supervision, programs for offenders, techniques to assess risks/needs, and exercising, if applicable, various amounts of authority/discretion related to changing probation terms/conditions of supervision. However, the probation population is the largest component of the correctional population, representing 57% of all offenders under correctional supervision.3 Therefore, it is critical to enhance our understanding of: 1) how changes in the probation population relate to shifts in policies and practices of supervising agencies, and 2) how those shifts are associated with different organizational structures of probation within and across states.

The census will collect information on key agency characteristics such as operational structure (e.g., public versus private agency status); branch and level of government; authority and operational responsibilities (e.g., budgetary, staffing, policy); populations supervised (e.g., felons on probation, misdemeanants on probation, parolees, juveniles); funding sources (e.g., federal, state, or local sources; supervision fees; offender fines); functions/methods of supervision (e.g. face-to-face visits, electronic monitoring or supervision, programs for sex offenders or probationers with mental illness); and supervision authority (e.g., impose conditions, extend probation term, impose short incarceration period). Using these data, BJS could begin to compare outcomes of probation supervision as a function of agency characteristics. More specifically, BJS could use the 2014 CAPSA data to determine if probation supervising agencies within particular organizational structures and/or that exercise particular practices and policies of probation supervision have lower rates of recidivism, as measured by the rate at which probationers are incarcerated. At multiple meetings and conferences attended by BJS, stakeholders such as the American Probation and Parole Association (APPA) and the National Institute of Corrections’ (NIC) State Executives of Probation and Parole Network have articulated that the need for this information is even more apparent now due to the fiscal challenges currently faced by many states. They have expressed that probation supervising agencies need this type of information to evaluate whether scarce resources are being used efficiently and effectively to reduce recidivism and enhance public safety.

3. Provide a frame for future research

The CAPSA frame and information about the probation supervising agencies will support the efforts at the Office of Justice Programs (OJP) to integrate evidence-based research into developing programs, with the primary goals of reducing recidivism and enhancing public safety, and prioritizing funding of programs. The CAPSA will not only provide additional information about probation and permit linking agency characteristics to outcomes of offenders, but it will also provide OJP a means with which to evaluate its programs that are specifically related to probation agencies and offenders. CAPSA statistics will provide the ability to identify potential problems of programs, for example as they relate to outcomes, and a way to track changes in the programs and outcomes over time.

BJS will use the CAPSA to lay a foundation for surveying probation supervising agencies periodically in the future to address other important operational characteristics or emerging issues in community corrections. One potential mechanism for such collections is supplemental collections to the APS; the CAPSA will provide the information necessary to identify sub-groups among the population of supervising agencies that would be eligible to receive particular supplements. For example, factors related to the workload of agencies (e.g., staffing or types and sizes of caseloads) are important to understanding agency operations and their impact on the size of the population, types of offenders supervised, and outcomes of supervision.4 BJS is currently designing the first supplement for the APS and will return to OMB in the fall of 2013/early 2014 for approval to pilot test the supplement under this generic clearance.

In addition to APS supplemental collections, BJS may use the CAPSA findings to develop new and/or support existing collections for additional research in community corrections.5 For example, stakeholders (e.g., the APPA Health and Safety Committee and the National Association of Probation Executives) have identified a pressing need for information on the characteristics and experiences of community corrections officers, specifically data on hazardous duty among the officers similar to that collected for police officers through the FBI’s Law Enforcement Officers Killed and Assaulted (LEOKA) data system. Such data are considered critical for improving the operations of community corrections and enhancing public safety. The CAPSA could provide a frame for the first-ever national survey of officers to better understand the types and characteristics of hazardous incidents they encounter while on the job. The benefits of collecting such officer data would include the ability to link the type of incident to officer characteristics (e.g., training and education, years in service, demographics) and agency characteristics (e.g., number of officers, size and characteristics of probation population, policies related to field visits or carrying a firearm etc.) from CAPSA. This type of analysis would assist in interpreting the hazardous duty data by assessing not only the different types of incidents encountered by different types of officers but also understanding the policies and practices of the agencies that could impact the risks to officers. This is information that community corrections administrators have said would assist them in their planning of officer programs and trainings as well as potentially demonstrating the need for additional resources, such as staff or protective equipment.

Request for Developmental Work

Under the generic clearance (OMB Number 1121-0339), BJS is seeking approval to conduct developmental research for the CAPSA through a pilot of the survey instrument to test its screening capability and functionality to determine the feasibility for the study on a national scale. Through a cooperative agreement with BJS, project staff from Westat and APPA will serve as the data collection agents for the pilot test. As explained previously, the findings from the pilot test will be used to make the necessary changes to the survey instrument to enhance functionality and data quality, reduce burden, and increase efficiencies for the national study. A full information clearance package for the 2014 CAPSA national study will be submitted by BJS to OMB by January of 2014.

The proposed pilot test will achieve the following goals:

Test the capability of the instrument to correctly screen out agencies from the initial CAPSA roster that are not independent adult probation supervising agencies (before they are asked the full complement of survey questions) and correctly screen into the final CAPSA frame those that are.

Determine the capacity of respondents to answer each question, identify sections of the questionnaire that are unclear, and examine the questions where problems of nonresponse or missing information might occur.

Test the functionality of the online survey tool, including survey response protocols for identifying agencies that are missing from the initial CAPSA roster to screen them to determine eligibility.

Determine the burden associated with the survey process, including time for reviewing instructions, searching existing data sources, gathering the data needed, and completing and reviewing responses.

As part of survey development to prepare for the pilot test, six public probation agencies (i.e., state or municipal) responded to draft questions and provided comments about survey content, terminology, and question wording. Key findings included the following:

Most study definitions (e.g., probation, supervision, subsidiary office) were well understood. However, some terms, such as “top-administrative body” and “central office,” and the questions asking whether the budget was “federally-funded” or “state-funded,” posed challenges for respondents.

Respondents were often unclear about the definition of the focal agency. Issues associated with centralized authority and responsibility for direct supervision made it difficult to answer some questions without a clearer and more applicable definition.

Use of a June 30 reference date worked well for all participants. Agencies maintain the data needed to answer the questions using that date because it corresponds to the end of their fiscal year and/or because it is a midyear point.

Having incorporated the lessons from the survey development activities, the proposed developmental work will allow BJS and Westat to assess if, and to what extent, the survey instruments must be further tailored to accommodate the various organizational structures of probation supervision found across the country. The probation supervision programs in New Jersey, Kentucky, Michigan, and Texas illustrate the variety and complexity of organizational structures. In New Jersey, probation is centrally administered through the judicial branch, specifically, by the Administrative Office of the Courts (AOC). The AOC establishes policies and procedures which are implemented in 15 probation districts across the state. Probation in Kentucky is also centrally administered, but through the executive branch rather than the judicial branch. Fifty-five field offices throughout the state adhere to the policies and procedures set forth by the Kentucky Department of Corrections (DOC). Michigan is more complicated as felony and misdemeanant probation are administered by separate bodies and through different branches of government. Felony probation is centrally administered by an executive branch agency, the state DOC. However, misdemeanant probation is decentralized and is the responsibility of approximately 80 district courts that oversee cases disposed in their districts. At the other end of the spectrum are states like Texas, where probation is completely decentralized. In Texas, more than 100 Community Supervision and Corrections Departments (CSCDs) operate independently from any state-level body. District and county court judges are responsible for the operations of each CSCD. In addition, some jurisdictions, such as Georgia and Florida, establish contracts with private agencies to supervise adult probationers.

Sixty supervising probation agencies will be invited to participate in the pilot test. Westat will test the questionnaire for public agencies using a web-based data collection methodology with 48 public agencies (see Attachment A for the public agency questionnaire). Through a subcontract with Westat, APPA staff will test a paper survey version of the CAPSA questionnaire tailored to private agencies that perform probation functions, among 12 private agencies.6 (See Attachment B for the private company questionnaire; see below for more information about the design and number of private agencies.)

The pilot for the CAPSA will require contacting a variety of agencies because of the inherent differences across the population of agencies, as previously described. (See below for more information about the sample size.) Specifically, the pilot test will assess the extent to which the survey instruments successfully function in the following ways:

Accommodate the differences between agencies associated with branch of government (i.e., executive versus judicial), level of government (i.e., state versus local), and degree of centralization.

Elicit from respondents answers that serve to only screen out ineligible agencies and only screen in eligible agencies; the screening items must employ standardized definitions that control for the presence of idiosyncratic definitions that might be used by individual agencies.

Provide respondents with a means of responding (i.e., online or by telephone) based on their access to the Internet and the complexity of the response task. The experience of conducting the APS suggests that at least 10 percent of agencies will be unable to respond via the Internet. Regardless of response mode, respondents will be asked to review lists of known probation supervising agencies in their state and to report any additional agencies that are missing from the list. Respondents must be provided tools to complete this potentially complex task that minimize burden and maximize response and data quality.

To meet the challenges posed by these requirements, the pilot test design calls for surveying 48 public probation supervising agencies that represent the four branch-level configurations shown in Table 1 (below). A purposive sample of agencies will be selected based on information from available sources (e.g., initial CAPSA roster, APS data from the 2012 survey year, and other sources). While the literature on optimal sample sizes for pilot tests is not extensive, the research that has been conducted recommends that a reasonable minimum sample size for survey or scale development is about 30 participants from the population of interest, but if different groups exist within the population, the literature recommends 12 participants per group.7 The sample sizes for the CAPSA pilot test of the survey instrument are based on the latter recommendation.

Table 1. Proposed target pilot sample of public agencies

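| Agency type | Number |
| --- | --- |
| Executive branch | 24 |
| Executive branch: state | 12 |
| Executive branch: local | 12 |
| Judicial branch | 24 |
| Judicial branch: state | 12 |
| Judicial branch: local | 12 |
| Total | 48 |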
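The allocation in Table 1 follows directly from the 12-per-group recommendation applied to the four branch-by-level groups. The sketch below reproduces that arithmetic under the design described in this memo; the variable names are hypothetical.

```python
# 12 participants per group, per the sample-size recommendation cited above.
PER_GROUP = 12

# The four public-agency groups: branch of government crossed with level.
public_groups = [
    (branch, level)
    for branch in ("Executive", "Judicial")
    for level in ("State", "Local")
]

# Allocate the same number of agencies to every group.
public_sample = {group: PER_GROUP for group in public_groups}

# Subtotals by branch, as shown in Table 1.
branch_totals = {
    branch: sum(n for (b, _), n in public_sample.items() if b == branch)
    for branch in ("Executive", "Judicial")
}

public_total = sum(public_sample.values())
private_total = PER_GROUP  # private agencies are treated as a single group
pilot_total = public_total + private_total

print(branch_totals, public_total, pilot_total)
```

Treating the private agencies as one additional group of 12 yields the 60 agencies invited to the pilot.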

As previously explained, data collection from the private agencies will be done via a paper questionnaire, with telephone follow-up for nonresponse. The rationale for using a different mode for the private agency collection is twofold:

The questionnaire for the private agencies will be different from, and shorter than, the one used for the public agencies. This is due to the differences in structure and organization between public and private agencies (i.e., less variation among private agencies). For example, private agencies will not be asked about the branch and level of government in which they are located.

Only up to 12 private agencies will be asked to participate in the pilot study. This sample size is based on the recommendations from the literature about optimal pilot sample sizes for survey development and on the fact that both the expected number of such agencies to screen on the initial CAPSA roster (fewer than 150) and the variation across private agencies are significantly smaller than for public agencies. The costs associated with programming an instrument are not warranted for this number of respondents in the pilot test.

The 12 private agencies will be selected based on the number of jurisdictions within which they operate and the relative size of the adult probation populations they supervise.

Data collection is proposed to begin in early July 2013 and end in September 2013. The pilot test will be implemented through several steps. First, a study packet will be mailed to the agency head that includes a cover letter describing the purpose of the study and the type of information that will be requested, and an Agency Information Form (AIF) on which the agency head can provide contact information for the respondent. The AIF sent to public agencies will ask the agency head to indicate if the respondent will be able to access the survey via the Internet. Agency heads will be asked to fax, email, or mail the AIF to Westat. (See Attachment C for contact materials.)

A study packet will be mailed to each designated respondent that includes a cover letter explaining the purpose of the study and a one-page bulleted summary describing the topics that will be addressed in the questionnaire. The packet will also contain a one-page set of study definitions and a one-page listing of known adult probation supervising agencies in the state. Respondents will be asked to review these prior to completing the survey in order to minimize time spent during the interview and to maximize the quality of the data provided. If the respondent is to complete the survey via the web, the cover letter will include instructions for logging in to the survey website (i.e., the URL and unique agency PIN). (See Attachment C for respondent contact materials and web screen shots.) Approximately one week after the respondent mailing, Westat telephone interviewers will begin conducting interviews (using the web instrument) with those respondents that will not be able to complete the survey online (see Attachment C for telephone interview script).8

Also about one week after the respondent mailing, Westat will send a thank-you/reminder postcard to all public agencies assigned to the web mode (see Attachment C for postcard). This postcard will thank those who have completed the survey and encourage those who have not responded to complete the web survey or contact Westat to complete a telephone interview. Westat telephone interviewers will begin telephone prompting non-respondents approximately one month after the thank-you/reminder correspondence. (See Attachment C for prompting script.) These contacts will serve to verify receipt of materials, answer questions, determine and attempt to resolve potential problems with timely submission, and prompt for questionnaire completion.

Approximately two weeks after the respondent mailing, APPA staff will begin telephone follow-up activities with private agencies that have not responded to the mail survey request. The staff will verify receipt of the materials, answer questions, and attempt to conduct a telephone interview (using the paper questionnaire) with the respondent if they indicate a preference to complete the survey by telephone.
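The follow-up contacts described above are all timed relative to the respondent mailing. The sketch below lays out that sequence as dates. It is illustrative only: the mailing date is hypothetical, the memo's "approximately" intervals are treated as exact, and "one month" is assumed to mean 30 days.

```python
from datetime import date, timedelta


def contact_schedule(respondent_mailing: date) -> dict:
    """Derive follow-up dates from the respondent mailing date.

    Assumptions: intervals are exact, and 'one month' means 30 days.
    """
    postcard = respondent_mailing + timedelta(weeks=1)
    return {
        # Westat interviews respondents who cannot complete the web survey.
        "telephone_interviews_begin": respondent_mailing + timedelta(weeks=1),
        # Thank-you/reminder postcard to public agencies in the web mode.
        "postcard": postcard,
        # APPA follow-up with private agencies that have not responded.
        "appa_private_follow_up": respondent_mailing + timedelta(weeks=2),
        # Westat prompting of non-respondents, one month after the postcard.
        "telephone_prompting": postcard + timedelta(days=30),
    }


# Hypothetical mailing date in early July 2013, for illustration only.
schedule = contact_schedule(date(2013, 7, 8))
for step, when in schedule.items():
    print(step, when.isoformat())
```

With this hypothetical mailing date, every step falls within the proposed July to September 2013 data collection window.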

At the end of the data collection period, Westat (and APPA), on behalf of BJS, will send a thank-you letter to the agency head of all agencies that participated in the pilot and a copy of the letter to the respondent. (See Attachment C for thank-you letter.)

As surveys are submitted, Westat will review the data for consistency across items and with agency characteristics known from external sources. The results of these comparisons will be the basis for assigning cases for telephone follow-up. The primary goal of the follow-up activity is to identify and clarify the cause of unexpected or inconsistent responses, for example experiences of the agency that could not be conveyed using the scripted questions, lack of comprehension by the respondent, and items that did not apply to the responding agency. The trained interviewing staff will contact the respondents to discuss the inconsistencies; since the focus of these conversations is unique to each case, no scripted protocol has been prepared.

As the survey collects information about organizations and not individuals, Westat’s Institutional Review Board determined that the activities associated with data collection for this project are not considered human subjects research and that obtaining informed consent is not required. However, the contact materials, questionnaires, and telephone interview scripts will still inform all respondents of the purpose of the collection, the voluntary nature of the study, and the time associated with participation. No incentives are planned.

According to Title 42 U.S.C. 3735, the information gathered in this data collection shall be used only for statistical or research purposes, and shall be gathered in a manner that precludes their use for law enforcement or any purpose relating to a particular individual other than statistical or research purposes. The data collected from the probation supervising agencies represent characteristics of the agencies. No individually identifiable information will be collected.

Data will be housed at Westat in Rockville, MD, on Westat’s secure, password-protected computer system, and will be protected by access privileges assigned by the appropriate system administrator. All systems are backed up on a regular basis, and the backups are kept in a secure storage facility. Westat will transfer the data to BJS using Secure File Transfer Protocol (SFTP). All files copied to Westat's Secure Transfer Site are securely stored and transferred using Federal Information Processing Standards (FIPS) 140-2 validated Advanced Encryption Standard (AES) encryption, recognized as the U.S. Federal government encryption standard. Westat will create a personal account for BJS with login ID and password. Westat will retain the data on site until the end of the grant, at which time the data will be destroyed.

Once the CAPSA data are made available to BJS to securely download, they will be physically stored at BJS, which is located in a secure building that houses DOJ’s OJP offices. All OJP servers are backed up on a regular basis and stored in a secure location, specifically a locked room with access limited to only information technology personnel from OJP and requiring a badge swipe to enter. Technical control of the CAPSA pilot test data will be maintained through a system of firewalls and protections. Specifically, the data will be stored on a standard secure server behind the DOJ’s firewall and will be protected by access privileges assigned by the BJS information technology specialist.

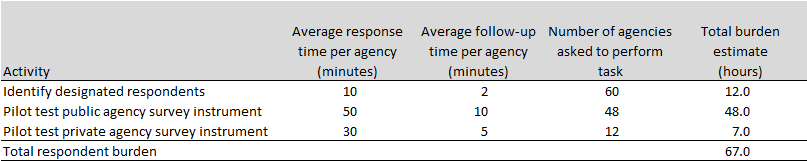

The estimated maximum time to conduct the CAPSA pilot study is about 67 hours. The burden hour estimates are shown in Table 2. They are divided across two pilot test tasks:

Identifying designated respondents (12.0 hours). All public and private agencies will be asked to identify a staff member who will serve as respondent. In some cases, this might be the agency head, but many times the agency head will designate this responsibility to a member of the agency staff. The agency head will be asked to consider who is most knowledgeable about the types of data requested on the survey when making the designation. Agency heads will be asked to provide contact information for the respondent to Westat via fax (or telephone or email). This is estimated to take an average of 10 minutes per agency head. Two minutes, on average, is estimated for follow-up if the form is not returned by the due date. Westat will follow up with the agency by telephone to obtain the information.

Pilot testing the survey instruments with follow-up as needed (55.0 hours). The burden hour estimates are based on information collected during the instrument development activities. We anticipate that the average public agency burden will consist of 50 minutes to provide the initial survey response, including the time for reviewing instructions, searching existing data sources, gathering the data needed, and completing and reviewing the collection of information, and 10 minutes to clarify responses or provide data for items that were initially left blank through follow-up efforts. Since the private agency survey instrument is shorter than the public agency instrument, the burden estimate is lower for both the initial response (30 minutes) and follow-up (5 minutes).
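The burden-hour totals for the two tasks can be checked from the per-agency minutes and the agency counts given in this memo. The sketch below does that arithmetic. It assumes that every agency requires follow-up on both tasks, which is consistent with the estimate being described as a maximum; the variable and function names are hypothetical.

```python
# Agency counts from the pilot design: 48 public and 12 private agencies.
PUBLIC_AGENCIES = 48
PRIVATE_AGENCIES = 12
ALL_AGENCIES = PUBLIC_AGENCIES + PRIVATE_AGENCIES


def hours(agencies: int, minutes_per_agency: int) -> float:
    """Convert a per-agency burden in minutes into total hours."""
    return agencies * minutes_per_agency / 60


# Task 1: identifying designated respondents (10 min form + 2 min follow-up).
# Assumption: every agency head needs the follow-up call (upper bound).
designation_hours = hours(ALL_AGENCIES, 10) + hours(ALL_AGENCIES, 2)

# Task 2: pilot testing the survey instruments with follow-up.
# Assumption: every agency needs response clarification (upper bound).
survey_hours = (
    hours(PUBLIC_AGENCIES, 50)     # public initial response
    + hours(PUBLIC_AGENCIES, 10)   # public follow-up
    + hours(PRIVATE_AGENCIES, 30)  # private initial response
    + hours(PRIVATE_AGENCIES, 5)   # private follow-up
)

total_hours = designation_hours + survey_hours
print(designation_hours, survey_hours, total_hours)  # 12.0 55.0 67.0
```

Under these assumptions the two tasks come to 12 and 55 hours, matching the stated total of about 67 hours.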

Table 2. Estimated burden

Contact Information

Questions regarding any aspect of this project can be directed to:

Lauren Glaze

Statistician and CAPSA Project Manager

Bureau of Justice Statistics

801 7th St, NW

Washington, DC 20531

Office phone: 202-305-9628

E-mail: [email protected]

Attachments

See the list below for additional attachments.

Attachment A: CAPSA questionnaire for public agencies

Attachment B: CAPSA questionnaire for private agencies

Attachment C: All other pilot test materials; see table of contents first to find a particular document

1 If any potential supervising agencies that are missing from the initial roster are discovered during the course of screening and data collection, they too will be screened to determine eligibility.

2 The 1991 Census of Probation and Parole Agencies was BJS’s first and only census of probation agencies. The main goal was to establish a sampling frame of probation agencies and field offices to conduct the 1995 Survey of Adults on Probation (SAP), the first nationally representative survey of probationers.

3 Glaze, Lauren E. & Parks, E. (2012). Correctional Populations in the United States, 2011. Bureau of Justice Statistics, Washington, DC.

4 Given that the CAPSA questionnaire was designed to not only collect information about CAPSA-eligible agencies but also screen ineligible agencies out of the CAPSA, adding more questions to the CAPSA, especially questions that ask for counts such as the number of staff by position, would increase the burden and could potentially adversely affect response rates. Some decisions about which topics to address through the CAPSA or later through an APS supplement were based on these types of issues.

5 Prior to supplementing or implementing any new collections, BJS will submit a clearance package(s) to OMB for approval of such collections once BJS has developed the plans.

6 APPA was chosen for this task due to its status as the national professional organization for community corrections professionals and other stakeholders, including private agencies that perform probation functions. During the course of CAPSA development, BJS and Westat have discovered through meetings and conferences of community corrections groups that private agencies may be reluctant to share proprietary information about the probation functions they perform. In order to maximize the ability of CAPSA to evaluate the instrument and procedures that will be used with this group, APPA will conduct the pilot study activities and learn more about the willingness and ability of these agencies to provide data. For the national implementation of the 2014 CAPSA, APPA’s role will be to assist by soliciting support and encouraging participation by private agencies.

7 Johanson, George A. & Brooks, G.P. (2010). “Initial Scale Development: Sample Size for Pilot Studies.” Educational and Psychological Measurement, 70(3): 394-400. SAGE

8 Prior to data collection, the interviewers will receive training on the purpose and goals of CAPSA and the pilot test. Westat will use seasoned interviewers who have worked on other studies of probation agencies (e.g., APS) and have conducted telephone interviews and follow-up efforts.

