CLPE_OMB Part A

Comprehensive Literacy Program Evaluation: Striving Readers Implementation Study

OMB: 1850-0945

Office of Management and Budget Clearance Request:

Supporting Statement Part A—Justification (DRAFT)

Comprehensive Literacy Program Evaluation: Striving Readers Implementation Study

PREPARED BY:

American Institutes for Research®

1000 Thomas Jefferson Street, NW, Suite 200

Washington, DC 20007-3835

PREPARED FOR:

U.S. Department of Education

Institute of Education Sciences

August 2018

Office of Management and Budget Clearance Request

Supporting Statement Part A

August 2018

Prepared by: American Institutes for Research®

1000 Thomas Jefferson Street NW

Washington, DC 20007-3835

202.403.5000 | TTY 877.334.3499

www.air.org

Contents

Supporting Statement for Paperwork Reduction Act Submission

1. Circumstances Making Collection of Information Necessary

3. Use of Improved Technology to Reduce Burden

4. Efforts to Avoid Duplication of Effort

5. Efforts to Minimize Burden on Small Businesses and Other Small Entities

6. Consequences of Not Collecting the Data

7. Special Circumstances Justifying Inconsistencies with Guidelines in 5 CFR 1320.6

8. Federal Register Announcement and Consultation

9. Payment or Gift to Respondents

10. Assurance of Confidentiality

12. Estimated Response Burden

13. Estimate of Annualized Cost for Data Collection Activities

14. Estimate of Annualized Cost to Federal Government

15. Reasons for Changes in Estimated Burden

16. Plans for Tabulation and Publication

17. Display of Expiration Date for OMB Approval

18. Exceptions to Certification for Paperwork Reduction Act Submissions

Introduction

This package requests clearance from the Office of Management and Budget to conduct data collection activities associated with the legislatively mandated evaluation of the Striving Readers Comprehensive Literacy (SRCL) program. The purpose of this evaluation is to provide information to policymakers, administrators, and educators regarding the implementation of the SRCL program, including grant award procedures, technical assistance, continuous improvement procedures, and literacy interventions at the school level. Data collection will include interviews with state-level grantees and district, school, and teacher surveys. In addition, the study team will conduct site visits to 50 schools and observe instruction in 100 classrooms using SRCL-funded literacy interventions. The study team also will collect and review grantee and subgrantee applications and comprehensive literacy plans.

Clearance is requested for the grantee interview, surveys, fidelity site visit components, and collection of extant data, including the purpose, sampling strategy, data collection procedures, and data analysis approach.

The complete OMB package contains two sections and a series of appendices, as follows:

OMB Clearance Request: Supporting Statement Part A—Justification [this document]

OMB Clearance Request: Supporting Statement Part B—Statistical Methods

Appendix B—Subgrantee Questionnaire and Consent Form

Appendix C—Principal Questionnaire and Consent Form

Appendix D—Teacher Questionnaire and Consent Form

Appendix E—Principal Interview Protocol and Consent Form

Appendix F—Reading Specialist Interview Protocol and Consent Form

Appendix G—Teacher Pre-/Post-Observation Interview Protocol and Consent Form

Appendix H—Request for lists of subgrantee districts and schools

Appendix I—Request for student achievement data

Appendix J—Request for teacher rosters in schools sampled for survey administration

Supporting Statement for Paperwork Reduction Act Submission

This package requests clearance from the Office of Management and Budget (OMB) to conduct data collection activities for the legislatively mandated evaluation of the Striving Readers Comprehensive Literacy Program. In May 2018, the Institute of Education Sciences, within the U.S. Department of Education, awarded the “Comprehensive Literacy Program Evaluation” contract for this evaluation to American Institutes for Research (AIR) and its partners, Abt Associates, National Opinion Research Center (NORC), and Instructional Research Group (IRG).

In recent years, educational policy has focused on college and career readiness, but many U.S. students still do not acquire even basic literacy skills. Students living in poverty, students with disabilities, and English learners (ELs) are especially at risk. By grade 4, there is a substantial gap in reading achievement between students from high- and low-income families (as measured by eligibility for the national school lunch program). According to the National Assessment of Educational Progress, the average student from a high-income family is at about the 65th percentile of the distribution of reading achievement, whereas the average student from a low-income family is at about the 35th percentile. Gaps by grade 8 are only slightly smaller. Average grade 4 scores for students with disabilities (17th percentile) and ELs (18th percentile) are even lower than for low-income students.*

To narrow the gap in literacy between disadvantaged students and other students, the federal government launched the Striving Readers Comprehensive Literacy (SRCL) program in 2011. SRCL is a discretionary federal grant program authorized as part of Title III of Division H of the Consolidated Appropriations Act of 2016 (P.L. 114-113) under the Title I demonstration authority (Part E, Section 1502 of the Elementary and Secondary Education Act [ESEA]). The goal of SRCL is to advance the literacy skills, including preliteracy and reading and writing skills, of children from birth through grade 12, with a special focus on improving outcomes for disadvantaged children, including low-income children, ELs, and learners with disabilities. SRCL is designed to achieve these goals for children by awarding grants to state education agencies (SEAs) that in turn use their funds to support subgrants to local education agencies (LEAs) or other nonprofit early learning providers to implement high-quality literacy instruction in schools and early childhood education programs. Ultimately, this enhanced literacy instruction is the mechanism through which student reading and writing are expected to be improved.

This submission requests clearance to conduct data collection for an implementation evaluation of the SRCL grants given to 11 states in 2017, totaling $364 million. The implementation evaluation will describe the extent to which (a) the activities of the state grantees and of the funded local subgrantees meet the goals of the SRCL program, and (b) the literacy instruction in the funded subgrantees’ activities reflects the SRCL grant program’s definition of high-quality, comprehensive literacy programming. Most SEAs are expected to give awards to subgrantees at the end of the first grant year, with LEAs implementing SRCL literacy instruction in a selected set of schools and early childhood centers in the second and third grant years. The implementation study will cover SEA and LEA activities over the entire three-year grant period. The analyses for this evaluation will draw on the following data sources:

Grantee interviews

Surveys of subgrantees, principals, and teachers

Fidelity site visits, including classroom observations and interviews with principals, reading specialists, and teachers

Extant data, including documents (grantee applications, state requests for subgrant applications, subgrant applications, and literacy plans) and Reading/English language arts standardized test score data. The study team will also ask grantees to provide lists of all subgrantees.

In addition to the implementation evaluation of SRCL, the Comprehensive Literacy Program Evaluation contract also includes the required national evaluation of the successor program to SRCL in the reauthorized ESEA called the Comprehensive Literacy State Development (CLSD) grants program. An additional OMB clearance package will be submitted for this component of the Comprehensive Literacy Program Evaluation in March 2020.

Justification

1. Circumstances Making Collection of Information Necessary

a. Statement of need for an evaluation of SRCL

The Elementary and Secondary Education Act (section 1502(b)) requires the U.S. Department of Education to evaluate demonstration projects supported under Section 1502, such as SRCL.

The SRCL program first awarded grants to six states from 2011 to 2015, but there is limited knowledge about program implementation and no knowledge about its impact on teachers and students. A previous report found that grantees in the first SRCL cohort appear to have generally implemented each component of the program and that, consistent with the intent of the program, more than three-fourths of the students whom the grantees served were disadvantaged (Applied Engineering Management Corporation, 2016). However, there were no rigorous impact evaluations of the first SRCL cohort and no comprehensive, consistent reporting of grantees’ and subgrantees’ activities. This second round of awards to 11 SRCL grantees, ranging from $20 million to about $62 million, provides an opportunity to understand whether grantees have implemented the key components of the SRCL program as intended.

The Comprehensive Literacy Program Evaluation contract includes a robust implementation evaluation of the SRCL grants awarded to 11 states in 2017 and will cover SEA and LEA activities over the entire 3-year grant period. The evaluation’s data collection will permit an assessment of the extent to which the program is implemented as intended and of the outcomes for students. Given the scope of the challenge and the federal investment in SRCL, it is critically important that policymakers, administrators, and educators have access to information on the implementation of these grants.

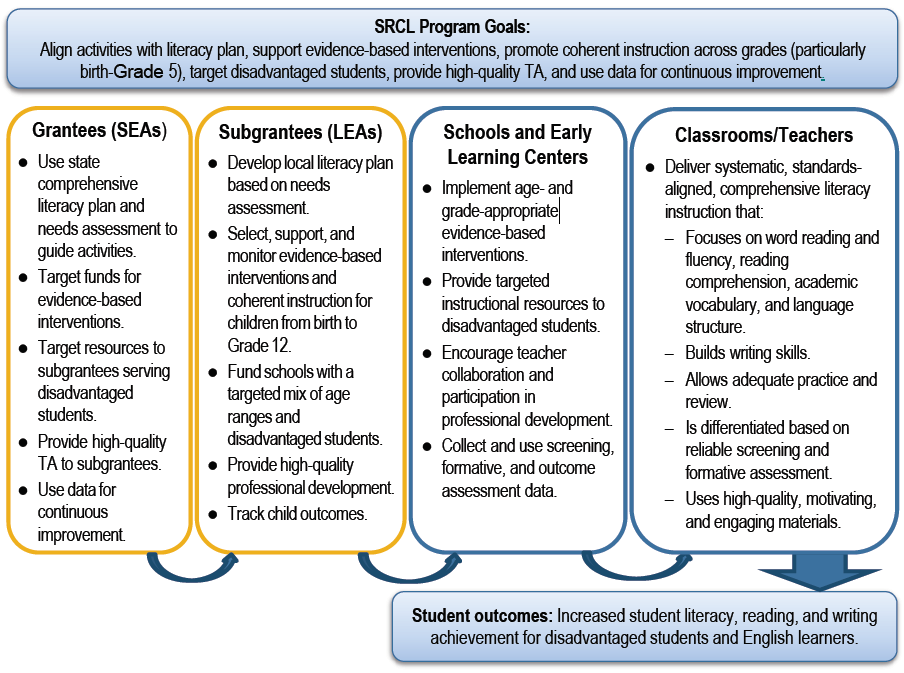

b. SRCL Logic Model

The evaluation is guided by a logic model of the SRCL program (Exhibit 1). The overarching goals of the program are delineated in the top box of the logic model. These are as follows:

Targeting of grantee funds to subgrantees that have proposed approaches to high-quality literacy instruction based on local plans that are aligned with the state literacy plan and are informed by local needs assessments;

Targeting of grantee funds to subgrantees that have proposed literacy interventions for students from birth through grade 12 with rigorous evidence of effectiveness with relevant ages and that are aligned across ages with a specific focus on the transition from preschool to kindergarten;

Targeting of grantee funds to subgrantees serving a greater number or percentage of disadvantaged students;

Provision of high-quality technical assistance to support implementation of selected literacy interventions; and

Collection and use of data to evaluate the implementation and outcomes of the literacy instruction being delivered, as well as to inform a continuous improvement process.

The body of the logic model includes four columns that outline the cascading set of activities and processes that ED requires or expects of grantees and subgrantees in order to meet the SRCL program goals. The logic model illustrates, from left to right, expectations of state grantees, of subgrantees that are awarded funding by the grantees, and of local schools and early learning programs selected by the subgrantees to implement the SRCL evidence-based literacy instruction for students across the age span. The pathway implied in the logic model is as follows: If grantees and subgrantees enact the processes and activities laid out by the SRCL program, the result will be enhanced literacy instruction and, ultimately, improved student reading proficiency. High-quality literacy instruction is the most proximal or immediate goal of the SRCL activities at the state and local levels and is essentially the key mediator of the ultimate goal of SRCL: improved reading performance for students from birth through grade 12.

Exhibit 1. Preliminary SRCL Logic Model

The logic model reflects the core provisions of the SRCL program, which also serve as a basis for the data collection instruments associated with this study. The SRCL program established a set of requirements for SEAs to be eligible to receive funding. First, states must comply with statutory regulations for funds allocation: 95% of funds need to be allocated to subgrant awards, and of the subgranted funds, 15% need to be designated for serving children from birth to age 5, 40% to children from kindergarten through grade 5, and 40% to children in middle school and high school. In addition, states are required to develop a new or revised comprehensive literacy plan informed by a recent, comprehensive state needs assessment.
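As an illustrative sketch only (it is not part of the statutory language or the study instruments), the allocation rule can be worked through for a hypothetical $20 million award, the low end of the award range reported earlier in this statement. The function and variable names are assumptions made for this example, and the code treats the stated percentages as exact shares. Because the three age-band shares sum to 95% of the subgranted funds, the sketch reports the remainder separately as undesignated.

```python
from fractions import Fraction

# Shares as stated in the SRCL funding requirements described above.
# All names here are hypothetical and chosen only for this illustration.
SUBGRANT_SHARE = Fraction(95, 100)  # share of the state award passed to subgrants
AGE_BAND_SHARES = {
    "birth_to_age_5": Fraction(15, 100),
    "kindergarten_to_grade_5": Fraction(40, 100),
    "middle_and_high_school": Fraction(40, 100),
}


def allocation_breakdown(state_award):
    """Apply the stated shares to a state award (in dollars)."""
    subgrant_pool = state_award * SUBGRANT_SHARE
    bands = {
        name: subgrant_pool * share for name, share in AGE_BAND_SHARES.items()
    }
    # Portion of the subgrant pool not covered by the three stated shares.
    undesignated = subgrant_pool - sum(bands.values())
    return {
        "subgrant_pool": subgrant_pool,
        "bands": bands,
        "undesignated": undesignated,
    }


result = allocation_breakdown(20_000_000)
for name, amount in result["bands"].items():
    print(f"{name}: ${int(amount):,}")
print(f"subgrant pool: ${int(result['subgrant_pool']):,}")
print(f"undesignated: ${int(result['undesignated']):,}")
```

Exact fractions are used instead of floating-point percentages so that the dollar amounts carry no rounding error.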

States must also comply with regulations related to funding of subgrantees. First, states are required to fund only those subgrantees that have a high-quality local literacy plan informed by a local needs assessment and aligned to the State Comprehensive Literacy Plan. Subgrantees’ local plans must include the following:

Literacy interventions and practices supported by moderate or strong evidence;

Professional development for program staff aligned with the proposed interventions and practices; and

A plan for how children’s outcomes will be measured to evaluate program effectiveness and to determine what continuous improvement strategies may be necessary.

In selecting among eligible subgrantees, states are required to give priority to:

Subgrantees that serve greater numbers or percentages of disadvantaged children (as defined by the program), and

Subgrantees whose plan establishes an alignment of programs that serve children from birth through grade 5, including approaches and interventions that are appropriate for each age group and a description of how each approach and intervention will be used to improve school readiness and create seamless transitions for children across the continuum.

States are required to have a plan for three other activities:

Monitoring of subgrantee projects to ensure that the projects meet program goals;

Use of data (including results of monitoring and evaluations and other administrative data) to inform a continuous improvement process; and

Provision of technical assistance to subgrantees in identifying and implementing interventions supported by moderate or strong evidence of effectiveness.

As indicated in the description of how subgrantees are prioritized for funding, subgrantees that receive awards are responsible for four key activities that mirror the activities at the state level:

Developing a local, comprehensive literacy plan informed by a local needs assessment and aligned with the state literacy plan;

Selecting schools and early childhood education programs to implement evidence-based interventions and practices;

Supporting high-fidelity implementation of the interventions and practices by providing professional development to teachers and other school staff; and

Collecting data on student outcomes to support instruction and continuous improvement.

As a result of the activities of the SEAs and LEAs, schools and early learning programs are expected to implement high-quality literacy instruction using evidence-based literacy interventions. The instruction is expected to meet the following criteria:

Be age appropriate, systematic, and explicit;

Cover the pillars of reading: phonological awareness, phonic decoding, vocabulary, fluency, comprehension, and language structure;

Demonstrate high fidelity of implementation;

Be informed by student assessments that generate information on student progress and needs;

Use highly engaging materials that motivate student engagement and learning; and

Provide frequent opportunities for students to practice reading and writing as part of instruction.

Ultimately, implementing and supporting a progression of high-quality approaches to literacy instruction appropriate for each age group is expected to improve the academic achievement of children in the LEAs. Goals for students include increased oral language skills for preschool children and increased reading achievement of elementary, middle, and high school students.

c. Evaluation Questions and Study Design

This evaluation will address six primary questions; ED is requesting OMB clearance for the data collection activities associated with each. (See Exhibit 3 for more detailed study questions.)

How do SEAs make subgrant award decisions?

What technical assistance do SEAs provide for subgrantees?

How do SEAs inform continuous improvement and evaluate the effectiveness of subgrantee projects? What data do they collect?

How do subgrantees target SRCL awards to schools and early learning programs?

What literacy interventions and practices are used by SRCL schools and early learning programs?

What are the literacy outcomes for students in SRCL schools and early learning programs, as measured by existing state and/or local assessment data?

In brief terms, the SRCL implementation study will provide descriptive analyses of how the grant is unfolding at the state, district, school, and classroom levels, including topics such as grant administration, instructional practices, professional learning, and student outcomes. The study team will collect data from the universe of SEA grantees and LEA subgrantees, administer nationally representative surveys of principals and teachers, conduct fidelity site visits to 50 schools, and analyze student achievement data. The analysis approach is discussed below in section A.16 and Supporting Statement Part B.

d. Data Collection Needs/Plan/Schedule

The evaluation includes several complementary data collection activities that will allow the study team to address the evaluation questions. Exhibit 2 presents the data collection instruments, needs, respondents, modes, and schedule. Additional details about the data sources are provided in section 2 below.

Exhibit 2. Data Collection Needs

| Data Collection Activity | Data Need | Respondent | Mode | Schedule |
|---|---|---|---|---|
| Grantee interviews | Information on subgrant award decisions, technical assistance, and support for continuous improvement | State SRCL project directors | Telephone interviews using semi-structured protocol | Fall 2018, Fall 2019 |
| Extant data | | | | |
| Policy document review | Review of grantee applications, state RFAs, subgrantee applications, and comprehensive literacy plans | NA | Retrieved from SEA websites | Fall 2018 |
| Request for subgrantee information | List of all subgrantees and SRCL schools in a given state, including grades included in SRCL | State education agencies, only if not available through websites | Retrieved from SEA websites, email request if necessary | Fall 2018 |
| Request for student achievement data | Reading/English language arts standardized test score data and student demographic information | State education agencies or school districts | Electronic communication request | Fall 2018, |
| SRCL surveys | | | | |
| Subgrantee surveys | Information on use of subgrant funds, targeting schools, activities in SRCL schools, continuous improvement | SRCL subgrantee (consortia or district) project directors | Online questionnaire | Spring 2019 |
| Principal surveys | Activities in SRCL schools, including curricula, coaching, professional development, evidence for reading interventions, vertical alignment, use of data | Principals of SRCL-funded schools | Online questionnaire | Spring 2019 |
| Teacher surveys | Literacy instruction, differentiation, topics covered, use of engaging materials, professional development, coaching, use of data | Teachers in SRCL schools and educators in SRCL early learning programs | Online questionnaire | Spring 2019 |
| Teacher roster request | List of teachers in each school sampled for surveys, to draw teacher sample | Principal or school liaison in SRCL schools sampled for survey administration | Electronic communication | Winter 2019 |
| Fidelity Site Visits | | | | |
| Classroom observations | Evidence-based intervention implementation fidelity | NA | In-person observation | Spring 2020 |
| Principal interviews | Literacy instruction, literacy interventions or curricula, use of data, differentiation, SRCL funds, district literacy plan | Principals in schools observed during fidelity site visits | On-site interview | Spring 2020 |
| Reading Specialist interviews | Coaching activities, literacy instruction, differentiation, use of curricula, literacy assessments, district literacy plan | Reading specialists in schools observed during fidelity site visits | On-site interview | Spring 2020 |
| Teacher interviews | Literacy instruction, literacy curricula, use of data, differentiation, professional development | Teachers whose classes are observed during fidelity site visits | On-site interview | Spring 2020 |

2. Use of Information

Data for the evaluation will be collected and analyzed by the contractor selected under contract 91990018C0020. The study team will use the data collected to prepare a report that clearly describes how the data address the key evaluation questions, highlights key findings of interest to policymakers and educators, and includes charts and tables. The report will be written in a manner suitable for distribution to a broad audience of policymakers and educators and will be accompanied by an implementation evaluation brief. The U.S. Department of Education (ED) will publicly disseminate the report through its website.

The data collected will be of immediate interest to and significance for policymakers and practitioners because they will provide timely, detailed, and policy-relevant information on a major federal program. The study will offer unique insight into how grantees and subgrantees implement, evaluate, and seek to improve practices associated with SRCL grants. The data collected in the evaluation will be used to address the evaluation questions, as shown in Exhibit 3. In addition to the overarching study questions, more specific subquestions are associated with each. Details about each data source are discussed in the section following Exhibit 3. The Institute of Education Sciences requests clearance for all data collection activities with the exception of the classroom observations, which do not impose burden on the observed teachers. However, all data sources are included here to provide a comprehensive overview of the implementation study as a whole.

Exhibit 3. Evaluation Questions and Associated Data Sources

| Evaluation Question (rows) / Study Component (columns) | Grantee Interviews | Extant Data | SRCL Surveys | Fidelity Site Visits* |
|---|---|---|---|---|
| 1. How do state education agencies (SEAs) make subgrant award decisions? | | | | |
| 1.1 How do SEAs determine the extent of alignment between the local literacy plan and the local needs assessment and state comprehensive literacy plan? | ✓ | Document review | | |
| 1.2 How do SEAs determine whether the proposed literacy interventions and practices across the age span (birth through grade 12) are supported by moderate or strong evidence of effectiveness? | ✓ | Document review | | |
| 1.3 How do SEAs target subgrantees that serve the greatest number of disadvantaged children? | ✓ | Document review | | |
| 1.4 How do SEAs target subgrantees that have aligned programs for children 0 to 5 years and children in kindergarten through grade 5? | ✓ | Document review | | |
| 1.5 In making awards, how do SEAs consider subgrantee plans for providing high-quality professional development to teachers, early childhood providers, other school staff, and/or parents? | ✓ | Document review | | |
| 2. What technical assistance do the SEAs provide to their subgrantees? | | | | |
| 2.1 What technical assistance do SEAs provide on identifying, selecting, and implementing literacy interventions/practices supported by moderate or strong evidence? | ✓ | Document review | Subgrantee | |
| 2.2 What technical assistance do SEAs provide on developing a local literacy plan aligned with local needs and the state’s comprehensive literacy plan? | ✓ | Document review | Subgrantee | |
| 3. How do the SEAs inform continuous improvement and evaluate the effectiveness of subgrantees’ projects? | | | | |
| 3.1 What data do SEAs collect to inform continuous improvement and evaluate effectiveness? | ✓ | Document review | Subgrantee | |
| 4. How do subgrantees target Striving Readers Comprehensive Literacy (SRCL) program awards to eligible schools and early learning programs? | | | | |
| 4.1 What were the characteristics of the subgrantees, teachers, and students in funded subgrantees? | ✓ | | Subgrantee | |
| 4.2 What are the primary literacy-related activities that are being supported with subgrantees’ SRCL awards? | ✓ | | Subgrantee | |
| 5. What literacy interventions and practices are used by SRCL schools and early learning programs? | | | | |
| 5.1 To what extent are SRCL interventions and practices aligned with local needs and the SEA’s state comprehensive literacy plan? | | | Subgrantee, Principal | |
| 5.2 To what extent are SRCL interventions and practices supported by relevant moderate or strong evidence? | | | Subgrantee | |
| 5.3 To what extent are SRCL interventions and practices differentiated and appropriate for children from birth through age 5 and children in kindergarten through grade 5? | | | Principal | ✓ |
| 5.4 To what extent do SRCL subgrantees use assessments to identify student needs, inform instruction, and monitor progress? | | | Principal | ✓ |
| 5.5 To what extent do SRCL subgrantees provide age-appropriate, explicit, and systematic instruction and frequent practice in reading and writing, including phonological awareness, phonic decoding, vocabulary, language structure, fluency, and comprehension? | | | Teacher | ✓ |
| 5.6 What is the fidelity of implementation of the SRCL interventions and practices? | | | | ✓ |
| 5.7 What professional development do teachers receive to support high-quality literacy instruction? | | | Teacher | |
| 6. What are the literacy outcomes for students in SRCL schools and early learning programs, as measured by existing state and/or local assessment data? | | | | |
| 6.1 Do outcomes improve over time? Are outcomes better in SRCL-funded schools than in non-SRCL-funded schools? | | Student achievement data | | |

*The fidelity site visits include multiple data collection activities: classroom observations, principal interviews, reading specialist interviews, and teacher interviews.

Grantee interviews: The primary purpose of the grantee interviews is to understand the ways SEAs allocate subgrant awards and support subgrantees’ implementation. The grantee interview protocol will enable the study team to verify or clarify elements of the applications, explore the rationale for grantee approaches, and collect additional information about subgrantee award procedures and evidence supporting selected interventions.

Extant data: The study team will make three main requests for extant data, which will be used for analyses related to RQ1 and RQ6: a policy document review, a subgrantee information request, and a request for student achievement data.

Policy document review: To address the subquestions for RQ1, the study team will retrieve grantee applications, state requests for applications for subgrants, comprehensive literacy plans, and other SRCL program documents from SEA websites. If necessary, the team will request that states provide specific information that is not available on SEA websites, but this will include fewer than nine respondents.

Subgrantee information request: To address subquestions for RQ1 and to ensure the study team has appropriate contact information for subgrantee survey administration, the study team will request complete lists of all awarded subgrantees in each state, including districts and consortia, as well as the schools funded by each subgrantee.

Student achievement data: To study the outcomes for students who attended SRCL schools, we will collect student-level data from each state. To permit us to compare outcomes for students at SRCL and non-SRCL schools, we will request data covering all schools in each state. For all students in grades 3 to 8, we will request deidentified data on student state math and English/language arts assessments, along with the name of the school attended and the following student background characteristics and program participation data: gender, ethnicity, race, English learner status, special education status, and eligibility for free or reduced-price lunch. These data will allow us to study students by subgroups in addition to examining all students in a school. We will request data on students with a common identifier across years, starting with the 2017-2018 school year (the year before the SRCL funding began) and ending with the 2019-2020 school year.

Surveys: The contractors will administer three surveys for the SRCL evaluation: a subgrantee survey, a principal survey, and a teacher survey. To administer the school-level surveys, the study team will work with each sampled school to identify a liaison at the school (the “school liaison”) who will support the study’s data collection activities during the 2018-19 and 2019-20 school years.

Subgrantee survey: This survey will be administered to all subgrantees, both consortia and individual districts. The survey instrument includes questions related to targeting of SRCL schools, use of SRCL funds, and district-level SRCL activities and supports.

Principal survey: The principal survey will be administered to 600 principals in all SRCL-funded states. This survey will be used to measure literacy practices and interventions, the principal’s own literacy-related professional development activities, the use of data for continuous improvement, and changes associated with the SRCL grant. This survey will be administered through an online platform in spring 2019 and 2020.

Teacher surveys: The teacher survey, administered to 3,700 teachers, will be used to measure literacy instruction and supports in SRCL schools. The survey will include, for example, items about teachers’ instructional practices, engaging literacy materials, literacy-related professional development, support from instructional coaches, use of data, and changes in literacy instruction from the prior year. Teacher surveys will be administered online in the spring of 2019 and spring of 2020. For teachers who do not respond to the online survey, the study team will mail a hard copy of the survey to their schools.

Teacher Rosters: To permit sampling of teachers for the survey, sampled schools will be asked to submit rosters of teachers employed. At the elementary school level, the study team will request the names of all teachers and reading specialists; at the secondary level the request will focus on English/language arts teachers, teachers who provide language support to English learners, and special educators who focus on literacy. Rosters will be requested in winter 2019.

Fidelity site visits: To measure the fidelity of implementation of literacy interventions supported by SRCL, the study team will identify the five most frequently used literacy programs or curricula, based on the teacher survey. Data will be collected through classroom observations and interviews with principals, teachers, and reading specialists. Recruiting district and school sites will involve conversations with district and school administrators, which are included in the burden estimates for this study.

Interviews: The principal, reading specialist, and teacher interviews will detail the literacy context in each school, including time allocated to literacy, instructional practices, professional learning, coaching and other supports, challenges, and changes attributed to SRCL.

Classroom Observations: To describe the fidelity of implementation of the five most frequently used interventions within the SRCL sample of surveyed schools, the study team will conduct classroom observations in 100 classrooms within 50 schools, nested within 15 districts. Note that IES is not requesting clearance for this data collection because the observations do not impose burden, and the instruments have not yet been developed. To create fidelity measures for the interventions, the study team will seek existing measures from the developers or measures used in prior research. If none are available, the study team will create new measures, in collaboration with the developers, using relevant instruction manuals, professional development materials, or other resources provided to schools using the interventions. The measures will detail the practices that define full implementation, define indicators, and set thresholds for adequate implementation.

Most of the data collection activities described above will generate self-reported data from grantees, subgrantees, principals, and teachers. These data will be supplemented with data collected directly by the implementation evaluation team that will allow us to make independent assessments of the extent to which grantees and subgrantees are meeting two of the SRCL goals, rather than relying exclusively on stakeholder reports. One form of independent verification is reviewing evidence supporting widely used SRCL literacy interventions using What Works Clearinghouse (WWC) evidence standards. These reviews will be conducted by members of the study team who are certified WWC reviewers. These reviews will provide systematic assessments of the strength of the supporting evidence for the SRCL literacy interventions and practices (i.e., whether the literacy interventions are, in fact, supported by moderate or strong evidence as defined by ED). In addition, classroom observation measures (described above) will be developed for on-site observation of classrooms, one for each of the five most frequently used SRCL interventions or practices. The classroom observations will provide a second independent assessment of the extent to which SRCL grantees are meeting SRCL goals without relying on self-reported data.

3. Use of Improved Technology to Reduce Burden

The recruitment and data collection plans for this project reflect sensitivity to efficiency and respondent burden. The study team will use a variety of information technologies to maximize the efficiency and completeness of the information gathered for this study and to minimize the burden on respondents at the state, district, and school levels:

Use of extant data. When possible, data will be collected through ED and state websites and through sources such as EDFacts and other web‑based sources. For example, before undertaking data collection activities that impose any burden on respondents, the contractors will review grantee applications, subgrantee applications, and any additional information available on SEA or LEA websites to avoid asking questions that otherwise could be addressed through extant sources.

Online surveys. The subgrantee, principal, and teacher surveys will be administered through a web‑based platform to facilitate and streamline the response process.

Electronic submission of certain data. Grantees will be asked to electronically submit rosters of subgrantees and schools that are receiving SRCL funds.

Support for respondents. A toll‑free number and an email address will be available during the data collection process to permit respondents to contact members of the study team with questions or requests for assistance. The toll‑free number and email address will be included in all communication with respondents.

4. Efforts to Avoid Duplication of Effort

Whenever possible, the study team will use existing data including EDFacts, SRCL grantee and subgrantee applications, and federal monitoring reports. This will reduce the number of questions asked in the interviews and surveys, thus limiting respondent burden and minimizing duplication of previous data collection efforts and information.

5. Efforts to Minimize Burden on Small Businesses and Other Small Entities

The primary entities for the evaluation are state, district, and school staff. We will minimize burden for all respondents by requesting only the minimum data required to meet evaluation objectives. Burden on respondents will be further minimized through the careful specification of information needs. We will also keep our data collection instruments short and focused on the data of most interest.

6. Consequences of Not Collecting the Data

The data collection plan described in this submission is necessary for ED to respond to the legislative mandate for evaluating the SRCL program. The SRCL grant program represents a substantial federal investment, and failure to collect the data proposed through this study would limit ED’s understanding of how the program is implemented and how it supports literacy needs at the local level through comprehensive reading interventions. Understanding the strategies and approaches that the subgrantees and schools implement and how they use SRCL funds will enable federal policy makers and program managers to monitor the program and provide useful, ongoing guidance to states and districts.

7. Special Circumstances Justifying Inconsistencies with Guidelines in 5 CFR 1320.6

There are no special circumstances concerning the collection of information in this evaluation.

8. Federal Register Announcement and Consultation

Federal Register Announcement

ED published a 60-day Federal Register Notice on September 10, 2018, [83 FR 45617]. No substantive public comments have been received to date. The 30-day Federal Register Notice will be published to solicit additional public comments.

Consultations Outside the Agency

The study team has secured a technical working group (TWG) of researchers and practitioners to provide input on the data collection instruments developed for this study as well as other methodological design issues. The TWG consists of researchers with expertise in issues related to literacy, instruction, grant implementation, and evaluation methods. The study team will consult the TWG throughout the evaluation. TWG members include the following:

Kymyona Burk, Mississippi Department of Education

Cynthia Coburn, Northwestern University

Thomas Cook, George Washington University

Barbara Foorman, Florida State University

Pam Grossman, University of Pennsylvania

Carolyn Hill, MDRC

James Kim, Harvard University

Susanna Loeb, Brown University

Timothy Shanahan, Center for Literacy, University of Illinois at Chicago

Sharon Vaughn, University of Texas—Austin

9. Payment or Gift to Respondents

We are aware that teachers are the targets of numerous requests to complete data collection instruments on a wide variety of topics from state and district offices and independent researchers, and that both ED and several decades of survey research support the benefits of offering incentives. Specifically, we propose incentives for the teacher surveys to partially offset respondents’ time and effort in completing the surveys. We propose offering a $25 incentive to each teacher each time he or she completes a survey to acknowledge the 25 minutes required to complete each survey. This proposed amount is within the incentive guidelines outlined in the March 22, 2005 memo, “Guidelines for Incentives for NCEE Evaluation Studies,” prepared for OMB. Incentives are proposed because high response rates are needed to ensure that the survey findings are reliable and because data from the teacher survey are essential to understanding literacy instruction and professional supports in SRCL-funded schools.

The study team has reviewed the research literature on the effectiveness of incentives in increasing survey response rates. In the Reading First Impact Study commissioned by ED (OMB control number 1850-0797), monetary incentives were found to have significant effects on response rates among teachers. A sub-study requested by OMB on the effect of incentives on survey response rates for teachers found significant increases when an incentive of $15 or $30 was offered to teachers as opposed to no incentive (Gamse et al., 2008). In another study, Rodgers (2011) offered adult participants $20, $30, or $50 in one wave of a longitudinal study and found that offering the highest incentive of $50 showed the greatest improvement in response rates and also had a positive impact on response rates for the next four waves. The total maximum cost of the incentives would be $185,000, assuming all 3,700 teachers in the 600 selected schools complete the survey in each of the two years.
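The maximum incentive cost stated above can be reproduced with a short calculation. The following is a minimal sketch for illustration only; the function name is hypothetical, and the second figure is not a budgeted amount but an assumption that applies the 3,145 expected teacher respondents reported in Exhibit 4 to both survey administrations.

```python
# Illustrative sketch only; the function name is hypothetical.
# Inputs are taken from this supporting statement.

TEACHERS_SAMPLED = 3_700      # teachers in the 600 selected schools
INCENTIVE_DOLLARS = 25        # incentive per completed survey
ADMINISTRATIONS = 2           # spring 2019 and spring 2020


def incentive_cost(completers: int, amount: int, administrations: int) -> int:
    """Total incentive cost if `completers` teachers respond in every administration."""
    return completers * amount * administrations


# Maximum cost: every sampled teacher completes both surveys.
max_cost = incentive_cost(TEACHERS_SAMPLED, INCENTIVE_DOLLARS, ADMINISTRATIONS)

# Assumption for illustration: 3,145 respondents (Exhibit 4) in each administration.
cost_at_expected_response = incentive_cost(3_145, INCENTIVE_DOLLARS, ADMINISTRATIONS)

print(f"Maximum incentive cost: ${max_cost:,}")
print(f"Cost at expected response: ${cost_at_expected_response:,}")
```

The first figure matches the $185,000 maximum cited above; the second shows only how the total would scale if the expected response rate were realized in both years.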

10. Assurance of Confidentiality

All the contractors associated with this study are vitally concerned with maintaining the anonymity and security of their records. The project staff has extensive experience in collecting information and maintaining the confidentiality, security, and integrity of survey and interview data. All members of the study team have obtained their certification on the use of human subjects in research. This training addresses the importance of the confidentiality assurances given to respondents and the sensitive nature of handling data. The team also has worked with the Institutional Review Board at the American Institutes for Research (AIR, the prime contractor) to seek and secure approval for this study, thereby ensuring that the data collection complies with professional standards and government regulations designed to safeguard research participants.

The study team will conduct all data collection activities for this evaluation in accordance with all relevant regulations and requirements. These include the Education Sciences Reform Act of 2002, Title I, Part E, Section 183, which requires “[all] collection, maintenance, use, and wide dissemination of data by the Institute … to conform with the requirements of section 552 of Title 5, United States Code, the confidentiality standards of subsection (c) of this section, and sections 444 and 445 of the General Education Provisions Act (20 U.S.C. 1232g, 1232h).” These citations refer to the Privacy Act, the Family Educational Rights and Privacy Act, and the Protection of Pupil Rights Amendment.

Respondents will be assured that confidentiality will be maintained, except as required by law. The following statement will be included under the Notice of Confidentiality in all voluntary requests for data:

Information collected for this study comes under the confidentiality and data protection requirements of the Institute of Education Sciences (The Education Sciences Reform Act of 2002, Title I, Part E, Section 183). Responses to this data collection will be used only for statistical purposes. The reports prepared for this study will summarize findings across the sample and will not associate responses with a specific district, school or individual. We will not provide information that identifies you or your school or district to anyone outside the study team, except as required by law. Additionally, no one at your school or in your district will see your responses.

Aside from student achievement data (which will be collected without student identifying information) this study does not include the collection of sensitive information. All respondents will receive information regarding interview or survey topics, how the data will be used and stored, and how their confidentiality will be maintained. Individual participants will be informed that they may stop participating at any time. The goals of the study, the data collection activities, the risks and benefits of participation, and the uses for the data are detailed in an informed consent form that all participants will read and sign before they begin any data collection activities. The signed consent forms collected by the project staff will be stored in secure file cabinets at the contractors’ offices.

The following safeguards are routinely required of contractors for IES to carry out confidentiality assurance, and they will be consistently applied to this study:

All data collection employees sign confidentiality agreements that emphasize the importance of confidentiality and specify employees’ obligations to maintain it.

Personally identifiable information (PII) is maintained on separate forms and files, which are linked only by sample identification numbers.

Access to a crosswalk file linking sample identification numbers to personally identifiable information and contact information is limited to a small number of individuals who have a need to know this information.

Access to hard copy documents is strictly limited. Documents are stored in locked files and cabinets. Discarded materials are shredded.

Access to electronic files is protected by secure usernames and passwords, which are only available to approved users. Access to identifying information for sample members is limited to those who have direct responsibility for providing and maintaining sample crosswalk and contact information. At the conclusion of the study, these data are destroyed.

Sensitive data are encrypted and stored on removable storage devices that are kept physically secure when not in use.

The plan for maintaining confidentiality includes staff training regarding the meaning of confidentiality, particularly as it relates to handling requests for information and providing assurance to respondents about the protection of their responses. It also includes built-in safeguards concerning status monitoring and receipt control systems.

In addition, all electronic data will be protected using several methods. The contractors’ internal networks are protected from unauthorized access, including through firewalls and intrusion detection and prevention systems. Access to computer systems is password protected, and network passwords must be changed on a regular basis and must conform to the contractors’ strong password policies. The networks also are configured such that each user has a tailored set of rights, granted by the network administrator, to files approved for access and stored on the local area network. Access to all electronic data files associated with this study is limited to researchers on the data collection and analysis team.

Responses to this data collection will be used to summarize findings in an aggregate manner or to provide examples of program implementation in a manner that does not associate responses with a specific site or individual. The circumstances of state‑level respondents are somewhat different: The state‑level interviews, by their nature, focus on policy topics that are in the public domain. Moreover, it would not be difficult to identify SRCL directors in each state and thus determine the identity of the state‑level respondents. Having acknowledged this, the study team will endeavor to protect the privacy of the state‑level interviewees and will avoid using their names in reports and attributing any quotes to specific individuals.

The National Opinion Research Center (NORC) is the contractor with primary responsibility for survey data collection and management. NORC maintains a long-standing adherence to protecting respondent confidentiality and has instituted stringent data security controls. All staff also must read and sign a legally binding pledge to uphold the confidentiality provisions established under the Privacy Act of 1974. Furthermore, all personally identifiable information will be removed from respondent data, and unique identification numbers will be assigned. To ensure computer and data security, NORC follows the NIST 800-53 R4 framework and complies with federal regulations as follows:

The web survey application runs as a two- or three-tier model: web server, application (app) server, and database. The app server and database servers are located on the NORC internal network. The web servers are separated by firewalls from the internet and the internal network.

All firewall rules are customized for each web server. Only the required ports are allowed through the firewall.

All web applications use HTTPS/TLS encryption.

All NORC servers follow the Center for Internet Security configuration standard.

All NORC servers run the McAfee® Antivirus Software. Updates are pushed out daily or when there is a critical update.

All NORC servers are physically located in a secured data center with card key access. The data center has its own cooling and environmental controls, separate from the rest of the building.

NORC uses an intelligent log management system for all servers. The log management system monitors all servers in real time for errors.

NORC internally and externally monitors all web servers.

11. Sensitive Questions

No questions of a sensitive nature are included in this study.

12. Estimated Response Burden

It is estimated that the total hour burden for the data collections for the project is 2,082 hours, including 136 burden hours for the extant data requests, 11 burden hours for the state-level interviews, 1,818 burden hours for the surveys (including teacher roster requests), 33 hours for gaining the cooperation of the fidelity site-visit districts and schools, and 84 hours for school-level interviews. The total estimated cost of $68,010 is based on the estimated average hourly wages of participants. Exhibit 4 summarizes the estimates of respondent burden for the various project activities.

For the 11 state-level grantee interviews, the study team expects to achieve response rates of 100% because grantees are required to cooperate in the national evaluation. The total burden estimates are 11 hours for these interviews (1 hour per interview, with all 11 grantees to be interviewed in 2 years).

For the surveys, we expect to achieve response rates of 92% for subgrantees, 85% for principals, and 85% for teachers. The total burden estimates are 138 hours for subgrantees (for a 30-minute survey), 170 hours for the principal survey (for a 20-minute survey, administered twice), and 1,310 hours for teachers (for a 25-minute survey, administered twice). In addition, we have added hours for securing teacher rosters from schools in order to sample teachers for the survey (200 hours).

The 33-hour burden estimate for the recruitment of the fidelity site-visit schools and their districts includes the following:

A half hour per district for each of 15 districts, and

A half hour per school for each of 50 schools.

The 84-hour burden estimate to conduct the fidelity site-visit data collection includes the following:

A single 30-minute interview with a principal at all 50 schools;

A single 45-minute interview with a reading specialist in all 50 schools; and

A 15-minute debrief interview with each teacher in 100 classrooms after the observation.

The total annual number of responses for this collection is 4,824. The total annual number of burden hours for this collection is 2,082 burden hours.

Exhibit 4. Summary of Estimated Response Burden

| Data Collection Activity | Data Collection Task | Total Sample Size | Estimated Response Rate | Estimated Number of Respondents | Time Estimate (in minutes) | Total Hour Burden | Hourly Rate | Estimated Monetary Cost of Burden |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Extant data | Request for subgrantee information | 11 | 100% | 11 | 20 | 4 | $65.23 | $239 |
| | Request for student data | 11 | 100% | 11 | 720 | 132 | $65.23 | $8,610 |
| Grantee (SEA) interviews | Interviews | 11 | 100% | 11 | 60 | 11 | $65.23 | $717 |
| SRCL surveys | Subgrantees (LEA) | 300 | 92% | 276 | 30 | 138 | $44.23 | $6,104 |
| | Principals | 600 | 85% | 510 | 20 | 170 | $43.36 | $7,371 |
| | Teachers | 3,700 | 85% | 3,145 | 25 | 1,310 | $27.07 | $35,462 |
| | Request for school rosters | 600 | 100% | 600 | 20 | 200 | $27.07 | $5,414 |
| Recruitment for fidelity site visits | District administrator conversation* | 15 | 100% | 15 | 30 | 8 | $44.23 | $334 |
| | School administrator conversation* | 50 | 100% | 50 | 30 | 25 | $43.36 | $1,084 |
| Fidelity site visits | Principal interview | 50 | 100% | 50 | 30 | 25 | $43.36 | $1,084 |
| | Reading specialist interview | 50 | 90% | 45 | 45 | 34 | $27.07 | $914 |
| | Teacher interview | 100 | 100% | 100 | 15 | 25 | $27.07 | $677 |
| TOTAL | | 5,498 | | 4,824 | | 2,082 | | $68,010 |

* Because these are informal conversations, no protocols are associated with these activities.
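The hour-burden figures in Exhibit 4 follow from multiplying the estimated number of respondents by the time estimate in minutes and dividing by 60. The sketch below is an illustrative cross-check rather than the method used to produce the exhibit: the respondent counts and minutes are copied from Exhibit 4, the names are hypothetical, and rounding each row half-up to the nearest whole hour is an assumption that happens to reproduce the published row values.

```python
import math

# (task, estimated respondents, minutes per response), copied from Exhibit 4.
EXHIBIT_4_ROWS = [
    ("Request for subgrantee information", 11, 20),
    ("Request for student data", 11, 720),
    ("Grantee (SEA) interviews", 11, 60),
    ("Subgrantee (LEA) survey", 276, 30),
    ("Principal survey", 510, 20),
    ("Teacher survey", 3_145, 25),
    ("Request for school rosters", 600, 20),
    ("District administrator conversation", 15, 30),
    ("School administrator conversation", 50, 30),
    ("Principal interview", 50, 30),
    ("Reading specialist interview", 45, 45),
    ("Teacher interview", 100, 15),
]


def burden_hours(respondents: int, minutes: int) -> int:
    """Hours = respondents x minutes / 60, rounded half-up (assumed rounding rule)."""
    return math.floor(respondents * minutes / 60 + 0.5)


# Per-task hour burden, then totals across all tasks.
hours_by_task = {task: burden_hours(n, m) for task, n, m in EXHIBIT_4_ROWS}
total_hours = sum(hours_by_task.values())
total_responses = sum(n for _, n, _ in EXHIBIT_4_ROWS)

for task, hours in hours_by_task.items():
    print(f"{task}: {hours} hours")
print(f"Total responses: {total_responses:,}; total burden hours: {total_hours:,}")
```

Under these assumptions the totals agree with the 4,824 responses and 2,082 burden hours reported in the TOTAL row. The monetary column is not recomputed here because the exhibit does not state how the cost figures were rounded.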

13. Estimate of Annualized Cost for Data Collection Activities

No additional annualized costs for data collection activities are associated with this data collection beyond the hour burden estimated in item 12.

14. Estimate of Annualized Cost to Federal Government

The estimated cost to the federal government for this study, including development of the data collection plan and data collection instruments as well as data collection, analysis, and report preparation, is $9,013,993. Thus, the average annual cost to the federal government is $3,004,664.33.

15. Reasons for Changes in Estimated Burden

This is a new collection, and there is an annual program change increase of 2,082 burden hours and 4,824 responses.

16. Plans for Tabulation and Publication

The contractor will use the data collected to prepare a report that clearly describes how the data address the key study questions, highlights key findings of interest to policymakers and educators, and includes charts and tables to illustrate the key findings. The report will be written in a manner suitable for distribution to a broad audience of policymakers and educators and will be accompanied by a one-page as well as a four-page summary brief. We anticipate that ED will clear and release this report by the fall of 2020. This final report and briefs will be made publicly available on both the ED website and on the AIR website.

17. Display of Expiration Date for OMB Approval

All data collection instruments will display the OMB approval expiration date.

18. Exceptions to Certification for Paperwork Reduction Act Submissions

No exceptions to the certification statement identified in Item 19, “Certification for Paperwork Reduction Act Submissions,” of OMB Form 83-I are requested.

References

Gamse, B.C., Jacob, R.T., Horst, M., Boulay, B., and Unlu, F. (2008). Reading First Impact Study Final Report (NCEE 2009-4038). Washington, DC: National Center for Education Evaluation and Regional Assistance, Institute of Education Sciences, U.S. Department of Education.

Rodgers, W. L. (2011). “Effects of Increasing the Incentive Size in a Longitudinal Study.” Journal of Official Statistics, 27(2), pp. 279-299.

* Author calculations are based on the Nation’s Report Card (see https://www.nationsreportcard.gov/reading_math_2015/#reading?grade=4).
